Is an Unmanned Aerial Vehicle (UAV) Suitable for Extracting the Stand Parameters of Inaccessible Underground Forests of Karst Tiankeng?

Abstract

1. Introduction

2. Materials and Methods

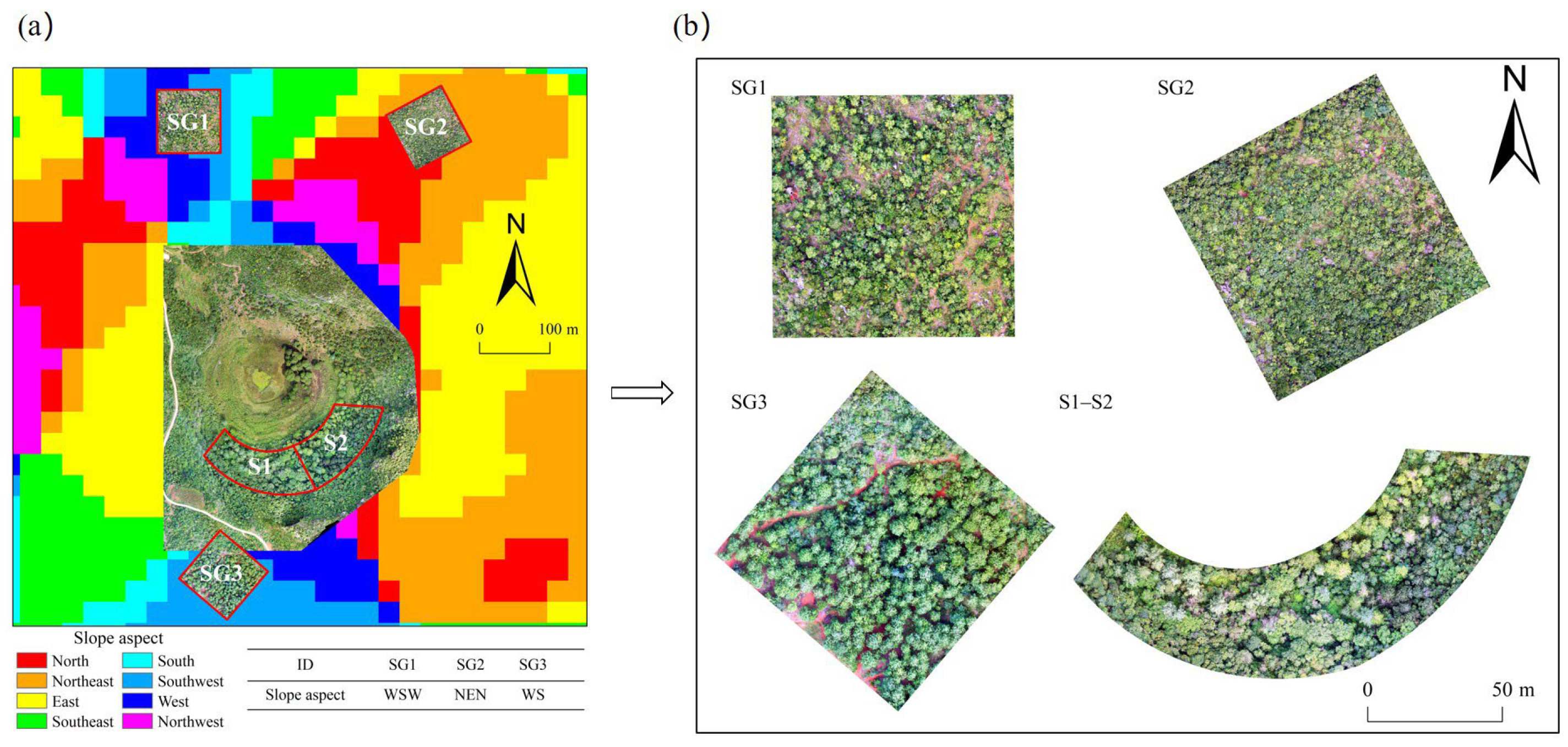

2.1. Study Area

2.2. Data Collections

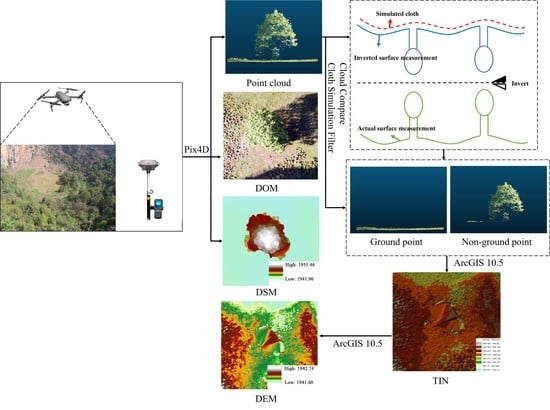

2.3. Data Processing

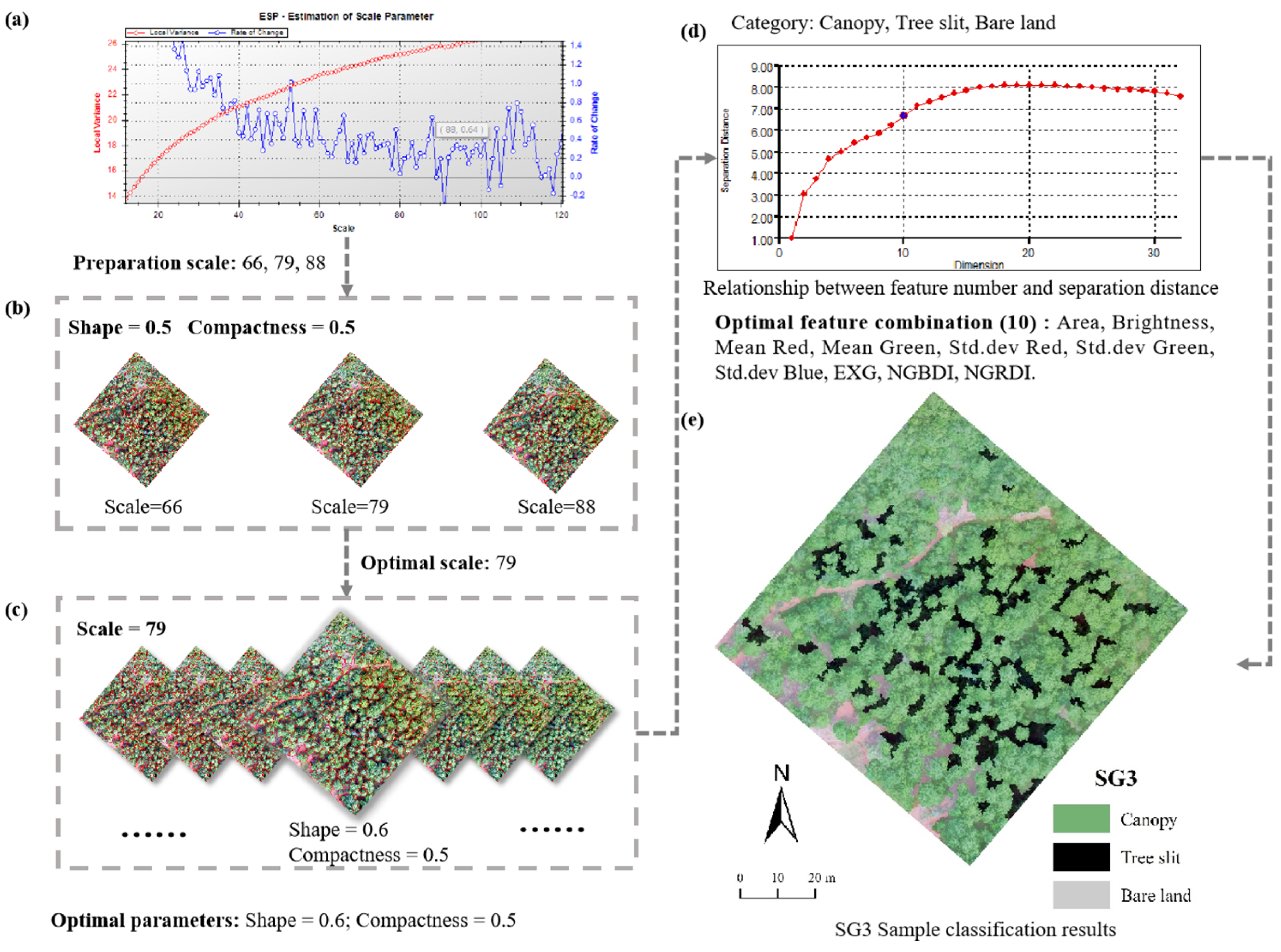

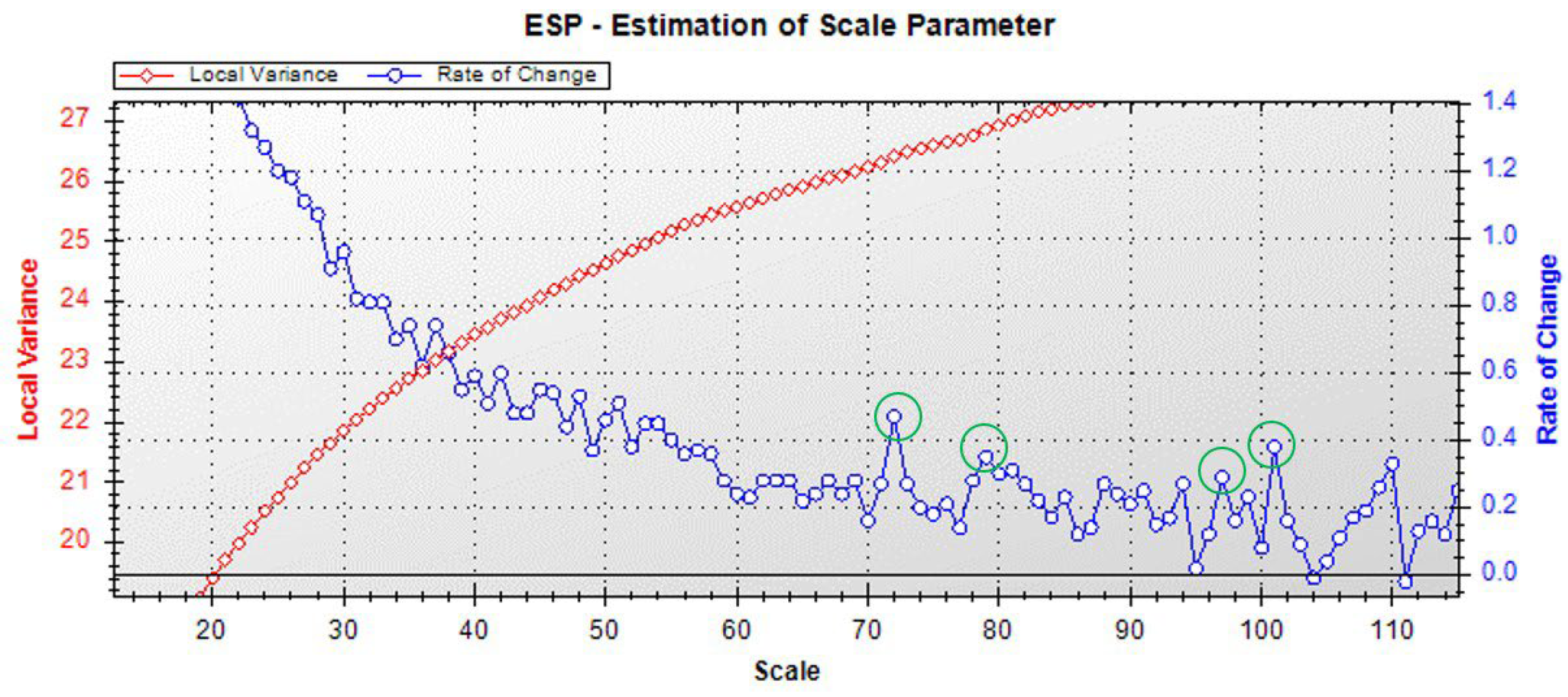

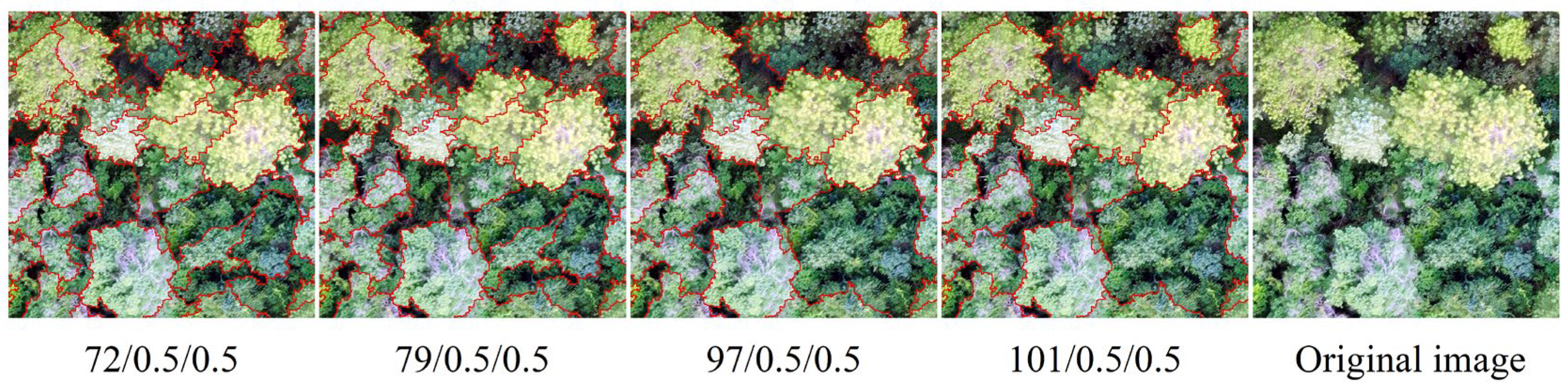

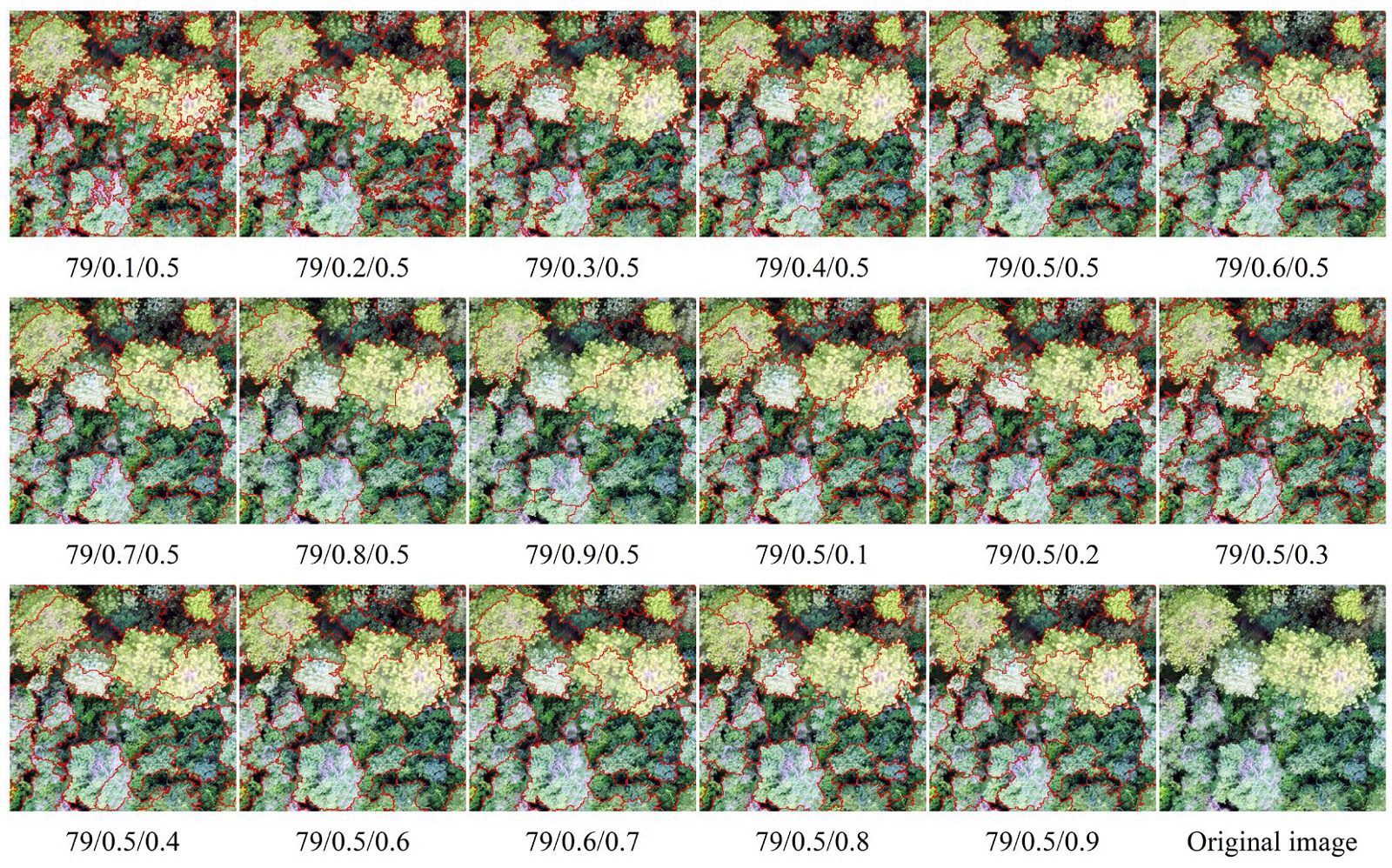

2.4. Multi-Scale Segmentation

2.5. Object-Oriented Classification

2.6. Features Collection

2.6.1. Vegetation Index Characteristics

2.6.2. Spectral Characteristics

2.6.3. Shape Feature

2.6.4. Texture Characteristics

2.6.5. Canopy Height Feature

2.7. Extraction Method of Forest Structure Parameters

2.7.1. Canopy Density

2.7.2. Average Crown Width

2.8. Precision Verification

3. Results

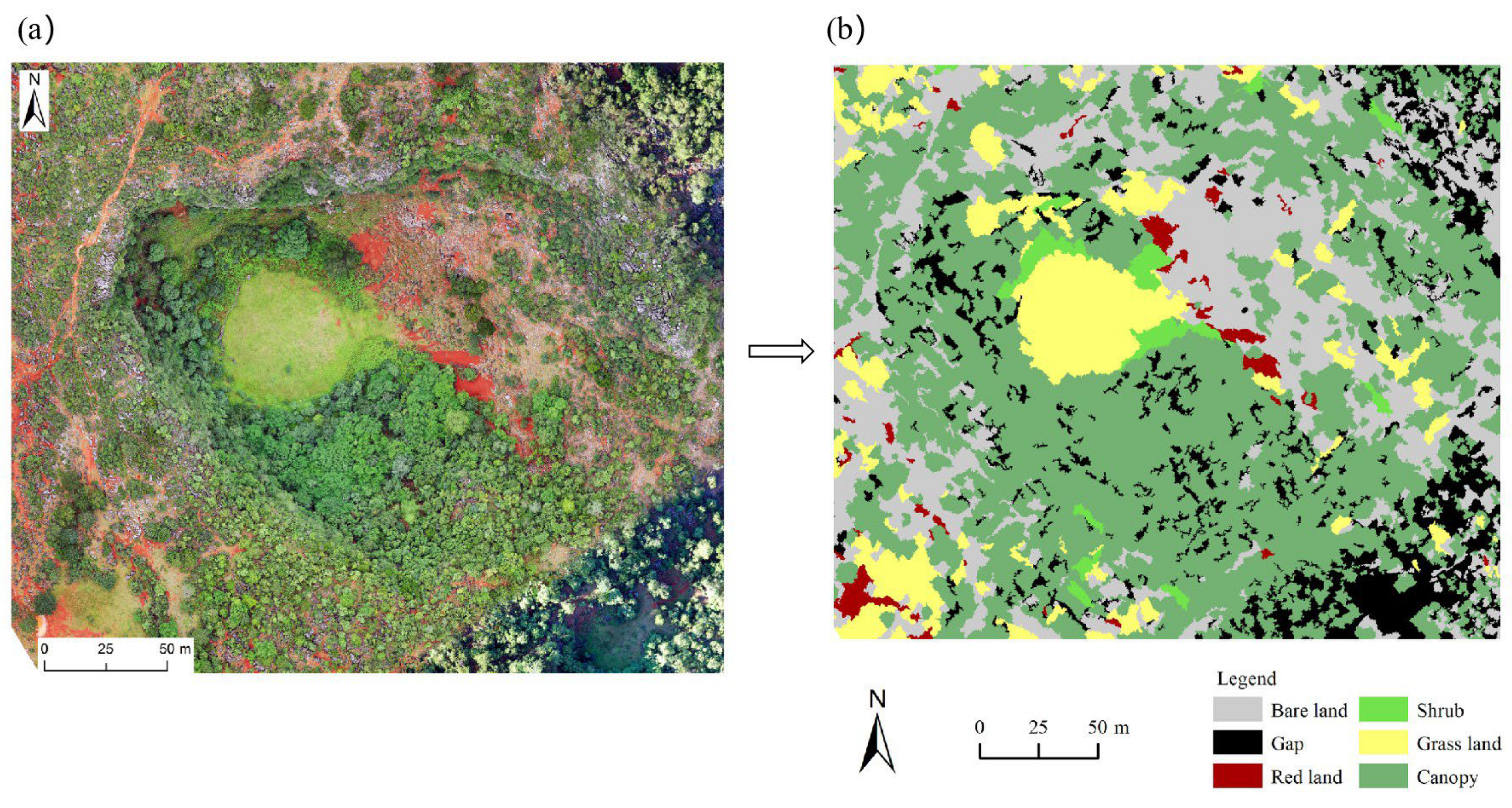

3.1. Canopy Extraction from Tiankengs

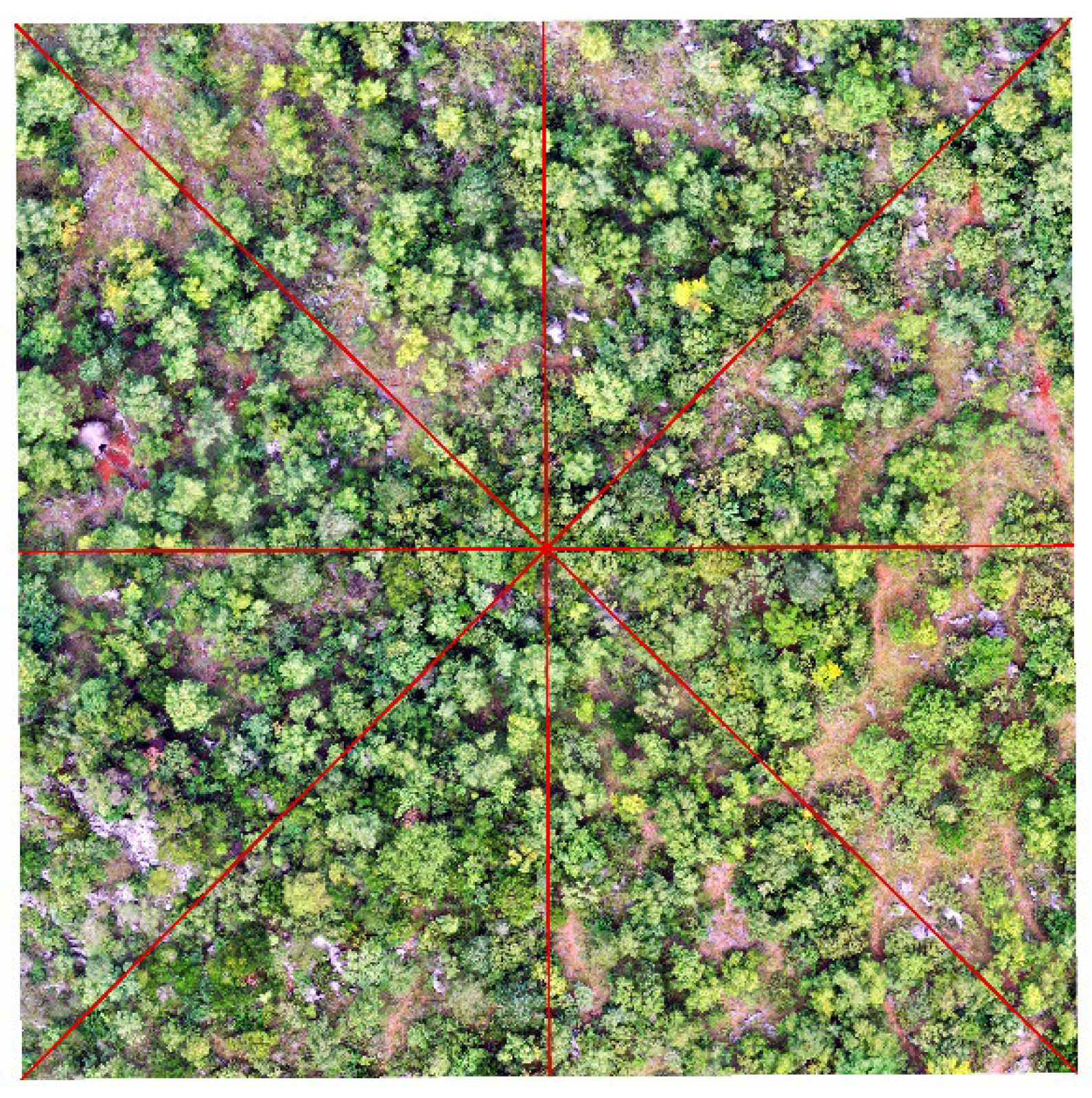

3.2. Canopy Extraction from the Ground Outside the Tiankeng

3.3. Extraction of Forest Stand Parameters

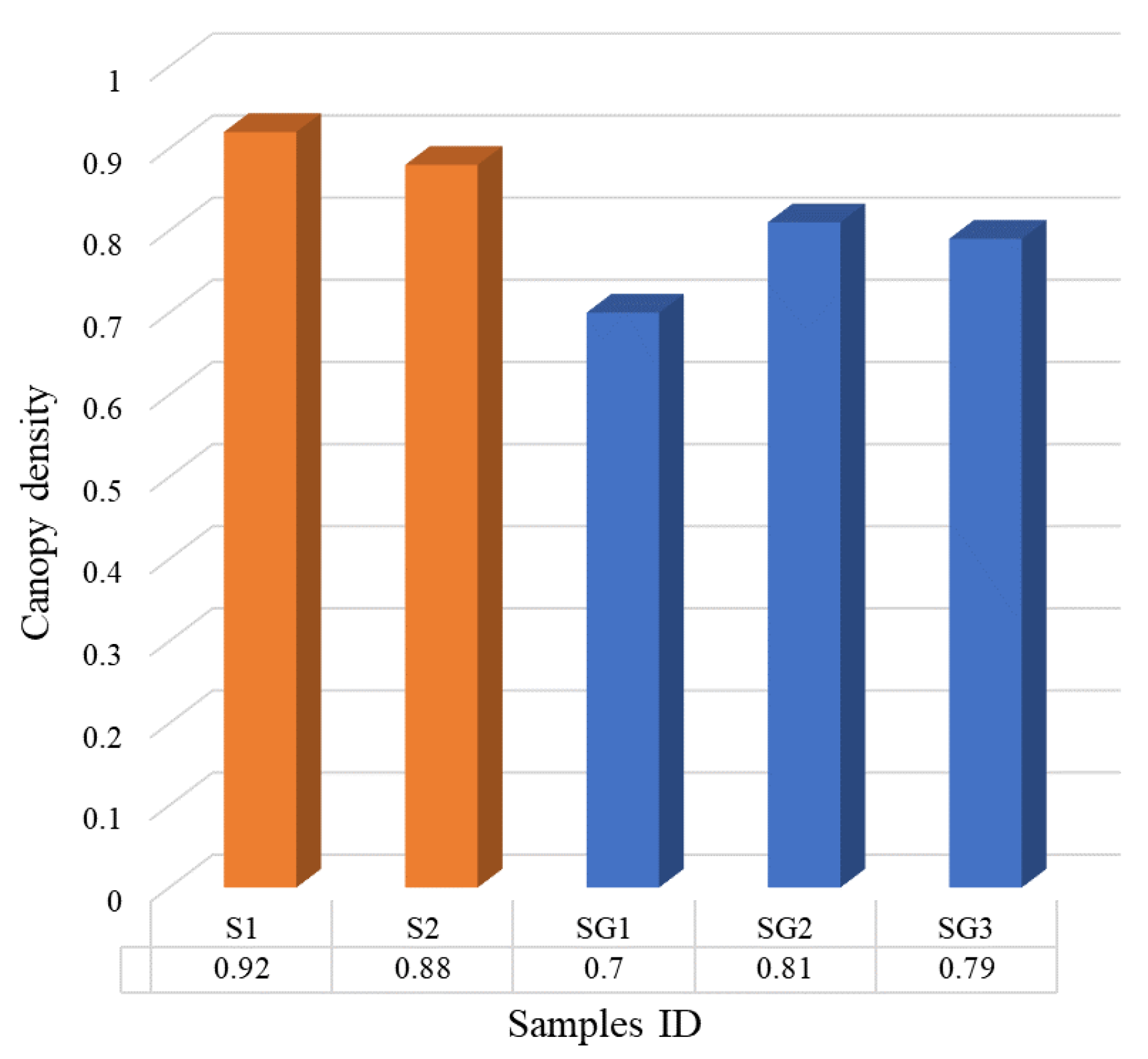

3.3.1. Forest Canopy Density

3.3.2. Average Crown Width

3.4. Accuracy Verification Results

3.4.1. Classification Result Accuracy Verification

3.4.2. Accuracy Verification of Canopy Density Parameters

4. Discussion

4.1. Application Potential of UAV Technology in Tiankeng-like Underground Forests

4.2. Feature Selection Is the Key to Tiankeng Underground Forest Canopy Extraction Based on UAV Images

5. Conclusions

- (1) UAVs are a reliable tool for extracting stand parameters from the underground forests of tiankengs. They overcome the inaccessibility of tiankengs, which makes it possible to further explore the variability of plant functional traits in the underground forests of karst tiankengs. Drone technology thus supports plant ecology research.

- (2) Forest quality inside the tiankeng was better than outside it. Inside the tiankeng, the canopy density was 0.90 and the average crown width was 5.38 m; outside the tiankeng, they were 0.77 and 4.83 m, respectively. Both the canopy density and the crown width of the underground forest were significantly larger than those outside the tiankeng. The enclosed tiankeng microhabitat provided a good habitat for plant communities.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A

| Sample Code | Canopy (m²) | Tree Slit (m²) | Bare Land (m²) | Bare Rock (m²) |
|---|---|---|---|---|
| SG1 | 5640.8 | 906.3 | 1552.3 | - |
| SG2 | 6545.9 | 1128.6 | 125.3 | 299.9 |
| SG3 | 6404.6 | 1033.1 | 662.2 | - |
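
The class areas in Appendix A can be turned into canopy density values with a few lines of arithmetic. The sketch below assumes that canopy density is the canopy area divided by the total classified area of the plot, and that a dash in the table means an area of zero; both are assumptions, since the definition in Section 2.7.1 is not reproduced here. The dictionary and function names are illustrative only.

```python
# Class areas (m²) per sample plot, copied from the Appendix A table.
# Assumption: a dash ("-") in the table is treated as an area of 0.0.
AREAS = {
    "SG1": {"canopy": 5640.8, "tree_slit": 906.3, "bare_land": 1552.3, "bare_rock": 0.0},
    "SG2": {"canopy": 6545.9, "tree_slit": 1128.6, "bare_land": 125.3, "bare_rock": 299.9},
    "SG3": {"canopy": 6404.6, "tree_slit": 1033.1, "bare_land": 662.2, "bare_rock": 0.0},
}


def canopy_density(areas):
    """Canopy area divided by the total classified area of the plot (assumed definition)."""
    total = sum(areas.values())
    return areas["canopy"] / total


densities = {plot: canopy_density(a) for plot, a in AREAS.items()}
mean_density = sum(densities.values()) / len(densities)

for plot, value in densities.items():
    print(f"{plot}: {value:.2f}")
print(f"mean: {mean_density:.2f}")
```

Under these assumptions the three plots give about 0.70, 0.81 and 0.79, with a mean of about 0.77, which agrees with the object-oriented canopy density values reported for the plots outside the tiankeng.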

References

1. Zhu, X.; Waltham, T. Tiankeng: Definition and description. Speleogenesis Evol. Karst Aquifers 2006, 1, 2.
2. Zhu, X.; Chen, W. Tiankengs in the karst of China. Carsologica Sin. 2006, 7–24.
3. Zhu, X.; Zhu, D.; Huang, B.; Chen, W.; Zhang, Y.; Han, D. A brief study on karst tiankeng. Carsologica Sin. 2003, 22, 51–65.
4. Shui, W.; Chen, Y.; Wang, Y.; Su, Z.; Zhang, S. Origination, study progress and prospect of karst tiankeng research in China. Acta Geogr. Sin. 2015, 70, 431–446.
5. Shen, L.; Hou, M.; Xu, W.; Huang, Y.; Liang, S.; Zhang, Y.; Jiang, Z.; Chen, W. Research on flora of seed plants in Dashiwei Karst Tiankeng Group of Leye, Guangxi. Guihaia 2020, 40, 751–764.
6. Shui, W.; Chen, Y.; Jian, X.; Jiang, C.; Wang, Q.; Zeng, Y.; Zhu, S.; Guo, P.; Li, H. Original karst tiankeng with underground virgin forest as an inaccessible refugia originated from a degraded surface flora in Yunnan, China. Sci. Rep. 2022, 12, 9408.
7. Pu, G.; Lv, Y.; Xu, G.; Zeng, D.; Huang, Y. Research progress on karst tiankeng ecosystems. Bot. Rev. 2017, 83, 5–37.
8. Huang, L.; Yang, H.; An, X.; Yu, Y.; Yu, L.; Huang, G.; Liu, X.; Chen, M.; Xue, Y. Species abundance distributions patterns between tiankeng forests and nearby non-tiankeng forests in southwest China. Diversity 2022, 14, 64.
9. Zhu, S.; Jiang, C.; Shui, W.; Guo, P.; Zhang, Y.; Feng, J.; Gao, C.; Bao, Y. Vertical distribution characteristics of plant community in shady slope of degraded tiankeng talus: A case study of Zhanyi Shenxiantang in Yunnan, China. Carsologica Sin. 2020, 31, 1496–1504.
10. Zang, R.; Jiang, Y. Review on the architecture of tropical trees. Sci. Silvae Sin. 1998, 5, 114–121.
11. Poorter, L.; Bongers, L.; Bongers, F. Architecture of 54 moist-forest tree species: Traits, trade-offs, and functional groups. Ecology 2006, 87, 1289–1301.
12. Kohyama, T. Significance of architecture and allometry in saplings. Funct. Ecol. 1987, 1, 399–404.
13. Tan, Y.; Shen, W.; Tian, H.; Fu, Z.; Ye, J.; Zheng, W.; Huang, S. Tree architecture variation of plant communities along altitude and impact factors in Maoer Mountain, Guangxi, China. Chin. J. Appl. Ecol. 2019, 30, 2614–2620.
14. Qin, X.; Li, Z.; Yi, H. Extraction method of tree crown using high-resolution satellite image. Remote Sens. Technol. Appl. 2005, 2, 228–232.
15. Wang, M.; Lin, J.; Lin, Y.; Li, Y. Subalpine coniferous forest crown information automatic extraction based on optical UAV remote sensing. For. Resour. Manag. 2017, 4, 82–88.
16. Sun, Z.; Pan, L.; Sun, Y. Extraction of tree crown parameters from high-density pure Chinese fir plantations based on UAV images. J. Beijing For. Univ. 2020, 42, 20–26.
17. Yan, L.; Liao, X.; Zhou, C.; Fan, B.; Gong, J.; Cui, P.; Zheng, Y.; Tan, X. The impact of UAV remote sensing technology on the industrial development of China: A review. J. Geo-Inf. Sci. 2019, 21, 476–495.
18. Zhu, X.; Meng, L.; Zhang, Y.; Weng, Q.; Morris, J. Tidal and meteorological influences on the growth of invasive spartina alterniflora: Evidence from UAV remote sensing. Remote Sens. 2019, 11, 1208.
19. Chen, Y.; Hou, C.; Tang, Y.; Zhuang, J.; Lin, J.; He, Y.; Guo, Q.; Zhong, Z.; Lei, H.; Luo, S. Citrus tree segmentation from UAV images based on monocular machine vision in a natural orchard environment. Sensors 2019, 19, 5558.
20. Yan, W.; Guan, H.; Cao, L.; Yu, Y.; Li, C.; Lu, J. A self-adaptive mean shift tree-segmentation method using UAV LiDAR data. Remote Sens. 2020, 12, 515.
21. Wu, X.; Shen, X.; Cao, L.; Wang, G.; Cao, F. Assessment of individual tree detection and canopy cover estimation using Unmanned Aerial Vehicle based Light Detection and Ranging (UAV-LiDAR) data in planted forests. Remote Sens. 2019, 11, 908.
22. Yan, D.; Liu, Z. Application of UAV-Based Multi-Angle hyperspectral remote sensing in fine vegetation classification. Remote Sens. 2019, 11, 2753.
23. Avtar, R.; Suab, S.A.; Syukur, M.S.; Korom, A.; Umarhadi, D.A.; Yunus, A.P. Assessing the influence of UAV altitude on extracted biophysical parameters of Young Oil Palm. Remote Sens. 2020, 12, 3030.
24. Chen, A.; Yang, X.; Xu, B.; Jin, Y.; Zhang, W.; Guo, J.; Xing, X.; Yang, D. Research on recognition methods of elm sparse forest based on object-based image analysis and deep learning. J. Geo-Inf. Sci. 2020, 22, 1897–1909.
25. Li, Q.; Liu, J.; Mi, X.; Yang, J.; Yu, T. Object-oriented crop classification for GF-6 WFV remote sensing images based on Convolutional Neural. Natl. Remote Sens. Bull. 2021, 25, 549–558.
26. Ma, F.; Xu, F.; Sun, C. Land-use information of object-oriented classification by UAV image. J. Appl. Sci. 2021, 39, 312–320.
27. Zhu, Y.; Zeng, Y.; Zhang, M. Extract of land use/cover information based on HJ satellites data and object-oriented classification. Trans. Chin. Soc. Agric. Eng. 2017, 33, 258–265.
28. Chang, C.; Zhao, G.; Wang, L.; Zhu, X.; Gao, Z. Land use classification based on RS object-oriented method in coastal spectral confusion region. Trans. Chin. Soc. Agric. Eng. 2012, 28, 226–231.
29. Sun, Z.; Shen, W.; Wei, B.; Liu, X.; Su, W.; Zhang, C.; Yang, J. Object-oriented land cover classification using HJ-1 remote sensing imagery. Sci. China Earth Sci. 2010, 53, 34–44.
30. Zhang, S.; Wang, C.; Li, J.; Zhang, Z. An object-oriented and variogram based method of automatic extraction of tea planting area from high resolution remote sensing imagery. Remote Sens. Inf. 2021, 36, 126–136.
31. Liu, K.; Gong, H.; Cao, J.; Zhu, Y. Comparison of mangrove remote sensing classification based on multi-type UAV data. Trop. Geogr. 2019, 39, 492–501.
32. Pont, T.J.; Arbelaez, P.A.; Barron, J.; Marqués, A.F.; Malik, J. Multiscale combinatorial grouping for image segmentation and object proposal generation. IEEE T. Pattern Anal. 2017, 39, 128–140.
33. Collins, M.J.; Dymond, C.; Johnson, E.A. Mapping subalpine forest types using networks of nearest neighbour classifiers. Int. J. Remote Sens. 2010, 25, 1701–1721.
34. Han, N.; Du, H.; Zhou, G.; Sun, X.; Ge, H.; Xu, X. Object-based classification using SPOT-5 imagery for Moso bamboo forest mapping. Int. J. Remote Sens. 2014, 35, 1126–1142.
35. Zhang, W.; Qi, J.; Wan, P.; Wang, H.; Xie, D.; Wang, X.; Yan, G. An easy-to-use airborne LiDAR data filtering method based on cloth simulation. Remote Sens. 2016, 8, 501.
36. Benz, U.C.; Hofmann, P.; Willhauck, G.; Lingenfelder, I.; Heynen, M. Multi-resolution, object-oriented fuzzy analysis of remote sensing data for GIS-ready information. ISPRS J. Photogramm. 2004, 58, 239–258.
37. Chen, Y.; Feng, T.; Shi, P.; Wang, J. Classification of remote sensing image based on object-oriented and class rules. Geomat. Inf. Sci. Wuhan Univ. 2006, 4, 316–320.
38. Su, W.; Li, J.; Chen, Y.; Zhang, J.; Hu, D.; Liu, C. Object-oriented urban land-cover classification of multi-scale image segmentation method-a case study in Kuala Lumpur city center, Malaysia. Natl. Remote Sens. Bull. 2007, 4, 521–530.
39. Ma, Y.; Ming, D.; Yang, H. Scale estimation of object-oriented image analysis based on spectral-spatial statistics. J. Remote Sens. 2017, 21, 566–578.
40. Dragut, L.; Tiede, D.; Levick, S.R. ESP: A tool to estimate scale parameter for multiresolution image segmentation of remotely sensed data. Int. J. Geogr. Inf. Sci. 2010, 24, 859–871.
41. Dong, M.; Su, J.; Liu, G.; Yang, J.; Chen, X.; Tian, L.; Wang, M. Extraction of tobacco planting areas from UAV remote sensing imagery by object-oriented classification method. Sci. Surv. Mapp. 2014, 39, 87–90.
42. Liu, Y.; Yu, X.; Fan, J.; Zhou, J.; Cheng, H.; Yao, G.; Meng, F.; Jin, F. Rapid estimation of rural homestead area in Western China based on UVA imagery and object-oriented method. Bull. Surv. Mapp. 2022, 125–129.
43. Altman, N.S. An introduction to kernel and nearest-neighbor nonparametric regression. Am. Stat. 1992, 46, 175–185.
44. Maxwell, A.E.; Warner, T.A.; Fang, F. Implementation of machine-learning classification in remote sensing: An applied review. Int. J. Remote Sens. 2018, 39, 2784–2817.
45. Maselli, F.; Chirici, G.; Bottai, L.; Corona, P.; Marchetti, M. Estimation of mediterranean forest attributes by the application of k-NN procedures to multitemporal landsat ETM+Images. Int. J. Remote Sens. 2005, 26, 3781–3796.
46. Wu, X.; Kumar, V.; Ross, Q.J.; Ghosh, J.; Yang, Q.; Motoda, H.; McLachlan, G.J.; Ng, A.; Liu, B.; Yu, P.; et al. Top 10 algorithms in data mining. Knowl. Inf. Syst. 2008, 14, 1–37.
47. Zhang, Z.; Huang, Y.; Wang, H. A new KNN classification approach. Comput. Sci. 2008, 35, 170–172.
48. Mui, A.; He, Y.; Weng, Q. An object-based approach to delineate wetlands across landscapes of varied disturbance with high spatial resolution satellite imagery. ISPRS J. Photogramm. 2015, 109, 30–46.
49. Zeng, Y.; Yang, Y.; Zhao, L. Pseudo nearest neighbor rule for pattern classification. Expert Syst. Appl. 2009, 36, 3587–3595.
50. Zhang, Z.; Liu, W.; Li, X.; Zhu, J.; Zhang, H.; Yang, D.; Xu, X. The spatial distribution pattern of rock desertification area based on Unmanned Aerial Vehicle imagery and object-oriented classification method. J. Geo-Inf. Sci. 2020, 22, 2436–2444.
51. Huang, S.; Xu, W.; Xiong, Y.; Wu, C.; Dai, F.; Xu, H.; Wang, L.; Kou, W. Combining Textures and Spatial Features to Extract Tea Plantations Based on Object-Oriented Method by Using Multispectral Image. Spectrosc. Spect. Anal. 2021, 41, 2565–2571.
52. Woebbecke, D.M.; Meyer, G.E.; Von Bargen, K.; Mortensen, D.A. Color indices for weed identification under various soil, residue, and lighting conditions. Trans. ASAE 1995, 38, 259–269.
53. Kazmi, W.; Garcia-Ruiz, F.J.; Nielsen, J.; Rasmussen, J.; Andersen, J.H. Detecting creeping thistle in sugar beet fields using vegetation indices. Comput. Electron. Agr. 2015, 112, 10–19.
54. Woebbecke, D.M.; Meyer, G.E.; Bargen, K.V.; Mortensen, D.A. Plant species identification, size, and enumeration using machine vision techniques on near-binary images. Proc. SPIE-Int. Soc. Opt. Eng. 1993, 1836, 208–219.
55. Hunt, E.R.; Cavigelli, M.; Daughtry, C.S.T.; Mcmurtrey, J.E.; Walthall, C.L. Evaluation of digital photography from model aircraft for remote sensing of crop biomass and nitrogen status. Precis. Agric. 2005, 6, 359–378.
56. Xie, B.; Yang, W.; Wang, F. A new estimation method for fractional vegetation cover based on UVA visual light spectrum. Sci. Surv. Mapp. 2020, 45, 72–77.
57. Verrelst, J.; Schaepman, M.E.; Koetz, B.; Kneubühler, M. Angular sensitivity analysis of vegetation indices derived from CHRIS/PROBA data. Remote Sens. Environ. 2008, 112, 2341–2353.
58. Gamon, J.A.; Surfus, J.S. Assessing Leaf pigment content and activity with a reflectometer. New Phytol. 1999, 143, 105–117.
59. Wang, X.; Wang, M.; Wang, S.; Wu, Y. Extraction of vegetation information from visible unmanned aerial vehicle images. Trans. Chin. Soc. Agric. Eng. 2015, 31, 152–159.
60. Kwak, G.H.; Park, N.W. Impact of texture information on crop classification with machine learning and UAV images. Appl. Sci. 2019, 9, 643.
61. Castro, A.; Peña, J.; Torres-Sánchez, J.; Jiménez-Brenes, F.; López-Granados, F. Mapping cynodon dactylon infesting cover crops with an automatic decision tree-OBIA procedure and UAV imagery for precision viticulture. Remote Sens. 2019, 12, 56.
62. Ojala, T.; Pietikainen, M.; Maenpaa, T. Multiresolution gray-scale and rotation invariant texture classification with local binary patterns. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 971–987.
63. Li, Y.; Zhang, B.; Qin, S.; Li, S.; Huang, X. Review of research and application of forest canopy closure and its measuring methods. World For. Res. 2008, 21, 40–46.
64. Fiala, A.C.S.; Garman, S.L.; Gray, A.N. Comparison of five canopy cover estimation techniques in the western Oregon Cascades. Forest Ecol. Manag. 2006, 232, 188–197.
65. Landis, J.R.; Koch, G.G. The measurement of observer agreement for categorical data. Biometrics 1977, 33, 159–174.
66. Huang, X.; Xia, K.; Feng, H.; Yang, Y.; Du, X. Research on individual tree crown detection and segmentation using UAV imaging and mask R-CNN. J. For. Eng. 2021, 6, 133–140.
67. Guo, X.; Liu, Q.; Sharma, R.P.; Chen, Q.; Ye, Q.; Tang, S.; Fu, L. Tree recognition on the plantation using UAV images with ultrahigh spatial resolution in a complex environment. Remote Sens. 2021, 13, 4122.
68. Yang, K.; Zhang, H.; Wang, F.; Lai, R. Extraction of Broad-Leaved tree crown based on UAV visible images and OBIA-RF model: A case study for Chinese Olive Trees. Remote Sens. 2022, 14, 2469.
69. Li, D.; Zhang, J.; Zhao, M. Extraction of stand factors in UAV image based on FCM and watershed algorithm. Sci. Silvae Sin. 2019, 55, 180–187.
70. Adhikari, A.; Kumar, M.; Agrawal, S.; Raghavendra, S. An integrated object and machine learning approach for tree canopy extraction from UAV datasets. J. Indian Soc. Remote 2022, 49, 471–478.
71. Liu, L.; Xie, Y.; Gao, X.; Cheng, X.; Huang, H.; Zhang, J. A new threshold-based method for extracting canopy temperature from thermal infrared images of Cork Oak Plantations. Remote Sens. 2021, 13, 5028.
72. Fraser, B.; Congalton, R. Evaluating the effectiveness of Unmanned Aerial Systems (UAS) for collecting thematic map accuracy assessment reference data in New England Forest. Forests 2019, 10, 24.
73. Guo, P.; Shui, W.; Jiang, C.; Zhu, S.; Zhang, Y.; Feng, J.; Chen, Y. Niche characteristics of understory dominant species of talus slope in degraded tiankeng. Chin. J. Appl. Ecol. 2019, 30, 3635–3645.
74. Shui, W.; Chen, Y.; Jian, X.; Jiang, C.; Wang, Q.; Guo, P. Spatial pattern of plant community in original karst tiankeng: A case study of Zhanyi tiankeng in Yunnan, China. Chin. J. Appl. Ecol. 2018, 29, 4–14.
75. Jian, X.; Shui, W.; Wang, Y.; Wang, Q.; Chen, Y.; Jiang, C.; Xiang, Z. Species diversity and stability of grassland plant community in heavily-degraded karst tiankeng: A case study of Zhanyi tiankeng in Yunnan, China. Acta Ecol. Sin. 2018, 38, 4704–4714.
76. Bátori, Z.; Erdős, L.; Gajdács, M.; Barta, K.; Tobak, Z.; Frei, K.; Tolgyesi, C. Managing climate change microrefugia for vascular plants in forested karst landscapes. Forest Ecol. Manag. 2021, 496, 119446.
77. Yang, G.; Peng, C.; Liu, Y.; Dong, F. Tiankeng: An ideal place for climate warming research on forest ecosystems. Environ. Earth Sci. 2019, 78, 64.
78. Su, Y.; Tang, Q.; Mo, F.; Xue, Y. Karst tiankengs as refugia for indigenous tree flora amidst a degraded landscape in southwestern China. Sci. Rep. 2017, 7, 4249.
79. Jin, Z.; Cao, S.; Wang, L.; Sun, W. Study on extraction of tree crown information from UVA visible light image of Piceaschrenkiana var. Tianschanica forest. For. Resour. Manag. 2020, 125–135.
80. Chung, C.; Wang, J.; Deng, S.; Huang, C. Analysis of canopy gaps of Coastal broadleaf forest plantations in Northeast Taiwan using UAV Lidar and the Weibull distribution. Remote Sens. 2022, 14, 667.
81. Jin, C.; Oh, C.; Shin, S.; Njungwi, N.W.; Choi, C. A Comparative study to evaluate accuracy on canopy height and density using UAV, ALS, and fieldwork. Remote Sens. 2020, 11, 241.
82. Wang, X.; Huang, H.; Gong, P.; Liu, C.; Li, C.; Li, W. Forest Canopy Height extraction in rugged areas with ICESat/GLAS data. IEEE T. Geosci. Remote 2014, 52, 4650–4657.

| Vegetation Index | Description |
|---|---|
| Excess green [52,53] | |
| Normalized green-blue difference index [52,54] | |
| Normalized green-red difference index [54,55] | |
| Red-green-blue ratio index [56] | |
| Red-green ratio index [57,58] | |
| Visible-band difference vegetation index [59] | |
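
The formulas for these indices did not survive in the table above, so the following is only a minimal sketch using the definitions commonly given in the literature for five of them; the authors' exact formulations (for example, whether bands are first normalized to chromatic coordinates) may differ. The red-green-blue ratio index is left out because its definition cannot be recovered from the text. The function name and the zero-denominator guard are illustrative choices.

```python
def visible_band_indices(red, green, blue):
    """Commonly used visible-band vegetation indices for one pixel or object mean.

    Inputs are band values on a common scale (e.g. reflectance in [0, 1]).
    The formulas are standard literature definitions, assumed here because
    the source table no longer shows them.
    """

    def ratio(numerator, denominator):
        # Guard against division by zero for completely dark pixels.
        return numerator / denominator if denominator != 0 else 0.0

    return {
        # Excess green: 2G - R - B
        "EXG": 2 * green - red - blue,
        # Normalized green-blue difference index: (G - B) / (G + B)
        "NGBDI": ratio(green - blue, green + blue),
        # Normalized green-red difference index: (G - R) / (G + R)
        "NGRDI": ratio(green - red, green + red),
        # Red-green ratio index: R / G
        "RGRI": ratio(red, green),
        # Visible-band difference vegetation index: (2G - R - B) / (2G + R + B)
        "VDVI": ratio(2 * green - red - blue, 2 * green + red + blue),
    }


# Example: a green, vegetation-like pixel.
indices = visible_band_indices(red=0.2, green=0.6, blue=0.1)
for name, value in indices.items():
    print(f"{name}: {value:.3f}")
```

For a vegetation-like pixel all of the difference indices are positive and RGRI is below 1, which is the contrast that lets these features separate canopy from bare land or rock.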

| Spectral Characteristics | Description | Explanation |
|---|---|---|
| Mean | The mean is calculated from the values of all n pixels that make up an image object. | |
| Brightness | The sum of the mean values of the layers containing spectral information divided by the number of those layers (that is, the mean of the spectral means of the image object). | |
| Std. dev | The standard deviation is calculated from the values of all n pixels that make up an image object. | |
| Max. diff | The maximum difference between the mean of the Lth layer of an image object and the mean of the Lth layer of the super object. | |

| Shape Feature | Description | Explanation |
|---|---|---|
| Area | For a georeferenced object, the area equals the number of pixels multiplied by the area of each raster pixel; for an object without a reference system, the area is the number of pixels it contains. | |
| Border length | The total length of the boundary of the image object. | |
| Length/Width | The ratio of the length of the image object to its width. The index indicates the narrowness of the object. | |
| Width | The width of the image object. | |
| Border index | The ratio of the border length to twice the sum of the object's length and width. The index reflects the complexity of the object's boundary: the larger the border index, the more irregular the object. | |
| Compactness | The index measures the degree of fullness of the image object. Compactness increases the closer the image object is to a square shape. | |
| Roundness | The index indicates how close the image object is to a circle. | |
| Shape index | Describes the smoothness of the image object boundary. The larger the index, the more fragmented the boundary; the smaller the index, the smoother the boundary. | |

| Texture Features | Formula | Description |
|---|---|---|
| Homogeneity | | Describes the homogeneity of the image. The more the GLCM values are clustered on the diagonal, the higher the homogeneity. |
| Contrast | | Reflects the depth of the image texture grooves and the clarity of the image. The shallower the texture grooves, the smaller the contrast and the lower the clarity of the image; conversely, a large contrast indicates higher clarity. |
| Dissimilarity | | Similar to contrast; reflects the degree of difference within the object. The larger the dissimilarity, the greater the change in local contrast. |
| Entropy | | Measures the complexity of the texture in the image object. The more complex the texture, the higher the entropy; conversely, the entropy decreases. |
| ASM | | Represents the homogeneity and consistency of the image grayscale distribution. When the GLCM values are concentrated near the main diagonal and the grayscale distribution is locally uniform, the ASM value is larger; conversely, if all values of the matrix are equal, the ASM value is smaller. |
| Mean | | Reflects the regularity of the image texture. The larger the mean, the more regular the texture and the easier it is to describe; conversely, the texture is more difficult to describe. |
| Std. dev | | Reflects the deviation between the image element values and the mean. The standard deviation increases as the image grayscale values become larger. |
| Correlation | | Measures the similarity of image element values in the row or column direction, reflecting the local grayscale correlation in the image. Correlation is larger when the matrix element values are nearly uniform; conversely, it is smaller. |
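
Because the formula column of the texture table is empty, the sketch below illustrates how such features are typically derived from a gray-level co-occurrence matrix (GLCM). It uses the standard Haralick-style definitions, which are assumed rather than taken from the paper; the quantization to a few gray levels, the single horizontal offset, and all function names are hypothetical choices made for the example.

```python
import math


def glcm(image, dx=1, dy=0, levels=4):
    """Symmetric, normalized gray-level co-occurrence matrix for one pixel offset."""
    counts = [[0.0] * levels for _ in range(levels)]
    rows, cols = len(image), len(image[0])
    for y in range(rows):
        for x in range(cols):
            ny, nx = y + dy, x + dx
            if 0 <= ny < rows and 0 <= nx < cols:
                i, j = image[y][x], image[ny][nx]
                counts[i][j] += 1
                counts[j][i] += 1  # count the pair in both directions
    total = sum(map(sum, counts))
    return [[c / total for c in row] for row in counts]


def texture_features(p):
    """Standard GLCM texture measures (assumed definitions, not the paper's)."""
    n = len(p)
    cells = [(i, j, p[i][j]) for i in range(n) for j in range(n)]
    mean = sum(i * v for i, _, v in cells)
    variance = sum(v * (i - mean) ** 2 for i, _, v in cells)
    covariance = sum(v * (i - mean) * (j - mean) for i, j, v in cells)
    return {
        "homogeneity": sum(v / (1 + (i - j) ** 2) for i, j, v in cells),
        "contrast": sum(v * (i - j) ** 2 for i, j, v in cells),
        "dissimilarity": sum(v * abs(i - j) for i, j, v in cells),
        "entropy": -sum(v * math.log(v) for _, _, v in cells if v > 0),
        "asm": sum(v * v for _, _, v in cells),
        "mean": mean,
        "std_dev": math.sqrt(variance),
        # Correlation is undefined for a perfectly flat object (zero variance).
        "correlation": covariance / variance if variance > 0 else float("nan"),
    }


# A flat patch versus a checkerboard patch, both quantized to two gray levels.
flat = texture_features(glcm([[1, 1], [1, 1]], levels=2))
checker = texture_features(glcm([[0, 1], [1, 0]], levels=2))
print(flat["homogeneity"], flat["contrast"])
print(checker["homogeneity"], checker["contrast"])
```

The flat patch gives maximal homogeneity and ASM with zero contrast and entropy, while the checkerboard gives lower homogeneity and higher contrast, matching the qualitative behavior described in the table.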

| Samples | Optimal Feature Combination | Canopy Area (m²) |
|---|---|---|
| SG1 | Roundness, Mean Red, Mean CHM, Std. dev Green, Std. dev Blue, GLCM Std. dev, EXG | 5640.87 |
| SG2 | Area, Brightness, Mean Red, Mean Green, Std. dev Red, Std. dev Green, Std. dev Blue, EXG, NGBDI, NGRDI | 6545.99 |
| SG3 | Area, Compactness, Mean CHM, Mean Green, Mean Red, Std. dev Green, Std. dev CHM, GLCM Homogeneity, EXG, NGBDI, NGRDI | 6404.66 |

| Classification (rows) / Reference (columns) | Canopy | Bare Land | Grassland | Road | Tree Slit | Total | User Accuracy |
|---|---|---|---|---|---|---|---|
| Canopy | 865 | 13 | 45 | 13 | 15 | 951 | 0.91 |
| Bare land | 9 | 40 | 11 | 0 | 0 | 60 | 0.67 |
| Grassland | 86 | 2 | 344 | 1 | 6 | 439 | 0.78 |
| Road | 1 | 0 | 0 | 10 | 0 | 11 | 0.91 |
| Tree slit | 8 | 0 | 0 | 0 | 31 | 39 | 0.79 |
| Total | 969 | 55 | 400 | 24 | 52 | 1500 | |
| Producer accuracy | 0.89 | 0.73 | 0.86 | 0.42 | 0.60 | | |

Overall accuracy = 85.6%; Kappa coefficient = 0.72
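
The accuracy measures in this table follow from the confusion matrix by standard formulas, which the sketch below spells out: user accuracy is the diagonal count over the row total, producer accuracy is the diagonal count over the column total, and the Kappa coefficient corrects overall accuracy for chance agreement. The variable and function names are illustrative. The per-class accuracies computed this way match the table; recomputing from the counts as printed gives an overall accuracy of about 86.0% and a Kappa of about 0.73, marginally different from the reported 85.6% and 0.72.

```python
CLASSES = ["Canopy", "Bare land", "Grassland", "Road", "Tree slit"]

# Rows are classification results, columns are reference classes,
# copied from the confusion matrix table above.
MATRIX = [
    [865, 13, 45, 13, 15],
    [9, 40, 11, 0, 0],
    [86, 2, 344, 1, 6],
    [1, 0, 0, 10, 0],
    [8, 0, 0, 0, 31],
]


def accuracy_metrics(matrix):
    """Overall accuracy, Cohen's kappa, and per-class user/producer accuracy."""
    n = sum(map(sum, matrix))
    row_totals = [sum(row) for row in matrix]
    col_totals = [sum(col) for col in zip(*matrix)]
    diagonal = [matrix[i][i] for i in range(len(matrix))]

    overall = sum(diagonal) / n
    # Agreement expected by chance from the row and column marginals.
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / n**2
    kappa = (overall - expected) / (1 - expected)

    user = [d / r for d, r in zip(diagonal, row_totals)]
    producer = [d / c for d, c in zip(diagonal, col_totals)]
    return overall, kappa, user, producer


overall, kappa, user, producer = accuracy_metrics(MATRIX)
print(f"overall accuracy: {overall:.3f}, kappa: {kappa:.3f}")
for name, u, p in zip(CLASSES, user, producer):
    print(f"{name}: user {u:.2f}, producer {p:.2f}")
```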

| Sample | Survey Line 1 | Survey Line 2 | Survey Line 3 | Survey Line 4 | Line Method Value | Object-Oriented Extraction Value | Accuracy (%) |
|---|---|---|---|---|---|---|---|
| SG1 | 0.61 | 0.76 | 0.57 | 0.53 | 0.62 | 0.70 | 87.51 |
| SG2 | 0.84 | 0.68 | 0.54 | 0.62 | 0.67 | 0.81 | 79.53 |
| SG3 | 0.89 | 0.78 | 0.47 | 0.56 | 0.68 | 0.79 | 83.11 |
| Average | - | - | - | - | 0.66 | 0.77 | 83.38 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Shui, W.; Li, H.; Zhang, Y.; Jiang, C.; Zhu, S.; Wang, Q.; Liu, Y.; Zong, S.; Huang, Y.; Ma, M. Is an Unmanned Aerial Vehicle (UAV) Suitable for Extracting the Stand Parameters of Inaccessible Underground Forests of Karst Tiankeng? Remote Sens. 2022, 14, 4128. https://doi.org/10.3390/rs14174128
