Terrestrial and Airborne Structure from Motion Photogrammetry Applied for Change Detection within a Sinkhole in Thuringia, Germany

Abstract

1. Introduction

2. Materials and Methods

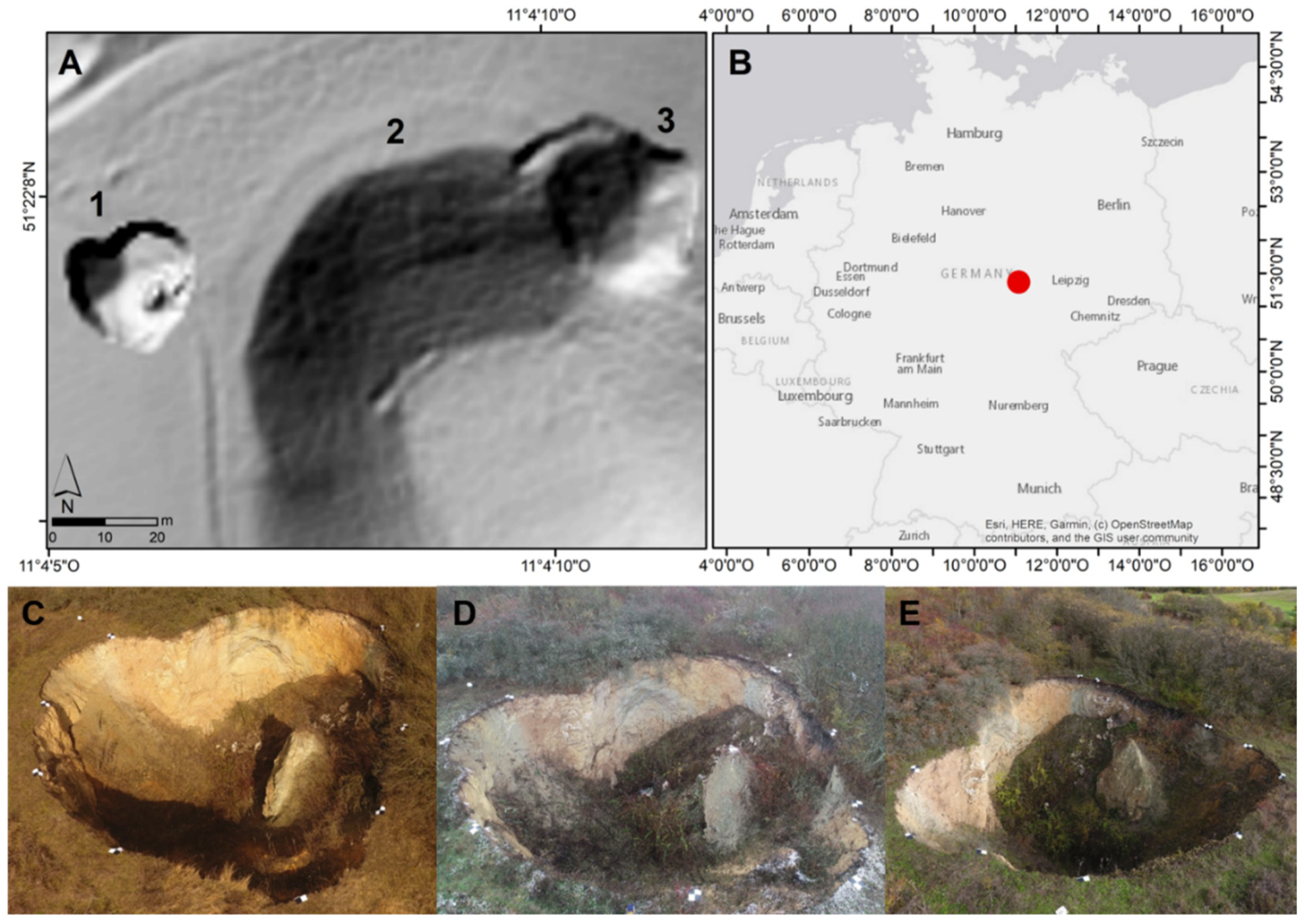

2.1. Study Area

2.2. Data Collection and Preprocessing

2.3. Structure from Motion, Multiview Stereo 3D Reconstruction, and Computation of Precision Maps

2.4. Point Cloud Comparison and Deformation Analysis

3. Results

3.1. Data Collection and Preprocessing

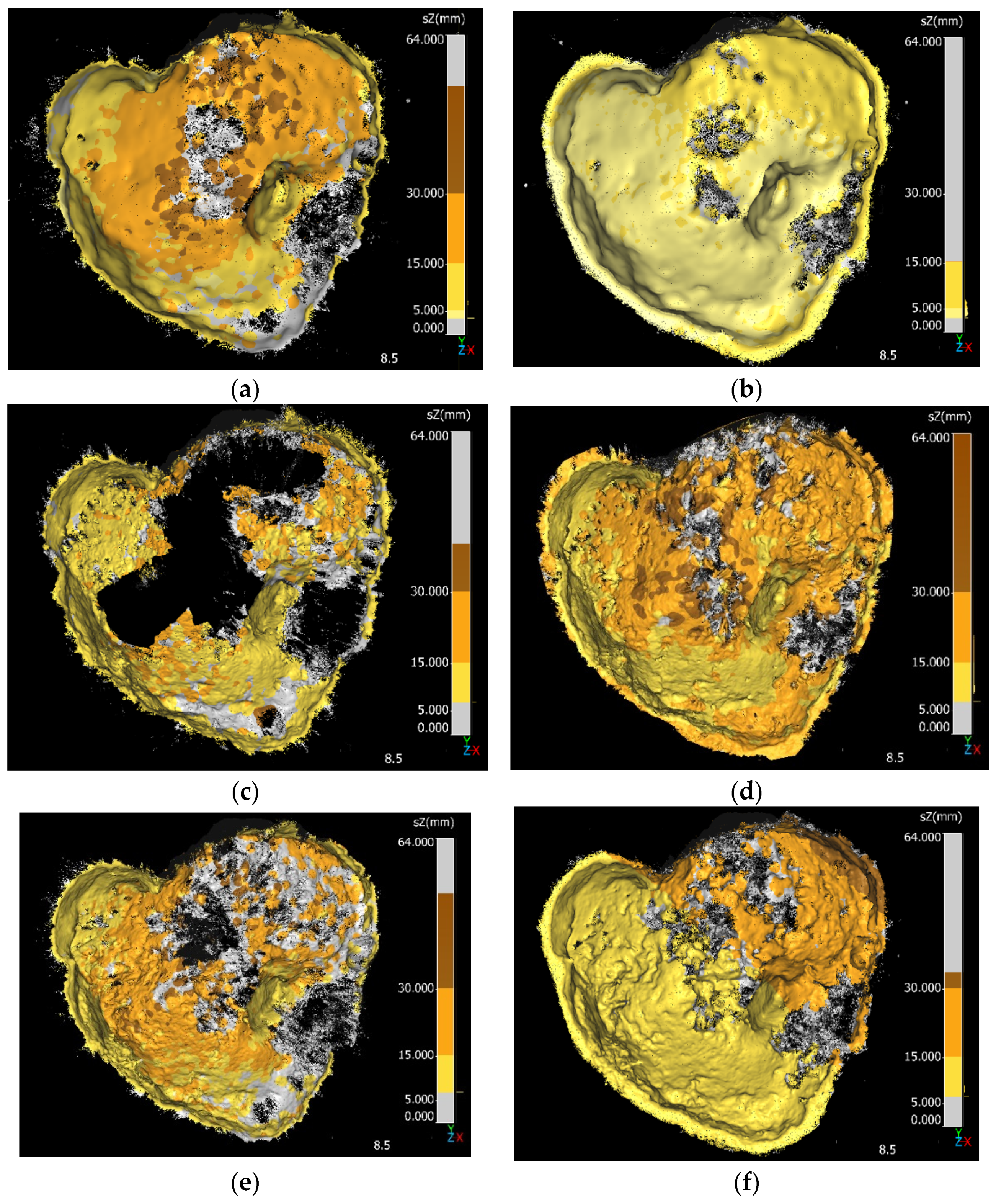

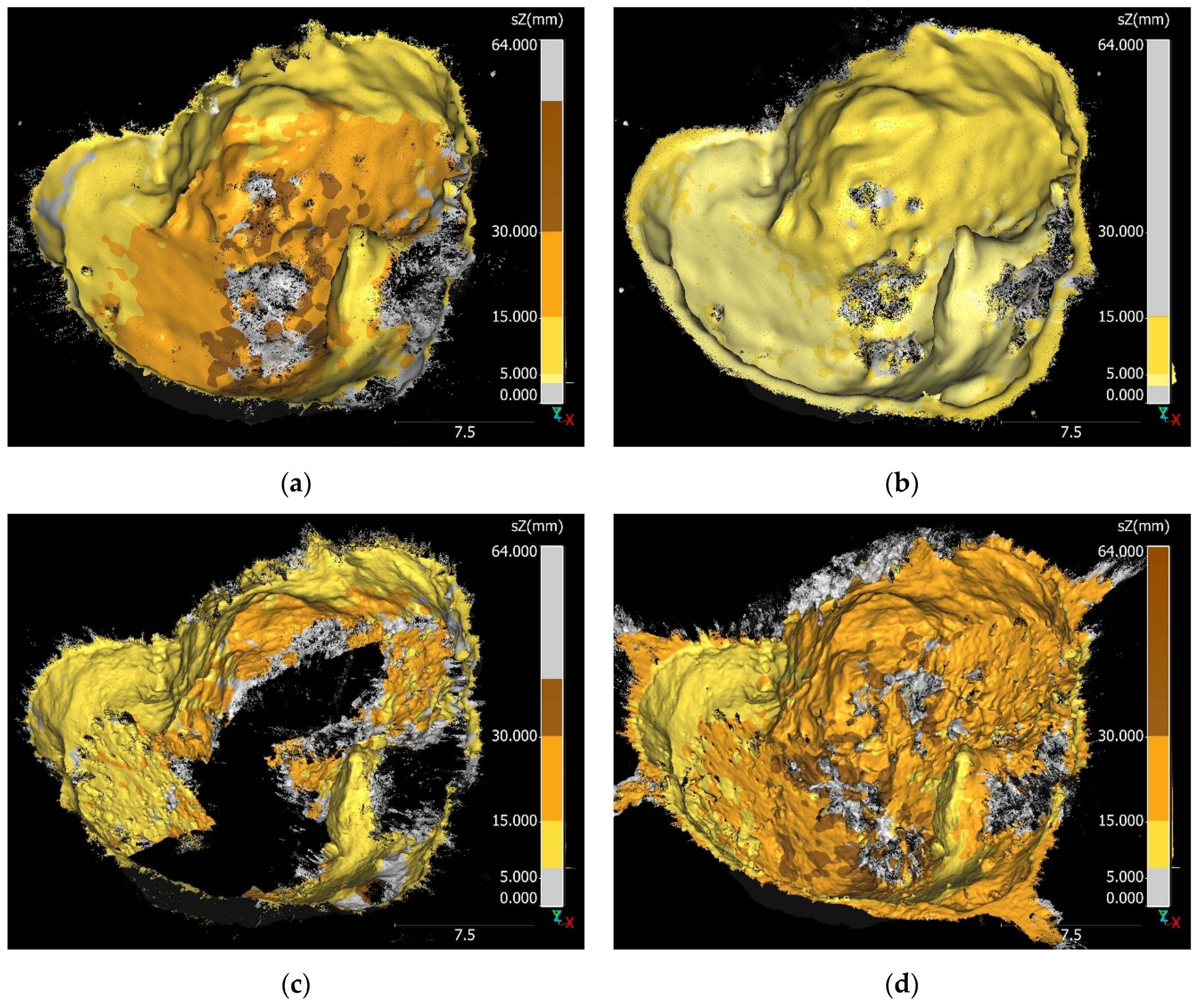

3.2. Structure from Motion, Multiview Stereo 3D Reconstruction, and Computation of Precision Maps

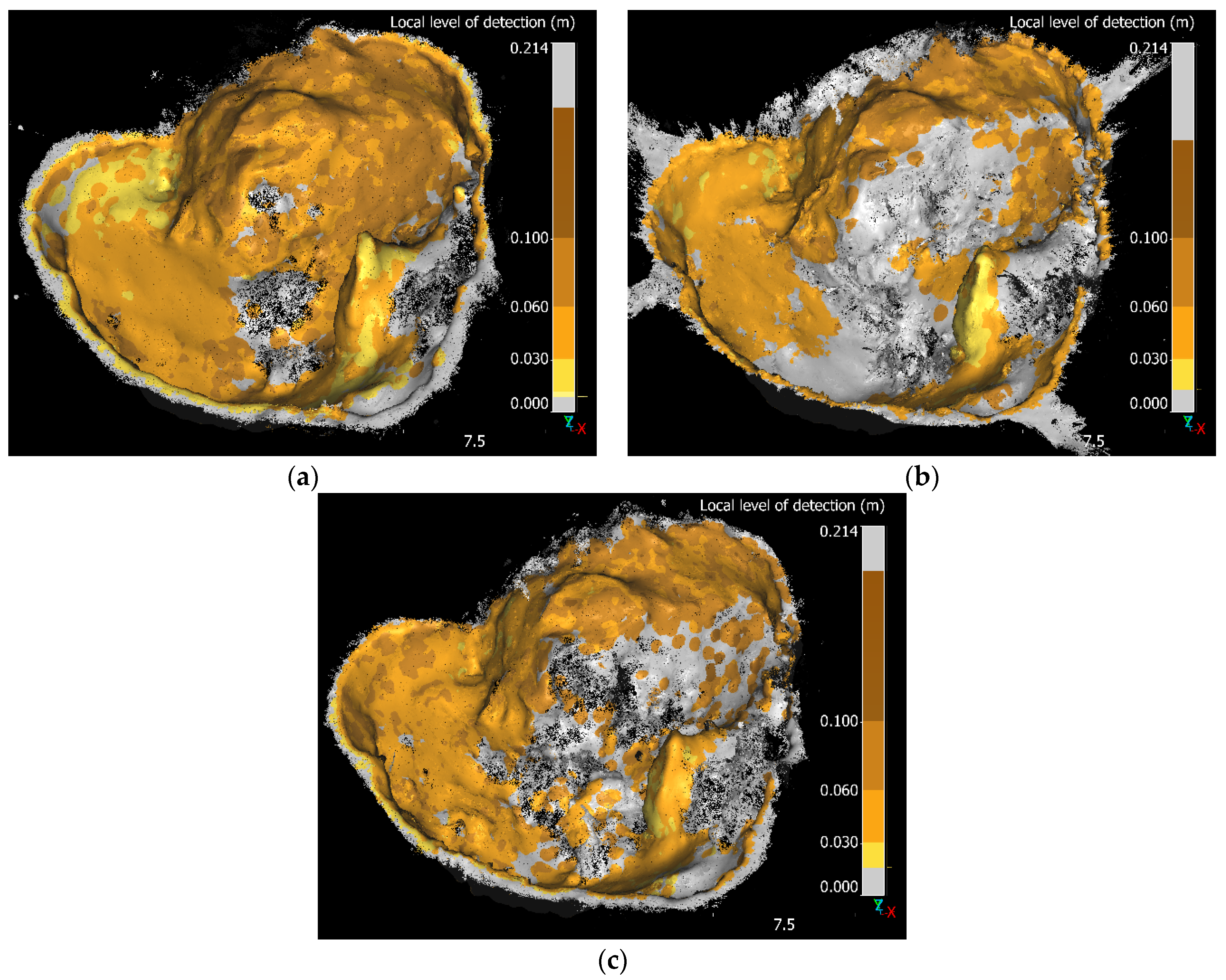

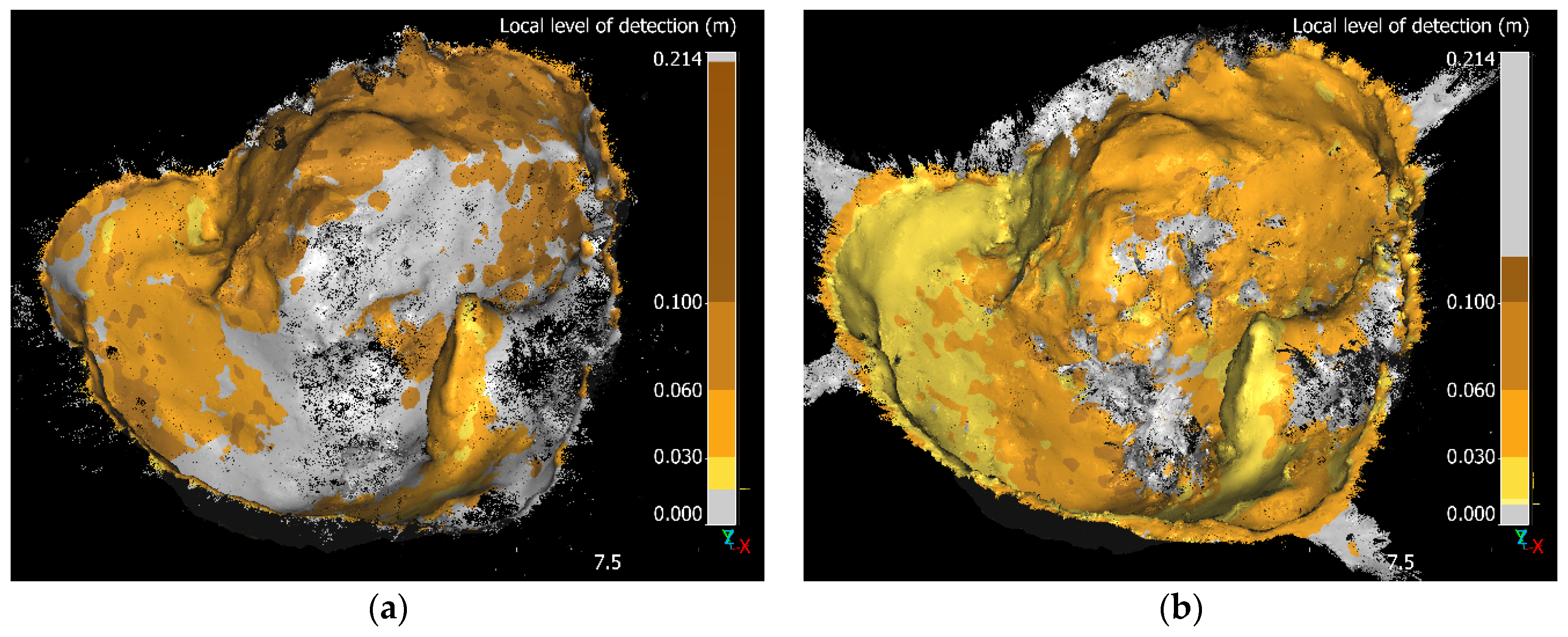

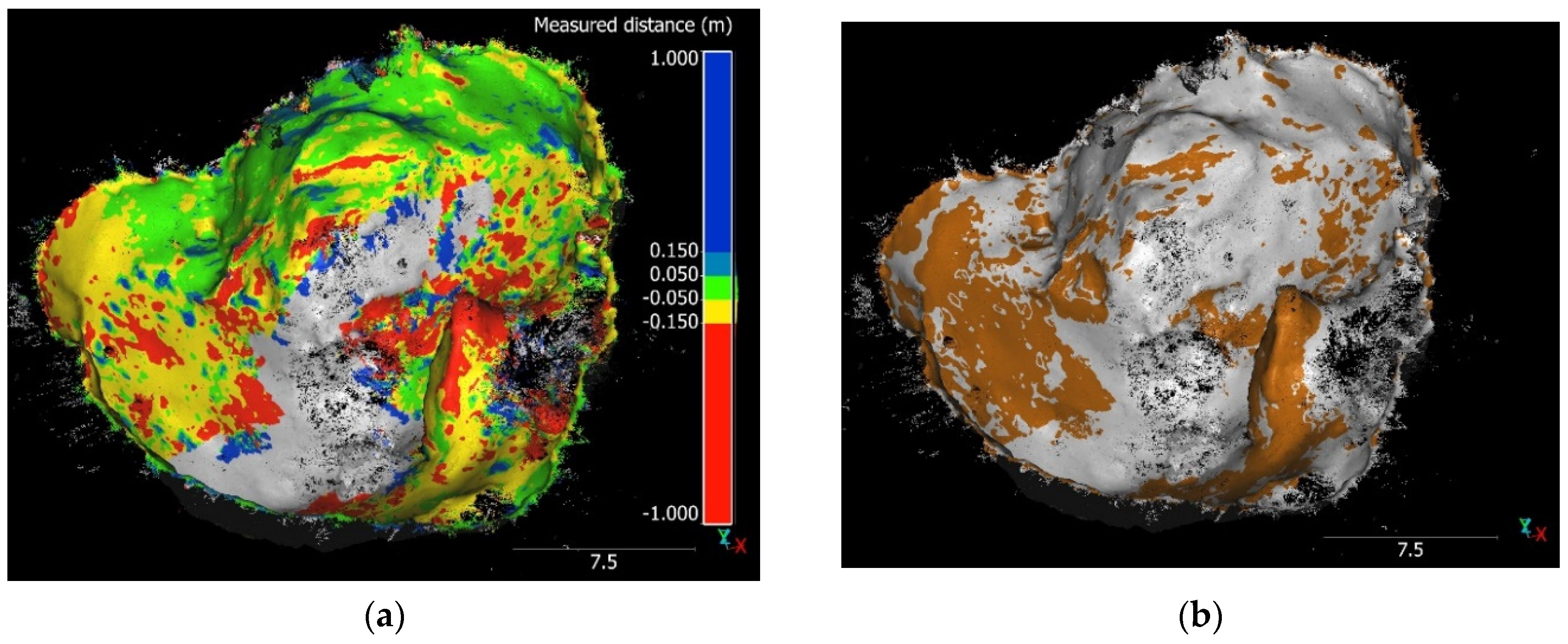

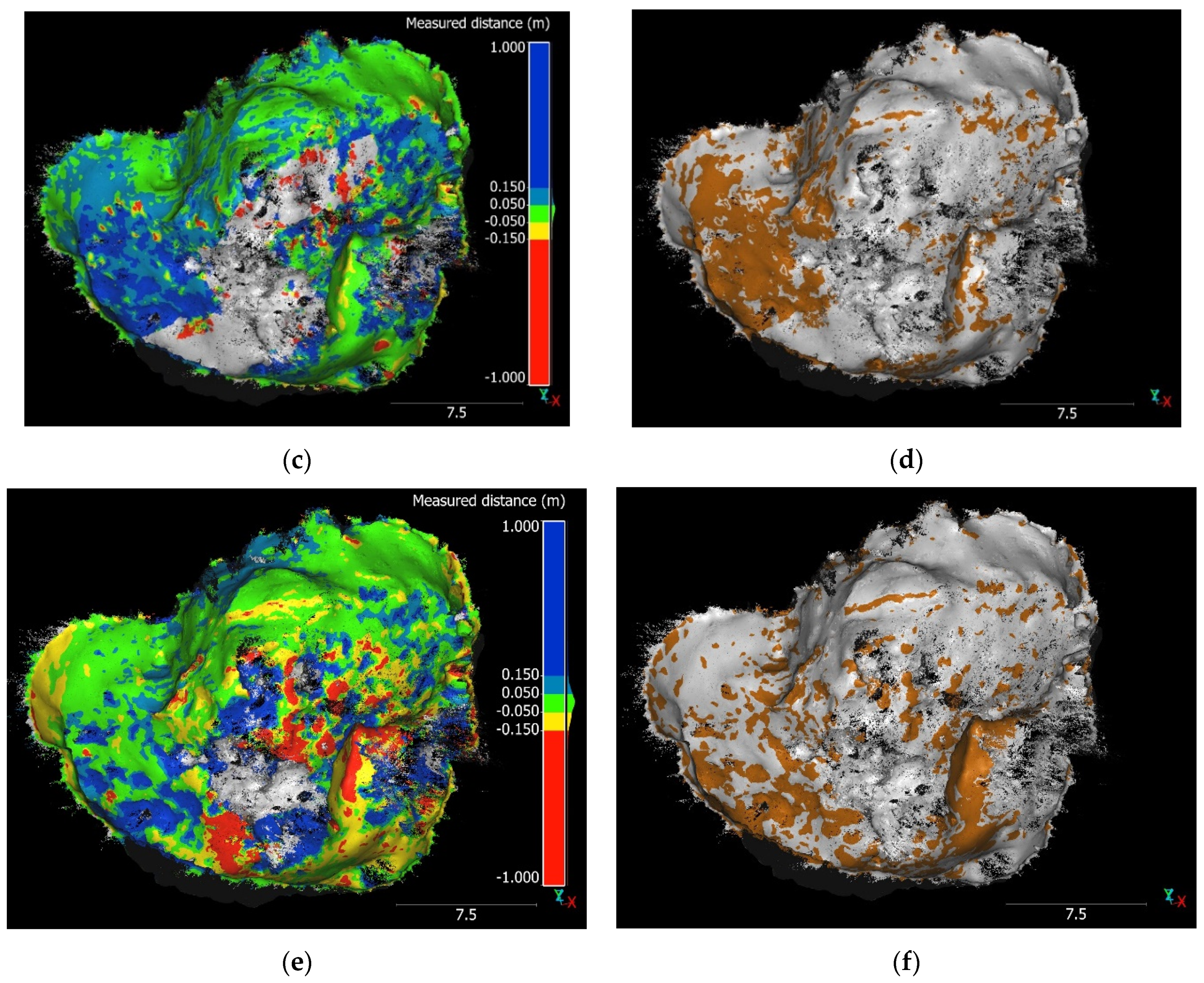

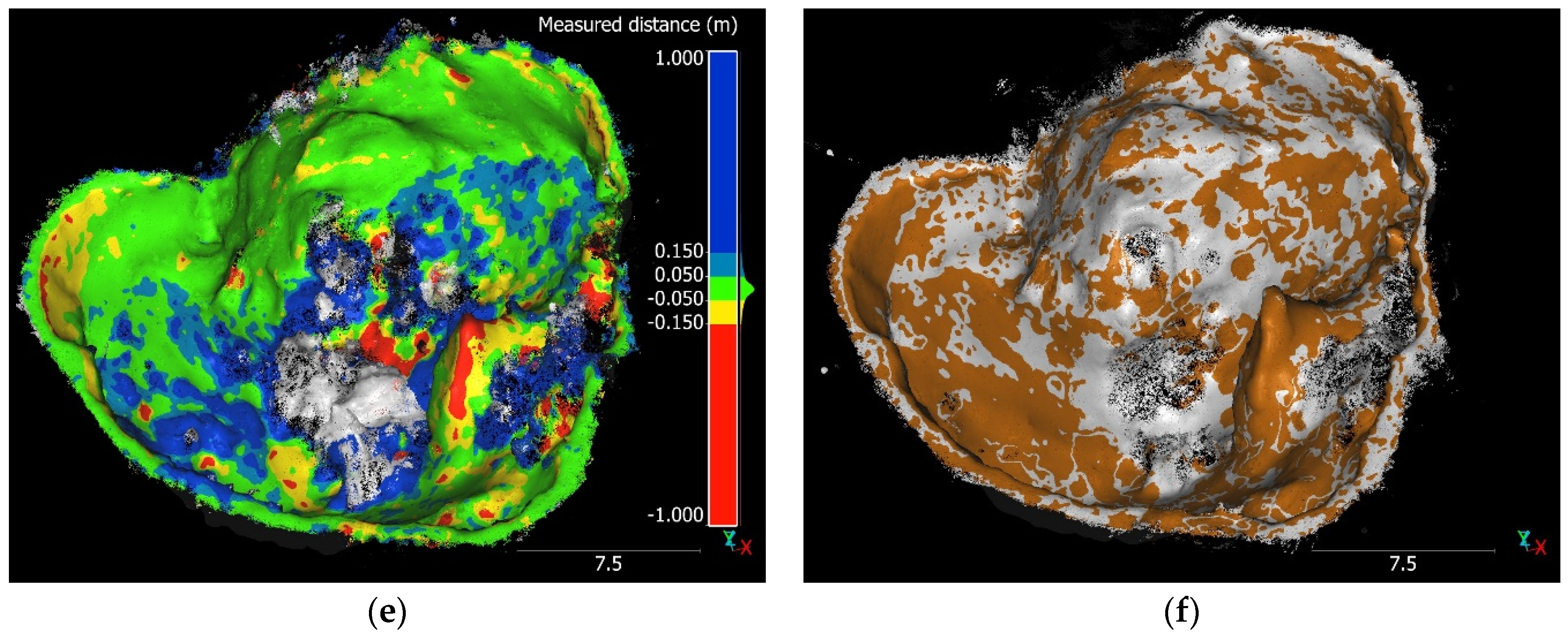

3.3. Point Cloud Comparison and Deformation Analysis

4. Discussion

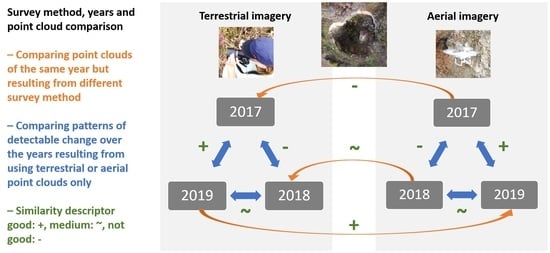

4.1. Comparing Terrestrial and UAV Patterns of Change

4.2. Challenges in Multitemporal/Multisensor Comparison

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

Appendix A.1. Camera Positions and Image Overlap

Appendix A.2. Point Cloud Coverage of the Sinkhole

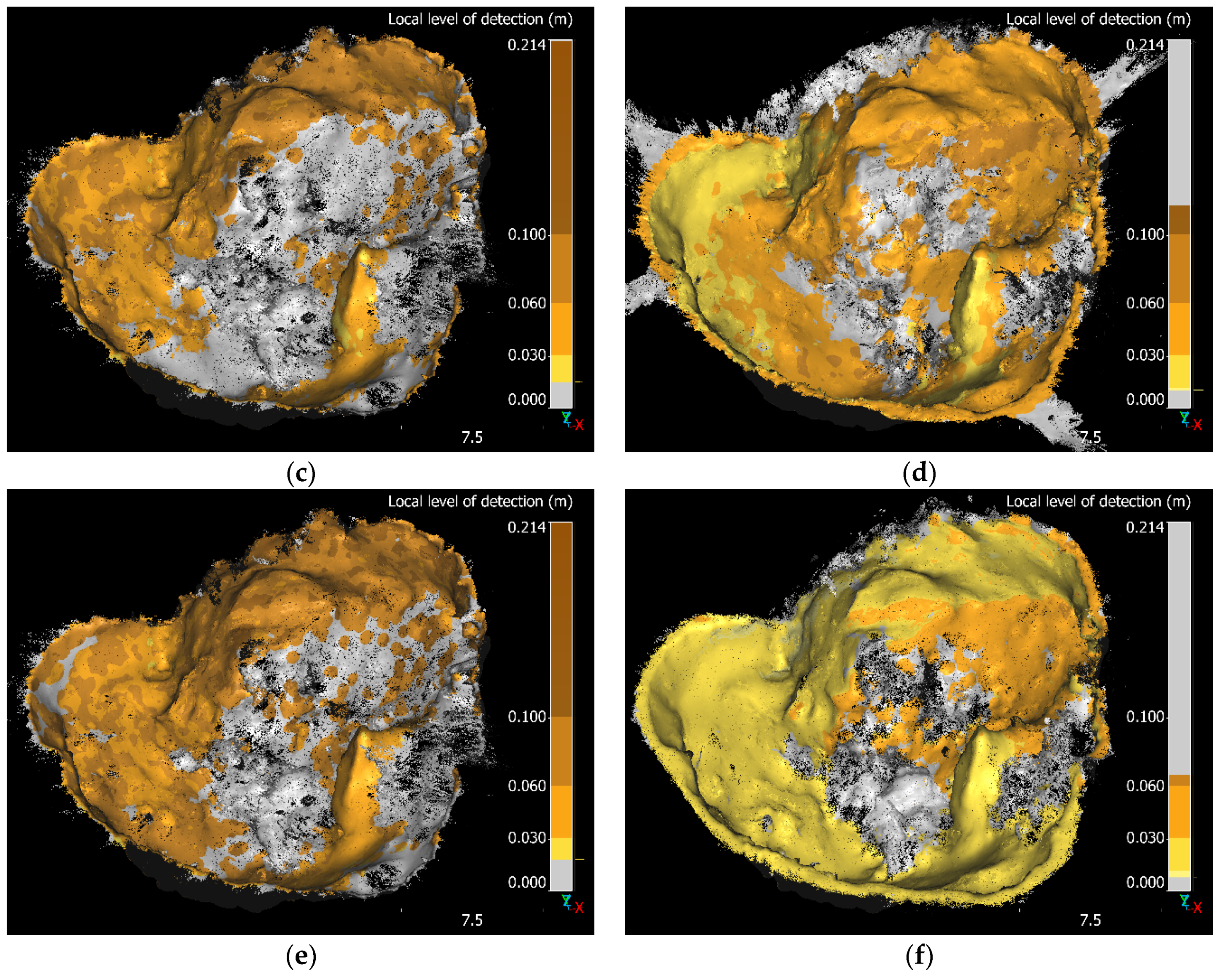

Appendix A.3. Local Levels of Detection of Comparison Pairs


| Date | Illumination Conditions | Ground and Weather Conditions | Number of GCP Targets |
|---|---|---|---|
| 22 March 2017 | Steep sun incidence, shadows visible. | Dry and brown soil, almost no foliage, dry stems; sunny, partly overcast, windy. | 8 |
| 22 November 2018 | Diffuse lighting, overcast. | Vegetation with green foliage, partly overgrown and snowy slopes; foggy with some snow. | 8 |
| 5 November 2019 | Steep sun incidence, shadows visible. | Vegetation with green foliage growing over the bottom and southeastern slope of the sinkhole; overcast, partly sunny, windy. | 10 |

| Sensor | Resolution (pixels) | Focal Length (mm) | Number of Images (2017) | Number of Images (2018) | Number of Images (2019) |
|---|---|---|---|---|---|
| Nikon D3000 | 3872 × 2592 | 18 | 94 | 166 | 178 |
| DJI FC330 | 4000 × 3000 | 4 | 353 | - | 287 |
| DJI FC6310 | 5472 × 3647 | 9 | - | 564 | - |

| Year | Survey Method | Number of Images Used | No. of Points (Sparse Cloud) | No. of Points (Dense Cloud) | Control Points RMSE * (mm) | Mean Point Density (No. of Neighbors/0.2 m) | Coverage (% of Black Pixels) | Point Precision 𝜎X Mean (mm) | Point Precision 𝜎X Std. Dev. (mm) | Point Precision 𝜎Y Mean (mm) | Point Precision 𝜎Y Std. Dev. (mm) | Point Precision 𝜎Z Mean (mm) | Point Precision 𝜎Z Std. Dev. (mm) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 2017 | Aerial | 353 | 37,037 | 2,663,766 | 12 | 101 | 51 | 3 | 0.5 | 4 | 0.5 | 5 | 1.1 |
| 2017 | Terrestrial | 94 | 13,051 | 2,487,248 | 35 | 104 | 58 | 25 | 15.1 | 28 | 14.3 | 15 | 8.1 |
| 2018 | Aerial | 542 | 54,443 | 8,767,159 | 15 | 359 | 51 | 10 | 4.8 | 12 | 6.2 | 17 | 6.8 |
| 2018 | Terrestrial | 165 | 6,326 | 2,267,846 | 25 | 108 | 70 | 20 | 10.1 | 18 | 10.1 | 13 | 3.2 |
| 2019 | Aerial | 278 | 34,612 | 2,673,191 | 12 | 109 | 52 | 6 | 2.5 | 7 | 2.3 | 16 | 8.2 |
| 2019 | Terrestrial | 177 | 21,929 | 2,707,662 | 17 | 117 | 65 | 25 | 12.8 | 25 | 12.2 | 16 | 5.9 |
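The table above reports point precision separately for X, Y and Z. When change is measured along a local surface normal, as in M3C2-based comparisons, these components have to be combined into a single precision along that normal. The sketch below shows one way to do this, assuming the X, Y and Z precision components are uncorrelated; the function name and this simplification are illustrative assumptions, not necessarily the exact computation used in this study.

```python
import math
from typing import Sequence


def precision_along_normal(sigma_xyz: Sequence[float],
                           normal: Sequence[float]) -> float:
    """Combine per-axis precision (sigma_x, sigma_y, sigma_z) along a normal.

    Assumption: the X, Y and Z components are uncorrelated, so the variance
    along the unit normal n is (n_x*sigma_x)^2 + (n_y*sigma_y)^2 + (n_z*sigma_z)^2.
    """
    if len(sigma_xyz) != 3 or len(normal) != 3:
        raise ValueError("expected three components")
    length = math.sqrt(sum(c * c for c in normal))
    if length == 0.0:
        raise ValueError("normal must be non-zero")
    # Normalize so that the result does not depend on the normal's length.
    unit = [c / length for c in normal]
    variance = sum((n * s) ** 2 for n, s in zip(unit, sigma_xyz))
    return math.sqrt(variance)


# Mean 2017 aerial precision from the table above (mm).
sigma_2017_aerial = (3.0, 4.0, 5.0)
print(precision_along_normal(sigma_2017_aerial, (0.0, 0.0, 1.0)))  # 5.0
print(round(precision_along_normal(sigma_2017_aerial, (1.0, 1.0, 0.0)), 2))  # 3.54
```

With the mean 2017 aerial precisions, a vertical normal picks up only 𝜎Z (5 mm), whereas a horizontal normal bisecting X and Y gives about 3.5 mm, which illustrates why the effective precision varies across the steep sinkhole walls.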

| Comparison ID | Reference Cloud | Compared Cloud | Mean Measured Distance, All Points (mm) | Mean Measured Distance, Points with Detectable Change (mm) | Mean Local Level of Detection, All Points (mm) | Mean Local Level of Detection, Points with Detectable Change (mm) | % of Reference Cloud Points with Detectable Change |
|---|---|---|---|---|---|---|---|
| TerrUAV 2017 | 2017 U ¹ | 2017 T ² | 40 | 78 | 54 | 47 | 43 |
| TerrUAV 2018 | 2018 U | 2018 T * | 3 | −11 | 48 | 44 | 14 |
| TerrUAV 2019 | 2019 U | 2019 T | 8 | 19 | 55 | 51 | 11 |
| Terr 2017/18 | 2017 T | 2018 T * | −49 | −103 | 67 | 56 | 33 |
| Terr 2018/19 | 2019 T | 2018 T * | 58 | 136 | 64 | 59 | 25 |
| Terr 2017/19 | 2019 T | 2017 T | 25 | 20 | 73 | 65 | 30 |
| UAV 2017/18 | 2018 U | 2017 U | −27 | −39 | 32 | 30 | 52 |
| UAV 2018/19 | 2018 U | 2019 U | 61 | 101 | 36 | 35 | 38 |
| UAV 2017/19 | 2019 U | 2017 U | 37 | 20 | 21 | 65 | 62 |
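The comparison table summarizes, for each pair of clouds, the mean distance and the mean local level of detection (LoD) for all points and for points whose change exceeds the LoD, together with the share of points with detectable change. The sketch below shows how such summary values could be derived from per-point results, assuming the precision-map variant of M3C2 (M3C2-PM), in which the 95% LoD at a core point is 1.96 (√(σ1² + σ2²) + reg). The function names, the zero default for the registration term `reg`, and the exclusion of core points without a valid distance (NaN) are assumptions made for illustration, not the study's exact workflow.

```python
import math
from typing import Dict, List, Sequence, Tuple


def level_of_detection(sigma1: float, sigma2: float, reg: float = 0.0,
                       z: float = 1.96) -> float:
    """LoD at one core point (assumed M3C2-PM form).

    sigma1, sigma2: precision of the two clouds along the local normal (mm).
    reg: registration error term (mm); z = 1.96 gives a 95% confidence level.
    """
    return z * (math.sqrt(sigma1 ** 2 + sigma2 ** 2) + reg)


def _mean(values: Sequence[float]) -> float:
    # NaN signals "no values", e.g. when no point exceeds its LoD.
    return sum(values) / len(values) if values else float("nan")


def detectable_change_summary(distances: Sequence[float],
                              sigma1: Sequence[float],
                              sigma2: Sequence[float],
                              reg: float = 0.0) -> Dict[str, float]:
    """Summarize per-point distances and LoDs like the table's columns."""
    if not (len(distances) == len(sigma1) == len(sigma2)):
        raise ValueError("inputs must have equal length")
    # Skip core points without a valid distance (NaN), e.g. no projection found.
    rows: List[Tuple[float, float]] = [
        (d, level_of_detection(s1, s2, reg))
        for d, s1, s2 in zip(distances, sigma1, sigma2)
        if not math.isnan(d)
    ]
    if not rows:
        raise ValueError("no valid distances")
    # A change is detectable where its magnitude exceeds the local LoD.
    detectable = [(d, lod) for d, lod in rows if abs(d) > lod]
    return {
        "mean_distance_all": _mean([d for d, _ in rows]),
        "mean_distance_detectable": _mean([d for d, _ in detectable]),
        "mean_lod_all": _mean([lod for _, lod in rows]),
        "mean_lod_detectable": _mean([lod for _, lod in detectable]),
        "percent_detectable": 100.0 * len(detectable) / len(rows),
    }


# Hypothetical per-point values (mm), not data from this study.
summary = detectable_change_summary(
    distances=[10.0, -60.0, 80.0, 5.0, float("nan")],
    sigma1=[10.0] * 5,
    sigma2=[10.0] * 5,
)
print(summary["percent_detectable"])  # 50.0
```

In this hypothetical example the LoD is about 27.7 mm everywhere, so only the −60 mm and 80 mm distances count as detectable change. Because the LoD grows with the combined precision of both clouds, pairs involving the less precise terrestrial clouds tend to show higher LoD values, consistent with the pattern in the table.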


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Petschko, H.; Zehner, M.; Fischer, P.; Goetz, J. Terrestrial and Airborne Structure from Motion Photogrammetry Applied for Change Detection within a Sinkhole in Thuringia, Germany. Remote Sens. 2022, 14, 3058. https://doi.org/10.3390/rs14133058
