Abstract

Aiming at close-range space measurement with time-of-flight (TOF) cameras, and based on an analysis of the characteristics of the space background environment and the imaging characteristics of TOF cameras, this paper proposes a physics-based imaging simulation method for amplitude modulated continuous wave (AMCW) TOF cameras observing space targets, built on an improved path tracing algorithm. First, to make the simulation physics-based, microfacet bidirectional reflection distribution function (BRDF) models of several typical space target surface materials are fitted to BRDF data measured in the response band of the TOF camera. Second, an improved path tracing algorithm is developed for the TOF camera by introducing a cosine component that characterizes its modulated light. Then, an imaging link simulation model is established that accounts for the coupled effects of the material BRDF, the suppression of background illumination (SBI), the optical system, the detector, the electronics, platform vibration, and noise, and simulated TOF camera images are generated. Finally, ground tests are carried out: the relative errors of the grey mean, grey variance, depth mean, and depth variance are 2.59%, 3.80%, 18.29%, and 14.58%, respectively, and the MSE, SSIM, and PSNR of our method are also better than those of the reference method. The ground test results verify the correctness of the proposed simulation model, which can provide image data to support ground testing of TOF camera algorithms for space targets.

1. Introduction

In recent years, time-of-flight (TOF) imaging technology has been widely used in ground robot positioning and navigation, pose estimation, 3D reconstruction, indoor games, and other fields owing to its structural and performance advantages. Notably, researchers are extending TOF imaging technology to space tasks such as pose estimation of space targets and relative navigation [1,2,3,4,5,6]. However, owing to the particularity of the space environment, it is difficult to obtain imaging results from an actual TOF camera before the space mission scheme is formulated, the mission content is planned, and the related algorithms are designed, which makes it impossible to evaluate algorithm capability and ensure the smooth implementation of the mission [7]. Imaging simulation can provide input data for testing the back-end algorithms of a space-based TOF camera. However, unlike the many imaging simulation methods for visible light cameras [8,9,10,11,12], infrared cameras [13,14,15], and radar [16,17], few imaging simulation methods exist for TOF cameras, because their imaging principle is essentially different. Therefore, it is of great significance to develop an imaging simulation method of the TOF camera for space targets.

The research status of TOF camera imaging simulation is as follows. References [18,19] proposed a real-time TOF simulation framework for simple geometry based on standard graphics rasterization techniques. This method considers only errors such as dynamic motion blur and flying pixels and ignores the influence of the background environment, so it is suitable only for indoor scenes. Reference [20] presented an amplitude modulated continuous wave (AMCW) TOF simulation method that uses global illumination, based on bidirectional path tracing, for indoor scenes such as kitchens. References [21,22] each established a physics-based TOF camera simulation method. Reference [21] focused on realistic and practical sensor parameterization in order to evaluate two alternative approaches to continuous-wave TOF sensor design. Based on the reflective shadow map (RSM) algorithm, reference [22] introduced measured bidirectional reflection distribution function (BRDF) data of materials, so that its results reflect the physical imaging characteristics of actual materials; however, this method also ignores the influence of background illumination. Reference [23] proposed a pulsed TOF simulation method for indoor scenes based on Vulkan shaders and NVIDIA VKRAY ray tracing. In summary, most existing TOF imaging simulation methods target indoor scenes and do not consider the influence of background illumination, and there is no research on imaging simulation methods of TOF cameras specifically for space targets.

The main difference between the space environment and the indoor environment is the influence of sunlight. Sunlight reduces the signal-to-noise ratio and can even saturate the detector. Therefore, the camera hardware must provide suppression of background illumination (SBI), and many TOF detectors with an SBI function have been developed [24,25,26,27,28]. Correspondingly, TOF camera simulation must also account for SBI, which requires a corresponding simulation model. Reference [29] constructed a theoretical SBI model for PMD detectors that effectively characterizes the detector's ability to suppress background illumination.

To sum up, aiming at the space application of TOF cameras, this paper proposes a physics-based imaging simulation method of TOF cameras for space targets based on improved path tracing. The main contributions of this method are as follows:

- (1) An improved path tracing algorithm is developed for the TOF camera by introducing a cosine component that characterizes the modulated light of the TOF camera.

- (2) A background illumination suppression model is introduced, and physics-based simulation is realized using BRDF models fitted to measured near-infrared data of space materials.

- (3) A ground test scene is built, and the correctness of the proposed TOF camera imaging simulation method is verified by quantitative comparison of the simulated and measured images.

2. Materials and Methods

2.1. Imaging Principle of TOF Camera

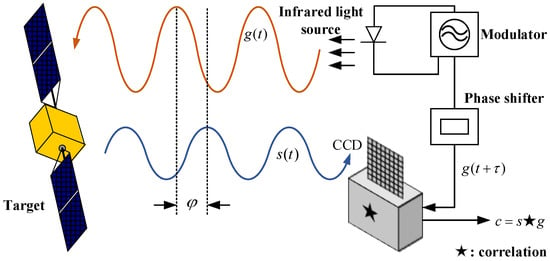

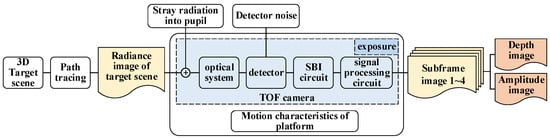

Based on the homodyne detection principle, the AMCW TOF camera measures distance from the cross-correlation between the reflected light and a reference signal. The camera first transmits a near-infrared optical signal modulated by a sine wave. The optical signal is reflected by the target surface and received by the infrared detector. The phase delay of the received signal relative to the transmitted signal is then computed, and the target distance is obtained from it. The principle is shown in Figure 1.

Figure 1.

Schematic diagram of the continuous wave TOF system.

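It is assumed that the transmitted infrared optical signal is $s(t) = a\cos(2\pi f t)$, where $a$ is its amplitude and $f$ is the signal modulation frequency, and that the received optical signal is

$$r(t) = A\cos(2\pi f t - \varphi) + B$$

where $A$ is the amplitude of the reflected signal light, $\varphi$ is the phase delay caused by the target distance, and $B$ is the offset caused by ambient light. Then, the cross-correlation between the transmitted optical signal and the received optical signal is:

$$C(\tau) = s(t) \otimes r(t) = \frac{aA}{2}\cos(2\pi f \tau + \varphi) + B$$

where $\tau$ is the time delay and $\otimes$ is the correlation operation symbol.

To recover the amplitude and phase of the reflected light signal, four sequential amplitude images are generally collected, defined as:

$$I_i = C(\tau_i), \qquad 2\pi f \tau_i = \frac{i\pi}{2}, \qquad i = 0, 1, 2, 3$$

Then, the phase delay $\varphi$, reflected signal amplitude $A$, and offset $B$ can be obtained as:

$$\varphi = \arctan\frac{I_3 - I_1}{I_0 - I_2}, \qquad A = \frac{\sqrt{(I_3 - I_1)^2 + (I_0 - I_2)^2}}{a}, \qquad B = \frac{I_0 + I_1 + I_2 + I_3}{4}$$

Finally, the distance between the TOF camera and the scene is:

$$d = \frac{c\,\varphi}{4\pi f}$$

where $c$ is the speed of light.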
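The four-phase demodulation above can be sketched as follows. This is a minimal illustration, assuming ideal, noise-free sinusoidal correlation samples $I_i$ taken at $2\pi f\tau_i = i\pi/2$ for a correlation function $(aA/2)\cos(2\pi f\tau + \varphi) + B$. The function names are hypothetical, and `arctan2` is used instead of a plain arctangent so that the phase lands in the correct quadrant.

```python
import numpy as np

C_LIGHT = 299_792_458.0  # speed of light [m/s]

def correlation_samples(amplitude, phase, offset, a=1.0):
    """Four samples I_i = C(tau_i) with 2*pi*f*tau_i = i*pi/2, for
    C(tau) = (a*A/2) * cos(2*pi*f*tau + phi) + B (ideal, noise-free)."""
    psi = np.arange(4) * np.pi / 2
    return (a * amplitude / 2) * np.cos(psi + phase) + offset

def demodulate(i0, i1, i2, i3, f_mod, a=1.0):
    """Recover phase, reflected amplitude, offset and distance from four samples."""
    phase = np.mod(np.arctan2(i3 - i1, i0 - i2), 2 * np.pi)  # wrap to [0, 2*pi)
    amplitude = np.hypot(i3 - i1, i0 - i2) / a                # sqrt(...) / a
    offset = (i0 + i1 + i2 + i3) / 4
    distance = C_LIGHT * phase / (4 * np.pi * f_mod)          # d = c*phi / (4*pi*f)
    return phase, amplitude, offset, distance
```

For example, at a modulation frequency of 20 MHz the unambiguous range $c/(2f)$ is about 7.5 m, so a target at 2 m is recovered directly from the phase without unwrapping.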

The purpose of imaging simulation is to obtain the distance and the intensity of the reflected signal, which is related to the characteristics of the target material, background, the TOF camera, and so on.

2.2. Imaging Characteristic Modeling

2.2.1. Target Material Characteristics Modeling

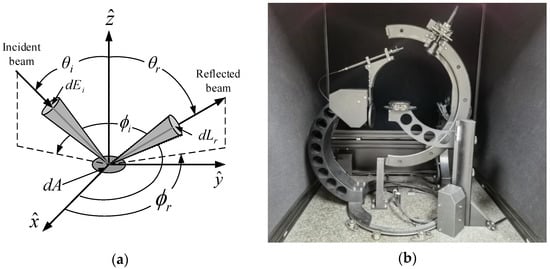

To achieve physics-based simulation, the reflection characteristics of the actual target surface materials must be introduced. The BRDF is usually used to express the reflection characteristics of a material surface. As shown in Figure 2a, on the surface element $\mathrm{d}A$, the incident light direction is $(\theta_i, \phi_i)$ and the observation direction is $(\theta_r, \phi_r)$, where $\theta$ and $\phi$ represent the zenith angle and azimuth angle, respectively, and $\mathbf{n}$ represents the surface normal. The BRDF is defined as the ratio of the radiance reflected along the direction $(\theta_r, \phi_r)$ to the irradiance incident on the surface along the direction $(\theta_i, \phi_i)$:

$$f_r(\theta_i, \phi_i; \theta_r, \phi_r) = \frac{\mathrm{d}L_r(\theta_r, \phi_r)}{\mathrm{d}E_i(\theta_i, \phi_i)} \quad [\mathrm{sr}^{-1}]$$

Figure 2.

Schematic diagram of the BRDF geometry and the measuring instrument used in this paper. (a) Schematic diagram of the BRDF geometry; (b) the REFLET-180 BRDF measuring instrument.

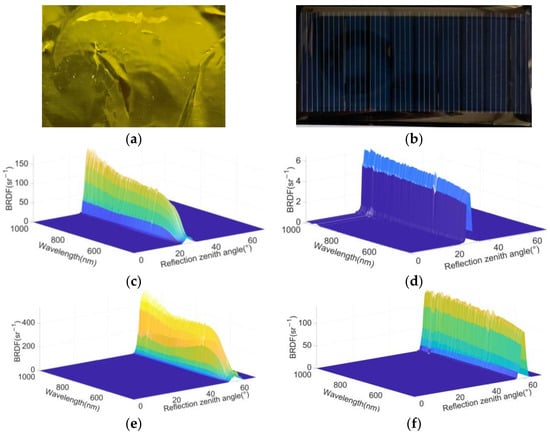

Yellow thermal control material and silicon solar cells are two of the main surface materials of space targets. In this paper, their BRDF data are measured with the REFLET-180 BRDF measuring instrument shown in Figure 2b. The material samples and some of the corresponding measurement results are shown in Figure 3.

Figure 3.

Material samples and some measured BRDF data of yellow thermal control material and silicon solar cells. The brighter the color, the greater the BRDF value. (a) Sample of thermal control material; (b) sample of silicon solar cells; (c) measured BRDF of thermal control material when $\theta_i$ is 30°; (d) measured BRDF of silicon solar cells when $\theta_i$ is 30°; (e) measured BRDF of thermal control material when $\theta_i$ is 60°; (f) measured BRDF of silicon solar cells when $\theta_i$ is 60°.

The measured BRDF data are then modeled theoretically using the microfacet BRDF model [14,15]. The microfacet BRDF model consists of a specular reflection term and a diffuse reflection term, where the specular term is the Torrance–Sparrow BRDF model [30]. The microfacet BRDF model is defined as follows.

where $k_s$ is the specular reflection coefficient, $k_d$ is the diffuse reflection coefficient, $D$ is the microfacet distribution factor, $F$ is the Fresnel factor [31], and $G$ is the geometric attenuation factor. They are defined as follows.

where and are the root mean square slope of the microfacet, is the Fresnel coefficient at vertical incidence, and is the undetermined coefficient.
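The structure of a microfacet BRDF (a diffuse term plus a Torrance–Sparrow specular term) can be sketched as below. The specific component forms here are assumptions and not necessarily the paper's definitions: a Beckmann distribution for $D$ with RMS slope `sigma`, the Schlick approximation for $F$ with normal-incidence reflectance `f0`, the Cook–Torrance shadowing-masking term for $G$, and a Lambertian diffuse term $k_d/\pi$. The function and parameter names are hypothetical.

```python
import numpy as np

def microfacet_brdf(theta_i, phi_i, theta_r, phi_r, k_s, k_d, sigma, f0):
    """Sketch of a microfacet BRDF [1/sr] = Lambertian diffuse + Torrance-Sparrow
    specular. Assumed components: Beckmann D, Schlick F, Cook-Torrance G."""
    # Unit vectors of the incident and reflected directions (surface normal = +z)
    wi = np.array([np.sin(theta_i) * np.cos(phi_i), np.sin(theta_i) * np.sin(phi_i), np.cos(theta_i)])
    wr = np.array([np.sin(theta_r) * np.cos(phi_r), np.sin(theta_r) * np.sin(phi_r), np.cos(theta_r)])
    h = wi + wr
    h /= np.linalg.norm(h)                  # half vector = microfacet normal
    cos_i, cos_r, cos_h = wi[2], wr[2], h[2]
    cos_ih = np.dot(wi, h)
    # Beckmann microfacet distribution with RMS slope sigma
    tan2_h = (1.0 - cos_h**2) / cos_h**2
    D = np.exp(-tan2_h / sigma**2) / (np.pi * sigma**2 * cos_h**4)
    # Schlick approximation of the Fresnel factor (f0 = reflectance at normal incidence)
    F = f0 + (1.0 - f0) * (1.0 - cos_ih) ** 5
    # Cook-Torrance geometric attenuation (shadowing and masking)
    G = min(1.0, 2 * cos_h * cos_r / cos_ih, 2 * cos_h * cos_i / cos_ih)
    specular = k_s * D * F * G / (4.0 * cos_i * cos_r)
    diffuse = k_d / np.pi
    return diffuse + specular
```

With `k_s = 0` the function reduces to the constant $k_d/\pi$; with `k_s > 0` the value peaks in the mirror direction ($\theta_r = \theta_i$, $\phi_r = \phi_i + \pi$).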

Since the wavelength of the TOF camera light source used in this paper is 850 nm, the BRDF model parameters are fitted at 850 nm, and the fitting error is expressed by the following formula [32,33].

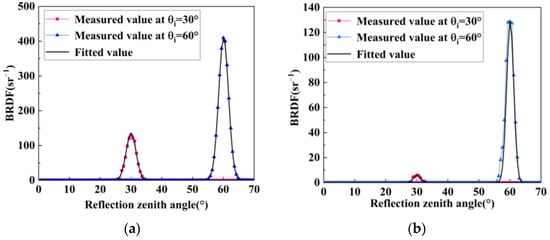

where $f_{r,\mathrm{m}}$ represents the measured BRDF value and $f_{r,\mathrm{f}}$ represents the fitted BRDF value. The parameter fitting results are shown in Table 1, and they are visualized in Figure 4.
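One way to carry out such a fit is sketched below. It assumes that, for fixed roughness and Fresnel parameters, the BRDF is linear in $k_d$ and $k_s$, so these two coefficients follow from linear least squares while the nonlinear parameters are searched in an outer loop (for example over a grid). The relative RMS error here is an assumed metric and may differ from the formula of [32,33]; all names are hypothetical.

```python
import numpy as np

def fit_linear_coefficients(f_measured, spec_basis):
    """Least-squares fit of f ~ k_d/pi + k_s * spec_basis, where spec_basis holds the
    specular lobe shape D*F*G / (4 cos_i cos_r) precomputed for fixed nonlinear
    parameters at each measurement geometry."""
    design = np.column_stack([np.full_like(spec_basis, 1.0 / np.pi), spec_basis])
    (k_d, k_s), *_ = np.linalg.lstsq(design, f_measured, rcond=None)
    return k_d, k_s

def relative_rms_error(f_measured, f_fitted):
    """Assumed fitting-error metric: sqrt(sum (f_m - f_f)^2 / sum f_m^2)."""
    return np.sqrt(np.sum((f_measured - f_fitted) ** 2) / np.sum(f_measured ** 2))
```

In a complete fit, the outer loop would keep the nonlinear parameter set whose linear sub-fit gives the smallest error.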

Table 1.

Fitting results of BRDF model parameters at 850 nm.

Figure 4.

Measurement and model fitting results of BRDF at 850 nm for yellow thermal control material and silicon solar cells. (a) Fitting results of the BRDF model of yellow thermal control material; (b) fitting results of the BRDF model of silicon solar cells.

2.2.2. Background Characteristics Modeling

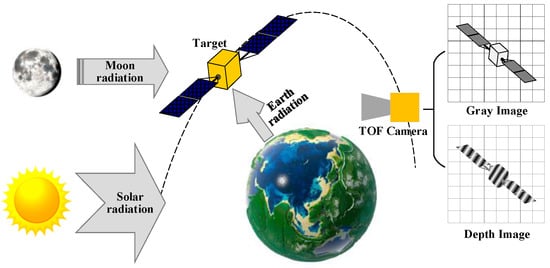

Space targets orbit the Earth. In the imaging band of the TOF camera, the radiation at the target mainly consists of direct solar radiation, solar radiation reflected by the Earth, solar radiation reflected by the Moon, and stellar radiation. Because stellar radiation is negligible compared with the other sources, it is ignored. The radiation on the target surface is shown in Figure 5.

Figure 5.

Physical radiation model of space targets.

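- (1) The irradiance generated by direct solar radiation at the target is:

$$E_{\mathrm{sun}} = \left(\frac{R_s}{d_{se}}\right)^{2} \int_{\lambda_1}^{\lambda_2} \frac{c_1}{\lambda^{5}\left[\exp\left(\dfrac{c_2}{\lambda T_s}\right) - 1\right]}\,\mathrm{d}\lambda$$

where $\lambda$ is the wavelength in µm; $c_1$ is the first blackbody radiation constant; $c_2$ is the second blackbody radiation constant; $T_s$ is the solar radiation temperature in K; $R_s$ is the solar radius in km; $d_{se}$ is the distance between the Sun and the Earth; and $[\lambda_1, \lambda_2]$ is the observation band of the TOF camera.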

- (2) Assuming that the space target is in a high Earth orbit and that the Earth is a diffuse sphere, the irradiance generated at the target by solar radiation reflected by the Earth is approximated as follows, where $\rho_e$ is the average albedo of the Earth; $R_e$ is the radius of the Earth in km; $h$ is the height of the target above the ground; and $\beta_e$ is the angle between the two vectors that define the Sun–Earth–target geometry.

- (3) Similarly, assuming that the Moon is a diffuse sphere, the irradiance generated at the target by solar radiation reflected by the Moon is given as follows, where $\rho_m$ is the average albedo of the Moon; $R_m$ is the radius of the Moon in km; $d_m$ is the distance between the target and the center of mass of the Moon; and $\beta_m$ is the angle between the two vectors that define the Sun–Moon–target geometry.
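The in-band direct solar irradiance can be evaluated numerically as sketched below: Planck's spectral exitance is integrated over the camera band with the trapezoidal rule and scaled by the squared ratio of the solar radius to the Sun–Earth distance. The default solar temperature (5900 K), solar radius, and Sun–Earth distance are assumed illustrative values, not necessarily those used in the paper, and the function names are hypothetical.

```python
import numpy as np

C1 = 3.7418e8   # first blackbody radiation constant [W·µm^4·m^-2]
C2 = 1.4388e4   # second blackbody radiation constant [µm·K]

def blackbody_exitance(lam_um, temperature):
    """Spectral exitance M(lambda) [W·m^-2·µm^-1] of a blackbody at temperature [K]."""
    return C1 / (lam_um**5 * np.expm1(C2 / (lam_um * temperature)))

def solar_band_irradiance(lam1, lam2, t_sun=5900.0, r_sun_km=6.96e5, d_km=1.496e8, n=2001):
    """In-band solar irradiance at the target [W/m^2] = (R_s/d)^2 * integral of M over band.
    t_sun, r_sun_km and d_km are assumed illustrative defaults."""
    lam = np.linspace(lam1, lam2, n)
    m = blackbody_exitance(lam, t_sun)
    band = np.sum((m[1:] + m[:-1]) * np.diff(lam)) / 2.0   # trapezoidal rule
    return (r_sun_km / d_km) ** 2 * band
```

For a 0.84–0.86 µm band around the 850 nm source, this gives roughly 22 W/m² under the assumed 5900 K temperature, while integrating over a very wide band recovers the Stefan–Boltzmann total.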

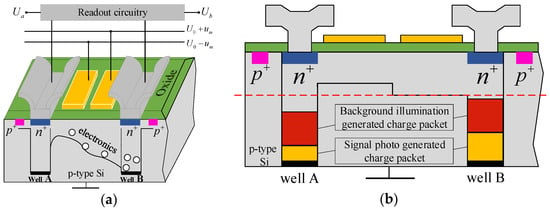

2.2.3. SBI Characteristics Modeling

The most common TOF sensor is the photonic mixer device (PMD). The PMD is a two-tap sensor whose structure is shown in Figure 6a [34]. Background light superimposed on the actively modulated light causes the quantum wells of the pixel to saturate prematurely, so that less of the reflected active light carrying depth information is detected, which increases noise and reduces the signal-to-noise ratio (SNR). The influence of background light on the PMD is illustrated in Figure 6b [35]. Therefore, it is important to design a system that can effectively reduce the impact of background illumination.

Figure 6.

The structure diagram of the PMD and the schematic diagram of the influence of background light on the PMD. (a) The structure diagram of the PMD; (b) the schematic diagram of the influence of background illumination on the PMD.

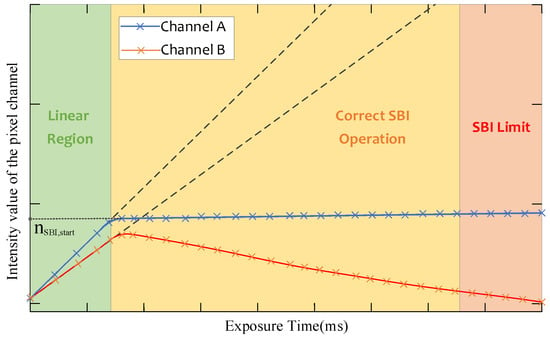

The suppression of background illumination (SBI) developed by PMDTec has been successfully applied in TOF cameras such as the CamCube. The SBI is in-pixel circuitry that subtracts ambient light and thereby prevents the pixels from saturating. The manufacturer has not published the details of the SBI compensation circuit, but reference [36] experimentally characterized the response of the A and B channels of the PMD pixel to the exposure time, as shown in Figure 7. During the exposure time, the PMD pixel responds photoelectrically to both the signal light and the background illumination. In the linear region, the SBI is not activated. When the number of electrons in quantum well A or B reaches the SBI activation threshold, the SBI is activated. As the exposure time increases further, the pixel enters the SBI limit region, where its data are invalid.

Figure 7.

The response relationship of the A and B channels of the PMD pixel to the exposure time.

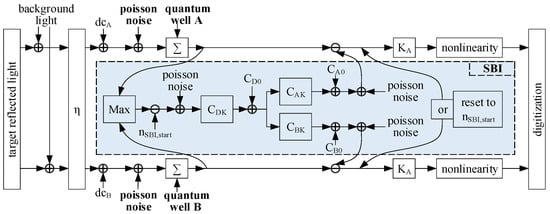

Reference [29] established the SBI model shown in Figure 8 by analyzing the response of the TOF camera to illumination intensity or exposure time. This model, which is also used in this paper, can be described as follows. The charge stored in each of the two quantum wells is continuously compared with a reference value $Q_{\mathrm{ref}}$. Once the charge stored in either quantum well exceeds this value, that is, once the difference between the stored charge and the reference value becomes positive, a compensation process is triggered. During compensation, a compensation current is injected into each of the two quantum wells, and each carries approximately the same amount of charge as this excess. After compensation, the quantum well containing more electrons is reset to $Q_{\mathrm{ref}}$, and the charge of the other quantum well is set to a value lower than $Q_{\mathrm{ref}}$.
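The compensation rule described above can be illustrated with an idealized, noise-free integration of the two taps. This sketch assumes constant electron generation rates, a fixed reference charge `q_ref`, and that each compensation removes exactly the excess of the fuller well from both wells. The names are hypothetical, and the SBI-limit behavior (where the data become invalid) is not modeled.

```python
def integrate_with_sbi(rate_a, rate_b, t_exp, q_ref, n_steps=1000):
    """Idealised two-tap integration with SBI.
    rate_a, rate_b: mean electron generation rates [e-/s] (signal + background).
    Whenever the fuller well exceeds q_ref, the same compensation charge (its excess
    over q_ref) is removed from both wells, so Q_A - Q_B is preserved."""
    dt = t_exp / n_steps
    q_a = q_b = 0.0
    n_comp = 0
    for _ in range(n_steps):
        q_a += rate_a * dt
        q_b += rate_b * dt
        excess = max(q_a, q_b) - q_ref
        if excess > 0:          # SBI triggered
            q_a -= excess       # the fuller well returns to q_ref ...
            q_b -= excess       # ... and the other ends below q_ref
            n_comp += 1
    return q_a, q_b, n_comp
```

With rates of 3e7 and 2e7 e⁻/s, a 1 ms exposure, and `q_ref = 1.5e4`, tap A ends at the reference level and tap B at about 5000 electrons, while their difference of 10,000 electrons, which carries the phase information, is unchanged.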

Figure 8.

The SBI model. and represent the charge-to-voltage coefficient. and represent the charge difference coefficient and offset, respectively. (or ) and (or ) represent the compensation charge coefficient and offset, respectively.

The compensation process does not lose any critical information, because the phase delay can be estimated from the difference between the charges in the two quantum wells alone. Accordingly, each sub-amplitude image in Equation (4) is the difference between the amplitude images of channels A and B:

$$I_i = I_i^{\mathrm{A}} - I_i^{\mathrm{B}}, \qquad i = 0, 1, 2, 3$$

where $I_i^{\mathrm{A}}$ and $I_i^{\mathrm{B}}$ are the amplitude images output by channels A and B, respectively.

As shown in Figure 8, both the background illumination and the SBI process introduce additional Poisson noise. The Poisson distribution is given by

$$P(k \mid \mu) = \frac{\mu^{k} e^{-\mu}}{k!}$$

where $\mu$ is the mean value, here the mean number of generated electrons, and $P(k \mid \mu)$ is the probability of detecting $k$ electrons for a given $\mu$.
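The extra noise can be seen in a short Monte Carlo experiment. Both taps receive the same mean background, tap A additionally receives the signal, and each count is drawn from a Poisson distribution. The background cancels in the mean of the tap difference but not in its variance, so the standard deviation grows as $\sqrt{N_s + 2N_b}$ for $N_s$ signal electrons and $N_b$ background electrons per tap. SBI compensation noise is not included, and the function name and parameters are hypothetical.

```python
import numpy as np

def tap_difference_std(signal_e, background_e, n_trials=200_000, seed=0):
    """Standard deviation of Q_A - Q_B when both taps collect the same mean
    background and tap A also collects signal_e electrons (Poisson shot noise)."""
    rng = np.random.default_rng(seed)
    q_a = rng.poisson(signal_e + background_e, n_trials)
    q_b = rng.poisson(background_e, n_trials)
    return np.std(q_a - q_b)
```

With 1000 signal electrons the spread is about 32 electrons without background and about 200 electrons with 20,000 background electrons per tap, even though the mean difference is the same.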

2.3. Imaging Simulation Modeling

To obtain simulated images of the actual target, this paper proposes an AMCW TOF camera imaging simulation model based on path tracing. First, the three-dimensional space target scene is established, the transmission of the modulated light signal from the TOF camera into the scene is simulated, and the radiance image of the space target scene is obtained by path tracing. Second, the radiance image is coupled with imaging chain factors such as platform motion, the optical system, and the detector, and the influence of background light is suppressed by the SBI module. Then, four amplitude images are obtained by time sampling. Finally, the depth and grey images are obtained through Formulas (4)–(7). The simulation model is shown in Figure 9.

Figure 9.

AMCW TOF camera imaging simulation model.

2.3.1. Improved Path Tracing Algorithm of the TOF Camera

The general path tracing algorithm is suitable for the imaging simulation of visible light and infrared cameras. However, because the light signal of the TOF camera is modulated, it contains a cosine component that varies with the propagation distance. Therefore, to apply path tracing to the TOF camera, an improved path tracing algorithm is developed by introducing a cosine component that characterizes the modulated light, as follows.

Let $S(d)$ denote the optical signal after propagating a distance $d$ [m] without attenuation; its form is as follows.

where $S_{\mathrm{DC}}$ represents the DC component, $S_{\mathrm{AC}}$ represents the cosine component, $\operatorname{Re}(\cdot)$ takes the real part of a complex number, and $\mathrm{j} = \sqrt{-1}$.
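One way to hold the DC and cosine components in code is a real DC level plus a complex phasor, as sketched below. This representation is an assumption for illustration, and the helper names are hypothetical: propagating over a path of length $d$ leaves the DC part unchanged and rotates the phasor by $2\pi f d/c$, while ambient light simply has a zero phasor.

```python
import numpy as np

C_LIGHT = 299_792_458.0  # speed of light [m/s]

def propagate(s_dc, s_ac, d, f_mod):
    """Propagate a signal (DC level, complex cosine amplitude) over d metres without
    attenuation: the DC part is unchanged, the cosine part lags by 2*pi*f*d/c."""
    return s_dc, s_ac * np.exp(-1j * 2 * np.pi * f_mod * d / C_LIGHT)

def signal_value(s_dc, s_ac):
    """Real-valued signal S = S_DC + Re(S_AC)."""
    return s_dc + np.real(s_ac)
```

Propagating the active signal over a 6 m round trip (camera to a target at 3 m and back) at 20 MHz and reading the phase back yields 3 m, whereas a DC-only ambient signal is unaffected by propagation.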

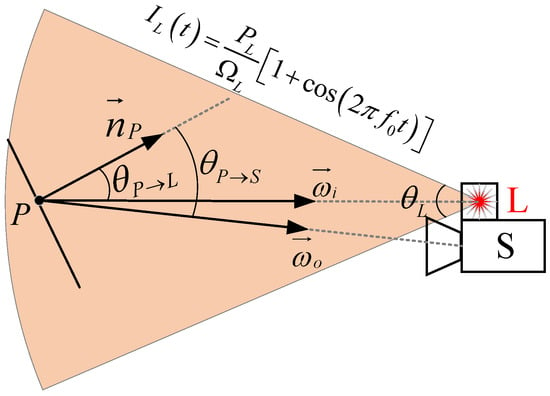

The light source of the TOF camera is assumed to be a uniform point light source, so its light intensity is as follows.

where $P$ represents the power of the light source, $\Omega$ represents the solid angle, and $f$ represents the modulation frequency of the optical signal.

The illuminance on a micro-plane perpendicular to the light propagation direction at a distance $d$ from the light source is:

For a point $x$ in the scene shown in Figure 10, the illuminance generated at $x$ by direct lighting is $E_d(x)$:

where $d_x$ is the distance between the light source and point $x$, and $\theta_x$ is the angle between the vector pointing from $x$ to the light source and the surface normal at $x$.

Figure 10.

The schematic diagram of direct lighting.

The direct illumination radiance generated by the infrared light source at point $x$ along the direction $\omega_o$ is:

where $f_r$ represents the BRDF of the surface. For ambient light sources such as the Sun, the Earth, and the Moon, whose irradiance is given by Formulas (12)–(14), the cosine component of $S$ is 0 and only the DC component remains. Therefore, the derivation below considers only the active light of the TOF camera; for ambient light, it suffices to set the corresponding cosine component to 0.
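Direct illumination from the camera's point source and from the ambient sources can be sketched as follows. The sketch assumes an isotropic intensity $P/\Omega$, a 100% modulation depth (cosine amplitude equal to the DC level at the source), and a reflected radiance equal to the BRDF times the irradiance. The function names are hypothetical.

```python
import numpy as np

C_LIGHT = 299_792_458.0  # speed of light [m/s]

def direct_radiance(power, solid_angle, f_mod, d, cos_theta, brdf):
    """Direct radiance (DC part, complex cosine part) leaving point x toward the camera
    under the TOF point source. Assumes 100 % modulation depth."""
    intensity = power / solid_angle                                # radiant intensity [W/sr]
    e_dc = intensity * cos_theta / d**2                            # inverse-square and cosine law
    e_ac = e_dc * np.exp(-1j * 2 * np.pi * f_mod * d / C_LIGHT)    # phase lag over distance d
    return brdf * e_dc, brdf * e_ac                                # L = f_r * E

def ambient_radiance(irradiance, brdf):
    """Sun, Earth and Moon terms: DC only, the cosine component is 0."""
    return brdf * irradiance, 0j
```

Doubling the source-to-point distance quarters both components, and only the active term carries the distance-dependent phase.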

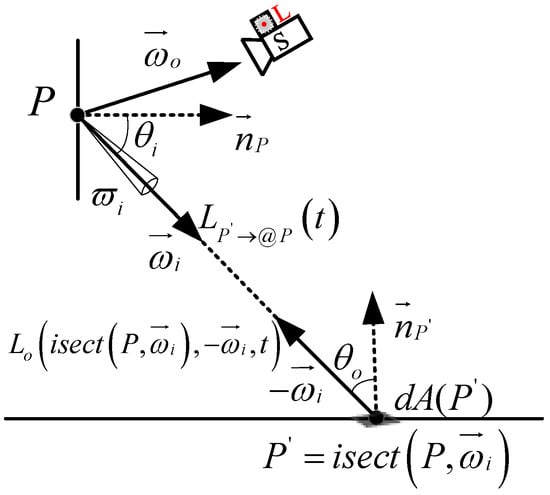

In addition to direct illumination from light sources, light reflected by other surfaces also contributes to the radiance at point $x$, as shown in Figure 11. This part, called the indirect illumination radiance and denoted by $L_{id}(x, \omega_o)$, is defined as follows.

where $L_i(x, \omega_i)$ represents the radiance arriving at point $x$ from other surfaces along the direction $\omega_i$, $\Omega_s$ represents the solid angle subtended by the surfaces that contribute to the radiance at point $x$, and $\mathrm{d}\omega_i$ represents the differential solid angle in the direction $\omega_i$, which is defined as follows.

Figure 11.

Radiative transfer between two points.

Since radiant energy is conserved along a ray in the scene, the radiance arriving at $x$ can be related to the radiance leaving another point $y$.

where $y = r(x, \omega_i)$ computes the first intersection between the scene and the ray leaving point $x$ along the direction $\omega_i$, and $\mathrm{d}A_y$ represents the microfacet at the intersection $y$.

Substituting Equation (23) into Equation (22), we obtain:

where $\mathcal{A}$ denotes all surfaces of the scene, and $V(x, y)$ represents the visibility between points $x$ and $y$: if the two points are visible to each other, $V(x, y) = 1$; otherwise, $V(x, y) = 0$.

Then

Combining the direct and indirect radiance, the radiance at point $x$ can be expressed as follows.

After the radiance propagates over the distance $d_c$ from point $x$ to the camera, the radiance at the camera sensor is:

A Monte Carlo path tracing algorithm [37,38] is used to solve Equation (28), which is approximated as follows.

where $N_b$ represents the maximum number of light bounces, $N_l$ represents the number of light sources, $N_s$ represents the number of samples per pixel, and $\hat{L}_k$ represents the Monte Carlo estimate of the $k$th sample, as shown below.

where $p_A(y)$ is the sampling probability density at point $y$, defined as $p_A(y) = 1/|\mathcal{A}|$ with $|\mathcal{A}|$ the total surface area of the scene, and $p_\omega(\omega_i)$ is the sampling probability density function in the direction $\omega_i$.
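The Monte Carlo estimate of the modulated part of the pixel radiance can be illustrated in reduced form: each sampled path contributes its weight divided by its sampling density, rotated by the phase lag of its total length. The sketch below assumes that path weights and lengths are already produced by a path tracer (the scene itself is not modeled), and the names are hypothetical. It shows how indirect paths bias the recovered depth (multipath interference).

```python
import numpy as np

C_LIGHT = 299_792_458.0  # speed of light [m/s]

def mc_phasor_estimate(weights, path_lengths, pdfs, f_mod):
    """Monte Carlo estimate of the complex (cosine) radiance component: mean over
    samples of weight / pdf, each rotated by the phase lag of its total path
    length (source -> scene -> camera)."""
    phasors = np.exp(-1j * 2 * np.pi * f_mod * np.asarray(path_lengths) / C_LIGHT)
    return np.mean(np.asarray(weights) / np.asarray(pdfs) * phasors)

def phase_to_distance(z, f_mod):
    """Distance implied by the phase of complex radiance z (round trip halved)."""
    return C_LIGHT * np.mod(-np.angle(z), 2 * np.pi) / (4 * np.pi * f_mod)
```

For instance, a single direct path with a total length of 4 m yields a distance of 2 m, while adding longer indirect paths pushes the estimate beyond 2 m.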

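The irradiance received by a pixel of the TOF sensor is as follows.

$$E_p = \frac{\pi \tau_o}{4 F_{\#}^{2}}\, L_c \cos^{4}\theta_p$$

where $L_c$ is the radiance at the camera sensor, $\tau_o$ represents the transmittance of the AMCW TOF camera, $F_{\#}$ represents its f-number, and $\theta_p$ represents the angle between the optical axis and the line of sight of the pixel.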
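The camera equation can be combined with a radiometric-to-electron conversion as sketched below. The conversion to mean photoelectrons (pixel area, exposure time, wavelength, quantum efficiency) is an added assumption rather than a step given in the text, and the names are hypothetical.

```python
import numpy as np

H_PLANCK = 6.62607015e-34  # Planck constant [J·s]
C_LIGHT = 299_792_458.0    # speed of light [m/s]

def pixel_irradiance(radiance, transmittance, f_number, off_axis_angle):
    """Camera equation: E_p = pi * tau * L * cos^4(theta) / (4 * N^2) [W/m^2]."""
    return np.pi * transmittance * radiance * np.cos(off_axis_angle) ** 4 / (4.0 * f_number ** 2)

def mean_electrons(irradiance, pixel_area, t_exp, wavelength, qe):
    """Mean photoelectrons per pixel: QE * collected energy / photon energy."""
    photon_energy = H_PLANCK * C_LIGHT / wavelength   # [J]
    return qe * irradiance * pixel_area * t_exp / photon_energy
```

The $\cos^4$ term makes off-axis pixels darker: at 60° off axis, the irradiance drops to 1/16 of the on-axis value.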

2.3.2. Imaging Link Impact Modeling

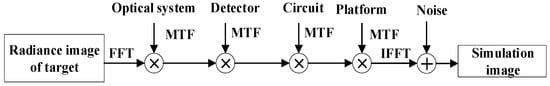

As shown in Figure 9, the radiation from the target scene undergoes a “radiation–voltage–grey” conversion as it passes through each component module of the TOF camera. In this process, the signal is affected by the optical system, the detector, the circuit processing unit, and the platform, which appears in the image as blurring (dispersion) of the radiation image. These effects can be described by the modulation transfer function (MTF) of each module, as shown in Figure 12.

Figure 12.

Mathematical model of the radiative transfer process.

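Then, the final output simulation image [39] is:

$$g(u, v) = \mathcal{F}^{-1}\left\{\mathcal{F}\left[E_p(u, v)\right] \cdot \mathrm{MTF}_{\mathrm{opt}} \cdot \mathrm{MTF}_{\mathrm{det}} \cdot \mathrm{MTF}_{\mathrm{cir}} \cdot \mathrm{MTF}_{\mathrm{plat}}\right\} + n(u, v)$$

where $E_p(u, v)$ is the pixel irradiance image; $\mathcal{F}$ represents the fast Fourier transform and $\mathcal{F}^{-1}$ its inverse; $\mathrm{MTF}_{\mathrm{opt}}$ represents the MTF of the optical system; $\mathrm{MTF}_{\mathrm{det}}$ represents the MTF of the detector; $\mathrm{MTF}_{\mathrm{cir}}$ represents the MTF of the signal processing circuit; $\mathrm{MTF}_{\mathrm{plat}}$ represents the MTF of the platform motion; and $n(u, v)$ represents the image noise. MTF models of the different processes can be found in references [8,17,40].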
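The frequency-domain imaging chain can be sketched with NumPy's FFT routines. The Gaussian MTFs (standing in for the optics and detector) and the sinc transfer function for linear platform motion are assumed illustrative models, not necessarily those of references [8,17,40], and the names are hypothetical.

```python
import numpy as np

def gaussian_mtf(shape, sigma_px):
    """Hypothetical Gaussian MTF on the FFT grid (Gaussian PSF with sigma in pixels)."""
    fy = np.fft.fftfreq(shape[0])[:, None]   # cycles/pixel
    fx = np.fft.fftfreq(shape[1])[None, :]
    return np.exp(-2.0 * (np.pi * sigma_px) ** 2 * (fx**2 + fy**2))

def motion_mtf(shape, blur_px):
    """Hypothetical transfer function of uniform linear motion along x (length in pixels)."""
    fx = np.fft.fftfreq(shape[1])[None, :]
    return np.sinc(blur_px * fx) * np.ones((shape[0], 1))   # np.sinc(x) = sin(pi x)/(pi x)

def apply_imaging_chain(image, mtfs, noise_std=0.0, seed=None):
    """g = IFFT(FFT(f) * product of MTFs) + n, with Gaussian noise of std noise_std."""
    total = np.ones(image.shape)
    for m in mtfs:
        total = total * m
    blurred = np.real(np.fft.ifft2(np.fft.fft2(image) * total))
    rng = np.random.default_rng(seed)
    return blurred + rng.normal(0.0, noise_std, image.shape)
```

Because every transfer function equals 1 at zero frequency, the chain preserves the mean grey level and only redistributes (blurs) detail.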

3. Results

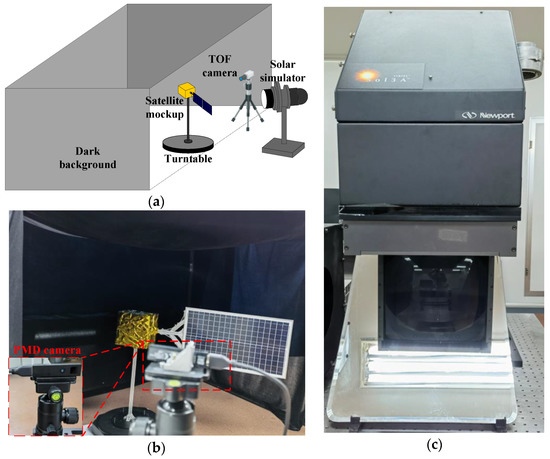

To verify the correctness of the proposed TOF camera imaging simulation method for space targets, the ground experiment scene shown in Figure 13 is built. Because the radiation of the Earth and the Moon is difficult to reproduce on the ground and its influence is small, the ground experiment considers only direct solar radiation. The experimental scene comprises a satellite model, a TOF camera, a turntable, a black background, and a solar simulator. The surface of the satellite model consists mainly of yellow thermal control material and silicon solar cells, and the TOF camera is placed horizontally on a tripod. The TOF camera used in this paper is the PMD CamBoard camera, whose performance indexes are shown in Table 2. The solar simulator is the Newport Oriel Sol3A, whose performance indexes are shown in Table 3. The experimental background is a dark background of black light-absorbing flannel, and the beam emitted by the solar simulator completely covers the target model.

Figure 13.

Ground experiment scene. (a) Schematic diagram of the ground experiment scene; (b) actual ground experiment scene; (c) the Newport Oriel Sol3A Solar Simulator, Model 94123A.

Table 2.

The performance indexes of PMD CamBoard.

Table 3.

Some performance indexes of the Newport Oriel Sol3A Solar Simulator, Model 94123A.

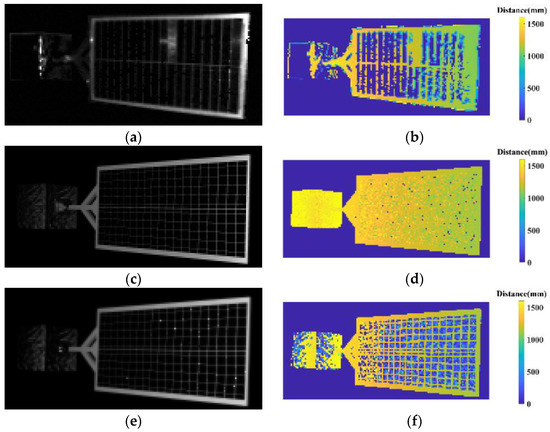

During the experiment, all light sources in the room were turned off, the solar simulator was turned on with its output power set to the typical value (1 SUN), and the PMD camera was used to capture images of the target model. Because of the size of the satellite model and the limited illumination area of the solar simulator, the satellite model was inclined at an angle so that the beam of the solar simulator covered it entirely. The TOF imaging simulation method proposed in this paper was then used to generate the corresponding simulated images under the same experimental conditions. The other simulation parameters are shown in Table 4. The measured and simulated images are shown in Figure 14. To facilitate comparison with the simulated images, the background area of the measured images was removed manually.

Table 4.

Other simulation parameters used.

Figure 14.

The measured images of the ground experiment scene (the background area is eliminated) and the corresponding simulated images. (a) Measured grey image; (b) measured depth image; (c) grey image simulated by the method of Ref. [22]; (d) depth image simulated by the method of Ref. [22]; (e) simulated grey image of ours; (f) simulated depth image of ours. (c,d) only consider the influence of the active light of the TOF camera and do not consider the ambient solar light. (e,f) consider the process of the SBI model suppressing solar ambient illumination.

In Table 4, represents the distance between the PMD TOF camera and satellite body center, represents the angle between the optical axis of the camera and the plane of the solar panel, and represents the angle between the beam of the solar simulator and the plane of the solar panel. According to references [41,42], the value of is about 36,500.

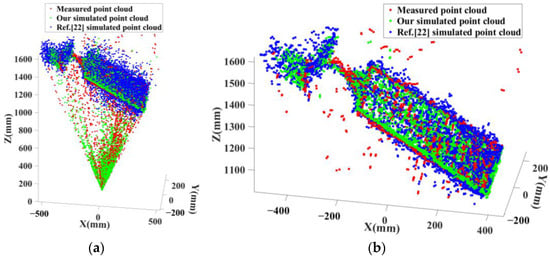

To quantitatively evaluate the simulation, the mean, variance, mean square error (MSE), structural similarity (SSIM), and peak signal-to-noise ratio (PSNR) of the grey and depth images are calculated. A smaller MSE, a larger SSIM, and a larger PSNR indicate more realistic simulation results. These indexes are shown in Table 5, where bold indicates the better result. In addition, the depth image is converted into a point cloud and denoised; the point clouds before and after denoising are shown in Figure 15.
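The relative error, MSE, and PSNR used in Table 5 can be computed as sketched below. The peak value of 255 assumes 8-bit grey images (for depth images, the depth range would be used instead), and the helper names are hypothetical. SSIM is not reimplemented here; scikit-image provides `skimage.metrics.structural_similarity` for that purpose.

```python
import numpy as np

def relative_error(measured, simulated):
    """|simulated - measured| / |measured|, e.g. for grey or depth means and variances."""
    return abs(simulated - measured) / abs(measured)

def mse(a, b):
    """Mean square error between two images."""
    return np.mean((np.asarray(a, dtype=float) - np.asarray(b, dtype=float)) ** 2)

def psnr(a, b, peak=255.0):
    """Peak signal-to-noise ratio [dB]; peak=255 assumes 8-bit grey images."""
    return 10.0 * np.log10(peak**2 / mse(a, b))
```

For example, a simulated grey mean that is 2.59% above the measured mean gives `relative_error(100.0, 102.59) ≈ 0.0259`.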

Table 5.

Comparison of indexes between measured images and simulated images.

Figure 15.

The simulated [22] and measured point cloud. (a) Original simulated and measured point clouds; (b) simulation and measured point cloud after denoising.

4. Discussion

As shown in Figure 14, our simulated grey and depth images are visually very similar to the measured grey and depth images. The grey image simulated by the method of reference [22] is also very similar to the measured grey image; however, the corresponding simulated depth image differs considerably from the measured depth image. This is because that method does not consider the error introduced by background illumination, so it underestimates the depth noise. Moreover, as shown in Figure 15, the point cloud of our method coincides with the measured point cloud better than that of reference [22]. In addition, Table 5 shows that the relative errors of the grey mean, grey variance, depth mean, and depth variance are 2.59%, 3.80%, 18.29%, and 14.58%, respectively, which are better than those of the method in reference [22]. Furthermore, our method's MSE, SSIM, and PSNR results are also better than those of the method in reference [22].

5. Conclusions

Based on an analysis of the characteristics of the space background environment and the imaging mechanism of the TOF camera, this paper proposed a physics-based AMCW TOF camera imaging simulation method for space targets based on improved path tracing. First, the BRDF data of the yellow thermal control material and the silicon solar cells at 850 nm were measured, and the parameters of the microfacet BRDF model were fitted with a fitting error of less than 5.2%. Second, an improved path tracing algorithm was developed for the TOF camera by introducing a cosine component that characterizes its modulated light. Then, an imaging link simulation model was established that accounts for the coupled effects of the material BRDF, SBI, the optical system, the detector, the electronics, platform vibration, and noise. Finally, a ground experiment was carried out: the relative errors of the grey mean, grey variance, depth mean, and depth variance were 2.59%, 3.80%, 18.29%, and 14.58%, respectively, and the MSE, SSIM, and PSNR of our method were also better than those of the reference method. The experimental results verify the correctness of the proposed simulation method, which can provide image data to support ground testing of TOF camera algorithms for space targets.

Author Contributions

Conceptualization, H.W.; methodology, Z.Y.; software, Z.Y.; validation, Z.Y., X.L., Q.N. and Y.L.; formal analysis, Z.Y.; investigation, Z.Y., X.L. and Q.N.; writing—original draft preparation, Z.Y.; writing—review and editing, Z.Y. and H.W.; visualization, Z.Y., X.L. and Q.N.; supervision, H.W.; project administration, H.W.; funding acquisition, H.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by National Natural Science Foundation of China, grant number 61705220.

Data Availability Statement

Not applicable.

Acknowledgments

We sincerely thank the National Natural Science Foundation of China for its support.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Opromolla, R.; Fasano, G.; Rufino, G.; Grassi, M. A review of cooperative and uncooperative spacecraft pose determination techniques for close-proximity operations. Prog. Aerosp. Sci. 2017, 93, 53–72.

- Klionovska, K.; Burri, M. Hardware-in-the-Loop Simulations with Umbra Conditions for Spacecraft Rendezvous with PMD Visual Sensors. Sensors 2021, 21, 1455.

- Ravandoor, K.; Busch, S.; Regoli, L.; Schilling, K. Evaluation and Performance Optimization of PMD Camera for RvD Application. IFAC Proc. Vol. 2013, 46, 149–154.

- Potier, A.; Kuwahara, T.; Pala, A.; Fujita, S.; Sato, Y.; Shibuya, Y.; Tomio, H.; Tanghanakanond, P.; Honda, T.; Shibuya, T.; et al. Time-of-Flight Monitoring Camera System of the De-orbiting Drag Sail for Microsatellite ALE-1. Trans. Jpn. Soc. Aeronaut. Space Sci. Aerosp. Technol. Jpn. 2021, 19, 774–783.

- Martínez, H.G.; Giorgi, G.; Eissfeller, B. Pose estimation and tracking of non-cooperative rocket bodies using Time-of-Flight cameras. Acta Astronaut. 2017, 139, 165–175.

- Ruel, S.; English, C.; Anctil, M.; Daly, J.; Smith, C.; Zhu, S. Real-time 3D vision solution for on-orbit autonomous rendezvous and docking. In Spaceborne Sensors III; SPIE: Bellingham, WA, USA, 2006; p. 622009.

- Lebreton, J.; Brochard, R.; Baudry, M.; Jonniaux, G.; Salah, A.H.; Kanani, K.; Goff, M.L.; Masson, A.; Ollagnier, N.; Panicucci, P. Image simulation for space applications with the SurRender software. arXiv 2021, arXiv:2106.11322.

- Han, Y.; Lin, L.; Sun, H.; Jiang, J.; He, X. Modeling the space-based optical imaging of complex space target based on the pixel method. Optik 2015, 126, 1474–1478.

- Zhang, Y.; Lv, L.; Yang, C.; Gu, Y. Research on Digital Imaging Simulation Method of Space Target Navigation Camera. In Proceedings of the 2021 IEEE 16th Conference on Industrial Electronics and Applications (ICIEA), Chengdu, China, 1–4 August 2021; pp. 1643–1648.

- Li, W.; Cao, Y.; Meng, D.; Wu, Z. Space target scattering characteristic imaging in the visible range based on ray tracing algorithm. In Proceedings of the 12th International Symposium on Antennas, Propagation and EM Theory (ISAPE), Hangzhou, China, 3–6 December 2018; pp. 1–3.

- Xu, C.; Shi, H.; Gao, Y.; Zhou, L.; Shi, Q.; Li, J. Space-Based optical imaging dynamic simulation for spatial target. In Proceedings of the AOPC 2019: Optical Sensing and Imaging Technology, Beijing, China, 7–9 July 2019; p. 1133815.

- Wang, H.; Zhang, W. Visible imaging characteristics of the space target based on bidirectional reflection distribution function. J. Mod. Opt. 2012, 59, 547–554.

- Wang, H.; Zhang, W.; Wang, F. Infrared imaging characteristics of space-based targets based on bidirectional reflection distribution function. Infrared Phys. Technol. 2012, 55, 368–375.

- Ding, Z.; Han, Y. Infrared characteristics of satellite based on bidirectional reflection distribution function. Infrared Phys. Technol. 2019, 97, 93–100.

- Wang, H.; Chen, Y. Modeling and simulation of infrared dynamic characteristics of space-based space targets. Infrared Laser Eng. 2016, 45, 0504002.

- Wang, F.; Eibert, T.F.; Jin, Y.-Q. Simulation of ISAR Imaging for a Space Target and Reconstruction under Sparse Sampling via Compressed Sensing. IEEE Trans. Geosci. Remote Sens. 2015, 53, 3432–3441.

- Schlutz, M. Synthetic Aperture Radar Imaging Simulated in MATLAB. Master’s Thesis, California Polytechnic State University, San Luis Obispo, CA, USA, 2009.

- Keller, M.; Kolb, A. Real-time simulation of time-of-flight sensors. Simul. Model. Pract. Theory 2009, 17, 967–978.

- Keller, M.; Orthmann, J.; Kolb, A.; Peters, V. A simulation framework for time-of-flight sensors. In Proceedings of the 2007 International Symposium on Signals, Circuits and Systems, Iasi, Romania, 13–14 July 2007; pp. 1–4.

- Meister, S.; Nair, R.; Kondermann, D. Simulation of Time-of-Flight Sensors using Global Illumination. In Proceedings of the VMV, Lugano, Switzerland, 11–13 September 2013; pp. 33–40.

- Lambers, M.; Hoberg, S.; Kolb, A. Simulation of Time-of-Flight Sensors for Evaluation of Chip Layout Variants. IEEE Sens. J. 2015, 15, 4019–4026. [Google Scholar] [CrossRef]

- Bulczak, D.; Lambers, M.; Kolb, A. Quantified, Interactive Simulation of AMCW ToF Camera Including Multipath Effects. Sensors 2018, 18, 13. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Thoman, P.; Wippler, M.; Hranitzky, R.; Fahringer, T. RTX-RSim: Accelerated Vulkan room response simulation for time-of-flight imaging. In Proceedings of the International Workshop on OpenCL, Munich, Germany, 27–29 April 2020; pp. 1–11. [Google Scholar]

- Cho, J.; Choi, J.; Kim, S.-J.; Park, S.; Shin, J.; Kim, J.D.K.; Yoon, E. A 3-D Camera With Adaptable Background Light Suppression Using Pixel-Binning and Super-Resolution. IEEE J. Solid-State Circuits 2014, 49, 2319–2332. [Google Scholar] [CrossRef]

- Shin, J.; Kang, B.; Lee, K.; Kim, J.D.K. A 3D image sensor with adaptable charge subtraction scheme for background light suppression. In Proceedings of the Sensors, Cameras, and Systems for Industrial and Scientific Applications XIV, Burlingame, CA, USA, 3–7 February 2013; p. 865907. [Google Scholar] [CrossRef]

- Davidovic, M.; Seiter, J.; Hofbauer, M.; Gaberl, W.; Zimmermann, H. A background light resistant TOF range finder with integrated PIN photodiode in 0.35 μm CMOS. In Proceedings of the Videometrics, Range Imaging, and Applications XII; and Automated Visual Inspection, Munich, Germany, 13–16 May 2013; p. 87910R. [Google Scholar] [CrossRef]

- Davidovic, M.; Hofbauer, M.; Schneider-Hornstein, K.; Zimmermann, H. High dynamic range background light suppression for a TOF distance measurement sensor in 180nm CMOS. In Proceedings of the SENSORS, Limerick, Ireland, 28–31 October 2011; pp. 359–362. [Google Scholar] [CrossRef]

- Davidovic, M.; Zach, G.; Schneider-Hornstein, K.; Zimmermann, H. TOF range finding sensor in 90nm CMOS capable of suppressing 180 klx ambient light. In Proceedings of the SENSORS, Waikoloa, HI, USA, 1–4 November 2010; pp. 2413–2416. [Google Scholar] [CrossRef]

- Schmidt, M.; Jähne, B. A Physical Model of Time-of-Flight 3D Imaging Systems, Including Suppression of Ambient Light. In Workshop on Dynamic 3D Imaging; Springer: Berlin/Heidelberg, Germany, 2009; pp. 1–15. [Google Scholar] [CrossRef]

- Torrance, K.E.; Sparrow, E.M. Theory for Off-Specular Reflection from Roughened Surfaces. J. Opt. Soc. Am. 1967, 57, 1105–1114. [Google Scholar] [CrossRef]

- Hou, Q.; Zhi, X.; Zhang, H.; Zhang, W. Modeling and validation of spectral BRDF on material surface of space target. In International Symposium on Optoelectronic Technology and Application 2014: Optical Remote Sensing Technology and Applications; International Society for Optics and Photonics: Bellingham, WA, USA, 2014; p. 929914.

- Sun, C.; Yuan, Y.; Zhang, X.; Wang, Q.; Zhou, Z. Research on the model of spectral BRDF for space target surface material. In Proceedings of the 2010 International Symposium on Optomechatronic Technologies, Toronto, ON, Canada, 25–27 October 2010; pp. 1–6.

- Peng, L.I.; Zhi, L.I.; Can, X.U. Measuring and Modeling the Bidirectional Reflection Distribution Function of Space Object’s Surface Material. In Proceedings of the 3rd International Conference on Materials Engineering, Manufacturing Technology and Control (ICMEMTC 2016), Taiyuan, China, 27–28 February 2016.

- Schwarte, R.; Xu, Z.; Heinol, H.-G.; Olk, J.; Klein, R.; Buxbaum, B.; Fischer, H.; Schulte, J. New electro-optical mixing and correlating sensor: Facilities and applications of the photonic mixer device (PMD). In Proceedings of the Sensors, Sensor Systems, and Sensor Data Processing, Munich, Germany, 16–17 June 1997; pp. 245–253.

- Ringbeck, T.; Möller, T.; Hagebeuker, B. Multidimensional measurement by using 3-D PMD sensors. Adv. Radio Sci. 2007, 5, 135–146.

- Conde, M.H. Compressive Sensing for the Photonic Mixer Device; Springer: Berlin/Heidelberg, Germany, 2017.

- Kajiya, J.T. The rendering equation. In Proceedings of the 13th Annual Conference on Computer Graphics and Interactive Techniques, Dallas, TX, USA, 18–22 August 1986; pp. 143–150.

- Pharr, M.; Jakob, W.; Humphreys, G. Physically Based Rendering: From Theory to Implementation; Morgan Kaufmann: Burlington, MA, USA, 2016.

- Li, J.; Liu, Z. Image quality enhancement method for on-orbit remote sensing cameras using invariable modulation transfer function. Opt. Express 2017, 25, 17134–17149.

- Sukumar, V.; Hess, H.L.; Noren, K.V.; Donohoe, G.; Ay, S. Imaging system MTF-modeling with modulation functions. In Proceedings of the 2008 34th Annual Conference of IEEE Industrial Electronics, Orlando, FL, USA, 10–13 November 2008; pp. 1748–1753.

- Langmann, B.; Hartmann, K.; Loffeld, O. Increasing the accuracy of Time-of-Flight cameras for machine vision applications. Comput. Ind. 2013, 64, 1090–1098.

- Langmann, B. Wide Area 2D/3D Imaging: Development, Analysis and Applications; Springer: Berlin/Heidelberg, Germany, 2014.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).