Inshore Ship Detection in Large-Scale SAR Images Based on Saliency Enhancement and Bhattacharyya-like Distance

Abstract

1. Introduction

2. Related Works

2.1. Anisotropic Scale Space (ASS)

2.2. Dynamic R-CNN

- Feature extraction: ResNet50 and FPN are used as the backbone and neck networks, respectively, to obtain feature maps;

- Label assignment: Positive and negative samples for training the RPN/R-CNN are assigned according to the IoU between the ground-truth boxes and the anchor/proposal boxes;

- NMS: The IoU between proposal/predicted boxes is calculated, and boxes that overlap a higher-scoring box by more than a set threshold are suppressed;

- Region proposal: The RPN generates proposal boxes; it requires label assignment in the training phase and NMS in the prediction phase;

- RoIAlign: Bilinear interpolation is used to extract spatially aligned, fixed-size features for each proposal;

- Dynamic detection: The IoU threshold T used for label assignment and the hyper-parameter β of the SmoothL1 regression loss in the R-CNN are adjusted gradually as the number of training iterations increases. The R-CNN requires label assignment in the training phase and NMS in the prediction phase.
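The IoU-driven steps listed above (label assignment, NMS, and the dynamic threshold) can be illustrated with a minimal pure-Python sketch. All function names are hypothetical, the label assignment is simplified to a single positive threshold with no ignore band, and the update rule in `dynamic_update` (T as the mean of each image's k-th largest proposal IoU; the regression-loss hyper-parameter, assumed here to be the SmoothL1 β, as the median of each image's k-th smallest regression error) is our reading of the original Dynamic R-CNN paper, not code from the authors.

```python
from statistics import mean, median
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) with x2 > x1, y2 > y1


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def assign_labels(proposals: Sequence[Box], gts: Sequence[Box], pos_thr: float) -> List[int]:
    """Label assignment: 1 (positive) if a proposal's best IoU with any ground-truth
    box reaches pos_thr, else 0 (negative). Simplified: no ignore band."""
    return [
        1 if max((iou(p, g) for g in gts), default=0.0) >= pos_thr else 0
        for p in proposals
    ]


def nms(boxes: Sequence[Box], scores: Sequence[float], iou_thr: float) -> List[int]:
    """Greedy NMS: keep the highest-scoring remaining box, discard every box whose
    IoU with it exceeds iou_thr, and repeat. Returns indices of the kept boxes."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    while order:
        best = order.pop(0)
        keep.append(best)
        order = [j for j in order if iou(boxes[best], boxes[j]) <= iou_thr]
    return keep


def _kth(values: Sequence[float], k: int, largest: bool) -> float:
    """k-th largest (or smallest) value for k >= 1, clamped to the list length.
    `values` must be non-empty."""
    ranked = sorted(values, reverse=largest)
    return ranked[min(k, len(ranked)) - 1]


def dynamic_update(per_image_ious: Sequence[Sequence[float]],
                   per_image_errors: Sequence[Sequence[float]],
                   k_iou: int, k_beta: int) -> Tuple[float, float]:
    """Assumed periodic update of the IoU threshold T and the SmoothL1 beta:
    T    = mean over images of the k_iou-th largest proposal IoU,
    beta = median over images of the k_beta-th smallest regression error."""
    t_new = mean([_kth(v, k_iou, largest=True) for v in per_image_ious])
    beta_new = median([_kth(v, k_beta, largest=False) for v in per_image_errors])
    return t_new, beta_new


if __name__ == "__main__":
    gts = [(0, 0, 10, 10)]
    props = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
    print(assign_labels(props, gts, 0.5))  # [1, 1, 0]
    print(assign_labels(props, gts, 0.7))  # [1, 0, 0]: a higher T is stricter
    print(nms(props, [0.9, 0.8, 0.7], 0.5))  # [0, 2]
```

Raising T from 0.5 to 0.7 turns the second proposal (IoU ≈ 0.68 with the ground truth) into a negative. This is the effect a dynamic schedule relies on: as proposals improve during training, a stricter threshold keeps the positive set focused on higher-quality samples.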

3. Methodology

3.1. Saliency Enhancement

Algorithm 1. The proposed DoAp.

3.2. Bhattacharyya-Like Distance

4. Experimental Results and Analysis

4.1. Data Description and Parameter Settings

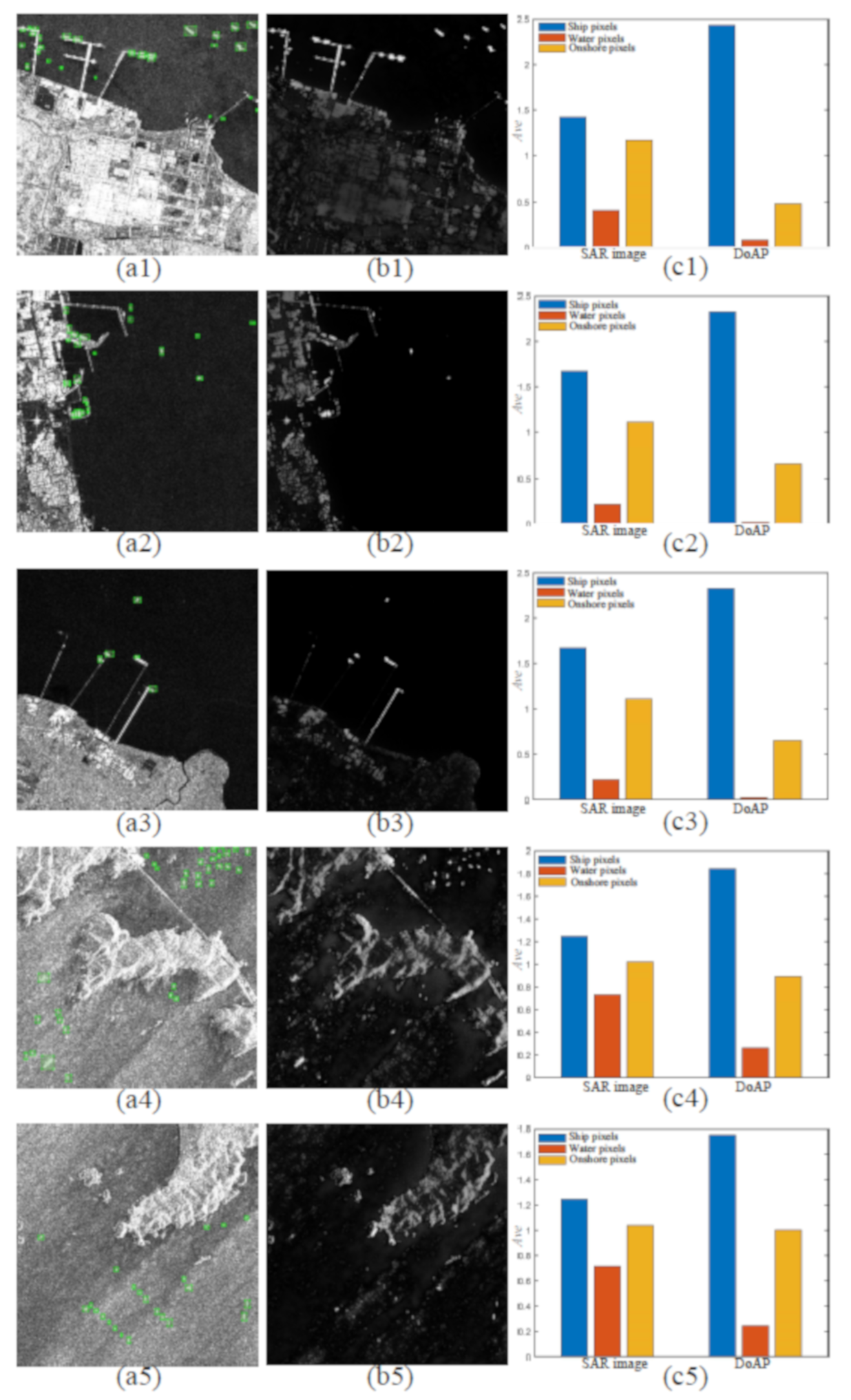

4.2. Final Saliency Enhancement Results

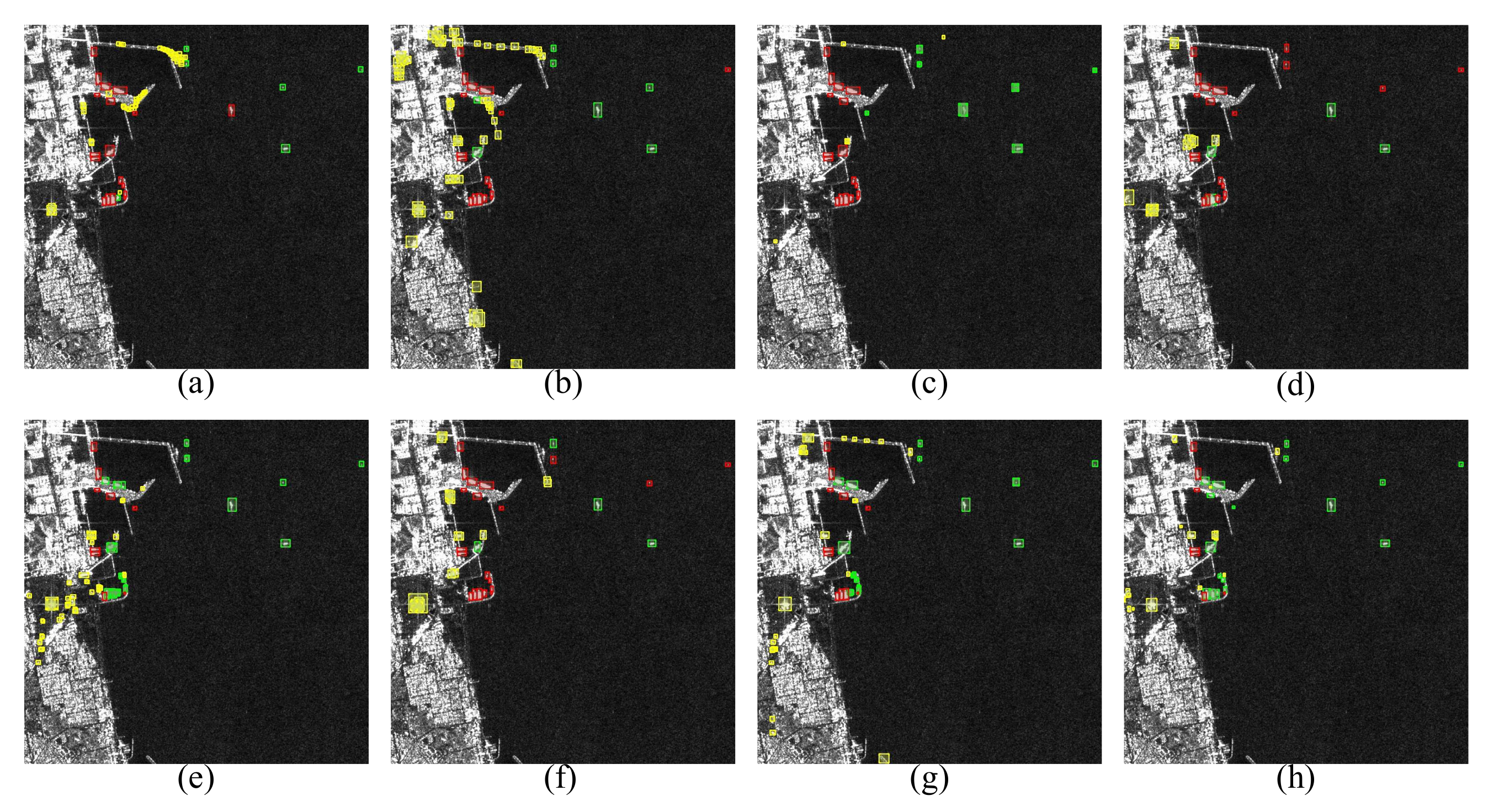

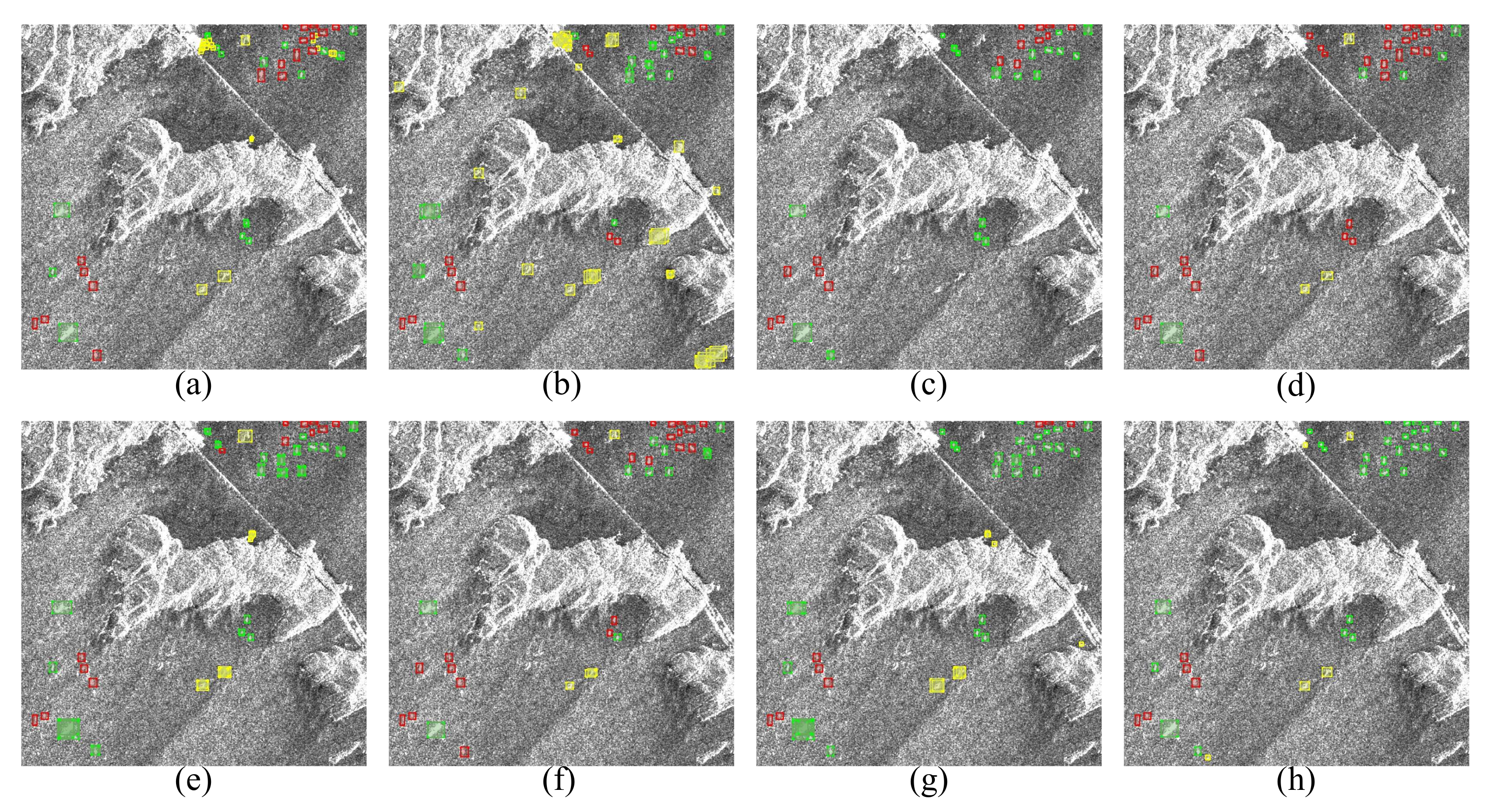

4.3. Comparison with Other Methods

5. Discussion

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Item | Configuration |
|---|---|
| Platform | Windows 10 |
| Torch | 1.9.0 |
| CPU | Intel Core i7-10870H |
| Memory | 32 GB |
| GPU | NVIDIA GeForce RTX 3080 Laptop |
| Video memory | 16 GB |

| Method | Recall (%) | mAP (%) |
|---|---|---|
| RetinaNet | 79.70 | 56.80 |
| ATSS | 77.30 | 64.20 |
| YOLOv5 | 81.00 | 70.60 |
| Faster R-CNN | 72.80 | 65.30 |
| Double-head R-CNN | 78.60 | 68.40 |
| Cascade R-CNN | 74.40 | 67.20 |
| Dynamic R-CNN | 79.10 | 69.20 |
| Proposed Method | 83.90 | 72.50 |
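The margins of the proposed method over each compared detector follow directly from the reported recall and mAP values. The short script below only re-uses those numbers; the names `COMPARISON` and `margins` are our own and are not part of the original experiments. It confirms that the proposed method leads every baseline on both metrics, with the smallest gap over YOLOv5 (2.9 percentage points of recall, 1.9 of mAP).

```python
from typing import Dict, Tuple

# Recall (%) and mAP (%) as reported in the comparison table.
COMPARISON: Dict[str, Tuple[float, float]] = {
    "RetinaNet": (79.70, 56.80),
    "ATSS": (77.30, 64.20),
    "YOLOv5": (81.00, 70.60),
    "Faster R-CNN": (72.80, 65.30),
    "Double-head R-CNN": (78.60, 68.40),
    "Cascade R-CNN": (74.40, 67.20),
    "Dynamic R-CNN": (79.10, 69.20),
    "Proposed Method": (83.90, 72.50),
}


def margins(results: Dict[str, Tuple[float, float]],
            ours: str = "Proposed Method") -> Dict[str, Tuple[float, float]]:
    """Percentage-point gap (recall, mAP) between `ours` and every other method."""
    r_ours, m_ours = results[ours]
    return {
        name: (round(r_ours - r, 2), round(m_ours - m, 2))
        for name, (r, m) in results.items()
        if name != ours
    }


if __name__ == "__main__":
    gaps = margins(COMPARISON)
    # Print baselines from the closest to the farthest in mAP.
    for name, (d_recall, d_map) in sorted(gaps.items(), key=lambda kv: kv[1][1]):
        print(f"{name:18s} recall +{d_recall:.1f}  mAP +{d_map:.1f}")
```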

| Detector | Saliency Enhancement | BLD | Recall (%) | mAP (%) |
|---|---|---|---|---|
| RetinaNet | × | × | 79.70 | 56.80 |
| RetinaNet | ✓ | × | 78.80 | 59.70 |
| RetinaNet | × | ✓ | 76.50 | 64.10 |
| RetinaNet | ✓ | ✓ | 78.40 | 65.20 |
| Faster R-CNN | × | × | 72.80 | 65.30 |
| Faster R-CNN | ✓ | × | 73.20 | 66.70 |
| Faster R-CNN | × | ✓ | 80.80 | 67.80 |
| Faster R-CNN | ✓ | ✓ | 79.50 | 68.30 |
| Cascade R-CNN | × | × | 74.40 | 67.20 |
| Cascade R-CNN | ✓ | × | 74.70 | 68.40 |
| Cascade R-CNN | × | ✓ | 81.00 | 71.20 |
| Cascade R-CNN | ✓ | ✓ | 81.90 | 71.40 |
| Dynamic R-CNN | × | × | 79.10 | 69.20 |
| Dynamic R-CNN | ✓ | × | 80.40 | 71.20 |
| Dynamic R-CNN | × | ✓ | 83.50 | 71.80 |
| Dynamic R-CNN | ✓ | ✓ | 83.90 | 72.50 |
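Reading the ablation results as differences from each detector's baseline row makes the contribution of each component explicit: for all four detectors, mAP rises with saliency enhancement alone, rises further with BLD alone, and is highest with both, whereas RetinaNet's recall falls under every variant. The script below recomputes these differences from the reported numbers only; the dictionary layout and the shorthand keys `SE` (saliency enhancement) and `BLD` are our own.

```python
from typing import Dict, Tuple

Scores = Tuple[float, float]  # (recall %, mAP %)

# Ablation rows per detector: baseline, +SE only, +BLD only, +both (as reported).
ABLATION: Dict[str, Dict[str, Scores]] = {
    "RetinaNet": {"base": (79.70, 56.80), "SE": (78.80, 59.70),
                  "BLD": (76.50, 64.10), "SE+BLD": (78.40, 65.20)},
    "Faster R-CNN": {"base": (72.80, 65.30), "SE": (73.20, 66.70),
                     "BLD": (80.80, 67.80), "SE+BLD": (79.50, 68.30)},
    "Cascade R-CNN": {"base": (74.40, 67.20), "SE": (74.70, 68.40),
                      "BLD": (81.00, 71.20), "SE+BLD": (81.90, 71.40)},
    "Dynamic R-CNN": {"base": (79.10, 69.20), "SE": (80.40, 71.20),
                      "BLD": (83.50, 71.80), "SE+BLD": (83.90, 72.50)},
}


def gains(table: Dict[str, Dict[str, Scores]]) -> Dict[str, Dict[str, Scores]]:
    """Percentage-point change (recall, mAP) of each configuration
    relative to the same detector's baseline row."""
    out: Dict[str, Dict[str, Scores]] = {}
    for detector, rows in table.items():
        r0, m0 = rows["base"]
        out[detector] = {
            cfg: (round(r - r0, 2), round(m - m0, 2))
            for cfg, (r, m) in rows.items()
            if cfg != "base"
        }
    return out


if __name__ == "__main__":
    for detector, deltas in gains(ABLATION).items():
        cells = "  ".join(f"{cfg}: {dr:+.1f}/{dm:+.1f}" for cfg, (dr, dm) in deltas.items())
        print(f"{detector:14s} {cells}")  # recall/mAP change in points
```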


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Cheng, J.; Xiang, D.; Tang, J.; Zheng, Y.; Guan, D.; Du, B. Inshore Ship Detection in Large-Scale SAR Images Based on Saliency Enhancement and Bhattacharyya-like Distance. Remote Sens. 2022, 14, 2832. https://doi.org/10.3390/rs14122832
