A Review of Remote Sensing of Submerged Aquatic Vegetation for Non-Specialists

Abstract

1. Introduction

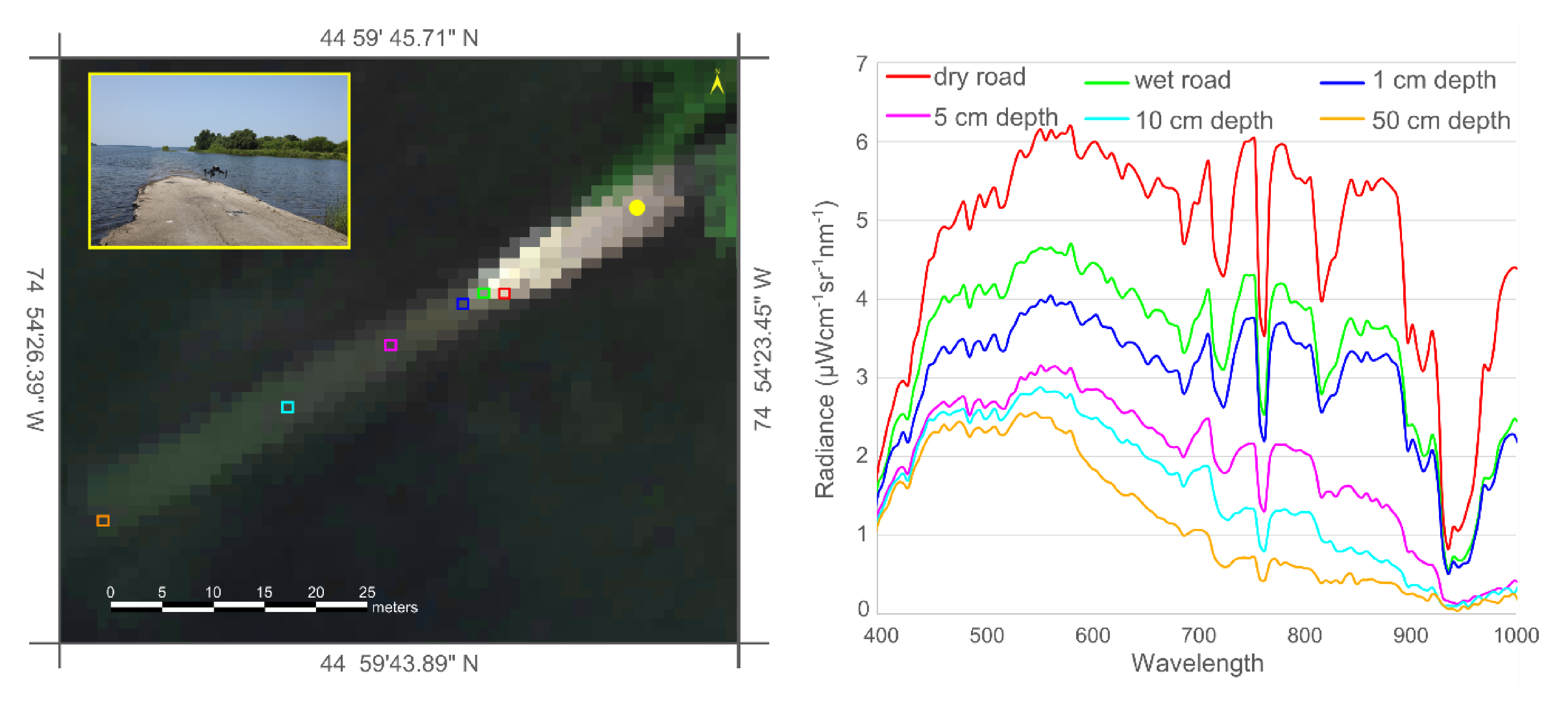

2. Review Article Methodology

3. Technical Background

3.1. Key Concepts in RS for Aquatic Research

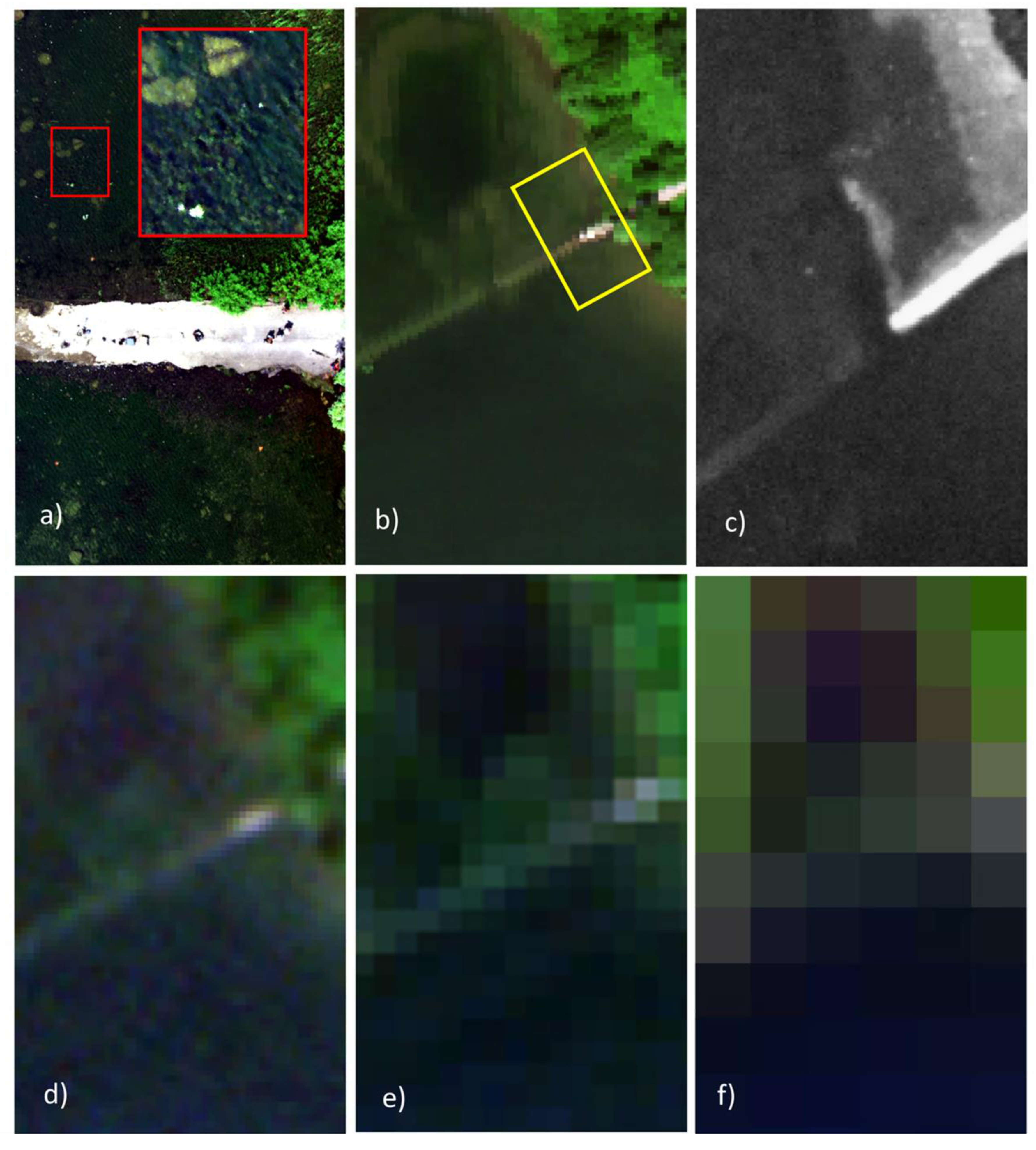

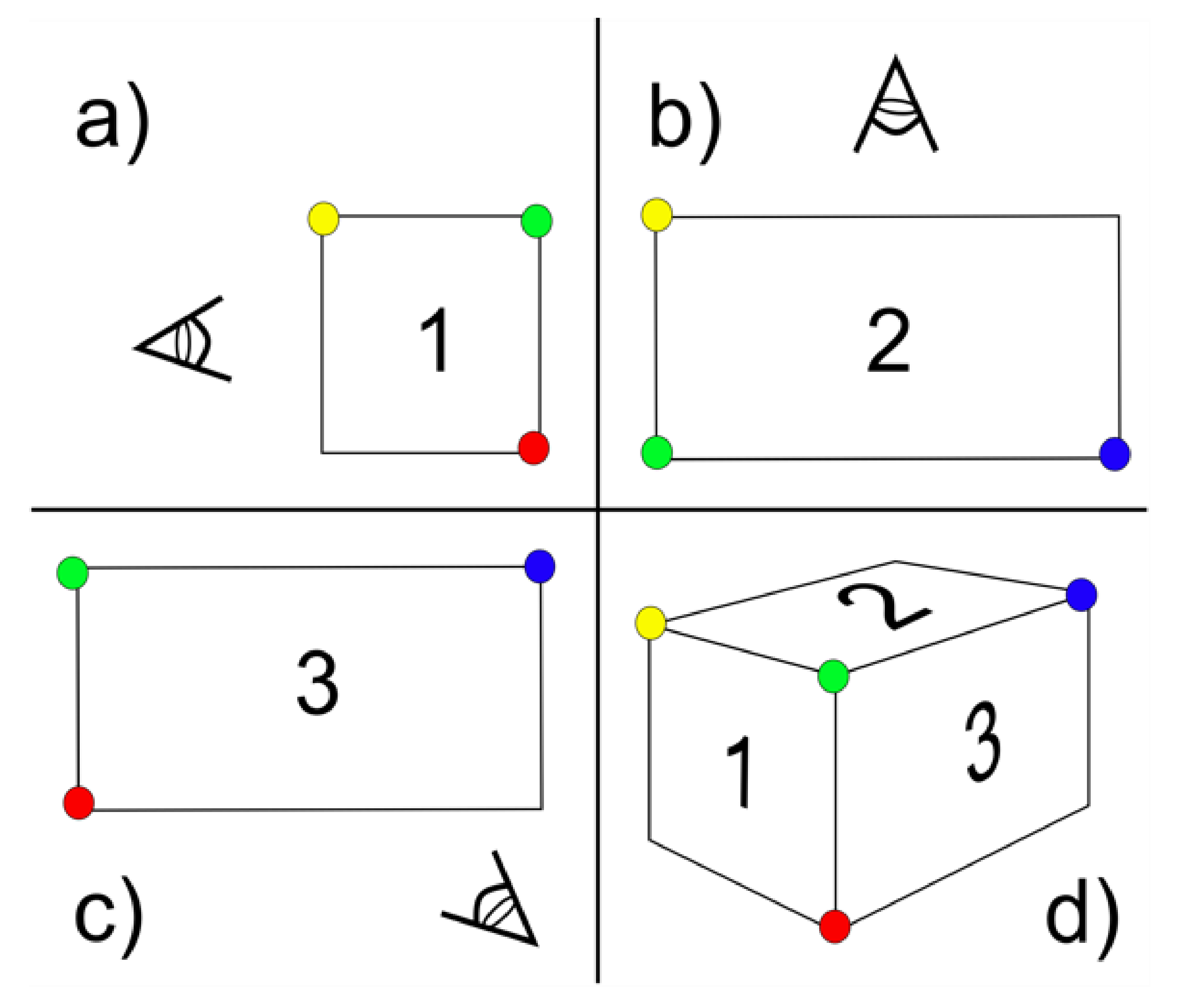

3.2. Resolutions

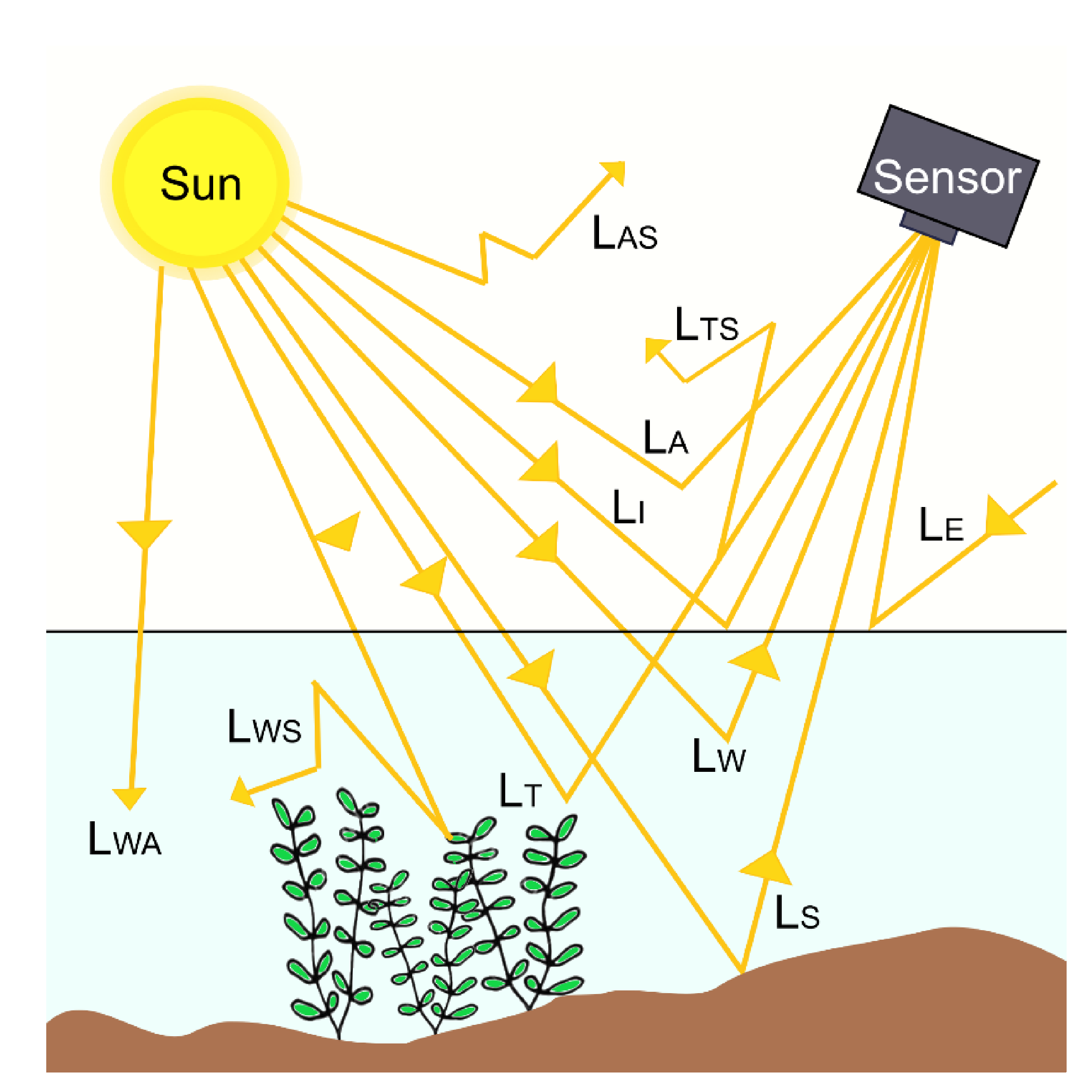

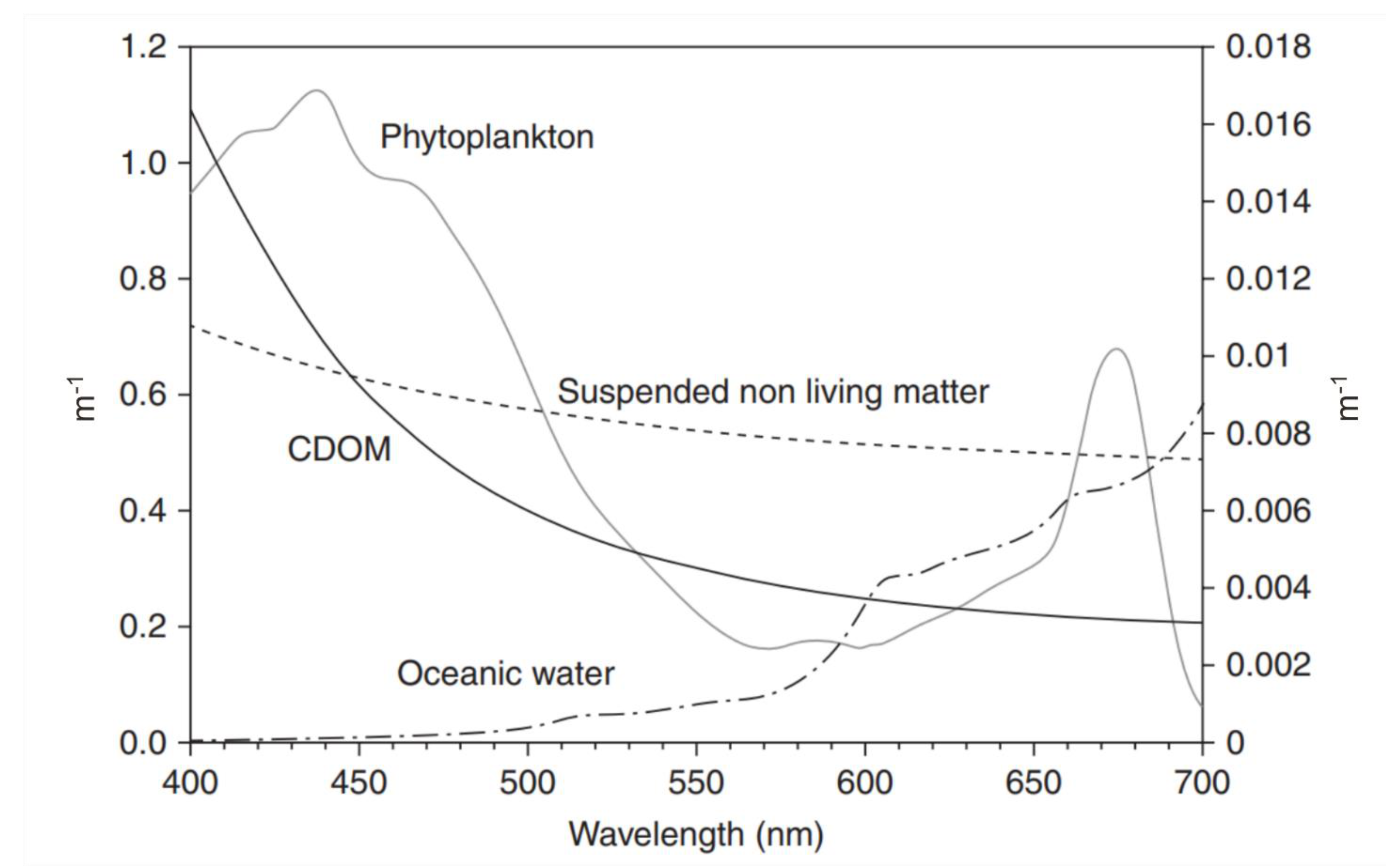

3.3. Underwater Light Environment

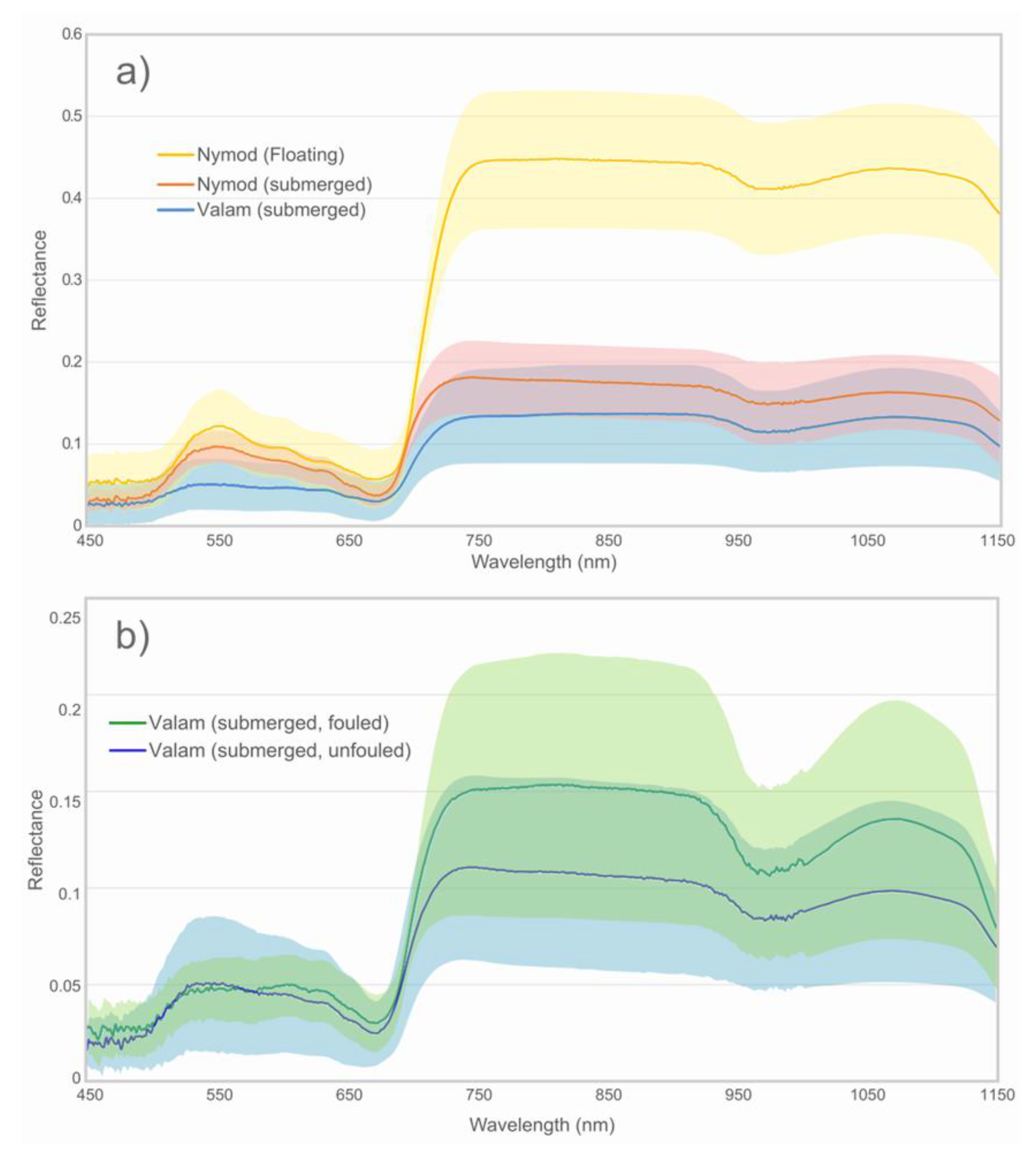

3.4. Spectral Properties of SAV

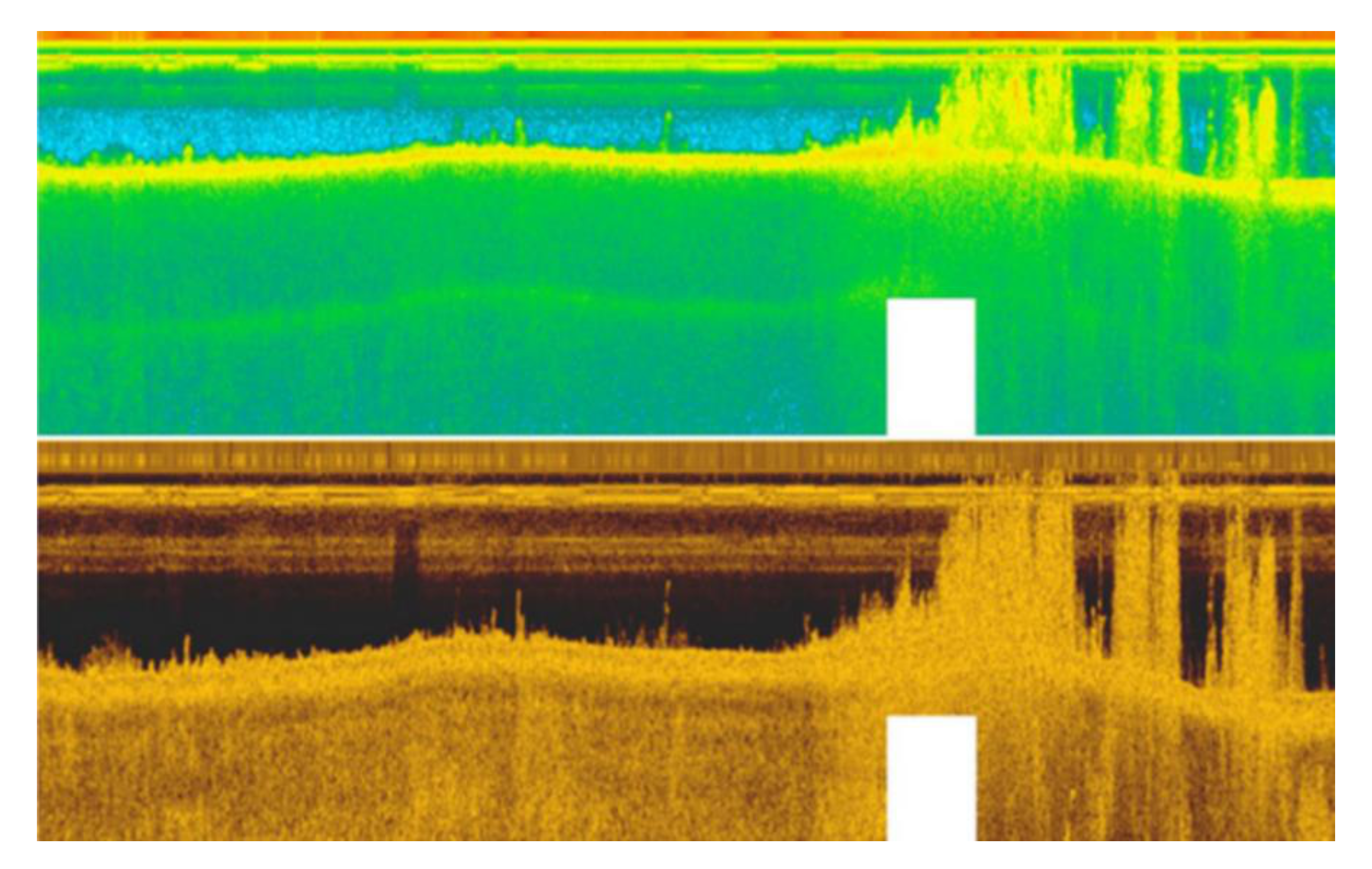

3.5. Supplemental Datasets in Aquatic RS

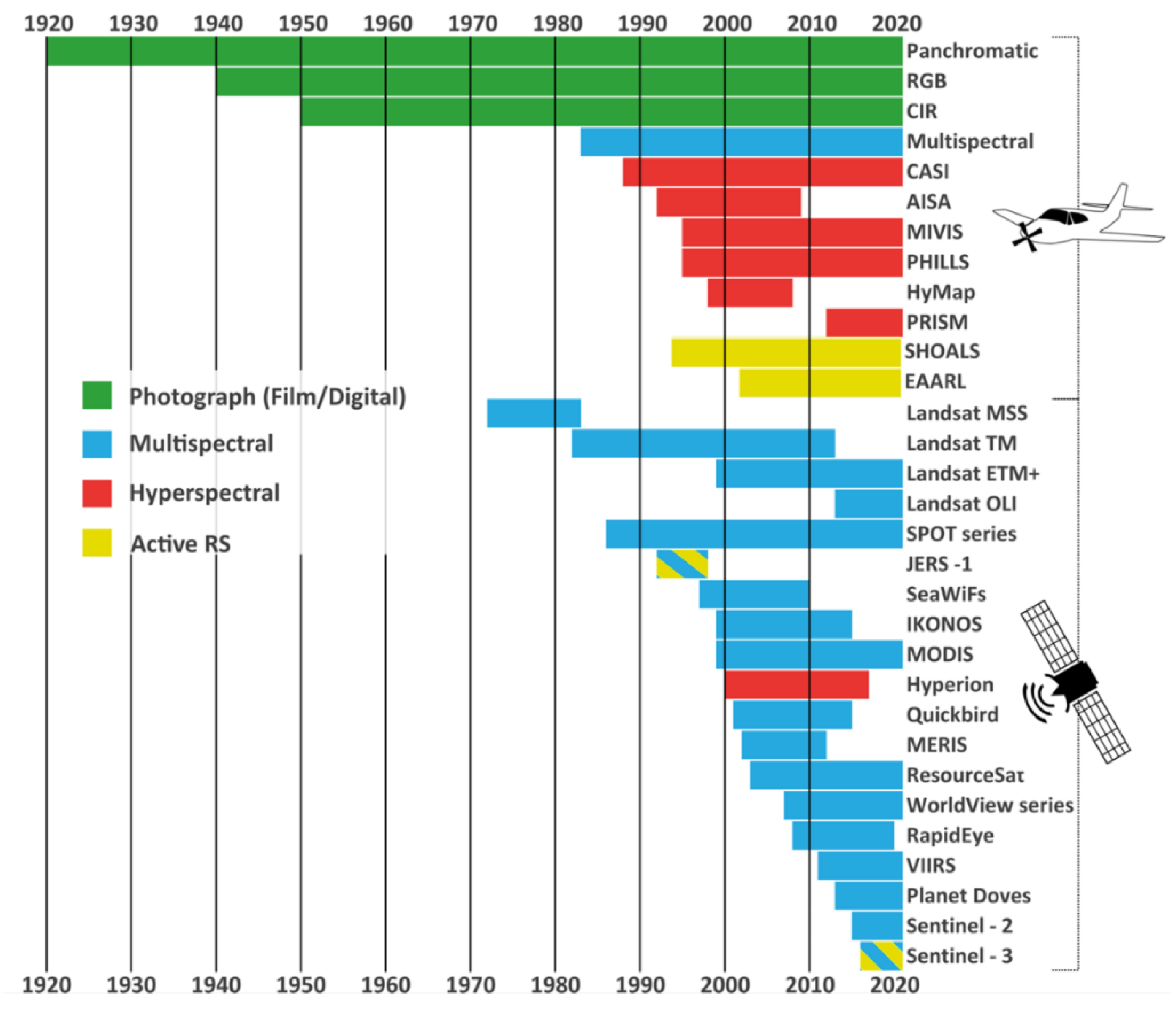

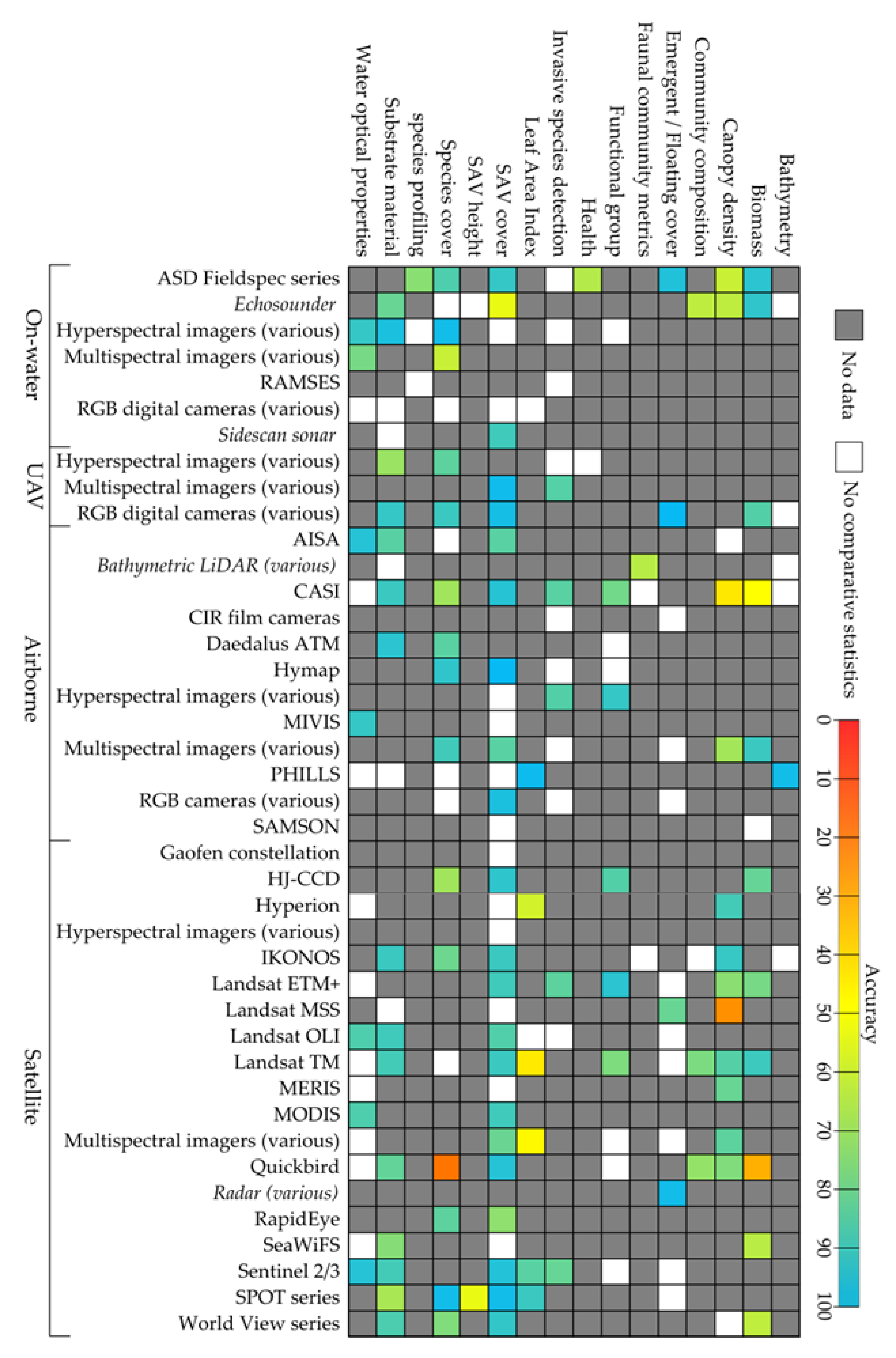

4. Sensors

4.1. Available Sensors

4.2. Advancing Technologies

5. Platforms

5.1. ROVs and AUVs

5.2. Hand-Held, Vessels and Fixed Platforms

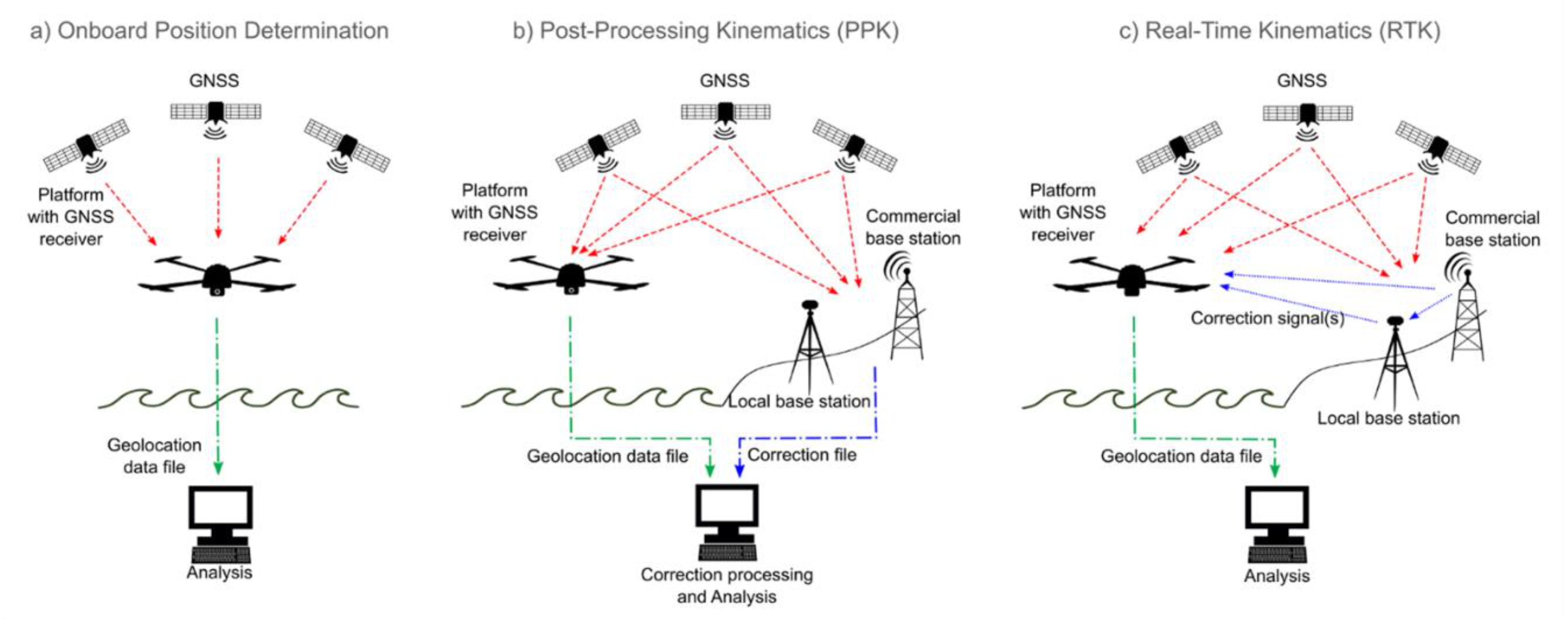

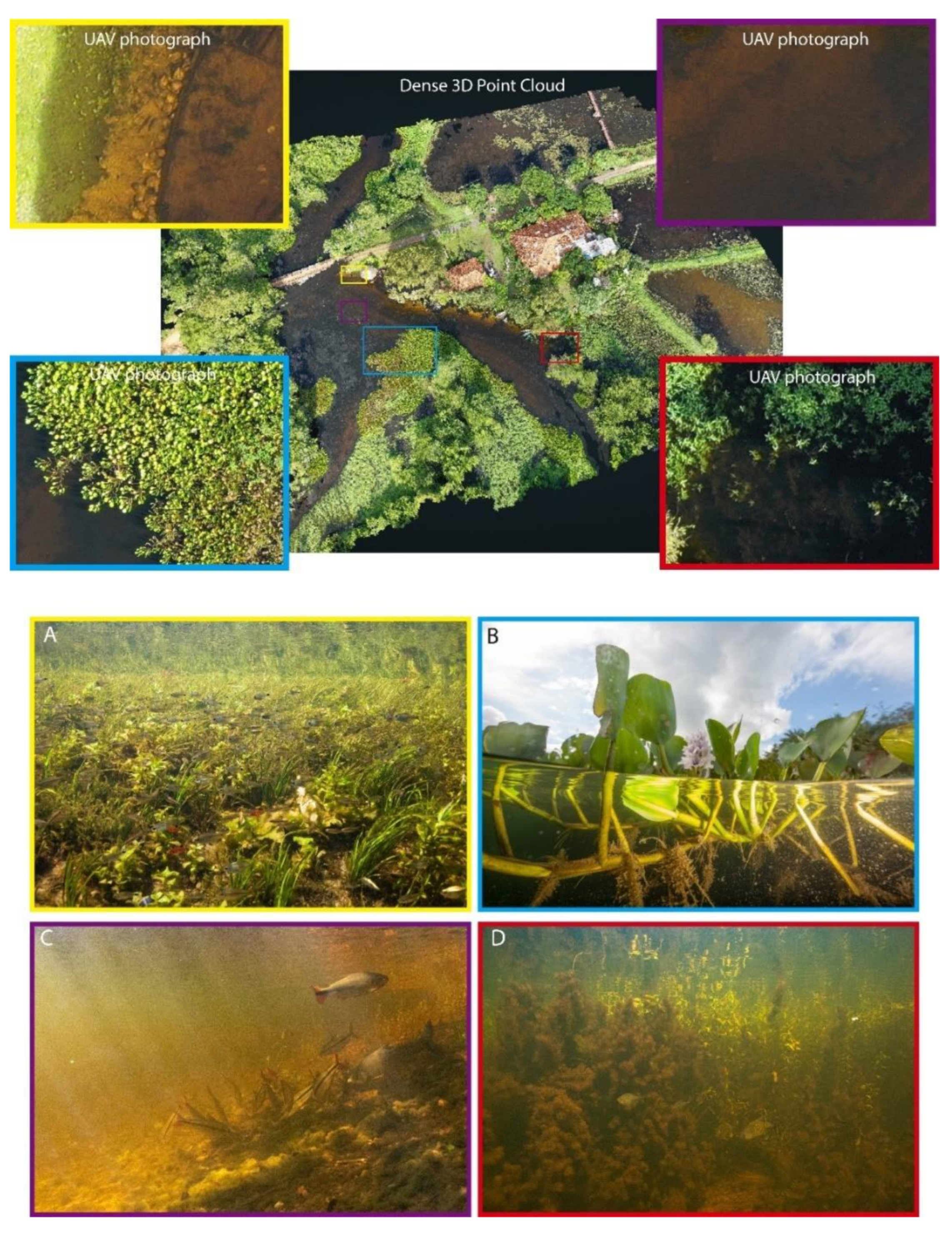

5.3. Unmanned Aerial Vehicles

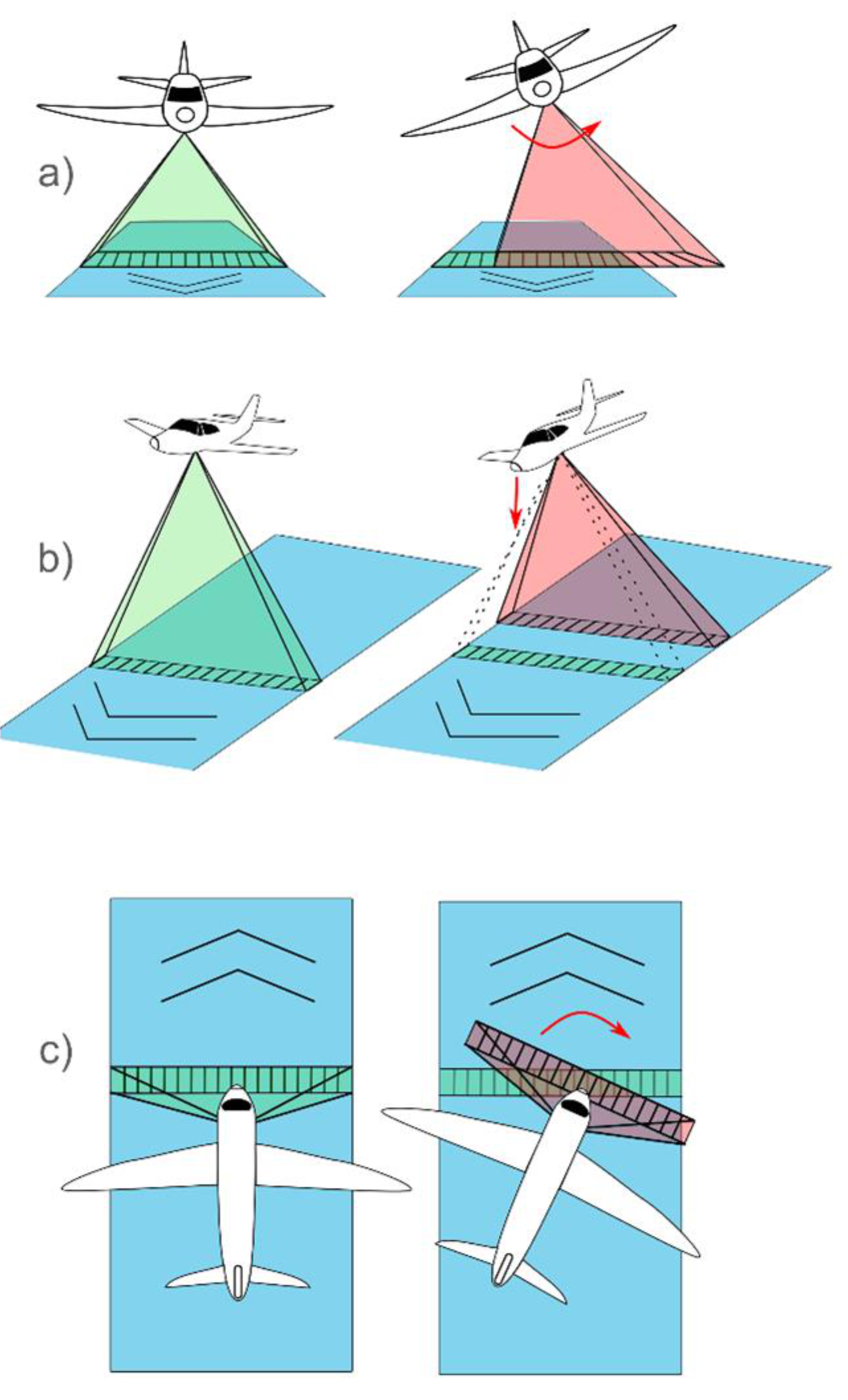

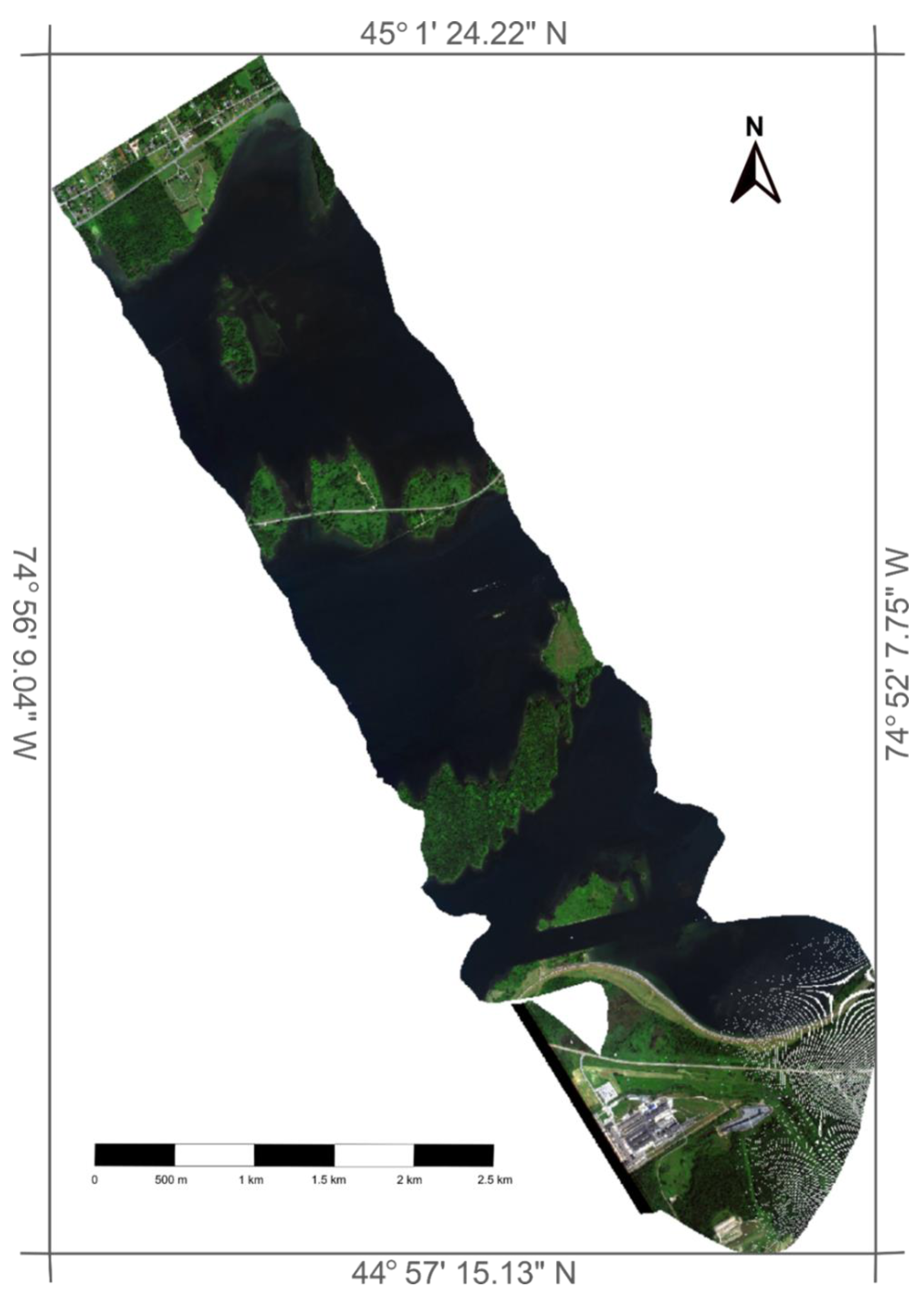

5.4. Manned Aircraft

5.5. Satellite

6. Corrections and Analysis

6.1. Correction of Passive Optical RS Imagery

6.2. Corrections Specific to Aquatic Applications

6.3. Analysis of Passive Optical RS Imagery

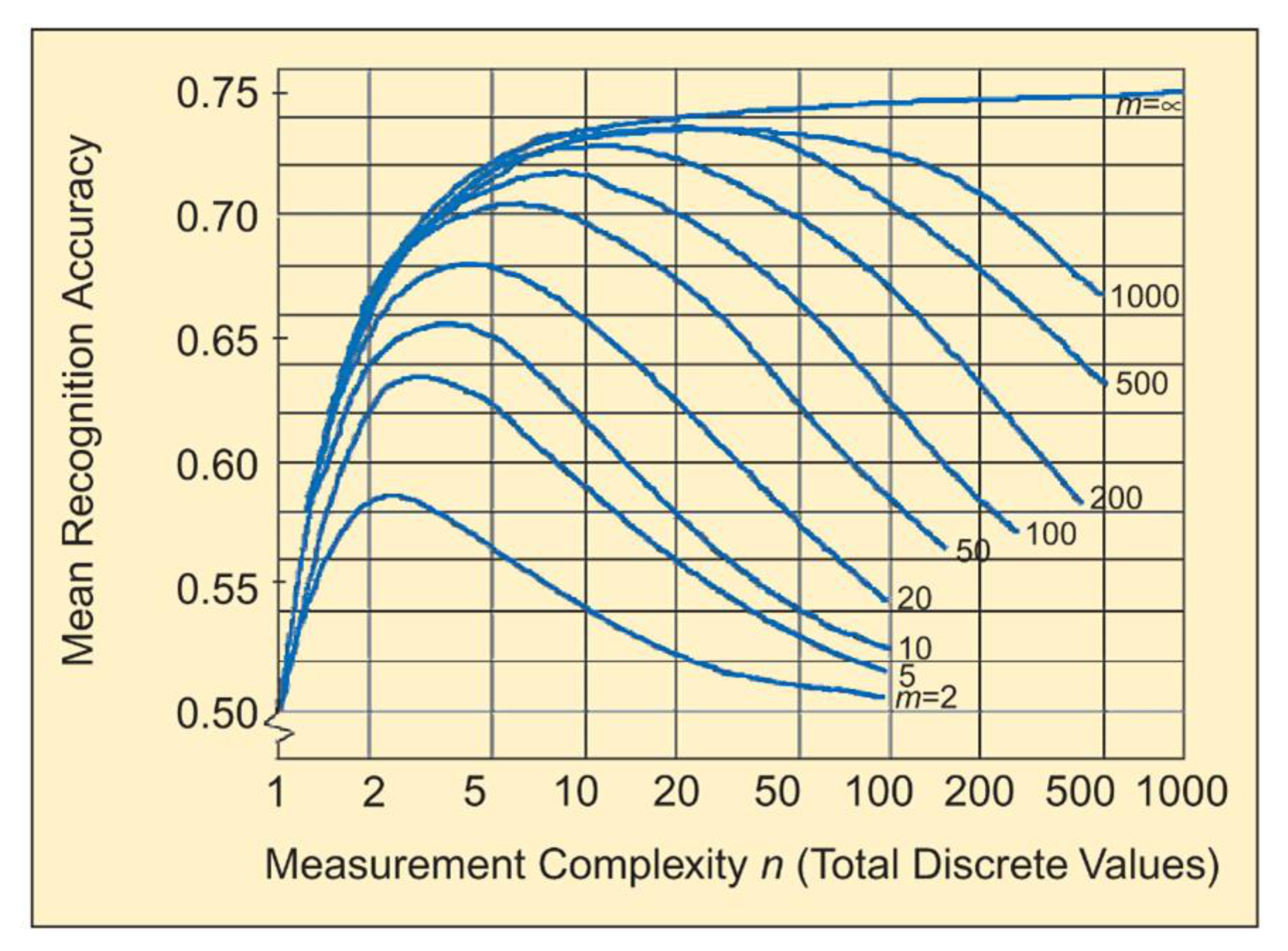

6.3.1. Hyperspectral Dimension Reduction

6.3.2. Indices

6.3.3. Classification and Target Detection

6.3.4. Time-Series and Time Sequence Analyses

6.4. Structure-from-Motion Photogrammetry

7. Applications

7.1. Identification

7.2. Location of SAV (Extent Mapping)

8. Discussion

9. Conclusions

Author Contributions

Funding

Informed Consent Statement

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- United Nations Environment Programme. Out of the Blue: The Value of Seagrasses to the Environment and to People; UNEP: Nairobi, Kenya, 2020. [Google Scholar]

- Jia, Q.; Cao, L.; Yésou, H.; Huber, C.; Fox, A.D. Combating aggressive macrophyte encroachment on a typical Yangtze River lake: Lessons from a long-term remote sensing study of vegetation. Aquat. Ecol. 2017, 51, 177–189. [Google Scholar] [CrossRef]

- Shinkareva, G.L.; Lychagin, M.Y.; Tarasov, M.K.; Pietroń, J.; Chichaeva, M.A.; Chalov, S.R. Biogeochemical specialization of macrophytes and their role as a biofilter in the selenga delta. Geogr. Environ. Sustain. 2019, 12, 240–263. [Google Scholar] [CrossRef]

- Massicotte, P.; Bertolo, A.; Brodeur, P.; Hudon, C.; Mingelbier, M.; Magnan, P. Influence of the aquatic vegetation landscape on larval fish abundance. J. Great Lakes Res. 2015, 41, 873–880. [Google Scholar] [CrossRef]

- Hughes, A.R.; Williams, S.L.; Duarte, C.M.; Heck, K.L., Jr.; Waycott, M. Associations of concern: Declining seagrasses and threatened dependent species. Front. Ecol. Environ. 2009, 7, 242–246. [Google Scholar] [CrossRef]

- Hestir, E.L.; Schoellhamer, D.H.; Greenberg, J.; Morgan-King, T.; Ustin, S.L. The Effect of Submerged Aquatic Vegetation Expansion on a Declining Turbidity Trend in the Sacramento-San Joaquin River Delta. Estuaries Coasts 2016, 39, 1100–1112. [Google Scholar] [CrossRef]

- Wolter, P.T.; Johnston, C.A.; Niemi, G.J. Mapping submergent aquatic vegetation in the US Great Lakes using Quickbird satellite data. Int. J. Remote Sens. 2005, 26, 5255–5274. [Google Scholar] [CrossRef]

- Malthus, T.J. Bio-optical Modeling and Remote Sensing of Aquatic Macrophytes. In Bio-Optical Modeling and Remote Sensing of Inland Waters; Elsevier: Amsterdam, The Netherlands, 2017; pp. 263–308. [Google Scholar] [CrossRef]

- Duffy, J.E.; Benedetti-Cecchi, L.; Trinanes, J.; Muller-Karger, F.E.; Ambo-Rappe, R.; Bostrom, C.; Buschmann, A.H.; Byrnes, J.; Coles, R.G.; Creed, J.; et al. Toward a Coordinated Global Observing System for Seagrasses and Marine Macroalgae. Front. Mar. Sci. 2019, 6, 317. [Google Scholar] [CrossRef]

- Silva, T.S.F.; Costa, M.P.F.; Melack, J.M.; Novo, E.M.L.M. Remote sensing of aquatic vegetation: Theory and applications. Environ. Monit. Assess. 2008, 140, 131–145. [Google Scholar] [CrossRef]

- Zhang, Y.L.; Jeppesen, E.; Liu, X.H.; Qin, B.Q.; Shi, K.; Zhou, Y.Q.; Thomaz, S.M.; Deng, J.M. Global loss of aquatic vegetation in lakes. Earth Sci. Rev. 2017, 173, 259–265. [Google Scholar] [CrossRef]

- Mcleod, E.; Chmura, G.L.; Bouillon, S.; Salm, R.; Björk, M.; Duarte, C.M.; Lovelock, C.E.; Schlesinger, W.H.; Silliman, B.R. A blueprint for blue carbon: Toward an improved understanding of the role of vegetated coastal habitats in sequestering CO2. Front. Ecol. Environ. 2011, 9, 552–560. [Google Scholar] [CrossRef]

- Bostater, C.R.; Ghir, T.; Bassetti, L.; Hall, C.; Reyier, E.; Lowers, R.; Holloway-Adkins, K.; Virnstein, R. Hyperspectral Remote Sensing Protocol Development for Submerged Aquatic Vegetation in Shallow Water. In Proceedings of the SPIE—The International Society for Optical Engineering, Barcelona, Spain, 8–12 September 2003; pp. 199–215. [Google Scholar]

- Ackleson, S.G.; Klemas, V. Remote sensing of submerged aquatic vegetation in lower chesapeake bay: A comparison of Landsat MSS to TM imagery. Remote Sens. Environ. 1987, 22, 235–248. [Google Scholar] [CrossRef]

- O’Neill, J.D.; Costa, M. Mapping eelgrass (Zostera marina) in the Gulf Islands National Park Reserve of Canada using high spatial resolution satellite and airborne imagery. Remote Sens. Environ. 2013, 133, 152–167. [Google Scholar] [CrossRef]

- Vis, C.; Hudon, C.; Carignan, R. An evaluation of approaches used to determine the distribution and biomass of emergent and submerged aquatic macrophytes over large spatial scales. Aquat. Bot. 2003, 77, 187–201. [Google Scholar] [CrossRef]

- He, K.S.; Bradley, B.A.; Cord, A.F.; Rocchini, D.; Tuanmu, M.N.; Schmidtlein, S.; Turner, W.; Wegmann, M.; Pettorelli, N. Will remote sensing shape the next generation of species distribution models? Remote Sens. Ecol. Conserv. 2015, 1, 4–18. [Google Scholar] [CrossRef]

- Visser, F.; Wallis, C.; Sinnott, A.M. Optical remote sensing of submerged aquatic vegetation: Opportunities for shallow clearwater streams. Limnologica 2013, 43, 388–398. [Google Scholar] [CrossRef]

- Saravia, L.A.; Giorgi, A.; Momo, F.R. A photographic method for estimating chlorophyll in periphyton on artificial substrata. Aquat. Ecol. 1999, 33, 325–330. [Google Scholar] [CrossRef]

- Free, G.; Bresciani, M.; Trodd, W.; Tierney, D.; O’Boyle, S.; Plant, C.; Deakin, J. Estimation of lake ecological quality from Sentinel-2 remote sensing imagery. Hydrobiologia 2020, 847, 1423–1438. [Google Scholar] [CrossRef]

- Costa, V.; Serôdio, J.; Lillebø, A.I.; Sousa, A.I. Use of hyperspectral reflectance to non-destructively estimate seagrass Zostera noltei biomass. Ecol. Indic. 2021, 121, 107018. [Google Scholar] [CrossRef]

- Ashraf, S.; Brabyn, L.; Hicks, B.J.; Collier, K. Satellite remote sensing for mapping vegetation in New Zealand freshwater environments: A review. N. Z. Geogr. 2010, 66, 33–43. [Google Scholar] [CrossRef]

- Kalacska, M.; Chmura, G.L.; Lucanus, O.; Bérubé, D.; Arroyo-Mora, J.P. Structure from motion will revolutionize analyses of tidal wetland landscapes. Remote Sens. Environ. 2017, 199, 14–24. [Google Scholar] [CrossRef]

- Rhee, D.S.; Do Kim, Y.; Kang, B.; Kim, D. Applications of unmanned aerial vehicles in fluvial remote sensing: An overview of recent achievements. KSCE J. Civ. Eng. 2018, 22, 588–602. [Google Scholar] [CrossRef]

- Klemas, V. Remote Sensing of Submerged Aquatic Vegetation. In Seafloor Mapping Along Continental Shelves: Research and Techniques for Visualizing Benthic Environments; Finkl, C.W., Makowski, C., Eds.; Springer International Publishing: Cham, Switzerland, 2016; Volume 13, pp. 125–140. [Google Scholar]

- Palmer, S.C.J.; Kutser, T.; Hunter, P.D. Remote sensing of inland waters: Challenges, progress and future directions. Remote Sens. Environ. 2015, 157, 1–8. [Google Scholar] [CrossRef]

- Linchant, J.; Lisein, J.; Semeki, J.; Lejeune, P.; Vermeulen, C. Are unmanned aircraft systems (UASs) the future of wildlife monitoring? A review of accomplishments and challenges. Mammal. Rev. 2015, 45, 239–252. [Google Scholar] [CrossRef]

- Anderson, K.; Gaston, K.J. Lightweight unmmaned aerial vehicles will revolutionize spatial ecology. Front. Ecol. Environ. 2013, 11, 138–146. [Google Scholar] [CrossRef]

- Brando, V.E.; Phinn, S.R. Coastal Aquatic Remote Sensing Applications for Environmental Monitoring and Management. J. Appl. Remote Sens. 2007, 1, 011599. [Google Scholar] [CrossRef][Green Version]

- Visser, F.; Buis, K.; Verschoren, V.; Schoelynck, J. Mapping of submerged aquatic vegetation in rivers from very high-resolution image data, using object-based image analysis combined with expert knowledge. Hydrobiologia 2018, 812, 157–175. [Google Scholar] [CrossRef]

- Dörnhöfer, K.; Oppelt, N. Remote sensing for lake research and monitoring—Recent advances. Ecol. Indic. 2016, 64, 105–122. [Google Scholar] [CrossRef]

- Nelson, S.A.C.; Cheruvelil, K.S.; Soranno, P.A. Satellite remote sensing of freshwater macrophytes and the influence of water clarity. Aquat. Bot. 2006, 85, 289–298. [Google Scholar] [CrossRef]

- Stocks, J.R.; Rodgers, M.P.; Pera, J.B.; Gilligan, D.M. Monitoring aquatic plants: An evaluation of hydroacoustic, on-site digitising and airborne remote sensing techniques. Knowl. Manag. Aquat. Ecosyst. 2019. [Google Scholar] [CrossRef]

- Farster, B.; Huynh, Q.; Messinger, D.; Middleton, C.; Resmini, R.G. Panel: How to Meet the Need for Spectral Expertise. In Spectral Sessions; Schmidt, J., Ed.; L3Harris: Melbourne, FL, USA, 2021; Available online: https://www.l3harrisgeospatial.com/Company/Events/Tradeshows/Spectral-Sessions (accessed on 27 January 2020).

- Hossain, M.S.; Bujang, J.S.; Zakaria, M.H.; Hashim, M. The application of remote sensing to seagrass ecosystems: An overview and future research prospects. Int. J. Remote Sens. 2015, 36, 61–114. [Google Scholar] [CrossRef]

- Collin, A.; Ramambason, C.; Thiault, L.; Nakamura, N.; Pastol, Y.; Casella, E.; Rovere, A.; Espiau, B.; Siu, G.; Lerouvreur, F.; et al. Very high resolution mapping of coral reef state using airborne bathymetric lidar surface-intensity and drone imagery. Int. J. Remote Sens. 2018, 39, 5676–5688. [Google Scholar] [CrossRef]

- Lillesand, T.M.; Kiefer, R.W.; Chipman, J.W. Remote Sensing and Image Interpretation, 6th ed.; John Wiley & Sons: Hoboken, NJ, USA, 2008. [Google Scholar]

- Manolakis, D.; Lockwood, R.; Cooley, T. Hyperspectral Imaging Remote Sensing: Physics, Sensors, and Algorithms; Cambridge University Press: Cambridge, UK, 2016. [Google Scholar]

- Huff, L.C. Acoustic Remote Sensing as a Tool for Habitat Mapping in Alaska Waters. In Marine Habitat Mapping Technology for Alaska; Reynolds, J.R., Greene, H.G., Eds.; University of Fairbanks: Fairbanks, AK, USA, 2008. [Google Scholar] [CrossRef]

- Inamdar, D.; Kalacska, M.; LeBlanc, G.; Arroyo-Mora, J.P. Characterizing and Mitigating Sensor Generated Spatial Correlations in Airborne Hyperspectral Imaging Data. Remote Sens. 2020, 12, 641. [Google Scholar] [CrossRef]

- Giardino, C.; Brando, V.E.; Gege, P.; Pinnel, N.; Hochberg, E.; Knaeps, E.; Reusen, I.; Doerffer, R.; Bresciani, M.; Braga, F.; et al. Imaging Spectrometry of Inland and Coastal Waters: State of the Art, Achievements and Perspectives. Surv. Geophys. 2019, 40, 401–429. [Google Scholar] [CrossRef]

- Pu, R.; Bell, S. Mapping seagrass coverage and spatial patterns with high spatial resolution IKONOS imagery. Int. J. Appl. Earth Obs. Geoinf. 2017, 54, 145–158. [Google Scholar] [CrossRef]

- Wolf, P.; Rößler, S.; Schneider, T.; Melzer, A. Collecting in situ remote sensing reflectances of submersed macrophytes to build up a spectral library for lake monitoring. Eur. J. Remote Sens. 2013, 46, 401–416. [Google Scholar] [CrossRef]

- Markham, B.L.; Arvidson, T.; Barsi, J.A.; Choate, M.; Kaita, E.; Levy, R.; Lubke, M.; Masek, J.G. 1.03—Landsat Program. In Comprehensive Remote Sensing; Liang, S., Ed.; Elsevier: Oxford, UK, 2018; pp. 27–90. [Google Scholar] [CrossRef]

- Planet. Planet Imagery Product Specifications. Available online: https://assets.planet.com/docs/Planet_Combined_Imagery_Product_Specs_letter_screen.pdf (accessed on 7 February 2020).

- Klemas, V. Remote Sensing Techniques for Studying Coastal Ecosystems: An Overview. J. Coast. Res. 2011, 27, 2–17. [Google Scholar] [CrossRef]

- Kirk, J.T.O. Light and Photosynthesis in Aquatic Ecosystems, 2nd ed.; Cambridge University Press: Cambridge, UK, 1994; p. 509. [Google Scholar]

- Seyhan, E.; Dekker, A. Application of remote sensing techniques for water quality monitoring. Hydrobiol. Bull. 1986, 20, 41–50. [Google Scholar] [CrossRef]

- Davie, A.; Hartmann, K.; Timms, G.; De Groot, M.; McCulloch, J. Benthic Habitat Mapping with Autonomous Underwater Vehicles. In Proceedings of the OCEANS 2008, Quebec City, QC, Canada, 15–18 September 2008. [Google Scholar]

- Bale, A.J.; Tocher, M.D.; Weaver, R.; Hudson, S.J.; Aiken, J. Laboratory measurements of the spectral properties of estuarine suspended particles. Neth. J. Aquat. Ecol. 1994, 28, 237–244. [Google Scholar] [CrossRef]

- Han, L.; Rundquist, D.C. The spectral responses of Ceratophyllum demersum at varying depths in an experimental tank. Int. J. Remote Sens. 2003, 24, 859–864. [Google Scholar] [CrossRef]

- Johnsen, G.; Volent, Z.; Dierssen, H.; Pettersen, R.; Ardelan, M.V.; Søreide, F.; Fearns, P.; Ludvigsen, M.; Moline, M. Underwater Hyperspectral Imagery to Create Biogeochemical Maps of Seafloor Properties. In Subsea Optics and Imaging; Watson, J., Zielinski, O., Eds.; Woodhead Publishing: Oxford, UK, 2013; pp. 508–540e. [Google Scholar] [CrossRef]

- Chirayath, V.; Earle, S.A. Drones that see through waves—Preliminary results from airborne fluid lensing for centimetre-scale aquatic conservation. Aquat. Conserv. Mar. Freshw. Ecosyst. 2016, 26, 237–250. [Google Scholar] [CrossRef]

- Kislik, C.; Genzoli, L.; Lyons, A.; Kelly, M. Application of UAV imagery to detect and quantify submerged filamentous algae and rooted macrophytes in a non-wadeable river. Remote Sens. 2020, 12, 3332. [Google Scholar] [CrossRef]

- Gates, D.M.; Keegan, H.J.; Schleter, J.C.; Weidner, V.R. Spectral Properties of Plants. Appl. Opt. 1965, 4, 11–20. [Google Scholar] [CrossRef]

- Fyfe, S.K. Spatial and temporal variation in spectral reflectance: Are seagrass species spectrally distinct? Limnol. Oceanogr. 2003, 48, 464–479. [Google Scholar] [CrossRef]

- Cho, H.J. Depth-variant spectral characteristics of submersed aquatic vegetation detected by Landsat 7 ETM+. Int. J. Remote Sens. 2007, 28, 1455–1467. [Google Scholar] [CrossRef]

- Haghi Vayghan, A.; Poorbagher, H.; Taheri Shahraiyni, H.; Fazli, H.; Nasrollahzadeh Saravi, H. Suitability indices and habitat suitability index model of Caspian kutum (Rutilus frisii kutum) in the southern Caspian Sea. Aquat. Ecol. 2013, 47, 441–451. [Google Scholar] [CrossRef]

- Purkis, S.J.; Graham, N.A.J.; Riegl, B.M. Predictability of reef fish diversity and abundance using remote sensing data in Diego Garcia (Chagos Archipelago). Coral Reefs 2008, 27, 167–178. [Google Scholar] [CrossRef]

- Rotta, L.H.S.; Mishra, D.R.; Watanabe, F.S.Y.; Rodrigues, T.W.P.; Alcântara, E.H.; Imai, N.N. Analyzing the feasibility of a space-borne sensor (SPOT-6) to estimate the height of submerged aquatic vegetation (SAV) in inland waters. ISPRS J. Photogramm. Remote Sens. 2018, 144, 341–356. [Google Scholar] [CrossRef]

- Gao, Y.; Li, Q.; Wang, S.; Gao, J. Adaptive neural network based on segmented particle swarm optimization for remote-sensing estimations of vegetation biomass. Remote Sens. Environ. 2018, 211, 248–260. [Google Scholar] [CrossRef]

- Rotta, L.H.; Mishra, D.R.; Alcântara, E.; Imai, N.; Watanabe, F.; Rodrigues, T. K d(PAR) and a depth based model to estimate the height of submerged aquatic vegetation in an oligotrophic reservoir: A case study at Nova Avanhandava. Remote Sens. 2019, 11, 317. [Google Scholar] [CrossRef]

- Hall, C.R.; Bostater, C.R.; Virnstein, R.W. Implementation of a ground truth process for development of a submerged aquatic vegetation (SAV) mapping protocol using hyperspectral imagery. Remote Sens. OceanSea IceLarge Water Reg. 2006, 6360. [Google Scholar] [CrossRef]

- Hugue, F.; Lapointe, M.; Eaton, B.C.; Lepoutre, A. Satellite-based remote sensing of running water habitats at large riverscape scales: Tools to analyze habitat heterogeneity for river ecosystem management. Geomorphology 2016, 253, 353–369. [Google Scholar] [CrossRef]

- Lee, Z.; Carder, K.L.; Chen, R.F.; Peacock, T.G. Properties of the water column and bottom derived from Airborne Visible Infrared Imaging Spectrometer (AVIRIS) data. J. Geophys. Res. Ocean. 2001, 106, 11639–11651. [Google Scholar] [CrossRef]

- Giardino, C.; Candiani, G.; Bresciani, M.; Lee, Z.; Gagliano, S.; Pepe, M. BOMBER: A tool for estimating water quality and bottom properties from remote sensing images. Comput. Geosci. 2012, 45, 313–318. [Google Scholar] [CrossRef]

- Hudon, C.; Lalonde, S.; Gagnon, P. Ranking the effects of site exposure, plant growth form, water depth, and transparency on aquatic plant biomass. Can. J. Fish. Aquat.Sci. 2000, 57, 31–42. [Google Scholar] [CrossRef]

- Hill, V.J.; Zimmerman, R.C.; Bissett, W.P.; Dierssen, H.; Kohler, D.D.R. Evaluating Light Availability, Seagrass Biomass, and Productivity Using Hyperspectral Airborne Remote Sensing in Saint Joseph’s Bay, Florida. Estuaries Coasts 2014, 37, 1467–1489. [Google Scholar] [CrossRef]

- Greene, A.; Rahman, A.F.; Kline, R.; Rahman, M.S. Side scan sonar: A cost-efficient alternative method for measuring seagrass cover in shallow environments. Estuar. Coast. Shelf Sci. 2018, 207, 250–258. [Google Scholar] [CrossRef]

- Bennett, D.L.; Bister, T.J.; Ott, R.A. Using Recreation-Grade Side-Scan Sonar to Produce Classified Maps of Submerged Aquatic Vegetation. N. Am. J. Fish. Manag. 2020, 40, 145–153. [Google Scholar] [CrossRef]

- Mizuno, K.; Asada, A.; Ban, S.; Uehara, Y.; Ishida, T.; Okuda, N. Validation of a high-resolution acoustic imaging sonar method by estimating the biomass of submerged plants in shallow water. Ecol. Inf. 2018, 46, 179–184. [Google Scholar] [CrossRef]

- Abukawa, K.; Yamamuro, M.; Kikvidze, Z.; Asada, A.; Xu, C.; Sugimoto, K. Assessing the biomass and distribution of submerged aquatic vegetation using multibeam echo sounding in Lake Towada, Japan. Limnology 2013, 14, 39–42. [Google Scholar] [CrossRef]

- Wedding, L.M.; Friedlander, A.M.; McGranaghan, M.; Yost, R.S.; Monaco, M.E. Using bathymetric lidar to define nearshore benthic habitat complexity: Implications for management of reef fish assemblages in Hawaii. Remote Sens. Environ. 2008, 112, 4159–4165. [Google Scholar] [CrossRef]

- Nayegandhi, A.; Brock, J.C.; Wright, C.W. Small-footprint, waveform-resolving lidar estimation of submerged and sub-canopy topography in coastal environments. Int. J. Remote Sens. 2009, 30, 861–878. [Google Scholar] [CrossRef]

- Andersson, B. Identification and inventory of aquatic plant communities using remote sensing. Folia Geobot. Phytotaxon. 1990, 25, 227–233. [Google Scholar] [CrossRef]

- Valta-Hulkkonen, K.; Kanninen, A.; Pellikka, P. Remote sensing and GIS for detecting changes in the aquatic vegetation of a rehabilitated lake. Int. J. Remote Sens. 2004, 25, 5745–5758. [Google Scholar] [CrossRef]

- Flynn, K.F.; Chapra, S.C. Remote sensing of submerged aquatic vegetation in a shallow non-turbid river using an unmanned aerial vehicle. Remote Sens. 2014, 6, 12815–12836. [Google Scholar] [CrossRef]

- Nahirnick, N.K.; Hunter, P.; Costa, M.; Schroeder, S.; Sharma, T. Benefits and challenges of UAS imagery for eelgrass (Zostera marina) mapping in small estuaries of the Canadian West Coast. J. Coast. Res. 2019, 35, 673–683. [Google Scholar] [CrossRef]

- Husson, E.; Ecke, F.; Reese, H. Comparison of manual mapping and automated object-based image analysis of non-submerged aquatic vegetation from very-high-resolution UAS images. Remote Sens. 2016, 8, 724. [Google Scholar] [CrossRef]

- Chabot, D.; Dillon, C.; Shemrock, A.; Weissflog, N.; Sager, E.P.S. An object-based image analysis workflow for monitoring shallow-water aquatic vegetation in multispectral drone imagery. ISPRS Int. J. Geo-Inf. 2018, 7, 294. [Google Scholar] [CrossRef]

- Zharikov, V.V.; Bazarov, K.Y.; Egidarev, E.G.; Lebedev, A.M. Application of Landsat Data for Mapping Higher Aquatic Vegetation of the Far East Marine Reserve. Oceanology 2018, 58, 487–496. [Google Scholar] [CrossRef]

- Bakirman, T.; Gumusay, M.U.; Tuney, I. Mapping of the Seagrass Cover Along the Mediterranean Coast of Turkey Using Landsat 8 OLI Images. In Proceedings of the International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences—ISPRS Archives, Prague, Czech Republic, 12–19 July 2016; pp. 1103–1105. [Google Scholar]

- Hunter, P.D.; Gilvear, D.J.; Tyler, A.N.; Willby, N.J.; Kelly, A. Mapping macrophytic vegetation in shallow lakes using the Compact Airborne Spectrographic Imager (CASI). Aquat. Conserv. Mar. Freshw. Ecosyst. 2010, 20, 717–727. [Google Scholar] [CrossRef]

- Pu, R.; Bell, S.; Meyer, C.; Baggett, L.; Zhao, Y. Mapping and assessing seagrass along the western coast of Florida using Landsat TM and EO-1 ALI/Hyperion imagery. Estuar. Coast. Shelf Sci. 2012, 115, 234–245. [Google Scholar] [CrossRef]

- Klemas, V. Remote Sensing of Emergent and Submerged Wetlands: An Overview. Int. J. Remote Sens. 2013, 34, 6286–6320. [Google Scholar] [CrossRef]

- Costa, M.P.F.; Niemann, O.; Novo, E.; Ahern, F. Biophysical properties and mapping of aquatic vegetation during the hydrological cycle of the Amazon floodplain using JERS-1 and Radarsat. Int. J. Remote Sens. 2002, 23, 1401–1426. [Google Scholar] [CrossRef]

- Idzanovic, M.; Ophaug, V.; Andersen, O.B. Coastal sea-level in Norway from CryoSat-2 SAR altimetry. Adv. Space Res. 2018, 62. [Google Scholar] [CrossRef]

- Wilson, B.A.; Rashid, H. Monitoring the 1997 flood in the Red River Valley using hydrologic regimes and RADARSAT imagery. Can. Geogr. 2005, 49, 100–109. [Google Scholar] [CrossRef]

- Dwivedi, R.S.; Rao, B.R.M.; Bhattacharya, S. Mapping wetlands of the Sundaban Delta and it’s environs using ERS-1 SAR data. Int. J. Remote Sens. 1999, 20, 2235–2247. [Google Scholar] [CrossRef]

- Ford, K.H.; Voss, S.; Evans, N.T. Reproducibility, Precision, and Accuracy of a Hydroacoustic Method to Estimate Seagrass Canopy Height and Percent Cover in Massachusetts. Estuaries Coasts 2019. [Google Scholar] [CrossRef]

- Aasen, H.; Honkavaara, E.; Lucieer, A.; Zarco-Tejada, P.J. Quantitative Remote Sensing at Ultra-High Resolution with UAV Spectroscopy: A Review of Sensor Technology, Measurement Procedures, and Data Correction Workflows. Remote Sens. 2018, 10, 1091. [Google Scholar] [CrossRef]

- Cubert GmbH. Cubert Hyperspectral Video Cameras. Available online: https://cubert-gmbh.com/cubert-spectral-cameras/ (accessed on 14 July 2020).

- Madden, M. Remote sensing and geographic information system operations for vegetation mapping of invasive exotics. Weed Technol. 2004, 18, 1457–1463. [Google Scholar] [CrossRef]

- Valta-Hulkkonen, K.; Kanninen, A.; Ilvonen, R.; Leka, J. Assessment of aerial photography as a method for monitoring aquatic vegetation in lakes of varying trophic status. Boreal Environ. Res. 2005, 10, 57–66. [Google Scholar]

- Jallad, A.-H.; Marpu, P.; Abdul Aziz, Z.; Al Marar, A.; Awad, M. MeznSat—A 3U CubeSat for Monitoring Greenhouse Gases Using Short Wave Infra-Red Spectrometry: Mission Concept and Analysis. Aerospace 2019, 6, 118. [Google Scholar] [CrossRef]

- Byfield, V. Optical Remote Sensing of Marine, Coastal, and Inland Waters. In Handbook of Optoelectronics, Second Edition: Applied Optical Electronics Volume Three; CRC Press: Boca Raton, FL, USA, 2017; pp. 103–114. [Google Scholar] [CrossRef]

- Tatem, A.J.; Goetz, S.J.; Hay, S.I. Fifty Years of Earth-Observation Satellites. Available online: https://www.americanscientist.org/article/fifty-years-of-earth-observation-satellites (accessed on 23 May 2020).

- McIlwaine, B.; Casado, M.R.; Leinster, P. Using 1st Derivative Reflectance Signatures within a Remote Sensing Framework to Identify Macroalgae in Marine Environments. Remote Sens. 2019, 11, 704. [Google Scholar] [CrossRef]

- TriOS. RAMSES. Available online: https://www.trios.de/en/ramses.html (accessed on 24 November 2020).

- Panalytical, M. ASD Range. Available online: https://www.malvernpanalytical.com/en/products/product-range/asd-range (accessed on 20 March 2020).

- Spectra Vista Corporation. HR-640i—High Resolution Field Portable Spectroradiometer. Available online: https://www.spectravista.com/our-instruments/hr-640i/ (accessed on 20 March 2020).

- Ocean Insight. Spectrometers. Available online: https://www.oceaninsight.com/products/spectrometers/ (accessed on 14 July 2020).

- Spectral Evolution. Products. Available online: https://spectralevolution.com/products/hardware/ (accessed on 10 March 2020).

- Muller-Karger, F.E.; Hestir, E.; Ade, C.; Turpie, K.; Roberts, D.A.; Siegel, D.; Miller, R.J.; Humm, D.; Izenberg, N.; Keller, M.; et al. Satellite sensor requirements for monitoring essential biodiversity variables of coastal ecosystems. Ecol. Appl. 2018, 28, 749–760. [Google Scholar] [CrossRef]

- Mouroulis, P.; Van Gorp, B.; Green, R.O.; Dierssen, H.; Wilson, D.W.; Eastwood, M.; Boardman, J.; Gao, B.-C.; Cohen, D.; Franklin, B.; et al. Portable Remote Imaging Spectrometer coastal ocean sensor: Design, characteristics, and first flight results. Appl. Opt. 2014, 53, 1363–1380. [Google Scholar] [CrossRef]

- Qian, S.; Bergeron, M.; Djazovski, O.; Maszkiewicz, M.; Girard, R.; Kappus, M.; Bowles, J.; Mannino, A.; Matuszeski, A.; Furlong, M.; et al. A Spaceborne Coastal and Inland Water Color Hyperspectral Imager. In Proceedings of the 2017 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Fort Worth, TX, USA, 23–28 July 2017; pp. 447–450. [Google Scholar]

- Achal, S.; SQian, S.-E.; Bergeron, M.; Liu, P.; Umana Diaz, A.; Leung, R. WaterSat Imaging Spectrometer Experiment (WISE) for Canadian Microsatellite Mission. In Proceedings of the Ocean Optics XXIV, Dubrovnik, Croatia, 7–12 October 2018. [Google Scholar]

- Del Castillo, C.; Platnick, S. Pre-Aerosol, Clouds and ocean Ecosystem (PACE) Mission Science Definition Team Report; NASA: Washington, DC, USA, 2012. [Google Scholar]

- Fu, L.-L. SWOT: Tracking Water on Earth from Mountains to the Deep Sea. Available online: https://swot.jpl.nasa.gov/system/documents/files/2229_2229_swot_introduction_fu.pdf?undefined (accessed on 14 July 2020).

- Mission: AirSWOT. Available online: https://swot.jpl.nasa.gov/mission/airswot/ (accessed on 10 December 2020).

- Grewal, M.S.; Weill, L.R.; Andrews, A.P. Fundamentals of Satellite and Inertial Navigation. In Global Positioning Systems, Inertial Navigation, and Integration; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 2006; pp. 18–52. [Google Scholar] [CrossRef]

- Daakir, M.; Pierrot-Deseilligny, M.; Bosser, P.; Pichard, F.; Thom, C.; Rabot, Y.; Martin, O. Lightweight UAV with on-board photogrammetry and single-frequency GPS positioning for metrology applications. ISPRS J. Photogramm. Remote Sens. 2017, 127, 115–126. [Google Scholar] [CrossRef]

- Zhang, H.; Aldana-Jaquw, E.; Clapuyt, F.; Wilken, F.; Vanacker, V.; Van Oost, K. Evaluating the potential of post-processing kinematic (PPK) georeferencing for UAV-based structure-from-motion (SfM) photogrammetry and surface change detection. Earth Surf. Dyn. 2019, 7, 807–827. [Google Scholar] [CrossRef]

- Kalacska, M.; Lucanus, O.; Arroyo-Mora, J.P.; Laliberté, É.; Elmer, K.; Leblanc, G.; Groves, A. Accuracy of 3D Landscape Reconstruction without Ground Control Points Using Different UAS Platforms. Drones 2020, 4, 13. [Google Scholar] [CrossRef]

- Koenig, F.; Wong, D. Real-Time Kinematics Global Positioning System (GPS) Operation and Setup Method for the Synchronous Impulse Reconstruction (SIRE) Radar; U.S. Army Research Laboratory: Adelphi, MD, USA, 2010; p. 22. [Google Scholar]

- Joyce, K.E.; Duce, S.; Leahy, S.M.; Leon, J.; Maier, S.W. Principles and practice of acquiring drone-based image data in marine environments. Mar. Freshw. Res. 2019, 70, 952–963. [Google Scholar] [CrossRef]

- Bostater, C.R.; Bassetti, L. Detecting Submerged Features in Water: Modeling, Sensors and Measurements. In Proceedings of the Remote Sensing of the Ocean and Sea Ice, Maspalomas, Canary Islands, Spain, 13–16 September 2004; pp. 87–97. [Google Scholar]

- Chadwick, B. Remotely Operated Vehicles (ROVs) and Autonomous Underwater Vehicles (AUVs). Available online: https://oceanexplorer.noaa.gov/explorations/02fire/background/rovs_auvs/rov_auv.html (accessed on 14 July 2020).

- Antelme, M.; Boon, J.; Mills, O. Search for Endurance Ends. Available online: https://weddellseaexpedition.org/news/search-for-endurance-ends/ (accessed on 13 December 2020).

- Lippsett, L. RIPABE: The pioneering Autonomous Benthic Explorer is lost at sea. Oceanus 2010, 48, 42–44. [Google Scholar]

- Odetti, A.; Bibuli, M.; Bruzzone, G.; Caccia, M.; Spirandelli, E.; Bruzzone, G. e-URoPe: A reconfigurable AUV/ROV for man-robot underwater cooperation. IFAC-PapersOnLine 2017, 50, 11203–11208. [Google Scholar] [CrossRef]

- Roelfsema, C.; Lyons, M.; Dunbabin, M.; Kovacs, E.M.; Phinn, S. Integrating field survey data with satellite image data to improve shallow water seagrass maps: The role of AUV and snorkeller surveys? Remote Sens. Lett. 2015, 6, 135–144. [Google Scholar] [CrossRef]

- Thaler, A.D.; Freitag, A.; Bergman, E.; Fretz, D.; Saleu, W. Robots as vectors for marine invasions: Best practices for minimizing transmission of invasive species via observation-class ROVs. Trop. Conserv. Sci. 2015, 8, 711–717. [Google Scholar] [CrossRef]

- Elmer, K.; Soffer, R.J.; Arroyo-Mora, J.P.; Kalacska, M. ASDToolkit: A Novel MATLAB Processing Toolbox for ASD Field Spectroscopy Data. Data 2020, 5, 96. [Google Scholar] [CrossRef]

- Watts, A.C.; Ambrosia, V.G.; Hinkley, E.A. Unmanned aircraft systems in remote sensing and scientific research: Classification and considerations of use. Remote Sens. 2012, 4, 1671–1692. [Google Scholar] [CrossRef]

- Hruska, R.; Mitchell, J.; Anderson, M.; Glenn, N.F. Radiometric and Geometric Analysis of Hyperspectral Imagery Acquired from an Unmanned Aerial Vehicle. Remote Sens. 2012, 4, 2736–2752. [Google Scholar] [CrossRef]

- Arroyo-Mora, J.P.; Kalacska, M.; Inamdar, D.; Soffer, R.; Lucanus, O.; Gorman, J.; Naprstek, T.; Schaaf, E.S.; Ifimov, G.; Elmer, K.; et al. Implementation of a UAV–Hyperspectral Pushbroom Imager for Ecological Monitoring. Drones 2019, 3, 12. [Google Scholar] [CrossRef]

- Lucieer, A.; Malenovský, Z.; Veness, T.; Wallace, L. HyperUAS—Imaging Spectroscopy from a Multirotor Unmanned Aircraft System. J. Field Rob. 2014, 31, 571–590. [Google Scholar] [CrossRef]

- Turner, D.; Lucieer, A.; McCabe, M.; Parkes, S.; Clarke, I. Pushbroom Hyperspectral Imaging from an Unmanned Aircraft System (Uas)—Geometric Processingworkflow and Accuracy Assessment. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, XLII-2/W6, 379–384. [Google Scholar] [CrossRef]

- Arroyo-Mora, J.P.; Kalacska, M.; Soffer, R.; Ifimov, G.; Leblanc, G.; Schaaf, E.S.; Lucanus, O. Evaluation of phenospectral dynamics with Sentinel-2A using a bottom-up approach in a northern ombrotrophic peatland. Remote Sens. Environ. 2018, 216, 544–560. [Google Scholar] [CrossRef]

- Soffer, R.; Ifimov, G.; Arroyo-Mora, J.P.; Kalacska, M. Validation of Airborne Hyperspectral Imagery from Laboratory Panel Characterization to Image Quality Assessment: Implications for an Arctic Peatland Surrogate Simulation Site. Can. J. Remote Sens. 2019, 45, 476–508. [Google Scholar] [CrossRef]

- Klemas, V. Airborne Remote Sensing of Coastal Features and Processes: An Overview. J. Coast. Res. 2013, 29, 239–255. [Google Scholar] [CrossRef]

- Lehmann, A.; Lachavanne, J.B. Geographic information systems and remote sensing in aquatic botany. Aquat. Bot. 1997, 58, 195–207. [Google Scholar] [CrossRef]

- Heblinski, J.; Schmieder, K.; Heege, T.; Agyemang, T.K.; Sayadyan, H.; Vardanyan, L. High-resolution satellite remote sensing of littoral vegetation of Lake Sevan (Armenia) as a basis for monitoring and assessment. Hydrobiologia 2011, 661, 97–111. [Google Scholar] [CrossRef]

- Planet. 50 cm SkySat Imagery Now Available. Available online: https://www.planet.com/50cm/ (accessed on 11 July 2020).

- Satellite Imaging Corporation. WorldView-3 Satellite Sensor. Available online: https://www.satimagingcorp.com/satellite-sensors/worldview-3/ (accessed on 7 February 2020).

- European Space Agency. The Earth Observation Handbook: Key Tables; European Space Agency: Frascati, Italy, 2010. [Google Scholar]

- Ripley, H.T.; Dobberfuhl, D.; Hart, C. Mapping Submerged Aquatic Vegetation with Hyperspectral Techniques. In Proceedings of the Oceans 2009, Biloxi, MS, USA, 26–29 October 2009; p. 1999. [Google Scholar]

- Lekki, J.; Anderson, R.; Avouris, D.; Becker, R.; Churnside, J.; Cline, M.; Demers, J.; Leshkevich, G.; Liou, L.; Luvall, J.; et al. Airborne Hyperspectral Sensing of Harmful Algal Blooms in the Great Lakes Region: System Calibration and Validation; NASA: Washington, DC, USA, 2017. [Google Scholar]

- Agjee, N.; Mutanga, O.; Ismail, R. Remote sensing bio-control damage on aquatic invasive alien plant species. South. Afr. J. Geomat. 2015, 4, 464–485. [Google Scholar] [CrossRef][Green Version]

- Albright, T.P.; Ode, D.J. Monitoring the dynamics of an invasive emergent macrophyte community using operational remote sensing data. Hydrobiologia 2011, 661, 469–474. [Google Scholar] [CrossRef]

- Armstrong, R.A. Remote sensing of submerged vegetation canopies for biomass estimation. Int. J. Remote Sens. 1993, 14, 621–627. [Google Scholar] [CrossRef]

- Bolpagni, R.; Bresciani, M.; Laini, A.; Pinardi, M.; Matta, E.; Ampe, E.M.; Giardino, C.; Viaroli, P.; Bartoli, M. Remote sensing of phytoplankton-macrophyte coexistence in shallow hypereutrophic fluvial lakes. Hydrobiologia 2014, 737, 67–76. [Google Scholar] [CrossRef]

- Boschi, L.S.; Galo, M.; Rotta, L.H.S.; Watanabe, F.S.Y. Mapping the Bio-volume of Submerged Aquatic Vegetation through Hydro-acoustic Data and High-Resolution Multi-Spectral Imaging. Planta Daninha 2012, 30, 525–539. [Google Scholar] [CrossRef][Green Version]

- Brinkhoff, J.; Hornbuckle, J.; Barton, J.L. Assessment of Aquatic Weed in Irrigation Channels Using UAV and Satellite Imagery. Water 2018, 10, 1497. [Google Scholar] [CrossRef]

- Cai, F.; Lu, W.; Shi, W.; He, S. A mobile device-based imaging spectrometer for environmental monitoring by attaching a lightweight small module to a commercial digital camera. Sci. Rep. 2017, 7. [Google Scholar] [CrossRef]

- Chami, M.; Lenot, X.; Guillaume, M.; Lafrance, B.; Briottet, X.; Minghelli, A.; Jay, S.; Deville, Y.; Serfaty, V. Analysis and quantification of seabed adjacency effects in the subsurface upward radiance in shallow waters. Opt. Express 2019, 27, A319–A338. [Google Scholar] [CrossRef]

- Chami, M.; Harmel, T. Remote Sensing and Ocean Color. In Land Surface Remote Sensing in Urban and Coastal Areas; ISTE Press Ltd.: London, UK, 2016; pp. 141–183. [Google Scholar] [CrossRef]

- Chander, S.; Pompapathi, V.; Gujrati, A.; Singh, R.P.; Chaplot, N.; Patel, U.D. Growth of Invasive Aquatic Macrophytes Over Tapi River. In Proceedings of the International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences—ISPRS Archives, Dehradun, India, 20–23 November 2018; pp. 829–833. [Google Scholar]

- Cheruiyot, E.K.; Mito, C.; Menenti, M.; Gorte, B.; Koenders, R.; Akdim, N. Evaluating MERIS-based aquatic vegetation mapping in Lake Victoria. Remote Sens. 2014, 6, 7762–7782. [Google Scholar] [CrossRef]

- Costa, B.M.; Battista, T.A.; Pittman, S.J. Comparative evaluation of airborne LiDAR and ship-based multibeam SoNAR bathymetry and intensity for mapping coral reef ecosystems. Remote Sens. Environ. 2009, 113, 1082–1100. [Google Scholar] [CrossRef]

- Dekker, A.G.; Brando, V.E.; Anstee, J.M. Retrospective seagrass change detection in a shallow coastal tidal Australian lake. Remote Sens. Environ. 2005, 97, 415–433. [Google Scholar] [CrossRef]

- Dekker, A.G.; Phinn, S.R.; Anstee, J.; Bissett, P.; Brando, V.E.; Casey, B.; Fearns, P.; Hedley, J.; Klonowski, W.; Lee, Z.P.; et al. Intercomparison of shallow water bathymetry, hydro-optics, and benthos mapping techniques in Australian and Caribbean coastal environments. Limnol. Oceanogr. Methods 2011, 9, 396–425. [Google Scholar] [CrossRef]

- Dogan, O.K.; Akyurek, Z.; Beklioglu, M. Identification and mapping of submerged plants in a shallow lake using QuickBird satellite data. J. Environ. Manag. 2009, 90, 2138–2143. [Google Scholar] [CrossRef]

- Fearns, P.R.C.; Klonowski, W.; Babcock, R.C.; England, P.; Phillips, J. Shallow water substrate mapping using hyperspectral remote sensing. Cont. Shelf Res. 2011, 31, 1249–1259. [Google Scholar] [CrossRef]

- Ferguson, R.L.; Wood, L.L. Mapping Submerged Aquatic Vegetation in North Carolina with Conventional Aerial Photography. Fish. Wildl. Serv. Biol. Rep. 1990, 90, 125–133. [Google Scholar]

- Ferguson, R.L.; Korfmacher, K. Remote sensing and GIS analysis of seagrass meadows in North Carolina, USA. Aquat. Bot. 1997, 58, 241–258. [Google Scholar] [CrossRef]

- Ferretti, R.; Bibuli, M.; Caccia, M.; Chiarella, D.; Odetti, A.; Ranieri, A.; Zereik, E.; Bruzzone, G. Towards Posidonia Meadows Detection, Mapping and Automatic recognition using Unmanned Marine Vehicles. IFAC-PapersOnLine 2017, 50, 12386–12391. [Google Scholar] [CrossRef]

- Fritz, C.; Schneider, T.; Geist, J. Seasonal variation in spectral response of submerged aquatic macrophytes: A case study at Lake Starnberg (Germany). Water 2017, 9, 527. [Google Scholar] [CrossRef]

- Fritz, C.; Dörnhöfer, K.; Schneider, T.; Geist, J.; Oppelt, N. Mapping submerged aquatic vegetation using RapidEye satellite data: The example of Lake Kummerow (Germany). Water 2017, 9, 510. [Google Scholar] [CrossRef]

- Fritz, C.; Kuhwald, K.; Schneider, T.; Geist, J.; Oppelt, N. Sentinel-2 for mapping the spatio-temporal development of submerged aquatic vegetation at Lake Starnberg (Germany). J. Limnol. 2019, 78, 71–91. [Google Scholar] [CrossRef]

- Gao, Y.; Gao, J.; Wang, J.; Wang, S.; Li, Q.; Zhai, S.; Zhou, Y. Estimating the biomass of unevenly distributed aquatic vegetation in a lake using the normalized water-adjusted vegetation index and scale transformation method. Sci. Total Environ. 2017, 601–602, 998–1007. [Google Scholar] [CrossRef] [PubMed]

- Garcia, R.A.; Lee, Z.; Barnes, B.B.; Hu, C.; Dierssen, H.M.; Hochberg, E.J. Benthic classification and IOP retrievals in shallow water environments using MERIS imagery. Remote Sens. Environ. 2020, 249, 112015. [Google Scholar] [CrossRef]

- Ghirardi, N.; Bolpagni, R.; Bresciani, M.; Valerio, G.; Pilotti, M.; Giardino, C. Spatiotemporal Dynamics of Submerged Aquatic Vegetation in a Deep Lake from Sentinel-2 Data. Water 2019, 11, 563. [Google Scholar] [CrossRef]

- Gower, J.; Hu, C.M.; Borstad, G.; King, S. Ocean color satellites show extensive lines of floating Sargassum in the Gulf of Mexico. IEEE Trans. Geosci. Remote Sens. 2006, 44, 3619–3625. [Google Scholar] [CrossRef]

- Gullström, M.; Lundén, B.; Bodin, M.; Kangwe, J.; Öhman, M.C.; Mtolera, M.S.P.; Björk, M. Assessment of changes in the seagrass-dominated submerged vegetation of tropical Chwaka Bay (Zanzibar) using satellite remote sensing. Estuar. Coast. Shelf Sci. 2006, 67, 399–408. [Google Scholar] [CrossRef]

- Han, L. Spectral Reflectance of Thalassia testudinum with Varying Depths. In Proceedings of the IGARSS 2002: IEEE International Geoscience and Remote Sensing Symposium and 24th Canadian Symposium on Remote Sensing, Toronto, ON, Canada, 24–28 June 2002; Volumes I–VI, pp. 2123–2125. [Google Scholar]

- Herkül, K.; Kotta, J.; Kutser, T.; Vahtmäe, E. Relating Remotely Sensed Optical Variability to Marine Benthic Biodiversity. PLoS ONE 2013, 8, e55624. [Google Scholar] [CrossRef] [PubMed]

- Hestir, E.L.; Khanna, S.; Andrew, M.E.; Santos, M.J.; Viers, J.H.; Greenberg, J.A.; Rajapakse, S.S.; Ustin, S.L. Identification of invasive vegetation using hyperspectral remote sensing in the California Delta ecosystem. Remote Sens. Environ. 2008, 112, 4034–4047. [Google Scholar] [CrossRef]

- Hestir, E.L.; Greenberg, J.A.; Ustin, S.L. Classification trees for aquatic vegetation community prediction from imaging spectroscopy. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2012, 5, 1572–1584. [Google Scholar] [CrossRef]

- Hoang, T.; Garcia, R.; O’Leary, M.; Fotedar, R. Identification and mapping of marine submerged aquatic vegetation in shallow coastal waters with WorldView-2 satellite data. J. Coast. Res. 2016, 1, 1287–1291. [Google Scholar] [CrossRef]

- Hogrefe, K.R.; Ward, D.H.; Donnelly, T.F.; Dau, N. Establishing a baseline for regional scale monitoring of eelgrass (Zostera marina) habitat on the lower Alaska Peninsula. Remote Sens. 2014, 6, 12447–12477. [Google Scholar] [CrossRef]

- Howari, F.M.; Jordan, B.R.; Bouhouche, N.; Wyllie-Echeverria, S. Field and Remote-Sensing Assessment of Mangrove Forests and Seagrass Beds in the Northwestern Part of the United Arab Emirates. J. Coast. Res. 2009, 25, 48–56. [Google Scholar] [CrossRef]

- Hu, C.M.; Lee, Z.P.; Muller-Karger, F.E.; Carder, K.L.; Walsh, J.J. Ocean color reveals phase shift between marine plants and yellow substance. IEEE Geosci. Remote Sens. Lett. 2006, 3, 262–266. [Google Scholar] [CrossRef]

- Huen, W.K.; Zhang, Y. Preliminary studies on coral mapping in Tung Ping Chau of the eastern Hong Kong using high-resolution SPOT satellite imagery. Ann. GIS 2011, 17, 93–98. [Google Scholar] [CrossRef]

- Husson, E.; Reese, H.; Ecke, F. Combining spectral data and a DSM from UAS-images for improved classification of non-submerged aquatic vegetation. Remote Sens. 2017, 9, 247. [Google Scholar] [CrossRef]

- Husson, E.; Hagner, O.; Ecke, F. Unmanned aircraft systems help to map aquatic vegetation. Appl. Veg. Sci. 2014, 17, 567–577. [Google Scholar] [CrossRef]

- Irish, J.L.; Lillycrop, W.J. Monitoring New Pass, Florida, with High Density Lidar Bathymetry. J. Coast. Res. 1997, 13, 1130–1140. [Google Scholar]

- Jakubauskas, M.; Kindscher, K.; Fraser, A.; Debinski, D.; Price, K.P. Close-range remote sensing of aquatic macrophyte vegetation cover. Int. J. Remote Sens. 2000, 21, 3533–3538. [Google Scholar] [CrossRef]

- Jenkins, C.; Eggleton, J.; Barry, J.; O’Connor, J. Advances in assessing Sabellaria spinulosa reefs for ongoing monitoring. Ecol. Evol. 2018, 8, 7673–7687. [Google Scholar] [CrossRef]

- John, C.M.; Nath, K. Integration of Multispectral Satellite and Hyperspectral Field Data for Aquatic Macrophyte Studies. In Proceedings of the International Archives of the Photogrammetry, Remote Sensing and Spatial Information Sciences—ISPRS Archives, Hyderabad, India, 9–12 December 2014; pp. 581–588. [Google Scholar]

- Kasvi, E.; Salmela, J.; Lotsari, E.; Kumpula, T.; Lane, S.N. Comparison of remote sensing based approaches for mapping bathymetry of shallow, clear water rivers. Geomorphology 2019, 333, 180–197. [Google Scholar] [CrossRef]

- Khanh Ni, T.N.; Tin, H.C.; Thach, V.T.; Jamet, C.; Saizen, I. Mapping submerged aquatic vegetation along the central Vietnamese coast using multi-source remote sensing. ISPRS Int. J. Geo-Inf. 2020, 9, 395. [Google Scholar] [CrossRef]

- Khanna, S.; Santos, M.J.; Hestir, E.L.; Ustin, S.L. Plant community dynamics relative to the changing distribution of a highly invasive species, Eichhornia crassipes: A remote sensing perspective. Biol. Invasions 2012, 14, 717–733. [Google Scholar] [CrossRef]

- Koedsin, W.; Intararuang, W.; Ritchie, R.J.; Huete, A. An integrated field and remote sensing method for mapping seagrass species, cover, and biomass in Southern Thailand. Remote Sens. 2016, 8, 292. [Google Scholar] [CrossRef]

- Kotta, J.; Kutser, T.; Teeveer, K.; Vahtmäe, E.; Pärnoja, M. Predicting Species Cover of Marine Macrophyte and Invertebrate Species Combining Hyperspectral Remote Sensing, Machine Learning and Regression Techniques. PLoS ONE 2013, 8, e63946. [Google Scholar] [CrossRef] [PubMed]

- Lane, C.R.; Anenkhonov, O.; Liu, H.; Autrey, B.C.; Chepinoga, V. Classification and inventory of freshwater wetlands and aquatic habitats in the Selenga River Delta of Lake Baikal, Russia, using high-resolution satellite imagery. Wetl. Ecol. Manag. 2015, 23, 195–214. [Google Scholar] [CrossRef]

- Lathrop, R.G.; Montesano, P.; Haag, S. A multi-scale segmentation approach to mapping seagrass habitats using airborne digital camera imagery. Photogramm. Eng. Remote Sens. 2006, 72, 665–675. [Google Scholar] [CrossRef]

- Liang, Q.; Zhang, Y.; Ma, R.; Loiselle, S.; Li, J.; Hu, M. A MODIS-based novel method to distinguish surface cyanobacterial scums and aquatic macrophytes in Lake Taihu. Remote Sens. 2017, 9, 133. [Google Scholar] [CrossRef]

- Liu, X.; Zhang, Y.; Shi, K.; Zhou, Y.; Tang, X.; Zhu, G.; Qin, B. Mapping aquatic vegetation in a large, shallow eutrophic lake: A frequency-based approach using multiple years of MODIS data. Remote Sens. 2015, 7, 10295–10320. [Google Scholar] [CrossRef]

- Louchard, E.M.; Reid, R.P.; Stephens, F.C.; Davis, C.O.; Leathers, R.A.; Valerie, D.T. Optical remote sensing of benthic habitats and bathymetry in coastal environments at Lee Stocking Island, Bahamas: A comparative spectral classification approach. Limnol. Oceanogr. 2003, 48, 511–521. [Google Scholar] [CrossRef]

- Lu, D.J.; Cho, H.J. An improved water-depth correction algorithm for seagrass mapping using hyperspectral data. Remote Sens. Lett. 2011, 2, 91–97. [Google Scholar] [CrossRef]

- Luo, J.H.; Ma, R.H.; Duan, H.T.; Hu, W.P.; Zhu, J.G.; Huang, W.J.; Lin, C. A New Method for Modifying Thresholds in the Classification of Tree Models for Mapping Aquatic Vegetation in Taihu Lake with Satellite Images. Remote Sens. 2014, 6, 7442–7462. [Google Scholar] [CrossRef]

- Luo, J.H.; Li, X.C.; Ma, R.H.; Li, F.; Duan, H.T.; Hu, W.P.; Qin, B.Q.; Huang, W.J. Applying remote sensing techniques to monitoring seasonal and interannual changes of aquatic vegetation in Taihu Lake, China. Ecol. Indic. 2016, 60, 503–513. [Google Scholar] [CrossRef]

- Luo, J.; Duan, H.; Ma, R.; Jin, X.; Li, F.; Hu, W.; Shi, K.; Huang, W. Mapping species of submerged aquatic vegetation with multi-seasonal satellite images and considering life history information. Int. J. Appl. Earth Obs. Geoinf. 2017, 57, 154–165. [Google Scholar] [CrossRef]

- Lyons, M.; Phinn, S.; Roelfsema, C. Long Term Land Cover and Seagrass Mapping Using Landsat Sensors from 1972–2010 in the Coastal Environment of South East Queensland, Australia. ISPRS J. Photogramm. Remote Sens. 2012, 71, 34–46. [Google Scholar] [CrossRef]

- Macleod, R.D.; Congalton, R.G. A quantitative comparison of change-detection algorithms for monitoring eelgrass from remotely sensed data. Photogramm. Eng. Remote Sens. 1998, 64, 207–216. [Google Scholar]

- Malthus, T.J.; George, D.G. Airborne remote sensing of macrophytes in Cefni Reservoir, Anglesey, UK. Aquat. Bot. 1997, 58, 317–332. [Google Scholar] [CrossRef]

- McLaren, K.; McIntyre, K.; Prospere, K. Using the random forest algorithm to integrate hydroacoustic data with satellite images to improve the mapping of shallow nearshore benthic features in a marine protected area in Jamaica. GIScience Remote Sens. 2019, 56, 1065–1092. [Google Scholar] [CrossRef]

- Mehrubeoglu, M.; Trombley, C.; Shanks, S.E.; Cammarata, K.; Simons, J.; Zimba, P.V.; McLauchlan, L. Empirical Mode Decomposition of Hyperspectral Images for Segmentation of Seagrass Coverage. In Proceedings of the IEEE International Conference on Imaging Systems and Techniques, Santorini, Greece, 14–17 October 2014; pp. 33–37. [Google Scholar]

- Meyer, C.A.; Pu, R. Seagrass resource assessment using remote sensing methods in St. Joseph Sound and Clearwater Harbor, Florida, USA. Environ. Monit. Assess. 2012, 184, 1131–1143. [Google Scholar] [CrossRef] [PubMed]

- Modjeski, A.C. Submerged Aquatic Vegetation (SAV) Aerial Hyperspectral Imaging and Groundtruthing Survey: Use of Aerial Hyperspectral Imaging in Defining Habitat Areas of Particular Concern for Summer Flounder in a High-Energy Estuarine Environment. In Proceedings of the Environmental Concerns in Rights-of-Way Management 8th International Symposium, Saratoga Springs, NY, USA, 12–16 September 2004; Elsevier: Amsterdam, The Netherlands, 2008; pp. 723–728. [Google Scholar] [CrossRef]

- Mumby, P.J.; Edwards, A.J. Mapping marine environments with IKONOS imagery: Enhanced spatial resolution can deliver greater thematic accuracy. Remote Sens. Environ. 2002, 82, 248–257. [Google Scholar] [CrossRef]

- Nelson, T.A.; Gillanders, S.N.; Harper, J.; Morris, M. Nearshore Aquatic Habitat Monitoring: A Seabed Imaging and Mapping Approach. J. Coast. Res. 2011, 27, 348–355. [Google Scholar] [CrossRef]

- Nieder, W.C.; Barnaba, E.; Findlay, S.E.G.; Hoskins, S.; Holochuck, N.; Blair, E.A. Distribution and abundance of submerged aquatic vegetation and Trapa natans in the Hudson River estuary. J. Coast. Res. 2004, 20, 150–161. [Google Scholar] [CrossRef]

- Nobi, E.P.; Thangaradjou, T. Evaluation of the spatial changes in seagrass cover in the lagoons of Lakshadweep islands, India, using IRS LISS III satellite images. Geocarto Int. 2012, 27, 647–660. [Google Scholar] [CrossRef]

- Olmanson, L.G.; Page, B.P.; Finlay, J.C.; Brezonik, P.L.; Bauer, M.E.; Griffin, C.G.; Hozalski, R.M. Regional measurements and spatial/temporal analysis of CDOM in 10,000+ optically variable Minnesota lakes using Landsat 8 imagery. Sci. Total Environ. 2020, 724, 138141. [Google Scholar] [CrossRef]

- Olmanson, L.G.; Brezonik, P.L.; Bauer, M.E. Airborne hyperspectral remote sensing to assess spatial distribution of water quality characteristics in large rivers: The Mississippi River and its tributaries in Minnesota. Remote Sens. Environ. 2013, 130, 254–265. [Google Scholar] [CrossRef]

- O’Neill, J.D.; Costa, M.P.F.; Sharma, T. Remote sensing of shallow coastal benthic substrates: In situ spectra and mapping of eelgrass (Zostera marina) in the Gulf Islands National Park Reserve of Canada. Remote Sens. 2011, 3, 975–1005. [Google Scholar] [CrossRef]

- Orth, R.J.; Carruthers, T.J.; Dennison, W.C.; Duarte, C.M.; Fourqurean, J.W.; Heck, K.L.; Hughes, A.R.; Kendrick, G.A.; Kenworthy, W.J.; Olyarnik, S. A global crisis for seagrass ecosystems. Bioscience 2006, 56, 987–996. [Google Scholar] [CrossRef]

- Oyama, Y.; Matsushita, B.; Fukushima, T. Distinguishing surface cyanobacterial blooms and aquatic macrophytes using Landsat/TM and ETM+ shortwave infrared bands. Remote Sens. Environ. 2015, 157, 35–47. [Google Scholar] [CrossRef]

- Parson, L.E.; Lillycrop, W.J.; Klein, C.J.; Ives, R.C.P.; Orlando, S.P. Use of Lidar Technology for Collecting Shallow Water Bathymetry of Florida Bay. J. Coast. Res. 1997, 13, 1173–1180. [Google Scholar]

- Peneva, E.; Griffith, J.; Carter, G. Seagrass Mapping in the Northern Gulf of Mexico using Airborne Hyperspectral Imagery: A Comparison of Classification Methods. J. Coast. Res. 2008, 24, 850–856. [Google Scholar] [CrossRef]

- Phinn, S.; Roelfsema, C.; Dekker, A.; Brando, V.; Anstee, J. Mapping seagrass species, cover and biomass in shallow waters: An assessment of satellite multi-spectral and airborne hyper-spectral imaging systems in Moreton Bay (Australia). Remote Sens. Environ. 2008, 112, 3413–3425. [Google Scholar] [CrossRef]

- Pinardi, M.; Bresciani, M.; Villa, P.; Cazzaniga, I.; Laini, A.; Tóth, V.; Fadel, A.; Austoni, M.; Lami, A.; Giardino, C. Spatial and temporal dynamics of primary producers in shallow lakes as seen from space: Intra-annual observations from Sentinel-2A. Limnologica 2018, 72, 32–43. [Google Scholar] [CrossRef]

- Pinnel, N.; Heege, T.; Zimmermann, S. Spectral Discrimination of Submerged Macrophytes in Lakes Using Hyperspectral Remote Sensing Data. In Proceedings of the Ocean Optics XVII, Fremantle, Australia, 25–29 October 2004; pp. 1–16. [Google Scholar]

- Pu, R.L.; Bell, S. A protocol for improving mapping and assessing of seagrass abundance along the West Central Coast of Florida using Landsat TM and EO-1 ALI/Hyperion images. ISPRS J. Photogramm. Remote Sens. 2013, 83, 116–129. [Google Scholar] [CrossRef]

- Pu, R.; Bell, S.; Meyer, C. Mapping and assessing seagrass bed changes in Central Florida’s west coast using multitemporal Landsat TM imagery. Estuar. Coast. Shelf Sci. 2014, 149, 68–79. [Google Scholar] [CrossRef]

- Pu, R.; Bell, S.; Baggett, L.; Meyer, C.; Zhao, Y. Discrimination of seagrass species and cover classes with in situ hyperspectral data. J. Coast. Res. 2012, 28, 1330–1344. [Google Scholar] [CrossRef]

- Qing, S.; Runa, A.; Shun, B.; Zhao, W.; Bao, Y.; Hao, Y. Distinguishing and mapping of aquatic vegetations and yellow algae bloom with Landsat satellite data in a complex shallow Lake, China during 1986–2018. Ecol. Indic. 2020, 112, 106073. [Google Scholar] [CrossRef]

- Quintino, V.; Freitas, R.; Mamede, R.; Ricardo, F.; Rodrigues, A.M.; Mota, J.; Pérez-Ruzafa, Á.; Marcos, C. Remote sensing of underwater vegetation using single-beam acoustics. ICES J. Mar. Sci. 2010, 67, 594–605. [Google Scholar] [CrossRef]

- Rahnemoonfar, M.; Yari, M.; Rahman, A.; Kline, R. The First Automatic Method for Mapping the Pothole in Seagrass. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 267–274. [Google Scholar]

- Reshitnyk, L.; Costa, M.; Robinson, C.; Dearden, P. Evaluation of WorldView-2 and acoustic remote sensing for mapping benthic habitats in temperate coastal Pacific waters. Remote Sens. Environ. 2014, 153, 7–23. [Google Scholar] [CrossRef]

- Roessler, S.; Wolf, P.; Schneider, T.; Melzer, A. Multispectral Remote Sensing of Invasive Aquatic Plants Using RapidEye. In Lecture Notes in Geoinformation and Cartography; Springer: Berlin/Heidelberg, Germany, 2013; pp. 109–123. [Google Scholar] [CrossRef]

- Rotta, L.H.D.S.; Imai, N.N. Submerged Macrophytes Height Estimation by Echosounder Data Sample. In Proceedings of the International Geoscience and Remote Sensing Symposium (IGARSS), Munich, Germany, 22–27 July 2012; pp. 808–811. [Google Scholar]

- Sabol, B.M.; Melton, R.E.; Chamberlain, R.; Doering, P.; Haunert, K. Evaluation of a digital echo sounder system for detection of submersed aquatic vegetation. Estuaries 2002, 25, 133–141. [Google Scholar] [CrossRef]

- Saul, S.; Purkis, S. Semi-Automated Object-Based Classification of Coral Reef Habitat using Discrete Choice Models. Remote Sens. 2015, 7, 15894–15916. [Google Scholar] [CrossRef]

- Schweizer, D.; Armstrong, R.A.; Posada, J. Remote sensing characterization of benthic habitats and submerged vegetation biomass in Los Roques Archipelago National Park, Venezuela. Int. J. Remote Sens. 2005, 26, 2657–2667. [Google Scholar] [CrossRef]

- Shapiro, A.C.; Rohmann, S.O. Mapping changes in submerged aquatic vegetation using Landsat imagery and benthic habitat data: Coral reef ecosystem monitoring in Vieques Sound between 1985 and 2000. Bull. Mar. Sci. 2006, 79, 375–388. [Google Scholar]

- Shekede, M.D.; Kusangaya, S.; Schmidt, K. Spatio-temporal variations of aquatic weeds abundance and coverage in Lake Chivero, Zimbabwe. Phys. Chem. Earth. 2008, 33, 714–721. [Google Scholar] [CrossRef]

- Shuchman, R.A.; Sayers, M.J.; Brooks, C.N. Mapping and monitoring the extent of submerged aquatic vegetation in the Laurentian Great Lakes with multi-scale satellite remote sensing. J. Great Lakes Res. 2013, 39, 78–89. [Google Scholar] [CrossRef]

- Silva, T.S.F.; Costa, M.P.F.; Melack, J.M. Spatial and temporal variability of macrophyte cover and productivity in the eastern Amazon floodplain: A remote sensing approach. Remote Sens. Environ. 2010, 114, 1998–2010. [Google Scholar] [CrossRef]

- Soo Lee, B.; McGwire, K.C.; Fritsen, C.H. Identification and quantification of aquatic vegetation with hyperspectral remote sensing in Western Nevada rivers, USA. Int. J. Remote Sens. 2011, 32, 9093–9117. [Google Scholar] [CrossRef]

- Sprenkle, E.S.; Smock, L.A.; Anderson, J.E. Distribution and growth of submerged aquatic vegetation in the Piedmont section of the James River, Virginia. Southeast. Nat. 2004, 3, 517–530. [Google Scholar] [CrossRef]

- Theriault, C.; Scheibling, R.; Hatcher, B.; Jones, W. Mapping the distribution of an invasive marine alga (Codium fragile spp. tomentosoides) in optically shallow coastal waters using the compact airborne spectrographic imager (CASI). Can. J. Remote Sens. 2006, 32, 315–329. [Google Scholar] [CrossRef]

- Thomson, A.G.; Fuller, R.M.; Sparks, T.H.; Yates, M.G.; Eastwood, J.A. Ground and airborne radiometry over intertidal surfaces: Waveband selection for cover classification. Int. J. Remote Sens. 1998, 19, 1189–1205. [Google Scholar] [CrossRef]

- Thorhaug, A.; Richardson, A.D.; Berlyn, G.P. Spectral reflectance of the seagrasses: Thalassia testudinum, Halodule wrightii, Syringodium filiforme and five marine algae. Int. J. Remote Sens. 2007, 28, 1487–1501. [Google Scholar] [CrossRef]

- Torres-Pulliza, D.; Wilson, J.R.; Darmawan, A.; Campbell, S.J.; Andrefouet, S. Ecoregional scale seagrass mapping: A tool to support resilient MPA network design in the Coral Triangle. Ocean. Coast. Manag. 2013, 80, 55–64. [Google Scholar] [CrossRef]

- Traganos, D.; Reinartz, P. Interannual change detection of Mediterranean seagrasses using RapidEye image time series. Front. Plant Sci. 2018, 9, 96. [Google Scholar] [CrossRef] [PubMed]

- Uhl, F.; Bartsch, I.; Oppelt, N. Submerged Kelp Detection with Hyperspectral Data. Remote Sens. 2016, 8, 487. [Google Scholar] [CrossRef]

- Underwood, E.C.; Mulitsch, M.J.; Greenberg, J.A.; Whiting, M.L.; Ustin, S.L.; Kefauver, S.C. Mapping invasive aquatic vegetation in the Sacramento–San Joaquin Delta using hyperspectral imagery. Environ. Monit. Assess. 2006, 121, 47–64. [Google Scholar] [CrossRef]

- Villa, P.; Pinardi, M.; Bolpagni, R.; Gillier, J.M.; Zinke, P.; Nedelcut, F.; Bresciani, M. Assessing macrophyte seasonal dynamics using dense time series of medium resolution satellite data. Remote Sens. Environ. 2018, 216, 230–244. [Google Scholar] [CrossRef]

- VonBank, J.A.; Casper, A.F.; Yetter, A.P.; Hagy, H.M. Evaluating a Rapid Aerial Survey for Floating-Leaved Aquatic Vegetation. Wetlands 2017, 37, 753–762. [Google Scholar] [CrossRef]

- Wabnitz, C.C.; Andréfouët, S.; Torres-Pulliza, D.; Müller-Karger, F.E.; Kramer, P.A. Regional-scale seagrass habitat mapping in the Wider Caribbean region using Landsat sensors: Applications to conservation and ecology. Remote Sens. Environ. 2008, 112, 3455–3467. [Google Scholar] [CrossRef]

- Wang, Y.; Traber, M.; Milstead, B.; Stevens, S. Terrestrial and submerged aquatic vegetation mapping in Fire Island National Seashore using high spatial resolution remote sensing data. Mar. Geod. 2007, 30, 77–95. [Google Scholar] [CrossRef]

- Wang, L.; Gong, P.; Dronova, I. Aquatic Plant Functional Type Spectral Characteristics Analysis and Comparison Using Multi-Temporal and Multi-Sensor Remote Sensing Over the Poyang Lake Wetland, China. In Proceedings of the 18th International Conference on Geoinformatics, Geoinformatics 2010, Beijing, China, 18–20 June 2010. [Google Scholar]

- Wang, C.-K.; Philpot, W.D. Using airborne bathymetric lidar to detect bottom type variation in shallow waters. Remote Sens. Environ. 2007, 106, 123–135. [Google Scholar] [CrossRef]

- Watanabe, F.S.Y.; Imai, N.N.; Alcântara, E.H.; Da Silva Rotta, L.H.; Utsumi, A.G. Signal classification of submerged aquatic vegetation based on the hemispherical-conical reflectance factor spectrum shape in the yellow and red regions. Remote Sens. 2013, 5, 1856–1874. [Google Scholar] [CrossRef]

- Wilson, K.L.; Skinner, M.A.; Lotze, H.K. Eelgrass (Zostera marina) and benthic habitat mapping in Atlantic Canada using high-resolution SPOT 6/7 satellite imagery. Estuar. Coast. Shelf Sci. 2019, 226, 106292. [Google Scholar] [CrossRef]

- Yadav, S.; Yoneda, M.; Tamura, M.; Susaki, J.; Ishikawa, K.; Yamashiki, Y. A satellite-based assessment of the distribution and biomass of submerged aquatic vegetation in the optically shallow basin of Lake Biwa. Remote Sens. 2017, 9, 966. [Google Scholar] [CrossRef]

- Yang, C.; Everitt, J.H. Mapping three invasive weeds using airborne hyperspectral imagery. Ecol. Inf. 2010, 5, 429–439. [Google Scholar] [CrossRef]

- Yuan, L.; Zhang, L.Q. Mapping large-scale distribution of submerged aquatic vegetation coverage using remote sensing. Ecol. Inf. 2008, 3, 245–251. [Google Scholar] [CrossRef]

- Zhang, X. On the estimation of biomass of submerged vegetation using Landsat thematic mapper (TM) imagery: A case study of the Honghu Lake, PR China. Int. J. Remote Sens. 1998, 19, 11–20. [Google Scholar] [CrossRef]

- Zhao, D.; Jiang, H.; Yang, T.; Cai, Y.; Xu, D.; An, S. Remote sensing of aquatic vegetation distribution in Taihu Lake using an improved classification tree with modified thresholds. J. Environ. Manag. 2012, 95, 98–107. [Google Scholar] [CrossRef]

- Zharikov, V.V.; Bazarov, K.Y.; Egidarev, E.G. Use of remotely sensed data in mapping underwater landscapes of Srednyaya Bay (Peter the Great Gulf, Sea of Japan). Geogr. Nat. Resour. 2017, 38, 188–195. [Google Scholar] [CrossRef]

- Zheng, Y.H.; Duarte, C.M.; Chen, J.; Li, D.; Lou, Z.H.; Wu, J.P. Remote sensing mapping of macroalgal farms by modifying thresholds in the classification tree. Geocarto Int. 2019, 34, 1098–1108. [Google Scholar] [CrossRef]

- Zou, W.; Yuan, L.; Zhang, L. Analyzing the spectral response of submerged aquatic vegetation in a eutrophic lake, Shanghai, China. Ecol. Eng. 2013, 57, 65–71. [Google Scholar] [CrossRef]

- Wendel, A.; Underwood, J. Illumination compensation in ground based hyperspectral imaging. ISPRS J. Photogramm. Remote Sens. 2017, 129, 162–178. [Google Scholar] [CrossRef]

- Schläpfer, D.; Richter, R. Geo-atmospheric processing of airborne imaging spectrometry data. Part 1: Parametric orthorectification. Int. J. Remote Sens. 2002, 23, 2609–2630. [Google Scholar] [CrossRef]

- Seidel, F.; Schläpfer, D.; Nieke, J.; Itten, K. Sensor Performance Requirements for the Retrieval of Atmospheric Aerosols by Airborne Optical Remote Sensing. Sensors 2008, 8, 1901–1914. [Google Scholar] [CrossRef]

- Vicent, J.; Verrelst, J.; Sabater, N.; Alonso, L.; Rivera-Caicedo, J.P.; Martino, L.; Muñoz-Marí, J.; Moreno, J. Comparative analysis of atmospheric radiative transfer models using the Atmospheric Look-up table Generator (ALG) toolbox (version 2.0). Geosci. Model Dev. Discuss. 2019, 13, 1945–1957. [Google Scholar] [CrossRef]

- Smith, G.M.; Milton, E.J. The use of the empirical line method to calibrate remotely sensed data to reflectance. Int. J. Remote Sens. 1999, 20, 2653–2662. [Google Scholar] [CrossRef]

- Brook, A.; Ben-Dor, E. Supervised vicarious calibration (SVC) of hyperspectral remote-sensing data. Remote Sens. Environ. 2011, 115, 1543–1555. [Google Scholar] [CrossRef]

- Berk, A.; Bernstein, L.S.; Robertson, D.C. MODTRAN: A Moderate Resolution Model for LOWTRAN 7; Geophysical Directorate Phillips Laboratory: Hanscom AFB, MA, USA, 1989; p. 44. [Google Scholar]

- Mayer, B.; Kylling, A. Technical note: The libRadtran software package for radiative transfer calculations—Description and examples of use. Atmos. Chem. Phys. 2005, 5, 1855–1877. [Google Scholar] [CrossRef]

- Vermote, E.F.; Tanre, D.; Deuze, J.L.; Herman, M.; Morcrette, J.-J. Second Simulation of the Satellite Signal in the Solar Spectrum, 6S: An Overview. IEEE Trans. Geosci. Remote Sens. 1997, 35, 675–686. [Google Scholar] [CrossRef]

- Lyzenga, D.R. Passive remote sensing techniques for mapping water depth and bottom features. Appl. Opt. 1978, 17, 379–383. [Google Scholar] [CrossRef]

- Gagnon, P.; Scheibling, R.E.; Jones, W.; Tully, D. The role of digital bathymetry in mapping shallow marine vegetation from hyperspectral image data. Int. J. Remote Sens. 2008, 29, 879–904. [Google Scholar] [CrossRef]

- Purkis, S.J.; Pasterkamp, R. Integrating in situ reef-top reflectance spectra with Landsat TM imagery to aid shallow-tropical benthic habitat mapping. Coral Reefs 2004, 23, 5–20. [Google Scholar] [CrossRef]

- Lyzenga, D.R. Remote sensing of bottom reflectance and water attenuation parameters in shallow water using aircraft and Landsat data. Int. J. Remote Sens. 1981, 2, 71–82. [Google Scholar] [CrossRef]

- Sagawa, T.; Boisnier, E.; Komatsu, T.; Mustapha, K.B.; Hattour, A.; Kosaka, N.; Miyazaki, S. Using bottom surface reflectance to map coastal marine areas: A new application method for Lyzenga’s model. Int. J. Remote Sens. 2010, 31, 3051–3064. [Google Scholar] [CrossRef]

- Tassan, S. Modified Lyzenga’s method for macroalgae detection in water with non-uniform composition. Int. J. Remote Sens. 1996, 17, 1601–1607. [Google Scholar] [CrossRef]

- Bierwirth, P.N.; Lee, T.J.; Burne, R.V. Shallow Sea-Floor Reflectance and Water Depth Derived by Unmixing Multispectral Imagery. Photogramm. Eng. Remote Sens. 1993, 59, 7. [Google Scholar]

- Cho, H.J.; Lu, D.J. A water-depth correction algorithm for submerged vegetation spectra. Remote Sens. Lett. 2010, 1, 29–35. [Google Scholar] [CrossRef]

- Akkaynak, D.; Treibitz, T. Sea-Thru: A Method for Removing Water From Underwater Images. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 1682–1691. [Google Scholar]

- Manessa, M.D.M.; Haidar, M.; Budhiman, S.; Winarso, G.; Kanno, A.; Sagawa, T.; Sekine, M. Evaluating the Performance of Lyzenga’s Water Column Correction in Case-1 Coral Reef Water Using a Simulated WorldView-2 Imagery. In Proceedings of the 2nd International Conference of Indonesian Society for Remote Sensing (ICOIRS), Yogyakarta, Indonesia, 17–19 October 2016. [Google Scholar]

- Gao, J. Bathymetric mapping by means of remote sensing: Methods, accuracy and limitations. Prog. Phys. Geogr. Earth Environ. 2009, 33, 103–116. [Google Scholar] [CrossRef]

- Kay, S.; Hedley, J.D.; Lavender, S. Sun Glint Correction of High and Low Spatial Resolution Images of Aquatic Scenes: A Review of Methods for Visible and Near-Infrared Wavelengths. Remote Sens. 2009, 1, 697–730. [Google Scholar] [CrossRef]

- Dobson, J.E.; Bright, E.A.; Ferguson, R.L.; Field, D.W.; Wood, L.L.; Haddad, K.D.; Iredale, H., III; Jensen, J.R.; Klemas, V.V.; Orth, R.J.; et al. NOAA Coastal Change Analysis Program (C-CAP): Guidance for Regional Implementation; NOAA Technical Report NMFS 123; Klemas, V., Orth, R.J., Eds.; NOAA: Washington, DC, USA, 2003; p. 140. [Google Scholar]

- Hedley, J.D.; Harborne, A.R.; Mumby, P.J. Technical note: Simple and robust removal of sun glint for mapping shallow-water benthos. Int. J. Remote Sens. 2005, 26, 2107–2112. [Google Scholar] [CrossRef]

- Kutser, T.; Vahtmäe, E.; Paavel, B. Removing Air/Water Interface Effects from Hyperspectral Radiometry Data. In Proceedings of the OCEANS 2012 MTS/IEEE Yeosu: The Living Ocean and Coast—Diversity of Resources and Sustainable Activities, Yeosu, Korea, 21–24 May 2012. [Google Scholar]

- Kutser, T.; Vahtmäe, E.; Praks, J. A sun glint correction method for hyperspectral imagery containing areas with non-negligible water leaving NIR signal. Remote Sens. Environ. 2009, 113, 2267–2274. [Google Scholar] [CrossRef]

- Anker, Y.; Hershkovitz, Y.; Ben Dor, E.; Gasith, A. Application of Aerial Digital Photography for Macrophyte Cover and Composition Survey in Small Rural Streams. River Res. Appl. 2014, 30, 925–937. [Google Scholar] [CrossRef]

- Pe’eri, S.; Morrison, J.R.; Short, F.; Mathieson, A.; Lippmann, T. Eelgrass and Macroalgal Mapping to Develop Nutrient Criteria in New Hampshire’s Estuaries using Hyperspectral Imagery. J. Coast. Res. 2016, 76, 209–218. [Google Scholar] [CrossRef]

- Valta-Hulkkonen, K.; Pellikka, P.; Peltoniemi, J. Assessment of bidirectional effects over aquatic macrophyte vegetation in CIR aerial photographs. Photogramm. Eng. Remote Sens. 2004, 70, 581–587. [Google Scholar] [CrossRef]

- Landgrebe, D. Hyperspectral image data analysis. IEEE Signal Process. Mag. 2002, 19, 17–28. [Google Scholar] [CrossRef]

- Hughes, G. On the mean accuracy of statistical pattern recognizers. IEEE Trans. Inf. Theory 1968, 14, 55–63. [Google Scholar] [CrossRef]

- Niroumand-Jadidi, M.; Pahlevan, N.; Vitti, A. Mapping substrate types and compositions in shallow streams. Remote Sens. 2019, 11, 262. [Google Scholar] [CrossRef]

- Mathur, A.; Bruce, L.M.; Robles, W.; Madsen, J. Feature Extraction via Spectro-temporal Analysis of Hyperspectral Data for Vegetative Target Detection. In Proceedings of the Third International Workshop on the Analysis of Multi-Temporal Remote Sensing Images 2005, Biloxi, MS, USA, 16–18 May 2005; pp. 64–66. [Google Scholar]

- Bolón-Canedo, V.; Sánchez-Maroño, N.; Alonso-Betanzos, A. Foundations of Feature Selection. In Feature Selection for High-Dimensional Data; Springer International Publishing: Cham, Switzerland, 2015; pp. 13–28. [Google Scholar] [CrossRef]

- Van der Heijden, F.; Duin, R.P.W.; de Ridder, D.; Tax, D.M.J. Classification, Parameter Estimation and State Estimation: An Engineering Approach Using MATLAB; Wiley: Chichester, UK, 2004; p. 423. [Google Scholar] [CrossRef]

- Keshava, N.; Mustard, J.F. Spectral Unmixing. IEEE Signal Process. Mag. 2002, 19, 44–57. [Google Scholar] [CrossRef]

- Rouse, J.W.; Haas, R.H.; Schell, J.A.; Deering, D.W. Monitoring Vegetation Systems in the Great Plains with ERTS. In Proceedings of the Third Earth Resources Technology Satellite-1 Symposium, Greenbelt, MD, USA, 10–15 December 1974; pp. 309–317. [Google Scholar]

- Espel, D.; Courty, S.; Auda, Y.; Sheeren, D.; Elger, A. Submerged macrophyte assessment in rivers: An automatic mapping method using Pléiades imagery. Water Res. 2020, 186, 116353. [Google Scholar] [CrossRef] [PubMed]

- Brooks, C.N.; Grimm, A.G.; Marcarelli, A.M.; Dobson, R.J. Multiscale collection and analysis of submerged aquatic vegetation spectral profiles for Eurasian watermilfoil detection. J. Appl. Remote Sens. 2019, 13, 037501. [Google Scholar] [CrossRef]

- Su, H.; Karna, D.; Fraim, E.; Fitzgerald, M.; Dominguez, R.; Myers, J.S.; Coffland, B.; Handley, L.R.; Mace, T. Evaluation of eelgrass beds mapping using a high-resolution airborne multispectral scanner. Photogramm. Eng. Remote Sens. 2006, 72, 789–797. [Google Scholar] [CrossRef]

- Villa, P.; Mousivand, A.; Bresciani, M. Aquatic vegetation indices assessment through radiative transfer modeling and linear mixture simulation. Int. J. Appl. Earth Obs. Geoinf. 2014, 30, 113–127. [Google Scholar] [CrossRef]

- Villa, P.; Bresciani, M.; Braga, F.; Bolpagni, R. Comparative assessment of broadband vegetation indices over aquatic vegetation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 3117–3127. [Google Scholar] [CrossRef]

- Peñuelas, J.; Gamon, J.A.; Griffin, K.L.; Field, C.B. Assessing community type, plant biomass, pigment composition, and photosynthetic efficiency of aquatic vegetation from spectral reflectance. Remote Sens. Environ. 1993, 46, 110–118. [Google Scholar] [CrossRef]

- Cho, H.J.; Kirui, P.; Natarajan, H. Test of multi-spectral vegetation index for floating and canopy-forming submerged vegetation. Int. J. Environ. Res. Public Health 2008, 5, 477–483. [Google Scholar] [CrossRef]

- Pande-Chhetri, R.; Abd-Elrahman, A.; Jacoby, C. Classification of submerged aquatic vegetation in Black River using hyperspectral image analysis. Geomatica 2014, 68, 169–182. [Google Scholar] [CrossRef]

- Zhou, G.H.; Ma, Z.Q.; Sathyendranath, S.; Platt, T.; Jiang, C.; Sun, K. Canopy Reflectance Modeling of Aquatic Vegetation for Algorithm Development: Global Sensitivity Analysis. Remote Sens. 2018, 10, 837. [Google Scholar] [CrossRef]

- Chen, Q.; Yu, R.; Hao, Y.; Wu, L.; Zhang, W.; Zhang, Q.; Bu, X. A new method for mapping aquatic vegetation especially underwater vegetation in Lake Ulansuhai using GF-1 satellite data. Remote Sens. 2018, 10, 1279. [Google Scholar] [CrossRef]

- Li, F.; Li, C.; Xiao, B.; Wang, Y. Mapping large-scale distribution and changes of aquatic vegetation in Honghu Lake, China, using multitemporal satellite imagery. J. Appl. Remote Sens. 2013, 7, 073593. [Google Scholar] [CrossRef]

- Shaw, G.; Manolakis, D. Signal processing for hyperspectral image exploitation. IEEE Signal Process. Mag. 2002, 19. [Google Scholar] [CrossRef]

- Thamaga, K.H.; Dube, T. Understanding seasonal dynamics of invasive water hyacinth (Eichhornia crassipes) in the Greater Letaba river system using Sentinel-2 satellite data. GIScience Remote Sens. 2019. [Google Scholar] [CrossRef]

- Tian, Y.Q.; Yu, Q.; Zimmerman, M.J.; Flint, S.; Waldron, M.C. Differentiating aquatic plant communities in a eutrophic river using hyperspectral and multispectral remote sensing. Freshw. Biol. 2010, 55, 1658–1673. [Google Scholar] [CrossRef]

- Tilley, D.R.; Ahmed, M.; Son, J.H.; Badrinarayanan, H. Hyperspectral reflectance response of freshwater macrophytes to salinity in a brackish subtropical marsh. J. Environ. Qual. 2007, 36, 780–789. [Google Scholar] [CrossRef]

- Kruse, F.A.; Lefkoff, A.B.; Boardman, J.W.; Heidebrecht, K.B.; Shapiro, A.T.; Barloon, P.J.; Goetz, A.F.H. The spectral image processing system (SIPS)—interactive visualization and analysis of imaging spectrometer data. Remote Sens. Environ. 1993, 44, 145–163. [Google Scholar] [CrossRef]

- Manolakis, D.; Shaw, G. Detection Algorithms for Hyperspectral Imaging Applications. IEEE Signal Process. Mag. 2002, 19, 29–43. [Google Scholar] [CrossRef]

- Zhu, C.M.; Zhang, X. Coastal Remote Sensing. In Modeling with Digital Ocean and Digital Coast; Zhang, X., Wang, L., Jiang, X., Zhu, C., Eds.; Springer International Publishing: Cham, Switzerland, 2017; Volume 18, pp. 169–203. [Google Scholar]

- Zhao, D.; Lv, M.; Jiang, H.; Cai, Y.; Xu, D.; An, S. Spatio-Temporal Variability of Aquatic Vegetation in Taihu Lake over the Past 30 Years. PLoS ONE 2013, 8. [Google Scholar] [CrossRef] [PubMed]

- Wang, S.; Gao, Y.; Li, Q.; Gao, J.; Zhai, S.; Zhou, Y.; Cheng, Y. Long-term and inter-monthly dynamics of aquatic vegetation and its relation with environmental factors in Taihu Lake, China. Sci. Total Environ. 2019, 651, 367–380. [Google Scholar] [CrossRef]

- Santos, M.J.; Khanna, S.; Hestir, E.L.; Greenberg, J.A.; Ustin, S.L. Measuring landscape-scale spread and persistence of an invaded submerged plant community from airborne remote sensing. Ecol. Appl. 2016, 26, 1733–1744. [Google Scholar] [CrossRef]

- Santos, M.J.; Khanna, S.; Hestir, E.L.; Andrew, M.E.; Rajapakse, S.S.; Greenberg, J.A.; Anderson, L.W.J.; Ustin, S.L. Use of hyperspectral remote sensing to evaluate efficacy of aquatic plant management. Invasive Plant Sci. Manag. 2009, 2, 216–229. [Google Scholar] [CrossRef]

- Kalacska, M.; Lucanus, O.; Sousa, L.; Vieira, T.; Arroyo-Mora, J.P. Freshwater Fish Habitat Complexity Mapping Using Above and Underwater Structure-From-Motion Photogrammetry. Remote Sens. 2018, 10, 1912. [Google Scholar] [CrossRef]

- Carrivick, J.L.; Smith, M.W.; Quincey, D.J. Background to Structure from Motion. In Structure from Motion in the Geosciences; John Wiley & Sons, Ltd.: West Sussex, UK, 2016; pp. 37–59. [Google Scholar] [CrossRef]

- Slocum, R.K.; Wright, W.; Parrish, C.; Costa, B.; Sharr, M.; Battista, T.A. Guidelines for Bathymetric Mapping and Orthoimage Generation Using sUAS and SfM, An Approach for Conducting Nearshore Coastal Mapping; NOAA Technical Memorandum NOS NCCOS 265; NOAA NOS National Center for Coastal Ocean Science: Silver Spring, MD, USA, 2019; p. 83. [Google Scholar] [CrossRef]

- Reichert, J.; Backes, A.R.; Schubert, P.; Wilke, T. The power of 3D fractal dimensions for comparative shape and structural complexity analyses of irregularly shaped organisms. Methods Ecol. Evol. 2017, 8, 1650–1658. [Google Scholar] [CrossRef]

- Storlazzi, C.D.; Dartnell, P.; Hatcher, G.A.; Gibbs, A.E. End of the chain? Rugosity and fine-scale bathymetry from existing underwater digital imagery using structure-from-motion (SfM) technology. Coral Reefs 2016, 35, 889–894. [Google Scholar] [CrossRef]

- Jing, R.; Gong, Z.N.; Zhao, W.J.; Pu, R.L.; Deng, L. Above-bottom biomass retrieval of aquatic plants with regression models and SfM data acquired by a UAV platform—A case study in Wild Duck Lake Wetland, Beijing, China. ISPRS J. Photogramm. Remote Sens. 2017, 134, 122–134. [Google Scholar] [CrossRef]

- Fonstad, M.A.; Dietrich, J.T.; Courville, B.C.; Jensen, J.L.; Carbonneau, P.E. Topographic structure from motion: A new development in photogrammetric measurement. Earth Surf. Process. Landf. 2013, 38, 421–430. [Google Scholar] [CrossRef]

- Bryson, M.; Ferrari, R.; Figueira, W.; Pizarro, O.; Madin, J.; Williams, S.; Byrne, M. Characterization of measurement errors using structure-from-motion and photogrammetry to measure marine habitat structural complexity. Ecol. Evol. 2017, 7, 5669–5681. [Google Scholar] [CrossRef] [PubMed]

- Leon, J.X.; Roelfsema, C.M.; Saunders, M.I.; Phinn, S.R. Measuring coral reef terrain roughness using ‘Structure-from-Motion’ close-range photogrammetry. Geomorphology 2015, 242, 21–28. [Google Scholar] [CrossRef]

- Everitt, J.H.; Yang, C.; Summy, K.R.; Owens, C.S.; Glomski, L.M.; Smart, R.M. Using in situ hyperspectral reflectance data to distinguish nine aquatic plant species. Geocarto Int. 2011, 26, 459–473. [Google Scholar] [CrossRef]

- Dierssen, H.M.; Chlus, A.; Russell, B. Hyperspectral discrimination of floating mats of seagrass wrack and the macroalgae Sargassum in coastal waters of Greater Florida Bay using airborne remote sensing. Remote Sens. Environ. 2015, 167, 247–258. [Google Scholar] [CrossRef]

- Giardino, C.; Bresciani, M.; Valentini, E.; Gasperini, L.; Bolpagni, R.; Brando, V.E. Airborne hyperspectral data to assess suspended particulate matter and aquatic vegetation in a shallow and turbid lake. Remote Sens. Environ. 2015, 157, 48–57. [Google Scholar] [CrossRef]

- Williams, D.J.; Rybicki, N.B.; Lombana, A.V.; O’Brien, T.M.; Gomez, R.B. Preliminary Investigation of Submerged Aquatic Vegetation Mapping using Hyperspectral Remote Sensing. Environ. Monit. Assess. 2003, 81, 383–392. [Google Scholar] [CrossRef]