Easily Implemented Methods of Radiometric Corrections for Hyperspectral–UAV—Application to Guianese Equatorial Mudbanks Colonized by Pioneer Mangroves

Abstract

1. Introduction

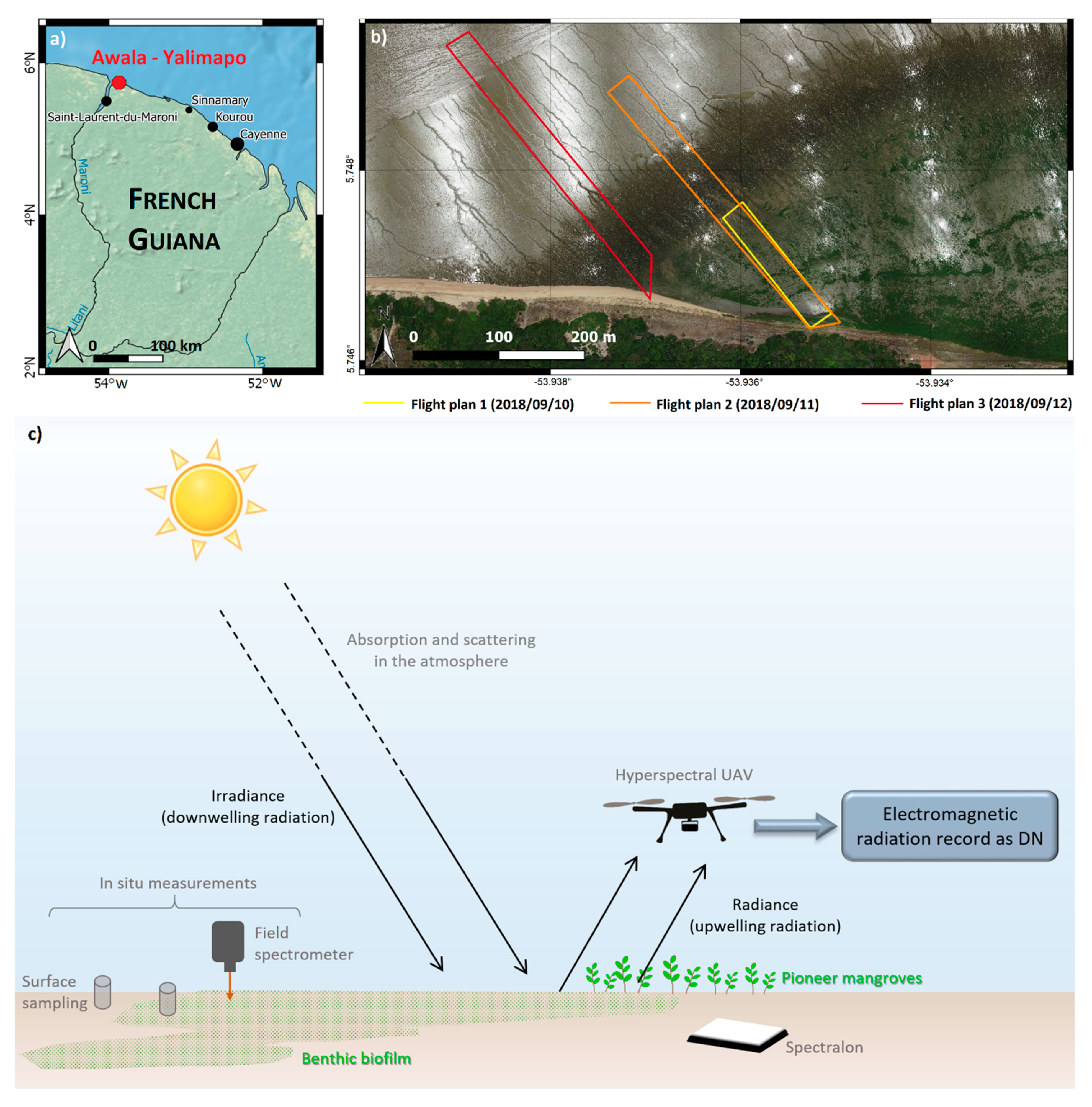

2. Study Area and Survey Setup

3. Materials and Methods

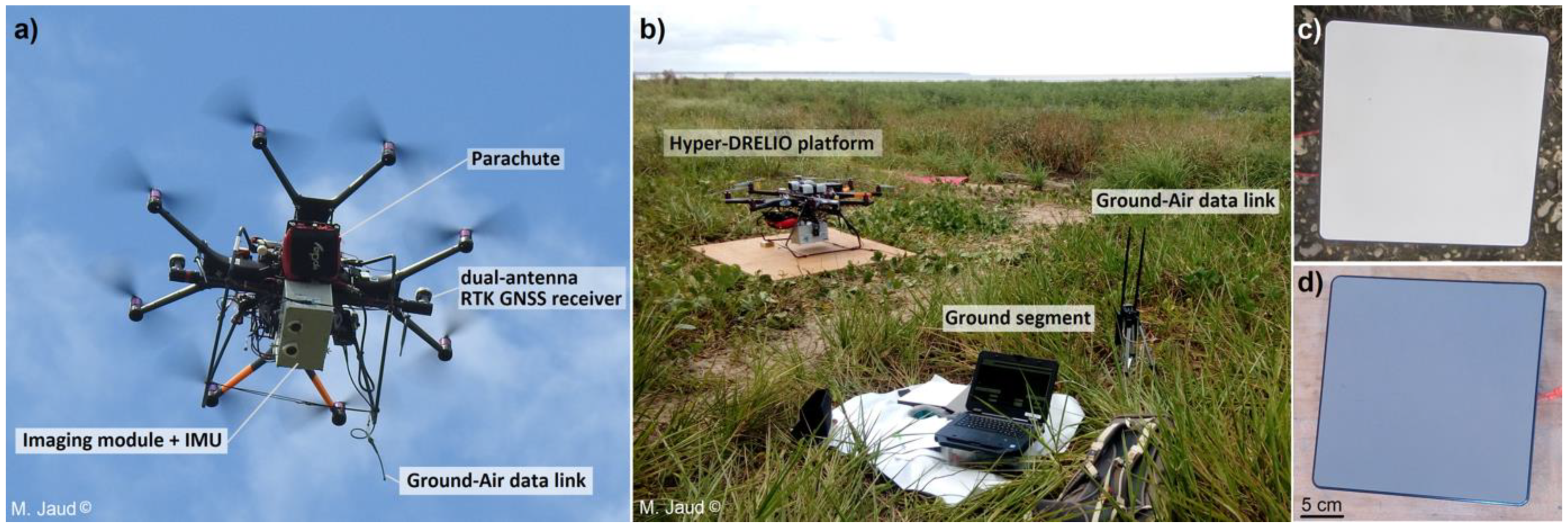

3.1. Hyperspectral UAV

3.2. Ground-Based Measurements

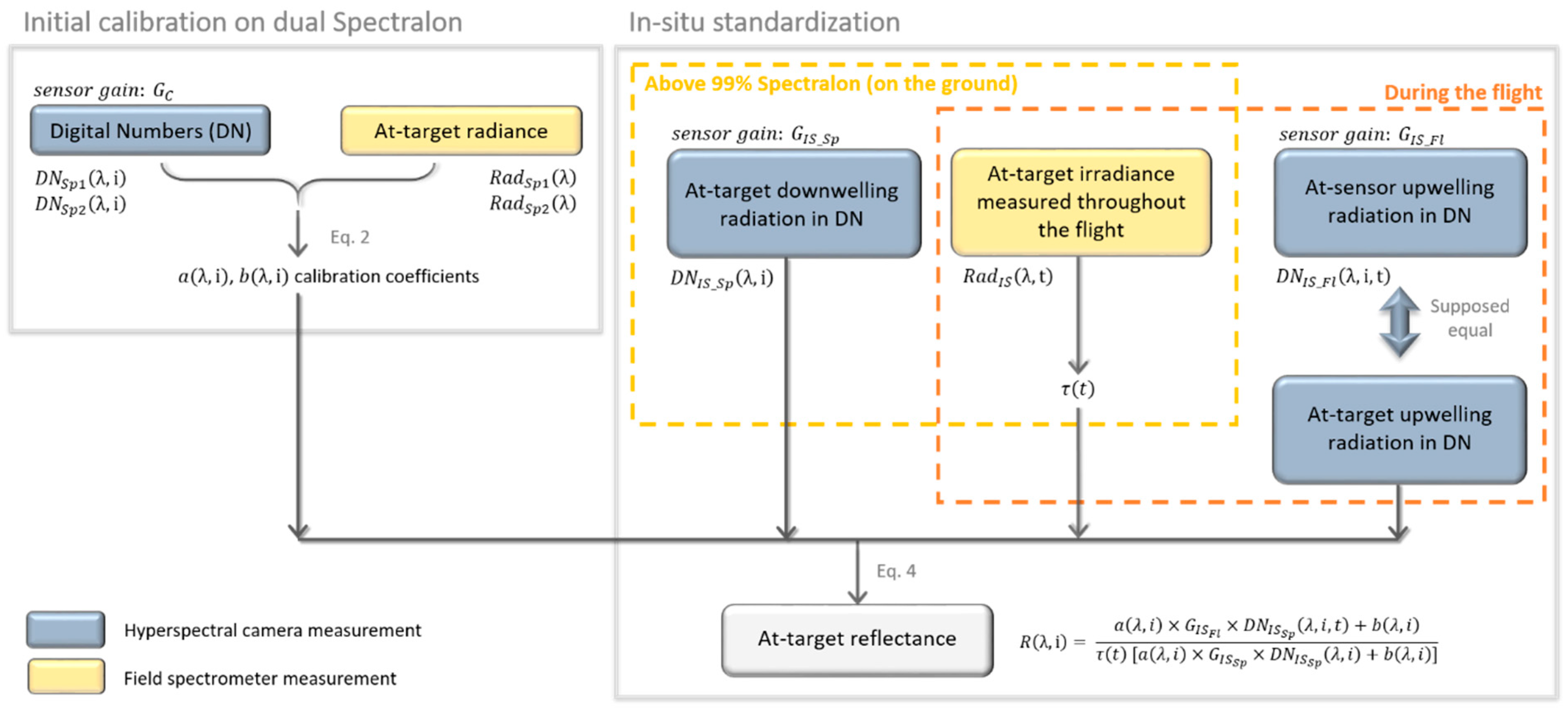

3.3. Radiometric Correction Method

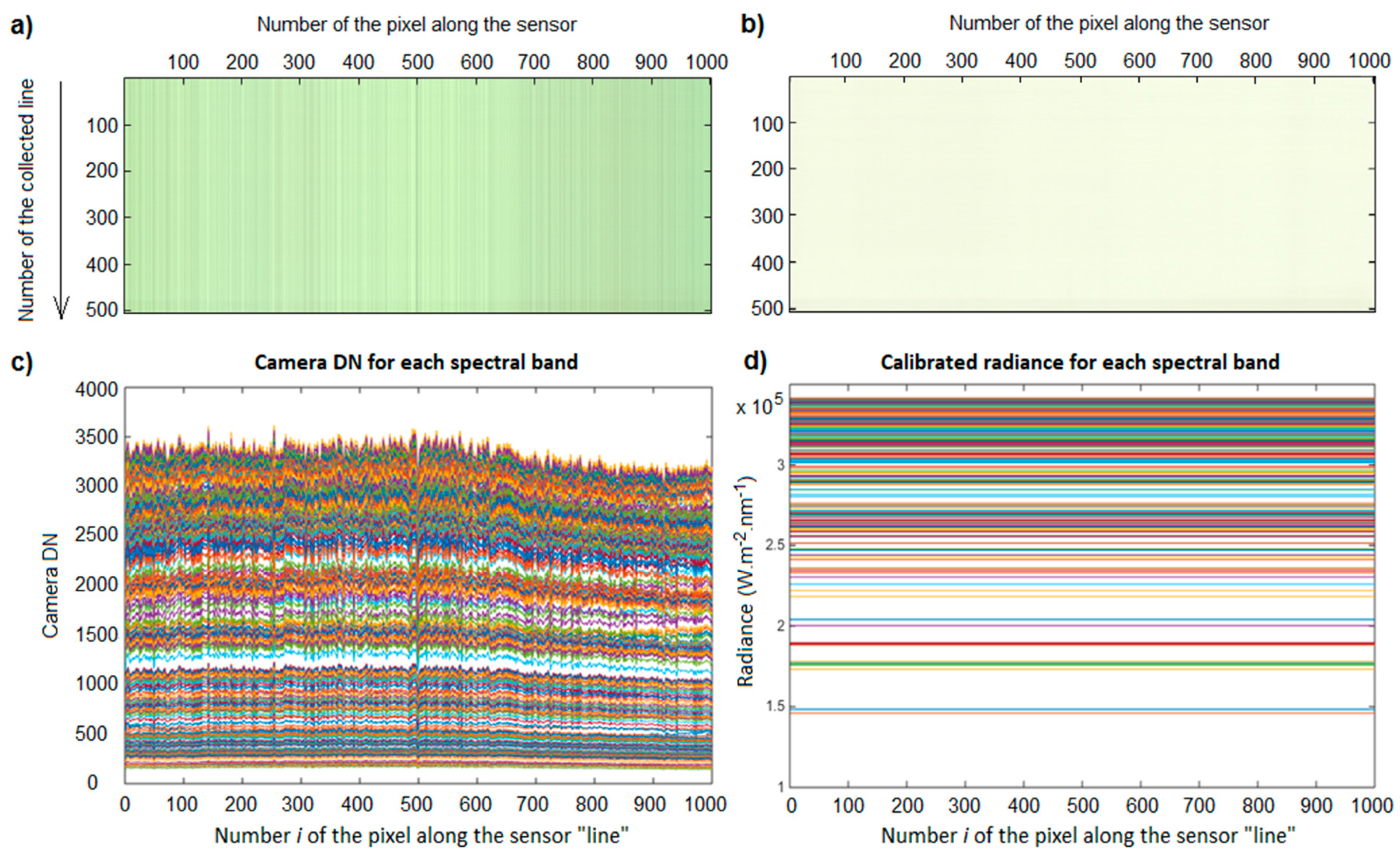

3.3.1. Initial Calibration

- λ: wavelength (nm);

- i: index of the pixel in the sensor array;

- $Rad_{C}$: at-sensor radiance (W·m⁻²·sr⁻¹) during calibration step;

- $G_{C}$: sensor gain during calibration step;

- $DN_{C}$: digital number collected during calibration step;

- a, b: calibration coefficients.

- Sp1, Sp2: ID of each Spectralon used for the calibration;

- $Rad_{Sp1}$, $Rad_{Sp2}$: radiance (W·m⁻²·sr⁻¹) measured by the field spectrometer above the Sp1 (respectively, Sp2) Spectralon;

- $DN_{Sp1}$, $DN_{Sp2}$: digital number collected by the hyperspectral camera above the Sp1 (respectively, Sp2) Spectralon.
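The calibration equations themselves are not reproduced in this text, so the following is only a minimal sketch of how the quantities listed above could fit together. It assumes a linear relation of the form radiance = a · (DN / gain) + b, solved from the two Spectralon targets for a single wavelength λ and pixel index i. The function names and the exact form of the relation are illustrative assumptions, not the authors' published formula.

```python
from typing import Tuple


def two_point_calibration(
    rad_sp1: float,
    rad_sp2: float,
    dn_sp1: float,
    dn_sp2: float,
    gain_c: float,
) -> Tuple[float, float]:
    """Solve for (a, b) in the ASSUMED model: radiance = a * (DN / gain) + b.

    rad_sp1, rad_sp2: field-spectrometer radiance above each Spectralon.
    dn_sp1, dn_sp2: camera digital numbers above each Spectralon.
    gain_c: sensor gain used during the calibration step.
    All values refer to one wavelength and one pixel of the sensor array.
    """
    x1 = dn_sp1 / gain_c  # gain-normalised digital number, target Sp1
    x2 = dn_sp2 / gain_c  # gain-normalised digital number, target Sp2
    if x1 == x2:
        raise ValueError("The two targets must give different digital numbers.")
    a = (rad_sp2 - rad_sp1) / (x2 - x1)  # slope of the line through both points
    b = rad_sp1 - a * x1                 # intercept of that line
    return a, b


def dn_to_radiance(dn: float, gain: float, a: float, b: float) -> float:
    """Apply the assumed linear model to convert a digital number to radiance."""
    return a * (dn / gain) + b
```

In practice the coefficients would be computed once per wavelength and per pixel, for example by looping this function over the sensor array.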

3.3.2. In Situ Standardization

- $G_{IS\,Sp}$: sensor gain used during in situ measurements of the 99% Spectralon;

- $G_{IS\,Fl}$: sensor gain used during the in situ flight;

- $DN_{IS\,Sp}$: digital number collected in situ by the hyperspectral camera of the 99% Spectralon;

- $DN_{IS\,Fl}$: digital number collected in situ by the hyperspectral camera during the flight;

- R: resulting remote-sensing reflectance.
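The standardization equation is not reproduced in this text either. As a hedged illustration of how the quantities listed above might combine, the sketch below converts both the flight and the Spectralon digital numbers to radiance with an assumed linear calibration (radiance = a · (DN / gain) + b), then takes their ratio scaled by the nominal 99% panel reflectance. The function name, the linear model, and the scaling by 0.99 are assumptions made for this example only.

```python
def reflectance_from_dn(
    dn_fl: float,
    gain_fl: float,
    dn_sp: float,
    gain_sp: float,
    a: float,
    b: float,
    panel_reflectance: float = 0.99,
) -> float:
    """Estimate reflectance R for one wavelength and one pixel.

    dn_fl, gain_fl: digital number and sensor gain during the flight.
    dn_sp, gain_sp: digital number and sensor gain above the 99% Spectralon.
    a, b: calibration coefficients of the ASSUMED linear model.
    panel_reflectance: nominal reflectance of the reference panel (assumed 0.99).
    """
    rad_fl = a * (dn_fl / gain_fl) + b  # at-sensor radiance over the scene
    rad_sp = a * (dn_sp / gain_sp) + b  # at-sensor radiance over the panel
    if rad_sp <= 0:
        raise ValueError("Panel radiance must be positive.")
    return panel_reflectance * rad_fl / rad_sp
```

Normalizing each digital number by its own gain before the ratio is what lets the flight and the panel acquisition use different gain settings.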

3.3.3. Taking Temporal Variations of Irradiance into Account

4. Results

4.1. Comparison to Field Spectrometer

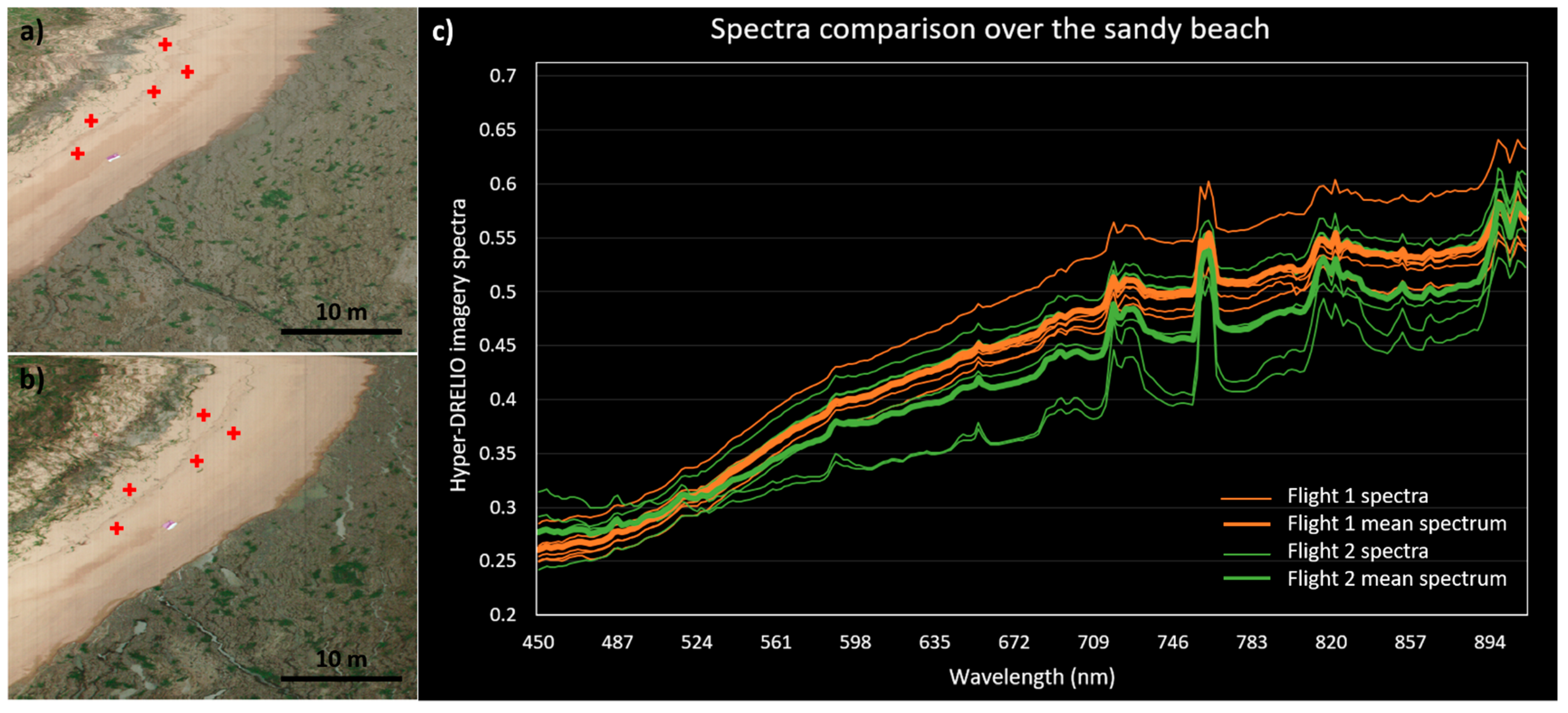

4.2. Relative Comparison over the Sandy Beach

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Cite as: Jaud, M.; Sicot, G.; Brunier, G.; Michaud, E.; Le Dantec, N.; Ammann, J.; Grandjean, P.; Launeau, P.; Thouzeau, G.; Fleury, J.; Delacourt, C. Easily Implemented Methods of Radiometric Corrections for Hyperspectral–UAV—Application to Guianese Equatorial Mudbanks Colonized by Pioneer Mangroves. Remote Sens. 2021, 13, 4792. https://doi.org/10.3390/rs13234792