Abstract

This study aimed to classify an urban area and its surroundings after the destructive M7.3 Kermanshah earthquake (12 November 2017) in western Iran, using very high-resolution (VHR) post-event WorldView-2 images and object-based image analysis (OBIA) methods. The spatial resolution of the multispectral (MS) bands (~2 m) was first improved with a pan-sharpening technique, which fuses the information of the panchromatic (PAN) and MS bands to generate pan-sharpened images with a spatial resolution of about 50 cm. After segmentation, classification was the main step for extracting the target features, using spectral and shape indices. The classes were defined as follows: type 1 (settlement area) comprised collapsed areas, non-collapsed areas, and camps; type 2 (vegetation area) comprised orchards, cultivated areas, and urban green spaces; and type 3 (miscellaneous area) comprised rocks, rivers, and bare lands. Because OBIA integrates the spatial characteristics of image objects, we also aimed to evaluate the efficiency of object-based features for damage assessment within a semi-automated approach. For this goal, image context assessment algorithms (e.g., textural parameters, shape, and compactness) were combined with spectral information (e.g., brightness and standard deviation) in an integrated approach. The classification results were satisfactory when compared with the reference map of collapsed buildings provided by UNITAR (the United Nations Institute for Training and Research). In addition, the temporary camps were counted after applying OBIA, indicating that 10,249 tents or temporary shelters had been established for homeless people up to 17 November 2018. Based on the total affected population, essential resources such as emergency equipment, canned food, and water bottles can be estimated.
This research contributes to remote sensing practice by applying several object-based image analysis techniques and evaluating their efficiency within a semi-automated approach, which supports applying these methods to other case studies worldwide.

1. Introduction

An earthquake is a sudden shaking of the ground and a natural hazard that cannot be predicted. Therefore, the main means of reducing its consequences are retrofitting buildings before an event and quickly identifying damaged areas after it. Earthquakes cause severe damage to buildings and other man-made structures, posing a serious threat to human life [1]. Rapid damage mapping in urban areas in the first hours after a hazardous event is an important task of the disaster response phase [2,3,4]. Statistical records show that entrapment in collapsed buildings is a major cause of loss of life after any strong earthquake [5,6]. Therefore, a quick evaluation of buildings in an urban area can help estimate the aftermath of an earthquake, facilitate proper shelter for injured people, and improve the post-earthquake response of rescue teams [7,8].

Recent progress in remote sensing and Earth observation technologies has provided a wide range of satellite images that can be employed for many applications, such as efficient natural hazard monitoring [9,10]. Exploiting this wide range of data requires quick and cost-effective data-driven image analysis approaches, such as semi-automated object-based image analysis [11]. In recent years, Earth observation-based damage assessment has been widely investigated. Remote sensing techniques offer a cost-effective and prompt way to survey a specific area and to use the obtained information for a quick disaster response [12,13].

Using very high-resolution (VHR) multitemporal satellite imagery is one of the most common remote sensing approaches to identifying and mapping destroyed buildings [14,15]. However, VHR images are not always available, especially for the pre-earthquake phase, owing to their high cost. Therefore, innovative semi-automated methods, such as object-based image analysis (OBIA) and deep learning, can be applied to extract and recognize damaged buildings from a single post-event VHR image quickly and cost-effectively. When a single image is used, the spatial features commonly employed for building damage identification include image texture [16,17,18,19] and the morphological characteristics of post-earthquake buildings [20]. Because earthquake damage to buildings is complex and diverse [19,21], conventional satellite image processing methods often cannot correctly identify the different devastated areas in an urban environment, which limits their usefulness for crisis management.

In the present study, semi-automated OBIA was used to classify and distinguish destroyed and damaged buildings in satellite data. The OBIA approach is promising because it resembles human perception: it starts by segmenting images into homogeneous regions that approximately correspond to real-world objects [22]. Technically, the characteristics of the calculated segments, such as shape, texture, layer-based values, and object context, are the main inputs of the OBIA classification process [23]. One of the most significant characteristics of OBIA is that it detects and classifies targets larger than single pixels as image objects, which allows a variety of spatial and spectral features, such as textural parameters, shape, neighborhood, and relations, to be integrated in modelling tasks [24]. Additionally, OBIA can use both the intrinsic properties of objects and their contextual or spatial behavior through neighborhood or topological relationships between objects [25].

OBIA is regarded as an effective and efficient technology for VHR image classification because of its clear and intuitive workflow [26]. The OBIA approach can be effectively paired with geographic information system (GIS) techniques, allowing more comprehensive mapping of land use classes for GIS studies [27,28]. Moreover, compared with pixel-based classification, the segmented objects in OBIA carry rich spectral and textural features as well as shape and contextual information, which can improve classification performance for various types of objects [29,30].

The use of statistical indicators, parameters, and the characteristics of segments is one of the most common operations in improving the OBIA technique for the classification of high-resolution images. Parameter optimization has been a research subject for decades, with the most recent trend being the use of an automated, optimal parameter determination technique. However, defining appropriate segmentation parameters, even for a single image, is a significant challenge [31]. Furthermore, it should be noted that OBIA requires specific parameterization for different urban patterns to produce optimal segmentation products [32].

It should be noted that the range of satellite sensors and the volume of remote sensing data and products have increased significantly over the past decade. Intensive progress in Earth observation technologies has yielded significant improvements in the spatial, spectral, and temporal resolutions of satellite images, increasingly taking remote sensing into the arena of big data. The diversity and number of application fields and the variety of methods to process large amounts of data have steadily increased [33,34,35]. Consequently, traditional image processing and classification methods face considerable challenges, and the remote sensing community needs novel and efficient data-driven approaches, such as semi-automated ones. In response to this demand, OBIA, with its capability for rule-based development, provides an efficient framework that can be applied to a wide range of applications. Technically, rule-based classification is an object-identification technique with data mining capabilities: it divides the image content into meaningful image objects, which then serve as the basis of semi-automated classification [36,37]. Based on these considerations, the objective of the present study is to explore the performance of OBIA in building damage assessment and temporary camp detection using post-earthquake WorldView-2 VHR satellite imagery. Our goal is to identify efficient OBIA algorithms for a semi-automated framework that future researchers can apply to other earthquake case studies. We expect the proposed approach to support future studies that apply cost-effective, data-driven methods for monitoring earthquake consequences.
This study also contributes to the application of remote sensing for the rapid estimation of essential resources for the affected people.

2. Study Area

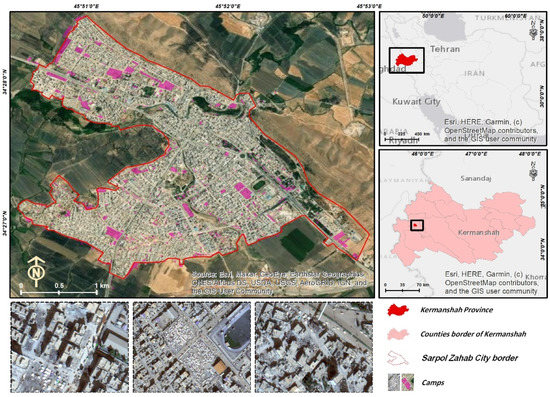

Iran is widely acknowledged as a country where many natural disasters occur every year, posing a serious threat to people’s lives. For example, on 26 December 2003, a destructive earthquake (M6.5) struck Bam city, causing 30,000 fatalities and 20,000 injuries [38]. On 11 August 2012, the Ahar–Varzaghan twin earthquakes (M6.3 and M6.4) caused more than 300 deaths [39]. On 12 November 2017 (21:48 local time), Sarpol-e Zahab was struck by the deadliest earthquake (M7.3) of 2017, with more than 600 deaths, 7000 injured people, and 15,000 homeless [40,41]. Given these earthquakes, identifying devastated areas for emergency assistance to the injured is one of the most critical tasks in earthquake-prone cities. According to seismic reports, the central government authorities were not able to estimate the extent of damage during the first 72 h after the event, because the earthquake struck at night and because of the harsh topography of the affected area and its scattered settlements (e.g., towns and villages). These issues increased the number of casualties and caused irreversible socioeconomic consequences [2].

Iran faces natural disasters such as floods, landslides, and earthquakes every year [42,43,44,45]. In the present study, we seek to identify the destroyed buildings in Sarpol-e Zahab, located in Kermanshah province in western Iran near the Iraqi border. According to data released by the Sarpol-e Zahab Municipality, the city currently has a population of 45,481 and covers an area of about 544 hectares [46]. Sarpol-e Zahab and the surrounding urban and rural areas were severely damaged by the M7.3 earthquake, the strongest seismic event near the Iran–Iraq border since 1967 (Figure 1) [47,48,49].

Figure 1.

Location of Sarpol-e Zahab city and Kermanshah province in west Iran.

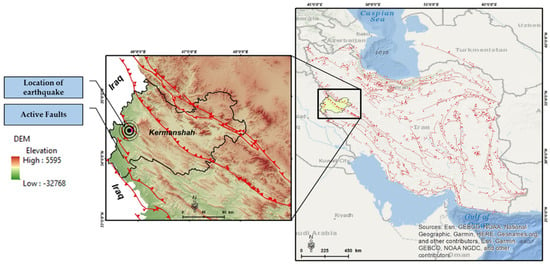

From a geological point of view, the M7.3 Sarpol-e Zahab earthquake occurred in the northwestern Zagros fold-and-thrust belt (Figure 2). The Zagros Mountains have been uplifting since the late Eocene because of the collision between the Arabian and Eurasian plates [50]. According to the USGS (United States Geological Survey) earthquake catalog, only a few earthquakes between M4 and M5 have been recorded within a radius of more than 200 km around the epicenter [51].

Figure 2.

Location of earthquake and active faults in the study area.

3. Materials and Methods

3.1. Dataset

WorldView-2 is a commercial satellite that acquires eight multispectral bands. Its revisit time and swath width are 1.1 days and 16.4 km, respectively [52,53,54,55]. The spatial resolution of the multispectral (MS) bands is 2 m: coastal blue (400–450 nm), blue (450–510 nm), green (510–580 nm), yellow (585–625 nm), red (630–690 nm), red edge (705–745 nm), NIR1 (770–895 nm), and NIR2 (860–1040 nm) [52]. The spatial resolution of the panchromatic (PAN) band (450–800 nm) is about 0.5 m. According to DigitalGlobe [56], these eight bands are designed to serve various demands and applications, such as damage monitoring, coastline delineation, environmental purposes, and resource management [53,54]. To identify damaged buildings and the locations of temporary shelters and tents after the earthquake, we used a single four-band VHR image from the WorldView-2 satellite. Details of the image are given in Table 1. To validate the VHR image classification, we also obtained a reference map provided by the United Nations. The reference map for Sarpol-e Zahab was generated in 2017 by UNITAR (United Nations Institute for Training and Research) using various remote sensing and field measurements.

Table 1.

Applied image characteristics.

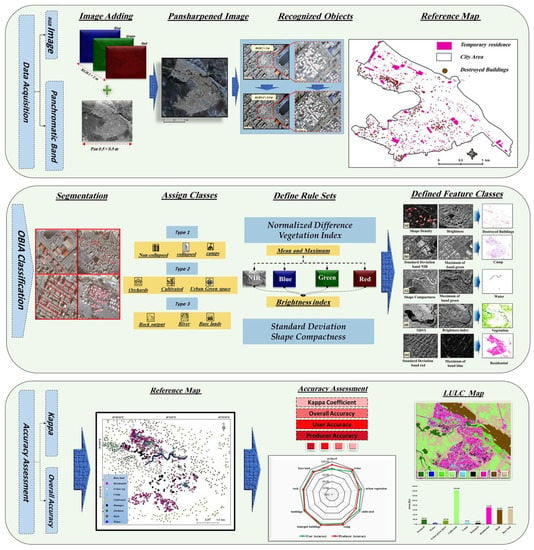

3.2. Methodology

The spectral information from WorldView-2 was used to compute different indices, which formed the rulesets of the OBIA method. The general workflow of this study is shown in Figure 3. As shown in Table 1, the spatial resolution of the WorldView-2 multispectral bands is 2 m, which is too coarse for extracting urban features in this case. Pan-sharpening addresses this by fusing the PAN and MS images to raise the spatial resolution of the MS bands to 0.5 m. In brief, pan-sharpened images are enhanced MS images with the same spatial resolution as the PAN image [57,58,59]. Therefore, as shown in the workflow (Figure 3), we fused the panchromatic and multispectral bands of WorldView-2, because a finer spatial resolution greatly facilitates detecting small objects, such as temporary tents and small buildings, and identifying the different patterns of destroyed buildings [59]. After preparing the image, object-based classification was performed. To examine the performance of the technique, we then evaluated the accuracy of the classification results using reference points for the study area received from the UN, computing the kappa coefficient together with the overall, user's, and producer's accuracies. The OBIA classification was performed in the eCognition software environment, and the accuracy assessment was conducted in Microsoft Excel.
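The study pan-sharpened the image in QGIS but does not name the fusion algorithm. As a minimal sketch of the idea only, the Python code below implements the Brovey transform, a common ratio-based pan-sharpening method chosen here for illustration; it is not necessarily the method the study used. It assumes the four MS bands are first resampled to the PAN grid, and the nearest-neighbour helper and both function names are hypothetical simplifications.

```python
import numpy as np


def upsample_nearest(ms: np.ndarray, factor: int) -> np.ndarray:
    """Nearest-neighbour resampling of a (bands, h, w) array by an integer factor.

    For WorldView-2, going from 2 m MS pixels to the 0.5 m PAN grid is a factor of 4.
    """
    return ms.repeat(factor, axis=1).repeat(factor, axis=2)


def brovey_pansharpen(ms_up: np.ndarray, pan: np.ndarray, eps: float = 1e-6) -> np.ndarray:
    """Brovey-style fusion of an upsampled MS stack (bands, H, W) with a PAN image (H, W).

    Each band is multiplied by PAN / mean(MS bands): spatial detail comes from PAN,
    while the ratios between bands (the spectral shape) are preserved pixel by pixel.
    """
    ms_up = ms_up.astype(np.float64)
    intensity = ms_up.mean(axis=0)                       # synthetic intensity from the MS bands
    ratio = pan.astype(np.float64) / (intensity + eps)   # eps avoids division by zero
    return ms_up * ratio                                 # broadcasts the ratio over all bands


# Usage with synthetic data: a 4-band 5 x 5 MS tile and a 20 x 20 PAN tile.
rng = np.random.default_rng(0)
ms = rng.uniform(0.1, 1.0, size=(4, 5, 5))
pan = rng.uniform(0.1, 1.0, size=(20, 20))
sharpened = brovey_pansharpen(upsample_nearest(ms, 4), pan)
print(sharpened.shape)  # (4, 20, 20)
```

Ratio methods like this one are simple, but they change absolute band values, which matters if index thresholds tuned on one product are reused on another.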

Figure 3.

Flowchart of the research process. The main steps are image input, segmentation, application of object-based features, classification, and validation.

3.2.1. Object-Based Image Analysis for Damage Mapping

Building characteristics such as geometry, area, rectangular fit, and convexity add considerable complexity to collapsed building detection after earthquakes [60]. Where the urban morphology and the image resolution are adequate for recognition, OBIA approaches have performed well in extracting objects such as roofs and roads at the settlement level [61,62]. OBIA techniques are useful for considering both context and pixel values in classification, because they use properties such as texture, form, compactness, and neighborhood relationships during segmentation and feature extraction [63,64,65,66,67,68]. Although the main goal of the study is to detect damaged buildings and temporary camps, other urban elements were also classified to provide statistical information about the city. Image segmentation is the first step of OBIA classification: it produces the image objects (segments) that represent object boundaries, and a good segmentation is a prerequisite for reliable classification results [69,70,71,72].

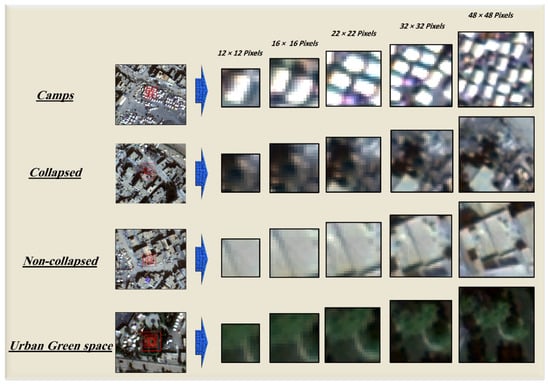

Because urban features and objects occur at a variety of scales, different segmentation scales were applied to identify them. For instance, selecting a low segmentation scale creates tiny image objects, a result called over-segmentation [73]. A low segmentation scale was used to identify tents and temporary residences and to preserve feature boundaries. Some examples of differences between urban objects in various classes are presented in Figure 4.

Figure 4.

Examples of scale differences in urban objects. The figure also shows the image content that the OBIA segmentation must delineate as image objects.

For this purpose, a segmentation scale of 25 was applied. To obtain the optimal segmentation scale, the cadaster map and field measurements of 120 sample buildings were used: the segmentation was performed at several scales (10, 15, 25, 30, 35), and by comparing the areas of the image objects of the 120 sample buildings at each scale with their reference areas, we selected 25 as the optimal scale. In some parts of the image, the segmented features were implausible, meaning that the features were not completely delineated. To solve this problem, merging operations were applied in those parts to obtain correct feature borders. The scale levels for segmentation and merging were chosen by visual inspection and trial and error, as recommended by previous studies [74,75], and were validated visually against the shapes and patterns of the objects. To implement the object-based technique, the following rulesets were used: NDVI; the mean and maximum of the red, green, blue, and NIR bands; the brightness index; standard deviation; and shape compactness. Determining the rules depends on human experience and reasoning to achieve a specific objective [74,75,76,77]. Each of the rulesets is explained below.
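The scale-selection procedure described above, which compares object areas with the reference areas of sample buildings across candidate scales, can be sketched as follows. The error measure (mean absolute relative area error) and the function name are assumptions for illustration, since the study does not state which area-comparison statistic it used; the three-building example is hypothetical and stands in for the 120 sample buildings.

```python
import numpy as np


def select_scale(reference_areas, object_areas_by_scale):
    """Return the segmentation scale whose object areas best match the reference areas.

    reference_areas: areas of the sample buildings from the cadaster/field survey.
    object_areas_by_scale: {scale: areas of the image objects matched to the same
        buildings, in the same order}.
    The score is the mean absolute relative area error (an assumed criterion).
    """
    ref = np.asarray(reference_areas, dtype=float)
    errors = {}
    for scale, areas in object_areas_by_scale.items():
        areas = np.asarray(areas, dtype=float)
        errors[scale] = float(np.mean(np.abs(areas - ref) / ref))
    best = min(errors, key=errors.get)  # lowest error wins
    return best, errors


# Hypothetical example: three buildings (m^2) and matched object areas at three scales.
reference = [100.0, 200.0, 150.0]
candidates = {
    10: [40.0, 90.0, 60.0],     # over-segmented: buildings split into small objects
    25: [98.0, 205.0, 148.0],
    35: [180.0, 260.0, 240.0],  # under-segmented: buildings merged with surroundings
}
best_scale, scale_errors = select_scale(reference, candidates)
print(best_scale)  # 25
```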

- Normalized Difference Vegetation Index

Because cultivated areas, orchards, and urban green spaces differ markedly from one another, the NDVI was used to identify vegetated land in the study area and to separate these three types. Applying this index after segmentation at a scale of 250 gave satisfactory vegetation detection in the study area. The index was computed using the following equation [76]:

$$\mathrm{NDVI} = \frac{\mathrm{NIR} - R}{\mathrm{NIR} + R} \tag{1}$$

where NIR represents the near-infrared band (band 4 in our case) and R the red band (band 3 in our case).
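To illustrate applying the NDVI formula (NIR − R)/(NIR + R) per image object rather than per pixel, the sketch below computes NDVI from each object's mean NIR and red values and flags objects above a threshold as vegetation. Computing NDVI from object means (rather than averaging pixel NDVI), the consecutive 0..n−1 object labels, the 0.3 threshold, and the function names are all assumptions for illustration.

```python
import numpy as np


def object_means(band: np.ndarray, labels: np.ndarray) -> np.ndarray:
    """Mean value of `band` inside each object; labels must be 0..n-1 with no gaps."""
    flat = labels.ravel()
    counts = np.bincount(flat)
    return np.bincount(flat, weights=band.ravel().astype(float)) / counts


def object_ndvi(nir: np.ndarray, red: np.ndarray, labels: np.ndarray) -> np.ndarray:
    """NDVI per object, computed from the object means of NIR (band 4) and red (band 3)."""
    nir_mean = object_means(nir, labels)
    red_mean = object_means(red, labels)
    return (nir_mean - red_mean) / (nir_mean + red_mean)


# Toy 2 x 2 scene with two objects: object 0 is vegetated, object 1 is not.
labels = np.array([[0, 0], [1, 1]])
nir = np.array([[0.5, 0.5], [0.2, 0.2]])
red = np.array([[0.1, 0.1], [0.2, 0.2]])
ndvi = object_ndvi(nir, red, labels)   # ndvi ≈ [0.667, 0.0]
is_vegetation = ndvi > 0.3             # hypothetical threshold
```

Objects whose mean NIR and red are both zero would yield NaN, so no-data areas should be masked before this step.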

- Mean and Maximum of bands

Because objects reflect differently in different ranges of the electromagnetic spectrum, statistics such as the mean and maximum reflectance of the bands can be used to distinguish them. In the present study, the maximum reflectance in the visible bands (RGB) allowed us to identify and extract buildings with bright or impervious roofs. The mean of the blue band also helped identify bright objects in the image.
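The mean and maximum rules above reduce each band to one statistic per object. A minimal NumPy sketch follows; the bright-roof rule, its threshold, and the function names are hypothetical, and OBIA software such as eCognition computes these features internally.

```python
import numpy as np


def object_mean_max(band: np.ndarray, labels: np.ndarray):
    """Per-object mean and maximum of one band; labels must be 0..n-1 with no gaps."""
    flat_labels = labels.ravel()
    values = band.ravel().astype(float)
    n = int(flat_labels.max()) + 1
    counts = np.bincount(flat_labels, minlength=n)
    means = np.bincount(flat_labels, weights=values, minlength=n) / counts
    maxima = np.full(n, -np.inf)
    np.maximum.at(maxima, flat_labels, values)  # unbuffered per-object maximum
    return means, maxima


def bright_roof_mask(red, green, blue, labels, threshold):
    """Flag objects whose maximum in any visible band exceeds `threshold` (hypothetical rule)."""
    max_rgb = np.max([object_mean_max(b, labels)[1] for b in (red, green, blue)], axis=0)
    return max_rgb > threshold


labels = np.array([[0, 0, 1], [0, 1, 1]])
band = np.array([[1.0, 3.0, 5.0], [2.0, 4.0, 9.0]])
means, maxima = object_mean_max(band, labels)
# means -> [2.0, 6.0], maxima -> [3.0, 9.0]
```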

- Brightness index

The brightness index separates the brightest and darkest parts of the image based on their reflectance values. Applying this index allowed us to identify the shadows of buildings and trees as the darkest parts of the image and the tents as the brightest objects. The index is calculated as follows [77]:

$$\bar{c}(v) = \frac{1}{w^{B}} \sum_{k=1}^{K} w_{k}^{B}\, \bar{c}_{k}(v), \qquad w^{B} = \sum_{k=1}^{K} w_{k}^{B} \tag{2}$$

where $w_k^B$ is the brightness weight of image layer $k$, which lies between 0 and 1; $K$ is the number of layers of the image (4 in our case); $w^B$ is the sum of the brightness weights of all layers used to calculate the brightness $\bar{c}(v)$; and $\bar{c}_k(v)$ is the mean intensity of image layer $k$ within segment $v$.
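The brightness index is a weighted average of the per-layer object means, normalised by the sum of the layer weights. The sketch below implements that weighted mean with NumPy; equal weights are an assumption (the study does not report its layer weights), and the function name is hypothetical.

```python
import numpy as np


def object_brightness(bands: np.ndarray, labels: np.ndarray, weights=None) -> np.ndarray:
    """Weighted brightness per object.

    bands: (K, H, W) image layers; labels: (H, W) object ids 0..n-1 with no gaps.
    weights: brightness weights w_k in [0, 1] (default: all 1).
    Returns sum_k w_k * mean_k(v) / sum_k w_k for every object v.
    """
    k = bands.shape[0]
    w = np.ones(k) if weights is None else np.asarray(weights, dtype=float)
    flat = labels.ravel()
    counts = np.bincount(flat)
    layer_means = np.stack(
        [np.bincount(flat, weights=b.ravel().astype(float)) / counts for b in bands]
    )  # shape (K, n_objects)
    return (w[:, None] * layer_means).sum(axis=0) / w.sum()


labels = np.array([[0, 1]])
bands = np.array([[[2.0, 4.0]], [[4.0, 8.0]]])       # two layers, two one-pixel objects
equal = object_brightness(bands, labels)              # equal weights
first_only = object_brightness(bands, labels, [1.0, 0.0])  # only the first layer counts
```

Low values of this feature would then mark shadows and high values bright objects such as tents, subject to thresholds chosen for the scene.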

- Standard Deviation (StdDev)

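This index is the standard deviation of the values of the pixels that form an object (segment). It is calculated as follows [78]:

$$\sigma_{k}(v) = \sqrt{\frac{1}{\#P_{v}} \sum_{(x,y) \in P_{v}} \left( c_{k}(x,y) - \bar{c}_{k}(v) \right)^{2}} \tag{3}$$

where $\sigma_k(v)$ is the standard deviation of object $v$ in image layer $k$, $P_v$ is the set of pixels forming object $v$ and $\#P_v$ their number, $(x,y)$ are the coordinates of a pixel of object $v$, $c_k(x,y)$ is the intensity of layer $k$ at pixel $(x,y)$, and $\bar{c}_k(v)$ is the mean intensity of layer $k$ within object $v$.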
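The per-object standard deviation (population form, dividing by the number of pixels) can be computed for all objects at once from per-object sums and sums of squares. The following is a sketch under that assumption; the function name is hypothetical.

```python
import numpy as np


def object_std(band: np.ndarray, labels: np.ndarray) -> np.ndarray:
    """Population standard deviation of `band` inside each object (labels 0..n-1, no gaps)."""
    flat = labels.ravel()
    values = band.ravel().astype(float)
    counts = np.bincount(flat)
    mean = np.bincount(flat, weights=values) / counts
    mean_sq = np.bincount(flat, weights=values**2) / counts
    var = np.clip(mean_sq - mean**2, 0.0, None)  # clip tiny negatives from rounding
    return np.sqrt(var)


labels = np.array([[0, 0, 1, 1]])
nir = np.array([[1.0, 3.0, 5.0, 5.0]])
stds = object_std(nir, labels)  # object 0 is heterogeneous, object 1 is uniform
```

The mean-of-squares form is compact but can lose precision for large, nearly constant values; a two-pass computation (subtracting the object mean first) is safer in that case.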

- Shape compactness

This index describes how compact an image object is. It is computed with Equation (4) [79] as the product of the object's length and width divided by its number of pixels:

$$\mathrm{Compactness}(v) = \frac{l_{v}\, w_{v}}{\#P_{v}} \tag{4}$$

where $l_v$ and $w_v$ are the length and width of object $v$ and $\#P_v$ is its number of pixels. The value ranges from zero to infinity, and 1 corresponds to an ideally compact object.
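Assuming compactness is defined as the object's length times width divided by its pixel count (so a completely filled rectangle scores 1 and ragged objects score higher), the sketch below approximates length and width by the object's axis-aligned bounding box. That approximation is our own simplification: it is rotation-sensitive and may differ from how a given OBIA package measures length and width. The function name is hypothetical.

```python
import numpy as np


def object_compactness_bbox(labels: np.ndarray) -> np.ndarray:
    """Compactness = (length * width) / pixel count, with length/width from the bounding box.

    labels: (H, W) object ids 0..n-1 with no gaps.
    A filled axis-aligned rectangle gives 1.0; ragged or scattered objects give larger values.
    """
    n = int(labels.max()) + 1
    rows, cols = np.indices(labels.shape)
    flat = labels.ravel()
    r = rows.ravel()
    c = cols.ravel()
    rmin = np.full(n, np.inf)
    rmax = np.full(n, -np.inf)
    cmin = np.full(n, np.inf)
    cmax = np.full(n, -np.inf)
    np.minimum.at(rmin, flat, r)
    np.maximum.at(rmax, flat, r)
    np.minimum.at(cmin, flat, c)
    np.maximum.at(cmax, flat, c)
    length = rmax - rmin + 1
    width = cmax - cmin + 1
    counts = np.bincount(flat, minlength=n)
    return (length * width) / counts


# Object 0 is a filled 2 x 3 block, object 1 is L-shaped, object 2 is a 1 x 2 strip.
labels = np.array([[0, 0, 0, 1],
                   [0, 0, 0, 1],
                   [2, 2, 1, 1]])
compactness = object_compactness_bbox(labels)
```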

3.2.2. Accuracy Assessment

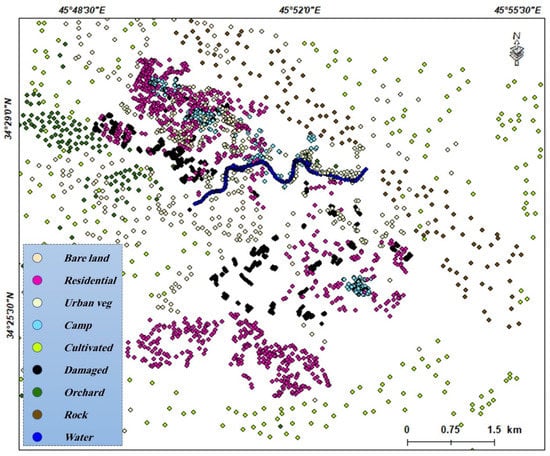

When analyzing satellite images, it is crucial to assess the accuracy of any classification [73]. Therefore, we measured the accuracy of our methodology with respect to its suitability for the given application (identifying buildings destroyed by the earthquake). We assessed the obtained map using the overall accuracy, user's accuracy, producer's accuracy, and kappa coefficient. The evaluation data include data extracted from the processed image, which has a spatial resolution of 0.5 m, and data received from UNITAR, which were collected after the earthquake (Figure 5).

Figure 5.

Control points obtained from the reference map.

The overall, user's, and producer's accuracies were obtained from these data. Table 2 presents how the accuracy indices are calculated.

Table 2.

Accuracy assessment indices.

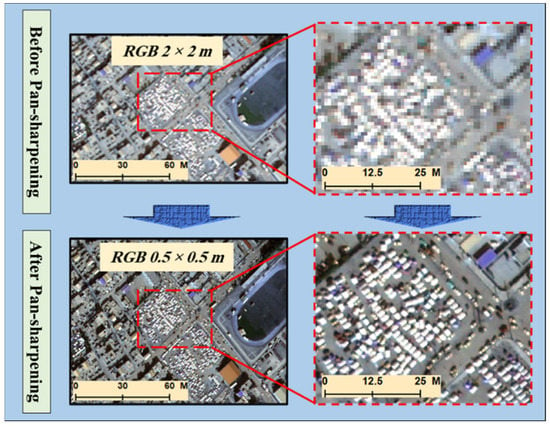
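The accuracy indices of Table 2 can all be derived from the error (confusion) matrix. The sketch below assumes rows hold the classified labels and columns the reference labels (conventions vary, so transpose if needed), and uses a small hypothetical two-class matrix rather than the study's Table 4. The function name is hypothetical.

```python
import numpy as np


def accuracy_metrics(cm):
    """Overall, user's and producer's accuracy and Cohen's kappa from an error matrix.

    cm[i, j] = number of samples classified as class i whose reference class is j.
    """
    cm = np.asarray(cm, dtype=float)
    total = cm.sum()
    diag = np.diag(cm)
    row_totals = cm.sum(axis=1)    # totals per classified class
    col_totals = cm.sum(axis=0)    # totals per reference class
    overall = diag.sum() / total
    users = diag / row_totals      # 1 - commission error
    producers = diag / col_totals  # 1 - omission error
    expected = (row_totals * col_totals).sum() / total**2  # chance agreement p_e
    kappa = (overall - expected) / (1.0 - expected)
    return overall, users, producers, kappa


cm = [[45, 5],
      [5, 45]]
oa, ua, pa, kappa = accuracy_metrics(cm)
# oa = 0.9, ua = pa = [0.9, 0.9], kappa = 0.8
```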

4. Results

The present investigation aimed to identify the buildings destroyed by the earthquake and the temporary camps in Sarpol-e Zahab. For this purpose, a single four-band post-earthquake image from the WorldView-2 satellite was used, and urban objects were identified with the OBIA method. To increase the spatial resolution of the image, we pan-sharpened it in QGIS. Pan-sharpening markedly improved the spatial detail available for identifying urban objects compared with the original multispectral image (Figure 6).

Figure 6.

Result of pan-sharpening (top image with a resolution of 2 m and bottom image with a resolution of 0.5 m).

4.1. Object-Based Image Analysis

After pre-processing, the OBIA method was used to identify the destroyed buildings and the camps. Segmentation is a significant step in OBIA: it divides an image into homogeneous, non-overlapping zones that are then treated as objects [69]. To segment the image, we used the multiresolution segmentation method, in which small segments are successively merged. Because destroyed buildings have an irregular pattern, segmenting them requires high precision; therefore, all segments belonging to destroyed buildings were merged with each other. To define appropriate rulesets, five functions were used: shape compactness, standard deviation (StdDev), the brightness index, the mean and maximum of bands, and the normalized difference vegetation index (NDVI). Each function performed reliably for particular features. For instance, shape compactness was the most suitable ruleset for identifying the irregular and disordered patterns of demolished buildings, and the NDVI identified vegetation with high accuracy.
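Multiresolution segmentation merges adjacent objects as long as the increase in heterogeneity stays below a threshold tied to the scale parameter. As a simplified sketch, assuming the commonly cited Baatz and Schäpe formulation, the code below computes only the spectral part of the merge cost, the sum over layers k of w_k [n_m σ_k,m − (n_1 σ_k,1 + n_2 σ_k,2)], and accepts a merge when the cost is below the squared scale parameter. The shape (compactness/smoothness) term and the merge search order are omitted, so this is an illustration of the criterion, not eCognition's implementation; both function names are hypothetical.

```python
import numpy as np


def color_merge_cost(pixels_a, pixels_b, layer_weights=None) -> float:
    """Increase in spectral heterogeneity if two adjacent objects are merged.

    pixels_a, pixels_b: (n_pixels, K) arrays of layer values for each object.
    Heterogeneity of an object = its pixel count times the std of each layer.
    """
    a = np.asarray(pixels_a, dtype=float)
    b = np.asarray(pixels_b, dtype=float)
    merged = np.vstack([a, b])
    k = merged.shape[1]
    w = np.ones(k) if layer_weights is None else np.asarray(layer_weights, dtype=float)

    def heterogeneity(p):
        return p.shape[0] * p.std(axis=0)  # n * sigma_k for every layer k

    delta = heterogeneity(merged) - heterogeneity(a) - heterogeneity(b)
    return float((w * delta).sum())


def may_merge(cost: float, scale: float) -> bool:
    """Assumed stopping rule: merge only while the cost stays below scale**2."""
    return cost < scale**2


uniform = color_merge_cost([[10.0], [10.0]], [[10.0], [10.0]])  # identical objects
contrast = color_merge_cost([[0.0], [0.0]], [[10.0], [10.0]])   # dark next to bright
print(uniform, contrast)  # 0.0 20.0
print(may_merge(contrast, scale=5), may_merge(contrast, scale=4))  # True False
```

Under this assumed rule, a larger scale parameter tolerates larger heterogeneity increases and therefore yields larger objects, which is consistent with using low scales for small targets such as tents.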

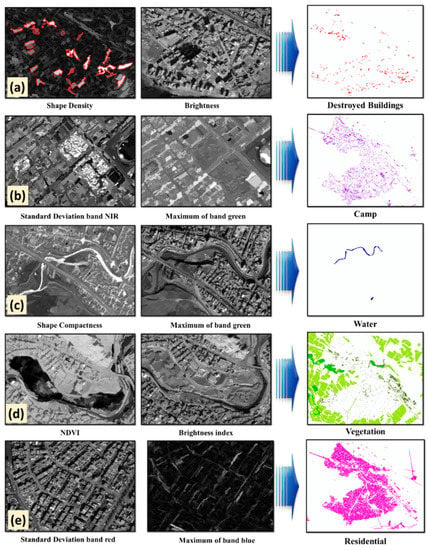

Results obtained with conventional remote sensing and satellite image processing techniques have often lacked sufficient accuracy for this task. Object-based methods partly reduce the uncertainty of these methods. In the present study, different indices were used to separate objects with similar statistical properties. The spectral reflectance of objects differs, especially in urban environments; that is, each class has a spectral signature different from those of the other classes. Therefore, we analyzed all classes in terms of their statistical and spectral attributes. Figure 7 shows some of the computed results. Object-based classification relies on the objects generated by segmentation, and after a period of trial and error, corrections such as merging some segments are required.

Figure 7.

Partial results of applying the rulesets and OBIA classification. Panels (a)–(e) show the indices used to identify the objects.

The destroyed buildings in the WV-2 image do not have a regular, recognizable shape. The only properties that help detect them are the high density of their pixels and their heterogeneity. Accordingly, we used a segmentation scale of 40 and then identified the objects associated with destroyed buildings. These objects were then merged into one class using the shape index; other indices were not appropriate for detecting destroyed buildings. For instance, in Figure 7a, the brightness index was compared with the shape density index, and the shape density index performed better in identifying destroyed buildings. Temporary camps are also critical areas for providing aid to injured people after an earthquake, so they must be identified correctly. Because the tents provided for temporary residence were white, they were easy to identify. Nevertheless, the brightness index alone cannot be used for this purpose, because it selects all high-brightness objects, which increases the uncertainty in tent detection. Instead, a segmentation scale of 20 and the standard deviation of the NIR band were used (Figure 7b). Because water bodies are dark in the NIR band, the river is easy to identify; however, the complexity of objects in urban environments makes class extraction difficult. Therefore, both spectral thresholds (to separate the river from other elongated objects) and compactness thresholds (to separate the river from other regular objects) were used (Figure 7c). The normalized difference vegetation index (NDVI) is one of the most commonly used means of extracting vegetation; here, it was computed from the red and near-infrared bands.
Eventually, three types of vegetation were identified (Table 3 and Figure 7d).

Table 3.

Vegetation obtained from the NDVI.

Moreover, the standard deviation of the red band was used to extract residential areas. All thresholds of the statistical indices were set by trial and error in eCognition (Figure 7e).
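The rules described in this section can be chained into an ordered decision cascade, as sketched below. Every threshold is a hypothetical placeholder (the study tuned its thresholds by trial and error in eCognition and does not report them), the rule order and class names are our assumptions, and the features are taken as already computed per object. The tent rule pairs brightness with a low NIR standard deviation, because brightness alone also captures other bright objects.

```python
from dataclasses import dataclass


@dataclass
class ObjectFeatures:
    ndvi: float
    brightness: float
    mean_nir: float
    std_nir: float
    std_red: float
    compactness: float


# Hypothetical thresholds for reflectance scaled to 0..1; not values from the study.
T = {
    "ndvi_veg": 0.3,
    "nir_water": 0.08,
    "compact_river": 3.0,
    "bright_tent": 0.6,
    "std_nir_tent": 0.03,
    "compact_rubble": 2.0,
    "std_red_rubble": 0.08,
    "std_red_built": 0.04,
}


def classify(f: ObjectFeatures) -> str:
    """Assign a class with an ordered rule cascade; the first matching rule wins."""
    if f.ndvi > T["ndvi_veg"]:
        return "vegetation"
    if f.mean_nir < T["nir_water"] and f.compactness > T["compact_river"]:
        return "river"
    if f.brightness > T["bright_tent"] and f.std_nir < T["std_nir_tent"]:
        return "tent"
    if f.compactness > T["compact_rubble"] and f.std_red > T["std_red_rubble"]:
        return "destroyed building"
    if f.std_red > T["std_red_built"]:
        return "undestroyed building"
    return "bare land"


print(classify(ObjectFeatures(0.05, 0.8, 0.5, 0.01, 0.02, 1.1)))  # tent
```

Ordering matters in such a cascade: placing the vegetation and water rules first keeps those objects out of the building rules, whose heterogeneity thresholds they might otherwise satisfy.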

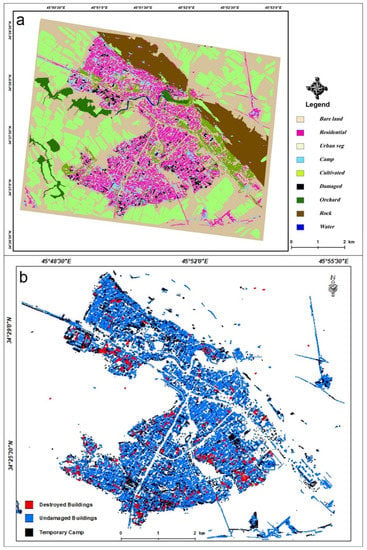

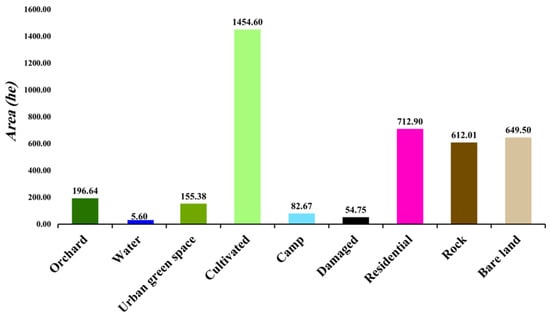

After applying the defined rulesets, a class map was extracted, with emphasis on identifying temporary camps and destroyed and undestroyed buildings. In this study, nine classes were identified and mapped. Agricultural lands and urban buildings account for the largest areas among the obtained classes (Figure 8).

Figure 8.

Land use/land cover (LULC) map of Sarpol-e Zahab. (a) Whole extracted class map; (b) the three main classes, namely, temporary camps, destroyed buildings, and undestroyed buildings.

The area of each class was calculated in hectares in the ArcMap software environment. Agricultural lands and urban buildings account for the largest areas of the obtained land use/land cover (LULC) classes (Figure 9), while the area of destroyed buildings is 54.75 ha. Since the average building footprint in Sarpol-e Zahab is about 90 m², it can be estimated that about 6083 buildings were destroyed.
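The building count follows from dividing the destroyed-building area by the average footprint, under the simplifying assumption that the whole 54.75 ha class consists of building footprints of uniform average size:

$$
N_{\text{destroyed}} \approx \frac{A_{\text{destroyed}}}{\bar{a}_{\text{building}}}
= \frac{54.75\ \text{ha} \times 10^{4}\ \text{m}^{2}/\text{ha}}{90\ \text{m}^{2}}
= \frac{547{,}500\ \text{m}^{2}}{90\ \text{m}^{2}} \approx 6083
$$

The estimate scales inversely with the assumed footprint; for example, a 100 m² average would give about 5475 buildings.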

Figure 9.

Area of obtained classes.

4.2. Accuracy Assessment

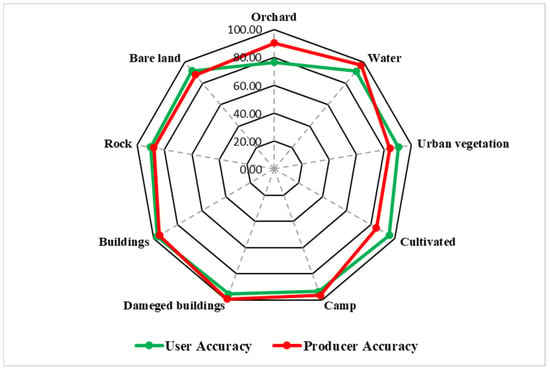

As stated above, four indices were used to evaluate the accuracy of the final results. Table 4 presents the error matrix for all classes. Averaged over all LULC classes, the user's and producer's accuracies were 92.92% and 92.94%, respectively. The overall accuracy calculated from the error matrix was 94.26%, which is a reliable rate. Moreover, for the class of main interest in this study, destroyed buildings, the producer's accuracy was 99.17% and the user's accuracy was 95.33%, indicating high reliability (Table 4 and Figure 10). The kappa coefficient, one of the most commonly used indices of the accuracy of satellite image classification, was also computed using field data collected by the United Nations after the earthquake. The obtained map has a kappa coefficient of 94.05%.

Table 4.

User and producer accuracy assessment for each class.

Figure 10.

User and producer accuracy assessment for each class.

4.3. Human Settlement in Temporary Camps

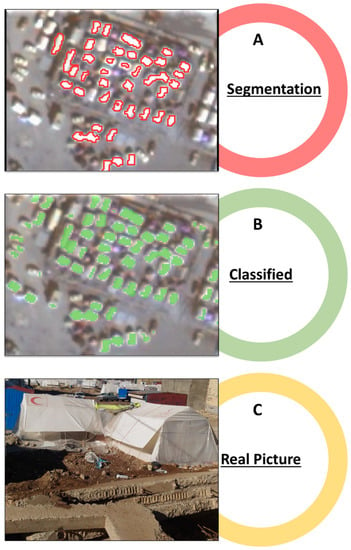

One of the most important measures for reducing post-earthquake stress and concern is providing temporary, safe housing and other essential supplies for people whose houses have been destroyed. An object-based analysis of VHR imagery can support a comprehensive estimation of these needs for a proper disaster response. One objective of the present study was to identify the camps and tents in the study area. The results revealed that about 10,249 tents were established after the event. This estimate agrees well with the report of the Iranian Red Crescent Society for Sarpol-e Zahab, which stated that about 10,000 tents were distributed right after the earthquake. The tents distributed in Sarpol-e Zahab sheltered an average of four people each. With 10,249 tents at four people per tent, about 40,996 people were temporarily sheltered; given the city's total population of 45,481, about 4485 people remained without shelter and required aid and tents. Figure 11 presents the size of each tent in the classified segmentation, as well as a photo taken by the authors at the earthquake site. Furthermore, based on empirical relationships from previous Iranian earthquakes, the essential demands of the affected population, such as emergency toilets and baths, blankets, and canned food, are estimated in Table 5 to support a more flexible and resilient disaster response.
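The shelter figures above follow from simple arithmetic on the tent count, the average occupancy, and the city population. The sketch below reproduces them and scales per-person requirement rates to the unsheltered population; the function names are hypothetical, and the per-person rate in the example is a placeholder, not a value from Table 5.

```python
def shelter_balance(n_tents: int, persons_per_tent: int, population: int):
    """Return (people sheltered in tents, people still without shelter)."""
    sheltered = n_tents * persons_per_tent
    unsheltered = max(population - sheltered, 0)
    return sheltered, unsheltered


def estimate_needs(people: int, per_person_rates: dict) -> dict:
    """Scale per-person requirement rates (e.g., taken from Table 5) to a population."""
    return {item: people * rate for item, rate in per_person_rates.items()}


sheltered, unsheltered = shelter_balance(10_249, 4, 45_481)
print(sheltered, unsheltered)  # 40996 4485
needs = estimate_needs(unsheltered, {"placeholder_item": 2.0})  # hypothetical rate
```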

Figure 11.

Condition of distributed tents for temporary settlement of people. (A): Image segmentation into objects as tents, (B): classified objects as tents; and (C): photo taken by the authors at the earthquake site.

Table 5.

Formulation of required resources based on the Sphere (2004) standards and the Bam earthquake report [80].

5. Discussion

After an earthquake, the most crucial task is to quickly identify destroyed buildings so that immediate aid can be provided. The average revisit interval of WV-2 is 1.1 days, and it can collect up to 1 million km² of imagery daily, but the revisit intervals of most optical satellite missions range from days to weeks, which hampers a prompt damage response. High-resolution satellite data are usually commercial and supplied by a limited number of companies, which makes thorough testing of such techniques difficult. The image used in the present study was a four-band WV-2 image. To increase its spatial resolution, the 0.5 m panchromatic band of the satellite was used, which allowed us to apply a variety of object-based features and to identify distinguishable objects. Despite these enhancements, some features remain difficult to identify, for instance, destroyed buildings without a regular shape or pattern, river sections covered by a tree canopy, and objects such as cars or small buildings in the shadow of towers and larger buildings. Therefore, in addition to accurate techniques and materials, analyst expertise is also required. Additionally, because the segmentation step in OBIA merges adjacent pixels with similar characteristics into image objects, it reduced the pixel-level errors of classification [11]. Moreover, the small number of spectral bands in very high-resolution images limits the use of spectral indices; for instance, SWIR bands and related indices might help in identifying complex features.

This study was a novel attempt to derive useful information for rescue teams from VHR optical images, from “A” to “Z”, using a cost-effective method. We demonstrated that VHR optical images can be used not only to detect damage and changes but also to provide useful information on the number of affected people and their initial needs. The cost-effective, semi-automated method presented here enables decision makers to predict the consequences of an event and to estimate the needs of the affected people. Detecting objects in the urban environment is a challenging problem in optical remote sensing image processing, especially when specific entities must be distinguished. An enhanced object-based technique therefore facilitates the detection of urban elements in the aftermath of an event, such as temporary tents and collapsed buildings. The presented semi-automated approach gave satisfactory results for the detection of the urban classes, but not for the site specifications. Further analysis is needed to examine the transferability of this method to other case studies. Transferability means that knowledge gained while solving one problem can be applied to a different case with almost the same conditions, such as an earthquake in a different geographic location. In future work, combining object-based image analysis with deep learning algorithms might address the transferability problem more easily.
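Once objects have been classified as tents, counting them amounts to counting connected regions in a binary tent mask. The sketch below is a hypothetical illustration, not the procedure used in this study: it assumes a boolean raster where `True` marks pixels classified as tent, uses 4-connectivity, and applies a hypothetical `min_pixels` size filter to discard speckle. Touching tents would merge into one region and be counted once, so the count is a lower bound in dense camps. `scipy.ndimage.label` offers equivalent labelling for large rasters.

```python
from collections import deque

import numpy as np


def count_objects(mask: np.ndarray, min_pixels: int = 1) -> int:
    """Count 4-connected foreground regions with at least min_pixels pixels."""
    mask = np.asarray(mask, dtype=bool)
    seen = np.zeros_like(mask)
    rows, cols = mask.shape
    count = 0
    for r in range(rows):
        for c in range(cols):
            if not mask[r, c] or seen[r, c]:
                continue
            # Breadth-first flood fill of one region, measuring its size.
            size = 0
            queue = deque([(r, c)])
            seen[r, c] = True
            while queue:
                y, x = queue.popleft()
                size += 1
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < rows and 0 <= nx < cols and mask[ny, nx] and not seen[ny, nx]:
                        seen[ny, nx] = True
                        queue.append((ny, nx))
            if size >= min_pixels:
                count += 1
    return count


# Synthetic mask: two 2 x 2 "tents" and one isolated speckle pixel.
mask = np.array([
    [1, 1, 0, 0, 0],
    [1, 1, 0, 1, 1],
    [0, 0, 0, 1, 1],
    [0, 0, 0, 0, 0],
    [1, 0, 0, 0, 0],
])
print(count_objects(mask))                # 3
print(count_objects(mask, min_pixels=2))  # 2
```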

6. Conclusions

We aimed to identify urban objects using the OBIA method, with emphasis on the extraction of earthquake-damaged buildings in the city of Sarpol-e Zahab, Iran. Because of the inherent limitations of remote sensing techniques, we could not classify the degree of damage as defined by EMS-98 (European Macroseismic Scale); accordingly, we only detected and validated totally collapsed buildings. Disaster response based only on optical images is incomplete, even when VHR images are available, and complementary datasets are required to decrease data latency. For instance, synthetic aperture radar (SAR) imagery can be acquired day or night, regardless of weather conditions. Another advantage of SAR data is that the phase and intensity information of complex images in different polarizations helps to detect the different scattering mechanisms of urban and non-urban objects. Double-bounce scattering can quickly reveal the location of built-up areas, while a decrease in double-bounce relative to pre-event images can reveal the location of damaged buildings. Combining optical and SAR imagery is therefore crucial for a thorough analysis of the aftermath. Although several optical and SAR missions provide data, priority should be given to those with shorter revisit intervals to achieve an effective disaster response [81,82,83]. Differential methods (using pre-event and post-event images) may provide accurate results, as described in previous studies [83,84]. Since the use of OBIA for an area struck by a disaster at night remains an open question, the acquisition of VHR polarimetric SAR data in the X, C, and L bands is recommended.
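A simple differential approach for detecting a drop in backscatter between pre-event and post-event SAR images is the log-ratio operator. The sketch below is a hypothetical illustration of that general idea, not a method applied in this study. It assumes two co-registered, radiometrically calibrated intensity (power) images and a hypothetical threshold of -3 dB, which corresponds to roughly halving the backscatter. Real data would also need speckle filtering, and isolating the double-bounce component specifically would require a polarimetric decomposition, which this sketch does not perform.

```python
import numpy as np


def log_ratio_change(pre: np.ndarray, post: np.ndarray,
                     threshold_db: float = -3.0, eps: float = 1e-10) -> np.ndarray:
    """Flag pixels whose intensity dropped below threshold_db (in dB).

    pre and post are co-registered SAR intensity (power) images; eps guards
    against division by zero and log of zero.
    """
    ratio_db = 10.0 * np.log10((post + eps) / (pre + eps))
    return ratio_db < threshold_db


pre = np.array([[1.0, 1.0], [0.5, 0.2]])
post = np.array([[0.2, 0.9], [0.5, 0.2]])
print(log_ratio_change(pre, post))
# [[ True False]
#  [False False]]
```

The ratio is used instead of a difference because SAR speckle is multiplicative, so a ratio responds to relative change consistently across bright and dark areas.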

Given the wide variety of methods and techniques, it is necessary to identify those that are efficient [9,10,11]. From a methodological perspective, the results of this study showed that rule-based OBIA can be considered an efficient data-driven approach in remote sensing. Moreover, we concluded that this approach, combined with effective spectral and spatial algorithms and the integration of remote sensing and GIScience, can be considered an efficient modelling method. We expect that integrating OBIA with novel image classification methods such as deep learning will further increase its efficiency and provide a robust image analysis methodology, which we will consider in future work. The main objective of this research was to use OBIA and to identify efficient object-based methods. The results suggest that integrated semi-automated spatial, geometrical, and spectral features can be applied to monitor building damage caused by earthquakes. Based on these outcomes, we consider the present study a step forward in remote sensing science, as it proposes an efficient approach. The results will support authorities and decision makers in gathering the required information in the post-disaster phase for crisis management and mitigation.
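The core of a rule-based OBIA classification is to aggregate pixel values into per-object features and then apply threshold rules to those features. The sketch below illustrates this pattern under stated assumptions and is not the rule set used in this study. It assumes a segmentation label raster with consecutive integer object IDs, a four-band image in the hypothetical order blue, green, red, NIR, brightness defined as the mean of all bands, and NDVI computed from object means. All thresholds and class names are hypothetical; the standard deviation rule reflects the general observation that rubble tends to appear more heterogeneous than intact roofs or tents.

```python
import numpy as np

# Hypothetical band order of a 4-band product: blue, green, red, NIR.
RED, NIR = 2, 3


def object_stats(labels: np.ndarray, values: np.ndarray):
    """Per-object mean and standard deviation of a single-band array."""
    flat = labels.ravel()
    n = int(flat.max()) + 1
    counts = np.bincount(flat, minlength=n).astype(np.float64)
    sums = np.bincount(flat, weights=values.ravel(), minlength=n)
    sq_sums = np.bincount(flat, weights=values.ravel() ** 2, minlength=n)
    with np.errstate(invalid="ignore", divide="ignore"):
        mean = sums / counts
        var = sq_sums / counts - mean ** 2
    return mean, np.sqrt(np.clip(var, 0.0, None))  # clip tiny negative rounding errors


def classify_objects(labels, image, ndvi_min=0.3, std_min=0.15, bright_min=0.6):
    """Apply hypothetical threshold rules to object-level features."""
    brightness = image.mean(axis=0)
    mean_b, std_b = object_stats(labels, brightness)
    mean_red, _ = object_stats(labels, image[RED])
    mean_nir, _ = object_stats(labels, image[NIR])
    ndvi = (mean_nir - mean_red) / (mean_nir + mean_red + 1e-9)
    classes = []
    for v, b, s in zip(ndvi, mean_b, std_b):
        if v >= ndvi_min:
            classes.append("vegetation")
        elif s >= std_min:
            classes.append("collapsed_candidate")  # heterogeneous object
        elif b >= bright_min:
            classes.append("tent_candidate")       # bright, homogeneous object
        else:
            classes.append("other")
    return classes


# Synthetic 2 x 6 scene with three objects.
labels = np.array([[0, 0, 1, 1, 2, 2], [0, 0, 1, 1, 2, 2]])
image = np.zeros((4, 2, 6))
image[:, :, 0:2] = np.array([0.1, 0.2, 0.1, 0.6])[:, None, None]  # vegetated
image[:, :, 2:4] = 0.8                                              # bright, uniform
image[:, 0, 4:6] = 0.9                                              # mixed bright
image[:, 1, 4:6] = 0.1                                              # and dark pixels
classes = classify_objects(labels, image)
print(classes)  # ['vegetation', 'tent_candidate', 'collapsed_candidate']
```

Rule order matters: here vegetation is tested first so that textured canopies are not mistaken for rubble, a design choice that a real rule set would need to validate against reference data.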

Author Contributions

Conceptualization, S.K., B.F. and M.M.; methodology, B.F. and D.O.; software, D.O.; validation, S.K., B.F. and D.O.; formal analysis, D.O.; investigation, S.K., M.M. and D.O.; resources, M.M.; data curation, S.K.; writing—original draft preparation, D.O., S.K., M.M. and B.F.; writing—review and editing, S.K. and B.F.; visualization, D.O.; supervision, S.K. and B.F.; project administration, S.K. and M.M.; funding acquisition, M.M. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Japan Society for the Promotion of Science (JSPS) Grants-in-Aid for Scientific Research (KAKENHI), grant numbers 20H02411 and 19H02408.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Karimzadeh, S.; Feizizadeh, B.; Matsuoka, M. From a GIS-based hybrid site condition map to an earthquake damage assessment in Iran: Methods and trends. Int. J. Disaster Risk Reduct. 2017, 22, 23–36.

- Firuzi, E.; Ansari, A.; Hosseini, K.A.; Karkooti, E. Developing a customized system for generating near real time ground motion ShakeMap of Iran’s earthquakes. J. Earthq. Eng. 2020, 1–23.

- Dell’Acqua, F.; Gamba, P. Remote sensing and earthquake damage assessment: Experiences, limits, and perspectives. Proc. IEEE 2012, 100, 2876–2890.

- Eguchi, T.; Huyck, K.; Ghosh, S.; Adams, J.; McMillan, A. Utilizing new technologies in managing hazards and disasters. In Geospatial Techniques in Urban Hazard and Disaster Analysis; Springer: New York, NY, USA, 2009; Volume 2, pp. 295–323.

- Ghasemi, M.; Karimzadeh, S.; Matsuoka, M.; Feizizadeh, B. What Would Happen If the M 7.3 (1721) and M 7.4 (1780) Historical Earthquakes of Tabriz City (NW Iran) Occurred Again in 2021? ISPRS Int. J. Geo-Inf. 2021, 10, 657.

- Coburn, W.; Spence, J.; Pomonis, A. Factors determining human casualty levels in earthquakes: Mortality prediction in building collapse. In Proceedings of the Tenth World Conference on Earthquake Engineering; Balkema: Rotterdam, The Netherlands, 1992; Volume 10, pp. 5989–5994.

- Brunner, D.; Lemoine, G.; Bruzzone, L. Earthquake Damage Assessment of Buildings Using VHR Optical and SAR Imagery. IEEE Trans. Geosci. Remote Sens. 2010, 48, 2403–2420.

- Jaiswal, K.; Wald, J. Creating a Global Building Inventory for Earthquake Loss Assessment and Risk Management; Open-File Report 2008–1160; US Geological Survey: Denver, CO, USA, 2008.

- Feizizadeh, B.; Abadi, H.S.S.; Didehban, K.; Blaschke, T.; Neubauer, F. Object-Based Thermal Remote-Sensing Analysis for Fault Detection in Mashhad County, Iran. Can. J. Remote Sens. 2019, 45, 847–861.

- Karimzadeh, S.; Samsonov, S.; Matsuoka, M. Block-based damage assessment of the 2012 Ahar-Varzaghan, Iran, earthquake through SAR remote sensing data. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Fort Worth, TX, USA, 23–28 July 2017.

- Najafi, P.; Feizizadeh, B.; Navid, H. A Comparative Approach of Fuzzy Object Based Image Analysis and Machine Learning Techniques Which Are Applied to Crop Residue Cover Mapping by Using Sentinel-2 Satellite and UAV Imagery. Remote Sens. 2021, 13, 937.

- United Nations; International Search and Rescue Advisory Group. INSARAG Guidelines; Volume II: Preparedness and Response; Manual B: Operations; United Nations International Search and Rescue Advisory Group: Geneva, Switzerland, 2015.

- Han, Q.; Yin, Q.; Zheng, X.; Chen, Z. Remote sensing image building detection method based on Mask R-CNN. Complex Intell. Syst. 2021, 1–9.

- Gusella, L.; Adams, B.J.; Bitelli, G.; Huyck, C.K.; Mognol, A. Object-Oriented Image Understanding and Post-Earthquake Damage Assessment for the 2003 Bam, Iran, Earthquake. Earthq. Spectra 2005, 21, 225–238.

- Yamazaki, F.; Yano, Y.; Matsuoka, M. Visual Damage Interpretation of Buildings in Bam City using QuickBird Images following the 2003 Bam, Iran, Earthquake. Earthq. Spectra 2005, 21, 329–336.

- Klonus, S.; Tomowski, D.; Ehlers, M.; Reinartz, P.; Michel, U. Combined Edge Segment Texture Analysis for the Detection of Damaged Buildings in Crisis Areas. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2012, 5, 1118–1128.

- Moya, L.; Zakeri, H.; Yamazaki, F.; Liu, W.; Mas, E.; Koshimura, S. 3D gray level co-occurrence matrix and its application to identifying collapsed buildings. ISPRS J. Photogramm. Remote Sens. 2019, 149, 14–28.

- Song, J.; Wang, X.; Li, P. Urban building damage detection from VHR imagery by including temporal and spatial texture features. Yaogan Xuebao J. Remote Sens. 2012, 16, 1233–1245.

- Wang, X.; Li, P. Extraction of earthquake-induced collapsed buildings using very high-resolution imagery and airborne lidar data. Int. J. Remote Sens. 2015, 36, 2163–2183.

- Chini, M.; Pierdicca, N.; Emery, W. Exploiting SAR and VHR Optical Images to Quantify Damage Caused by the 2003 Bam Earthquake. IEEE Trans. Geosci. Remote Sens. 2008, 47, 145–152.

- Ural, S.; Hussain, E.; Kim, K.; Fu, C.-S.; Shan, J. Building Extraction and Rubble Mapping for City Port-au-Prince Post-2010 Earthquake with GeoEye-1 Imagery and Lidar Data. Photogramm. Eng. Remote Sens. 2011, 77, 1011–1023.

- Tian, J.; Chen, M. Optimization in multi-scale segmentation of high-resolution satellite images for artificial feature recognition. Int. J. Remote Sens. 2007, 28, 4625–4644.

- Blaschke, T.; Lang, S.; Hay, G. Object-Based Image Analysis: Spatial Concepts for Knowledge-Driven Remote Sensing Applications; Springer: New York, NY, USA, 2008; p. 817.

- Avci, Z.U.; Karaman, M.; Ozelkan, E.; Kumral, M.; Budakoglu, M. OBIA based hierarchical image classification for industrial lake water. Sci. Total Environ. 2014, 487, 565–573.

- Benz, U.C.; Hofmann, P.; Willhauck, G.; Lingenfelder, I.; Heynen, M. Multi-resolution, object-oriented fuzzy analysis of remote sensing data for GIS-ready information. ISPRS J. Photogramm. Remote Sens. 2004, 58, 239–258.

- Wang, J.; Zheng, Y.; Wang, M.; Shen, Q.; Huang, J. Object-Scale Adaptive Convolutional Neural Networks for High-Spatial Resolution Remote Sensing Image Classification. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 14, 283–299.

- Fu, T.; Ma, L.; Li, M.; Johnson, B.A. Using convolutional neural network to identify irregular segmentation objects from very high-resolution remote sensing imagery. J. Appl. Remote Sens. 2018, 12, 025010.

- Arvor, D.; Durieux, L.; Andrés, S.; Laporte, M.-A. Advances in Geographic Object-Based Image Analysis with ontologies: A review of main contributions and limitations from a remote sensing perspective. ISPRS J. Photogramm. Remote Sens. 2013, 82, 125–137.

- Costa, H.; Carrão, H.; Bação, F.; Caetano, M. Combining per-pixel and object-based classifications for mapping land cover over large areas. Int. J. Remote Sens. 2014, 35, 738–753.

- Pal, M.; Mather, M. Some issues in the classification of DAIS hyperspectral data. Int. J. Remote Sens. 2006, 27, 2895–2916.

- Chen, G.; Weng, Q.; Hay, J.; He, Y. Geographic object-based image analysis (GEOBIA): Emerging trends and future opportunities. GISci. Remote Sens. 2018, 55, 159–182.

- Fallatah, A.; Jones, S.; Mitchell, D.; Kohli, D. Mapping informal settlement indicators using object-oriented analysis in the Middle East. Int. J. Digit. Earth 2018, 12, 802–824.

- Feizizadeh, B.; Garajeh, M.K.; Blaschke, T.; Lakes, T. An object based image analysis applied for volcanic and glacial landforms mapping in Sahand Mountain, Iran. Catena 2020, 198, 105073.

- Ghasemi, M.; Karimzadeh, S.; Feizizadeh, B. Urban classification using preserved information of high dimensional textural features of Sentinel-1 images in Tabriz, Iran. Earth Sci. Inform. 2021, 1–18.

- Garajeh, M.K.; Malakyar, F.; Weng, Q.; Feizizadeh, B.; Blaschke, T.; Lakes, T. An automated deep learning convolutional neural network algorithm applied for soil salinity distribution mapping in Lake Urmia, Iran. Sci. Total Environ. 2021, 778, 146253.

- Taherzadeh, E.; Shafri, Z. Development of a generic model for the detection of roof materials based on an object-based approach using WorldView-2 satellite imagery. Adv. Remote Sens. 2013, 2, 312–321.

- Fernandes, R.; Aguiar, F.C.; Silva, J.M.; Ferreira, T.; Pereira, M. Optimal attributes for the object-based detection of giant reed in riparian habitats: A comparative study between Airborne High Spatial Resolution and WorldView-2 imagery. Int. J. Appl. Earth Obs. Geoinf. 2014, 32, 79–91.

- United Nations. United Nations Launches Appeal for Iran Earthquake. 2004. Available online: http://www.un.org/News/Press/docs/2004/iha852.doc.htm (accessed on 1 March 2021).

- Solaymani Azad, S.; Saboor, N.; Moradi, M.; Ajhdari, A.; Youssefi, T.; Mashal, M.; Roustaei, M. Preliminary Report on Geological Features of the Ezgaleh-Kermanshah Earthquake (M~7.3), November 12, 2017, West Iran; SSD of GSI Preliminary Report; Geological Survey & Mineral Explorations of Iran: Tehran, Iran, 2017; Volume 17, p. 1.

- Karimzadeh, S.; Matsuoka, M.; Miyajima, M.; Adriano, B.; Fallahi, A.; Karashi, J. Sequential SAR Coherence Method for the Monitoring of Buildings in Sarpole-Zahab, Iran. Remote Sens. 2018, 10, 1255.

- Pourghasemi, H.R.; Gayen, A.; Edalat, M.; Zarafshar, M.; Tiefenbacher, J.P. Is multi-hazard mapping effective in assessing natural hazards and integrated watershed management? Geosci. Front. 2020, 11, 1203–1217.

- Pourghasemi, H.R.; Gayen, A.; Panahi, M.; Rezaie, F.; Blaschke, T. Multi-hazard probability assessment and mapping in Iran. Sci. Total Environ. 2019, 692, 556–571.

- Central Office of Natural Resources and Watershed Management in the Jahrom Township. Hydrology and Flood; Technical Report; Central Office of Natural Resources and Watershed Management in the Jahrom Township: Jahrom, Iran, 2015; Volume 1, pp. 121–122.

- Khosravi, K.; Pourghasemi, H.R.; Chapi, K.; Bahri, M. Flash flood susceptibility analysis and its mapping using different bivariate models in Iran: A comparison between Shannon’s entropy, statistical index, and weighting factor models. Environ. Monit. Assess. 2016, 188, 1–21.

- Hoshyar, S.; Zargar, A.; Fallahi, A. Temporary housing pattern based on grounded theory method (Case study: Sarpol-Zahab city after the earthquake 2017). Environ. Hazard Manag. 2020, 6, 287–300.

- Washaya, P.; Balz, T. SAR coherence change detection of urban areas affected by disasters using sentinel-1 imagery. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2018, XLII-3, 1857–1861.

- Hajeb, M.; Karimzadeh, S.; Fallahi, A. Seismic damage assessment in Sarpole-Zahab town (Iran) using synthetic aperture radar (SAR) images and texture analysis. Nat. Hazards 2020, 103, 1–20.

- Mouthereau, F.; Lacombe, O.; Vergés, J. Building the Zagros collisional orogen: Timing, strain distribution and the dynamics of Arabia/Eurasia plate convergence. Tectonophysics 2012, 532, 27–60.

- Hasanlou, M.; Shah-Hosseini, R.; Seydi, S.; Karimzadeh, S.; Matsuoka, M. Earthquake Damage Region Detection by Multitemporal Coherence Map Analysis of Radar and Multispectral Imagery. Remote Sens. 2021, 13, 1195.

- Feng, W.; Samsonov, S.; Almeida, R.; Yassaghi, A.; Li, J.; Qiu, Q.; Zheng, W. Geodetic constraints of the 2017 Mw 7.3 Sarpol Zahab, Iran earthquake, and its implications on the structure and mechanics of the northwest Zagros thrust-fold belt. Geophys. Res. Lett. 2018, 45, 6853–6861.

- Karimzadeh, S.; Matsuoka, M.; Kuang, J.; Ge, L. Spatial Prediction of Aftershocks Triggered by a Major Earthquake: A Binary Machine Learning Perspective. ISPRS Int. J. Geo-Inf. 2019, 8, 462.

- Tian, J.; Wang, L.; Li, X.; Gong, H.; Shi, C.; Zhong, R.; Liu, X. Comparison of UAV and WorldView-2 imagery for mapping leaf area index of mangrove forest. Int. J. Appl. Earth Obs. Geoinf. 2017, 61, 22–31.

- Geo-Informatics and Space Technology Development Agency. WorldView-2. 2020. Available online: https://www.gistda.or.th/main/system/files_force/satellite/104/file/2534-m-worldview2-datasheet.pdf?download=1.pdf (accessed on 9 September 2019).

- WorldView-2. WorldView-2 Satellite Sensor. 2019. Available online: https://www.satimagingcorp.com/satellite-sensors/worldview-2/ (accessed on 4 April 2019).

- DigitalGlobe. The Benefits of the 8 Spectral Bands of WorldView-2. 2009. Available online: http://worldview2.digitalglobe.com/docs/WorldView-2_8-Band_Applications_Whitepaper.pdf (accessed on 20 July 2011).

- Zhou, H.; Liu, Q.; Xu, Q.; Wang, Y. Pan-Sharpening with a CNN-Based Two Stage Ratio Enhancement Method. In Proceedings of the IGARSS IEEE International Geoscience and Remote Sensing Symposium, Waikoloa, HI, USA, 26 September–2 October 2020; pp. 216–219.

- Wang, P.; Alganci, U.; Sertel, E. Comparative Analysis on Deep Learning based Pan-sharpening of Very High-Resolution Satellite Images. Int. J. Environ. Geoinform. 2021, 8, 150–165.

- Pendyala, V.G.K.; Kalluri, H.K.; Rao, V.C. Assessment of Suitable Image Fusion Method for CARTOSAT-2E Satellite Urban Imagery for Automatic Feature Extraction. Adv. Model. Anal. B 2020, 63, 26–32.

- Janalipour, M.; Mohammadzadeh, A. Building Damage Detection Using Object-Based Image Analysis and ANFIS From High-Resolution Image (Case Study: BAM Earthquake, Iran). IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 9, 1937–1945.

- Fallatah, A.; Jones, S.; Mitchell, D. Object-based random forest classification for informal settlements identification in the Middle East: Jeddah a case study. Int. J. Remote Sens. 2020, 41, 4421–4445.

- Kuffer, M.; Pfeffer, K.; Sliuzas, R. Slums from Space—15 Years of Slum Mapping Using Remote Sensing. Remote Sens. 2016, 8, 455.

- Bendini, N.; Fonseca, M.; Soares, R.; Rufin, P.; Schwieder, M.; Rodrigues, A.; Hostert, P. Applying A Phenological Object-Based Image Analysis (Phenobia) for Agricultural Land Classification: A Study Case in the Brazilian Cerrado. In Proceedings of the IGARSS, IEEE International Geoscience and Remote Sensing Symposium, Brussels, Belgium, 26 September–2 October 2020; pp. 1078–1081.

- Blaschke, T. Object based image analysis for remote sensing. ISPRS J. Photogramm. Remote Sens. 2010, 65, 2–16.

- Lang, S. Object-based image analysis for remote sensing applications: Modeling reality—Dealing with complexity. In Object-Based Image Analysis; Springer: Berlin/Heidelberg, Germany, 2008; pp. 3–27.

- Belgiu, M.; Csillik, O. Sentinel-2 cropland mapping using pixel-based and object-based time-weighted dynamic time warping analysis. Remote Sens. Environ. 2018, 204, 509–523.

- Najafi, P.; Navid, H.; Feizizadeh, B.; Eskandari, I. Object-based satellite image analysis applied for crop residue estimating using Landsat OLI imagery. Int. J. Remote Sens. 2018, 39, 6117–6136.

- Feizizadeh, B. A Novel Approach of Fuzzy Dempster–Shafer Theory for Spatial Uncertainty Analysis and Accuracy Assessment of Object-Based Image Classification. IEEE Geosci. Remote Sens. Lett. 2017, 15, 18–22.

- Qiu, B.; Zhang, K.; Tang, Z.; Chen, C.; Wang, Z. Developing soil indices based on brightness, darkness, and greenness to improve land surface mapping accuracy. GISci. Remote Sens. 2017, 54, 759–777.

- Blaschke, T.; Feizizadeh, B.; Hölbling, D. Object-Based Image Analysis and Digital Terrain Analysis for Locating Landslides in the Urmia Lake Basin, Iran. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 4806–4817.

- Liu, D.; Xia, F. Assessing object-based classification: Advantages and limitations. Remote Sens. Lett. 2010, 1, 187–194.

- Ghamisi, P.; Benediktsson, J.A.; Cavallaro, G.; Plaza, A. Automatic Framework for Spectral–Spatial Classification Based on Supervised Feature Extraction and Morphological Attribute Profiles. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 2147–2160.

- Zehtabian, A.; Ghassemian, H. Automatic Object-Based Image Classification Using Complex Diffusions and a New Distance Metric. IEEE Trans. Geosci. Remote Sens. 2016, 54, 4106–4114.

- Xu, H. Rule-based impervious surface mapping using high spatial resolution imagery. Int. J. Remote Sens. 2012, 34, 27–44.

- Hamedianfar, A.; Shafri, M. Detailed intra-urban mapping through transferable OBIA rule sets using WorldView-2 very-high-resolution satellite images. Int. J. Remote Sens. 2015, 36, 3380–3396.

- Qiu, B.; Liu, Z.; Tang, Z.; Chen, C. Developing indices of temporal dispersion and continuity to map natural vegetation. Ecol. Indic. 2016, 64, 335–342.

- Stumpf, A.; Kerle, N. Object-oriented mapping of landslides using Random Forests. Remote Sens. Environ. 2011, 115, 2564–2577.

- Blaschke, T.; Hay, G.J.; Kelly, M.; Lang, S.; Hofmann, P.; Addink, E.; Feitosa, R.Q.; van der Meer, F.; van der Werff, H.; van Coillie, F.; et al. Geographic Object-Based Image Analysis—Towards a new paradigm. ISPRS J. Photogramm. Remote Sens. 2013, 87, 180–191.

- Feizizadeh, B.; Blaschke, T.; Tiede, D.; Moghaddam, M.H.R. Evaluating fuzzy operators of an object-based image analysis for detecting landslides and their changes. Geomorphology 2017, 293, 240–254.

- Wright, J.; Lillesand, T.M.; Kiefer, R.W. Remote Sensing and Image Interpretation. Geogr. J. 1980, 146, 448.

- Karimzadeh, S.; Miyajima, M.; Hassanzadeh, R.; Amiraslanzadeh, R.; Kamel, B. A GIS-based seismic hazard, building vulnerability and human loss assessment for the earthquake scenario in Tabriz. Soil Dyn. Earthq. Eng. 2014, 66, 263–280.

- Karimzadeh, S.; Matsuoka, M. Building Damage Assessment Using Multisensor Dual-Polarized Synthetic Aperture Radar Data for the 2016 M 6.2 Amatrice Earthquake, Italy. Remote Sens. 2017, 9, 330.

- Karimzadeh, S.; Matsuoka, M. A Weighted Overlay Method for Liquefaction-Related Urban Damage Detection: A Case Study of the 6 September 2018 Hokkaido Eastern Iburi Earthquake, Japan. Geosciences 2018, 8, 487.

- Miura, H.; Midorikawa, S.; Matsuoka, M. Building Damage Assessment Using High-Resolution Satellite SAR Images of the 2010 Haiti Earthquake. Earthq. Spectra 2016, 32, 591–610.

- Dong, L.; Shan, J. A comprehensive review of earthquake-induced building damage detection with remote sensing techniques. ISPRS J. Photogramm. Remote Sens. 2013, 84, 85–99.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).