Crop Monitoring Using Satellite/UAV Data Fusion and Machine Learning

Abstract

1. Introduction

2. Materials

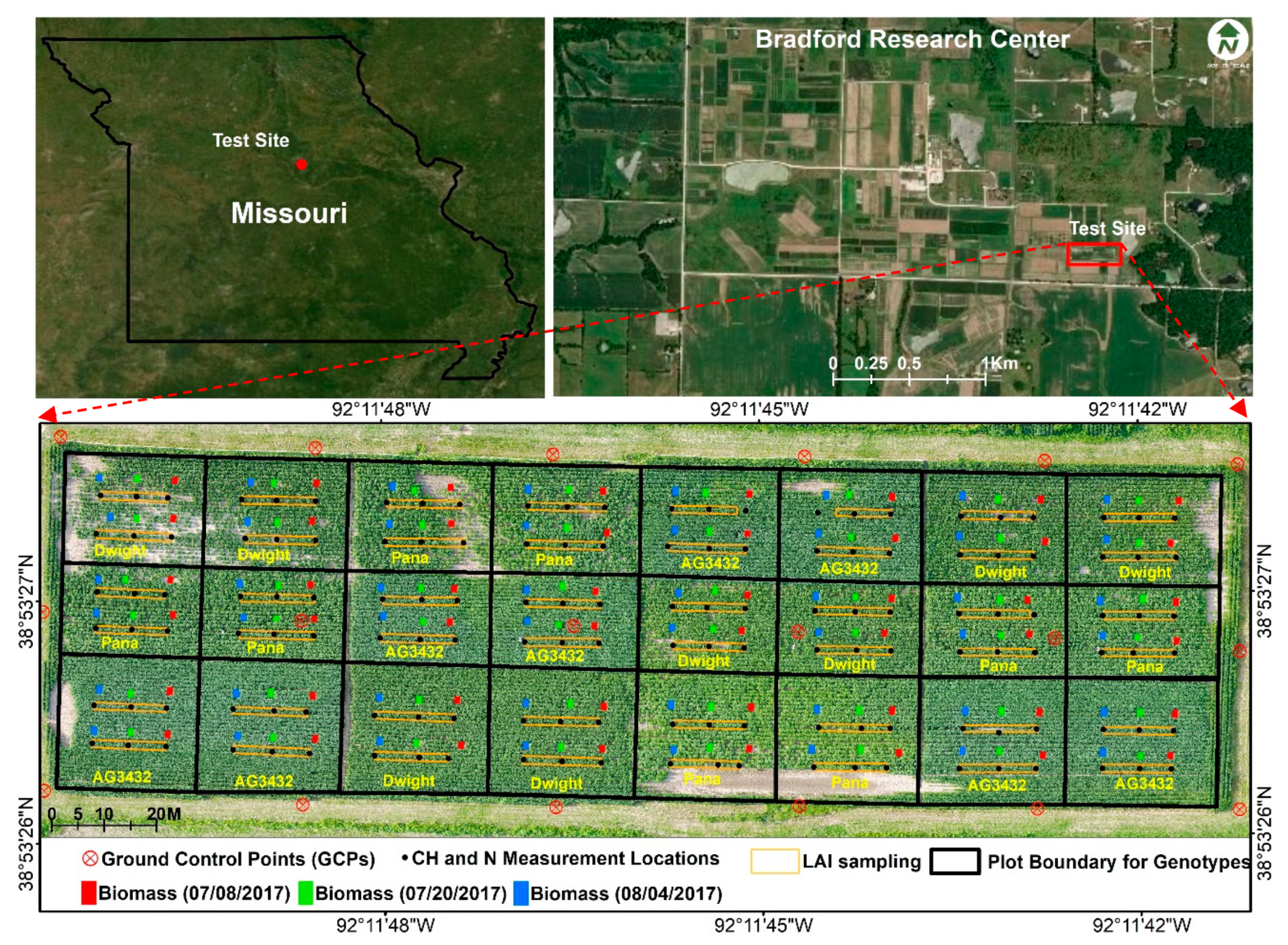

2.1. Test Site and Field Layout

2.2. Data Acquisition

2.2.1. Field Data Collection

2.2.2. Remote Sensing Data

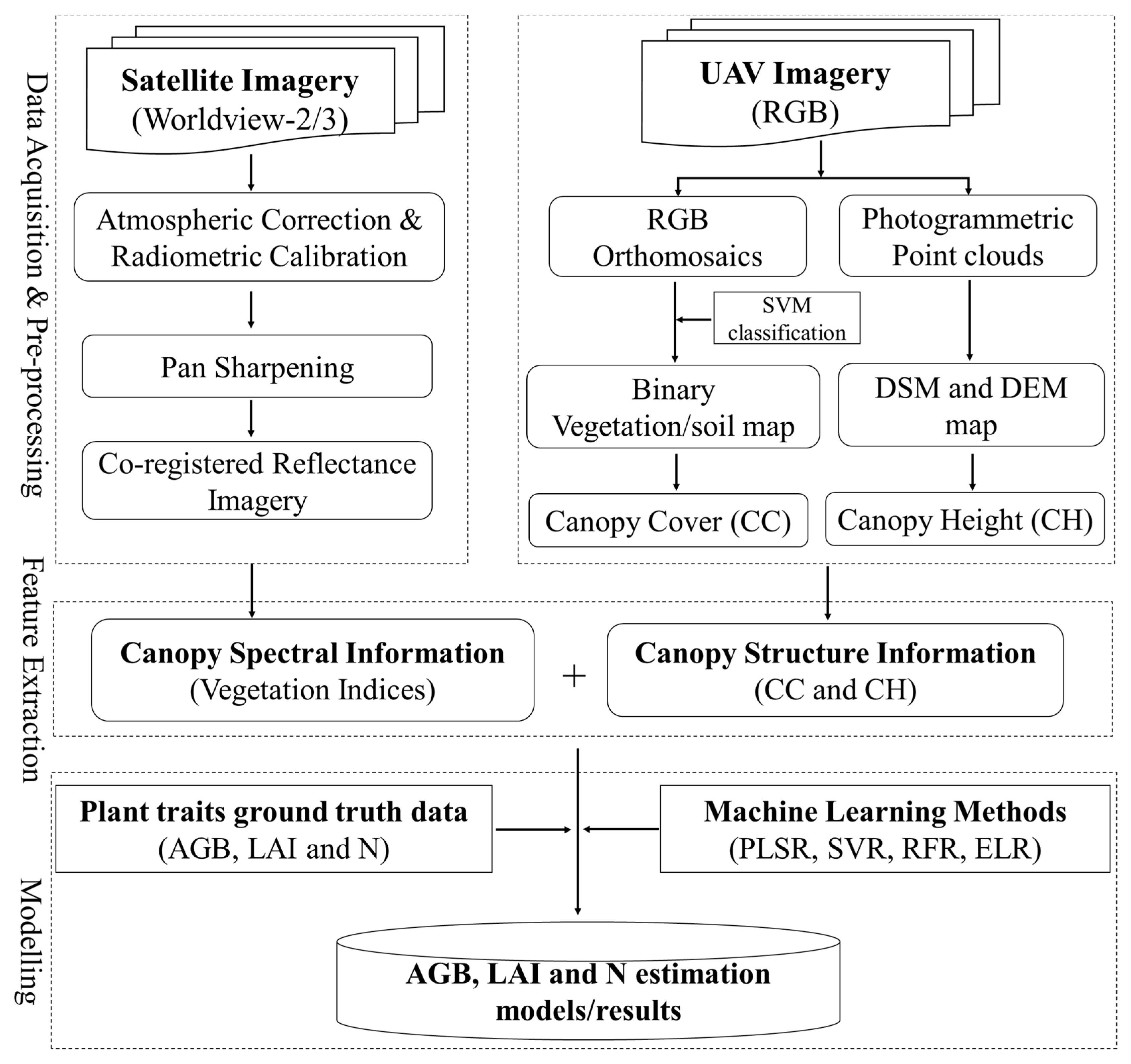

2.3. Image Preprocessing

2.3.1. WorldView Data Preprocessing

2.3.2. UAV Imagery Preprocessing

3. Methods

3.1. Feature Extraction

3.1.1. Satellite Imagery-Based Spectral Feature Extraction

3.1.2. UAV Imagery-Based Canopy Height Extraction

3.1.3. UAV Imagery-Based Canopy Cover Extraction

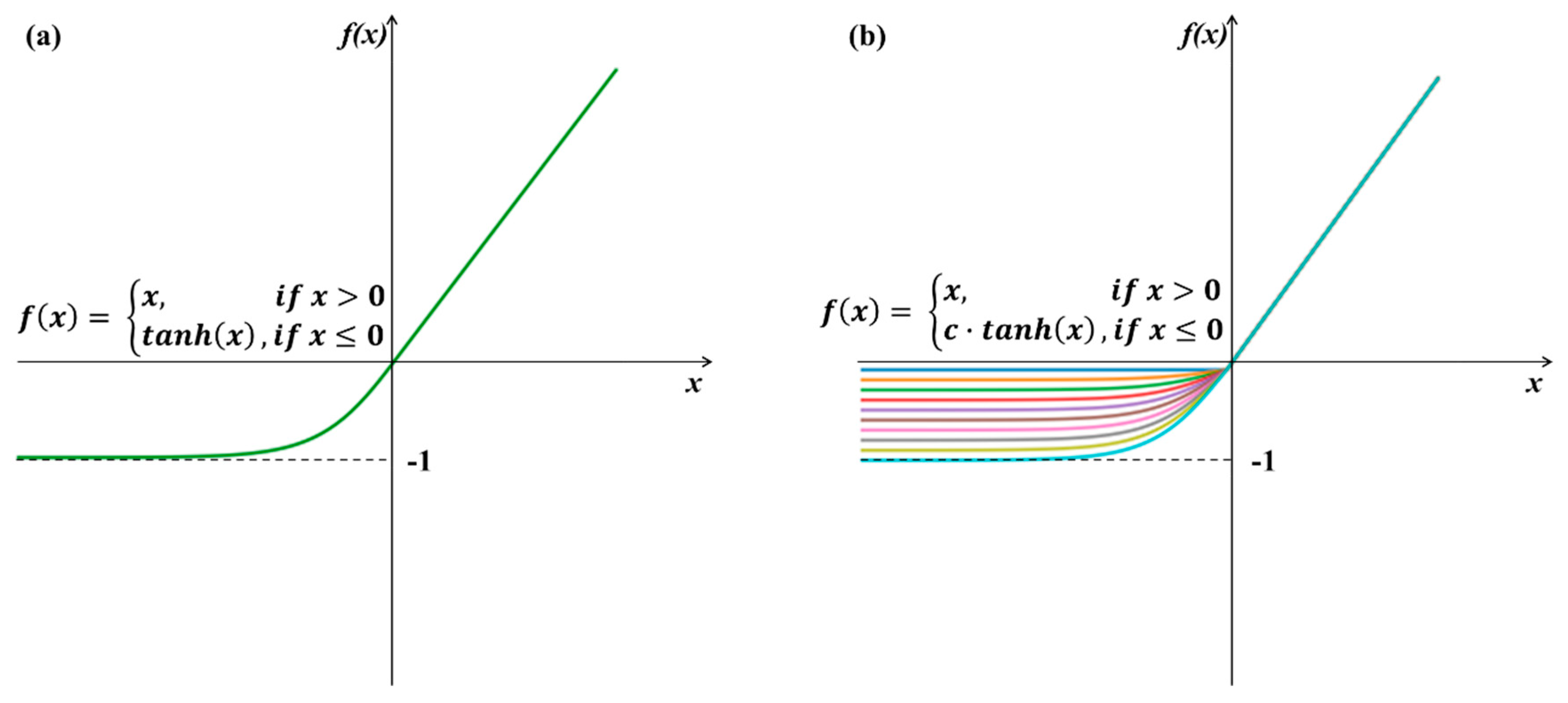

3.2. Modeling Methods

4. Results

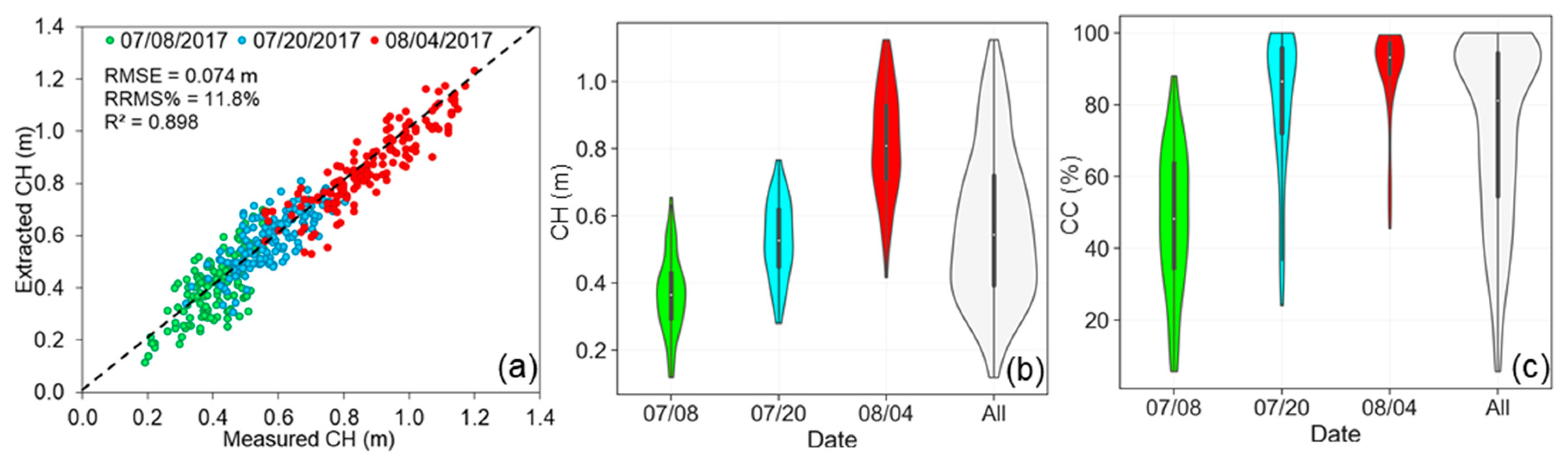

4.1. Distribution of Canopy Height and Canopy Cover

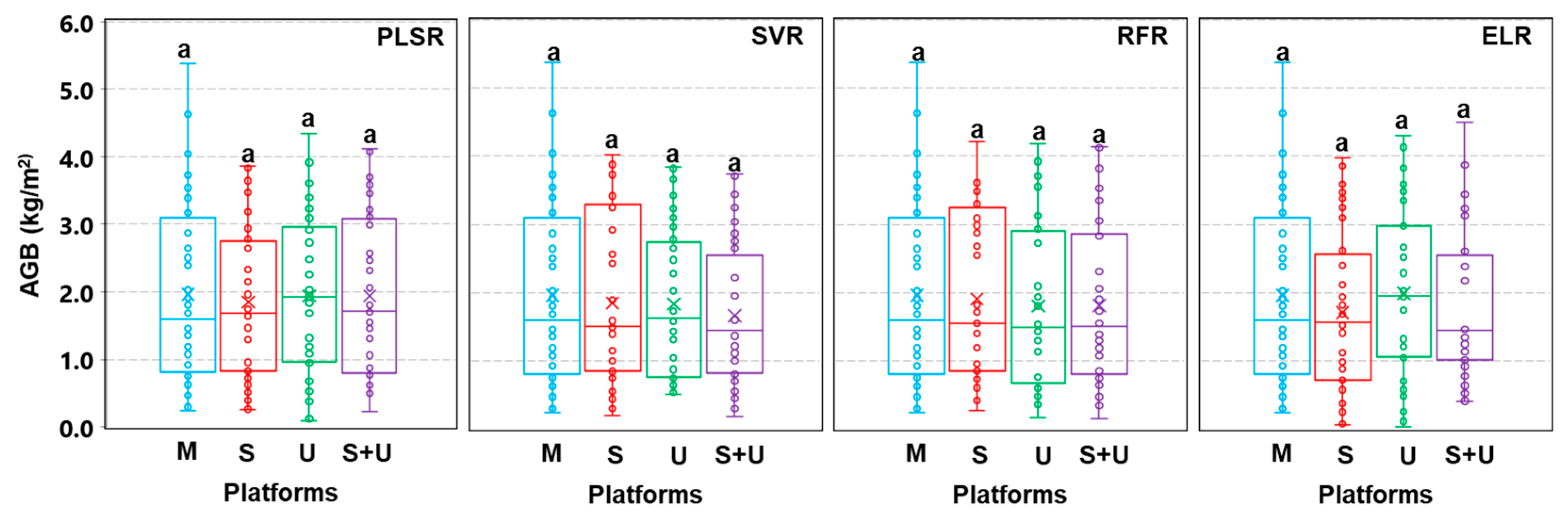

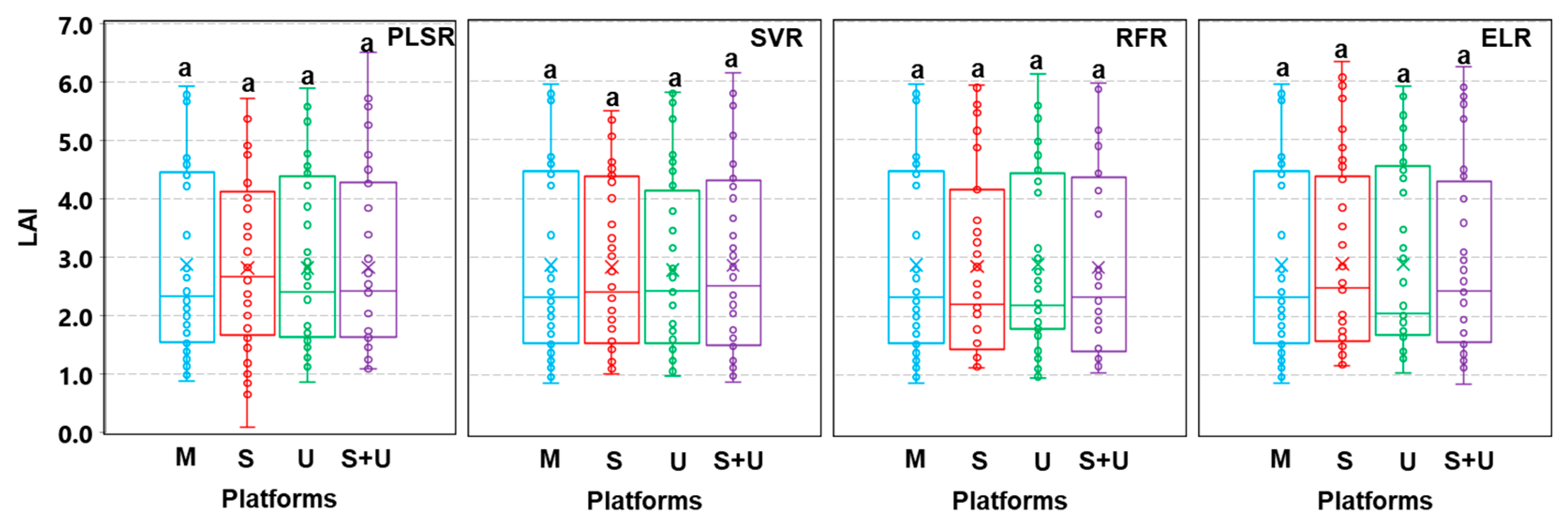

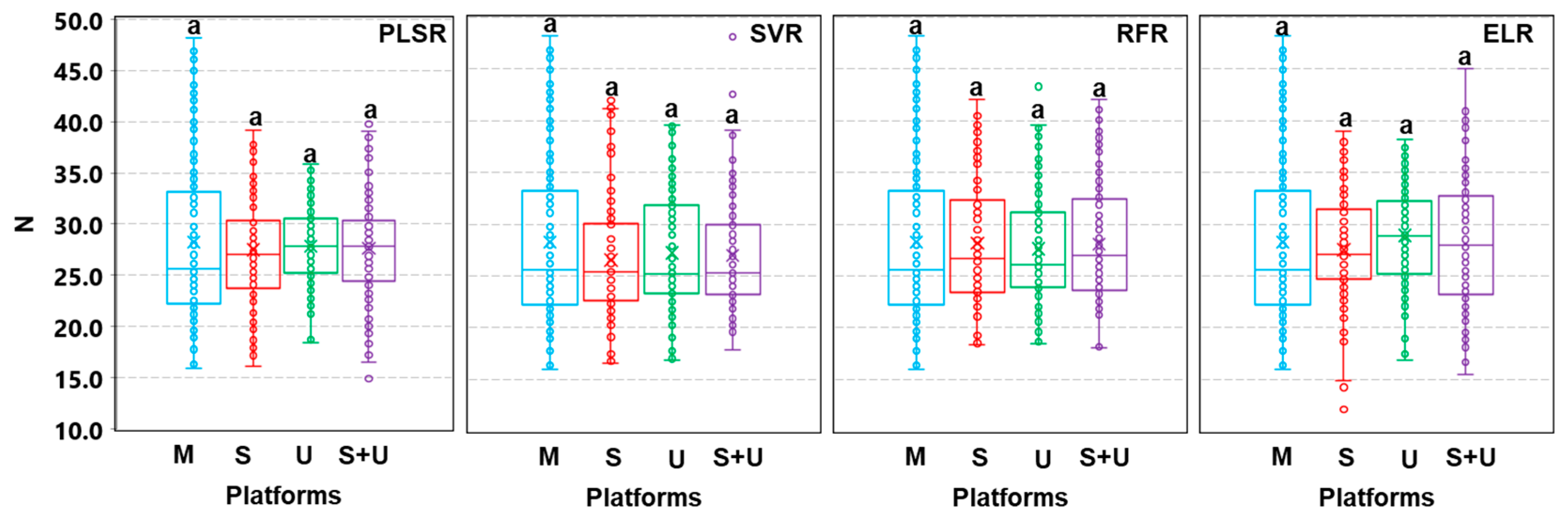

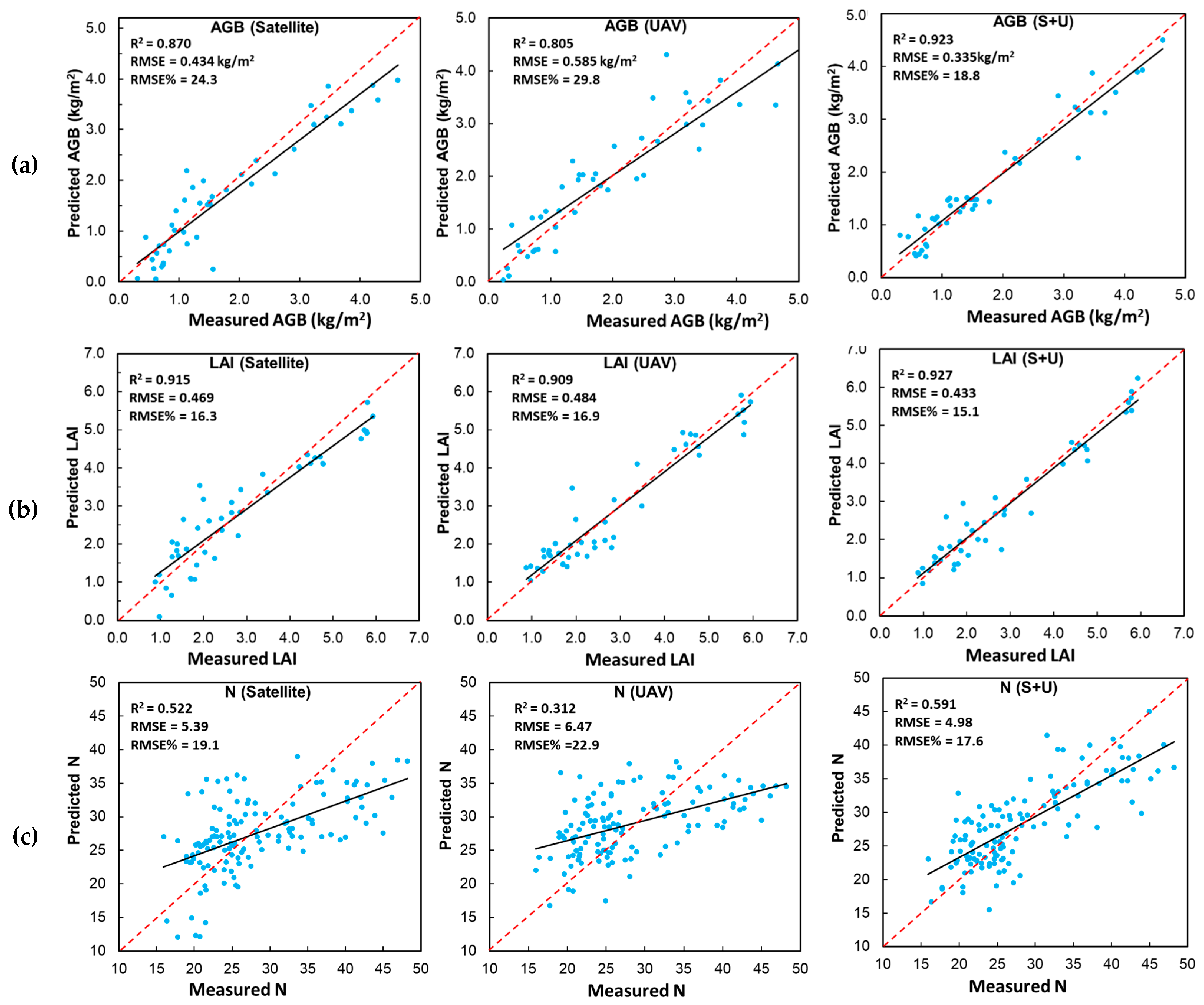

4.2. Estimation Results of AGB, LAI, and N

5. Discussion

5.1. Satellite/UAV Data Fusion and Its Impact on Model Performance

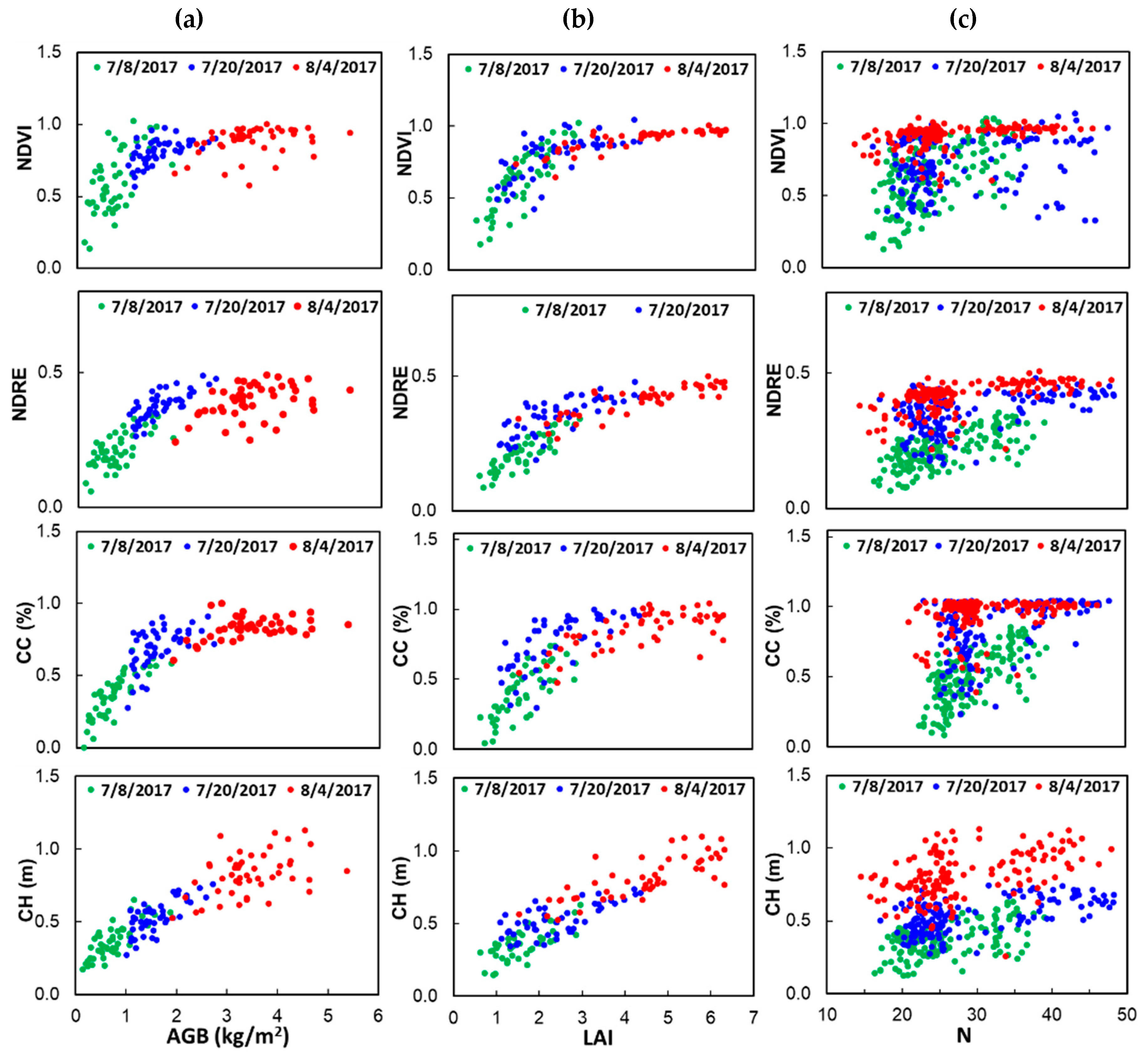

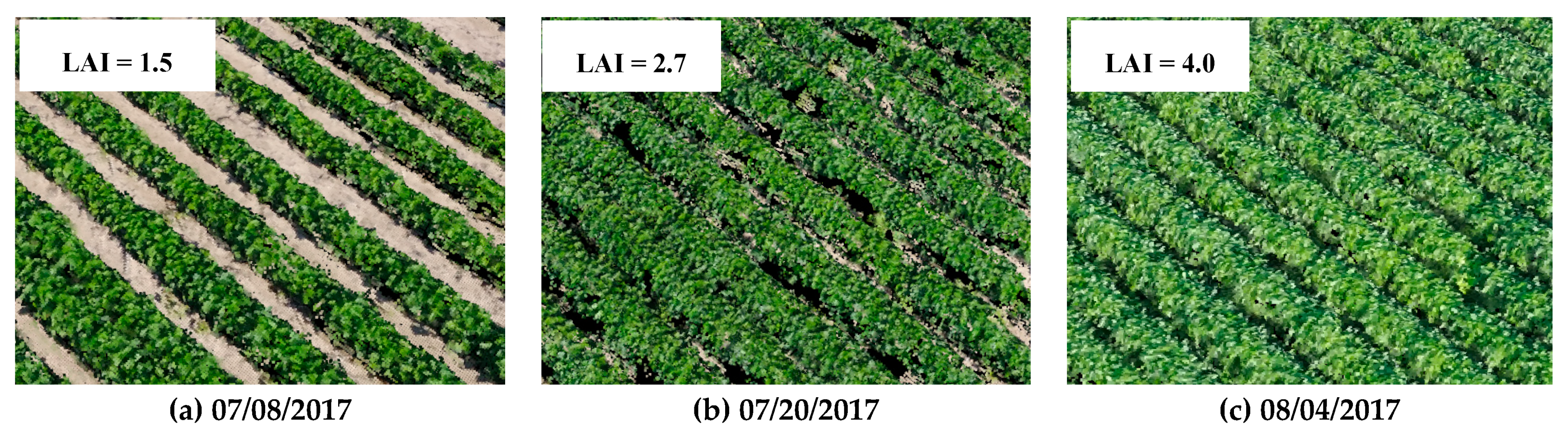

5.2. Canopy Structure Information and Spectral Saturation Issue

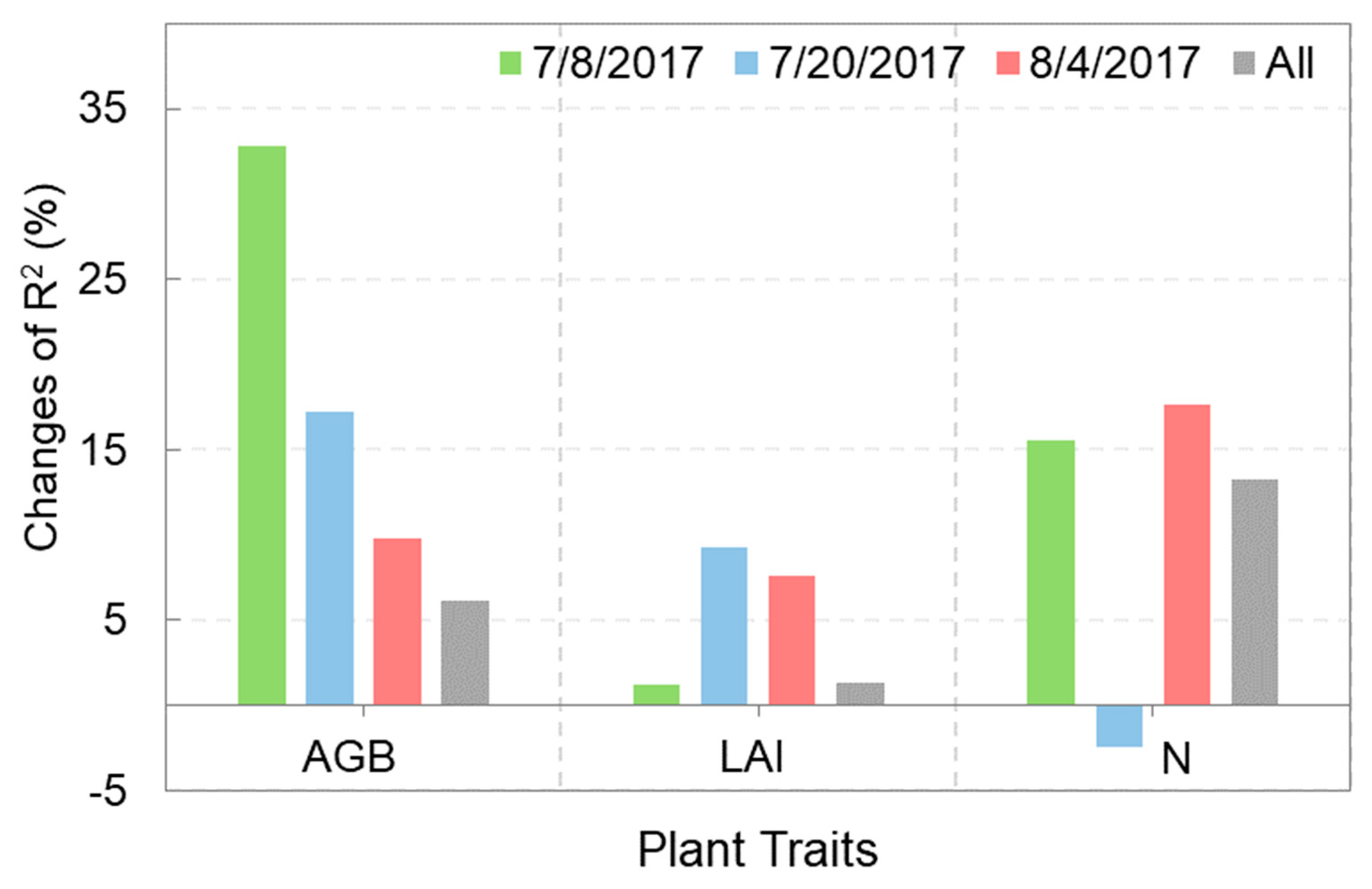

5.3. Contribution of Data Fusion at Different Crop Developmental Stages

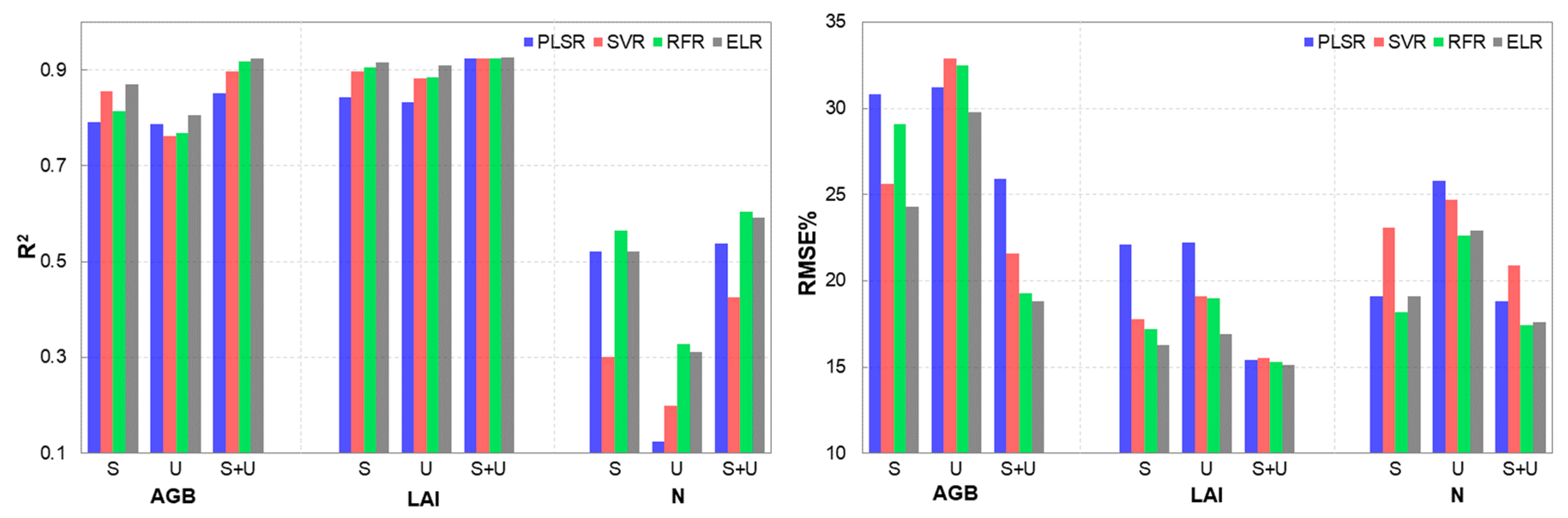

5.4. Comparison of Different Modeling Methods

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Schut, A.G.; Traore, P.C.S.; Blaes, X.; Rolf, A. Assessing yield and fertilizer response in heterogeneous smallholder fields with UAVs and satellites. Field Crops Res. 2018, 221, 98–107.

2. Araus, J.L.; Cairns, J.E. Field high-throughput phenotyping: The new crop breeding frontier. Trends Plant Sci. 2014, 19, 52–61.

3. Yu, N.; Li, L.; Schmitz, N.; Tian, L.F.; Greenberg, J.A.; Diers, B.W. Development of methods to improve soybean yield estimation and predict plant maturity with an unmanned aerial vehicle based platform. Remote Sens. Environ. 2016, 187, 91–101.

4. Maimaitijiang, M.; Ghulam, A.; Sidike, P.; Hartling, S.; Maimaitiyiming, M.; Peterson, K.; Shavers, E.; Fishman, J.; Peterson, J.; Kadam, S. Unmanned Aerial System (UAS)-based phenotyping of soybean using multi-sensor data fusion and extreme learning machine. ISPRS J. Photogramm. Remote Sens. 2017, 134, 43–58.

5. Marshall, M.; Thenkabail, P. Advantage of hyperspectral EO-1 Hyperion over multispectral IKONOS, GeoEye-1, WorldView-2, Landsat ETM+, and MODIS vegetation indices in crop biomass estimation. ISPRS J. Photogramm. Remote Sens. 2015, 108, 205–218.

6. Liu, J.G.; Pattey, E.; Jego, G. Assessment of vegetation indices for regional crop green LAI estimation from Landsat images over multiple growing seasons. Remote Sens. Environ. 2012, 123, 347–358.

7. Sagan, V.; Maimaitijiang, M.; Sidike, P.; Eblimit, K.; Peterson, K.T.; Hartling, S.; Esposito, F.; Khanal, K.; Newcomb, M.; Pauli, D. UAV-based high resolution thermal imaging for vegetation monitoring, and plant phenotyping using ICI 8640 P, FLIR Vue Pro R 640, and thermoMap cameras. Remote Sens. 2019, 11, 330.

8. Moeckel, T.; Safari, H.; Reddersen, B.; Fricke, T.; Wachendorf, M. Fusion of ultrasonic and spectral sensor data for improving the estimation of biomass in grasslands with heterogeneous sward structure. Remote Sens. 2017, 9, 98.

9. Jackson, R.D.; Huete, A.R. Interpreting vegetation indices. Prev. Vet. Med. 1991, 11, 185–200.

10. Wang, C.; Nie, S.; Xi, X.H.; Luo, S.Z.; Sun, X.F. Estimating the Biomass of Maize with Hyperspectral and LiDAR Data. Remote Sens. 2017, 9, 11.

11. Greaves, H.E.; Vierling, L.A.; Eitel, J.U.H.; Boelman, N.T.; Magney, T.S.; Prager, C.M.; Griffin, K.L. Estimating aboveground biomass and leaf area of low-stature Arctic shrubs with terrestrial LiDAR. Remote Sens. Environ. 2015, 164, 26–35.

12. Rischbeck, P.; Elsayed, S.; Mistele, B.; Barmeier, G.; Heil, K.; Schmidhalter, U. Data fusion of spectral, thermal and canopy height parameters for improved yield prediction of drought stressed spring barley. Eur. J. Agron. 2016, 78, 44–59.

13. Puliti, S.; Saarela, S.; Gobakken, T.; Ståhl, G.; Næsset, E. Combining UAV and Sentinel-2 auxiliary data for forest growing stock volume estimation through hierarchical model-based inference. Remote Sens. Environ. 2018, 204, 485–497.

14. Mutanga, O.; Skidmore, A.K. Narrow band vegetation indices overcome the saturation problem in biomass estimation. Int. J. Remote Sens. 2004, 25, 3999–4014.

15. Delegido, J.; Verrelst, J.; Meza, C.; Rivera, J.; Alonso, L.; Moreno, J. A red-edge spectral index for remote sensing estimation of green LAI over agroecosystems. Eur. J. Agron. 2013, 46, 42–52.

16. Haboudane, D.; Miller, J.R.; Pattey, E.; Zarco-Tejada, P.J.; Strachan, I.B. Hyperspectral vegetation indices and novel algorithms for predicting green LAI of crop canopies: Modeling and validation in the context of precision agriculture. Remote Sens. Environ. 2004, 90, 337–352.

17. Delegido, J.; Verrelst, J.; Alonso, L.; Moreno, J. Evaluation of Sentinel-2 red-edge bands for empirical estimation of green LAI and chlorophyll content. Sensors 2011, 11, 7063–7081.

18. Clevers, J.G.; Gitelson, A.A. Remote estimation of crop and grass chlorophyll and nitrogen content using red-edge bands on Sentinel-2 and -3. Int. J. Appl. Earth Obs. Geoinf. 2013, 23, 344–351.

19. Wang, W.; Yao, X.; Yao, X.; Tian, Y.; Liu, X.; Ni, J.; Cao, W.; Zhu, Y. Estimating leaf nitrogen concentration with three-band vegetation indices in rice and wheat. Field Crops Res. 2012, 129, 90–98.

20. Vaglio Laurin, G.; Pirotti, F.; Callegari, M.; Chen, Q.; Cuozzo, G.; Lingua, E.; Notarnicola, C.; Papale, D. Potential of ALOS2 and NDVI to estimate forest above-ground biomass, and comparison with lidar-derived estimates. Remote Sens. 2017, 9, 18.

21. Schmidt, M.; Carter, J.; Stone, G.; O’Reagain, P. Integration of optical and X-band radar data for pasture biomass estimation in an open savannah woodland. Remote Sens. 2016, 8, 989.

22. Dalponte, M.; Ørka, H.O.; Ene, L.T.; Gobakken, T.; Næsset, E. Tree crown delineation and tree species classification in boreal forests using hyperspectral and ALS data. Remote Sens. Environ. 2014, 140, 306–317.

23. Hartling, S.; Sagan, V.; Sidike, P.; Maimaitijiang, M.; Carron, J. Urban Tree Species Classification Using a WorldView-2/3 and LiDAR Data Fusion Approach and Deep Learning. Sensors 2019, 19, 1284.

24. Blázquez-Casado, Á.; Calama, R.; Valbuena, M.; Vergarechea, M.; Rodríguez, F. Combining low-density LiDAR and satellite images to discriminate species in mixed Mediterranean forest. Ann. For. Sci. 2019, 76, 57.

25. Badreldin, N.; Sanchez-Azofeifa, A. Estimating forest biomass dynamics by integrating multi-temporal Landsat satellite images with ground and airborne LiDAR data in the Coal Valley Mine, Alberta, Canada. Remote Sens. 2015, 7, 2832–2849.

26. Cao, L.; Pan, J.; Li, R.; Li, J.; Li, Z. Integrating airborne LiDAR and optical data to estimate forest aboveground biomass in arid and semi-arid regions of China. Remote Sens. 2018, 10, 532.

27. Zhang, L.; Shao, Z.; Liu, J.; Cheng, Q. Deep Learning Based Retrieval of Forest Aboveground Biomass from Combined LiDAR and Landsat 8 Data. Remote Sens. 2019, 11, 1459.

28. Pope, G.; Treitz, P. Leaf area index (LAI) estimation in boreal mixedwood forest of Ontario, Canada using light detection and ranging (LiDAR) and WorldView-2 imagery. Remote Sens. 2013, 5, 5040–5063.

29. Rutledge, A.M.; Popescu, S.C. Using LiDAR in determining forest canopy parameters. In Proceedings of the ASPRS 2006 Annual Conference, Reno, NV, USA, 1–5 May 2006.

30. Jensen, J.L.; Humes, K.S.; Vierling, L.A.; Hudak, A.T. Discrete return lidar-based prediction of leaf area index in two conifer forests. Remote Sens. Environ. 2008, 112, 3947–3957.

31. Thomas, V.; Treitz, P.; McCaughey, J.; Noland, T.; Rich, L. Canopy chlorophyll concentration estimation using hyperspectral and lidar data for a boreal mixedwood forest in northern Ontario, Canada. Int. J. Remote Sens. 2008, 29, 1029–1052.

32. Tonolli, S.; Dalponte, M.; Neteler, M.; Rodeghiero, M.; Vescovo, L.; Gianelle, D. Fusion of airborne LiDAR and satellite multispectral data for the estimation of timber volume in the Southern Alps. Remote Sens. Environ. 2011, 115, 2486–2498.

33. Dash, J.P.; Watt, M.S.; Bhandari, S.; Watt, P. Characterising forest structure using combinations of airborne laser scanning data, RapidEye satellite imagery and environmental variables. Forestry 2015, 89, 159–169.

34. Hütt, C.; Schiedung, H.; Tilly, N.; Bareth, G. Fusion of high resolution remote sensing images and terrestrial laser scanning for improved biomass estimation of maize. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2014, XL-7, 101–108.

35. Li, W.; Niu, Z.; Wang, C.; Huang, W.; Chen, H.; Gao, S.; Li, D.; Muhammad, S. Combined use of airborne LiDAR and satellite GF-1 data to estimate leaf area index, height, and aboveground biomass of maize during peak growing season. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 4489–4501.

36. Höfle, B. Radiometric correction of terrestrial LiDAR point cloud data for individual maize plant detection. IEEE Geosci. Remote Sens. Lett. 2013, 11, 94–98.

37. Dandois, J.P.; Ellis, E.C. High spatial resolution three-dimensional mapping of vegetation spectral dynamics using computer vision. Remote Sens. Environ. 2013, 136, 259–276.

38. Walter, J.; Edwards, J.; McDonald, G.; Kuchel, H. Photogrammetry for the estimation of wheat biomass and harvest index. Field Crops Res. 2018, 216, 165–174.

39. Sankaran, S.; Khot, L.R.; Espinoza, C.Z.; Jarolmasjed, S.; Sathuvalli, V.R.; Vandemark, G.J.; Miklas, P.N.; Carter, A.H.; Pumphrey, M.O.; Knowles, N.R. Low-altitude, high-resolution aerial imaging systems for row and field crop phenotyping: A review. Eur. J. Agron. 2015, 70, 112–123.

40. Maimaitijiang, M.; Sagan, V.; Sidike, P.; Hartling, S.; Esposito, F.; Fritschi, F.B. Soybean yield prediction from UAV using multimodal data fusion and deep learning. Remote Sens. Environ. 2020, 237, 111599.

41. Sidike, P.; Sagan, V.; Qumsiyeh, M.; Maimaitijiang, M.; Essa, A.; Asari, V. Adaptive trigonometric transformation function with image contrast and color enhancement: Application to unmanned aerial system imagery. IEEE Geosci. Remote Sens. Lett. 2018, 15, 404–408.

42. Maimaitijiang, M.; Sagan, V.; Sidike, P.; Maimaitiyiming, M.; Hartling, S.; Peterson, K.T.; Maw, M.J.; Shakoor, N.; Mockler, T.; Fritschi, F.B. Vegetation index weighted canopy volume model (CVMVI) for soybean biomass estimation from unmanned aerial system-based RGB imagery. ISPRS J. Photogramm. Remote Sens. 2019, 151, 27–41.

43. Kachamba, D.J.; Orka, H.O.; Naesset, E.; Eid, T.; Gobakken, T. Influence of Plot Size on Efficiency of Biomass Estimates in Inventories of Dry Tropical Forests Assisted by Photogrammetric Data from an Unmanned Aircraft System. Remote Sens. 2017, 9, 610.

44. White, J.C.; Wulder, M.A.; Vastaranta, M.; Coops, N.C.; Pitt, D.; Woods, M. The Utility of Image-Based Point Clouds for Forest Inventory: A Comparison with Airborne Laser Scanning. Forests 2013, 4, 518–536.

45. Li, W.; Niu, Z.; Chen, H.Y.; Li, D.; Wu, M.Q.; Zhao, W. Remote estimation of canopy height and aboveground biomass of maize using high-resolution stereo images from a low-cost unmanned aerial vehicle system. Ecol. Indic. 2016, 67, 637–648.

46. Dash, J.; Pearse, G.; Watt, M. UAV multispectral imagery can complement satellite data for monitoring forest health. Remote Sens. 2018, 10, 1216.

47. Sagan, V.; Maimaitijiang, M.; Sidike, P.; Maimaitiyiming, M.; Erkbol, H.; Hartling, S.; Peterson, K.; Peterson, J.; Burken, J.; Fritschi, F. UAV/Satellite Multiscale Data Fusion for Crop Monitoring and Early Stress Detection. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2019, XLII-2/W13, 715–722.

48. Meacham-Hensold, K.; Montes, C.M.; Wu, J.; Guan, K.; Fu, P.; Ainsworth, E.A.; Pederson, T.; Moore, C.E.; Brown, K.L.; Raines, C. High-throughput field phenotyping using hyperspectral reflectance and partial least squares regression (PLSR) reveals genetic modifications to photosynthetic capacity. Remote Sens. Environ. 2019, 231, 111176.

49. Wang, L.; Chang, Q.; Li, F.; Yan, L.; Huang, Y.; Wang, Q.; Luo, L. Effects of Growth Stage Development on Paddy Rice Leaf Area Index Prediction Models. Remote Sens. 2019, 11, 361.

50. Lu, N.; Zhou, J.; Han, Z.; Li, D.; Cao, Q.; Yao, X.; Tian, Y.; Zhu, Y.; Cao, W.; Cheng, T. Improved estimation of aboveground biomass in wheat from RGB imagery and point cloud data acquired with a low-cost unmanned aerial vehicle system. Plant Methods 2019, 15, 17.

51. Huang, G.-B.; Zhu, Q.-Y.; Siew, C.-K. Extreme learning machine: Theory and applications. Neurocomputing 2006, 70, 489–501.

52. Sidike, P.; Asari, V.K.; Sagan, V. Progressively Expanded Neural Network (PEN Net) for hyperspectral image classification: A new neural network paradigm for remote sensing image analysis. ISPRS J. Photogramm. Remote Sens. 2018, 146, 161–181.

53. Sidike, P.; Chen, C.; Asari, V.; Xu, Y.; Li, W. Classification of hyperspectral image using multiscale spatial texture features. In Proceedings of the 2016 8th Workshop on Hyperspectral Image and Signal Processing: Evolution in Remote Sensing (WHISPERS), Los Angeles, CA, USA, 21–24 August 2016; pp. 1–4.

54. Huang, G.B.; Zhou, H.M.; Ding, X.J.; Zhang, R. Extreme Learning Machine for Regression and Multiclass Classification. IEEE Trans. Syst. Man Cybern. Part B 2012, 42, 513–529.

55. Cao, F.; Yang, Z.; Ren, J.; Chen, W.; Han, G.; Shen, Y. Local block multilayer sparse extreme learning machine for effective feature extraction and classification of hyperspectral images. IEEE Trans. Geosci. Remote Sens. 2019, 57, 5580–5594.

56. Peterson, K.T.; Sagan, V.; Sidike, P.; Hasenmueller, E.A.; Sloan, J.J.; Knouft, J.H. Machine Learning-Based Ensemble Prediction of Water-Quality Variables Using Feature-Level and Decision-Level Fusion with Proximal Remote Sensing. Photogramm. Eng. Remote Sens. 2019, 85, 269–280.

57. Sidike, P.; Sagan, V.; Maimaitijiang, M.; Maimaitiyiming, M.; Shakoor, N.; Burken, J.; Mockler, T.; Fritschi, F.B. dPEN: Deep Progressively Expanded Network for mapping heterogeneous agricultural landscape using WorldView-3 satellite imagery. Remote Sens. Environ. 2019, 221, 756–772.

58. ENVI Atmospheric Correction Module: QUAC and FLAASH User’s Guide, Version 4.7; ITT Visual Information Solutions: Boulder, CO, USA, 2009.

59. Gitelson, A.A.; Vina, A.; Ciganda, V.; Rundquist, D.C.; Arkebauer, T.J. Remote estimation of canopy chlorophyll content in crops. Geophys. Res. Lett. 2005, 32, L08403.

60. Rouse, J.W., Jr.; Haas, R.; Schell, J.; Deering, D. Monitoring vegetation systems in the Great Plains with ERTS. NASA Spec. Publ. 1974, 351, 309.

61. Gitelson, A.A.; Gritz, Y.; Merzlyak, M.N. Relationships between leaf chlorophyll content and spectral reflectance and algorithms for non-destructive chlorophyll assessment in higher plant leaves. J. Plant Physiol. 2003, 160, 271–282.

62. Gitelson, A.A.; Merzlyak, M.N. Remote estimation of chlorophyll content in higher plant leaves. Int. J. Remote Sens. 1997, 18, 2691–2697.

63. Huete, A.; Didan, K.; Miura, T.; Rodriguez, E.P.; Gao, X.; Ferreira, L.G. Overview of the radiometric and biophysical performance of the MODIS vegetation indices. Remote Sens. Environ. 2002, 83, 195–213.

64. Jiang, Z.; Huete, A.R.; Didan, K.; Miura, T. Development of a two-band enhanced vegetation index without a blue band. Remote Sens. Environ. 2008, 112, 3833–3845.

65. Rondeaux, G.; Steven, M.; Baret, F. Optimization of soil-adjusted vegetation indices. Remote Sens. Environ. 1996, 55, 95–107.

66. Daughtry, C.; Walthall, C.; Kim, M.; De Colstoun, E.B.; McMurtrey III, J. Estimating corn leaf chlorophyll concentration from leaf and canopy reflectance. Remote Sens. Environ. 2000, 74, 229–239.

67. Haboudane, D.; Miller, J.R.; Tremblay, N.; Zarco-Tejada, P.J.; Dextraze, L. Integrated narrow-band vegetation indices for prediction of crop chlorophyll content for application to precision agriculture. Remote Sens. Environ. 2002, 81, 416–426.

68. Gitelson, A.A. Wide dynamic range vegetation index for remote quantification of biophysical characteristics of vegetation. J. Plant Physiol. 2004, 161, 165–173.

69. Peñuelas, J.; Baret, F.; Filella, I. Semi-empirical indices to assess carotenoids/chlorophyll a ratio from leaf spectral reflectance. Photosynthetica 1995, 31, 221–230.

70. Baret, F.; Guyot, G. Potentials and limits of vegetation indices for LAI and APAR assessment. Remote Sens. Environ. 1991, 35, 161–173.

71. Gitelson, A.A.; Kaufman, Y.J.; Stark, R.; Rundquist, D. Novel algorithms for remote estimation of vegetation fraction. Remote Sens. Environ. 2002, 80, 76–87.

72. Deering, D. Measuring “forage production” of grazing units from Landsat MSS data. In Proceedings of the Tenth International Symposium of Remote Sensing of the Environment, Ann Arbor, MI, USA, 6–10 October 1975; pp. 1169–1198.

73. Torres-Sánchez, J.; Peña, J.M.; de Castro, A.I.; López-Granados, F. Multi-temporal mapping of the vegetation fraction in early-season wheat fields using images from UAV. Comput. Electron. Agric. 2014, 103, 104–113.

74. Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297.

75. Schirrmann, M.; Giebel, A.; Gleiniger, F.; Pflanz, M.; Lentschke, J.; Dammer, K.H. Monitoring Agronomic Parameters of Winter Wheat Crops with Low-Cost UAV Imagery. Remote Sens. 2016, 8, 706.

76. Zha, H.; Miao, Y.; Wang, T.; Li, Y.; Zhang, J.; Sun, W.; Feng, Z.; Kusnierek, K. Improving Unmanned Aerial Vehicle Remote Sensing-Based Rice Nitrogen Nutrition Index Prediction with Machine Learning. Remote Sens. 2020, 12, 215.

77. Geladi, P.; Kowalski, B.R. Partial least-squares regression: A tutorial. Anal. Chim. Acta 1986, 185, 1–17.

78. Yeniay, Ö.; Göktaş, A. A comparison of partial least squares regression with other prediction methods. Hacet. J. Math. Stat. 2002, 31, 99–111.

79. Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

80. Gleason, C.J.; Im, J. Forest biomass estimation from airborne LiDAR data using machine learning approaches. Remote Sens. Environ. 2012, 125, 80–91.

81. Andrew, A.M. An Introduction to Support Vector Machines and Other Kernel-Based Learning Methods by Nello Cristianini and John Shawe-Taylor, Cambridge University Press, Cambridge, 2000, xiii + 189 pp., ISBN 0-521-78019-5 (Hbk, £27.50). Robotica 2000, 18, 687–689.

82. Maimaitiyiming, M.; Sagan, V.; Sidike, P.; Kwasniewski, M.T. Dual Activation Function-Based Extreme Learning Machine (ELM) for Estimating Grapevine Berry Yield and Quality. Remote Sens. 2019, 11, 740.

83. Nair, V.; Hinton, G.E. Rectified linear units improve restricted Boltzmann machines. In Proceedings of the 27th International Conference on Machine Learning (ICML-10), Haifa, Israel, 21–24 June 2010; pp. 807–814.

84. Omer, G.; Mutanga, O.; Abdel-Rahman, E.M.; Adam, E. Empirical prediction of leaf area index (LAI) of endangered tree species in intact and fragmented indigenous forests ecosystems using WorldView-2 data and two robust machine learning algorithms. Remote Sens. 2016, 8, 324.

85. Iqbal, F.; Lucieer, A.; Barry, K.; Wells, R. Poppy Crop Height and Capsule Volume Estimation from a Single UAS Flight. Remote Sens. 2017, 9, 647.

86. Wang, Y.; Wen, W.; Wu, S.; Wang, C.; Yu, Z.; Guo, X.; Zhao, C. Maize plant phenotyping: Comparing 3D laser scanning, multi-view stereo reconstruction, and 3D digitizing estimates. Remote Sens. 2019, 11, 63.

87. Ballester, C.; Hornbuckle, J.; Brinkhoff, J.; Smith, J.; Quayle, W. Assessment of in-season cotton nitrogen status and lint yield prediction from unmanned aerial system imagery. Remote Sens. 2017, 9, 1149.

88. Bendig, J.; Bolten, A.; Bennertz, S.; Broscheit, J.; Eichfuss, S.; Bareth, G. Estimating biomass of barley using crop surface models (CSMs) derived from UAV-based RGB imaging. Remote Sens. 2014, 6, 10395–10412.

89. Näsi, R.; Viljanen, N.; Kaivosoja, J.; Alhonoja, K.; Hakala, T.; Markelin, L.; Honkavaara, E. Estimating biomass and nitrogen amount of barley and grass using UAV and aircraft based spectral and photogrammetric 3D features. Remote Sens. 2018, 10, 1082.

90. Stanton, C.; Starek, M.J.; Elliott, N.; Brewer, M.; Maeda, M.M.; Chu, T.X. Unmanned aircraft system-derived crop height and normalized difference vegetation index metrics for sorghum yield and aphid stress assessment. J. Appl. Remote Sens. 2017, 11, 026035.

91. Geipel, J.; Link, J.; Claupein, W. Combined spectral and spatial modeling of corn yield based on aerial images and crop surface models acquired with an unmanned aircraft system. Remote Sens. 2014, 6, 10335–10355.

92. Bendig, J.; Yu, K.; Aasen, H.; Bolten, A.; Bennertz, S.; Broscheit, J.; Gnyp, M.L.; Bareth, G. Combining UAV-based plant height from crop surface models, visible, and near infrared vegetation indices for biomass monitoring in barley. Int. J. Appl. Earth Obs. Geoinf. 2015, 39, 79–87.

93. Tilly, N.; Aasen, H.; Bareth, G. Fusion of plant height and vegetation indices for the estimation of barley biomass. Remote Sens. 2015, 7, 11449–11480.

94. Freeman, K.W.; Girma, K.; Arnall, D.B.; Mullen, R.W.; Martin, K.L.; Teal, R.K.; Raun, W.R. By-plant prediction of corn forage biomass and nitrogen uptake at various growth stages using remote sensing and plant height. Agron. J. 2007, 99, 530–536.

95. Zhou, G.; Yin, X. Relationship of cotton nitrogen and yield with normalized difference vegetation index and plant height. Nutr. Cycl. Agroecosyst. 2014, 100, 147–160.

96. Yin, X.; McClure, M.A. Relationship of corn yield, biomass, and leaf nitrogen with normalized difference vegetation index and plant height. Agron. J. 2013, 105, 1005–1016.

97. Vergara-Díaz, O.; Zaman-Allah, M.A.; Masuka, B.; Hornero, A.; Zarco-Tejada, P.; Prasanna, B.M.; Cairns, J.E.; Araus, J.L. A novel remote sensing approach for prediction of maize yield under different conditions of nitrogen fertilization. Front. Plant Sci. 2016, 7, 666.

98. Becker-Reshef, I.; Vermote, E.; Lindeman, M.; Justice, C. A generalized regression-based model for forecasting winter wheat yields in Kansas and Ukraine using MODIS data. Remote Sens. Environ. 2010, 114, 1312–1323.

99. Guo, B.-B.; Zhu, Y.-J.; Feng, W.; He, L.; Wu, Y.-P.; Zhou, Y.; Ren, X.-X.; Ma, Y. Remotely estimating aerial N uptake in winter wheat using red-edge area index from multi-angular hyperspectral data. Front. Plant Sci. 2018, 9, 675.

100. Muñoz-Huerta, R.F.; Guevara-Gonzalez, R.G.; Contreras-Medina, L.M.; Torres-Pacheco, I.; Prado-Olivarez, J.; Ocampo-Velazquez, R.V. A review of methods for sensing the nitrogen status in plants: Advantages, disadvantages and recent advances. Sensors 2013, 13, 10823–10843.

101. Wang, C.; Feng, M.-C.; Yang, W.-D.; Ding, G.-W.; Sun, H.; Liang, Z.-Y.; Xie, Y.-K.; Qiao, X.-X. Impact of spectral saturation on leaf area index and aboveground biomass estimation of winter wheat. Spectrosc. Lett. 2016, 49, 241–248.

102. Gao, S.; Niu, Z.; Huang, N.; Hou, X. Estimating the Leaf Area Index, height and biomass of maize using HJ-1 and RADARSAT-2. Int. J. Appl. Earth Obs. Geoinf. 2013, 24, 1–8.

103. Pelizari, P.A.; Sprohnle, K.; Geiss, C.; Schoepfer, E.; Plank, S.; Taubenbock, H. Multi-sensor feature fusion for very high spatial resolution built-up area extraction in temporary settlements. Remote Sens. Environ. 2018, 209, 793–807.

104. Peterson, K.; Sagan, V.; Sidike, P.; Cox, A.; Martinez, M. Suspended Sediment Concentration Estimation from Landsat Imagery along the Lower Missouri and Middle Mississippi Rivers Using an Extreme Learning Machine. Remote Sens. 2018, 10, 1503.

105. Vapnik, V.N. Statistical Learning Theory; Wiley: New York, NY, USA, 1998; Volume 1, pp. 156–160.

106. Mutanga, O.; Adam, E.; Cho, M.A. High density biomass estimation for wetland vegetation using WorldView-2 imagery and random forest regression algorithm. Int. J. Appl. Earth Obs. Geoinf. 2012, 18, 399–406.

107. Pullanagari, R.; Kereszturi, G.; Yule, I. Mapping of macro and micro nutrients of mixed pastures using airborne AisaFENIX hyperspectral imagery. ISPRS J. Photogramm. Remote Sens. 2016, 117, 1–10.

108. Liu, H.; Shi, T.; Chen, Y.; Wang, J.; Fei, T.; Wu, G. Improving spectral estimation of soil organic carbon content through semi-supervised regression. Remote Sens. 2017, 9, 29.

| Parameters * | Date | No. of Samples | Mean | Max. | Min. | SD | CV (%) |
|---|---|---|---|---|---|---|---|
| AGB (kg/m²) | 8 July 2017 | 48 | 0.468 | 1.17 | 0.097 | 0.244 | 52.1 |
| | 20 July 2017 | 48 | 1.00 | 1.69 | 0.624 | 0.271 | 27.0 |
| | 4 August 2017 | 48 | 2.13 | 3.32 | 1.19 | 0.445 | 20.9 |
| | All | 144 | 1.21 | 3.32 | 0.10 | 0.77 | 64.0 |
| LAI | 8 July 2017 | 48 | 1.64 | 2.96 | 0.630 | 0.598 | 36.5 |
| | 20 July 2017 | 48 | 2.46 | 4.36 | 1.10 | 0.911 | 37.1 |
| | 4 August 2017 | 48 | 4.43 | 6.32 | 1.53 | 1.32 | 29.8 |
| | All | 144 | 2.84 | 6.32 | 0.630 | 1.53 | 54.0 |
| N | 8 July 2017 | 144 | 25.5 | 38.9 | 16.3 | 5.71 | 22.4 |
| | 20 July 2017 | 144 | 29.3 | 50.0 | 17.1 | 8.71 | 29.7 |
| | 4 August 2017 | 144 | 28.4 | 47.9 | 14.5 | 7.75 | 27.3 |
| | All | 432 | 27.7 | 50.0 | 14.5 | 7.65 | 27.6 |
| CH (m) | 8 July 2017 | 144 | 0.413 | 0.573 | 0.194 | 0.092 | 22.3 |
| | 20 July 2017 | 144 | 0.587 | 0.801 | 0.326 | 0.103 | 17.5 |
| | 4 August 2017 | 144 | 0.889 | 1.21 | 0.564 | 0.150 | 16.9 |
| | All | 432 | 0.621 | 1.21 | 0.194 | 0.231 | 37.2 |
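
The CV (%) column follows the usual definition CV = SD / Mean × 100, so it can be checked directly against the other columns. A minimal Python sketch that recomputes a few rows copied from the table above (the helper name `coefficient_of_variation` is hypothetical):

```python
def coefficient_of_variation(sd: float, mean: float) -> float:
    """Return the coefficient of variation in percent (SD / Mean * 100)."""
    if mean == 0:
        raise ValueError("mean must be non-zero")
    return sd / mean * 100.0


# (trait, date, mean, SD, reported CV %) copied from the table above
ROWS = [
    ("AGB", "8 July 2017", 0.468, 0.244, 52.1),
    ("LAI", "4 August 2017", 4.43, 1.32, 29.8),
    ("N", "All", 27.7, 7.65, 27.6),
    ("CH", "All", 0.621, 0.231, 37.2),
]

if __name__ == "__main__":
    for trait, date, mean, sd, reported in ROWS:
        cv = coefficient_of_variation(sd, mean)
        print(f"{trait:4s} {date:14s} CV = {cv:.1f}% (reported {reported}%)")
```

Each recomputed value agrees with the reported CV to within the rounding of the tabulated mean and SD.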

| Platforms | Spectral Bands (Wavelength) * | Resolution | Acquisition Date 1 | Acquisition Date 2 | Acquisition Date 3 |
|---|---|---|---|---|---|
| Satellite (WorldView-2) | Pan band (450–800 nm); VNIR: Coast (400–450 nm), Blue (450–510 nm), Green (510–580 nm), Yellow (585–625 nm), Red (630–690 nm), Red-edge (705–745 nm), Near-infrared1 (770–895 nm), Near-infrared2 (860–1040 nm) | Pan: 0.5 m; VNIR: 2.0 m | 10 July 2017 | / | / |
| Satellite (WorldView-3) | Pan band; VNIR: Coast, Blue, Green, Yellow, Red, Red-edge, Near-infrared1, Near-infrared2 | Pan: 0.31 m; VNIR: 1.24 m | / | 22 July 2017 | 9 August 2017 |
| UAV (RGB) | Red, Green, Blue | 0.01 m | 8 July 2017 | 20 July 2017 | 4 August 2017 |

| Platforms | Features | Formulation | References |
|---|---|---|---|
| WorldView-2/3 (Spec. Info.) | Coast (C), Blue (B), Yellow (Y), Green (G), Red (R), Red-edge (RE), Near-infrared1 (NIR1), Near-infrared2 (NIR2) | Reflectance value of each band | / |
| | Red-edge chlorophyll index | RECI = (NIR1/RE) − 1 | [59] |
| | Normalized difference vegetation index | NDVI = (NIR1 − R)/(NIR1 + R) | [60] |
| | Green normalized difference vegetation index | GNDVI = (NIR1 − G)/(NIR1 + G) | [61] |
| | Normalized difference red-edge | NDRE = (NIR1 − RE)/(NIR1 + RE) | [62] |
| | Ratio vegetation index | RVI = NIR1/R | [63] |
| | Enhanced vegetation index | EVI = 2.5*(NIR1 − R)/(NIR1 + 6*R − 7.5*B + 1) | [63] |
| | Two-band enhanced vegetation index | EVI2 = 2.5*(NIR1 − R)/(NIR1 + 2.4*R + 1) | [64] |
| | Optimized soil-adjusted vegetation index | OSAVI = (NIR1 − R)/(NIR1 + R + L) (L = 0.16) | [65] |
| | Modified chlorophyll absorption in reflectance index | MCARI = [(RE − R) − 0.2*(RE − G)]*(RE/R) | [66] |
| | Transformed chlorophyll absorption in reflectance index | TCARI = 3*[(RE − R) − 0.2*(RE − G)*(RE/R)] | [67] |
| | MCARI/OSAVI | MCARI/OSAVI | [66] |
| | TCARI/OSAVI | TCARI/OSAVI | [67] |
| | Wide dynamic range vegetation index | WDRVI = (a*NIR1 − R)/(a*NIR1 + R) (a = 0.12) | [68] |
| | Structure insensitive pigment index | SIPI = (NIR1 − B)/(NIR1 − R) | [69] |
| | Normalized ratio vegetation index | NRVI = (RVI − 1)/(RVI + 1) | [70] |
| | Visible atmospherically resistant index | VARI = (G − R)/(G + R − B) | [71] |
| | Transformed vegetation index | TVI = sqrt[(NIR1 − R)/(NIR1 + R) + 0.5] | [72] |
| UAV RGB (Struc. Info.) | Canopy height (m) | CH = DSM − DEM * | / |
| | Canopy cover (%) | | [73] |
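
The index formulas in the feature table are plain band arithmetic and can be applied pixel-wise or to plot-mean reflectances. The NumPy sketch below is illustrative, not the study's implementation. It assumes surface-reflectance inputs on a 0–1 scale keyed by the table's band abbreviations, uses the 2.4 coefficient for EVI2 given by Jiang et al. [64], and its function names are hypothetical. It also sketches the two UAV structure features: canopy height as the DSM − DEM difference, and canopy cover as the percentage of vegetation pixels. The table gives no formulation for canopy cover, so the excess-green (ExG) threshold used here is an assumed stand-in, not necessarily the procedure of [73] or of this study.

```python
import numpy as np


def vegetation_indices(bands: dict) -> dict:
    """Spectral indices from the feature table, computed element-wise.

    Assumes `bands` maps B, G, R, RE and NIR1 to surface-reflectance
    arrays (0-1 scale) of identical shape.
    """
    B, G, R, RE, NIR = (np.asarray(bands[k], dtype=float)
                        for k in ("B", "G", "R", "RE", "NIR1"))
    ndvi = (NIR - R) / (NIR + R)
    rvi = NIR / R
    osavi = (NIR - R) / (NIR + R + 0.16)
    mcari = ((RE - R) - 0.2 * (RE - G)) * (RE / R)
    tcari = 3.0 * ((RE - R) - 0.2 * (RE - G) * (RE / R))
    return {
        "RECI": NIR / RE - 1.0,
        "NDVI": ndvi,
        "GNDVI": (NIR - G) / (NIR + G),
        "NDRE": (NIR - RE) / (NIR + RE),
        "RVI": rvi,
        "EVI": 2.5 * (NIR - R) / (NIR + 6.0 * R - 7.5 * B + 1.0),
        "EVI2": 2.5 * (NIR - R) / (NIR + 2.4 * R + 1.0),  # 2.4 per Jiang et al. (2008)
        "OSAVI": osavi,
        "MCARI": mcari,
        "TCARI": tcari,
        "MCARI/OSAVI": mcari / osavi,
        "TCARI/OSAVI": tcari / osavi,
        "WDRVI": (0.12 * NIR - R) / (0.12 * NIR + R),
        "SIPI": (NIR - B) / (NIR - R),
        "NRVI": (rvi - 1.0) / (rvi + 1.0),
        "VARI": (G - R) / (G + R - B),
        "TVI": np.sqrt(ndvi + 0.5),  # NaN where NDVI < -0.5
    }


def canopy_height(dsm, dem) -> np.ndarray:
    """CH = DSM - DEM; both rasters must be co-registered on one grid."""
    return np.asarray(dsm, dtype=float) - np.asarray(dem, dtype=float)


def canopy_cover(red, green, blue, threshold: float = 0.1) -> float:
    """Percent of pixels classified as vegetation by an ExG threshold.

    ExG = 2g - r - b on chromatic coordinates. The index choice and the
    fixed threshold are assumptions, not the study's documented method.
    """
    rgb = np.stack([red, green, blue]).astype(float)
    total = rgb.sum(axis=0)
    total[total == 0] = 1.0  # avoid division by zero on black pixels
    r, g, b = rgb / total    # chromatic coordinates, each shaped like one band
    exg = 2.0 * g - r - b
    return 100.0 * float(np.mean(exg > threshold))
```

One consequence is easy to see from the code: NRVI = (RVI − 1)/(RVI + 1) reduces algebraically to (NIR1 − R)/(NIR1 + R), that is, NDVI, so those two features carry identical information.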

| Platforms * | Metrics | PLSR | SVR | RFR | ELR |
|---|---|---|---|---|---|
| Satellite (Spec. info.) | R² | 0.792 | 0.856 | 0.814 | 0.870 |
| | RMSE | 0.605 | 0.502 | 0.571 | 0.434 |
| | RMSE% | 30.8 | 25.6 | 29.1 | 24.3 |
| UAV (Struc. info.) | R² | 0.787 | 0.763 | 0.769 | 0.805 |
| | RMSE | 0.612 | 0.645 | 0.637 | 0.585 |
| | RMSE% | 31.2 | 32.9 | 32.5 | 29.8 |
| S + U (Spec. + Struc. info.) | R² | 0.852 | 0.898 | 0.918 | 0.923 |
| | RMSE | 0.510 | 0.385 | 0.346 | 0.335 |
| | RMSE% | 25.9 | 21.6 | 19.3 | 18.8 |
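
The tables report R², RMSE and RMSE%, but this section does not define them. A common convention, assumed in the sketch below, takes R² as the coefficient of determination and RMSE% as RMSE divided by the mean of the observed values. If R² was instead computed as the squared Pearson correlation, values for biased predictions would differ. The function name and the demo values are hypothetical.

```python
import numpy as np


def regression_metrics(y_true, y_pred) -> dict:
    """R2 (coefficient of determination), RMSE and RMSE% for one model.

    RMSE% is taken relative to the mean of the observed values; this is
    an assumed convention, since the source does not define the metric.
    """
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    resid = y_true - y_pred
    ss_res = float(np.sum(resid ** 2))                   # residual sum of squares
    ss_tot = float(np.sum((y_true - y_true.mean()) ** 2))  # total sum of squares
    rmse = float(np.sqrt(np.mean(resid ** 2)))
    return {
        "R2": 1.0 - ss_res / ss_tot,
        "RMSE": rmse,
        "RMSE%": 100.0 * rmse / float(y_true.mean()),
    }


if __name__ == "__main__":
    # Hypothetical observed/predicted AGB values (kg/m2), not study data.
    m = regression_metrics([1.0, 2.0, 3.0, 4.0], [1.1, 1.9, 3.2, 3.8])
    print({k: round(v, 3) for k, v in m.items()})
```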

| Platforms * | Metrics | PLSR | SVR | RFR | ELR |
|---|---|---|---|---|---|
| Satellite (Spec. info.) | R² | 0.844 | 0.898 | 0.905 | 0.915 |
| | RMSE | 0.634 | 0.512 | 0.494 | 0.469 |
| | RMSE% | 22.1 | 17.8 | 17.2 | 16.3 |
| UAV (Struc. info.) | R² | 0.832 | 0.883 | 0.884 | 0.909 |
| | RMSE | 0.566 | 0.549 | 0.546 | 0.484 |
| | RMSE% | 22.2 | 19.1 | 19.0 | 16.9 |
| S + U (Spec. + Struc. info.) | R² | 0.924 | 0.923 | 0.925 | 0.927 |
| | RMSE | 0.442 | 0.446 | 0.439 | 0.433 |
| | RMSE% | 15.4 | 15.5 | 15.3 | 15.1 |
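
ELR in the tables appears to denote regression with an extreme learning machine (ELM), the method of Huang et al. [51,54] in the reference list. An ELM is a single-hidden-layer network whose input weights and biases are drawn at random and never trained; only the output weights are solved in closed form, as β = H⁺y, where H⁺ is the Moore-Penrose pseudo-inverse of the hidden-layer output matrix H. The NumPy sketch below implements that basic scheme. The class name, the ReLU default, and the hidden-layer size are assumptions, since the study's ELR configuration is not given in this section.

```python
import numpy as np


class ExtremeLearningRegressor:
    """Single-hidden-layer extreme learning machine for regression.

    Hidden weights and biases are random and fixed; only the output
    weights are fitted, via the Moore-Penrose pseudo-inverse of the
    hidden-layer output matrix H (beta = pinv(H) @ y).
    """

    def __init__(self, n_hidden: int = 100, activation: str = "relu", seed: int = 0):
        self.n_hidden = n_hidden      # hypothetical default
        self.activation = activation  # "relu" or "sigmoid"
        self.seed = seed

    def _hidden(self, X: np.ndarray) -> np.ndarray:
        Z = X @ self.W + self.b
        if self.activation == "relu":
            return np.maximum(Z, 0.0)
        if self.activation == "sigmoid":
            return 1.0 / (1.0 + np.exp(-Z))
        raise ValueError(f"unknown activation: {self.activation}")

    def fit(self, X, y):
        X = np.asarray(X, dtype=float)
        y = np.asarray(y, dtype=float)
        rng = np.random.default_rng(self.seed)
        # Random, untrained input weights and biases.
        self.W = rng.standard_normal((X.shape[1], self.n_hidden))
        self.b = rng.standard_normal(self.n_hidden)
        # Closed-form least-squares output weights.
        self.beta = np.linalg.pinv(self._hidden(X)) @ y
        return self

    def predict(self, X) -> np.ndarray:
        return self._hidden(np.asarray(X, dtype=float)) @ self.beta


if __name__ == "__main__":
    # Synthetic demo only: two inputs standing in for fused features.
    rng = np.random.default_rng(1)
    X = rng.uniform(-1.0, 1.0, size=(200, 2))
    y = X[:, 0] + 2.0 * X[:, 1] ** 2
    model = ExtremeLearningRegressor(n_hidden=50).fit(X, y)
    print("training MSE:", float(np.mean((model.predict(X) - y) ** 2)))
```

Because fitting reduces to a single pseudo-inverse with no iterative training, an ELM trains quickly; the trade-off is that results depend on the random seed that fixes the hidden layer.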

| Platforms * | Metrics | PLSR | SVR | RFR | ELR |
|---|---|---|---|---|---|
| Satellite (Spec. info.) | R² | 0.522 | 0.301 | 0.565 | 0.522 |
| | RMSE | 5.39 | 6.52 | 5.14 | 5.39 |
| | RMSE% | 19.1 | 23.1 | 18.2 | 19.1 |
| UAV (Struc. info.) | R² | 0.125 | 0.199 | 0.328 | 0.312 |
| | RMSE | 7.29 | 6.98 | 6.39 | 6.47 |
| | RMSE% | 25.8 | 24.7 | 22.6 | 22.9 |
| S + U (Spec. + Struc. info.) | R² | 0.538 | 0.426 | 0.605 | 0.591 |
| | RMSE | 5.30 | 5.90 | 4.90 | 4.98 |
| | RMSE% | 18.8 | 20.9 | 17.4 | 17.6 |

| Plant Traits | Platforms * | Metrics | 8 July 2017 | 20 July 2017 | 4 August 2017 | All |
|---|---|---|---|---|---|---|
| AGB | Satellite | R² | 0.591 | 0.674 | 0.540 | 0.870 |
| | | RMSE | 0.217 | 0.284 | 0.435 | 0.434 |
| | | RMSE% | 30.9 | 16.9 | 12.0 | 24.3 |
| | UAV | R² | 0.675 | 0.644 | 0.428 | 0.805 |
| | | RMSE | 0.193 | 0.305 | 0.485 | 0.585 |
| | | RMSE% | 27.6 | 13.5 | 13.4 | 29.8 |
| | S + U | R² | 0.785 | 0.790 | 0.593 | 0.923 |
| | | RMSE | 0.157 | 0.228 | 0.389 | 0.335 |
| | | RMSE% | 22.4 | 13.5 | 10.9 | 18.8 |
| LAI | Satellite | R² | 0.811 | 0.798 | 0.697 | 0.915 |
| | | RMSE | 0.289 | 0.473 | 0.763 | 0.469 |
| | | RMSE% | 17.0 | 19.4 | 16.9 | 16.3 |
| | UAV | R² | 0.640 | 0.733 | 0.694 | 0.909 |
| | | RMSE | 0.4 | 0.543 | 0.768 | 0.484 |
| | | RMSE% | 23.5 | 22.3 | 17.0 | 16.9 |
| | S + U | R² | 0.821 | 0.872 | 0.750 | 0.927 |
| | | RMSE | 0.282 | 0.465 | 0.693 | 0.433 |
| | | RMSE% | 16.6 | 19.1 | 15.3 | 15.1 |
| N | Satellite | R² | 0.495 | 0.573 | 0.589 | 0.522 |
| | | RMSE | 3.74 | 5.42 | 4.15 | 5.39 |
| | | RMSE% | 14.8 | 18.9 | 15.1 | 19.1 |
| | UAV | R² | 0.404 | 0.239 | 0.255 | 0.312 |
| | | RMSE | 4.06 | 7.23 | 5.59 | 6.47 |
| | | RMSE% | 16.1 | 25.2 | 20.4 | 22.9 |
| | S + U | R² | 0.572 | 0.559 | 0.693 | 0.591 |
| | | RMSE | 3.44 | 5.51 | 3.59 | 4.98 |
| | | RMSE% | 13.6 | 19.2 | 13.1 | 17.6 |
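
For AGB and LAI, the pooled "All" R² in the per-stage table exceeds every single-date R², even though the pooled RMSE is larger than the 8 July RMSE. This is expected: R² divides the residual sum of squares by the total sum of squares of the observations, and pooling dates from early to late season greatly widens the observed range. The sketch below reproduces the effect with hypothetical values: two stages that each have R² = 0.75 yield a pooled R² above 0.99. The function name is hypothetical, and RMSE% again assumes normalization by the observed mean.

```python
import numpy as np


def metrics_by_stage(stages, y_true, y_pred) -> dict:
    """R2, RMSE and RMSE% for each growth stage and for all stages pooled."""
    stages = np.asarray(stages)
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)

    def _metrics(t: np.ndarray, p: np.ndarray) -> dict:
        resid = t - p
        rmse = float(np.sqrt(np.mean(resid ** 2)))
        r2 = 1.0 - float(np.sum(resid ** 2)) / float(np.sum((t - t.mean()) ** 2))
        return {"R2": r2, "RMSE": rmse, "RMSE%": 100.0 * rmse / float(t.mean())}

    results = {str(s): _metrics(y_true[stages == s], y_pred[stages == s])
               for s in np.unique(stages)}
    results["All"] = _metrics(y_true, y_pred)  # pooled across stages
    return results


if __name__ == "__main__":
    # Hypothetical values: two stages with identical within-stage errors.
    stages = ["8 July"] * 3 + ["4 August"] * 3
    obs = [0.4, 0.5, 0.6, 2.0, 2.1, 2.2]
    pred = [0.45, 0.45, 0.6, 2.05, 2.05, 2.2]
    for stage, m in metrics_by_stage(stages, obs, pred).items():
        print(stage, {k: round(v, 3) for k, v in m.items()})
```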

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Maimaitijiang, M.; Sagan, V.; Sidike, P.; Daloye, A.M.; Erkbol, H.; Fritschi, F.B. Crop Monitoring Using Satellite/UAV Data Fusion and Machine Learning. Remote Sens. 2020, 12, 1357. https://doi.org/10.3390/rs12091357
