About the Pitfall of Erroneous Validation Data in the Estimation of Confusion Matrices

Abstract

1. Introduction

2. Theoretical Framework

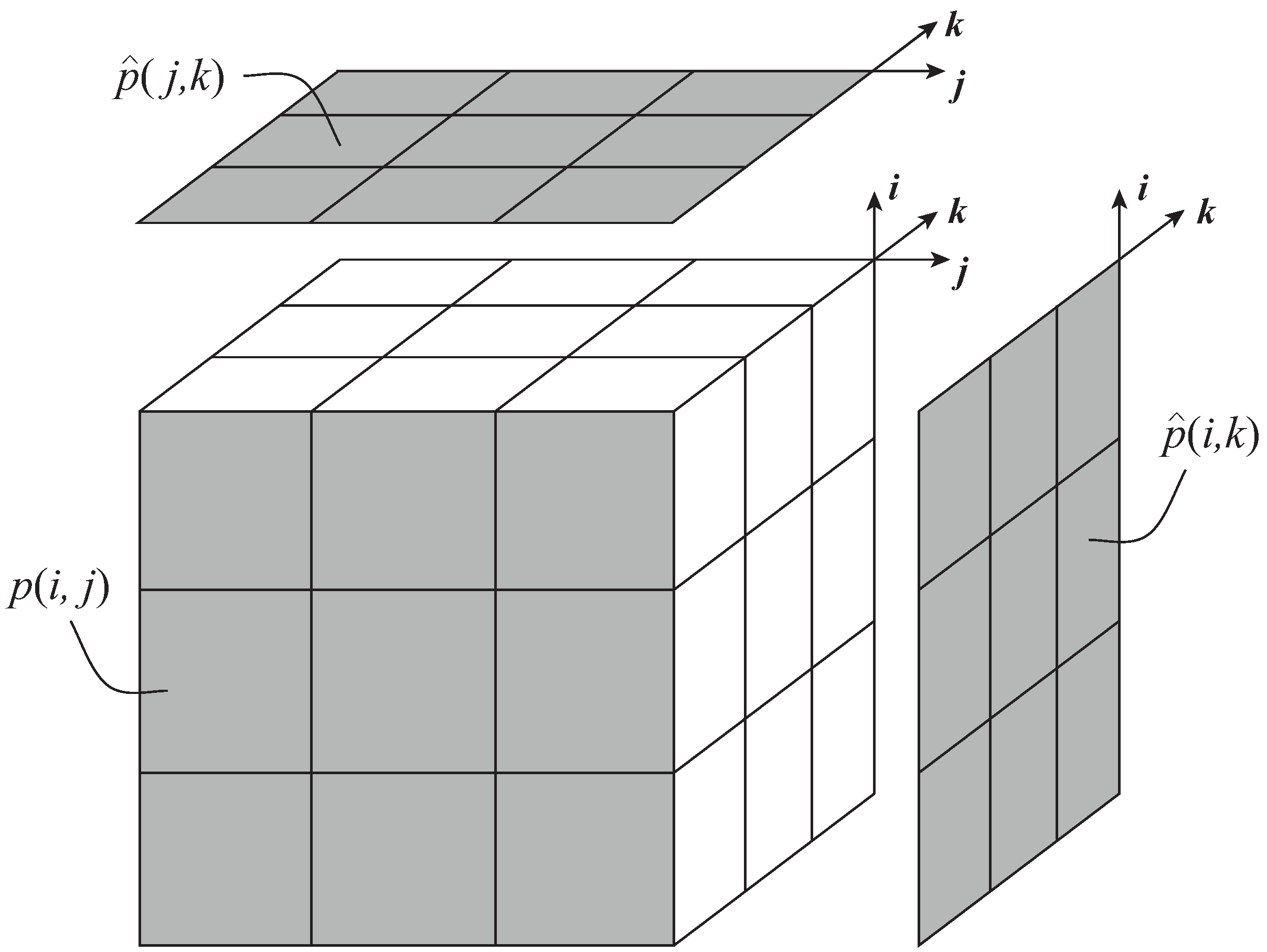

2.1. Joint Probability Table

2.2. Maximum Entropy Estimation

2.3. Assessing the Conditional Independence

3. Synthetic Case Studies

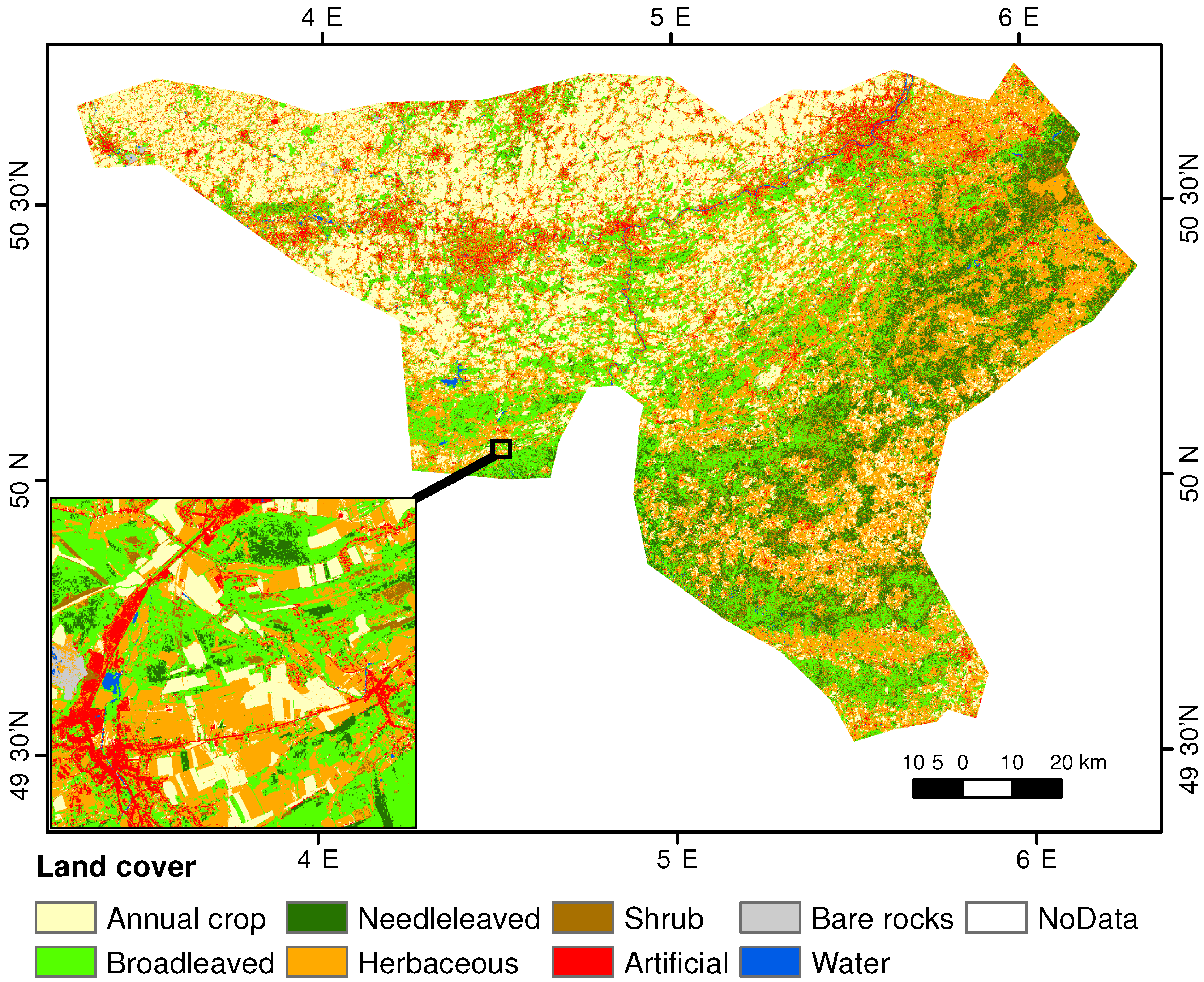

3.1. Virtual Truth

3.2. Classified Maps

- An error pattern that reproduces the confusions observed in practice, i.e., many errors between poorly separable classes (e.g., herbaceous cover vs. cropland) and few errors between highly separable classes (e.g., a water body vs. a tree). An empirical confusion matrix, derived from quality-controlled reference data collected by field survey over the area, was used for this purpose. This pattern was selected because classification algorithms often face the same discrimination issues and are therefore likely to share a similar error pattern despite their different performances. This pattern will be referred to as Obs.

- A class-independent error pattern, where all off-diagonal values are equal to the same constant. The values of the diagonal were set according to the frequency of each class in the virtual truth. This pattern was selected because it was used in a previous study [3]. It will be referred to as Const.

- A random error pattern, where all off-diagonal values are independently selected from a uniform distribution. This pattern was selected for its lack of arbitrary structure, contrary to the constant errors of Const and the more symmetrical errors of Obs. This pattern will be referred to as Rand.
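The Const and Rand patterns described above can be sketched as follows. This is a minimal illustration and not the procedure used in the study: it assumes that rows index the map class and columns the virtual-truth class, and that each diagonal cell is whatever remains of the truth frequency once the off-diagonal cells of its column are filled. The function names, the three-class frequencies, and the values of `c` and `max_err` are hypothetical.

```python
import numpy as np


def const_pattern(truth_freq, c):
    """Const-like matrix: every off-diagonal cell equals c (assumed layout)."""
    freq = np.asarray(truth_freq, dtype=float)
    k = freq.size
    matrix = np.full((k, k), float(c))
    # Diagonal = truth frequency minus the errors placed in the same column.
    diagonal = freq - (k - 1) * c
    if np.any(diagonal < 0):
        raise ValueError("c is too large for the rarest class")
    np.fill_diagonal(matrix, diagonal)
    return matrix


def rand_pattern(truth_freq, max_err, rng):
    """Rand-like matrix: off-diagonal cells drawn independently from U(0, max_err)."""
    freq = np.asarray(truth_freq, dtype=float)
    k = freq.size
    matrix = rng.uniform(0.0, max_err, size=(k, k))
    np.fill_diagonal(matrix, 0.0)
    diagonal = freq - matrix.sum(axis=0)
    if np.any(diagonal < 0):
        raise ValueError("max_err is too large for the rarest class")
    np.fill_diagonal(matrix, diagonal)
    return matrix


# Hypothetical three-class virtual truth (proportions sum to 1).
freq = np.array([0.5, 0.3, 0.2])
const = const_pattern(freq, c=0.01)
rand = rand_pattern(freq, max_err=0.02, rng=np.random.default_rng(0))
print("Const overall accuracy:", np.trace(const) / const.sum())
print("Rand overall accuracy:", np.trace(rand) / rand.sum())
```

With a very rare class, a single constant may exceed what the class frequency allows, which is why both helpers raise an error rather than produce a negative diagonal.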

3.3. Reference Datasets

3.3.1. Thematic Interpretation Errors

- A field-based error pattern, based on the assessment of operators in the study area. A high-accuracy confusion matrix was obtained by comparing a consensual point-based photo-interpretation (from 25-cm visible and infrared orthophotos at two dates, combined with 1-m resolution LIDAR) with a field survey. The corresponding error rate in this dataset is about . This pattern will be referred to as Field.

- A class-independent error pattern, where the probability of errors is constant for all classes. Three levels of errors were considered: , and . This pattern will be referred to as Unif.

- A proportional error pattern, where the probability of errors is proportional to the “virtual truth” class frequency. Two levels of errors were considered: , and . This pattern will be referred to as Prop.

- A conditional error pattern, which is specific to each classified map. Contrary to the other patterns, knowledge of the classes i and j of each pixel is used to simulate a correlation between the reference and the classification results. To this end, of the points that were misclassified were labelled with the same incorrect label in the reference dataset (while all other labels remained correct). This pattern will be referred to as Cor, with a mention of the map from which it is derived.
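The Unif, Prop and Cor patterns listed above can be simulated on point labels along the following lines. This is a hedged sketch rather than the authors' implementation. It reads Unif as "a wrong label is drawn uniformly among the other classes" and Prop as "a wrong label is drawn in proportion to the class frequencies"; both readings are assumptions. The function names and the parameters `error_rate` and `share` are hypothetical stand-ins for the error levels used in the study.

```python
import numpy as np


def simulate_reference(truth, error_rate, n_classes, rng, class_weights=None):
    """Mislabel each point with probability `error_rate`.

    class_weights=None -> wrong label drawn uniformly (Unif-like).
    class_weights=w    -> wrong label drawn proportionally to w (Prop-like).
    """
    truth = np.asarray(truth)
    reference = truth.copy()
    if class_weights is None:
        weights = np.ones(n_classes)
    else:
        weights = np.asarray(class_weights, dtype=float)
    flipped = np.flatnonzero(rng.random(truth.size) < error_rate)
    for idx in flipped:
        w = weights.copy()
        w[truth[idx]] = 0.0  # the true label cannot be drawn again
        reference[idx] = rng.choice(n_classes, p=w / w.sum())
    return reference


def correlated_reference(truth, mapped, share, rng):
    """Cor-like pattern: copy the map's wrong label for a share of its errors."""
    truth = np.asarray(truth)
    mapped = np.asarray(mapped)
    reference = truth.copy()
    wrong = np.flatnonzero(mapped != truth)
    n_copy = int(round(share * wrong.size))
    copied = rng.choice(wrong, size=n_copy, replace=False)
    reference[copied] = mapped[copied]
    return reference


# Hypothetical example with four classes and 1000 points.
rng = np.random.default_rng(0)
truth = rng.integers(0, 4, size=1000)
unif_ref = simulate_reference(truth, 0.10, 4, rng)
prop_ref = simulate_reference(truth, 0.10, 4, rng,
                              class_weights=np.bincount(truth, minlength=4))
classified = simulate_reference(truth, 0.20, 4, rng)  # stand-in for a map
cor_ref = correlated_reference(truth, classified, 0.5, rng)
print("Unif-like reference error rate:", np.mean(unif_ref != truth))
print("Cor-like reference error rate:", np.mean(cor_ref != truth))
```

Only the Cor-like function needs the map labels, which is what makes its errors correlated with the classification; the other two depend on the truth alone.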

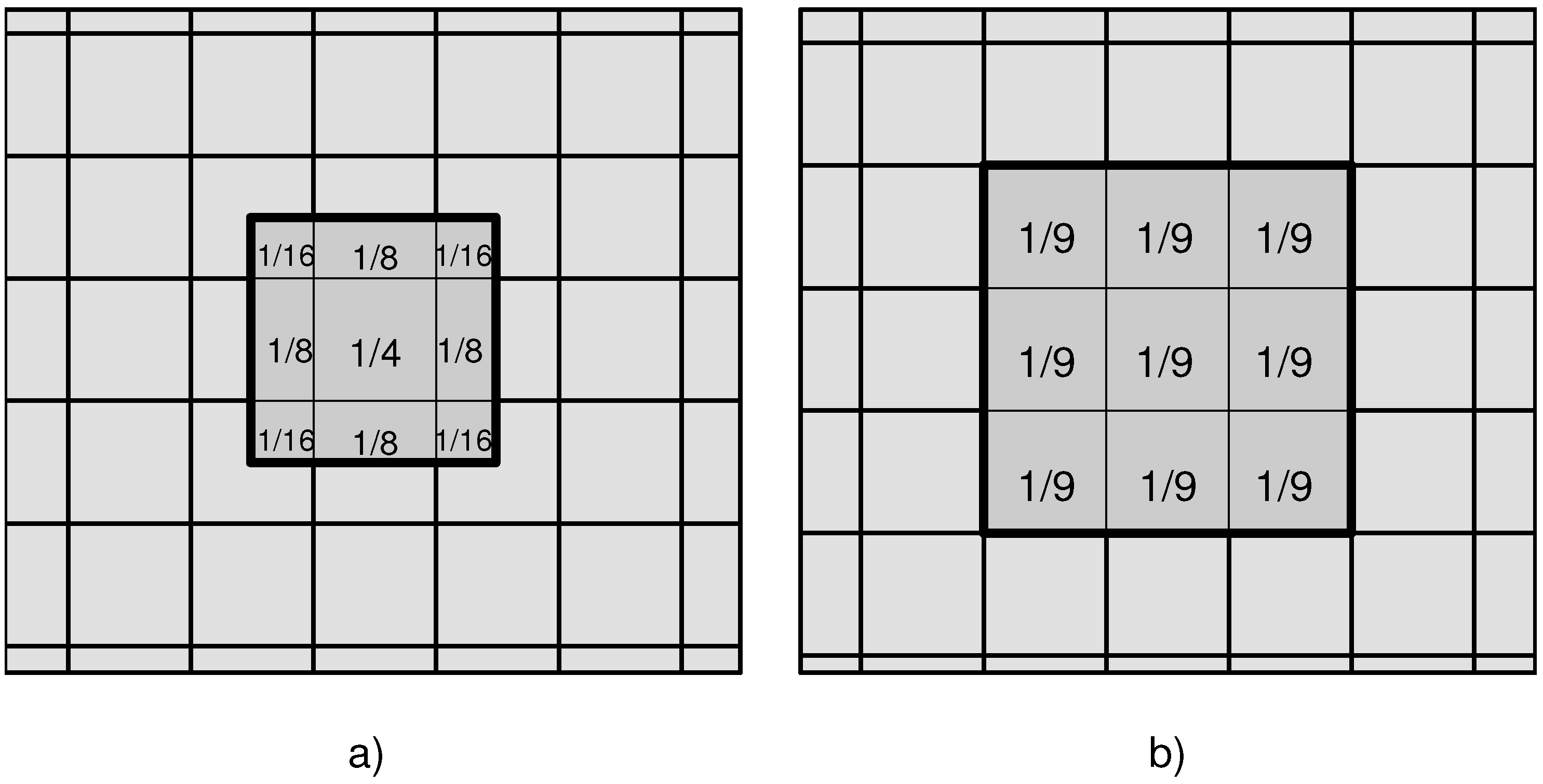

3.3.2. Geolocation Errors

3.4. Impact of Erroneous Reference Data

3.4.1. Thematic Errors

3.4.2. Geolocation Errors

4. Results

4.1. Uncertainty from Thematic Errors

4.1.1. Impact of Imperfect Reference Dataset

4.1.2. Maximum Entropy Correction

4.2. Uncertainty from Geolocation Errors

4.2.1. Impact of Imperfect Reference Dataset

4.2.2. Maximum Entropy Correction

5. Discussion

5.1. Comparing Classification Outputs

5.2. Map Quality Assessment and Area Estimates

5.3. Geolocation Error

5.4. Practical Recommendation

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A. Proof of the Equivalences

Appendix B. Confusion Matrices (i,j) of Classified Maps

| | Crop | NeedleL | BroadL | Herb | Shrub | Artif | BareS | Water |
|---|---|---|---|---|---|---|---|---|
| Crop | 228.0 | 0 | 0 | 38.6 | 0 | 0 | 0 | 0 |
| NeedleL | 0 | 183.0 | 22.4 | 1.37 | 0 | 3.64 | 0 | 0 |
| BroadL | 0 | 13.6 | 70.2 | 0 | 0 | 1.89 | 0 | 0 |
| Herb | 48.0 | 2.54 | 0 | 262.0 | 6.29 | 0 | 1.08 | 0 |
| Shrub | 0 | 2.54 | 1.12 | 3.95 | 44.2 | 0 | 0 | 0 |
| Artif | 2.44 | 0 | 0 | 0 | 0 | 38.5 | 0 | 0 |
| BareS | 0 | 0 | 0 | 2.59 | 0 | 9.27 | 0.22 | 0 |
| Water | 0 | 0 | 0 | 0 | 0 | 6.64 | 0 | 5.64 |

| | Crop | NeedleL | BroadL | Herb | Shrub | Artif | BareS | Water |
|---|---|---|---|---|---|---|---|---|
| Crop | 259.0 | 0 | 0 | 14.2 | 0 | 0 | 0 | 0 |
| NeedleL | 0 | 195.0 | 9.27 | 0.53 | 0 | 1.56 | 0 | 0 |
| BroadL | 0 | 5.01 | 83.9 | 0 | 0 | 0.9 | 0 | 0 |
| Herb | 19.0 | 1.13 | 0 | 291.0 | 2.24 | 0 | 0.78 | 0 |
| Shrub | 0 | 1.06 | 0.49 | 1.55 | 48.2 | 0 | 0 | 0 |
| Artif | 0.94 | 0 | 0 | 0 | 0 | 50.4 | 0 | 0 |
| BareS | 0 | 0 | 0 | 1.06 | 0 | 4.12 | 0.52 | 0 |
| Water | 0 | 0 | 0 | 0 | 0 | 3.03 | 0 | 5.64 |

| | Crop | NeedleL | BroadL | Herb | Shrub | Artif | BareS | Water |
|---|---|---|---|---|---|---|---|---|
| Crop | 267.0 | 2.34 | 2.43 | 1.49 | 0.49 | 0.2 | 0.22 | 0.15 |
| NeedleL | 1.12 | 189.0 | 0.93 | 3.1 | 2.34 | 2.69 | 0.29 | 0.58 |
| BroadL | 3.18 | 2.53 | 86.2 | 1.37 | 2.44 | 0.73 | 0.14 | 0.53 |
| Herb | 3.5 | 3.38 | 0.73 | 292.0 | 0.08 | 0.2 | 0.31 | 0.68 |
| Shrub | 1.43 | 0.17 | 1.4 | 3.57 | 38.4 | 1.73 | 0.04 | 0.86 |
| Artif | 0.43 | 1.84 | 0.72 | 3.49 | 2.66 | 50.4 | 0.12 | 0.67 |
| BareS | 0.05 | 1.43 | 0.18 | 3.24 | 3.0 | 1.93 | 0.1 | 0.83 |
| Water | 2.26 | 1.28 | 1.11 | 0 | 1.08 | 2.06 | 0.08 | 1.34 |

| | Crop | NeedleL | BroadL | Herb | Shrub | Artif | BareS | Water |
|---|---|---|---|---|---|---|---|---|
| Crop | 234.0 | 5.93 | 4.02 | 7.02 | 3.73 | 4.49 | 0.27 | 0.28 |
| NeedleL | 5.88 | 165.0 | 3.65 | 1.23 | 3.96 | 4.03 | 0 | 1.17 |
| BroadL | 8.32 | 9.52 | 65.8 | 6.23 | 5.52 | 5.78 | 0.33 | 1.31 |
| Herb | 2.5 | 4.86 | 1.23 | 277.0 | 3.53 | 5.57 | 0.07 | 0.68 |
| Shrub | 7.38 | 3.34 | 3.99 | 5.33 | 22.4 | 1.79 | 0.1 | 0.15 |
| Artif | 6.05 | 4.49 | 7.35 | 0.52 | 1.09 | 32.0 | 0.09 | 0.04 |
| BareS | 6.29 | 8.48 | 5.09 | 7.9 | 4.54 | 0.77 | 0.06 | 1.21 |
| Water | 8.45 | 0.23 | 2.52 | 2.49 | 5.7 | 5.55 | 0.38 | 0.8 |

| | Crop | NeedleL | BroadL | Herb | Shrub | Artif | BareS | Water |
|---|---|---|---|---|---|---|---|---|
| Crop | 266.0 | 1.8 | 1.74 | 2.07 | 1.53 | 1.68 | 0.1 | 0.41 |
| NeedleL | 1.76 | 189.0 | 1.61 | 2.25 | 1.39 | 1.42 | 0.18 | 0.56 |
| BroadL | 1.75 | 1.65 | 81.3 | 2.04 | 1.38 | 1.86 | 0.14 | 0.56 |
| Herb | 1.84 | 1.94 | 1.94 | 294.0 | 1.45 | 1.87 | 0.22 | 0.62 |
| Shrub | 1.65 | 1.95 | 1.81 | 1.98 | 39.6 | 1.51 | 0.14 | 0.54 |
| Artif | 1.83 | 1.95 | 1.85 | 1.91 | 1.8 | 48.1 | 0.18 | 0.46 |
| BareS | 1.82 | 1.73 | 1.78 | 2.31 | 1.63 | 1.71 | 0.14 | 0.51 |
| Water | 1.87 | 1.92 | 1.64 | 1.7 | 1.7 | 1.8 | 0.2 | 1.98 |

| | Crop | NeedleL | BroadL | Herb | Shrub | Artif | BareS | Water |
|---|---|---|---|---|---|---|---|---|
| Crop | 243.0 | 4.99 | 4.22 | 5.75 | 3.61 | 3.91 | 0.22 | 0.75 |
| NeedleL | 5.36 | 166.0 | 4.09 | 5.22 | 3.24 | 3.4 | 0.2 | 0.6 |
| BroadL | 5.35 | 5.14 | 64.3 | 5.22 | 3.42 | 3.49 | 0.21 | 0.68 |
| Herb | 5.19 | 4.88 | 4.66 | 272.0 | 3.31 | 3.71 | 0.15 | 0.6 |
| Shrub | 4.94 | 5.15 | 4.01 | 5.15 | 26.8 | 3.46 | 0.2 | 0.62 |
| Artif | 5.49 | 5.01 | 4.12 | 5.03 | 3.47 | 35.2 | 0.16 | 0.82 |
| BareS | 5.23 | 5.23 | 4.37 | 4.77 | 3.51 | 3.37 | 0.02 | 0.83 |
| Water | 4.48 | 5.24 | 3.94 | 4.92 | 3.14 | 3.41 | 0.14 | 0.74 |
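As a reading aid for the matrices of this appendix, the sketch below computes the overall accuracy and the per-class agreement of the first matrix. It assumes that rows are the map classes (i) and columns the virtual-truth classes (j), as the "(i,j)" of the appendix title suggests, and that the cells are expressed per thousand. The helper names are illustrative only.

```python
import numpy as np

classes = ["Crop", "NeedleL", "BroadL", "Herb", "Shrub", "Artif", "BareS", "Water"]

# First matrix of Appendix B, copied cell by cell.
matrix = np.array([
    [228.0, 0, 0, 38.6, 0, 0, 0, 0],
    [0, 183.0, 22.4, 1.37, 0, 3.64, 0, 0],
    [0, 13.6, 70.2, 0, 0, 1.89, 0, 0],
    [48.0, 2.54, 0, 262.0, 6.29, 0, 1.08, 0],
    [0, 2.54, 1.12, 3.95, 44.2, 0, 0, 0],
    [2.44, 0, 0, 0, 0, 38.5, 0, 0],
    [0, 0, 0, 2.59, 0, 9.27, 0.22, 0],
    [0, 0, 0, 0, 0, 6.64, 0, 5.64],
])


def overall_accuracy(m):
    """Share of the total mass that lies on the diagonal."""
    return np.trace(m) / m.sum()


def row_agreement(m):
    """Diagonal divided by row totals (per map class under the assumed layout)."""
    return np.diag(m) / m.sum(axis=1)


def column_agreement(m):
    """Diagonal divided by column totals (per truth class under the assumed layout)."""
    return np.diag(m) / m.sum(axis=0)


print(f"overall accuracy: {overall_accuracy(matrix):.3f}")
for name, r, c in zip(classes, row_agreement(matrix), column_agreement(matrix)):
    print(f"{name:8s} row-wise {r:.2f}  column-wise {c:.2f}")
```

The same three helpers apply unchanged to the other matrices of the appendix.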

Appendix C. Confusion Matrices (j,k) of Reference Datasets

| | Crop | NeedleL | BroadL | Herbac | Shrub | Artif | Bare S | Water |
|---|---|---|---|---|---|---|---|---|
| Crop | 265.0 | 0 | 0 | 13.8 | 0 | 0 | 0 | 0 |
| NeedleL | 0 | 202.0 | 0 | 0 | 0 | 0 | 0 | 0 |
| BroadL | 0 | 4.88 | 88.8 | 0 | 0 | 0 | 0 | 0 |
| Herbac | 9.28 | 0 | 0 | 299.0 | 0 | 0 | 0 | 0 |
| Shrub | 0 | 0 | 0 | 0 | 50.5 | 0 | 0 | 0 |
| Artif | 0 | 0 | 0 | 0 | 0 | 60.0 | 0 | 0 |
| Bare S | 0 | 0 | 0 | 0 | 0 | 0 | 1.3 | 0 |
| Water | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 5.64 |

| | Crop | NeedleL | BroadL | Herbac | Shrub | Artif | Bare S | Water |
|---|---|---|---|---|---|---|---|---|
| Crop | 252.0 | 3.82 | 3.58 | 4.13 | 3.78 | 4.11 | 3.71 | 4.01 |
| NeedleL | 2.83 | 181.0 | 2.75 | 2.86 | 2.99 | 3.12 | 3.25 | 2.71 |
| BroadL | 1.33 | 1.37 | 84.4 | 1.39 | 1.29 | 1.33 | 1.34 | 1.19 |
| Herbac | 4.65 | 4.23 | 4.1 | 278.0 | 4.32 | 4.3 | 4.28 | 4.25 |
| Shrub | 0.57 | 0.8 | 0.65 | 0.64 | 45.4 | 0.7 | 0.82 | 0.86 |
| Artif | 0.94 | 0.86 | 0.84 | 0.9 | 0.79 | 53.9 | 0.85 | 0.95 |
| Bare S | 0.02 | 0.04 | 0.03 | 0 | 0.01 | 0 | 1.16 | 0.04 |
| Water | 0.08 | 0.09 | 0.01 | 0.13 | 0.11 | 0.07 | 0.09 | 5.06 |

| | Crop | NeedleL | BroadL | Herbac | Shrub | Artif | Bare S | Water |
|---|---|---|---|---|---|---|---|---|
| Crop | 265.0 | 1.92 | 1.75 | 2.23 | 1.98 | 2.14 | 2.05 | 1.99 |
| NeedleL | 1.48 | 192.0 | 1.15 | 1.63 | 1.48 | 1.38 | 1.43 | 1.7 |
| BroadL | 0.78 | 0.69 | 88.7 | 0.75 | 0.68 | 0.63 | 0.72 | 0.71 |
| Herbac | 2.22 | 2.13 | 2.42 | 292.0 | 2.06 | 2.38 | 2.28 | 2.16 |
| Shrub | 0.29 | 0.36 | 0.31 | 0.36 | 48.2 | 0.34 | 0.2 | 0.41 |
| Artif | 0.4 | 0.39 | 0.35 | 0.43 | 0.45 | 57.3 | 0.32 | 0.39 |
| Bare S | 0 | 0.01 | 0 | 0.01 | 0.01 | 0.02 | 1.23 | 0.02 |
| Water | 0.03 | 0.06 | 0.08 | 0.01 | 0.05 | 0.05 | 0.07 | 5.29 |

| | Crop | NeedleL | BroadL | Herbac | Shrub | Artif | Bare S | Water |
|---|---|---|---|---|---|---|---|---|
| Crop | 273.0 | 0.92 | 0.66 | 0.88 | 0.86 | 0.85 | 0.86 | 0.81 |
| NeedleL | 0.5 | 198.0 | 0.67 | 0.58 | 0.58 | 0.63 | 0.61 | 0.59 |
| BroadL | 0.26 | 0.28 | 91.7 | 0.27 | 0.26 | 0.33 | 0.33 | 0.21 |
| Herbac | 1.01 | 0.98 | 0.85 | 302.0 | 0.9 | 0.93 | 0.91 | 0.91 |
| Shrub | 0.18 | 0.05 | 0.14 | 0.15 | 49.5 | 0.11 | 0.17 | 0.16 |
| Artif | 0.13 | 0.28 | 0.14 | 0.15 | 0.2 | 58.8 | 0.19 | 0.14 |
| Bare S | 0 | 0 | 0.01 | 0 | 0.01 | 0.01 | 1.27 | 0 |
| Water | 0.03 | 0.02 | 0.01 | 0.05 | 0 | 0.03 | 0.02 | 5.48 |

| | Crop | NeedleL | BroadL | Herbac | Shrub | Artif | Bare S | Water |
|---|---|---|---|---|---|---|---|---|
| Crop | 251.0 | 7.88 | 3.9 | 11.8 | 2.11 | 2.21 | 0.03 | 0.21 |
| NeedleL | 7.31 | 182.0 | 2.59 | 7.6 | 1.14 | 1.54 | 0.03 | 0.11 |
| BroadL | 2.93 | 2.07 | 84.3 | 3.18 | 0.49 | 0.64 | 0.03 | 0.07 |
| Herbac | 13.0 | 8.15 | 4.1 | 278.0 | 2.58 | 2.56 | 0.02 | 0.18 |
| Shrub | 1.43 | 1.06 | 0.49 | 1.65 | 45.5 | 0.32 | 0.02 | 0.03 |
| Artif | 1.71 | 1.41 | 0.63 | 2.12 | 0.26 | 53.8 | 0.01 | 0.06 |
| Bare S | 0.07 | 0.03 | 0.01 | 0.03 | 0.02 | 0 | 1.14 | 0 |
| Water | 0.16 | 0.08 | 0.07 | 0.17 | 0.04 | 0.02 | 0 | 5.1 |

| | Crop | NeedleL | BroadL | Herbac | Shrub | Artif | Bare S | Water |
|---|---|---|---|---|---|---|---|---|
| Crop | 265.0 | 4.25 | 2.06 | 5.55 | 1.04 | 1.05 | 0.02 | 0.12 |
| NeedleL | 3.56 | 192.0 | 1.52 | 3.63 | 0.54 | 0.84 | 0 | 0.07 |
| BroadL | 1.58 | 0.85 | 88.9 | 1.72 | 0.22 | 0.33 | 0.02 | 0.01 |
| Herbac | 6.14 | 4.55 | 1.69 | 293.0 | 1.0 | 1.13 | 0 | 0.14 |
| Shrub | 0.74 | 0.47 | 0.26 | 0.59 | 48.2 | 0.21 | 0 | 0.02 |
| Artif | 0.88 | 0.58 | 0.19 | 0.88 | 0.12 | 57.3 | 0.02 | 0.02 |
| Bare S | 0.01 | 0.02 | 0 | 0.01 | 0.01 | 0 | 1.25 | 0 |
| Water | 0.12 | 0.03 | 0.01 | 0.08 | 0 | 0.02 | 0 | 5.38 |

| | Crop | NeedleL | BroadL | Herbac | Shrub | Artif | Bare S | Water |
|---|---|---|---|---|---|---|---|---|
| Crop | 273.0 | 1.84 | 0.72 | 2.46 | 0.45 | 0.42 | 0 | 0.03 |
| NeedleL | 1.4 | 198.0 | 0.48 | 1.66 | 0.28 | 0.35 | 0.02 | 0 |
| BroadL | 0.55 | 0.51 | 91.8 | 0.6 | 0.11 | 0.09 | 0 | 0.01 |
| Herbac | 2.8 | 1.58 | 0.77 | 302.0 | 0.53 | 0.57 | 0.01 | 0.06 |
| Shrub | 0.25 | 0.15 | 0.06 | 0.29 | 49.6 | 0.06 | 0 | 0 |
| Artif | 0.34 | 0.2 | 0.14 | 0.39 | 0.05 | 58.9 | 0 | 0.01 |
| Bare S | 0 | 0 | 0 | 0.01 | 0 | 0 | 1.29 | 0 |
| Water | 0.01 | 0.01 | 0.01 | 0.04 | 0 | 0 | 0.01 | 5.56 |
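To illustrate how a map matrix (i,j) and a reference matrix (j,k) combine, the sketch below derives the confusion matrix that would be observed against an imperfect reference. It assumes that map errors and reference errors are conditionally independent given the truth, so that P(i,k) = Σ_j P(i,j) P(k|j). It further assumes that rows of the Appendix B matrices are map classes, and that rows of the Appendix C matrices are truth classes. The inputs are the first matrix of Appendix B and the first matrix of Appendix C; the function name is illustrative.

```python
import numpy as np

# First matrix of Appendix B: map class (rows) vs. truth class (columns).
map_vs_truth = np.array([
    [228.0, 0, 0, 38.6, 0, 0, 0, 0],
    [0, 183.0, 22.4, 1.37, 0, 3.64, 0, 0],
    [0, 13.6, 70.2, 0, 0, 1.89, 0, 0],
    [48.0, 2.54, 0, 262.0, 6.29, 0, 1.08, 0],
    [0, 2.54, 1.12, 3.95, 44.2, 0, 0, 0],
    [2.44, 0, 0, 0, 0, 38.5, 0, 0],
    [0, 0, 0, 2.59, 0, 9.27, 0.22, 0],
    [0, 0, 0, 0, 0, 6.64, 0, 5.64],
])

# First matrix of Appendix C: truth class (rows) vs. reference class (columns).
truth_vs_ref = np.diag([265.0, 202.0, 88.8, 299.0, 50.5, 60.0, 1.3, 5.64])
truth_vs_ref[0, 3] = 13.8   # Crop labelled as Herbac
truth_vs_ref[2, 1] = 4.88   # BroadL labelled as NeedleL
truth_vs_ref[3, 0] = 9.28   # Herbac labelled as Crop


def apparent_confusion(map_vs_truth, truth_vs_ref):
    """Map-vs-reference matrix under conditional independence given the truth."""
    # P(k | j): each truth row of the reference matrix rescaled to sum to 1.
    ref_given_truth = truth_vs_ref / truth_vs_ref.sum(axis=1, keepdims=True)
    # Sum over the truth class j: P(i, k) = sum_j P(i, j) * P(k | j).
    return map_vs_truth @ ref_given_truth


apparent = apparent_confusion(map_vs_truth, truth_vs_ref)
true_oa = np.trace(map_vs_truth) / map_vs_truth.sum()
apparent_oa = np.trace(apparent) / apparent.sum()
print(f"accuracy against the truth:     {true_oa:.3f}")
print(f"accuracy against the reference: {apparent_oa:.3f}")
```

When the independence assumption does not hold, as with the Cor pattern, this product no longer describes the observed matrix, and the apparent accuracy can move in either direction.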

References

1. Olofsson, P.; Foody, G.M.; Herold, M.; Stehman, S.V.; Woodcock, C.E.; Wulder, M.A. Good practices for estimating area and assessing accuracy of land change. Remote Sens. Environ. 2014, 148, 42–57.

2. Comber, A.; Fisher, P.; Brunsdon, C.; Khmag, A. Spatial analysis of remote sensing image classification accuracy. Remote Sens. Environ. 2012, 127, 237–246.

3. Carlotto, M.J. Effect of errors in ground truth on classification accuracy. Int. J. Remote Sens. 2009, 30, 4831–4849.

4. Congalton, R.G. A review of assessing the accuracy of classifications of remotely sensed data. Remote Sens. Environ. 1991, 37, 35–46.

5. Brannstrom, C.; Filippi, A. Remote classification of Cerrado (Savanna) and agricultural land covers in northeastern Brazil. Geocarto Int. 2008, 23, 109–134.

6. Foody, G.M. Assessing the accuracy of land cover change with imperfect ground reference data. Remote Sens. Environ. 2010, 114, 2271–2285.

7. Radoux, J.; Waldner, F.; Bogaert, P. How response designs and class proportions affect the accuracy of validation data. Remote Sens. 2020, 12, 257.

8. Van Coillie, F.M.; Gardin, S.; Anseel, F.; Duyck, W.; Verbeke, L.P.; De Wulf, R.R. Variability of operator performance in remote-sensing image interpretation: The importance of human and external factors. Int. J. Remote Sens. 2014, 35, 754–778.

9. Powell, R.; Matzke, N.; De Souza, C.; Clark, M.; Numata, I.; Hess, L.; Roberts, D. Sources of error in accuracy assessment of thematic land-cover maps in the Brazilian Amazon. Remote Sens. Environ. 2004, 90, 221–234.

10. See, L.; Comber, A.; Salk, C.; Fritz, S.; Van Der Velde, M.; Perger, C.; Schill, C.; McCallum, I.; Kraxner, F.; Obersteiner, M. Comparing the quality of crowdsourced data contributed by expert and non-experts. PLoS ONE 2013, 8, e69958.

11. Enøe, C.; Georgiadis, M.P.; Johnson, W.O. Estimation of sensitivity and specificity of diagnostic tests and disease prevalence when the true disease state is unknown. Prev. Vet. Med. 2000, 45, 61–81.

12. Espeland, M.A.; Handelman, S.L. Using latent class models to characterize and assess relative error in discrete measurements. Biometrics 1989, 45, 587–599.

13. Hui, S.L.; Zhou, X.H. Evaluation of diagnostic tests without gold standards. Stat. Methods Med. Res. 1998, 7, 354–370.

14. Sarmento, P.; Carrão, H.; Caetano, M.; Stehman, S. Incorporating reference classification uncertainty into the analysis of land cover accuracy. Int. J. Remote Sens. 2009, 30, 5309–5321.

15. Kapur, J.N. Maximum Entropy Models in Science and Engineering; John Wiley & Son: New Delhi, India, 1989.

16. Wu, N. The Maximum Entropy Method; Springer Science & Business Media: Berlin, Germany, 2012; Volume 32.

17. Fienberg, S.E. An iterative procedure for estimation in contingency tables. Ann. Math. Stat. 1970, 41, 907–917.

18. Barthélemy, J.; Suesse, T. mipfp: An R Package for Multidimensional Array Fitting and Simulating Multivariate Bernoulli Distributions. J. Stat. Softw. Code Snippets 2018, 86, 1–20.

19. Forthomme, D. Iterative Proportional Fitting for Python with N Dimensions. Github 2019, 1, 1–2. Available online: https://github.com/Dirguis/ipfn (accessed on 14 December 2020).

20. Radoux, J.; Bourdouxhe, A.; Coos, W.; Dufrêne, M.; Defourny, P. Improving Ecotope Segmentation by Combining Topographic and Spectral Data. Remote Sens. 2019, 11, 354.

21. Radoux, J.; Bogaert, P. Good practices for object-based accuracy assessment. Remote Sens. 2017, 9, 646.

22. Radoux, J.; Chomé, G.; Jacques, D.; Waldner, F.; Bellemans, N.; Matton, N.; Lamarche, C.; d’Andrimont, R.; Defourny, P. Sentinel-2’s potential for sub-pixel landscape feature detection. Remote Sens. 2016, 8, 488.

23. Foody, G.M. Sample size determination for image classification accuracy assessment and comparison. Int. J. Remote Sens. 2009, 30, 5273–5291.

24. Stehman, S.V. Estimating area from an accuracy assessment error matrix. Remote Sens. Environ. 2013, 132, 202–211.

25. Foody, G.M. Valuing map validation: The need for rigorous land cover map accuracy assessment in economic valuations of ecosystem services. Ecol. Econ. 2015, 111, 23–28.

26. Radoux, J.; Defourny, P. A quantitative assessment of boundaries in automated forest stand delineation using very high resolution imagery. Remote Sens. Environ. 2007, 110, 468–475.

| Maps / References | Trusted | | | | | |
|---|---|---|---|---|---|---|
| Trusted | 100 | 90.1 | 95.1 | 90.0 | 94.9 | 97.2 |
| | 79.8 | 72.2 | 76.0 | 72.1 | 75.9 | 77.5 |
| | 80.8 | 73.1 | 77.0 | 73.0 | 76.9 | 78.5 |
| | 83.2 | 75.3 | 79.3 | 75.3 | 79.2 | 81.4 |
| | 92.0 | 83.1 | 87.6 | 82.9 | 87.5 | 89.5 |
| | 92.4 | 83.4 | 87.9 | 83.2 | 87.8 | 89.8 |
| | 93.3 | 84.2 | 88.8 | 84.1 | 88.7 | 90.9 |

| Maps / References | Trusted | | | | | | |
|---|---|---|---|---|---|---|---|
| Trusted | 100 | 89.9 | 90.4 | 91.5 | 96.0 | 96.2 | 96.6 |
| | 79.8 | 89.8 | 73.2 | 73.4 | 77.2 | 77.4 | 77.3 |
| | 80.8 | 73.9 | 90.4 | 74.4 | 78.2 | 78.3 | 78.3 |
| | 83.2 | 75.3 | 75.6 | 91.7 | 80.1 | 80.2 | 81.0 |
| | 92.0 | 83.3 | 83.8 | 84.4 | 96.0 | 89.0 | 89.1 |
| | 92.4 | 83.7 | 84.1 | 84.7 | 89.1 | 96.2 | 89.4 |
| | 93.3 | 84.2 | 84.6 | 86.0 | 89.7 | 89.9 | 96.7 |

| Maps | References | RMSE with | RMSE with | RMSE () | RMSE () | Bias with | Bias |
|---|---|---|---|---|---|---|---|
| | | 1.82 | 0.74 | 2.58 | 3.81 | 0.34 | 3.08 |
| | Uni | 5.71 | 2.04 | 2.7 | 9.05 | −1.69 | −8.92 |
| | Uni | 3.25 | 1.87 | 2.73 | 4.66 | −1.46 | −4.29 |
| | Uni | 1.79 | 1.68 | 2.68 | 2.15 | −1.25 | −0.46 |
| | Prop | 5.11 | 2.16 | 2.69 | 9.31 | −1.75 | −8.54 |
| | Prop | 2.96 | 1.65 | 2.71 | 4.51 | −1.12 | −3.92 |
| | Prop | 1.73 | 1.57 | 2.63 | 2.15 | −0.95 | −2.17 |
| | Field | 1.52 | 1.46 | 2.63 | 2.83 | −0.42 | −3.79 |
| | | 2.96 | 1.78 | 4 | 10.11 | 1.52 | 10.62 |
| | Uni | 3.93 | 1.99 | 4.01 | 7.72 | −0.74 | −6.51 |
| | Uni | 2.63 | 1.91 | 3.9 | 4.19 | −0.85 | −4.13 |
| | Uni | 1.86 | 1.8 | 4.03 | 2.21 | −0.79 | −1.13 |
| | Prop | 3.33 | 1.81 | 4.06 | 7.9 | −0.6 | −9.63 |
| | Prop | 2.37 | 1.86 | 4.01 | 4.12 | −0.48 | −7.63 |
| | Prop | 1.73 | 1.72 | 4.02 | 2.15 | −0.54 | −3.88 |
| | Field | 1.8 | 1.77 | 3.95 | 2.74 | −0.32 | −1.76 |
| | | 1.83 | 0.79 | 2.78 | 4.05 | 0.43 | 3.84 |
| | Uni | 5.65 | 1.92 | 2.69 | 9.08 | −1.52 | −8.79 |
| | Uni | 3.28 | 1.71 | 2.78 | 4.72 | −1.26 | −3.54 |
| | Uni | 1.8 | 1.61 | 2.71 | 2.19 | −1.07 | −2.41 |
| | Prop | 5.09 | 1.86 | 2.63 | 9.17 | −1.41 | −9.91 |
| | Prop | 3.05 | 1.7 | 2.8 | 4.6 | −0.97 | −4.29 |
| | Prop | 1.77 | 1.56 | 2.64 | 2.08 | −0.81 | −2.04 |
| | Field | 1.54 | 1.4 | 2.68 | 2.75 | −0.4 | −2.66 |
| | | 2.8 | 1.65 | 3.91 | 9.58 | 1.39 | 11.05 |
| | Uni | 3.85 | 1.89 | 3.87 | 7.85 | −0.53 | −6.2 |
| | Uni | 2.61 | 1.91 | 3.92 | 4.21 | −0.74 | −3.07 |
| | Uni | 1.73 | 1.71 | 3.69 | 2.13 | −0.68 | −1.82 |
| | Prop | 3.18 | 1.7 | 3.94 | 7.95 | −0.33 | −10.45 |
| | Prop | 2.23 | 1.73 | 3.82 | 4.07 | −0.32 | −4.95 |
| | Prop | 1.75 | 1.75 | 4.01 | 2.13 | −0.5 | −3.07 |
| | Field | 1.77 | 1.73 | 3.86 | 2.67 | −0.24 | −3.57 |
| | | 1.85 | 0.69 | 2.49 | 3.44 | 0.21 | 3.69 |
| | Uni | 6.29 | 3.31 | 2.35 | 9.23 | −3.1 | −10.18 |
| | Uni | 3.77 | 2.81 | 2.54 | 4.75 | −2.58 | −3.93 |
| | Uni | 2.08 | 2.18 | 2.46 | 2.16 | −1.89 | −2.68 |
| | Prop | 5.61 | 3 | 2.43 | 9.29 | −2.75 | −11.18 |
| | Prop | 3.57 | 2.38 | 2.41 | 4.61 | −2.05 | −3.68 |
| | Prop | 2.05 | 1.86 | 2.56 | 2.09 | −1.47 | −1.81 |
| | Field | 1.68 | 1.52 | 2.51 | 2.62 | −0.37 | −4.56 |
| | | 2.66 | 1.42 | 3.67 | 8.54 | 1.13 | 6.42 |
| | Uni | 5.23 | 2.79 | 3.78 | 8.16 | −2.39 | −5.95 |
| | Uni | 3.32 | 2.46 | 3.74 | 4.31 | −1.96 | −4.33 |
| | Uni | 2.15 | 2.08 | 3.65 | 2.14 | −1.51 | −2.33 |
| | Prop | 4.68 | 2.55 | 3.76 | 8.02 | −2 | −7.33 |
| | Prop | 3.03 | 2.13 | 3.69 | 4.09 | −1.51 | −1.2 |
| | Prop | 2 | 1.89 | 3.78 | 2.06 | −1.15 | −0.58 |
| | Field | 2.02 | 1.89 | 3.87 | 2.28 | −0.55 | −2.33 |

| Original / Shifted | Crop | BroadL | NeedleL | Herbac | Shrub | Artif | Bare | Water | Tot |
|---|---|---|---|---|---|---|---|---|---|
| Crop | 27.01 | 0.13 | 0.03 | 0.79 | 0.01 | 0.12 | 0.00 | 0.01 | |
| BroadL | 0.12 | 17.68 | 0.37 | 1.02 | 0.67 | 0.21 | 0.00 | 0.01 | |
| NeedleL | 0.02 | 0.39 | 8.21 | 0.47 | 0.34 | 0.08 | 0.00 | 0.00 | |
| Herbac | 0.81 | 0.95 | 0.48 | 26.94 | 0.63 | 0.91 | 0.02 | 0.01 | |
| Shrub | 0.01 | 0.70 | 0.34 | 0.59 | 3.26 | 0.05 | 0.00 | 0.00 | |
| Artif | 0.12 | 0.22 | 0.09 | 0.90 | 0.04 | 4.58 | 0.00 | 0.01 | |
| Bare | 0.00 | 0.00 | 0.00 | 0.02 | 0.00 | 0.00 | 0.13 | 0.00 | |
| Water | 0.01 | 0.01 | 0.00 | 0.01 | 0.00 | 0.01 | 0.00 | 0.50 | |
| Accuracy | 0.96 | 0.88 | 0.86 | 0.88 | 0.66 | 0.77 | 0.87 | 0.95 | 88.30 |

| Original / Shifted | Crop | BroadL | NeedleL | Herbac | Shrub | Artif | Bare | Water | Tot |
|---|---|---|---|---|---|---|---|---|---|
| Crop | 26.82 | 0.16 | 0.04 | 0.92 | 0.02 | 0.14 | 0.00 | 0.01 | |
| BroadL | 0.15 | 17.17 | 0.55 | 1.15 | 0.77 | 0.26 | 0.00 | 0.01 | |
| NeedleL | 0.03 | 0.57 | 7.92 | 0.52 | 0.38 | 0.10 | 0.00 | 0.00 | |
| Herbac | 0.92 | 1.13 | 0.53 | 26.44 | 0.65 | 1.05 | 0.02 | 0.01 | |
| Shrub | 0.02 | 0.79 | 0.38 | 0.63 | 3.09 | 0.05 | 0.00 | 0.00 | |
| Artif | 0.15 | 0.25 | 0.10 | 1.05 | 0.05 | 4.35 | 0.00 | 0.01 | |
| Bare | 0.00 | 0.00 | 0.00 | 0.02 | 0.00 | 0.00 | 0.13 | 0.00 | |
| Water | 0.01 | 0.01 | 0.00 | 0.01 | 0.00 | 0.01 | 0.00 | 0.49 | |
| Accuracy | 0.95 | 0.86 | 0.83 | 0.86 | 0.62 | 0.73 | 0.85 | 0.93 | 86.41 |

| Original / Shifted | Crop | BroadL | NeedleL | Herbac | Shrub | Artif | Bare | Water | Tot |
|---|---|---|---|---|---|---|---|---|---|
| Crop | 27.03 | 0.13 | 0.03 | 0.76 | 0.01 | 0.11 | 0 | 0.01 | |
| BroadL | 0.12 | 17.73 | 0.4 | 0.98 | 0.62 | 0.2 | 0 | 0.01 | |
| NeedleL | 0.02 | 0.43 | 8.23 | 0.44 | 0.31 | 0.08 | 0 | 0 | |
| Herbac | 0.78 | 0.9 | 0.45 | 27.13 | 0.58 | 0.88 | 0.01 | 0.01 | |
| Shrub | 0.01 | 0.66 | 0.32 | 0.54 | 3.38 | 0.05 | 0 | 0 | |
| Artif | 0.12 | 0.21 | 0.08 | 0.86 | 0.04 | 4.63 | 0 | 0.01 | |
| Bare | 0 | 0 | 0 | 0.01 | 0 | 0 | 0.13 | 0 | |
| Water | 0.01 | 0.01 | 0 | 0.01 | 0 | 0.01 | 0 | 0.5 | |
| Accuracy | 0.96 | 0.88 | 0.86 | 0.88 | 0.68 | 0.78 | 0.87 | 0.94 | 88.76 |
| geo. errors | 0.04 | 0.12 | 0.14 | 0.12 | 0.32 | 0.22 | 0.13 | 0.06 | 11.24 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Radoux, J.; Bogaert, P. About the Pitfall of Erroneous Validation Data in the Estimation of Confusion Matrices. Remote Sens. 2020, 12, 4128. https://doi.org/10.3390/rs12244128