Comparing the Spatial Accuracy of Digital Surface Models from Four Unoccupied Aerial Systems: Photogrammetry Versus LiDAR

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Site

2.2. Ground Control Points (GCPs) and Checkpoints

2.3. Creation of the UAS-LiDAR Dataset

2.4. UAS Imagery—Data Collection

2.5. UAS Imagery—Data Processing Workflow

2.6. Creation of DSMs of Difference (DoDs)

3. Results

3.1. Accuracy of UAS DSMs Compared to Checkpoints and DSMs of Difference (DoDs)

3.1.1. DJI Inspire 1 (INS)

3.1.2. DJI Matrice 210 (MAT)

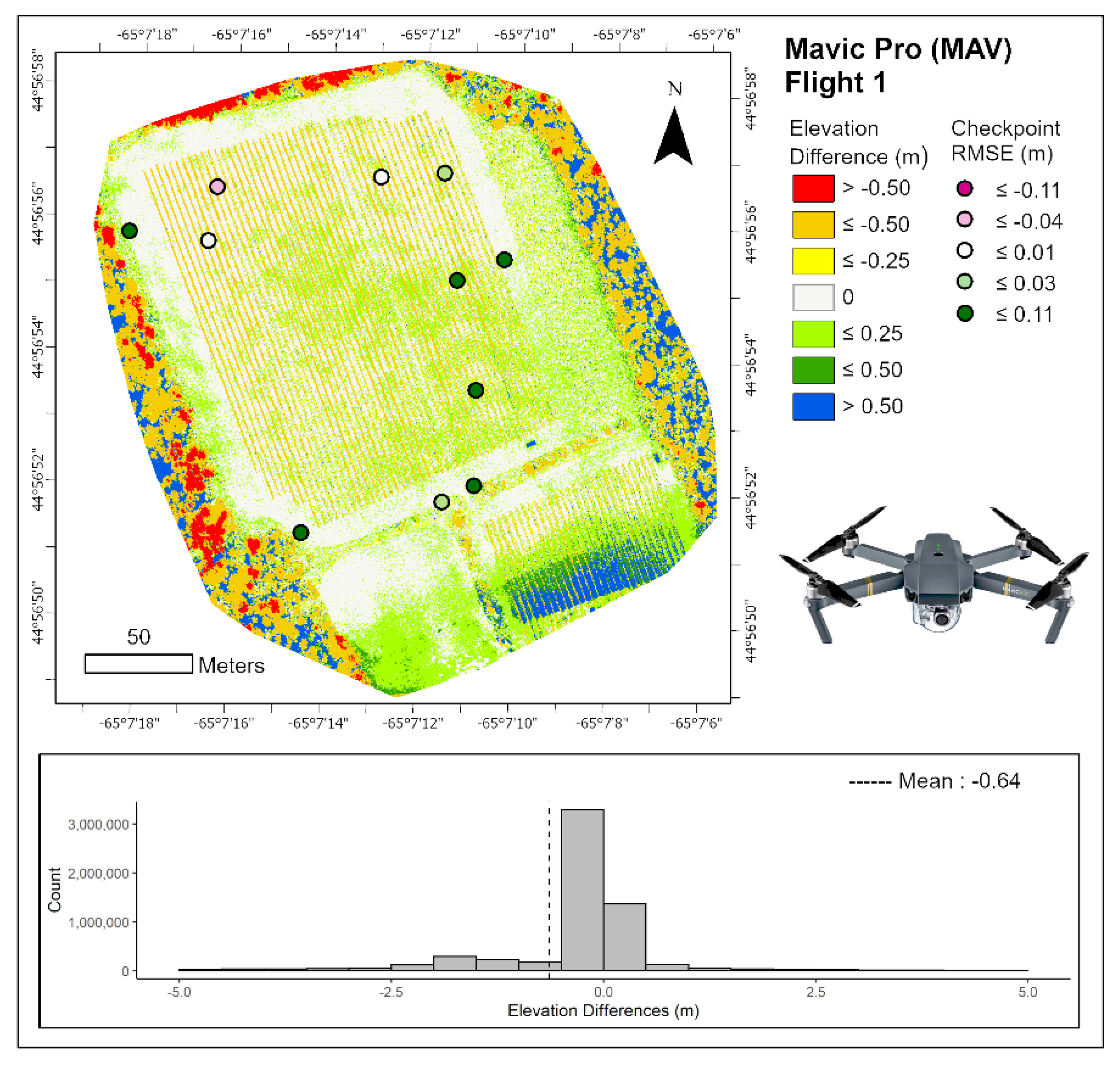

3.1.3. DJI Mavic Pro (MAV)

3.1.4. Phantom 4 Professional (P4P)

3.2. Differences across Land Covers

3.3. Summary of UAS Performance

4. Discussion

4.1. LiDAR Data Collection, Processing and Accuracy

4.2. Overall Accuracy of UAS DSMs Compared to Checkpoints and DSMs

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Unit | Specification | Value |
|---|---|---|
| DJI Matrice 600 Pro UAS | Weight (kg) | 10 |
| | Diameter (cm) | 170 |
| | Max. takeoff weight (kg) | 15.5 |
| | Max. payload (kg) | 5.5 |
| | Max. speed (km/h) | 65 |
| | Max. hover time (min) | 16 |
| | Manufacture year | 2017 |
| | Cost * | ~30,000 USD |
| Velodyne VLP-16 LiDAR | Weight (g) | 830 |
| | No. of returns | 2 (strongest and last) |
| | Accuracy (m) | ±0.03 |
| | Beam divergence (degrees) | 0.16875 |
| | Points/second | Up to 600,000 |
| | No. of lasers | 16 |
| | Laser wavelength (nm) | 903 |
| | Laser range (m) | 100 |
| | Horizontal field of view (degrees) | 360 |
| | Manufacture year | 2018 |
| | Cost * | ~20,000 USD |
| Applanix APX-15 IMU | Weight (g) | 60 |
| | GNSS channels | 336 |
| | Rate of data collection (Hz) | 200 |
| | Parameters | Position, Time, Velocity, Heading, Pitch, Roll |
| | Manufacture year | 2017 |
| | Cost * | ~15,000 USD |

| CP | Longitude (°) | Latitude (°) | Altitude (m) | LiDAR Error (m) |
|---|---|---|---|---|
| P1 | −65.11892131 | 44.94836999 | 54.75 | 0.03 |
| P2 | −65.11953122 | 44.94875508 | 61.09 | 0.05 |
| P3 | −65.1196785 | 44.9482073 | 59.96 | 0.03 |
| P4 | −65.11980599 | 44.94866469 | 63.44 | 0.05 |
| P5 | −65.11989563 | 44.94911118 | 63.24 | 0.05 |
| P6 | −65.11986297 | 44.94773879 | 57.41 | 0.01 |
| P7 | −65.12068701 | 44.94759533 | 57.64 | 0.08 |
| P9 | −65.12173866 | 44.94883572 | 72.04 | 0.02 |
| P10 | −65.1212735 | 44.94880432 | 71.54 | −0.02 |
| P11 | −65.12122784 | 44.94902887 | 72.39 | 0.03 |
| P12 | −65.12026788 | 44.94908824 | 66.85 | 0.04 |
| P20 | −65.11967805 | 44.94780874 | 57.09 | 0.01 |
| ME | | | | 0.03 |
| St. Dev. | | | | 0.02 |
| MAE | | | | 0.04 |
| RMSE | | | | 0.04 |
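
The four summary rows follow from the per-checkpoint LiDAR errors. The minimal Python sketch below recomputes them from the rounded values printed in the table, assuming the standard definitions of mean error (ME), mean absolute error (MAE) and root mean square error (RMSE). The table does not say which standard deviation was used; the sketch assumes the population form (`statistics.pstdev`) because it reproduces the printed 0.02 m, whereas the sample form (`statistics.stdev`) gives about 0.026 m.

```python
import math
import statistics

# LiDAR checkpoint errors (m) for P1-P7, P9-P12 and P20, copied from the table.
residuals = [0.03, 0.05, 0.03, 0.05, 0.05, 0.01, 0.08, 0.02, -0.02, 0.03, 0.04, 0.01]


def accuracy_stats(errors):
    """Return ME, standard deviation, MAE and RMSE for a list of vertical errors."""
    n = len(errors)
    me = sum(errors) / n                              # signed mean error (bias)
    sd = statistics.pstdev(errors)                    # population SD (assumed form)
    mae = sum(abs(e) for e in errors) / n             # mean absolute error
    rmse = math.sqrt(sum(e * e for e in errors) / n)  # root mean square error
    return {"ME": me, "St. Dev.": sd, "MAE": mae, "RMSE": rmse}


stats = accuracy_stats(residuals)
for name, value in stats.items():
    print(f"{name}: {value:.3f} m")
```

With these inputs ME, St. Dev. and RMSE round to the tabulated 0.03, 0.02 and 0.04 m. MAE comes out at 0.035 m, on the rounding boundary of the printed 0.04 m, presumably because the original errors carried more decimals than the table shows.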

| | Inspire 1 V1 (INS) | Matrice 210 (MAT) | Mavic Pro (MAV) | Phantom 4 Pro (P4P) |
|---|---|---|---|---|
| Platform | | | | |
| Dimensions (cm) | 43.7 × 30.2 × 45.3 | 88.7 × 88.0 × 37.8 | 19.8 × 8.3 × 8.3 | 25.0 × 25.0 × 20.0 |
| Weight (g) | 3060 ~ | 4570 | 734 | 1388 |
| Max Flight Time (min) | 18 | 38 | 27 | 30 |
| No. Batteries | 1 | 2 | 1 | 1 |
| Sensor (CMOS) | 1/2.3″ | 4/3″ | 1/2.3″ | 1″ |
| Effective Pixels (MP) | 12.4 | 20.8 ^ | 12.35 | 20 |
| GSD at 70 m (cm/px) | 3.06 | 1.55 | 2.3 | 1.91 |
| GNSS | GPS + GLONASS | GPS + GLONASS | GPS + GLONASS | GPS + GLONASS |
| GNSS Vertical Accuracy (m) | ±0.5 | ±0.5 * | ±0.5 | ±0.5 |
| GNSS Horizontal Accuracy (m) | ±2.5 | ±1.5 * | ±1.5 | ±1.5 |
| Manufacture Year | 2014 | 2017 | 2017 | 2016 |
| Cost | ~2000 USD | ~7000 USD | ~1000 USD | ~1500 USD |
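
GSD values like these are normally derived from the pinhole-camera relation GSD = (sensor width × flying height) / (focal length × image width). The sketch below evaluates that relation for the Phantom 4 Pro at 70 m. The camera parameters it uses (13.2 mm sensor width, 8.8 mm focal length, 5472 px image width) are manufacturer specifications assumed here, not values taken from the table.

```python
def ground_sampling_distance_cm(sensor_width_mm, focal_length_mm, image_width_px, altitude_m):
    """Nadir ground sampling distance in cm/px from the pinhole-camera relation."""
    # sensor width / focal length is unitless (mm / mm), so the result is metres per pixel;
    # multiply by 100 to convert to centimetres per pixel.
    gsd_m = (sensor_width_mm * altitude_m) / (focal_length_mm * image_width_px)
    return gsd_m * 100.0


# Assumed Phantom 4 Pro camera parameters (not listed in the table above).
p4p_gsd = ground_sampling_distance_cm(13.2, 8.8, 5472, 70.0)
print(f"P4P GSD at 70 m: {p4p_gsd:.2f} cm/px")
```

This gives about 1.92 cm/px, within 0.01 cm/px of the 1.91 cm/px listed. Because GSD scales linearly with flying height, the same camera flown at 35 m would resolve roughly half that distance per pixel.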

| UAS | Flight # | Flight Start Time | Flight Order | Duration | No. Images | Point Cloud Density (points/m²) | ME (m) | St. Dev. (m) | MAE (m) | RMSE (m) |
|---|---|---|---|---|---|---|---|---|---|---|
| INS | 1 | 10:15 | 2 | 9 min 32 s | 117 | 434.03 | 0.01 | 0.03 | 0.02 | 0.03 |
| | 2 | 11:50 | 7 | 9 min 36 s | 117 | 452.69 | 0.02 | 0.03 | 0.02 | 0.03 |
| | 3 | 12:45 | 11 | 9 min 30 s | 117 | 434.03 | 0.02 | 0.02 | 0.03 | 0.03 |
| MAT | 1 | 10:50 | 4 | 11 min 6 s | 216 | 1371.70 | 0.003 | 0.07 | 0.05 | 0.06 |
| | 2 | 11:35 | 6 | 9 min 42 s | 160 | 1275.51 | −0.03 | 0.25 | 0.11 | 0.24 |
| | 3 | 12:30 | 10 | 9 min 43 s | 160 | 1371.74 | 0.02 | 0.08 | 0.07 | 0.08 |
| MAV | 1 | 10:30 | 3 | 12 min 5 s | 177 | 771.60 | 0.04 | 0.05 | 0.05 | 0.06 |
| | 2 | 12:00 | 8 | 11 min 34 s | 176 | 730.60 | 0.04 | 0.05 | 0.05 | 0.07 |
| | 3 | 12:15 | 9 | 11 min 54 s | 176 | 730.46 | 0.05 | 0.06 | 0.05 | 0.07 |
| P4P | 1 | 10:00 | 1 | 8 min 10 s | 146 | 1111.11 | 0.02 | 0.03 | 0.03 | 0.04 |
| | 2 | 11:17 | 5 | 10 min 35 s | 160 | 1040.58 | 0.01 | 0.03 | 0.03 | 0.04 |
| | 3 | 13:00 | 12 | 10 min 36 s | 160 | 1040.58 | 0.007 | 0.03 | 0.02 | 0.03 |
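
Assuming the point cloud densities are expressed in points per square metre, each value can be read as an equivalent mean point spacing: on a regular grid with spacing s, density = 1/s², so s = 1/√density. The short sketch below applies that conversion to the first flight of each UAS; the unit is the only assumption it makes.

```python
import math


def mean_point_spacing_cm(density_pts_per_m2):
    """Spacing (cm) of a square grid that has the given density in points per m^2."""
    # Each point on a square grid of spacing s (m) occupies s^2 m^2, so density = 1 / s^2.
    return 100.0 / math.sqrt(density_pts_per_m2)


# Densities copied from the flight table (units assumed to be points per m^2).
first_flights = [("INS 1", 434.03), ("MAT 1", 1371.70), ("MAV 1", 771.60), ("P4P 1", 1111.11)]
for label, density in first_flights:
    print(f"{label}: {density:8.2f} points/m^2 -> ~{mean_point_spacing_cm(density):.1f} cm spacing")
```

Under that assumption, 1111.11 points/m² (P4P flight 1) corresponds to a 3.0 cm spacing and 434.03 points/m² (INS flight 1) to about 4.8 cm.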

| UAS and Flight # | ME (m) | St. Dev. (m) | MAE (m) | RMSE (m) |
|---|---|---|---|---|
| INS 1 | −0.66 | 2.37 | 0.95 | 2.09 |
| MAT 1 | −0.80 | 2.43 | 1.02 | 1.97 |
| MAV 1 | −0.64 | 2.33 | 0.94 | 2.03 |
| P4P 3 | −0.79 | 2.58 | 1.03 | 2.04 |
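
A DSM of difference (DoD) is a cell-by-cell subtraction of two co-registered elevation grids. The pure-Python sketch below differences a UAS DSM against a LiDAR DSM and summarizes the valid cells with the same four statistics reported above. The toy grid values, the hypothetical `NODATA` sentinel and the UAS-minus-LiDAR sign convention are illustrative assumptions; real DSMs would first need to be resampled to a common grid.

```python
import math

NODATA = -9999.0  # hypothetical no-data sentinel; real rasters declare their own


def dsm_of_difference(uas_dsm, lidar_dsm, nodata=NODATA):
    """Cell-by-cell UAS minus LiDAR difference for two grids of identical shape."""
    # Assumed sign convention: negative cells mean the UAS surface lies below the LiDAR surface.
    return [
        [u - l if u != nodata and l != nodata else nodata for u, l in zip(u_row, l_row)]
        for u_row, l_row in zip(uas_dsm, lidar_dsm)
    ]


def summarize(dod, nodata=NODATA):
    """ME, population SD, MAE and RMSE over all valid DoD cells."""
    values = [v for row in dod for v in row if v != nodata]
    n = len(values)
    me = sum(values) / n
    sd = math.sqrt(sum((v - me) ** 2 for v in values) / n)
    mae = sum(abs(v) for v in values) / n
    rmse = math.sqrt(sum(v * v for v in values) / n)
    return me, sd, mae, rmse


# Toy 3 x 3 elevation grids (m), purely illustrative.
uas = [[60.0, 60.1, 59.9], [61.0, 58.0, 60.2], [60.5, NODATA, 60.4]]
lidar = [[60.1, 60.0, 60.0], [61.0, 61.0, 60.1], [60.5, 60.3, 60.4]]
dod = dsm_of_difference(uas, lidar)
print(summarize(dod))
```

The single large negative cell in the toy grid dominates the RMSE, which mirrors how a few poorly reconstructed cells (for example in forest canopy) can inflate whole-site DoD statistics.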

| Land Cover | UAS and Flight # | ME (m) | St. Dev. (m) | MAE (m) | RMSE (m) |
|---|---|---|---|---|---|
| Vines | INS 1 | −0.64 | 0.79 | 0.45 | 0.77 |
| | MAT 1 | −0.77 | 0.83 | 0.54 | 0.90 |
| | MAV 1 | −0.63 | 0.83 | 0.54 | 0.91 |
| | P4P 3 | −0.67 | 0.79 | 0.37 | 0.65 |
| Bare Soil | INS 1 | −0.02 | 0.44 | 0.12 | 0.54 |
| | MAT 1 | −0.07 | 0.43 | 0.10 | 0.58 |
| | MAV 1 | −0.01 | 0.42 | 0.11 | 0.59 |
| | P4P 3 | −0.07 | 0.42 | 0.08 | 0.46 |
| Dirt Road | INS 1 | 0.03 | 0.11 | 0.09 | 0.14 |
| | MAT 1 | −0.17 | 0.21 | 0.05 | 0.08 |
| | MAV 1 | 0.03 | 0.13 | 0.06 | 0.09 |
| | P4P 3 | −0.05 | 0.06 | 0.07 | 0.09 |
| Long Grass | INS 1 | −0.37 | 1.42 | 0.39 | 1.27 |
| | MAT 1 | −0.39 | 1.42 | 0.44 | 1.39 |
| | MAV 1 | −0.01 | 0.42 | 0.11 | 0.59 |
| | P4P 3 | −0.44 | 1.46 | 0.38 | 1.21 |
| Forest | INS 1 | −2.47 | 4.81 | 3.50 | 5.14 |
| | MAT 1 | −2.99 | 4.76 | 3.89 | 5.46 |
| | MAV 1 | −2.48 | 4.71 | 4.28 | 5.88 |
| | P4P 3 | −3.04 | 5.14 | 2.81 | 4.62 |
| Mowed Grass | INS 1 | −0.03 | 0.13 | 0.16 | 0.41 |
| | MAT 1 | −0.06 | 0.14 | 0.17 | 0.47 |
| | MAV 1 | −0.01 | 0.17 | 0.17 | 0.45 |
| | P4P 3 | −0.04 | 0.12 | 0.14 | 0.36 |
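
Per-land-cover statistics like these can be obtained by grouping DoD cells by a class label before summarizing them. The sketch below assumes every DoD value already carries a land-cover label (for example, from digitized polygons); the difference values and labels are hypothetical, and only ME, RMSE and the cell count are returned.

```python
import math
from collections import defaultdict


def stats_by_class(differences, labels):
    """Return {land cover: (ME, RMSE, n)} for DoD values grouped by their label."""
    groups = defaultdict(list)
    for value, label in zip(differences, labels):
        groups[label].append(value)
    summary = {}
    for label, values in groups.items():
        n = len(values)
        me = sum(values) / n
        rmse = math.sqrt(sum(v * v for v in values) / n)
        summary[label] = (me, rmse, n)
    return summary


# Hypothetical DoD values (m) and land-cover labels, for illustration only.
diffs = [-0.5, -0.7, 0.02, -0.04, -3.0, -2.0]
labels = ["Vines", "Vines", "Dirt Road", "Dirt Road", "Forest", "Forest"]
for cover, (me, rmse, n) in stats_by_class(diffs, labels).items():
    print(f"{cover}: ME={me:.2f} m, RMSE={rmse:.2f} m, n={n}")
```

Keeping the grouping separate from the statistics makes it easy to add further classes without changing the summary code.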

| | INS | MAT | MAV | P4P |
|---|---|---|---|---|
| Physical Parameters | | | | |
| Dimensions | 3 | 4 | 1 | 2 |
| Weight | 3 | 4 | 1 | 2 |
| Max. Flight Time | 4 | 1 | 3 | 2 |
| No. Batteries | 1 | 2 | 1 | 1 |
| GSD | 4 | 1 | 3 | 2 |
| Subtotal | 15 | 12 | 9 | 9 |
| Statistics | | | | |
| Checkpoints | 1 | 2 | 2 | 1 |
| DoD | 4 | 1 | 2 | 3 |
| Vines | 2 | 3 | 4 | 1 |
| Bare Soil | 2 | 3 | 4 | 1 |
| Dirt Road | 3 | 1 | 2 | 2 |
| Long Grass | 3 | 4 | 1 | 2 |
| Forest | 2 | 3 | 4 | 1 |
| Mowed Grass | 2 | 4 | 3 | 1 |
| Subtotal | 19 | 21 | 22 | 12 |
| Total | 34 | 33 | 31 | 21 |
| Overall Ranking | 4 | 3 | 2 | 1 |
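
The Total row is the sum of the two subtotals, and the overall ranking orders the platforms by ascending total, so the lowest total ranks first. The sketch below reproduces that arithmetic from the per-criterion values in the table (the No. Batteries row is copied as printed). It does not handle tied totals, which would need an explicit tie-breaking rule.

```python
# Per-criterion values copied from the ranking table (lower is better).
physical = {
    # Dimensions, Weight, Max. Flight Time, No. Batteries, GSD
    "INS": [3, 3, 4, 1, 4],
    "MAT": [4, 4, 1, 2, 1],
    "MAV": [1, 1, 3, 1, 3],
    "P4P": [2, 2, 2, 1, 2],
}
accuracy = {
    # Checkpoints, DoD, Vines, Bare Soil, Dirt Road, Long Grass, Forest, Mowed Grass
    "INS": [1, 4, 2, 2, 3, 3, 2, 2],
    "MAT": [2, 1, 3, 3, 1, 4, 3, 4],
    "MAV": [2, 2, 4, 4, 2, 1, 4, 3],
    "P4P": [1, 3, 1, 1, 2, 2, 1, 1],
}


def overall_ranking(*rank_tables):
    """Sum every criterion per UAS, then rank by ascending total (ties not handled)."""
    totals = {uas: sum(sum(table[uas]) for table in rank_tables) for uas in rank_tables[0]}
    order = sorted(totals, key=totals.get)
    return totals, {uas: position for position, uas in enumerate(order, start=1)}


totals, ranking = overall_ranking(physical, accuracy)
print(totals)
print(ranking)
```

Because every criterion carries equal weight in this scheme, a platform that wins many accuracy criteria (P4P) can outrank one that is best on a few physical criteria (MAT).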

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Rogers, S.R.; Manning, I.; Livingstone, W. Comparing the Spatial Accuracy of Digital Surface Models from Four Unoccupied Aerial Systems: Photogrammetry Versus LiDAR. Remote Sens. 2020, 12, 2806. https://doi.org/10.3390/rs12172806
