Deep Semantic Segmentation of Center Pivot Irrigation Systems from Remotely Sensed Data

Abstract

1. Introduction

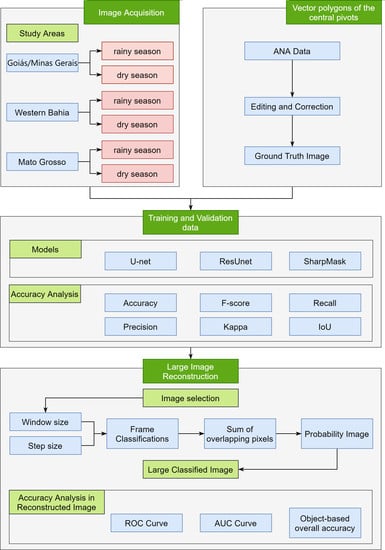

2. Materials and Methods

2.1. Study Area

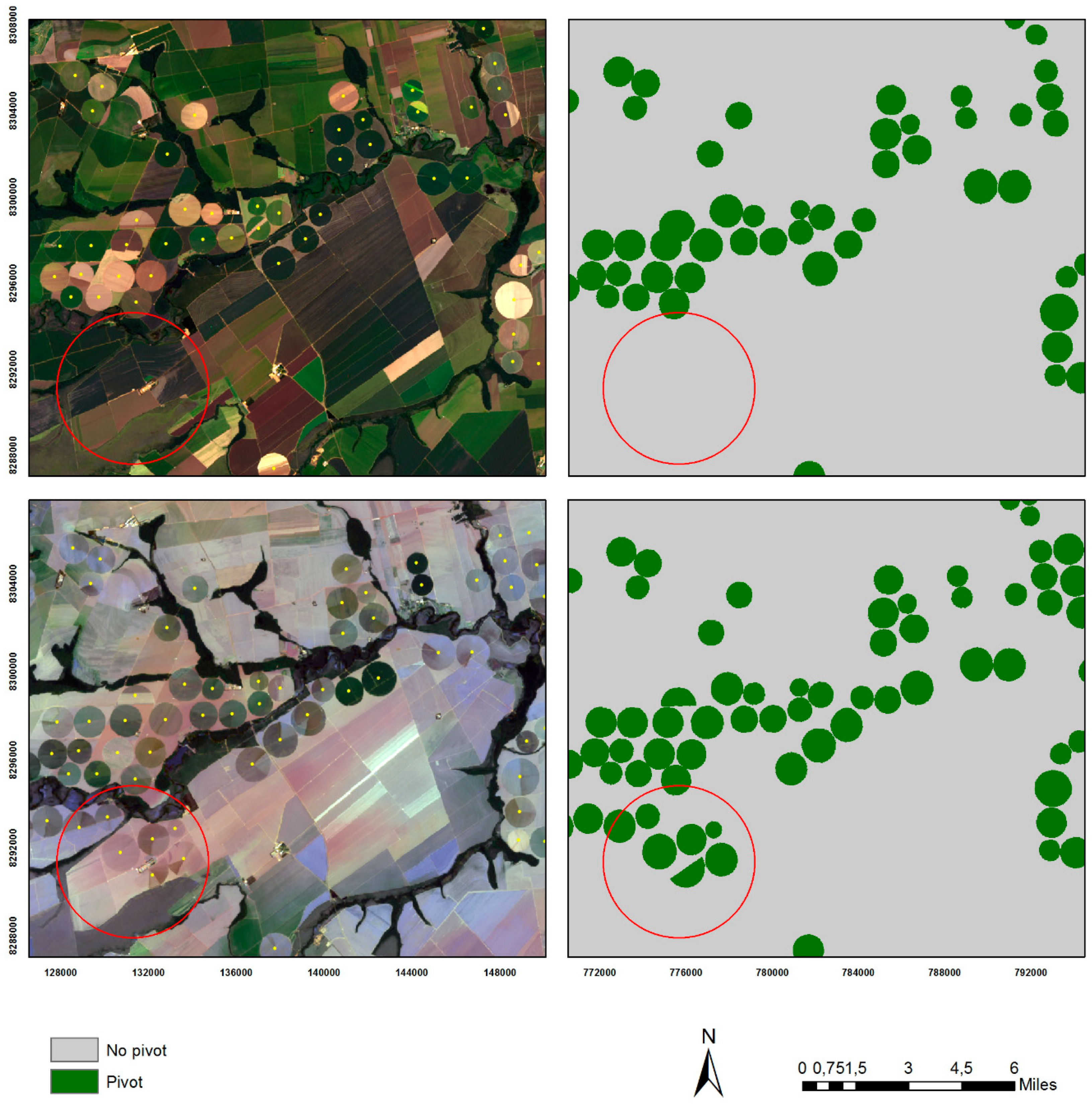

2.2. Dataset and Training Samples

2.3. Deep Learning Models

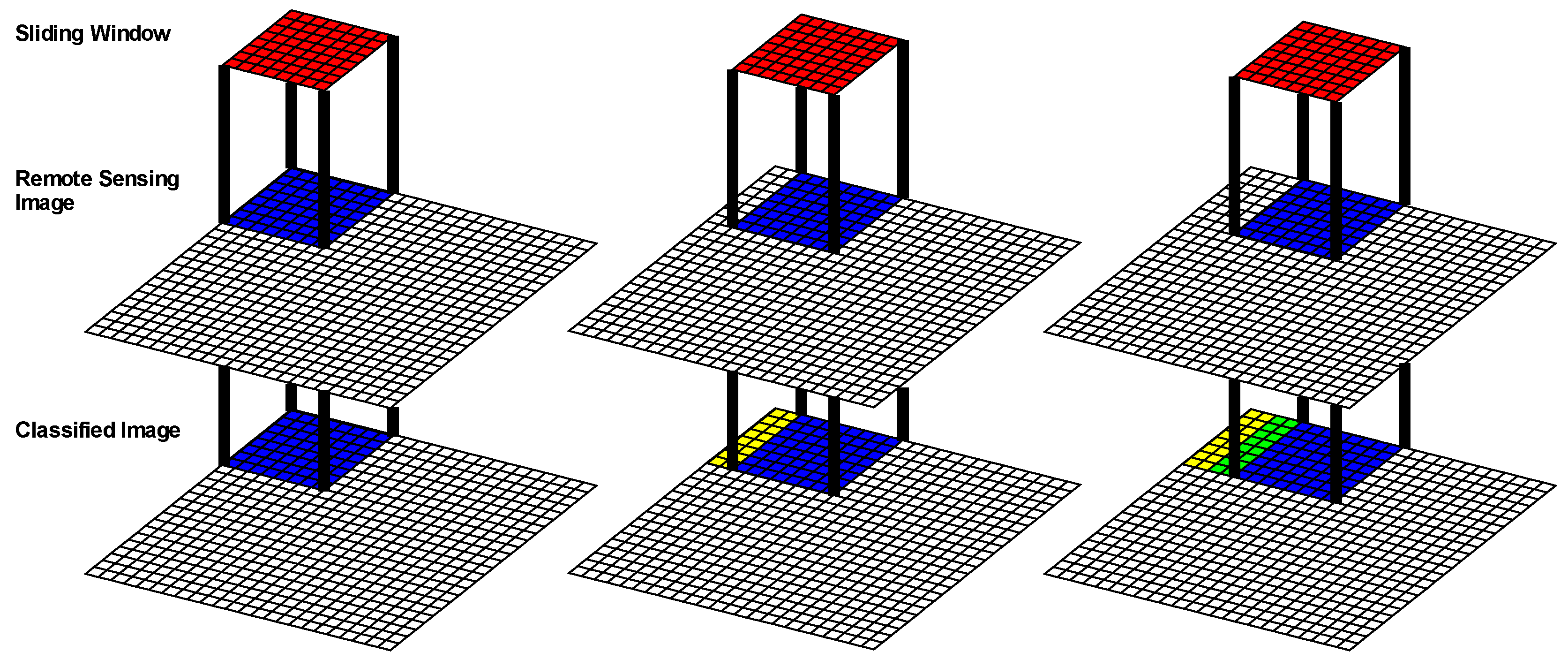

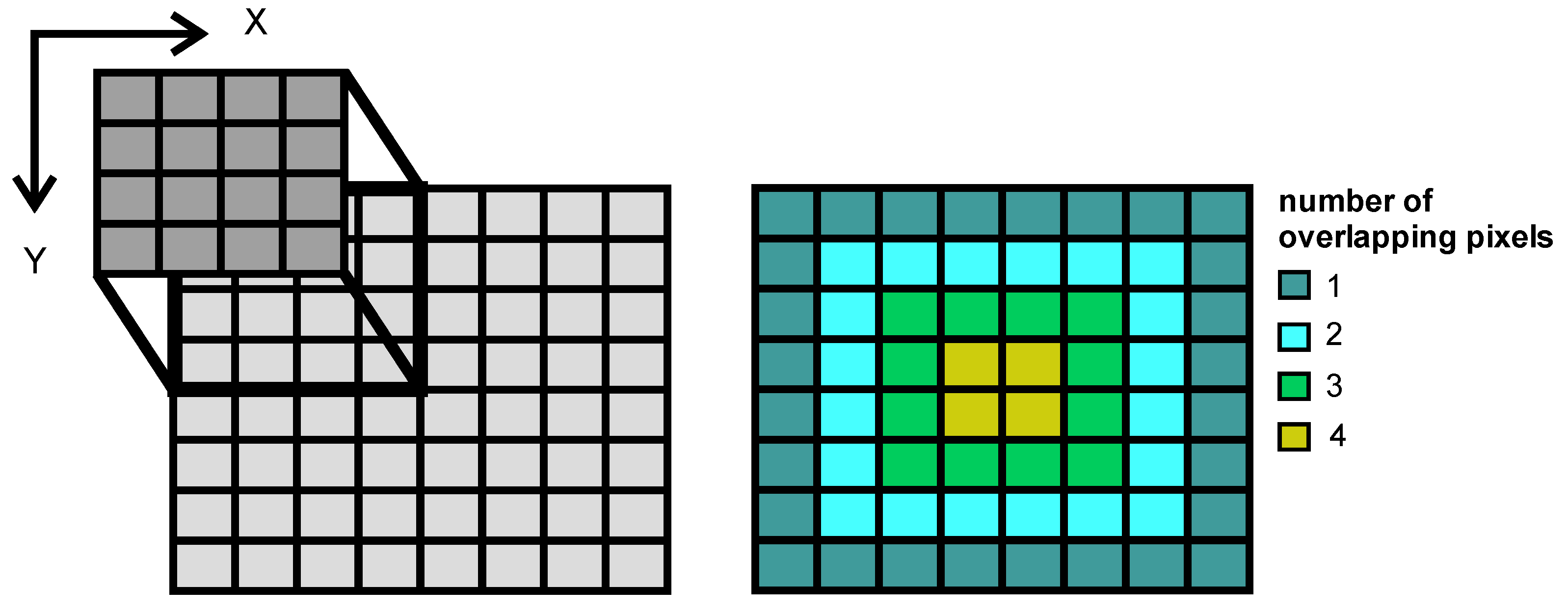

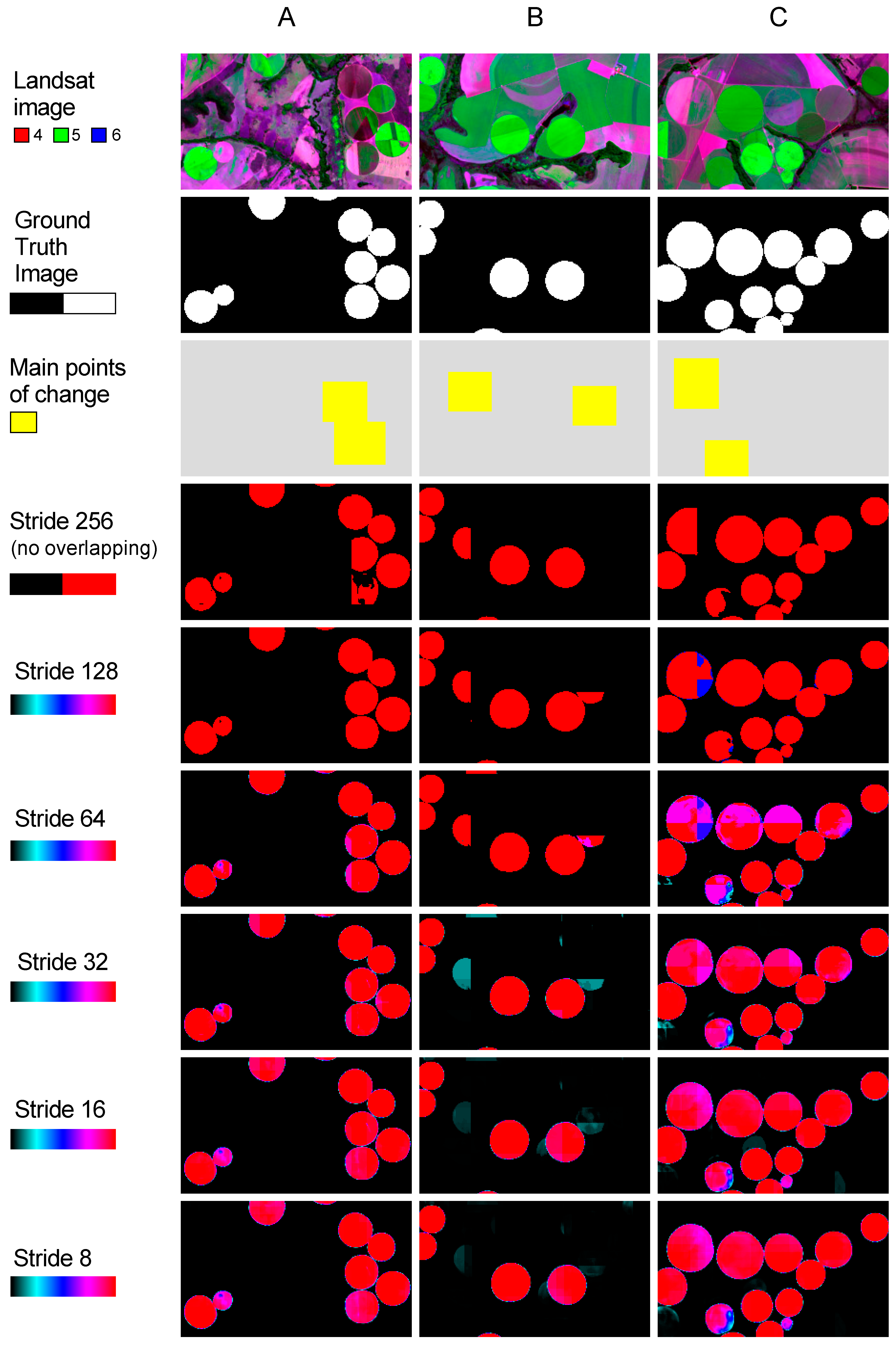

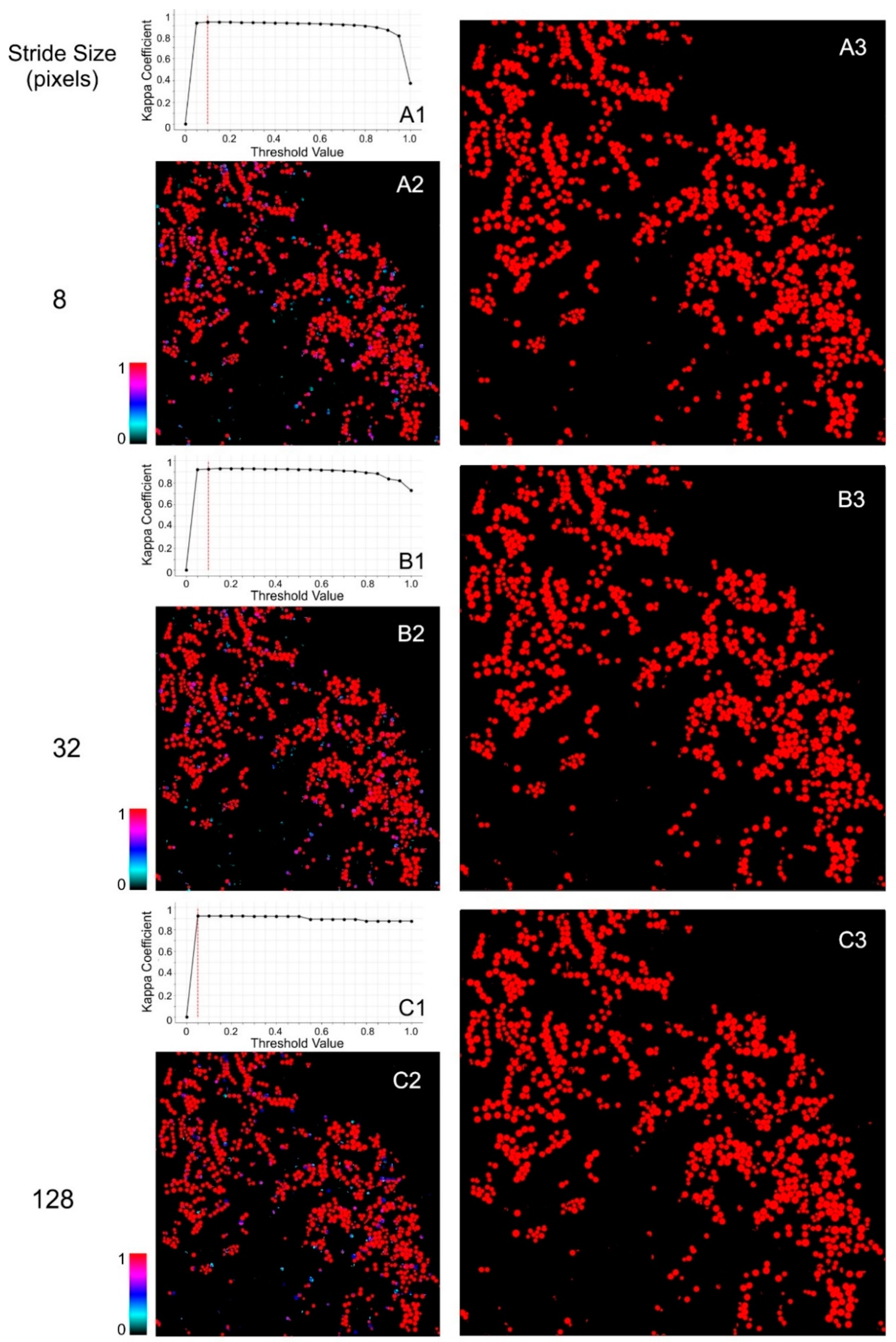

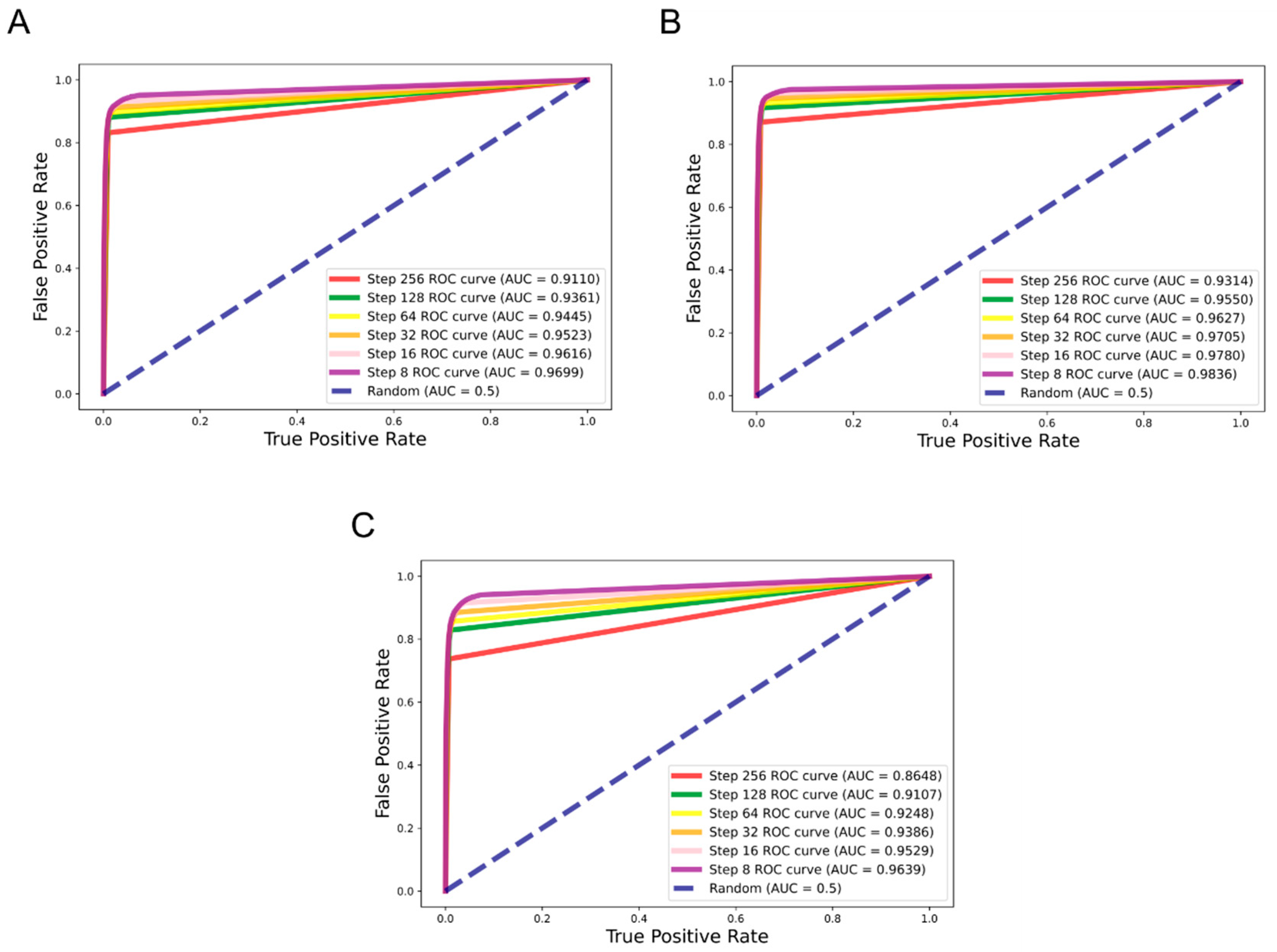

2.4. Classified Image Reconstruction for Large Scenes
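
Classifying a full Landsat scene, which is larger than the network input, means reconstructing one map from many tile predictions. A common way to do this is to classify overlapping windows and average the per-pixel pivot probabilities wherever windows overlap. The sketch below uses that approach as an assumption, not as the paper's documented procedure. The tile size, the stride, and the `predict_tile` callable are hypothetical; `predict_tile` stands in for a trained U-Net, Deep ResUnet, or SharpMask model.

```python
"""Sketch: overlapping sliding-window reconstruction of a large classified scene.

Assumptions (not from the paper): square tiles of size `tile`, a fixed
`stride` smaller than `tile`, and per-pixel probabilities averaged where
tiles overlap. `predict_tile` is a hypothetical stand-in for a trained CNN.
"""
from typing import Callable, List

Grid = List[List[float]]


def tile_starts(length: int, tile: int, stride: int) -> List[int]:
    """Start offsets along one axis so that tiles cover [0, length)."""
    if length <= tile:
        return [0]
    starts = list(range(0, length - tile + 1, stride))
    if starts[-1] + tile < length:  # add a final tile flush with the edge
        starts.append(length - tile)
    return starts


def reconstruct(image: Grid, tile: int, stride: int,
                predict_tile: Callable[[Grid], Grid]) -> Grid:
    """Run predict_tile over overlapping windows and average the overlaps."""
    rows, cols = len(image), len(image[0])
    acc = [[0.0] * cols for _ in range(rows)]   # summed probabilities
    hits = [[0] * cols for _ in range(rows)]    # how many tiles covered a pixel
    for r0 in tile_starts(rows, tile, stride):
        for c0 in tile_starts(cols, tile, stride):
            window = [row[c0:c0 + tile] for row in image[r0:r0 + tile]]
            probs = predict_tile(window)
            for i, prow in enumerate(probs):
                for j, p in enumerate(prow):
                    acc[r0 + i][c0 + j] += p
                    hits[r0 + i][c0 + j] += 1
    # Every pixel is covered at least once, so the division is safe.
    return [[a / h for a, h in zip(arow, hrow)]
            for arow, hrow in zip(acc, hits)]
```

Thresholding the averaged probability (for example at 0.5) would then give the final pivot/non-pivot map. Averaging overlapping predictions is one way to soften artifacts at tile borders, where the network sees less spatial context.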

2.5. Season Analysis

2.6. Accuracy Assessment

3. Results

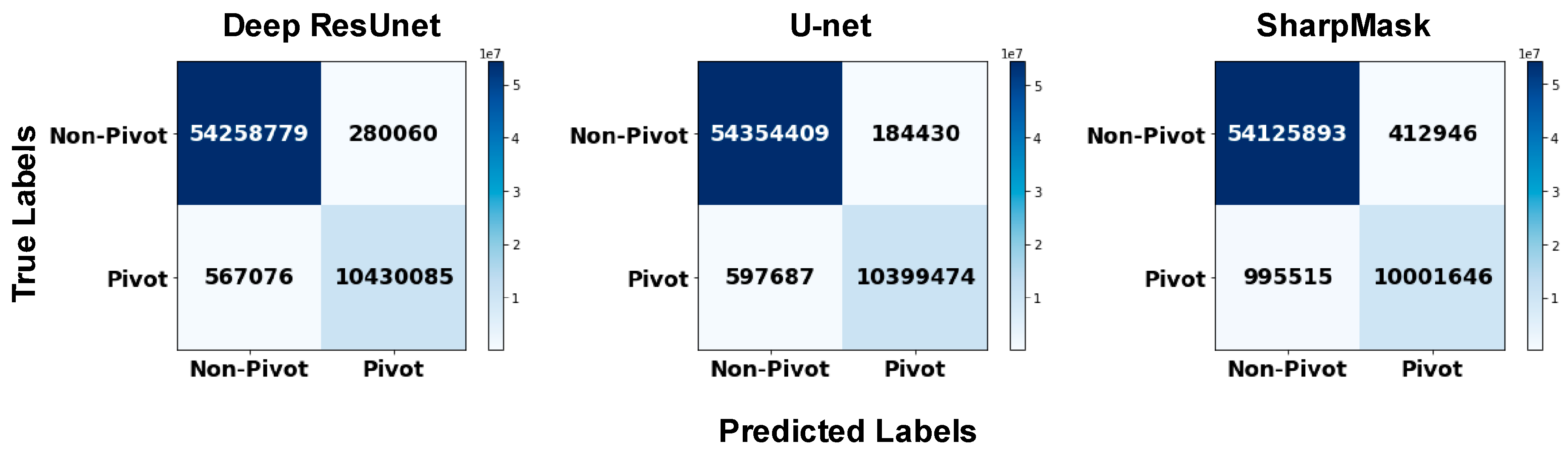

3.1. Comparison of CNN Architectures on the Validation Samples

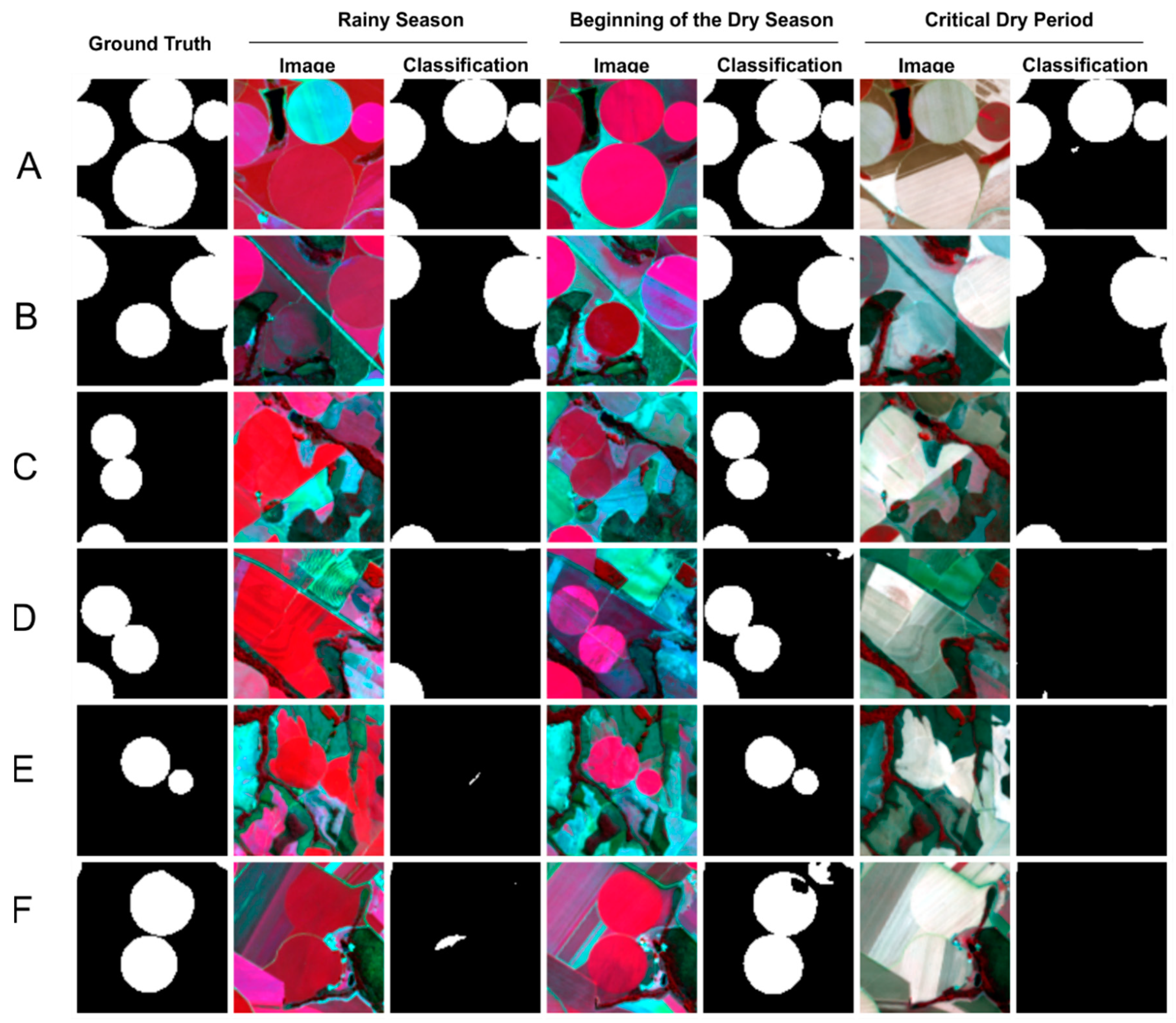

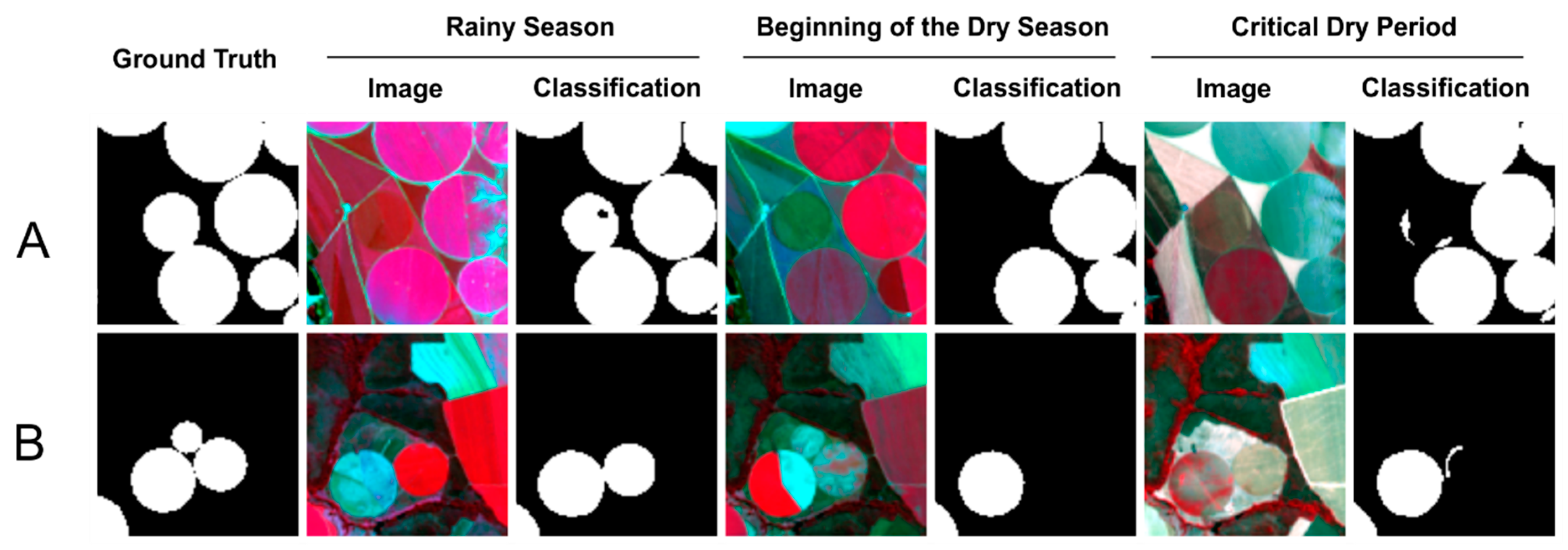

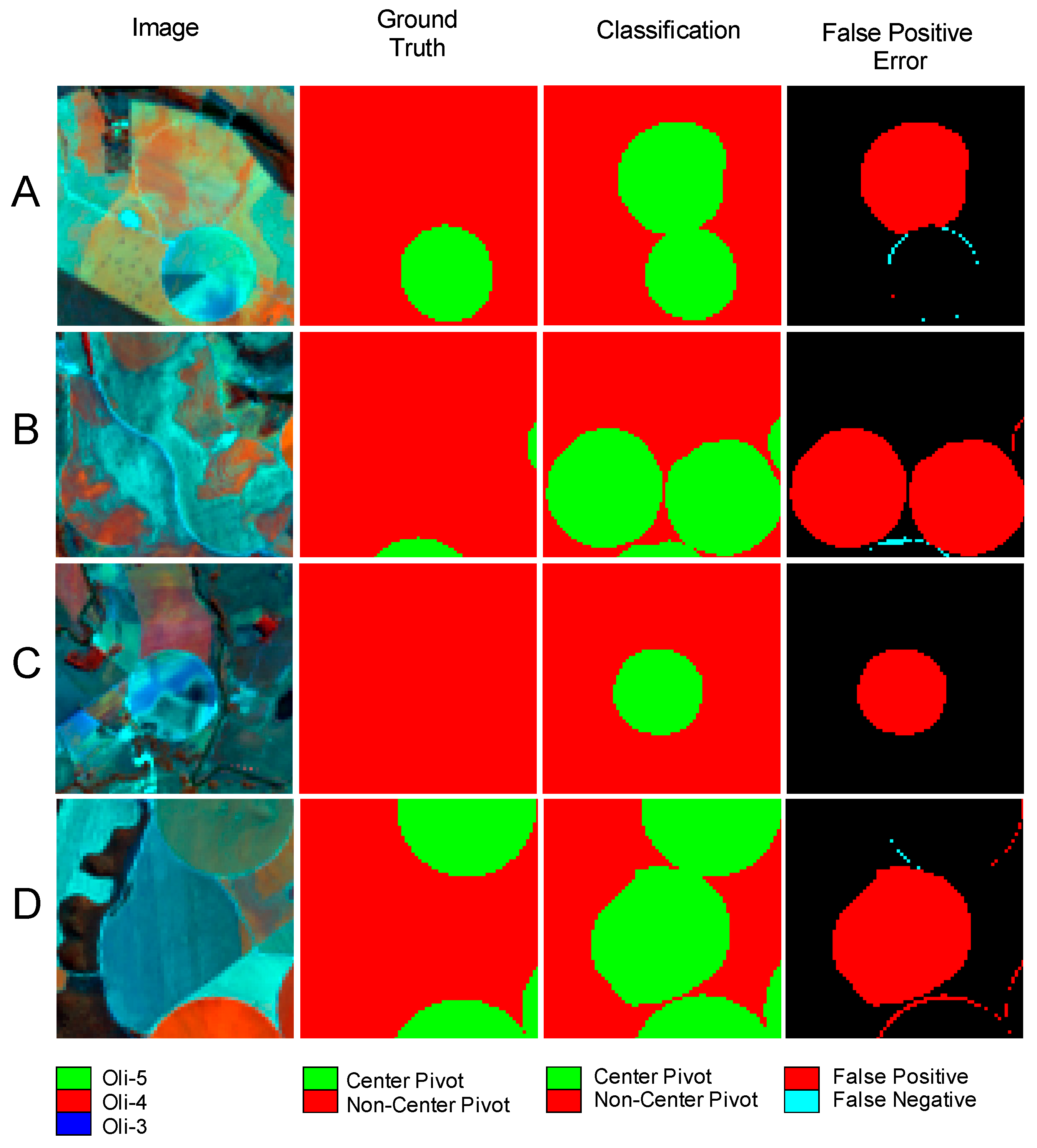

3.2. Results for the Entire Classified Image in Different Seasons

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Region | Date | Path/Row |
|---|---|---|
| Western Bahia | 7 June 2014 | 220/068 |
| Western Bahia | 7 June 2014 | 220/069 |
| Western Bahia | 30 November 2014 | 220/068 |
| Western Bahia | 30 November 2014 | 220/069 |
| Mato Grosso | 10 June 2014 | 225/070 |
| Mato Grosso | 10 June 2014 | 225/071 |
| Mato Grosso | 16 November 2014 | 225/070 |
| Mato Grosso | 16 November 2014 | 225/071 |
| Goiás/Minas Gerais | 22 May 2014 | 220/071 |
| Goiás/Minas Gerais | 22 May 2014 | 220/072 |
| Goiás/Minas Gerais | 13 May 2014 | 221/071 |
| Goiás/Minas Gerais | 13 May 2014 | 221/072 |
| Goiás/Minas Gerais | 10 June 2015 | 220/071 |
| Goiás/Minas Gerais | 28 July 2015 | 220/072 |
| Goiás/Minas Gerais | 4 August 2015 | 221/071 |
| Goiás/Minas Gerais | 4 August 2015 | 221/072 |

| Region | Date | Path/Row |
|---|---|---|
| Goiás/Minas Gerais | 18 June 2018 | 220/071 |
| Goiás/Minas Gerais | 18 June 2018 | 220/072 |
| Goiás/Minas Gerais | 25 June 2018 | 221/071 |
| Goiás/Minas Gerais | 20 May 2019 | 221/071 |
| Goiás/Minas Gerais | 20 May 2019 | 220/072 |
| Goiás/Minas Gerais | 27 May 2019 | 221/071 |
| Goiás/Minas Gerais | 24 August 2019 | 220/071 |
| Goiás/Minas Gerais | 24 August 2019 | 220/072 |
| Goiás/Minas Gerais | 31 August 2019 | 221/071 |

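| Accuracy Metric | Equation |
|---|---|
| Total accuracy (TA) | $TA = \dfrac{TP + TN}{TP + TN + FP + FN}$ |
| Recall (R) | $R = \dfrac{TP}{TP + FN}$ |

where $TP$, $TN$, $FP$, and $FN$ are the numbers of true positives, true negatives, false positives, and false negatives, respectively.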
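
The metrics reported in the results (accuracy, precision, recall, F-score, Kappa, and IoU) can all be derived from the four cells of a binary pivot/non-pivot confusion matrix. The sketch below uses the standard textbook definitions. The authors' exact formulation, particularly of Kappa's chance agreement, is assumed rather than quoted, and the counts in the usage line are hypothetical.

```python
"""Sketch: binary (pivot / non-pivot) accuracy metrics from confusion counts.

Standard textbook definitions; the paper's exact formulation of each metric
(e.g. how Kappa's chance agreement is computed) is an assumption.
"""
from dataclasses import dataclass


@dataclass
class Confusion:
    tp: int  # pivot pixels predicted as pivot
    fp: int  # non-pivot pixels predicted as pivot
    fn: int  # pivot pixels predicted as non-pivot
    tn: int  # non-pivot pixels predicted as non-pivot


def metrics(c: Confusion) -> dict:
    n = c.tp + c.fp + c.fn + c.tn
    ta = (c.tp + c.tn) / n                    # total accuracy
    precision = c.tp / (c.tp + c.fp)
    recall = c.tp / (c.tp + c.fn)
    f_score = 2 * precision * recall / (precision + recall)
    iou = c.tp / (c.tp + c.fp + c.fn)         # intersection over union
    # Cohen's kappa: observed agreement (TA) vs. agreement expected by chance,
    # computed from the row and column totals of the 2x2 matrix.
    p_e = ((c.tp + c.fp) * (c.tp + c.fn) + (c.fn + c.tn) * (c.fp + c.tn)) / n**2
    kappa = (ta - p_e) / (1 - p_e)
    return {"TA": ta, "P": precision, "R": recall,
            "F": f_score, "IoU": iou, "Kappa": kappa}


if __name__ == "__main__":
    # Hypothetical counts for illustration only (not from the paper).
    print(metrics(Confusion(tp=80, fp=10, fn=20, tn=890)))
```

Unlike total accuracy, F-score and IoU ignore true negatives, which is why they are more informative when non-pivot pixels dominate a scene.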

| Model | Accuracy | F-Score | Recall | Precision | Kappa | IoU |
|---|---|---|---|---|---|---|
| Deep ResUnet | 0.9871 | 0.9610 | 0.9484 | 0.9739 | 0.9532 | 0.9249 |
| U-Net | 0.9880 | 0.9638 | 0.9457 | 0.9826 | 0.9638 | 0.9301 |
| SharpMask | 0.97585 | 0.9342 | 0.9095 | 0.9603 | 0.9214 | 0.8765 |
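
For a single binary class, the F-score and IoU in this table are not independent. F = 2PR/(P + R), and because F = 2TP/(2TP + FP + FN) and IoU = TP/(TP + FP + FN), it follows that IoU = F/(2 − F). The short check below recomputes both quantities from the reported precision and recall as a consistency test of the table; it introduces no new results.

```python
"""Consistency check on the validation table: F = 2PR/(P + R), IoU = F/(2 - F).

The second identity holds for a single binary class because
F = 2TP/(2TP + FP + FN) and IoU = TP/(TP + FP + FN).
"""
reported = {
    # model: (F-score, recall, precision, IoU) as printed in the table
    "Deep ResUnet": (0.9610, 0.9484, 0.9739, 0.9249),
    "U-Net": (0.9638, 0.9457, 0.9826, 0.9301),
    "SharpMask": (0.9342, 0.9095, 0.9603, 0.8765),
}

checks = {}
for model, (f, r, p, iou) in reported.items():
    f_from_pr = 2 * p * r / (p + r)   # harmonic mean of precision and recall
    iou_from_f = f / (2 - f)          # IoU implied by the reported F-score
    checks[model] = (f_from_pr, iou_from_f)
    print(f"{model}: F from P,R = {f_from_pr:.4f} (reported {f}), "
          f"IoU from F = {iou_from_f:.4f} (reported {iou})")
```

All three rows agree with both identities to within rounding at the fourth decimal place, so the ranking by IoU (U-Net, then Deep ResUnet, then SharpMask) carries the same information as the ranking by F-score.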

| True label | Rainy season (May 2019): predicted pivot | Rainy season (May 2019): predicted non-pivot | Beginning of the dry season (June 2018): predicted pivot | Beginning of the dry season (June 2018): predicted non-pivot | Critical dry period (August 2019): predicted pivot | Critical dry period (August 2019): predicted non-pivot |
|---|---|---|---|---|---|---|
| Pivot | 902 (18 partially identified) | 72 | 937 (25 partially identified) | 37 | 860 (68 partially identified) | 114 |
| Non-pivot | 8 | Does not apply | 19 (total or pivot fractions) | Does not apply | 2 | Does not apply |
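
Pivot-level (object-level) recall and precision can be read directly from the counts in this table, since each season has 974 reference pivots (902 + 72 = 937 + 37 = 860 + 114 = 974). The sketch makes three assumptions: partially identified pivots count as detections, the 19 entries described as "total or pivot fractions" count as false detections, and the "Does not apply" cells are ignored.

```python
"""Object-level (per-pivot) rates from the seasonal confusion matrix.

Assumptions: partially identified pivots are counted as detected, and the
"Does not apply" cells (true negatives) are ignored, so only recall and
precision are computed.
"""
seasons = {
    # season: (pivots detected, pivots missed, false pivot detections)
    "Rainy season (May 2019)": (902, 72, 8),
    "Beginning of the dry season (June 2018)": (937, 37, 19),
    "Critical dry period (August 2019)": (860, 114, 2),
}

rates = {}
for season, (tp, fn, fp) in seasons.items():
    recall = tp / (tp + fn)      # share of the reference pivots found
    precision = tp / (tp + fp)   # share of detections that are real pivots
    rates[season] = (recall, precision)
    print(f"{season}: recall={recall:.3f}, precision={precision:.3f}")
```

Under these assumptions, recall is highest at the beginning of the dry season (June 2018, about 0.962) and lowest in the critical dry period (August 2019, about 0.883), while precision stays above 0.98 in all three seasons.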

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


de Albuquerque, A.O.; de Carvalho Júnior, O.A.; Carvalho, O.L.F.d.; de Bem, P.P.; Ferreira, P.H.G.; de Moura, R.d.S.; Silva, C.R.; Trancoso Gomes, R.A.; Fontes Guimarães, R. Deep Semantic Segmentation of Center Pivot Irrigation Systems from Remotely Sensed Data. Remote Sens. 2020, 12, 2159. https://doi.org/10.3390/rs12132159
