Abstract

To obtain a high-accuracy vegetation classification from high-resolution UAV images, a multi-angle hyperspectral remote sensing system was built using a six-rotor UAV and a Cubert S185 frame hyperspectral sensor. The application of UAV-based multi-angle remote sensing to fine vegetation classification was studied by combining a bidirectional reflectance distribution function (BRDF) model for multi-angle remote sensing with object-oriented classification methods. This method not only effectively reduces the misclassification caused by different objects having similar spectra, but also benefits the construction of a canopy-level BRDF. The importance of the BRDF characteristic parameters is then discussed in detail. The results show that the overall classification accuracy (OA) of the vertical observation reflectance based on BRDF extrapolation (BRDF_0°) (63.9%) was approximately 24 percentage points higher than that based on digital orthophoto maps (DOM) (39.8%), and kappa using BRDF_0° was 0.573, which was higher than that using DOM (0.301); a combination of the hot spot and dark spot features, as well as model features, improved the OA and kappa to around 77% and 0.720, respectively. The reflectance features near hot spots were more conducive to distinguishing maize, soybean, and weeds than features near dark spots; the classification results obtained by combining the features on the observation principal plane (BRDF_PP) and on the cross-principal plane (BRDF_CP) were best (OA = 89.2%, kappa = 0.870), and this combination in particular improved the distinction among trees with different leaf shapes. BRDF_PP features performed better than BRDF_CP features. The observation angles in the backward reflection direction of the principal plane performed better than those in the forward direction. 
The observation angles associated with the zenith angles between −10° and −20° were most favorable for vegetation classification (solar position: zenith angle 28.86°, azimuth 169.07°), with an OA of around 75%–80% and a kappa of around 0.700–0.790; additionally, the most frequently selected bands in the classification included the blue band (466 nm–492 nm), green band (494 nm–570 nm), red band (642 nm–690 nm), red edge band (694 nm–774 nm), and the near-infrared band (810 nm–882 nm). Overall, the research results promote the application of multi-angle remote sensing technology in vegetation information extraction and have theoretical significance and application value for regional and global vegetation and ecological monitoring.

1. Introduction

The vegetation ecosystem is an important foundation for ecological systems [1]. Remote sensing has become the main approach for vegetation ecological resource surveys and environmental monitoring owing to its real-time, repeatable, and wide-coverage observations [2,3,4]. With the development of remote sensing technology, visible light, multispectral, hyperspectral, and other sensors have been widely used in the remote sensing of vegetation [5,6], and more hyperspectral and high-resolution information has been obtained than ever before, greatly improving the accuracy of image classification [7,8].

As one of the current frontiers of remote sensing development, hyperspectral remote sensing technology has played an increasingly important role in quantitative analyses and accurate classifications of vegetation due to its ability to acquire high-resolution spectral and spatial data [9,10,11,12]. For instance, Filippi utilized an unsupervised self-organizing neural network to perform complex vegetation mapping in a coastal wetland environment [13]. Fu et al. proposed an integrated scheme for vegetation classification by simultaneously exploiting spectral and spatial image information to improve the vegetation classification accuracy [14].

From the perspective of remote sensing imaging, remote sensing vertical photography can obtain only the spectral feature projection of the target feature in one direction, and it lacks sufficient information to infer the reflection anisotropy and spatial structure [15]. Multi-angle observations of a target can provide information in multiple directions and be used to construct the bidirectional reflectance distribution function (BRDF) [16,17,18], which increases the abundance of target observation information; additionally, this approach can extract more detailed and reliable spatial structure parameters than a single-direction observation can [19]. Multi-angle hyperspectral remote sensing, which combines the advantages of multi-angle observation and hyperspectral imaging technology, is projected to become an effective technical method for the classification of vegetation in remote sensing images.

The UAV remote sensing platform has emerged due to its flexibility, easy operation, high efficiency, and low cost; it can efficiently acquire high-resolution spatial and spectral data on demand [20]. The UAV remote sensing platform can also provide multi-angle observations and thus has become popular in multi-angle remote sensing [21,22,23,24]. Roosjen et al. studied the hyperspectral anisotropy of barley, winter wheat, and potatoes using a drone-based imaging hyperspectrometer, obtaining multi-angle observation data over the hemisphere by hovering around the crops [25]. In addition, Liu and Abd-Elrahman developed an object-based image analysis (OBIA) approach by utilizing multi-view information acquired using a digital camera mounted on a UAV [26]. They also introduced a multi-view object-based classification using deep convolutional neural network (MODe) method to process UAV images for land cover classification [27]. Both methods avoided the salt and pepper phenomenon in the classified image and achieved favorable classification results. However, it is difficult to obtain the continuous spectral characteristics of the ground objects because the optical sensors used have few wavebands. Moreover, these studies did not fully explore how the contributions of the individual multi-angle features differ. Furthermore, how to use a limited number of multi-angle observations to construct the BRDF of ground objects, and thereby enrich the observation information of the target, is also one of the difficulties in the application of multi-angle remote sensing.

In this paper, key technical issues, such as the difficulty in distinguishing complex vegetation species from a single remote sensing observation direction, the construction of the BRDF model based on UAV multi-angle observation data, and model application for vegetation classification and extraction, were studied. The purpose of this study was to discuss the role of ground object BRDF characteristic parameters in the fine classification of vegetation, thereby improving the understanding of the relationship between the BRDF and plant leaves and vegetation canopy structure parameters, as well as promoting the application of multi-angle optical remote sensing in the acquisition of vegetation information.

2. Data Sets

2.1. Study Area

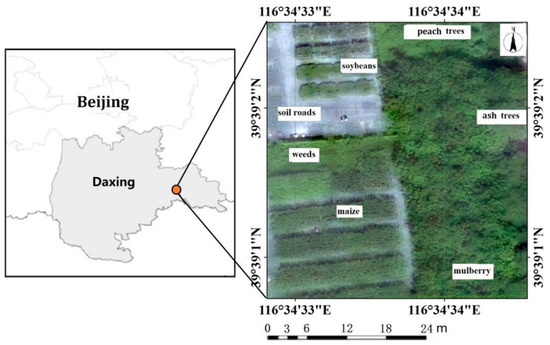

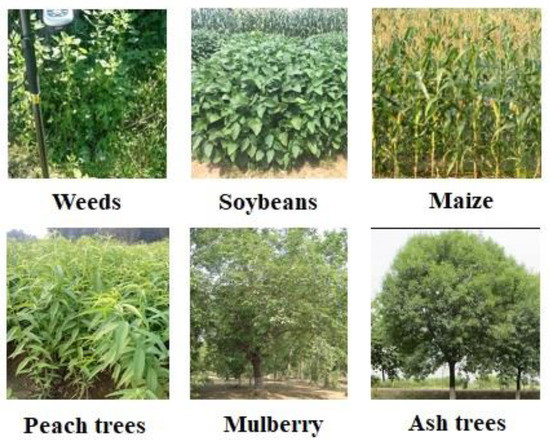

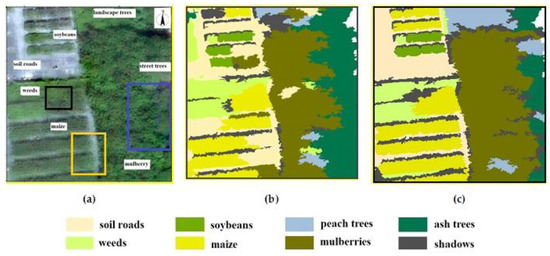

The research area was in the village of Luozhuang, Changziying town, Daxing District, Beijing, as shown in Figure 1. The images were acquired on 24 August 2018 under clear, cloudless conditions. The rich vegetation types included weeds, crops, and tree species, as shown in Figure 2. The crops included soybean in the flowering and pod-bearing stages, and maize in the powder stage. The tree species included mulberry, peach, and ash trees. The vegetation grew densely, and shadows greatly affected the classification results. Therefore, shadows were treated as a separate class in this paper. In summary, the land cover was divided into eight types: weeds, soybeans, maize, mulberries, peach trees, ash trees, dirt roads, and shadows.

Figure 1.

Study site.

Figure 2.

Schematic images of the vegetation types in the study area.

2.2. UAV Hyperspectral Remote Sensing Platform

In this paper, a Cubert S185 hyperspectral sensor mounted on a DJI Jingwei M600 PRO (Dajiang, Shenzhen, China), a rotary-wing vehicle with six rotors, was used to obtain the research data (Figure 3). The Cubert S185 frame imaging spectrometer (Germany) [28] simultaneously captured low spatial resolution hyperspectral images (50 × 50 pixels) and high spatial resolution panchromatic images (1000 × 1000 pixels), from which high spatial resolution hyperspectral images were obtained via data fusion using Cubert Pilot software. The sensor provides 125 spectral channels with wavelengths ranging from 450 nm to 950 nm (4-nm sampling interval). Table 1 lists the main performance parameters of the hyperspectral camera.

Figure 3.

Sensor system and UAV platform: (a) Cubert hyperspectral camera and (b) DJI Jingwei M600 PRO.

Table 1.

Main parameters of the Cubert UHD 185 snapshot hyperspectral sensor (provided by the manufacturer).

2.3. Flight Profile and Conditions

The drone mission was flown from 12:10 to 12:30 on 24 August 2018. Regarding the sun’s position, the zenith angle was 28.86°, and the azimuth was 169.07°. The weather was clear and cloudless, there was no wind, and the light intensity was stable. The flying height was 100 m, and the acquired hyperspectral image had a ground sample distance of 4 cm after data fusion. To ensure that the remote sensing platform obtained a sufficient range of observation angles for each feature and to improve the accuracy of the BRDF model construction, the flight adopted vertical photography and oblique photogrammetry (the angle of the mirror center was 30°). To obtain more abundant multi-angle observation data, the forward (heading) and side overlap of the images were both greater than 80%. Moreover, RTK (real-time kinematic) carrier-phase differential technology was used to measure the coordinates of the ground control points with a planimetric accuracy better than 1 cm. Five control points were used, and they were located in clear, distinguishable areas with unobstructed GPS signals.

2.4. Data Processing

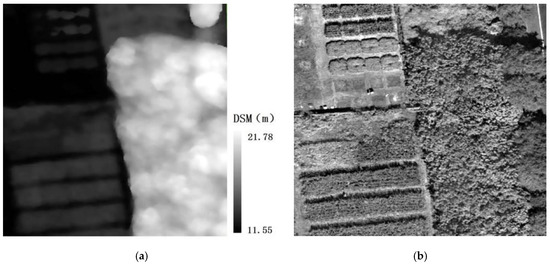

According to the flight mission plan described above, the hyperspectral experimental dataset was successfully acquired, and the data were processed with Agisoft PhotoScan software Version 1.2.5 (St. Petersburg, Russia) to generate a digital orthophoto map (DOM) and digital surface model (DSM) for the research area. Data processing included aligning the photos according to the high-definition digital images and the position and orientation system (POS) information at the time of image acquisition (latitude, longitude, altitude, and the roll, pitch, and yaw angles of the UAV), detecting feature points in the photos with a structure-from-motion algorithm, and establishing matching feature point pairs. A dense three-dimensional point cloud was then generated using a dense multi-view stereo matching algorithm, and the ground control points were input for geometric correction. Finally, the DSM and DOM of the experimental area were obtained, as shown in Figure 4.

Figure 4.

Data processing results: (a) DSM and (b) DOM.

3. Materials and Methods

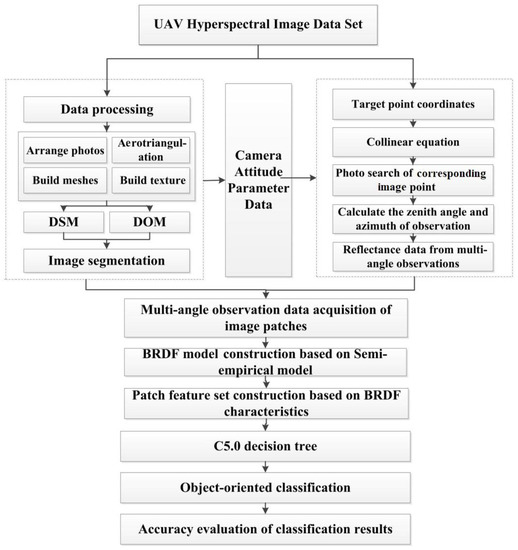

This paper proposes a novel vegetation classification method that combines object-oriented classification with the BRDF. The relationship between BRDF characteristics and plant leaves and vegetation canopy structure is discussed to promote the use of multi-angle optical remote sensing in vegetation remote sensing. First, an image segmentation method combining spectral and DSM features was studied to improve the accuracy of the object edges and of the patch segmentation. Second, multiple hyperspectral data sets were obtained using vertical and oblique photogrammetry, and the acquired multi-angle observations of the ground objects were used with a semi-empirical kernel-driven model to invert the BRDF model of each object patch. Third, a multi-class feature set was constructed from the BRDF characteristics of each segmentation patch. Finally, object-oriented classification was carried out for fine vegetation classification. The overall workflow is shown in Figure 5.

Figure 5.

Flowchart of the classification method. DSM: digital surface model, DOM: digital orthophoto maps, BRDF: bidirectional reflectance distribution function.

3.1. Image Segmentation

UAV remote sensing technology can acquire DSMs through the acquisition and processing of multiple overlapping images, and this information can be used as auxiliary information to improve the image segmentation accuracy. In this study, the DSM and spectral characteristics were taken as basic information, and the object set of the UAV hyperspectral image segmentation was constructed using Definiens eCognition Developer 7.0 (München, Germany). A multi-resolution segmentation method was adopted. A segmentation scale parameter was manually adjusted using a trial and error method and finally a segmentation scale of 50 was selected, which resulted in visually correct segmentation. The shape and compactness weight parameters [29] used in the segmentation algorithm were also found using trial and error, and values of 0.05 and 0.8 were used, respectively.

Next, the extraction of feature sets for the image segmentation patches, which were used for vegetation classification, is discussed.

3.2. Multi-angle Observation Data Acquisition and BRDF Model Construction

First, the maximum inscribed circle of each object patch was obtained as the representative region of the patch. Second, the corresponding image points for each pixel inside the inscribed circle were found in the overlapping images. Then, the average reflectance within the corresponding circular area in each image was taken as the reflectance of the segmented patch under that observation angle. At the same time, the observation angle of each corresponding image patch was obtained. Finally, the BRDF model of the segmented patch was constructed using the multi-angle reflectance observations. The specific steps were as follows:

(1) Aerotriangulation and camera attitude parameter solution:

On the basis of the highly overlapping multi-angle image data sets acquired through vertical photography and oblique photogrammetry, control point data obtained from synchronous field measurements were used to calculate the coordinates of the points to be determined in the study area via aerotriangulation; these points were then used as control points for multiple images and for image correction. In this method, aerial camera stations were established for the whole network, and the acquired images were used for point transmission and network construction.

The exterior and interior orientation parameters were obtained via aerotriangulation with control point data, and the internal camera parameters were obtained via camera calibration. The camera calibration and orientation were carried out using the Agisoft PhotoScan software. Then, the coordinates of the known object in three-dimensional space, the corresponding image pixel coordinates, and the camera interior parameters were used to determine the exterior parameters relative to the known object, namely the rotation vector and the translation vector. Finally, the rotation vector was converted into the three-dimensional attitude angles of the camera relative to the spatial coordinates of the known object, expressed as the pitch, yaw, and roll angles.

(2) Search for the corresponding image points:

Corresponding image points are the image points of the same ground target point in different photos [30]. They arise because the same object point is photographed multiple times from different camera stations during the aerial survey. After calculating the coordinates of the points to be determined in the study area and the interior and exterior orientation elements of each image, the collinearity equation of digital photogrammetry was used to determine the image plane coordinates of the target point in each image; the image dimensions of the sensor were then used to determine whether each coordinate fell within the image frame, and thus to find the corresponding image points in each image.
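As a rough illustration of step (2), the collinearity condition can be sketched in Python as follows. This is a minimal sketch under stated assumptions, not the processing chain used in the study: the function names, the focal length expressed in pixels, the camera looking along its own −z axis, and the image frame centered on the principal point are all illustrative choices.

```python
import numpy as np

def project_to_image(ground_xyz, station_xyz, rotation, focal_px,
                     principal_point=(0.0, 0.0)):
    """Collinearity equations: object-space point -> image-plane coordinates.

    rotation is assumed to be the 3x3 matrix that maps camera axes to
    object-space axes, so its transpose maps the station-to-point vector
    into the camera frame. The camera is assumed to look along its -z axis.
    Returns None when the point lies behind the camera.
    """
    delta = np.asarray(ground_xyz, float) - np.asarray(station_xyz, float)
    u, v, w = rotation.T @ delta
    if w >= 0:
        return None
    x0, y0 = principal_point
    return (float(x0 - focal_px * u / w), float(y0 - focal_px * v / w))

def is_inside_frame(image_xy, width_px, height_px):
    """Check whether a projected point falls within a centered image frame."""
    if image_xy is None:
        return False
    x, y = image_xy
    return abs(x) <= width_px / 2 and abs(y) <= height_px / 2

# Hypothetical nadir-looking camera 100 m above the origin.
station = (0.0, 0.0, 100.0)
xy = project_to_image((10.0, -5.0, 0.0), station, np.eye(3), focal_px=1000.0)
visible = is_inside_frame(xy, 1000, 1000)
```

Repeating this projection for every image and keeping only the images where the point is inside the frame yields the set of corresponding image points for one ground target.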

(3) Observation angle and reflectance of the corresponding image points:

After the corresponding image points were found, the view zenith angle and view azimuth of the object point in each image were calculated from the geometric relationship between the camera station (projection center) and the object point. In addition, the reflectance of the corresponding image points was read from the selected band image.
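The angle computation in step (3) can be illustrated with a short Python sketch. The coordinate convention (x east, y north, z up, azimuth measured clockwise from north) and the function names are assumptions made for illustration; the source does not state which convention was used.

```python
import math

def view_angles(station_xyz, point_xyz):
    """View zenith and azimuth (degrees) of the camera as seen from the object point.

    Assumes x = east, y = north, z = up; azimuth is clockwise from north.
    """
    dx = station_xyz[0] - point_xyz[0]
    dy = station_xyz[1] - point_xyz[1]
    dz = station_xyz[2] - point_xyz[2]
    zenith = math.degrees(math.atan2(math.hypot(dx, dy), dz))
    azimuth = math.degrees(math.atan2(dx, dy)) % 360.0
    return zenith, azimuth

def relative_azimuth(view_azimuth, sun_azimuth):
    """Absolute azimuth difference between view and sun, folded into [0, 180] degrees."""
    diff = abs(view_azimuth - sun_azimuth) % 360.0
    return 360.0 - diff if diff > 180.0 else diff

# Hypothetical camera station 100 m east of and 100 m above the object point.
zenith, azimuth = view_angles((100.0, 0.0, 100.0), (0.0, 0.0, 0.0))
# Sun azimuth taken from the flight conditions reported in Section 2.3.
rel_az = relative_azimuth(azimuth, 169.07)
```

A relative azimuth near 0° corresponds to viewing from the same side as the sun (backward scattering), and one near 180° to viewing toward the sun (forward scattering).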

(4) Parameter calculation for the semi-empirical kernel-driven model:

The Algorithm for Modeling Bidirectional Reflectance Anisotropies of the Land Surface (AMBRALS) [31] was selected to construct the BRDF. The semi-empirical kernel-driven model can be expressed using Equation (1):

$$R(\theta_s, \theta_v, \sigma) = f_{iso} + f_{vol} K_{vol}(\theta_s, \theta_v, \sigma) + f_{geo} K_{geo}(\theta_s, \theta_v, \sigma) \qquad (1)$$

The bidirectional reflectance is decomposed into a weighted sum of three parts: isotropic scattering, volumetric scattering, and geometric-optical scattering. The isotropic kernel is equal to 1, so it does not appear explicitly in Equation (1). In the kernel-driven model, R represents the bidirectional reflectance, θs represents the solar zenith angle, θv represents the view zenith angle, and σ represents the relative azimuth angle. Kvol and Kgeo are the volumetric scattering kernel and the geometric-optical kernel, respectively. fiso, fvol, and fgeo are constant coefficients that represent the proportions of isotropic scattering, volumetric scattering, and geometric-optical scattering, respectively. Linear regression was used to solve for the optimal parameter values. The two kernels were calculated from the solar zenith angle, the view zenith angle, and the relative azimuth angle; the solar zenith and azimuth angles were calculated from the time and date of image acquisition and the coordinates of the object point.
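To make the regression in step (4) concrete, the following Python sketch fits fiso, fvol, and fgeo by ordinary least squares. The text names only AMBRALS, so the specific kernels here are an assumption: the RossThick volumetric kernel and the LiSparse-Reciprocal geometric-optical kernel, with the commonly used shape parameters h/b = 2 and b/r = 1. Function names are illustrative, and all angles are in radians.

```python
import numpy as np

def _cos_phase(sun_zen, view_zen, rel_az):
    """Cosine of the phase angle between the sun and view directions."""
    c = (np.cos(sun_zen) * np.cos(view_zen)
         + np.sin(sun_zen) * np.sin(view_zen) * np.cos(rel_az))
    return np.clip(c, -1.0, 1.0)

def ross_thick(sun_zen, view_zen, rel_az):
    """RossThick volumetric kernel (assumed choice for K_vol)."""
    c = _cos_phase(sun_zen, view_zen, rel_az)
    xi = np.arccos(c)
    return (((np.pi / 2 - xi) * c + np.sin(xi))
            / (np.cos(sun_zen) + np.cos(view_zen)) - np.pi / 4)

def li_sparse_r(sun_zen, view_zen, rel_az, h_over_b=2.0):
    """LiSparse-Reciprocal kernel (assumed choice for K_geo), with b/r = 1."""
    sec_s, sec_v = 1.0 / np.cos(sun_zen), 1.0 / np.cos(view_zen)
    tan_s, tan_v = np.tan(sun_zen), np.tan(view_zen)
    d2 = np.maximum(tan_s**2 + tan_v**2 - 2 * tan_s * tan_v * np.cos(rel_az), 0.0)
    cos_t = h_over_b * np.sqrt(d2 + (tan_s * tan_v * np.sin(rel_az))**2) / (sec_s + sec_v)
    cos_t = np.clip(cos_t, -1.0, 1.0)
    t = np.arccos(cos_t)
    overlap = (t - np.sin(t) * cos_t) * (sec_s + sec_v) / np.pi
    return (overlap - sec_s - sec_v
            + 0.5 * (1 + _cos_phase(sun_zen, view_zen, rel_az)) * sec_s * sec_v)

def fit_kernel_weights(reflectance, sun_zen, view_zen, rel_az):
    """Least-squares estimate of (f_iso, f_vol, f_geo) for one patch and one band."""
    view_zen = np.asarray(view_zen, float)
    rel_az = np.asarray(rel_az, float)
    design = np.column_stack([
        np.ones_like(view_zen),                  # isotropic kernel is 1
        ross_thick(sun_zen, view_zen, rel_az),
        li_sparse_r(sun_zen, view_zen, rel_az),
    ])
    weights, *_ = np.linalg.lstsq(design, np.asarray(reflectance, float), rcond=None)
    return weights

def predict_brf(weights, sun_zen, view_zen, rel_az):
    """Evaluate the fitted kernel-driven model at arbitrary view angles."""
    return (weights[0]
            + weights[1] * ross_thick(sun_zen, view_zen, rel_az)
            + weights[2] * li_sparse_r(sun_zen, view_zen, rel_az))
```

Once the three weights are fitted for a patch, `predict_brf` can extrapolate the reflectance to directions that were never observed, such as the vertical direction. At least three observations with distinct geometries are needed for the fit to be determined.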

3.3. Feature Set Construction Based on the BRDF

To evaluate the application value of the BRDF model for vegetation classification, this study extracted two types of features from the BRDF model as the basic attributes for the identification of vegetation species. The first type was the bidirectional reflectance factor (BRF) predicted by the BRDF model. It included the maximum (hot spot) and minimum (dark spot) reflectance values in the backscattering and forward scattering regions, respectively; the multi-angle reflectance in the observation principal plane, sampled from the 0° zenith angle in the forward and backward directions at a 10° interval up to the maximum view zenith angle of the sensor, which was set to 60°; the multi-angle reflectance in the cross-principal plane, sampled in the same way; and the joint feature set of multi-angle reflectance for the two planes (25). The second type comprised the BRDF model parameters fiso, fvol, and fgeo [25]. Table 2 summarizes the feature sets used for vegetation species identification.

Table 2.

Feature set construction using the BRDF for object-oriented classification.
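The angular sampling behind these feature sets can be sketched in Python as follows. With a 60° maximum view zenith angle and a 10° step, each plane yields 13 directions; counting the shared nadir direction once gives 25 joint directions, which appears to match the joint feature count quoted above. The function names, the use of negative angles for the backward direction, and the exclusion of nadir from the hot and dark spot search are illustrative assumptions.

```python
def plane_angles(max_zenith=60, step=10):
    """Signed view zenith angles along one plane: -60, -50, ..., 0, ..., 60."""
    return list(range(-max_zenith, max_zenith + 1, step))

def joint_plane_features(max_zenith=60, step=10):
    """Directions of the joint principal-plane (PP) and cross-plane (CP) set.

    The nadir direction (0 degrees) lies in both planes, so it is kept once.
    """
    angles = plane_angles(max_zenith, step)
    principal = [("PP", a) for a in angles]
    cross = [("CP", a) for a in angles if a != 0]
    return principal + cross

def hot_and_dark_spot(brf_by_angle):
    """Pick the hot spot (max BRF, backward side) and dark spot (min BRF, forward side).

    brf_by_angle maps signed principal-plane zenith angles to predicted BRF;
    negative angles are assumed to denote the backward scattering direction.
    """
    backward = {a: r for a, r in brf_by_angle.items() if a < 0}
    forward = {a: r for a, r in brf_by_angle.items() if a > 0}
    hot = max(backward.items(), key=lambda item: item[1])
    dark = min(forward.items(), key=lambda item: item[1])
    return hot, dark

n_per_plane = len(plane_angles())          # 13 directions in one plane
n_joint = len(joint_plane_features())      # 25 directions in the joint set
```

In practice each direction would contribute one reflectance value per spectral band, so the number of classifier inputs is the number of directions multiplied by the number of bands used.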

3.4. Vegetation Classification and Accuracy Assessment

After obtaining the attribute information for each object according to the above scheme, the C5.0 decision tree [32] method was used to construct the vegetation species recognition model. A decision tree has a tree-like structure resembling a flow chart. This structure displays the classification rules intuitively, and the algorithm is fast, accurate, and simple to build. This study used the SPSS Clementine V16.0 software (IBM, Chicago, USA) to achieve a fine classification of vegetation based on the C5.0 decision tree. To verify the effectiveness of the method, the image segmentation results were taken as samples, and the number of samples of each type is summarized in Table 3. Sixty percent of the samples were used as model training samples, and the remaining 40% were used as verification samples.

Table 3.

Samples of vegetation types.
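The 60/40 division of samples can be sketched in Python as below. The source does not say whether the split was random or stratified by class, so the per-class (stratified) random split here is an assumption, and the function name and seed are illustrative.

```python
import random
from collections import defaultdict

def stratified_split(labels, train_fraction=0.6, seed=0):
    """Split sample indices into training and verification sets, class by class."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)

    train, verify = [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        cut = round(len(indices) * train_fraction)
        train.extend(indices[:cut])
        verify.extend(indices[cut:])
    return sorted(train), sorted(verify)

# Hypothetical label list; real labels would come from the segmented patches.
labels = ["maize"] * 10 + ["soybean"] * 5
train_idx, verify_idx = stratified_split(labels)
```

Splitting within each class keeps rare classes represented in both sets, which matters when class sizes are as uneven as they tend to be in segmented vegetation scenes.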

The quantitative evaluation of the classification results mainly included the following indices [33]: the confusion matrix (overall accuracy, producer’s accuracy, and user’s accuracy) and the kappa coefficient. The overall accuracy is the proportion of all reference sites that were mapped correctly. The producer’s accuracy is the map accuracy from the point of view of the map maker (the producer): how often real features on the ground are correctly shown on the classified map, or the probability that a certain land cover on the ground is classified as such. The user’s accuracy is the accuracy from the point of view of a map user: how often the class shown on the map is actually present on the ground. This is also referred to as the reliability. The kappa coefficient is a statistical measure of agreement for categorical items that corrects for agreement expected by chance; here it measures the agreement between the classified map and the reference data.
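These indices follow directly from the confusion matrix, as the Python sketch below shows. The row and column orientation (rows are reference classes, columns are mapped classes) and the function name are assumptions for illustration, and the small matrix is made-up data, not a result from this study.

```python
def accuracy_metrics(matrix):
    """Overall, producer's, and user's accuracy plus kappa from a confusion matrix.

    matrix[i][j] is the number of samples of reference class i mapped to class j.
    """
    n = len(matrix)
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(n))
    reference_totals = [sum(row) for row in matrix]
    mapped_totals = [sum(matrix[i][j] for i in range(n)) for j in range(n)]

    overall = correct / total
    producers = [matrix[i][i] / reference_totals[i] if reference_totals[i] else float("nan")
                 for i in range(n)]
    users = [matrix[j][j] / mapped_totals[j] if mapped_totals[j] else float("nan")
             for j in range(n)]

    # Agreement expected by chance, from the marginal totals.
    expected = sum(r * c for r, c in zip(reference_totals, mapped_totals)) / total**2
    kappa = (overall - expected) / (1 - expected)
    return overall, producers, users, kappa

# Illustrative two-class matrix.
overall, producers, users, kappa = accuracy_metrics([[40, 10], [5, 45]])
```

For this toy matrix the overall accuracy is 0.85 and kappa is 0.70, which shows how kappa discounts the 0.50 agreement that the marginal totals alone would produce.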

4. Image Classification Results

According to the set of classification feature parameters listed in Table 2, an image classification based on C5.0 was performed. A quantitative evaluation of the classification results is shown in Table 4. The overall classification accuracy based on BRDF_0° (63.9%) was approximately 24 percentage points higher than that based on the DOM. The best results were obtained when the reflectance feature sets of the two planes (BRDF_PP+CP) were used together for the fine classification of vegetation. The overall accuracy of classification (89%) was greatly improved by 39%, and the kappa coefficient (0.870) was increased by 0.438. The classification results for the study area based on BRDF_PP+CP are shown in Figure 6.

Table 4.

Classification accuracy based on a feature set construction with the BRDF (overall accuracy (OA) and kappa).

Figure 6.

Classification results: (a) the reference map, (b) the map produced using DOM, and (c) the map produced based on multi-angle reflectance characteristics of the observed principal planes and cross-principal planes.

Figure 6 shows that the object-oriented vegetation classification method based on the multi-angle reflectance characteristics achieved good mapping results with clear boundaries and an accurate location distribution. The BRDF_PP+CP feature sets helped to improve the recognition accuracy at the junctions between different tree species, as shown in the blue rectangle in Figure 6. This was because the observation data from different angles could reflect the differences in tree structure, so the tree species could be identified well using multi-angle difference features. In addition, these features improved the accuracy of the separation of maize and field roads, as shown in the yellow rectangle in Figure 6. However, although BRDF_PP+CP greatly improved the identification accuracy for shadows, it performed poorly in distinguishing shadows from low weeds, as shown in the black area of Figure 6. The vegetation types under shadow in the study area were varied, and the spectral characteristics of shadow were similar to those of low weeds.

5. Discussion

5.1. Applicability Assessment of BRDF Characteristic Types

To improve the understanding of the relationship between the BRDF and plant leaves and vegetation canopy structure parameters, the following subsections discuss the role of the ground object BRDF characteristic parameters in the fine classification of vegetation.

(1) Performance of BRDF_0°:

Table 4 shows that compared with the classification results based on the DOM, the classification accuracy based on BRDF_0° was greatly improved. Figure 7 shows the producer’s accuracy and user’s accuracy of each type of land feature using two classification features.

Figure 7.

Vegetation classification accuracy based on DOM and BRDF_0°.

Figure 7 indicates that BRDF_0° was instrumental in the distinction among different objects. The vertical reflectance data of DOM were obtained using statistical methods. However, the method of using a semi-empirical model to construct the BRDF and invert the vertically observed reflectance combines the advantages of an empirical model and a physical model. Although the model parameters are empirical parameters, they have certain physical significance. Consequently, the observation angle of the ground objects is unified with the vertical observations through the BRDF model, which weakens the reflection characteristics of the same type of vegetation affected by the observation angle difference. Compared with the classification results obtained using DOM data, the classification accuracy obtained using BRDF_0° was greatly improved, but the recognition accuracy of dirt roads, peach trees, and ash trees was still very low. The producer’s accuracy of weeds, soybeans, and maize improved to greater than 90%, but the user’s accuracy improved only slightly, which indicates that the results for these three types of land features were overclassified.

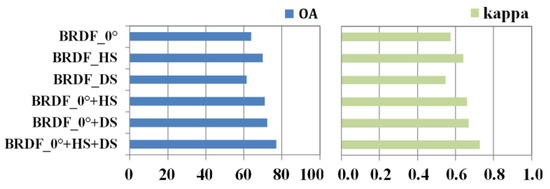

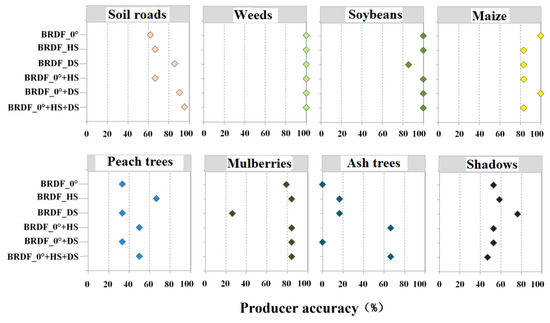

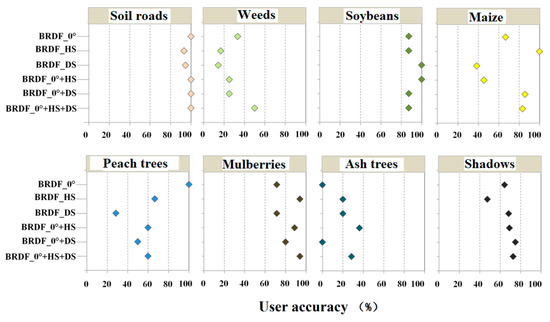

(2) Hot and dark spot reflectance signatures:

Six feature sets were used to classify vegetation, namely, the vertical observation direction (BRDF_0°); hot spot observation direction (BRDF_HS); dark spot observation direction (BRDF_DS); vertical observation direction and hot spot direction (BRDF_0°+HS); vertical observation direction and dark spot direction (BRDF_0°+DS); and vertical observation direction, hot spot direction, and dark spot direction (BRDF_0°+HS+DS). The overall classification accuracy and kappa coefficients of the six feature sets are shown in Figure 8. The classification accuracy of BRDF_0°+HS+DS was the highest at about 77%. The classification effect of vegetation types using BRDF_DS was slightly worse than that using BRDF_0°, while the classification effect of vegetation types using BRDF_HS was better than that using BRDF_0°. The results show that the hot spot reflectance signature had an excellent effect in the recognition of complex vegetation types. This was because the reflection characteristics of different objects in the direction of dark spots were lower than those in the direction of hot spots, and the hot spot effects between crops and tree species were quite different. The producer and user accuracies of each type of land feature are shown in Figure 9.

Figure 8.

Overall accuracy and kappa coefficient of vegetation classification based on hot and dark spot characteristics.

Figure 9.

Producer’s accuracy and user’s accuracy for each vegetation type based on hot and dark spot characteristics.

From Figure 9, the combined application of dark spot and hot spot directional reflectance features improved the classification accuracy. The classification results for soybean, peach trees, mulberry trees, and ash trees using BRDF_HS were more accurate than those using BRDF_DS. In contrast, the ground objects with a high accuracy included dirt roads and shadows based on the reflection features in the dark spot direction. The research shows that the tree structure features had a high sensitivity in the hot spot direction.

(3) Multi-angle reflectance characteristics of the observed principal plane and cross-principal plane:

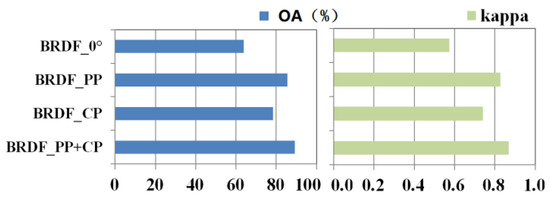

Four feature sets were used to classify the vegetation, namely the reflectance values from the vertical observation direction (BRDF_0°), the principal plane (BRDF_PP), the cross-principal plane (BRDF_CP), and the principal and cross-principal planes together (BRDF_PP+CP). The corresponding classification results are shown in Figure 10. The classification accuracy using BRDF_PP+CP was the highest (OA = 89.2%). The reflectance characteristics from the principal plane were more conducive to the classification of complex vegetation species than those from the cross-principal plane. The producer’s accuracy and user’s accuracy of each type of land feature are shown in Figure 11.

Figure 10.

Overall accuracy and kappa coefficient of the vegetation classification based on the multi-angle reflectance characteristics for the observed principal plane and cross-principal plane.

Figure 11.

Producer’s accuracy and user’s accuracy of each vegetation type based on the multi-angle reflectance characteristics for the observed principal plane and cross-principal plane.

Figure 11 shows that the combined application of reflectance characteristics from the principal and cross-principal planes could improve the classification accuracy. With the joint features from the two planes, the producer’s accuracy of peach seedlings was only approximately 52%, with peach seedlings misclassified as soybean and ash trees, whereas the producer’s accuracy of the other land features was greater than 90%.

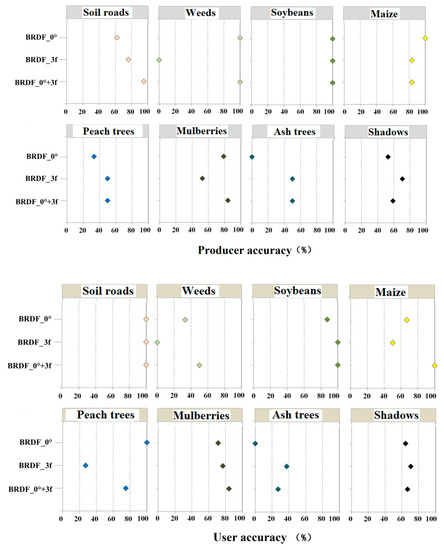

(4) BRDF model parameters:

Three feature sets were used to classify vegetation, namely the reflectance from the vertical observation direction (BRDF_0°), BRDF model parameters (BRDF_3f), and reflectance from the vertical observation direction and BRDF model parameters (BRDF_0°+3f). The corresponding classification results are shown in Figure 12. The classification accuracy of BRDF_0°+3f was the highest (OA = 78%). The model parameters express the proportions of isotropic, volumetric, and geometric-optical scattering. Adding them therefore increased the descriptive information on the physical structure of vegetation, which contributed to the classification. The producer’s and user’s accuracies of each type of land feature are shown in Figure 13.

Figure 12.

Overall accuracy and kappa coefficient of vegetation classification based on the BRDF model parameters.

Figure 13.

Producer’s accuracy and user’s accuracy of each vegetation type based on the BRDF model parameters.

5.2. Importance Evaluation of the Observation Angle and Band Selection

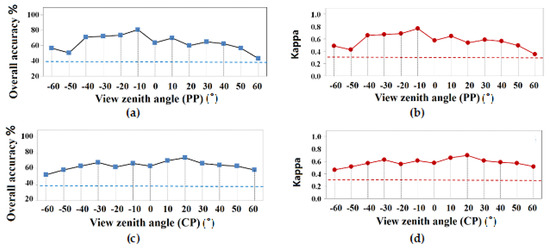

Figure 14 shows the variation in the classification accuracy (overall accuracy and kappa coefficient) based on a single observation angle feature in the principal plane and in the cross-principal plane. In the principal plane, the angle features located in the backward reflection direction (zenith angle between −10° and −20°) were associated with the optimal overall accuracy and kappa coefficient. In the cross-principal plane, the classification accuracy was approximately symmetric about the vertical direction, and its variation was smaller than that in the principal plane.

Figure 14.

Observation angle importance analysis for the main plane. The blue/red dotted lines represent the classification results using DOM data.

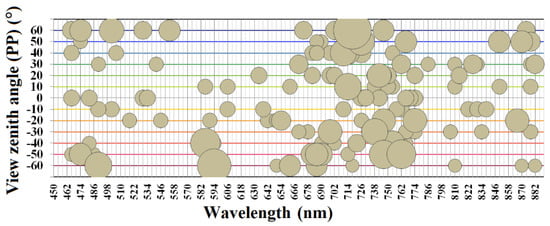

Hyperspectral remote sensing has the advantage of providing hundreds of spectral channels, from which spectral curves that reflect the attribute differences of objects can be obtained. It also makes it convenient to study the band sensitivity of different vegetation types. Figure 15 shows the top 10 bands in terms of feature importance when only the multiband dataset for a single observation zenith angle in the principal plane was used for classification, where the diameter of the circle represents the importance of the band. The feature importance was calculated using SPSS Clementine software, and the indicators included the sensitivity and information gain contribution. The results show that in the principal plane, the blue band (466–492 nm), green band (494–570 nm), red band (642–690 nm), red edge band (694–774 nm), and near-infrared band (810–882 nm) were of high importance, among which the blue, red, and red edge bands were the most important.

Figure 15.

The importance of band selection at each angle in the principal plane. The diameter of the circle represents the importance of the band.
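As an illustration of the information gain indicator mentioned above, the sketch below ranks bands by the information gain of their binned reflectance with respect to the class labels. It is not the SPSS Clementine procedure used in the study; the equal-width binning, the function names, and the toy data are assumptions made only for this example.

```python
import numpy as np

def entropy(labels):
    """Shannon entropy (in bits) of a 1-D array of class labels."""
    _, counts = np.unique(labels, return_counts=True)
    p = counts / counts.sum()
    return float(-(p * np.log2(p)).sum())

def information_gain(feature, labels, bins=4):
    """Information gain of one continuous feature after equal-width binning."""
    feature = np.asarray(feature, dtype=float)
    labels = np.asarray(labels)
    edges = np.histogram_bin_edges(feature, bins=bins)
    binned = np.digitize(feature, edges[1:-1])  # bin index in 0..bins-1
    conditional = 0.0
    for b in np.unique(binned):
        mask = binned == b
        # Weight each bin's label entropy by the fraction of samples in it.
        conditional += mask.mean() * entropy(labels[mask])
    return entropy(labels) - conditional

def rank_bands(reflectance, labels, wavelengths, top=10):
    """Return up to `top` (wavelength, gain) pairs, highest gain first."""
    reflectance = np.asarray(reflectance, dtype=float)
    gains = [information_gain(reflectance[:, j], labels)
             for j in range(reflectance.shape[1])]
    order = np.argsort(gains)[::-1][:top]
    return [(wavelengths[j], gains[j]) for j in order]

# Toy data: 4 samples, 2 bands. Reflectance values and labels are made up.
toy_reflectance = [[0.1, 0.1],
                   [0.1, 0.9],
                   [0.9, 0.1],
                   [0.9, 0.9]]
toy_labels = [0, 0, 1, 1]
ranking = rank_bands(toy_reflectance, toy_labels, wavelengths=[466, 694])
print(ranking)
```

In this toy case the first band separates the two classes completely and the second carries no class information, so the first band is ranked on top. With real data, the number of bins affects the estimate and would need to be chosen with care.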

6. Conclusions

In this paper, the application of UAV multi-angle remote sensing to the fine classification of vegetation was studied by combining a multi-angle remote sensing BRDF model with an object-oriented classification method. The objective was vegetation classification from high-resolution UAV images, and the importance of the BRDF characteristic parameters of ground objects was discussed in detail. In addition, considering the fine spectral resolution of hyperspectral data and the importance of features from the two principal planes, the observation angles and bands that contributed to the classification were further analyzed. The main conclusions are as follows.

(1) The overall classification accuracy (63.9%) based on the BRDF vertical observation reflectance characteristics was approximately 24 percentage points higher than that of traditional UAV orthophoto-based classification (39.8%). The combined application of the reflectance features from the main observation plane and the main vertical plane yielded the best classification results, with an overall accuracy of 89.2% and a kappa of 0.870.

(2) The reflectance characteristics near the hot spots were favorable for distinguishing between maize, soybean, and weeds. The combined application of the reflectance characteristics from the main observation plane and the main vertical plane could improve the classification accuracy of trees with different leaf shapes.

(3) The viewing angle characteristics in the backward reflection direction of the principal plane were better than those in the forward reflection direction. The observation angles associated with zenith angles between −10° and −20° were the most favorable for vegetation classification (sun position: zenith angle 28.86°, azimuth 169.07°).

(4) Bands of high importance for the fine classification of vegetation included the blue band (466–492 nm), green band (494–570 nm), red band (642–690 nm), red edge band (694–774 nm), and near-infrared band (810–882 nm), among which the blue, red, and red edge bands were the most important.
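To put the angles in conclusion (3) in context, the sketch below computes the angle between the sun and view directions for observations in the principal plane, using the solar zenith angle reported above. It follows the sign convention used in this paper, in which negative view zenith angles denote the backward reflection direction; the function name and the choice of sample angles are assumptions made for illustration.

```python
import math

SUN_ZENITH_DEG = 28.86  # solar zenith angle reported in the text

def phase_angle_deg(view_zenith_deg, sun_zenith_deg=SUN_ZENITH_DEG):
    """Angle between the sun and view directions within the principal plane.

    Negative view zenith angles denote the backward reflection direction
    (sensor on the same side as the sun); positive ones the forward direction.
    """
    theta_s = math.radians(sun_zenith_deg)
    theta_v = math.radians(abs(view_zenith_deg))
    # Relative azimuth: 0 deg for backward viewing, 180 deg for forward viewing.
    rel_azimuth = 0.0 if view_zenith_deg < 0 else math.pi
    cos_xi = (math.cos(theta_s) * math.cos(theta_v)
              + math.sin(theta_s) * math.sin(theta_v) * math.cos(rel_azimuth))
    # Clamp to [-1, 1] to guard against rounding before taking the arc cosine.
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_xi))))

for vz in (-20, -10, 0, 10, 20):
    print(f"view zenith {vz:+d} deg -> phase angle {phase_angle_deg(vz):.2f} deg")
```

Under these assumptions, the view directions at −10° and −20° lie roughly 19° and 9° from the sun direction, closer to the hot spot than nadir or any forward-direction angle, which fits the conclusion that backward angles performed better.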

UAV hyperspectral images have centimeter-level spatial resolution. When the research target is larger than the image resolution, an object-oriented analysis method can make target recognition more accurate and efficient. Additionally, combining a multi-angle remote sensing BRDF model with an object-oriented classification method is well suited to studying the BRDF characteristics of vegetation at the canopy level. The research results provide a methodological reference and technical support for BRDF construction based on UAV multi-angle measurements, which promotes the development of multi-angle remote sensing technology in vegetation information extraction. The study has theoretical significance and application value for regional to global vegetation remote sensing applications. In this paper, only two types of classification features derived from the BRDF model, namely reflectance and model parameters, were considered. The use of index features in vegetation classification, such as vegetation indices and the BRDF shape index, along with an evaluation of different classifiers, will be investigated in future work.

Author Contributions

Conceptualization, L.D.; methodology, L.D. and Y.Y.; software, L.D. and Y.Y.; validation, Y.Y.; formal analysis, Y.Y.; investigation, L.D. and Y.Y.; resources, L.D.; data curation, L.D.; writing—original draft preparation, Y.Y.; writing—review and editing, L.D., X.L. and L.Z.; supervision, X.L. and L.Z.; project administration, L.D.

Funding

This research and the APC were funded by the National Key R&D Program of China, grant number 2018YFC0706004.

Conflicts of Interest

The authors declare no conflict of interest.

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).