1. Introduction

Global climate change and carbon-neutral regulations require renewable and sustainable technologies as alternatives to conventional fuels [

1]. One of the key challenges of renewable energy systems is their intermittency, which necessitates the use of alternative energy storage devices to ensure a stable and reliable power supply. Among these, batteries play a fundamental role in energy storage systems, stabilizing solar and wind power outputs, increasing their share in power generation, and facilitating their integration into the electric grid [

2]. Recently, rechargeable lithium–ion (Li-ion) batteries have become the dominant choice for energy storage applications, including electric vehicles (EVs), portable electronics, and grid storage, due to their high energy density, long lifespan, low self-discharge rate, and zero emissions [

3,

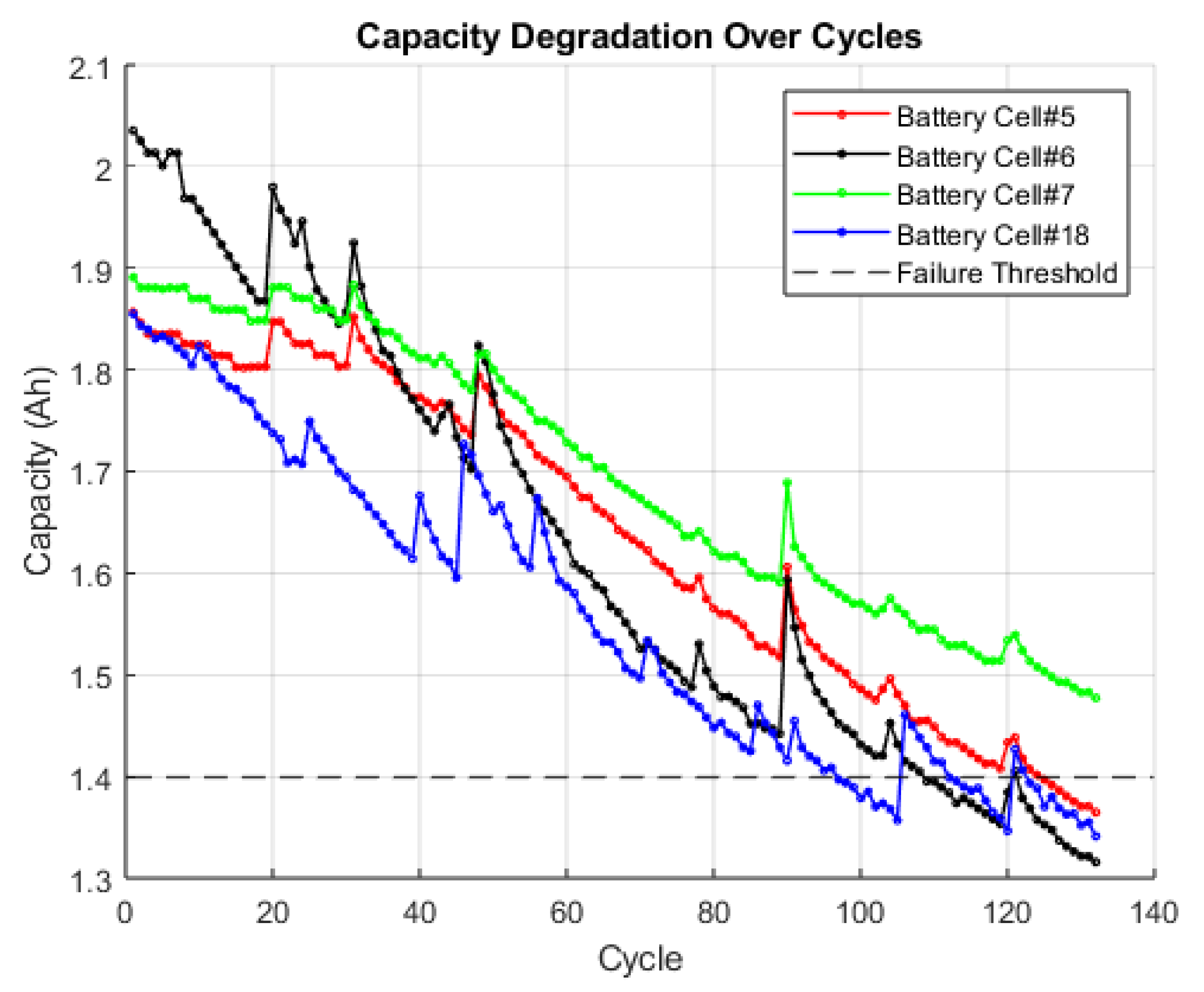

4]. Despite these advantages, Li-ion batteries experience aging phenomena such as capacity fade and increased internal resistance, which degrade performance over time. To address these challenges, Battery Management Systems (BMSs) are employed to monitor battery health, optimize performance, and ensure safe and efficient operation throughout the battery’s lifecycle [

5].

Cyclic aging depends strongly on how frequently the battery is charged and discharged. To mitigate it, the BMS manages the charging and discharging processes of rechargeable batteries; by monitoring battery states, it is crucial to optimizing battery performance and ensuring long-term durability [6]. A key function of the BMS is to monitor the state of charge (SOC), defined as the ratio of the battery’s remaining capacity to its full-charge capacity. Based on this monitoring, the BMS implements a balanced charging and discharging strategy that prevents overcharging and overdischarging, thus preserving battery health and longevity [7].
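
A minimal sketch of the two BMS functions described above follows, assuming a known full-charge capacity: SOC is computed as remaining over full-charge capacity, and charge or discharge requests are refused outside an SOC window. The function names, the sign convention, and the 10%/90% limits are hypothetical choices for illustration; a practical BMS also enforces voltage, current, and temperature limits.

```python
# Hypothetical SOC operating window; real limits depend on chemistry and application.
SOC_MIN = 0.10
SOC_MAX = 0.90


def state_of_charge(remaining_ah: float, full_ah: float) -> float:
    """SOC = remaining capacity / full-charge capacity, clipped to [0, 1]."""
    if full_ah <= 0:
        raise ValueError("full-charge capacity must be positive")
    return min(max(remaining_ah / full_ah, 0.0), 1.0)


def allowed_current(soc: float, requested_a: float) -> float:
    """Return the current the (hypothetical) BMS permits.

    Sign convention (assumed): positive = discharge, negative = charge.
    Charging is refused at or above SOC_MAX (overcharge protection) and
    discharging is refused at or below SOC_MIN (overdischarge protection).
    """
    if requested_a < 0 and soc >= SOC_MAX:
        return 0.0
    if requested_a > 0 and soc <= SOC_MIN:
        return 0.0
    return requested_a


if __name__ == "__main__":
    soc = state_of_charge(remaining_ah=1.9, full_ah=2.0)
    print(f"SOC = {soc:.2f}")          # SOC = 0.95
    print(allowed_current(soc, -1.0))  # charge request refused: 0.0
    print(allowed_current(soc, 2.0))   # discharge request passed through: 2.0
```

Clipping the requested current to zero is the simplest possible policy; real systems usually taper the charge current as SOC approaches the upper limit instead of cutting it abruptly.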

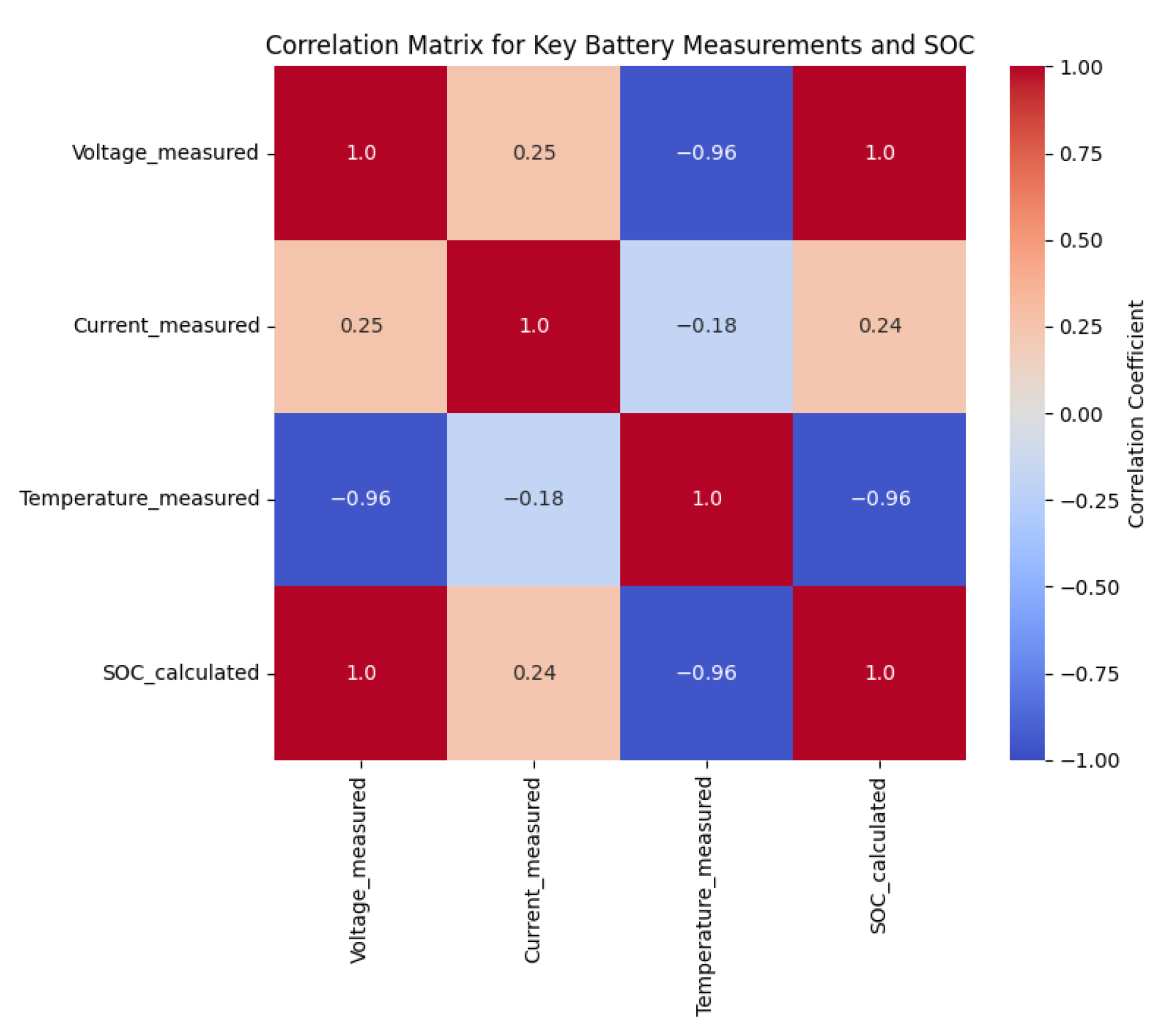

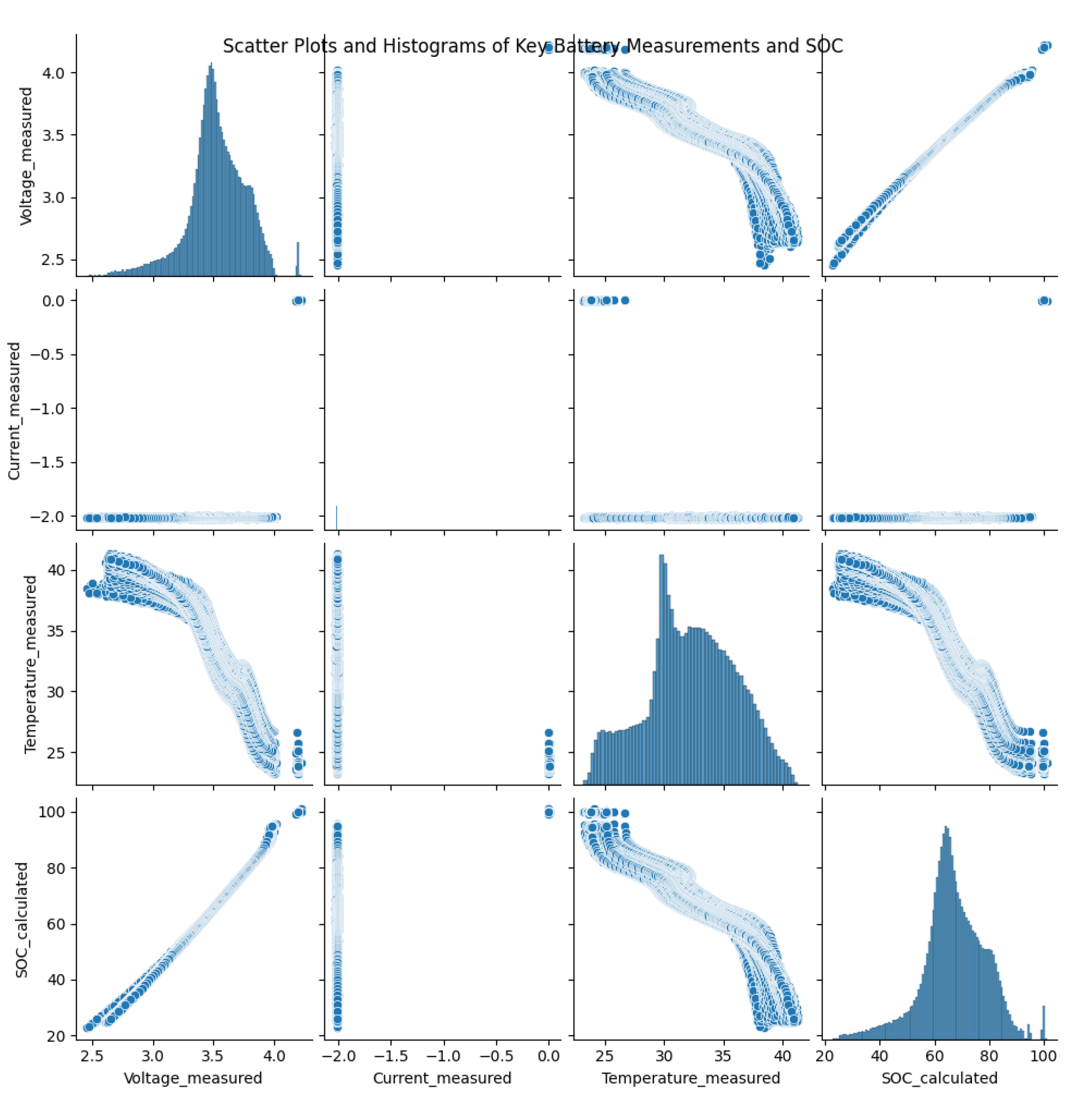

Given the dynamic, non-linear behavior of the battery and the intricate electrochemical processes occurring within it, SOC cannot be measured directly with sensors. Instead, it must be estimated indirectly from measurable signals such as current, voltage, and temperature [8]. Aging cycles and temperature changes significantly influence battery performance, which makes precise SOC calculation extremely difficult [9]. Consequently, various SOC estimation methods have been developed for different battery-powered applications.

Traditional SOC estimation methods often overlook the critical impact of temperature variations, which can lead to thermal runaway, a significant safety concern in lithium–ion batteries. To enhance both accuracy and safety, recent studies have explored joint estimation techniques that simultaneously assess SOC and internal temperature. Notably, Zhang et al. [10] proposed a non-invasive method employing ultrasonic reflection waves. In their approach, a piezoelectric transducer affixed to the battery surface emits ultrasonic pulses, and the reflected signals are analyzed to extract features sensitive to both SOC and temperature changes. By applying a back-propagation neural network to these features, they achieved root mean square errors (RMSEs) of 7.42% for SOC and 0.40 °C for temperature estimation. This method offers a promising avenue for real-time battery monitoring, enhancing the reliability and safety of BMSs. According to previous studies, SOC estimation methods can be broadly categorized into direct, indirect, and data-driven methods [11].

Direct methods rely on simple mathematical relationships to calculate SOC from key battery measurements such as voltage and current. Coulomb counting is a widely used direct method for SOC estimation due to its simplicity and real-time capability [12]. This method integrates the current over time to determine the charge remaining in the battery. Despite its straightforward implementation, Coulomb counting is highly sensitive to inaccuracies in the initial SOC and to sensor errors, which lead to cumulative integration errors over time [13]. Studies have shown that, while Coulomb counting is effective for short-term SOC estimation, its long-term accuracy is compromised without frequent calibration [14]. The open-circuit voltage (OCV) method is another direct method that relies on the relationship between OCV and SOC. The battery must rest for a period of time to reach equilibrium, after which the measured voltage can be mapped to SOC using a predefined OCV–SOC curve. Although the OCV method provides high accuracy under static conditions, the resting-period requirement makes it unsuitable for real-time applications [15].
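
The two direct methods can be sketched together, since in practice a rested OCV reading often supplies the initial SOC for Coulomb counting. The sketch below implements SOC_k = SOC_(k-1) - I_k * dt / (3600 * Q_n), with Q_n in Ah and positive current denoting discharge, and initializes it from a rested voltage through a lookup table. The OCV–SOC table, the 2 Ah capacity, and the 50 mA sensor bias are hypothetical values chosen only to show how a small current offset accumulates; Coulombic efficiency and temperature effects are ignored.

```python
# Hypothetical piecewise-linear OCV-SOC table (illustrative values, not measured data),
# given as (rested open-circuit voltage [V], SOC [0..1]) pairs sorted by voltage.
OCV_TABLE = [
    (3.00, 0.0),
    (3.45, 0.1),
    (3.60, 0.3),
    (3.70, 0.5),
    (3.85, 0.7),
    (4.00, 0.9),
    (4.20, 1.0),
]


def soc_from_ocv(v_rest: float) -> float:
    """OCV method: map a rested terminal voltage to SOC by linear interpolation."""
    if v_rest <= OCV_TABLE[0][0]:
        return OCV_TABLE[0][1]
    if v_rest >= OCV_TABLE[-1][0]:
        return OCV_TABLE[-1][1]
    for (v0, s0), (v1, s1) in zip(OCV_TABLE, OCV_TABLE[1:]):
        if v0 <= v_rest <= v1:
            return s0 + (s1 - s0) * (v_rest - v0) / (v1 - v0)
    raise ValueError("OCV_TABLE must be sorted by voltage")


def coulomb_count(soc0, currents_a, dt_s, capacity_ah):
    """Coulomb counting: SOC_k = SOC_{k-1} - I_k * dt / (3600 * Q_n).

    Positive current means discharge. Returns the SOC trajectory clipped to [0, 1].
    """
    soc = soc0
    trajectory = [soc]
    for current in currents_a:
        soc -= current * dt_s / (3600.0 * capacity_ah)
        soc = min(max(soc, 0.0), 1.0)
        trajectory.append(soc)
    return trajectory


if __name__ == "__main__":
    soc0 = soc_from_ocv(3.70)  # initial SOC from a rested voltage
    n = 1800                   # 30 min at 1 s steps
    exact_path = coulomb_count(soc0, [1.00] * n, 1.0, 2.0)
    biased_path = coulomb_count(soc0, [1.05] * n, 1.0, 2.0)  # +50 mA sensor bias
    print(f"initial SOC {soc0:.3f}")
    print(f"final SOC, exact current: {exact_path[-1]:.4f}")
    print(f"final SOC, biased sensor: {biased_path[-1]:.4f}")
```

Under these assumptions the biased trajectory ends 0.0125 (1.25 percentage points) below the exact one after 30 minutes, and the gap grows linearly with time, which is why Coulomb counting needs periodic recalibration, for example from a rested OCV reading.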

Indirect SOC estimation methods rely on measurements such as voltage, current, and temperature, interpreted through mathematical models or data-driven frameworks. Among these, Electrochemical Impedance Spectroscopy (EIS) has been used to estimate internal battery states by analyzing the frequency-domain impedance response. This technique offers valuable insights into battery degradation mechanisms and internal states. However, the complexity of EIS hardware and the need for extensive post-processing limit its practicality for real-time SOC estimation [16].
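
To make the frequency-domain idea concrete, the sketch below computes the impedance spectrum of a simple equivalent circuit, an ohmic resistance R0 in series with a parallel R1–C1 pair, i.e., Z(ω) = R0 + R1 / (1 + jωR1C1). This circuit and its parameter values are assumptions for illustration; real EIS analysis measures Z over a frequency sweep and fits richer models (often including a Warburg diffusion element) whose parameters are then related to battery states.

```python
import math

# Hypothetical equivalent-circuit parameters (not fitted to any real cell).
R0 = 0.02  # ohmic resistance [ohm]
R1 = 0.03  # charge-transfer resistance [ohm]
C1 = 1.0   # double-layer capacitance [F]


def rc_impedance(freq_hz: float, r0: float = R0, r1: float = R1, c1: float = C1) -> complex:
    """Impedance of R0 in series with a parallel R1-C1 pair.

    Z(w) = R0 + R1 / (1 + j*w*R1*C1), with w = 2*pi*f.
    """
    w = 2.0 * math.pi * freq_hz
    return r0 + r1 / (1.0 + 1j * w * r1 * c1)


def log_frequencies(f_min: float, f_max: float, per_decade: int = 4):
    """Logarithmically spaced frequencies, as used in a typical EIS sweep."""
    decades = math.log10(f_max / f_min)
    n = int(round(decades * per_decade))
    return [f_min * 10 ** (k / per_decade) for k in range(n + 1)]


if __name__ == "__main__":
    # Print Nyquist-plot coordinates (Re Z, -Im Z) in milliohms for each frequency.
    for f in log_frequencies(0.01, 1000.0):
        z = rc_impedance(f)
        print(f"{f:10.3f} Hz  Re={z.real * 1000:6.2f} mOhm  -Im={-z.imag * 1000:6.2f} mOhm")
```

At high frequency the capacitor effectively shorts R1 and Z approaches R0; at low frequency Z approaches R0 + R1, and -Im Z peaks at f = 1/(2πR1C1), tracing the semicircle familiar from Nyquist plots.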

In contrast, model-based estimation methods, particularly those built on the Kalman Filter (KF) framework, have been widely adopted for real-time applications. The KF assumes linear dynamics, while its extensions, including the Extended Kalman Filter (EKF), the Unscented Kalman Filter (UKF), and the Cubature Kalman Filter (CKF), are designed to handle non-linearities in battery behavior [17]. These filters use state-space models and recursive updates to predict and correct SOC estimates under dynamic operating conditions [18]. Among them, the EKF has been especially popular because it balances accuracy and computational efficiency. Nonetheless, the effectiveness of Kalman filter-based methods depends heavily on accurate battery modeling, and these methods can incur considerable computational overhead [19,20].
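
The predict and correct cycle of an EKF can be sketched for the simplest case: a single state (SOC), a Coulomb-counting process model, and an internal-resistance measurement model V = OCV(SOC) - R0 * I. The OCV curve, R0, capacity, and noise variances below are hypothetical, and a practical estimator would typically add RC-pair states and use a measured OCV table. Starting the filter from a deliberately wrong SOC shows how the voltage correction removes an initial error that plain Coulomb counting would carry indefinitely.

```python
import random

# Hypothetical cell parameters for illustration only.
CAPACITY_AH = 2.0  # nominal capacity Q_n [Ah]
R0_OHM = 0.05      # ohmic resistance [ohm]


def ocv(soc):
    """Hypothetical smooth, monotonic OCV-SOC curve [V]."""
    return 3.4 + 0.6 * soc + 0.2 * soc ** 2


def docv_dsoc(soc):
    """Derivative of ocv(); used as the EKF measurement Jacobian H."""
    return 0.6 + 0.4 * soc


def ekf_soc(voltages, currents, dt_s, soc0, p0=0.1, q=1e-7, r=2.5e-5):
    """Scalar EKF with state x = SOC.

    Process model:     x_k = x_{k-1} - I_k * dt / (3600 * Q_n) + w_k
    Measurement model: V_k = OCV(x_k) - R0 * I_k + v_k
    Positive current = discharge; q and r are the process/measurement variances.
    """
    x, p = soc0, p0
    estimates = []
    for v_meas, current in zip(voltages, currents):
        # Predict: Coulomb-counting transition (state Jacobian F = 1).
        x = x - current * dt_s / (3600.0 * CAPACITY_AH)
        p = p + q
        # Update: linearize the voltage model around the predicted SOC.
        h = docv_dsoc(x)
        innovation = v_meas - (ocv(x) - R0_OHM * current)
        s = h * p * h + r
        k = p * h / s
        x = x + k * innovation
        p = (1.0 - k * h) * p
        estimates.append(x)
    return estimates


def simulate(n_steps=600, dt_s=1.0, soc_true0=0.8, current_a=1.0, noise_v=0.005, seed=0):
    """Generate true SOC and noisy terminal voltage for a constant-current discharge."""
    rng = random.Random(seed)
    soc, socs, volts = soc_true0, [], []
    for _ in range(n_steps):
        soc -= current_a * dt_s / (3600.0 * CAPACITY_AH)
        socs.append(soc)
        volts.append(ocv(soc) - R0_OHM * current_a + rng.gauss(0.0, noise_v))
    return socs, volts, [current_a] * n_steps


if __name__ == "__main__":
    true_soc, volts, amps = simulate()
    est = ekf_soc(volts, amps, dt_s=1.0, soc0=0.5)  # deliberately wrong initial SOC
    print(f"true final SOC: {true_soc[-1]:.4f}")
    print(f"EKF estimate:   {est[-1]:.4f}")
```

The Jacobian H = dOCV/dSOC is re-evaluated at every step; this local linearization is what distinguishes the EKF from the linear KF, and its accuracy depends on how well the assumed OCV curve and resistance match the real cell.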

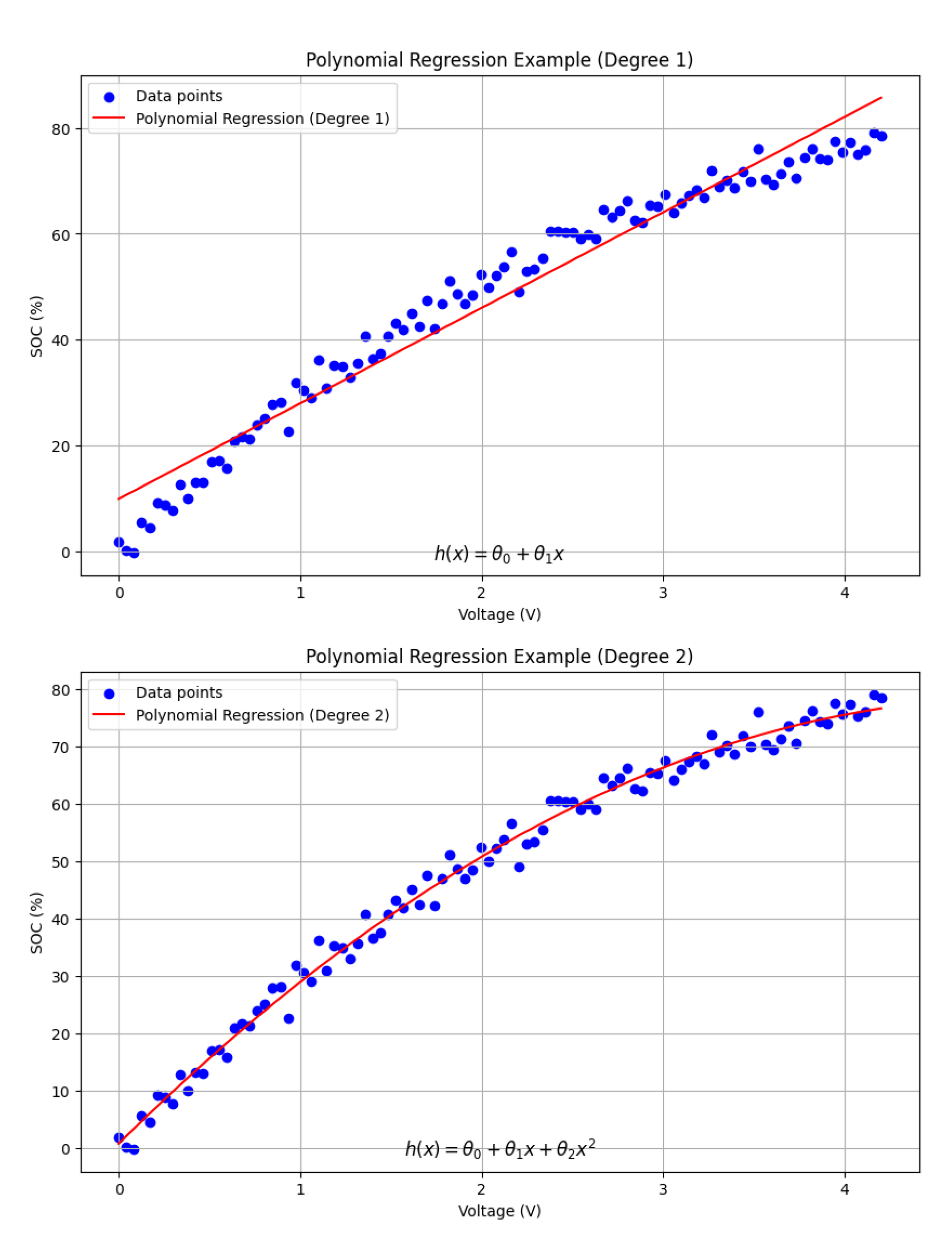

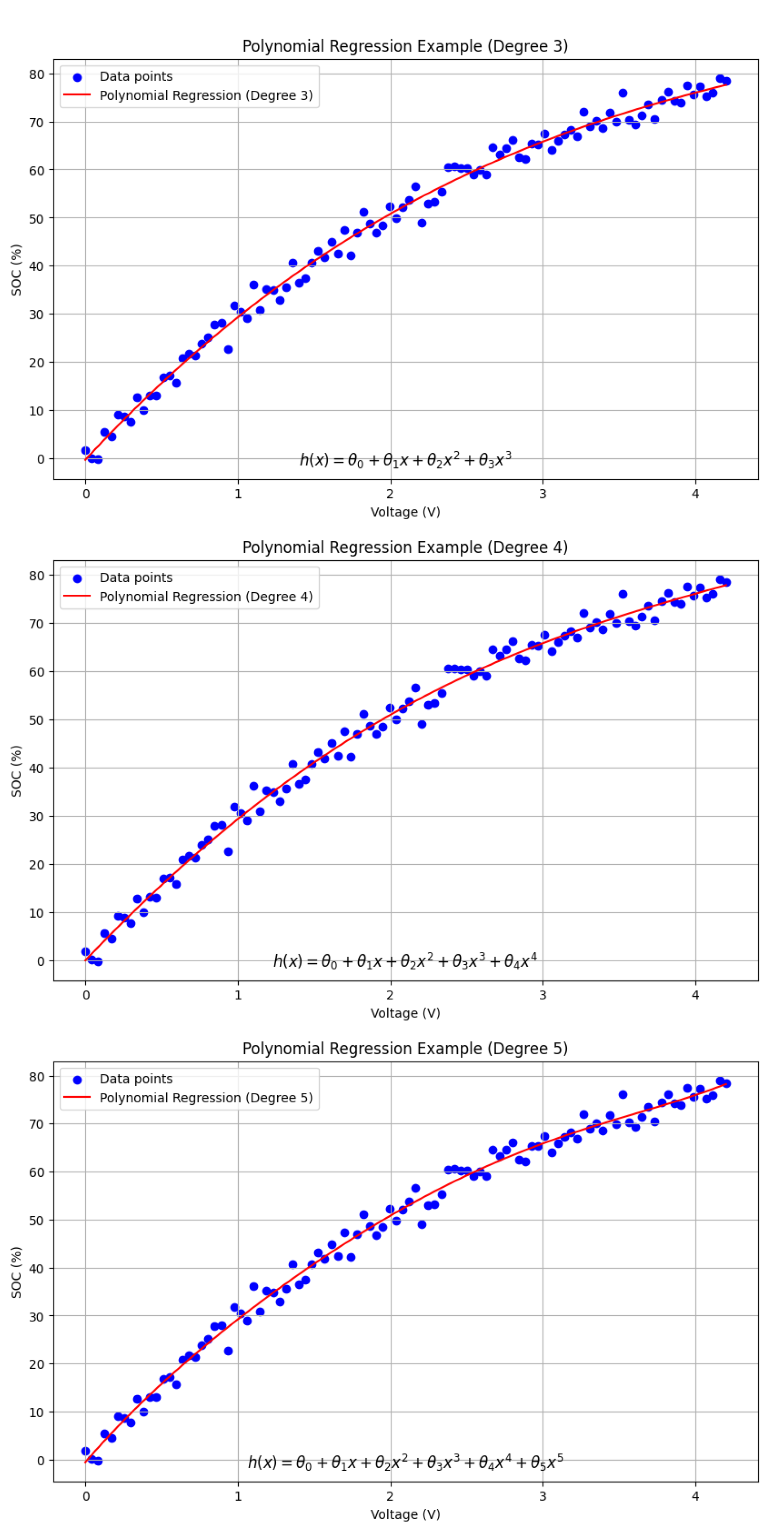

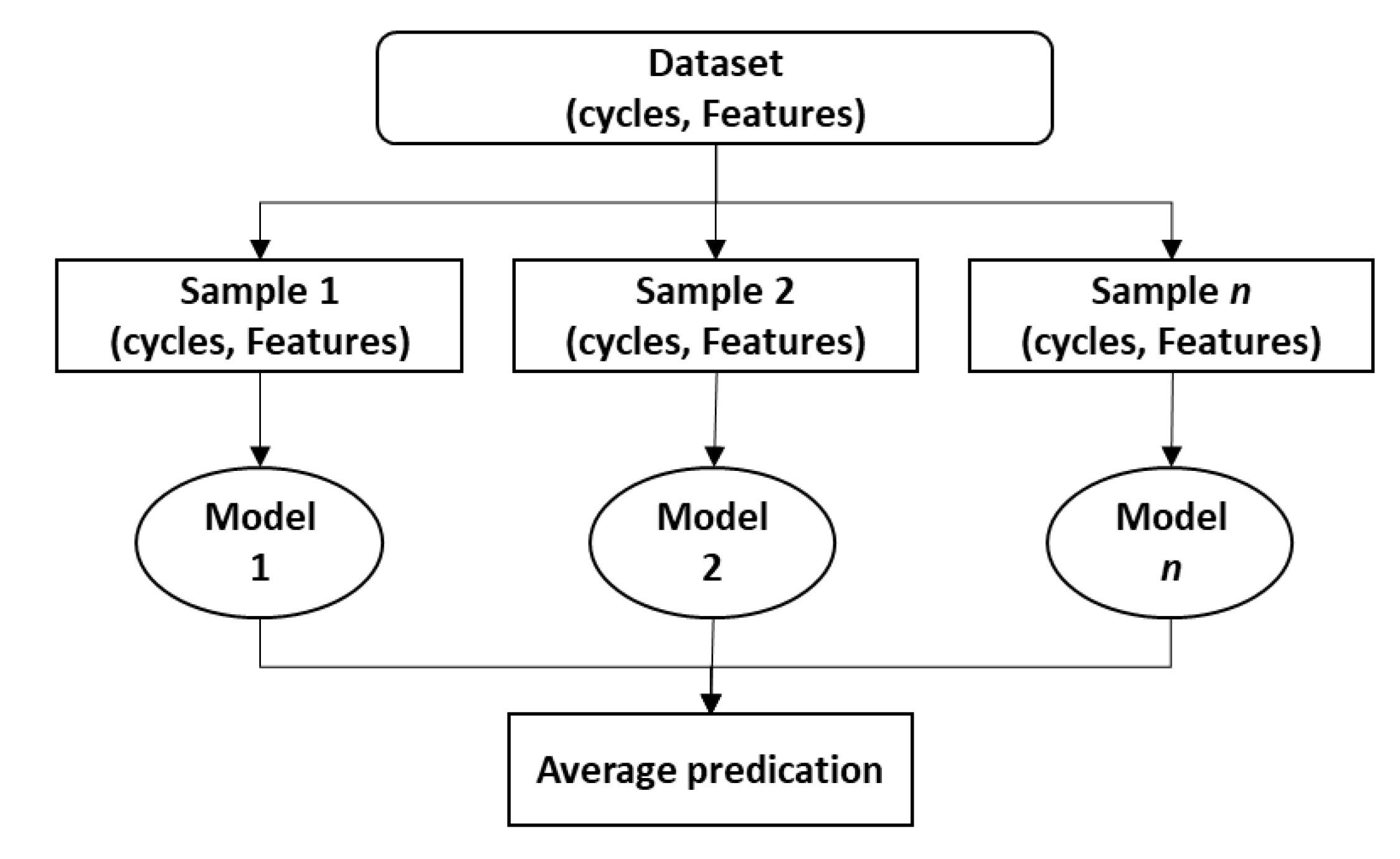

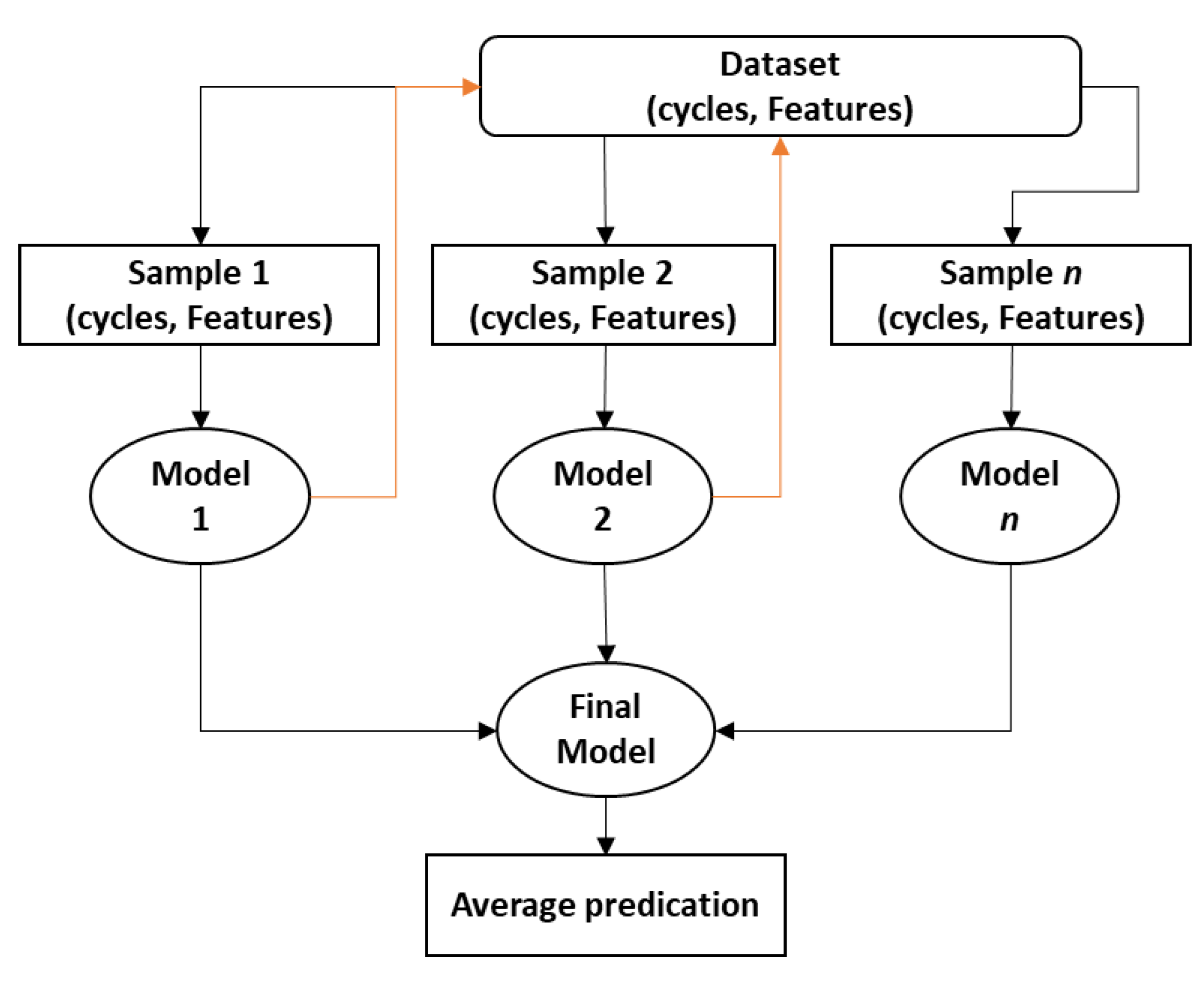

Recently, data-driven methods based on regression machine learning models have gained significant attention for SOC estimation [21,22]. In the data-driven approach, historical data are used to learn the complex relationships between measurable parameters, such as voltage, current, and temperature, and SOC. Regression models can handle non-linearities and interactions between variables, and they have shown good performance in SOC estimation. However, their performance depends heavily on the quality and quantity of the training data [11,22]. Regression machine learning models commonly used for SOC estimation include linear regression [23], support vector machines [24], Gaussian process regression [25], and ensemble regression models [26]. Their advantages include fast training and low computational requirements. However, they remain limited in adapting to complex battery operating conditions [27]. Therefore, improving the performance of these models in SOC estimation is crucial for advanced BMS operation.
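
As a minimal sketch of regression-based SOC estimation, the code below fits polynomial models of SOC versus voltage by ordinary least squares, solving the normal equations by Gaussian elimination with the standard library only. The training pairs come from a hypothetical noise-free OCV curve and only one input is used, whereas practical models take voltage, current, and temperature; a library such as scikit-learn (`PolynomialFeatures` with `LinearRegression`) would normally replace the hand-written linear algebra.

```python
import math


def fit_polynomial(x, y, degree):
    """Least-squares polynomial fit via the normal equations (A^T A) c = A^T y.

    Inputs are standardized first to keep the system well conditioned.
    Returns (coefficients, mean, scale) for use with predict_polynomial().
    """
    mean = sum(x) / len(x)
    scale = math.sqrt(sum((v - mean) ** 2 for v in x) / len(x)) or 1.0
    z = [(v - mean) / scale for v in x]
    n = degree + 1
    # Normal-equation matrix M = A^T A and right-hand side b = A^T y.
    m = [[sum(zi ** (r + c) for zi in z) for c in range(n)] for r in range(n)]
    b = [sum((zi ** r) * yi for zi, yi in zip(z, y)) for r in range(n)]
    # Gaussian elimination with partial pivoting.
    for col in range(n):
        pivot = max(range(col, n), key=lambda row: abs(m[row][col]))
        m[col], m[pivot] = m[pivot], m[col]
        b[col], b[pivot] = b[pivot], b[col]
        for row in range(col + 1, n):
            f = m[row][col] / m[col][col]
            for c in range(col, n):
                m[row][c] -= f * m[col][c]
            b[row] -= f * b[col]
    # Back substitution.
    coef = [0.0] * n
    for row in range(n - 1, -1, -1):
        coef[row] = (b[row] - sum(m[row][c] * coef[c] for c in range(row + 1, n))) / m[row][row]
    return coef, mean, scale


def predict_polynomial(model, x):
    coef, mean, scale = model
    return [sum(c * ((v - mean) / scale) ** k for k, c in enumerate(coef)) for v in x]


def rmse(y_true, y_pred):
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(y_true, y_pred)) / len(y_true))


if __name__ == "__main__":
    socs = [k / 100 for k in range(101)]
    # Hypothetical OCV curve, used only to generate noise-free training pairs.
    volts = [3.4 + 0.6 * s + 0.2 * s ** 2 for s in socs]
    for degree in (1, 2, 3):
        model = fit_polynomial(volts, socs, degree)
        print(f"degree {degree}: RMSE = {rmse(socs, predict_polynomial(model, volts)):.5f}")
```

Raising the degree lowers the RMSE on this smooth, noise-free curve; with noisy measurements, higher degrees also begin to fit the noise, which motivates cleaning the inputs before training.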

The accuracy of regression models in previous studies is affected by measurement noise [28]. When this noise cannot be ignored, the estimation results often exhibit significant fluctuations, because the noise distorts the original characteristics of the data and degrades the performance of machine learning models [29]. Therefore, a denoising step is essential before further processing to ensure reliable SOC estimation [30]. In the literature, Chemali et al. [31] used voltage, temperature, current, and voltage values averaged over 50 to 400 steps as input features to a neural network. However, this fixed-window averaging approach cannot adapt to varying noise intensities, which may lead to either over-smoothing or under-smoothing of the signal. Other recent studies have incorporated various denoising techniques into machine learning models to improve SOC estimation. Chen et al. [30] proposed a hybrid neural network that combines a Denoising Autoencoder (DAE) with a Gated Recurrent Unit (GRU). The DAE serves as a pre-processing step that extracts useful features from the input data while reducing measurement noise, and these features are then fed into the GRU regression model. Moreover, Wang et al. [32] addressed non-Gaussian noise and outliers in battery data by modifying the learning algorithm of an Extreme Learning Machine: instead of the usual mean squared error, a mixture generalized maximum correntropy criterion was used as the loss function to improve robustness to noise and outliers. The wavelet transform (WT) is commonly used for signal analysis and denoising, although it is more complex to implement than simple averaging. In [33], the authors applied the discrete wavelet transform (DWT) with a five-level decomposition and a third-order Daubechies wavelet, effectively removing noise and stabilizing the estimated SOC. However, that study used the EKF and did not address the applicability of the wavelet transform to machine learning models.
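
The limitation of a fixed averaging window can be illustrated with a synthetic signal. The sketch below applies a causal moving average, one plausible reading of the mean-step features described above, to a hypothetical noisy voltage trace containing a 0.1 V load step, and reports the residual noise on the flat segment and the error 50 samples after the step for 50- and 400-sample windows. The signal, noise level, and step size are assumptions for illustration.

```python
import math
import random


def trailing_mean(signal, window):
    """Causal moving average over the last `window` samples (fewer at the start)."""
    out, running = [], 0.0
    for k, value in enumerate(signal):
        running += value
        if k >= window:
            running -= signal[k - window]
        out.append(running / min(k + 1, window))
    return out


def rms(values):
    return math.sqrt(sum(v * v for v in values) / len(values))


def synthetic_voltage(n=2500, step_at=2000, noise_v=0.01, seed=0):
    """Hypothetical noisy voltage: 3.7 V, then a 0.1 V load step at `step_at`."""
    rng = random.Random(seed)
    clean = [3.7 if k < step_at else 3.6 for k in range(n)]
    noisy = [v + rng.gauss(0.0, noise_v) for v in clean]
    return clean, noisy


if __name__ == "__main__":
    clean, noisy = synthetic_voltage()
    for window in (50, 400):
        smoothed = trailing_mean(noisy, window)
        # Residual noise on the flat segment, after the window has filled.
        residual = rms([s - c for s, c in zip(smoothed[400:2000], clean[400:2000])])
        # Tracking error 50 samples after the load step.
        lag = abs(smoothed[2050] - clean[2050])
        print(f"window={window:3d}  residual={residual * 1000:.2f} mV  step error={lag * 1000:.1f} mV")
```

The 400-sample window suppresses more noise but still misses the step by tens of millivolts, while the 50-sample window tracks the step but leaves more noise; neither setting adjusts itself to the actual noise level.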

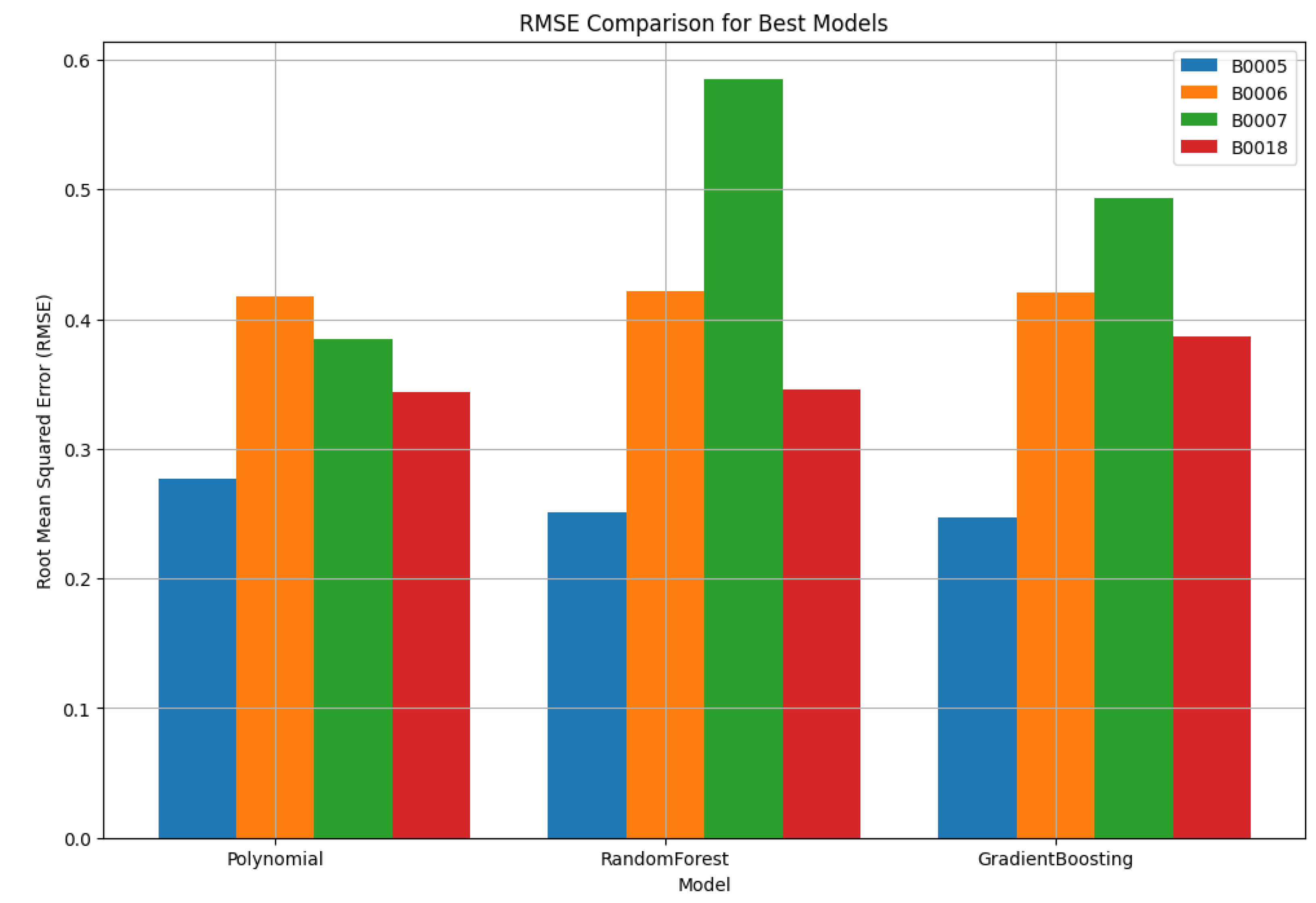

In this study, a new algorithm based on wavelet transforms is proposed to address measurement noise by removing noise components from the detail coefficients of battery signals. These detail coefficients capture high-frequency content, which is typically associated with noise. The effectiveness of wavelet denoising is validated by its ability to reduce prediction errors and improve the reliability of SOC estimation results. Combining wavelet denoising with regression machine learning methods offers distinct advantages in handling noise and capturing non-linear relationships, providing a more flexible and accurate solution for SOC estimation. These advantages make the approach better suited than other methods to the challenges of SOC estimation [34].
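
The core idea, decomposing the signal, shrinking only the detail coefficients, and reconstructing, can be sketched with a multi-level Haar transform and soft thresholding in plain Python. This is an illustrative substitute, not the implementation used in this study: the Haar wavelet replaces the Daubechies wavelets used in [33], the thresholds are absolute values in volts rather than the threshold levels evaluated in this work, and the discharge ramp and 10 mV noise are synthetic. With PyWavelets, the same steps map onto `pywt.wavedec`, `pywt.threshold`, and `pywt.waverec`.

```python
import math
import random


def haar_step(x):
    """One level of the orthonormal Haar DWT: (approximation, detail)."""
    s = 1.0 / math.sqrt(2.0)
    approx = [(x[2 * k] + x[2 * k + 1]) * s for k in range(len(x) // 2)]
    detail = [(x[2 * k] - x[2 * k + 1]) * s for k in range(len(x) // 2)]
    return approx, detail


def haar_inverse_step(approx, detail):
    """Exact inverse of haar_step."""
    s = 1.0 / math.sqrt(2.0)
    out = []
    for a, d in zip(approx, detail):
        out.extend(((a + d) * s, (a - d) * s))
    return out


def soft_threshold(coeffs, t):
    """Shrink each coefficient toward zero by t; magnitudes below t become 0."""
    return [math.copysign(max(abs(c) - t, 0.0), c) for c in coeffs]


def wavelet_denoise(signal, levels, threshold):
    """Decompose, soft-threshold only the detail coefficients, reconstruct.

    Approximation coefficients are left untouched. len(signal) must be
    divisible by 2**levels because this sketch does no boundary padding.
    """
    if len(signal) % (2 ** levels):
        raise ValueError("signal length must be divisible by 2**levels")
    approx, details = list(signal), []
    for _ in range(levels):
        approx, d = haar_step(approx)
        details.append(soft_threshold(d, threshold))
    for d in reversed(details):  # reconstruct from the coarsest level up
        approx = haar_inverse_step(approx, d)
    return approx


def rmse(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))


if __name__ == "__main__":
    rng = random.Random(1)
    n = 1024
    clean = [4.1 - 0.5 * k / n for k in range(n)]     # hypothetical discharge ramp [V]
    noisy = [v + rng.gauss(0.0, 0.01) for v in clean]  # 10 mV Gaussian noise
    print(f"noisy RMSE: {rmse(noisy, clean) * 1000:.2f} mV")
    for t in (0.0, 0.01, 0.02, 0.04, 0.08):             # threshold sweep
        den = wavelet_denoise(noisy, levels=5, threshold=t)
        print(f"threshold={t:.2f}  denoised RMSE: {rmse(den, clean) * 1000:.2f} mV")
```

A threshold of zero reproduces the noisy input (perfect reconstruction). Larger thresholds discard more detail coefficients, which removes most of the noise but, beyond some point, also removes genuine signal content (here, the slope within each 32-sample block), so the threshold has to be evaluated systematically rather than fixed a priori.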

The primary contribution of this work lies in integrating wavelet-based denoising as a pre-processing step to enhance the quality of input signals, specifically voltage and temperature measurements, used in machine learning-based SOC estimation. By systematically evaluating multiple thresholding levels and applying them across several regression models, this study demonstrates that wavelet denoising significantly enhances estimation accuracy, particularly under real-world noisy measurement conditions. This approach offers a practical and scalable enhancement to SOC prediction pipelines and holds promise for implementation in embedded battery management systems.

4. Conclusions

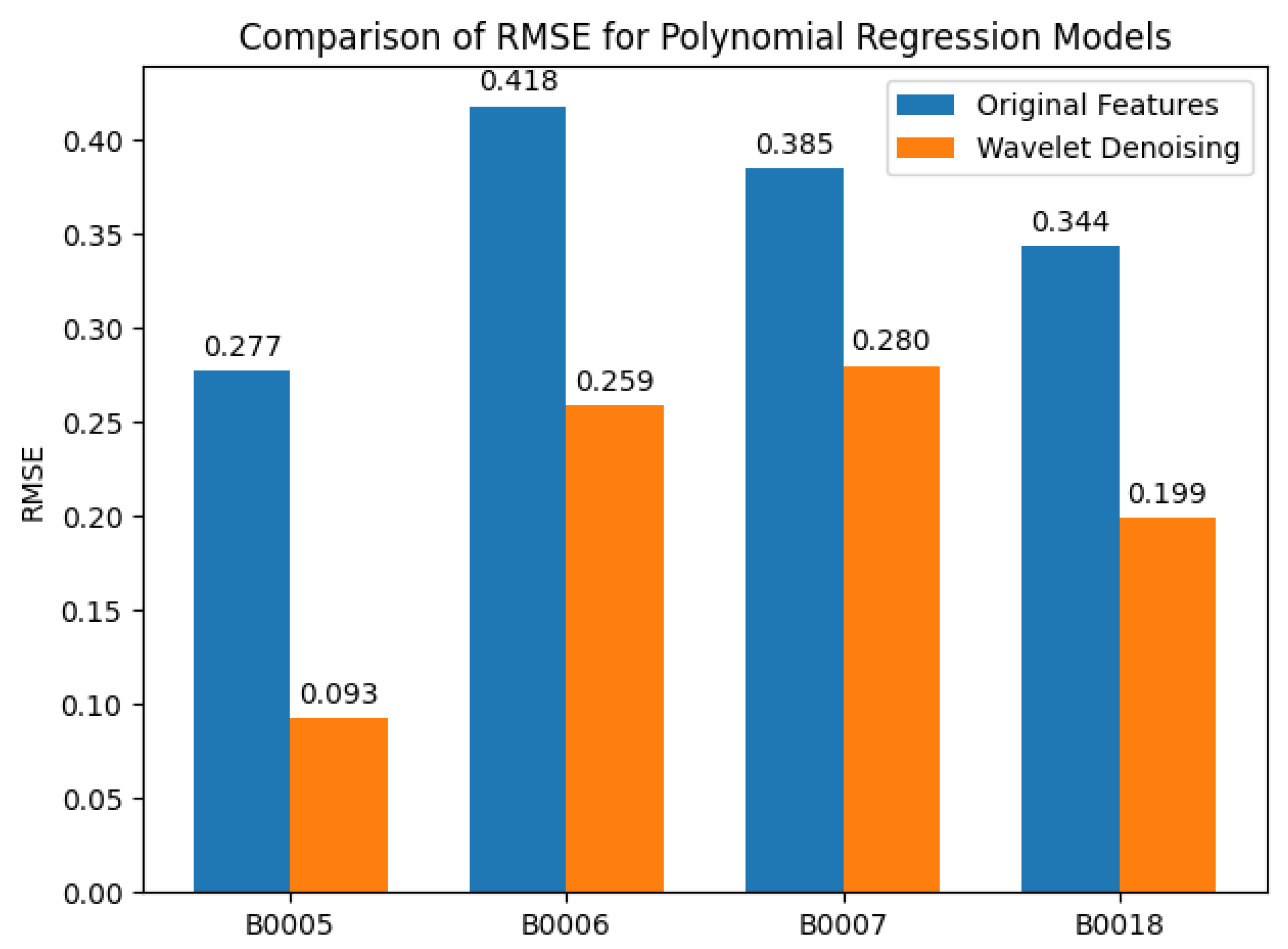

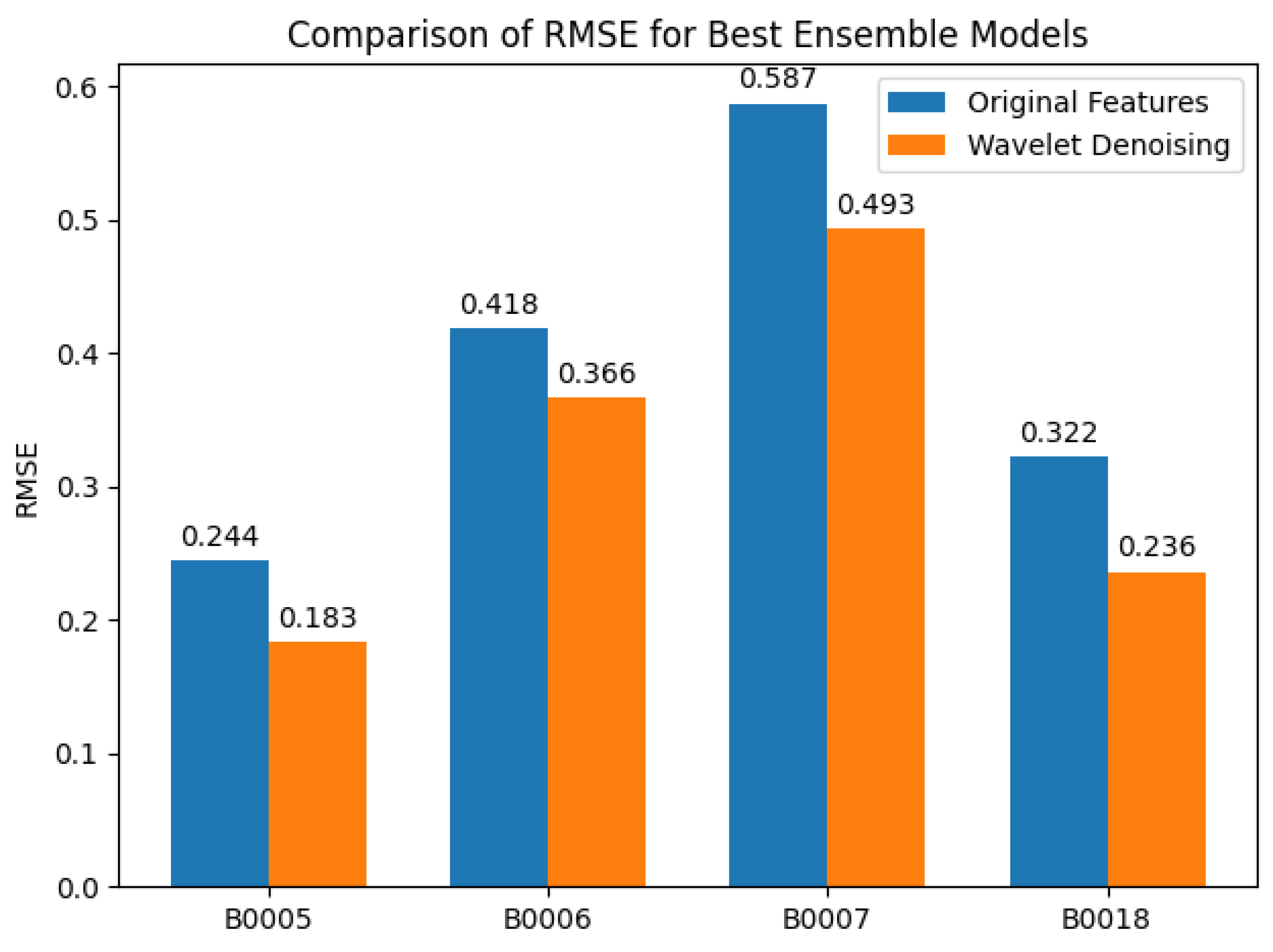

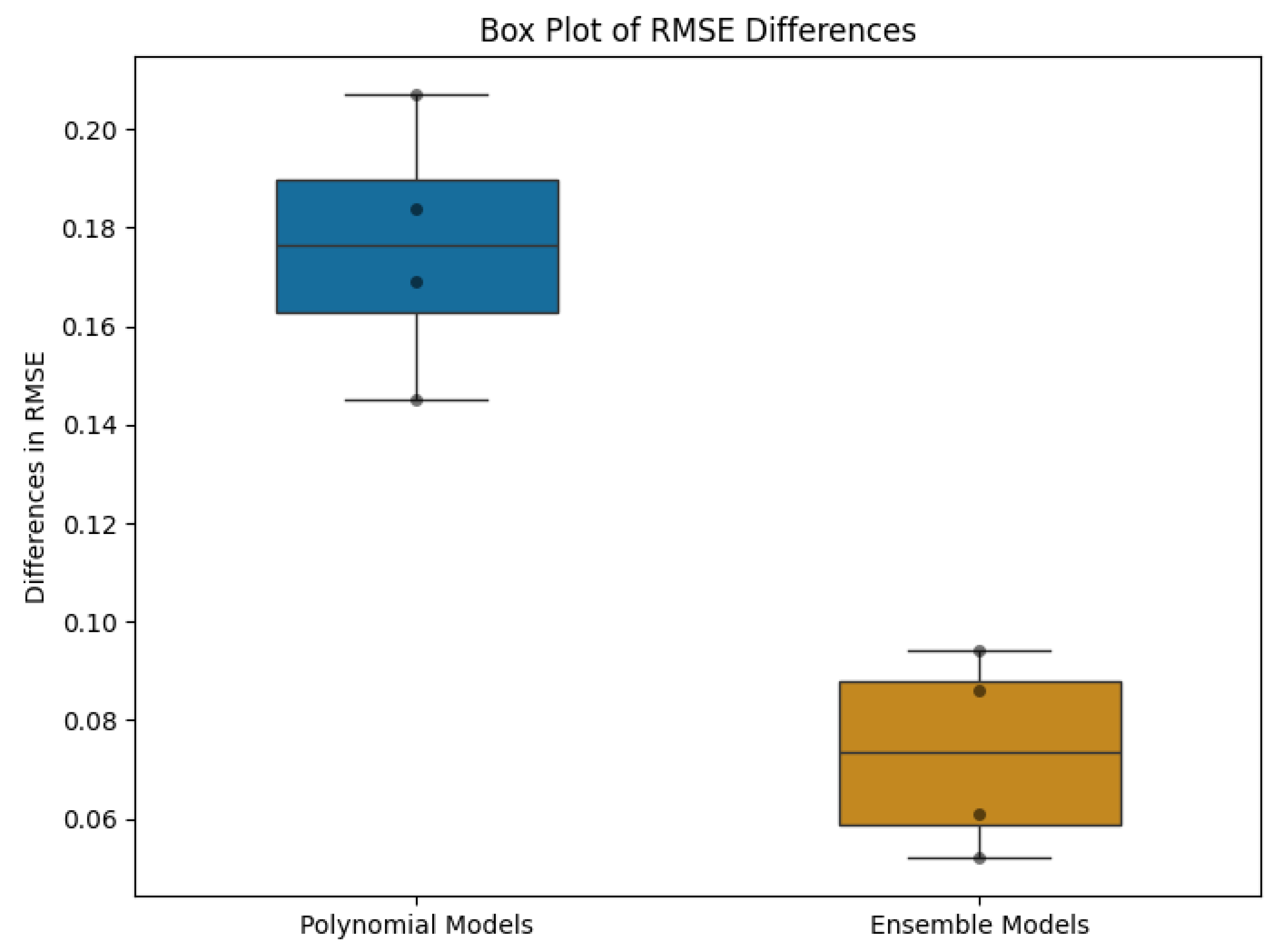

This study has investigated the impact of wavelet denoising on the accuracy of SOC estimation models, using both polynomial and ensemble machine learning models. The results indicate that both categories of models benefit notably from wavelet denoising. Wavelet-Polynomial models with threshold level 5 (0.2) showed significant accuracy improvements, as indicated by lower RMSE values across various battery datasets. Ensemble models, specifically Random Forest and Gradient Boosting, also improved, with a more consistent and less variable degree of enhancement than the polynomial models. The statistical assessment confirms the substantial influence of wavelet denoising on SOC estimation precision: the p-values from the t-tests provide strong evidence against the null hypothesis, supporting the superiority of wavelet-denoised data over the original data in terms of RMSE. Furthermore, the evaluation of model performance under varying polynomial degrees and ensemble model configurations provides insight into the conditions under which each model type is most effective. These insights are important for the practical implementation of SOC estimation methods in real-world scenarios, where prediction accuracy and reliability are critical. Future research should focus on fine-tuning denoising parameters and integrating these strategies into real-time SOC estimation systems, such as those in electric vehicles or battery energy storage systems. Moreover, extending the investigation to other battery types and charging/discharging cycles could further support and strengthen the proposed approach.
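
For reference, the test statistic behind such a comparison can be computed as follows, assuming a paired design in which each dataset is evaluated once with original and once with wavelet-denoised inputs. The RMSE values in the example are placeholders, not results from this study; `scipy.stats.ttest_rel` returns the same statistic together with the p-value.

```python
import math
import statistics


def paired_t_statistic(rmse_original, rmse_denoised):
    """Paired t statistic: t = mean(d) / (stdev(d) / sqrt(n)), d = original - denoised.

    A positive t means denoising lowered the RMSE on average. The returned
    degrees of freedom (n - 1) are needed to look up the p-value.
    """
    if len(rmse_original) != len(rmse_denoised) or len(rmse_original) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    d = [a - b for a, b in zip(rmse_original, rmse_denoised)]
    sd = statistics.stdev(d)  # sample standard deviation (n - 1 denominator)
    if sd == 0.0:
        raise ValueError("all differences are identical; t is undefined")
    return statistics.mean(d) / (sd / math.sqrt(len(d))), len(d) - 1


if __name__ == "__main__":
    # Placeholder RMSE values for four hypothetical cells (NOT results from this study).
    original = [0.050, 0.046, 0.061, 0.055]
    denoised = [0.041, 0.040, 0.050, 0.047]
    t, dof = paired_t_statistic(original, denoised)
    print(f"t = {t:.3f} with {dof} degrees of freedom")
    # The two-sided 5% critical value of the t distribution with 3 dof is about 3.182.
    print("significant at 5%" if abs(t) > 3.182 else "not significant at 5%")
```

Only the t statistic is computed here; the p-value follows from the t distribution with n - 1 degrees of freedom.
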
Although traditional SOC estimation methods such as the Extended Kalman Filter (EKF), Particle Filtering (PF), and direct machine learning models have been widely adopted, they are often sensitive to measurement noise and rely heavily on accurate system modeling. In contrast, the proposed integration of wavelet denoising with regression-based machine learning offers a unique advantage by explicitly reducing signal noise prior to model training. This pre-processing step enhances feature stability and contributes to improved generalization across aging cycles, as reflected by consistent reductions in RMSE across multiple battery datasets. To further validate the effectiveness of the proposed approach, future work should incorporate baseline comparisons with classical model-based estimators such as EKF and PF, as well as deep learning-based methods. Such benchmarking will support a more comprehensive evaluation framework and better highlight the strengths and trade-offs of the proposed wavelet-enhanced data-driven methodology. Finally, we note that the proposed method was validated using only the NASA battery dataset, which primarily reflects degradation under ambient temperature conditions. To enhance the generalizability and applicability of the proposed approach, future work should incorporate additional datasets collected under varying environmental and operational conditions, such as different temperatures, charging/discharging rates, and battery chemistries. Multi-condition validation will further support the robustness of wavelet-based SOC estimation models for diverse real-world applications.