Federated Decision Transformers for Scalable Reinforcement Learning in Smart City IoT Systems

Abstract

1. Introduction

- We demonstrate how the FDT supports horizontal scalability and privacy by enabling decentralized policy learning through federated aggregation of local updates [34].
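As a rough illustration of what federated aggregation of local updates can look like, the sketch below applies a sample-weighted average of client deltas to a global model, in the style of FedAvg. The weighting rule, the flat `Weights` format, and the function name `fedavg` are assumptions made for this example and are not taken from the FDT specification.

```python
from typing import Dict, List, Tuple

# Hypothetical parameter format: layer name -> flat list of floats.
Weights = Dict[str, List[float]]


def fedavg(global_w: Weights, updates: List[Tuple[Weights, int]]) -> Weights:
    """Apply the sample-weighted mean of client deltas to the global model.

    Each update is (delta, n_samples) with delta = local_weights - global_weights.
    Raw trajectories never leave the client; only deltas are passed in.
    """
    total = sum(n for _, n in updates)
    if total <= 0:
        raise ValueError("no samples reported by participating clients")
    new_w: Weights = {}
    for name, params in global_w.items():
        agg = [0.0] * len(params)
        for delta, n in updates:
            for i, d in enumerate(delta[name]):
                agg[i] += (n / total) * d  # weight by share of local samples
        new_w[name] = [p + a for p, a in zip(params, agg)]
    return new_w


global_w = {"policy_head": [0.0, 1.0]}
updates = [
    ({"policy_head": [1.0, 0.0]}, 100),   # client A: 100 local samples
    ({"policy_head": [0.0, -1.0]}, 300),  # client B: 300 local samples
]
new_global = fedavg(global_w, updates)
```

Adding clients only lengthens the `updates` list, which is one way to read the horizontal-scalability claim: the server cost grows linearly with the number of participating clients per round.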

2. Related Work

2.1. Federated Learning in Smart Cities

2.2. Reinforcement Learning for Autonomous Decision-Making

2.3. Transformers for Reinforcement Learning and MARL

2.4. Federated Reinforcement Learning and Federated Transformers

3. Methodology

3.1. Model Design

- Embeddings. States, actions, and RTGs are projected into a common hidden dimension and concatenated with positional encodings.

- Transformer encoder. Multi-head self-attention captures long-range dependencies, enabling agents to model temporal correlations and interaction patterns without recurrence.

- Policy head. Outputs logits for discrete actions or mean values for continuous actions, conditioned on the contextualized sequence representation.

- RTG normalization. A running normalizer stabilizes training in heterogeneous client environments. Normalization is performed locally at each client, while aggregated statistics across clients maintain consistency at the federated level.
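The RTG normalization step can be sketched as follows. This is a minimal example, assuming undiscounted returns-to-go as in the original Decision Transformer [20], Welford's online update for the local statistics, and a pairwise merge of (count, mean, M2) summaries at the server. The class `RunningStats` and its methods are hypothetical names introduced for illustration.

```python
import math
from dataclasses import dataclass
from typing import List


def returns_to_go(rewards: List[float]) -> List[float]:
    """Undiscounted return-to-go: rtg[t] is the sum of rewards from step t on."""
    rtg, running = [0.0] * len(rewards), 0.0
    for t in range(len(rewards) - 1, -1, -1):
        running += rewards[t]
        rtg[t] = running
    return rtg


@dataclass
class RunningStats:
    count: int = 0
    mean: float = 0.0
    m2: float = 0.0  # sum of squared deviations from the mean

    def update(self, x: float) -> None:
        # Welford's online update: one pass, no stored history.
        self.count += 1
        delta = x - self.mean
        self.mean += delta / self.count
        self.m2 += delta * (x - self.mean)

    @property
    def std(self) -> float:
        # Population standard deviation; falls back to 1.0 with too few samples.
        return math.sqrt(self.m2 / self.count) if self.count > 1 else 1.0

    def normalize(self, x: float, eps: float = 1e-8) -> float:
        return (x - self.mean) / (self.std + eps)

    def merge(self, other: "RunningStats") -> "RunningStats":
        # Combine two summaries without access to the underlying samples.
        n = self.count + other.count
        if n == 0:
            return RunningStats()
        delta = other.mean - self.mean
        mean = self.mean + delta * other.count / n
        m2 = self.m2 + other.m2 + delta * delta * self.count * other.count / n
        return RunningStats(n, mean, m2)


# Two clients with differently scaled rewards keep local statistics.
client_a, client_b = RunningStats(), RunningStats()
for g in returns_to_go([1.0, 2.0, 3.0]):
    client_a.update(g)
for g in returns_to_go([0.0, 0.0, 10.0]):
    client_b.update(g)

# The server only sees (count, mean, m2) from each client.
federated = client_a.merge(client_b)
```

Only three scalars per client cross the network in this sketch, so the federated statistics stay consistent without exposing individual returns.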

3.2. Client-Side Training

Complexity

3.3. Server-Side Aggregation

3.3.1. Complexity

Algorithm 1. FDT Client-Side Training

Algorithm 2. Server Aggregation with Privacy and Robustness

3.3.2. Explanation of Algorithm 2

3.3.3. Complexity

4. Comparative Analysis with MAAC

Baseline Comparison Scope

5. Experimental Setup

5.1. Multi-Agent Environment

5.2. Federated Setup

6. Results and Discussion

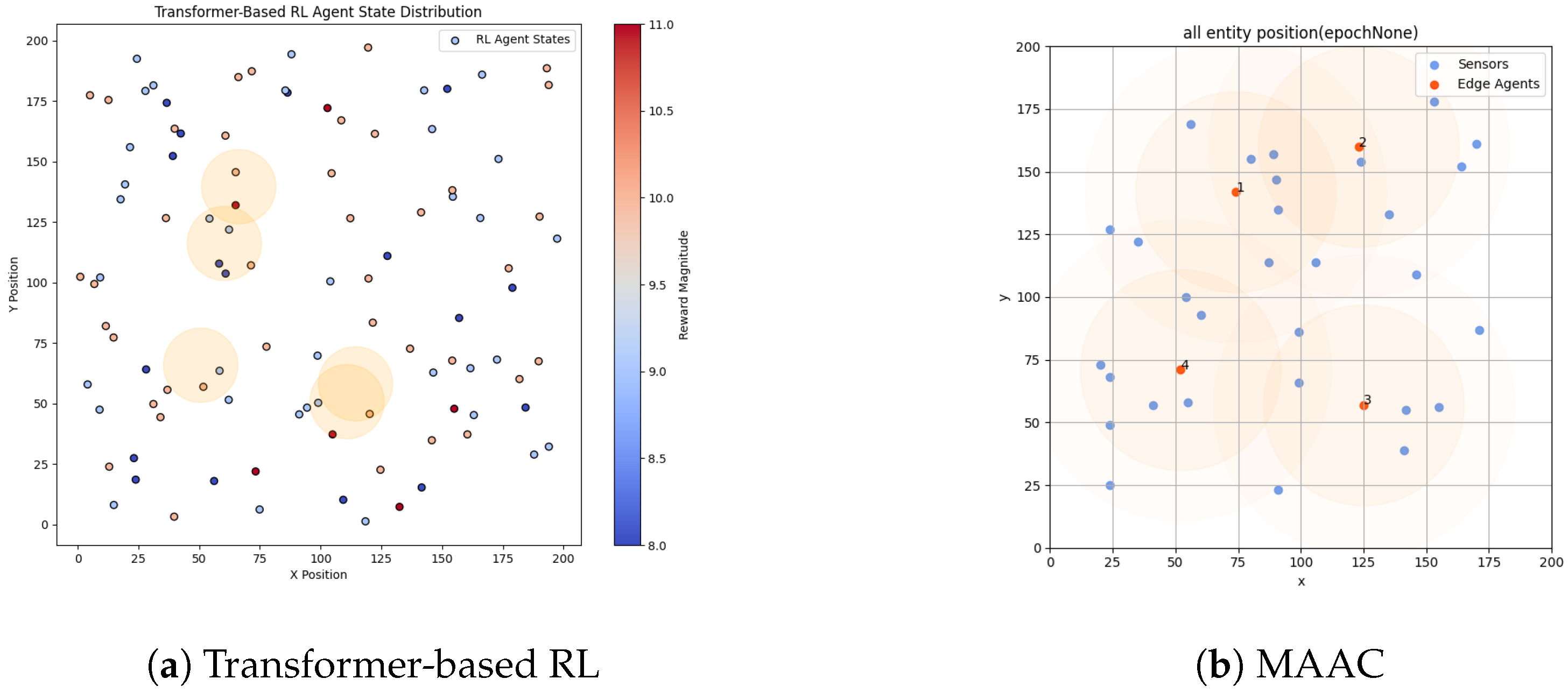

6.1. Learning Behavior

6.2. Reward Efficiency and Stability

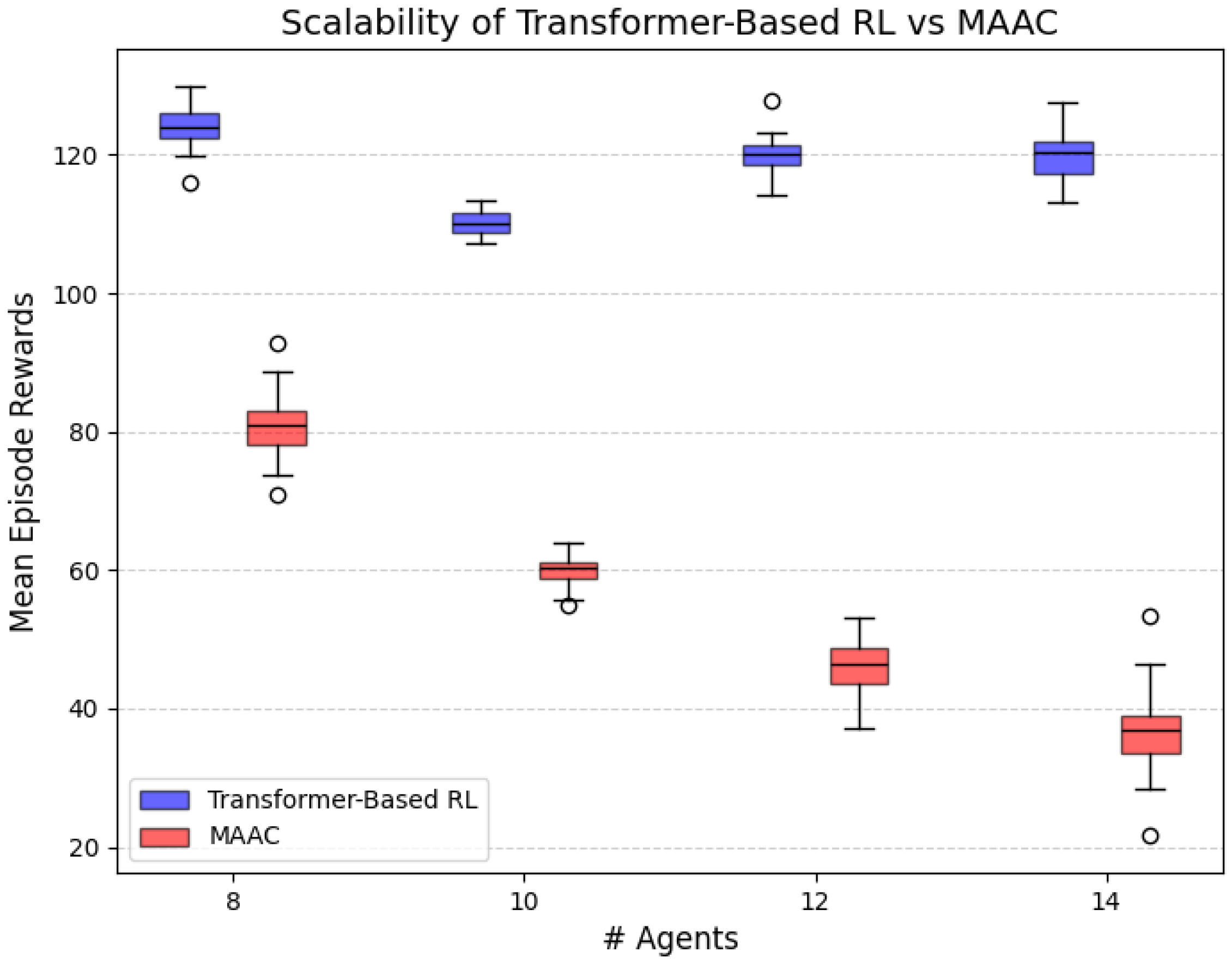

6.3. Scalability

6.4. Comparative Summary

6.5. Implications for Smart Cities

- Feasibility and Deployment Considerations. Practical IoT deployment introduces several operational constraints. Communication overhead is mitigated by transmitting compressed model deltas instead of raw gradients, combined with quantization and structured pruning to further reduce bandwidth requirements. Computational latency can be alleviated by partially offloading transformer inference to regional servers in a hierarchical FL architecture. Energy consumption challenges in battery-powered IoT devices are addressed through lightweight transformer variants (e.g., TinyBERT and MobileFormer) and adaptive client participation strategies. Together, these mechanisms enable scalable and energy-efficient implementations of the FDT across resource-constrained environments.

- Scalability and Component Sensitivity. To identify which components drive the FDT’s performance, future work should include ablation studies on the transformer encoder, RTG conditioning, and the aggregation mechanism. Such studies would quantify the contribution of each component, improve interpretability, and test the FDT’s scalability under diverse network and workload conditions.

- Resilience and Cybersecurity. Beyond efficiency, the FDT inherently mitigates several key challenges in federated multi-agent learning. Client heterogeneity is handled through local RTG normalization and adaptive weighting, ensuring stable convergence across non-IID clients. Communication bottlenecks are alleviated through compressed delta updates, partial participation, and compatibility with quantization and structured pruning for bandwidth efficiency. Security and privacy vulnerabilities are mitigated using differential privacy, secure aggregation, and robust aggregation methods such as trimmed mean and Krum, which protect against poisoning and Byzantine attacks. Recent studies also emphasize blockchain-based auditability and trust management to strengthen security in smart city infrastructures [2,16,30,45,46]. Together, these strategies enhance the reliability, robustness, and trustworthiness of large-scale FDT deployments in urban IoT ecosystems.
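The two robust aggregation rules named above, trimmed mean and Krum, can be sketched in a few lines. This is an illustrative implementation over flat update vectors, not the aggregation code used in the paper. The parameters `trim` and `f` (the assumed number of Byzantine clients) and the example vectors are hypothetical.

```python
from typing import List

Vector = List[float]


def trimmed_mean(updates: List[Vector], trim: int) -> Vector:
    """Coordinate-wise mean after dropping the `trim` smallest and largest values."""
    n = len(updates)
    if n - 2 * trim <= 0:
        raise ValueError("trim too large for the number of updates")
    out = []
    for coord in zip(*updates):
        kept = sorted(coord)[trim:n - trim]
        out.append(sum(kept) / len(kept))
    return out


def sqdist(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two update vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def krum(updates: List[Vector], f: int) -> Vector:
    """Select the update closest, in summed squared distance, to its
    n - f - 2 nearest neighbours, where f is the assumed number of
    Byzantine clients."""
    n = len(updates)
    if n < 2 * f + 3:
        raise ValueError("Krum requires n >= 2f + 3")
    scores = []
    for i, u in enumerate(updates):
        dists = sorted(sqdist(u, v) for j, v in enumerate(updates) if j != i)
        scores.append(sum(dists[: n - f - 2]))
    return updates[scores.index(min(scores))]


updates = [
    [1.0, 1.0],
    [1.1, 0.9],
    [0.9, 1.1],
    [1.0, 1.05],
    [50.0, -50.0],  # poisoned update from a single malicious client
]
robust_avg = trimmed_mean(updates, trim=1)
selected = krum(updates, f=1)
```

In this example the poisoned vector is discarded by both rules: the trimmed mean drops its extreme value in each coordinate, and Krum returns one of the honest updates. The trimmed mean still averages over the remaining clients, whereas Krum keeps a single update, which trades statistical efficiency for a stricter guarantee.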

7. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| IoT | Internet of Things |

| RL | Reinforcement Learning |

| MAAC | Multi-Agent Actor–Critic |

| FDT | Federated Decision Transformer |

| MEC | Mobile Edge Computing |

| DT | Decision Transformer |

| RNN | Recurrent Neural Network |

| LSTM | Long Short-Term Memory |

| FL | Federated Learning |

| MARL | Multi-Agent Reinforcement Learning |

| MAT | Multi-Agent Transformer |

| MADT | Multi-Agent Decision Transformer |

| FRL | Federated Reinforcement Learning |

| FedTP | Federated Personalized Transformer |

References

- Ilyas, M. IoT Applications in Smart Cities. In Proceedings of the 2021 International Conference on Electronic Communications, Internet of Things and Big Data (ICEIB), Yilan County, Taiwan, 10–12 December 2021; pp. 44–47.

- Zhang, K.; Ni, J.; Yang, K.; Liang, X.; Ren, J.; Shen, X.S. Security and Privacy in Smart City Applications: Challenges and Solutions. IEEE Commun. Mag. 2017, 55, 122–129.

- Pandya, S.; Srivastava, G.; Jhaveri, R.; Babu, M.R.; Bhattacharya, S.; Maddikunta, P.K.R.; Mastorakis, S.; Piran, M.J.; Gadekallu, T.R. Federated Learning for Smart Cities: A Comprehensive Survey. Sustain. Energy Technol. Assess. 2023, 55, 102987.

- Al-Huthaifi, R.; Li, T.; Huang, W.; Gu, J.; Li, C. Federated Learning in Smart Cities: Privacy and Security Survey. Inf. Sci. 2023, 632, 833–857.

- Nguyen, D.C.; Ding, M.; Pathirana, P.N.; Seneviratne, A.; Li, J.; Poor, H.V. Federated Learning for Internet of Things: A Comprehensive Survey. IEEE Commun. Surv. Tutor. 2021, 23, 1622–1658.

- Zhang, Z.; Rath, S.; Xu, J.; Xiao, T. Federated Learning for Smart Grid: A Survey on Applications and Potential Vulnerabilities. ACM Trans. Cyber-Phys. Syst. 2025; Accepted.

- Fu, Y.; Di, X. Federated Reinforcement Learning for Adaptive Traffic Signal Control: A Case Study in New York City. In Proceedings of the 26th IEEE International Conference on Intelligent Transportation Systems (ITSC 2023), Bilbao, Spain, 24–28 September 2023; pp. 5738–5743.

- Sutton, R.S.; Barto, A.G. Reinforcement Learning: An Introduction. IEEE Trans. Neural Netw. 1998, 9, 1054.

- Silver, D.; Huang, A.; Maddison, C.J.; Guez, A.; Sifre, L.; Van Den Driessche, G.; Schrittwieser, J.; Antonoglou, I.; Panneershelvam, V.; Lanctot, M.; et al. Mastering the Game of Go with Deep Neural Networks and Tree Search. Nature 2016, 529, 484–489.

- Wang, X.; Han, Y.; Wang, C.; Zhao, Q.; Chen, X.; Chen, M. In-Edge AI: Intelligentizing Mobile Edge Computing, Caching and Communication by Federated Learning. IEEE Netw. 2019, 33, 156–165.

- Yu, S.; Chen, X.; Zhou, Z.; Gong, X.; Wu, D. When Deep Reinforcement Learning Meets Federated Learning: Intelligent Multitimescale Resource Management for Multiaccess Edge Computing in 5G Ultradense Network. IEEE Internet Things J. 2021, 8, 2238–2251.

- Iqbal, S.; Sha, F. Actor-Attention-Critic for Multi-Agent Reinforcement Learning. arXiv 2019, arXiv:1810.02912.

- Lowe, R.; Wu, Y.; Tamar, A.; Harb, J.; Abbeel, P.; Mordatch, I. Multi-Agent Actor-Critic for Mixed Cooperative-Competitive Environments. In Proceedings of the 31st International Conference on Neural Information Processing Systems (NeurIPS 2017), Long Beach, CA, USA, 4–9 December 2017; pp. 6382–6393. Available online: https://papers.nips.cc/paper_files/paper/2017/hash/68a9750337a418a86fe06c1991a1d64c-Abstract.html (accessed on 15 September 2025).

- Buşoniu, L.; Babuška, R.; De Schutter, B. Multi-Agent Reinforcement Learning: An Overview. In Innovations in Multi-Agent Systems and Applications–1; Srinivasan, D., Jain, L.C., Eds.; Springer: Berlin/Heidelberg, Germany, 2010; pp. 183–221.

- Yang, H.; Huang, Y.; Shi, J.; Yang, Y. A Federated Framework for Edge Computing Devices with Collaborative Fairness and Adversarial Robustness. J. Grid Comput. 2023, 21, 36.

- Feng, Y.; Guo, Y.; Hou, Y.; Wu, Y.; Lao, M.; Yu, T.; Liu, G. A survey of security threats in federated learning. Adv. Eng. Inform. 2025, 11, 165.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is All You Need. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS), Long Beach, CA, USA, 4–9 December 2017.

- Li, W.; Luo, H.; Lin, Z.; Zhang, C.; Lu, Z.; Ye, D. A Survey on Transformers in Reinforcement Learning. Trans. Mach. Learn. Res. 2023. Available online: https://openreview.net/forum?id=r30yuDPvf2 (accessed on 15 September 2025).

- Agarwal, P.; Abdul Rahman, A.; St-Charles, P.-L.; Prince, S.J.D.; Ebrahimi Kahou, S. Transformers in Reinforcement Learning: A Survey. arXiv 2023, arXiv:2307.05979.

- Chen, L.; Lu, K.; Rajeswaran, A.; Lee, K.; Grover, A.; Laskin, M.; Abbeel, P.; Srinivas, A.; Mordatch, I. Decision Transformer: Reinforcement Learning via Sequence Modeling. arXiv 2021, arXiv:2106.01345.

- Wen, M.; Grudzien Kuba, J.; Lin, R.; Zhang, W.; Wen, Y.; Wang, J.; Yang, Y. Multi-Agent Reinforcement Learning Is a Sequence Modeling Problem. arXiv 2022, arXiv:2205.14953.

- Meng, L.; Wen, M.; Yang, Y.; Le, C.; Li, X.; Zhang, W.; Wen, Y.; Zhang, H.; Wang, J.; Xu, B. Offline Pre-Trained Multi-Agent Decision Transformer: One Big Sequence Model Tackles All SMAC Tasks. arXiv 2022, arXiv:2112.02845.

- Kapturowski, S.; Ostrovski, G.; Quan, J.; Munos, R.; Dabney, W. Recurrent Experience Replay in Distributed Reinforcement Learning. Available online: https://api.semanticscholar.org/CorpusID:59345798 (accessed on 15 September 2025).

- Park, H.; Shin, T.; Kim, S.; Lho, D.; Sim, B.; Song, J.; Kong, K.; Kim, J. Scalable Transformer Network-Based Reinforcement Learning Method for PSIJ Optimization in HBM. In Proceedings of the IEEE 31st Conference on Electrical Performance of Electronic Packaging and Systems (EPEPS 2022), San Jose, CA, USA, 9–12 October 2022; pp. 1–3.

- Li, H.; Cai, Z.; Wang, J.; Tang, J.; Ding, W.; Lin, C.-T.; Shi, Y. FedTP: Federated Learning by Transformer Personalization. IEEE Trans. Neural Netw. Learn. Syst. 2024, 35, 13426–13440.

- Reddy, M.S.; Karnati, H.; Mohana Sundari, L. Transformer-Based Federated Learning Models for Recommendation Systems. IEEE Access 2024, 12, 109596–109607.

- Sun, Z.; Xu, Y.; Liu, Y.; He, W.; Kong, L.; Wu, F.; Jiang, Y.; Cui, L. A Survey on Federated Recommendation Systems. IEEE Trans. Neural Netw. Learn. Syst. 2025, 36, 6–20.

- Woisetschlager, H.; Erben, A.; Wang, S.; Mayer, R.; Jacobsen, H.-A. A Survey on Efficient Federated Learning Methods for Foundation Model Training. In Proceedings of the Thirty-Third International Joint Conference on Artificial Intelligence (IJCAI 2024), Jeju, Republic of Korea, 3–9 August 2024; pp. 1–9.

- De La Torre Parra, G.; Selvera, L.; Khoury, J.; Irizarry, H.; Bou-Harb, E.; Rad, P. Interpretable Federated Transformer Log Learning for Cloud Threat Forensics. In Proceedings of the Network and Distributed System Security Symposium (NDSS 2022), San Diego, CA, USA, 27 February–3 March 2022.

- Rane, N.; Mallick, S.; Kaya, O.; Rane, J. Federated learning for edge artificial intelligence: Enhancing security, robustness, privacy, personalization, and blockchain integration in IoT. In Future Research Opportunities for Artificial Intelligence in Industry 4.0 and 5.0; Deep Science Publishing: San Francisco, CA, USA, 2024.

- Carlini, N.; Wagner, D. Towards Evaluating the Robustness of Neural Networks. In Proceedings of the 2017 IEEE Symposium on Security and Privacy (SP), San Jose, CA, USA, 22–26 May 2017; pp. 39–57.

- Qi, J.; Zhou, Q.; Lei, L.; Zheng, K. Federated Reinforcement Learning: Techniques, Applications, and Open Challenges. arXiv 2021, arXiv:2108.11887.

- Tang, X.; Yu, H. Competitive-Cooperative Multi-Agent Reinforcement Learning for Auction-Based Federated Learning. In Proceedings of the 32nd International Joint Conference on Artificial Intelligence (IJCAI 2023), Macao, China, 19–25 August 2023; pp. 4262–4270.

- Uddin, M.P.; Xiang, Y.; Hasan, M.; Bai, J.; Zhao, Y.; Gao, L. A Systematic Literature Review of Robust Federated Learning: Issues, Solutions, and Future Research Directions. ACM Comput. Surv. 2025, 57, 245.

- Zeng, T.; Semiari, O.; Chen, M.; Saad, W.; Bennis, M. Federated Learning for Collaborative Controller Design of Connected and Autonomous Vehicles. In Proceedings of the 60th IEEE Conference on Decision and Control (CDC 2021), Austin, TX, USA, 14–17 December 2021; pp. 5033–5038.

- Zhao, R.; Hu, H.; Li, Y.; Fan, Y.; Gao, F.; Gao, Z. Sequence Decision Transformer for Adaptive Traffic Signal Control. Sensors 2024, 24, 6202.

- Xing, X.; Zhou, Z.; Li, Y.; Xiao, B.; Xun, Y. Multi-UAV Adaptive Cooperative Formation Trajectory Planning Based on an Improved MATD3 Algorithm of Deep Reinforcement Learning. IEEE Trans. Veh. Technol. 2024, 73, 12484–12499.

- Li, Z.; Xu, C.; Zhang, G. A Deep Reinforcement Learning Approach for Traffic Signal Control Optimization. arXiv 2021, arXiv:2107.06115.

- Chen, S.; Liu, J.; Cui, Z.; Chen, Z.; Wang, H.; Xiao, W. A Deep Reinforcement Learning Approach for Microgrid Energy Transmission Dispatching. Appl. Sci. 2024, 14, 3682.

- Liu, X.; Qin, Z.; Gao, Y. Resource Allocation for Edge Computing in IoT Networks via Reinforcement Learning. arXiv 2019, arXiv:1903.01856.

- Daniel, J.; de Kock, R.J.; Ben Nessir, L.; Abramowitz, S.; Mahjoub, O.; Khlifi, W.; Formanek, J.C.; Pretorius, A. Multi-Agent Reinforcement Learning with Selective State-Space Models. In Proceedings of the 24th International Conference on Autonomous Agents and Multiagent Systems (AAMAS ’25), Detroit, MI, USA, 19–23 May 2025; International Foundation for Autonomous Agents and Multiagent Systems: Richland, SC, USA, 2025; pp. 2481–2483. Available online: https://dl.acm.org/doi/10.5555/3709347.3743910 (accessed on 15 September 2025).

- Jiang, H.; Li, Z.; Wei, H.; Xiong, X.; Ruan, J.; Lu, J.; Mao, H.; Zhao, R. X-Light: Cross-City Traffic Signal Control Using Transformer on Transformer as Meta Multi-Agent Reinforcement Learner. In Proceedings of the Thirty-Third International Joint Conference on Artificial Intelligence (IJCAI 2024), Jeju, Republic of Korea, 3–9 August 2024; pp. 1–9.

- Zhou, T.; Yu, J.; Zhang, J.; Tsang, D.H.K. Federated Prompt-based Decision Transformer for Resource Allocation of Customized VR Streaming in Mobile Edge Computing. IEEE Trans. Wireless Commun. 2025; early access.

- Qiang, X.; Chang, Z.; Ye, C.; Hamalainen, T.; Min, G. Split Federated Learning Empowered Vehicular Edge Intelligence: Concept, Adaptive Design, and Future Directions. IEEE Wirel. Commun. 2025, 32, 90–97.

- Lyu, L.; Yu, H.; Ma, X.; Chen, C.; Sun, L.; Zhao, J.; Yang, Q.; Yu, P.S. Privacy and Robustness in Federated Learning: Attacks and Defenses. IEEE Trans. Neural Netw. Learn. Syst. 2024, 35, 8726–8746.

- Dritsas, E.; Trigka, M. Machine Learning for Blockchain and IoT Systems in Smart Cities: A Survey. Future Internet 2024, 16, 324.

- Zhang, H.; Feng, S.; Liu, C.; Ding, Y.; Zhu, Y.; Zhou, Z.; Zhang, W.; Yu, Y.; Jin, H.; Li, Z. CityFlow: A Multi-Agent Reinforcement Learning Environment for Large Scale City Traffic Scenario. In Proceedings of the World Wide Web Conference (WWW 2019), San Francisco, CA, USA, 13–17 May 2019; pp. 3620–3624.

- CityFlow. CityFlow. Available online: https://github.com/cityflow-project/CityFlow (accessed on 15 September 2025).

- NYC Taxi and Limousine Commission (TLC). TLC Trip Record Data. 2024. Available online: https://www.nyc.gov/site/tlc/about/tlc-trip-record-data.page (accessed on 15 September 2025).

| Dimension | MAAC (Centralized) | FDT (Federated, Decentralized) |
|---|---|---|
| Learning Architecture | Actor with centralized critic; coordination depends on critic access | Critic-free; decentralized trajectory modeling via self-attention |
| Temporal Dependencies | Captured through RNN/LSTM encoders; prone to vanishing gradients | Captured through multi-head self-attention; robust to long horizons |
| Training Paradigm | Centralized training with global critic gradients | Federated local training with periodic model aggregation |
| Scalability | Critic bottleneck limits performance in large networks | Horizontally scalable; resilient to node/server drop-outs |
| Privacy and Data Flow | Raw observations may be shared with critic | Raw data remains local; only encrypted model updates exchanged |
| Communication Overhead | Frequent critic gradient synchronization | Lightweight periodic updates (every K episodes) |

| Parameter | MAAC | Transformer RL |
|---|---|---|
| Number of Agents | 10 | 10 |
| Training Episodes | 50,000 | 50,000 |
| Batch Size | 64 | 64 |
| Discount Factor (γ) | 0.99 | 0.99 |
| Learning Rate (Actor) | 0.0005 | 0.0005 |
| Learning Rate (Critic) | 0.0005 | 0.0005 |
| Optimizer | Adam | Adam |
| Exploration Strategy | ε-greedy (decay from 1.0 to 0.1) | ε-greedy (decay from 1.0 to 0.1) |
| Target Update Frequency | Every 100 steps | Every 100 steps |
| Communication Frequency (FL) | N/A | Every 10 episodes |
| Replay Buffer Size | 1,000,000 | 1,000,000 |
| Environment Type | MEC Simulation | MEC Simulation |
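For readers who want to reproduce the setup, the hyperparameters in the table above can be collected into a small configuration object. The sketch below is illustrative only: the table gives the ε-greedy endpoints (1.0 to 0.1) but not the decay shape or length, so the linear schedule and the `decay_episodes` argument are assumptions, as are the names `TrainConfig`, `epsilon`, and `is_sync_episode`.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainConfig:
    # Values copied from the hyperparameter table.
    num_agents: int = 10
    episodes: int = 50_000
    batch_size: int = 64
    gamma: float = 0.99       # discount factor
    lr: float = 5e-4          # learning rate (Adam)
    eps_start: float = 1.0    # initial exploration rate
    eps_end: float = 0.1      # final exploration rate
    sync_every: int = 10      # FL communication frequency, in episodes
    replay_size: int = 1_000_000


def epsilon(cfg: TrainConfig, episode: int, decay_episodes: int) -> float:
    """Linearly anneal epsilon from eps_start to eps_end (assumed schedule)."""
    frac = min(max(episode / decay_episodes, 0.0), 1.0)
    return cfg.eps_start + frac * (cfg.eps_end - cfg.eps_start)


def is_sync_episode(cfg: TrainConfig, episode: int) -> bool:
    """True when a client should send its update to the server."""
    return episode > 0 and episode % cfg.sync_every == 0


cfg = TrainConfig()
rounds = sum(is_sync_episode(cfg, e) for e in range(1, cfg.episodes + 1))
```

With these values, a full run corresponds to `rounds` communication rounds per client, which gives a rough handle on total communication cost once the size of a model update is known.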

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

AlTerkawi, L.; AlTarawneh, M. Federated Decision Transformers for Scalable Reinforcement Learning in Smart City IoT Systems. Future Internet 2025, 17, 492. https://doi.org/10.3390/fi17110492
