Towards Fair Medical Risk Prediction Software

Abstract

1. Introduction

2. Related Work: From Fairness to Meta-Fairness in Medical Risk Assessment

2.1. Fairness and Beyond: A Short Analysis

2.1.1. Algorithmic Bias

2.1.2. Group and Individual Fairness

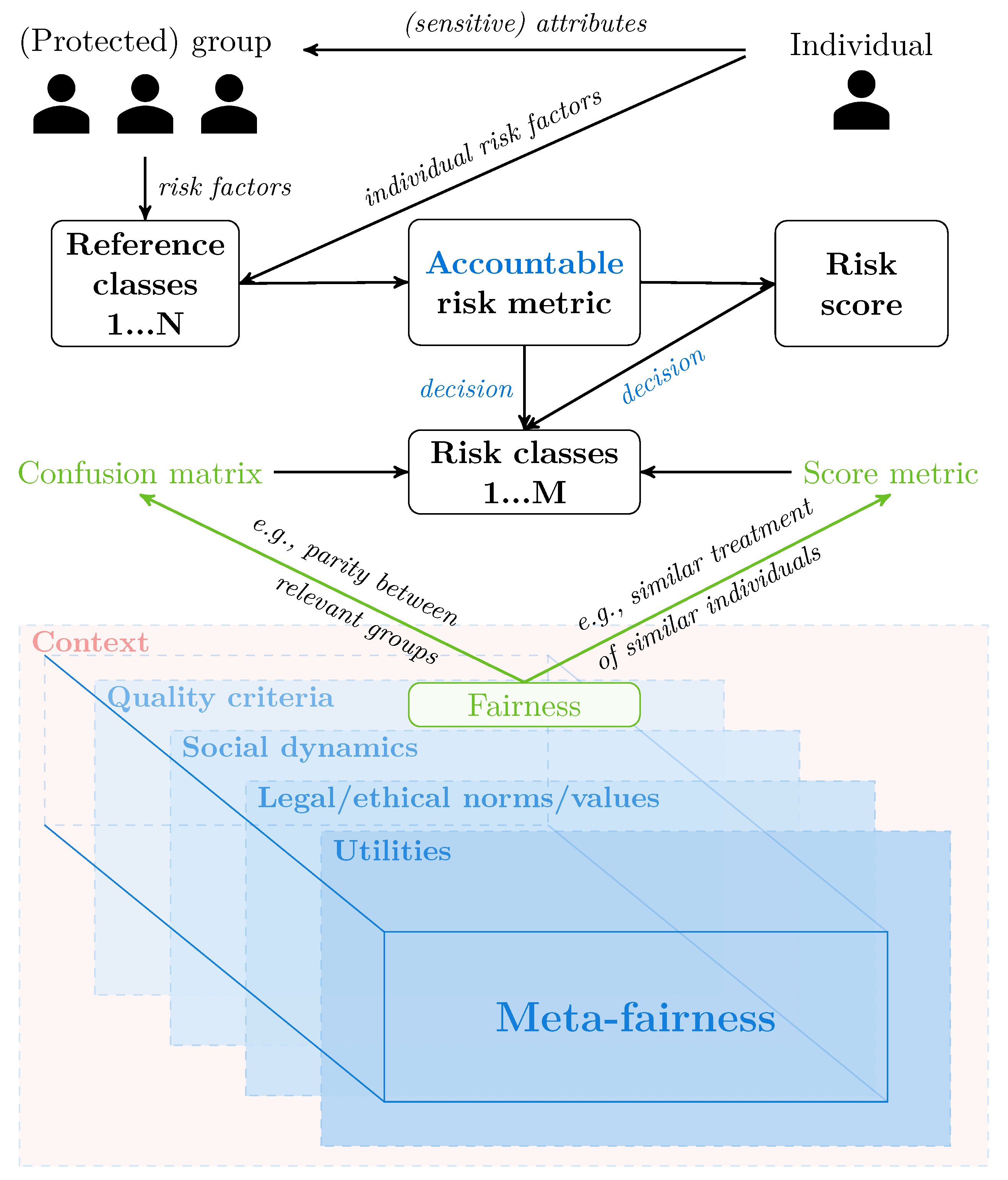

2.1.3. Fairness in Risk Assessment

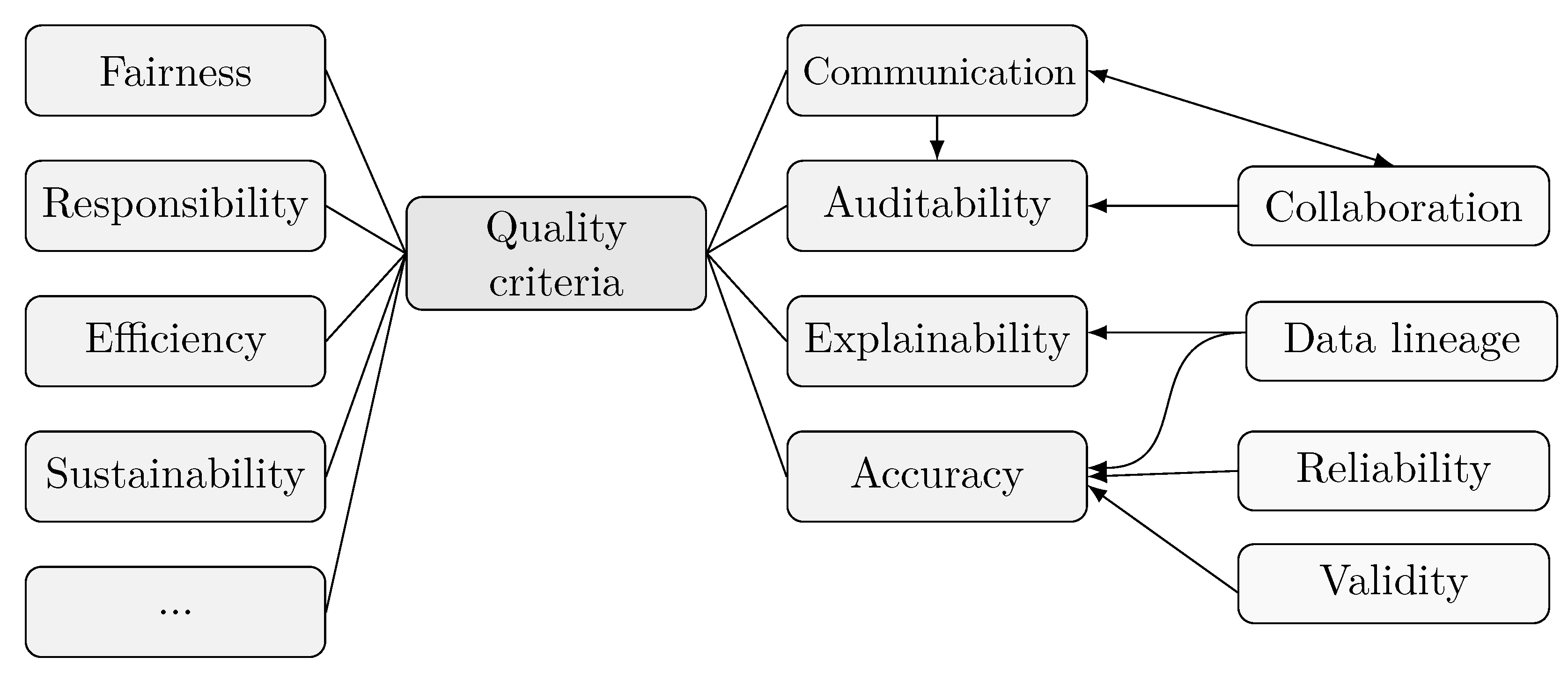

2.1.4. Accountable Algorithms

2.2. Meta-Fairness: Possible Definition

2.3. Approaches to Meta-Fairness

2.4. Fairness Software and Benchmarks

| Topic | Reference | Difference to this MF proposal |
|---|---|---|
| General alg. F; bias; F metrics | [1,2,4,11,12,13,14,15,16,17] | |
| (Social) dynamics | [18,19,52,63] | |
| Individual F | [3,13,21] | |
| F in risk score models | [2,4,20,24] | |
| Accountable algorithms; QCs | [25,31,33,34,35,36,37,38] | |
| Application to healthcare | [1,4,13,20,29,42,43,62] | |
| Approaches to MF | [3] | Focused on classification of different fairness definitions and their interconnections, not on combining them; no guidance on what/how to choose |
| | [4] | Focused on accountability; no explicit consideration of QCs, utilities, social dynamics; structured differently |
| | [5] | A purely game-theoretic perspective; one of the first mentions of MF in algorithms |
| | [6] | Focused on mediation; one of the first mentions of meta-ethics |
| | [7,23] | No explicit consideration of QCs and social dynamics; not focused on combining F definitions; not focused on individual fairness |
| | [8] | Interpolates between three preset fairness criteria; focused on law |
| | [40] | (Narrowly) focused on a polar trade-off between fairness and loyalty |
| | [41] | (Narrowly) focused on a trade-off between accuracy and fairness |
| | [44] | No concrete way for achieving fairness depending on context proposed; the need for interdisciplinary work outlined |
| | [45] | Focus on teaching; no concrete framework proposed |
| | [46] | Focused on limitations arising from conflicting definitions of fairness |
| | [49,50,51] | Focused on the fairness-accuracy trade-off only; no social dynamics; not frameworks (narrower focus) |
| | [52] | Focused on social objectives; not a framework per se |
| F in reinforcement learning | [47,48] | |
| F software/benchmarks | [53,54,55,56,57,58,59,60,61,62] | |

3. Materials and Methods

3.1. DST Enhanced by Interval Analysis

3.2. A Metric for Medical Risk Tools’ Assessment

- Calibration within groups (if the algorithm assigns probability x to a group of individuals for being positive with respect to the property of interest, then approximately an x fraction of them should actually be positive);

- Balance for the positive class (in the positive class, there should be equal average scores across groups);

- Balance for the negative class (the same as above for the negative class).
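The three conditions above can be checked empirically on a labeled cohort. The following is a minimal sketch, assuming scores take a small number of discrete values so that each (group, score) pair forms a bin; the function names and the toy data are hypothetical and not taken from the paper.

```python
from collections import defaultdict


def calibration_within_groups(scores, labels, groups):
    """Observed positive fraction for every (group, score) bin.

    Calibration within groups holds when each fraction is close to the
    score that defines its bin.
    """
    positives, totals = defaultdict(int), defaultdict(int)
    for score, label, group in zip(scores, labels, groups):
        totals[(group, score)] += 1
        positives[(group, score)] += label
    return {key: positives[key] / totals[key] for key in totals}


def balance_for_class(scores, labels, groups, target_label):
    """Average score per group among individuals whose true label is target_label."""
    sums, counts = defaultdict(float), defaultdict(int)
    for score, label, group in zip(scores, labels, groups):
        if label == target_label:
            sums[group] += score
            counts[group] += 1
    return {group: sums[group] / counts[group] for group in counts}


# Toy data (invented for illustration): risk scores, true outcomes, group labels.
scores = [0.5, 0.5, 0.5, 0.5, 1.0, 0.0, 0.5, 0.5]
labels = [1, 0, 1, 0, 1, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

calibration = calibration_within_groups(scores, labels, groups)
positive_balance = balance_for_class(scores, labels, groups, target_label=1)
negative_balance = balance_for_class(scores, labels, groups, target_label=0)

print(calibration)       # every bin's positive fraction equals its score
print(positive_balance)  # {'a': 0.5, 'b': 0.75}
print(negative_balance)  # {'a': 0.5, 'b': 0.25}
```

In this toy cohort the scores are calibrated within both groups, yet the average scores in the positive and in the negative class differ between the groups, so the two balance conditions are violated.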

- R1: Subgroups within the cohort that possess unique or additional characteristics, especially those leading to higher uncertainty or requiring specialized treatment, should not be disadvantaged.

- R2: Validated lower and upper bounds are specified for the risk classes.

- R3: The employment of suitable or newly developed technologies and methods is continuously monitored by a panel of experts; the patient’s treatment is adjusted accordingly if new insights become available.

- If the risk is sufficiently specified;

- If the model for computing the risk factors and calculating the overall risk is valid/accurate;

- If assignment to a risk class is valid/accurate.
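One way to picture bounded risk classes together with a valid class assignment is to treat the computed risk as an interval and to return a transition class whenever that interval straddles a class boundary. The sketch below works under that assumption only; the cut-off values, the class names, and the function `assign_risk_class` are hypothetical and do not come from a validated tool.

```python
def assign_risk_class(risk_lo, risk_hi, class_bounds):
    """Assign an interval-valued risk [risk_lo, risk_hi] to a risk class.

    class_bounds is a list of (upper_bound, class_name) pairs sorted by
    upper_bound. If both interval ends fall into the same class, that class
    is returned; otherwise a transition class naming both ends is returned.
    """
    if not 0.0 <= risk_lo <= risk_hi <= 1.0:
        raise ValueError("expected 0 <= risk_lo <= risk_hi <= 1")

    def class_of(probability):
        for upper_bound, name in class_bounds:
            if probability <= upper_bound:
                return name
        raise ValueError("class_bounds must cover the whole range up to 1.0")

    lower_class, upper_class = class_of(risk_lo), class_of(risk_hi)
    if lower_class == upper_class:
        return lower_class
    return f"{lower_class}/{upper_class} (transition)"


# Hypothetical cut-offs, for illustration only.
CLASS_BOUNDS = [(0.10, "low"), (0.30, "moderate"), (1.00, "high")]

print(assign_risk_class(0.02, 0.05, CLASS_BOUNDS))  # low
print(assign_risk_class(0.08, 0.12, CLASS_BOUNDS))  # low/moderate (transition)
print(assign_risk_class(0.35, 0.60, CLASS_BOUNDS))  # high
```

A wide interval, for example one caused by missing data, can span more than two classes; in this sketch it is reported as a transition between the outermost classes rather than forced into a single one.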

- General information, 1 pt: Does the algorithm/system offer adequate information about its purpose, its target groups, patients and their diseases, disease-related genetic variants, doctors, medical staff, experts, their roles, and their tasks? Are the output results appropriately handled? Can FAQs, knowledge bases, and similar information be easily found? (QC Explainability, Auditability)
- Risk factors:
  - 2 pts: Is there accessible and fair information specifying what types of data are expected regarding an individual’s demographics, lifestyle, health status, previous examination results, family medical history, and genetic predisposition, and over what time period this information should be collected? (QC Auditability, Data Lineage, Explainability)
  - 1 pt: Does the risk model include risk factors for protected or relevant/eligible groups? (QC Fairness)
- Assignment to risk classes:
  - 0.5 pts: Depending on the disease pattern, examination outcomes, and patients’ own medical samples (e.g., biomarkers), and using transparent risk metrics, are patients assigned to a risk class that is clearly described? (QC Explainability)
  - 0.5 pts: If terms such as high risk, moderate risk, or low risk are used, are transition classes provided to avoid assigning similar individuals to dissimilar classes and to include the impact of epistemic uncertainty or missing data? (QC Fairness)
  - 1 pt: Are the assignments to (transitional) risk classes made with the help of the risk model validated for eligible patient groups over a longer period of time in accordance with international quality standards? (QC Fairness, Accuracy, Validity)
- Assistance:
  - 1 pt: Can questionnaires be completed in a collaborative manner by patients and doctors together? (QC Auditability, Collaboration)
  - 1 pt: Can the treating doctor be involved in decision-making and risk interpretation beyond questionnaire completion; are experts given references to relevant literature on data, models, algorithms, validation, and follow-up? (QC Auditability)
- Data handling, 2 pts: Is the data complete and of high quality, and was it collected and stored according to relevant standards? Are data and results at disposal over a longer period of time? Are cross-cutting requirements such as data protection, privacy, and security respected? (QC Accuracy, Data Lineage)
- Result consequences:
  - 1 pt: Are the effects of various sources of uncertainty made clear to the patient and/or doctor? (QC Accuracy, Explainability, Auditability)
  - 2 pts: Does the output information also include counseling possibilities and help services over an appropriate period of time depending on the allocated risk class? (QC Responsibility, Sustainability)
  - 1 pt: Are arbitration boards and mediation procedures in the case of disputes available? (QC Responsibility, Sustainability)
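The point values in the checklist above add up to a maximum of 14. A minimal sketch of how an assessor's answers could be aggregated into a total and a normalized score follows; the item keys, the assumption that partial points may be awarded, and the example assessment are all hypothetical.

```python
# Maximum points per checklist item, as listed above (keys are made-up labels).
MAX_POINTS = {
    "general_information": 1.0,
    "risk_factors_data_types": 2.0,
    "risk_factors_groups": 1.0,
    "classes_described": 0.5,
    "classes_transition": 0.5,
    "classes_validated": 1.0,
    "assistance_collaboration": 1.0,
    "assistance_expert": 1.0,
    "data_handling": 2.0,
    "consequences_uncertainty": 1.0,
    "consequences_counseling": 2.0,
    "consequences_mediation": 1.0,
}


def checklist_score(awarded):
    """Return (total, maximum, total / maximum) for a dict of awarded points.

    Items missing from `awarded` count as 0. Partial points are accepted
    here, which is an assumption of this sketch.
    """
    for item, points in awarded.items():
        if item not in MAX_POINTS:
            raise KeyError(f"unknown checklist item: {item}")
        if not 0.0 <= points <= MAX_POINTS[item]:
            raise ValueError(f"points for {item} out of range")
    total = sum(awarded.get(item, 0.0) for item in MAX_POINTS)
    maximum = sum(MAX_POINTS.values())
    return total, maximum, total / maximum


# Hypothetical assessment of some risk tool.
awarded = {
    "general_information": 1.0,
    "risk_factors_data_types": 1.0,
    "risk_factors_groups": 1.0,
    "classes_described": 0.5,
    "data_handling": 2.0,
    "consequences_counseling": 1.0,
}

total, maximum, ratio = checklist_score(awarded)
print(total, maximum, round(ratio, 2))  # 6.5 14.0 0.46
```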

3.3. The MF Framework: (Individual) Fairness Enhanced Through Meta-Fairness

3.3.1. A Checklist for Context, Utilities from Figure 2

- Domain: healthcare/finance/banking/…

- Stakeholders: Decision-makers and -recipients

- Kind of decisions: Classification, ranking, allocation, recommendation

- Type of decisions: Polar/non-polar

- Relevant groups: Sets of individuals representing potential sources of inequality (e.g., rich/poor, male/female; cf. [7])

- Eligible groups: Subgroups in relevant groups having a moral claim to being treated fairly (cf. the concept of a claim differentiator from [7]; e.g., rich/poor at over 50 years of age)

- Notion of fairness: What is the goal of justice depending on the context (cf. the concept of patterns of justice from [7]; e.g., demographic parity, equal opportunity, calibration, individual fairness)

- Legal and ethical constraints: Relevant regulatory requirements, industry standards, or organizational policies

- Time: Dynamics in the population

- Resources: What is available?

- Location: Is the problem location-specific? How?

- Scope: Model’s purpose? Short-term or long-term effects? Groups or individuals? Real-time or batch processing? High-stakes or low-stakes?

- Utilities: What are they for decision-makers and decision-recipients?

- QCs: What is relevant?

- Social objectives: What are social goals?

3.3.2. Questionnaires for Quality Criteria from Figure 2

3.3.3. QC Fairness ()

- Utilities: Are the correct functions used? (1 pt each for the decision-maker’s and the decision-recipient’s utility)

- Patterns of justice: Are they correctly chosen and made explicit? (2 pt)

- Model: Does the risk model include risk factors to reflect relevant groups? (1 pt)

- Risk classes: If terms such as high risk, moderate risk, or low risk are used, are transition classes provided to avoid assigning similar individuals to dissimilar classes? (2 pt)

- Type: Is group (0 pt) or individual (1 pt) fairness assessed? (Individual fairness prioritized.)

- Bias: What biases out of the following list are tested for and are not exhibited by the model (here and in the following: 1 pt each if both true; 0 otherwise): 1/13/22/26/31/35/39
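The bias item awards a point only when a bias has been tested for and is not exhibited. The sketch below illustrates that rule together with the other point values of the QC Fairness questionnaire; the item keys and the example answers are hypothetical, and the bias numbers are simply the identifiers listed above.

```python
# Bias identifiers attached to QC Fairness in the questionnaire above.
FAIRNESS_BIAS_IDS = (1, 13, 22, 26, 31, 35, 39)

# Maximum points of the non-bias items (keys are made-up labels).
ITEM_MAX = {
    "utility_decision_maker": 1,
    "utility_decision_recipient": 1,
    "patterns_of_justice": 2,
    "model": 1,
    "risk_classes": 2,
    "type": 1,  # 0 for group fairness, 1 for individual fairness
}


def bias_points(bias_ids, tested, exhibited):
    """1 pt per bias that was tested for AND is not exhibited; 0 otherwise."""
    return sum(1 for b in bias_ids if b in tested and b not in exhibited)


def qc_fairness_score(item_points, tested, exhibited):
    """Return (raw score, maximum score) for the QC Fairness questionnaire."""
    for item, points in item_points.items():
        if not 0 <= points <= ITEM_MAX[item]:
            raise ValueError(f"points for {item} out of range")
    raw = sum(item_points.get(item, 0) for item in ITEM_MAX)
    raw += bias_points(FAIRNESS_BIAS_IDS, tested, exhibited)
    maximum = sum(ITEM_MAX.values()) + len(FAIRNESS_BIAS_IDS)
    return raw, maximum


# Hypothetical answers: biases 35 and 39 were never tested, bias 22 is exhibited.
item_points = {
    "utility_decision_maker": 1,
    "utility_decision_recipient": 1,
    "patterns_of_justice": 2,
    "model": 1,
    "risk_classes": 0,
    "type": 0,
}
tested = {1, 13, 22, 26, 31}
exhibited = {22}

print(qc_fairness_score(item_points, tested, exhibited))  # (9, 15)
```

An untested bias earns no point even if the model happens to be free of it, which is why testing coverage directly limits the achievable score.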

3.3.4. QC Accuracy ()

- General: Does the variable used by the model accurately represent the construct it intends to measure, and is it suitable for the model’s purpose (2 pt)?

- Uncertainty: Are the effects of various sources of uncertainty made clear to the patient and/or doctor (2 pt)?

- Data:
  - quality, representativeness: are FAIR principles upheld (1 pt each)?
  - lineage: Are data and results at disposal over a longer period of time (1 pt)? Are cross-cutting requirements, e.g., data protection/privacy/security, respected (1 pt)?
  - bias: 20/27/29/37 (1 pt each)
- Reliability: Is the model verified? Formal/code/result/uncertainty quantification (1 pt each)
- Validity: Is the variable validated through appropriate (psychometric) testing (1 pt)? Are the assignments to (transitional) risk classes made with the help of the risk model validated for eligible patient groups over a longer period of time in accordance with international quality standards (1 pt)?

- Society: Does the model align with and effectively contribute to the intended social objectives? I.e., is the model’s purpose clearly defined (1 pt)? Does its deployment support the broader social goals it aims to achieve (1 pt)?

- Bias: Pertaining to accuracy: 8/11/14/18/24/33/42/43 (1 pt each)

3.3.5. QC Explainability

- Data: Are FAIR principles upheld with the focus on explainability (1 pt)?

- Information: Does the algorithm/system offer adequate information about its purpose, its target groups, patients and their diseases, disease-related genetic variants, doctors, medical staff, experts, their roles, and their tasks (4 pt)?

- Risk classes: Depending on the disease pattern, examination outcomes, and patients’ own medical samples (e.g., biomarkers), and using transparent risk metrics, are patients assigned to a risk class that is clearly described (4 pt)?

- Effects: Are the effects of various sources of uncertainty made clear to the patient and/or doctor (1 pt)?

- Bias: 7/15/21/28/30 (1 pt each)

3.3.6. QC Auditability

- Information: Are the output results appropriately handled (1 pt)? Can FAQs, knowledge bases, and similar information be easily found (1 pt)?

- Data types: Is there accessible and fair information specifying what types of data are expected regarding an individual’s demographics, lifestyle, health status, previous examination results, family medical history, and genetic predisposition, and over what time period this information should be collected (2 pt)?

- Expert involvement: Are experts given references to relevant literature on data, models, algorithms, validation, and follow-up (2 pt)?

- Bias: 3/4/5/25 (1 pt each)

3.3.7. QCs Responsibility/Sustainability

- Counseling: Does the output information also include counseling possibilities and help services over an appropriate period of time depending on the allocated risk class?

- Mediation: Are arbitration boards and mediation procedures in the case of disputes available?

- Trade-offs: Is optimization carried out with respect to sustainability, respect, and fairness goals?

- Sustainability: Are environmental, economic, and social aspects of sustainability all taken into account?

- Bias: 2/10/17/36/38

3.3.8. QCs Communication/Collaboration

- Type of interaction: Are humans ‘out of the loop,’ ‘in the loop,’ ‘over the loop’?

- Adequacy: Are the model’s outputs used appropriately by human decision-makers? I.e., how are the model’s recommendations or predictions interpreted and acted upon by users? Are they integrated into decision-making processes fairly and responsibly?

- Collaboration: Can questionnaires be completed in a collaborative manner by patients and doctors together?

- Integrity: Is third-party interference excluded?

- Bias: 6/9/12/16/19/23/user 24/32/40

3.3.9. Legal/Ethical Norms and Values from Figure 2

- Adequacy: Are appropriate legal norms taken into account?

- Relevance: Are relevant and entitled groups correctly identified and made explicit?

3.3.10. Social Dynamics from Figure 2

- Time influence: Is the model designed to maintain stable performance across varying demographic groups over time?

- Interaction: Is the influence of the model on user behavior characterized? To what extent are these effects beneficial or appropriate?

- Utility: Is the social utility formulated?

- Purpose fulfillment: Is it assessed if it can be aligned with the functionality of the software under consideration?

- Feedback effects: Are there any studies characterizing changes induced by the model in the society?

- Bias: 34/41

3.4. DST for Meta-Fairness

3.4.1. Augmented Fairness Score

- Feature similarity inconsistency (FS): A patient with a nearly identical profile to another patient receives a substantially different risk score;

- Counterfactual bias (CB): If a single attribute is changed while all other features are held fixed (e.g., the recorded ethnicity is flipped from Black to White), the model recommendation changes, even though the attribute should not matter medically; and

- Noise sensitivity (NS): Small, clinically irrelevant perturbations (e.g., rounding a glucose level from 125.6 to 126) lead to disproportionately different predictions.
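The three individual-level indicators above can be probed by querying a model on paired inputs. The following is a minimal sketch: `toy_risk_model` is a made-up logistic model with a deliberately biased ethnicity term, and the Euclidean distance used for feature similarity is an assumption, since a clinically meaningful similarity metric is task-specific.

```python
import math


def toy_risk_model(patient):
    """Hypothetical logistic risk model, for illustration only."""
    z = -6.0 + 0.04 * patient["glucose"] + 0.02 * patient["age"]
    if patient["ethnicity"] == "Black":
        z += 0.5  # deliberately biased term so that CB becomes visible
    return 1.0 / (1.0 + math.exp(-z))


def counterfactual_bias(model, patient, attribute, alternative):
    """Score change when one attribute is flipped and all else is held fixed."""
    flipped = dict(patient, **{attribute: alternative})
    return abs(model(patient) - model(flipped))


def noise_sensitivity(model, patient, feature, perturbed_value):
    """Score change under a small, clinically irrelevant perturbation."""
    perturbed = dict(patient, **{feature: perturbed_value})
    return abs(model(patient) - model(perturbed))


def feature_similarity_inconsistency(model, patient_a, patient_b, numeric_features):
    """Score difference per unit of feature distance between two patients."""
    distance = math.sqrt(
        sum((patient_a[f] - patient_b[f]) ** 2 for f in numeric_features)
    )
    difference = abs(model(patient_a) - model(patient_b))
    if distance == 0.0:
        return 0.0 if difference == 0.0 else math.inf
    return difference / distance


patient = {"glucose": 125.6, "age": 50, "ethnicity": "Black"}
neighbor = {"glucose": 125.6, "age": 51, "ethnicity": "White"}

cb = counterfactual_bias(toy_risk_model, patient, "ethnicity", "White")
ns = noise_sensitivity(toy_risk_model, patient, "glucose", 126.0)
fs = feature_similarity_inconsistency(
    toy_risk_model, patient, neighbor, ["glucose", "age"]
)
print(f"CB={cb:.3f}  NS={ns:.4f}  FS={fs:.3f}")
```

For this toy model the counterfactual flip moves the score far more than the rounding of the glucose value does, which is the pattern the CB indicator is meant to expose.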

- Demographic parity gap: One group is recommended for screening at a substantially lower rate than another, despite having similar risk profiles;

- Equal opportunity difference: The model fails to identify true positive cases more frequently in one group than in others; and

- Calibration gap: Predicted risk scores are systematically overestimated for one group and underestimated for another.
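The three group-level indicators above have simple empirical counterparts. The sketch below computes them for two groups from binary screening decisions, true outcomes, and predicted scores; the function names, the use of absolute differences, and the toy data are assumptions made for illustration.

```python
def _select(values, groups, group):
    """Values belonging to one group."""
    return [v for v, g in zip(values, groups) if g == group]


def _mean(values):
    return sum(values) / len(values)


def demographic_parity_gap(decisions, groups, g1, g2):
    """Absolute difference in the rate of positive decisions."""
    return abs(_mean(_select(decisions, groups, g1))
               - _mean(_select(decisions, groups, g2)))


def true_positive_rate(decisions, labels, groups, group):
    """Share of actual positives in a group that received a positive decision."""
    hits = [d for d, y, g in zip(decisions, labels, groups)
            if g == group and y == 1]
    return _mean(hits)


def equal_opportunity_difference(decisions, labels, groups, g1, g2):
    """Absolute difference in true positive rates."""
    return abs(true_positive_rate(decisions, labels, groups, g1)
               - true_positive_rate(decisions, labels, groups, g2))


def calibration_gap(scores, labels, groups, g1, g2):
    """Difference between the groups' signed errors (mean score - observed rate)."""
    def signed_error(group):
        return (_mean(_select(scores, groups, group))
                - _mean(_select(labels, groups, group)))
    return abs(signed_error(g1) - signed_error(g2))


# Toy cohort (invented): four patients in group "a", four in group "b".
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
labels = [1, 1, 0, 0, 1, 1, 0, 0]
decisions = [1, 1, 0, 1, 1, 0, 0, 0]
scores = [0.9, 0.8, 0.3, 0.6, 0.7, 0.4, 0.1, 0.2]

dp = demographic_parity_gap(decisions, groups, "a", "b")
eo = equal_opportunity_difference(decisions, labels, groups, "a", "b")
cg = calibration_gap(scores, labels, groups, "a", "b")
print(round(dp, 2), round(eo, 2), round(cg, 2))  # 0.5 0.5 0.3
```

Here group "b" is screened less often, its true positives are missed more often, and its risk is underestimated while that of group "a" is overestimated, so all three gaps are nonzero.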

3.4.2. General MF Score

4. Results: Meta-Fairness, Applied

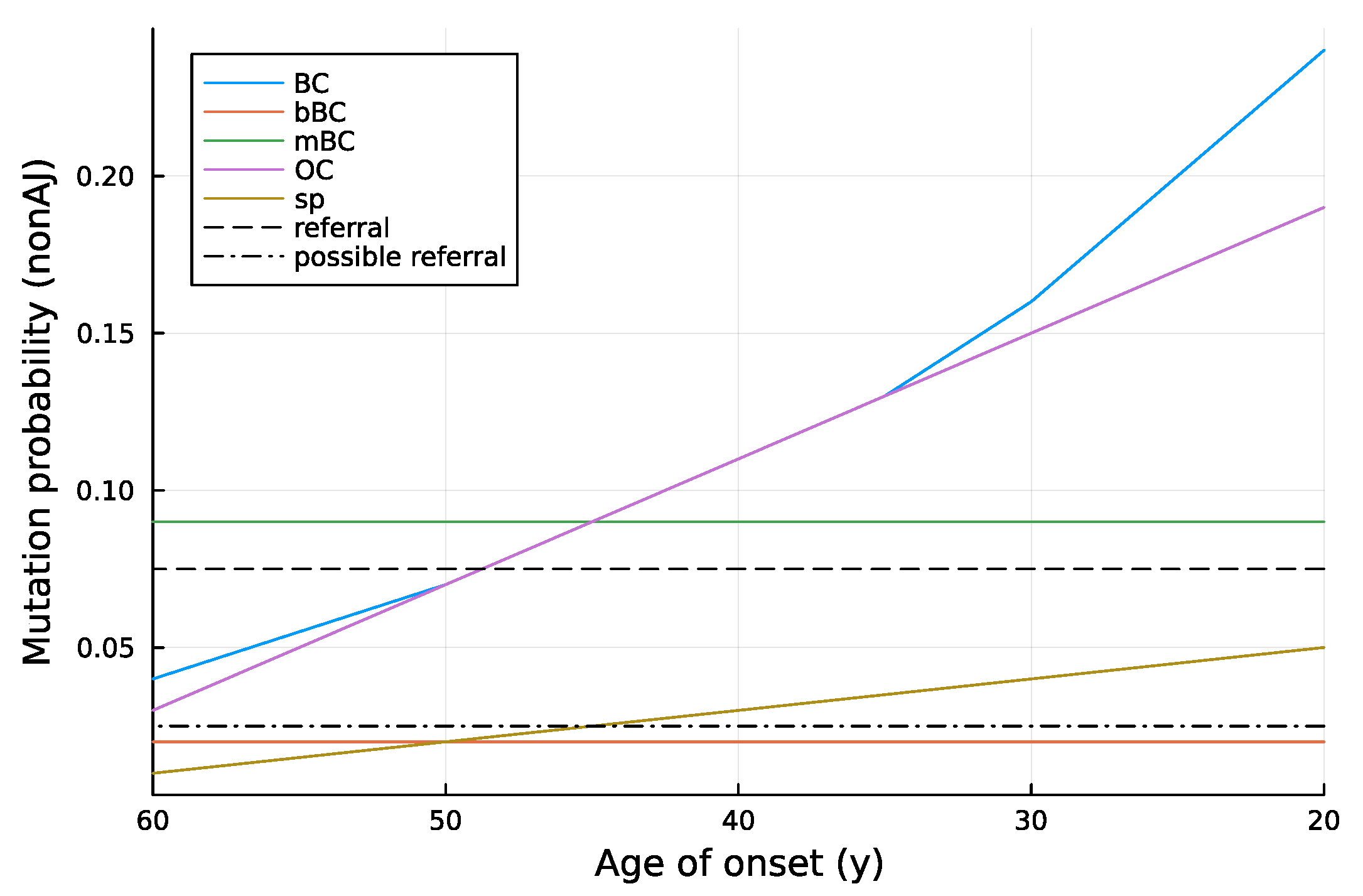

4.1. Predicting BRCA1/2 Mutation Probabilities

4.1.1. Model Outline

4.1.2. Example

4.1.3. Discussion

- 1: Aggregation bias, since it cannot be excluded at the moment that false conclusions are drawn about individuals from the population;

- 22: Inductive bias, since assumptions are still built into the model structure (we consider only the general and the Ashkenazi Jewish populations);

- 35: Representation bias, since we cannot test for this at the moment; and

- 39: Simplification bias, for a reason similar to that given for 1.

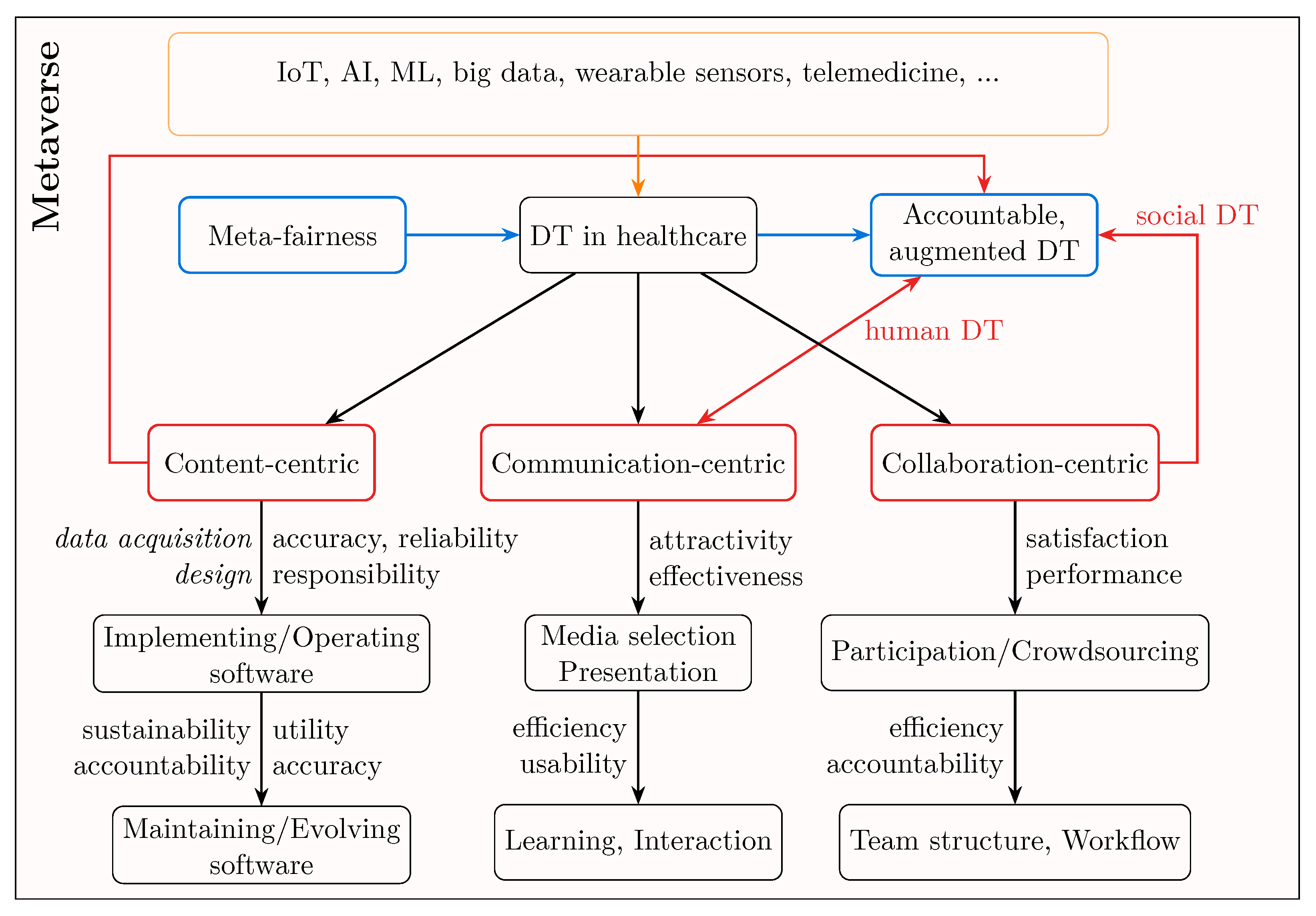

4.2. Meta-Fairness Applied to Communication in Digital Healthcare Twins

4.2.1. DTs and MF

4.2.2. Communication, Collaboration, and Bias

4.2.3. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| AI | Artificial Intelligence |
| BC | Breast Cancer |
| BPA | Basic Probability Assignment |
| BRCA1/2 | BReast CAncer Gene 1 and 2 |
| COVID-19 | COronaVIrus Disease 2019 |
| DST | Dempster-Shafer Theory |
| DT | Digital Twin |
| FAIR | Findable, Accessible, Interoperable, Reusable |
| FAQ | Frequently Asked Questions |
| FE | Focal Element |
| IA | Interval Analysis |
| IoT | Internet of Things |
| KS | King Syndrome |
| MF | Meta-Fairness |
| ML | Machine Learning |
| OC | Ovarian Cancer |
| PT | Physical Twin |
| QC | Quality Criteria |
| RF | Risk Factor |
| RL | Reinforcement Learning |
| SA | Sensitive Attribute |
| V&V | Verification and Validation |

References

- Paulus, J.; Kent, D. Predictably unequal: Understanding and addressing concerns that algorithmic clinical prediction may increase health disparities. npj Digit. Med. 2020, 3, 99.

- Kleinberg, J.; Mullainathan, S.; Raghavan, M. Inherent Trade-Offs in the Fair Determination of Risk Scores. In Proceedings of the 8th Innovations in Theoretical Computer Science Conference (ITCS 2017), Berkeley, CA, USA, 9–11 January 2017; Papadimitriou, C.H., Ed.; Schloss Dagstuhl–Leibniz-Zentrum für Informatik: Wadern, Germany, 2017; Volume 67, pp. 43:1–43:23.

- Castelnovo, A.; Crupi, R.; Greco, G.; Regoli, D.; Penco, I.; Cosentini, A. A clarification of the nuances in the fairness metrics landscape. Sci. Rep. 2022, 12, 4209.

- Luther, W.; Harutyunyan, A. Fairness in Healthcare and Beyond—A Survey. JUCS, 2025; to appear.

- Naeve-Steinweg, E. The averaging mechanism. Games Econ. Behav. 2004, 46, 410–424.

- Hyman, J.M. Swimming in the Deep End: Dealing with Justice in Mediation. Cardozo J. Confl. Resolut. 2004, 6, 19–56.

- Hertweck, C.; Baumann, J.; Loi, M.; Vigano, E.; Heitz, C. A Justice-Based Framework for the Analysis of Algorithmic Fairness-Utility Trade-Offs. arXiv 2022.

- Zehlike, M.; Loosley, A.; Jonsson, H.; Wiedemann, E.; Hacker, P. Beyond incompatibility: Trade-offs between mutually exclusive fairness criteria in machine learning and law. Artif. Intell. 2025, 340, 104280.

- Shafer, G. A Mathematical Theory of Evidence; Princeton University Press: Princeton, NJ, USA, 1976.

- Ferson, S.; Kreinovich, V.; Ginzburg, L.; Myers, D.S.; Sentz, K. Constructing Probability Boxes and Dempster-Shafer Structures; Sandia National Laboratories: Albuquerque, NM, USA, 2003.

- Russo, M.; Vidal, M.E. Leveraging Ontologies to Document Bias in Data. arXiv 2024.

- Newman, D.T.; Fast, N.J.; Harmon, D.J. When eliminating bias isn’t fair: Algorithmic reductionism and procedural justice in human resource decisions. Organ. Behav. Hum. Decis. Process. 2020, 160, 149–167.

- Anderson, J.W.; Visweswaran, S. Algorithmic individual fairness and healthcare: A scoping review. JAMIA Open 2024, 8, ooae149.

- AnIML. Bias and Fairness—AnIML: Another Introduction to Machine Learning. Available online: https://animlbook.com/classification/bias_fairness/index.html (accessed on 21 October 2025).

- Zliobaite, I. Measuring discrimination in algorithmic decision making. Data Min. Knowl. Discov. 2017, 31, 1060–1089.

- Arnold, D.; Dobbie, W.; Hull, P. Measuring Racial Discrimination in Algorithms; Working Paper 2020-184; University of Chicago, Becker Friedman Institute for Economics: Chicago, IL, USA, 2020.

- Mosley, R.; Wenman, R. Methods for Quantifying Discriminatory Effects on Protected Classes in Insurance; Research paper; Casualty Actuarial Society: Arlington, VA, USA, 2022.

- Sanna, L.J.; Schwarz, N. Integrating Temporal Biases: The Interplay of Focal Thoughts and Accessibility Experiences. Psychol. Sci. 2004, 15, 474–481.

- Mozannar, H.; Ohannessian, M.I.; Srebro, N. From Fair Decision Making to Social Equality. arXiv 2020.

- Ladin, K.; Cuddeback, J.; Duru, O.K.; Goel, S.; Harvey, W.; Park, J.G.; Paulus, J.K.; Sackey, J.; Sharp, R.; Steyerberg, E.; et al. Guidance for unbiased predictive information for healthcare decision-making and equity (GUIDE): Considerations when race may be a prognostic factor. npj Digit. Med. 2024, 7, 290.

- Dwork, C.; Hardt, M.; Pitassi, T.; Reingold, O.; Zemel, R. Fairness Through Awareness. arXiv 2011, arXiv:1104.3913.

- Baloian, N.; Luther, W.; Peñafiel, S.; Zurita, G. Evaluation of Cancer and Stroke Risk Scoring Online Tools. In Proceedings of the 3rd CODASSCA Workshop on Collaborative Technologies and Data Science in Smart City Applications, Yerevan, Armenia, 23–25 August 2022; Hajian, A., Baloian, N., Inoue, T., Luther, W., Eds.; Logos Verlag: Berlin, Germany, 2022; pp. 106–111.

- Baumann, J.; Hertweck, C.; Loi, M.; Heitz, C. Distributive Justice as the Foundational Premise of Fair ML: Unification, Extension, and Interpretation of Group Fairness Metrics. arXiv 2023, arXiv:2206.02897.

- Petersen, E.; Ganz, M.; Holm, S.H.; Feragen, A. On (assessing) the fairness of risk score models. arXiv 2023, arXiv:2302.08851.

- Diakopoulos, N.; Friedler, S. Principles for Accountable Algorithms and a Social Impact Statement for Algorithms. Available online: https://www.fatml.org/resources/principles-for-accountable-algorithms (accessed on 21 October 2025).

- Alizadehsani, R.; Roshanzamir, M.; Hussain, S.; Khosravi, A.; Koohestani, A.; Zangooei, M.H.; Abdar, M.; Beykikhoshk, A.; Shoeibi, A.; Zare, A.; et al. Handling of uncertainty in medical data using machine learning and probability theory techniques: A review of 30 years (1991–2020). arXiv 2020.

- Auer, E.; Luther, W. Uncertainty Handling in Genetic Risk Assessment and Counseling. JUCS J. Univers. Comput. Sci. 2021, 27, 1347–1370.

- Gillner, L.; Auer, E. Towards a Traceable Data Model Accommodating Bounded Uncertainty for DST Based Computation of BRCA1/2 Mutation Probability with Age. JUCS J. Univers. Comput. Sci. 2023, 29, 1361–1384.

- Pfohl, S.R.; Foryciarz, A.; Shah, N.H. An empirical characterization of fair machine learning for clinical risk prediction. J. Biomed. Inform. 2021, 113, 103621.

- Penafiel, S.; Baloian, N.; Sanson, H.; Pino, J. Predicting Stroke Risk with an Interpretable Classifier. IEEE Access 2020, 9, 1154–1166.

- Baniasadi, A.; Salehi, K.; Khodaie, E.; Bagheri Noaparast, K.; Izanloo, B. Fairness in Classroom Assessment: A Systematic Review. Asia-Pac. Educ. Res. 2023, 32, 91–109.

- University of Minnesota Duluth. Reliability, Validity, and Fairness. 2025. Available online: https://assessment.d.umn.edu/about/assessment-resources/using-assessment-results/reliability-validity-and-fairness (accessed on 8 September 2025).

- Moreau, L.; Ludäscher, B.; Altintas, I.; Barga, R.S.; Bowers, S.; Callahan, S.; Chin, G., Jr.; Clifford, B.; Cohen, S.; Cohen-Boulakia, S.; et al. Special Issue: The First Provenance Challenge. Concurr. Comput. Pract. Exp. 2008, 20, 409–418.

- Pasquier, T.; Lau, M.K.; Trisovic, A.; Boose, E.R.; Couturier, B.; Crosas, M.; Ellison, A.M.; Gibson, V.; Jones, C.R.; Seltzer, M. If these data could talk. Sci. Data 2017, 4, 170114.

- Jacobsen, A.; de Miranda Azevedo, R.; Juty, N.; Batista, D.; Coles, S.; Cornet, R.; Courtot, M.; Crosas, M.; Dumontier, M.; Evelo, C.T.; et al. FAIR Principles: Interpretations and Implementation Considerations. Data Intell. 2020, 2, 10–29.

- Wilkinson, M.D.; Dumontier, M.; Jan Aalbersberg, I.J.; Appleton, G.; Axton, M.; Baak, A.; Blomberg, N.; Boiten, J.-W.; da Silva Santos, L.; Bourne, P.E.; et al. The FAIR Guiding Principles for scientific data management and stewardship. Sci. Data 2016, 3, 160018.

- Sculley, D.; Holt, G.; Golovin, D.; Davydov, E.; Phillips, T.; Ebner, D.; Chaudhary, V.; Young, M.; Dennison, D. Hidden Technical Debt in Machine Learning Systems. In Proceedings of the NIPS’15: 29th International Conference on Neural Information Processing Systems, Montreal, QC, Canada, 7–12 December 2015; pp. 2494–2502.

- Qi, Q.; Tao, F.; Hu, T.; Anwer, N.; Liu, A.; Wei, Y.; Wang, L.; Nee, A. Enabling technologies and tools for digital twin. J. Manuf. Syst. 2021, 58, 3–21.

- Neto, A.; Souza Neto, J. Metamodels of Information Technology Best Practices Frameworks. J. Inf. Syst. Technol. Manag. 2011, 8, 619.

- Waytz, A.; Dungan, J.; Young, L. The whistleblower’s dilemma and the fairness–loyalty tradeoff. J. Exp. Soc. Psychol. 2013, 49, 1027–1033.

- Zhang, Y.; Sang, J. Towards Accuracy-Fairness Paradox: Adversarial Example-based Data Augmentation for Visual Debiasing. In Proceedings of the 28th ACM International Conference on Multimedia, New York, NY, USA, 12–16 October 2020; MM ’20. pp. 4346–4354.

- Carroll, A.; McGovern, C.; Nolan, M.; O’Brien, A.; Aldasoro, E.; O’Sullivan, L. Ethical Values and Principles to Guide the Fair Allocation of Resources in Response to a Pandemic: A Rapid Systematic Review. BMC Med. Ethics 2022, 23, 1–11.

- Emanuel, E.; Persad, G. The shared ethical framework to allocate scarce medical resources: A lesson from COVID-19. Lancet 2023, 401, 1892–1902.

- Kirat, T.; Tambou, O.; Do, V.; Tsoukiàs, A. Fairness and Explainability in Automatic Decision-Making Systems. A challenge for computer science and law. arXiv 2022, arXiv:2206.03226.

- Modén, M.U.; Lundin, J.; Tallvid, M.; Ponti, M. Involving teachers in meta-design of AI to ensure situated fairness. In Proceedings of the Sixth International Workshop on Cultures of Participation in the Digital Age: AI for Humans or Humans for AI? Co-Located with the International Conference on Advanced Visual Interfaces (CoPDA@AVI 2022), Frascati, Italy, 7 June 2022; Volume 3136, pp. 36–42.

- Padh, K.; Antognini, D.; Lejal Glaude, E.; Faltings, B.; Musat, C. Addressing Fairness in Classification with a Model-Agnostic Multi-Objective Algorithm. arXiv 2021, arXiv:2009.04441.

- Jabbari, S.; Joseph, M.; Kearns, M.; Morgenstern, J.; Roth, A. Fairness in Reinforcement Learning. In Proceedings of the 34th International Conference on Machine Learning, Sydney, Australia, 6–11 August 2017; Precup, D., Teh, Y.W., Eds.; JMLR.org: New York, NY, USA, 2017; Volume 70, pp. 1617–1626.

- Reuel, A.; Ma, D. Fairness in Reinforcement Learning: A Survey. arXiv 2024.

- Petrović, A.; Nikolić, M.; Jovanović, M.; Bijanić, M.; Delibašić, B. Fair Classification via Monte Carlo Policy Gradient Method. Eng. Appl. Artif. Intell. 2021, 104, 104398.

- Eshuijs, L.; Wang, S.; Fokkens, A. Balancing the Scales: Reinforcement Learning for Fair Classification. arXiv 2024. [Google Scholar] [CrossRef]

- Kim, W.; Lee, J.; Lee, J.; Lee, B.J. FairDICE: Fairness-Driven Offline Multi-Objective Reinforcement Learning. arXiv 2025. [Google Scholar] [CrossRef]

- Grote, T. Fairness as adequacy: A sociotechnical view on model evaluation in machine learning. AI Ethics 2024, 4, 427–440. [Google Scholar] [CrossRef]

- Kamiran, F.; Calders, T. Data preprocessing techniques for classification without discrimination. Knowl. Inf. Syst. 2012, 33, 1–33. [Google Scholar] [CrossRef]

- Menon, A.K.; Williamson, R.C. The cost of fairness in binary classification. In Proceedings of the 1st Conference on Fairness, Accountability and Transparency, New York, NY, USA, 23–24 February 2018; Friedler, S.A., Wilson, C., Eds.; PMLR: New York, NY, USA, 2018; Volume 81, pp. 107–118. [Google Scholar]

- Han, X.; Chi, J.; Chen, Y.; Wang, Q.; Zhao, H.; Zou, N.; Hu, X. FFB: A Fair Fairness Benchmark for In-Processing Group Fairness Methods. arXiv 2024, arXiv:2306.09468. [Google Scholar]

- Hardt, M.; Price, E.; Srebro, N. Equality of Opportunity in Supervised Learning. arXiv 2016, arXiv:1610.02413. [Google Scholar] [CrossRef]

- Baumann, J.; Hannák, A.; Heitz, C. Enforcing Group Fairness in Algorithmic Decision Making: Utility Maximization Under Sufficiency. In Proceedings of the 2022 ACM Conference on Fairness, Accountability, and Transparency, Seoul, Republic of Korea, 21–24 June 2022; FAccT ’22. pp. 2315–2326. [Google Scholar] [CrossRef]

- Duong, M.K.; Conrad, S. Towards Fairness and Privacy: A Novel Data Pre-processing Optimization Framework for Non-binary Protected Attributes. In Data Science and Machine Learning; Springer Nature: Singapore, 2023; pp. 105–120. [Google Scholar] [CrossRef]

- Bellamy, R.K.E.; Dey, K.; Hind, M.; Hoffman, S.C.; Houde, S.; Kannan, K.; Lohia, P.; Martino, J.; Mehta, S.; Mojsilovic, A.; et al. AI Fairness 360: An Extensible Toolkit for Detecting, Understanding, and Mitigating Unwanted Algorithmic Bias. arXiv 2018, arXiv:1810.01943. [Google Scholar] [CrossRef]

- Wang, S.; Wang, P.; Zhou, T.; Dong, Y.; Tan, Z.; Li, J. CEB: Compositional Evaluation Benchmark for Fairness in Large Language Models. arXiv 2025, arXiv:2407.02408. [Google Scholar]

- Fan, Z.; Chen, R.; Hu, T.; Liu, Z. FairMT-Bench: Benchmarking Fairness for Multi-turn Dialogue in Conversational LLMs. arXiv 2025, arXiv:2410.19317. [Google Scholar]

- Jin, R.; Xu, Z.; Zhong, Y.; Yao, Q.; Dou, Q.; Zhou, S.K.; Li, X. FairMedFM: Fairness Benchmarking for Medical Imaging Foundation Models. arXiv 2024, arXiv:2407.00983. [Google Scholar]

- Weinberg, L. Rethinking Fairness: An Interdisciplinary Survey of Critiques of Hegemonic ML Fairness Approaches. J. Artif. Intell. Res. 2022, 74, 75–109. [Google Scholar] [CrossRef]

- Moore, R.E.; Kearfott, R.B.; Cloud, M.J. Introduction to Interval Analysis; Society for Industrial and Applied Mathematics: Philadelphia, PA, USA, 2009. [Google Scholar] [CrossRef]

- Ayyub, B.M.; Klir, G.J. Uncertainty Modeling and Analysis in Engineering and the Sciences; Chapman & Hall/CRC: Boca Raton, FL, USA, 2006. [Google Scholar] [CrossRef]

- Smets, P. The Transferable Belief Model and Other Interpretations of Dempster-Shafer’s Model. arXiv 2013. [Google Scholar] [CrossRef]

- Skau, E.; Armstrong, C.; Truong, D.P.; Gerts, D.; Sentz, K. Open World Dempster-Shafer Using Complementary Sets. In Proceedings of the Thirteenth International Symposium on Imprecise Probability: Theories and Applications, Oviedo, Spain, 11–14 July 2023; de Cooman, G., Destercke, S., Quaeghebeur, E., Eds.; PMLR: New York, NY, USA, 2023; Volume 215, pp. 438–449. [Google Scholar]

- Xiao, F.; Qin, B. A Weighted Combination Method for Conflicting Evidence in Multi-Sensor Data Fusion. Sensors 2018, 18, 1487. [Google Scholar] [CrossRef]

- IEEE Computer Society. IEEE Standard for System, Software, and Hardware Verification and Validation; IEEE: Piscataway, NJ, USA, 2016. [Google Scholar] [CrossRef]

- Auer, E.; Luther, W. Towards Human-Centered Paradigms in Verification and Validation Assessment. In Collaborative Technologies and Data Science in Smart City Applications; Hajian, A., Luther, W., Han Vinck, A.J., Eds.; Logos Verlag: Berlin, Germany, 2018; pp. 68–81. [Google Scholar]

- Barnes, J.J.I.; Konia, M.R. Exploring Validation and Verification: How they Differ and What They Mean to Healthcare Simulation. Simul. Heal. J. Soc. Simul. Healthc. 2018, 13, 356–362. [Google Scholar] [CrossRef]

- Riedmaier, S.; Danquah, B.; Schick, B.; Diermeyer, F. Unified Framework and Survey for Model Verification, Validation and Uncertainty Quantification. Arch. Comput. Methods Eng. 2020, 28, 1–26. [Google Scholar] [CrossRef]

- Kannan, H.; Salado, A. A Theory-driven Interpretation and Elaboration of Verification and Validation. arXiv 2025, arXiv:2506.10997. [Google Scholar]

- Hanna, M.G.; Pantanowitz, L.; Jackson, B.; Palmer, O.; Visweswaran, S.; Pantanowitz, J.; Deebajah, M.; Rashidi, H.H. Ethical and Bias Considerations in Artificial Intelligence/Machine Learning. Mod. Pathol. 2025, 38, 100686. [Google Scholar] [CrossRef] [PubMed]

- Mehrabi, N.; Morstatter, F.; Saxena, N.; Lerman, K.; Galstyan, A. A Survey on Bias and Fairness in Machine Learning. ACM Comput. Surv. (CSUR) 2021, 54, 1–35. [Google Scholar] [CrossRef]

- Meier, J.D. Communication Biases. Sources of Insight. 2025. Available online: https://sourcesofinsight.com/communication-biases/ (accessed on 21 October 2025).

- Sokolovski, K. Top Ten Biases Affecting Constructive Collaboration. 2018. Available online: https://innodirect.com/top-ten-biases-in-collaboration/ (accessed on 21 October 2025).

- Balagopalan, A.; Zhang, H.; Hamidieh, K.; Hartvigsen, T.; Rudzicz, F.; Ghassemi, M. The Road to Explainability is Paved with Bias: Measuring the Fairness of Explanations. In Proceedings of the FAccT ’22: 2022 ACM Conference on Fairness, Accountability, and Transparency, Seoul, Republic of Korea, 21–24 June 2022; pp. 1194–1206.

- Spranca, M.; Minsk, E.; Baron, J. Omission and Commission in Judgment and Choice. J. Exp. Soc. Psychol. 1991, 27, 76–105.

- Caton, S.; Haas, C. Fairness in Machine Learning: A Survey. ACM Comput. Surv. 2024, 56, 1–38.

- Wang, Y.; Wang, L.; Zhou, Z.; Laurentiev, J.; Lakin, J.R.; Zhou, L.; Hong, P. Assessing fairness in machine learning models: A study of racial bias using matched counterparts in mortality prediction for patients with chronic diseases. J. Biomed. Inform. 2024, 156, 104677.

- Luther, W.; Baloian, N.; Biella, D.; Sacher, D. Digital Twins and Enabling Technologies in Museums and Cultural Heritage: An Overview. Sensors 2023, 23, 1583.

- Katsoulakis, E.; Wang, Q.; Wu, H.L.; Shahriyari, L.; Fletcher, R.; Liu, J.; Achenie, L.; Liu, H.; Jackson, P.; Xiao, Y.; et al. Digital twins for health: A scoping review. npj Digit. Med. 2024, 7, 77.

- Bibri, S.; Huang, J.; Jagatheesaperumal, S.; Krogstie, J. The Synergistic Interplay of Artificial Intelligence and Digital Twin in Environmentally Planning Sustainable Smart Cities: A Comprehensive Systematic Review. Environ. Sci. Ecotechnol. 2024, 20, 100433.

- Islam, K.M.A.; Khan, W.; Bari, M.; Mostafa, R.; Anonthi, F.; Monira, N. Challenges of Artificial Intelligence for the Metaverse: A Scoping Review. Int. Res. J. Multidiscip. Scope 2025, 6, 1094–1101.

- Luther, W.; Auer, E.; Sacher, D.; Baloian, N. Feature-oriented Digital Twins for Life Cycle Phases Using the Example of Reliable Museum Analytics. In Proceedings of the 8th International Symposium on Reliability Engineering and Risk Management (ISRERM 2022), Hannover, Germany, 4–7 September 2022; Beer, M., Zio, E., Phoon, K.K., Ayyub, B.M., Eds.; Research Publishing: Singapore, 2022; Volume 9, pp. 654–661.

- Paulus, D.; Fathi, R.; Fiedrich, F.; Walle, B.; Comes, T. On the Interplay of Data and Cognitive Bias in Crisis Information Management. Inf. Syst. Front. 2024, 26, 391–415.

- Lin, F.; Zhao, C.; Vehik, K.; Huang, S. Fair Collaborative Learning (FairCL): A Method to Improve Fairness amid Personalization. INFORMS J. Data Sci. 2024, 4, 67–84.

- Chen, L.; Zheng, S.; Wu, Y.; Dai, H.N.; Wu, J. Resource and Fairness-Aware Digital Twin Service Caching and Request Routing with Edge Collaboration. IEEE Wirel. Commun. Lett. 2023, 12, 1881–1885.

- Faullant, R.; Füller, J.; Hutter, K. Fair play: Perceived fairness in crowdsourcing competitions and the customer relationship-related consequences. Manag. Decis. 2017, 55, 1924–1941.

| No. | Name | Definition | Ref | C | QC |
|---|---|---|---|---|---|
| 1 | Aggregation b | False conclusions drawn about individuals from observing the population | [75] | ST | F |
| 2 | Attribution b | Responsibility shifted between actors | * | H | RS |
| 3 | Audit b 1 | Arising from restricted data access | [13] | SY/H | Au |
| 4 | Audit b 2 | Arising from incomplete or selectively written documentation | [13] | SY/H | Au |
| 5 | Audit-washing | Superficial auditability through reports obscuring deeper mechanisms | [75] | SY | Au |
| 6 | Authority b | Overvaluing the opinions or decisions of an authority | [76] | H | C |
| 7 | Cognitive amplific. | Strengthening of pre-existing mental tendencies by external influences | *** | ST | E |
| 8 | Cohort b | Models developed using conventional/readily quantifiable groups | [13] | ST | A |
| 9 | Confirmation b | Seeking out information that confirms existing beliefs | [76] | H | C |
| 10 | Delegation b | Responsibility over-delegated to the algorithm, reducing human oversight | [13] | SY | RS |
| 11 | Deployment b | A difference between the intended and actual use of the model | [14] | SY | A |
| 12 | Egocentric b | Assuming others understand, know, or value things the same way one does | [76] | H | C |
| 13 | Equity b | Overemphasizing equal treatment | [76] | SY/H | F |
| 14 | Evaluation b | Inappropriate/disproportionate benchmarks for evaluation | [75] | ST | A |
| 15 | Feature b | Group differences in the meaning of group variables | [1] | ST | E |
| 16 | Framing | Information presentation influencing its perception | [76] | SY/H | C |
| 17 | Greenwashing b | Declaring systems “sustainable” without rigorous evidence | * | SY | RS |
| 18 | Group imbalance b | Different accuracy levels for different groups | † | ST | A |
| 19 | Group-think | A need to conform to social norms | [77] | H | C |
| 20 | Historical b | Data reflects structural inequalities from the past | [14] | SY | D |
| 21 | Illusion of transpar. | Thoughts/explanations believed to be more apparent than they are | [76] | SY/H | E |
| 22 | Inductive b | Assumptions built into the model’s structure | ** | SY | F |
| 23 | In/out-group b | Favoring own group members over others | [77] | H | C |
| 24 | Interpretation b | Inappropriate analysis of unclear or uncertain information | [74] | H | A |
| 25 | Investigator b | Assumptions, priors, and cognitive biases of auditors | | H | Au |
| 26 | Label b | Outcome variables having different meanings across groups | [1] | ST | F |
| 27 | Measurement bias | Labels/features/models do not accurately capture the intended variable | [75] | SY | D |
| 28 | Method b | Explanations depend on the chosen method | [78] | SY | E |
| 29 | Missingness | Absence of data impacting a certain group | [1] | ST | D |
| 30 | Omission b | Missing explanation deemed less significant than an explicit one | [79] | H | E |
| 31 | Optimization b | Choosing a goal function that ignores minority performance | [75] | ST | F |
| 32 | Overconfidence b | Belief that one’s abilities/performance are better than they are | * | H | C |
| 33 | Overfitting b | Model memorizing training data and performing poorly in real life | | ST | A |
| 34 | Privilege b | Algorithms not available where protected groups receive care | [13] | SY | S |
| 35 | Representation b | Introduced when designing features, categories, or encodings | [74] | ST | F |
| 36 | Responsib.-washing | Impression of responsibility | § | H | RS |
| 37 | Sampling b | Models tailored to the majority group | [1] | ST | D |
| 38 | Shared responsib. b | Assumption of responsibility decreasing in presence of others | | H | RS |
| 39 | Simplification b | Favoring simpler solutions that may underfit minority groups | [75] | H | F |
| 40 | Stereotyping b | Assumptions about individuals based on their membership in a group | [76] | H | C |
| 41 | Structural b | Persistent inequities embedded in social/economic/legal/cultural systems | [52] | SY | S |
| 42 | Temporal b | Dismissing differences in populations and behaviors over time | [18] | ST | A |
| 43 | Threshold b | The same model threshold favoring one group while disadvantaging another | [80] | ST | A |

| BC | 0.224 | [0.040, 0.067] | [0.209, 0.254] |

| OC | 0.182 | [0.030, 0.066] | [0.166, 0.212] |

| sp | 0.048 | [0.010, 0.019] | [0.043, 0.055] |

| mBC | 0.09 | 0.09 | [0.124, 0.136] |

| BC | 0.02 | 0.02 | [0.026, 0.029] |

| FE | Patient View (Expert 1) | Patient View (Expert 2) | Family View | |||

|---|---|---|---|---|---|---|

| QCF | ||||||

| QCA | ||||||

| QCE | ||||||

| C | ||||||

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Luther, W.; Auer, E. Towards Fair Medical Risk Prediction Software. Future Internet 2025, 17, 491. https://doi.org/10.3390/fi17110491
