5.1. Overview of DT Research on the Production Efficiency of RESs and Energy-Saving Applications

In the energy sector, DT technology is evolving rapidly. As previously discussed, only a limited number of publications have reported on these initiatives thus far. The objective of this section is to provide a detailed analysis of DT for production efficiency in RESs, specifically addressing the first half of RQ1 (“What is the current state-of-the-art of DT for enhancing production efficiency in RESs?”). The applications of DTs in this context include enhancing efficiency in the production and distribution of electricity across various energy sectors, such as nuclear, renewable, and conventional energy, as well as in automobiles, energy storage, batteries, and energy project planning. This section also explores DT applications in smart energy systems and energy cyber–physical systems. We review all publications that use DTs to improve production efficiency in RESs and other energy-saving applications.

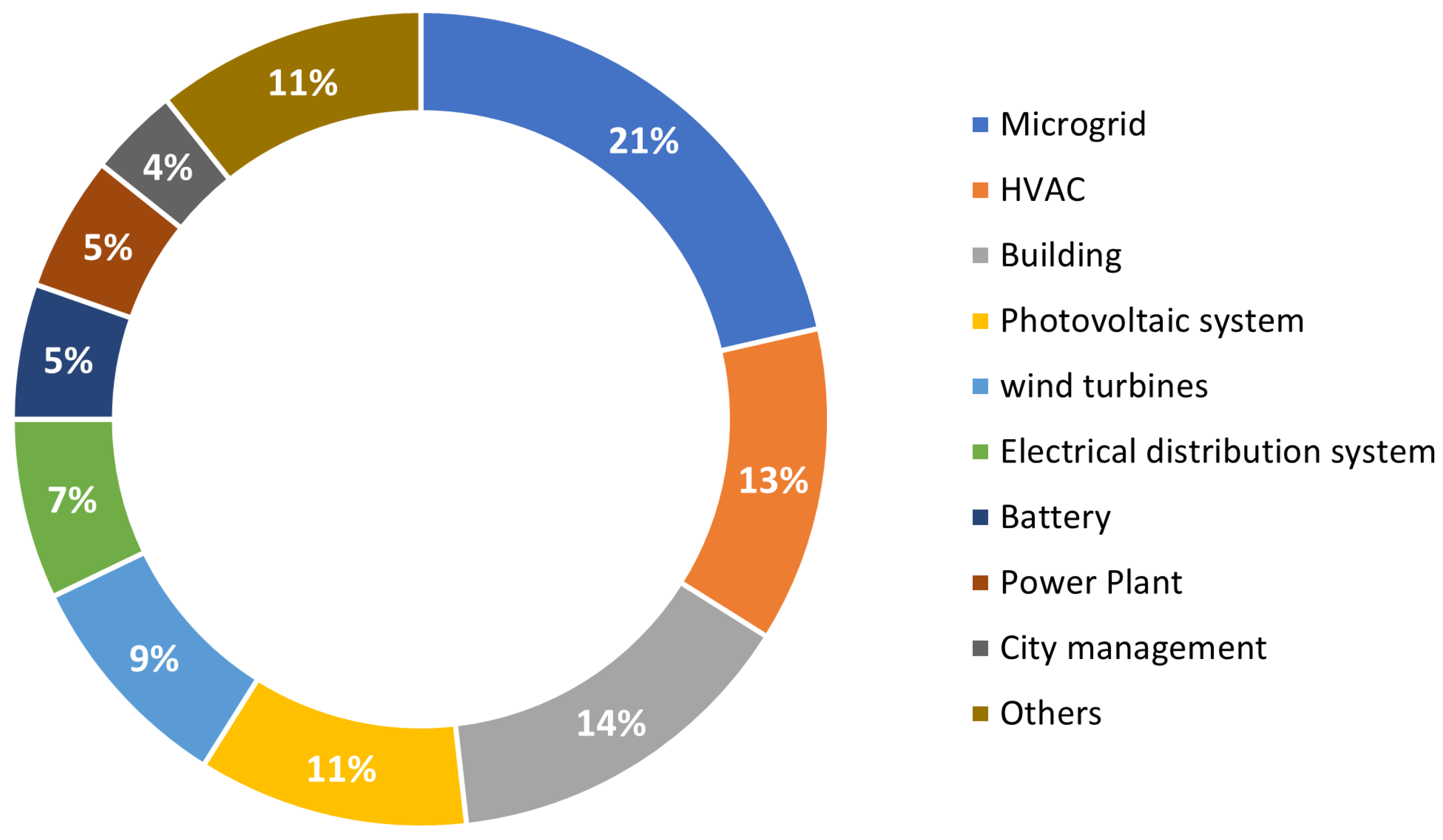

Figure 8 illustrates the stratification of papers based on the systems modeled using DT. The second half of RQ1, focusing on safety assurance in RESs, will be covered in Section 5.2.

Zohdi et al. [62] computed solar power flow with a reduced-order model of Maxwell’s equations and ran simulations to maximize the power absorbed by the panels under varying multi-panel inclinations, panel refractive indices, sizes, shapes, heights, and ground refractive properties, then optimized the system using a genomic-based machine learning (ML) algorithm. Fahim and Sharma [63] followed the 5D modeling approach, which comprises a physical entity, virtual representation, data curation, communication scheme, and services (PE, VR, DC, CS, and Ss), to monitor wind turbines and forecast wind speed using an advanced temporal convolutional neural network (TCN) and k-nearest neighbors (KNN) regression. They used the wind forecast to predict the power generation for the month through a 5G next-generation radio access network (5G-NG-RAN) assisted cloud-based model for analyzing the wind farm. Pimenta et al. [64] optimized wind energy by creating models and performing simulations to increase production efficiency. Howard et al. [65], Kaewunrue et al. [66], and Tagliabue et al. [67] proposed a DT framework for modeling, controlling, and optimizing cyber–physical systems of the greenhouse production process. The framework is based on the Smart Industry Architecture Model (SIAM), which provides a systematic approach for the exchange of data and information between the different layers of the model. The proposed framework is evaluated using a case study of a greenhouse production process. The results show that the framework can be used to improve the energy efficiency and productivity of the greenhouse production process.

Some researchers [32,59,68] have discussed the necessity of developing a DT for renewable energy generators. He and Ai [69] proposed a framework that emphasizes the importance of leveraging technologies such as big data, artificial intelligence, 5G, cloud computing, and IoT to enhance the capabilities of the power system digital twin (PSDT). By integrating data-driven and model-based tools, the PSDT aims to improve system understanding, decision-making, and overall grid management in the power sector. Zaballos and Briones [70] proposed a BIM model with an IoT-based wireless sensor network for environmental monitoring and motion detection to gain insight into occupants’ comfort levels and improve efficiency. Zhao et al. [71] presented a dynamic cutting parameter optimization method for low carbon and high efficiency based on DT. Compared with traditional static optimization methods, this method can dynamically find optimal cutting parameters in light of the real-time sensing data of the machining conditions. The case study shows that the method can reduce the processing time by 5.84% and carbon emissions by 6.1%. However, this study still has limitations, mainly concerning the smart perception of machining conditions and the continuous evolution of optimization models driven by sensing data. In the case study, cutting parameters are dynamically optimized based on real-time sensing data for the smoothness of the cutting force, without considering other machining factors such as surface roughness, accuracy, and tool life.

Li et al. [72] and Merkle et al. [73] proposed a DT for battery management systems to improve the computational power and data storage capability using cloud computing. The proposed model-based battery diagnostic algorithms, with an adaptive extended H-infinity filter (AEHF) and particle swarm optimization (PSO) for SOC (state-of-charge) and SOH (state-of-health) estimation, were implemented on two different types of batteries, i.e., lithium-ion and lead-acid batteries. Security and privacy of data were assured using MQTT and TCP/IP protocols. Battery modeling, experimental set-up, and validation were performed. Park and Byeo [74] utilized the NARX algorithm (a dynamic neural network) and multivariate adaptive regression splines (MARS) to optimally schedule the charging and discharging of an energy storage system (ESS) and minimize electricity bills. Brosinsky et al. [75], Pan and Dou [76], and Brosinsky and Song [26] proposed a PSDT framework whose main components are a data-driven approach, closed-loop feedback, and real-time interaction. A DT construction of a CNC machine tool was presented by Zhao and Fang [71], which utilized an optimization method for cutting parameters to reduce carbon emissions from manufacturing processes. Furthermore, several publications treat related technologies, such as building information modeling (BIM) [66] and simulations [33,64,65], as DTs. Therefore, there is a need to consolidate research to reach a unified understanding of the topic and to guarantee that future research efforts are built on sound foundations.

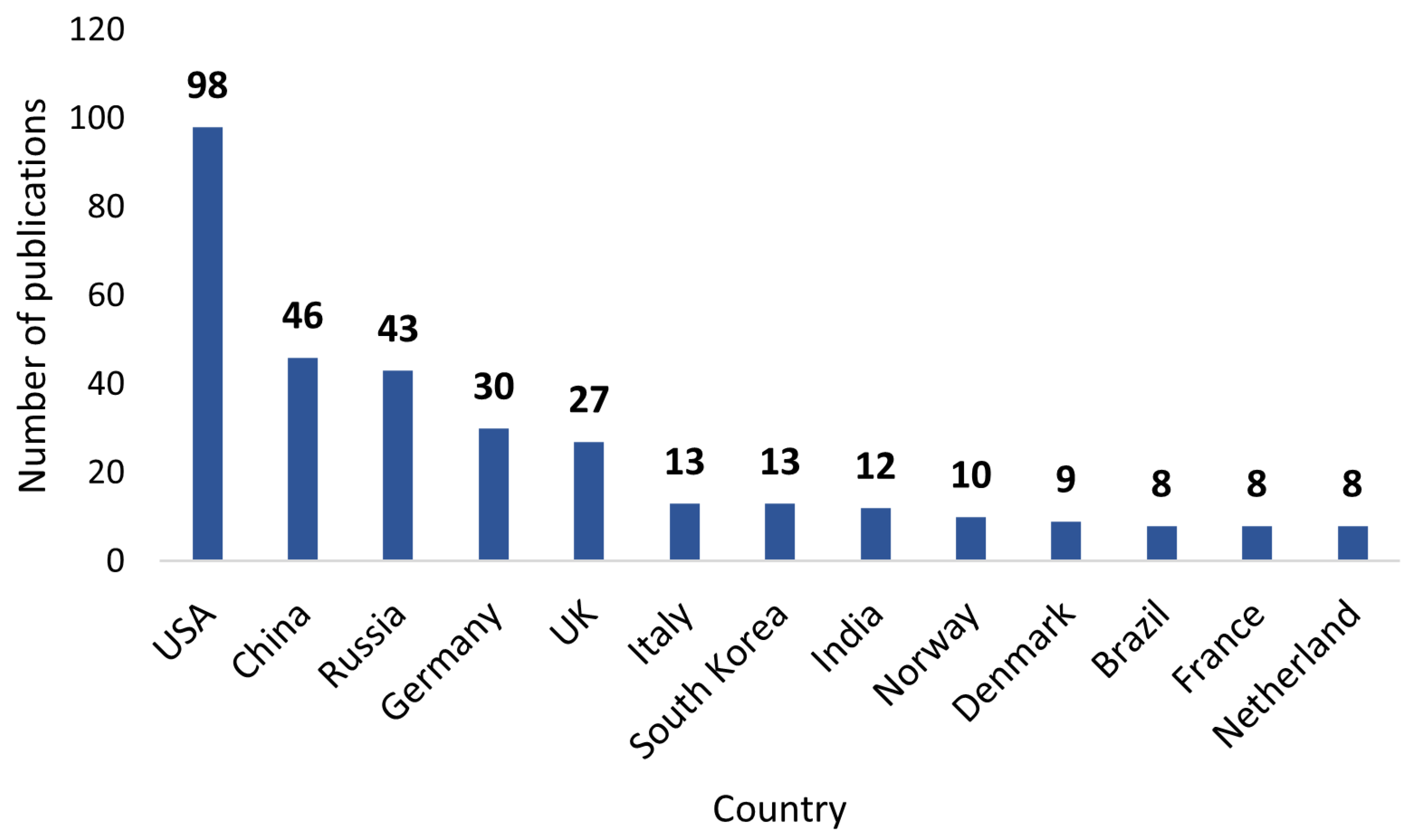

The number of energy-related DT publications has been increasing rapidly, with the USA, China, and the European Union taking the lead, as shown in Figure 9. Moreover, the research papers on DT in the energy industry were published in 34 scientific journals. Table 3 shows the top 10 sources. The first five journals make up around 36.7% of the papers, with the journal Energies being the most prominent, making up about 16%. Most journals only have one publication in this field, indicating that it is a diverse area that fits with many other research topics and journals [10].

Among all the articles reviewed, it is evident that this research domain remains relatively new and under-explored, with the earliest publication on DT for RESs dating back to 2019 [33]. However, there is a clear indication that it is an emerging field, with an increasing number of publications in recent years showcasing a growing interest and recognition of the potential of DT in enhancing RESs.

5.2. Overview of DT Research on the Safety Assurance of RESs

In this section, we provide a detailed analysis of DT for safety assurance in RESs, addressing the second half of RQ1 (“What is the current state-of-the-art of DT for enhancing safety assurance in RESs?”). Various works propose fault detection and identification methodologies, control logic implementation, and defense approaches for smart grids and distributed systems, highlighting the importance of secure operations. Notably, the literature presents diverse approaches, including cloud-based distributed control algorithms, ML-based fault diagnosis, and prototypes for safety and risk management, such as the hydrogen high-pressure vessel discussed in [5]. However, some prototypes remain untested, emphasizing the need for comprehensive evaluations in developing DTs.

Jain et al. [33] designed a PV panel-level power converter prototype in which a DT estimator was created to capture the real-time characteristics of a PV energy conversion unit (PVECU), together with a fault signature library for power converter faults, PV panel faults, and electrical sensor faults, using a Xilinx Artix-7 field programmable gate array (FPGA) for real-time fault detection and identification. Zheng and Liu [77] presented a review of DT for cybersecurity in the smart grid. Onederra et al. [78] presented a novel thermal model of a medium-voltage cable under electrical stress in a wind farm. The model is based on experimental data from previous studies, and it allows us to predict the cable’s lifetime under different operating conditions. The model is also able to provide information about the cable’s aging state, which can be used to schedule preventive maintenance or replacement. The results of the simulations show that the model is able to accurately predict the cable’s failure time, and it can therefore be used as a valuable tool for cable design and maintenance.

Nguyen et al. [79] proposed an advanced holistic assessment procedure using Digital Twins (DTs) and power-hardware-in-the-loop. This approach facilitates the assessment of the impact of distributed renewable energy resources (DRESs) at both local and global levels within their expected deployment environment. The approach was demonstrated via a case study that involved integrating a new PV inverter and load into a high PV penetration microgrid, governed by a coordinated voltage control algorithm. While the DTs were replicated from real devices, it was possible to make adjustments to the topology among them in simulation, without modifying the real connections or interfering with the activities inside the buildings and houses. Lei et al. [80] explored methodologies for realizing a DT of thermal power plants. This article delves into the detailed implementation of the DT from five different perspectives, providing a practical and feasible path for monitoring and controlling the DT via web browsers. The functionalities of a DT thermal power plant are summarized as real-time monitoring, visualization, interactions, algorithm design, and so on.

Saad et al. [81] proposed an IoT-based DT for cyber–physical networked microgrids (NMGs) to enhance resilience against cyberattacks. The cloud-based DT platform is implemented to provide centralized oversight of the NMG system. The cloud system hosts the controllers (cyber-things) and the sensors (physical things) in the cloud IoT core in terms of the IoT shadow. The proposed DT covers the digital replica of the physical layer, the cyber layer, and their hybrid interactions. The proposed framework ensures the proper and secure operation of the NMG. Additionally, it can detect false data injection attacks (FDIA) and denial of service (DoS) attacks on the control system, whether they are individual or coordinated attacks. Once an attack is detected, corrective action can be taken by the observer based on What-If scenarios that ensure the safe and seamless operation of the NMGs.
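To make the detection idea concrete, the following is a minimal sketch of how an observer might flag the two attack classes by comparing incoming measurements with the twin's prediction. It is not the method of [81]: the `Reading` schema, the residual limit, and the timeout are hypothetical values chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Reading:
    """One measurement reported by a physical sensor (hypothetical schema)."""
    timestamp: float  # seconds
    value: float      # e.g., bus voltage in per-unit


def classify(reading: Optional[Reading], predicted: float, now: float,
             last_seen: float, residual_limit: float = 0.05,
             timeout: float = 2.0) -> str:
    """Label the latest sample as 'ok', 'fdia_suspected', or 'dos_suspected'."""
    # No fresh data within the timeout: the channel may be under a DoS attack.
    if reading is None:
        return "dos_suspected" if now - last_seen > timeout else "ok"
    # Large gap between the reported value and the twin's prediction:
    # the measurement may have been tampered with (false data injection).
    if abs(reading.value - predicted) > residual_limit:
        return "fdia_suspected"
    return "ok"
```

In a real deployment the thresholds would have to be tuned against measurement noise, and a single residual check would not be sufficient against stealthy coordinated attacks.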

Pimenta et al. [64] developed a simulation model for the continuous tracking of accumulated fatigue damage and the evaluation of alternative operation strategies for an offshore wind turbine. This model was capable of making accurate predictions of thrust force and power output using only data from project drawings and theoretical curves. The structural and mechanical properties of the wind turbine were calculated based on the geometric properties of the tower and blades. The aerodynamic properties of different sections of the blades were computed using 2D models created with ANSYS Fluent, which is a powerful computational fluid dynamics (CFD) software tool used for modeling and simulating fluid flow, heat transfer, and chemical reactions. The measured and simulated responses allowed for the identification and validation of structural dynamic properties and static and dynamic internal loads.

Table 4 illustrates the citations of safety assurance in RESs using DT. Among the 55 reviewed papers, only 15% of publications have implemented safety assurance. Specifically, three papers discuss equipment safety, another three focus on thermal and electrical safety, and two papers focus on security implementations against cyberattacks. These findings highlight the critical need for further research and attention to safety aspects within the literature, indicating an area that requires greater exploration and emphasis.

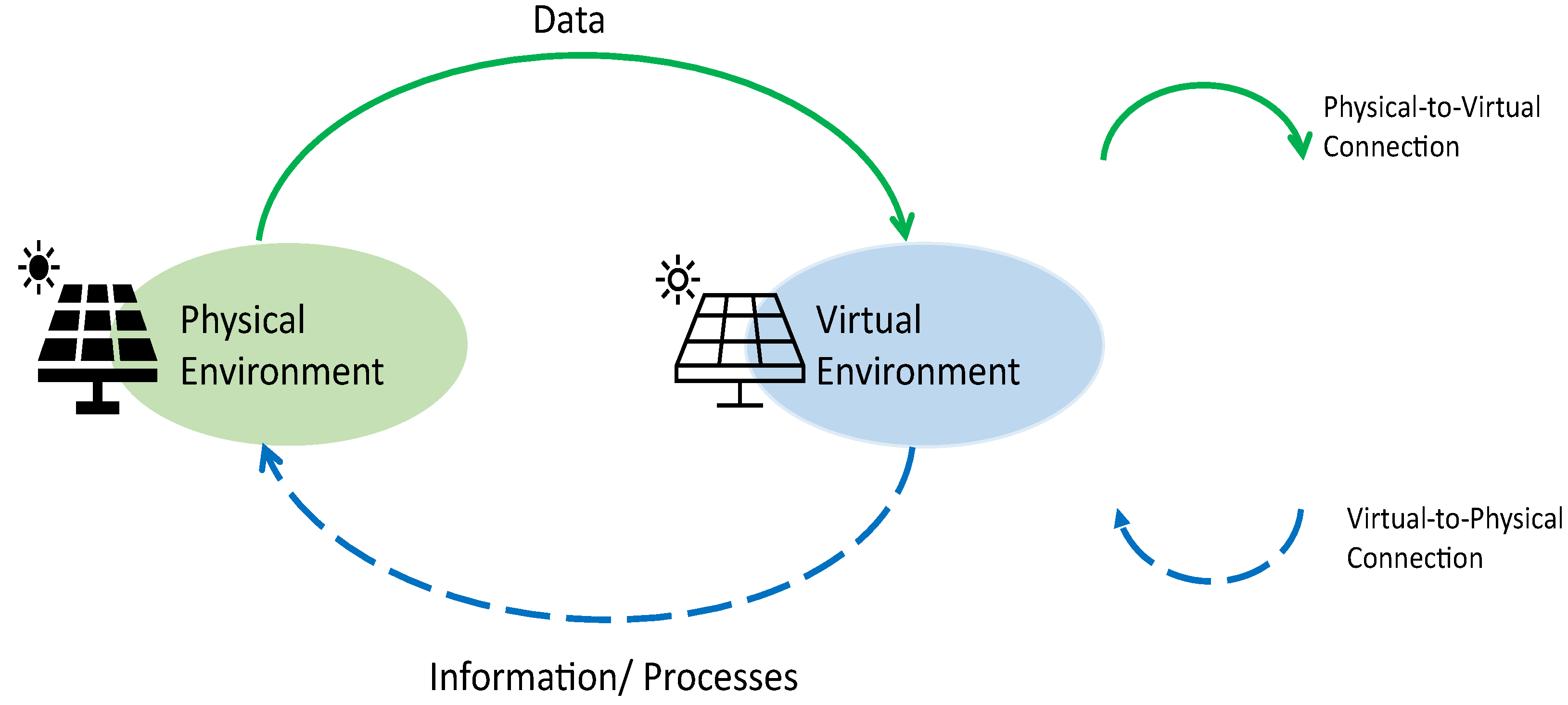

Meanwhile, the DT relies heavily on the Industrial Internet-of-Things (IIoT) for both physical-to-virtual and virtual-to-physical twinning. The use of sensors, including RFID, facilitates data collection, while actuators effect changes in the physical environment. Although the literature emphasizes the importance of data security in this context, it is acknowledged as a broad topic requiring separate research.

While DT stands as a crucial technology within the energy industry, allowing for enhanced efficiency and productivity, predictive system behavior forecasting, and improved safety, challenges remain concerning the practical application of these DT models in real-world scenarios. Despite its significance in energy systems, the development of methods for applying DT models to RESs, notably production, predictive maintenance, and safety, is still in its early stages. Existing literature often comprises theoretical frameworks lacking tangible case studies and comprehensive methodologies. Nevertheless, there are practical instances detailed in certain literature [59]. Consequently, there is a clear need for further research focusing on real case studies to establish methods for effectively integrating DT into the energy industry, thus amplifying its potential impact on RES management operations.

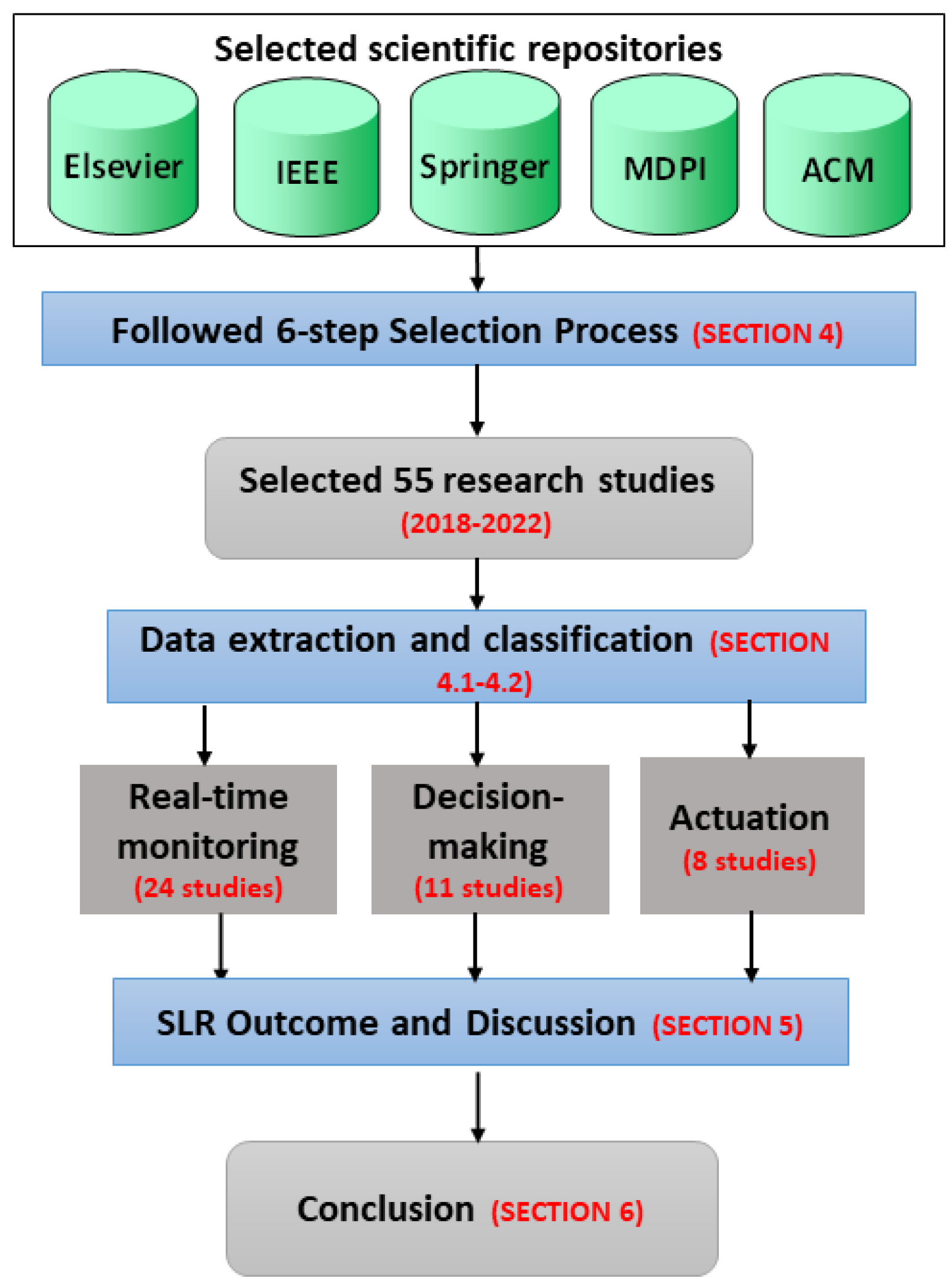

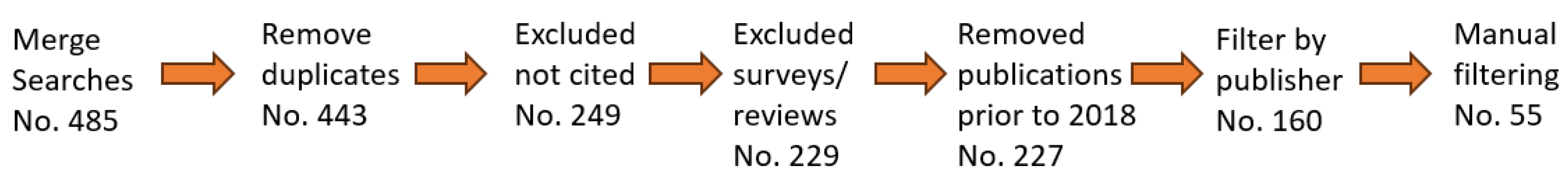

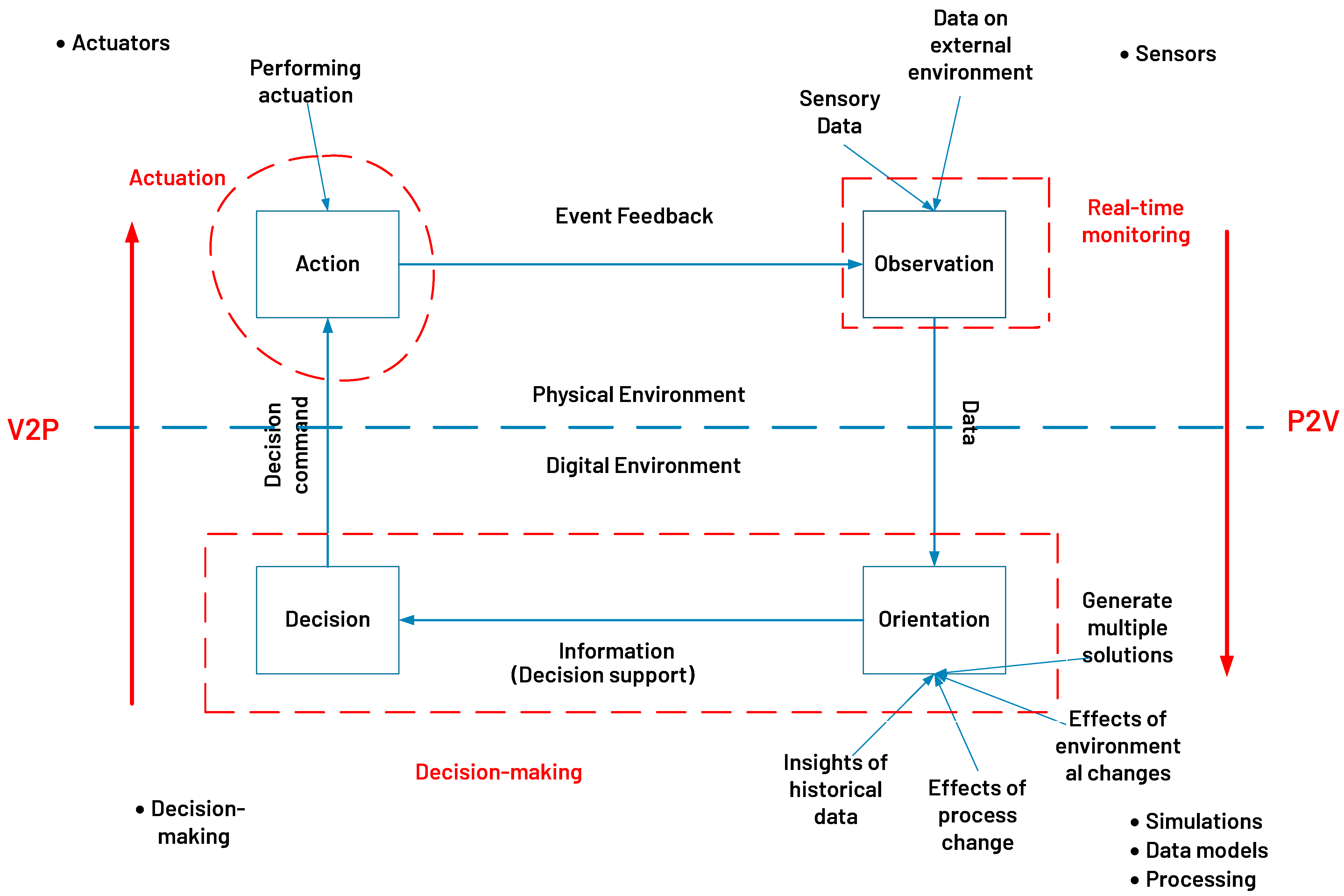

5.3. Analyzing Outcome with DT Taxonomy and OODA Framework

In this section, we delve into the outcomes of our investigation into the application of DTs for production efficiency and safety assurance in RESs, building on insights gained from previous sections. Initially, we introduce a taxonomy, as detailed in Section 4.1, to systematically analyze and explore the literature. This taxonomy divides key aspects (hardware, software, and data) into physical and digital environments, providing a structured approach to our analysis. Following this, we utilize the OODA framework, which is tailored specifically for identifying the main components of DTs in RESs. We assess this framework by exploring common challenges and issues related to production efficiency and safety assurance within RESs. Through these analyses, our goal is to clarify the potential benefits and challenges of applying DTs in this sector and to inform future developments.

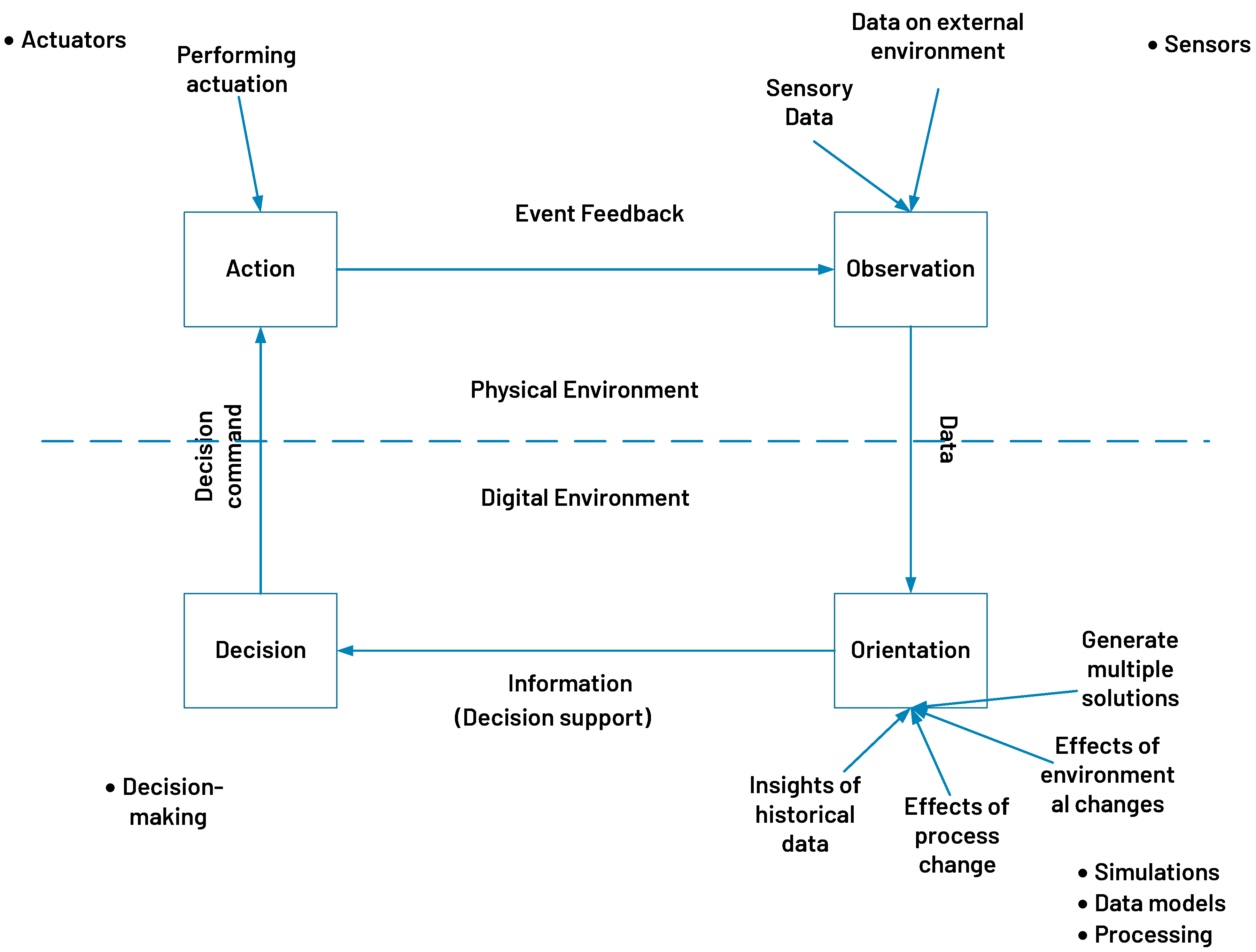

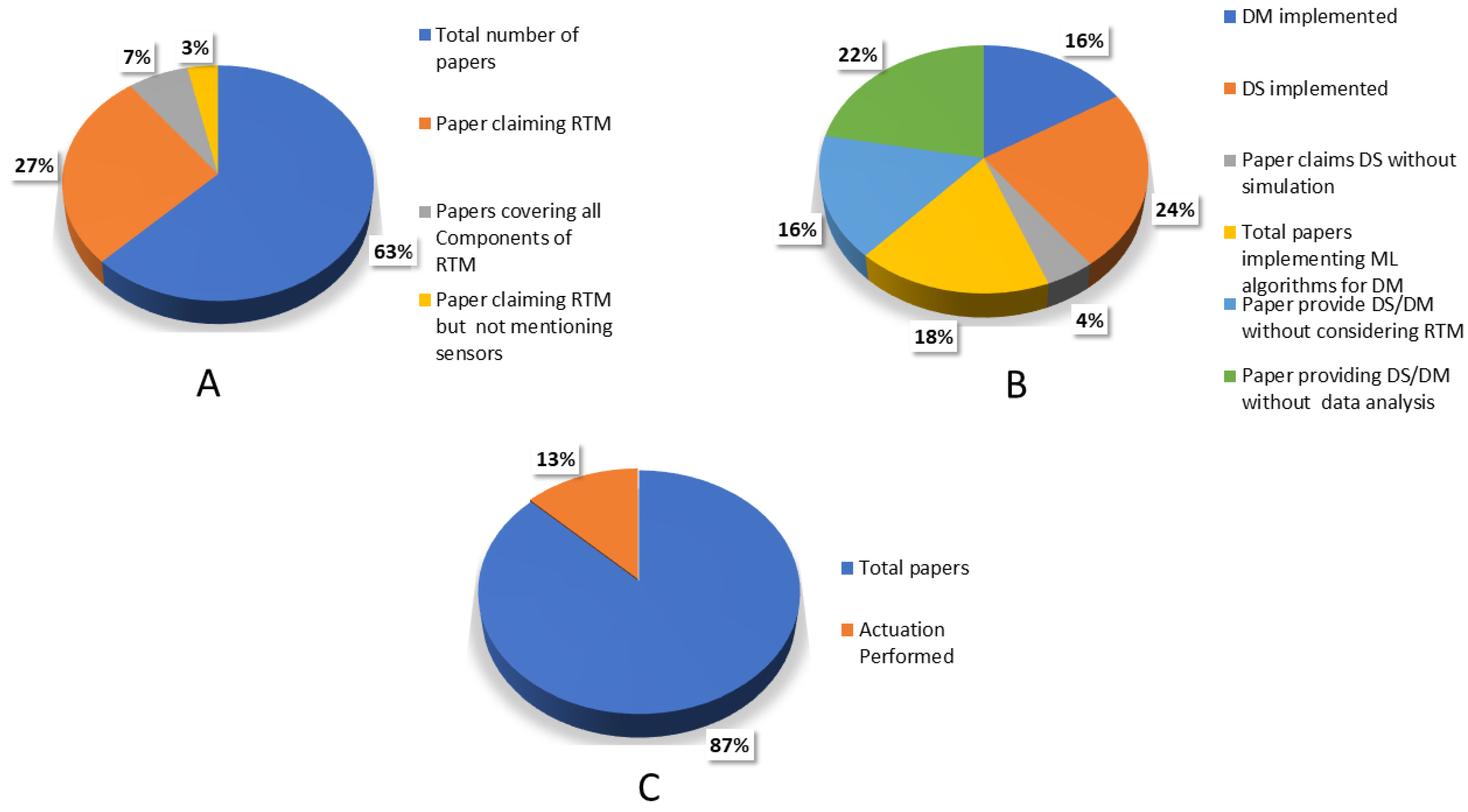

Firstly, we investigated the literature using the DT taxonomy, performing a comprehensive analysis of 55 selected primary publications discussed in Section 4. We collected data from these primary studies and evaluated them based on predefined capabilities. Our analysis outlined the essential tools and components necessary for implementing real-time monitoring (RTM), decision-making, and actuation within DT systems. For example, real-time monitoring requires the utilization of sensors, computing resources, user interfaces, and communication protocols. Decision-making relies on a combination of simulation techniques, data processing methods, real-time monitoring capabilities, ML algorithms, and data modeling approaches. Lastly, actuation involves the integration of real-time monitoring, decision-making processes, and actuators. Table 5 shows the distribution of DT implementation across a constructed taxonomy of the physical environment (i.e., hardware) and digital environment (i.e., software and data). By analyzing this collected data, we were able to identify gaps in the literature concerning CLDTs for production efficiency and safety assurance of RESs.
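The dependency structure described above (RTM needs four tool classes, decision-making builds on RTM, and actuation builds on both) can be expressed as a small check. The sketch below is only an illustration of that logic; the component labels are hypothetical names and do not reproduce the coding scheme used for Table 5.

```python
# Tool sets follow the text above; the string labels are hypothetical.
RTM_TOOLS = {"sensors", "computing", "user_interface", "protocols"}
DECISION_TOOLS = {"simulation", "data_processing", "ml", "data_modeling"}


def capabilities(components):
    """Return which DT capabilities a publication's components can support."""
    # RTM requires every monitoring tool to be present.
    rtm = RTM_TOOLS <= components
    # Decision-making requires RTM plus all decision-related tools.
    decision = rtm and DECISION_TOOLS <= components
    # Actuation requires RTM, decision-making, and actuators.
    actuation = rtm and decision and "actuators" in components
    return {
        "rtm": rtm,
        "decision_making": decision,
        "actuation": actuation,
        # A closed-loop DT (CLDT) needs all three capabilities together.
        "closed_loop": rtm and decision and actuation,
    }
```

For example, a paper reporting only sensors, computing resources, a user interface, communication protocols, and an ML model would be classified as supporting RTM but neither decision-making nor actuation.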

Furthermore, we conducted a comparative analysis of primary publications against these DT components, utilizing the OODA framework. The results of this analysis are presented in Figure 10. Addressing RQ2 (“What literature gaps exist regarding the utilization of DT for enhancing production efficiency and safety assurance of RESs?”), our findings reveal significant gaps. Specifically, 27% of the publications claim real-time monitoring (RTM) capabilities; however, only 7% of publications explicitly mention and include all the tools necessary for effective RTM. Additionally, only 16% of the publications have achieved decision-making capabilities, and a mere 13% involve actuation components. To the best of our knowledge, none of the papers focusing on RESs comprehensively encompass all three components required to realize a CLDT. This highlights significant gaps in the literature concerning P2V and V2P communication in RESs.

By considering the fundamental components required for RTM, such as sensors, computational resources, interfaces, and communication protocols, several opportunities in this area emerge. Some studies concentrate on RTM, while others investigate methods for accomplishing continuous RTM. DTs, which are virtual replicas of real-world systems, rely on RTM to function. They constantly exchange data with the actual system, which enables predictive maintenance. This implies that minor problems can be addressed before they become major issues and cause downtime. Overall, RTM provides a wide range of advantages for RESs. It can improve efficiency, lower risks, expedite design processes, simplify complicated systems, allow for faster adjustments, increase productivity, and lead to better decision-making. It can also improve safety and make the entire system more adaptable and competitive.

However, there are relatively few studies that validate and quantify these perceived advantages over existing procedures and systems. Few publications demonstrate substantial improvements beyond current standards. Notably, the implementation of RTM has been detailed in [25,33,66,72,75,81,82,83]. While many publications leverage sensor networks and IoT devices for RTM, some opt for supervisory control and data acquisition (SCADA) due to its capability to collect real-time data from remote locations and transfer it promptly.

Furthermore, in the literature, decision-making is often referred to as decision support, as seen in [65], where the author proposes the interactive DT (InDT) and utilizes various decision analysis techniques to provide decision support. Decision support and decision-making are interconnected concepts, differing in their focus and purpose. Decision support focuses on providing assistance, information, and tools to enhance decision-making capabilities, while decision-making refers to the actual process of selecting a course of action from available alternatives.

For instance, Zohdi et al. [62] propose decision-making and planning for household energy consumption to improve the efficiency of energy production. The system employs a distributed reinforcement learning (RL) method that runs in the virtual digital world to optimize household appliance schedules before applying the end result to physical assets. The proposed RL-based rescheduling method is validated using synthetic data, yielding corresponding results.

A limited number of publications on RESs explore the implementation of actuation within CLDTs, highlighting the need for a more comprehensive approach. Closing this gap is essential to establish a V2P connection and attain a fully automated DT for RESs. Therefore, emphasizing the V2P connection becomes imperative to realize a closed loop. A holistic platform that integrates actuation, real-time monitoring, and decision-making is essential. This is demonstrated in [64], where the Power System Digital Twin (PSDT) is introduced with a data-driven approach, closed-loop feedback, and real-time interaction; however, this architecture lacks comprehensive evaluation.
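The closed loop discussed above can be pictured as one pass through the observe, orient, decide, and act stages, with the P2V link at the start and the V2P link at the end. The sketch below is a toy illustration under stated assumptions: the function `ooda_step`, the wind-speed value, and the 25 m/s curtailment threshold are all hypothetical and are not taken from any reviewed publication.

```python
from typing import Callable


def ooda_step(observe: Callable[[], float],
              orient: Callable[[float], float],
              decide: Callable[[float], str],
              act: Callable[[str], None]) -> str:
    """Run one observe-orient-decide-act cycle and return the chosen command."""
    measurement = observe()          # P2V: read from the physical asset
    estimate = orient(measurement)   # update the virtual model's view
    command = decide(estimate)       # choose an action in the virtual space
    act(command)                     # V2P: push the action to the actuator
    return command


# Toy wiring: curtail a turbine when the estimated wind speed is too high.
sent = []
command = ooda_step(
    observe=lambda: 27.0,                                # m/s, fake sensor value
    orient=lambda v: round(v, 1),                        # placeholder "model"
    decide=lambda v: "curtail" if v > 25.0 else "run",   # hypothetical threshold
    act=sent.append,                                     # stand-in for an actuator
)
```

Keeping the four stages as separate callables makes it visible which stage a given platform or publication leaves unimplemented; a study that stops after `decide` provides decision support but no V2P connection.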

5.4. The Comparison of Available DT Platforms in the Market against the DT Components Identified Using the OODA Framework

In our study, which focuses on enhancing production efficiency and safety in RESs using DT technology, we compared various DT platforms available on the market, each with its own set of features [84]. Specifically, we conducted a comparative analysis of three prominent DT solutions: AWS TwinMaker, ADT, and Ditto. These platforms offer distinct features and functionalities, ranging from real-time monitoring and predictive analytics capabilities to data storage and security measures. By conducting this comparison, we aimed to provide RES operators with valuable insights into the strengths and weaknesses of different DT platforms. This information can guide their decision-making process when selecting the platform that best suits their needs and goals.

AWS TwinMaker and ADT are prominent offerings from leading cloud providers Amazon Web Services (AWS) and Microsoft, respectively, in the competitive cloud computing market [84,85,86]. They offer pre-built platforms with features catering to a broad range of DT use cases. Ditto is an open-source framework gaining traction. Its open-source nature allows for customization and flexibility, appealing to users who require specific functionalities beyond what pre-built platforms offer [87]. Ditto stands out among open-source DT platforms due to its unmatched flexibility [88]. The open-source nature allows deep customization and integration with existing tools, while strong security features ensure data protection [87]. While requiring more development expertise compared to user-friendly options, Ditto’s active community, scalability, and cost-effectiveness make it a compelling choice for projects prioritizing customization and control over their DT development [87,88].

Table 6 presents a comparison among the three mentioned platforms regarding their real-time monitoring capabilities in the context of DT and highlights the features they support and those they do not support. Similarly, Table 7, Table 8, Table 9, and Table 10 compare the platforms regarding their support for protocols, decision support, decision-making, and actuation, again highlighting both supported and unsupported features.

Table 6.

Comparison of DT platforms against real-time monitoring.

| Platforms | Supported | Not Supported |
|---|---|---|
| AWS TwinMaker | Amazon Kinesis Data Streams/Analytics for real-time data stream processing. AWS TwinMaker provides alerts based on data visualization to determine when data or forecasts fall outside expectations [89]. Devices that are not directly connected to the Internet access AWS IoT Core via a physical hub. | Primarily designed for online operation: real-time data are required for visualization and other functionalities, so support for offline operation is limited [90]. Devices on a private IP network can reach AWS IoT Core through a physical hub acting as an intermediary, but devices using non-IP radio protocols such as ZigBee or Bluetooth LE are not directly supported by AWS IoT Core and also need a physical hub between them and AWS IoT Core for communication and security. |
| ADT | Comprehensive monitoring with dashboards and Azure services such as Monitor, Logic Apps, and Time Series Insights, providing real-time alerts about events or anomalies [91]. The platform also enables 3D visualization of the CPS and its physical space. | Large enterprises might not find ADT suitable due to limitations in handling high traffic and potential beta-version issues. |
| Ditto | Eclipse Vorto is a platform aiming to define twin specifications and automatically generate code (e.g., based on the Ditto framework). It makes use of information models that are assembled from abstract and technology-agnostic function blocks [90]. In addition to the persistent mode, Ditto has a ‘live’ channel which lets an application communicate directly with a device. Using a live channel, Ditto acts as a router, forwarding requests via the device connectivity layer to the actual devices. This channel can also be used to invoke operations (e.g., “turn the light on now”) on the device and accept a response from it. Ditto search services could be used by an application that wants to create a dashboard showing the real-time data of a fleet of devices. Ditto allows for the mapping of different device data into a consistent, lightweight JSON model, which lets it provide a consistent interface for a heterogeneous set of devices [90]. | N/A |
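The idea of mapping heterogeneous device data into one lightweight JSON model can be sketched as follows. The output follows the general shape of a Ditto thing (a `thingId` of the form `namespace:name` and a `features` object whose entries hold `properties`), but the helper `to_thing`, the namespace, and the device and reading names are hypothetical, and the code does not call Ditto itself.

```python
import json


def to_thing(namespace, device_id, readings):
    """Map raw readings to a Ditto-style thing with one feature per reading."""
    return {
        "thingId": f"{namespace}:{device_id}",
        "features": {
            name: {"properties": {"value": value}}
            for name, value in readings.items()
        },
    }


# Two devices with different native payloads end up in the same shape.
inverter = to_thing("org.example", "inverter-1", {"power_kw": 4.2})
anemometer = to_thing("org.example", "anemo-7", {"wind_speed_ms": 11.5})
print(json.dumps(inverter, indent=2))
```

Because both twins share the same top-level structure, a dashboard or search query can treat a PV inverter and a wind sensor uniformly, which is the property the table attributes to Ditto.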

All three platforms provide core functionalities for building and managing DTs, including data modeling, connectivity with devices and sensors, and data visualization. However, they differentiate themselves in various aspects: AWS TwinMaker emphasizes graphical user interface (GUI) and ease of use, making it attractive for users with less technical expertise [86]. ADT focuses on integration with Azure services and offers features like state synchronization and edge deployment [86]. These features are valuable for scenarios requiring real-time data and distributed processing. Ditto prioritizes flexibility and an open-source approach, allowing for customization and integration with diverse tools and platforms. This caters to users who need tailored solutions beyond the limitations of pre-built platforms [88,90]. Additionally, AWS TwinMaker targets businesses of all sizes, focusing on ease of use and rapid deployment [85]. ADT primarily targets enterprises already invested in the Azure ecosystem, leveraging existing infrastructure and services [86]. Ditto appeals to a broad range of users, including individual developers, research institutions, and companies seeking a customizable and open-source solution. Therefore, comparing these three platforms provides a comprehensive overview of different approaches to DT development, catering to various needs and technical expertise levels.

Table 7.

Comparison of DT platforms against protocols.

| Platforms | Supported | Not Supported |
|---|---|---|
| AWS TwinMaker | MQTT ensures real-time transmission of data streams [89]. Other protocols supported include HTTP, WebSockets, and LoRaWAN. | AWS IoT does not support the following packets for MQTT 3: PUBREC, PUBREL, and PUBCOMP. These packets are part of the QoS 2 (assured delivery) mechanism in MQTT, which involves multiple message exchanges for confirmation; by omitting them, AWS IoT simplifies its implementation and potentially improves overall efficiency. AWS IoT does not support the following packets for MQTT 5: PUBREC, PUBREL, PUBCOMP, and AUTH. AWS IoT does not support MQTT 5 server redirection. While AWS IoT does not support QoS 2 through these packets, it offers alternative mechanisms for ensuring message delivery with varying levels of reliability: the shadow service and retries [92]. |
| ADT | IoT Hub supports protocols such as MQTT, AMQP, and HTTPS for device communication [93]. If the device does not support one of these protocols, it is possible to adapt both incoming and outgoing traffic using Azure IoT Protocol Gateway. | IoT Hub has limited feature support for MQTT. If the solution needs MQTT v3.1.1 or v5 support, MQTT support in Azure Event Grid is recommended. |
| Ditto | AMQP 0.9.1, AMQP 1.0, Apache Kafka 2.x, HTTP (invoking external webhooks), MQTT 3.1.1, MQTT 5 [87,94] | It does not define or implement an IoT protocol for communicating with devices, i.e., Ditto itself does not handle the specifics of how data are exchanged between devices and the platform. By not defining its own protocol, Ditto maintains protocol agnosticism, which allows it to integrate with diverse devices that use communication protocols such as MQTT, CoAP, or manufacturer-specific proprietary protocols. |

ADT is a platform-as-a-service (PaaS) that provides enterprise-grade solutions for IoT connectivity [93]. It allows the modeling of assets, systems, or entire environments, keeping DTs live and up-to-date through Azure IoT [84]. Azure Synapse Analytics tracks the history of DTs, extracting insights to predict future states. The Azure OpenAI and Azure ML platforms support the development of autonomous systems that continuously learn and enhance their capabilities [84]. Microsoft Mesh enables presence and shared experiences from any location on any device.

Comprehensive monitoring with dashboards and Azure services such as Monitor, Logic Apps, and Time Series Insights facilitates real-time alerts about events or anomalies [91]. The platform also enables 3D visualization of the CPS and its physical space. IoT Hub supports protocols such as message queuing telemetry transport (MQTT), advanced message queuing protocol (AMQP), and hypertext transfer protocol secure (HTTPS) for device communication [93]. If the device does not support one of these protocols, it is possible to adapt both incoming and outgoing traffic using Azure IoT Protocol Gateway [95]. Azure IoT Edge extends the capabilities of Azure IoT Hub by allowing the processing and analysis of data at the edge devices themselves. This is beneficial for scenarios requiring low latency, offline capabilities, or reduced cloud dependency [91]. Data analysis is provided using various services such as Azure Time Series Insights, Stream Analytics, ML, Cognitive Services, advanced analytics, and algorithms [91,95].

Table 8.

Comparison of DT platforms against decision-support.

| Platforms | Supported | Not Supported |
|---|---|---|
| AWS TwinMaker | Amazon Athena and Amazon QuickSight are used for data analysis. AWS TwinMaker also supports the importation of multi-source and heterogeneous data [89,96]. | Consistent rendering performance in the AWS TwinMaker scene (which provides a visual context for the data connected to the service) is not supported: rendering is hardware-dependent, so performance varies across different computer hardware configurations [90]. |
| ADT | Azure IoT Edge extends the capabilities of Azure IoT Hub by allowing the processing and analysis of data at the edge devices themselves. This is beneficial for scenarios requiring low latency, offline capabilities, or reduced cloud dependency [91]. | The ADT platform does not directly support message routing or a rules engine. Integration with Azure IoT Hub or Azure IoT Central is recommended. |
| Ditto | Provides web-based simulation capabilities. Ditto structures the data sent by devices via Hono into digital IoT twins [94]. It offers APIs to deal with Things, Features, Policies, Things-Search, Messages, and CloudEvents. There is also the option to use the Ditto Protocol [97]. Ditto saves the most recent values of a device in a database, which allows a DT to query the last reported value of a device. A DT can also request to be notified when the value changes. Conversely, devices can be notified when an application wants to change something in the device. | The integration of simulations or behavioral models is not directly supported and requires external tools or programming, potentially adding complexity [97]. |

Table 9.

Comparison of DT platforms against decision-making.

| Platforms | Supported | Not Supported |
|---|---|---|
| AWS TwinMaker | ML models and AI services offered by AWS, such as Amazon SageMaker, allow the development of predictive models. AWS TwinMaker may be seamlessly integrated with Lambda and SageMaker, which offer additional data processing and decision-making capabilities [89]. The Rules Engine enables continuous processing of inbound data from devices connected to AWS IoT Core. One can configure rules in the Rules Engine in an intuitive, SQL-like syntax to automatically filter and transform inbound data. | It primarily focuses on visualization and monitoring, offering limited support and features for automated decision-making based on twin data [91]. Additionally, it does not support a fully integrated simulation engine. (Implementing features like automated decision-making based on DTs and integrated simulation engines can introduce significant complexity to the platform. This can be achieved by developing custom decision-making logic or integrating third-party solutions tailored according to needs.) |
| ADT | Pre-defined conditions and Azure Logic Apps integration: integrate AI and ML models to analyze data and generate insights for informed decision-making. Responsible AI dashboard: the dashboard offers a holistic assessment and debugging of models so one can make informed data-driven decisions. | N/A |
| Ditto | Pre-defined rules and custom integrations: decisions can be made through integration with external tools or a rule engine. | N/A |
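
The SQL-like rule syntax mentioned for the AWS Rules Engine can be illustrated with a small local emulation. This is a minimal sketch, not AWS code: the topic `turbines/+/telemetry`, the fields `deviceId` and `temperature`, the threshold of 80, and the helper functions are all hypothetical, and the Python functions only mimic the filter-and-transform behavior that such a rule would express.

```python
# Statement in the general shape of an IoT rule (SELECT ... FROM topic WHERE ...).
# The topic filter and field names are hypothetical, chosen for illustration.
RULE_SQL = (
    "SELECT deviceId, temperature "
    "FROM 'turbines/+/telemetry' "
    "WHERE temperature > 80"
)


def topic_matches(topic_filter, topic):
    """Match an MQTT-style filter where '+' stands for exactly one topic level."""
    filter_levels = topic_filter.split("/")
    topic_levels = topic.split("/")
    if len(filter_levels) != len(topic_levels):
        return False
    return all(f in ("+", t) for f, t in zip(filter_levels, topic_levels))


def apply_rule(topic, payload):
    """Emulate the rule locally: filter by topic and condition, then project."""
    if not topic_matches("turbines/+/telemetry", topic):
        return None  # message is on a topic the rule does not listen to
    if payload.get("temperature", float("-inf")) <= 80:
        return None  # WHERE clause not satisfied
    # Keep only the selected fields; fields absent from the payload are omitted.
    return {key: payload[key] for key in ("deviceId", "temperature") if key in payload}


hot = apply_rule("turbines/t1/telemetry", {"deviceId": "t1", "temperature": 91.5, "rpm": 12})
cool = apply_rule("turbines/t1/telemetry", {"deviceId": "t1", "temperature": 40})
```

Here `hot` keeps only the two selected fields, while `cool` is dropped by the condition. In a real deployment, the matching and projection would be performed by the managed service rather than by application code.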

Table 10. Comparison of DT platforms against actuation.

| Platforms | Supported | Not Supported |
|---|---|---|
| AWS TwinMaker | AWS OpsWorks is a configuration management service that provides managed instances of Chef and Puppet. Chef and Puppet are automation platforms that allow one to use code to automate the configurations of servers [98]. OpsWorks lets one use Chef and Puppet to automate how servers are configured, deployed, and managed across Amazon Elastic Compute Cloud (EC2) instances or on-premises compute environments. OpsWorks has three offerings: AWS OpsWorks for Chef Automate, AWS OpsWorks for Puppet Enterprise, and AWS OpsWorks Stacks. Puppet offers a set of tools for enforcing the desired state of infrastructure and automating on-demand tasks [98]. | N/A |
| ADT | Azure Automation: delivers cloud-based automation, operating system updates, and configuration services that support consistent management across Azure and non-Azure environments. It includes process automation, configuration management, update management, shared capabilities, and heterogeneous features [99]. | N/A |
| Ditto | Eclipse Arrowhead is an open-source framework for industrial automation based on service-oriented principles. It allows the creation of a highly flexible System of Systems (SoS) by defining local clouds for connecting application systems running on industrial cyber–physical systems (ICPSs) [87]. | N/A |

Table 11 compares the DT platforms with respect to DT modeling, highlighting both the features they support and those they do not. ADT uses the Digital Twins Definition Language (DTDL), which lets users define models in their own vocabulary, inherit from or interact with existing models, and thereby draw a graph of DTs [85]. DTDL is based on the JavaScript Object Notation (JSON) format and is accompanied by a comprehensive set of application programming interfaces (APIs) and tools [100]. A model can be defined from scratch or inherit from another model, and it is identified by a name, an ID, and other properties. A DT model can have relationships with other models to exchange data, attach other models as components, and define commands with requests and responses [85,99].
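
To make the DTDL description concrete, the following is a minimal sketch of two DTDL-style models built as Python dictionaries and serialized to JSON. It assumes DTDL version 2 conventions (`@context`, `@id`, `@type`, `extends`, `contents`); every identifier of the form `dtmi:example:...`, the property and telemetry names, and the two helper functions are hypothetical and are not taken from the reviewed works. The helper that resolves inheritance handles only a single parent given as a string.

```python
import json

# Hypothetical base model: an ID, a display name, and one property.
energy_asset = {
    "@context": "dtmi:dtdl:context;2",
    "@id": "dtmi:example:EnergyAsset;1",
    "@type": "Interface",
    "displayName": "Energy asset",
    "contents": [
        {"@type": "Property", "name": "ratedPowerKw", "schema": "double"},
    ],
}

# Hypothetical model that inherits from the base model via "extends" and adds
# telemetry, a relationship to another model, and a command.
wind_turbine = {
    "@context": "dtmi:dtdl:context;2",
    "@id": "dtmi:example:WindTurbine;1",
    "@type": "Interface",
    "displayName": "Wind turbine",
    "extends": "dtmi:example:EnergyAsset;1",
    "contents": [
        {"@type": "Telemetry", "name": "windSpeed", "schema": "double"},
        {"@type": "Relationship", "name": "feeds", "target": "dtmi:example:Substation;1"},
        {"@type": "Command", "name": "shutdown"},
    ],
}


def content_names(model, kind):
    """Return the names of all contents of a given DTDL type in one model."""
    return [c["name"] for c in model["contents"] if c["@type"] == kind]


def inherited_properties(model, registry):
    """Collect Property names from a model and, recursively, its single parent."""
    names = content_names(model, "Property")
    parent_id = model.get("extends")
    if parent_id is not None:
        names = inherited_properties(registry[parent_id], registry) + names
    return names


registry = {m["@id"]: m for m in (energy_asset, wind_turbine)}
dtdl_document = json.dumps([energy_asset, wind_turbine], indent=2)
```

The resulting `dtdl_document` string is plain JSON, which reflects why a JSON-based definition language is convenient for exchanging models between tools. Whether a given platform accepts exactly this document depends on its validation rules, which are not checked here.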

Use cases for DTs built with ADT span various domains, such as predictive maintenance, smart buildings, smart cities, energy, agriculture, manufacturing, and asset tracking and management [85].

AWS TwinMaker, on the other hand, is a graph-based twin virtualization platform designed for the quick creation of DTs [84]. The platform offers efficient tools to generate virtual representations of existing physical systems, integrating real-world data for faster monitoring operations [86]. For authentication, users can choose two-factor authentication via Amazon Cognito or integrate with an existing LDAP setup. Users can also gain in-depth insights into their DTs using Amazon QuickSight [84]. Amazon Kinesis Data Streams and Kinesis Data Analytics facilitate real-time processing of data streams. AWS TwinMaker offers alerts based on data visualization to detect deviations from expected data or forecasts [89,92]. Devices that are not directly connected to the Internet can access AWS IoT Core through a physical hub [92]. AWS provides ML models and AI services, such as Amazon SageMaker, that enable the development of predictive models, and integration with Lambda and SageMaker enhances data processing and decision-making capabilities [89].

The Rules Engine in AWS enables continuous processing of inbound device data, allowing intuitive filtering and transformation using an SQL-like syntax [92]. AWS OpsWorks offers configuration management services, automating server configurations across instances with tools like Chef and Puppet [98]. AWS TwinMaker enables DT model specification using knowledge graphs. To model the physical environment, one creates entities in AWS TwinMaker that are virtual representations of physical systems, such as a furnace or an assembly line. Custom relationships between these entities can be specified to accurately represent the real-world deployment of the systems, and the entities can then be connected to various data stores to form a DT graph, that is, a knowledge graph that structures and organizes information about the DT for easier access and understanding. As the model of the physical environment is built out, AWS TwinMaker automatically creates and updates the DT graph by organizing the relationship information in a graph database [95]. Use cases for DTs with AWS TwinMaker include predictive maintenance, remote monitoring of infrastructure, energy optimization, and real-time tracking of assets and supply chains [85].
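
The idea of a DT graph built from entities, custom relationships, and data-store links can be sketched with a toy in-memory structure. This is an illustrative sketch only and does not use or reproduce the AWS TwinMaker API: the `TwinGraph` class, the entity identifiers, the `contains` relationship, and the data-store labels are all hypothetical.

```python
class TwinGraph:
    """Toy in-memory entity graph; a stand-in for a managed graph database."""

    def __init__(self):
        self.entities = {}        # entity id -> attributes (e.g. linked data store)
        self.relationships = {}   # source id -> list of (relationship name, target id)

    def add_entity(self, entity_id, **attributes):
        """Register a virtual representation of a physical system."""
        self.entities[entity_id] = attributes

    def relate(self, source_id, name, target_id):
        """Add a named, directed relationship between two existing entities."""
        if source_id not in self.entities or target_id not in self.entities:
            raise KeyError("both entities must exist before relating them")
        self.relationships.setdefault(source_id, []).append((name, target_id))

    def related(self, source_id, name):
        """Return the ids reachable from source_id over one relationship name."""
        return [t for n, t in self.relationships.get(source_id, []) if n == name]


graph = TwinGraph()
graph.add_entity("assembly_line_1", data_store="timeseries-db")
graph.add_entity("furnace_1", data_store="timeseries-db")
graph.add_entity("furnace_2", data_store="maintenance-db")
graph.relate("assembly_line_1", "contains", "furnace_1")
graph.relate("assembly_line_1", "contains", "furnace_2")

furnaces = graph.related("assembly_line_1", "contains")
```

Querying `graph.related("assembly_line_1", "contains")` returns the two furnaces in insertion order, which is the kind of structural question a DT graph is meant to answer. A production system would delegate storage and traversal to the platform's graph database.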

The Eclipse Foundation, which operates as a global platform for open innovation and collaboration, offers Ditto, an open-source framework for IoT and DT solutions [84,86]. Ditto assists businesses in building DTs of internet-connected assets. As an IoT middleware, it integrates into existing backend systems using supported protocols. It provides web APIs that simplify workloads, microservices with a data store, static metadata management, and a JSON-based text protocol for communication [86].
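
The JSON-based representation and protocol described for Ditto can be sketched as follows. The dictionaries follow the general layout of a Ditto Thing (attributes and features with properties) and of a Ditto Protocol modify command (topic, headers, path, value), but the namespace `org.example`, the thing name, the feature `rotor`, and all values are hypothetical. The `apply_modify` function is a local stand-in written for this illustration, not part of Ditto; it only mimics storing the most recent value and notifying listeners when it changes.

```python
# A Thing in Ditto-style JSON layout; all names and values are hypothetical.
thing = {
    "thingId": "org.example:turbine-1",
    "policyId": "org.example:turbine-policy",
    "attributes": {"location": "site-a"},
    "features": {"rotor": {"properties": {"rpm": 12.0}}},
}

# A Ditto Protocol style command that modifies one feature property of the twin.
modify_message = {
    "topic": "org.example/turbine-1/things/twin/commands/modify",
    "headers": {"correlation-id": "demo-1"},
    "path": "/features/rotor/properties/rpm",
    "value": 14.5,
}


def apply_modify(twin, message, listeners=()):
    """Store the latest value at message['path'] and notify listeners on change."""
    keys = message["path"].strip("/").split("/")
    node = twin
    for key in keys[:-1]:
        node = node.setdefault(key, {})  # create intermediate objects if missing
    old_value = node.get(keys[-1])
    node[keys[-1]] = message["value"]
    if old_value != message["value"]:
        for listener in listeners:
            listener(message["path"], old_value, message["value"])


changes = []


def record_change(path, old, new):
    """Example listener that records each change notification."""
    changes.append((path, old, new))


apply_modify(thing, modify_message, listeners=[record_change])
latest_rpm = thing["features"]["rotor"]["properties"]["rpm"]
```

After the call, `latest_rpm` holds the last reported value and `changes` contains one notification. Applying the same message again would leave the value unchanged and trigger no further notification, which mirrors the query-last-value and notify-on-change behavior described for the platform.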

Table 12 illustrates the additional functionalities of DT platforms, categorized under various aspects such as storage, security, privacy, and more.

To summarize, there is a variety of IoT DT solutions available in the market, each possessing unique capabilities. Nevertheless, making a well-informed decision necessitates a comprehensive understanding of the distinctive features provided by each platform.