Introduction

Cognitive and neuropsychological studies have shown that the human face is a very special kind of stimulus for functions such as perception, recognition, adaptation, social interaction and non-verbal communication. Despite their diversity due to social tags and cultural norms, facial expressions conveying happiness, sadness, anger, fear, disgust, and surprise are considered universal. During face observation, the observer gains some insight into the mental and emotional state of the observed person. In this respect, the cues obtained from faces are quite functional in regulating human relationships. The processing of face-related information is fast and automatic and is associated with certain areas of the brain such as the fusiform face area (Nasr & Tootell, 2012).

Many studies with adults have shown that females identify emotional facial expressions more accurately than males (Hall and Matsumoto, 2004; cf. Vassalo, Cooper and Douglas, 2009).

Although emotional facial expressions are recognized, localized, and fixated more quickly and accurately than neutral ones (Calvo, Avero and Lundqvist, 2006), some studies have indicated that males and females are sensitive to different kinds of emotional expressions. For example, Goos and Silverman (2002) have shown that females are more sensitive than males to angry and sad facial expressions. The finding that females recognize negative emotions more rapidly is perhaps better explained from an evolutionary perspective than by general learning principles. On this account, as females are responsible for rearing, caring for, and protecting their children, they have developed the skills needed to protect their offspring from danger (Hampson, van Anders and Mullin, 2006). However, other work has reported no gender differences in the speed and accuracy of recognition of emotional facial expressions (Grimshaw, Bulman-Fleming and Ngo, 2004).

Studies using real human faces have shown contradictory results. For example, Fox and Damjanovic (2006) found that the eyes provided a key signal of threat and that sad facial expressions mediate the search advantage for threat-related facial expressions. Juth, Lundqvist, Karlsson and Ohman (2005) found that happy faces were recognized more quickly than angry and fearful ones. The authors speculated that this quick recognition might be due to the ease of processing happy faces. On the other hand, Calvo, Avero and Lundqvist (2006) asserted that angry faces were superior to the others. While some studies show an advantage for angry facial expressions (in accuracy, response time, fixation, etc.; e.g., Calvo, Avero and Lundqvist, 2006), others have found that angry and happy faces are superior to sad or scared expressions (Williams, Moss, Bradshaw and Mattingley, 2005).

In exploring observation behaviors, researchers apply various techniques to elicit information. One frequently used technique is Bubbles, which examines categorization and recognition performance by presenting sparse samples of a stimulus in order to determine the diagnostic visual information (Gosselin and Schyns, 2001). The Bubbles technique is used in face recognition and categorization studies (Smith, Cottrell, Gosselin and Schyns, 2005; Humphreys, Gosselin, Schyns and Johnson, 2006; Vinette, Gosselin and Schyns, 2004). Another approach is to use eye tracking with eye movement metrics.

Previous studies have investigated eye tracking patterns under a given task or instruction (emotional rating, identifying the emotional valence category, defining the emotion). Those studies indicated a high categorization success rate for happy faces but not for scared faces (Calvo and Nummenmaa, 2009). On the other hand, only a limited number of studies have examined gender differences in eye tracking of emotional facial expressions without a task or instruction. Yet it is critically important to identify which area(s) of the face are fixated while looking at a face in order to understand the underlying mechanism of non-verbal communication.

It is claimed that some specific facial areas provide more important cues for identifying and coding certain emotional facial expressions. Eisenbarth and Alpers (2011) found that, regardless of the emotion indicated by the facial expression, the first fixation is directed to the eyes and the mouth. When the facial expression was sad, fixation was on the eyes; when it was angry, fixation was on the mouth. For happy facial expressions, fixation was on the mouth, while for scared and neutral facial expressions fixation was distributed equally between the eyes and the mouth. As these researchers showed, the eyes were an important predictor for identifying sadness and the mouth was an important predictor for identifying happiness. In another study, in which participants were asked not just to look passively at the expressions but to actively indicate the valence of the emotion, participants’ eye movements were directed towards the areas specific to the emotion, that is, “the smiling mouth” or “the sad eyes” (Calvo and Nummenmaa, 2009).

Previous studies indicated no gender differences when participants are asked to identify the type of emotional expression, to rate the emotional valence of the stimuli, or only to look at the stimuli, in situations where the stimulus is presented for less than 10 seconds (Kirouac and Dore, 1985).

With respect to emotional face processing and hemispheric lateralization, such research findings point mainly to two lateralization hypotheses: (1) the hemispheric lateralization hypothesis (HLH) and (2) the valence-specific lateralization hypothesis (VSLH). According to the hemispheric lateralization hypothesis, the right hemisphere is more specialized in processing emotions than the left one. Studies both supporting (Bourne and Maxwell, 2010) and contradicting (Fusar-Poli et al., 2009) the HLH exist in the literature. On the other hand, the VSLH posits that the left and right hemispheres are each specialized in processing different kinds of emotions, with the left hemisphere specialized mainly in processing positive emotions and the right hemisphere specialized in processing negative emotions (Jansari, Rodway and Goncalves, 2011).

Despite the numerous eye tracking studies on the recognition of facial expressions, conflicting results emerge. The aim of this study is to shed some light on this issue by taking into account a set of variables that may be responsible for prior conflicting results. Specifically, the focus was on the observer’s gender and the age of the model, as these variables have been related to different lateralization processes.

Method

Participants

The participants were forty volunteer undergraduate students (20 females, Mage = 20.25, SD = 0.64; 20 males, Mage = 21.60, SD = 1.50) aged 19 to 27 years. All participants (92.5% right-handed) had normal or corrected-to-normal vision and were allowed to wear their glasses or contact lenses if required. All participants gave written informed consent.

Picture Battery

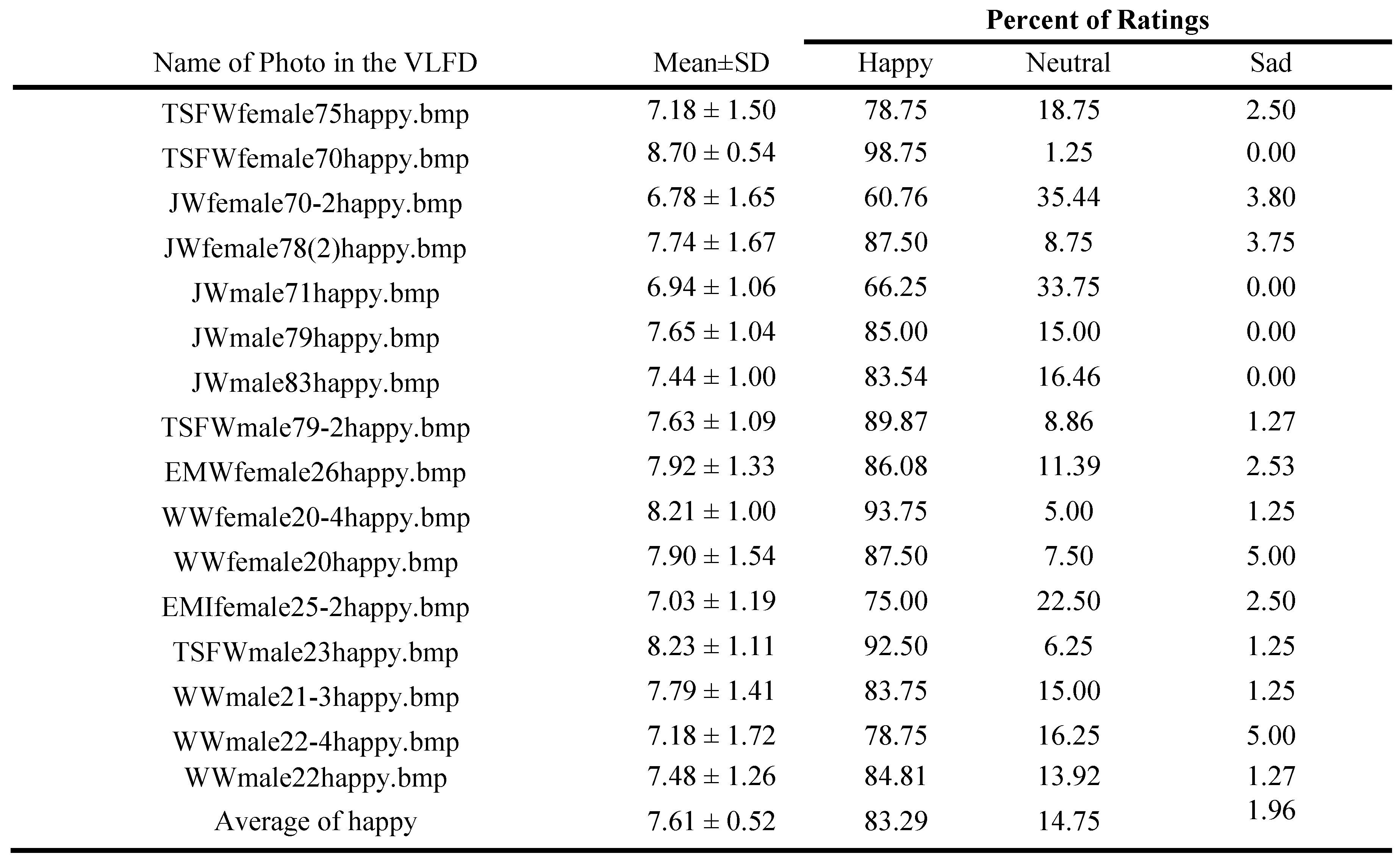

Forty-eight digital, color, static photographs of real faces with happy (16 pictures), neutral (16 pictures) and sad (16 pictures) emotional expressions were selected from the Vital Longevity Face Database (VLFD) (Minear and Park, 2004) and used in the study. The stimulus battery comprised pictures of 24 female and 24 male models, 24 of them young (aged 19 to 27) and 24 elderly (aged 65 to 84), across the three emotions. To determine whether the emotional expressions are perceived similarly in Turkish culture, a pilot study was conducted with 49 randomly selected photos from the VLFD.

For this purpose, 80 volunteer students (50 females, Mage = 21.30, SD = 1.34; 30 males, Mage = 21.87, SD = 1.11) who did not take part in the main study rated the emotional valence of the faces on a 9-point Likert scale (sad: 1, neutral: 5, happy: 9). The photos were presented to the students in sequence within one session using a projector, and responses were recorded on a recording sheet.

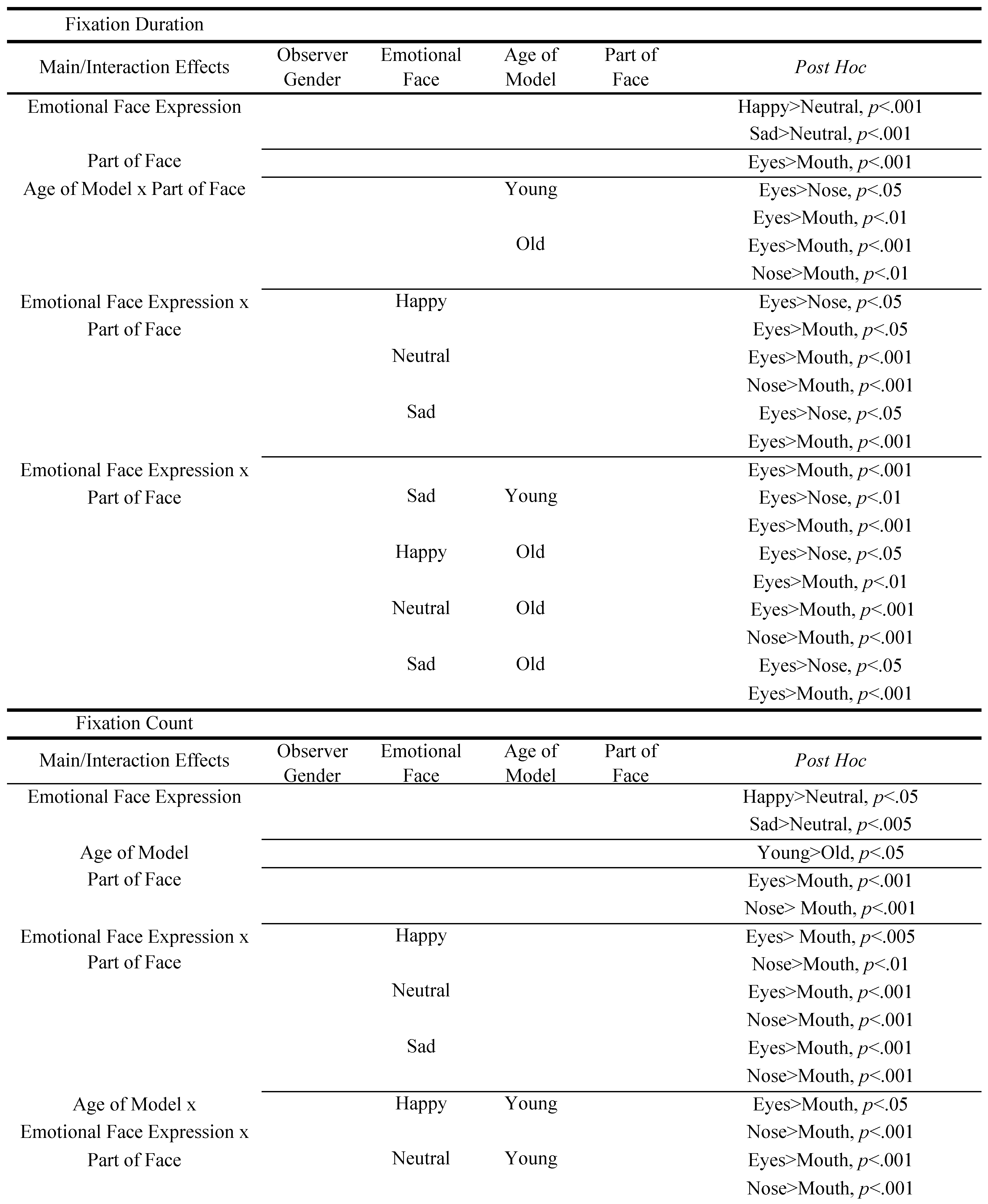

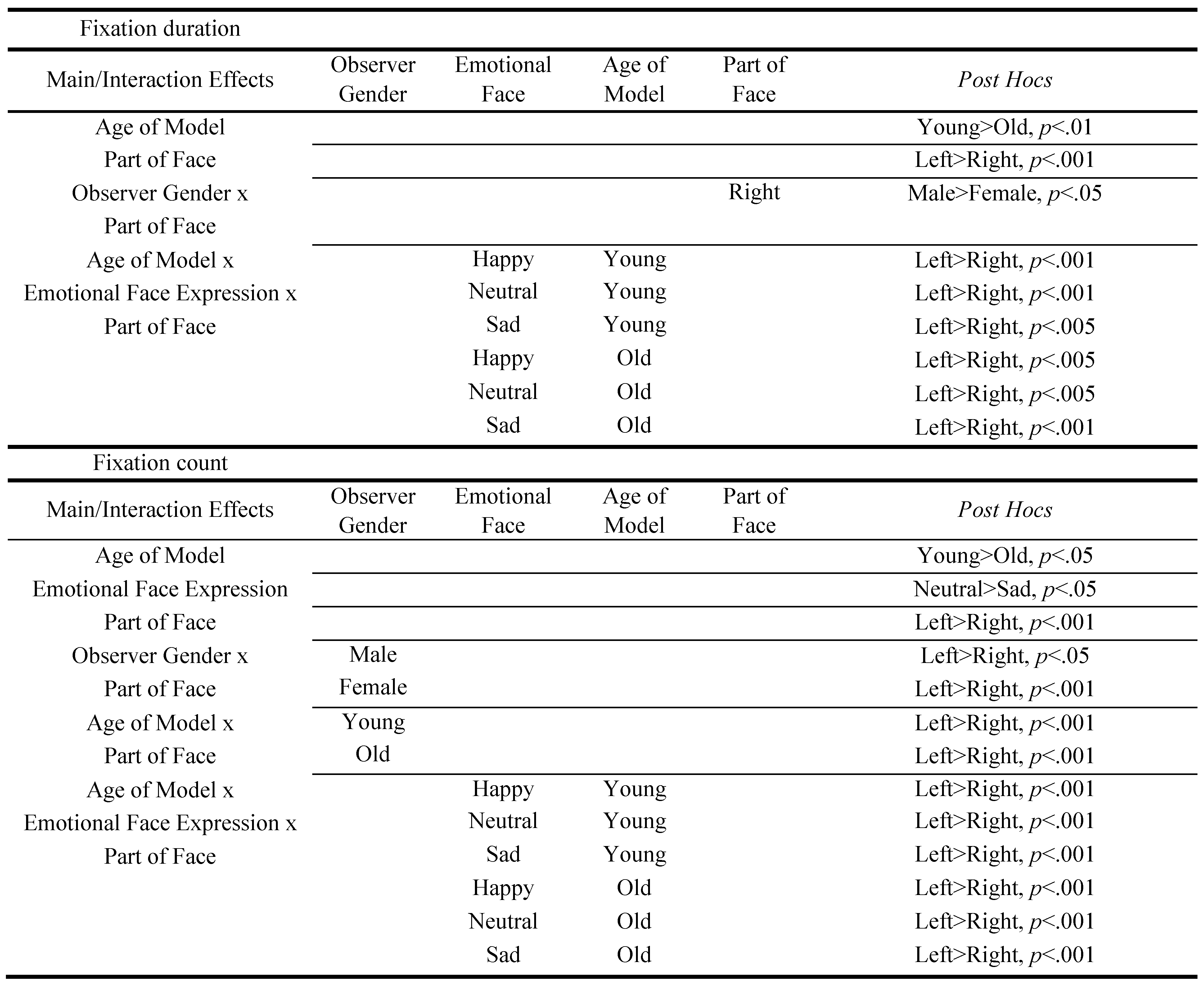

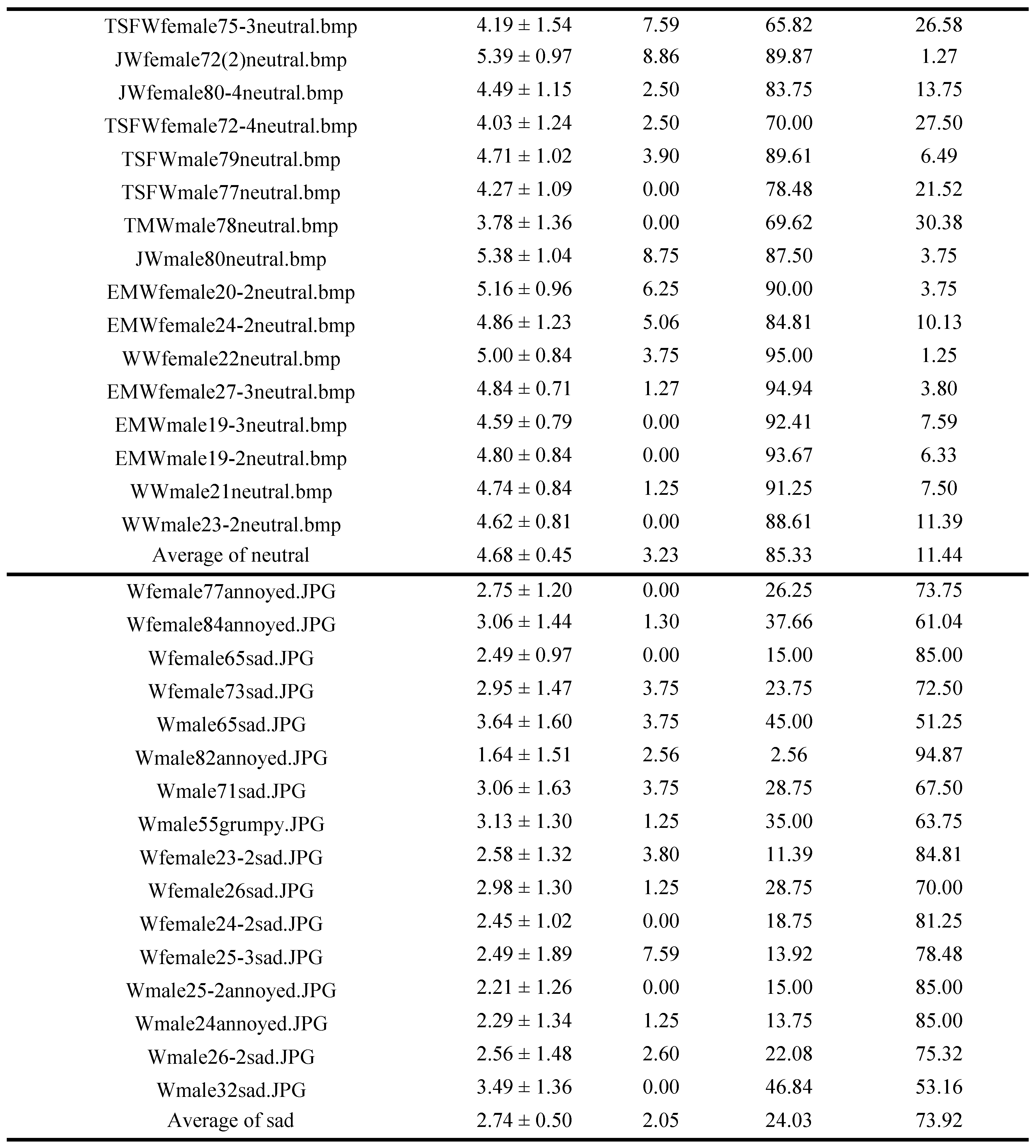

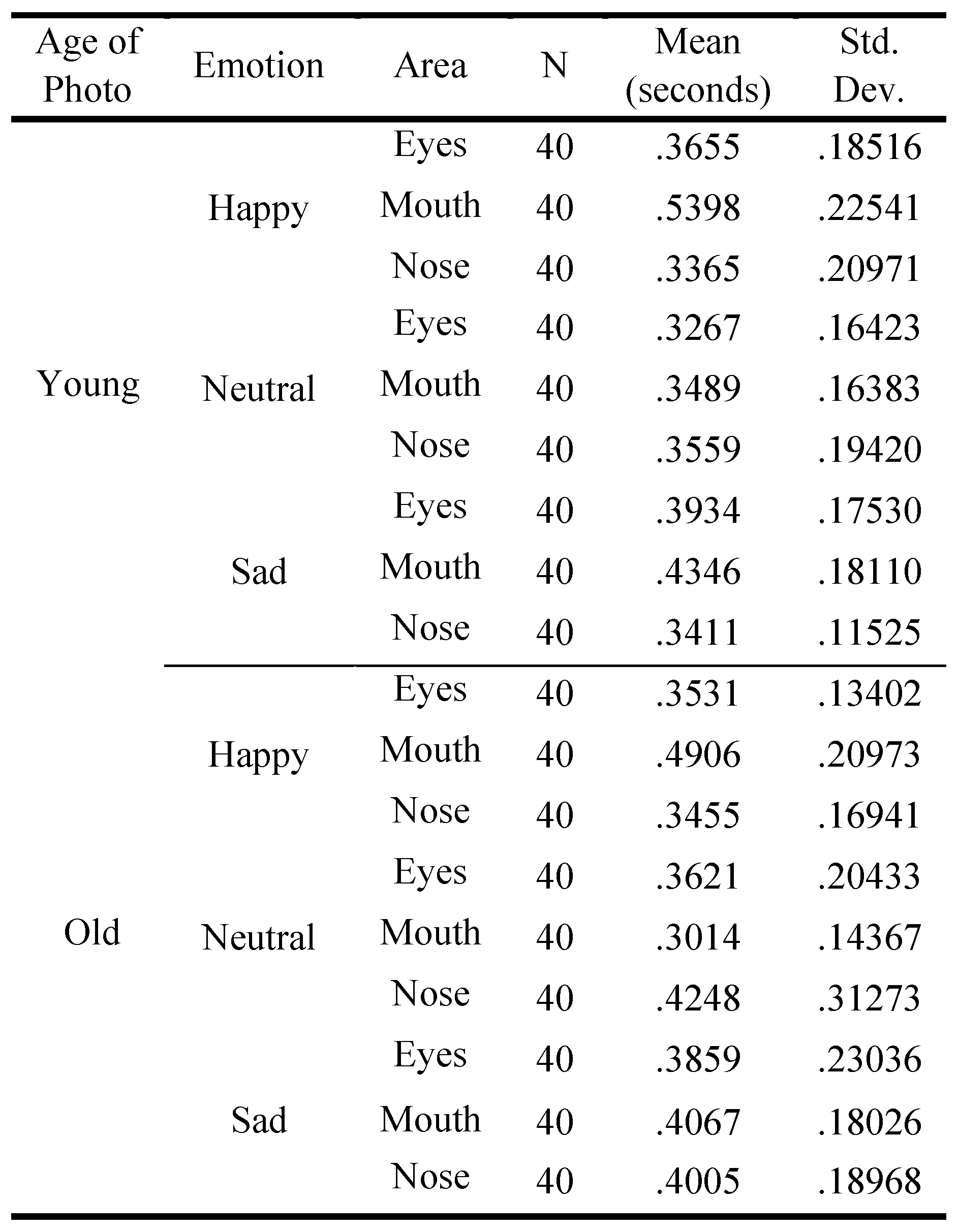

The pilot study revealed that the face pictures representing the three emotion conditions (happy, sad, neutral) in American culture were rated accordingly and classified into the same emotion categories by the 80 Turkish students (happy, 7.61±0.52; sad, 2.74±0.50; neutral, 4.48±0.45) (Table 1). In addition, average picture ratings and percentages on the 9-point Likert scale were calculated by grouping the scale points into happy, sad and neutral categories (happy: 9, 8, 7; sad: 3, 2, 1; neutral: 6, 5, 4). Selected photos were rated 83.29% as happy, 73.92% as sad, and 85.33% as neutral. These findings showed that the emotional values of the photos used in the study were valid for Turkish culture as well.
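The grouping of scale points into categories described above can be illustrated with a minimal Python sketch. The function names and the example ratings are hypothetical and serve only to illustrate the mapping (7 to 9 as happy, 4 to 6 as neutral, 1 to 3 as sad); they are not the pilot data or the authors' analysis script.

```python
from collections import Counter

def categorize(rating: int) -> str:
    """Map a 9-point Likert rating to the category used in the pilot study."""
    if not 1 <= rating <= 9:
        raise ValueError("rating must be between 1 and 9")
    if rating >= 7:
        return "happy"
    if rating <= 3:
        return "sad"
    return "neutral"

def category_percentages(ratings):
    """Return the percentage of ratings that fall into each category."""
    counts = Counter(categorize(r) for r in ratings)
    total = len(ratings)
    return {c: 100.0 * counts.get(c, 0) / total
            for c in ("happy", "neutral", "sad")}

# Hypothetical ratings given to one happy photo by ten raters.
example_ratings = [9, 8, 7, 7, 6, 8, 9, 5, 7, 8]
print(category_percentages(example_ratings))
```

With these made-up ratings, eight of the ten fall in the 7 to 9 band, so the photo would count as rated happy in 80% of cases.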

Procedure

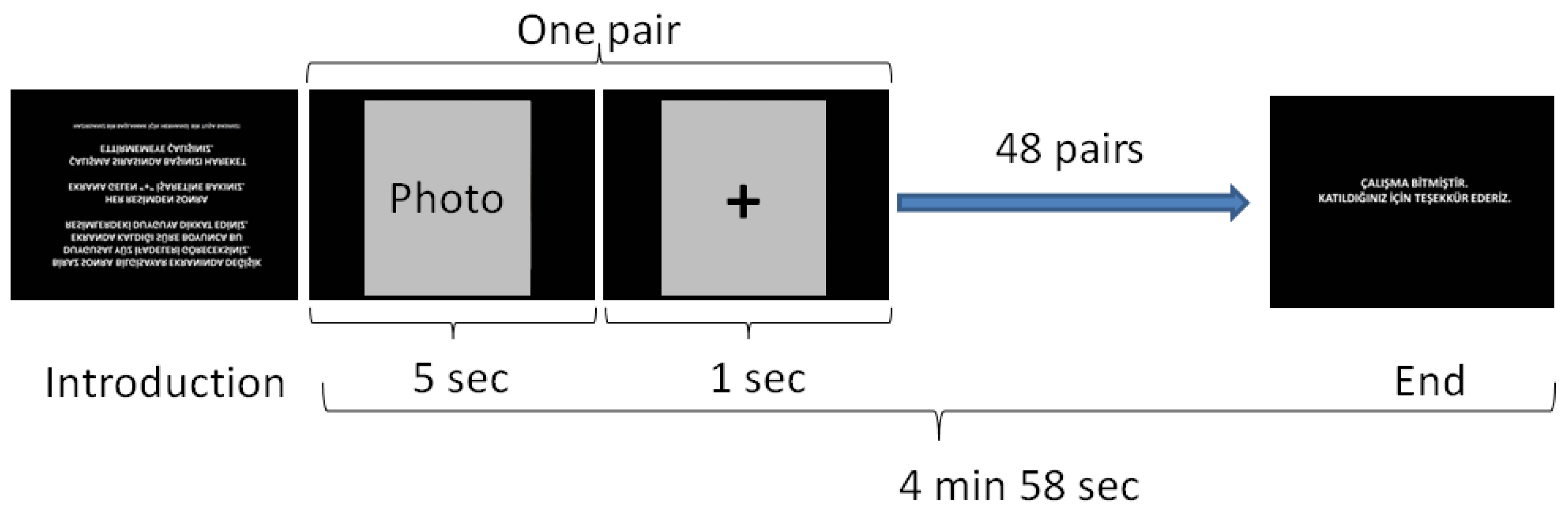

Forty-eight photos with emotional facial expressions were presented to the participants in random order on a 17-inch TFT monitor, as shown in Figure 1. Each photo was presented on the screen for 5 seconds, followed each time by a one-second screen showing a black plus sign in the center of a 14.5 x 17 cm gray box.

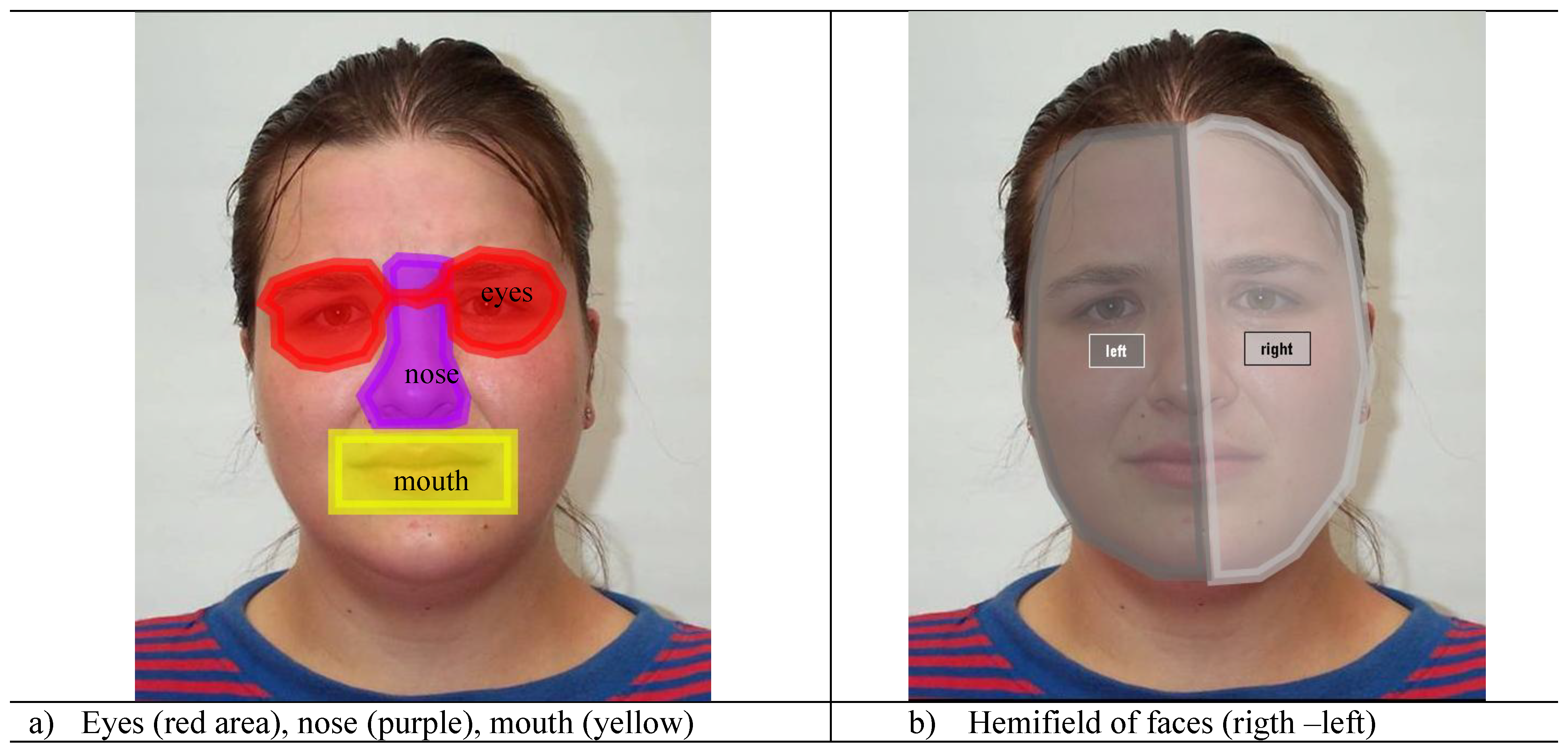

The study started with an instruction and a dummy picture (as a warm-up for the experiment). Each emotional facial expression (happy, sad, and neutral) was shown 16 times in random order. Participants wearing glasses or contact lenses were permitted to wear them during testing, since this did not interfere with the eye tracking data collection procedure. Participants were seated approximately 60–65 cm away from the computer screen, and calibration was performed for each participant separately. The minimum acceptable calibration level was set to 70%; participants with lower calibration scores were excluded from the study. The visual angle of the display was 30° x 27° and the visual angle of the photos was approximately 14° x 16°. Eye movements were recorded using a 120 Hz remote infrared eye tracking system (T120 Tobii Eyetracker) with an error of 0.5°. Participants were instructed to look passively at the photos. Two sets of areas of interest (AOIs) were defined for the eye metrics: (a) the face (eye, nose, and mouth regions; Figure 2a) and (b) lateralization (left and right hemifields of the face; Figure 2).
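The reported visual angles can be checked with the standard formula θ = 2·arctan(s / 2d), where s is the stimulus size and d the viewing distance. The sketch below is illustrative only: it assumes that the photos had the same 14.5 x 17 cm size as the gray box described in the Procedure, which the text does not state explicitly, and it uses the lower bound of the reported viewing distance (60 cm).

```python
import math

def visual_angle_deg(size_cm: float, distance_cm: float) -> float:
    """Visual angle (degrees) subtended by a stimulus of a given size
    viewed from a given distance: 2 * atan(size / (2 * distance))."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))

# Assumed stimulus size (taken from the gray box) and viewing distance.
width_deg = visual_angle_deg(14.5, 60.0)
height_deg = visual_angle_deg(17.0, 60.0)
print(f"{width_deg:.1f} x {height_deg:.1f} degrees")
```

Under these assumptions the result is close to the approximately 14° x 16° reported for the photos; at 65 cm the angles are somewhat smaller.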

The dependent measures were fixation duration, first fixation location and duration, and fixation count, analyzed by participant gender, emotional expression, age of the model, and part of the face. These eye metrics were selected based on the relevant literature on face recognition studies with eye movements (Eisenbarth and Alpers, 2011; Van Belle, de Graef, Verfaillie, Rossion and Lefèvre, 2010; Wong, Cronin-Golomb and Neargarder, 2005). All selected AOIs (parts of the face and/or hemifields) were made numerically equal. At the end of the test, all participants were debriefed.
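As a rough illustration of how such metrics could be derived from a list of fixations, consider the sketch below. The `Fixation` record, the AOI labels and the example values are hypothetical; the sketch also assumes that fixation duration means the summed duration of all fixations within an AOI on a trial, which is one common definition and not necessarily the one used by the recording software in this study.

```python
from dataclasses import dataclass

@dataclass
class Fixation:
    aoi: str            # hypothetical AOI label, e.g. "eyes", "nose", "mouth"
    duration_ms: float  # duration of this single fixation

def summarize_trial(fixations):
    """Compute per-AOI fixation duration and count, plus the location and
    duration of the first fixation, for one trial (chronological order)."""
    per_aoi = {}
    for fix in fixations:
        entry = per_aoi.setdefault(
            fix.aoi, {"fixation_duration_ms": 0.0, "fixation_count": 0})
        entry["fixation_duration_ms"] += fix.duration_ms
        entry["fixation_count"] += 1
    first = fixations[0] if fixations else None
    return {
        "per_aoi": per_aoi,
        "first_fixation_aoi": first.aoi if first else None,
        "first_fixation_duration_ms": first.duration_ms if first else None,
    }

# Hypothetical fixation sequence for a single 5-second trial.
trial = [
    Fixation("nose", 180.0),
    Fixation("eyes", 420.0),
    Fixation("mouth", 260.0),
    Fixation("eyes", 510.0),
]
summary = summarize_trial(trial)
print(summary["first_fixation_aoi"], summary["per_aoi"]["eyes"])
```

Per-trial summaries of this kind could then be averaged within each combination of observer gender, emotional expression and model age before statistical analysis.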

Discussion

In this study, emotional facial expressions affected visual screening patterns in all conditions. This finding is consistent with the literature (see Bradly et al., 2003; Carstensen and Mikels, 2005; Kesinger and Corkin, 2004). Young participants looked at the sad faces longer and more frequently, followed by the happy and neutral faces. These findings parallel those of Fox and Damjanovic (2006) and Hortsman and Bauland (2006), as well as the emotional memory enhancement (EME) effect (Bradly et al., 2003; Carstensen and Mikels, 2005). The EME effect, a well-known phenomenon, posits that emotional stimuli are better recalled and recognized than neutral ones because emotional stimuli lead to arousal, which in turn enhances attention and memory (Kesinger and Corkin, 2004). According to this effect, older people generally tend to remember positive stimuli, whereas young people tend to remember negative stimuli. This encoding selectivity leads to emotional regulation, so that young adults remember more negative aspects of events (Thapar and Rouder, 2009). The findings of the study indicated that the gender of the observer did not have a significant main effect on fixation duration or fixation count. This finding is partly consistent with previous findings (Calvo and Lundqvist, 2008; Grimshaw et al., 2004; Rahman, Wilson and Abrahams, 2004). Perhaps other variables, such as the treatment manner, are more important than the gender of the observer in explaining the inconsistencies reported in the literature.

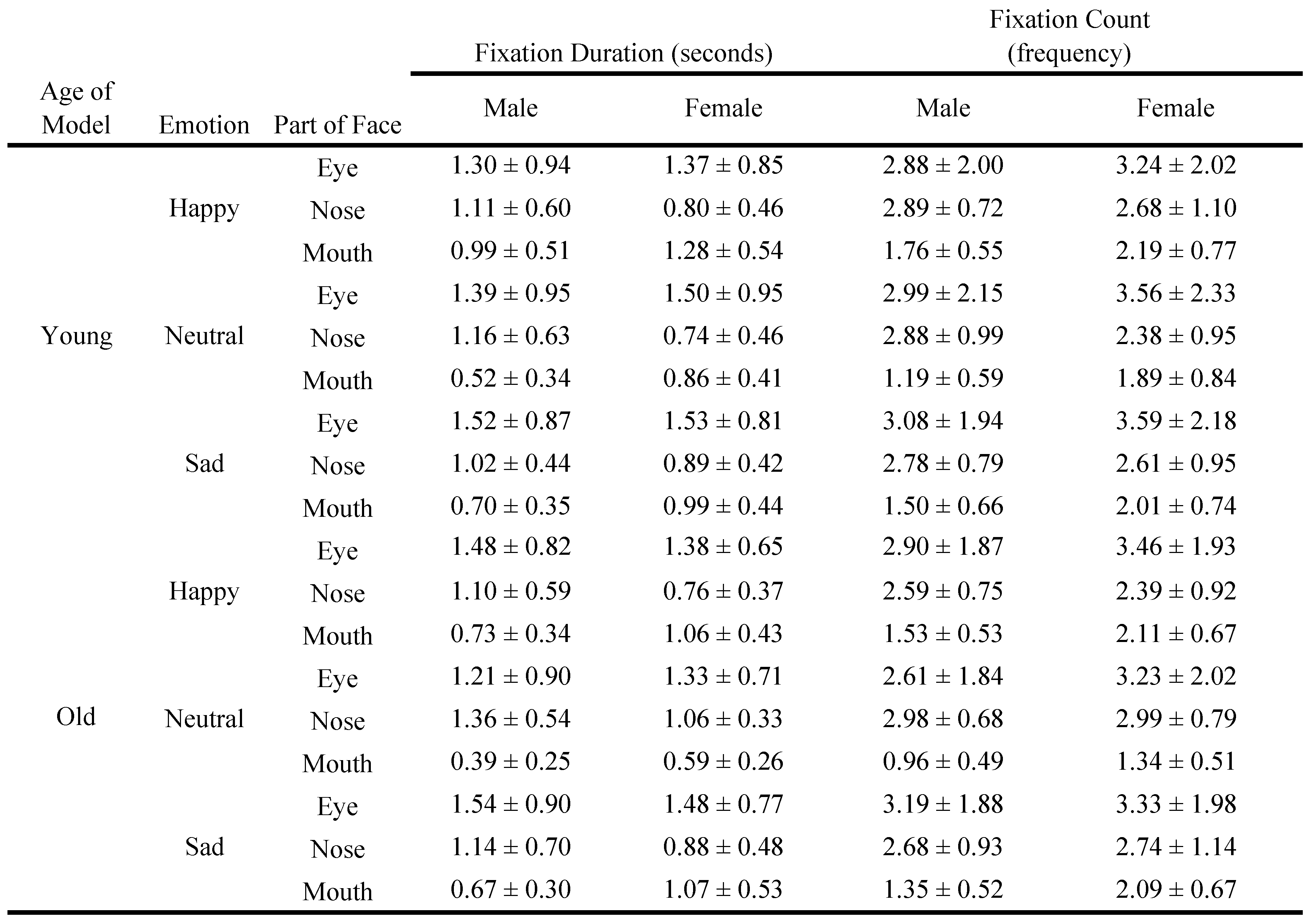

In addition, the part of the face had an effect on the fixation duration and fixation count measurements. Participants looked at the eyes for a longer time and more frequently than at the other areas. As this study shows, when the participants were asked to look passively at the facial expressions, they focused on the eyes to determine sadness and happiness; on the other hand, they looked at the eyes and nose in neutral expressions. The longest fixated part of the face was the eyes for both the young and the old models. This finding is consistent with Eisenbarth and Alpers’ (2011) finding that the eyes were important areas in determining sadness and the mouth in determining happiness. Studies that used the Bubbles technique for face recognition, such as those by Vinette, Gosselin and Schyns (2004) and Humphreys, Gosselin, Schyns and Johnson (2006), also reported similar findings that the eyes are the most diagnostic regions for the recognition of faces. Vinette, Gosselin and Schyns (2004) went further to suggest that the eyes are a rich source of information about a person’s identity, state of mind, and intentions. Another finding of this study was that in neutral faces the participants looked at the models’ eyes and nose for longer periods of time than the mouth. For all of the emotional expressions (sad, happy and neutral), the eyes received the most fixations.

For both the young and the old models, the eye tracking metrics (first fixation duration and fixation duration) indicated a varying distribution across the parts of the face. Participants fixated their gaze for a longer time on the eyes of the model than on the mouth and nose. They did so for both the young and the old faces. These findings partly support Calvo and Nummenmaa’s (2009) findings. Additionally, when the young participants were asked simply to look passively at the expressions of young neutral faces, their eye movement patterns were directed towards the eyes (that is, “the neutral eyes”). Yet, for the other emotional expressions, participants’ eye movement patterns indicated a tendency towards the mouth and nose (that is, “the sad and smiling mouth”). When the young participants were asked to look passively at the expressions of sad, happy and neutral old faces, their eye movement patterns were directed towards the eyes (that is, “the sad, smiling and neutral eyes”). Consequently, the eyes were the main predictors of passive visual screening for emotional facial expressions.

In this study, the position of the first fixation was found to be on the nose area. However, the duration of the first fixation on the facial parts varied according to the observer's gender and the age of the model. Arizpe, Kravitz, Yovel and Baker (2012) suggested that the starting position of eye movements strongly influences visual fixation patterns during face processing. Given that we presented a fixation stimulus (a black plus sign) for one second at the centre of the screen after each photo, it is possible that participants looked at the centre of the upcoming face photo, which corresponds to the nose. Therefore, we cannot conclude that the nose is the starting position when looking at a face for emotional face recognition. Although we asked the observers to look at the photos with no guiding instructions, other factors, such as the plus sign presented before each photo or the contrast and brightness of the photos, might be responsible for this effect and could therefore be explored in more depth in future studies.

According to the first fixation duration findings, for both young and old models with happy and sad emotional expressions, the first fixation was longer on the mouth, while it was longer on the nose for the neutral expressions. The mouth was an important part of the face in the first, basic phase of emotional processing, whereas the eyes were important in predicting higher-level emotional processes. The importance of the mouth, on the other hand, might be explained by an evolutionary approach.

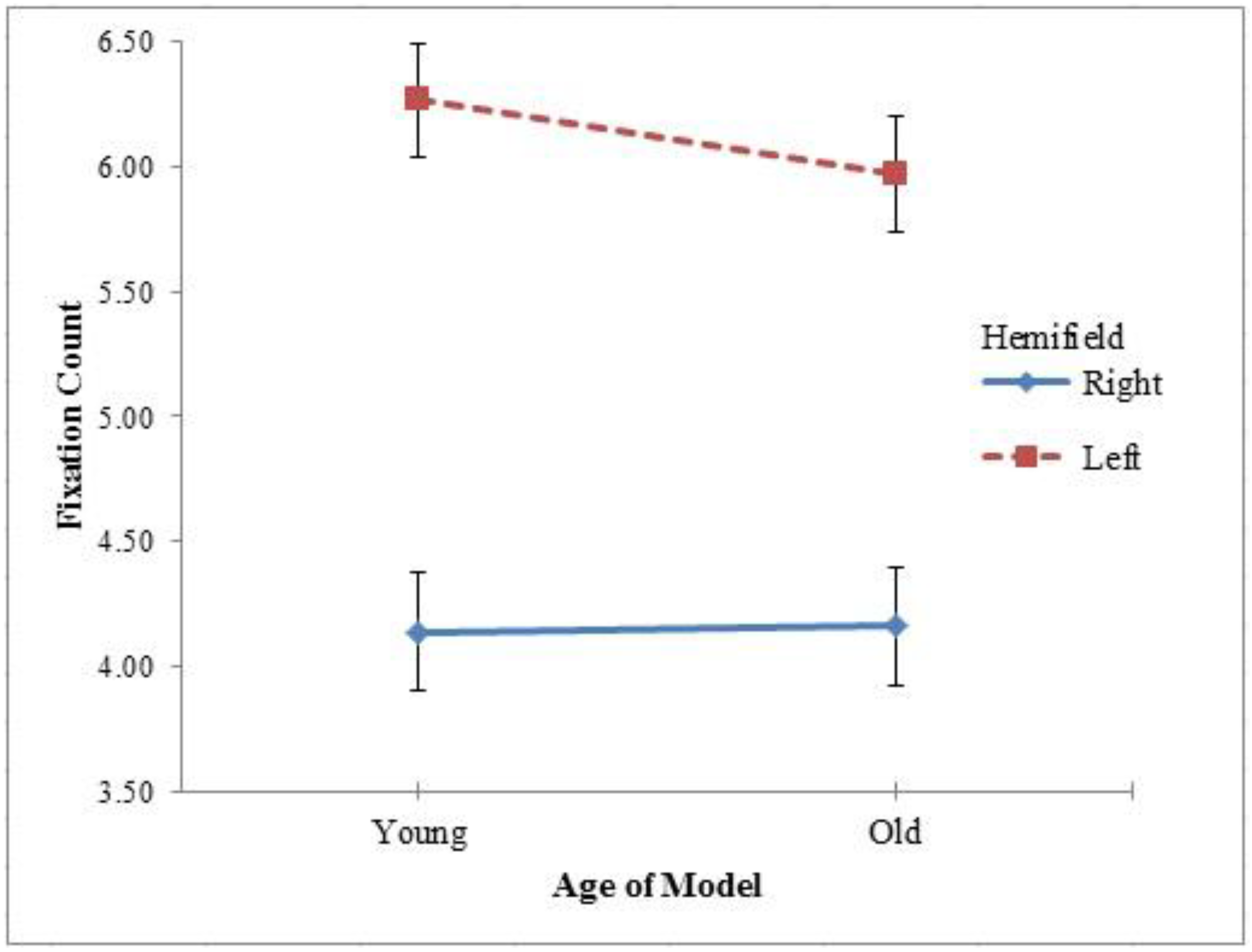

The age of the model was found to have an effect on fixation duration and fixation count. According to these results, participants looked at the young models more often and for longer than at the older ones. We attribute this finding to the physiological basis of “ageism”.

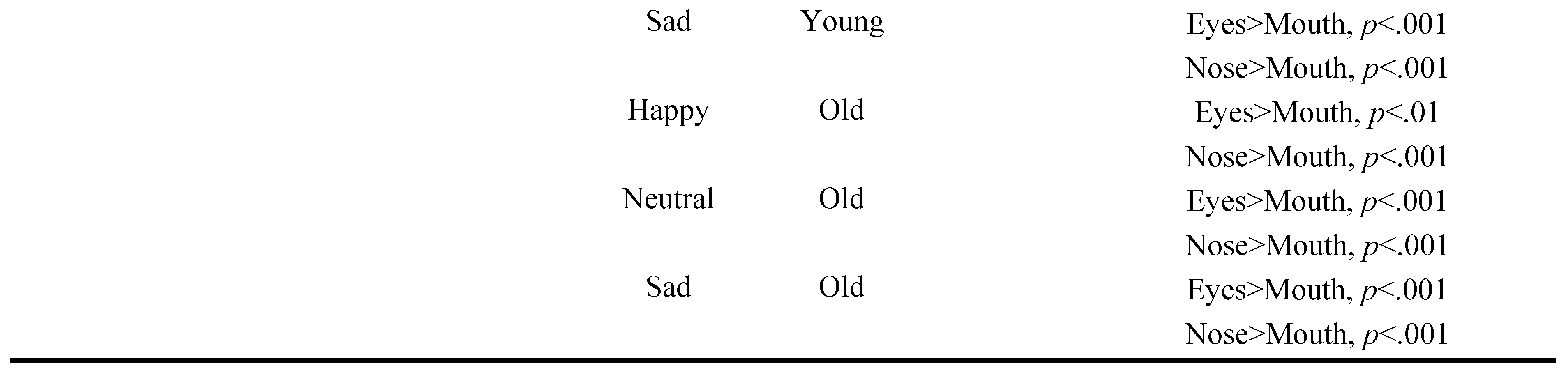

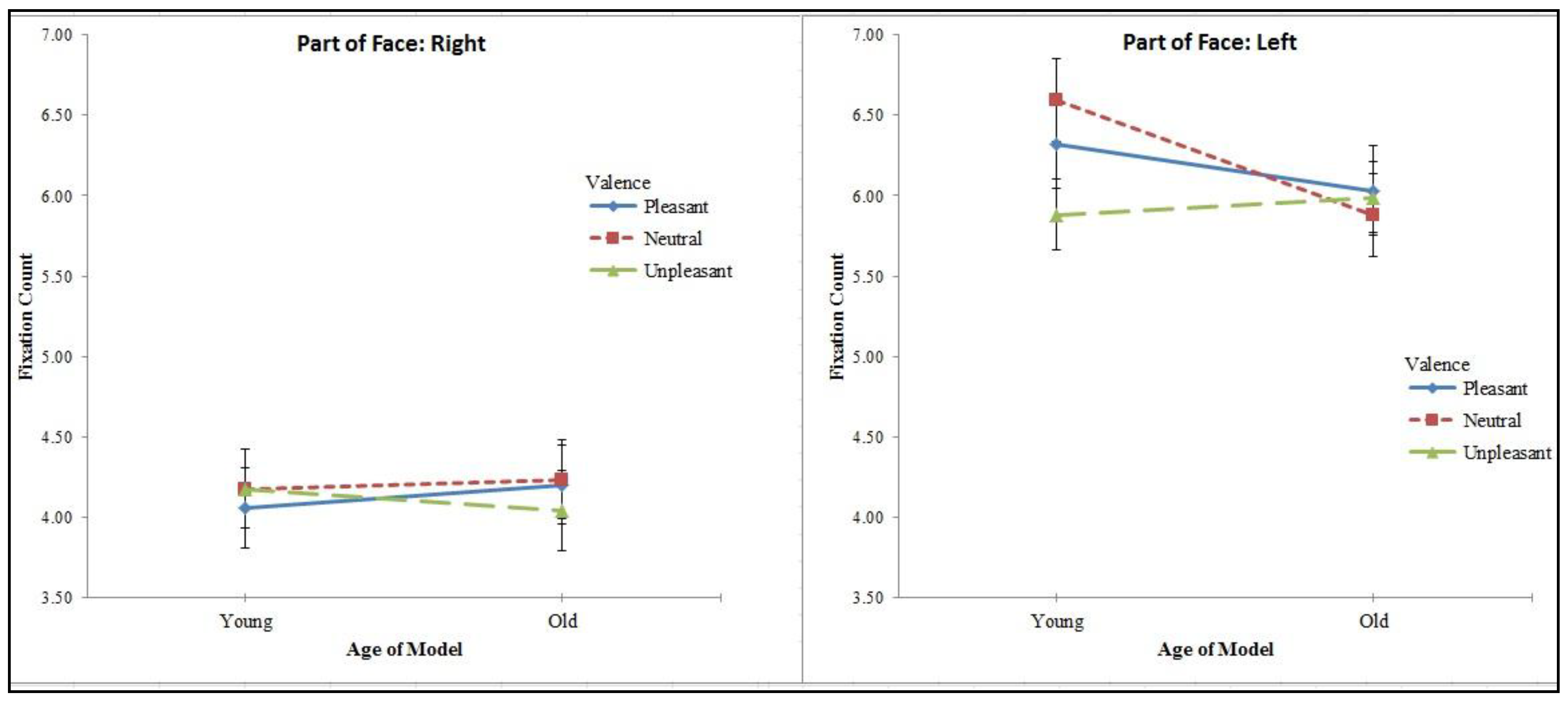

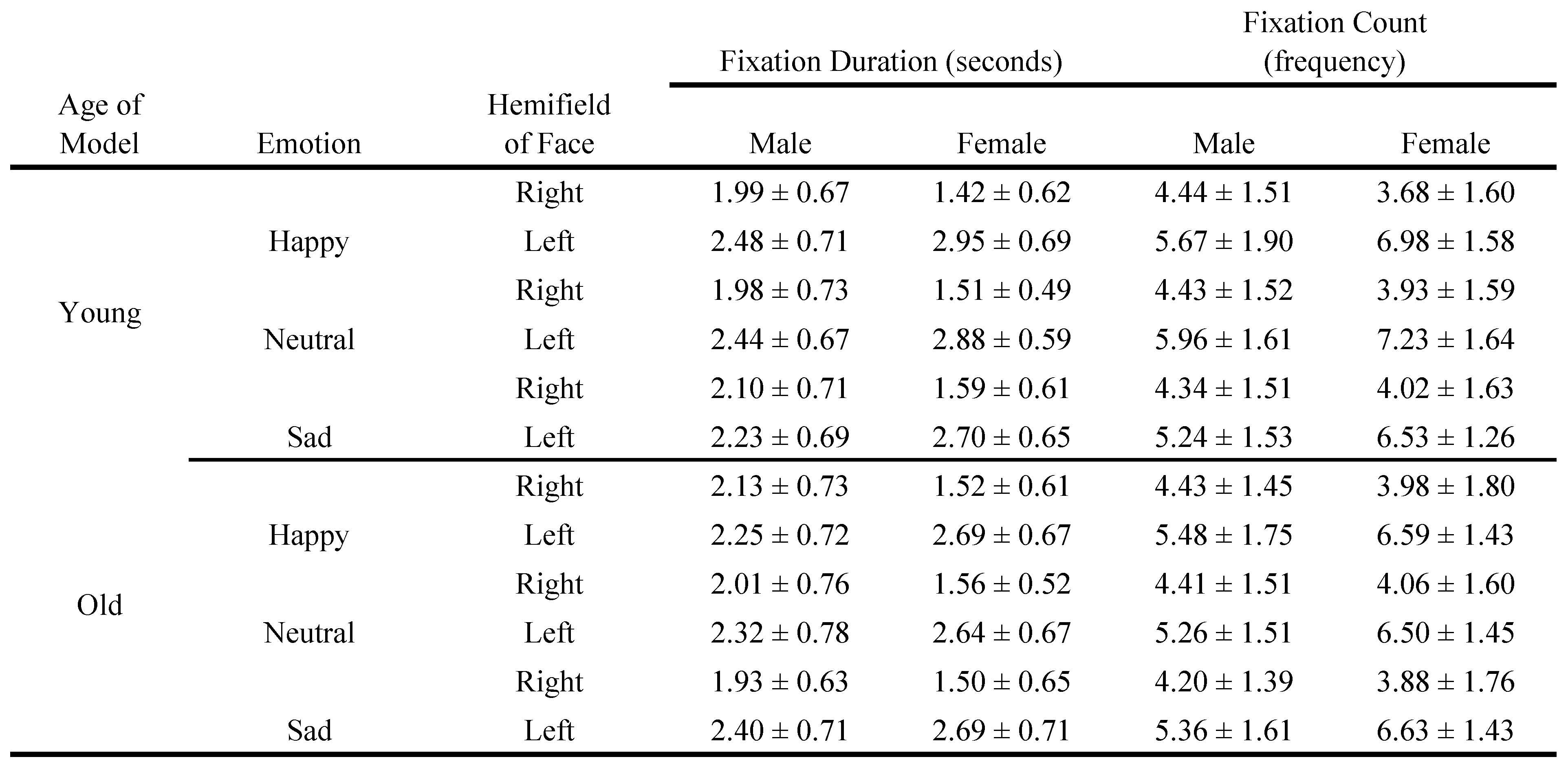

These findings suggest that some parts of the face, especially the eyes, give us more important cues for identifying and analyzing emotional faces. The visual information in the left visual field (the left side of the face) is mainly processed by the right hemisphere, and the visual information in the right visual field (the right side of the face) is mainly processed by the left hemisphere of the brain. Considering the effects of laterality on fixation duration and fixation count, the left side of the face was fixated more often and for longer than the right side. Participants looked at the left side of all (happy, sad, neutral) young and old faces more often and for longer than at the right side. These findings are in line with the hemispheric lateralization hypothesis concerning emotional face processing. In parallel with our results, Vinette et al. (2004), using the Bubbles technique, found that the eye on the left half of the stimuli was used more effectively and more rapidly for face recognition than the one on the right half. As they suggested, “the right hemisphere of the brain processes faces more efficiently than the left”. Yet it should be noted that this should not be taken as evidence supporting a hemispheric difference in emotion/facial expression processing. More research is needed to explain this tendency and to explore whether it could be taken as evidence of such a hemispheric difference.

Males looked at the right side of the face longer than females. However, the gender difference was not significant on the left side of the face. Furthermore, gender differences were found with regard to fixation counts on both the right and the left side of the face. In addition, females fixated more frequently than males. Fixations occurred more frequently on the left side than on the right side, especially for faces expressing happy and sad emotions. The findings of this study are consistent with past research showing that females, as compared to males, are more sensitive to emotional faces than to neutral ones and are superior at recognizing emotional expressions (Calvo and Lundqvist, 2008; Calvo and Nummenmaa, 2009; Palermo and Colthearth, 2004). In addition, the current finding that females looked longer and more frequently at the emotional faces indicates that females attend to informative cues more than males. Thus, it can be speculated that during passive looking females are more sensitive than males to emotional faces.

To sum up, in support of the hemispheric lateralization hypothesis, the present study confirms that the eyes and mouth are particularly important parts of the face when reading emotional expressions. It further extends our knowledge in showing that scan paths of young observers differ across different emotional facial expressions.