Introduction

In pioneering studies on the psychology and perception in art, Buswell observed that “Eye movements are unconscious adjustments to the demands of attention during a visual experience” (Buswell, 1935, p. 9).

There is wide agreement among researchers that eye movements are not generated merely as a reflex to the low-level information present in the picture, but are also controlled by higher-level variables, such as the affective significance of the stimuli and the nature of the task assigned to the subjects (Vuilleumier, 2005).

Research on emotional processing suggests that stimuli endowed with emotional valence, compared to neutral ones, have privileged, early, and automatic access to analysis by the cognitive system, which is biased to preferentially process stimuli of special adaptive importance (Lang, Bradley, & Cuthbert, 1990, 1997). This seems to imply that, when a neutral picture is paired with an emotionally valenced (unpleasant or pleasant) picture, the pattern of subjects’ eye movements should be influenced by the emotional one. Namely, when both are presented simultaneously, one in each visual field, emotionally valenced pictures should be more likely than neutral pictures to attract the initial fixation. Furthermore, emotional pictures are fixated for a longer total time (Calvo & Lang, 2004, 2005; Nummenmaa, Hyönä, & Calvo, 2006). The emotional content of pleasant and unpleasant stimuli is also particularly relevant in clinical populations. For example, anxious subjects are likely to dwell more on unpleasant pictures (Mogg & Bradley, 1998), and depressed subjects tend to spend significantly more time on unpleasant images than on pleasant and neutral ones (Kellough, Beevers, Ellis, & Wells, 2008).

Nummenmaa, Hyönä, & Calvo (2006) investigated the eye movement behaviour of healthy subjects viewing pairs of pictures, with instructions either to compare the pleasantness of the two pictures or to attend to the emotional or the neutral picture of each pair. The authors found that both the first fixation and subsequent gaze behaviour were biased toward emotional stimuli, pleasant and unpleasant alike, and that this bias occurred even under explicit instructions to attend to the concurrently presented neutral picture.

Calvo & Lang (2005) found the same advantage for emotional pictures in a recognition priming paradigm in which two pictures (one neutral, one emotional) were presented simultaneously in parafoveal vision. The first fixation was more likely to land on the emotional than on the neutral scene, and emotional probes were more likely than neutral probes to be recognized when primed by a picture with identical semantic content but differing in size, orientation, and colour. Negative and threatening stimuli also appear to increase attention and vigilance (Bannerman, Milders, de Gelder, & Sahraie, 2009).

Interestingly, in a visual search task participants were faster at detecting threatening animals against a background of plants than vice versa, and this difference was ascribed to the threatening valence of the animals (Öhman, Flykt, & Esteves, 2001). However, the difference between threatening animals and plants may reflect an animate/inanimate distinction rather than a difference in threat, because the effects of emotion and stimulus category were not separated in that design.

Neuroimaging studies on healthy subjects suggest that animate and inanimate stimuli are processed by distinct brain networks (Kawashima et al., 2001; Perani et al., 1995; Perani et al., 1999). In particular, the left fusiform gyrus has been reported to play a critical role in processing animate entities, and the left middle temporal gyrus in processing inanimate objects such as tools.

Warrington and Shallice (1984) speculated that inanimate objects are predominantly defined by their functional significance, whereas living things are primarily identified through their visual features. In line with such feature-based accounts of semantic memory organization, Ković, Plunkett, & Westermann (2009) observed that participants look at animate pictures much more consistently than at inanimate ones: participants looked first at the eyes before moving to other parts of animate pictures, whereas their looking patterns were much more dispersed for inanimate objects. The authors suggested that the processing of animate pictures may rely on the saliency of their visual features (hence the eye movements towards those features), whereas the processing of inanimate pictures relies more on functional features, which cannot easily be captured by looking behaviour in such a paradigm.

These findings suggest that both the emotional valence and the thematic content (animate vs. inanimate) of a picture are crucial factors influencing eye movement features.

To provide further evidence for this hypothesis, this paper investigates the exploratory behaviour of normal subjects viewing emotional and non-emotional stimuli that also differ in thematic content (animate vs. inanimate), in order to identify the factors likely to influence the observer’s eye movements.

The working hypothesis is that subjects will look more at emotional and animate pictures, and that the combination of these two aspects will produce exploratory behaviour significantly different from that observed for neutral and inanimate pictures.

Methods

Participants - Sixty-five university students took part in this experiment in return for course credits: 37 females (mean age = 24.41 years, SD = 2.02 years) and 28 males (mean age = 24.07 years, SD = 2.34 years). All participants signed a written informed consent form before the test session.

All subjects had normal or corrected-to-normal vision. The absence of depressive and/or anxious symptoms was assessed with two clinical questionnaires: the Beck Depression Inventory (BDI-II; Beck, Steer, & Brown, 1996) and the State-Trait Anxiety Inventory (STAI; Spielberger, 1983). Participants were told that they would take part in a visual perception experiment involving eye-movement recording, but they were unaware of the purpose of the study.

Apparatus - Eye movements were recorded with the EyeGaze System (LC Technologies, USA; http://www.eyegaze.com), a video-oculographic device consisting of a video camera mounted below the computer display. EyeGaze uses the Pupil-Centre/Corneal-Reflection (PCCR) method to determine gaze direction: a small, low-power, infrared light-emitting diode (LED) located at the centre of the camera lens illuminates the eye, and specialized image-processing software locates the centres of both the pupil and the corneal reflection and computes the X and Y co-ordinates of gaze position. The sampling frequency is 60 Hz and the accuracy is 0.45 deg.

Material - Experimental stimuli were selected from the International Affective Picture System (IAPS; Lang, Bradley, & Cuthbert, 2005), which provides a large set of standardized emotional colour photographs with a wide range of thematic contents. All photographs are normatively rated on three dimensions: affective valence (ranging from pleasant to unpleasant), arousal (ranging from calm to excited), and dominance (ranging from controlled to in control) (Osgood, Suci, & Tannenbaum, 1957). Based on the global valence ratings given in the IAPS accompanying manual, the images were selected for three categories: neutral (valence from 4.32 to 5.85), pleasant (valence from 6.14 to 7.97), and unpleasant (valence from 2.11 to 3.95). According to the manual, ratings are given on a 9-point Likert scale, with 9 representing a high rating and 1 a low rating on each dimension. On the valence dimension, high values therefore correspond to pleasant pictures, medium values to neutral pictures, and low values to unpleasant pictures.

As for thematic content, two categories were created: animate (i.e. depicting animals) and inanimate (i.e. representing objects and landscapes). The arousal ratings of the selected pictures ranged from 2.74 to 6.64 (M = 4.46, SD = 1.13). For the purpose of this study, pictures containing human faces or body parts were intentionally excluded.
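The selection rules in the two paragraphs above can be summarised as a small classification sketch. It assumes the reported valence ranges can be used as inclusive bounds; because they describe the pictures actually chosen rather than official IAPS cut-offs, ratings falling in the gaps between ranges are left unclassified. The `Picture` record and its fields are hypothetical and do not correspond to any IAPS file format.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

# Valence ranges of the selected pictures as reported in the Material section
# (inclusive bounds; assumption: values in the gaps between ranges are not assigned).
VALENCE_RANGES = {
    "unpleasant": (2.11, 3.95),
    "neutral": (4.32, 5.85),
    "pleasant": (6.14, 7.97),
}


@dataclass
class Picture:
    picture_id: str     # hypothetical identifier, not a real IAPS number
    valence: float      # normative mean valence on the 1-9 scale
    animate: bool       # True if the picture depicts animals
    shows_humans: bool  # True if human faces or body parts are visible


def valence_category(valence: float) -> Optional[str]:
    """Return the category whose reported range contains `valence`, else None."""
    for label, (low, high) in VALENCE_RANGES.items():
        if low <= valence <= high:
            return label
    return None


def design_cell(p: Picture) -> Optional[Tuple[str, str]]:
    """Map an eligible picture to its (valence, thematic content) cell."""
    if p.shows_humans:
        return None  # pictures with human faces or body parts were excluded
    valence = valence_category(p.valence)
    if valence is None:
        return None
    return valence, ("animate" if p.animate else "inanimate")
```

Keeping the ranges in one table makes the gaps between categories explicit, so a picture rated, for example, 4.1 is flagged rather than silently assigned.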

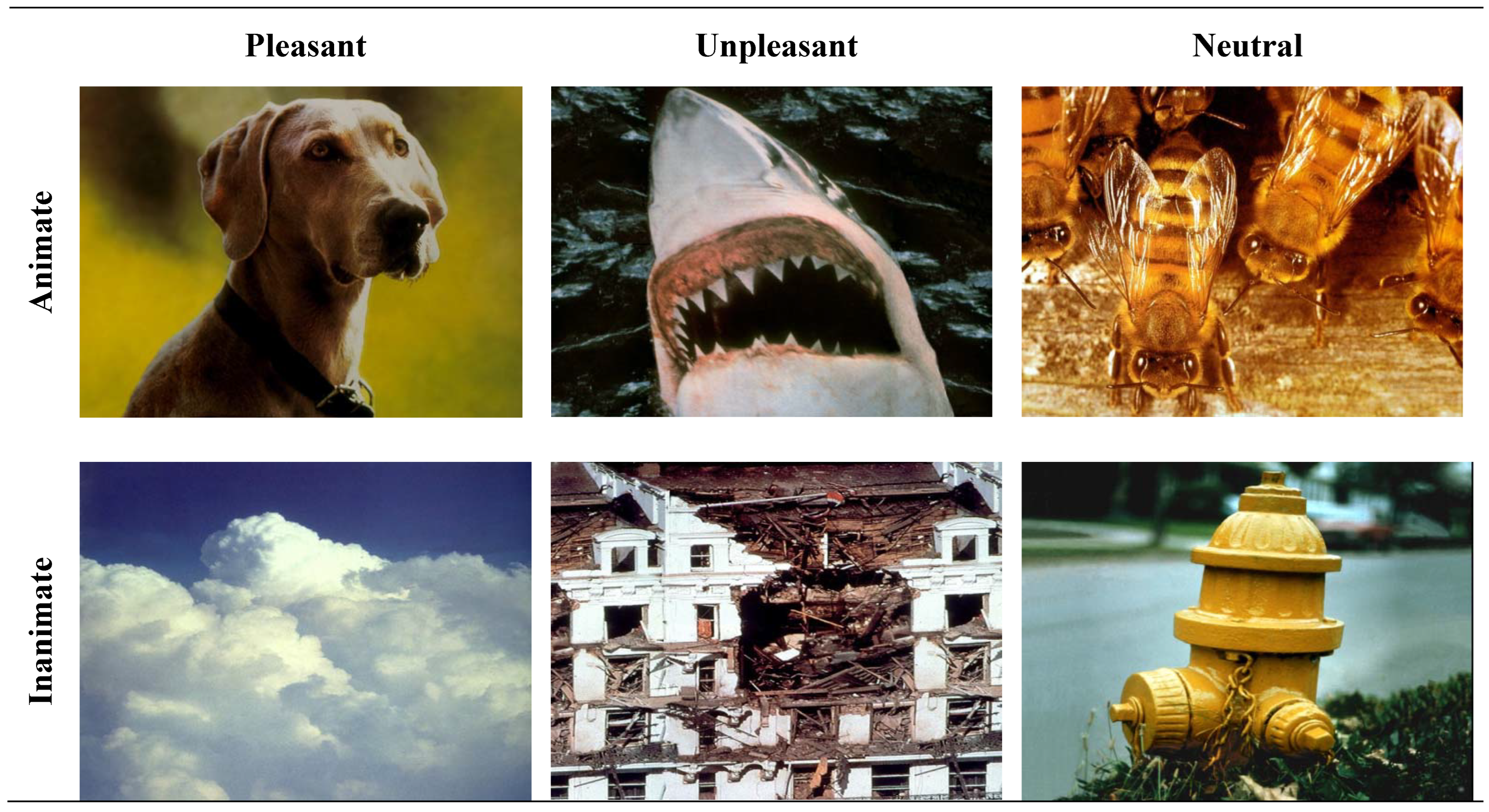

A total of 72 pictures were selected: 36 animate (12 neutral, 12 unpleasant, and 12 pleasant) and 36 inanimate (12 neutral, 12 unpleasant, and 12 pleasant). Examples of pictures from each category are shown in Figure 1. Images measured 709 x 532 pixels (corresponding to 14 deg of visual angle), and their colour saturation and luminance levels were adjusted with Adobe Photoshop 6.0 (Table 1).

Crossing the two factors (thematic content and valence), all possible combinations were identified and 36 slides of two pictures each were created. The two pictures within a slide were placed side by side horizontally, and the presentation side (left or right) of each picture category was balanced across the combinations.
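The text does not spell out how "all possible combinations" yield exactly 36 slides. One reading consistent with the reported numbers, offered here only as an assumption, is that every ordered (left, right) pair of the six valence x content categories was used once: 6 x 6 = 36 slides, with each category appearing 6 times on each side. The sketch below enumerates that hypothetical design and counts side usage.

```python
from collections import Counter
from itertools import product

VALENCES = ("unpleasant", "neutral", "pleasant")
CONTENTS = ("animate", "inanimate")

# Crossing valence and thematic content gives six picture categories.
CATEGORIES = list(product(VALENCES, CONTENTS))

# Assumed design: every ordered (left, right) pair of categories,
# same-category pairs included.
SLIDES = list(product(CATEGORIES, repeat=2))

# How often each category is shown on each side across all slides.
left_counts = Counter(left for left, _ in SLIDES)
right_counts = Counter(right for _, right in SLIDES)

if __name__ == "__main__":
    print(len(SLIDES), "slides")
    for category in CATEGORIES:
        print(category, "left:", left_counts[category], "right:", right_counts[category])
```

Under this reading each category fills 12 slots in total, matching the 12 pictures available per category, so every picture could be shown exactly once; which picture filled which slot is not reported.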

Emotional processing affects eye movements, possibly for a long time, so that the eye movement pattern within one slide could affect the exploration of the next one. However, neuroimaging studies (Hart et al., 2010) have shown that executive functions and affective processes compete in order to optimise on-line performance. In the present experiment, such an “executive-affective” competition was likely to occur every time a new slide was presented, and the 12-second presentation time was considered long enough for this process to be completed without affecting the exploration of the following slide.

In addition, the eye movement pattern within a slide could be affected by the previous slide, as in the “trial-to-trial learning” effect (Tabata et al., 2008). However, the effect of the previous trial is larger with shorter intertrial intervals and decreases significantly after 2 seconds; given the 2-second interval separating successive slides in the present experiment, such an effect is unlikely to have influenced the eye movement pattern. Therefore, since presentation order was not expected to affect the eye movement pattern, the same random sequence of slides was used for all participants.

Procedure - During the experiment, subjects were comfortably seated at a desk, 60 cm from a 17-inch computer display (1024 x 768 pixels) corresponding to 30 deg of visual angle. After calibration, subjects were asked to look at the images on the screen; no specific task was assigned. The 36 slides were presented in a random sequence, the same for all subjects, and each slide was shown for 12 seconds. Between successive slides, a blank slide with a red cross in the middle was displayed for 2 seconds to centre the subject’s gaze before the next stimulus appeared.
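The relation between physical size, viewing distance, and visual angle used above follows the standard formula angle = 2 * atan(size / (2 * distance)). A minimal sketch is given below. The display's physical width is not reported, so the example only inverts the stated 30 deg at 60 cm to obtain the width it implies, assuming that the 30 deg refers to the horizontal extent of a flat screen centred on the line of sight.

```python
import math


def visual_angle_deg(size_cm: float, distance_cm: float) -> float:
    """Visual angle subtended by an object of size_cm centred at distance_cm."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))


def size_for_angle_cm(angle_deg: float, distance_cm: float) -> float:
    """Inverse: physical size that subtends angle_deg at distance_cm."""
    return 2 * distance_cm * math.tan(math.radians(angle_deg) / 2)


VIEWING_DISTANCE_CM = 60.0  # from the Procedure section

# Screen width implied by the reported 30 deg at 60 cm (assumption: horizontal extent).
implied_width_cm = size_for_angle_cm(30.0, VIEWING_DISTANCE_CM)
# On-screen extent of the tracker's reported 0.45 deg accuracy at the same distance.
accuracy_cm = size_for_angle_cm(0.45, VIEWING_DISTANCE_CM)

if __name__ == "__main__":
    print(f"implied screen width: {implied_width_cm:.1f} cm")  # about 32.2 cm
    print(f"0.45 deg accuracy spans: {accuracy_cm:.2f} cm")    # about 0.47 cm
```

The same functions can convert any reported pixel extent to degrees once the physical pixel size is known.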

Data analysis - Eye movements were analysed offline with the NYAN software (Eye Tracking Software NYAN2, Interactive Minds GmbH, http://www.interactive-minds.com/). The first step is to identify saccades and fixations within the recorded eye movements. NYAN uses a fixation detection algorithm based on a gaze deviation threshold, i.e. the maximum distance a gaze sample can lie from the centre of a fixation. A second threshold specifies the minimum number of samples that make up a fixation. Gaze displacements between two successive fixation positions are classified as saccades. Once saccades and fixations have been identified from the temporal sequence of eye position data, gaze positions are mapped onto the computer screen.

For each image in a slide, the following parameters were computed: number of fixations, mean fixation duration, and total gaze duration. For each slide, the number of transitions between the left and right images was also counted, and the first fixation made at the appearance of the new visual stimulus was classified as falling on the left or the right picture.

Exploratory gaze behaviour can be analysed from two points of view: quantitative parameters and exploratory strategies. Quantitative parameters are those provided by the software, namely the number of fixations, fixation duration, and gaze duration within each image. Exploratory strategies can be quantified by the location of the first fixation at the appearance of a new visual stimulus and by the number of transitions between the two pictures in the slide. Taken together, both aspects of exploratory behaviour can provide evidence about the underlying cognitive processes and/or the attraction exerted by stimulus characteristics.
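The per-image and per-slide measures listed above can be derived from a slide's fixation sequence roughly as follows. This sketch assumes, hypothetically, that a fixation belongs to the left picture when it falls left of the midline of the 1024-pixel-wide screen (the actual picture areas are not reported), and it treats the first detected fixation as the first access.

```python
from dataclasses import dataclass
from typing import List, Optional

# Assumption: the left picture lies left of the midline of the 1024-px-wide screen.
SCREEN_MIDLINE_PX = 512


@dataclass
class SlideFixation:
    x: float            # horizontal gaze position of the fixation (pixels)
    duration_ms: float  # fixation duration


@dataclass
class ImageMeasures:
    n_fixations: int
    mean_fixation_ms: float  # 0.0 when the image received no fixations
    total_gaze_ms: float


@dataclass
class SlideMeasures:
    left: ImageMeasures
    right: ImageMeasures
    transitions: int           # left/right switches between consecutive fixations
    first_side: Optional[str]  # side of the first fixation, None if there were none


def side_of(f: SlideFixation) -> str:
    return "left" if f.x < SCREEN_MIDLINE_PX else "right"


def image_measures(durations: List[float]) -> ImageMeasures:
    total = float(sum(durations))
    mean = total / len(durations) if durations else 0.0
    return ImageMeasures(len(durations), mean, total)


def slide_measures(fixations: List[SlideFixation]) -> SlideMeasures:
    """Quantitative parameters per image plus transitions and first access per slide."""
    sides = [side_of(f) for f in fixations]
    left = [f.duration_ms for f, s in zip(fixations, sides) if s == "left"]
    right = [f.duration_ms for f, s in zip(fixations, sides) if s == "right"]
    transitions = sum(1 for a, b in zip(sides, sides[1:]) if a != b)
    return SlideMeasures(
        image_measures(left),
        image_measures(right),
        transitions,
        sides[0] if sides else None,
    )
```

Deriving every measure from the same side labels keeps the quantitative parameters and the exploratory-strategy measures consistent with one another.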

All data were analysed with SPSS 13.0. Repeated-measures analyses of variance (ANOVAs) were used to compare the mean number of fixations and gaze duration, followed by post-hoc comparisons with alpha levels adjusted by Bonferroni correction; paired-samples t tests were used for all pairwise condition comparisons.
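Since the analyses were run in SPSS, the following is only an illustrative cross-check, not the authors' procedure: a pure-Python sketch of the sphericity-assumed F ratios for a fully within-subjects 3 x 2 design, a paired t statistic, and the Bonferroni adjustment. It computes test statistics and degrees of freedom only; p-values would require the F or t distribution from a statistics library, and no Greenhouse-Geisser correction is applied. With 65 subjects, the degrees of freedom come out as (2, 128) for valence and for the interaction, and (1, 64) for thematic content.

```python
from math import sqrt
from typing import Dict, List, Sequence, Tuple

# data[s][i][j]: score of subject s at level i of factor A (e.g. 3 valence levels)
# and level j of factor B (e.g. 2 thematic-content levels).
Data = Sequence[Sequence[Sequence[float]]]


def rm_anova_two_way(data: Data) -> Dict[str, Tuple[float, int, int]]:
    """Sphericity-assumed F tests for a two-way within-subjects design.

    Returns {effect: (F, df_effect, df_error)} for "A", "B" and "AxB".
    """
    n, a, b = len(data), len(data[0]), len(data[0][0])
    S, I, J = range(n), range(a), range(b)
    grand = sum(data[s][i][j] for s in S for i in I for j in J) / (n * a * b)
    m_s = [sum(data[s][i][j] for i in I for j in J) / (a * b) for s in S]
    m_i = [sum(data[s][i][j] for s in S for j in J) / (n * b) for i in I]
    m_j = [sum(data[s][i][j] for s in S for i in I) / (n * a) for j in J]
    m_ij = [[sum(data[s][i][j] for s in S) / n for j in J] for i in I]
    m_si = [[sum(data[s][i][j] for j in J) / b for i in I] for s in S]
    m_sj = [[sum(data[s][i][j] for i in I) / a for j in J] for s in S]

    ss_a = n * b * sum((m_i[i] - grand) ** 2 for i in I)
    ss_b = n * a * sum((m_j[j] - grand) ** 2 for j in J)
    ss_ab = n * sum((m_ij[i][j] - m_i[i] - m_j[j] + grand) ** 2 for i in I for j in J)
    # Error terms: each effect is tested against its interaction with subjects.
    ss_as = b * sum((m_si[s][i] - m_s[s] - m_i[i] + grand) ** 2 for s in S for i in I)
    ss_bs = a * sum((m_sj[s][j] - m_s[s] - m_j[j] + grand) ** 2 for s in S for j in J)
    ss_abs = sum(
        (data[s][i][j] - m_si[s][i] - m_sj[s][j] - m_ij[i][j]
         + m_s[s] + m_i[i] + m_j[j] - grand) ** 2
        for s in S for i in I for j in J
    )

    def f_test(ss_eff: float, df_eff: int, ss_err: float, df_err: int) -> Tuple[float, int, int]:
        return (ss_eff / df_eff) / (ss_err / df_err), df_eff, df_err

    da, db, ds = a - 1, b - 1, n - 1
    return {
        "A": f_test(ss_a, da, ss_as, da * ds),
        "B": f_test(ss_b, db, ss_bs, db * ds),
        "AxB": f_test(ss_ab, da * db, ss_abs, da * db * ds),
    }


def paired_t(x: Sequence[float], y: Sequence[float]) -> Tuple[float, int]:
    """Paired-samples t statistic and its degrees of freedom."""
    d = [xi - yi for xi, yi in zip(x, y)]
    n = len(d)
    mean = sum(d) / n
    var = sum((di - mean) ** 2 for di in d) / (n - 1)
    return mean / sqrt(var / n), n - 1


def bonferroni(p_values: Sequence[float]) -> List[float]:
    """Bonferroni-adjusted p-values (equivalently, compare raw p with alpha / m)."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]
```

For a two-level factor such as thematic content, the F ratio equals the square of a paired t computed on each subject's means averaged over valence, which offers a quick consistency check on the output.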

Results

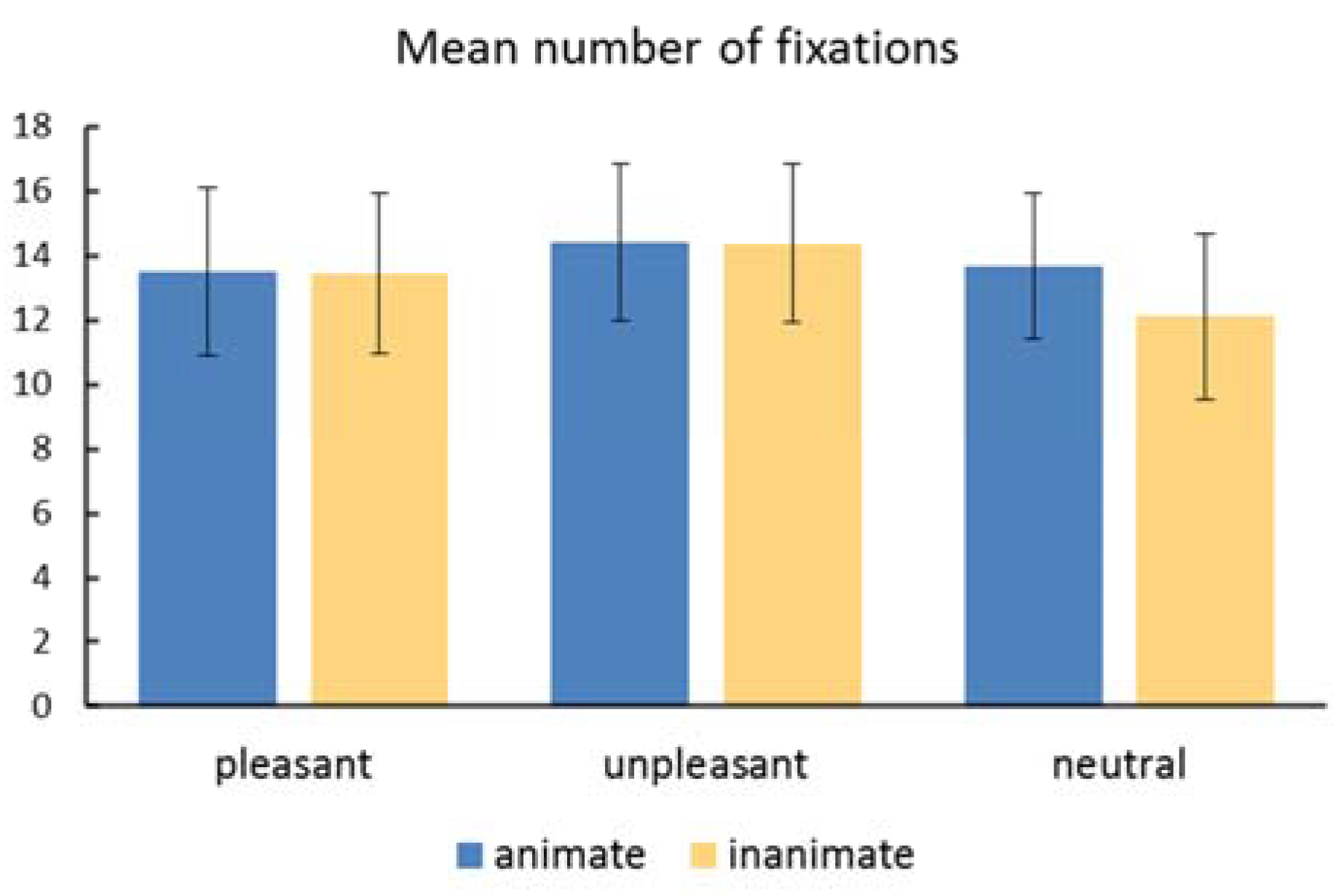

Number of fixations - The histogram in Figure 2 compares the mean number of fixations for animate vs. inanimate pictures within each valence category. Descriptive statistics are reported in Table 1. A 3 (valence: unpleasant vs. neutral vs. pleasant) x 2 (thematic content: animate vs. inanimate) repeated-measures ANOVA indicated a main effect of valence, F(2, 128) = 11.04, p < .001, and a significant interaction between valence and thematic content, F(2, 128) = 19.66, p < .001. The main effect of thematic content showed a trend toward significance, F(1, 64) = 3.56, p = .064.

Post-hoc comparisons showed more fixations on emotional pictures (both pleasant and unpleasant) than on neutral ones (ps < .001). The difference between animate and inanimate pictures was not significant (p = .064).

Paired-samples t tests showed that animate pictures with different valences did not differ significantly from one another, ts(64) < .16, ps > .874. For inanimate pictures, by contrast, significant differences emerged.

Neutral inanimate pictures received the lowest number of fixations: fewer than unpleasant and pleasant inanimate pictures, t(64) < 6.81, p < .001, and fewer than animate pictures, t(64) < 6.81, p < .001. Unpleasant inanimate pictures received more fixations than pleasant inanimate pictures, t(64) = -2.74, p < .01, and more than neutral inanimate and emotional pictures, t(64) < 6.81, p < .001.

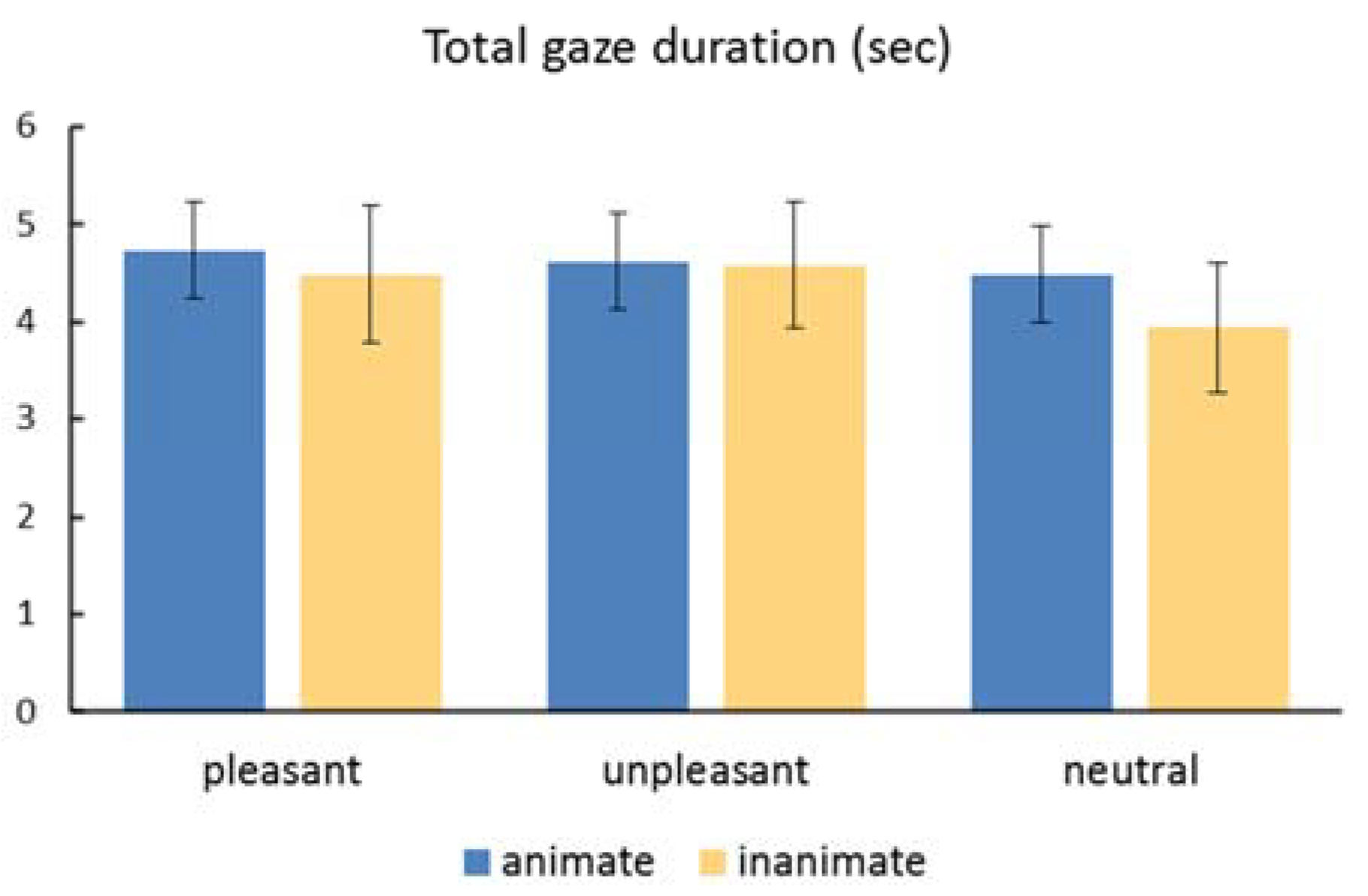

Total gaze duration - The histogram in Figure 3 compares the total gaze duration for animate vs. inanimate pictures within each valence category; descriptive statistics are reported in Table 1. A 3 (valence: unpleasant vs. neutral vs. pleasant) x 2 (thematic content: animate vs. inanimate) repeated-measures ANOVA indicated a main effect of valence, F(2, 128) = 12.34, p < .001, a main effect of thematic content, F(1, 64) = 15.45, p < .001, and a significant interaction between valence and thematic content, F(2, 128) = 8.97, p < .001. Post-hoc comparisons showed that both pleasant and unpleasant pictures were fixated significantly longer than neutral ones (ps < .001), and that animate pictures were attended to for longer than inanimate ones (p < .001).

Paired-samples t tests comparing pictures with the same valence indicated that pleasant animate pictures were fixated significantly longer than pleasant inanimate ones, t(64) = 2.57, p < .05, and neutral animate pictures significantly longer than neutral inanimate ones, t(64) = 5.59, p < .001, while unpleasant animate pictures did not differ from unpleasant inanimate ones, t(64) = .45, p = .65.

Comparing valence categories within each thematic content, neutral animate pictures were fixated significantly less than pleasant animate ones, t(64) = 2.68, p < .01, while neutral inanimate pictures were fixated significantly less than both pleasant and unpleasant inanimate ones, t(64) < 6.22, p < .001. Notably, neutral inanimate pictures were fixated significantly less than neutral animate pictures and than emotional pictures, both inanimate and animate, t(64) < 6.97, p < .001.

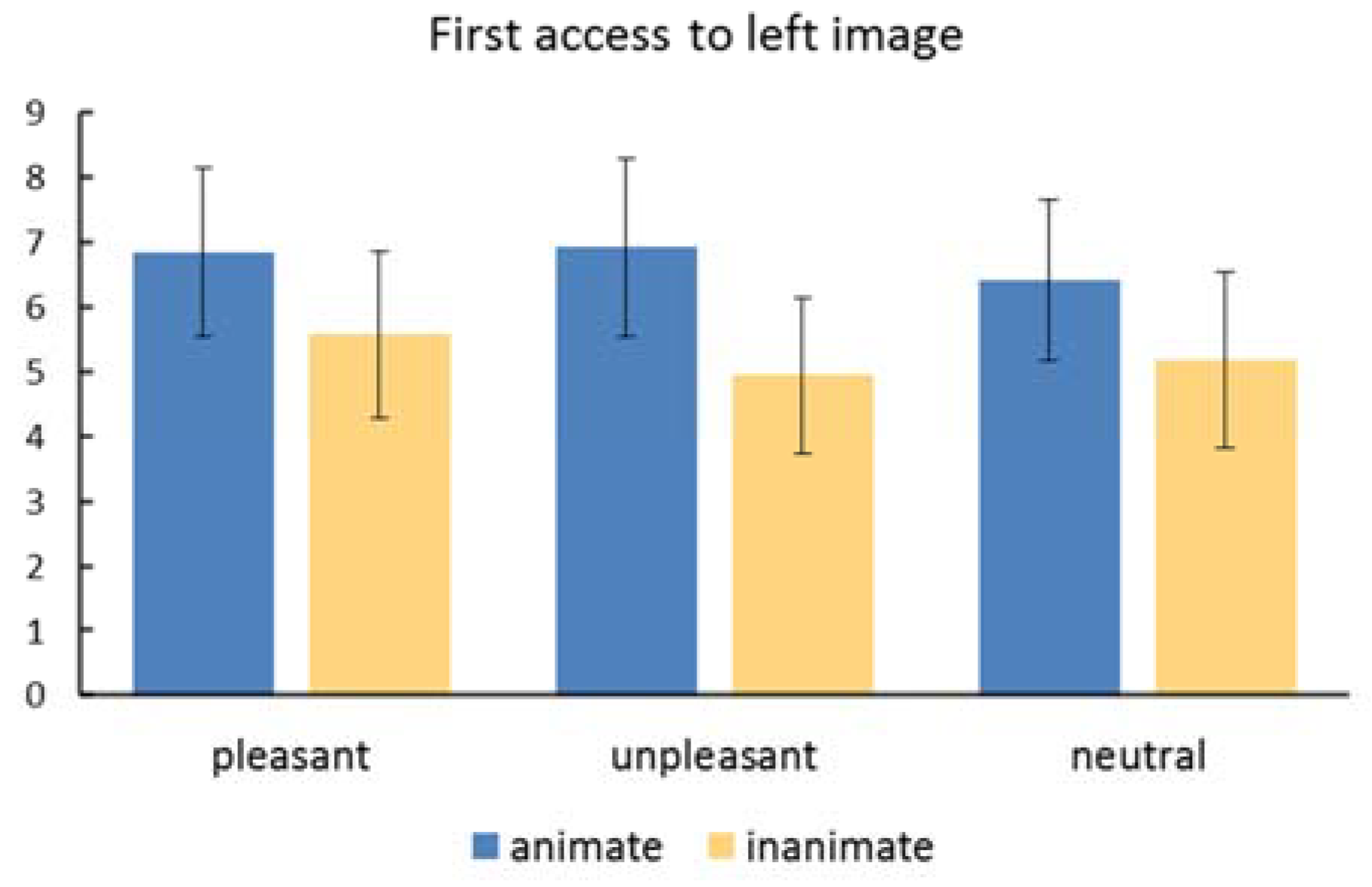

First access - Across all subjects, 72% of first accesses were made on the left image. A paired-samples t test comparing left and right first accesses across slides showed a significant difference, t(64) = 8.4, p < .001. This behaviour could be related to the usual scanning pattern in reading, which starts from the upper left corner, or it could be influenced by slide characteristics.

A 3 (valence: unpleasant vs. neutral vs. pleasant) x 2 (thematic content: animate vs. inanimate) repeated-measures ANOVA indicated main effects of thematic content, F(1, 64) = 63.98, p < .001, and of valence, F(2, 128) = 3.58, p < .05, and a significant interaction between valence and thematic content, F(2, 128) = 3.59, p < .05.

Post-hoc comparisons showed that first accesses were significantly more frequent on animate left images than on inanimate ones, t(64) > 4.55, p < .001, and more frequent on pleasant images than on neutral ones (p < .05) (Figure 4).
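The first-access results report an overall left-first rate and a paired t test with 64 degrees of freedom, i.e. one observation per subject. The exact input to that test is not stated; the sketch below assumes it compared each subject's counts of left and right first accesses across slides. The function and argument names are hypothetical.

```python
from math import sqrt
from typing import Sequence, Tuple


def first_access_summary(first_sides: Sequence[Sequence[str]]) -> Tuple[float, float, int]:
    """Summarise first accesses across subjects.

    first_sides[s] lists "left" or "right" for the first fixation on each slide
    seen by subject s. Returns (overall proportion of left first accesses,
    paired t comparing each subject's left and right counts, degrees of freedom).
    """
    left = [sum(side == "left" for side in subject) for subject in first_sides]
    right = [sum(side == "right" for side in subject) for subject in first_sides]
    diffs = [lc - rc for lc, rc in zip(left, right)]
    n = len(diffs)
    mean = sum(diffs) / n
    sd = sqrt(sum((d - mean) ** 2 for d in diffs) / (n - 1))
    proportion_left = sum(left) / (sum(left) + sum(right))
    return proportion_left, mean / (sd / sqrt(n)), n - 1
```

When every subject contributes the same number of slides, each subject's left and right counts sum to that number, so this paired test is equivalent to a one-sample t test of each subject's left-first proportion against 0.5.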

Number of transitions - The number of transitions between the left and right pictures within a slide ranged from 1 to 23 (M = 4.52, SD = 0.63). A paired-samples t test showed no significant difference between slides pairing images with the same thematic content and slides pairing images with different thematic content, t(64) = 1.18, ns. Likewise, no significant difference was found between slides pairing two animate pictures and slides pairing two inanimate pictures, t(64) = .16, ns.

Discussion and Conclusions

The results described above illustrate the exploratory behaviour of normal subjects viewing affective or neutral pictures. Different valence categories (neutral, pleasant, unpleasant) and thematic contents (animate, inanimate) were considered.

Our findings showed that the number of fixations and the gaze duration were greater for emotional pictures, both pleasant and unpleasant, than for neutral ones. In addition, animate pictures were fixated longer than inanimate ones.

In particular, neutral inanimate pictures received the lowest number of fixations. Comparing animate and inanimate pictures, valence seems to affect the number of fixations only for the latter category. As far as total gaze duration is concerned, subjects spent significantly less time on neutral inanimate pictures and more time on pleasant animate ones.

In total, 72% of first accesses were made on the left image. Thematic content exerts a strong influence on this dynamic aspect of exploration, whereas the effect of valence is weaker, with only pleasant pictures receiving more first accesses than neutral ones. The number of transitions between the two pictures of a given pair, another dynamic aspect of exploration, does not seem to be related to either valence or thematic content, although it varies considerably across subjects (range 1 to 23).

Therefore, the results of the present study seem to further confirm that neutral pictures attract less attention, as already reported in the literature (Calvo & Lang, 2004, 2005; Nummenmaa, Hyönä, & Calvo, 2006), and suggest that thematic content is an additional significant aspect of visual stimuli able to influence exploratory behaviour.

Differences between animate and inanimate objects have also been found in other experimental paradigms, such as picture naming: subjects seem to be faster (Proverbio, Del Zotto, & Zani, 2007) and more accurate (Thomas & Forde, 2006) in naming animate objects than inanimate ones.

The advantage of animate objects has been explained by their higher within-category similarity compared with inanimate objects (Gerlach, 2001; Lag, 2005; Lag, Hveem, Ruud, & Laeng, 2006).

Laws and Neve (1999) observed higher “intra-item representational variability” in inanimate pictures and greater structural similarity in animate ones.

Data from linguistic and eye movement paradigms suggest that animate and inanimate pictures are processed differently. In addition, a potential relationship between emotional valence and the animate semantic category could be taken into account: Yang, Bellgowan, & Martin (2011) showed stronger amygdala activation for pictures of animals and humans than for inanimate objects, and emotional valence and thematic content could interact to influence the allocation of attention (Yang et al., 2012).

Finally, both emotional and animate pictures may be privileged, adaptively relevant stimuli that facilitate cognitive processing; such stimuli may, for example, increase vigilance in dangerous situations. Faster detection of animate and emotional stimuli could be crucial in an evolutionary context.