Test–Retest Reliability of a Computerized Hand–Eye Coordination Task

Highlights

- This study evaluated the reliability of a computerized protocol (COI-SV) for hand–eye coordination assessment in healthy adults.

- COI-SV is proposed as a practical tool for clinical, sports, and visuomotor research.

- COI-SV shows moderate-to-good reliability in healthy adults.

- Accuracy is stable across sessions, with slight session effects and an examiner effect in the first session.

Abstract

1. Introduction

2. Materials and Methods

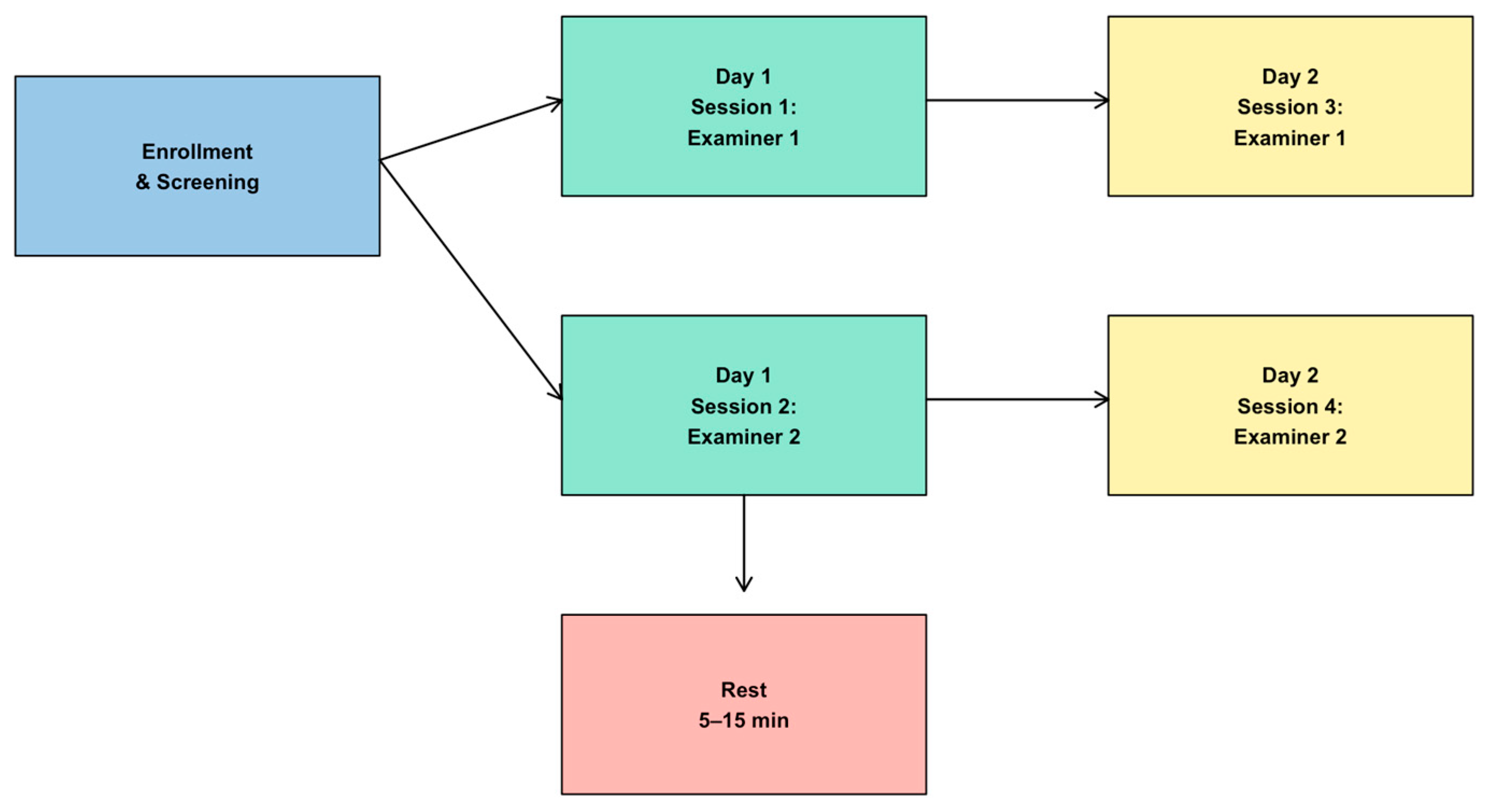

2.1. Study Design

2.2. Participant Recruitment

2.3. Hand–Eye Coordination

- The participant was seated approximately 60–70 cm from the screen.

- The examiner read aloud the standardized instructions: “Red dots will appear randomly anywhere on the screen. You must try to touch the red dot as quickly and accurately as possible. You may use whichever hand you prefer.”

- The participant confirmed understanding of the instructions.

- The test was initiated with the command: “Let’s begin.”
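As a purely illustrative sketch (not COI-SV's actual implementation, which this section does not describe), the task implied by these instructions can be modeled as random on-screen dot placement plus a per-trial record of touch position and response time. The `Trial` record, uniform placement that keeps the dot fully on screen, the radius-based hit rule, and the hit-count summary are all hypothetical assumptions; in particular, how COI-SV converts touches into accuracy points is not specified here.

```python
import math
import random
import statistics
from dataclasses import dataclass


@dataclass
class Trial:
    """Hypothetical trial record: dot position, touch position (pixels), response time (s)."""
    target_x: float
    target_y: float
    touch_x: float
    touch_y: float
    response_time_s: float


def random_target(width: int, height: int, radius: float, rng: random.Random):
    """Place a dot uniformly at random, kept fully on screen (assumed layout rule)."""
    x = rng.uniform(radius, width - radius)
    y = rng.uniform(radius, height - radius)
    return x, y


def is_hit(trial: Trial, radius: float) -> bool:
    """Assumed hit rule: the touch lands within the dot's radius."""
    distance = math.hypot(trial.touch_x - trial.target_x, trial.touch_y - trial.target_y)
    return distance <= radius


def summarize(trials, radius: float):
    """Return (number of hits, mean response time in seconds) for one session."""
    hits = sum(is_hit(t, radius) for t in trials)
    mean_rt = statistics.mean(t.response_time_s for t in trials)
    return hits, mean_rt
```

Keeping placement, hit detection, and summarizing in separate functions makes it easy to swap in a different (for example, the instrument's real) scoring rule without touching the rest.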

2.4. Test and Re-Test Methodology

2.5. Statistical Analysis

3. Results

3.1. Participants

3.2. Influence of Age and Sex

3.3. Reproducibility and Session Effects

3.4. Reproducibility and Reliability Analysis

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Comparison | Measure | Bias (mean difference) | 95% limits of agreement | ICC (95% CI) |
|---|---|---|---|---|
| Inter-session (Day 1 vs. Day 2) | Accuracy | +1.31 points | −5.39 to 8.00 points | 0.70 (0.52–0.82) |
| | Response time | −0.01 s | −0.13 to 0.11 s | |
| Inter-examiner (Day 1) | Accuracy | +1.33 points | −8.54 to 11.20 points | 0.51 (0.28–0.68) |
| | Response time | −0.01 s | −0.12 to 0.11 s | |
| Inter-examiner (Day 2) | Accuracy | +0.61 points | −6.98 to 8.20 points | 0.71 (0.55–0.82) |
| | Response time | −0.001 s | −0.09 to 0.08 s | |
| Intra-examiner (Examiner 1) | Accuracy | +1.67 points | −8.49 to 11.82 points | — |
| Intra-examiner (Examiner 2) | Accuracy | +0.94 points | −8.20 to 10.09 points | — |
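The bias and limits of agreement in the table follow the usual Bland–Altman construction: the mean of the paired differences, ± 1.96 times the standard deviation of those differences. The minimal Python sketch below computes both, plus a single-measure ICC. Assumptions: differences are taken as first minus second measurement; ICC(2,1) (two-way random effects, absolute agreement, Shrout and Fleiss) is used only for illustration, because the ICC model behind the reported values is not stated in this section; confidence intervals are not computed.

```python
import statistics
from typing import Sequence


def bland_altman(first: Sequence[float], second: Sequence[float], z: float = 1.96):
    """Return (bias, lower LoA, upper LoA) for paired measurements.

    Differences are first - second (an assumed sign convention), so a
    positive bias means the first measurement was higher on average.
    """
    if len(first) != len(second) or len(first) < 2:
        raise ValueError("need at least two paired measurements")
    diffs = [a - b for a, b in zip(first, second)]
    bias = statistics.mean(diffs)
    sd = statistics.stdev(diffs)  # sample SD (n - 1 denominator)
    return bias, bias - z * sd, bias + z * sd


def icc_2_1(scores: Sequence[Sequence[float]]) -> float:
    """ICC(2,1): two-way random effects, absolute agreement, single measure.

    scores[i][j] is participant i's score under condition j (session or examiner).
    """
    n, k = len(scores), len(scores[0])
    if n < 2 or k < 2:
        raise ValueError("need at least two participants and two conditions")
    grand = sum(sum(row) for row in scores) / (n * k)
    row_means = [sum(row) / k for row in scores]
    col_means = [sum(row[j] for row in scores) / n for j in range(k)]
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_error = ss_total - ss_rows - ss_cols
    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )


def sd_from_loa(lower: float, upper: float, z: float = 1.96) -> float:
    """Recover the SD of the differences from reported limits of agreement."""
    return (upper - lower) / (2 * z)


if __name__ == "__main__":
    # Day 1 vs. Day 2 accuracy limits of agreement reported in the table
    print(round(sd_from_loa(-5.39, 8.00), 2))  # → 3.42
```

Working backwards from a published interval is a quick consistency check: the Day 1 vs. Day 2 accuracy limits imply an SD of the differences of about 3.42 points, and their midpoint (about 1.31 points) matches the reported bias.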

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ríder-Vázquez, A.; Gutiérrez-Sánchez, E.; Martinez-Perez, C.; Sánchez-González, M.C. Test–Retest Reliability of a Computerized Hand–Eye Coordination Task. J. Eye Mov. Res. 2025, 18, 54. https://doi.org/10.3390/jemr18050054
