Recognition and Misclassification Patterns of Basic Emotional Facial Expressions: An Eye-Tracking Study in Young Healthy Adults

Highlights

- An experimental eye-tracking study (N = 50) was conducted, analyzing both accurate and inaccurate recognition.

- Fear and sadness are often misclassified, mainly as disgust.

- Disgust is consistently misclassified as anger across participants.

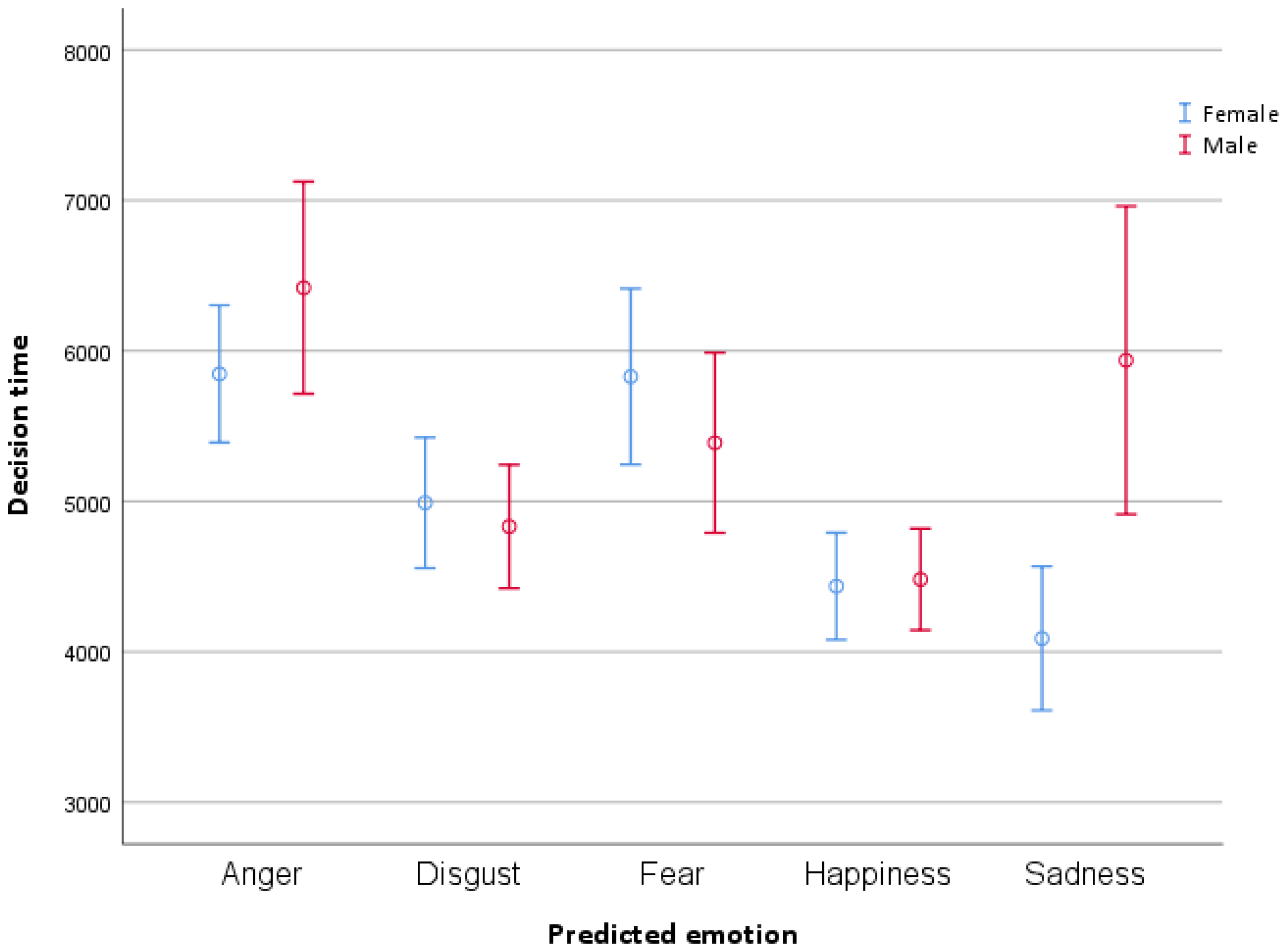

- Happiness is recognized fastest and most accurately; anger takes the longest to recognize.

- Female participants respond faster; the gender of the poser shows no effect.

Abstract

1. Introduction

Research Questions

2. Materials and Methods

2.1. Participants

2.2. Materials and Apparatus

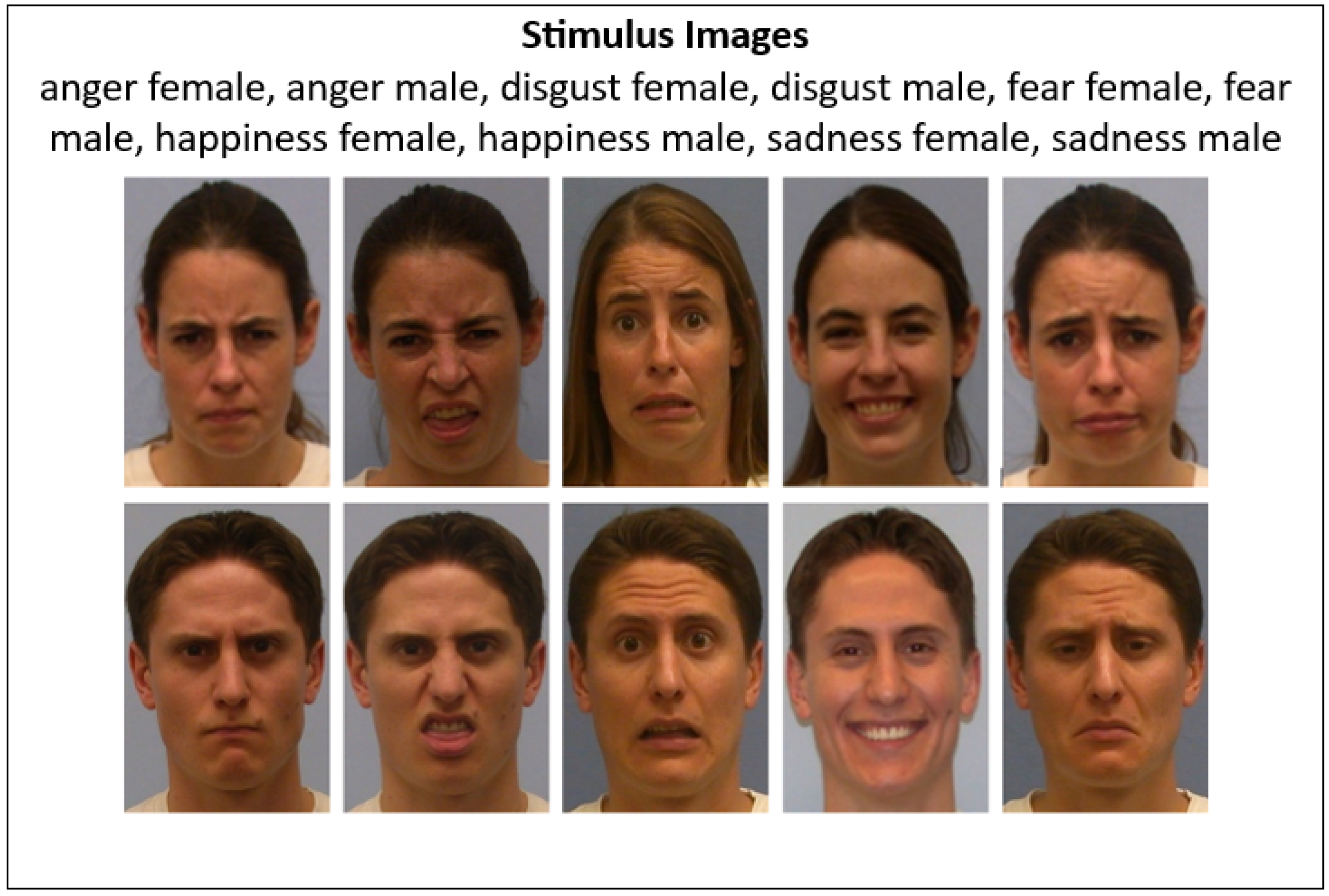

2.3. Stimuli

2.4. Procedure

2.5. Design

2.6. Data Preparation

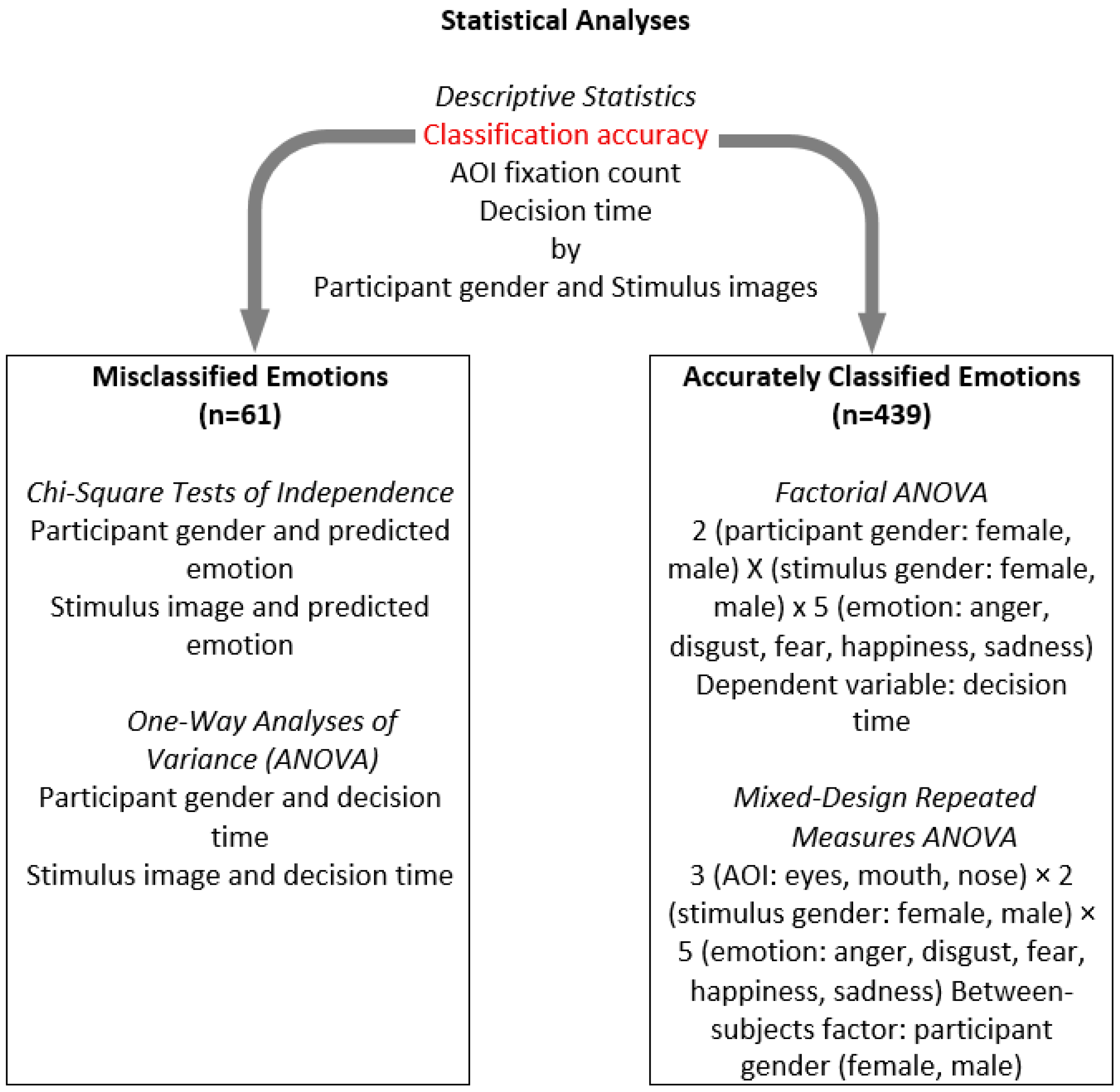

2.7. Statistical Analysis

3. Results

3.1. Descriptive Statistics

3.2. Patterns in Inaccurate Facial Emotion Recognition

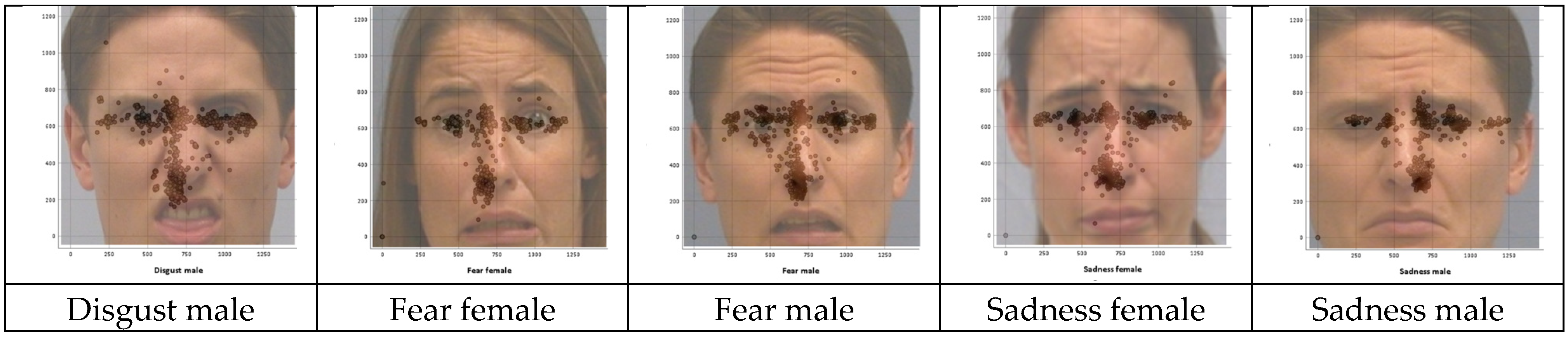

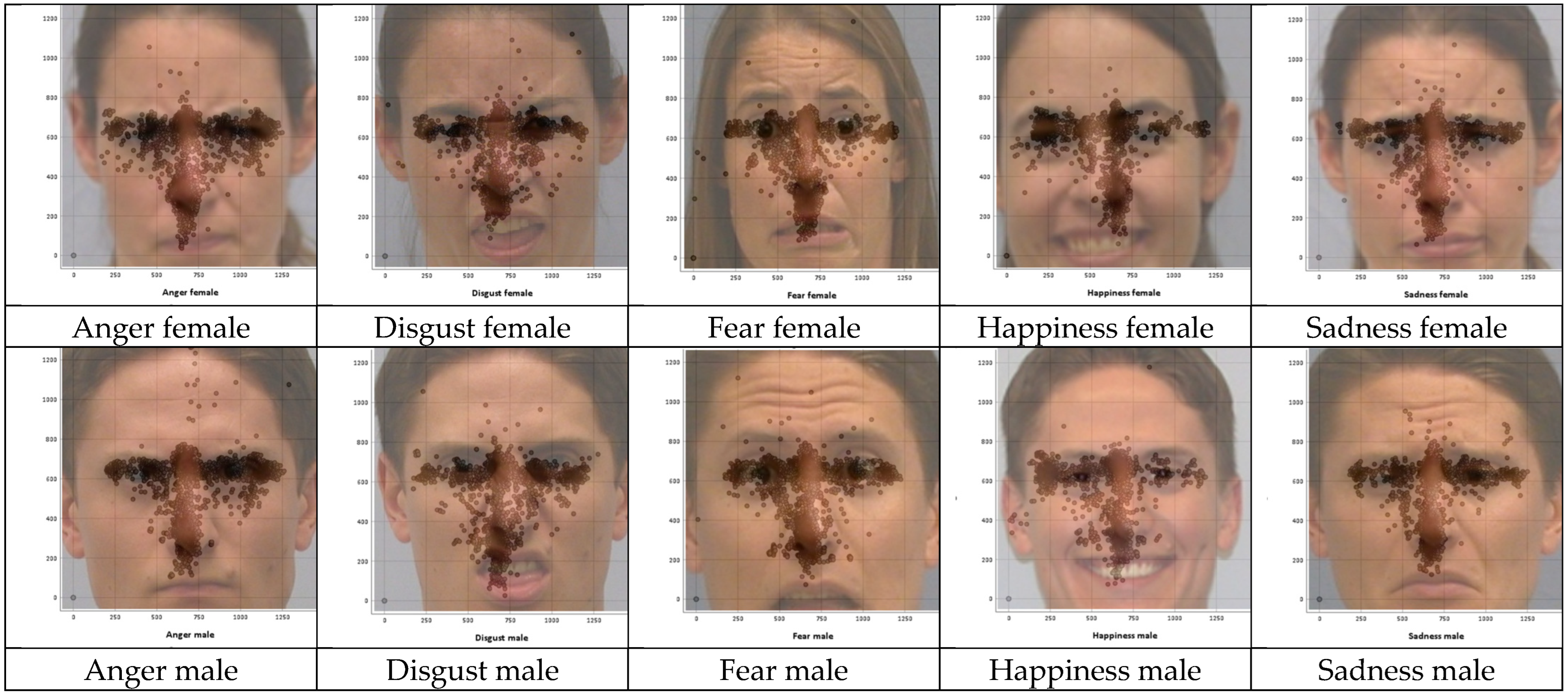

Fixation Distributions for the Misclassified Stimulus Images

3.3. Patterns in Accurate Facial Emotion Recognition

3.3.1. Decision Time

3.3.2. AOI Fixation Count

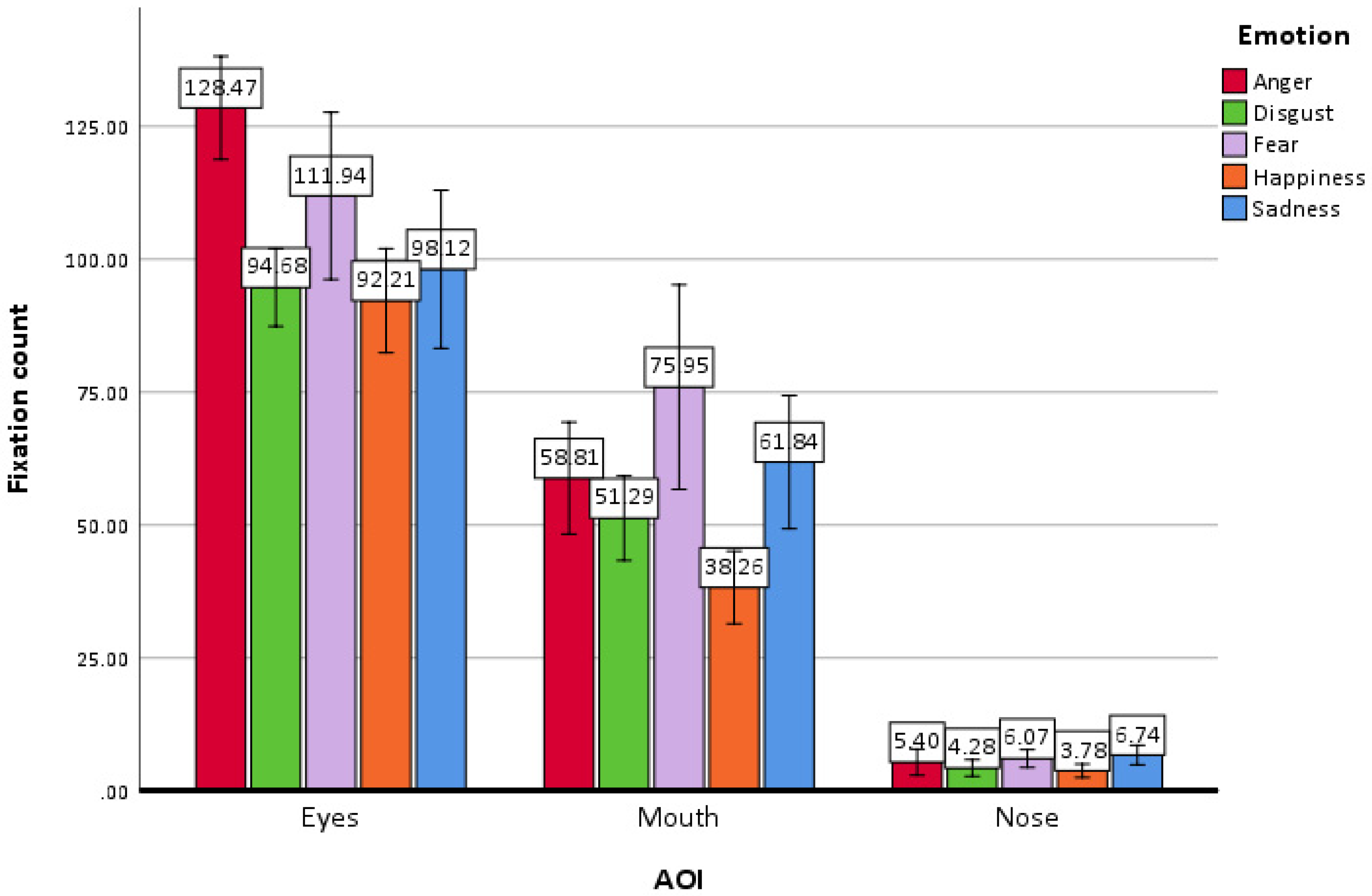

Main Effects

Interactions

Fixation Distributions for the Stimulus Images

3.4. Comparison of AOI Fixation Distributions for Misclassified Stimulus Images

4. Discussion

4.1. Inaccurate Recognition

4.2. Accurate Recognition

4.3. Eye-Tracking Patterns and AOI-Specific Fixation Trends

4.3.1. Limitations and Future Directions

4.3.2. Implications

5. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Stimulus Images | Eyes (y) | Nose (y) | Mouth Start (y) | Mouth End (y) |
|---|---|---|---|---|
| Anger Female | 239 | 275 | 315 | 353 |
| Anger Male | 258 | 297 | 337 | 379 |
| Disgust Female | 247 | 284 | 317 | 366 |
| Disgust Male | 216 | 250 | 286 | 334 |
| Fear Female | 213 | 248 | 269 | 358 |
| Fear Male | 217 | 277 | 312 | 373 |
| Happiness Male | 228 | 275 | 315 | 370 |
| Happiness Female | 241 | 286 | 316 | 359 |
| Sadness Female | 252 | 294 | 336 | 373 |
| Sadness Male | 258 | 301 | 343 | 391 |
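As a reading aid, the vertical extent of each mouth AOI can be derived from the coordinates above. The sketch below assumes that "Mouth Start (y)" and "Mouth End (y)" are the upper and lower bounds of the mouth region in image coordinates; the table does not state the unit, and the names `AOI_Y` and `mouth_height` are illustrative only.

```python
# y-coordinates copied from the table above:
# (eyes, nose, mouth_start, mouth_end) per stimulus image.
AOI_Y = {
    "Anger Female": (239, 275, 315, 353),
    "Anger Male": (258, 297, 337, 379),
    "Disgust Female": (247, 284, 317, 366),
    "Disgust Male": (216, 250, 286, 334),
    "Fear Female": (213, 248, 269, 358),
    "Fear Male": (217, 277, 312, 373),
    "Happiness Male": (228, 275, 315, 370),
    "Happiness Female": (241, 286, 316, 359),
    "Sadness Female": (252, 294, 336, 373),
    "Sadness Male": (258, 301, 343, 391),
}


def mouth_height(stimulus: str) -> int:
    """Vertical extent of the mouth AOI (assumed: end minus start)."""
    _, _, start, end = AOI_Y[stimulus]
    return end - start


heights = {name: mouth_height(name) for name in AOI_Y}
tallest = max(heights, key=heights.get)
print(tallest, heights[tallest])  # Fear Female 89
```

Under this reading, the mouth region is tallest for the female fear image and shortest for the female sadness image (37), so the mouth AOIs differ considerably in size across stimuli.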

| Stimulus image | Eyes fixation count, Female: M (SD) | Eyes fixation count, Male: M (SD) | Eyes fixation count, Total: M (SD) | Mouth fixation count, Female: M (SD) | Mouth fixation count, Male: M (SD) | Mouth fixation count, Total: M (SD) | Nose fixation count, Female: M (SD) | Nose fixation count, Male: M (SD) | Nose fixation count, Total: M (SD) | Classification accuracy, Female: M (SD) | Classification accuracy, Male: M (SD) | Classification accuracy, Total: M (SD) | Decision time (s), Female: M (SD) | Decision time (s), Male: M (SD) | Decision time (s), Total: M (SD) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Anger male | 108.71 (38.2) | 155.36 (65.83) | 134.07 (59.26) | 38.81 (19.90) | 59.92 (38.7) | 50.28 (32.98) | 5.33 (11.54) | 6.68 (6.45) | 6.07 (9.05) | 0.95 (0.22) | 0.84 (0.37) | 0.89 (0.31) | 5.18 (1.51) | 6.59 (2.38) | 5.94 (2.13) |
| Anger female | 127.86 (38.9) | 135.92 (41.37) | 132.24 (40.03) | 65.33 (59.53) | 70.52 (65.30) | 68.15 (62.1) | 4.95 (14.54) | 4.92 (5.46) | 4.93 (10.48) | 1 (0.00) | 0.88 (0.33) | 0.93 (0.25) | 6.68 (2.08) | 6.77 (2.72) | 6.73 (2.42) |
| Disgust male | 99.71 (48.34) | 124.36 (120.37) | 113.11 (94.45) | 60.05 (48.48) | 66.92 (80.68) | 63.78 (67.29) | 4.33 (6.26) | 6.2 (10.87) | 5.35 (9.02) | 0.71 (0.46) | 0.84 (0.37) | 0.78 (0.42) | 5.69 (2.75) | 5.64 (2.11) | 5.67 (2.4) |
| Disgust female | 85.71 (34.36) | 103.08 (46.22) | 95.15 (41.72) | 42.52 (27.46) | 53.16 (54.15) | 48.3 (43.9) | 3.67 (4.84) | 4.48 (7.93) | 4.11 (6.64) | 0.95 (0.22) | 1 (0.00) | 0.98 (0.15) | 4.56 (1.49) | 4.99 (2.22) | 4.79 (1.91) |
| Fear male | 101.76 (45.36) | 140.36 (96.6) | 122.74 (79.18) | 56.19 (33.35) | 102.04 (118.12) | 81.11 (92.02) | 3.29 (2.94) | 8.48 (9.43) | 6.11 (7.62) | 0.67 (0.48) | 0.80 (0.41) | 0.74 (0.44) | 5.44 (2.22) | 6.87 (3.33) | 6.22 (2.94) |
| Fear female | 110.67 (57.36) | 101.88 (103.26) | 105.89 (84.67) | 70.86 (32.49) | 76.8 (93.90) | 74.09 (71.98) | 5.71 (11.1) | 4.76 (4.75) | 5.2 (8.19) | 0.86 (0.36) | 0.80 (0.41) | 0.83 (0.38) | 6.2 (2.07) | 5.51 (2.19) | 5.82 (2.14) |
| Happiness male | 89.76 (41.12) | 90.92 (25.2) | 90.39 (33.02) | 41.71 (37.38) | 38.64 (46.31) | 40.04 (42.04) | 3.24 (4.3) | 4.28 (4.73) | 3.8 (4.52) | 1 (0.00) | 0.96 (0.20) | 0.98 (0.15) | 4.58 (1.61) | 4.34 (1.06) | 4.45 (1.33) |
| Happiness female | 80.52 (36) | 106.84 (59.82) | 94.83 (51.57) | 40.67 (29.33) | 40.84 (21.73) | 40.76 (25.19) | 3.14 (6.07) | 4.28 (5.34) | 3.76 (5.65) | 1 (0.00) | 1 (0.00) | 1 (0.00) | 4.05 (0.87) | 4.81 (1.51) | 4.47 (1.3) |
| Sadness male | 70.29 (31.52) | 130 (124.23) | 102.74 (97.86) | 44.71 (20.38) | 82.04 (78.87) | 65 (62.09) | 5.67 (6.51) | 7.76 (8.54) | 6.8 (7.67) | 0.90 (0.30) | 0.84 (0.37) | 0.87 (0.34) | 4.19 (1.86) | 6.44 (3.22) | 5.41 (2.89) |
| Sadness female | 72.67 (38.42) | 119.36 (73.07) | 98.04 (63.69) | 50.57 (33.55) | 76.44 (93.15) | 64.63 (72.78) | 6.05 (9.64) | 6.24 (8.35) | 6.15 (8.86) | 0.76 (0.44) | 0.76 (0.44) | 0.76 (0.43) | 4.42 (2.12) | 6.28 (4.39) | 5.43 (3.63) |
| Total | 94.77 (44.25) | 120.81 (83.00) | 108.92 (69.26) | 51.14 (36.9) | 66.73 (75.41) | 59.62 (61.37) | 4.54 (8.43) | 5.81 (7.47) | 5.23 (7.94) | 0.88 (0.32) | 0.87 (0.33) | 0.88 (0.33) | 5.10 (2.06) | 5.82 (2.76) | 5.49 (2.49) |

| Emotion | M | SE | 95% CI (LL–UL) |
|---|---|---|---|
| Anger | 6132.97 | 190.40 | (5758.68–6507.25) |
| Disgust | 4935.17 | 193.92 | (4554.01–5316.39) |
| Fear | 5597.78 | 205.77 | (5193.28–6002.28) |
| Happiness | 4453.60 | 182.61 | (4094.63–4812.57) |
| Sadness | 5019.03 | 204.69 | (4616.66–5421.39) |
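The interval widths in the table above can be checked against the reported standard errors. The following sketch divides each half-width by its SE; if the intervals were constructed as M ± c·SE (an assumption, since the construction is not stated alongside the table), this ratio recovers the critical value c. The names `ROWS` and `implied_multiplier` are illustrative and not part of the study's analysis.

```python
# Values copied from the table above: (M, SE, lower limit, upper limit).
ROWS = {
    "Anger": (6132.97, 190.40, 5758.68, 6507.25),
    "Disgust": (4935.17, 193.92, 4554.01, 5316.39),
    "Fear": (5597.78, 205.77, 5193.28, 6002.28),
    "Happiness": (4453.60, 182.61, 4094.63, 4812.57),
    "Sadness": (5019.03, 204.69, 4616.66, 5421.39),
}


def implied_multiplier(se: float, ll: float, ul: float) -> float:
    """Half-width of the interval expressed in units of the standard error."""
    return (ul - ll) / 2 / se


multipliers = {
    emotion: implied_multiplier(se, ll, ul)
    for emotion, (_, se, ll, ul) in ROWS.items()
}

for emotion, c in multipliers.items():
    print(f"{emotion}: c = {c:.3f}")
```

All five ratios come out at about 1.966, slightly above the normal-theory value of 1.96, which would be consistent with a t-based interval on a few hundred degrees of freedom.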

| Emotion | Participant Gender | M | SE | 95% CI (LL–UL) |
|---|---|---|---|---|
| Anger | Female | 5846.44 | 266.26 | (5323.03–6369.84) |
| | Male | 6419.50 | 272.25 | (5884.33–6954.67) |
| Disgust | Female | 5022.77 | 284.15 | (4464.20–5581.34) |
| | Male | 4847.62 | 263.95 | (4328.76–5366.49) |
| Fear | Female | 5800.52 | 299.64 | (5211.51–6389.52) |
| | Male | 5395.06 | 282.12 | (4840.48–5949.61) |
| Happiness | Female | 4432.24 | 263.48 | (3914.31–4950.16) |
| | Male | 4474.97 | 252.92 | (3977.78–4972.14) |
| Sadness | Female | 4100.95 | 293.36 | (3524.28–4677.62) |
| | Male | 5937.10 | 285.53 | (5375.81–6498.39) |

| Emotion 1 | Emotion 2 | M Difference | SE Difference | 95% CI (LL, UL) | p |
|---|---|---|---|---|---|
| Anger (64.23, 2.54) | Disgust | 14.14 | 2.90 | (5.60, 22.68) | <0.001 |
| | Fear | −0.43 | 5.88 | (−17.74, 16.87) | 1.000 |
| | Happiness | 19.47 | 2.60 | (11.83, 27.12) | <0.001 |
| | Sadness | 8.66 | 4.83 | (−5.57, 22.88) | 0.796 |
| Disgust (50.08, 1.96) | Anger | −14.14 | 2.90 | (−22.68, −5.60) | <0.001 |
| | Fear | −14.57 | 4.70 | (−28.40, −0.75) | 0.032 |
| | Happiness | 5.33 | 1.77 | (0.12, 10.54) | 0.042 |
| | Sadness | −5.48 | 3.10 | (−14.59, 3.62) | 0.828 |
| Fear (64.65, 5.68) | Anger | 0.43 | 5.88 | (−16.87, 17.74) | 1.000 |
| | Disgust | 14.57 | 4.70 | (0.75, 28.40) | 0.032 |
| | Happiness | 19.90 | 5.47 | (3.82, 35.99) | 0.007 |
| | Sadness | 9.09 | 4.83 | (−5.13, 23.31) | 0.660 |
| Happiness (44.75, 1.58) | Anger | −19.47 | 2.60 | (−27.12, −11.83) | <0.001 |
| | Disgust | −5.33 | 1.77 | (−10.54, −0.12) | 0.042 |
| | Fear | −19.90 | 5.47 | (−35.99, −3.82) | 0.007 |
| | Sadness | −10.82 | 3.58 | (−21.35, −0.28) | 0.040 |
| Sadness (55.57, 4.15) | Anger | −8.66 | 4.83 | (−22.88, 5.57) | 0.796 |
| | Disgust | 5.48 | 3.10 | (−3.62, 14.59) | 0.828 |
| | Fear | −9.09 | 4.83 | (−23.31, 5.13) | 0.660 |
| | Happiness | 10.82 | 3.58 | (0.28, 21.35) | 0.040 |

| Emotion | Eyes M | Eyes SE | Eyes 95% CI | Mouth M | Mouth SE | Mouth 95% CI | Nose M | Nose SE | Nose 95% CI |
|---|---|---|---|---|---|---|---|---|---|
| Anger | 128.47 | 4.82 | (118.78–138.15) | 58.81 | 5.24 | (48.28–69.34) | 5.40 | 1.23 | (2.93–7.86) |
| Disgust | 94.68 | 3.64 | (87.37–101.99) | 51.29 | 3.96 | (43.33–59.25) | 4.28 | 0.78 | (2.72–5.84) |
| Fear | 111.94 | 7.82 | (96.22–127.66) | 75.95 | 9.57 | (56.70–95.20) | 6.07 | 0.83 | (4.40–7.75) |
| Happiness | 92.21 | 4.87 | (82.42–102.00) | 38.26 | 3.39 | (31.44–45.08) | 3.78 | 0.63 | (2.53–5.04) |
| Sadness | 98.12 | 7.39 | (83.25–112.98) | 61.84 | 6.23 | (49.31–74.37) | 6.74 | 0.92 | (4.89–8.59) |
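The per-AOI means above can be related to the first value in parentheses next to each emotion in the pairwise-comparison table (64.23 for anger, 50.08 for disgust, and so on). The sketch below tests one possible reading, namely that each such value is the unweighted average of the three AOI means for that emotion. This is an inference from the numbers, not a statement of how the analysis was run, and the names `AOI_MEANS` and `PAIRWISE_MEANS` are illustrative.

```python
# Mean fixation counts per AOI, copied from the table above: (eyes, mouth, nose).
AOI_MEANS = {
    "Anger": (128.47, 58.81, 5.40),
    "Disgust": (94.68, 51.29, 4.28),
    "Fear": (111.94, 75.95, 6.07),
    "Happiness": (92.21, 38.26, 3.78),
    "Sadness": (98.12, 61.84, 6.74),
}

# First value in parentheses after each emotion in the pairwise-comparison table.
PAIRWISE_MEANS = {
    "Anger": 64.23,
    "Disgust": 50.08,
    "Fear": 64.65,
    "Happiness": 44.75,
    "Sadness": 55.57,
}

# Unweighted average across the three AOIs for each emotion.
marginal = {emotion: sum(m) / len(m) for emotion, m in AOI_MEANS.items()}

for emotion, value in marginal.items():
    reported = PAIRWISE_MEANS[emotion]
    matches = abs(value - reported) < 0.01
    print(f"{emotion}: averaged {value:.2f}, reported {reported:.2f}, match={matches}")
```

All five emotions match to two decimals, which supports reading the pairwise comparisons as contrasts between emotion-level fixation counts averaged over the eyes, mouth, and nose AOIs.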

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Alkan, N. Recognition and Misclassification Patterns of Basic Emotional Facial Expressions: An Eye-Tracking Study in Young Healthy Adults. J. Eye Mov. Res. 2025, 18, 53. https://doi.org/10.3390/jemr18050053
