Investigating Non-Visual Eye Movements Non-Intrusively: Comparing Manual and Automatic Annotation Styles

Abstract

Introduction

Experimental Methods

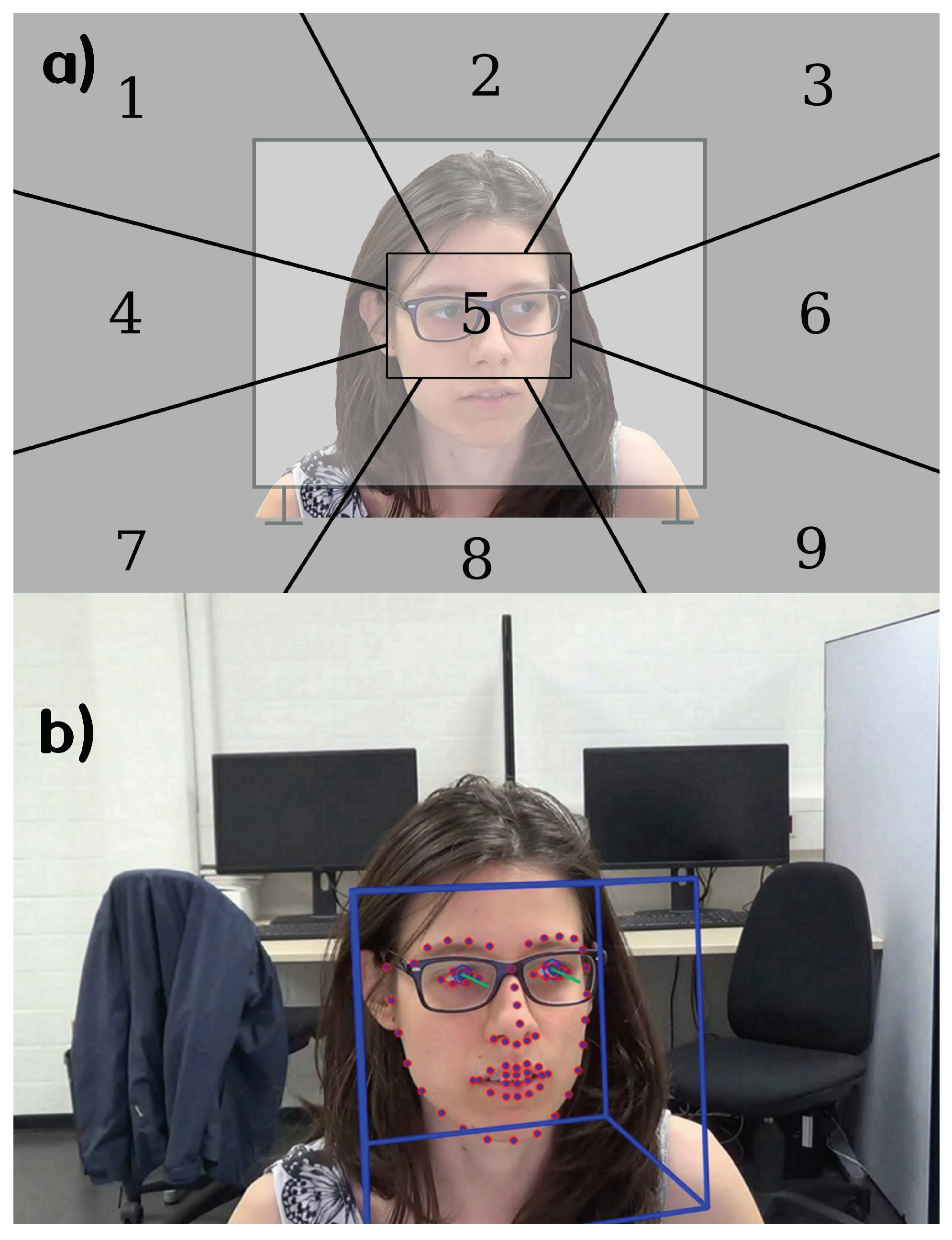

Manual Annotation with Coding Grid

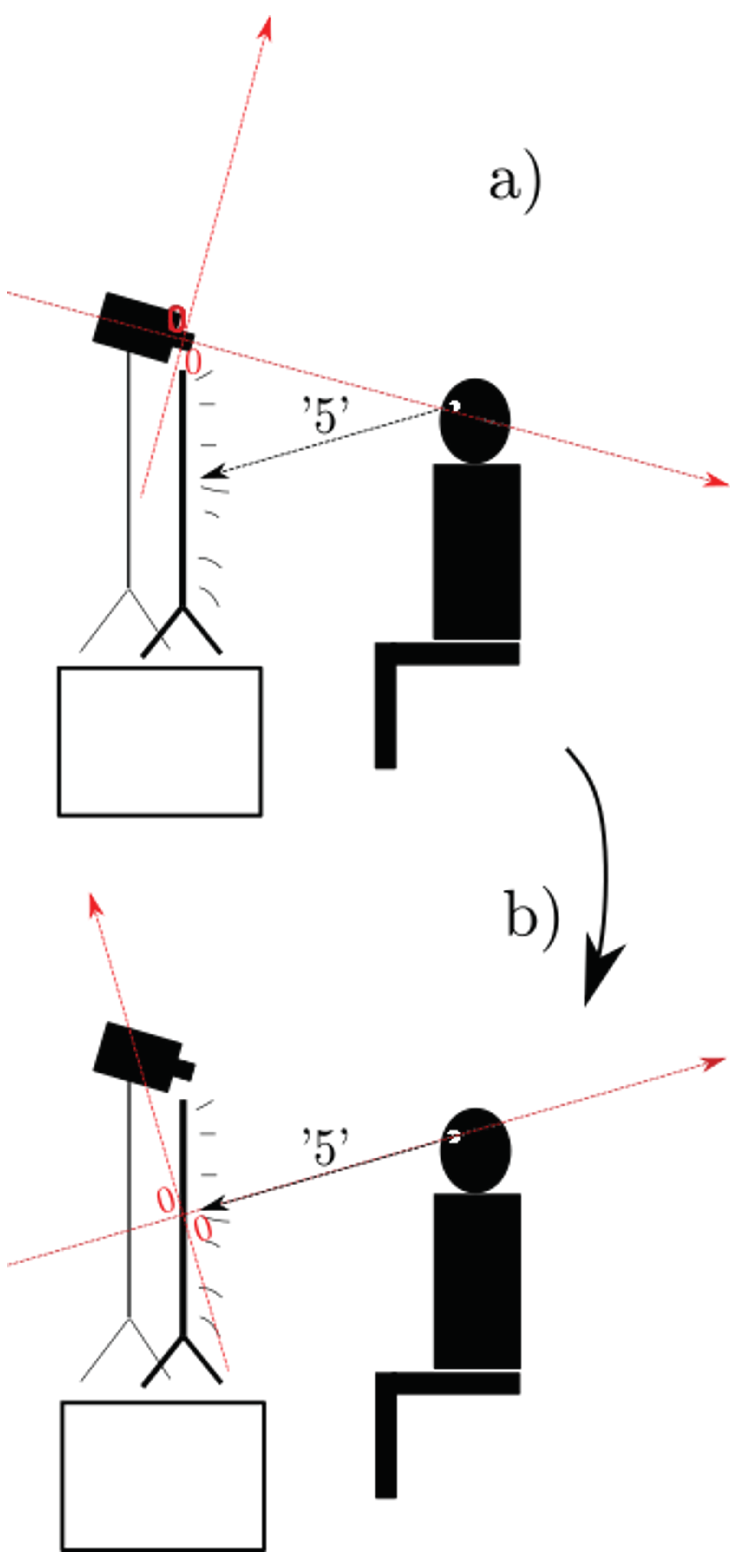

Automatic Annotation with OpenFace

Results

Intra-class Correlation Coefficient of Absolute Agreement

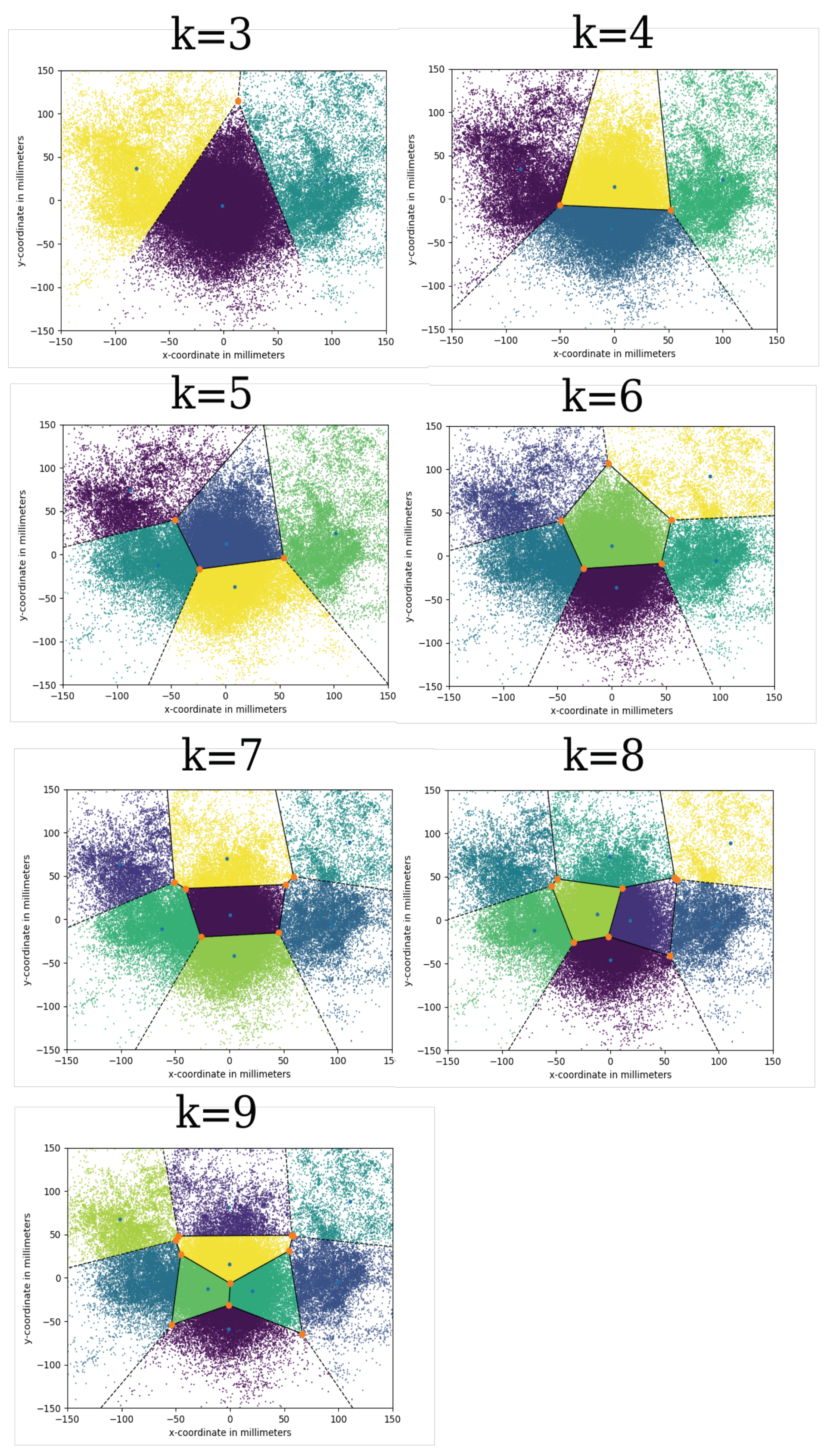

Reconstructing the Coding Grid from OpenFace

Discussion

Ethics and Conflict of Interest

Acknowledgments

Appendix A

Appendix 1: Experimental Method

Participants

Design

Materials

Procedure

Appendix 2: Figure 6

Appendix 3: Figure 7


Copyright © 2022. This article is licensed under a Creative Commons Attribution 4.0 International License.

Share and Cite

Stüber, J.; Junctorius, L.; Hohenberger, A. Investigating Non-Visual Eye Movements Non-Intrusively: Comparing Manual and Automatic Annotation Styles. J. Eye Mov. Res. 2022, 15, 1-14. https://doi.org/10.16910/jemr.15.2.1
