Performance Evaluation of Monocular Markerless Pose Estimation Systems for Industrial Exoskeletons

Abstract

1. Introduction

2. Experimental Method

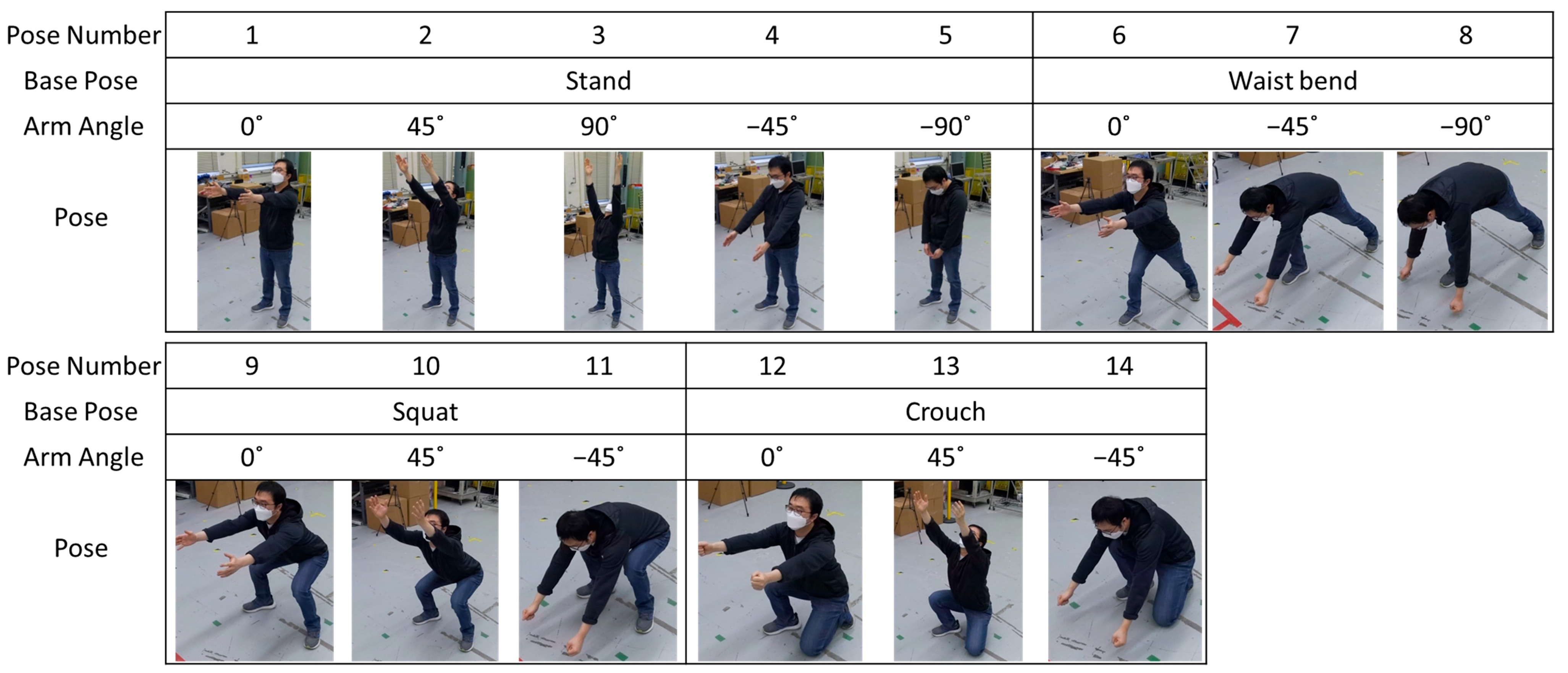

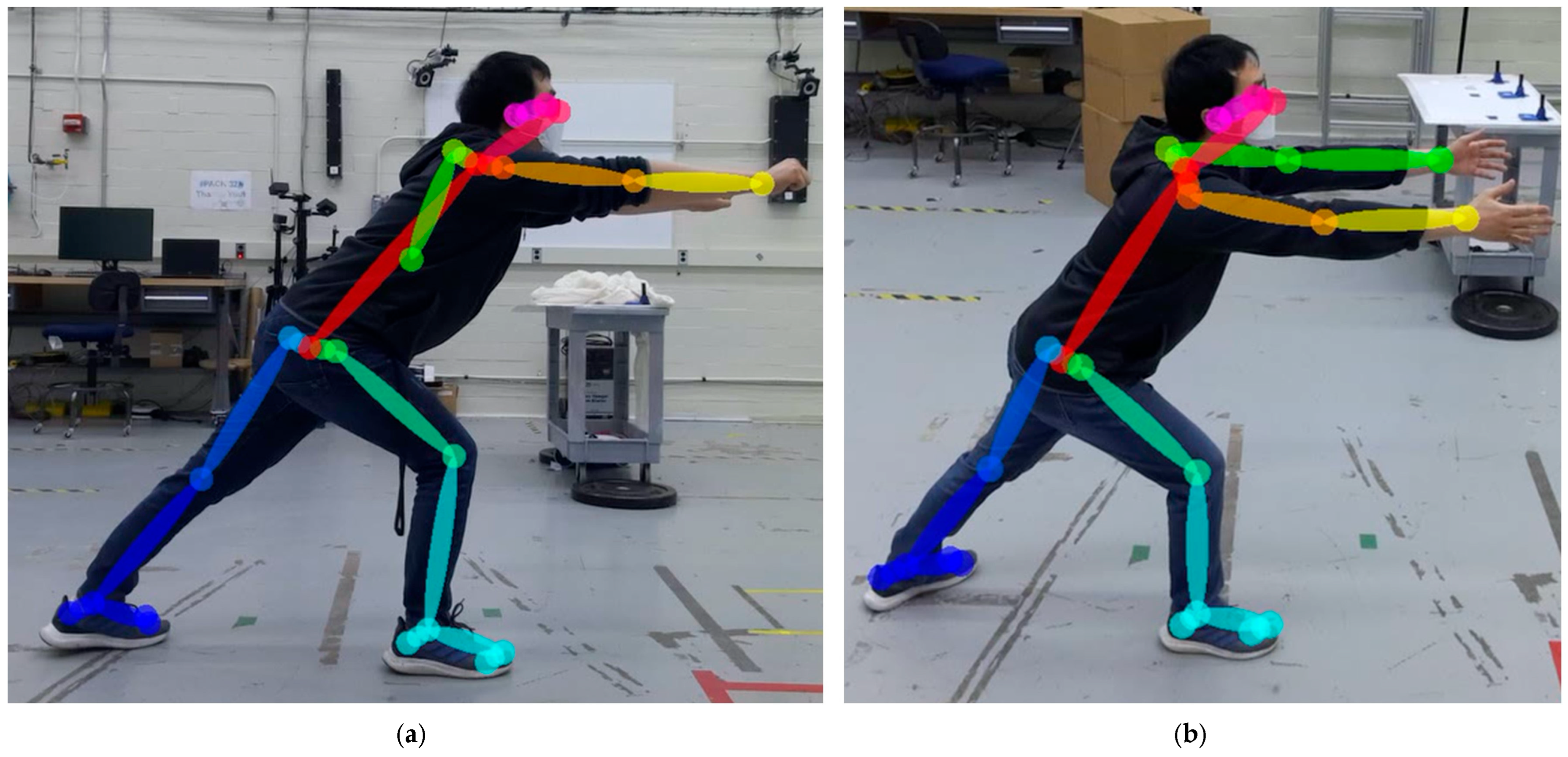

2.1. Poses

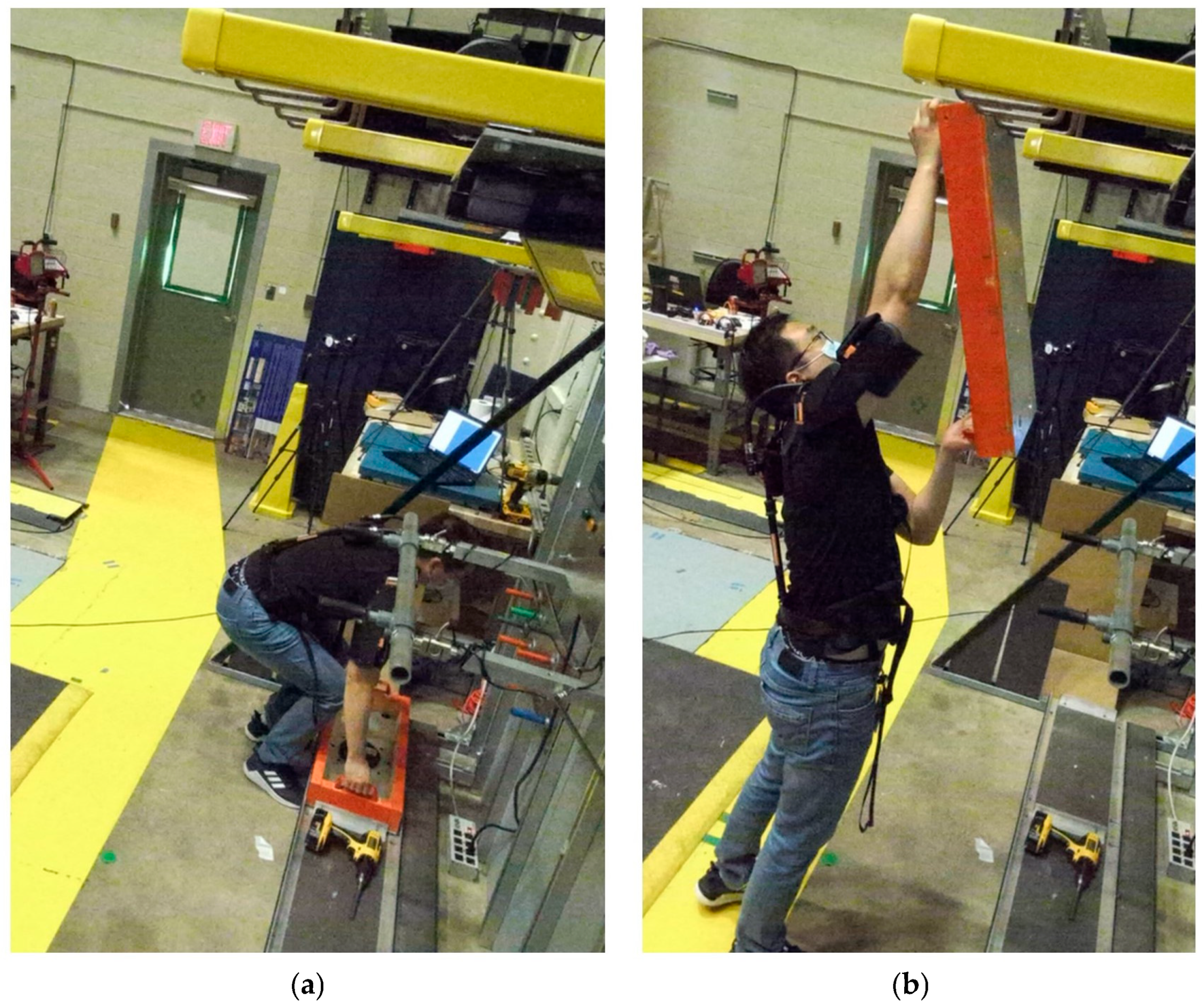

2.2. Types of Exoskeletons

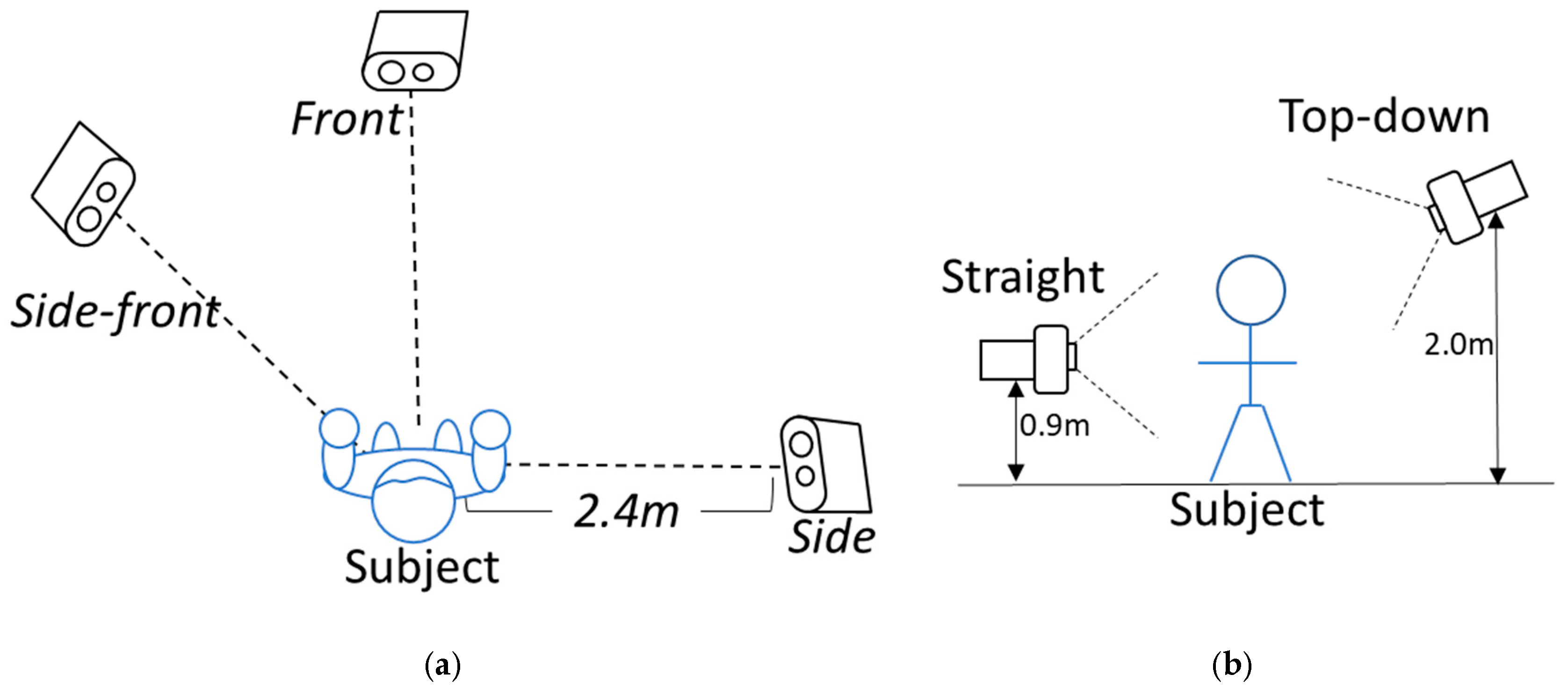

2.3. Sensor and Image Capture

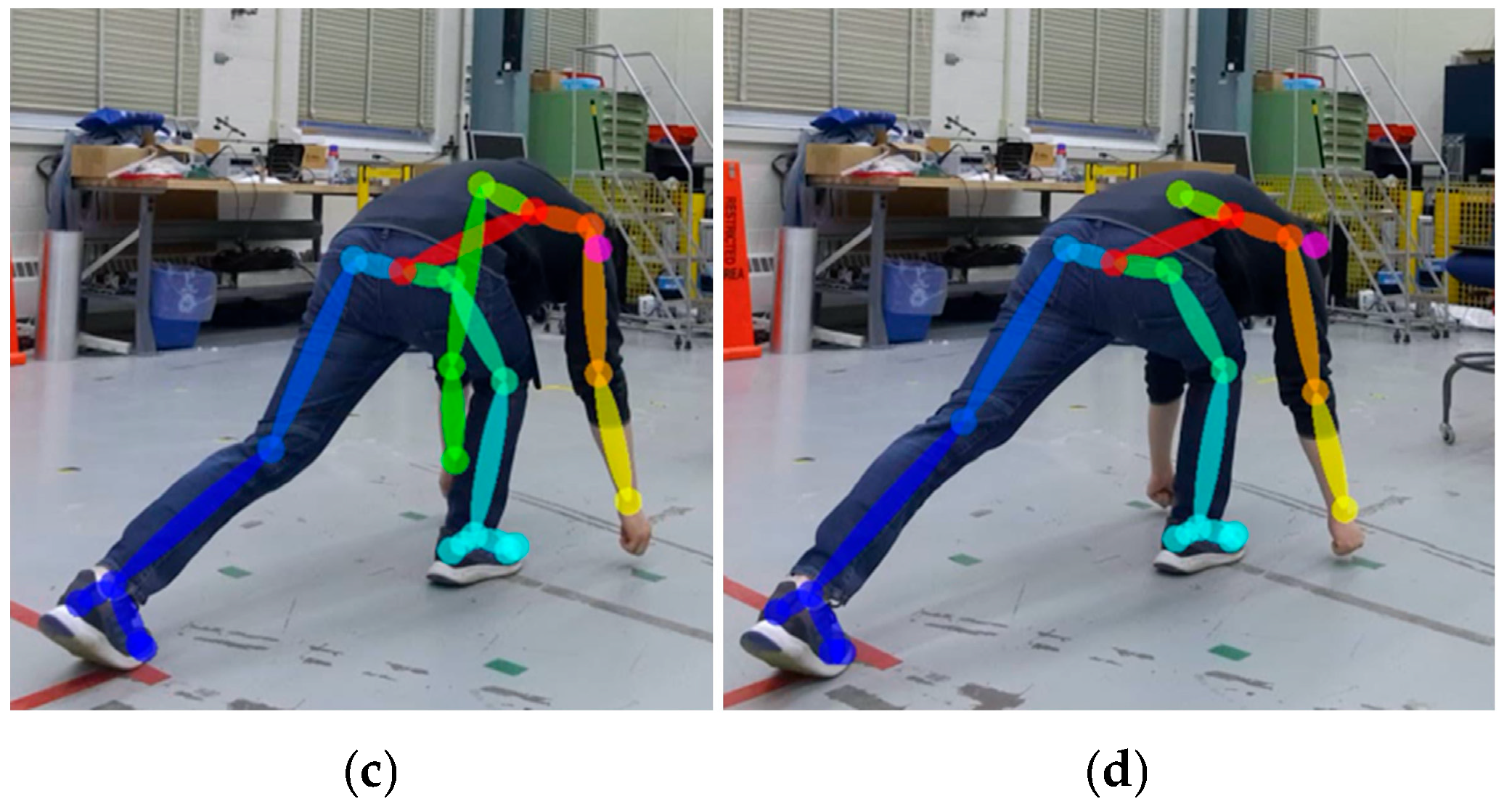

2.4. Pose Estimation Evaluation

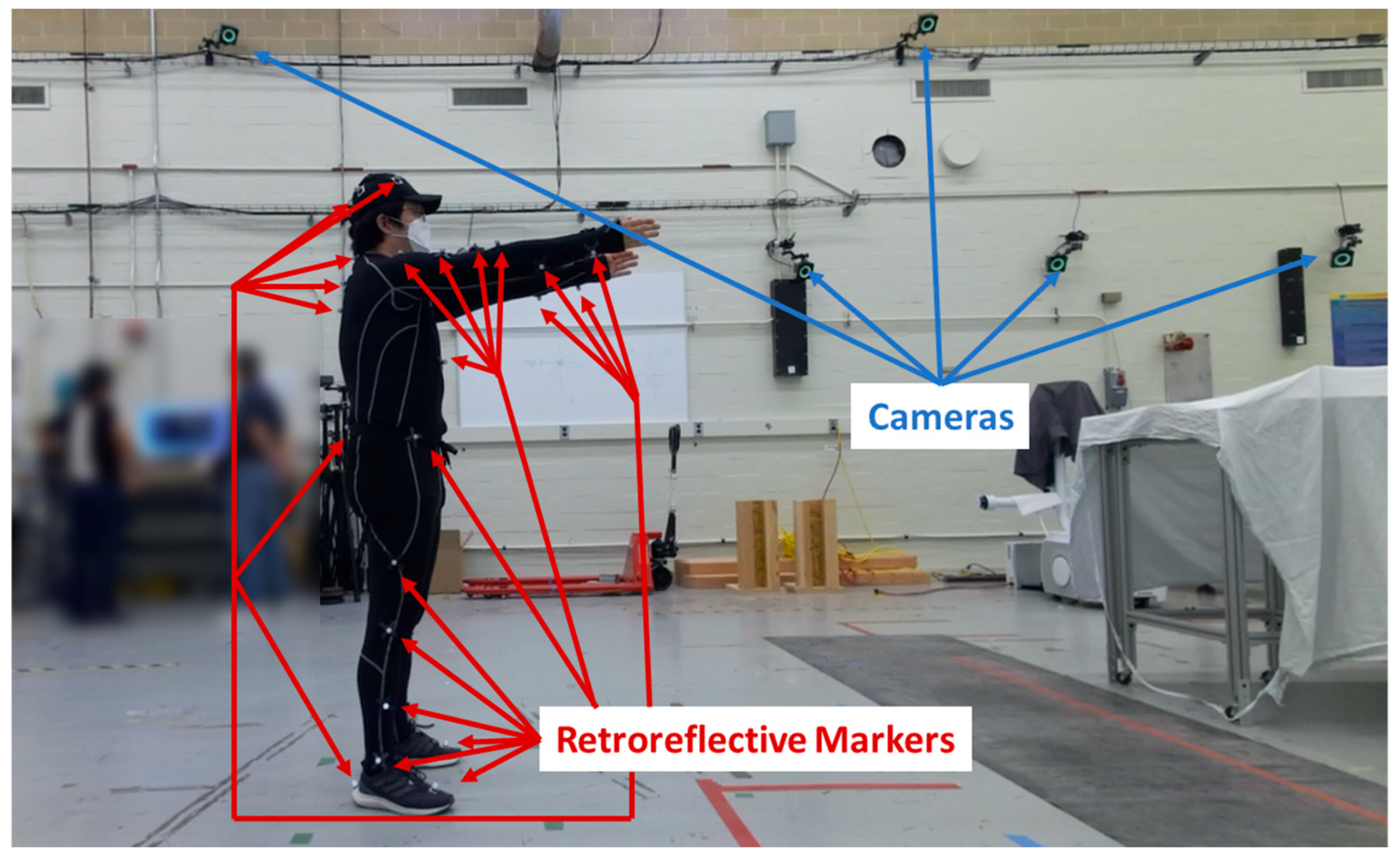

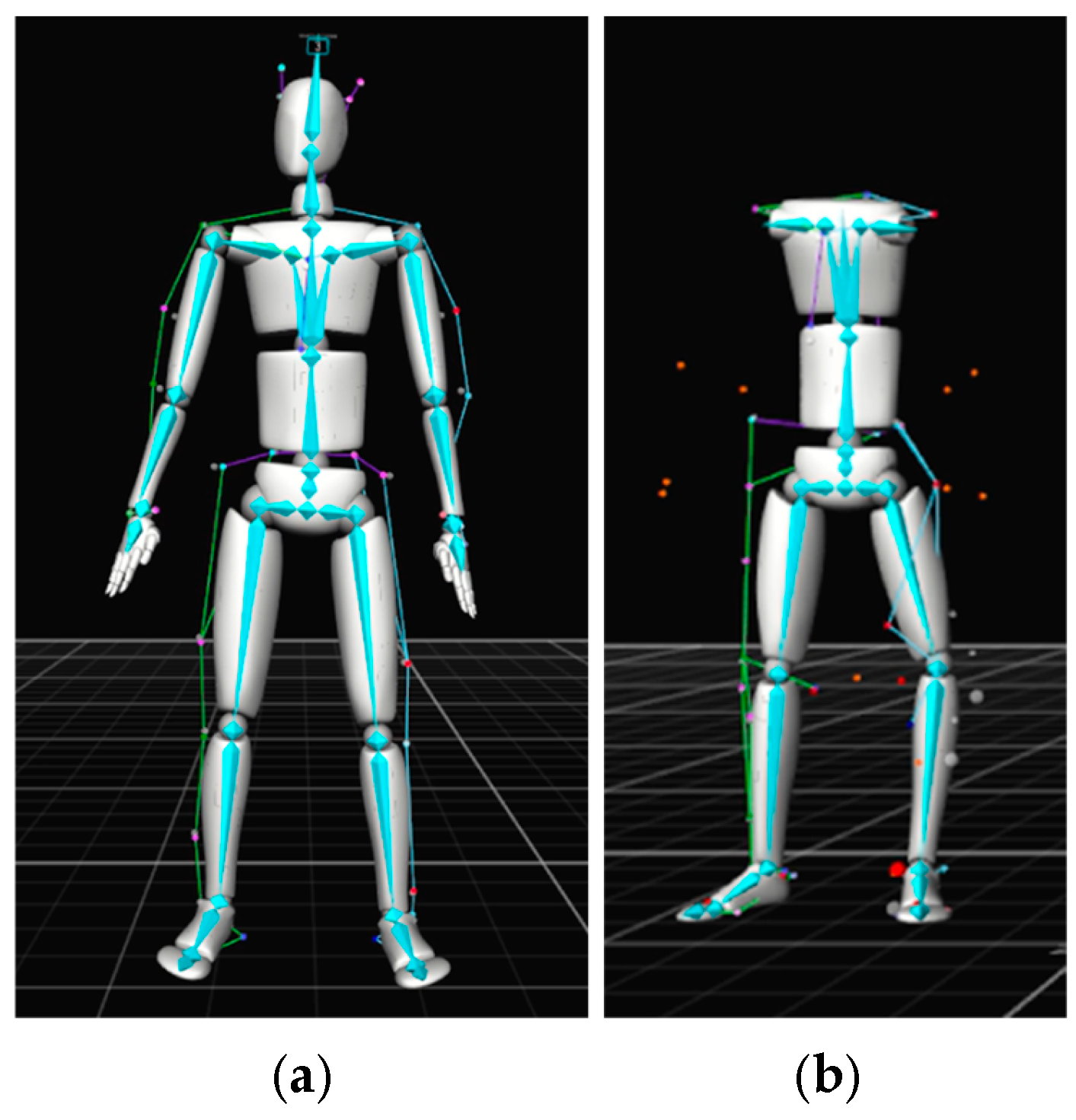

2.5. OTS as the Reference System

3. Results

3.1. Pose Estimation Evaluation Results

3.2. Acceptable Results by Category

4. Discussion

4.1. Factors Contributing to Pose Estimation Errors

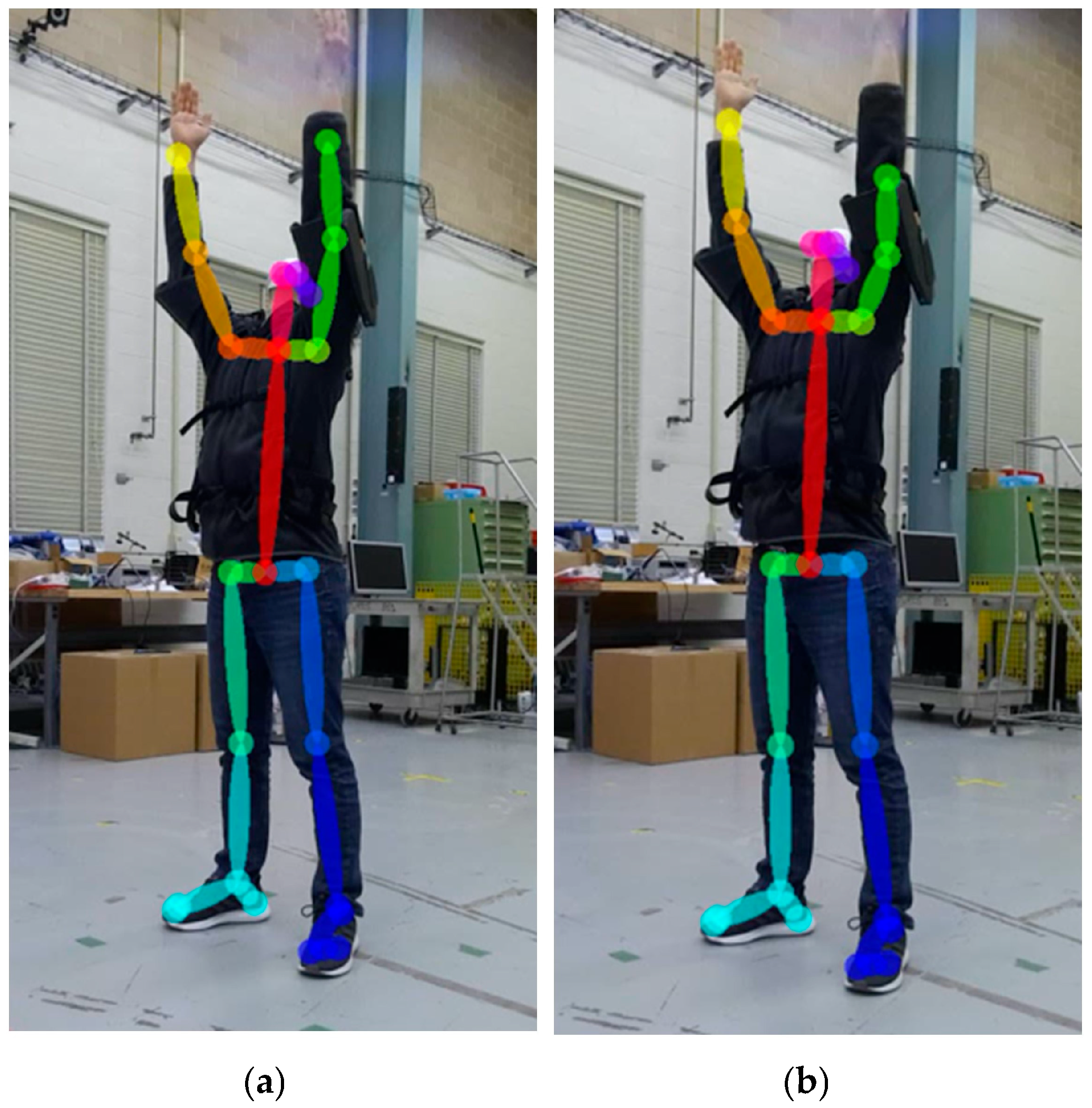

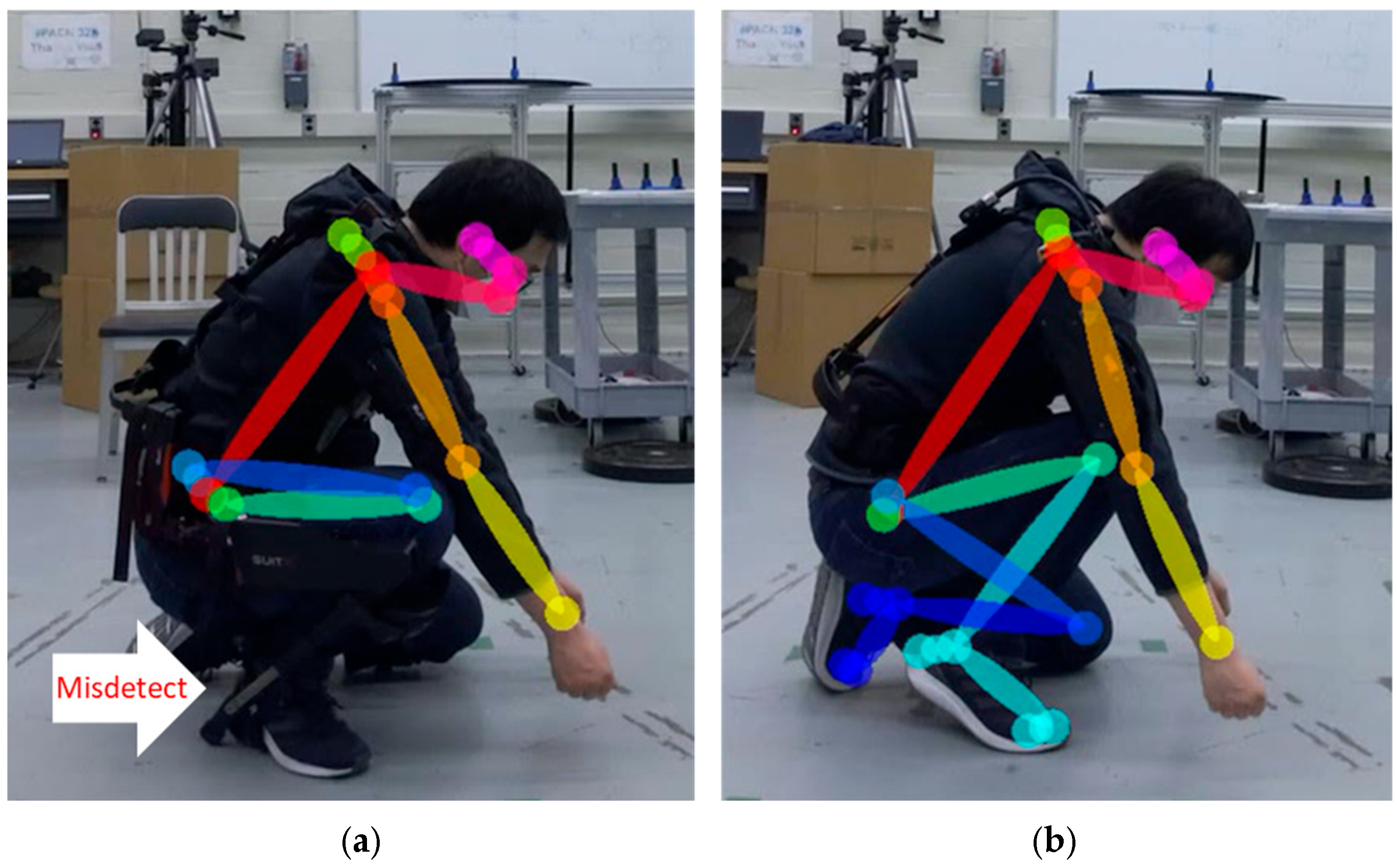

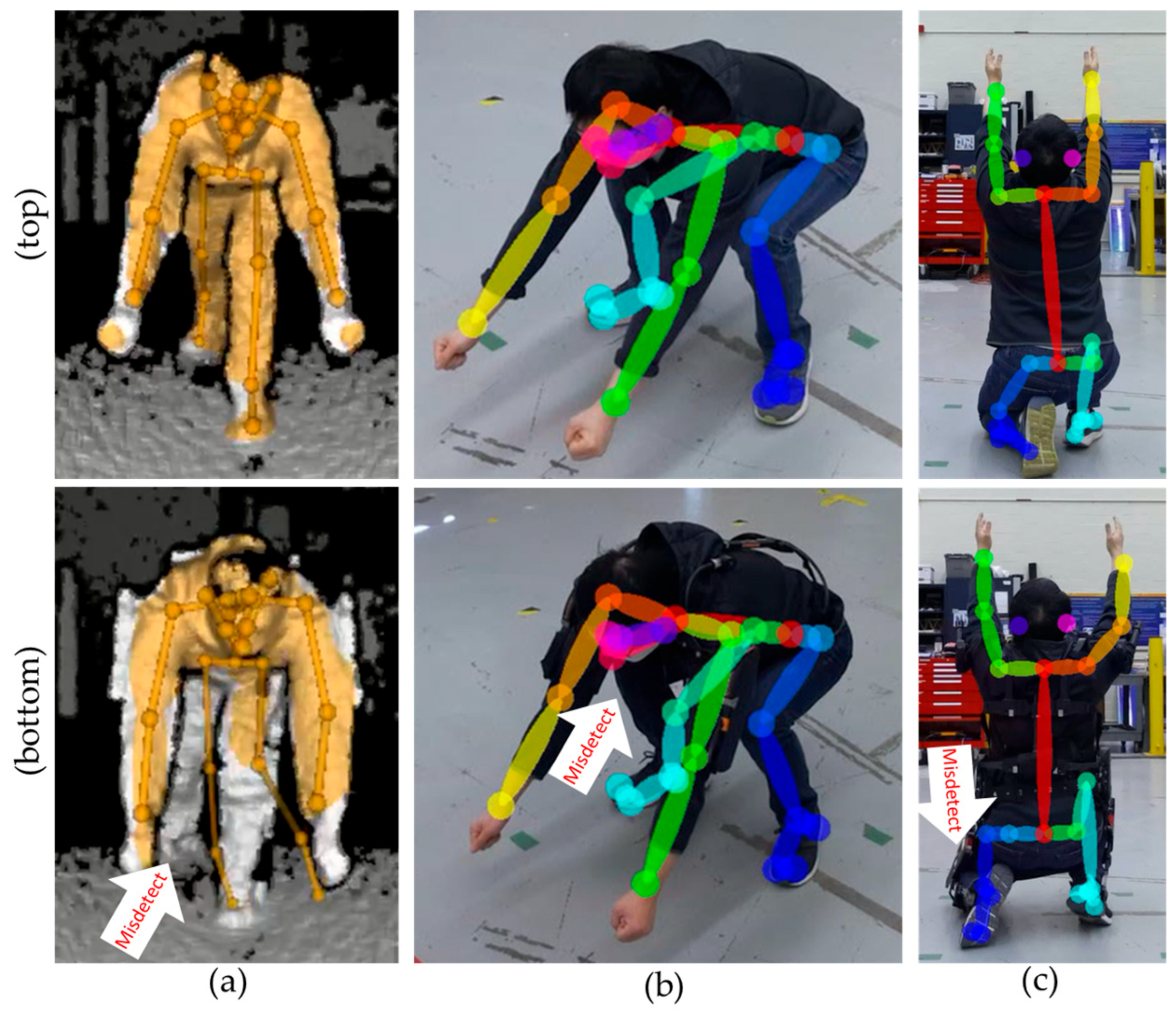

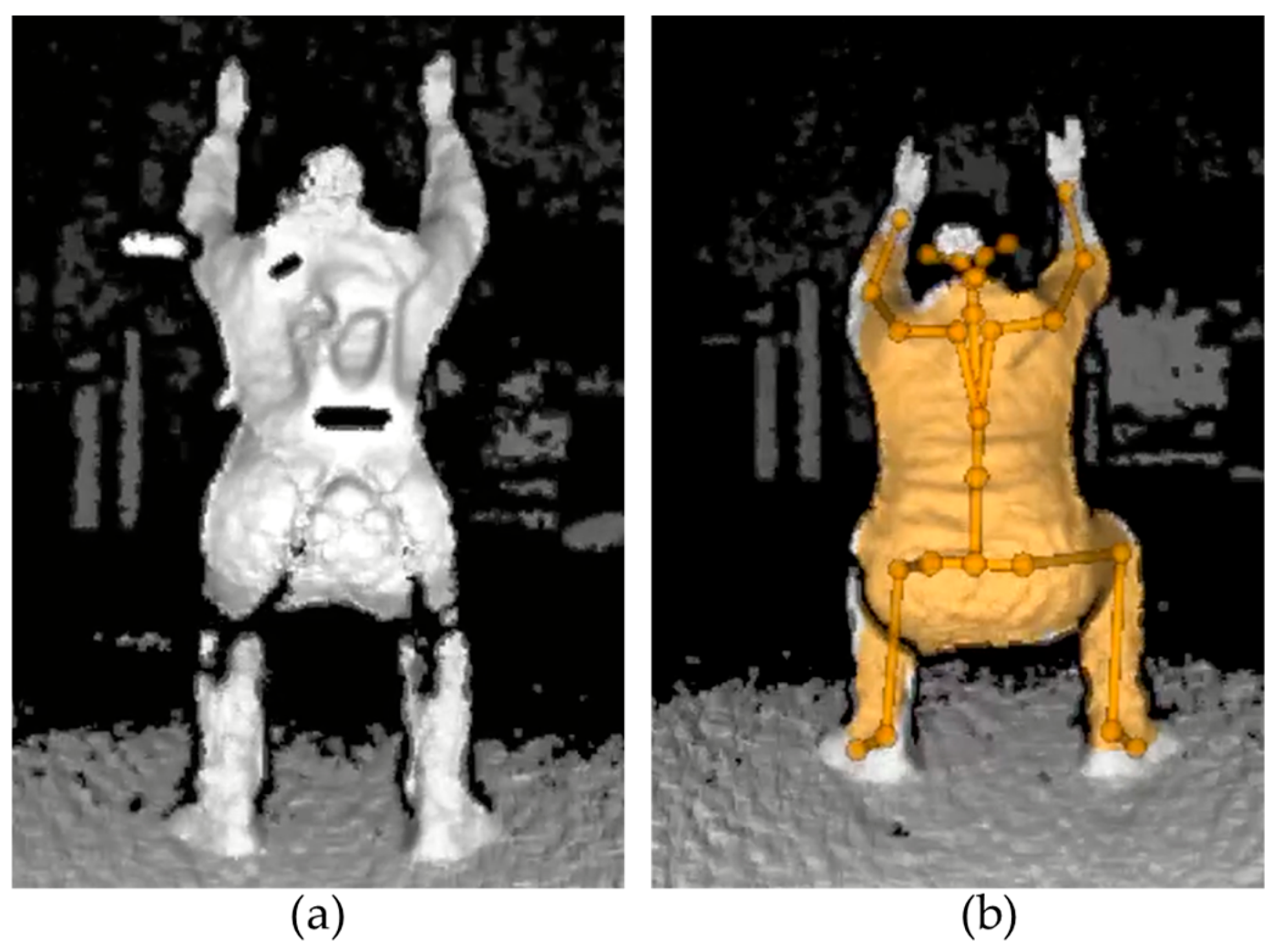

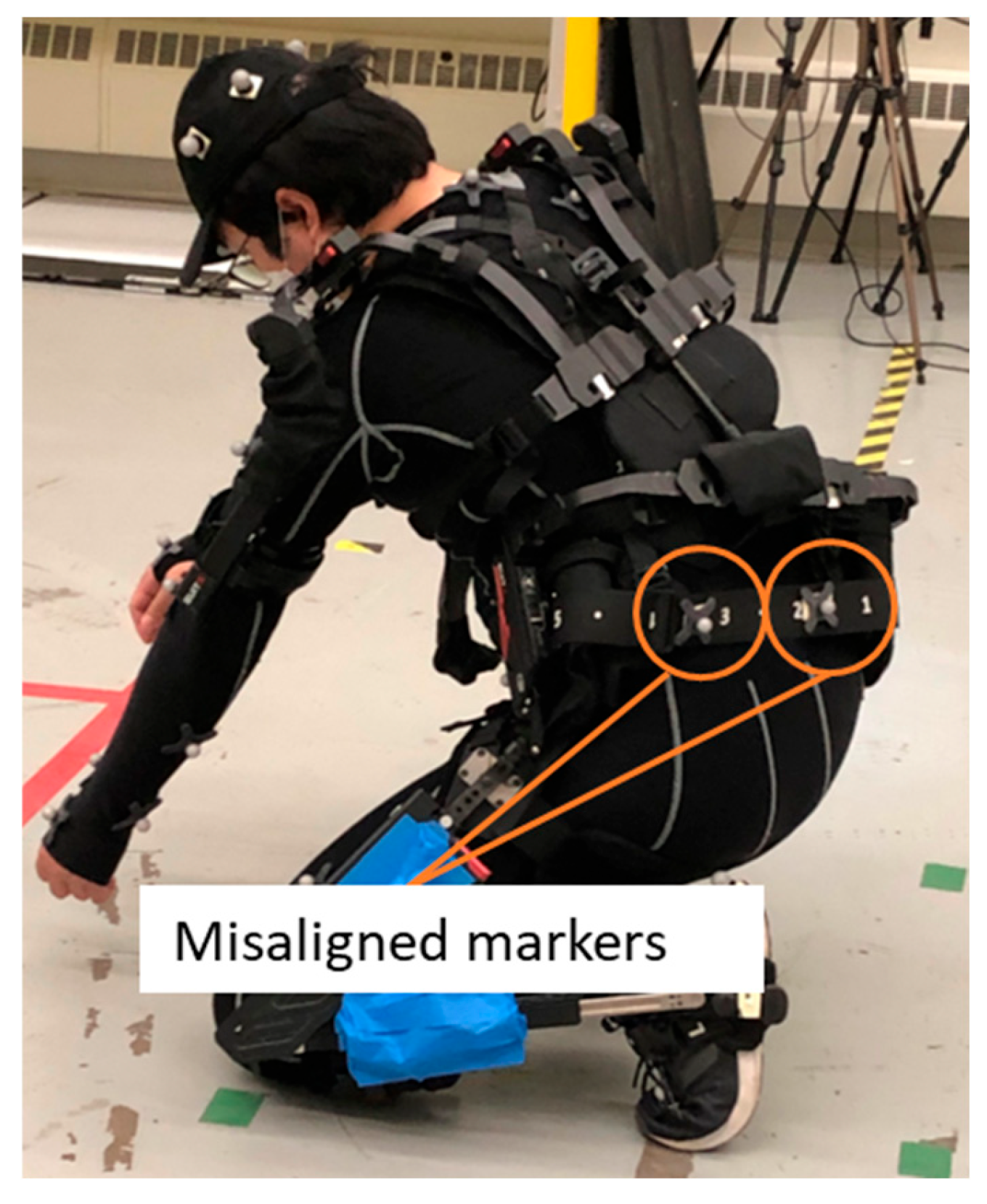

4.1.1. Occlusions

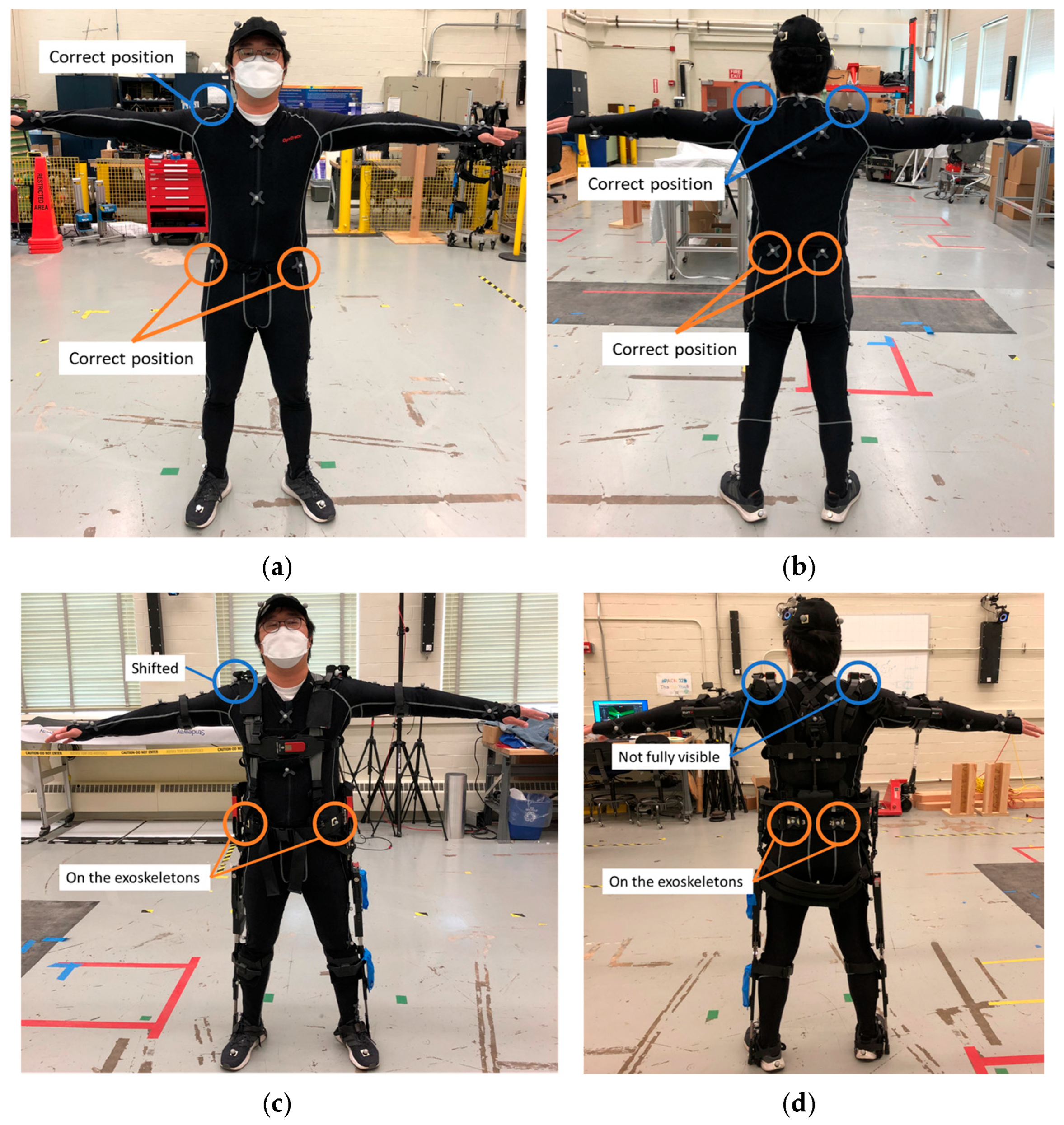

4.1.2. Wearing Exoskeletons

4.2. Reference System Analysis

4.3. Strategies for Exoskeleton Studies

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Huang, A.; Badurdeen, F. Metrics-Based Approach to Evaluate Sustainable Manufacturing Performance at the Production Line and Plant Levels. J. Clean. Prod. 2018, 192, 462–476. [Google Scholar] [CrossRef]

- Haapala, K.R.; Zhao, F.; Camelio, J.; Sutherland, J.W.; Skerlos, S.J.; Dornfeld, D.A.; Jawahir, I.S.; Clarens, A.F.; Rickli, J.L. A Review of Engineering Research in Sustainable Manufacturing. J. Manuf. Sci. Eng. 2013, 135, 041013. [Google Scholar] [CrossRef]

- Yale Environmental Health & Safety. Ergonomics: Awkward Posture. 2018. Available online: https://ehs.yale.edu/sites/default/files/files/ergo-awkward-posture.pdf (accessed on 22 January 2024).

- Injuries, Illnesses and Fatalities. Occupational Injuries and Illnesses Resulting in Musculoskeletal Disorders (MSDs), U.S. Bureau of Labor Statistics. Available online: https://bls.gov/iif/factsheets/msds.htm (accessed on 22 January 2024).

- Gillette, J.; Stephenson, M. EMG Analysis of an Upper Body Exoskeleton during Automotive Assembly. In Proceedings of the 42nd Annual Meeting of the American Society of Biomechanics, Rochester, MN, USA, 8–11 August 2018; pp. 308–309. [Google Scholar]

- Jorgensen, M.J.; Hakansson, N.A.; Desai, J. The Impact of Passive Shoulder Exoskeletons during Simulated Aircraft Manufacturing Sealing Tasks. Int. J. Ind. Ergon. 2022, 91, 103337. [Google Scholar] [CrossRef]

- Kawale, S.S.; Sreekumar, M. Design of a Wearable Lower Body Exoskeleton Mechanism for Shipbuilding Industry. Procedia Comput. Sci. 2018, 133, 1021–1028. [Google Scholar] [CrossRef]

- Zhu, Z.; Dutta, A.; Dai, F. Exoskeletons for Manual Material Handling—A Review and Implication for Construction Applications. Autom. Constr. 2021, 122, 103493. [Google Scholar] [CrossRef]

- Li-Baboud, Y.-S.; Virts, A.; Bostelman, R.; Yoon, S.; Rahman, A.; Rhode, L.; Ahmed, N.; Shah, M. Evaluation Methods and Measurement Challenges for Industrial Exoskeletons. Sensors 2023, 23, 5604. [Google Scholar] [CrossRef]

- ASTM F3443-20; Standard Practice for Load Handling When Using an Exoskeleton. ASTM: West Conshohocken, PA, USA, 2020. Available online: https://www.astm.org/f3443-20.html (accessed on 1 May 2025).

- Bostelman, R.; Li-Baboud, Y.-S.; Virts, A.; Yoon, S.; Shah, M. Towards Standard Exoskeleton Test Methods for Load Handling. In Proceedings of the 2019 Wearable Robotics Association Conference (WearRAcon), Scottsdale, AZ, USA, 25–27 March 2019; IEEE: Scottsdale, AZ, USA, 2019; pp. 21–27. [Google Scholar]

- Virts, A.; Bostelman, R.; Yoon, S.; Shah, M.; Li-Baboud, Y.-S. A Peg-in-Hole Test and Analysis Method for Exoskeleton Evaluation; National Institute of Standards and Technology (U.S.): Gaithersburg, MD, USA, 2022; p. NIST TN 2208. [Google Scholar]

- ASTM F3518-21; Standard Guide for Quantitative Measures for Establishing Exoskeleton Functional Ergonomic Parameters and Test Metrics. ASTM: West Conshohocken, PA, USA, 2021. Available online: https://www.astm.org/f3518-21.html (accessed on 22 January 2024).

- Bostelman, R.; Virts, A.; Yoon, S.; Shah, M.; Baboud, Y.S.L. Towards Standard Test Artefacts for Synchronous Tracking of Human-Exoskeleton Knee Kinematics. Int. J. Hum. Factors Model. Simul. 2022, 7, 171. [Google Scholar] [CrossRef]

- Faisal, A.I.; Majumder, S.; Mondal, T.; Cowan, D.; Naseh, S.; Deen, M.J. Monitoring Methods of Human Body Joints: State-of-the-Art and Research Challenges. Sensors 2019, 19, 2629. [Google Scholar] [CrossRef]

- ASTM F3474-20; Standard Practice for Establishing Exoskeleton Functional Ergonomic Parameters and Test Metrics. ASTM: West Conshohocken, PA, USA, 2020. Available online: https://www.astm.org/f3474-20.html (accessed on 22 January 2024).

- Seel, T.; Raisch, J.; Schauer, T. IMU-Based Joint Angle Measurement for Gait Analysis. Sensors 2014, 14, 6891–6909. [Google Scholar] [CrossRef]

- Lebleu, J.; Gosseye, T.; Detrembleur, C.; Mahaudens, P.; Cartiaux, O.; Penta, M. Lower Limb Kinematics Using Inertial Sensors during Locomotion: Accuracy and Reproducibility of Joint Angle Calculations with Different Sensor-to-Segment Calibrations. Sensors 2020, 20, 715. [Google Scholar] [CrossRef]

- Zügner, R.; Tranberg, R.; Timperley, J.; Hodgins, D.; Mohaddes, M.; Kärrholm, J. Validation of Inertial Measurement Units with Optical Tracking System in Patients Operated with Total Hip Arthroplasty. BMC Musculoskelet. Disord. 2019, 20, 52. [Google Scholar] [CrossRef] [PubMed]

- Zhang, Z.; Wang, H.; Guo, S.; Wang, J.; Zhao, Y.; Tian, Q. The Effects of Unpowered Soft Exoskeletons on Preferred Gait Features and Resonant Walking. Machines 2022, 10, 585. [Google Scholar] [CrossRef]

- Yang, Y.; Dong, X.; Liu, X.; Huang, D. Robust Repetitive Learning-Based Trajectory Tracking Control for a Leg Exoskeleton Driven by Hybrid Hydraulic System. IEEE Access 2020, 8, 27705–27714. [Google Scholar] [CrossRef]

- Chen, B.; Grazi, L.; Lanotte, F.; Vitiello, N.; Crea, S. A Real-Time Lift Detection Strategy for a Hip Exoskeleton. Front. Neurorobot. 2018, 12, 17. [Google Scholar] [CrossRef]

- Pesenti, M.; Gandolla, M.; Pedrocchi, A.; Roveda, L. A Backbone-Tracking Passive Exoskeleton to Reduce the Stress on the Low-Back: Proof of Concept Study. In Proceedings of the 2022 International Conference on Rehabilitation Robotics (ICORR), Rotterdam, The Netherlands, 25–29 July 2022; IEEE: Rotterdam, The Netherlands, 2022; pp. 1–6. [Google Scholar]

- Yu, S.; Huang, T.-H.; Wang, D.; Lynn, B.; Sayd, D.; Silivanov, V.; Park, Y.S.; Tian, Y.; Su, H. Design and Control of a High-Torque and Highly Backdrivable Hybrid Soft Exoskeleton for Knee Injury Prevention During Squatting. IEEE Robot. Autom. Lett. 2019, 4, 4579–4586. [Google Scholar] [CrossRef]

- Yoon, S.; Li-Baboud, Y.-S.; Virts, A.; Bostelman, R.; Shah, M. Feasibility of Using Depth Cameras for Evaluating Human—Exoskeleton Interaction. Proc. Hum. Factors Ergon. Soc. Annu. Meet. 2022, 66, 1892–1896. [Google Scholar] [CrossRef]

- Cao, Z.; Hidalgo, G.; Simon, T.; Wei, S.-E.; Sheikh, Y. OpenPose: Realtime Multi-Person 2D Pose Estimation Using Part Affinity Fields. arXiv 2018. [Google Scholar] [CrossRef]

- Microsoft Azure Kinect DK Documentation. Available online: https://learn.microsoft.com/en-us/azure/kinect-dk/ (accessed on 22 January 2024).

- Buenaflor, C.; Kim, H.-C. Six Human Factors to Acceptability of Wearable Computers. Int. J. Multimed. Ubiquitous Eng. 2013, 8, 103–114. [Google Scholar]

- Heikenfeld, J.; Jajack, A.; Rogers, J.; Gutruf, P.; Tian, L.; Pan, T.; Li, R.; Khine, M.; Kim, J.; Wang, J.; et al. Wearable Sensors: Modalities, Challenges, and Prospects. Lab Chip 2018, 18, 217–248. [Google Scholar] [CrossRef]

- Plantard, P.; Shum, H.P.H.; Le Pierres, A.-S.; Multon, F. Validation of an Ergonomic Assessment Method Using Kinect Data in Real Workplace Conditions. Appl. Ergon. 2017, 65, 562–569. [Google Scholar] [CrossRef]

- Romeo, L.; Marani, R.; Malosio, M.; Perri, A.G.; D’Orazio, T. Performance Analysis of Body Tracking with the Microsoft Azure Kinect. In Proceedings of the 2021 29th Mediterranean Conference on Control and Automation (MED), Puglia, Italy, 22–25 June 2021; IEEE: Puglia, Italy, 2021; pp. 572–577. [Google Scholar]

- Tölgyessy, M.; Dekan, M.; Chovanec, Ľ. Skeleton Tracking Accuracy and Precision Evaluation of Kinect V1, Kinect V2, and the Azure Kinect. Appl. Sci. 2021, 11, 5756. [Google Scholar] [CrossRef]

- Albert, J.A.; Owolabi, V.; Gebel, A.; Brahms, C.M.; Granacher, U.; Arnrich, B. Evaluation of the Pose Tracking Performance of the Azure Kinect and Kinect v2 for Gait Analysis in Comparison with a Gold Standard: A Pilot Study. Sensors 2020, 20, 5104. [Google Scholar] [CrossRef]

- Guess, T.M.; Bliss, R.; Hall, J.B.; Kiselica, A.M. Comparison of Azure Kinect Overground Gait Spatiotemporal Parameters to Marker Based Optical Motion Capture. Gait Posture 2022, 96, 130–136. [Google Scholar] [CrossRef]

- Yeung, L.-F.; Yang, Z.; Cheng, K.C.-C.; Du, D.; Tong, R.K.-Y. Effects of Camera Viewing Angles on Tracking Kinematic Gait Patterns Using Azure Kinect, Kinect v2 and Orbbec Astra Pro V2. Gait Posture 2021, 87, 19–26. [Google Scholar] [CrossRef] [PubMed]

- Özsoy, U.; Yıldırım, Y.; Karaşin, S.; Şekerci, R.; Süzen, L.B. Reliability and Agreement of Azure Kinect and Kinect v2 Depth Sensors in the Shoulder Joint Range of Motion Estimation. J. Shoulder Elb. Surg. 2022, 31, 2049–2056. [Google Scholar] [CrossRef]

- Yang, B.; Dong, H.; El Saddik, A. Development of a Self-Calibrated Motion Capture System by Nonlinear Trilateration of Multiple Kinects V2. IEEE Sens. J. 2017, 17, 2481–2491. [Google Scholar] [CrossRef]

- D’Antonio, E.; Taborri, J.; Mileti, I.; Rossi, S.; Patane, F. Validation of a 3D Markerless System for Gait Analysis Based on OpenPose and Two RGB Webcams. IEEE Sens. J. 2021, 21, 17064–17075. [Google Scholar] [CrossRef]

- D’Antonio, E.; Taborri, J.; Palermo, E.; Rossi, S.; Patane, F. A Markerless System for Gait Analysis Based on OpenPose Library. In Proceedings of the 2020 IEEE International Instrumentation and Measurement Technology Conference (I2MTC), Dubrovnik, Croatia, 25–28 May 2020; IEEE: Dubrovnik, Croatia, 2020; pp. 1–6. [Google Scholar]

- Nakano, N.; Sakura, T.; Ueda, K.; Omura, L.; Kimura, A.; Iino, Y.; Fukashiro, S.; Yoshioka, S. Evaluation of 3D Markerless Motion Capture Accuracy Using OpenPose With Multiple Video Cameras. Front. Sports Act. Living 2020, 2, 50. [Google Scholar] [CrossRef]

- Kim, W.; Sung, J.; Saakes, D.; Huang, C.; Xiong, S. Ergonomic Postural Assessment Using a New Open-Source Human Pose Estimation Technology (OpenPose). Int. J. Ind. Ergon. 2021, 84, 103164. [Google Scholar] [CrossRef]

- Rhodin, H.; Spörri, J.; Katircioglu, I.; Constantin, V.; Meyer, F.; Müller, E.; Salzmann, M.; Fua, P. Learning Monocular 3D Human Pose Estimation from Multi-View Images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018. [Google Scholar]

- Amin, S.; Andriluka, M.; Rohrbach, M.; Schiele, B. Multi-View Pictorial Structures for 3D Human Pose Estimation. In Proceedings of the British Machine Vision Conference 2013, Bristol, UK, 9–13 September 2013; British Machine Vision Association: Bristol, UK, 2013; pp. 45.1–45.11. [Google Scholar]

- Virts, A. Exoskeleton Performance Data. National Institute of Standards and Technology Public Data Repository. 2021. Available online: https://doi.org/10.18434/mds2-2429 (accessed on 29 April 2025).

- OptiTrack Documentation. Available online: https://docs.optitrack.com/ (accessed on 22 January 2024).

- OptiTrack Motive. Available online: https://docs.optitrack.com/motive (accessed on 22 January 2024).

- OptiTrack Documentation: Skeleton Marker Sets-Full Body-Conventional. 2022. Available online: https://docs.optitrack.com/markersets/full-body/conventional-39 (accessed on 22 January 2024).

- OptiTrack Documentation: Skeleton Marker Sets-Rizzoli Marker Sets. 2022. Available online: https://docs.optitrack.com/markersets/rizzoli-markersets (accessed on 22 January 2024).

- Cheng, Y.; Yang, B.; Wang, B.; Yan, W.; Tan, R. Occlusion-Aware Networks for 3D Human Pose Estimation in Video. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; IEEE: Seoul, Republic of Korea, 2019; pp. 723–732. [Google Scholar]

- Aboul-Enein, O.; Bostelman, R.; Li-Baboud, Y.-S.; Shah, M. Performance Measurement of a Mobile Manipulator-on-a-Cart and Coordinate Registration Methods for Manufacturing Applications; National Institute of Standards and Technology (U.S.): Gaithersburg, MD, USA, 2022; p. NIST AMS 100-45r1. [Google Scholar]

- Ronchi, M.R.; Mac Aodha, O.; Eng, R.; Perona, P. It’s All Relative: Monocular 3D Human Pose Estimation from Weakly Supervised Data. arXiv 2018, arXiv:1805.06880. [Google Scholar]

- Rahman, A. Towards a Markerless 3D Pose Estimation Tool. In Proceedings of the Extended Abstracts of the 2023 CHI Conference on Human Factors in Computing Systems, Hamburg, Germany, 23–28 April 2023; ACM: Hamburg, Germany, 2023; pp. 1–6. [Google Scholar]

- Ahmed, N.; Rahman, A.; Rhode, L. Best Practices for Exoskeleton Evaluation Using DeepLabCut. In Proceedings of the 2023 ACM Sigmetrics Student Research Competition, Orlando, FL, USA, 19–22 June 2023. [Google Scholar]

- Mathis, A.; Mamidanna, P.; Cury, K.M.; Abe, T.; Murthy, V.N.; Mathis, M.W.; Bethge, M. DeepLabCut: Markerless Pose Estimation of User-Defined Body Parts with Deep Learning. Nat. Neurosci. 2018, 21, 1281–1289. [Google Scholar] [CrossRef] [PubMed]

- Sarajchi, M.; Al-Hares, M.K.; Sirlantzis, K. Wearable Lower-Limb Exoskeleton for Children With Cerebral Palsy: A Systematic Review of Mechanical Design, Actuation Type, Control Strategy, and Clinical Evaluation. IEEE Trans. Neural Syst. Rehabil. Eng. 2021, 29, 2695–2720. [Google Scholar] [CrossRef] [PubMed]

- Nutter, F.W., Jr. Assessing the Accuracy, Intra-Rater Repeatability, and Inter-Rater Reliability of Disease Assessment Systems. Phytopathology 1993, 83, 806. [Google Scholar] [CrossRef]

- Kang, C.; Lee, C.; Song, H.; Ma, M.; Pereira, S. Variability Matters: Evaluating Inter-Rater Variability in Histopathology for Robust Cell Detection. In Computer Vision—ECCV 2022 Workshops; Karlinsky, L., Michaeli, T., Nishino, K., Eds.; Lecture Notes in Computer Science; Springer Nature Switzerland: Cham, Switzerland, 2023; Volume 13807, pp. 552–565. ISBN 978-3-031-25081-1. [Google Scholar]

| Sensor | Joint(s) of Interest | Subject Task | Measurement | Wearable Robot | Reference |
|---|---|---|---|---|---|
| OTS | Knee | Sit to stand | Human joint angle; exoskeleton joint angle | Non-powered full-body rigid | Bostelman 2019 [14] |
| RGB | Shoulder, wrist | Peg-in-hole | Joint poses | Non-powered full-body rigid | Virts 2022 [12] |
| OTS, force plate | Hip, knee, ankle | Gait | Joint angle; walking speed | Non-powered lower-body soft | Zhang 2022 [20] |
| Angle encoder | Hip, knee | Gait | Joint angle | Powered lower-body rigid | Yang 2020 [21] |
| Angle encoder, IMU | Hip, trunk | Lift | Joint angle; kinematics | Powered lower-back rigid | Chen 2018 [22] |
| IMU | Hip, knee | Lift | Joint angle | Non-powered lower-back rigid | Pesenti 2022 [23] |
| IMU | Trunk, thigh, shank, hip, knee | Squat | Joint angle | Powered knee soft | Yu 2019 [24] |

| Sensor | Joint(s) of Interest | Pose or Task | Measurement | Factors Affecting the Performance | Reference |
|---|---|---|---|---|---|
| Depth, OTS (reference) | Trunk, neck, shoulder, elbow, leg | Lowering a load; lifting a load; car assembly | Joint angle differences to reference system | Viewpoint (front, side-front); occlusion | Plantard 2017 [30] |
| Depth | Head, pelvis, hand, foot | Reference pose: T-pose | Mean distance error of joints | Image resolution, body occlusions, subject–sensor distance | Romeo 2021 [31] |
| Depth | Full body | Reference pose: T-pose | Standard deviation of joint poses; count of undetected joints | Viewpoint (distance) | Tölgyessy 2021 [32] |
| Depth, OTS (reference) | Full body | Gait | Joint pose differences to reference system | Subject walking speed | Albert 2020 [33] |
| Depth, OTS (reference) | Ankle | Gait | Joint pose differences to reference system | N/A | Guess 2022 [34] |
| Depth, OTS (reference) | Hip, knee, ankle | Gait | Joint angle differences to reference system | Viewpoint (0°, 22.5°, 45°, 67.5°, 90°) | Yeung 2021 [35] |
| Depth, OTS (reference) | Shoulder | Shoulder flexion, abduction, internal rotation, external rotation | Interobserver reliability to reference system | N/A | Özsoy 2022 [36] |
| Depth, OTS (reference) | Elbow, ankle | Arm swing; leg swing | Vertical coordinate trajectory | Occlusion | Yang 2017 [37] |
| RGB, IMU (reference) | Hip, knee (ankle excluded due to low performance) | Gait | Joint angle differences to reference system | Viewpoint (back, side, side-back); subject task pose (walking, running) | D’Antonio 2021 [38] |
| RGB, IMU (reference) | Hip, knee, ankle | Gait | Max/min joint angle differences to reference system | N/A | D’Antonio 2020 [39] |
| RGB, OTS (reference) | Elbow, wrist, knee, ankle | Gait, jump, throw | Joint pose trajectory differences to reference system | Subject task pose | Nakano 2020 [40] |
| RGB, depth, IMU (reference) | Full body | Six static poses for loading; four static poses for occlusion; dynamic simple lifting; dynamic complex lifting | Joint angle differences to reference system | Viewpoint (front, side, back); occlusion | Kim 2021 [41] |

| Joint Detection Outcome (%) | Condition 1 * | Condition 2 | Condition 3 |
|---|---|---|---|
| The joints are correctly detected. | 100 | 0 | 12 |
| One or two connected joints have misdetection. | 0 | 37 | 88 |
| One or two connected joints have misalignment. | 0 | 63 | 0 |
| Two or more independent joints have misdetection or misalignment. | 0 | 0 | 0 |

| Acceptability | Case | Description |
|---|---|---|
| Acceptable (A) | | Pose estimation quality is sufficient for exoskeleton analysis when: |
| | 1 | Misdetections or misalignments are not observed |
| | 2 | One or two linked joints have misalignments |
| | 3 | One or two linked joints have misdetections for less than 20% of the samples |
| Unacceptable (U) | | Pose estimation quality is insufficient for exoskeleton analysis when: |
| | 1 | Two or more unlinked joints have misdetections for more than 20% of the samples |
| | 2 | Two or more joints have misalignments for more than 20% of the samples |
| | 3 | One or two linked joints have misdetections for more than 20% of the samples |
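The acceptability criteria above can be read as a small rule set. The Python sketch below encodes one possible reading of them; it is not the authors' procedure. Every detail beyond the criteria themselves is our assumption: unacceptable cases 1 to 3 are returned as codes −1 to −3 (the form used in the results tables), the rules are checked in the order U2, U1, U3, A3, A2, "linked" means a single joint or two joints joined in a hypothetical `LINKS` set, and combinations the criteria do not cover (for example, misdetections and misalignments together) return `None`.

```python
"""One possible encoding of the acceptability criteria (hypothetical sketch)."""
from typing import Optional

THRESHOLD = 0.20  # the 20% sample-fraction cut-off named in the criteria

# Hypothetical skeleton links; a real tracker defines its own joint graph.
LINKS = {
    frozenset(pair)
    for pair in [
        ("shoulder_r", "elbow_r"), ("elbow_r", "wrist_r"),
        ("shoulder_l", "elbow_l"), ("elbow_l", "wrist_l"),
        ("hip_r", "knee_r"), ("knee_r", "ankle_r"),
        ("hip_l", "knee_l"), ("knee_l", "ankle_l"),
    ]
}


def linked(joints: list[str]) -> bool:
    """True for a single joint or for two joints that share a link (our assumption)."""
    if len(joints) == 1:
        return True
    return len(joints) == 2 and frozenset(joints) in LINKS


def classify(misdetected: dict[str, float], misaligned: dict[str, float]) -> Optional[int]:
    """Map per-joint error fractions (0-1 of samples) to a case code.

    Returns 1..3 for acceptable cases, -1..-3 for unacceptable cases (assumed
    mapping of U1..U3), or None when no rule applies.
    """
    det = [j for j, f in misdetected.items() if f > 0]
    ali = [j for j, f in misaligned.items() if f > 0]
    if not det and not ali:
        return 1  # A1: no misdetections or misalignments
    det_major = [j for j in det if misdetected[j] > THRESHOLD]
    ali_major = [j for j in ali if misaligned[j] > THRESHOLD]
    if len(ali_major) >= 2:
        return -2  # U2: two or more joints misaligned in >20% of samples
    if len(det_major) >= 2 and not linked(det_major):
        return -1  # U1: two or more unlinked joints misdetected in >20% of samples
    if det_major and linked(det_major):
        return -3  # U3: one or two linked joints misdetected in >20% of samples
    if det and not ali and linked(det):
        return 3  # A3: one or two linked joints, misdetected in at most 20% of samples
    if ali and not det and linked(ali):
        return 2  # A2: one or two linked joints misaligned
    return None  # combination not covered by the criteria
```

For example, `classify({"knee_r": 0.10}, {})` returns 3, while raising the knee's misdetection fraction to 0.50 returns −3. Where exactly 20% falls and how mixed errors are treated are design choices this sketch makes arbitrarily.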

| Exoskeleton | Image | Trial | Pose 1 | Pose 2 | Pose 3 | Pose 4 | Pose 5 | Pose 6 | Pose 7 | Pose 8 | Pose 9 | Pose 10 | Pose 11 | Pose 12 | Pose 13 | Pose 14 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Control | RGB | 1 | 1 | 1 | 1 | 2 | 1 | 1 | 1 | −1 | 2 | 1 | −1 | 1 | 1 | 1 |
| | | 2 | 1 | 1 | 1 | 1 | 1 | 2 | 1 | −1 | 2 | 1 | −1 | 1 | 1 | 3 |
| | | 3 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | −1 | −1 | 1 | 1 | 1 | 1 | 1 |
| | | 4 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | −1 | 1 | 1 | 1 | 1 | 1 | 2 |
| | | 5 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | −1 | 1 | 1 | 1 | 1 | 1 | −3 |
| | Depth | 1 | 1 | 1 | 1 | −1 | 3 | 1 | 1 | −1 | −3 | 1 | −1 | −3 | 1 | −1 |
| | | 2 | 1 | 1 | 1 | −1 | 3 | −3 | 1 | −3 | −3 | 1 | −1 | −3 | −3 | −1 |
| | | 3 | 1 | 1 | 1 | −1 | 3 | 1 | 1 | −3 | 1 | 1 | −1 | 3 | 3 | −1 |
| | | 4 | 1 | 1 | 1 | −1 | 3 | −3 | 1 | −1 | 1 | 1 | −1 | −2 | −3 | −1 |
| | | 5 | 1 | 1 | 1 | −1 | 3 | 1 | 1 | −1 | 3 | 1 | −1 | −1 | 3 | −1 |
| Type 1 | RGB | 1 | 1 | 1 | 1 | 1 | 1 | 2 | 1 | −1 | 1 | 1 | 1 | 3 | 2 | −3 |
| | | 2 | 1 | 1 | 1 | 1 | 1 | 1 | 3 | −1 | 1 | 1 | 1 | 2 | 2 | 2 |
| | | 3 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | −1 | 1 | 1 | 1 | 3 | 2 | 2 |
| | | 4 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 3 | 2 | 2 |
| | | 5 | 1 | 1 | 1 | 1 | 1 | 1 | * | −1 | 1 | 1 | 1 | 3 | 2 | 2 |
| | Depth | 1 | 2 | 1 | 1 | −1 | 2 | 1 | −1 | −1 | −1 | −1 | 1 | −1 | −1 | −1 |
| | | 2 | 2 | 1 | 1 | −1 | 1 | 1 | −1 | −1 | 1 | 1 | −3 | −1 | −1 | −1 |
| | | 3 | 2 | 1 | 1 | −1 | 1 | 1 | −1 | −1 | 1 | 1 | −3 | −1 | −1 | −1 |
| | | 4 | −2 | 1 | 1 | −1 | 1 | 1 | −2 | −1 | 1 | 1 | 3 | −1 | −1 | −1 |
| | | 5 | −2 | 1 | 1 | −1 | 1 | 1 | * | −1 | 1 | 1 | −3 | −1 | −1 | −1 |
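The trial table above can be summarized by counting positive codes (acceptable cases 1 to 3) against all scored samples, treating `*` as missing. The Python sketch below does this for the Control/RGB and Type 1/RGB blocks, transcribed by hand; the helper names are ours. It yields 61 of 70 samples (87.1%) and 64 of 69 samples (92.8%), which coincide with the straight-front entries of the per-viewpoint acceptance table. From that agreement we infer, though the surviving text does not say so, that the trial table was recorded from the straight-front viewpoint.

```python
"""Acceptable-case rates recomputed from per-trial pose scores (sketch)."""
from typing import Optional

# Codes transcribed from the trial table: one list per trial, one entry per pose (1-14).
# Positive codes (1-3) are acceptable cases, negative codes (-1 to -3) unacceptable
# cases, and None marks a missing sample ("*" in the table).
CONTROL_RGB = [
    [1, 1, 1, 2, 1, 1, 1, -1, 2, 1, -1, 1, 1, 1],
    [1, 1, 1, 1, 1, 2, 1, -1, 2, 1, -1, 1, 1, 3],
    [1, 1, 1, 1, 1, 1, 1, -1, -1, 1, 1, 1, 1, 1],
    [1, 1, 1, 1, 1, 1, 1, -1, 1, 1, 1, 1, 1, 2],
    [1, 1, 1, 1, 1, 1, 1, -1, 1, 1, 1, 1, 1, -3],
]
TYPE1_RGB = [
    [1, 1, 1, 1, 1, 2, 1, -1, 1, 1, 1, 3, 2, -3],
    [1, 1, 1, 1, 1, 1, 3, -1, 1, 1, 1, 2, 2, 2],
    [1, 1, 1, 1, 1, 1, 1, -1, 1, 1, 1, 3, 2, 2],
    [1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 3, 2, 2],
    [1, 1, 1, 1, 1, 1, None, -1, 1, 1, 1, 3, 2, 2],
]

Trials = list[list[Optional[int]]]


def acceptable_rate(trials: Trials) -> float:
    """Percentage of scored (non-missing) samples that carry a positive code."""
    scored = [code for trial in trials for code in trial if code is not None]
    if not scored:
        raise ValueError("no scored samples")
    return 100.0 * sum(code > 0 for code in scored) / len(scored)


def pose_rates(trials: Trials) -> list[Optional[float]]:
    """Acceptable percentage for each pose across trials (None if nothing was scored)."""
    rates: list[Optional[float]] = []
    for pose_codes in zip(*trials):
        scored = [code for code in pose_codes if code is not None]
        rates.append(100.0 * sum(code > 0 for code in scored) / len(scored) if scored else None)
    return rates


if __name__ == "__main__":
    print(f"Control RGB: {acceptable_rate(CONTROL_RGB):.1f}%")
    print(f"Type 1 RGB:  {acceptable_rate(TYPE1_RGB):.1f}%")
```

Excluding missing samples from the denominator, rather than counting them as failures, is what makes the Type 1 figure 64/69 instead of 64/70.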

| Viewpoint | Direction | Total Evaluations | Acceptable 1 (%) | Acceptable 2 (%) | Acceptable 3 (%) | Acceptable Total (%) | Not Acceptable −1 (%) | Not Acceptable −2 (%) | Not Acceptable −3 (%) | Not Acceptable Total (%) | Missing Data (# of Occurrences) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Straight | Front | 558 | 55.6 * | 10.9 | 3.0 | 69.5 | 20.3 | 4.3 | 5.9 | 30.5 | 2 |
| | Side-front | 558 | 61.6 | 9.9 | 4.3 | 75.8 | 10.0 | 0.2 | 14.0 | 24.2 | 2 |
| | Side | 558 | 15.1 | 4.8 | 4.1 | 24.0 | 28.1 | 3.2 | 44.6 | 76.0 | 2 |
| | Side-back | 550 | 6.0 | 11.6 | 0.7 | 18.4 | 42.2 | 0.2 | 39.3 | 81.6 | 10 |
| | Back | 560 | 13.9 | 7.3 | 0.0 | 21.3 | 66.3 | 7.3 | 5.2 | 78.8 | 0 |
| Top down | Front | 560 | 39.6 | 11.8 | 1.4 | 52.9 | 36.6 | 4.8 | 5.7 | 47.1 | 0 |
| | Side-front | 554 | 49.6 | 8.3 | 4.9 | 62.8 | 21.7 | 0.7 | 14.8 | 37.2 | 6 |
| | Side | 560 | 25.9 | 5.0 | 2.7 | 33.6 | 31.1 | 1.1 | 34.3 | 66.4 | 0 |
| | Side-back | 560 | 13.9 | 9.8 | 0.9 | 24.6 | 33.2 | 0.2 | 42.0 | 75.4 | 0 |
| | Back | 559 | 19.0 | 9.8 | 0.9 | 29.7 | 55.3 | 8.4 | 6.6 | 70.3 | 1 |
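The per-viewpoint summary above can be cross-checked arithmetically. Assuming each viewpoint covers 14 poses × 4 exoskeleton conditions × 2 image types × 5 trials (a factorization we infer from the other tables), every row should satisfy evaluations + missing = 560, the case percentages should add up to their totals, and acceptable plus not acceptable should be 100% within one-decimal rounding. The Python sketch below performs those checks; the tolerance of 0.15 percentage points is our choice.

```python
"""Arithmetic consistency checks for the per-viewpoint summary table (sketch)."""

# Assumed design: 14 poses x 4 exoskeleton conditions x 2 image types x 5 trials.
EXPECTED_SAMPLES = 14 * 4 * 2 * 5  # 560
TOL = 0.15  # allowance for one-decimal rounding of the published percentages

# (viewpoint, direction): (evaluations, acceptable cases 1-3, acceptable total,
#                          not acceptable cases -1..-3, not acceptable total, missing)
ROWS = {
    ("Straight", "Front"): (558, (55.6, 10.9, 3.0), 69.5, (20.3, 4.3, 5.9), 30.5, 2),
    ("Straight", "Side-front"): (558, (61.6, 9.9, 4.3), 75.8, (10.0, 0.2, 14.0), 24.2, 2),
    ("Straight", "Side"): (558, (15.1, 4.8, 4.1), 24.0, (28.1, 3.2, 44.6), 76.0, 2),
    ("Straight", "Side-back"): (550, (6.0, 11.6, 0.7), 18.4, (42.2, 0.2, 39.3), 81.6, 10),
    ("Straight", "Back"): (560, (13.9, 7.3, 0.0), 21.3, (66.3, 7.3, 5.2), 78.8, 0),
    ("Top down", "Front"): (560, (39.6, 11.8, 1.4), 52.9, (36.6, 4.8, 5.7), 47.1, 0),
    ("Top down", "Side-front"): (554, (49.6, 8.3, 4.9), 62.8, (21.7, 0.7, 14.8), 37.2, 6),
    ("Top down", "Side"): (560, (25.9, 5.0, 2.7), 33.6, (31.1, 1.1, 34.3), 66.4, 0),
    ("Top down", "Side-back"): (560, (13.9, 9.8, 0.9), 24.6, (33.2, 0.2, 42.0), 75.4, 0),
    ("Top down", "Back"): (559, (19.0, 9.8, 0.9), 29.7, (55.3, 8.4, 6.6), 70.3, 1),
}


def check(rows: dict) -> list[str]:
    """Return a list of human-readable inconsistencies (empty if all rows agree)."""
    problems = []
    for key, (n, a_cases, a_total, u_cases, u_total, missing) in rows.items():
        if n + missing != EXPECTED_SAMPLES:
            problems.append(f"{key}: {n} + {missing} != {EXPECTED_SAMPLES}")
        if abs(sum(a_cases) - a_total) > TOL:
            problems.append(f"{key}: acceptable cases sum to {sum(a_cases):.1f}, not {a_total}")
        if abs(sum(u_cases) - u_total) > TOL:
            problems.append(f"{key}: unacceptable cases sum to {sum(u_cases):.1f}, not {u_total}")
        if abs(a_total + u_total - 100.0) > TOL:
            problems.append(f"{key}: totals sum to {a_total + u_total:.1f}")
    return problems


if __name__ == "__main__":
    issues = check(ROWS)
    print("all rows consistent" if not issues else "\n".join(issues))
```

All ten rows pass; the largest residuals (0.1 percentage points, e.g. straight side-back) are what one-decimal rounding of each case would produce.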

| Image | Exoskeleton Type | Total Evaluations | Acceptable 1 (%) | Acceptable 2 (%) | Acceptable 3 (%) | Acceptable Total (%) | Not Acceptable −1 (%) | Not Acceptable −2 (%) | Not Acceptable −3 (%) | Not Acceptable Total (%) | Missing Data |
|---|---|---|---|---|---|---|---|---|---|---|---|
| RGB | Control | 700 | 44.1 | 14.0 | 3.9 | 62.0 | 15.3 | 1.3 | 21.4 | 38.0 | 0 |
| | Type 1 | 696 | 39.1 | 14.1 | 3.7 | 56.9 | 20.0 | 3.0 | 20.1 | 43.1 | 4 |
| | Type 2 | 700 | 43.6 | 12.6 | 0.7 | 56.9 | 14.3 | 2.6 | 26.3 | 43.1 | 0 |
| | Type 3 | 693 | 39.7 | 15.3 | 1.4 | 56.4 | 13.6 | 4.3 | 25.7 | 43.6 | 7 |
| Depth | Control | 700 | 22.4 | 6.4 | 3.0 | 31.9 | 37.4 | 3.3 | 27.4 | 68.1 | 0 |
| | Type 1 | 695 | 14.5 | 2.3 | 2.7 | 19.6 | 62.3 | 5.8 | 12.4 | 80.4 | 5 |
| | Type 2 | 700 | 17.1 | 4.0 | 0.9 | 22.0 | 54.6 | 3.1 | 20.3 | 78.0 | 0 |
| | Type 3 | 693 | 19.6 | 2.7 | 2.0 | 24.4 | 58.6 | 1.0 | 16.0 | 75.6 | 7 |

| Image | Exoskeleton | Pose 1 (%) | Pose 2 (%) | Pose 3 (%) | Pose 4 (%) | Pose 5 (%) | Pose 6 (%) | Pose 7 (%) | Pose 8 (%) | Pose 9 (%) | Pose 10 (%) | Pose 11 (%) | Pose 12 (%) | Pose 13 (%) | Pose 14 (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| RGB | Control | 72.0 * | 84.0 | 82.0 | 76.0 | 58.0 | 72.0 | 50.0 | 36.0 | 68.0 | 92.0 | 44.0 | 46.0 | 54.0 | 34.0 |
| | Type 1 | 74.0 | 94.0 | 82.0 | 70.0 | 40.0 | 52.0 | 38.3 | 24.0 | 79.6 | 80.0 | 40.0 | 48.0 | 48.0 | 26.0 |
| | Type 2 | 80.0 | 100.0 | 80.0 | 78.0 | 60.0 | 64.0 | 32.0 | 12.0 | 70.0 | 76.0 | 24.0 | 40.0 | 54.0 | 26.0 |
| | Type 3 | 64.0 | 90.0 | 80.0 | 79.2 | 70.0 | 68.0 | 34.0 | 20.0 | 60.0 | 78.0 | 32.0 | 35.6 | 50.0 | 28.0 |
| Depth | Control | 54.0 | 58.0 | 62.0 | 40.0 | 46.0 | 42.0 | 36.0 | 10.0 | 28.0 | 50.0 | 0.0 | 2.0 | 18.0 | 0.0 |
| | Type 1 | 22.0 | 42.0 | 52.0 | 30.0 | 40.0 | 26.0 | 2.1 | 0.0 | 14.3 | 36.0 | 8.0 | 0.0 | 0.0 | 0.0 |
| | Type 2 | 32.0 | 50.0 | 54.0 | 30.0 | 42.0 | 20.0 | 4.0 | 2.0 | 34.0 | 24.0 | 0.0 | 8.0 | 8.0 | 0.0 |
| | Type 3 | 40.0 | 54.0 | 44.0 | 39.6 | 42.0 | 34.0 | 20.0 | 0.0 | 26.0 | 38.0 | 2.0 | 0.0 | 0.0 | 0.0 |
| Total | | 54.8 | 71.5 | 67.0 | 55.3 | 49.8 | 47.3 | 27.2 | 13.0 | 47.5 | 59.3 | 18.8 | 22.3 | 29.0 | 14.4 |
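The Total row of the per-pose table is consistent with pooling the underlying counts rather than averaging the eight percentages. For pose 7, a plain mean of the eight rows gives 27.1%, while pooling gives the published 27.2%. The Python sketch below shows both. The counts are our reconstruction, not data from the text: we assume 50 evaluations per cell (10 viewpoints × 5 trials, consistent with the 700 evaluations per image and exoskeleton) and 3 missing samples in each Type 1 row for this pose, which is the only split that reproduces 38.3% and 2.1%.

```python
"""Pooled versus unweighted averaging for the per-pose Total row (sketch, pose 7)."""

# (acceptable samples, scored samples) per image/exoskeleton row for pose 7.
# Reconstructed from the published percentages under the assumptions stated above.
ROWS = {
    ("RGB", "Control"): (25, 50),    # 50.0%
    ("RGB", "Type 1"): (18, 47),     # 38.3%
    ("RGB", "Type 2"): (16, 50),     # 32.0%
    ("RGB", "Type 3"): (17, 50),     # 34.0%
    ("Depth", "Control"): (18, 50),  # 36.0%
    ("Depth", "Type 1"): (1, 47),    # 2.1%
    ("Depth", "Type 2"): (2, 50),    # 4.0%
    ("Depth", "Type 3"): (10, 50),   # 20.0%
}


def pooled_rate(rows: dict) -> float:
    """Total acceptable samples over total scored samples, in percent."""
    accepted = sum(a for a, _ in rows.values())
    scored = sum(n for _, n in rows.values())
    return 100.0 * accepted / scored


def unweighted_rate(rows: dict) -> float:
    """Arithmetic mean of the per-row percentages."""
    rates = [100.0 * a / n for a, n in rows.values()]
    return sum(rates) / len(rates)


if __name__ == "__main__":
    print(f"pooled: {pooled_rate(ROWS):.1f}%  unweighted: {unweighted_rate(ROWS):.1f}%")
```

Pooling weights each row by its number of scored samples, so rows with missing data count slightly less; the two methods only diverge for poses that have missing samples.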

| Image | Exoskeleton | Straight: Front (%) | Straight: Side-Front (%) | Straight: Side (%) | Straight: Side-Back (%) | Straight: Back (%) | Top-Down: Front (%) | Top-Down: Side-Front (%) | Top-Down: Side (%) | Top-Down: Side-Back (%) | Top-Down: Back (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| RGB | Control | 87.1 * | 100.0 | 38.6 | 30.0 | 30.0 | 67.1 | 91.4 | 62.9 | 55.7 | 57.1 |
| | Type 1 | 92.8 | 95.7 | 37.7 | 42.9 | 14.3 | 77.1 | 81.2 | 50.0 | 35.7 | 42.9 |
| | Type 2 | 84.3 | 97.1 | 27.1 | 34.3 | 38.6 | 71.4 | 74.3 | 57.1 | 35.7 | 48.6 |
| | Type 3 | 72.9 | 100.0 | 28.6 | 33.8 | 35.7 | 57.1 | 91.2 | 54.3 | 34.3 | 55.7 |
| Depth | Control | 57.1 | 64.3 | 21.4 | 2.9 | 27.1 | 41.4 | 50.0 | 20.0 | 21.4 | 12.9 |
| | Type 1 | 47.8 | 50.7 | 4.3 | 0.0 | 11.4 | 35.7 | 34.8 | 2.9 | 1.4 | 7.2 |
| | Type 2 | 60.0 | 37.1 | 15.7 | 2.9 | 10.0 | 35.7 | 35.7 | 2.9 | 7.1 | 12.9 |
| | Type 3 | 54.3 | 61.4 | 18.6 | 0.0 | 2.9 | 37.1 | 44.1 | 18.6 | 5.7 | 0.0 |
| Total | | 69.5 | 75.8 | 24.0 | 18.4 | 21.3 | 52.9 | 62.8 | 33.6 | 24.6 | 29.7 |

| Viewpoint | Direction | Pose 1 (%) | Pose 2 (%) | Pose 3 (%) | Pose 4 (%) | Pose 5 (%) | Pose 6 (%) | Pose 7 (%) | Pose 8 (%) | Pose 9 (%) | Pose 10 (%) | Pose 11 (%) | Pose 12 (%) | Pose 13 (%) | Pose 14 (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Straight | Front | 95.0 * | 100.0 | 100.0 | 50.0 | 100.0 | 87.5 | 71.1 | 2.5 | 85.0 | 97.5 | 32.5 | 52.5 | 67.5 | 32.5 |
| | Side-front | 85.0 | 87.5 | 100.0 | 100.0 | 100.0 | 92.5 | 78.9 | 47.5 | 92.5 | 82.5 | 52.5 | 47.5 | 45.0 | 50.0 |
| | Side | 77.5 | 72.5 | 10.0 | 70.0 | 7.5 | 15.0 | 2.5 | 2.5 | 15.0 | 15.0 | 17.5 | 7.5 | 0.0 | 22.5 |
| | Side-back | 0.0 | 47.5 | 55.0 | 47.5 | 2.5 | 2.5 | 2.5 | 12.5 | 10.0 | 47.5 | 0.0 | 16.7 | 12.5 | 0.0 |
| | Back | 0.0 | 57.5 | 67.5 | 0.0 | 25.0 | 0.0 | 20.0 | 10.0 | 0.0 | 65.0 | 0.0 | 2.5 | 50.0 | 0.0 |
| Top down | Front | 42.5 | 90.0 | 100.0 | 100.0 | 100.0 | 50.0 | 0.0 | 0.0 | 77.5 | 82.5 | 10.0 | 45.0 | 35.0 | 7.5 |
| | Side-front | 100.0 | 100.0 | 85.0 | 100.0 | 90.0 | 87.5 | 37.5 | 15.0 | 60.5 | 50.0 | 37.5 | 40.0 | 50.0 | 30.0 |
| | Side | 52.5 | 30.0 | 2.5 | 52.5 | 30.0 | 72.5 | 57.9 | 17.5 | 57.5 | 62.5 | 37.5 | 0.0 | 0.0 | 0.0 |
| | Side-back | 62.5 | 80.0 | 62.5 | 37.5 | 0.0 | 37.5 | 7.5 | 5.0 | 25.0 | 27.5 | 0.0 | 0.0 | 0.0 | 0.0 |
| | Back | 32.5 | 50.0 | 87.5 | 0.0 | 42.5 | 27.5 | 0.0 | 17.9 | 52.5 | 62.5 | 0.0 | 12.5 | 30.0 | 0.0 |
| Total | | 54.8 | 71.5 | 67.0 | 55.3 | 49.8 | 47.3 | 27.2 | 13.0 | 47.5 | 59.3 | 18.8 | 22.6 | 29.0 | 14.3 |

| Exoskeleton | Pose 1 | Pose 2 | Pose 3 | Pose 4 | Pose 5 | Pose 6 | Pose 7 | Pose 8 | Pose 9 | Pose 10 | Pose 11 | Pose 12 | Pose 13 | Pose 14 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Control | 100.0 * | 100.0 | 100.0 | 100.0 | 100.0 | 100.0 | 100.0 | 100.0 | 100.0 | 100.0 | 40.0 | 100.0 | 100.0 | 100.0 |
| Type 1 | 100.0 | 40.0 | 20.0 | 0.0 | 0.0 | 0.0 | 100.0 | 100.0 | 0.0 | 0.0 | 100.0 | 100.0 | 40.0 | 0.0 |
| Type 2 | 100.0 | 100.0 | 100.0 | 100.0 | 100.0 | 100.0 | 100.0 | 80.0 | 100.0 | 100.0 | 80.0 | 100.0 | 100.0 | 20.0 |
| Type 3 | 100.0 | 100.0 | 100.0 | 100.0 | 100.0 | 100.0 | 100.0 | 100.0 | 100.0 | 100.0 | 100.0 | 100.0 | 100.0 | 100.0 |

| Exoskeleton | RGB | Depth | OTS |
|---|---|---|---|
| Control | 0.62 | 0.32 | 0.96 |
| Type 1 | 0.57 | 0.19 | 0.43 |
| Type 2 | 0.57 | 0.22 | 0.91 |
| Type 3 | 0.56 | 0.24 | 1.00 |
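The OTS column of the summary table above agrees, to two decimals, with the mean of each row of the exoskeleton-by-pose table (the one in which Type 3 scores 100.0 for every pose), divided by 100. We infer this relationship from the numbers alone; the surviving text does not state how the column was computed. The Python sketch below reproduces the four OTS ratios under that assumption.

```python
"""OTS summary ratios recomputed from per-pose values (sketch, inferred relationship)."""

# Per-pose values (poses 1-14) transcribed from the exoskeleton-by-pose table.
PER_POSE = {
    "Control": [100, 100, 100, 100, 100, 100, 100, 100, 100, 100, 40, 100, 100, 100],
    "Type 1": [100, 40, 20, 0, 0, 0, 100, 100, 0, 0, 100, 100, 40, 0],
    "Type 2": [100, 100, 100, 100, 100, 100, 100, 80, 100, 100, 80, 100, 100, 20],
    "Type 3": [100] * 14,
}


def mean_ratio(values: list[float]) -> float:
    """Mean of percentage values, expressed as a 0-1 ratio."""
    return sum(values) / len(values) / 100.0


if __name__ == "__main__":
    for exo, values in PER_POSE.items():
        print(f"{exo}: {mean_ratio(values):.2f}")
```

Each pose carries equal weight here, which matches the published values; Type 1 drops to 0.43 because six of its fourteen poses score 0.0.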

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yoon, S.; Li-Baboud, Y.-S.; Virts, A.; Bostelman, R.; Shah, M.; Ahmed, N. Performance Evaluation of Monocular Markerless Pose Estimation Systems for Industrial Exoskeletons. Sensors 2025, 25, 2877. https://doi.org/10.3390/s25092877
