1. Introduction

With the increasing number of wireless devices and the development of beyond-5G and 6G technologies in industrial Internet of Things (IoT) networks, wireless sensor networks (WSNs) [1], as a key component of IoT systems, face higher demands for low latency, low remote state estimation (RSE) errors, and low symbol error rates (SERs) [2,3,4]. As the number of smart devices grows and large-scale IoT applications proliferate, ensuring the efficiency and reliability of these systems has become a critical issue.

To enhance spectrum efficiency in WSNs, non-orthogonal multiple access (NOMA) technology has been widely adopted because it supports multiple sensors on the same time–frequency resources through superposition coding and successive interference cancellation [5,6]. Recent studies have further enhanced NOMA by integrating active reconfigurable intelligent surfaces (RISs) to boost link reliability and energy efficiency. For instance, the work presented in [7] investigates joint UAV trajectory optimization and active RIS control in NOMA systems, highlighting significant energy efficiency gains. However, this study primarily addresses aerial–terrestrial network scenarios and is not directly applicable to ground-based sensor networks.

Both passive and active RIS technologies have been explored extensively for improving NOMA system performance. Recent research, such as [8], demonstrates the effectiveness of passive RISs in reducing the bit error rate (BER) and extending network coverage. Nevertheless, a passive RIS lacks active amplification capability and adaptability under highly dynamic channel conditions. Conversely, the active RIS studied in [9] enhances physical-layer security, supported by analytical derivations of the secrecy outage probability. However, that study does not incorporate critical aspects such as sensor grouping strategies or estimation error optimization.

Traditional optimization methods such as block coordinate descent (BCD) and successive convex approximation (SCA) have been employed for resource allocation in RIS-assisted NOMA networks. For example, Xu et al. [10] improve the system sum rate using BCD-based resource scheduling. Additionally, recent efforts such as [11] have explored low-complexity signal processing schemes, including sparse channel parameter estimation for MIMO-FBMC systems, which are highly relevant to industrial big data communication scenarios. However, these approaches typically focus on the physical layer and do not address joint decision-making or cross-layer coordination for sensor estimation. Moreover, they lack multi-agent coordination mechanisms and do not consider RSE error control, which is essential in practical WSN deployments.

Meanwhile, reinforcement learning methods, including deep reinforcement learning (DRL), deep deterministic policy gradient (DDPG), and multi-agent DDPG (MADDPG), have emerged as effective solutions for dynamic resource allocation. A recent IEEE Transactions study [12] presents an attention-augmented MADDPG framework for NOMA-based vehicular mobile edge computing, demonstrating faster convergence and improved predictive accuracy under realistic workloads. Additionally, studies such as [13] have employed DRL to jointly optimize UAV trajectories, transmission power, and RIS phase shifts in RIS-aided UAV-NOMA systems. Nonetheless, these studies have predominantly considered passive RIS configurations and static user grouping, which limits their adaptability in dynamic scenarios.

To the best of our knowledge, no existing work has jointly addressed the remote state estimation (RSE) error, sensor grouping, transmission power control, and active RIS beamforming in an uplink NOMA-assisted WSN using a decentralized multi-agent reinforcement learning framework. To this end, we propose a MADDPG-based distributed policy learning approach. MADDPG is well suited to this setting because it explicitly models the interactions among agents and handles decentralized control in partially observable environments. In our scenario, each sensor acts as an agent that makes decisions based only on its local state, such as its channel gain and estimation error covariance. This naturally forms a decentralized partially observable Markov decision process (Dec-POMDP), for which MADDPG is well suited: it enables coordinated learning through centralized training while retaining independent execution, supports hybrid discrete–continuous action spaces, and scales to large sensor networks with dynamic channel conditions.

The main contributions of this paper are summarized as follows:

1. Joint Integration of an Active RIS and MADDPG: We propose a joint optimization framework that integrates an active RIS with MADDPG-based decentralized learning for uplink WSNs. This design improves the estimation accuracy, reduces the power consumption, and enhances the system's adaptability.

2. Real-Time Estimation via Kalman Filtering: The proposed method incorporates Kalman filtering (KF) to enable real-time state tracking, which improves the estimation robustness under varying network conditions.

3. Decentralized Resource Allocation: We formulate a novel decentralized resource allocation problem that jointly considers sensor grouping, power control, and active RIS beamforming to minimize the RSE error, an optimization objective rarely addressed in existing works. Each sensor acts as an independent agent and makes decisions based on its local state in a Dec-POMDP setting, and the MADDPG framework is employed to enable scalable, coordinated learning among agents. While our method builds on an established RL architecture, it is tailored to a distinctive estimation-driven optimization task in WSNs. We also acknowledge the absence of a formal convergence analysis and highlight it as a direction for future investigation.

4. Performance Gains Verified by Simulations: Simulation results show significant improvements in RSE error reduction and system reliability compared with conventional and single-agent approaches, confirming the practicality of the proposed framework.

The remainder of this paper is organized as follows:

Section 2 details the local sensor state estimation and the uplink NOMA transmission model with an active RIS, including the derivation of the RSE error metrics and the outage probability model.

Section 3 introduces the MADDPG-based decentralized algorithm, elaborating on the joint optimization framework that integrates sensor grouping, power allocation, and active RIS beamforming and incorporates KF for enhanced real-time state estimation.

Section 4 presents the simulation experiments, performance comparisons with benchmark algorithms, and a comprehensive analysis of the results, demonstrating improvements in RSE accuracy and network reliability.

Finally, Section 5 concludes this paper by summarizing the key findings and potential directions for future research.

Notations: $\mathbb{R}$ denotes the set of real numbers, and $\mathbb{C}$ denotes the set of complex numbers. The superscript $(\cdot)^{T}$ denotes the transpose, and $(\cdot)^{-1}$ denotes the matrix inverse. $\Pr(\cdot)$ denotes probability, and $\mathbb{E}[\cdot]$ denotes expectation. $\mathrm{Tr}(\cdot)$ denotes the trace of a matrix. Bold symbols (e.g., $\mathbf{x}$ and $\mathbf{A}$) denote vectors or matrices, with lowercase bold letters representing vectors and uppercase bold letters representing matrices. Non-bold symbols (e.g., $p$) denote scalar quantities such as real-valued parameters or constants.

2. The System Model

This letter explores an RSE system based on an uplink NOMA transmission model aided by an active RIS. As depicted in Figure 1, the system consists of a single BS equipped with $G$ receive antennas, an active RIS with $M$ reflective elements, and $N$ single-antenna sensors. To facilitate decentralized decision-making, the $N$ sensors are partitioned into $G$ sensor groups, all of which are served by the $G$-antenna BS. Note that the grouping does not imply a one-to-one mapping between groups and antennas; instead, it is designed for efficient multi-agent training and resource allocation. The sets of sensors, sensor groups, and reflective elements are denoted as $\mathcal{N}=\{1,\dots,N\}$, $\mathcal{G}=\{1,\dots,G\}$, and $\mathcal{M}=\{1,\dots,M\}$, respectively.

2.1. Local State Estimation

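The output observed by sensor $n$ at time $k$ for the associated linear time-invariant (LTI) process can be expressed as

$$\mathbf{x}_{n,k+1}=\mathbf{A}_n\mathbf{x}_{n,k}+\mathbf{w}_{n,k}, \tag{1}$$

$$\mathbf{y}_{n,k}=\mathbf{C}_n\mathbf{x}_{n,k}+\mathbf{v}_{n,k}, \tag{2}$$

where $\mathbf{x}_{n,k}\in\mathbb{R}^{r_n}$ and $\mathbf{y}_{n,k}\in\mathbb{R}^{q_n}$ represent the state and measurement vectors, respectively [14], with $r_n$ and $q_n$ denoting the dimensions of the state and measurement vectors of sensor $n$. The state evolution is governed by the state transition matrix $\mathbf{A}_n\in\mathbb{R}^{r_n\times r_n}$, while the measurement process is characterized by the measurement matrix $\mathbf{C}_n\in\mathbb{R}^{q_n\times r_n}$. The disturbances $\mathbf{w}_{n,k}$ and $\mathbf{v}_{n,k}$ are assumed to be zero-mean, independent, and identically distributed (i.i.d.) Gaussian noise processes with covariance matrices $\mathbf{Q}_n$ and $\mathbf{R}_n$, respectively.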

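Each sensor runs a local Kalman filter (KF) and transmits the corresponding minimum mean squared error (MMSE) state estimate $\hat{\mathbf{x}}^{s}_{n,k}$ to the remote estimator. The estimate and its associated local estimation error covariance $\mathbf{P}^{s}_{n,k}$ are defined as [15]

$$\hat{\mathbf{x}}^{s}_{n,k}=\mathbb{E}\left[\mathbf{x}_{n,k}\mid\mathbf{y}_{n,1},\dots,\mathbf{y}_{n,k}\right], \tag{3}$$

$$\mathbf{P}^{s}_{n,k}=\mathbb{E}\left[\left(\mathbf{x}_{n,k}-\hat{\mathbf{x}}^{s}_{n,k}\right)\left(\mathbf{x}_{n,k}-\hat{\mathbf{x}}^{s}_{n,k}\right)^{T}\mid\mathbf{y}_{n,1},\dots,\mathbf{y}_{n,k}\right]. \tag{4}$$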

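The prior state estimate $\hat{\mathbf{x}}^{-}_{n,k}$ and the posterior state estimate $\hat{\mathbf{x}}^{s}_{n,k}$ at time $k$ are updated through the following steps, where $\mathbf{K}_{n,k}$ denotes the Kalman gain, and $\mathbf{P}^{-}_{n,k}$ and $\mathbf{P}^{s}_{n,k}$ denote the prior and posterior error covariances, respectively. The KF update process is given by

$$\hat{\mathbf{x}}^{-}_{n,k}=\mathbf{A}_n\hat{\mathbf{x}}^{s}_{n,k-1}, \tag{5}$$

$$\mathbf{P}^{-}_{n,k}=\mathbf{A}_n\mathbf{P}^{s}_{n,k-1}\mathbf{A}_n^{T}+\mathbf{Q}_n, \tag{6}$$

$$\mathbf{K}_{n,k}=\mathbf{P}^{-}_{n,k}\mathbf{C}_n^{T}\left(\mathbf{C}_n\mathbf{P}^{-}_{n,k}\mathbf{C}_n^{T}+\mathbf{R}_n\right)^{-1}, \tag{7}$$

$$\hat{\mathbf{x}}^{s}_{n,k}=\hat{\mathbf{x}}^{-}_{n,k}+\mathbf{K}_{n,k}\left(\mathbf{y}_{n,k}-\mathbf{C}_n\hat{\mathbf{x}}^{-}_{n,k}\right), \tag{8}$$

$$\mathbf{P}^{s}_{n,k}=\left(\mathbf{I}_{r_n}-\mathbf{K}_{n,k}\mathbf{C}_n\right)\mathbf{P}^{-}_{n,k}, \tag{9}$$

where $\mathbf{I}_{r_n}$ is the $r_n\times r_n$ identity matrix and $r_n$ denotes the dimension of the state vector $\mathbf{x}_{n,k}$.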

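Under standard detectability and stabilizability conditions, the local estimation error covariance $\mathbf{P}^{s}_{n,k}$ converges exponentially fast to a steady-state value. Without loss of generality, we assume that each sensor's KF has reached this steady state. To simplify the subsequent analysis, we denote the steady-state covariance as $\bar{\mathbf{P}}_n\triangleq\lim_{k\to\infty}\mathbf{P}^{s}_{n,k}$, $n\in\mathcal{N}$.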
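The update order in (5)–(9), and the steady state reached by the covariance, can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' implementation: the function names, the identity initialization of the covariance, and the tolerance are assumptions. Because (6), (7), and (9) do not depend on the measurements, the steady-state covariance can be obtained by iterating the recursion with dummy inputs.

```python
import numpy as np

def kf_step(x_post, P_post, y, A, C, Q, R):
    """One KF recursion following the order of (5)-(9)."""
    x_prior = A @ x_post                               # (5) prior estimate
    P_prior = A @ P_post @ A.T + Q                     # (6) prior covariance
    S = C @ P_prior @ C.T + R                          # innovation covariance
    K = P_prior @ C.T @ np.linalg.inv(S)               # (7) Kalman gain
    x_new = x_prior + K @ (y - C @ x_prior)            # (8) posterior estimate
    P_new = (np.eye(A.shape[0]) - K @ C) @ P_prior     # (9) posterior covariance
    return x_new, P_new

def steady_state_covariance(A, C, Q, R, tol=1e-10, max_iter=10_000):
    """Iterate the covariance recursion until P^s stops changing (assumed P^s_0 = I)."""
    r, q = A.shape[0], C.shape[0]
    x, P = np.zeros(r), np.eye(r)
    for _ in range(max_iter):
        # The covariance update ignores the measurement, so a zero y is sufficient here.
        _, P_next = kf_step(x, P, np.zeros(q), A, C, Q, R)
        if np.max(np.abs(P_next - P)) < tol:
            return P_next
        P = P_next
    raise RuntimeError("covariance did not converge within max_iter")
```

For the scalar case $A=C=Q=R=1$, the fixed point satisfies $P=(P+1)/(P+2)$, i.e., $P=(\sqrt{5}-1)/2\approx 0.618$, which the iteration reproduces.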

2.2. The Uplink Communication Model

After obtaining $\hat{\mathbf{x}}^{s}_{n,k}$, sensor $n$ transmits it to the BS as a data packet. Communication between the sensors and the BS follows an uplink NOMA model assisted by an active RIS, which enhances signal propagation by improving both signal quality and coverage.

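The equivalent baseband time-domain channel in the system consists of three parts: the channel from the RIS to the BS, the channel from sensor $n$ to the RIS, and the channel from sensor $n$ to the BS, denoted by $\mathbf{F}\in\mathbb{C}^{G\times M}$, $\mathbf{h}_{\mathrm{r},n}\in\mathbb{C}^{M\times 1}$, and $\mathbf{h}_{\mathrm{d},n}\in\mathbb{C}^{G\times 1}$, respectively. The reflection coefficient of RIS element $m$ is expressed as $\phi_m=\alpha_m e^{j\theta_m}$, where $\alpha_m$ represents the reflection amplitude and $\theta_m\in[0,2\pi)$ denotes the phase shift. The RIS beamforming is then captured by the reflection coefficient matrix $\boldsymbol{\Phi}=\mathrm{diag}(\phi_1,\dots,\phi_M)$ [2]. Unlike passive RISs, active RISs are equipped with amplifiers that consume additional power, so the thermal noise generated at the active RIS is no longer negligible. Consequently, the signal received at the BS on channel $g$ can be modeled as

$$\mathbf{y}^{\mathrm{BS}}_{g,k}=\sum_{n\in\mathcal{N}}\beta_{n,g,k}\sqrt{p_{n,k}}\,\mathbf{h}_n s_{n,k}+\mathbf{z}_{g,k}, \tag{10}$$

where $\beta_{n,g,k}\in\{0,1\}$ represents the binary channel selection for sensor $n$, with $\beta_{n,g,k}=1$ if sensor $n$ is assigned to channel $g$ at time $k$. The total channel of sensor $n$ is $\mathbf{h}_n=\mathbf{h}_{\mathrm{d},n}+\mathbf{F}\boldsymbol{\Phi}\mathbf{h}_{\mathrm{r},n}$, $n\in\mathcal{N}$, and the noise is defined as $\mathbf{z}_{g,k}=\mathbf{F}\boldsymbol{\Phi}\mathbf{e}_{g,k}+\mathbf{u}_{g,k}$. The thermal noise at the RIS, $\mathbf{e}_{g,k}$, follows a circularly symmetric complex Gaussian distribution, i.e., $\mathbf{e}_{g,k}\sim\mathcal{CN}(\mathbf{0},\sigma_{\mathrm{r}}^{2}\mathbf{I}_M)$. Similarly, the thermal noise at the BS is $\mathbf{u}_{g,k}\sim\mathcal{CN}(\mathbf{0},\sigma_{\mathrm{b}}^{2}\mathbf{I}_G)$ [2]. Here, $s_{n,k}$ is the data symbol transmitted by sensor $n$ at time $k$, and $p_{n,k}$ is the corresponding transmit power. We assume that the transmitted symbols are i.i.d. with $s_{n,k}\sim\mathcal{CN}(0,1)$. As is customary for uplink NOMA, the sensors are indexed in descending order of channel gain, i.e., $\|\mathbf{h}_1\|^2\ge\|\mathbf{h}_2\|^2\ge\cdots\ge\|\mathbf{h}_N\|^2$.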
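Because the RIS thermal noise and the BS noise are independent and zero-mean, the covariance of the aggregate noise follows in one line. Writing the aggregate noise as $\mathbf{z}_{g,k}=\mathbf{F}\boldsymbol{\Phi}\mathbf{e}_{g,k}+\mathbf{u}_{g,k}$, with $\mathbf{F}$ the RIS-to-BS channel, $\boldsymbol{\Phi}=\mathrm{diag}(\alpha_1e^{j\theta_1},\dots,\alpha_Me^{j\theta_M})$, $\mathbf{e}_{g,k}\sim\mathcal{CN}(\mathbf{0},\sigma_{\mathrm{r}}^{2}\mathbf{I}_M)$, $\mathbf{u}_{g,k}\sim\mathcal{CN}(\mathbf{0},\sigma_{\mathrm{b}}^{2}\mathbf{I}_G)$, $(\cdot)^{H}$ the conjugate transpose, and $\mathbf{f}_m$ the $m$-th column of $\mathbf{F}$ (symbols assumed here for illustration), and using $\boldsymbol{\Phi}\boldsymbol{\Phi}^{H}=\mathrm{diag}(\alpha_1^2,\dots,\alpha_M^2)$:

$$\mathbb{E}\big[\mathbf{z}_{g,k}\mathbf{z}_{g,k}^{H}\big]=\sigma_{\mathrm{r}}^{2}\,\mathbf{F}\boldsymbol{\Phi}\boldsymbol{\Phi}^{H}\mathbf{F}^{H}+\sigma_{\mathrm{b}}^{2}\mathbf{I}_G=\sigma_{\mathrm{r}}^{2}\sum_{m=1}^{M}\alpha_m^{2}\,\mathbf{f}_m\mathbf{f}_m^{H}+\sigma_{\mathrm{b}}^{2}\mathbf{I}_G .$$

Taking the trace gives a total noise power of $\sigma_{\mathrm{r}}^{2}\sum_{m}\alpha_m^{2}\|\mathbf{f}_m\|^{2}+G\sigma_{\mathrm{b}}^{2}$, which makes explicit that the amplification factors $\alpha_m$ scale the RIS noise together with the reflected signal. This is one way to interpret the effective noise term used in the SINR below, not necessarily the exact expression adopted in the paper.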

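The channel model comprises both path loss and small-scale fading. For the direct link between the BS and sensor $n$, we assume Rayleigh fading. The corresponding channel can be expressed as

$$\mathbf{h}_{\mathrm{d},n}=\sqrt{d_{\mathrm{d},n}^{-\alpha_{\mathrm{d}}}}\;\tilde{\mathbf{h}}_{\mathrm{d},n}, \tag{11}$$

where $d_{\mathrm{d},n}$ is the distance between the BS and sensor $n$, $\alpha_{\mathrm{d}}$ is the path loss exponent, and $\tilde{\mathbf{h}}_{\mathrm{d},n}$ represents the small-scale fading channel, modeled as $\tilde{\mathbf{h}}_{\mathrm{d},n}\sim\mathcal{CN}(\mathbf{0},\mathbf{I}_G)$.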

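The active RIS is deployed at a fixed location where line-of-sight (LoS) links exist from the BS to the RIS and from the RIS to sensor $n$. Therefore, the reflection channels follow Rician fading. The channel matrix from the RIS to the BS, $\mathbf{F}$, and the channel vector from sensor $n$ to the RIS, $\mathbf{h}_{\mathrm{r},n}$, are given by [16]

$$\mathbf{F}=\sqrt{d_{\mathrm{BR}}^{-\alpha_{\mathrm{BR}}}}\left(\sqrt{\frac{\delta_{\mathrm{BR}}}{1+\delta_{\mathrm{BR}}}}\,\mathbf{F}^{\mathrm{LoS}}+\sqrt{\frac{1}{1+\delta_{\mathrm{BR}}}}\,\mathbf{F}^{\mathrm{NLoS}}\right), \tag{12}$$

$$\mathbf{h}_{\mathrm{r},n}=\sqrt{d_{\mathrm{RS},n}^{-\alpha_{\mathrm{RS}}}}\left(\sqrt{\frac{\delta_{\mathrm{RS}}}{1+\delta_{\mathrm{RS}}}}\,\mathbf{h}^{\mathrm{LoS}}_{\mathrm{r},n}+\sqrt{\frac{1}{1+\delta_{\mathrm{RS}}}}\,\mathbf{h}^{\mathrm{NLoS}}_{\mathrm{r},n}\right), \tag{13}$$

where $d_{\mathrm{BR}}$ and $d_{\mathrm{RS},n}$ represent the distances from the BS to the RIS and from the RIS to sensor $n$, respectively. $\alpha_{\mathrm{BR}}$ and $\alpha_{\mathrm{RS}}$ are the path loss exponents, while $\delta_{\mathrm{BR}}$ and $\delta_{\mathrm{RS}}$ denote the Rician factors. The terms $\mathbf{F}^{\mathrm{LoS}}$ and $\mathbf{h}^{\mathrm{LoS}}_{\mathrm{r},n}$ represent the LoS components from the RIS to the BS and from the sensor to the RIS, respectively. The small-scale fading components, $\mathbf{F}^{\mathrm{NLoS}}$ and $\mathbf{h}^{\mathrm{NLoS}}_{\mathrm{r},n}$, follow the complex Gaussian distributions $\mathrm{vec}(\mathbf{F}^{\mathrm{NLoS}})\sim\mathcal{CN}(\mathbf{0},\mathbf{I}_{GM})$ and $\mathbf{h}^{\mathrm{NLoS}}_{\mathrm{r},n}\sim\mathcal{CN}(\mathbf{0},\mathbf{I}_M)$, respectively.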
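The channel model above (Rayleigh direct link, Rician reflection links, and the effective channel $\mathbf{h}_n=\mathbf{h}_{\mathrm{d},n}+\mathbf{F}\boldsymbol{\Phi}\mathbf{h}_{\mathrm{r},n}$) can be sampled as follows. This is a sketch under stated assumptions: the LoS components are modeled as half-wavelength uniform-linear-array steering vectors with arbitrary fixed angles, and all function names, distances, and exponents passed in are hypothetical placeholders rather than the paper's simulation code.

```python
import numpy as np

def ula_steering(num, angle):
    # Assumed LoS model: half-wavelength ULA steering vector of length `num`.
    return np.exp(1j * np.pi * np.arange(num) * np.sin(angle))

def cn(rng, shape):
    # i.i.d. CN(0, 1) entries.
    return (rng.standard_normal(shape) + 1j * rng.standard_normal(shape)) / np.sqrt(2)

def rician(los, delta, rng):
    # LoS/NLoS mixture with Rician factor delta.
    return np.sqrt(delta / (1 + delta)) * los + np.sqrt(1 / (1 + delta)) * cn(rng, los.shape)

def generate_channels(G, M, d_d, d_br, d_rs, alpha_d, alpha_br, alpha_rs,
                      delta_br, delta_rs, rng):
    h_d = np.sqrt(d_d ** -alpha_d) * cn(rng, (G,))                    # Rayleigh direct link
    F_los = np.outer(ula_steering(G, 0.3), ula_steering(M, -0.2).conj())
    F = np.sqrt(d_br ** -alpha_br) * rician(F_los, delta_br, rng)     # RIS -> BS
    h_r = np.sqrt(d_rs ** -alpha_rs) * rician(ula_steering(M, 0.5), delta_rs, rng)  # sensor -> RIS
    return h_d, F, h_r

def effective_channel(h_d, F, h_r, amp, theta):
    # h_n = h_d + F diag(amp * e^{j theta}) h_r
    Phi = np.diag(amp * np.exp(1j * theta))
    return h_d + F @ Phi @ h_r
```

Setting all amplitudes to zero switches the RIS off and leaves only the direct link, which is a convenient sanity check when comparing with the no-RIS baseline.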

According to the uplink NOMA decoding protocol with successive interference cancellation (SIC), the signal from sensor $n$ is decoded after those from the sensors with smaller indices (i.e., $i<n$), whose signals are assumed to have been successfully decoded and removed at the BS. Thus, the residual interference experienced by sensor $n$ originates only from sensors with indices greater than $n$. The signal-to-interference-plus-noise ratio (SINR) of sensor $n$ assigned to channel $g$ (i.e., $\beta_{n,g,k}=1$) is given by

$$\mathrm{SINR}_{n,k}=\frac{p_{n,k}\|\mathbf{h}_n\|^2}{\sum_{i=n+1}^{N}\beta_{i,g,k}\,p_{i,k}\|\mathbf{h}_i\|^2+\sigma_{\mathrm{eff}}^{2}}, \tag{14}$$

where $\sigma_{\mathrm{eff}}^{2}$ accounts for the effective noise at the BS, including thermal noise from both the RIS and the BS receiver.
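The SIC ordering and a Q-function-based SER can be illustrated together. The sketch below computes the SINR of each sensor from its channel gain, with interference only from higher-index sensors on the same channel, and then evaluates an SER assuming BPSK signaling, $\mathrm{SER}=Q(\sqrt{2\,\mathrm{SINR}})$. The modulation is not stated in the text and is an assumption here, as is the Chernoff-type bound $\tfrac12 e^{-\mathrm{SINR}}$ shown as one example of a closed-form approximation; it is not necessarily the approximation used in [3].

```python
import math
import numpy as np

def sic_sinr(gains, powers, groups, sigma2_eff):
    """SINR under SIC; gains = ||h_n||^2, assumed sorted in descending order."""
    gains = np.asarray(gains, dtype=float)
    powers = np.asarray(powers, dtype=float)
    groups = np.asarray(groups)
    sinr = np.empty_like(gains)
    for n in range(gains.size):
        later = np.arange(n + 1, gains.size)           # sensors decoded after n
        same = later[groups[later] == groups[n]]       # ...that share n's channel
        interference = float(np.sum(powers[same] * gains[same]))
        sinr[n] = powers[n] * gains[n] / (interference + sigma2_eff)
    return sinr

def ser_bpsk(sinr):
    # Q(sqrt(2*sinr)) rewritten via erfc, since Q(x) = 0.5 * erfc(x / sqrt(2)).
    return 0.5 * math.erfc(math.sqrt(sinr))

def ser_chernoff(sinr):
    # Upper bound from Q(x) <= 0.5 * exp(-x^2 / 2).
    return 0.5 * math.exp(-sinr)

def success_probability(sinr):
    # Assumed per-packet success model: 1 - SER.
    return 1.0 - ser_bpsk(sinr)
```

With gains $[4,2,1]$, unit powers, unit noise, and all three sensors on one channel, every sensor ends up with SINR 1; moving the second sensor to another channel raises the first two SINRs to 2, showing how grouping reduces residual interference.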

In digital communication theory, the SER of a single sensor is expressed as a function of its SINR through the Gaussian Q-function $Q(\cdot)$. Applying this relation, the SER can be approximated in closed form [3]. For sensor $n$ at time $k$, the approximated SER, denoted by $\mathrm{SER}_{n,k}$, is given as

2.3. Remote State Estimation

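Each sensor transmits its local state estimate to the remote estimator (i.e., the BS) over a wireless channel using RIS-assisted uplink NOMA. Thus, the transmission of $\hat{\mathbf{x}}^{s}_{n,k}$ can be characterized by a binary random process $\gamma_{n,k}$, where (see Figure 2)

$$\gamma_{n,k}=\begin{cases}1, & \text{if } \hat{\mathbf{x}}^{s}_{n,k} \text{ is successfully received by the BS at time } k,\\ 0, & \text{otherwise}.\end{cases} \tag{16}$$

Combining (15) and (16), the probability of successful transmission of $\hat{\mathbf{x}}^{s}_{n,k}$ is expressed as

$$\lambda_{n,k}=\Pr\left(\gamma_{n,k}=1\right)=1-\mathrm{SER}_{n,k}. \tag{17}$$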

Then, the RSE error covariance is [

3]

where

is a positive parameter and

(see Algorithm 1).

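To quantify the remote estimation quality of sensor $n$ at time $k$, we define the trace of its expected RSE error covariance as

$$J_{n,k}\triangleq\mathrm{Tr}\left(\mathbb{E}\left[\mathbf{P}_{n,k}\right]\right), \tag{19}$$

where $\mathbf{P}_{n,k}$ denotes the RSE error covariance of sensor $n$ at time $k$.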

Based on the preceding system model and derivations, the optimization objective is to minimize the sum of the expected RSE error covariance traces in (19) over all sensors and time slots. This is formulated as Problem 1 in (20), subject to constraints (20a)–(20d), where $K$ is the number of time slots. The amplification power of the active RIS is modeled following [2], where $\xi$ is the amplifier efficiency, $P_{\mathrm{tot}}$ is the total power budget, $P_{\mathrm{DC}}$ is the DC biasing power consumption, and $P_{\mathrm{c}}$ is the circuit power consumption of the active RIS. Constraint (20a) ensures that the transmission power of sensor $n$ does not exceed the maximum $p_{\max}$. Constraints (20b) and (20c) enforce that each channel supports at most $I$ sensors and that each sensor is assigned to exactly one channel. Together, these two constraints imply that the total number of sensors $N$ must not exceed the overall channel capacity, i.e., $N\le GI$, for a feasible sensor-to-channel assignment to exist. For example, in the simulation setting of Table 1 ($N=9$ sensors, $G=3$ channels, and at most $I=3$ sensors per channel), the total capacity is $GI=9$, so the assignment is feasible. If instead $N>GI$, at least one sensor cannot be accommodated, which violates (20c). Constraint (20d) limits the amplification power of the active RIS.
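Constraints (20b) and (20c) can be checked directly on a binary assignment matrix. The sketch below assumes an $N\times G$ NumPy 0/1 matrix whose entry $(n,g)$ plays the role of $\beta_{n,g,k}$ at a fixed time slot; the round-robin helper is hypothetical (it is not the learned grouping policy) and only demonstrates that $N\le GI$ is sufficient, because each channel then receives at most $\lceil N/G\rceil\le I$ sensors.

```python
import numpy as np

def is_feasible_assignment(beta, capacity):
    """Check (20b) and (20c) for a binary N x G assignment matrix."""
    beta = np.asarray(beta)
    binary = bool(np.isin(beta, (0, 1)).all())
    one_channel_each = bool((beta.sum(axis=1) == 1).all())         # (20c)
    within_capacity = bool((beta.sum(axis=0) <= capacity).all())   # (20b)
    return binary and one_channel_each and within_capacity

def round_robin_assignment(N, G, capacity):
    """Hypothetical helper: assign sensor n to channel n mod G."""
    if N > G * capacity:
        raise ValueError("infeasible: N exceeds G * I")
    beta = np.zeros((N, G), dtype=int)
    beta[np.arange(N), np.arange(N) % G] = 1
    return beta
```

With the Table 1 values ($N=9$, $G=I=3$), the round-robin assignment places exactly three sensors on each channel; asking for a tenth sensor raises an error, mirroring the infeasible case $N>GI$.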

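| Algorithm 1: Iterative Algorithm Based on the KF Method. |

Input: $\mathbf{A}_n$, $\mathbf{C}_n$, $\mathbf{Q}_n$, $\mathbf{R}_n$. Output: $\mathbf{P}_{n,k}$. Initialization: initial error covariance and iteration index.

1: while the convergence criterion is not satisfied do

2: Calculate $\mathbf{P}^{s}_{n,k}$ according to formulas (5)–(9)

3: Update the covariance iterate

4: Update the iteration index

5: end while

6: Obtain $\bar{\mathbf{P}}_n$

7: for $k=1$ to $K$ do

8: for $n=1$ to $N$ do

9: for $g=1$ to $G$ do

10: Set $\gamma_{n,k}$ according to (16)

11: if $\gamma_{n,k}=1$ then

12: $\mathbf{P}_{n,k}=\bar{\mathbf{P}}_n$

13: else

14: $\mathbf{P}_{n,k}=\mathbf{A}_n\mathbf{P}_{n,k-1}\mathbf{A}_n^{T}+\mathbf{Q}_n$

15: end if

16: end for

17: end for

18: end for

|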
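The scheduling phase of Algorithm 1 can be illustrated with the packet-drop recursion that is common in the RSE literature: the remote covariance resets to the steady-state value $\bar{\mathbf{P}}_n$ when the packet is received and otherwise evolves as $\mathbf{A}_n\mathbf{P}\mathbf{A}_n^{T}+\mathbf{Q}_n$. This recursion, the i.i.d. Bernoulli success model with a fixed probability $\lambda$, and the function name are assumptions for illustration only; the sketch averages the per-slot trace to approximate the quantity in (19) for one sensor.

```python
import numpy as np

def simulate_rse_trace(A, Q, P_bar, success_prob, K, rng):
    """Per-slot Tr(P_{n,k}) under an assumed i.i.d. packet-drop model."""
    P = P_bar.copy()
    traces = np.empty(K)
    for k in range(K):
        if rng.random() < success_prob:   # gamma_{n,k} = 1: estimate received
            P = P_bar.copy()
        else:                             # gamma_{n,k} = 0: open-loop prediction
            P = A @ P @ A.T + Q
        traces[k] = np.trace(P)
    return traces

# Usage: Monte Carlo estimate of the expected RSE trace for one sensor.
# rng = np.random.default_rng(0)
# avg = simulate_rse_trace(A, Q, P_bar, 0.9, 4000, rng).mean()
```

Consecutive losses make the trace grow (geometrically when $\mathbf{A}_n$ is unstable), which is why a high per-slot success probability is central to the objective.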

Based on the well-known properties of the standard KF (see Lemma 2.3 in [

17]), it follows that

, where

is a fixed constant. Consequently, by applying the natural logarithm function to (

19), the expression can be expressed as

Therefore, Problem 1 can be transformed into

3. MADDPG-Based Resource Allocation

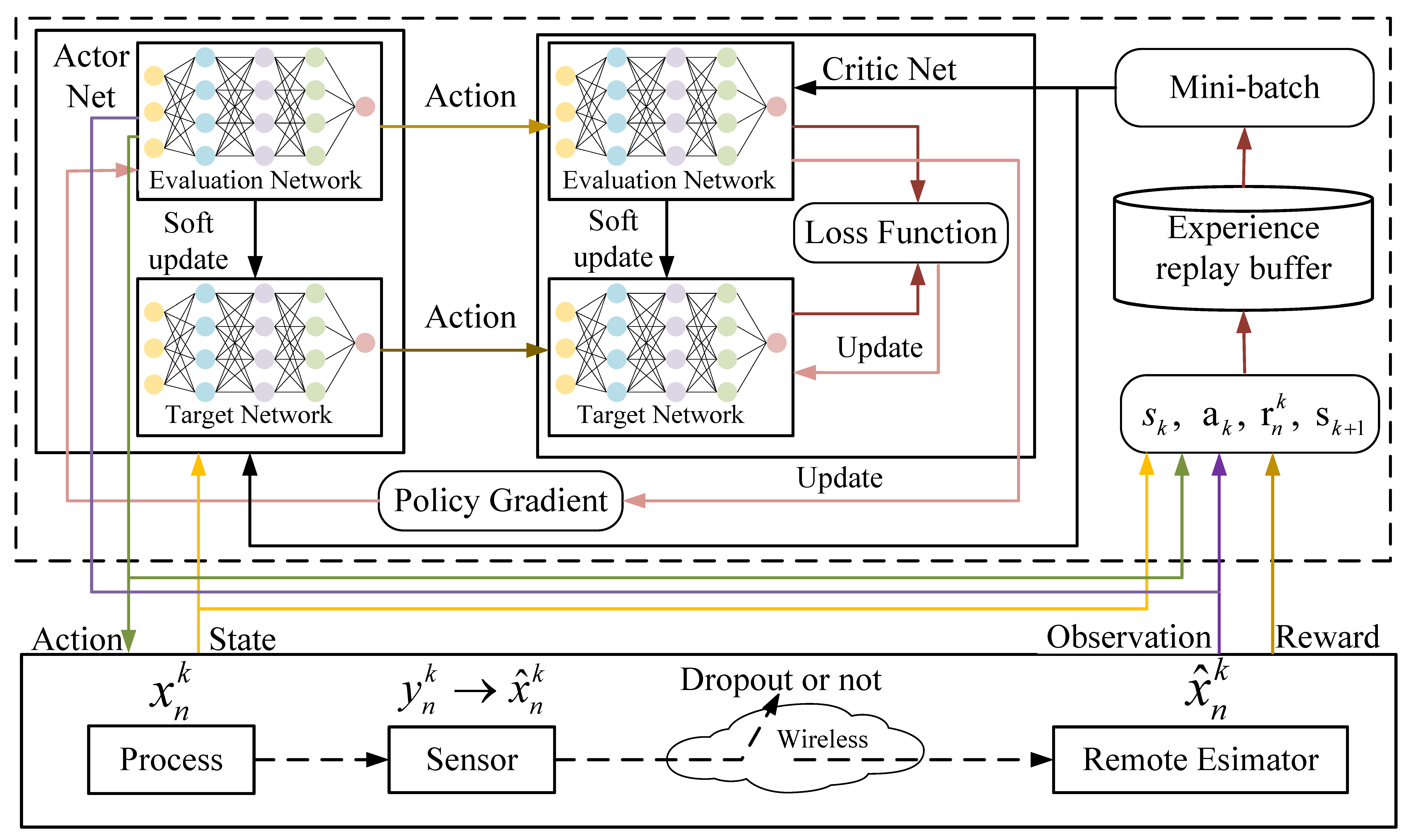

In this section, we first reformulate Problem 2 as a multi-agent Markov decision process (MDP) and then solve it using the MADDPG algorithm (Algorithm 2). As shown in Figure 2, the proposed system architecture follows a centralized training and decentralized execution (CTDE) framework, which is well suited to distributed WSNs. Specifically, the MADDPG algorithm is trained offline at a fusion center or an edge server, where both the actor and critic networks are optimized on collected environmental data. After training, only the lightweight actor networks are deployed to the individual sensor nodes, so each agent makes decisions independently from its local observation without online coordination. This ensures scalability and practicality in decentralized scenarios. Regarding the deployment of the active RIS, we assume that it is installed at a fixed infrastructure location (e.g., a gateway node or controller hub) where an external or battery-based power supply is available. Each active element is equipped with a low-power amplifier, and the number of elements is limited so that the overall power consumption remains within practical bounds. The RIS beamforming actions are optimized jointly with power control and sensor grouping through the learned policies.

These implementation considerations indicate that the proposed active RIS-assisted MADDPG architecture is practically feasible for deployment in low-power, distributed WSN environments.

Figure 2.

Schematic of MADDPG.

3.1. MDP Formulation

As depicted in Figure 2, each sensor functions as an individual agent. At time step $k$, agent $n$ receives an observation $o_{n,k}$ from the environment and takes an action $a_{n,k}$. Given the global state $S_k$ and the joint action $\mathbf{a}_k=\{a_{1,k},\dots,a_{N,k}\}$ at time step $k$, the agent receives an immediate reward $r_{n,k}$ and the subsequent observation $o_{n,k+1}$. For sensor $n$, the observable information at time $k$ comprises the channel quality state at its current location and its RSE error covariance, represented as $o_{n,k}=\{\mathbf{h}_n,\mathbf{P}_{n,k}\}$. The system state at time $k$ is the collection of all sensor observations, $S_k=\{o_{1,k},\dots,o_{N,k}\}$. At each time step, each sensor selects an action consisting of its power allocation $p_{n,k}$, its sensor grouping $\boldsymbol{\beta}_{n,k}=[\beta_{n,1,k},\dots,\beta_{n,G,k}]$, and its RIS beamforming selection $\boldsymbol{\Phi}_{n,k}$. Thus, the action can be written as $a_{n,k}=\{p_{n,k},\boldsymbol{\beta}_{n,k},\boldsymbol{\Phi}_{n,k}\}$. The reward function assesses each agent's performance and guides its decision-making. The objective of this letter is to minimize the total RSE error of all sensors. MADDPG is typically used for maximization problems, where each agent selects actions to maximize its reward [18]. Because Problem 2 is a minimization problem, we define the reward as the negative of the cost in Problem 2.
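The hybrid action (continuous power, discrete grouping, continuous RIS phases) has to be decoded from the continuous output of a DDPG-style actor. One common way to do this is sketched below; the output layout $[1+G+M]$, the argmax decoding of the group, the phase-only RIS action with fixed amplitudes, and the per-step cost (sum of RSE traces) are all assumptions for illustration, not details confirmed by the paper.

```python
import numpy as np

def decode_action(raw, p_max_mw, G, M):
    """Split an actor output in [-1, 1]^(1+G+M) into power, one-hot group, RIS phases."""
    raw = np.clip(np.asarray(raw, dtype=float), -1.0, 1.0)
    if raw.size != 1 + G + M:
        raise ValueError("expected 1 + G + M actor outputs")
    power = (raw[0] + 1.0) / 2.0 * p_max_mw          # continuous power in [0, p_max]
    group = int(np.argmax(raw[1:1 + G]))             # discrete group via argmax over G scores
    beta = np.zeros(G, dtype=int)
    beta[group] = 1                                  # one-hot grouping vector
    phases = (raw[1 + G:] + 1.0) * np.pi             # continuous phases in [0, 2*pi]
    return power, beta, phases

def step_reward(rse_traces):
    """Reward = negative cost; the cost is assumed to be the sum of RSE traces."""
    return -float(np.sum(rse_traces))
```

For $p_{\max}=20$ dBm in Table 1, `p_max_mw` would be 100 mW. Decoding the group with argmax keeps the actor fully continuous, so the standard deterministic policy gradient still applies to the raw output.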

3.2. The MADDPG Algorithm

In this section, we propose the RIS-MADDPG algorithm to solve the optimization problem above. MADDPG is a widely used multi-agent deep reinforcement learning (MADRL) method for continuous control problems. For $N$ agents, MADDPG involves $N$ actor networks and $N$ critic networks, as shown in Figure 2. Each agent's actor network generates actions according to its policy $\mu_n$, implemented by a neural network with parameters $\theta_n^{\mu}$. The critic network of agent $n$, denoted $Q_n^{\mu}$ and parameterized by $\theta_n^{Q}$, evaluates the quality of the agents' actions given the system state and the joint action. Each agent $n$ has an experience replay memory $\mathcal{D}_n$ that stores transition tuples $(S_k,\mathbf{a}_k,r_{n,k},S_{k+1})$. During training, each agent samples a minibatch from its memory to update its policy. The policy gradient for agent $n$ is computed as follows [18]:

$$\nabla_{\theta_n^{\mu}}J(\theta_n^{\mu})=\mathbb{E}_{S,\mathbf{a}\sim\mathcal{D}_n}\left[\nabla_{\theta_n^{\mu}}\mu_n(o_n)\,\nabla_{a_n}Q_n^{\mu}(S,a_1,\dots,a_N)\big|_{a_n=\mu_n(o_n)}\right]. \tag{23}$$

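To stabilize the learning process, MADDPG employs target networks. The actor and critic networks are trained by backpropagation, while the target actor and target critic networks, $\mu_n'$ and $Q_n^{\mu'}$, provide slowly varying learning targets. The loss function for updating the critic network is

$$\mathcal{L}(\theta_n^{Q})=\mathbb{E}_{S,\mathbf{a},r,S'}\left[\left(Q_n^{\mu}(S,a_1,\dots,a_N)-y_n\right)^{2}\right], \tag{24}$$

where $y_n=r_n+\eta\,Q_n^{\mu'}(S',a_1',\dots,a_N')\big|_{a_j'=\mu_j'(o_j')}$ and $\eta\in[0,1)$ is the discount factor.

After updating $\theta_n^{\mu}$ and $\theta_n^{Q}$, the parameters of the target networks, $\theta_n^{\mu'}$ and $\theta_n^{Q'}$, are softly updated as

$$\theta_n^{\mu'}\leftarrow\tau_{\mu}\theta_n^{\mu}+(1-\tau_{\mu})\theta_n^{\mu'}, \tag{25}$$

$$\theta_n^{Q'}\leftarrow\tau_{Q}\theta_n^{Q}+(1-\tau_{Q})\theta_n^{Q'}, \tag{26}$$

where $\tau_{\mu}\in(0,1]$ and $\tau_{Q}\in(0,1]$ are the soft-update parameters of the target networks, and $T_{\mathrm{u}}$ denotes the update interval that determines how frequently the actor and target networks are updated during training. The detailed steps of the RIS-MADDPG algorithm are provided in Algorithm 2.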
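The critic target in (24) and the soft updates in (25) and (26) reduce to a few array operations. In the sketch below, NumPy arrays in a dictionary stand in for network parameters; the parameter layout, the function names, and the terminal-step handling are illustrative assumptions, and a practical implementation would use a deep learning framework for the networks and gradients.

```python
import numpy as np

def td_target(reward, next_q, discount, done=False):
    """Critic target y = r + eta * Q'(S', a'), with no bootstrap at terminal steps."""
    return reward + (0.0 if done else discount * next_q)

def critic_loss(q_values, targets):
    """Mean squared TD error: the sample version of the loss in (24)."""
    q = np.asarray(q_values, dtype=float)
    y = np.asarray(targets, dtype=float)
    return float(np.mean((q - y) ** 2))

def soft_update(target_params, online_params, tau):
    """theta' <- tau * theta + (1 - tau) * theta', applied to every parameter array."""
    return {name: tau * online_params[name] + (1.0 - tau) * target_params[name]
            for name in target_params}

def is_update_step(step, interval):
    """True every `interval` steps, mirroring the update interval T_u."""
    return step % interval == 0
```

A small $\tau$ makes the target networks track the online networks slowly, which damps the feedback between the critic and its own targets.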

| Algorithm 2 RIS-MADDPG Algorithm. |

1: Initialize the actor network $\mu_n$ and the critic network $Q_n^{\mu}$ for each agent $n$;

2: Initialize the target actor network $\mu_n'$ and the target critic network $Q_n^{\mu'}$ for each agent $n$;

3: Initialize the experience replay buffer $\mathcal{D}_n$ for each agent $n$;

4: for episode = 1 to $E$ do

5: Initialize the state $S_0$

6: while step $k\le K$ do

7: for each agent $n$ do

8: Get $o_{n,k}$ and select action $a_{n,k}=\mu_n(o_{n,k})$

9: Execute the actions and obtain the reward $r_{n,k}$ and the next state $S_{k+1}$

10: Store $(S_k,\mathbf{a}_k,r_{n,k},S_{k+1})$ in $\mathcal{D}_n$

11: end for

12: end while

13: for each agent $n$ do

14: Sample a random minibatch of transitions from the experience replay buffer $\mathcal{D}_n$

15: Update the critic network by minimizing the loss function in (24)

16: if the update interval $T_{\mathrm{u}}$ is reached then

17: Update the actor parameters using the deterministic policy gradient in (23)

18: Update the two target networks using (25) and (26)

19: end if

20: end for

21: end for

|
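Steps 3, 10, and 14 of Algorithm 2 rely on a per-agent replay memory $\mathcal{D}_n$. A minimal sketch, assuming a fixed-capacity first-in-first-out buffer with uniform sampling; the class name, capacity, and tuple layout are hypothetical choices for illustration.

```python
import random
from collections import deque

class ReplayBuffer:
    """Per-agent experience replay storing (S_k, a_k, r_k, S_{k+1}) tuples."""

    def __init__(self, capacity, seed=None):
        self.memory = deque(maxlen=capacity)   # oldest transitions are evicted first
        self.rng = random.Random(seed)

    def store(self, state, joint_action, reward, next_state):
        self.memory.append((state, joint_action, reward, next_state))

    def sample(self, batch_size):
        # Uniform sampling without replacement (step 14 of Algorithm 2).
        return self.rng.sample(list(self.memory), batch_size)

    def __len__(self):
        return len(self.memory)
```

Uniform sampling from a bounded buffer breaks the temporal correlation between consecutive transitions, which is the usual motivation for experience replay in DDPG-type methods.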

To support reproducibility, the simulation parameters and training configuration are listed in Table 1, and the algorithmic procedure is described in Algorithm 2.

Table 1.

Simulation parameters.

| Description | Parameter and Value |
|---|---|
| Transmission model | N = 9, G = I = 3, M = 16, pmax = 20 dBm |
| | Rician factors δ = 4 (both reflection links), PaRIS = 32 dBm |
| | Ptot = 30 dBm, PDC = −5 dBm, Pc = −10 dBm |
| | αR = 2.7, αB = 1.5, αS = 1.6, αm ∈ [1.1, 2] |
| | ξ = 0.8, α = 1017, noise powers = −70 dBm and −80 dBm |
| KF model | An, Cn, Qn, Rn ∈ [0.5, 1.5] |
| MADDPG | E = 10, K = 4000, n = 128, = 2000 |

3.3. Computational Complexity Analysis

The computational complexity of Algorithm 1 arises mainly from two iterative phases: the convergence phase, which iterates the covariance updates (5)–(9) until the estimation covariance converges, and the scheduling phase, which involves nested loops over time slots ($K$), agents ($N$), and groups ($G$). The scheduling phase therefore has complexity $\mathcal{O}(KNG)$, and the total complexity of Algorithm 1 is the sum of the convergence-phase and scheduling-phase costs, which scales linearly in the number of agents and groups.
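As a quick worked example, assume that the number of time slots in Algorithm 1 equals the per-episode step count $K=4000$ listed for MADDPG in Table 1, with $N=9$ sensors and $G=3$ groups. The scheduling phase then executes

$$KNG=4000\times 9\times 3=108{,}000$$

inner-loop iterations, i.e., roughly $1.1\times 10^{5}$ constant-time covariance updates per run, and doubling any one of $K$, $N$, or $G$ doubles this count.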

For the MADDPG algorithm used to solve Problem 2, the complexity consists of two parts: centralized critic network training and decentralized actor network execution. The centralized training complexity scales with $E$, the maximum number of training episodes. The decentralized execution complexity per agent is constant per episode, resulting in an overall execution complexity of $\mathcal{O}(EN)$. Hence, the total complexity is the sum of the training and execution costs. Compared with centralized approaches such as DDPG, whose joint optimization over all agents' actions incurs a higher complexity, MADDPG reduces the computational overhead, which improves scalability and efficiency in multi-agent scenarios.

4. Simulation Results

Based on the above discussion, we divide the computational complexity of applying the MADDPG algorithm to Problem 2 into two components:

The critic network (centralized training): During the centralized training phase, the critic network evaluates global state–action pairs involving all $N$ agents. With $E$ denoting the maximum number of episodes and each agent incurring a fixed cost per update, the overall training complexity is $\mathcal{O}(EN)$ times that per-update cost.

The actor network (decentralized execution): In the decentralized execution phase, each agent independently selects actions using its actor network. Since each agent's decision has constant complexity per episode, the total complexity over all episodes is $\mathcal{O}(EN)$.

Therefore, the total computational complexity of the MADDPG algorithm is the sum of these two components.

Moreover, compared with the centralized DDPG baseline, which incurs a higher complexity because it optimizes the actions of all agents jointly, MADDPG reduces the complexity by decentralizing the actor network computations.

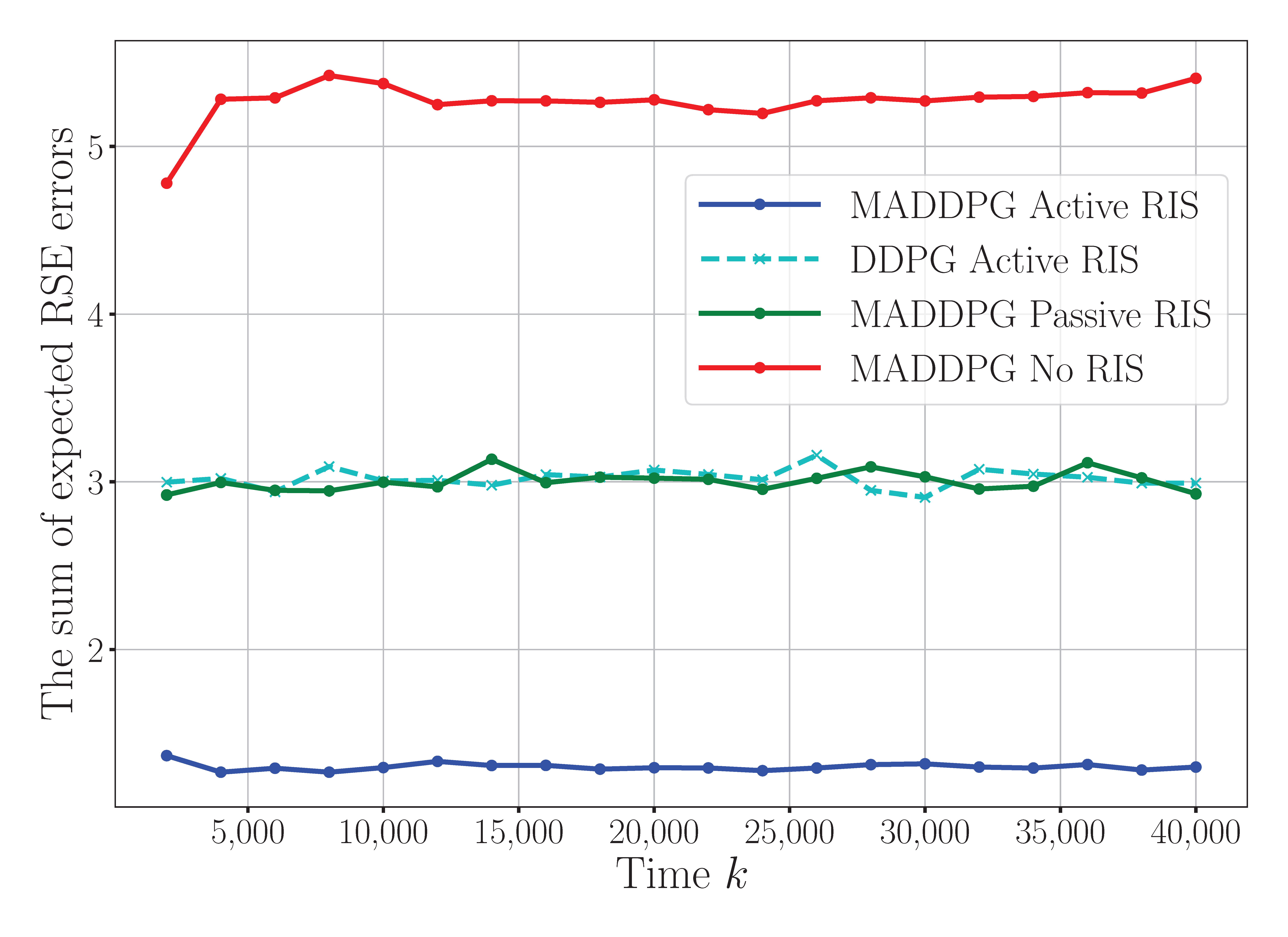

Figure 3 compares the proposed MADDPG algorithm with active RIS assistance against three baselines: MADDPG with a passive RIS, DDPG with an active RIS, and MADDPG without an RIS. The results show that the active RIS substantially reduces the estimation error. Specifically, MADDPG with an active RIS yields reported error improvements of 88% over the passive-RIS baseline and 200% over the no-RIS baseline. Additionally, within the active-RIS setting, MADDPG outperforms DDPG by approximately 87% in error reduction. These results highlight the benefit of combining MADDPG with an active RIS for WSN optimization. In the convergence figures, the horizontal axis denotes the iteration steps and the vertical axis denotes the normalized estimation error; both quantities are dimensionless.

Figure 4 illustrates the impact of the number of reflecting elements on the sensors' RSE error. Increasing the number of elements to 128 reduces the error relative to configurations with 32, 16, and 8 elements, with reported improvements of 32%, 78%, and 120%, respectively. This gain is attributed to the stronger reflected signal and greater spatial diversity provided by more reflecting elements. These results show that a larger number of elements significantly improves the estimation accuracy and thus plays a crucial role in reducing the estimation error.

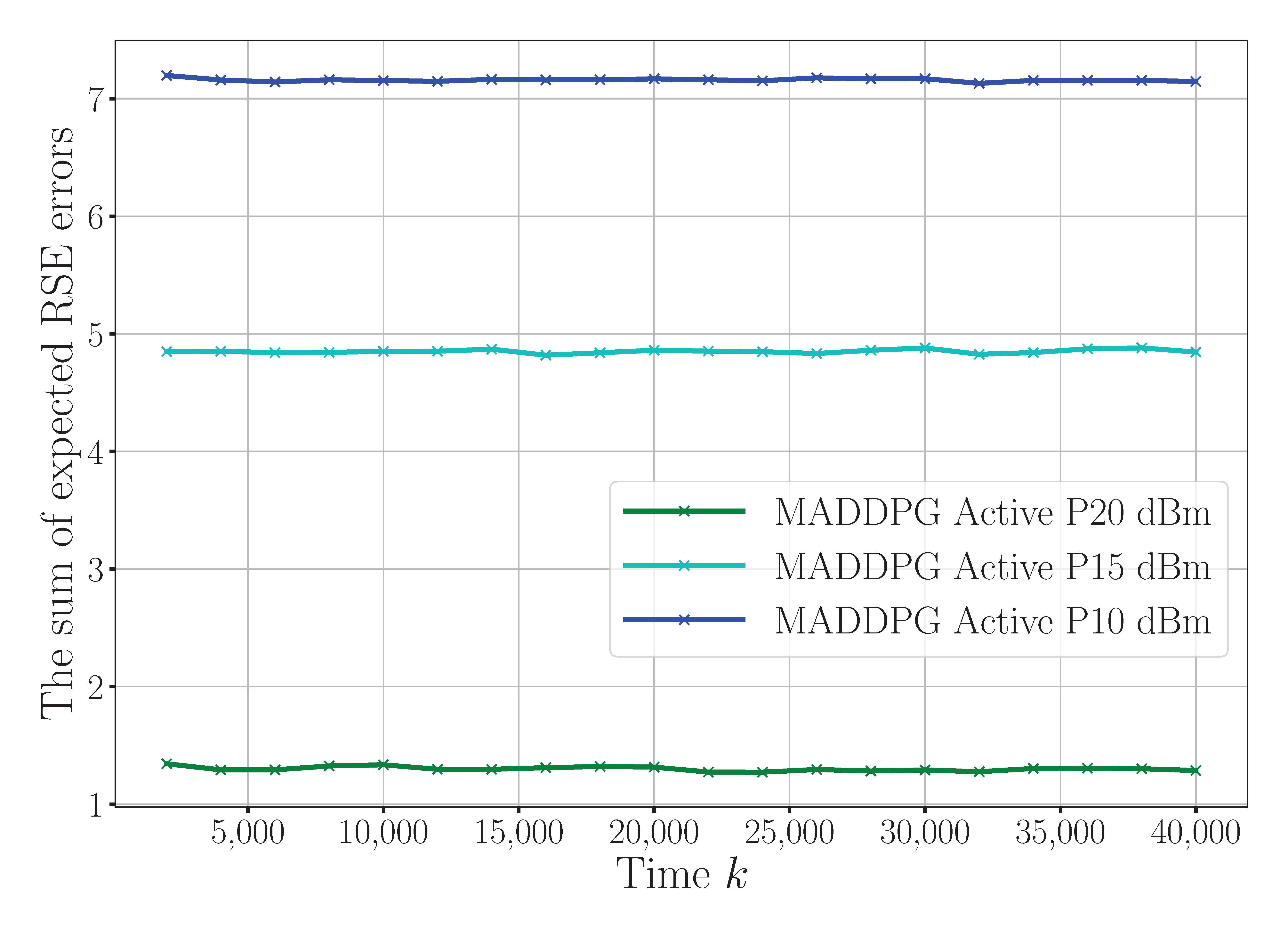

Figure 5 presents the convergence behavior of the RSE error under different maximum power constraints (10 dBm, 15 dBm, and 20 dBm). Although the convergence trends are visually similar, higher power budgets lead to faster convergence and a lower steady-state RSE error. This is because a larger transmission power budget improves the signal-to-noise ratio (SNR) at the receiver, enabling more accurate state estimation. These findings indicate that power allocation affects not only the final estimation accuracy but also the convergence speed, which makes it critical in dynamic WSN environments. In summary, the results in Figure 4 and Figure 5 jointly confirm that both the number of reflecting elements and the power constraints have a pronounced impact on the sensing performance. Optimizing these parameters leads to more accurate and responsive sensing, which is essential for reliable state estimation in resource-constrained wireless sensor networks.

Figure 3.

The sum of expected RSE errors under different algorithms.

Figure 4.

The convergence for different numbers of RIS reflecting elements.

Figure 5.

Convergence for different maximum power constraints.

To further investigate system-level trade-offs, Figure 6 presents the convergence behavior of the proposed algorithm under different sensor-to-channel configurations (e.g., two sensors with six channels, three sensors with four channels, and four sensors with three channels). As the number of sensors sharing limited channel resources increases, the RSE error grows because of heavier interference and fewer degrees of freedom for resource allocation. This confirms that system performance is sensitive to sensor density and the available spectrum.

Figure 6.

Convergence comparison under different sensor-to-channel configurations.

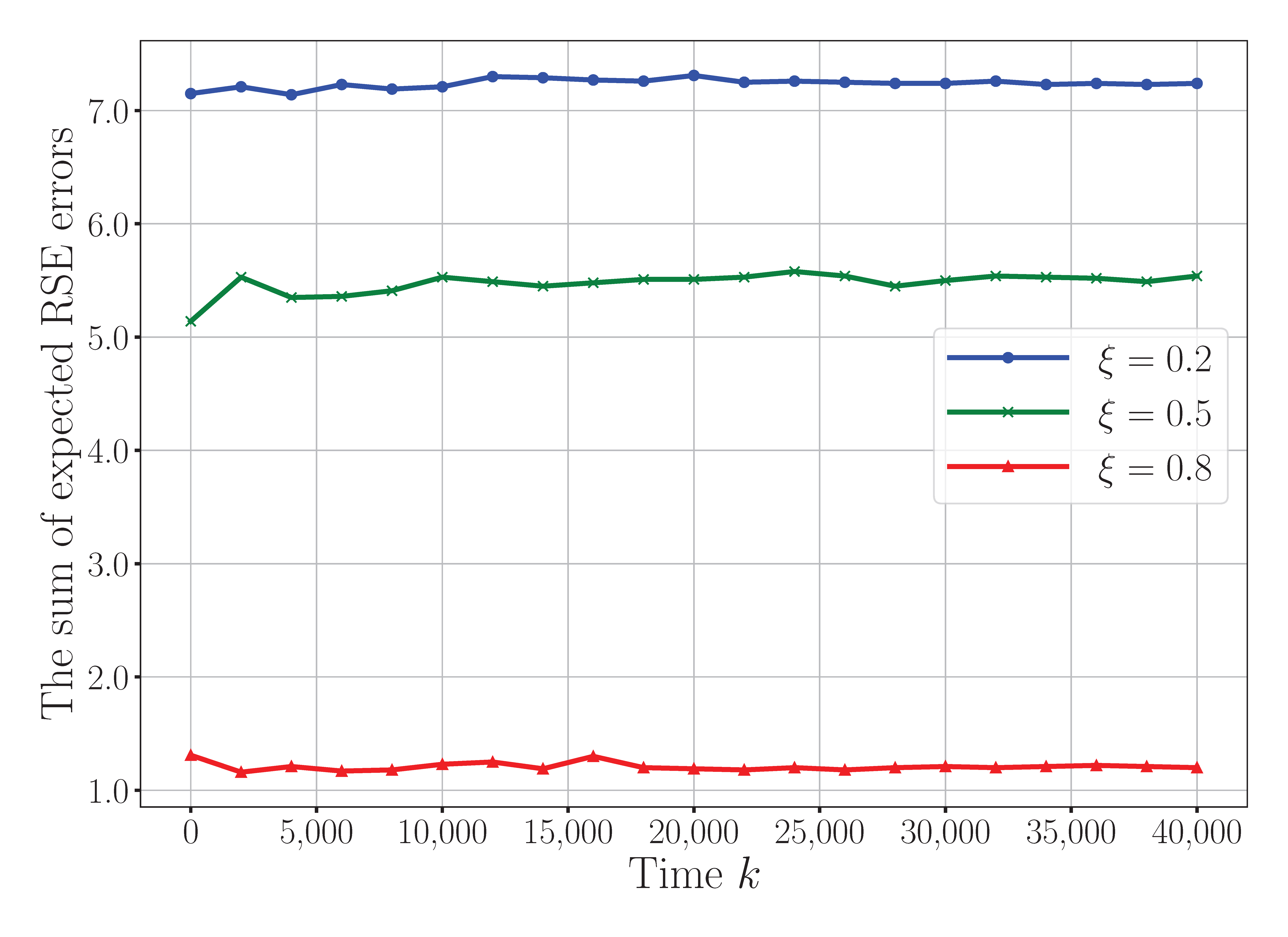

Figure 7 evaluates the impact of the amplifier efficiency coefficient $\xi$ on the convergence behavior of the proposed algorithm. The parameter $\xi$ reflects the effective amplification capability of the active RIS, as constrained by the total power budget $P_{\mathrm{tot}}$ and the internal power consumption of the RIS, as defined in constraint (20d). A larger $\xi$ enables the active RIS to contribute more to signal enhancement through stronger amplification, thereby improving the SNR at the receiver and reducing the RSE error. However, increasing $\xi$ also implies that more power is allocated to RIS amplification, potentially limiting the power available to the sensors. As shown in the figure, a moderate value of $\xi$ achieves a good trade-off between RIS enhancement and the overall system power balance, whereas excessive amplification yields diminishing returns. This result highlights the importance of carefully tuning RIS-related parameters to optimize joint communication–sensing performance in energy-constrained WSNs.

Figure 7.

Convergence performance under different RIS amplifier efficiency coefficients $\xi$.

To provide a concise comparison across experimental settings, Table 2 summarizes the convergence time and the steady-state RSE error under various configurations. In this table, "Conv. Time" denotes the number of iterations ("Iter.") required for the algorithm to converge, and "Conv. Value" denotes the final RSE error after stabilization. The values are read from the convergence curves in Figure 3, Figure 4 and Figure 5. As shown in Table 2, in the 16-element, 20 dBm setting, the proposed MADDPG algorithm assisted by an active RIS converges faster (3200 iterations) than the DDPG (4500), passive-RIS (5000), and no-RIS (10,000) baselines and attains a lower steady-state error (2.20–2.35 versus 3.00–5.25). Increasing the number of reflecting elements lowers the steady-state error further (from 3.00 with 8 elements to 0.75 with 128 elements) at the cost of slightly more iterations (from 3000 to 3500). In contrast, reducing the transmission power constraint increases the estimation error (up to 7.10–7.25 at 10 dBm), highlighting the importance of spatial diversity and power resources for accurate sensing.

Table 2.

A summary of the convergence times and convergence values under different configurations.

| Scenario | Conv. Time (Iter.) | Conv. Value |
|---|---|---|
| MADDPG + Active RIS (128 elements, 20 dBm) | 3500 | 0.75 |
| MADDPG + Active RIS (32 elements, 20 dBm) | 3300 | 1.25–1.35 |
| MADDPG + Active RIS (16 elements, 20 dBm) | 3200 | 2.20–2.35 |
| MADDPG + Active RIS (8 elements, 20 dBm) | 3000 | 3.00 |
| MADDPG + Active RIS (16 elements, 10 dBm) | 3500 | 7.10–7.25 |
| MADDPG + Active RIS (16 elements, 15 dBm) | 3500 | 4.85–5.00 |
| DDPG + Active RIS (16 elements, 20 dBm) | 4500 | 3.10–3.20 |
| MADDPG + Passive RIS (16 elements, 20 dBm) | 5000 | 3.00 |
| MADDPG without RIS (16 elements, 20 dBm) | 10,000 | 5.15–5.25 |