Abstract

Deep learning models developed to predict knee joint kinematics are usually trained on inertial measurement unit (IMU) data from healthy people and only for the activity of walking. Yet, people with knee osteoarthritis have difficulties with other activities and there is a lack of studies using IMU training data from this population. Our objective was to conduct a proof-of-concept study to determine the feasibility of using IMU training data from people with knee osteoarthritis performing multiple clinically important activities to predict knee joint sagittal plane kinematics using a deep learning approach. We trained a bidirectional long short-term memory model on IMU data from 17 participants with knee osteoarthritis to estimate knee joint flexion kinematics for phases of walking, transitioning to and from a chair, and negotiating stairs. We tested two models, a double-leg model (four IMUs) and a single-leg model (two IMUs). The single-leg model demonstrated less prediction error compared to the double-leg model. Across the different activity phases, RMSE (SD) ranged from 7.04° (2.6) to 11.78° (6.04), MAE (SD) from 5.99° (2.34) to 10.37° (5.44), and Pearson’s R from 0.85 to 0.99 using leave-one-subject-out cross-validation. This study demonstrates the feasibility of using IMU training data from people who have knee osteoarthritis for the prediction of kinematics for multiple clinically relevant activities.

1. Introduction

People who have knee osteoarthritis commonly report pain and physical limitation performing functional activities such as walking, transitioning from a chair and negotiating stairs [1]. They also use less sagittal plane range of movement (knee flexion) during particular phases of these activities (e.g., stance phase of walking) compared to people who do not have osteoarthritis [2,3,4,5]. Clinicians are interested in the relationship between specific kinematic measures and clinical outcomes in people with knee osteoarthritis [6]. For example, a person may have difficulty descending stairs because they do not use available knee flexion movement during the stance phase. Interventions such as exercise [7] and total knee replacement [8] have demonstrated the ability to improve knee flexion angle during walking in people who have knee osteoarthritis. Clinical guidelines recommend that the performance of painful and limited activities is monitored over the course of treatment [9]. However, there are currently several limitations to clinicians being able to accurately quantify sagittal plane knee range of movement during functional activities in both clinical and free-living environments (e.g., patient’s home or work, or during recreation).

Clinicians are unable to routinely access gold standard optoelectronic motion analysis systems (e.g., Vicon) due to cost and space requirements. Smartphone camera-based technology is more accessible to clinicians and has demonstrated validity and reliability for measuring sagittal plane knee angles [10]. Both optoelectronic and smartphone camera-based systems require the patient to be observed within a fixed volume to record useful clinical information, precluding their use in a free-living environment. Inertial measurement units (IMUs) are a wearable sensor technology that is emerging as an alternative for biomechanical analysis, allowing a patient to move freely in clinical and free-living environments. Multiple scoping reviews have described the potential role of IMUs for the assessment of people with knee osteoarthritis [11,12] and following knee replacement surgery [13]. These reviews highlight the need for further investigation of IMU systems that can be used for monitoring biomechanics of patients in free-living conditions.

There is a substantial volume of research validating IMUs for estimating kinematics in laboratory environments [14,15,16], although two barriers exist for widespread clinical adoption. In uncontrolled environments such as in a clinic or in free-living conditions, the presence of metallic equipment (e.g., chairs or railings) and devices such as mobile phones and computers can interfere with the magnetometer data which can affect the reliability of fusion algorithm estimates [17,18], making the data unusable [17]. Although some fusion methods have been described which use only accelerometers and gyroscopes, they require IMU calibration prior to each use [19]. To overcome the magnetometer problem and calibration requirements, machine learning (a form of artificial intelligence) approaches have been used to predict kinematics (e.g., knee joint flexion angle) from only the raw accelerometer and gyroscope data [20,21]. Although traditional machine learning requires the researcher to identify important features from the IMU data to train the model, a more contemporary approach is to use deep learning (a subfield of machine learning) that automatically detects features, minimising programming requirements [22,23,24,25,26].

There are a small number of studies where deep learning models have been trained to predict knee joint angular kinematics for walking from IMU training data collected mostly from healthy people [22,23,24,25,26,27]. However, people with knee osteoarthritis experience significant difficulty with functional activities other than walking, such as negotiating stairs and transitioning to and from a chair. There is only one reported study using IMU data collected from participants who have knee osteoarthritis to train a deep learning model to predict sagittal plane knee kinematics, which was only for the activity of walking [26]. No study has yet developed a deep learning model to predict knee joint kinematics for multiple, clinically important activities using IMU data collected from people with knee osteoarthritis.

The aim of this study was to demonstrate a proof-of-concept for the feasibility of using IMU training data collected from people who have knee osteoarthritis performing three clinically relevant functional activities (walking, negotiating stairs, and transitioning to/from a chair) to train a deep learning model to predict knee joint flexion angles. The second aim was to determine if a single-leg model (two sensors on one leg) or double-leg model (two sensors on each leg) was more accurate.

2. Materials and Methods

2.1. Study Design

This study was a retrospective, prognostic study using continuous IMU data, collected from people with knee osteoarthritis who performed multiple clinically relevant activities, to predict knee joint sagittal plane kinematics.

2.2. Participants

Participants in this study were part of a broader investigation into the use of IMUs in people with knee osteoarthritis [14,28]. Seventeen participants with knee osteoarthritis were recruited from local physiotherapists, GP practices and local community centres. This number of participants mirrors other studies [21,24,29,30] and was thought to be sufficient to test the feasibility of this proof-of-concept study. Participants were included if they met the clinical diagnostic criteria for knee osteoarthritis [31], had ≥3 months of pain, ≥4/10 pain on most days, and moderate activity limitation (single item on the Function, Daily Living sub-scale of the Knee injury and Osteoarthritis Outcome Score) [32]. To minimise the effects of soft tissue artefact during motion capture that can introduce ‘noise’ into the data, we excluded participants with a body mass index (BMI) >35 kg/m² and those who had a BMI >30 kg/m² with a waist-to-hip ratio (WHR) of ≤0.85 for women and ≤0.95 for men (those with greater soft tissue around the lower limbs). Participants were excluded if they had previous lower limb arthroplasty or mobility impairments due to other medical conditions (e.g., cognitive impairment, recent trauma or neurological disorders). The study was approved by the Human Research Ethics Committee of Curtin University (HRE2017-0738).

2.3. Data Collection

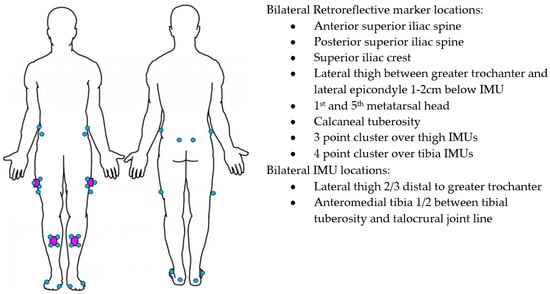

Participants were initially screened for eligibility over the phone and subsequently attended a university motion analysis laboratory. After providing written informed consent, height and weight data were collected. IMUs and retroreflective markers were placed on the participants in a standardised manner by an experienced musculoskeletal physiotherapist in the locations described in Figure 1. Participants performed 5 repetitions of knee flexion/extension as a warm-up on each knee. A standardised battery of functional activities was then performed that included 4 trials of stand-to-sit, 4 trials of sit-to-stand, 3 trials of 3-stair ascent, 3 trials of 3-stair descent, and 3 trials of a 5-metre self-paced walk. Participants rested for 30 s between trials and 60 s between activities. IMUs were removed after completion of the battery of functional activities and raw data were offloaded.

Figure 1.

IMU (purple) and Vicon marker (blue) placement.

2.4. Instrumentation

Four IMUs (v6 research sensors, DorsaVi, Melbourne, Australia) sampling at 100 Hz (accelerometer 8G, gyroscope 2000 degrees/second) were attached to the lower limbs with double-sided hypoallergenic tape. The IMUs’ dimensions were 4.8 × 2.9 × 1 centimetres and they weighed 17 g. Three-dimensional motion analysis was recorded with an 18 camera Vicon (Oxford Metrics Inc., Oxford, UK) sampling at 250 Hz. The relatively small reconstruction errors of <1 mm have resulted in the Vicon being considered the gold standard motion analysis system [33,34]. Twenty-eight retroreflective markers were placed on the participant’s pelvis and lower limbs using a cluster-based approach in alignment with International Society of Biomechanics recommendations [35]. For this purpose, marker clusters were affixed to the IMUs (Figure 1) and anatomical markers were placed at the locations outlined in Figure 1. Additional markers were applied to relevant joint centres for a static calibration trial, then removed. This approach has previously been described in detail [36]. The sensor system was synchronised with the Vicon prior to being attached to the participant. IMUs in the same orientation were placed in a wooden box with retroreflective markers attached to the outside. The box was then rotated >90° ten times and recorded as a single trial in Vicon Nexus software (Oxford Metrics Inc., Oxford, UK) to facilitate subsequent time-synchronisation of the IMU and Vicon systems.

2.5. Data Preparation

Vicon trials were reconstructed and modelled using Vicon Nexus software. Gaps in trajectories were noted through visual inspection. Cubic spline interpolation was used to fill gaps of ≤20 frames (0.08 s), and if gaps were larger than this they were discarded. Kinematic trajectories were then filtered using a low-pass Butterworth filter with a 6 Hz cut-off frequency as determined by residual analysis. Vicon data were down-sampled from 250 to 100 Hz to allow time synchronisation with the IMU sensors.
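The gap rule and the 250 to 100 Hz resampling described above can be illustrated with a minimal sketch. It finds runs of missing frames, checks them against the 20-frame limit (20 / 250 Hz = 0.08 s), and resamples a gap-free signal. Linear interpolation is used here only for brevity and is an assumption: the study filled gaps with cubic splines and does not state its resampling method. The function names are hypothetical.

```python
VICON_HZ = 250
IMU_HZ = 100
MAX_GAP_FRAMES = 20  # 20 frames / 250 Hz = 0.08 s


def find_gaps(samples):
    """Return (start_index, length) for each run of missing (None) frames."""
    gaps, start = [], None
    for i, value in enumerate(samples):
        if value is None and start is None:
            start = i
        elif value is not None and start is not None:
            gaps.append((start, i - start))
            start = None
    if start is not None:
        gaps.append((start, len(samples) - start))
    return gaps


def fillable(gap_length, max_frames=MAX_GAP_FRAMES):
    """True if a gap is short enough to be interpolated."""
    return gap_length <= max_frames


def resample_linear(samples, src_hz=VICON_HZ, dst_hz=IMU_HZ):
    """Resample a gap-free signal by linear interpolation (assumed method)."""
    n_out = ((len(samples) - 1) * dst_hz) // src_hz + 1
    out = []
    for k in range(n_out):
        pos = k * src_hz / dst_hz          # fractional index in the source
        lo = int(pos)
        hi = min(lo + 1, len(samples) - 1)
        frac = pos - lo
        out.append(samples[lo] * (1 - frac) + samples[hi] * frac)
    return out
```

One second of Vicon data (251 frames including both end points) becomes 101 samples at 100 Hz, so each output sample falls every 2.5 source frames.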

We used the raw triaxial accelerometer and gyroscope data from 4 IMUs that were output as individual timestamped files using the IMU proprietary software (MDMv6 Manager v6.883, DorsaVi). Reconstructed Vicon data and the filtered raw orientation data from each IMU were time synchronised by the use of normalised cross-correlation using a customised LabVIEW program (National Instruments, Austin, TX, USA). The event markers were automatically detected by the LabVIEW program. Events for phases of walking and stair trials were heel contact and toe-off for swing and stance phases. Sit-to-stand and stand-to-sit events were anterior and posterior movement of the pelvis. Start and end times from the raw IMU data were exported for each phase of activity for the raw IMU and reconstructed Vicon data, which were used as inputs into the model. All trials were visually inspected to validate the automated synchronisation and event markers.
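As an illustration of time synchronisation by normalised cross-correlation, the sketch below searches for the sample lag that best aligns two signals recorded at the same rate, such as the repeated box rotations seen by both systems. It is a plain-Python sketch under stated assumptions, not the customised LabVIEW program used in the study; `normalised_xcorr` and `best_lag` are hypothetical names.

```python
import math


def normalised_xcorr(a, b):
    """Normalised (zero-mean, unit-variance) correlation of two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    num = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    den = math.sqrt(sum((x - mean_a) ** 2 for x in a)
                    * sum((y - mean_b) ** 2 for y in b))
    return num / den if den > 0 else 0.0


def best_lag(reference, signal, max_lag):
    """Return (lag, score) maximising the normalised cross-correlation.

    A positive lag means signal[i + lag] lines up with reference[i],
    i.e. the signal started recording `lag` samples earlier.
    """
    best, best_score = 0, -2.0
    for lag in range(-max_lag, max_lag + 1):
        if lag >= 0:
            ref_seg, sig_seg = reference, signal[lag:]
        else:
            ref_seg, sig_seg = reference[-lag:], signal
        n = min(len(ref_seg), len(sig_seg))
        if n < 2:
            continue  # not enough overlap to correlate
        score = normalised_xcorr(ref_seg[:n], sig_seg[:n])
        if score > best_score:
            best, best_score = lag, score
    return best, best_score
```

At 100 Hz, a lag of 7 samples corresponds to a 0.07 s offset between the two recordings.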

We input the affected leg, side of interest, activity, direction of stair climbing and phase of activity as categorical variables into the model. In this study, we also investigated the interdependency between both legs by training the model with two different structures of input data: double-leg and single-leg. The double-leg model consisted of 38 input variables (24 accelerometer/gyroscope from 4 IMUs and 14 categorical variables), whereas the single-leg model included 27 input variables (12 accelerometer/gyroscope from 2 IMUs, side, 14 categorical variables).
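The input counts follow from six channels per IMU (triaxial accelerometer plus triaxial gyroscope). The short check below reproduces the arithmetic; the function name and the `include_side` flag are hypothetical, and the breakdown of the 14 categorical variables is not reproduced because the text does not give it.

```python
CHANNELS_PER_IMU = 6   # 3 accelerometer axes + 3 gyroscope axes
N_CATEGORICAL = 14     # categorical input variables, as reported in the text


def n_inputs(n_imus, include_side):
    """Total number of input variables for a given sensor configuration."""
    side = 1 if include_side else 0
    return n_imus * CHANNELS_PER_IMU + side + N_CATEGORICAL


double_leg = n_inputs(n_imus=4, include_side=False)  # 24 + 14
single_leg = n_inputs(n_imus=2, include_side=True)   # 12 + 1 + 14
```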

2.6. Deep Learning Model Development

The target variable was the knee flexion angle at each time step, obtained from Vicon motion capture for multiple activities and predicted from the raw IMU accelerometer and gyroscope data.

One deep learning approach known as long short-term memory (LSTM) is suitable to handle discrepancies between steps in time-series data, where each trial differs in length [37]. LSTM also requires less pre-processing compared to other deep learning approaches, such as convolutional neural networks (CNNs), and is more suitable for real-time applications [38]. Recently an N-layer feed forward neural network (FFNN) demonstrated superior results for kinematic prediction of the lower limb compared to a recurrent neural network known as LSTM [23]. FFNN generally uses all the data points to make the prediction, whereas LSTM only uses past data points, resulting in its reduced accuracy for the first few data points. We chose to use a further evolution of LSTM and FFNN known as bidirectional LSTM (BiLSTM), which has both recurrent and feedforward characteristics because it traverses the input data twice, using both past and future data for predictions [26] to improve accuracy compared to LSTM [39]. BiLSTM has been successfully implemented for predicting knee joint kinematics during walking for people who have knee osteoarthritis or previous knee replacement [26].

2.7. Model

A previous study using IMU data to train a deep learning prediction model reported that the number of IMUs can affect kinematic prediction error [40]. To explore the effect of using additional IMUs, we developed two models: double-leg and single-leg. The double-leg model uses data from 4 IMUs from both legs as input to predict the knee joint angle of the leg of interest, whereas the single-leg model uses data from 2 IMUs from the leg of interest as input.

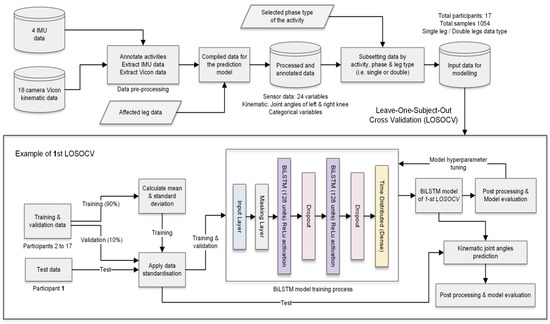

The model architecture was based on a stacked BiLSTM model. As BiLSTM requires input data for each sequence to have the same length, sequences were padded to the maximum sequence length of the activity phase. A single masking layer was used as the first layer to ignore all padded values. Then, two separate BiLSTM hidden layers with 128 units and a rectified linear unit activation function extracted the features from the sequences. BiLSTM was set to output a value for each time step in the input data, resulting in returning the sequence. A dropout layer was added after each BiLSTM hidden layer to randomly drop some units together with their connections from the network to reduce overfitting. The dropout rate was set to 0.2 and learning rate 0.0001. Finally, a separate fully connected time distributed output layer with linear activation was used to return the estimated joint angle (one for a single-leg input type and two for the double-leg input type). The proposed BiLSTM kinematic prediction model architecture is illustrated in Figure 2.
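The layer stack described above could be expressed in Keras roughly as follows. This is a sketch under stated assumptions rather than the authors' code: the padding value of 0.0, the mean squared error loss, and the function name `build_bilstm` are assumptions not given in the text, while the layer sizes, activation functions, dropout rate and learning rate are taken from the description.

```python
import tensorflow as tf
from tensorflow.keras import layers


def build_bilstm(n_features, n_outputs=1, pad_value=0.0):
    """Stacked BiLSTM that returns one prediction per time step."""
    # Variable-length sequences: time dimension left as None.
    inputs = tf.keras.Input(shape=(None, n_features))
    # Time steps whose features all equal pad_value are ignored downstream.
    x = layers.Masking(mask_value=pad_value)(inputs)
    for _ in range(2):
        x = layers.Bidirectional(
            layers.LSTM(128, activation="relu", return_sequences=True))(x)
        x = layers.Dropout(0.2)(x)
    # Same dense layer applied at every time step, linear output.
    outputs = layers.TimeDistributed(
        layers.Dense(n_outputs, activation="linear"))(x)
    model = tf.keras.Model(inputs, outputs)
    model.compile(
        optimizer=tf.keras.optimizers.Adam(learning_rate=1e-4),
        loss="mse",  # assumed loss; not stated in the text
    )
    return model


single_leg_model = build_bilstm(n_features=27, n_outputs=1)
double_leg_model = build_bilstm(n_features=38, n_outputs=2)
```

Because the masking layer skips a time step only when every feature equals the padding value, the padding value should be one that cannot occur across all features of a real sample.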

Figure 2.

Data preparation and model architecture of the proposed BiLSTM kinematic prediction models.

The model was trained using the adaptive moment estimation (Adam) optimisation algorithm [41]. The final model parameters were selected after the hyperparameter tuning process assessing the loss function and model metrics. In this study, we retained the same hyperparameters across all model training processes to investigate the influence of input data variation on the prediction model. The data processing, machine learning model and experimental results were developed and implemented using Python 3 with the libraries pandas, NumPy, SciPy, scikit-learn, Keras and TensorFlow.

2.8. Validation and Data Standardisation

As a clinician needs to know the average accuracy of a predictive model for each new patient, we used a leave-one-subject-out cross-validation (LOSOCV) method, which is most appropriate as it accounts for between-participant variability [42]. The LOSOCV method sequentially trained the model on the data from all participants except for one, which was left out and used for testing. This procedure looped through the total number of participants, resulting in 17 kinematic prediction models. The dataset was separated into training, validation and test datasets. For each validation fold, data from 16 participants were separated into training (90%) and validation (10%) sets randomly based on the unique samples. Training data were used to optimise the model parameters, whereas validation data were used as unseen data during the model training process to fine-tune hyperparameters such as batch size and learning rate, guided by the validation loss. Finally, the model was tested on all samples for the left-out participant’s data.
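The LOSOCV procedure with a random 90/10 training/validation split can be sketched as below. The data structure (a dictionary from participant ID to a list of samples), the fixed random seed and the function name `loso_folds` are assumptions made for illustration.

```python
import random


def loso_folds(samples_by_participant, val_fraction=0.1, seed=0):
    """Yield (held_out_id, train, val, test) once per participant.

    samples_by_participant: dict mapping participant id -> list of samples.
    """
    rng = random.Random(seed)
    participants = sorted(samples_by_participant)
    for held_out in participants:
        # Pool samples from every participant except the held-out one.
        pool = [s for pid in participants if pid != held_out
                for s in samples_by_participant[pid]]
        rng.shuffle(pool)
        n_val = round(len(pool) * val_fraction)
        val, train = pool[:n_val], pool[n_val:]
        test = list(samples_by_participant[held_out])
        yield held_out, train, val, test


# Toy data: 17 participants with 10 samples each, tagged with their owner.
toy = {f"P{i:02d}": [(f"P{i:02d}", j) for j in range(10)] for i in range(1, 18)}
folds = list(loso_folds(toy))
```

With 17 participants this yields 17 folds, and no sample from the held-out participant appears in the training or validation sets of its own fold.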

For each activity, we calculated the average root mean square error (RMSE), normalised RMSE (nRMSE) [43], mean absolute error (MAE) and Pearson correlation coefficient (R) between the Vicon reference and predictions for time-series data. R values were averaged across participants using Fisher’s z transformation [44]. The strength of the correlation was categorised as excellent (R > 0.9), strong (0.67 < R ≤ 0.9), moderate (0.35 < R ≤ 0.67) and weak (R ≤ 0.35) based on similar studies [29]. In addition, we calculated the RMSE for the average maximum (peakRMSE) and minimum (minRMSE) knee flexion angles.
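The error metrics and the averaging of correlations can be written out as a minimal sketch. The normalisation used for nRMSE here (RMSE divided by the range of the reference signal) is an assumption, since the text defers the definition to [43]; the function names are hypothetical. Fisher's z transformation averages correlations as $\bar{R} = \tanh\left(\frac{1}{n}\sum_i \operatorname{artanh}(R_i)\right)$.

```python
import math


def rmse(ref, pred):
    return math.sqrt(sum((r - p) ** 2 for r, p in zip(ref, pred)) / len(ref))


def mae(ref, pred):
    return sum(abs(r - p) for r, p in zip(ref, pred)) / len(ref)


def nrmse(ref, pred):
    """RMSE divided by the range of the reference (assumed normalisation)."""
    return rmse(ref, pred) / (max(ref) - min(ref))


def pearson_r(ref, pred):
    n = len(ref)
    mr, mp = sum(ref) / n, sum(pred) / n
    num = sum((r - mr) * (p - mp) for r, p in zip(ref, pred))
    den = math.sqrt(sum((r - mr) ** 2 for r in ref)
                    * sum((p - mp) ** 2 for p in pred))
    return num / den


def mean_r_fisher(r_values):
    """Average correlations via Fisher's z; each r must lie strictly in (-1, 1)."""
    z = [math.atanh(r) for r in r_values]
    return math.tanh(sum(z) / len(z))


def categorise(r):
    """Correlation strength categories as defined in the text."""
    if r > 0.9:
        return "excellent"
    if r > 0.67:
        return "strong"
    if r > 0.35:
        return "moderate"
    return "weak"
```

Averaging in z-space gives more weight to correlations near 1 than a plain arithmetic mean does, so the pooled R is never lower than the arithmetic mean when all values are positive.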

Each input variable and target variable were standardised for scale and distribution separately in each loop. Mean and standard deviation were computed only on the training data across all trials and all time points for each variable to prevent data leakage when applying pre-processing statistics. Validation and test data were standardised based on the corresponding mean and standard deviation calculated from the training data used in each loop. A three-dimensional input shape is required for the BiLSTM model (N_samples, N_timesteps, N_features); therefore, input data were reshaped prior to being passed to the model. The number of samples collected from participants used to train the models is shown in Table 1.
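A minimal sketch of leakage-free standardisation and of building the three-dimensional input is given below. It assumes trials are stored as nested lists (trial, time step, feature), uses the population standard deviation, and pads with 0.0; these choices and the function names are assumptions for illustration only.

```python
import math


def fit_scaler(train_trials):
    """Per-feature mean and SD over all training trials and time points."""
    n_features = len(train_trials[0][0])
    rows = [step for trial in train_trials for step in trial]
    means = [sum(r[j] for r in rows) / len(rows) for j in range(n_features)]
    sds = [math.sqrt(sum((r[j] - means[j]) ** 2 for r in rows) / len(rows))
           for j in range(n_features)]
    return means, sds


def transform(trials, means, sds):
    """Standardise any split using statistics fitted on the training data."""
    return [[[(v - m) / s if s > 0 else 0.0
              for v, m, s in zip(step, means, sds)]
             for step in trial]
            for trial in trials]


def pad(trials, pad_value=0.0):
    """Pad trials to equal length: (N_samples, N_timesteps, N_features)."""
    max_len = max(len(t) for t in trials)
    n_features = len(trials[0][0])
    return [t + [[pad_value] * n_features for _ in range(max_len - len(t))]
            for t in trials]


train = [[[1.0, 10.0], [3.0, 30.0]], [[5.0, 50.0]]]
test = [[[3.0, 30.0]]]
means, sds = fit_scaler(train)               # fitted on training data only
train_x = pad(transform(train, means, sds))
test_x = transform(test, means, sds)         # reuses the training statistics
```

Fitting the scaler inside each cross-validation loop, rather than once on the full dataset, keeps the held-out participant's data from influencing the statistics.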

Table 1.

Number of samples for each activity.

3. Results

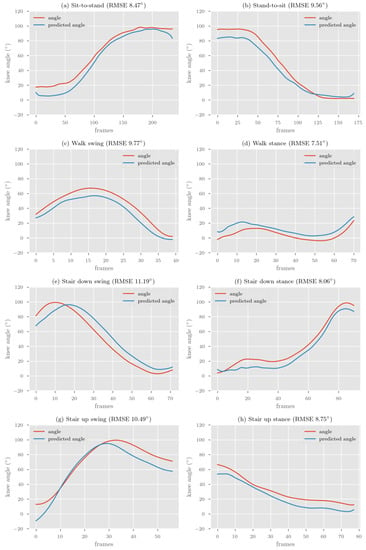

The participant characteristics are shown in Table 2. The accuracy of our two models is presented in Table 3. Examples of representative model prediction for each activity phase compared to the Vicon reference standard (based on RMSE) are presented in Figure 3. Overall, the difference between the double-leg and the single-leg model was small, with an RMSE difference ranging from 0.11° to 1.96° and MAE from 0.01° to 1.46° for time-series predictions.

Table 2.

Characteristics of participants.

Table 3.

Knee flexion angle prediction error for time-series, peak and minimum estimates for each activity.

Figure 3.

Representative single-leg BiLSTM model prediction compared to Vicon reference for each activity phase.

The single-leg model demonstrated the smallest RMSE/MAE across activities (five of eight activities) for time-series predictions compared to the double-leg model. The double-leg model demonstrated the smallest RMSE/MAE for predicting simultaneous double-leg activities (sit-to-stand and stand-to-sit) compared with activities that require reciprocal movements of both legs (walking or stairs).

Correlations between the reference Vicon system and the deep learning model were excellent (R > 0.9) for both the single-leg and double-leg models for all activities except for the stance phase of walking. The strongest correlation coefficient was for sit-to-stand, stand-to-sit and the swing phase of ascending stairs (R = 0.99).

The peakRMSE and minRMSE for each activity were almost always lower than the time-series RMSE for large range activities (sit-to-stand, stand-to-sit and swing phases). For small range activities (stance phases), the minRMSE was always lower than the time-series RMSE, whereas the peakRMSE was always higher.

4. Discussion

The aim of this study was to establish a proof-of-concept for the feasibility of using IMU data collected from people who have knee osteoarthritis for development of a deep learning model to predict sagittal plane knee joint angles for multiple clinically relevant activities. We developed a BiLSTM kinematic prediction model on IMU training data that included walking, negotiating stairs and transitioning to/from a chair for people who have knee osteoarthritis. The prediction error (RMSE/MAE) between the reference Vicon system and kinematic predictions was lowest for the stance phase for walking and going down stairs, and highest for the swing phase for going down and up stairs. However, as a proportion of the range used during each activity phase (nRMSE), sit-to-stand and stand-to-sit had the lowest prediction error, and the stance phase for walking and going down stairs had the highest. For time-series data, the shape of the predicted curve had a consistently excellent correlation (R > 0.9) to the Vicon system across activities.

The second aim was to develop two types of models using training data from (i) two IMUs on one leg (single-leg) and (ii) four IMUs on two legs (double-leg). The single-leg model more often demonstrated smaller errors than the double-leg model, with excellent correlations (R > 0.9), across activities that require reciprocal, asymmetrical lower limb movement, such as walking and negotiating stairs; however, the difference in error between models was small. For activities that require bilateral simultaneous movement (sit-to-stand/stand-to-sit), the double-leg model demonstrated smaller error.

4.1. Comparison to Previous Literature

In comparison to other deep learning models that were developed to predict knee joint kinematics just for walking, our BiLSTM model for multiple activities demonstrated prediction errors and correlations within the range of previous studies (RMSE 0.97–12.1°, R 0.94–0.99) [22,24,25,26,27].

Only one other study has used deep learning to predict knee kinematics in people who have knee osteoarthritis for the activity of walking [26]. Our model has the benefit of being more broadly applicable for real world use, when combined with human activity recognition, because of the inclusion of multiple clinically important activities for people who have knee osteoarthritis. The model by Renani et al. [26] demonstrated small average RMSE 2.9° (SD 1.1) for time-series prediction of knee flexion/extension during walking using a BiLSTM model. Training data in that study were from four IMUs placed on the pelvis, thigh, shank and foot. In comparison, our BiLSTM model trained on data from only a thigh and shank IMU demonstrated substantially higher average RMSE during walking phases (single-leg model: stance 7.04° (SD 2.6), swing 9.7° (SD 3.86)). Their results may have demonstrated lower error because of the higher number of samples (n = 3943) compared to our study (n = 955), the additional IMUs placed on the pelvis and foot, the inclusion in our training data of activities other than walking, or the difference in validation approach.

For validation of an IMU prediction model to be meaningful to a clinician, it has been suggested that the average level of error for each new person should be reported [42], which is a strength of the LOSOCV method compared to the other validation methods (e.g., k-fold cross validation). Our results are similar to those of previous studies that use LOSOCV, rather than studies that use other validation approaches (e.g., [22,25,26,27]), and studies that use real IMU data compared to those that use simulated IMU data (see Section 4.3.3. Augmented and Simulated Data). For example, Wouda et al. [24] validated an LSTM model using IMU training data collected from a healthy population for time-series prediction of knee flexion during running. They used LOSOCV and reported an average RMSE of 12.1° (SD 1.5). In comparison, our model achieved lower average RMSE for walking swing and walking stance of 9.7° (SD 3.8) and 7.0° (SD 2.6). Although our model demonstrated lower average error, there was higher variability, which may be the result of using training data that included multiple activities rather than the single activity of walking. Our model also demonstrated good ability to predict the shape of the kinematic curve with excellent correlations (R > 0.9) for time-series prediction of all but one activity, comparing well to the models by Wouda et al. [24] (R = 0.94) and Renani et al. [26] (R = 0.99).

Using raw accelerometer and gyroscope data for training deep learning prediction models appears a promising tool to aid clinical decision making for clinicians managing people with movement disorders, such as knee osteoarthritis, as it mitigates the requirement for the magnetometer, which is prone to interference, especially in free-living conditions where the magnetic field is not uniform [45]. However, deep learning approaches using real IMU data for the prediction of knee kinematics have not yet reached the consistent low error achieved by Kalman filter-based approaches that report RMSE as low as 1° for multiple clinically relevant activities [19] or 5.04° using the proprietary software for the IMUs described in this study [14]. Various clinical, data handling and machine learning architecture considerations may help to reduce prediction error in future studies.

4.2. Clinical Considerations for Kinematic Prediction Models

Development of various machine learning models has the potential to have a significant impact for clinical populations, such as for people who have knee osteoarthritis. However, the majority of these studies have not described the clinical implications of such models; therefore, this section discusses clinical considerations for future development of machine and deep learning models for prediction of joint kinematics.

4.2.1. Variability of Movement in Clinical Populations

Although our model demonstrated a very small error and a high correlation for some participants, this was not the case across all participants. It is well established that people who have musculoskeletal or neurological health conditions move differently than healthy populations [8,46,47]. Across people who have knee osteoarthritis, there is diversity in movement patterns during functional activities related to disease severity [46]. Because of this heterogeneity of movement patterns across different conditions and even within a single diagnosis such as knee osteoarthritis, it is important that models are trained and tested on the intended population for use. For example, Renani et al. [48] trained a CNN to predict spatiotemporal kinematics of the lower limb for people with knee osteoarthritis and after total knee replacement. Their model demonstrated consistently higher prediction error and variability across 12 spatiotemporal gait parameters for people who have knee osteoarthritis compared to people who had total knee replacement. Other studies have reported that human activity recognition models derived on data from healthy populations have substantially reduced test accuracy in people who have health conditions, such as Parkinsonism [49,50]. It is currently unknown if the test accuracy of kinematic prediction models differs across populations. Given that people with knee osteoarthritis move differently and more variably than healthy people, the ability to generalise the kinematic prediction model accuracy between those populations should not be assumed. Future studies should consider testing prediction models on participants with health conditions of interest who demonstrate a range of movement impairments, and pain and disability levels.

4.2.2. Selecting Clinically Important Activities and Biomechanical Parameters

The clinically relevant use for predicting sagittal plane knee joint angles is to monitor biomechanics during functional activities in free-living environments and in-clinic to aid clinical decision making. Specifically, particular phases of activities (e.g., stance phase of ascending stairs) are of interest to clinicians because they are targets for rehabilitation.

Prior to this study, machine learning models for predicting knee joint kinematics that could potentially be useful for people with knee osteoarthritis have only been trained and tested on walking data [21,22,23,24,25,26,27,48]. Unlike those studies where a kinematic prediction model was developed for walking, our model is the first to be trained and tested on a range of clinically relevant activities for a specific clinical population. Although walking is the most frequently performed activity of the lower limbs, we selected three activities (walking, negotiating stairs and transitioning to/from a chair) that are recommended as part of a clinical physical assessment in medical guidelines for knee osteoarthritis [9]. To improve clinical utility of machine learning prediction using IMU data, future studies should investigate kinematic prediction models for a broader range of clinically important activities.

There is a broad range of kinematic and kinetic movement parameters that are of interest to clinicians and researchers for people with knee osteoarthritis [6]. Therefore, monitoring sagittal plane knee joint angles is only one movement parameter that could be recorded for clinically relevant activities in free-living conditions. Other movement parameters are also of interest because of their relationship with structural progression of knee osteoarthritis. Knee adduction moment, for example, is associated with the progression of medial compartment knee osteoarthritis [51,52]. There is early work investigating spatiotemporal kinematics [48], and predicting knee moments and forces using deep learning approaches such as LSTM, CNN and ANN for the purposes of field monitoring [27,29,30]. To improve clinical utility of machine/deep learning prediction models using IMU data, future studies should investigate integrating [12] human activity recognition [28] with both kinematic and kinetic prediction models (see Section 4.3.1) for a broad range of clinically relevant activities that include but are not limited to the activity of walking.

4.2.3. Reducing the Burden for Clinicians

Some studies use up to 17 IMUs across the whole body to train deep learning models for kinematic prediction of the lower limbs [24,48]. It is generally thought that having additional IMUs results in improved accuracy and reduced error for machine learning predictions using IMU data for human activity recognition [53,54]. However, having to use additional IMUs can be burdensome for clinicians. Our findings are similar to Hendry et al. [40], who investigated kinematic prediction for the hip and lumbar spine using IMUs to train a deep learning model for ballet dancers. They reported that their kinematic prediction model trained on only two IMUs placed on the lower limbs demonstrated less error (7.0°) than models that included additional training data from IMUs placed on the spine (7.8°). A novel finding in our study was that the prediction error with using data from only two IMUs on a single leg was less than that using four IMUs on two legs, which may be because of the asymmetrical and diverse nature of movement patterns that exist in people with knee osteoarthritis. Future studies should aim to determine the minimum number of IMUs required for specific conditions and activities, to reduce clinician burden.

4.3. Considerations for Future Data Handling and Machine Learning Models

4.3.1. Developing Data Handling Pipelines

Previously published kinematic [21,22,23,24,25,26,27,48] and kinetic [27,29,30] prediction models are currently only useful in conditions in which the wearer of the IMUs is observed, such as in a clinical environment. This is because in free-living conditions people wearing IMUs will perform other activities in addition to walking (e.g., transitioning to/from a chair and negotiating stairs). Therefore, biomechanical prediction models have limited use in free-living conditions without additional data processing that can automate the identification and labelling of the long, continuous streams of data that are produced by IMUs.

Our approach was to train the kinematic prediction model on labelled data that could potentially be output from a human activity recognition deep learning algorithm as part of a data handling pipeline. We previously established a proof-of-concept about the development of a human activity recognition model [28] that can segment data into the phases of clinically important activities described in this study, which could be the first component of a data-handling pipeline.
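As a rough illustration of how the first component of such a pipeline could hand data to a kinematic prediction model, the sketch below groups a per-sample stream of activity-phase labels into contiguous segments. The label names, the one-label-per-sample format and the function name `segment_by_label` are assumptions made for illustration only; they are not taken from the human activity recognition model in [28].

```python
from itertools import groupby
from typing import List, Tuple


def segment_by_label(labels: List[str]) -> List[Tuple[str, int, int]]:
    """Group per-sample activity labels into (label, start, end) runs.

    `end` is exclusive, so imu[start:end] selects the samples of one segment.
    """
    segments = []
    start = 0
    for label, run in groupby(labels):
        length = sum(1 for _ in run)  # number of consecutive samples with this label
        segments.append((label, start, start + length))
        start += length
    return segments


# Hypothetical output of an activity recognition step (one label per IMU sample).
labels = ["walk_stance"] * 3 + ["walk_swing"] * 2 + ["sit_to_stand"] * 4
segments = segment_by_label(labels)
```

Each resulting segment could then be passed, with its label, to the kinematic prediction model, so that long continuous recordings do not need to be labelled by hand.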

However, it is currently unknown which method of data segmentation is most useful, minimally burdensome for clinicians and computationally efficient. We selected phases of activities because clinicians are typically interested in data from phases, rather than the whole gait cycle (see Section 4.2.2). Data in other studies has been segmented in a variety of ways including continuous walking [22], three gait cycles [21], or single gait cycles [26,27]. The higher-order data segmentation in those studies may prove to be clinically useful in a human activity recognition model that includes other activities (e.g., going up stairs or sit-to-stand), and for integration with a gait event detection algorithm [55].

4.3.2. Single vs. Multiple Models

We developed a single kinematic prediction model to include training data from multiple activities, which provides more generalisability and precludes the need to model every activity [30]. However, there is uncertainty about the superiority of universal single models for prediction of kinematics across multiple activities compared to multiple models that predict only specific activities. Stetter et al. [30] reported the development of an ANN to predict knee joint forces for 16 sport-specific activities (e.g., walking, running, jumping, and cutting). They noted that the higher error of their model compared to the study by Wouda et al. [24] was possibly because of their use of a single model for the multiple activities [30]. Contrary to this, our single model for multiple activities had lower RMSE [24] and stronger correlations [21] than other approaches that only used training data from a single activity.

Furthermore, our double-leg model performed better than the single-leg model for the bilateral simultaneous activities of sit-to-stand and stand-to-sit. Stetter et al. [30] demonstrated a similar effect, where a single-leg model had higher error for two-leg activities (jump take-off and two-leg jump landing) compared to single-leg activities.

These results together may indicate that, in future studies, activity-specific models should be directly compared to models trained to predict kinematics for multiple activities, and that models trained on both legs may impact the results of asynchronous movement such as walking.
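A direct comparison of this kind would need prediction error reported separately for each activity. The following is a minimal sketch of computing RMSE and MAE per activity from paired predicted and reference knee flexion angles. The function name `errors_by_activity`, the activity labels and the angle values are made up for illustration, and pooling all samples of an activity before computing the error is an assumption rather than a description of how errors were aggregated in this study.

```python
import math
from collections import defaultdict
from typing import Dict, List, Tuple


def errors_by_activity(
    records: List[Tuple[str, float, float]]
) -> Dict[str, Tuple[float, float]]:
    """Return {activity: (rmse, mae)} from (activity, predicted, reference) triples in degrees."""
    grouped = defaultdict(list)
    for activity, predicted, reference in records:
        grouped[activity].append(predicted - reference)
    result = {}
    for activity, diffs in grouped.items():
        rmse = math.sqrt(sum(d * d for d in diffs) / len(diffs))
        mae = sum(abs(d) for d in diffs) / len(diffs)
        result[activity] = (rmse, mae)
    return result


# Made-up knee flexion angles (degrees), for illustration only.
records = [
    ("walk_stance", 12.0, 15.0),
    ("walk_stance", 20.0, 16.0),
    ("sit_to_stand", 80.0, 86.0),
    ("sit_to_stand", 40.0, 48.0),
]
scores = errors_by_activity(records)
```

Running the same function on the outputs of a multi-activity model and of activity-specific models would give per-activity errors that can be compared side by side.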

4.3.3. Augmented and Simulated Data

One challenge of developing generalisable kinematic prediction models is the collection of a sufficient number of samples from a representative cohort of participants, a process which is burdensome. This challenge is highlighted by the large RMSE/MAE for the stair down stance, for which the model had the least training data. One solution becoming increasingly popular is to augment the training data by including simulated IMU data [23,26]. It has been demonstrated that adding simulated data results in a 27–45% improvement in RMSE, giving knee flexion RMSE between 1.4° and 5.22° [26,56]. These approaches using simulated and augmented data may provide additional benefit in models trained on data collected from clinical populations, such as people with knee osteoarthritis. In addition, using augmented data may help reduce the impact of misplacement of sensors by either clinicians or patients.
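To make the idea of augmenting data against sensor misplacement more concrete, the sketch below rotates every sample of a 3-axis IMU trial by one small random angle about a single sensor axis. This is a simple rotation-based augmentation chosen for illustration, not the simulated IMU approach used in [23,26]; the choice of the z axis, the 10° angle range and the function names are all assumptions.

```python
import math
import random
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def rotate_about_z(sample: Vec3, angle_deg: float) -> Vec3:
    """Rotate one 3-axis sensor sample about the sensor's z axis."""
    a = math.radians(angle_deg)
    x, y, z = sample
    return (x * math.cos(a) - y * math.sin(a),
            x * math.sin(a) + y * math.cos(a),
            z)


def augment_trial(trial: List[Vec3], max_angle_deg: float, rng: random.Random) -> List[Vec3]:
    """Apply one random misplacement angle to every sample in a trial."""
    angle = rng.uniform(-max_angle_deg, max_angle_deg)
    return [rotate_about_z(s, angle) for s in trial]


rng = random.Random(0)  # fixed seed so the example is repeatable
trial = [(0.0, 9.81, 0.0), (1.0, 9.5, 0.3)]  # made-up accelerometer samples (m/s^2)
augmented = augment_trial(trial, max_angle_deg=10.0, rng=rng)
```

Because a rotation changes only the orientation of the measured vector, the magnitude of each sample is preserved while the per-axis signals seen by the model change, which is roughly what a rotated sensor would produce.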

4.3.4. Deep Learning Architecture

We used BiLSTM following on from the work of Renani et al. [26]. BiLSTM is proposed to improve prediction accuracy compared to traditional LSTM because it traverses the input data twice, using both past and future data points [39]. Future studies should investigate the performance of BiLSTM compared to traditional LSTM and other deep learning approaches for kinematic prediction. Although we used BiLSTM, there is some indication that combining multiple deep learning architectures, such as CNN with LSTM (ConvLSTM), can improve prediction accuracy for IMU data [57]. Hernandez et al. [22] demonstrated that this combined deep learning approach using ConvLSTM can provide good results for knee flexion time-series predictions (MAE 3 (SD 1.15), R = 0.99) using nested k-fold validation with a 70% training, 15% validation and 15% test split. Researchers must further investigate the balance between predictive accuracy and the requirement for pre-processing of data. Mundt et al. [38] tested the predictive accuracy of a CNN, LSTM and multilayer perceptron network for lower limb kinematics and kinetics. They demonstrated superior accuracy with a CNN for prediction of kinematics, although the pre-processing requirements are high for this type of model compared to LSTM, which may be more suited to real-time applications.
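The point made at the start of this section, that a bidirectional network uses both past and future data points, can be illustrated with a toy example. In the sketch below a simple leaky-average recurrence stands in for the LSTM cell, so this is not the model used in this study; the function names, the decay value and the signals are assumptions for illustration.

```python
from typing import List, Tuple


def recurrent_pass(inputs: List[float], decay: float = 0.5) -> List[float]:
    """Toy recurrent state: h[t] = decay * h[t-1] + (1 - decay) * x[t]."""
    state, states = 0.0, []
    for x in inputs:
        state = decay * state + (1.0 - decay) * x
        states.append(state)
    return states


def bidirectional_pass(inputs: List[float]) -> List[Tuple[float, float]]:
    """Pair a forward pass with a backward pass, aligned by time step."""
    forward = recurrent_pass(inputs)
    backward = recurrent_pass(inputs[::-1])[::-1]  # run on reversed input, then re-align
    return list(zip(forward, backward))


signal = [0.0, 1.0, 2.0, 3.0]
changed = [0.0, 1.0, 2.0, 9.0]  # only the final (future) sample differs

uni_a, uni_b = recurrent_pass(signal), recurrent_pass(changed)
bi_a, bi_b = bidirectional_pass(signal), bidirectional_pass(changed)
```

At the second time step the forward-only states are identical for both signals, whereas the backward component of the bidirectional output differs, because it has already seen the changed final sample. The same property means a bidirectional model needs the whole segment before it can produce an output, which is relevant to the real-time considerations discussed above.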

5. Limitations

Because this study was a proof-of-concept investigation, there are a number of limitations. We included only 17 participants, did not have a representative number of female participants, and excluded people with high BMI. These factors may limit the generalisability of our model for the broader population with knee osteoarthritis. Further, there was an unbalanced dataset with a significantly different number of trials across activities, which may have affected the results. This study included clinically relevant activities described in clinical guidelines for people who have knee osteoarthritis [9]. However, there are additional activities that people perform daily that were not included. A single model for predicting kinematics for multiple activities was used in this study, which may have affected the prediction error, and it is unclear if a universal model is feasible for all activities a person may perform. Further investigation is required to determine the comparative accuracy of a single model for multiple activities versus individual models for each type of clinically relevant activity (or phase of activities) in clinical populations, such as people who have knee osteoarthritis.

6. Conclusions

This proof-of-concept study demonstrates that using IMU training data collected from people who have knee osteoarthritis to predict sagittal plane knee joint kinematics during multiple clinically important activities using a deep learning model is feasible. Our novel BiLSTM model demonstrated that using training data from as few as two IMUs placed on one leg results in less error for most activities than using additional training data from IMUs on both legs. To be of clinical value, the model presented in this study could be combined with a human activity recognition system to monitor response to treatment in people with knee osteoarthritis.

Author Contributions

Conceptualization, J.-S.T., A.S., P.K., P.O., A.C.; data curation, J.-S.T., T.B., K.N., P.D.; formal analysis, J.-S.T., S.T., P.K., A.C., A.S.; investigation, J.-S.T., S.T., T.B., P.D., K.N., P.K., J.P.C., A.S., P.O., A.C.; methodology, J.-S.T., S.T., P.D., K.N., P.K., A.S., P.O., A.C.; project administration, J.-S.T., T.B., A.C.; resources, P.K., A.C.; supervision, J.P.C., P.K., A.S., P.O., A.C.; validation, J.-S.T., S.T.; visualization, J.-S.T., S.T.; writing—original draft, J.-S.T.; writing—review and editing, J.-S.T., S.T., T.B., P.D., K.N., P.K., J.P.C., A.S., P.O., A.C. All authors have read and agreed to the published version of the manuscript.

Funding

An Australian Government Research Training Program Scholarship was received by the lead author to support his capacity to undertake this research.

Institutional Review Board Statement

The study was conducted according to the guidelines of the Declaration of Helsinki, and approved by the Curtin University Human Research Ethics Committee (HRE2017-0695).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data presented in this study may be available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

References

- Fukutani, N.; Iijima, H.; Aoyama, T.; Yamamoto, Y.; Hiraoka, M.; Miyanobu, K.; Jinnouchi, M.; Kaneda, E.; Tsuboyama, T.; Matsuda, S. Knee pain during activities of daily living and its relationship with physical activity in patients with early and severe knee osteoarthritis. Clin. Rheumatol. 2016, 35, 2307–2316.

- Baliunas, A.J.; Hurwitz, D.E.; Ryals, A.B.; Karrar, A.; Case, J.P.; Block, J.A.; Andriacchi, T.P. Increased knee joint loads during walking are present in subjects with knee osteoarthritis. Osteoarthr. Cartil. 2002, 10, 573–579.

- Bouchouras, G.; Patsika, G.; Hatzitaki, V.; Kellis, E. Kinematics and knee muscle activation during sit-to-stand movement in women with knee osteoarthritis. Clin. Biomech. 2015, 30, 599–607.

- Hinman, R.S.; Bennell, K.L.; Metcalf, B.R.; Crossley, K.M. Delayed onset of quadriceps activity and altered knee joint kinematics during stair stepping in individuals with knee osteoarthritis. Arch. Phys. Med. Rehabil. 2002, 83, 1080–1086.

- McCarthy, I.; Hodgins, D.; Mor, A.; Elbaz, A.; Segal, G. Analysis of knee flexion characteristics and how they alter with the onset of knee osteoarthritis: A case control study. BMC Musculoskelet. Disord. 2013, 14, 169.

- Tan, J.-S.T.; Tikoft, E.T.; O’Sullivan, P.; Smith, A.; Campbell, A.; Caneiro, J.P.; Kent, P. The Relationship Between Changes in Movement and Activity Limitation or Pain in People with Knee Osteoarthritis: A Systematic Review. J. Orthop. Sports Phys. Ther. 2021, 51, 492–502.

- Davis, H.C.; Luc-Harkey, B.A.; Seeley, M.K.; Troy Blackburn, J.; Pietrosimone, B. Sagittal plane walking biomechanics in individuals with knee osteoarthritis after quadriceps strengthening. Osteoarthr. Cartil. 2019, 27, 771–780.

- Wang, J.; Siddicky, S.F.; Oliver, T.E.; Dohm, M.P.; Barnes, C.L.; Mannen, E.M. Biomechanical Changes Following Knee Arthroplasty During Sit-To-Stand Transfers: Systematic Review. J. Arthroplast. 2019, 34, 2494–2501.

- Dobson, F.; Hinman, R.S.; Roos, E.M.; Abbott, J.H.; Stratford, P.; Davis, A.M.; Buchbinder, R.; Snyder-Mackler, L.; Henrotin, Y.; Thumboo, J.; et al. OARSI recommended performance-based tests to assess physical function in people diagnosed with hip or knee osteoarthritis. Osteoarthr. Cartil. 2013, 21, 1042–1052.

- Milanese, S.; Gordon, S.; Buettner, P.; Flavell, C.; Ruston, S.; Coe, D.; O’Sullivan, W.; McCormack, S. Reliability and concurrent validity of knee angle measurement: Smart phone app versus universal goniometer used by experienced and novice clinicians. Man. Ther. 2014, 19, 569–574.

- Cudejko, T.; Button, K.; Willott, J.; Al-Amri, M. Applications of Wearable Technology in a Real-Life Setting in People with Knee Osteoarthritis: A Systematic Scoping Review. J. Clin. Med. 2021, 10, 5645.

- Kobsar, D.; Masood, Z.; Khan, H.; Khalil, N.; Kiwan, M.Y.; Ridd, S.; Tobis, M. Wearable Inertial Sensors for Gait Analysis in Adults with Osteoarthritis—A Scoping Review. Sensors 2020, 20, 7143.

- Small, S.R.; Bullock, G.S.; Khalid, S.; Barker, K.; Trivella, M.; Price, A.J. Current clinical utilisation of wearable motion sensors for the assessment of outcome following knee arthroplasty: A scoping review. BMJ Open 2019, 9, e033832.

- Binnie, T.; Smith, A.; Kent, P.; Ng, L.; O’Sullivan, P.; Tan, J.-S.; Davey, P.C.; Campbell, A. Concurrent validation of inertial sensors for measurement of knee kinematics in individuals with knee osteoarthritis: A technical report. Health Technol. 2021.

- Van der Straaten, R.; De Baets, L.; Jonkers, I.; Timmermans, A. Mobile assessment of the lower limb kinematics in healthy persons and in persons with degenerative knee disorders: A systematic review. Gait Posture 2018, 59, 229–241.

- Rast, F.M.; Labruyère, R. Systematic review on the application of wearable inertial sensors to quantify everyday life motor activity in people with mobility impairments. J. NeuroEng. Rehabil. 2020, 17, 148.

- Schall, M.C.; Fethke, N.B.; Chen, H.; Oyama, S.; Douphrate, D.I. Accuracy and repeatability of an inertial measurement unit system for field-based occupational studies. Ergonomics 2016, 59, 591–602.

- de Vries, W.H.K.; Veeger, H.E.J.; Baten, C.T.M.; van der Helm, F.C.T. Magnetic distortion in motion labs, implications for validating inertial magnetic sensors. Gait Posture 2009, 29, 535–541.

- Teufl, W.; Miezal, M.; Taetz, B.; Fröhlich, M.; Bleser, G. Validity of inertial sensor based 3D joint kinematics of static and dynamic sport and physiotherapy specific movements. PLoS ONE 2019, 14, e0213064.

- Argent, R.; Drummond, S.; Remus, A.; O’Reilly, M.; Caulfield, B. Evaluating the use of machine learning in the assessment of joint angle using a single inertial sensor. J. Rehabil. Assist. Technol. Eng. 2019, 6.

- Findlow, A.; Goulermas, J.Y.; Nester, C.; Howard, D.; Kenney, L.P.J. Predicting lower limb joint kinematics using wearable motion sensors. Gait Posture 2008, 28, 120–126.

- Hernandez, V.; Dadkhah, D.; Babakeshizadeh, V.; Kulić, D. Lower body kinematics estimation from wearable sensors for walking and running: A deep learning approach. Gait Posture 2021, 83, 185–193.

- Mundt, M.; Koeppe, A.; David, S.; Witter, T.; Bamer, F.; Potthast, W.; Markert, B. Estimation of Gait Mechanics Based on Simulated and Measured IMU Data Using an Artificial Neural Network. Front. Bioeng. Biotechnol. 2020, 8, 41.

- Wouda, F.J.; Giuberti, M.; Bellusci, G.; Maartens, E.; Reenalda, J.; van Beijnum, B.-J.F.; Veltink, P.H. Estimation of Vertical Ground Reaction Forces and Sagittal Knee Kinematics During Running Using Three Inertial Sensors. Front. Physiol. 2018, 9, 218.

- Rapp, E.; Shin, S.; Thomsen, W.; Ferber, R.; Halilaj, E. Estimation of Kinematics from Inertial Measurement Units Using a Combined Deep Learning and Optimization Framework. J. Biomech. 2021, 116, 110229.

- Renani, M.S.; Eustace, A.M.; Myers, C.A.; Clary, C.W. The Use of Synthetic IMU Signals in the Training of Deep Learning Models Significantly Improves the Accuracy of Joint Kinematic Predictions. Sensors 2021, 21, 5876.

- Mundt, M.; Thomsen, W.; Witter, T.; Koeppe, A.; David, S.; Bamer, F.; Potthast, W.; Markert, B. Prediction of lower limb joint angles and moments during gait using artificial neural networks. Med. Biol. Eng. Comput. 2020, 58, 211–225.

- Tan, J.-S.; Beheshti, B.K.; Binnie, T.; Davey, P.; Caneiro, J.P.; Kent, P.; Smith, A.; O’Sullivan, P.; Campbell, A. Human Activity Recognition for People with Knee Osteoarthritis—A Proof-of-Concept. Sensors 2021, 21, 3381.

- Stetter, B.J.; Krafft, F.C.; Ringhof, S.; Stein, T.; Sell, S. A Machine Learning and Wearable Sensor Based Approach to Estimate External Knee Flexion and Adduction Moments During Various Locomotion Tasks. Front. Bioeng. Biotechnol. 2020, 8, 9.

- Stetter, B.J.; Ringhof, S.; Krafft, F.C.; Sell, S.; Stein, T. Estimation of Knee Joint Forces in Sport Movements Using Wearable Sensors and Machine Learning. Sensors 2019, 19, 3690.

- National Clinical Guideline Centre (UK). Osteoarthritis: Care and Management in Adults. Available online: https://www.ncbi.nlm.nih.gov/books/NBK333067/ (accessed on 8 September 2021).

- Roos, E.M.; Lohmander, L.S. The Knee injury and Osteoarthritis Outcome Score (KOOS): From joint injury to osteoarthritis. Health Qual. Life Outcomes 2003, 1, 64.

- Ehara, Y.; Fujimoto, H.; Miyazaki, S.; Tanaka, S.; Yamamoto, S. Comparison of the performance of 3D camera systems. Gait Posture 1995, 3, 166–169.

- Richards, J.G. The measurement of human motion: A comparison of commercially available systems. Hum. Mov. Sci. 1999, 18, 589–602.

- Wu, G.; Siegler, S.; Allard, P.; Kirtley, C.; Leardini, A.; Rosenbaum, D.; Whittle, M.; D’Lima, D.D.; Cristofolini, L.; Witte, H.; et al. ISB recommendation on definitions of joint coordinate system of various joints for the reporting of human joint motion—Part I: Ankle, hip, and spine. J. Biomech. 2002, 35, 543–548.

- Besier, T.F.; Sturnieks, D.L.; Alderson, J.A.; Lloyd, D.G. Repeatability of gait data using a functional hip joint centre and a mean helical knee axis. J. Biomech. 2003, 36, 1159–1168.

- Mundt, M.; Koeppe, A.; Bamer, F.; David, S.; Markert, B. Artificial Neural Networks in Motion Analysis—Applications of Unsupervised and Heuristic Feature Selection Techniques. Sensors 2020, 20, 4581.

- Mundt, M.; Johnson, W.R.; Potthast, W.; Markert, B.; Mian, A.; Alderson, J. A Comparison of Three Neural Network Approaches for Estimating Joint Angles and Moments from Inertial Measurement Units. Sensors 2021, 21, 4535.

- Siami-Namini, S.; Tavakoli, N.; Namin, A.S. The Performance of LSTM and BiLSTM in Forecasting Time Series. In Proceedings of the 2019 IEEE International Conference on Big Data (Big Data), Los Angeles, CA, USA, 9–12 December 2019; pp. 3285–3292.

- Hendry, D.; Napier, K.; Hosking, R.; Chai, K.; Davey, P.; Hopper, L.; Wild, C.; O’Sullivan, P.; Straker, L.; Campbell, A. Development of a Machine Learning Model for the Estimation of Hip and Lumbar Angles in Ballet Dancers. Med. Probl. Perform. Artist. 2021, 36, 61–71.

- Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

- Gholamiangonabadi, D.; Kiselov, N.; Grolinger, K. Deep Neural Networks for Human Activity Recognition with Wearable Sensors: Leave-One-Subject-Out Cross-Validation for Model Selection. IEEE Access 2020, 8, 133982–133994.

- Ren, L.; Jones, R.K.; Howard, D. Whole body inverse dynamics over a complete gait cycle based only on measured kinematics. J. Biomech. 2008, 41, 2750–2759.

- Corey, D.M.; Dunlap, W.P.; Burke, M.J. Averaging Correlations: Expected Values and Bias in Combined Pearson rs and Fisher’s z Transformations. J. Gen. Psychol. 1998, 125, 245–261.

- Weygers, I.; Kok, M.; Konings, M.; Hallez, H.; De Vroey, H.; Claeys, K. Inertial Sensor-Based Lower Limb Joint Kinematics: A Methodological Systematic Review. Sensors 2020, 20, 673.

- Astephen, J.L.; Deluzio, K.J.; Caldwell, G.E.; Dunbar, M.J. Biomechanical changes at the hip, knee, and ankle joints during gait are associated with knee osteoarthritis severity. J. Orthop. Res. 2008, 26, 332–341.

- Zanardi, A.P.J.; da Silva, E.S.; Costa, R.R.; Passos-Monteiro, E.; dos Santos, I.O.; Kruel, L.F.M.; Peyré-Tartaruga, L.A. Gait parameters of Parkinson’s disease compared with healthy controls: A systematic review and meta-analysis. Sci. Rep. 2021, 11, 752.

- Renani, M.S.; Myers, C.A.; Zandie, R.; Mahoor, M.H.; Davidson, B.S.; Clary, C.W. Deep Learning in Gait Parameter Prediction for OA and TKA Patients Wearing IMU Sensors. Sensors 2020, 20, 5553.

- Albert, M.; Toledo, S.; Shapiro, M.; Koerding, K. Using Mobile Phones for Activity Recognition in Parkinson’s Patients. Front. Neurol. 2012, 3, 158.

- Lonini, L.; Gupta, A.; Kording, K.; Jayaraman, A. Activity recognition in patients with lower limb impairments: Do we need training data from each patient? In Proceedings of the 2016 38th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Orlando, FL, USA, 16–20 August 2016; pp. 3265–3268.

- Chehab, E.F.; Favre, J.; Erhart-Hledik, J.C.; Andriacchi, T.P. Baseline knee adduction and flexion moments during walking are both associated with 5 year cartilage changes in patients with medial knee osteoarthritis. Osteoarthr. Cartil. 2014, 22, 1833–1839.

- Miyazaki, T.; Wada, M.; Kawahara, H.; Sato, M.; Baba, H.; Shimada, S. Dynamic load at baseline can predict radiographic disease progression in medial compartment knee osteoarthritis. Ann. Rheum. Dis. 2002, 61, 617–622.

- Hendry, D.; Chai, K.; Campbell, A.; Hopper, L.; O’Sullivan, P.; Straker, L. Development of a Human Activity Recognition System for Ballet Tasks. Sports Med. Open 2020, 6, 10.

- Lee, J.; Joo, H.; Lee, J.; Chee, Y. Automatic Classification of Squat Posture Using Inertial Sensors: Deep Learning Approach. Sensors 2020, 20, 361.

- Fadillioglu, C.; Stetter, B.J.; Ringhof, S.; Krafft, F.C.; Sell, S.; Stein, T. Automated gait event detection for a variety of locomotion tasks using a novel gyroscope-based algorithm. Gait Posture 2020, 81, 102–108.

- Dorschky, E.; Nitschke, M.; Martindale, C.F.; van den Bogert, A.J.; Koelewijn, A.D.; Eskofier, B.M. CNN-Based Estimation of Sagittal Plane Walking and Running Biomechanics From Measured and Simulated Inertial Sensor Data. Front. Bioeng. Biotechnol. 2020, 8, 604.

- Ascioglu, G.; Senol, Y. Design of a wearable wireless multi-sensor monitoring system and application for activity recognition using deep learning. IEEE Access 2020, 8, 169183–169195.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).