Classifying Goliath Grouper (Epinephelus itajara) Behaviors from a Novel, Multi-Sensor Tag

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Site and Capture

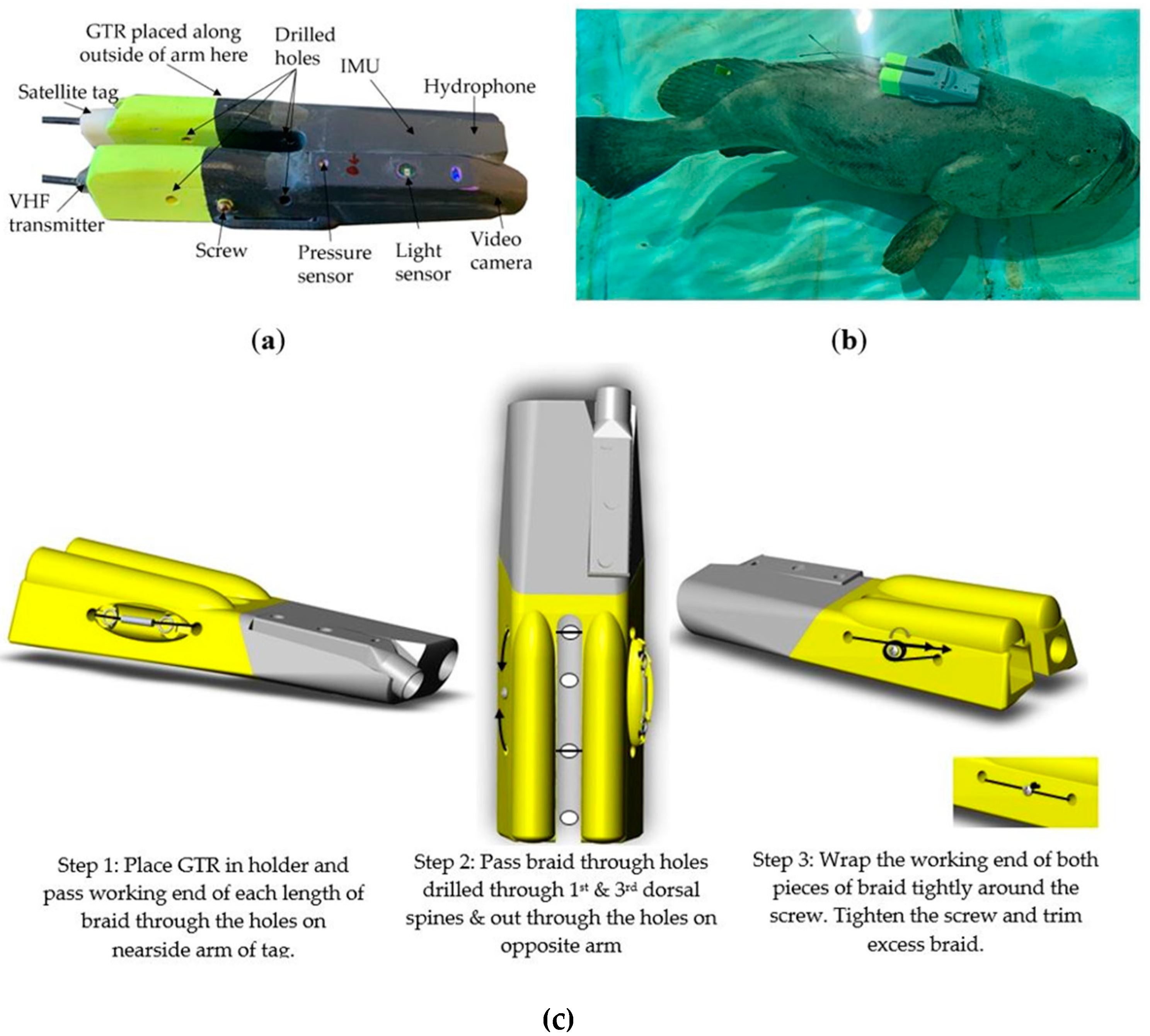

2.2. Tag Attachment

2.3. Data Analysis

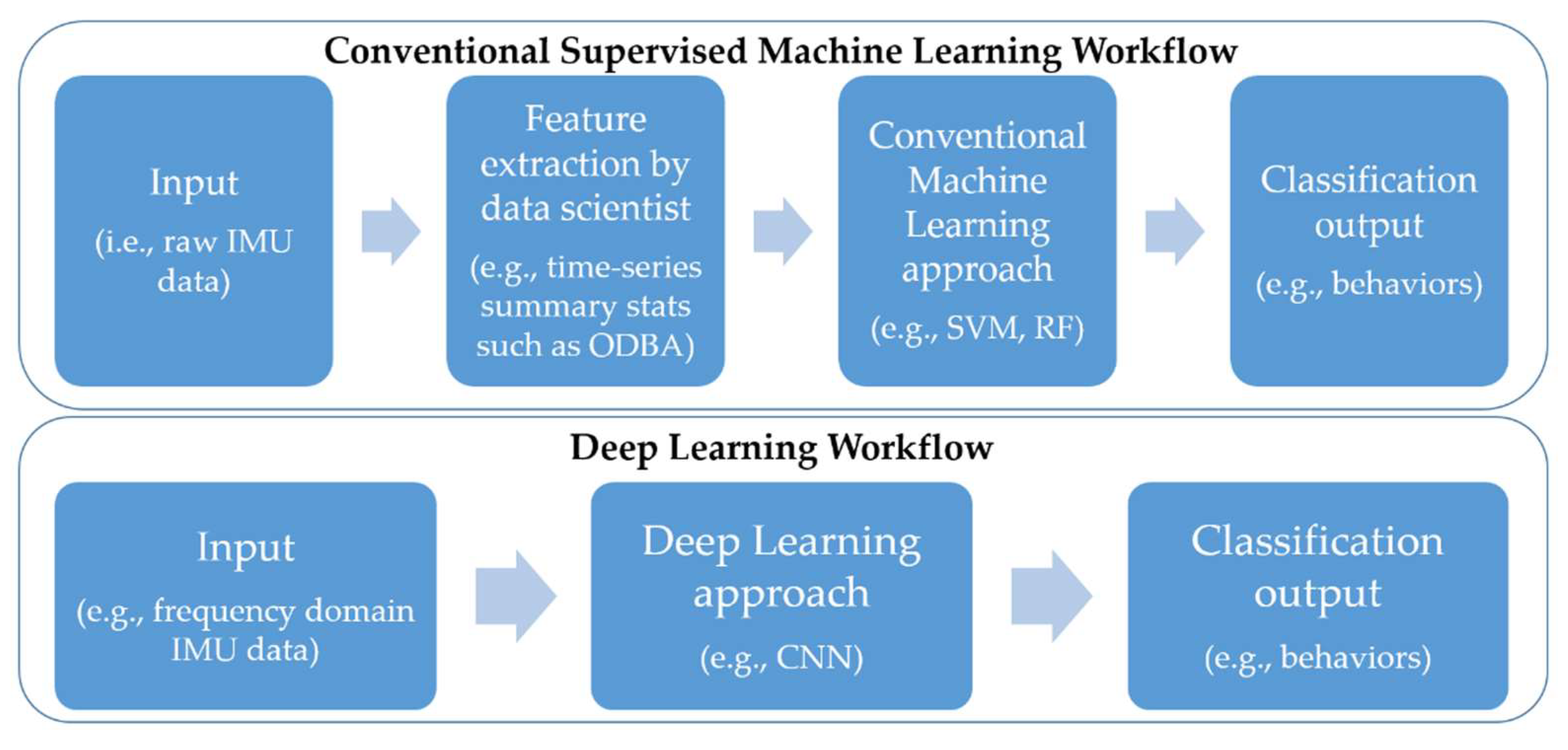

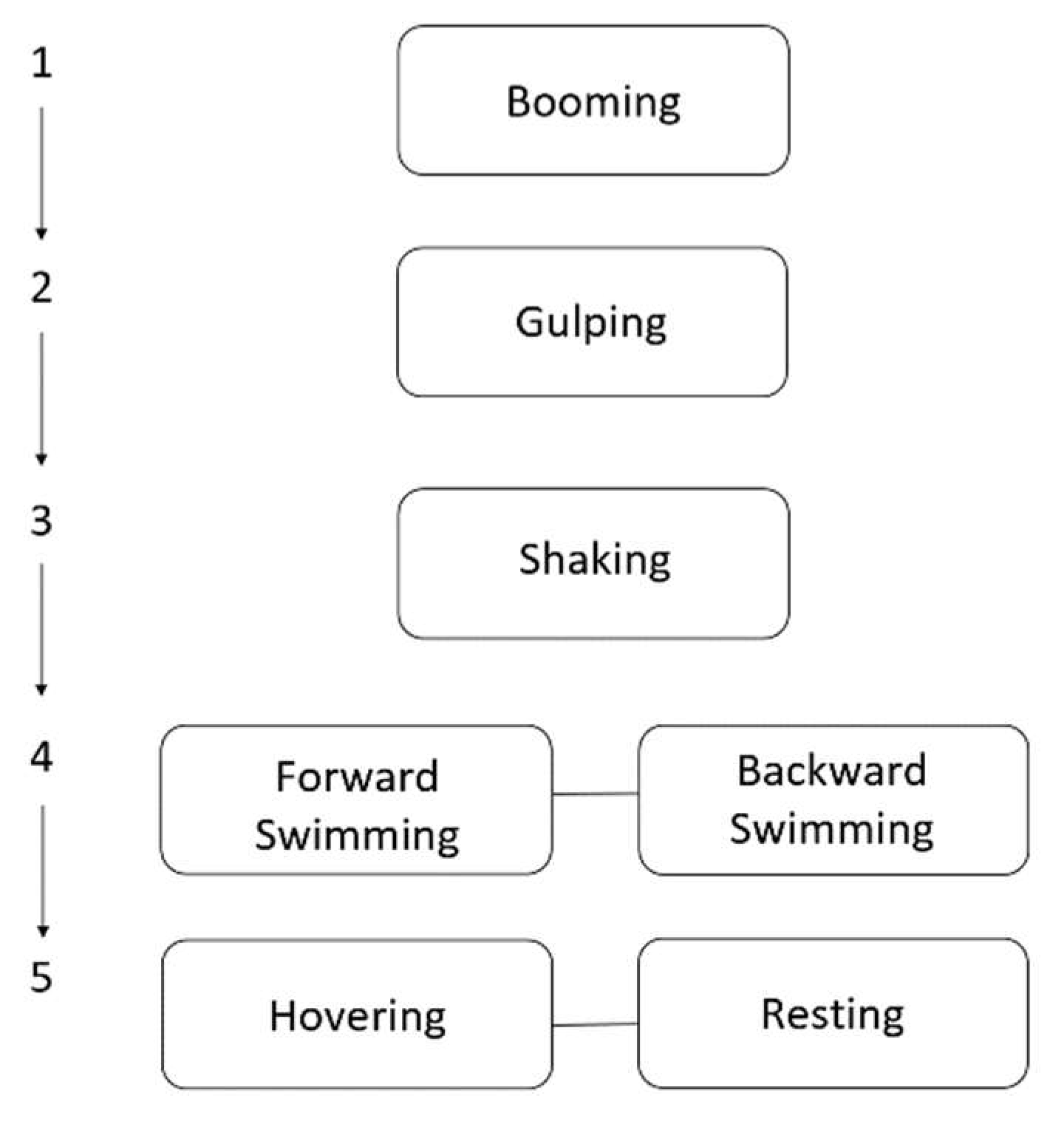

2.3.1. Ethogram and Feature Extraction

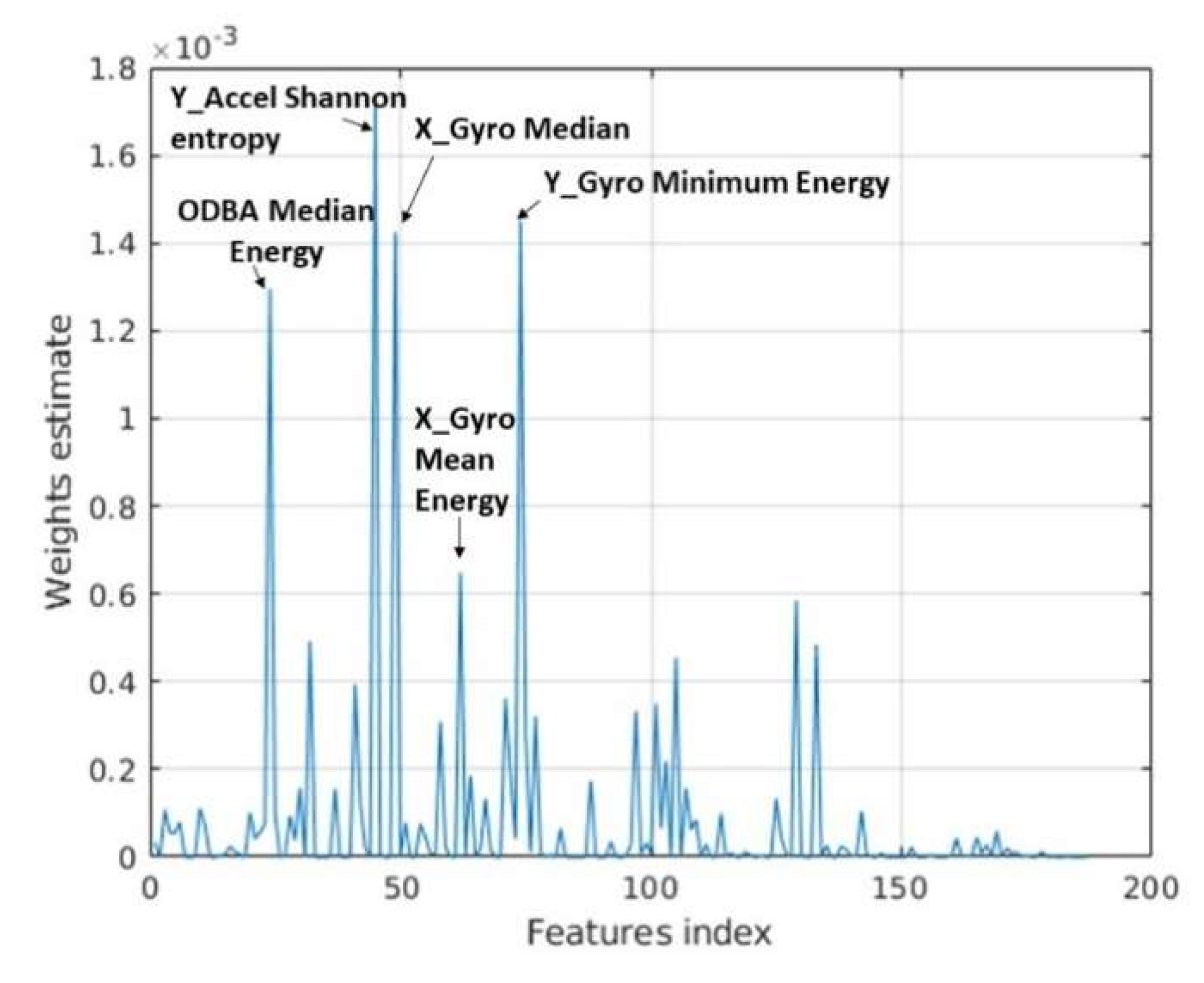

2.3.2. Conventional Machine Learning Models

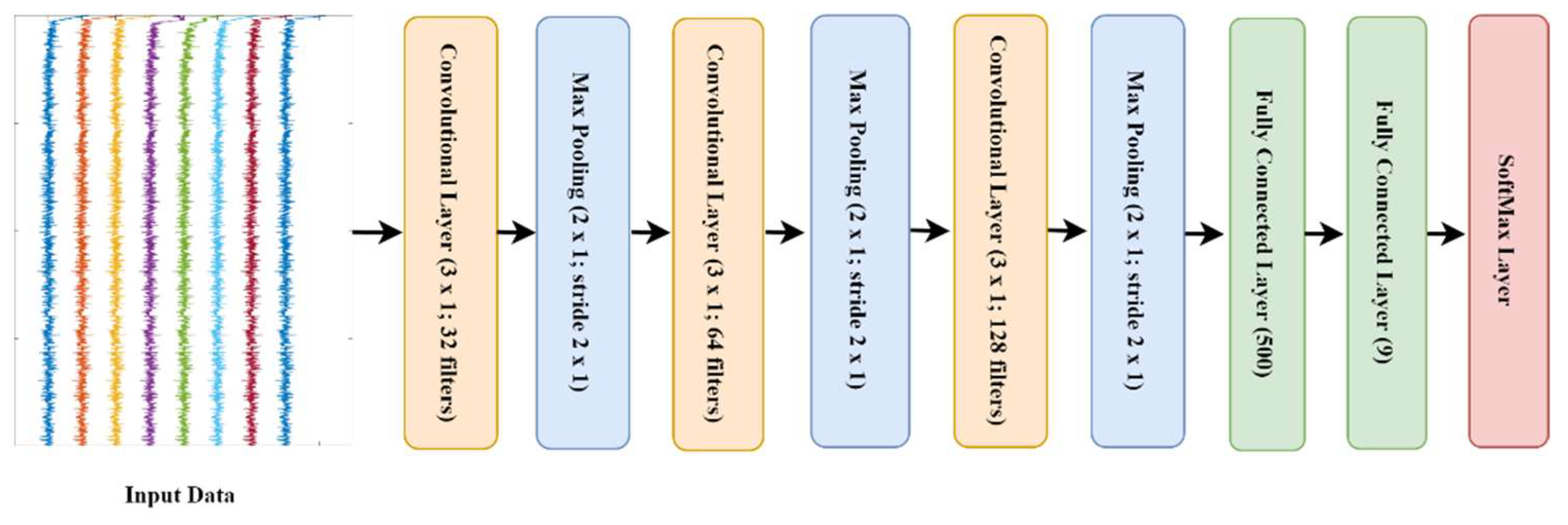

2.3.3. Deep Learning Approach

- l1: 32 kernels of size (3 × 1) applied to each frequency transformation of the input data, followed by max pooling with a pool size of [2, 1] and a stride of two.

- l3: 64 kernels of size (3 × 1) applied to each frequency transformation of the input data, followed by max pooling with a pool size of [2, 1] and a stride of two.

- l5: 128 kernels of size (3 × 1) applied to each frequency transformation of the input data, followed by max pooling with a pool size of [2, 1] and a stride of two.

- l7: a fully connected layer with 500 nodes, followed by a dropout layer with probability 0.25.

- l9: a fully connected layer with 9 nodes, followed by a softmax activation layer.
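The layer list above can be made concrete by tracing tensor shapes and trainable-parameter counts through l1 to l9. The sketch below is illustrative only. It assumes "same" padding for the 3 × 1 convolutions, a stride of 2 along both axes for the [2, 1] pooling, and a hypothetical 64 × 8 × 3 input. None of these details is stated in the text, and the function names are hypothetical. The 9 output nodes match the nine behaviors scored in the results tables.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Shape:
    height: int    # hypothetical: frequency bins of the transformed input
    width: int     # hypothetical: time frames or sensor axes
    channels: int  # number of feature maps


def conv3x1_same(shape: Shape, n_kernels: int) -> tuple[Shape, int]:
    """3 x 1 convolution, ASSUMING 'same' padding (spatial size unchanged)."""
    params = 3 * 1 * shape.channels * n_kernels + n_kernels  # weights + biases
    return Shape(shape.height, shape.width, n_kernels), params


def maxpool_2x1(shape: Shape, stride: tuple[int, int] = (2, 2)) -> Shape:
    """[2, 1] max pooling, no padding: out = (n - pool) // stride + 1 per axis.

    The stride of (2, 2) is an ASSUMPTION; (2, 1) is the other plausible reading.
    """
    sh, sw = stride
    return Shape((shape.height - 2) // sh + 1,
                 (shape.width - 1) // sw + 1,
                 shape.channels)


def dense_params(n_in: int, n_out: int) -> int:
    return n_in * n_out + n_out  # weights + biases


def trace_network(inp: Shape, n_classes: int = 9) -> tuple[Shape, int, int]:
    """Return (shape after the l5 pooling, flattened size, total trainable parameters)."""
    shape, total = inp, 0
    for n_kernels in (32, 64, 128):        # l1, l3, l5, each followed by max pooling
        shape, params = conv3x1_same(shape, n_kernels)
        total += params
        shape = maxpool_2x1(shape)
    flat = shape.height * shape.width * shape.channels
    total += dense_params(flat, 500)       # l7; dropout (p = 0.25) adds no parameters
    total += dense_params(500, n_classes)  # l9, followed by softmax
    return shape, flat, total


print(trace_network(Shape(64, 8, 3)))
```

Most of the parameters sit in l7, because its weight count scales with the flattened size. Switching the pooling stride to (2, 1) leaves the width unpooled and enlarges that layer, so the pooling convention matters for model size.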

2.3.4. Data Augmentation

2.3.5. Performance Measures

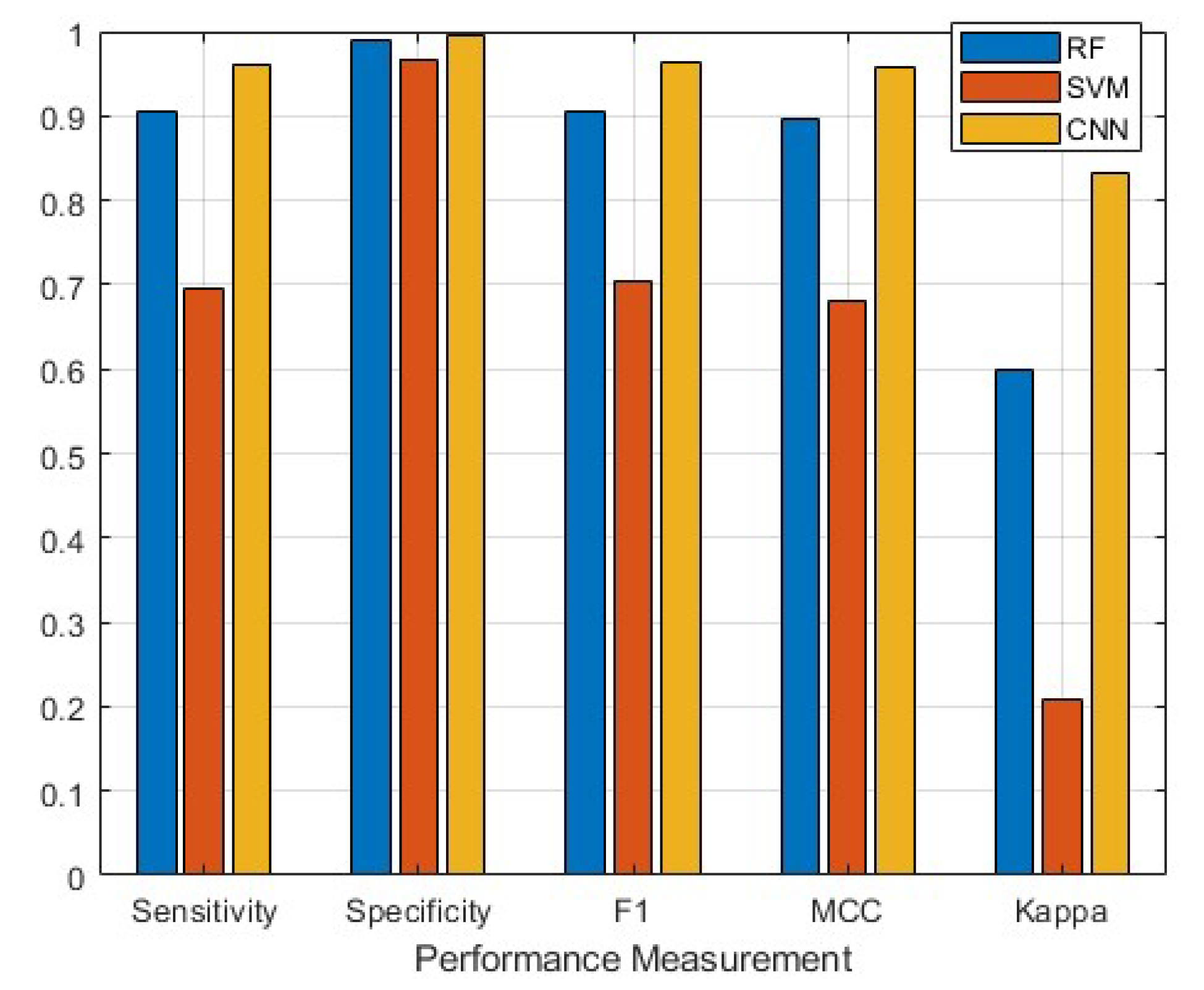
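The results tables report Cohen's kappa, sensitivity, specificity, F1-score and the Matthews correlation coefficient (MCC) for each behavior. The sketch below computes these from a multi-class confusion matrix. It assumes the standard one-vs-rest definitions, since the exact equations are not reproduced in the text here. Computing a per-behavior kappa from each behavior's one-vs-rest 2 × 2 table is also an assumption about how per-class kappa values can be obtained. All function names and the example matrix are hypothetical.

```python
from math import sqrt


def one_vs_rest(cm, k):
    """TP, FN, FP, TN for class k; cm[i][j] counts true class i predicted as class j."""
    n = sum(sum(row) for row in cm)
    tp = cm[k][k]
    fn = sum(cm[k]) - tp                 # class k predicted as another class
    fp = sum(row[k] for row in cm) - tp  # other classes predicted as class k
    tn = n - tp - fn - fp
    return tp, fn, fp, tn


def class_metrics(cm, k):
    """Sensitivity, specificity, F1-score and MCC for class k (one-vs-rest)."""
    tp, fn, fp, tn = one_vs_rest(cm, k)
    sensitivity = tp / (tp + fn) if tp + fn else 0.0
    specificity = tn / (tn + fp) if tn + fp else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    f1 = (2 * precision * sensitivity / (precision + sensitivity)
          if precision + sensitivity else 0.0)
    denom = sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0
    return {"sensitivity": sensitivity, "specificity": specificity,
            "f1": f1, "mcc": mcc}


def cohen_kappa(cm):
    """Cohen's kappa: observed agreement corrected for agreement expected by chance."""
    n = sum(sum(row) for row in cm)
    size = len(cm)
    p_obs = sum(cm[i][i] for i in range(size)) / n
    p_exp = sum(sum(cm[i]) * sum(row[i] for row in cm) for i in range(size)) / n**2
    return (p_obs - p_exp) / (1 - p_exp)


def class_kappa(cm, k):
    """Per-behavior kappa from the one-vs-rest 2 x 2 table (an ASSUMED convention)."""
    tp, fn, fp, tn = one_vs_rest(cm, k)
    return cohen_kappa([[tp, fn], [fp, tn]])


# Hypothetical 3-behavior confusion matrix (rows = observed, columns = predicted).
cm = [[50, 2, 3],
      [5, 40, 5],
      [0, 5, 90]]
print(class_metrics(cm, 0))
print(cohen_kappa(cm), class_kappa(cm, 0))
```

Specificity is dominated by the large true-negative count in a one-vs-rest setting. This is why it stays close to 1 for every behavior, whereas sensitivity, F1-score and MCC separate the classifiers more clearly.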

3. Results

3.1. Ethogram Development

3.2. Classifier Performance

4. Discussion

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Whitford, M.; Klimley, A.P. An overview of behavioral, physiological, and environmental sensors used in animal biotelemetry and biologging studies. Anim. Biotelemetry 2019, 7, 1–24.

- Sims, D.W. Tracking and analysis techniques for understanding free-ranging shark movements and behavior. In Sharks and Their Relatives II: Biodiversity, Adaptive Physiology, and Conservation; Carrier, J., Musick, J., Heithaus, M.R., Eds.; CRC Press: Boca Raton, FL, USA, 2010; pp. 351–392.

- Kawabata, Y.; Noda, T.; Nakashima, Y.; Nanami, A.; Sato, T.; Takebe, T.; Mitamura, H.; Arai, N.; Yamaguchi, T.; Soyano, K. Use of a gyroscope/accelerometer data logger to identify alternative feeding behaviours in fish. J. Exp. Biol. 2014, 217, 3204–3208.

- Hounslow, J.L. Establishing Best Practice for the Classification of Shark Behaviour from Bio-Logging Data. Honors Thesis, Murdoch University, Perth, Australia, 2018.

- Noda, T.; Kawabata, Y.; Arai, N.; Mitamura, H.; Watanabe, S. Animal-mounted gyroscope/accelerometer/magnetometer: In situ measurement of the movement performance of fast-start behaviour in fish. J. Exp. Mar. Bio. Ecol. 2014, 451, 55–68.

- Nathan, R.; Spiegel, O.; Fortmann-Roe, S.; Harel, R.; Wikelski, M.; Getz, W.M. Using tri-axial acceleration data to identify behavioral modes of free-ranging animals: General concepts and tools illustrated for griffon vultures. J. Exp. Biol. 2012, 215, 986–996.

- Kadar, J.P.; Ladds, M.A.; Day, J.; Lyall, B.; Brown, C. Assessment of machine learning models to identify Port Jackson shark behaviours using tri-axial accelerometers. Sensors 2020, 20, 7096.

- Brewster, L.R.; Dale, J.J.; Guttridge, T.L.; Gruber, S.H.; Hansell, A.C.; Elliott, M.; Cowx, I.G.; Whitney, N.M.; Gleiss, A.C. Development and application of a machine learning algorithm for classification of elasmobranch behaviour from accelerometry data. Mar. Biol. 2018, 165, 1–19.

- Jeantet, L.; Vigon, V.; Geiger, S.; Chevallier, D. Fully Convolutional Neural Network: A solution to infer animal behaviours from multi-sensor data. Ecol. Modell. 2021, 450, 109555.

- Murphy, K.P. Machine Learning: A Probabilistic Perspective, 1st ed.; The MIT Press: Cambridge, MA, USA, 2012.

- Hastie, T.; Tibshirani, R.; Friedman, J. The Elements of Statistical Learning: Data Mining, Inference, and Prediction; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2009.

- Lee, H.; Kwon, H. Going deeper with contextual CNN for hyperspectral image classification. IEEE Trans. Image Process. 2017, 26, 4843–4855.

- Peng, H.; Li, J.; He, Y.; Liu, Y.; Bao, M.; Wang, L.; Song, Y.; Yang, Q. Large-scale hierarchical text classification with recursively regularized deep graph-CNN. In Proceedings of the 2018 World Wide Web Conference (WWW 2018), Lyon, France, 23–27 April 2018; Association for Computing Machinery, Inc.: New York, NY, USA, 2018; pp. 1063–1072.

- Du, Z.; Xiao, X.; Uversky, V.N. Classification of chromosomal DNA sequences using hybrid deep learning architectures. Curr. Bioinform. 2020, 15, 1130–1136.

- Ibrahim, A.K.; Zhuang, H.; Chérubin, L.M.; Schärer-Umpierre, M.T.; Erdol, N. Automatic classification of grouper species by their sounds using deep neural networks. J. Acoust. Soc. Am. 2018, 144, EL196–EL202.

- Hur, T.; Bang, J.; Huynh-The, T.; Lee, J.; Kim, J.I.; Lee, S. Iss2Image: A novel signal-encoding technique for CNN-based human activity recognition. Sensors 2018, 18, 3910.

- Almaslukh, B.; Artoli, A.M.; Al-Muhtadi, J. A robust deep learning approach for position-independent smartphone-based human activity recognition. Sensors 2018, 18, 3726.

- Ignatov, A. Real-time human activity recognition from accelerometer data using Convolutional Neural Networks. Appl. Soft Comput. J. 2018, 62, 915–922.

- Avilés-Cruz, C.; Ferreyra-Ramírez, A.; Zúñiga-López, A.; Villegas-Cortéz, J. Coarse-fine convolutional deep-learning strategy for human activity recognition. Sensors 2019, 19, 1556.

- Uddin, M.Z.; Hassan, M.M. Activity Recognition for cognitive assistance using body sensors data and deep convolutional neural network. IEEE Sens. J. 2019, 19, 8413–8419.

- Inoue, M.; Inoue, S.; Nishida, T. Deep recurrent neural network for mobile human activity recognition with high throughput. Artif. Life Robot. 2018, 23, 173–185.

- Chen, W.H.; Betancourt Baca, C.A.; Tou, C.H. LSTM-RNNs combined with scene information for human activity recognition. In Proceedings of the 19th International Conference on e-Health Networking, Applications and Services (Healthcom 2017), Dalian, China, 12–15 October 2017; Institute of Electrical and Electronics Engineers Inc.: New York, NY, USA, 2017; pp. 1–6.

- Malshika Welhenge, A.; Taparugssanagorn, A. Human activity classification using long short-term memory network. Signal Image Video Process. 2019, 13, 651–656.

- Ordóñez, F.J.; Roggen, D. Deep convolutional and LSTM recurrent neural networks for multimodal wearable activity recognition. Sensors 2016, 16, 115.

- Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016; ISBN 9780262035613.

- Karim, F.; Majumdar, S.; Darabi, H.; Chen, S. LSTM fully convolutional networks for time series classification. IEEE Access 2017, 6, 1662–1669.

- Jewell, O.J.D.; Gleiss, A.C.; Jorgensen, S.J.; Andrzejaczek, S.; Moxley, J.H.; Beatty, S.J.; Wikelski, M.; Block, B.A.; Chapple, T.K. Cryptic habitat use of white sharks in kelp forest revealed by animal-borne video. Biol. Lett. 2019, 26, 20190085.

- Byrnes, E.E.; Daly, R.; Leos-Barajas, V.; Langrock, R.; Gleiss, A.C. Evaluating the constraints governing activity patterns of a coastal marine top predator. Mar. Biol. 2021, 168, 11.

- Gleiss, A.C.; Wright, S.; Liebsch, N.; Wilson, R.P.; Norman, B. Contrasting diel patterns in vertical movement and locomotor activity of whale sharks at Ningaloo Reef. Mar. Biol. 2013, 160, 2981–2992.

- Gleiss, A.C.; Schallert, R.J.; Dale, J.J.; Wilson, S.G.; Block, B.A. Direct measurement of swimming and diving kinematics of giant Atlantic bluefin tuna (Thunnus thynnus). R. Soc. Open Sci. 2019, 6, 190203.

- Furukawa, S.; Kawabe, R.; Ohshimo, S.; Fujioka, K.; Nishihara, G.N.; Tsuda, Y.; Aoshima, T.; Kanehara, H.; Nakata, H. Vertical movement of dolphinfish Coryphaena hippurus as recorded by acceleration data-loggers in the northern East China Sea. Environ. Biol. Fishes 2011, 92, 89–99.

- Clarke, T.M.; Whitmarsh, S.K.; Hounslow, J.L.; Gleiss, A.C.; Payne, N.L.; Huveneers, C. Using tri-axial accelerometer loggers to identify spawning behaviours of large pelagic fish. Mov. Ecol. 2021, 9, 26.

- Craig, M.T.; Sadovy de Mitcheson, Y.J.; Heemstra, P.C. Groupers of the World: A Field and Market Guide. National Inquiry Services Centre; NISC (Pty) Ltd.: Grahamstown, South Africa, 2011; ISBN 978-1-920033-11-8.

- Erisman, B.; Heyman, W.; Kobara, S.; Ezer, T.; Pittman, S.; Aburto-Oropeza, O.; Nemeth, R.S. Fish spawning aggregations: Where well-placed management actions can yield big benefits for fisheries and conservation. Fish Fish. 2017, 18, 128–144.

- Hughes, A.T.; Hamilton, R.J.; Choat, J.H.; Rhodes, K.L. Declining grouper spawning aggregations in Western Province, Solomon Islands, signal the need for a modified management approach. PLoS ONE 2020, 15, e0230485.

- Sadovy, Y. The case of the disappearing grouper: Epinephelus striatus, the Nassau grouper, in the Caribbean and western Atlantic. J. Fish Biol. 1997, 46, 961–976.

- Sala, E.; Ballesteros, E.; Starr, R.M. Rapid decline of Nassau grouper spawning aggregations in Belize: Fishery management and conservation needs. Fisheries 2001, 26, 23–30.

- Bullock, L.H.; Murphy, M.D.; Godcharles, M.F.; Mitchell, M.E. Age, growth, and reproduction of jewfish Epinephelus itajara in the eastern Gulf of Mexico. Fish. Bull. 1992, 90, 243–249.

- Bertoncini, A.A.; Aguilar-Perera, A.; Barreiros, J.; Craig, M.T.; Ferreira, B.; Koenig, C. Epinephelus itajara (Atlantic Goliath Grouper). In The IUCN Red List of Threatened Species; IUCN: Gland, Switzerland, 2018.

- Collins, A.B.; Motta, P.J. A kinematic investigation into the feeding behavior of the Goliath grouper Epinephelus itajara. Environ. Biol. Fishes 2017, 100, 309–323.

- Collins, A.; Barbieri, L.R. Behavior, Habitat, and Abundance of the Goliath Grouper, Epinephelus itajara, in the Central Eastern Gulf of Mexico; Fish and Wildlife Research Institute, Florida Fish & Wildlife Conservation Commission: St. Petersburg, FL, USA, 2010.

- Mann, D.A.; Locascio, J.V.; Coleman, F.C.; Koenig, C.C. Goliath grouper Epinephelus itajara sound production and movement patterns on aggregation sites. Endanger. Species Res. 2009, 7, 229–236.

- Malinowski, C.; Coleman, F.; Koenig, C.; Locascio, J.; Murie, D. Are Atlantic goliath grouper, Epinephelus itajara, establishing more northerly spawning sites? Evidence from the northeast Gulf of Mexico. Bull. Mar. Sci. 2019, 95, 371–391.

- Collins, A. An Investigation into the Habitat, Behavior and Opportunistic Feeding Strategies of the Protected Goliath Grouper (Epinephelus itajara). Ph.D. Thesis, University of South Florida, Tampa, FL, USA, 2014.

- Brown, D.D.; Kays, R.; Wikelski, M.; Wilson, R.; Klimley, A. Observing the unwatchable through acceleration logging of animal behavior. Anim. Biotelemetry 2013, 1, 20.

- Whitney, N.M.; Lear, K.O.; Gleiss, A.C.; Payne, N.L.; White, C.F. Advances in the Application of High-Resolution Biologgers to Elasmobranch Fishes. In Shark Research: Emerging Technologies and Applications for the Field and Laboratory; Carrier, J.C., Heithaus, M.R., Simpfendorfer, C.A., Eds.; CRC Press: Boca Raton, FL, USA, 2018; ISBN 1315317109.

- Yoshida, N.; Mitamura, H.; Sasaki, M.; Okamoto, H.; Yoshida, T.; Arai, N. Preliminary study on measuring activity of the red-spotted grouper, Epinephelus akaara, using a novel acoustic acceleration transmitter. In Proceedings of the Design Symposium on Conservation of Ecosystem (the 12th SEASTAR2000 Workshop), Bangkok, Thailand, 20–21 February 2013; pp. 99–102.

- Horie, J.; Mitamura, H.; Ina, Y.; Mashino, Y.; Noda, T.; Moriya, K.; Arai, N.; Sasakura, T. Development of a method for classifying and transmitting high-resolution feeding behavior of fish using an acceleration pinger. Anim. Biotelemetry 2017, 5, 12.

- Myre, B.L.; Guertin, J.; Selcer, K.; Valverde, R.A. Ovarian Dynamics in Free-Ranging Loggerhead Sea Turtles (Caretta caretta). Copeia 2016, 104, 921–929.

- Bentley, B.P.; McGlashan, J.K.; Bresette, M.J.; Wyneken, J. No evidence of selection against anomalous scute arrangements between juvenile and adult sea turtles in Florida. J. Morphol. 2021, 282, 173–184.

- Ladds, M.A.; Thompson, A.P.; Kadar, J.-P.; Slip, D.J.; Hocking, D.P.; Harcourt, R.G. Super machine learning: Improving accuracy and reducing variance of behaviour classification from accelerometry. Anim. Biotelemetry 2017, 5, 8.

- Sakai, K.; Oishi, K.; Miwa, M.; Kumagai, H.; Hirooka, H. Behavior classification of goats using 9-axis multi sensors: The effect of imbalanced datasets on classification performance. Comput. Electron. Agric. 2019, 166, 105027.

- Wilson, R.P.; White, C.R.; Quintana, F.; Halsey, L.G.; Liebsch, N.; Martin, G.R.; Butler, P.J. Moving towards acceleration for estimates of activity-specific metabolic rate in free-living animals: The case of the cormorant. J. Anim. Ecol. 2006, 75, 1081–1090.

- R Core Team. R: A Language and Environment for Statistical Computing; Version 4.0.2; R Foundation for Statistical Computing: Vienna, Austria, 2021.

- Chung, W.Y.; Purwar, A.; Sharma, A. Frequency domain approach for activity classification using accelerometer. In Proceedings of the 30th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Vancouver, BC, Canada, 20–25 August 2008; pp. 1120–1123.

- Martiskainen, P.; Järvinen, M.; Skön, J.P.; Tiirikainen, J.; Kolehmainen, M.; Mononen, J. Cow behaviour pattern recognition using a three-dimensional accelerometer and support vector machines. Appl. Anim. Behav. Sci. 2009, 119, 32–38.

- Glass, T.W.; Breed, G.A.; Robards, M.D.; Williams, C.T.; Kielland, K. Accounting for unknown behaviors of free-living animals in accelerometer-based classification models: Demonstration on a wide-ranging mesopredator. Ecol. Inform. 2020, 60, 101152.

- Tatler, J.; Cassey, P.; Prowse, T.A.A. High accuracy at low frequency: Detailed behavioural classification from accelerometer data. J. Exp. Biol. 2018, 29, jeb184085.

- Breiman, L.; Friedman, J.; Stone, C.J.; Olshen, R.A. Classification and Regression Trees; CRC Press: Wadsworth, OH, USA, 1984.

- Wen, Q.; Sun, L.; Yang, F.; Song, X.; Gao, J.; Wang, X.; Xu, H. Time series data augmentation for deep learning: A Survey. In Proceedings of the 30th International Joint Conference on Artificial Intelligence (IJCAI 2021), Montreal, QC, Canada, 19–26 August 2021.

- Lim, S.K.; Loo, Y.; Tran, N.T.; Cheung, N.M.; Roig, G.; Elovici, Y. DOPING: Generative data augmentation for unsupervised anomaly detection with GAN. In Proceedings of the IEEE International Conference on Data Mining (ICDM 2018), Singapore, 17–20 November 2018; Institute of Electrical and Electronics Engineers Inc.: New York, NY, USA, 2018; pp. 1122–1127.

- Rashid, K.M.; Louis, J. Times-series data augmentation and deep learning for construction equipment activity recognition. Adv. Eng. Informatics 2019, 42, 100944.

- Garcia-Ceja, E.; Riegler, M.; Kvernberg, A.K.; Torresen, J. User-adaptive models for activity and emotion recognition using deep transfer learning and data augmentation. User Model. User-Adapt. Interact. 2020, 30, 365–393.

- Matthews, B.W. Comparison of the predicted and observed secondary structure of T4 phage lysozyme. BBA Protein Struct. 1975, 405, 442–451.

- Viera, A.J.; Garrett, J.M. Understanding Interobserver Agreement: The Kappa Statistic. Fam. Med. 2005, 37, 360–363.

- Landis, J.R.; Koch, G.G. The measurement of observer agreement for categorical data. Biometrics 1977, 33, 159.

- Bueno, L.S.; Bertoncini, A.A.; Koenig, C.C.; Coleman, F.C.; Freitas, M.O.; Leite, J.R.; De Souza, T.F.; Hostim-Silva, M. Evidence for spawning aggregations of the endangered Atlantic goliath grouper Epinephelus itajara in southern Brazil. J. Fish Biol. 2016, 89, 876–889.

- Koenig, C.C.; Bueno, L.S.; Coleman, F.C.; Cusick, J.A.; Ellis, R.D.; Kingon, K.; Locascio, J.V.; Malinowski, C.; Murie, D.J.; Stallings, C.D. Diel, lunar, and seasonal spawning patterns of the Atlantic goliath grouper, Epinephelus itajara, off Florida, United States. Bull. Mar. Sci. 2017, 93, 391–406.

- Rowell, T.J.; Schärer, M.T.; Appeldoorn, R.S.; Nemeth, M.I.; Mann, D.A.; Rivera, J.A. Sound production as an indicator of red hind density at a spawning aggregation. Mar. Ecol. Prog. Ser. 2012, 462, 241–250.

- Getz, E.T.; Kline, R.J. Utilizing accelerometer telemetry tags to compare red snapper (Lutjanus campechanus [Poey, 1860]) behavior on artificial and natural reefs. J. Exp. Mar. Bio. Ecol. 2019, 519, 151202.

- Barnett, A.; Payne, N.L.; Semmens, J.M.; Fitzpatrick, R. Ecotourism increases the field metabolic rate of whitetip reef sharks. Biol. Conserv. 2016, 199, 132–136.

- Watanabe, Y.Y.; Wei, Q.; Du, H.; Li, L.; Miyazaki, N. Swimming behavior of Chinese sturgeon in natural habitat as compared to that in a deep reservoir: Preliminary evidence for anthropogenic impacts. Environ. Biol. Fishes 2012, 96, 123–130.

- Koenig, C.C.; Coleman, F.C.; Malinowski, C.R. Atlantic Goliath Grouper of Florida: To fish or not to fish. Fisheries 2020, 45, 20–32.

- Whitney, N.M.; White, C.F.; Gleiss, A.C.; Schwieterman, G.D.; Anderson, P.; Hueter, R.E.; Skomal, G.B. A novel method for determining post-release mortality, behavior, and recovery period using acceleration data loggers. Fish. Res. 2016, 183, 210–221.

- Lennox, R.J.; Brownscombe, J.W.; Cooke, S.J.; Danylchuk, A.J. Post-release behaviour and survival of recreationally-angled arapaima (Arapaima cf. arapaima) assessed with accelerometer biologgers. Fish. Res. 2018, 207, 197–203.

- Shideler, G.S.; Carter, D.W.; Liese, C.; Serafy, J.E. Lifting the goliath grouper harvest ban: Angler perspectives and willingness to pay. Fish. Res. 2015, 161, 156–165.

- Shideler, G.S.; Pierce, B. Recreational diver willingness to pay for goliath grouper encounters during the months of their spawning aggregation off eastern Florida, USA. Ocean Coast. Manag. 2016, 129, 36–43.

- Simard, P.; Wall, K.R.; Mann, D.A.; Wall, C.C.; Stallings, C.D. Quantification of Boat Visitation Rates at Artificial and Natural Reefs in the Eastern Gulf of Mexico Using Acoustic Recorders. PLoS ONE 2016, 11, e0160695.

- Le Roux, S.P.; Wolhuter, R.; Stevens, N.; Niesler, T. Reduced energy and memory requirements by on-board behavior classification for animal-borne sensor applications. IEEE Sens. J. 2018, 18, 4261–4268.

| Deployment | Tagging Date | Fish Total Length (cm) | Fish Girth (cm) | Video Duration (hh:mm) | Accelerometer Sampling Frequency (Hz)¹ | Approximate Tag Retention Duration (h) |
|---|---|---|---|---|---|---|
| Fish 1 | 30/03/2020 | 135.5 | 99.2 | 10:08 | 50 | 68.00 |
| Fish 2 | 10/06/2020 | 189.0 | 130.5 | 09:58 | 50 | 70.50 |
| Fish 3 | 30/06/2020 | 161.0 | 107.8 | 02:49 | 50 | 70.25 |
| Fish 4 | 10/07/2020 | 139.0 | 94.8 | 10:30 | 200 | 76.00 |
| Fish 5 | 17/07/2020 | 140.0 | 99.2 | 10:30 | 200 | 70.50 |
| Fish 6 | 29/07/2020 | 189.0 | 124.4 | 10:00 | 200 | 56.00 |

| Behavior | Description |
|---|---|
| Backward Swimming | Reversing motion produced by undulating the pectoral fins. |
| Boom | Low-frequency, single-pulse sound. |
| Gulping | Quick mouth movement that does not produce sound. |
| Burst Swimming | Fast forward movement, usually in response to a stimulus. |
| Feeding | Consumption of a prey item. |
| Forward Swimming | Forward movement that causes side-to-side swaying of the tag, reflecting the gait and tail beat of the animal. |
| Gliding | Forward movement that does not cause swaying of the tag. |
| Hovering | Animal appears largely motionless in the water column (rather than resting on the substrate). May include small movements or adjustments. |
| Turning | A change in direction. |
| Listing | Less exaggerated than rolling: the animal rotates on its longitudinal axis to an angle < 45°. |
| Resting | Animal appears to sit motionless on the substrate. |
| Rolling | Animal rotates on its longitudinal axis to an angle greater than 45°. This behavior may involve the individual fully inverting its body so that its dorsal surface contacts the substrate. |
| Shaking | Vigorous side-to-side movement. Often accompanies a boom or occurs during interactions with conspecifics. |

| Behavior | Fish 1 | Fish 2 | Fish 3 | Fish 4 | Fish 5 | Fish 6 | Total |
|---|---|---|---|---|---|---|---|
| Backward Swimming | 1344 | 312 | 25 | 393 | - | 57 | 2131 |
| Boom | 26 | 136 | 11 | 10 | 101 | 31 | 315 |
| Gulping | 45 | 26 | 16 | 136 | 33 | 3 | 259 |
| Burst Swimming * | 107 | 3 | - | - | 227 | - | 337 |
| Feeding * | - | - | - | 58 | - | - | 58 |
| Forward Swimming | 5501 | 5631 | 1577 | 6716 | 26,277 | 2313 | 48,015 |
| Gliding * | 339 | 6 | - | - | - | - | 345 |
| Hovering | 20,325 | 6750 | 7722 | 29,869 | 7846 | 32,663 | 105,175 |
| Turning | 176 | 285 | 183 | 22 | 2026 | - | 2692 |
| Listing | 58 | 72 | 6 | 53 | 157 | 37 | 383 |
| Resting | 6368 | 21,648 | 365 | 5 | 473 | 82 | 28,941 |
| Rolling * | 3 | 8 | - | 9 | 39 | 11 | 70 |
| Shaking | 190 | 589 | 121 | 542 | 155 | 415 | 2012 |
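The count table shows a strongly imbalanced dataset. The four asterisked behaviors (Burst Swimming, Feeding, Gliding, Rolling) are exactly those missing from the nine behaviors in the results tables, so the sketch below assumes they were left out of the models. It then summarizes the remaining totals as shares and inverse-frequency class weights. Inverse-frequency weighting is shown only as one common way to quantify imbalance. It is not necessarily what the authors did, and the names `TOTALS` and `class_summary` are hypothetical.

```python
# Per-behavior totals from the count table above (same units as the table).
# ASSUMPTION: the asterisked behaviors are excluded from the nine-class models.
TOTALS = {
    "Resting": 28941, "Hovering": 105175, "Forward Swimming": 48015,
    "Backward Swimming": 2131, "Boom": 315, "Shaking": 2012,
    "Listing": 383, "Turning": 2692, "Gulping": 259,
}


def class_summary(totals):
    """Return total count, per-class shares, inverse-frequency weights and imbalance ratio."""
    n = sum(totals.values())
    k = len(totals)
    shares = {b: c / n for b, c in totals.items()}
    # n / (k * count) equals 1.0 for every class only when the classes are balanced.
    weights = {b: n / (k * c) for b, c in totals.items()}
    ratio = max(totals.values()) / min(totals.values())
    return n, shares, weights, ratio


n, shares, weights, ratio = class_summary(TOTALS)
for behavior in sorted(shares, key=shares.get, reverse=True):
    print(f"{behavior:18s} share={shares[behavior]:.4f} weight={weights[behavior]:.2f}")
print(f"largest/smallest class ratio: {ratio:.1f}")
```

With these totals, Hovering outnumbers Gulping by roughly 406 to 1. Overall accuracy alone would mask a gap of that size, so per-class measures are more informative here.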

Kappa

| Behavior | SVM | RF | CNN |
|---|---|---|---|
| Resting | 0.8555 | 0.8414 | 0.8450 |
| Hovering | 0.8030 | 0.7927 | 0.7938 |
| Forward Swimming | 0.7889 | 0.8032 | 0.8121 |
| Backward Swimming | 0.8022 | 0.7971 | 0.8587 |
| Boom | 0.9114 | 0.8798 | 0.8014 |
| Shaking | 0.8566 | 0.8645 | 0.7508 |
| Listing | 0.7580 | 0.7589 | 0.8693 |
| Turning | 0.8450 | 0.8317 | 0.4480 |
| Gulping | 0.4512 | 0.4511 | 0.8293 |
| Overall Performance | 0.2097 | 0.5996 | 0.8331 |

Sensitivity

| Behavior | SVM | RF | CNN |
|---|---|---|---|
| Resting | 0.6733 | 0.8640 | 0.9262 |
| Hovering | 0.8673 | 0.9078 | 0.9443 |
| Forward Swimming | 0.8251 | 0.7631 | 0.8007 |
| Backward Swimming | 0.6905 | 0.9785 | 0.9945 |
| Boom | 0.3282 | 0.8733 | 1.0000 |
| Shaking | 0.6355 | 0.8472 | 1.0000 |
| Listing | 0.7494 | 0.9822 | 0.9922 |
| Turning | 0.6032 | 0.9668 | 1.0000 |
| Gulping | 0.8961 | 0.9682 | 1.0000 |
| Overall Performance | 0.6965 | 0.9057 | 0.9620 |

Specificity

| Behavior | SVM | RF | CNN |
|---|---|---|---|
| Resting | 0.9929 | 0.9947 | 0.9985 |
| Hovering | 0.9884 | 0.9873 | 0.9895 |
| Forward Swimming | 0.9633 | 0.9772 | 0.9941 |
| Backward Swimming | 0.9619 | 0.9958 | 0.9936 |
| Boom | 0.9961 | 0.9917 | 0.9993 |
| Shaking | 0.9579 | 0.9857 | 0.9989 |
| Listing | 0.9581 | 0.9946 | 0.9980 |
| Turning | 0.9699 | 0.9967 | 0.9906 |
| Gulping | 0.9194 | 0.9865 | 0.9996 |
| Overall Performance | 0.9675 | 0.9900 | 0.9958 |

F1-Score

| Behavior | SVM | RF | CNN |
|---|---|---|---|
| Resting | 0.7689 | 0.8988 | 0.9531 |
| Hovering | 0.8802 | 0.9002 | 0.9273 |
| Forward Swimming | 0.7666 | 0.7779 | 0.8644 |
| Backward Swimming | 0.6805 | 0.9708 | 0.9550 |
| Boom | 0.4743 | 0.8722 | 0.9967 |
| Shaking | 0.5708 | 0.8280 | 0.9960 |
| Listing | 0.7314 | 0.9717 | 0.9815 |
| Turning | 0.6228 | 0.9656 | 0.9877 |
| Gulping | 0.8504 | 0.9665 | 0.9976 |
| Overall Performance | 0.7051 | 0.9057 | 0.9621 |

Matthews Correlation Coefficient

| Behavior | SVM | RF | CNN |
|---|---|---|---|
| Resting | 0.7600 | 0.8909 | 0.9497 |
| Hovering | 0.8671 | 0.8885 | 0.9191 |
| Forward Swimming | 0.7407 | 0.7532 | 0.8535 |
| Backward Swimming | 0.6441 | 0.9676 | 0.9524 |
| Boom | 0.5127 | 0.8639 | 0.9963 |
| Shaking | 0.5401 | 0.8155 | 0.9954 |
| Listing | 0.6931 | 0.9679 | 0.9803 |
| Turning | 0.5904 | 0.9624 | 0.9831 |
| Gulping | 0.7913 | 0.9537 | 0.9974 |
| Overall Performance | 0.6821 | 0.8959 | 0.9586 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Brewster, L.R.; Ibrahim, A.K.; DeGroot, B.C.; Ostendorf, T.J.; Zhuang, H.; Chérubin, L.M.; Ajemian, M.J. Classifying Goliath Grouper (Epinephelus itajara) Behaviors from a Novel, Multi-Sensor Tag. Sensors 2021, 21, 6392. https://doi.org/10.3390/s21196392
