1. Introduction

1.1. Generative AI Models in Architectural Design

Generative design methodologies have a long history in architectural practice. Since the 1960s, architects have been employing rule-based generative systems such as pattern language [1], shape grammars [2], expert systems [3], and optimization techniques [4] to automate design processes and generate architectural form. Although these methods were initially met with skepticism [5,6], the generative design paradigm re-emerged in the 1990s, when architects used techniques and models like evolutionary algorithms [7,8,9,10], cellular automata [11,12], and multi-agent systems [13,14] to generate and optimize architectural form, shape, and floor plans in relation to functional, structural, or environmental parameters. However, despite this promise, an early study by Grobman et al. [6] showed that, up to 2009, generative design methods were rare in mainstream architectural practice. Recently, academic interest in generative design has resurfaced. Castro Pena et al. (2021) report an 85% increase in publications related to generative methods since 2015 [15], reflecting a broader shift toward data-driven and approximation-based approaches enabled by recent advances in deep learning (DL) and Generative Artificial Intelligence (GenAI). Recent systematic reviews on GenAI in architectural design indicate a significant surge, particularly since 2020, in studies about the application of data-driven deep learning models. These models have emerged as central to a rapidly evolving research agenda for architectural design, which is primarily oriented toward the development of novel computational methods for early-stage concept imagery, three-dimensional massing, floor-plan generation, and urban-scale design.

1.2. Deep Learning-Based Generative AI

The rise of deep learning has brought renewed attention to GenAI systems, which diverge from earlier rule-based models by learning patterns from large datasets [16]. Deep learning (DL) is a subsymbolic, associative machine learning method that encompasses artificial neural networks trained on large datasets, most commonly consisting of images and texts. Deep learning generative systems employ Generative Adversarial Networks (GANs), Variational Autoencoders (VAEs), Diffusion models, and Transformers. GANs comprise two neural networks: a generator, which creates synthetic (fake) data, and a discriminator, which learns to distinguish fake from real data, thus pushing the generator to produce increasingly realistic outputs. Conditional GANs are variants of GANs in which both the generator and discriminator are conditioned on additional information (such as class labels or other attributes), enabling the model to generate data that meet specific criteria or contexts. VAEs are generative models that consist of an encoder–decoder pair. They generate new data by sampling from a learned latent space of input data representations, enforcing a probabilistic structure that allows smooth interpolation within the latent space. Diffusion models are a class of generative models that gradually add noise to training data and learn to reverse this process to generate new data. They produce high-quality synthetic samples by iteratively denoising a sample drawn from an initial random noise distribution. Transformers are neural network architectures based on self-attention mechanisms that can process sequential data in parallel and thus achieve state-of-the-art performance in tasks such as Natural Language Processing [17].
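To make the noising half of a diffusion model concrete, the following is a minimal sketch of the forward process in its commonly used closed form, where a noisy sample is obtained as sqrt(ᾱ_t)·x_0 + sqrt(1 − ᾱ_t)·ε with Gaussian ε. The schedule values, function names, and the toy four-value "sample" are illustrative assumptions and are not taken from any of the reviewed studies; the learned reverse (denoising) network is deliberately omitted.

```python
import math
import random


def linear_beta_schedule(steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances rising linearly (illustrative values, an assumption)."""
    if steps == 1:
        return [beta_start]
    return [beta_start + (beta_end - beta_start) * t / (steps - 1) for t in range(steps)]


def cumulative_alphas(betas):
    """alpha_bar[t] is the running product of (1 - beta): the share of signal left at step t."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(x0, alpha_bar, rng):
    """Draw a noisy sample directly from the clean sample x0 (closed-form forward step)."""
    signal, noise = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    return [signal * value + noise * rng.gauss(0.0, 1.0) for value in x0]


betas = linear_beta_schedule(1000)
alpha_bars = cumulative_alphas(betas)

rng = random.Random(0)                 # fixed seed so the sketch is repeatable
x0 = [1.0, -1.0, 0.5, 0.0]             # toy stand-in for pixel or voxel values
slightly_noisy = add_noise(x0, alpha_bars[0], rng)      # early step: mostly signal
almost_pure_noise = add_noise(x0, alpha_bars[-1], rng)  # last step: mostly noise
```

A generative model of this family is trained to undo these steps, so that at inference time it can start from pure noise and iteratively recover a plausible sample.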

While the use of DL models as generative design techniques signals a growing enthusiasm for AI-driven design experimentation, their practical utility for architectural design is an open question. This study addresses this issue by systematically reviewing recent literature and documented case studies, following the PRISMA 2020 guidelines, to evaluate the relevance of data-driven generative techniques for architectural design. Specifically, it examines the degree to which DL-based GenAI methods can be meaningfully integrated into professional design workflows for everyday architectural practice. To this end, the study introduces a system of five assessment indicators: (1) the relevance of the type of generated output for architectural design, (2) the extent of seamless integration of design tools along the pipeline, (3) the extent to which the method used maps onto standard design workflow from schematic to construction documents stages, (4) the requirement for custom-made tools and scripting skills, and (5) the need for technical support/expertise. Such a structured system is necessary because existing reviews often remain descriptive, whereas our contribution provides a systematic, replicable, and comparable assessment of how these methods map onto professional architectural design workflows.

1.3. Review Objectives and Questions

The objective of this systematic review is to evaluate the extent to which DL-based GenAI methods can be effectively integrated into architectural design workflows. While recent studies have highlighted the rapid growth of GenAI applications in architecture, their practice-readiness and alignment with standard CAD/BIM-centered processes remain unclear. To address this gap, the study is guided by the following research questions:

What forms of output (e.g., raster images, meshes/voxels/graphs, CAD/BIM-native models) are produced by DL-based GenAI methods, and how suitable are they for architectural practice?

To what extent are GenAI toolchains integrated or fragmented, and how does this affect their applicability in design workflows?

How do GenAI methods align with conventional architectural workflow stages (schematic design, design development, construction documentation)?

What level of customization or coding effort is required to employ these methods, and are they accessible through off-the-shelf interfaces?

What degree of specialized expertise beyond typical architectural training is necessary to operate these tools effectively?

By systematically addressing these questions through the five-indicator framework (Output Representation Type, Pipeline Integration, Workflow Standardization, Tool Readiness, and Technical Skillset), the study seeks to provide a structured, replicable, and transparent assessment of the capacity of DL-based GenAI to contribute to professional design practice.

1.4. Previous Reviews

The number of reviews of GenAI methods in architectural design has increased significantly since 2020. Some reviews see data-driven GenAI models as part of a wider field of generative design research that encompasses additional generative techniques. These include search-based and rule-based systems, most often genetic algorithms, shape grammars, and cellular automata. Other, more recent reviews focus exclusively on the use and impact of data-driven DL-based GenAI models on architectural practice. These reviews discuss the problems architects face with GenAI models in the design process, sometimes proposing strategies to overcome them.

Castro Pena et al. (2021) [15] reviewed 75 scientific publications from 1995 onward to rank the frequency of occurrence of various types of generative design methods in the literature. The authors observe that DL-based AI generators for conceptual stage shape or floor plan optimization are a rising trend, although outweighed, in terms of study count, by interactive evolutionary computation and cellular automata. The authors implicitly suggest that DL methods are mostly research prototypes, with limited integration in conventional CAD/BIM architectural design workflows.

Bölek et al. (2023) [18] sorted generative methods into six classes in order to examine their application domains in architectural design. They argue that GenAI holds transformative potential for architectural practice by enabling new modes of design thinking. They observe that, across 242 reviewed papers, data-driven DL models are an emerging and increasingly popular trend, but not as prevalent as other generative methods (like evolutionary computation). Citing uses of GANs for floor-plan generation or façade design, they point out that workflow integration of DL-based GenAI methods generally remains at prototype level. In their view, realizing the potential of GenAI requires advances in accessibility, technical innovation, and interdisciplinary collaboration.

Vissers-Similon et al. (2024) [16] found that the use of DL generators in architectural design has increased sharply since 2020. They point to Transformer models, along with graph machine learning and evolutionary computation, as the methods with the greatest potential to impact the early stages of architectural design. Although Transformer models are easy to slot into current ideation workflows for fast sketch and image generation, there is a growing research trend in employing GANs, VAEs, and Diffusion models for 3D output and direct integration into CAD/BIM environments [19,20,21,22,23,24].

Zhuang et al. (2025) [25] highlighted that generative design techniques, which were traditionally based on rule-driven algorithms, have lately shifted towards machine learning models. These include both classic machine learning systems and data-driven DL models, like GANs and VAEs. Outcomes of this latter paradigm may range from raster images, topology graphs, and vector shapes to mesh models and, more rarely, NURBS surfaces and solids, depending on the toolchain of the design workflow. This approach, as the authors argue, can eventually transform the practice of architecture by enabling efficient conceptual design exploration, complex performance evaluation, and simulation-informed creativity. Tighter workflow integration can be achieved by tactics such as pairing smaller conditional GAN/VAE models with scripted constraints. However, the authors implicitly suggest that realizing the full potential of DL-based GenAI requires overcoming several hurdles: heterogeneous AEC data formats, insufficient data availability, and lack of controllability and interpretability.

Li et al. (2025) [26] observed that architects are hesitant to use AI models in architectural design and deploy them only occasionally, due to algorithmic complexity and the demand for specialized expertise. Despite architects’ awareness of generative AI’s potential, integration into everyday design practice remains narrow and uneven, and when GenAI is employed, it is mostly for image generation. The authors argue that research efforts should focus on mapping different generative tools across the entire workflow, as well as building unified, modular platforms where tools can be chained or swapped without rebuilding the pipeline. In addition, they propose embedding domain-specific requirements and regulation-aware, real-time, user-friendly features so that advanced GenAI can become an everyday, end-to-end design partner.

Lystbæk (2025) [17] suggests that, despite technical limitations, GenAI models can substantively enhance creativity and productivity across design stages, while respecting the discipline’s standards and constraints. He observes that many architectural firms are experimenting with general-purpose AI platforms rather than industry-specific or custom-trained systems, which could allow for more reliable and domain-specific deployment in architectural design workflows. Professional architects recognize the transformative potential of generative models. Yet concerns over AI “hallucinations”, unresolved issues of intellectual property, and even the environmental footprint of large models have tempered their enthusiasm for broad deployment. The author suggests that AI models in architectural practice could first be used for secondary and mundane tasks, such as automated documentation, data analysis, or early-stage massing studies, which hardly impact critical design decisions. Large pre-trained models could then be fine-tuned on domain-specific data, including validation metrics and output controls. This could improve contextual awareness and ease incorporation into existing CAD/BIM workflows.

These reviews show that DL-based GenAI methods are mostly used at the early stages of architectural design, for brainstorming ideas and exploring formal configurations in the form of images, 3D massing models, or floor plan layouts. Yet the integration of GenAI methods in later phases of design development is still limited, due to technical and practical barriers: a lack of specialized expertise and computational resources, a scarcity of high-quality architectural datasets, and concerns about the reliability and low interpretability of generated outputs. When DL-based GenAI models are used beyond the conceptual design stage, they remain stand-alone prototypes, with limited CAD/BIM workflow integration. Moreover, they are largely contingent upon bespoke scripting, which demands programming expertise, custom bridges between heterogeneous platforms and file formats, and ad hoc pipelines. This results in fragmented toolchains that depend on manual hand-offs between design environments and design phases. However, although the above reviews suggest a chasm between research prototyping and design workflow integration, they only describe the problem. They do not provide a systematic, transparent, and replicable assessment method, i.e., a model that could evaluate, in quantitative terms, the workflow integration maturity and practice readiness of these methods.

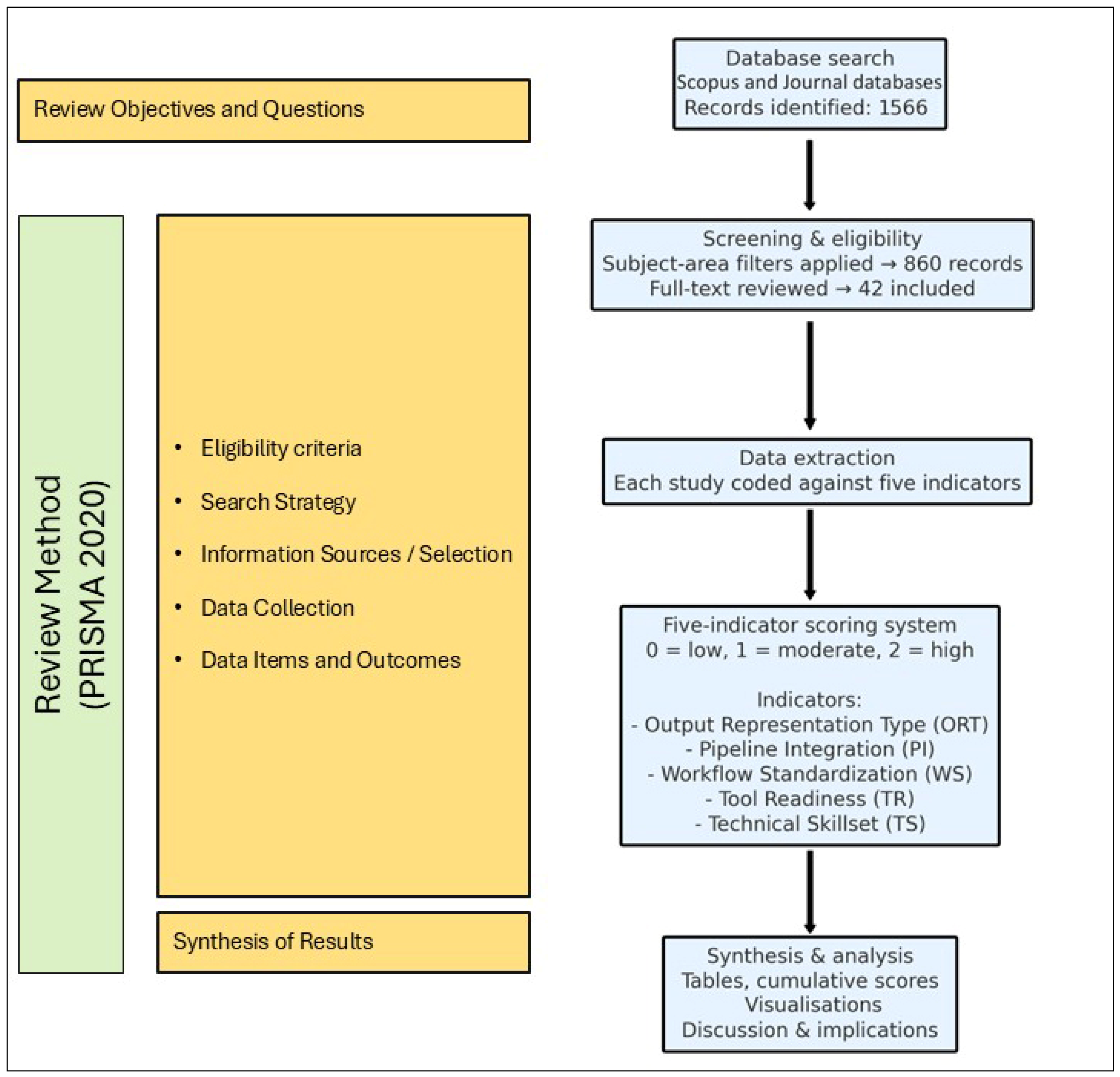

2. Methods

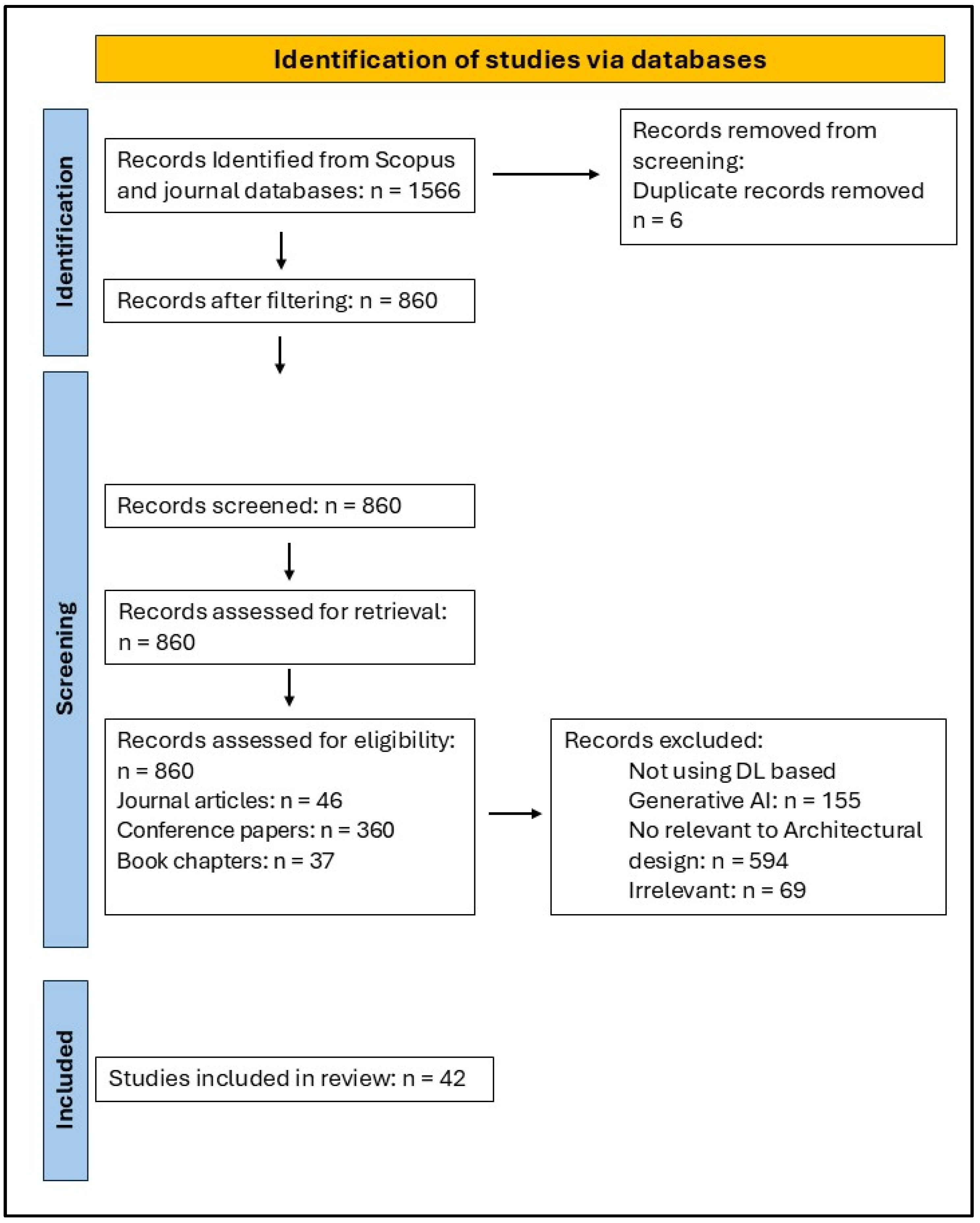

This study was conducted as a systematic review and reported in accordance with the PRISMA 2020 guidelines. PRISMA principles [27] were used throughout the study to ensure the validity and transparency of the methodology. The objective was to identify, select, and synthesize peer-reviewed studies on the use of deep learning-based generative AI (GenAI) methods in architectural design workflows. A diagram of the overall review method and a PRISMA flowchart are depicted in Figure 1 and Figure 2, respectively.

Eligibility Criteria. The studies included fulfilled the following criteria:

- (1) They reported on the application of DL-based GenAI methods to architectural design, regardless of the degree of implementation. Studies in which DL models were combined with other generative systems (such as agent-based models, genetic algorithms, or shape grammars) that cooperatively produced the design outcome were also included.

- (2) They focused on the generation of architectural form (including three-dimensional configurations, spatial layouts, massing, and façades). Although architectural form is not easy to define, in this study it denotes conceptual representations of three-dimensional spatial configurations and shapes, volumes, spatial layouts, and massing of buildings or structures. Techniques that generated building façades or floor plans were also selected as long as they implied or led to some three-dimensional configuration.

- (3) When optimization techniques were employed (e.g., structural, environmental, or comfort-related), they significantly affected the generation of form.

Search Strategy. Only peer-reviewed journal articles, edited book chapters, and conference proceedings published between 2015 and 2025 were considered, as these reflect the state-of-the-art and ensure methodological rigor. Non-academic sources (e.g., white papers, proprietary reports) were excluded. The search was limited to academic journals, edited volumes and well-known conference proceedings because (1) they represent the state-of-the-art in deep learning generative AI in architectural design and routinely report on prototypes with potential professional uptake; (2) they allow for quality control—leading journals and conferences use rigorous peer review, so methods are well-documented; and (3) they involve a comparable scope because they target design-stage generative workflows rather than purely construction-engineering papers. Industry case studies, which might be documented elsewhere, may capture more highly scored methods and projects, but were outside our present scope.

Protocol and Registration. No review protocol was registered for this study.

Information Sources and Selection. A comprehensive search was carried out in Scopus and relevant architectural and computer science journal databases within the 2015–2025 timeframe. The search was last updated in early 2025. This review window was chosen because of the radical increase in publications on generative design since 2015, as well as the mainstream employment of DL models in architectural generative design practice, especially after 2020. A Boolean search was conducted with the following keywords: “deep learning” AND “architectural design” AND “artificial intelligence”. The full search string and database filters are available upon request. Subject-area filtering was applied to exclude irrelevant domains (e.g., unrelated engineering or non-design computer science studies). The initial search yielded 1566 records. After applying subject-area filters, 860 records remained, comprising 463 journal articles, 360 conference papers, and 37 book chapters. These were screened for relevance by reviewing titles and abstracts. A total of 42 studies met all eligibility criteria and were included in the review.
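The record counts reported above can be cross-checked with a few lines of arithmetic. The sketch below only re-uses the figures stated in the text; the function and key names are hypothetical, and the derived quantities (records removed, records excluded, inclusion rate) are simple differences and ratios rather than values reported by the authors.

```python
# Counts taken from the selection paragraph above.
records_identified = 1566
records_after_filters = 860
records_by_type = {"journal articles": 463, "conference papers": 360, "book chapters": 37}
studies_included = 42


def flow_summary(identified, filtered, by_type, included):
    """Check that the reported counts are mutually consistent and derive the gaps between stages."""
    if sum(by_type.values()) != filtered:
        raise ValueError("publication-type counts do not add up to the filtered total")
    if not identified >= filtered >= included:
        raise ValueError("each stage should retain no more records than the previous one")
    return {
        "removed_by_subject_filter": identified - filtered,
        "excluded_during_screening": filtered - included,
        "inclusion_rate_percent": round(100 * included / filtered, 1),
    }


summary = flow_summary(records_identified, records_after_filters, records_by_type, studies_included)
```

Under these figures, the three publication types sum exactly to the 860 filtered records, and roughly one in twenty screened records was retained.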

Data Collection Process. The data collection method combined qualitative indicators with quantifiable proxies to assess workflow integration for each study. For each study included, data were extracted according to five (5) workflow-integration indicators which were then evaluated on a three-tier scale. These indicators assess the output format representation; the level of integration of the generative workflow pipeline; the alignment of techniques used with standard architectural design practice workflows; the need for scripting and custom-made tools; and the requirement for specialized knowledge and technical support from non-architects. These indicators are described more analytically below.

Output Representation Type (ORT). This indicator determines the final output format of the workflow. Workflow output may vary, from raster imagery (Tier-0) to voxels, topology graphs, or mesh models (Tier-1) and vector-based or CAD/BIM-native geometries (Tier-2). Output is considered to be the final outcome of the process, even when pipelines are customized for indirect conversion of images or meshes into CAD/BIM-ready formats.
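As a rough illustration of how the ORT tiers could be encoded, the sketch below maps a study's final output format to a tier. The format labels and the function name are hypothetical choices made for this example; only the grouping of formats into Tier-0, Tier-1, and Tier-2 follows the description above.

```python
# Hypothetical labels for final output formats, grouped as in the ORT description.
ORT_TIERS = {
    "raster image": 0,
    "voxel grid": 1,
    "topology graph": 1,
    "mesh": 1,
    "vector drawing": 2,
    "cad model": 2,
    "bim model": 2,
}


def output_representation_tier(final_output):
    """Return the ORT tier for the format a workflow ultimately delivers."""
    key = final_output.strip().lower()
    if key not in ORT_TIERS:
        raise ValueError(f"unknown output format: {final_output!r}")
    return ORT_TIERS[key]


# The score depends on the final outcome only: a pipeline that starts from images
# but ends in a BIM-native model is scored on the BIM model.
image_only = output_representation_tier("Raster image")
massing_mesh = output_representation_tier("mesh")
converted_to_bim = output_representation_tier("BIM model")
```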

Pipeline Integration (PI). The PI indicator assesses the extent to which the tools used along the workflow pipeline are integrated. When the workflow combines four or more loosely coupled tools, usually with manual hand-offs, studies score Tier-0. When two to three tools are linked via scripts or plug-ins, studies score Tier-1. When a single platform or fully embedded plug-in is used, with no exports or imports, studies score Tier-2.
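The PI rule can be sketched as a small decision function. The function name and its two inputs are hypothetical, and the handling of boundary cases (a workflow with exactly four tools, or two to three tools connected only by manual hand-offs) is an assumption of this sketch, which sends both to Tier-0.

```python
def pipeline_integration_tier(tool_count, linked_by_scripts):
    """Score a workflow on the PI indicator from its tool count and coupling.

    tool_count: number of distinct tools along the pipeline.
    linked_by_scripts: True if hand-offs are automated via scripts or plug-ins.
    """
    if tool_count < 1:
        raise ValueError("a workflow needs at least one tool")
    if tool_count == 1:
        return 2  # single platform or fully embedded plug-in, no exports or imports
    if tool_count <= 3 and linked_by_scripts:
        return 1  # two to three tools linked via scripts or plug-ins
    return 0      # loosely coupled chain (assumption: also covers manual 2-3 tool chains)


single_platform = pipeline_integration_tier(1, linked_by_scripts=False)
scripted_bridge = pipeline_integration_tier(3, linked_by_scripts=True)
manual_chain = pipeline_integration_tier(5, linked_by_scripts=False)
```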

Workflow Standardization (WS). Standard design workflows usually follow the Schematic Design/Design Development/Construction Documents (SD/DD/CDs) pipeline, which indicates the typical phases of a design and construction project commonly used in architecture and engineering. This is a structured approach that takes a project from initial concept to detailed construction plans, ensuring a systematic and organized process. In the context of our research, for papers to align with this standard scheme, they must output CAD/BIM native models coupled with site and code constraints, structural and environmental performance, comfort metrics documentation, etc. These should be Tier-2 case studies, which almost always output parametric geometry, NURBS editable models, or IFC. A mesh-based, raster-to-vector or raster-to-3D-massing pipeline should be Tier-1. Studies in which only stylistic, conceptual, and mood board generation are present stay at Tier-0.

Tool Readiness (TR). This indicator captures whether the design workflow relies on custom-made or off-the-shelf tools, and how much programming it requires. Tier-0 studies depend on custom-made tools and heavy bespoke programming, which are essential for dataset training and further design development. Tier-1 studies introduce occasional short scripts, visual code, or macros, while Tier-2 studies do not require heavy programming or coding, often because they use off-the-shelf user interfaces.

Technical Skillset (TS). This indicator assesses the requirement for technical expertise beyond typical architectural skillsets, including technical support from programmers and computer scientists. Standard architectural skillsets do not go beyond mainstream digital drafting or visual coding software and parametric modeling plugins. Tier-0 studies should be those that require the competent skills of data scientists and engineers, such as heavy deep ML/RL expertise, as well as Python/C#/API scripting and GPU management. Moderate use of algorithmic node-graph editors (like Grasshopper and Dynamo) and off-the-shelf API bridges, light scripting, and plug-in configuration skills, should indicate Tier-1 studies. Studies that operate with familiar CAD/BIM or prompt-based web user interfaces, not requiring scripting or any kind of model training, should be Tier-2.

Data Items and Outcomes. These five indicators were scored on a three-tier scale (0 = low integration, 1 = moderate integration, 2 = high integration). This rubric was designed to assess the degree to which GenAI methods map onto professional design workflows, from early schematic design through to construction documentation.

Table 1 presents the three-tier scale for each indicator.
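One way to hold the extracted data is a small record per study with one tier per indicator, validated against the 0 to 2 scale. This is a sketch under stated assumptions: the class, field, and function names are hypothetical, and the three example entries are invented placeholders rather than scores from Table 2.

```python
from collections import Counter
from dataclasses import dataclass

INDICATORS = ("ort", "pi", "ws", "tr", "ts")


@dataclass(frozen=True)
class StudyScore:
    """Tier scores (0, 1, or 2) for one study on the five indicators."""
    study: str
    ort: int
    pi: int
    ws: int
    tr: int
    ts: int

    def __post_init__(self):
        # Reject anything outside the three-tier scale.
        for name in INDICATORS:
            tier = getattr(self, name)
            if tier not in (0, 1, 2):
                raise ValueError(f"{name.upper()} must be 0, 1, or 2, got {tier!r}")


def tier_counts(scores, indicator):
    """Count how many studies fall into each tier for one indicator."""
    if indicator not in INDICATORS:
        raise ValueError(f"unknown indicator: {indicator!r}")
    counts = Counter(getattr(score, indicator) for score in scores)
    return {tier: counts.get(tier, 0) for tier in (0, 1, 2)}


# Invented placeholder entries, for illustration only.
scores = [
    StudyScore("Hypothetical study A", ort=0, pi=2, ws=0, tr=2, ts=2),
    StudyScore("Hypothetical study B", ort=1, pi=1, ws=1, tr=0, ts=0),
    StudyScore("Hypothetical study C", ort=2, pi=1, ws=2, tr=0, ts=0),
]
ort_counts = tier_counts(scores, "ort")
pi_counts = tier_counts(scores, "pi")
```

Keeping the indicators as separate fields, rather than collapsing them into a single total, mirrors the per-indicator reporting used in this review.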

Risk of Bias and Quality Assessment. In line with PRISMA 2020, we considered potential sources of bias in the included studies. Because architectural design research does not employ standardized trial or experimental protocols, conventional bias assessment tools (e.g., ROBIS) were not applicable. Instead, we evaluated studies using our five-indicator rubric (Output Representation Type, Pipeline Integration, Workflow Standardization, Tool Readiness, and Technical Skillset), which indirectly reflects the maturity and reproducibility of each workflow. We recognize that this approach does not assess all possible biases (such as selective reporting or dataset availability), but it provides a structured, transparent framework tailored to the architectural design domain.

3. Results

A total of 42 peer-reviewed studies on DL-based GenAI methods in architectural design were evaluated in terms of five indicators: ORT, PI, WS, TR, and TS. Three discrete tiers were used for each indicator. The titles, publication details, and reference list numbers of the 42 selected studies are listed chronologically in Table 2, along with their scores on the three-tier scale for each indicator.

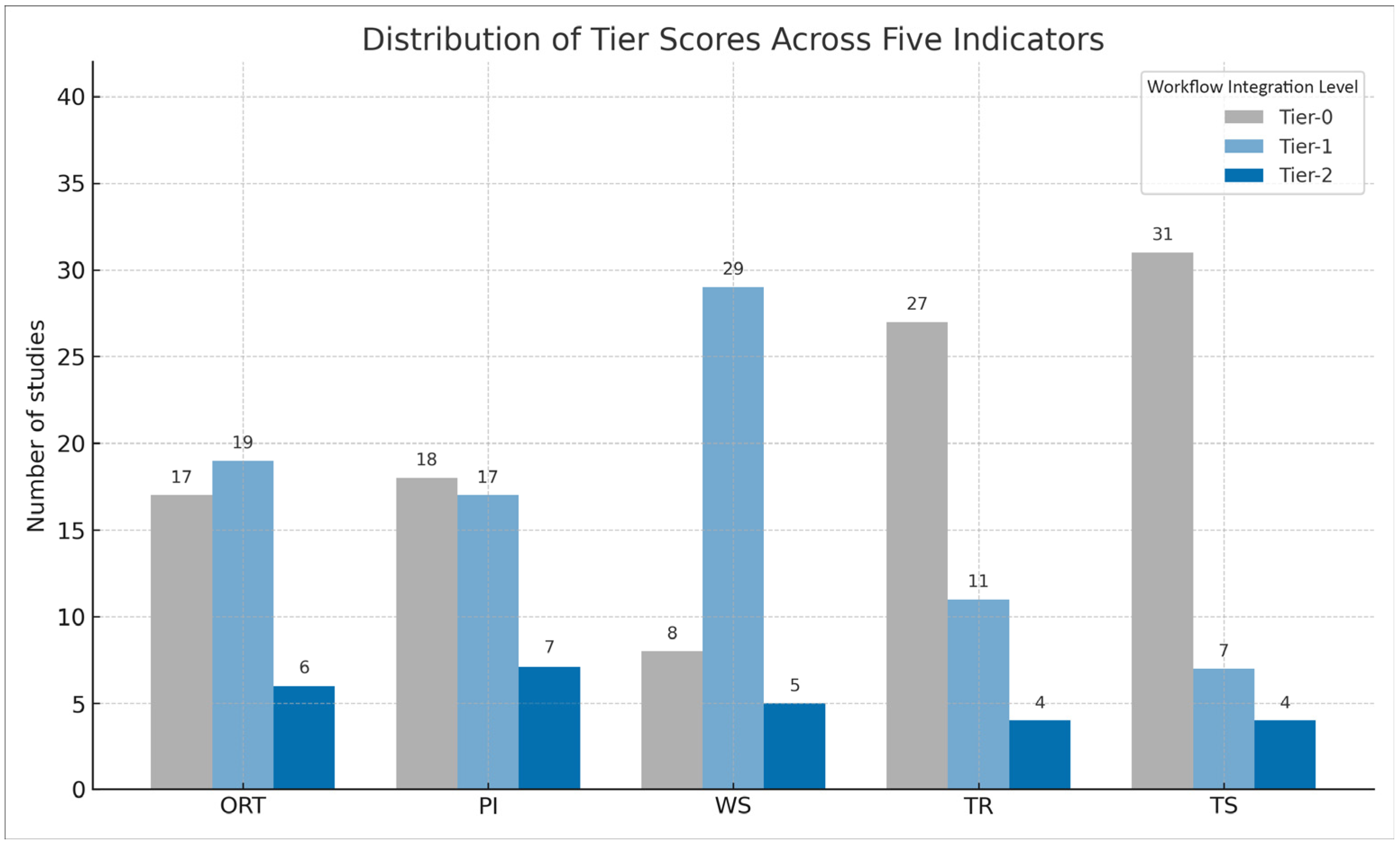

Output Representation Type (ORT): Table S1 (see Supplementary Materials) shows the three-tier scale score and rationale for each study for the Output Representation Type indicator.

Table 3 shows the number and % distribution of studies for each tier score for the ORT. Seventeen (17) papers scored Tier-0 (40%). Their respective generative methods produced raster outputs, through text-to-image diffusion models and GANs or conceptual diagram generators. Typical examples include [34,61], where text-to-image diffusion or GAN models are leveraged for rapid visual ideation, mood board creation, or conceptual stage exploration and dialog with clients. Although these outputs are useful for practitioners, they cannot be readily scaled, dimensioned, or adapted within CAD/BIM environments, forcing designers to redraw or remodel them before schematic design can commence.

Tier-1 studies account for nineteen (19) papers (45%), slightly more than the raster output methods. Studies such as [20,52] export density voxels, triangular meshes, or layout topology graphs, either by training GANs on three-dimensional datasets (3DGANs) or by converting 2D image heat maps and signed distance fields via modelers like Rhino, SketchUp, or Blender. These processes can be used for diagrammatic form conceptualization, syntactic exploration, early-stage massing, or environmental performance analyses without complete reconstruction. These studies move beyond pure imagery, but they lack editable parametric geometry, layers, or metadata for CAD/BIM-ready modeling. They also demand further manual or scripted conversion for downstream design development.

Tier-2 studies account for only six (6) papers (15%), as exemplified in [24,61]. Their methods manage to export AI-derived data into platforms like Grasshopper and Revit to ultimately produce CAD/BIM-native geometries (such as editable NURBS surfaces, B-Reps, or solids), preserving semantic object attributes and material parameters. However, achieving this level of integration requires custom APIs and Python plug-ins, CAD-to-BIM bridges like Rhino.Inside, or advanced middleware, like Autodesk Forge and Dynamo. Nonetheless, Tier-2 outputs demonstrably support multi-phase workflows, allowing concept, analysis, and documentation to proceed without data loss. For example, workflows generating parametric floor plans in Grasshopper or Revit allow architects to integrate generative results directly into documentation and coordination. The relative scarcity of CAD/BIM-native outputs demonstrates that GenAI tools, although promising for creative exploration, remain largely disconnected from professional production environments.
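The tier shares for the ORT indicator follow directly from the study counts given above (17, 19, and 6 out of 42). The sketch below recomputes them to one decimal place; the function name is hypothetical, and small differences from the whole-number percentages quoted in the text are to be expected from rounding.

```python
def tier_distribution(counts):
    """Convert per-tier study counts into percentage shares (one decimal place)."""
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no studies to distribute")
    return {tier: round(100 * n / total, 1) for tier, n in sorted(counts.items())}


# ORT counts as reported in the text: Tier-0, Tier-1, Tier-2.
ort_counts = {0: 17, 1: 19, 2: 6}
ort_share = tier_distribution(ort_counts)
```

The same function can be applied to the counts of the other four indicators once they are read from their respective tables.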

Pipeline Integration (PI): Table S2 (see Supplementary Materials) shows the three-tier scale score and rationale for each study for the PI indicator.

Table 4 shows the number and % distribution of studies for each tier score for the Pipeline Integration indicator. Tier-0 and Tier-1 studies dominate the corpus. For 43% of the studies (Tier-0), the workflow is fragmented into four or more distinct components, usually with manual hand-offs, such as separate AI training code (e.g., CycleGAN) and image-to-mesh post-processing methods. A typical sequence starts with a Python script and a custom ML model; then Photoshop is used for image masking, a separate module for image vectorization, Illustrator for vector drawing refinement, and Revit for redrawing the imported vector file. This manual conversion of raster output into CAD/BIM-ready drawings means that the workflow is repeatedly interrupted, with potential file-format loss, version mismatch, and human error.

Tier-1, representing 17 studies, reflects workflows with a moderate degree of tool integration. In these cases, two or three applications are linked together with partial automation, allowing for smoother hand-offs than Tier-0 but still requiring movement across distinct platforms. Typical examples include pipelines where a diffusion model produces a height-map that is then translated into a Grasshopper mesh, or where a variational autoencoder is coupled with an artificial neural network to drive EnergyPlus simulations through Rhino/Grasshopper. These systems often rely on API calls, Rhino.Inside connections to Revit, or Grasshopper plug-ins to bridge between environments, creating a quasi-continuous pipeline. One illustrative case is Abdelmoula et al.’s SketchPLAN, which connects Python-based GAN segmentation with Rhino and Revit, enabling raster outputs to be transformed into editable BIM elements. Such strategies act as the “glue” between research code and CAD/BIM software, preserving metadata and leveraging specialized engines not natively available in design platforms. However, even at Tier-1, the workflow remains fragmented: custom scripts and bridging modules are necessary, and the toolchain does not yet collapse into a seamless, single-platform experience accessible to practitioners.

Only seven (7) studies (17% of the corpus) attain Tier-2. These studies employ a single design environment or plug-in, meaning that the designer works entirely inside a single user interface, or through a bespoke pipeline. A single interface may deliver only 2D raster images, as in Zhang et al.’s ComfyUI workflow (ComfyUI is a node-graph graphical user interface), in which case the study still scores Tier-0 on the ORT indicator. A bespoke development, on the other hand, like Okonta et al.’s system that links Natural Language Processing with Revit, demonstrates that deep integration is technically feasible but usually demands custom Python scripts and add-ins, API wrappers, or extended visual code components. Nevertheless, Tier-2 workflows deliver the greatest downstream value: reduced translation effort, consistent parameter sets, and immediate compatibility with practice standards.

Workflow Standardization (WS): Table S3 (see Supplementary Materials) shows the three-tier scale score and rationale for each study for the WS indicator.

Table 5 shows the number and % distribution of studies for each tier score for the Workflow Standardization indicator. Eight (8) papers scored Tier-0 because they follow an experimental track where the generated output is interesting research material but cannot be mapped onto any typical architectural design workflow without wholesale rework. Tier-0 studies showcase novel AI engines—GAN-based images and reinforcement learning sandboxes—yet explicitly state that outputs are “for inspiration only” or require manual redrawing before practical use.

Twenty-nine (29) papers scored Tier-1 because their workflows support single-stage integration, typically concept sketching, façade mood-boarding, or massing studies, while the hand-off to schematic design or the BIM phase remains manual. In other words, Tier-1 studies embed GenAI into a single task. Examples include early massing exploration through Sebestyén et al.’s density voxels, space-planning through Eisenstadt et al.’s graph-based floor-plan topologies and Kakooee & Dillenburger’s reinforcement learning layout, or interior mood-boarding through Lee et al.’s prompt framework.

Only five (5) papers (12%) reach multi-stage integration in the workflow. These Tier-2 studies include, for example: Abdelmoula et al.’s SketchPLAN and Okonta et al.’s bridge between natural language processing and Revit, both of which deposit native BIM objects that remain editable through documentation. Veloso’s agent academy is paired with a Grasshopper pipeline that can carry bubble diagrams into performance analysis without metadata loss. These methods’ outputs feed directly into schematic design and carry editable drawing data (such as NURBS and Grasshopper definitions) forward to design development in standard BIM-friendly pipelines.

Tool Readiness (TR): Table S4 (see Supplementary Materials) shows the three-tier scale score and rationale for each study for the TR indicator.

Table 6 shows the number and % distribution of studies for each tier score for the Tool Readiness indicator. The highest proportion of studies (No = 27, 65%) scored Tier-0. These papers introduce custom machine learning training algorithms and datasets [20], reinforcement learning spatial agents [35,39], and graph-neural networks [52]. Even when the generative engine itself is open-source, authors routinely append preprocessing, post-processing, and evaluation code that lies outside commercial CAD/BIM ecosystems. The result is a patchwork of notebooks, scripts, and API calls, which is powerful for experimentation but unusual in day-to-day practice.

Tier-1 papers, which account for one fourth of the corpus (No = 11, 26%), show a transitional pattern. Researchers use ready-made plug-ins (Karamba for Finite Element Methods, LunchBox-ML for regression, ComfyUI for diffusion images), adding only data flow guiding modules, like Grasshopper canvases or CSV import macros. Although flexible, these methods still assume some fluency in scripting and parametric modeling.

Only four studies achieved Tier-2. These methods [40,41,51] usually employ off-the-shelf, web-based text-to-image services (Midjourney, DALL-E 2) or open-source platforms (Stable Diffusion) that require no coding, only prompt literacy. Although fully no-code generative systems may gradually become feasible, off-the-shelf generative techniques that do not demand bespoke scripting are currently, for the most part, lightweight ideation tools that stop at raster imagery.

Technical Skillset (TS): Table S5 (see Supplementary Materials) shows the three-tier scale score and rationale for each study for the TS indicator.

Table 7 shows the number and % distribution of studies for each tier score for the Technical Skillset indicator. The dominance of Tier-0 studies (No = 31, 74%) reflects a field still driven by research prototypes. Reinforcement learning graph-based space planning, diffusion/3DGAN pipelines, and optimizers coupled with performance modules commonly require custom scripting, data curation, GPU setup, and API bridges that typically exceed the skillsets of architects. In practice, these methods imply interdisciplinary teams and outsourcing to machine learning specialists.

Tier-1 papers make up 17% of the corpus. Workflow complexity is mitigated by parametric design environments and custom modules like Grasshopper with Karamba and machine learning components, or node-graph user interfaces such as ComfyUI. End-users still need to understand data flow, parameters, and plug-in interactions, but do not train models or write substantial code. This level is increasingly attainable for computationally literate practices.

Tier-2 remains rare and bifurcates into two types of studies. One is prompt-only ideation (text-to-image), which is technically accessible but delivers raster outputs with limited downstream design utility. The other involves compiled or tightly embedded add-ins (e.g., Revit-native tools or Rhino.Inside bridges packaged for end-users). These achieve genuine “no-code” operation inside common design software, but they demand significant engineering skills up front—hence their scarcity in the corpus.

4. Discussion

In terms of Output Representation Type (ORT), 85% of the studies in the corpus attain Tier-0 (raster imagery) or Tier-1 (voxel grids, graphs, and meshes). This implies that, at the moment, GenAI design tools operate mainly as aids for visual ideation and for conceptual or massing exploration. For more studies to achieve Tier-2, the field will need diffusion or GAN models capable of generating structured geometry for downstream CAD/BIM integration, or plug-and-play exporters that embed semantic metadata automatically. Tier-0 cases are usually conducted within web-based diffusion services with raster output, which naturally score highly in Tool Readiness (TR). However, both Tier-1 and some Tier-0 cases demonstrate considerable efforts to convert outputs into usable file formats, including mesh clean-up, retopology, API bridges, and mesh-to-NURBS and raster-to-vector strategies, which highlights a persistent chasm between research prototypes and mainstream practice. Although these methods achieve low scores in Workflow Standardization (WS), they showcase an emerging pattern of hybrid pipelines that start with raster images and finish with parametric geometries without data loss.

Pipelines are often fragmented, particularly when researchers need to manually import raster output into standard drafting or post-processing tools, risking file-format incompatibility, version inconsistencies, and human error. For the Pipeline Integration indicator (PI), Tier-2 studies usually involve single web-based platforms like Stable Diffusion and Midjourney. However, these studies score low on Workflow Standardization because they do not proceed beyond the conceptual and schematic design stage. Studies that integrate or directly embed GenAI models in CAD/BIM platforms are rare. Achieving higher integration with fewer tool hand-offs would require advanced software-engineering resources and skills, open APIs, and ways around licensing restrictions. Common machine learning tasks could then be packaged into CAD/BIM environments, pushing more workflows into Tier-2.

The dominance of Tier-1 papers (No = 29, 69%) in Workflow Standardization (WS) demonstrates that, with modest engineering effort, workflows can be easily mapped onto the schematic stage of design development. This commonly includes images, meshes, voxel grids, and topology diagrams for concept and mood board sketching, site massing exploration, room adjacency diagramming, etc. For Tier-1 studies to deliver recognizable hand-offs to the next design stage, their outcomes would need geometric re-modeling, and their workflows might need restructuring to account for code compliance, site metrics, and construction limitations. However, some Tier-2 workflows (No = 5) demonstrate a kind of standard end-to-end design thinking that seems to bridge the gap between creative novelty and professional pragmatism. Using native geometry exporters, metadata API bridges, custom scripting modules, and intricate visual code definitions, these workflows achieve some level of seamless multi-stage alignment. This indicates their dependence on custom tools and highlights the need for more technical expertise and computer engineering resources.

Indeed, for the Tool Readiness indicator (TR), about two-thirds (No = 27, 65%) of the studies rely on substantial custom programming, ad hoc model training, and dataset curation efforts. This reflects both the novelty of the domain and the scarcity of ready-to-use domain-specific datasets. It also shows the rarity of open-source modules for advanced machine learning integration in CAD/BIM environments. Only four (No = 4) studies use off-the-shelf tools, and these mostly employ prompt-based input and pretrained platforms like Stable Diffusion. About one-fourth of the studies (No = 11, 26%) employ off-the-shelf plug-ins supplemented by light custom code or macros. Without proper infrastructure, bespoke coding will remain the norm, forcing sophisticated AI workflows to rely heavily on machine learning experts.

Thus, in terms of Technical Skillset (TS), three-quarters of the studies (No = 31, 74%) demand highly specialized machine learning expertise; this would involve dataset curation, labeling, pre- and post-processing, GPU setup skills, and metadata management. Shifting Tier-0 studies to Tier-2 would need containerized components and plug-ins, robust user interfaces with schema-aware nodes for common tasks, and shared datasets that will allow architects to operate sophisticated methods with ordinary professional skills. Until then, most high-performing GenAI methods in architectural design will remain dependent on specialist expertise.

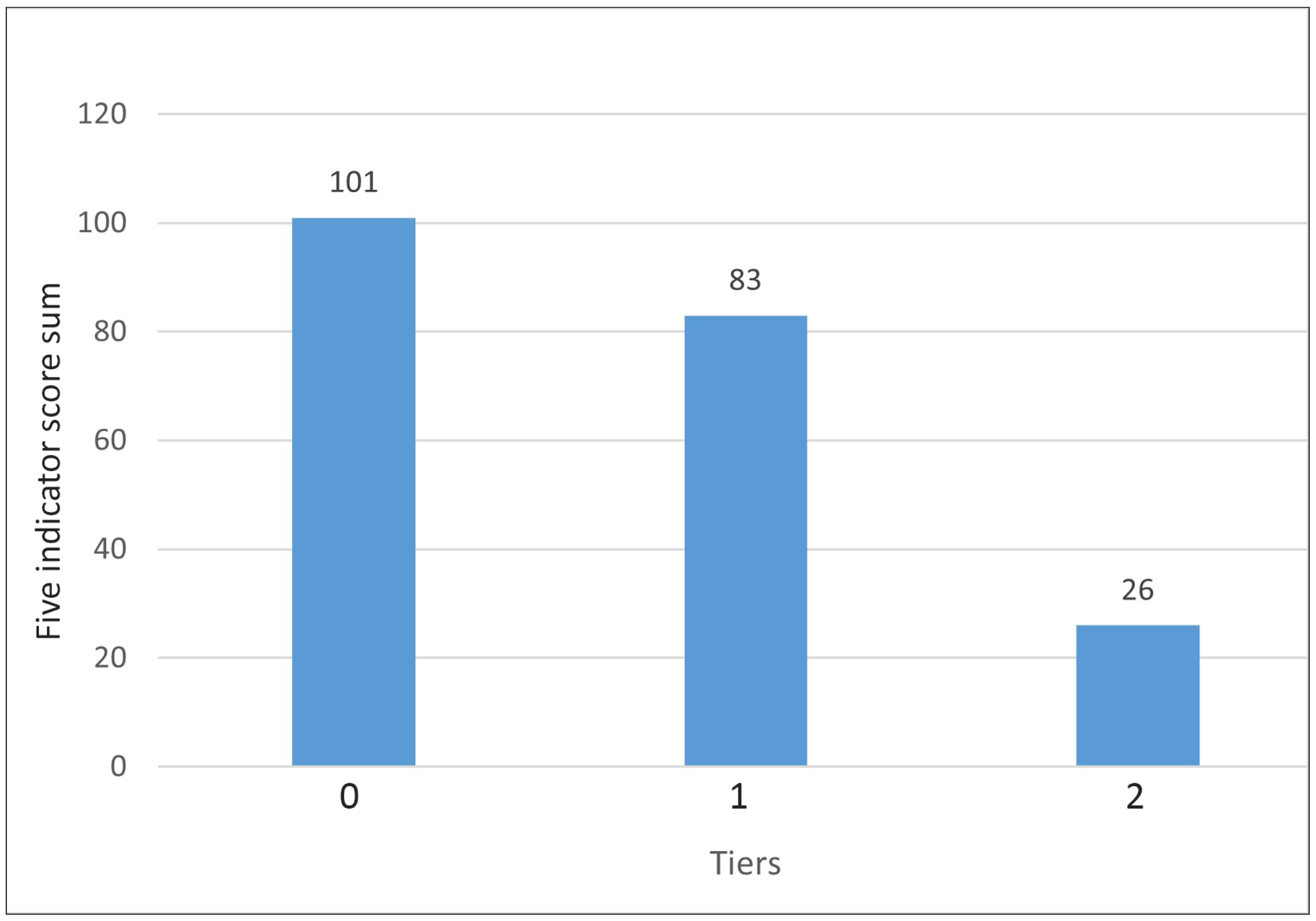

The column chart in Figure 3 presents the cumulative Tier scores across all five indicators for the 42 studies. Notably, Tier-2 scores, which indicate the highest degree of practice readiness, are the lowest overall (total = 26). This underscores that current GenAI methods rarely achieve seamless integration into professional workflows. That is, only 12% of all indicator scores across the corpus reached Tier-2, highlighting the rarity of high-integration, practice-ready workflows. The stacked column chart in Figure 4 highlights that Tier-2 proportions are consistently low across all indicators, underscoring the limited practice-readiness of DL-based GenAI methods in architectural design workflows.
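The cumulative figures can be cross-checked with a few lines of arithmetic. The sketch below is illustrative only: the WS, TR, and TS counts follow the numbers reported in the text (with missing tiers inferred as the remainder of 42), whereas the ORT and PI counts are assumptions back-computed from the reported percentages, and the names `TIER_COUNTS`, `cumulative_totals`, and `tier_shares` are hypothetical. The Tier-1 total it yields is implied by the other two totals rather than stated in the text.

```python
# Tier counts per indicator as (Tier-0, Tier-1, Tier-2).
# WS, TR and TS follow counts reported in the text; ORT and PI are ASSUMED
# counts, back-computed from the reported percentages of the 42 studies.
TIER_COUNTS = {
    "ORT": (17, 19, 6),   # assumed from 40% / 45% / 15%
    "PI":  (18, 17, 7),   # assumed from 43% / 40% / 17%
    "WS":  (8, 29, 5),    # Tier-0 inferred as 42 - 29 - 5
    "TR":  (27, 11, 4),   # all three counts reported
    "TS":  (31, 7, 4),    # Tier-1 inferred from 17%, Tier-2 as the remainder
}
N_STUDIES = 42


def cumulative_totals(counts):
    """Sum the per-indicator counts tier by tier."""
    return tuple(sum(row[tier] for row in counts.values()) for tier in range(3))


def tier_shares(counts):
    """Percentage of all indicator scores that fall in each tier."""
    totals = cumulative_totals(counts)
    grand_total = sum(totals)  # 42 studies x 5 indicators = 210 scores
    return tuple(round(100 * t / grand_total, 1) for t in totals)


# Sanity check: every indicator must account for all 42 studies.
assert all(sum(row) == N_STUDIES for row in TIER_COUNTS.values())

print(cumulative_totals(TIER_COUNTS))  # (101, 83, 26)
print(tier_shares(TIER_COUNTS))        # (48.1, 39.5, 12.4)
```

Under these assumed counts, the Tier-0 and Tier-2 totals match the reported scores of 101 and 26, and the Tier-2 share of roughly 12% agrees with the figure quoted above.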

Implications for Practice: From Academic Prototypes to Practice-Ready Tools

Most studies across the reviewed literature remain at the prototype stage and are hardly integrated with mainstream architectural workflows. Although they frequently demonstrate technical novelty, they demand skills and infrastructures that extend well beyond the competencies of most architectural practices. They usually generate raster images or non-native meshes, and rely heavily on bespoke scripting, machine learning expertise, and fragmented toolchains. This persistence of Tier-0 and Tier-1 studies in the corpus is due to several technical, professional, and systemic factors, most of which have already been highlighted in previous reviews on GenAI for architectural design.

The high proportion of Tier-0 and Tier-1 studies is due to several technical reasons. As Abdelmoula et al. [24] showed, even promising raster-to-BIM pipelines require custom APIs and Python plug-ins, highlighting ongoing technical barriers. Further technical barriers include the demand for costly computational power able to store and process large, multi-layered, and semantically rich BIM models; the lack of standardization and interoperability of file formats; insufficient output control; stylistic bias; and so on. As already mentioned, Zhuang et al. [25] have noted that heterogeneous AEC data formats, insufficient data availability, and lack of controllability hinder workflow maturity for DL-based GenAI systems.

In professional practice, architects tend to be cautious in adopting tools that are perceived as speculative, unreliable, or opaque. Concerns about authorship, intellectual property, and the interpretability of “black-box” models often reinforce resistance, particularly when outputs cannot be fully controlled or validated. As Li et al. [59] observed, architects remain hesitant to deploy AI because of algorithmic complexity and the need for specialized expertise. Also, practitioners may fear legal liability if GenAI that was trained on their BIM data produces unsafe or non-code compliant outputs. Indeed, Lystbæk [17] argued that many firms prefer experimenting with general-purpose AI tools (e.g., Midjourney, ChatGPT) rather than committing to domain-specific systems, partly due to concerns about authorship, reliability, and IP issues.

Systemic factors include the lack of large, high-quality, and openly shared architectural datasets, which prevents the training of robust, domain-specific models comparable to those in computer vision or NLP. Confidentiality of design data, proprietary standards, and the absence of coordinated data-sharing frameworks exacerbate this gap. These factors help explain why, despite rapid academic interest and experimentation, most GenAI applications in architecture remain confined to early-stage ideation, rather than advancing toward CAD/BIM-ready, multi-stage workflow integration. Zhuang et al. [25] and Li et al. [59] pointed out that the absence of large, high-quality, openly shared architectural datasets is a major bottleneck for robust GenAI training and reproducibility. Some reviews have also mentioned confidentiality of design data and proprietary standards as obstacles to dataset sharing [16,17].

Only a minority of studies in the corpus were Tier-2 industry-ready cases. These encompass either direct CAD/BIM-native outputs or tightly integrated pipelines via plug-ins and APIs such as Grasshopper/Revit bridges. Tier-2 studies are significant because they reduce the translation gap between generative exploration and professional project delivery. For practice, this distinction highlights both the potential and the limitations of current GenAI research. Architects can readily use existing diffusion and GAN-based tools for early ideation and visual exploration, producing concept imagery, schematic massings, or exploratory floor plan layouts. Yet, meaningful integration into the full design workflow will require packaging deep learning modules into environments architects already use (Revit, ArchiCAD, Rhino/Grasshopper), and accessible user interfaces that minimize the need for custom coding and technical expertise. It will also require exported CAD/BIM models that preserve parametric editability and metadata, as well as tightly integrated API bridges and plug-ins, so that generative outputs flow directly into established workflows without manual conversion.

Recognizing the divide between academic experimentation and practice is essential for charting future development. The assessment indicators employed in this review and the tier score outcomes reveal the potential of experimental methods to be applied in professional practice. They suggest that academic researchers and practitioners should take further steps to make GenAI a dependable part of architectural design workflows. These steps would not only minimize adoption barriers but also enable GenAI to support a broader range of tasks across the design-to-delivery pipeline. For software developers, aligning GenAI outputs with CAD/BIM standards and embedding generative capabilities into existing platforms will be critical to ensure practical uptake in professional workflows. Many of the AI features currently embedded in mainstream design platforms provide only raster-based outputs or operate as assistive modules, rather than tools for generating CAD or BIM geometry from user prompts. For example, Graphisoft’s AI Visualizer, which is integrated into ArchiCAD, can generate images from mass objects, sketch inputs, and text prompts. Moreover, ArchiCAD’s forthcoming AI Assistant by Nemetschek will blend model queries and documentation support, and will integrate with the AI Visualizer. Similar AI-augmented features are already embedded in Autodesk software (such as smart assistants with automation, suggestion, and predictive features). Although these AI modules do not provide generative components for fully supported and integrated CAD/BIM-native output, some indications of future scenarios are already in place: Graphisoft is experimenting with modules capable of generating parametric BIM objects from 2D drawings or catalog data, while Autodesk has proposed the “Neural CAD” concept, an experimental module for generating BIM or CAD elements from images, sketches, mass geometry objects, and text prompts. Although promising, these are at the proof-of-concept stage and are not yet widely deployed as part of ArchiCAD’s and Revit’s core workspace.

5. Conclusions

This review evaluated 42 studies of DL-based GenAI methods for architectural design using a five-indicator framework (Output Representation Type (ORT), Pipeline Integration (PI), Workflow Standardization (WS), Tool Readiness (TR), and Technical Skillset (TS)) to assess how well these methods map onto architectural practice workflows. The corpus was assembled from Scopus and leading volumes, journals, and conference proceedings (2015–2025) under clear eligibility criteria focused on form-generation.

Across studies, most GenAI outputs are not CAD/BIM-native representations: 40% of the outcomes are raster imagery (ORT Tier-0), 45% are meshes, voxels, or graphs (ORT Tier-1), and only 15% are CAD/BIM-native geometry (ORT Tier-2). Toolchain pipelines are typically fragmented: 43% of cases use more than four loosely coupled steps (PI Tier-0), 40% link two to three tools (PI Tier-1), and 17% operate in a single platform or embedded plug-in (PI Tier-2). Most studies map onto the schematic design phase (WS Tier-1 = 69%), with rare multi-stage, BIM-compatible pipelines (WS Tier-2 = 12%). The dominant pattern is heavy bespoke coding (TR Tier-0 = 65%) and specialist skill requirements (TS Tier-0 = 74%). The cumulative Tier-2 score across all five indicators for all 42 studies was 26, compared to a score of 101 for Tier-0. That is, only 12% of the 210 indicator scores (42 studies × 5 indicators) reached Tier-2, which suggests that practice readiness is low.

Overall, this review has shown that while most DL-based generative methods remain fragmented and limited to schematic ideation, only a small fraction (Tier-2 studies) achieve multi-stage CAD/BIM integration. Therefore, findings substantiate a significant chasm between ideation-oriented experimentation and mainstream CAD/BIM-based practice and delivery. The persistence of Tier-0 and Tier-1 studies in the corpus is firstly due to technical factors, such as fragmented toolchains, image-based output, demand for advanced engineering skills, computational cost, lack of controllability, heterogeneous file formats, or stylistic bias. It is also due to cultural and systemic factors: professional skepticism against the complex algorithmic nature of DL-based models, authorship and reliability issues, legal liability and confidentiality, and the absence of incentives for high-quality and openly shared domain-specific datasets.

Unlike prior reviews, which primarily describe the growth of GenAI applications in architecture, our study contributes a structured, five-indicator rubric that functions as a workflow maturity model. This model provides a replicable way to assess practice-readiness, highlighting where tools are stuck in Tier-0 experimental prototypes and where they approach Tier-2 integration into standard delivery workflows. By framing generative AI adoption through a maturity lens, our review offers a systematic benchmark for both researchers and practitioners to evaluate progress and identify gaps that must be closed for GenAI to move from experimentation to everyday practice. Limitations of this study include a focus on academic sources (likely undercounting proprietary industry deployments), selection of mostly early-stage form-exploration studies in GenAI, and a fast-moving domain of design research. Nonetheless, the five-indicator rubric offers a practical workflow integration maturity index for tracking progress over time and across domains.

Future Directions

As discussed, the adoption and full integration of GenAI in architectural design depend to some extent on resolving technical barriers. Closing the gap between ideation-oriented experimentation and CAD/BIM-based delivery will require in-practice studies, studies that track GenAI downstream along the design development stages, and methods that minimize the demand for overly advanced engineering skills. This means shifting outputs from pixels to CAD/BIM geometry and metadata; compiling GenAI models into embedded modules, plug-ins, and API bridges (e.g., Rhino.Inside/Revit add-ins) that minimize hand-offs; providing containerized components and schema-aware GUIs that can be managed by typical architectural skillsets; and building shared datasets focused on practice limitations such as code compliance and environmental and structural metrics. The two Tier-2 archetypes already visible, prompt-only ideation (accessible but raster-bound) and tightly embedded add-ins (practice-ready but engineering-intensive), suggest a pragmatic development path.

Also, the evolution of GenAI in architecture will inevitably depend on overcoming major cultural and systemic barriers, professional skepticism, and ecosystem challenges. The ethical and socio-political concerns that these challenges raise cannot be overlooked. Issues of data ownership and intellectual property complicate both training and deployment, as most models rely on datasets whose provenance and licensing are often opaque. Bias and access to energy are also problems faced by the AI ecosystem. Bias embedded in training data risks reproducing narrow stylistic conventions or excluding culturally diverse design vocabularies, thereby limiting rather than expanding architectural imagination. Additionally, the environmental costs of training and running large-scale models—measured in energy consumption and carbon emissions—pose further challenges for a discipline increasingly concerned with sustainability. These issues, while not the primary focus of this review, are increasingly relevant for practitioners because they underscore the need for critical, responsible integration of GenAI into architectural workflows. Overcoming these problems would require shared data infrastructures with anonymization protocols; domain-specific CAD/BIM datasets built by industry consortia and academic bodies to overcome data scarcity; collaboration between firms, universities, and professionals to secure common licensing and metadata standards for these datasets; and governance frameworks to resolve authorship and liability issues. Professional bodies should set standards for ethical use, dataset sharing, or carbon accounting of AI tools in architecture.

That said, several future scenarios are plausible. While, as we showed, text-to-BIM or text-to-CAD GenAI is an active area of research [19,21,22,64], some preliminary off-the-shelf applications are already in use; platforms like BlenderGPT, Hypar, and Spline AI are currently capable of generating CAD drawings and 3D models from text prompts. So, one likely future scenario is the embedding of text-to-plan and text-to-façade GenAI modules directly within mainstream CAD/BIM platforms (such as Revit, ArchiCAD, or Rhino/Grasshopper), allowing designers to generate and iteratively refine parametric floorplans, façades, or massing studies. Existing workflows would thus offer rapid iteration without leaving the primary design environment, reduce data loss across hand-offs, and make generative exploration accessible to architects without coding expertise. This scenario could also include no-code GenAI pathways. In this case, Grasshopper or Dynamo plug-ins could act as GenAI bridges, enabling architects to experiment with generative models through visual scripting rather than bespoke programming. This would democratize experimentation and accelerate adoption in practice.

Another future scenario would require shared benchmark datasets that could allow for the standardized evaluation of design models, along with integrated AI modules to assist with performance analysis and regulation checks. A consortium model—linking universities, professional practices, and software vendors—could establish open repositories of BIM-native geometry, annotated floorplans, and performance-linked models. This would parallel the role of ImageNet in computer vision and provide the foundation for robust, generalizable GenAI models in architecture.

Furthermore, another scenario would involve mainstream CAD/BIM environments hosting “AI co-pilots” capable of generating regulation-aware, editable, and semantically rich models, enabling true end-to-end AI-augmented workflows. Finally, the evolution of ethical and governance frameworks will shape GenAI’s trajectory. Professional bodies and industry standards could establish guidelines on dataset provenance, energy use, and intellectual property, ensuring that generative tools are aligned with architectural values of sustainability, inclusivity, and accountability.

Taken together, these scenarios suggest that the next stage of GenAI in architecture will not be driven by technical novelty alone, but by integration, collaboration, and governance—factors that can translate research advances into actionable tools for everyday design practice. By framing GenAI in this way we can envision concrete pathways from today’s experimental prototypes to tomorrow’s practice-ready design partners.