1. Introduction

Vector network analysis [1,2,3,4] is fundamental in high-frequency electrical metrology. Measurements with a vector network analyzer (VNA) enable the precise characterization of electrical components and systems. To ensure the reliability and traceability of these measurements to the International System of Units (SI), rigorous modeling of the measurement process is essential [5].

METAS VNA Tools [6] is a comprehensive metrology software suite developed by METAS to support the evaluation and uncertainty analysis of VNA measurements. Designed primarily for use in national metrology institutes and (calibration) laboratories, VNA Tools integrates measurement models in a modular fashion, facilitating standardized workflows and enabling detailed systematic investigations.

According to [7], metrological traceability requires “… an unbroken chain of calibrations, each contributing to measurement uncertainty”. As noted in [5], the traceability chain is not necessarily a simple linear structure; it may branch into multiple paths that later converge.

VNA Tools is built upon the uncertainty engine METAS UncLib [8,9], which implements uncertainty propagation in a way that supports the integrity of traceability chains of diverse architectures. Specifically, it enables the propagation of uncertainty budgets along the traceability chain by maintaining a record of influence quantities and their associated uncertainties. This mechanism preserves the integrity of the traceability chain even in cases where, for example, devices under test (DUTs) that were calibrated at different times on the same measurement system and/or with the same reference standards are subsequently combined in a later measurement.

The paper is structured as follows. It begins with an introduction to VNA measurements and the challenges associated with them. This is followed by a review of the concept of multivariate linear uncertainty propagation and its implementation using uncertainty objects. Next, METAS VNA Tools and METAS UncLib are introduced and the implementation of uncertainty objects is described. Building on these more conceptual foundations, the paper continues by discussing how METAS VNA Tools provides added value to the end user in support of the digital traceability chain. Finally, the paper concludes with a brief discussion.

2. VNA Measurements

VNAs measure the reflection and transmission characteristics of a device under test (DUT) in amplitude and phase as a function of frequency, normalized to a reference impedance. VNAs exist in various form factors, ranging from relatively large, highly precise desktop models, typically employed at national metrology institutes (NMIs), to compact handheld devices. The most common configurations feature two or four measurement ports, although models with a larger number of ports are available as well. VNAs are used to perform measurements with different transmission media, including coaxial lines, metallic waveguides, and planar structures.

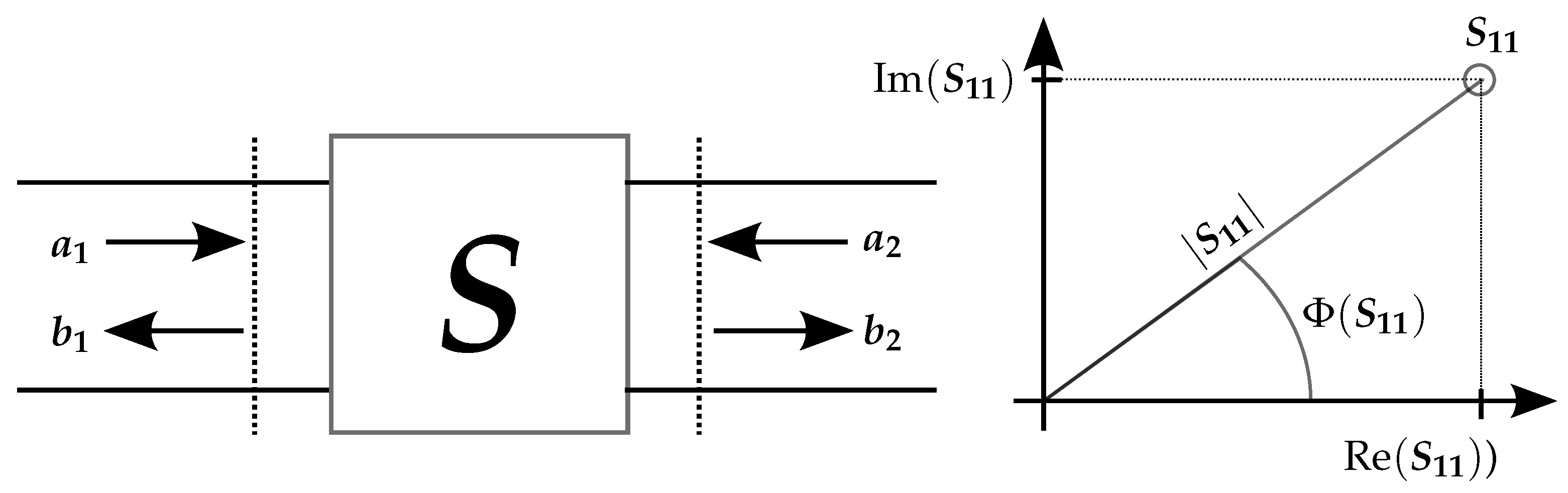

The quantities measured by VNAs are known as scattering parameters (S-parameters), which characterize components with respect to their reflective and transmissive behavior. S-parameters represent one of various possibilities for describing a linear electrical network, see Figure 1. The S-parameters of individual components can be cascaded to determine the behavior of a larger system, such as a measurement chain. Consequently, they play an important role in radio frequency (RF) and microwave (MW) system design. Moreover, S-parameters are fundamental quantities in RF and MW metrology, as their determination is often a prerequisite for accurately measuring other RF and MW quantities, such as RF power or RF noise, owing to the need to account for impedance mismatch effects.

S-parameters are two-dimensional quantities and may be presented either in polar (amplitude and phase) or Cartesian (complex numbers) coordinates, as illustrated in Figure 1.

S-parameters typically exhibit significant frequency dependence, necessitating repeated measurements at sufficiently small frequency intervals. For broad frequency ranges over tens of GHz, it is common to acquire several hundred measurement points to adequately capture the frequency response of the DUT.

A notable characteristic of VNAs is that uncorrected measurements are inherently inaccurate due to unavoidable systematic errors. These errors arise from leakage, loss, mismatch effects, and variations in electrical paths within the measurement setup. They need to be determined by measuring a set of known, characterized reflection standards and, where applicable, transmission standards, a process referred to as VNA calibration. The subsequent measurement of the DUT is then corrected with the error coefficients determined in the VNA calibration, a process referred to as VNA error correction.

Various established VNA calibration algorithms are in use [10], with their suitability depending on the specific measurement scenario. Influencing factors include the DUT characteristics, VNA architecture, availability of calibration standards, required accuracy, and practical constraints. VNA calibration and VNA error correction are based on error models, which are often represented graphically using so-called signal flow graphs. Signal flow graphs are particularly effective for depicting linear networks, and established methods exist [11,12] for deriving mathematical equations that relate the measured S-parameters, the unknown S-parameters of the DUT, the characterization data of the calibration standards, and the error coefficients (systematic errors). These equations are used both to determine the error coefficients from the measurements of the calibration standards and to correct the DUT measurement.
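The two-step sequence of calibration and error correction can be illustrated with a minimal sketch. It assumes the textbook one-port three-term error model, $\Gamma_m = e_{00} + e_{10}e_{01}\Gamma/(1 - e_{11}\Gamma)$, and ideal short, open, and load standards. The model choice, the numerical error terms, and all function names are assumptions made for this illustration; they are not taken from VNA Tools.

```python
import numpy as np

def solve_error_terms(gamma_std, gamma_meas):
    """Determine e00, e11 and delta = e00*e11 - e10e01 from three standards.

    The error model is rewritten in linear form:
    gamma_m = e00 + gamma * gamma_m * e11 - gamma * delta
    """
    g = np.asarray(gamma_std, dtype=complex)
    m = np.asarray(gamma_meas, dtype=complex)
    a = np.column_stack([np.ones(len(g), dtype=complex), g * m, -g])
    e00, e11, delta = np.linalg.solve(a, m)
    return e00, e11, delta

def correct(gamma_meas, e00, e11, delta):
    """Error correction: invert the error model for the DUT reflection."""
    return (gamma_meas - e00) / (gamma_meas * e11 - delta)

def raw_reading(gamma, e00, e11, e10e01):
    """Simulate the uncorrected VNA reading for a given true reflection."""
    return e00 + e10e01 * gamma / (1 - e11 * gamma)

# Hypothetical systematic errors of the measurement setup
true_terms = dict(e00=0.05 + 0.02j, e11=0.10 - 0.05j, e10e01=0.90 + 0.10j)

# Step 1, VNA calibration: measure ideal short, open and load
standards = [-1.0, 1.0, 0.0]
readings = [raw_reading(g, **true_terms) for g in standards]
e00, e11, delta = solve_error_terms(standards, readings)

# Step 2, VNA error correction: correct the raw DUT measurement
gamma_dut = 0.3 - 0.2j
gamma_corrected = correct(raw_reading(gamma_dut, **true_terms), e00, e11, delta)
print(abs(gamma_corrected - gamma_dut))  # residual at rounding level
```

In practice the standards are not ideal, and their characterization data, together with the associated uncertainties, enter the same equations.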

The SI traceability of VNA measurements is established through the use of characterized calibration standards. At the top level, NMIs perform a primary electrical characterization of suitable calibration standards using a combination of analytical and numerical modeling, as well as dimensional and electrical measurements to establish direct traceability to the SI units [13,14,15]. These primary standards are used to calibrate a VNA, which then can be employed to characterize a set of secondary calibration standards. In this way, traceability is disseminated through the various levels of the metrological pyramid to the end user, via sets of VNA calibration and verification standards. Calibration standards serve as reference standards for disseminating traceability, while verification standards provide an independent assessment of the validity of the measurement model and consequently also the associated uncertainty evaluation.

Central to the integrity of the traceability chain is the reliable determination of the measurement uncertainty associated with S-parameter measurements. According to the Guide to the Expression of Uncertainty in Measurement (GUM) [16], uncertainty evaluation should be based on a measurement model that relates the observed quantity to the true value of the measurand, accounting for all relevant influences. In VNA metrology, the modern form of a measurement model is derived from the error models used for VNA calibration and VNA error correction. The signal flow graphs of the error models are further extended to include additional effects influencing the measurements, such as VNA linearity, drift and noise, cable movement, and connector repeatability [10]. The mathematical equations derived from the signal flow graphs constitute the measurement models.

Due to the two-step measurement sequence (VNA calibration and VNA error correction), these equations become inherently complex. Furthermore, all terms in the model are two-dimensional, necessitating the use of multivariate methods [17,18,19] to account for correlation effects. Many influencing factors exhibit a significant frequency dependence, meaning that uncertainty calculations must be repeated for each frequency point. In addition, correlations between frequencies are present and should ideally be accounted for. They might become relevant when S-parameter data is processed further, e.g., when applying a Fourier transformation to evaluate the data in the time domain. Overall, the evaluation of the measurement uncertainty associated with VNA measurements is a demanding task, which requires dedicated software.

3. Measurement Models and Uncertainty Evaluation

In the following, we will denote unknown quantities by uppercase letters and their estimates by lowercase letters.

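A measurement model is a fundamental element in the evaluation of uncertainties associated with measurement results. It has the following basic mathematical form:

$$Y_1 = f_1(X_1, \ldots, X_N), \quad \ldots, \quad Y_M = f_M(X_1, \ldots, X_N).$$

In vector notation, this can be expressed as

$$\mathbf{Y} = \mathbf{f}(\mathbf{X}). \tag{1}$$

Each component of the output quantity $\mathbf{Y}$ is functionally dependent on the input quantities contained in $\mathbf{X}$. The uncertainties associated with the measurements (estimates) $\mathbf{x}$ of the input quantities $\mathbf{X}$ are represented by a covariance (uncertainty) matrix $\mathbf{V}_x$ with squared standard uncertainties as the diagonal elements and covariances as the off-diagonal elements.

The correlations between input quantities are captured in the off-diagonal elements, which can be written as

$$(\mathbf{V}_x)_{ij} = u(x_i, x_j) = r(x_i, x_j)\, u(x_i)\, u(x_j),$$

with $r(x_i, x_j)$ denoting the correlation coefficient between $x_i$ and $x_j$. It is well known, see, e.g., [17], that the propagation of the uncertainties $\mathbf{V}_x$, associated with $\mathbf{x}$, to uncertainties $\mathbf{V}_y$, associated with $\mathbf{y}$, is governed by the following equation:

$$\mathbf{V}_y = \mathbf{J}\, \mathbf{V}_x\, \mathbf{J}'. \tag{2}$$

Here, $\mathbf{J}$ represents the Jacobian matrix (the ′ indicating the transpose), which contains the derivatives of the components in $\mathbf{y}$ with respect to the components in $\mathbf{x}$:

$$\mathbf{J} = \begin{pmatrix} \dfrac{\partial y_1}{\partial x_1} & \cdots & \dfrac{\partial y_1}{\partial x_N} \\ \vdots & \ddots & \vdots \\ \dfrac{\partial y_M}{\partial x_1} & \cdots & \dfrac{\partial y_M}{\partial x_N} \end{pmatrix}. \tag{3}$$

The derivatives in (3) are evaluated at the value of the estimate $\mathbf{x}$. The chosen syntax should be read as

$$\frac{\partial y_i}{\partial x_j} \equiv \left. \frac{\partial f_i}{\partial X_j} \right|_{\mathbf{X} = \mathbf{x}}.$$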

These equations form the general mathematical framework of linear uncertainty propagation, as described in [16,17]. This approach relies on a linear approximation of the measurement model and is generally sufficient for a reliable evaluation of measurement uncertainties. In cases involving significant non-linearity, the GUM supplements [17,20] promote the use of the Monte Carlo method to propagate distributions representing the state of knowledge.

VNA Tools is to a large extent based on linear uncertainty propagation, mainly for performance reasons. Accordingly, the subsequent discussion will focus on the linear approach, although a similar framework can be implemented for Monte Carlo based uncertainty propagation.

For simple cases, such as a single output quantity without correlations, linear uncertainty propagation can be performed manually or using spreadsheet software. However, this approach becomes impractical when multiple output quantities, correlations, or complex equations are involved. In such scenarios, software-based computation is preferred. An intuitive approach involves explicitly programming the derivatives, i.e., the Jacobian matrix of the entire set of equations, and computing the covariance matrix of the output quantities using (2).
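The explicit approach can be sketched for a small case that is typical of S-parameter data: converting a reflection coefficient from polar to Cartesian coordinates. The Jacobian is programmed by hand and the output covariance matrix is obtained as the product of the Jacobian, the input covariance matrix, and the transposed Jacobian. The numerical values and function names are assumptions chosen for illustration.

```python
import numpy as np

def polar_to_cartesian(x):
    """Measurement model: (magnitude, phase) -> (real, imaginary)."""
    mag, phase = x
    return np.array([mag * np.cos(phase), mag * np.sin(phase)])

def jacobian(x):
    """Hand-coded derivatives of the outputs with respect to the inputs."""
    mag, phase = x
    return np.array([[np.cos(phase), -mag * np.sin(phase)],
                     [np.sin(phase),  mag * np.cos(phase)]])

# Hypothetical estimates and standard uncertainties (phase in radians)
x = np.array([0.5, np.pi / 4])
u_mag, u_phase, r = 0.01, 0.04, 0.0

# Input covariance matrix: variances on the diagonal, covariances elsewhere
v_x = np.array([[u_mag**2,            r * u_mag * u_phase],
                [r * u_mag * u_phase, u_phase**2]])

j = jacobian(x)
v_y = j @ v_x @ j.T  # linear uncertainty propagation

y = polar_to_cartesian(x)
corr = v_y[0, 1] / np.sqrt(v_y[0, 0] * v_y[1, 1])
print(y, np.sqrt(np.diag(v_y)), corr)
```

Although the two inputs are uncorrelated here, the real and imaginary parts of the result are correlated (correlation coefficient of −0.6 for these values), which is why multivariate propagation is needed even for such a simple conversion.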

In the following, we discuss how (2) can be evaluated more efficiently using the technique of automatic or algorithmic differentiation (AD), see, e.g., [21,22,23], and how the explicit storage of large covariance matrices can be avoided while still maintaining correlation tracking. The technique was introduced into metrology some time ago [24,25,26] and is based on uncertainty objects.

4. Uncertainty Objects

Any measurement model represented by an explicit analytical mathematical equation, as in (

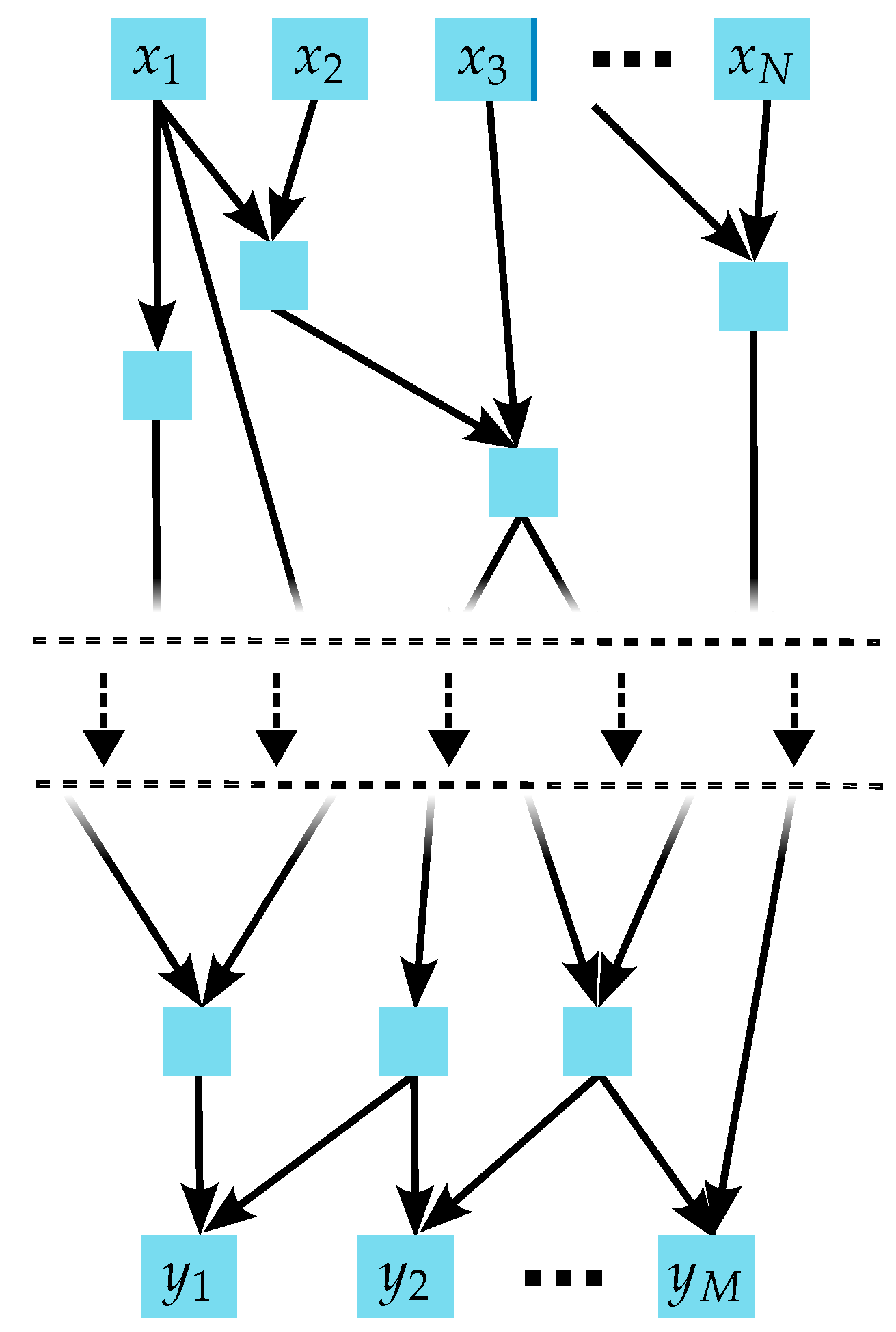

1), can be decomposed into a sequence of elementary operations and functions, such as the basic arithmetic operations (addition, multiplication, etc.) and transcendental functions (trigonometric, logarithmic, etc.). This decomposition is illustrated graphically in

Figure 2.

The top layer of blue boxes, labeled as

,

, represents the input quantities into the measurement model. The bottom layer, labeled

,

, corresponds to the output quantities. The intermediate blue boxes represent results of elementary operations with the arrows defining the inputs to these elementary operations. Each intermediate result serves as the input to the next elementary operation, ultimately constructing the full equation. Computers internally decompose arbitrarily complicated explicit analytical equations into such elementary steps, a process that can be exploited through the implementation of the technique of AD. Each blue box in

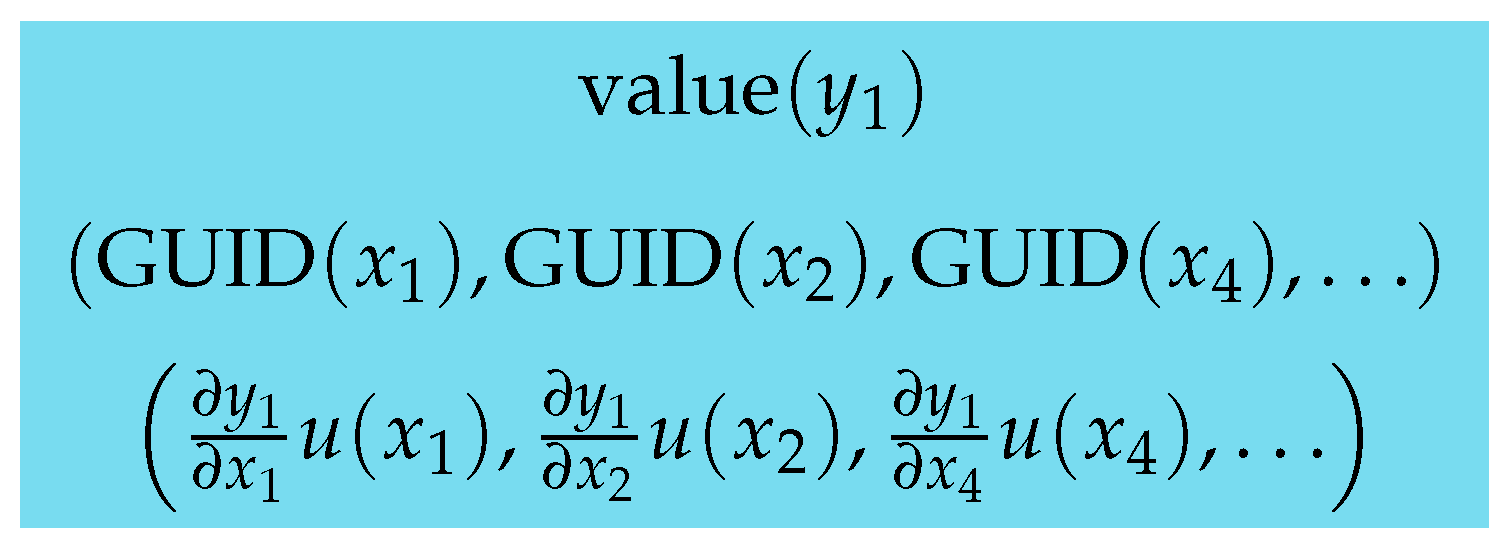

Figure 2 denotes an intermediate result, stored in memory as an uncertainty object. This object encapsulates the value, a list of dependencies, and an associated list of the products of sensitivities and uncertainties, as shown in

Figure 3.

The value represents the numerical estimate of the quantity. The list of dependencies identifies the relevant input quantities $x_i$ on which the intermediate result depends. The list of sensitivities contains the derivatives of the intermediate result with respect to the relevant $x_i$, multiplied by the uncertainties associated with the $x_i$. As the equation tree is traversed, these lists are updated at each step. For operations involving a single input, the dependency list remains unchanged. For operations involving two inputs (e.g., binary arithmetic operations), the two dependency lists are concatenated, with duplicate entries removed.

To ensure uniqueness and consistency, the input quantities are assigned globally unique identifiers (GUIDs). GUIDs are automatically generated during the creation of uncertainty objects, following the RFC 9562 standard [27]. This ensures a very low probability of collision. However, it remains the user’s responsibility to maintain a clear and unique mapping between input variables representing systematic effects and the corresponding stored uncertainty objects. Failure to uphold this correspondence, for example by repeatedly generating uncertainty objects for the same systematic effect, may result in overlooked correlation effects, potentially compromising the integrity of the uncertainty evaluation. This is further illustrated in the mass example in Section 6.

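When the input quantities $\mathbf{x}$ are correlated, it is not necessary to store a covariance matrix. Instead, a Cholesky decomposition of the covariance matrix associated with the correlated inputs can be performed (see, e.g., [28]):

$$\mathbf{V}_x = \mathbf{L}\, \mathbf{L}'. \tag{4}$$

Linear uncertainty propagation for $\mathbf{y}$ can then be expressed as:

$$\mathbf{V}_y = \mathbf{J}\, \mathbf{L}\, \mathbf{L}'\, \mathbf{J}' = (\mathbf{J} \mathbf{L})\, (\mathbf{J} \mathbf{L})'. \tag{5}$$

For each output quantity, the corresponding row vector of $\mathbf{J} \mathbf{L}$ is then stored in the uncertainty object, replacing the sensitivities multiplied by the standard uncertainties. Figure 3 thus illustrates a simplified representation of an uncertainty object in the uncorrelated case.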
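The following sketch checks this idea numerically for two correlated inputs and a single output. The covariance values, the model (a plain sum of the two inputs), and the variable names are assumptions chosen for illustration.

```python
import numpy as np

# Hypothetical covariance matrix of two correlated input quantities
v_x = np.array([[4.0e-4, 1.2e-4],
                [1.2e-4, 1.0e-4]])

# Cholesky factor: lower triangular, with v_x = chol @ chol.T
chol = np.linalg.cholesky(v_x)

# Sensitivities of a single output y = x1 + x2 (one-row Jacobian)
j = np.array([[1.0, 1.0]])

# Vector kept in the uncertainty object instead of the covariance matrix
stored = j @ chol

# Squared standard uncertainty from the stored vector alone
u_y_sq = (stored @ stored.T).item()

# Reference: direct propagation with the full covariance matrix
u_y_sq_direct = (j @ v_x @ j.T).item()
print(u_y_sq, u_y_sq_direct)
```

Both routes give the same variance, but the first one only requires the stored vector, whose entries refer to independent unit-variance inputs.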

Finally, the list of sensitivities is updated using the chain rule and sum rule of differentiation. This requires the explicit programming of the derivatives for the complete, yet manageable, set of elementary mathematical functions. Once implemented, derivatives of arbitrarily complicated explicit equations can be computed automatically and to machine precision. For a more detailed introduction and examples, see [24].

The use of AD eliminates the need to manually program derivatives of complex equations, thereby reducing the potential for coding errors. The object methods are written such that uncertainty propagation is implemented and object attributes are updated as described. Arithmetic operators and elementary mathematical functions can be overloaded, allowing calculations with uncertainty objects to be performed using the same syntax as with ordinary numbers. Uncertainty propagation occurs quasi-automatically in the background.
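A minimal sketch of such an uncertainty object is given below. It is a toy illustration of the principle and not the METAS UncLib implementation: the class name `UncNumber`, the function `unc_sin`, and the restriction to addition, multiplication, and sine are assumptions made here. Each object carries its value and a dictionary that maps the identifier of an input to the product of sensitivity and standard uncertainty.

```python
import math
import uuid

class UncNumber:
    """Toy uncertainty object: value plus {input id: sensitivity * uncertainty}."""

    def __init__(self, value, stdunc=0.0, deps=None):
        self.value = float(value)
        if deps is None:
            # A new input quantity receives a fresh unique identifier
            deps = {uuid.uuid4(): float(stdunc)} if stdunc else {}
        self.deps = deps

    @staticmethod
    def _lift(other):
        return other if isinstance(other, UncNumber) else UncNumber(other)

    def _combine(self, other, value, d_self, d_other):
        # Chain rule: scale each list by the local partial derivative.
        # Sum rule: contributions of shared identifiers are added.
        deps = {k: d_self * v for k, v in self.deps.items()}
        for k, v in other.deps.items():
            deps[k] = deps.get(k, 0.0) + d_other * v
        return UncNumber(value, deps=deps)

    def __add__(self, other):
        other = self._lift(other)
        return self._combine(other, self.value + other.value, 1.0, 1.0)

    __radd__ = __add__

    def __mul__(self, other):
        other = self._lift(other)
        return self._combine(other, self.value * other.value,
                             other.value, self.value)

    __rmul__ = __mul__

    @property
    def stdunc(self):
        return math.sqrt(sum(v * v for v in self.deps.values()))

def unc_sin(x):
    """Elementary function with its derivative programmed explicitly."""
    scale = math.cos(x.value)
    return UncNumber(math.sin(x.value),
                     deps={k: scale * v for k, v in x.deps.items()})

a = UncNumber(2.0, 0.1)
b = UncNumber(3.0, 0.2)
y = a * b + unc_sin(a)  # same syntax as with ordinary numbers
print(y.value, y.stdunc)
```

The derivative of the full expression is never written down; it emerges from the derivatives of the elementary operations. Because `a` appears twice in the expression, its two contributions are merged under one identifier.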

With the information in the uncertainty objects, the covariance matrix for any set of intermediate or final results can be computed on demand using (2). Because dependencies are tracked explicitly, there is no need to store large covariance matrices, which offers significant advantages for both the evaluation and communication of measurement uncertainties. Questions regarding the extent of correlation information to be calculated, stored and transmitted become obsolete. Providing the uncertainty objects of the quantities of interest suffices to compute uncertainties and correlations as needed. Furthermore, correlations between measurements performed on the same measurement system but, e.g., at different points in time will still be recognized, due to the unique identifiers of the shared input quantities.
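The on-demand computation can be written out for two results. The notation is an assumption introduced here for illustration: $y_a$ and $y_b$ are two results, $D_a$ and $D_b$ their sets of dependency identifiers, and $s_{a,k} = c_{a,k}\, u(x_k)$ the stored product of sensitivity and standard uncertainty for the independent input $x_k$.

$$u^2(y_a) = \sum_{k \in D_a} s_{a,k}^2, \qquad u(y_a, y_b) = \sum_{k \in D_a \cap D_b} s_{a,k}\, s_{b,k}, \qquad r(y_a, y_b) = \frac{u(y_a, y_b)}{u(y_a)\, u(y_b)}.$$

Only identifiers that appear in both objects contribute to the covariance, so two results without shared inputs are automatically uncorrelated.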

5. METAS VNA Tools and METAS UncLib

VNA Tools is a free software suite that operates independently of the VNA firmware. The software controls VNA settings and measurement sequences directly from a PC, processes raw measurement data, and performs all calculations on the PC. It has a wide range of features, which are not further discussed here. Details can be found in [6,29,30]. In the following, the focus remains on the evaluation of measurement uncertainties and the seamless dissemination of digital traceability.

VNA Tools integrates several key components to facilitate comprehensive and reliable uncertainty evaluation in accordance with the EURAMET VNA Guide [10], which advocates rigorous modeling of the entire measurement process. It incorporates relevant measurement models that consider all influence quantities across various calibration and error correction algorithms and different transmission line media. A dedicated database stores the characterization data of these influences, which are essential for determining the associated uncertainties. The database entries are measurement setup and component specific. They are based on characterization data and characterization measurements, which are also supported by VNA Tools. The software also features a measurement journal that logs all relevant actions during the measurement process with time stamps, such as measurements, re-connections, and cable movements, thereby enabling accurate tracking of uncertainty contributions. Both random and systematic effects are appropriately addressed, with particular attention given to the time-dependent nature of drift and its correlation across measurements. Repeated measurements can be evaluated with a Type A analysis and expanded uncertainties with 95% coverage can be calculated. The software also generates comprehensive and detailed uncertainty budgets, which support further investigations by identifying dominant uncertainty sources and guiding improvements in measurement setups and procedures. A verification process is also supported to ensure the appropriateness of the applied measurement models.

Uncertainty propagation is handled by an engine based on the METAS UncLib library [8], which performs multivariate uncertainty propagation in accordance with GUM Supplement 2 [17]. The library supports three different modes of uncertainty propagation: linear propagation (according to [16,17]), numerical propagation via Monte Carlo methods (according to [17,20]), and propagation based on higher-order Taylor approximations of the measurement model.

METAS VNA Tools centers around the linear uncertainty propagation module LinProp of the UncLib. The LinProp module adheres to the principles outlined in Section 3 and Section 4, encapsulating value, dependencies and sensitivities in so-called LinProp objects. Input quantities for the measurement models are instantiated as LinProp objects based on influence data stored in the VNA Tools database.

From a data management perspective, VNA Tools introduces a robust storage concept using dedicated and documented XML and binary formats [31], capturing the complete dependency and sensitivity information of the LinProp objects. This capability is particularly valuable for communicating measurement results, enabling modular and traceable uncertainty evaluation throughout the traceability chain. Export and import to and from other common data formats is supported as well. However, the export to other commonly used data formats in VNA metrology will generally lead to loss of information.

A further valuable and convenient feature of VNA Tools is its virtualization capability. NMIs and accredited calibration laboratories are required to declare their measurement scope as part of their quality assurance processes and for mutual recognition. This scope describes the laboratory’s measurement capabilities. In the case of S-parameters, it includes the frequency range covered and the associated best measurement uncertainties. These uncertainties depend not only on frequency but also on the value of the measurand, i.e., its magnitude and phase, making it cumbersome to evaluate best uncertainties by performing measurements across the entire three-dimensional parameter space. VNA Tools includes a built-in virtual VNA that simulates a series of measurements by sampling values across the full parameter space defined by the scope. It automatically generates tables of best uncertainties as functions of frequency and measurand values. These calculations are based on the uncertainty influences stored in the VNA Tools database and reliably provide achievable uncertainties for a given measurement setup.

6. Support of the Digital Traceability Chain

The delivery of a calibration certificate often marks a discontinuity in the traceability chain, as the recipient typically receives only a list of values and associated uncertainties. Efforts related to digital calibration certificates aim to address this issue by providing the data in electronic form and enabling machine-readable certificates. VNA Tools offers significant added value to the end user, extending beyond the mere distribution of certificates and calibration data, particularly through its advanced handling of uncertainties.

METAS issues S-parameter calibration certificates in digitally signed electronic format. For illustrative purposes, the certificates include a limited subset of the data in tabular form. The complete dataset is provided electronically and referenced in the certificate via a hash key, ensuring unique assignment and enabling the detection of any deliberate or accidental modifications. The certificates are issued in PDF/A-3 format (ISO 19005-3) [32], which supports direct embedding of calibration data within the certificate file. Embedding into a machine-readable digital calibration certificate, as promoted by [33,34], is also supported. The calibration data is stored in one of the documented VNA Tools data formats [31], allowing full restoration of the information contained in the LinProp objects.

Due to the free availability of VNA Tools, recipients of calibration certificates have full flexibility in utilizing the calibration data. By loading the data into the software, users can reconstruct the LinProp objects with the exact same state of information as the provider of the certificate. This enables users to characterize their own working calibration standards based on the received characterized standards. The same mechanism applies when working standards are used to calibrate items at the next level of the traceability chain. In this way, traceability dissemination from one level to the next occurs without disruption or loss of information.

The approach to uncertainty handling, i.e., by keeping track of dependencies and sensitivities and by updating them at each calibration step, offers several advantages over traditional methods that rely solely on propagating uncertainties and covariance matrices. This mechanism ensures that the full uncertainty budget is propagated along the traceability chain and is incrementally supplemented with additional contributions from each measurement step. The end user thus gains access to the accumulated uncertainty budget of the entire traceability chain. This supports current trends in data provenance and scientific transparency and is valuable for systematic investigations of measurement processes. Furthermore, with the information contained in the LinProp objects, users can display uncertainties and correlations on demand.

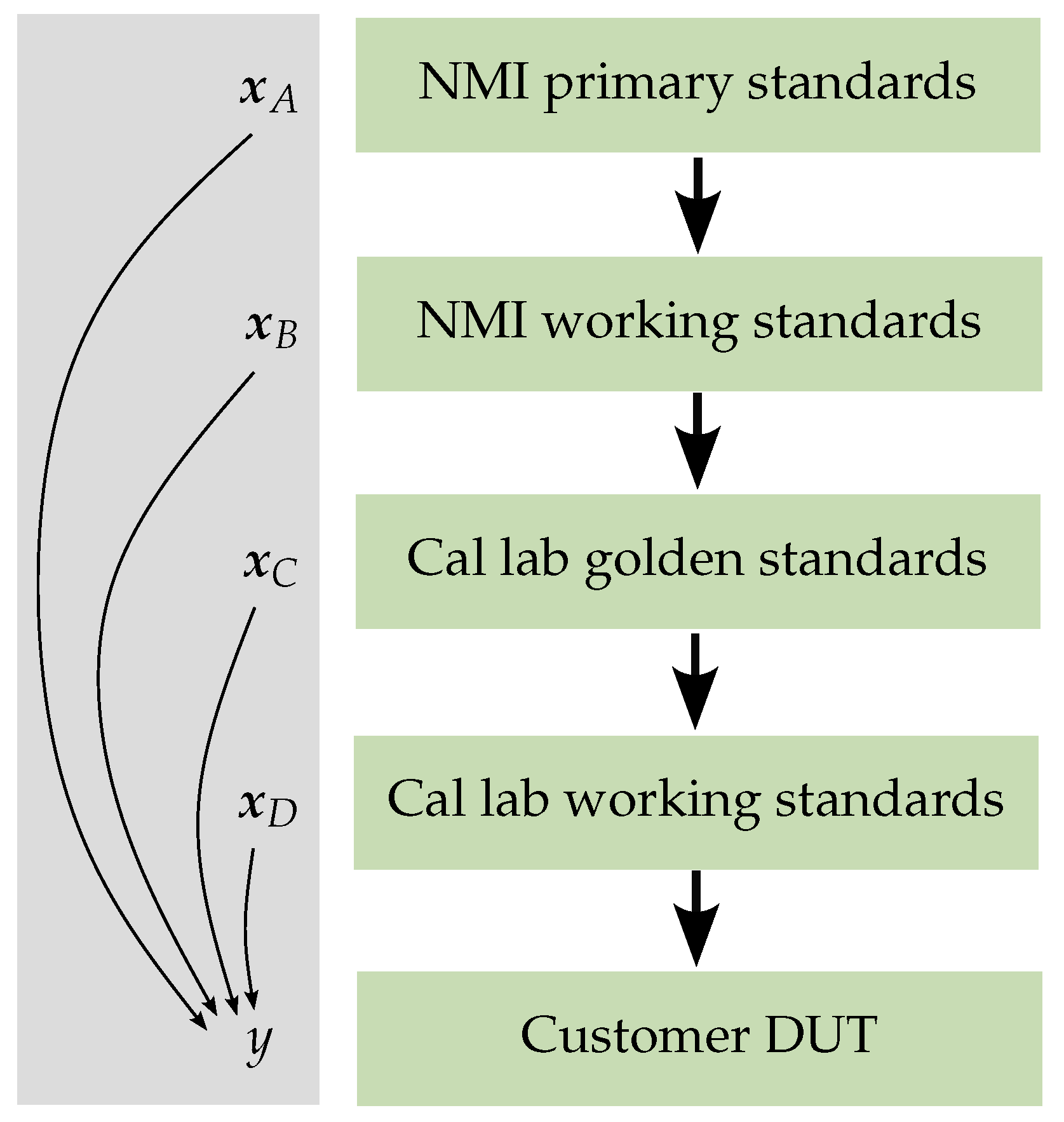

Figure 4 illustrates how a measurement of a customer’s DUT in a calibration laboratory can be traced back to a primary calibration at an NMI.

The schematic highlights the influence quantities affecting measurements at each level of the traceability chain, with each level represented by its own vector of influence quantities. When the concept of uncertainty objects is applied consistently throughout the chain, the uncertainty object y, representing the measurement result of the customer’s DUT, inherently incorporates all these influences. These are captured in its vector of dependencies, each associated with corresponding sensitivity coefficients. As a result, the uncertainty budget of the entire traceability chain is intrinsically embedded within the uncertainty object y.
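How such an embedded budget can be read out is sketched below. The levels, influence names, and numerical values are purely hypothetical and serve only to show the bookkeeping; the dictionary stands in for the dependency list of the final uncertainty object.

```python
import math

# Hypothetical products (sensitivity * standard uncertainty) carried by the
# DUT result, keyed by (level of the traceability chain, influence name)
deps = {
    ("NMI primary", "dimensional characterization"): 0.0010,
    ("NMI secondary", "connector repeatability"): 0.0008,
    ("Laboratory", "cable movement"): 0.0015,
    ("Laboratory", "noise"): 0.0005,
}

# Combined variance: sum of squares over all tracked influences
total_var = sum(v * v for v in deps.values())

# Accumulate variance contributions per level of the chain
by_level = {}
for (level, _name), v in deps.items():
    by_level[level] = by_level.get(level, 0.0) + v * v

# Relative share of each level in the combined variance
shares = {level: var / total_var for level, var in by_level.items()}

print(f"combined standard uncertainty: {math.sqrt(total_var):.5f}")
for level, share in sorted(shares.items(), key=lambda item: -item[1]):
    print(f"{level}: {100 * share:.1f} % of the variance")
```

Since every influence keeps its identity along the chain, the budget can be grouped by level, by influence type, or by any other attribute attached to the identifiers.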

Traceability chains that deviate from a simple linear structure are not uncommon. Typically, multiple calibration and verification standards are used in a VNA measurement. These standards may not have been calibrated during the same campaign, but their characterization may still be traceable to common reference standards. Such correlations are recognized during uncertainty evaluation and verification. Thanks to the unique identifiers of dependencies within the LinProp objects, shared influences are properly accounted for, helping to prevent both underestimation and overestimation of measurement uncertainty in complex traceability scenarios.

As a simple illustrative example, we consider the calibration of two mass standards,

and

, which were calibrated by the same NMI or calibration laboratory, but at different times. For simplicity, we assume that both were measured as exactly 100 g. These two standards are then combined to form a single 200 g standard, which is subsequently used to calibrate a balance. We further assume a simplified measurement model was applied during the calibration of the two mass standards

Here, the measured value

is affected by a systematic error

and a random error

. It is not unrealistic to assume that

is the same in both characterizations, if they were compared against the same reference standard. However, when the characterization of the two mass standards is documented on separate calibration certificates, this correlation is typically not recognized. As a result, when the two masses are used together, the uncertainty associated with the combined mass standard

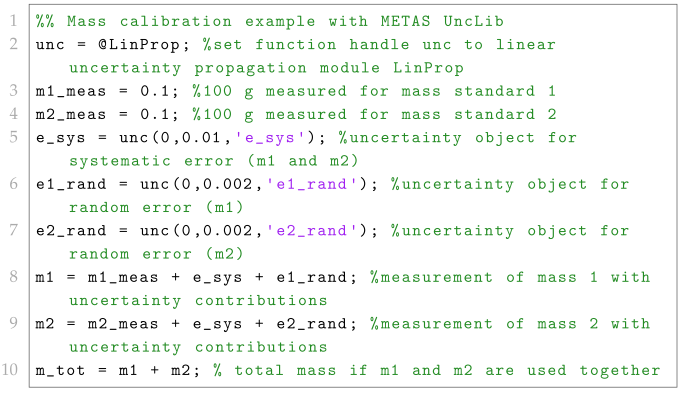

is underestimated due to the neglected correlation. This situation is illustrated by the sample MATLAB R2025b [

35] code shown in Listing 1.

Listing 1. MATLAB code showing an example of two correlated mass standards used together in a calibration. Details are discussed in the text.

The sample code uses the MATLAB wrapper of the METAS UncLib and should be largely self-explanatory thanks to the accompanying comments. It implements the simplified model given in Equation (6) for the calibration of the two mass standards. The uncertainty object associated with the systematic error is created only once. If it were created separately for each mass, the correlation would not be captured, as the resulting uncertainty objects would have different GUIDs. In this specific example, neglecting the correlation would lead to an underestimation of the combined uncertainty by nearly 30%.
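The effect can be reproduced with a small Python sketch. It does not use METAS UncLib and is not a transcription of Listing 1: the dictionary-based representation, the helper names, and the uncertainty values are assumptions made for this illustration. Only the uncertainty bookkeeping is modeled, since the nominal values of 100 g do not affect the result.

```python
import math
import uuid

def new_input(stdunc):
    """Create an independent influence: {unique id: sensitivity * uncertainty}."""
    return {uuid.uuid4(): stdunc}

def add(*terms):
    """Sum of quantities: contributions with the same id are added."""
    total = {}
    for term in terms:
        for key, val in term.items():
            total[key] = total.get(key, 0.0) + val
    return total

def stdunc(obj):
    """Combined standard uncertainty from the tracked contributions."""
    return math.sqrt(sum(v * v for v in obj.values()))

U_SYS, U_RAND = 0.10, 0.02  # hypothetical standard uncertainties in mg

# Correct treatment: the systematic influence is created only once
e_sys = new_input(U_SYS)
m1 = add(e_sys, new_input(U_RAND))
m2 = add(e_sys, new_input(U_RAND))
u_shared = stdunc(add(m1, m2))

# Incorrect treatment: a new object (new id) per mass hides the correlation
m1_sep = add(new_input(U_SYS), new_input(U_RAND))
m2_sep = add(new_input(U_SYS), new_input(U_RAND))
u_separate = stdunc(add(m1_sep, m2_sep))

print(f"shared systematic effect:   {u_shared:.4f} mg")
print(f"separate systematic effect: {u_separate:.4f} mg")
print(f"underestimation: {100 * (1 - u_separate / u_shared):.1f} %")
```

With a dominant systematic contribution, as assumed here, the underestimation approaches the limit $1 - 1/\sqrt{2} \approx 29\,\%$, which is the limiting case for two fully shared systematic effects.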

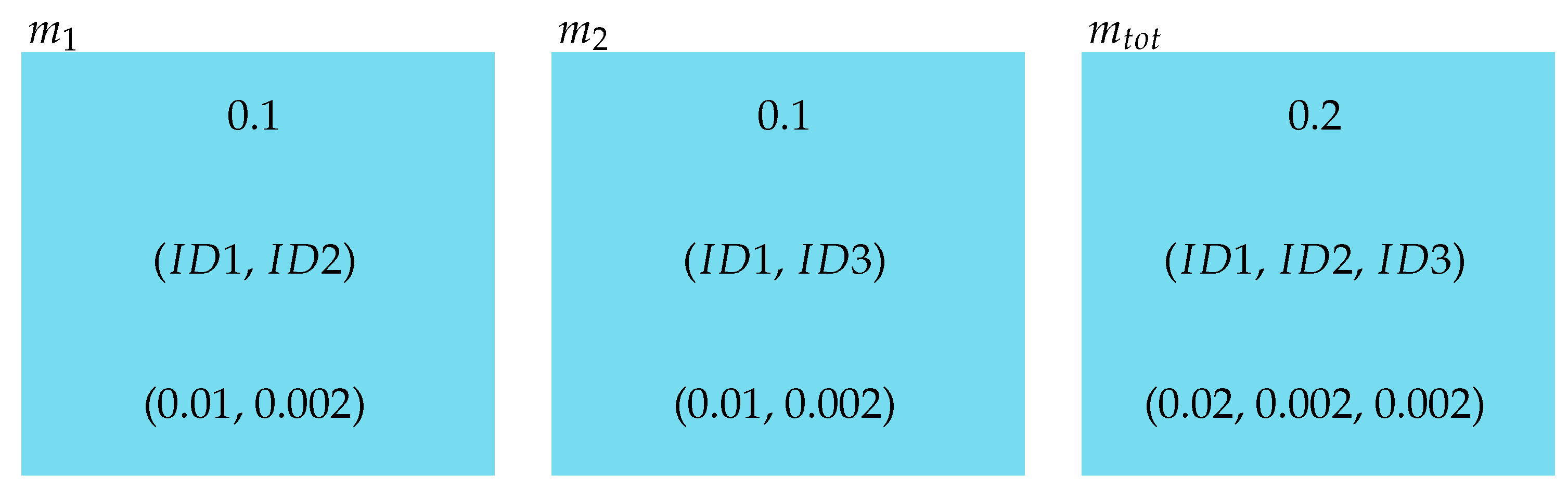

The uncertainty objects representing the two mass standards and their combination are shown in Figure 5. The GUID of the systematic uncertainty contribution appears in the objects of both mass standards, thereby ensuring that the correlation is correctly accounted for when the two masses are combined into the 200 g standard. Thanks to the concept of uncertainty objects, correlation information is preserved via the GUIDs, eliminating the need to transmit covariance matrices.

In the example MATLAB code, the uncertainty object related to the systematic influence is created at runtime. Each time the program is executed, a new object with a unique GUID is generated. In a real-world implementation, however, uncertainty objects associated with systematic influences should be stored externally, e.g., in a file, and loaded during program execution. This ensures that correlations arising from repeated use of a reference standard at different times and in different measurement scenarios are properly taken into account. VNA Tools uses database entries for this purpose.

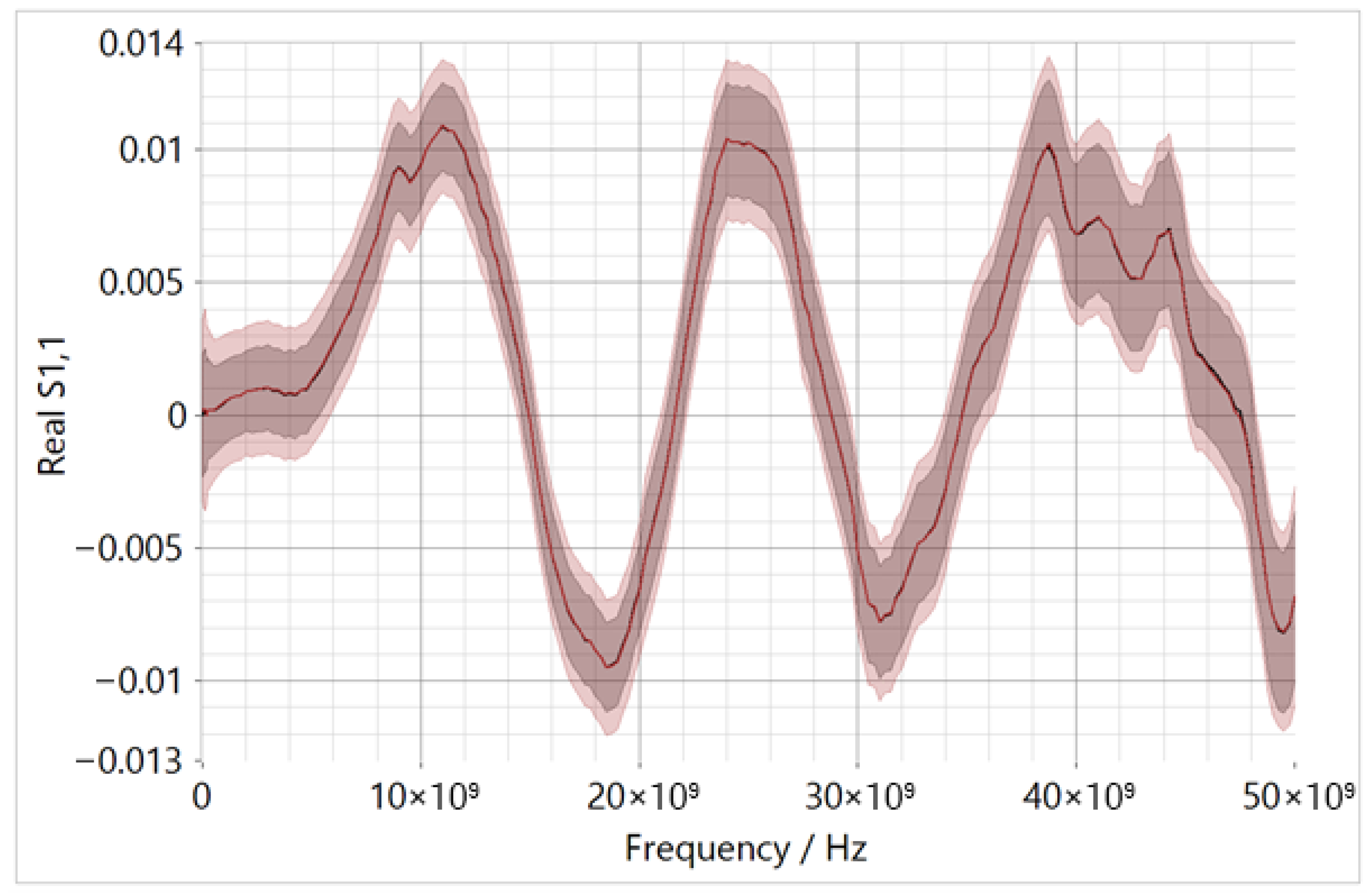

A more advanced example from VNA metrology, illustrating the impact of neglecting correlation effects, is shown in Figure 6.

The figure shows the VNA measurement of a load (DUT) across a frequency range, specifically displaying the real component of its reflection coefficient. The VNA was calibrated using a set of calibration standards, with more standards employed than required. This practice, common at high-accuracy levels, leads to an over-determined system with more equations than unknowns for determining the VNA’s error coefficients. Consequently, a regression-based solution is applied. VNA Tools includes a dedicated algorithm, referred to as optimization calibration, for this purpose. In the example shown, multiple calibration loads were used. The uncertainty interval shown in black was calculated without accounting for correlations between the calibration standards, while the red interval includes these correlations. The significant difference between the two highlights the importance of properly considering correlation effects in uncertainty evaluation.

7. Discussion

Providing uncertainty and correlation information through LinProp objects introduces a certain level of abstraction. However, achieving the same level of comprehensiveness and integrity in the traceability chain using direct representations of uncertainties and covariances would be difficult, if not impossible.

End users, however, have different needs and may not be interested in the full detail offered by the mechanisms described thus far. Practical constraints, such as file size limitations, may also apply. Disseminating LinProp objects along the traceability chain can result in file sizes reaching tens or even hundreds of megabytes. To accommodate different needs and limitations, VNA Tools provides the capability to truncate dependencies and replace them with uncertainties and covariances at any desired level. This reduces file size at the expense of some information loss.

Commercial solutions are also available [36,37] that incorporate the VNA Tools suite. These tools offer a more guided and controlled approach to VNA measurements, which may be advantageous for less experienced users, compared to the flexibility provided by VNA Tools. These commercial solutions maintain a high level of interoperability with VNA Tools through its documented data formats [31]. For example, the solution described in [36] allows exporting the entire project, including the measurement journal, for further analysis in VNA Tools. This feature is particularly useful when a technician performing routine calibration encounters an unusual result that requires more systematic investigation by a metrology specialist.

These commercial tools interact with VNA Tools via the Real Time Interface (RTI), a stable, high-level software interface that provides access to VNA Tools functionality. Many measurement laboratories already operate within overarching software environments that support testing, calibration, development, or production processes. The RTI facilitates the integration of VNA Tools into such environments, thereby promoting its adoption and usability.

The combination of interoperability, flexibility, and adaptability has made VNA Tools suitable for use across all levels of the traceability pyramid, from national metrology institutes to industrial laboratories. As a result, the software, along with its underlying models and data formats, has evolved into a quasi-standard that helps overcome fragmented structures and supports the digital transformation of metrology [38].

Overall, VNA Tools contributes to the development towards a truly digital and seamless traceability chain, thereby promoting good metrological practice. Key elements include the use of uncertainty objects, which enable the propagation of uncertainty budgets and the tracking of correlations across diverse traceability chain designs. Equally important is the support provided to users beyond the calibration certificate, with flexibility and customizability tailored to specific needs. There is potential to extend this approach further by embedding the measurement journal and, potentially, the measurement models directly into the certificates, thereby advancing the concept of full data provenance [39] or data lineage in metrology.

Finally, METAS UncLib is freely available as a standalone, general-purpose uncertainty engine that incorporates the essential mechanisms discussed. It supports not only explicit measurement models as shown by Equation (1), but also non-analytical scenarios. An example is the nonlinear regression used in the VNA example discussed in Section 6. In such cases, the numerical routine is first executed without uncertainty evaluation, and the uncertainty propagation is subsequently performed at the solution point. Ongoing development efforts aim to extend support to measurement models involving differential equations.

METAS UncLib can be applied in other areas of metrology to implement systems similar to VNA Tools for VNA measurements. Solutions in other domains that utilize uncertainty objects carrying complete traceability information—readily shareable with customers—can play a pivotal role in driving the digitalization of the global metrology infrastructure.