Tetanus Severity Classification in Low-Middle Income Countries through ECG Wearable Sensors and a 1D-Vision Transformer

Abstract

1. Introduction

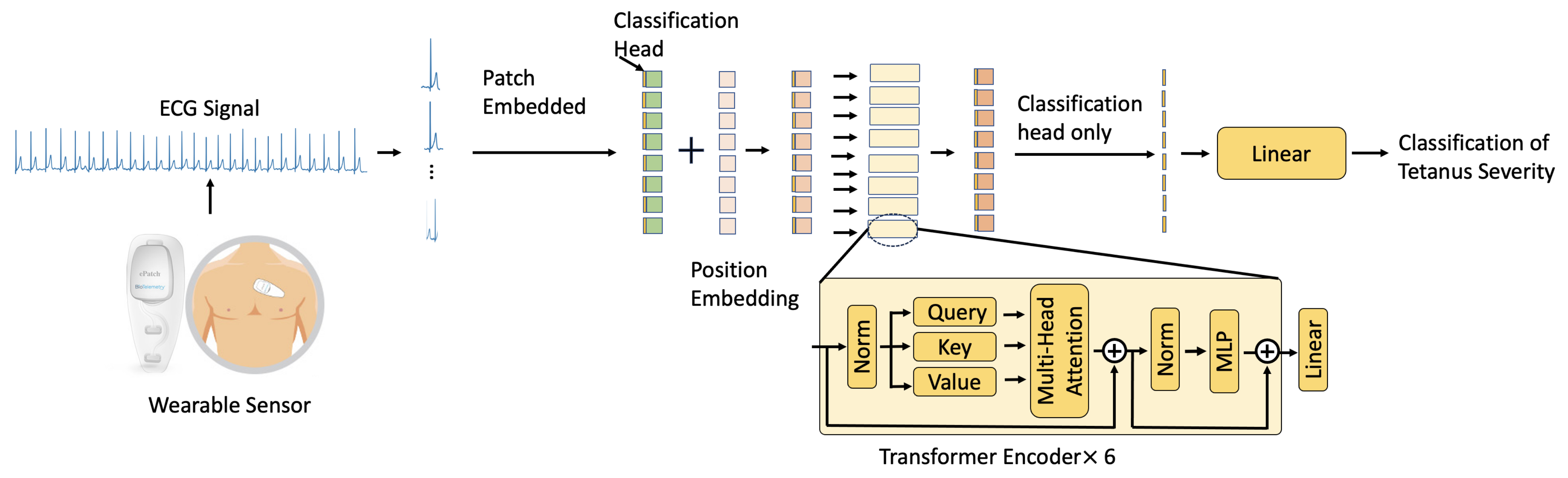

- We present a 1D-Vision Transformer model whose self-attention mechanism, while processing each element of the input ECG time series, weighs the importance of every other element in the series.

- To our knowledge, this is the first investigation of a 1D Transformer-based method for classifying the severity of tetanus in low- and middle-income countries (LMICs). The proposed 1D-Vision Transformer achieves a higher F1-score, recall, accuracy, and AUC than the state-of-the-art 1D and 2D CNN methods in tetanus classification, and it promises to improve clinical decision making in resource-constrained settings.

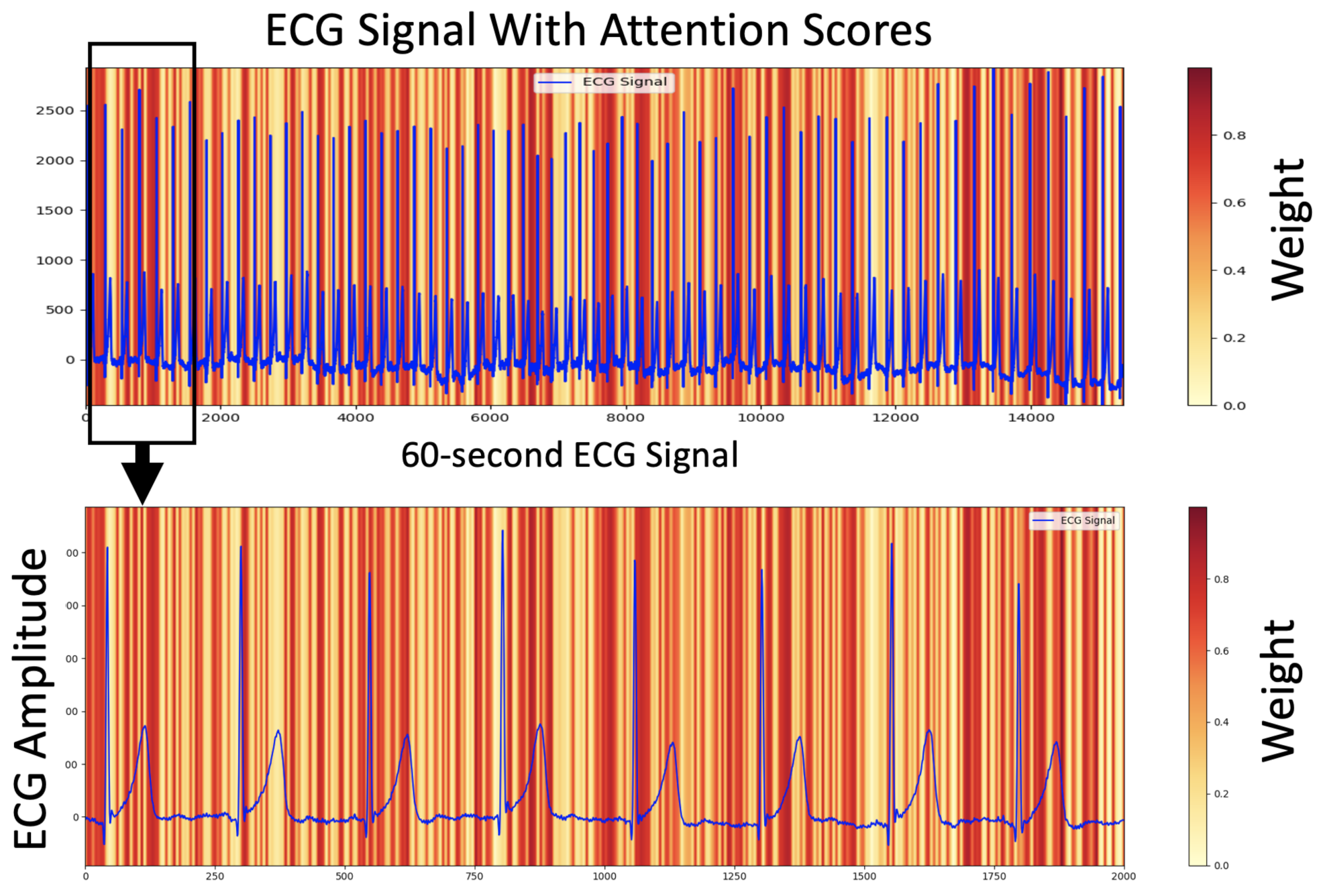

- We illustrate the relationship between the ECG signal and the proposed AI model’s decision using attention scores, showing how the signal exerts varying degrees of influence through different weights.
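
The self-attention idea named in the contributions above can be illustrated with a minimal, standard-library sketch. It is not the authors' implementation: the toy sine-wave signal, the patch size of 4, and the use of identity query/key/value projections (a trained model would learn these) are all assumptions made for illustration. The function names `split_into_patches` and `self_attention` are hypothetical.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of floats."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def split_into_patches(signal, patch_size):
    """Cut a 1D signal into non-overlapping patches, dropping any remainder."""
    n = len(signal) // patch_size
    return [signal[i * patch_size:(i + 1) * patch_size] for i in range(n)]


def self_attention(tokens):
    """Scaled dot-product self-attention with identity Q/K/V projections.

    Returns (outputs, weights); weights[i][j] is how strongly token i
    attends to token j, and each row of weights sums to 1.
    """
    d = len(tokens[0])
    weights = []
    for q in tokens:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in tokens]
        weights.append(softmax(scores))
    outputs = [
        [sum(w * v[c] for w, v in zip(row, tokens)) for c in range(d)]
        for row in weights
    ]
    return outputs, weights


# Toy stand-in for an ECG segment (assumption: not real patient data).
signal = [math.sin(0.3 * t) for t in range(16)]
patches = split_into_patches(signal, patch_size=4)
outputs, weights = self_attention(patches)

for i, row in enumerate(weights):
    print(f"patch {i} attends with weights {[round(w, 3) for w in row]}")
```

Each row of `weights` is the kind of attention score that can be plotted against the signal to show which segments influence the output most.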

2. Related Work

3. Method

3.1. Data Pre-Processing

3.2. 1D-Vision Transformer

Multi-Head Self-Attention

4. Experiments

4.1. Recording ECG Data in Tetanus Patients

4.2. Implementation Details

4.3. Baselines

4.4. Evaluation Metrics

5. Experimental Results

5.1. Data Pre-Processing Analysis

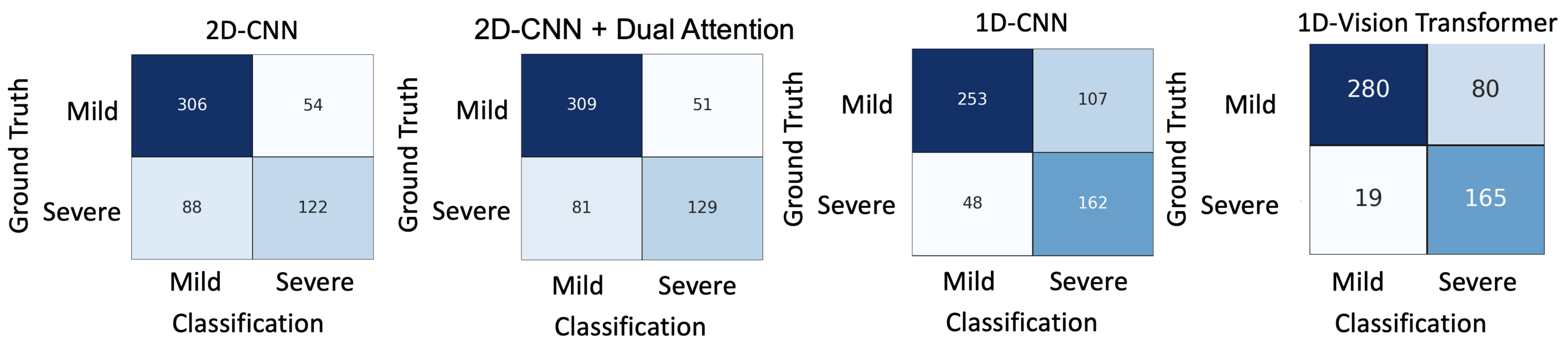

5.2. Comparisons

5.3. Interpretable ECG

5.4. Misclassification

6. Discussion

7. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Thwaites, C.L.; Yen, L.M.; Glover, C.; Tuan, P.Q.; Nga, N.T.N.; Parry, J.; Loan, H.T.; Bethell, D.; Day, N.P.J.; White, N.J.; et al. Predicting the clinical outcome of tetanus: The tetanus severity score. Trop. Med. Int. Health 2006, 11, 279–287.

2. Yen, L.M.; Thwaites, C.L. Tetanus. Lancet 2019, 393, 1657–1668.

3. Thuy, D.B.; Campbell, J.I.; Thanh, T.T.; Thuy, C.T.; Loan, H.T.; Van Hao, N.; Minh, Y.L.; Boni, M.F.; Thwaites, C.L. Tetanus in southern Vietnam: Current situation. Am. J. Trop. Med. Hyg. 2017, 96, 93.

4. Li, J.; Liu, Z.; Yu, C.; Tan, K.; Gui, S.; Zhang, S.; Shen, Y. Global epidemiology and burden of tetanus from 1990 to 2019: A systematic analysis for the Global Burden of Disease Study 2019. Int. J. Infect. Dis. 2023, 132, 118–126.

5. The Importance of Diagnostic Tests in Fighting Infectious Diseases. Available online: https://www.lifechanginginnovation.org/medtech-facts/importance-diagnostic-tests-fighting-infectious-diseases.html (accessed on 6 October 2021).

6. Van, H.M.T.; Hao, N.V.; Phan Nguyen Quoc, K.; Hai, H.B.; Khoa, L.D.V.; Yen, L.M.; Nhat, P.T.H.; Hai Duong, H.T.; Thuy, D.B.; Zhu, T.; et al. Vital sign monitoring using wearable devices in a Vietnamese intensive care unit. BMJ Innov. 2021, 7, S7–S11.

7. Mahieu, R.; Reydel, T.; Maamar, A.; Tadié, J.M.; Jamet, A.; Thille, A.W.; Chudeau, N.; Huntzinger, J.; Grangé, S.; Beduneau, G.; et al. Admission of tetanus patients to the ICU: A retrospective multicentre study. Ann. Intensive Care 2017, 7, 1–7.

8. Hung, T.M.; Van Hao, N.; Yen, L.M.; McBride, A.; Dat, V.Q.; van Doorn, H.R.; Loan, H.T.; Phong, N.T.; Llewelyn, M.J.; Nadjm, B.; et al. Direct Medical Costs of Tetanus, Dengue, and Sepsis Patients in an Intensive Care Unit in Vietnam. Front. Public Health 2022, 10, 1665.

9. Hung, T.M.; Clapham, H.E.; Bettis, A.A.; Cuong, H.Q.; Thwaites, G.E.; Wills, B.A.; Boni, M.F.; Turner, H.C. The estimates of the health and economic burden of dengue in Vietnam. Trends Parasitol. 2018, 34, 904–918.

10. Joshi, M.; Ashrafian, H.; Aufegger, L.; Khan, S.; Arora, S.; Cooke, G.; Darzi, A. Wearable sensors to improve detection of patient deterioration. Expert Rev. Med. Devices 2019, 16, 145–154.

11. Lu, P.; Ghiasi, S.; Hagenah, J.; Hai, H.B.; Hao, N.V.; Khanh, P.N.Q.; Khoa, L.D.V.; VITAL Consortium; Thwaites, L.; Clifton, D.A.; et al. Classification of Tetanus Severity in Intensive-Care Settings for Low-Income Countries Using Wearable Sensing. Sensors 2022, 22, 6554.

12. Lu, P.; Wang, C.; Hagenah, J.; Ghiasi, S.; Zhu, T.; Thwaites, L.; Clifton, D.A. Improving Classification of Tetanus Severity for Patients in Low-Middle Income Countries Wearing ECG Sensors by Using a CNN-Transformer Network. IEEE Trans. Biomed. Eng. 2022, 70, 1340–1350.

13. Lu, H.; Clifton, D.; Lu, P.; Hirst, J.; MacKillop, L. A Deep Learning Approach of Blood Glucose Predictive Monitoring for Women with Gestational Diabetes. Res. Sq. 2023.

14. Chauhan, V.K.; Molaei, S.; Tania, M.H.; Thakur, A.; Zhu, T.; Clifton, D.A. Adversarial De-confounding in Individualised Treatment Effects Estimation. In Proceedings of the 26th International Conference on Artificial Intelligence and Statistics, PMLR, Valencia, Spain, 25–27 April 2023; Volume 206, pp. 837–849.

15. Chauhan, V.K.; Thakur, A.; O’Donoghue, O.; Rohanian, O.; Clifton, D.A. Continuous Patient State Attention Models. medRxiv 2022.

16. Salaun, A.; Knight, S.; Wingfield, L.R.; Zhu, T. Interpretable Machine Learning in Kidney Offering: Multiple Outcome Prediction for Accepted Offers. medRxiv 2023.

17. Lu, P.; Barazzetti, L.; Chandran, V.; Gavaghan, K.A.; Weber, S.; Gerber, N.; Reyes, M. Super-Resolution Classification Improves Facial Nerve Segmentation from CBCT Imaging. In Proceedings of the CURAC, Saskatoon, SK, Canada, 27 May 2016; pp. 143–144.

18. Lu, P.; Barazzetti, L.; Chandran, V.; Gavaghan, K.; Weber, S.; Gerber, N.; Reyes, M. Facial nerve image enhancement from CBCT using supervised learning technique. In Proceedings of the 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Milan, Italy, 25–29 August 2015; pp. 2964–2967.

19. Lu, P.; Bai, W.; Rueckert, D.; Noble, J.A. Multiscale graph convolutional networks for cardiac motion analysis. In Proceedings of the International Conference on Functional Imaging and Modeling of the Heart, Stanford, CA, USA, 21–25 June 2021; Springer: Berlin/Heidelberg, Germany, 2021; pp. 264–272.

20. Ghiasi, S.; Zhu, T.; Lu, P.; Hagenah, J.; Khanh, P.N.Q.; Hao, N.V.; Vital Consortium; Thwaites, L.; Clifton, D.A. Sepsis Mortality Prediction Using Wearable Monitoring in Low–Middle Income Countries. Sensors 2022, 22, 3866.

21. Tadesse, G.A.; Zhu, T.; Le Nguyen Thanh, N.; Hung, N.T.; Duong, H.T.H.; Khanh, T.H.; Van Quang, P.; Tran, D.D.; Yen, L.M.; Van Doorn, R.; et al. Severity detection tool for patients with infectious disease. Healthc. Technol. Lett. 2020, 7, 45–50.

22. Tadesse, G.A.; Javed, H.; Thanh, N.L.N.; Thi, H.D.H.; Thwaites, L.; Clifton, D.A.; Zhu, T. Multi-modal diagnosis of infectious diseases in the developing world. IEEE J. Biomed. Health Inform. 2020, 24, 2131–2141.

23. Hatamizadeh, A.; Tang, Y.; Nath, V.; Yang, D.; Myronenko, A.; Landman, B.; Roth, H.R.; Xu, D. Unetr: Transformers for 3d medical image segmentation. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2022; pp. 574–584.

24. Chen, J.; Lu, Y.; Yu, Q.; Luo, X.; Adeli, E.; Wang, Y.; Lu, L.; Yuille, A.L.; Zhou, Y. Transunet: Transformers make strong encoders for medical image segmentation. arXiv 2021, arXiv:2102.04306.

25. Zhao, C.; Droste, R.; Drukker, L.; Papageorghiou, A.T.; Noble, J.A. Visual-Assisted Probe Movement Guidance for Obstetric Ultrasound Scanning Using Landmark Retrieval. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Strasbourg, France, 27 September–1 October 2021; Springer: Berlin/Heidelberg, Germany, 2021; pp. 670–679.

26. Zhang, J.; Li, C.; Liu, G.; Min, M.; Wang, C.; Li, J.; Wang, Y.; Yan, H.; Zuo, Z.; Huang, W.; et al. A CNN-transformer hybrid approach for decoding visual neural activity into text. Comput. Methods Programs Biomed. 2022, 214, 106586.

27. Wu, H.; Chen, S.; Chen, G.; Wang, W.; Lei, B.; Wen, Z. FAT-Net: Feature adaptive transformers for automated skin lesion segmentation. Med. Image Anal. 2022, 76, 102327.

28. Lu, P.; Creagh, A.P.; Lu, H.Y.; Hai, H.B.; Consortium, V.; Thwaites, L.; Clifton, D.A. 2D-WinSpatt-Net: A Dual Spatial Self-Attention Vision Transformer Boosts Classification of Tetanus Severity for Patients Wearing ECG Sensors in Low-and Middle-Income Countries. Sensors 2023, 23, 7705.

29. Byeon, Y.H.; Kwak, K.C. Pre-configured deep convolutional neural networks with various time-frequency representations for biometrics from ECG signals. Appl. Sci. 2019, 9, 4810.

30. Virtanen, P.; Gommers, R.; Oliphant, T.E.; Haberland, M.; Reddy, T.; Cournapeau, D.; Burovski, E.; Peterson, P.; Weckesser, W.; Bright, J.; et al. SciPy 1.0: Fundamental Algorithms for Scientific Computing in Python. Nat. Methods 2020, 17, 261–272.

31. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. Adv. Neural Inf. Process. Syst. 2017, 30.

32. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

33. Bradley, A.P. The use of the area under the ROC curve in the evaluation of machine learning algorithms. Pattern Recognit. 1997, 30, 1145–1159.

| Parameter | Value | Description |
|---|---|---|
| in_channels | 1 | the number of channels of the input |
| patch size | 48 | the size (resolution) of each patch |
| num_transformer_layer | 6 | the number of Transformer blocks |
| embed_dim | 384 | the embedding dimension |
| Mlp_size | 1024 | the number of neurons in the MLP hidden layer |
| num_heads | 6 | the number of attention heads |
| mlp_dropout | 0.1 | the dropout rate for the MLP layers |
| embedding_dropout | 0.1 | the dropout rate for the embeddings |
| num_class | 2 | the number of classes |
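
As a rough sketch of how the hyperparameters in the table above fit together, the snippet below stores them in a small configuration object and derives two quantities that follow from them: the per-head dimension and the number of patches for a given signal length. The class name `ViT1DConfig`, the lowercase snake_case field names, and the example signal length of 4800 samples are hypothetical; the source does not state the input length or how the configuration is represented in code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ViT1DConfig:
    """Hyperparameter values copied from the parameter table."""
    in_channels: int = 1
    patch_size: int = 48
    num_transformer_layer: int = 6
    embed_dim: int = 384
    mlp_size: int = 1024
    num_heads: int = 6
    mlp_dropout: float = 0.1
    embedding_dropout: float = 0.1
    num_class: int = 2

    @property
    def head_dim(self) -> int:
        """Embedding slice handled by each attention head."""
        if self.embed_dim % self.num_heads != 0:
            raise ValueError("embed_dim must be divisible by num_heads")
        return self.embed_dim // self.num_heads

    def num_patches(self, signal_length: int) -> int:
        """Number of non-overlapping patches; any remainder is dropped."""
        return signal_length // self.patch_size


cfg = ViT1DConfig()
print(cfg.head_dim)           # 384 / 6 = 64
print(cfg.num_patches(4800))  # hypothetical 4800-sample window: 100 patches
```

With these values, each patch of 48 samples is projected to a 384-dimensional embedding, and each of the 6 heads attends over a 64-dimensional slice of it.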

| 1D-Vision Transformer | F1-Score | Precision | Recall | Specificity | Accuracy | AUC |
|---|---|---|---|---|---|---|
| without data pre-processing | 0.74 ± 0.04 | 0.64 ± 0.07 | 0.89 ± 0.04 | 0.73 ± 0.08 | 0.78 ± 0.05 | 0.81 ± 0.03 |
| with data pre-processing | 0.77 ± 0.06 | 0.70 ± 0.09 | 0.89 ± 0.13 | 0.78 ± 0.12 | 0.82 ± 0.06 | 0.84 ± 0.05 |
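
The threshold-based columns in the table above (F1-score, precision, recall, specificity, accuracy) are all functions of the four confusion-matrix counts. The sketch below uses the standard definitions; the function name `binary_metrics` and the example counts are hypothetical and are not taken from the study's data.

```python
def binary_metrics(tp, fp, tn, fn):
    """Standard binary classification metrics from confusion-matrix counts.

    Ratios with a zero denominator are reported as 0.0.
    """
    def ratio(num, den):
        return num / den if den else 0.0

    precision = ratio(tp, tp + fp)
    recall = ratio(tp, tp + fn)          # also called sensitivity
    specificity = ratio(tn, tn + fp)
    accuracy = ratio(tp + tn, tp + fp + tn + fn)
    f1 = ratio(2 * precision * recall, precision + recall)
    return {
        "f1": f1,
        "precision": precision,
        "recall": recall,
        "specificity": specificity,
        "accuracy": accuracy,
    }


# Illustrative counts only (assumption: not the paper's test set).
metrics = binary_metrics(tp=8, fp=2, tn=18, fn=2)
for name, value in metrics.items():
    print(f"{name}: {value:.2f}")
```

Because recall and specificity are computed on different classes, a model can raise one at the expense of the other, which is why the table reports both alongside accuracy.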

| Input | Method | F1-Score | Precision | Recall | Specificity | Accuracy | AUC |
|---|---|---|---|---|---|---|---|
| Time series image | 2D-CNN [11] | 0.61 ± 0.14 | 0.68 ± 0.07 | 0.57 ± 0.19 | 0.85 ± 0.02 | 0.75 ± 0.07 | 0.72 ± 0.09 |
| Time series image | 2D-CNN + Dual Attention [11] | 0.65 ± 0.19 | 0.71 ± 0.17 | 0.61 ± 0.21 | 0.86 ± 0.09 | 0.76 ± 0.11 | 0.74 ± 0.13 |
| ECG | 1D-CNN [11] | 0.65 ± 0.14 | 0.61 ± 0.05 | 0.77 ± 0.25 | 0.70 ± 0.13 | 0.73 ± 0.05 | 0.74 ± 0.08 |
| ECG | Proposed 1D-Vision Transformer | 0.77 ± 0.06 | 0.70 ± 0.09 | 0.89 ± 0.13 | 0.78 ± 0.12 | 0.82 ± 0.06 | 0.84 ± 0.05 |
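
The AUC column differs from the other metrics in that it is computed from the model's continuous scores and not from thresholded predictions. One common way to compute it, sketched below, is as the probability that a randomly chosen positive case scores higher than a randomly chosen negative case, with ties counted as one half. The function name `roc_auc` and the example labels and scores are hypothetical; the source does not say which implementation was used.

```python
def roc_auc(labels, scores):
    """Area under the ROC curve via pairwise comparison of scores.

    labels: iterable of 0 (negative) or 1 (positive).
    scores: model outputs, where higher means "more likely positive".
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a win
    return wins / (len(pos) * len(neg))


# Illustrative data only (assumption: not the study's predictions).
auc = roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8])
print(auc)  # 3 of the 4 positive/negative pairs are ranked correctly: 0.75
```

The pairwise form is quadratic in the number of samples, which is fine for a small illustration; library implementations typically use a sort-based method to obtain the same value.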

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lu, P.; Wang, Z.; Ha Thi, H.D.; Hai, H.B.; VITAL Consortium; Thwaites, L.; Clifton, D.A. Tetanus Severity Classification in Low-Middle Income Countries through ECG Wearable Sensors and a 1D-Vision Transformer. BioMedInformatics 2024, 4, 285-294. https://doi.org/10.3390/biomedinformatics4010016
