1. Introduction

For nearly two decades, Unmanned Aerial Systems (UAS) have been operated by researchers, practitioners, and conservationists as a flexible and capable pathway to acquire high spatial resolution remotely sensed data. Although UAS are not a novel technology, with many authors recognizing a long history of military development throughout the 20th century, recent advances in computer hardware and software have given this platform an increasingly beneficial role in various natural resource disciplines [1,2,3,4]. UAS, which are also referred to as Unpiloted Aerial Vehicles (UAV), drones, Remotely Piloted Aircraft (RPA), and aerial robotics, combine a system of sensing, communication, and control components to offer end-users the ability to collect project-specific remotely sensed data [1,5,6]. The combination of these technologies presents a broad niche for UAS as a stepping stone between field-based sampling and coarser resolution remote sensing platforms. UAS offer the ability to cover larger areas than many field-based sampling approaches, while avoiding the burdens of cost and resolution (temporal and spatial) experienced with collecting remotely sensed data using piloted aircraft or satellite sensors [7,8]. There is a critical need for local scale information in many natural resource settings, including forests, freshwater ecosystems, grasslands and shrublands, and croplands [9,10,11,12]. Remotely sensed data, especially those derived from UAS, can be used as a basis for spatial inferences at these local scales [1,13]. While UAS are not a total solution for such data needs, they offer a powerful tool for supplementing and/or complementing other sampling and analysis approaches. To maximize the benefits of integrating UAS, it is important to follow best practices for collecting and processing the associated remotely sensed data [6,14,15]. However, for many UAS applications there is still a lack of established methods to follow. Despite the increasing rate of adoption and refinement of UAS over the last decade, there remains a recognized gap in the collective knowledge and reporting of successful methods for acquiring and processing UAS data for photogrammetric applications [16,17,18].

The confluence of principles in remote sensing, computer vision, geodesy, and geographic information systems (GIS) has formed a powerful association between UAS and digital aerial photogrammetry (DAP). DAP has built upon conventional photogrammetric mapping by automatically matching and processing the geometry of scenes, even with uncalibrated imagery, over large areas and complex environments [19,20]. Mathematical workflows, including Structure-from-Motion (SfM) and multi-view stereo (MVS) algorithms, have been linked to create an assortment of ultra-high-resolution spatial data products including photogrammetric point clouds, digital surface models (DSM), and orthomosaics [20,21,22]. Although SfM-MVS consists of several stages of internal and external parameter corrections, as well as stages for point cloud and surface reconstruction, here we have grouped these processes under the umbrella of DAP as a way of discussing the full suite of two-dimensional (2D) and three-dimensional (3D) spatial data products generated from this workflow [16,23,24]. For a full description of the SfM-MVS workflow, we refer to Westoby et al. [24], Ludwig et al. [17], and Tinkham and Swayze [18]. One of the main advantages of DAP over conventional digital photogrammetry is that it does not require highly consistent levels of overlap, camera positions, and internal calibration for the collected imagery [20,22,25]. This more flexible processing framework has made UAS an ideal tool for collecting the input data for DAP, thereby lowering both the costs and technical barriers for generating highly precise and detailed 2D and 3D models [20,22]. In addition to solely image-based UAS analysis, UAS-light detection and ranging (lidar) applications have also become a practical reality for some natural resource applications [26,27,28]. Like other complex sensors (e.g., hyperspectral or thermal), UAS-lidar showcases a promising future for UAS applications in natural resources, as a means of meeting the needs of individual and local scale end-users.

Over the last five years, methods for monitoring and managing natural resources have become increasingly digital. The increased adoption of digital tools and technologies has allowed for new research questions to be raised and historical challenges to be met from a new perspective [29,30]. Several natural resource disciplines have exemplified the adoption of these novel technologies, including forestry, freshwater ecosystems, grasslands and shrublands, and agriculture. Forestry research has historically been conducted using traditional field sampling techniques, satellite imagery, or imagery acquired from piloted aircraft [31,32,33]. However, as UAS technologies and DAP processing have improved in accuracy and precision, consumer and commercial grade solutions have become accessible, creating a larger subgenre of forestry applications. Grasslands and shrublands, including a variety of non-forested vegetation communities, represent some of the most extensive ecosystems on the planet, yet still experience substantial degradation and overutilization [34,35,36,37]. The historic difficulty of mapping grassland and shrubland communities and the lack of transferability of best practices have been major challenges [38,39]. Freshwater ecosystems, including streams, rivers, reservoirs, and lakes, have historically represented another challenge for remote sensing. Observations and analyses based on satellite and airborne sensor data have been restricted, due in part to their coarse spatial and temporal resolutions [40,41]. UAS have been used to successfully collect imagery of freshwater ecosystems at scales relevant to a variety of objectives. Additionally, UAS have provided a path for expanding the coverage of in situ sampling, whether the sites be difficult to access or too large to cover by traditional methods [42]. Example applications of UAS in freshwater ecosystems include collecting and analyzing aquatic vegetation, water quality monitoring, and the detection and measurement of cyanobacteria [43,44,45,46]. A similar pattern for remote sensing technologies has emerged for applications in agriculture. The historic integration of satellite and aerial sensor data is now being supplemented, or in some cases replaced, by users willing to adopt near-range remote sensing platforms such as UAS [47,48,49]. As global food systems are increasingly challenged by climate change, the adoption of novel technologies, such as UAS, will provide new and adaptable solutions for existing agriculture mechanisms [2,48].

As UAS are increasingly integrated into multi-source data solutions for solving complex problems in natural resources, it becomes increasingly imperative that their capabilities and limitations are fully established [14,17,50,51]. Two of the primary components influencing the success of integrating UAS to meet challenges in natural resource monitoring and management are the acquisition and processing of the resulting sensor data [17]. Many studies in the past have voiced a need for quantitatively evaluating the operational practices of UAS [1,21,52,53]. Characteristics such as the flying height of the aircraft, the overlap of the flight lines, the sensor choice, and the processing parameter settings have each been found to influence the model performance and subsequent estimation accuracy [20,54,55,56]. Despite the long-standing need for specific UAS operational guidelines, many modern UAS applications still acknowledge a gap in their ability to provide conclusive and accessible workflows, which could generate consistently optimal results [6,15,18,42]. For this reason, this review paper provides a synthesis of the current practices and trends in UAS flight planning and sensor data processing across several natural resource disciplines and provides responses to the following questions:

What Unmanned Aerial System (UAS) hardware and software characteristics have been preferred by current applications in natural resources, and how widely are these characteristics discussed?

What capabilities and limitations have been discussed for UAS applications in natural resource monitoring and management?

What are the primary perspectives on future research for UAS applications in specific natural resource disciplines?

This review paper is structured as follows:

Section 2 contains a discussion of the literature review parameters, including keywords for each discipline and filters applied to the search criteria. Additional figures in this section provide a systematic analysis of the types of Unmanned Aircraft (UA or aircraft), sensors, and processing workflows used in each of the disciplines.

Section 3, Section 4, Section 5 and Section 6 outline the findings for UAS applications in forestry, freshwater ecosystems, grasslands and shrublands, and agriculture, respectively. Finally, Section 7 presents a discussion on the shared principles and future needs for UAS flight planning and sensor data processing throughout natural ecosystems.

2. Materials and Methods

For this review, all scientific articles were queried using the Web of Science [57]. The literature searches for each natural resource topic (forests, freshwater ecosystems, grasslands and shrublands, and agriculture) were conducted by an independent natural resource professional with co-established instructions. These literature searches were conducted with the following parameters: (1) all searches were completed during the 2021 calendar year and were restricted to publication dates within only the five most recent years (i.e., 2016–January 2022); (2) keywords were field specific, as needed, and included references to both the designated natural resource field and UAS; and (3) search results were sorted by relevance. The keywords implemented in each of the four queries can be seen in Table 1. Sorting the results by relevance minimized the occurrence of articles that did not focus on UAS applications as their main objective or articles that focused on UAS communications or piloting developments (i.e., contained no remote sensing or sampling analysis). Both primary application articles and review articles were collected during these queries. Each review article that was found prior to collecting a minimum of 30 application articles in a given discipline was also archived and reviewed here. The review article(s) most complementary to our study objectives for each of the four natural resource disciplines are also given in Table 1. Once a minimum of 30 application articles for each specific discipline were saved and validated, the search was considered complete. In total, this literature search resulted in 150 scientific articles, including 126 UAS application articles and a combination of 24 background and literature review papers.

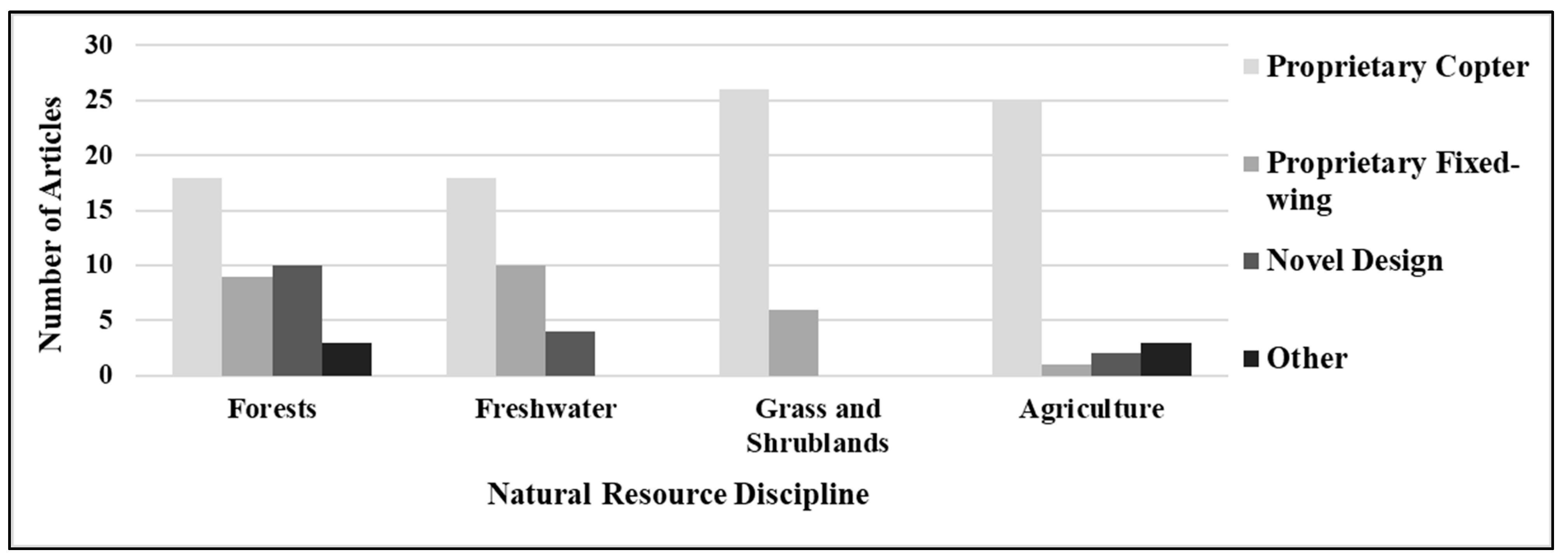

A systematic analysis of the methods for each of the primary application articles was completed to provide a discussion of the (1) types of unpiloted aircraft, (2) types of sensors, (3) thoroughness of the flight planning and data processing procedure details, and (4) processing framework used throughout each of the four disciplines. The results of this analysis are shown in Figure 1, Figure 2, Figure 3 and Figure 4. The first analysis of the literature (Figure 1) was established to observe the configurations of unpiloted aircraft commonly used in natural resource applications. Both proprietary rotary-wing (“copter”) and fixed-wing aircraft were included in this analysis. These proprietary systems included copters from companies such as DJI (Shenzhen, China) and Microdrones (Siegen, Germany), as well as fixed-wing aircraft from companies such as SenseFly (Cheseaux-sur-Lausanne, Switzerland). Additionally, a ‘novel design’ category was included to consider the presence and abundance of applications that operated UAS specifically configured/built for their study or objective, and not provided as a complete kit from notable companies. Lastly, an ‘other’ category was included to recognize articles that did not specify the company or model type for their unpiloted aircraft.

A second analysis of the primary application articles across all four of the natural resource disciplines evaluated the types of UAS sensors employed. Each of the four disciplines demonstrated the utility and necessity of including a diverse suite of remote sensing sensors (Figure 2). For this analysis, seven categories were defined. The first two were ‘natural color’ sensors, which sense only blue, green, and red (RGB) wavelengths, and ‘multispectral’ sensors, which collected up to 20 spectral bands. Additional categories were defined for more specific or complex sensors, such as ‘hyperspectral’, ‘lidar’, and ‘thermal’ UAS applications. A ‘fusion’ category was included to denote applications which combined the data collected by multiple UAS sensors or with data collected from another remote sensing platform. Lastly, an ‘other’ category was included to define applications that focused analysis on UAS-based sampling or did not specify a sensor type. Articles that included the use of more than one UAS sensor type (either for comparison or for fusion) were counted for each respective sensor.

To establish the degree to which UAS applications among these four natural resource disciplines relied on specific and standardized methods for collecting and processing their sensor data, a third analysis of the articles was conducted (Figure 3). During this assessment, articles were defined as being ‘complete’ in their discussion of flight planning and processing procedures based on the reader’s ability to replicate the study. If information regarding the flying height of the aircraft, image or flight line overlap, or weather conditions was not provided, the methods were considered ‘incomplete’. Additionally, in consideration of the processing workflow, the article must have provided clear details on the software or processing method (e.g., use of Agisoft PhotoScan (St. Petersburg, Russia) or specific Python package(s)), quality parameter settings, and filtering applied to the resulting spatial data models to be considered ‘complete’. A more thorough discussion of the flight planning and processing protocols was defined as ‘complete with references’ if it included citations and consideration of methodological choices. A final ‘other’ category was included here to denote applications that did not require either a full discussion of their flight planning or full discussion of their processing workflow based on their study design.
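The completeness criteria described above can be written as a small decision rule. The following is a minimal sketch, assuming each article has been hand-coded into yes/no fields; the class name `MethodsReport`, its field names, and the function `classify_methods` are hypothetical and were not part of the review's actual procedure.

```python
from dataclasses import dataclass


@dataclass
class MethodsReport:
    """Hand-coded yes/no flags for one article (hypothetical field names)."""
    # Flight planning details
    flying_height: bool
    overlap: bool
    weather: bool
    # Processing workflow details
    software: bool
    quality_settings: bool
    filtering: bool
    # Methodological choices are justified with citations
    cites_method_choices: bool = False
    # Study design did not require a flight plan or processing discussion
    not_applicable: bool = False


def classify_methods(report: MethodsReport) -> str:
    """Return one of the four categories used for the third analysis."""
    if report.not_applicable:
        return "other"
    flight_ok = report.flying_height and report.overlap and report.weather
    processing_ok = (
        report.software and report.quality_settings and report.filtering
    )
    if not (flight_ok and processing_ok):
        return "incomplete"
    if report.cites_method_choices:
        return "complete with references"
    return "complete"


# Example: flight details are complete, but no filtering settings are reported.
example = MethodsReport(True, True, True, True, True, False)
print(classify_methods(example))
```

Treating every listed item as mandatory is one reading of the criteria; a reviewer applying them by hand may reasonably allow more judgment than this rule does.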

The methods available for processing the structural and spectral data provided by UAS sensors are numerous and diverse. While some paired or proprietary software solutions exist for specific UAS (such as SenseFly and Pix4D Mapper (Prilly, Switzerland)), a multitude of other open source or non-UAS specific tools are available to fulfill either a complete or single stage of the processing and analysis workflow. In this final analysis of the literature for all four natural resource fields (Figure 4), the number of articles completing their initial processing using a specific software or data processing solution was evaluated. The categories considered only the methods applied after pre-processing to generate the spectral, structural, geometric, or textural data (i.e., 2D and 3D spatial data products such as an orthomosaic or photogrammetric point cloud). Proprietary software solutions such as ‘Pix4D’, ‘Agisoft MetaShape’ (previously ‘PhotoScan’), and Environmental Systems Research Institute (ESRI) ‘ArcGIS’ (Redlands, CA, USA) were defined as individual categories. An ‘other proprietary’ category was included to specify programs owned and managed by other commercial companies (e.g., DJI Terra). The ‘open-source’ category defined UAS applications which were completed using non-proprietary software or coding libraries. An ‘other’ category was included to distinguish articles that did not require digital image matching or spatial data modeling, such as those studies that performed their analysis on a singular image of the study site.

3. UAS Applications in Forestry

Forests are an important ecosystem that humans directly and indirectly rely on for services such as timber production, water quality support, carbon sequestration, wildlife habitat, recreation, and culturally significant locations [63]. Management and stand practices must be employed to conserve and sustain forests, and to estimate conditions quantitatively or qualitatively. The forestry-specific applications represented a wide range of forest types, from boreal to tropical, regenerating to uneven aged, and mixed natural forests to well-managed plantations [62,64,65,66,67,68]. The surveyed articles integrated UAS for updating forest inventories, generating enhanced forest inventories (EFIs), estimating structural metrics, classifying tree species, assessing forest health, evaluating forest regeneration, or estimating damage from events such as wildfires and windthrow [29,69,70]. The benefits experienced from using UAS-based sensor data for forestry applications included the reduction of resource requirements for traditional field work techniques. These reductions included lower time and financial costs, as well as a reduced reliance on field-measured data alone [6]. Additionally, the data and discussion generated from studies that used UAS were valuable for ensuring correct forest management plans were employed, based on the largest amount of relevant information. The benefits of integrating UAS are supported by the results of studies such as Moe et al. [66,71], whose research found that tree spatial positions and diameter at breast height (DBH) values based on digital photogrammetry had comparable prediction accuracy to field-measured and lidar-measured trees. The authors concluded that UAS could be used for assisting or entirely supporting foresters and land managers in searching for high-value timber trees and estimating tree size in large mixed-wood forests [66]. In addition to articles that focused on establishing case studies on specific UAS-based analyses, environmental policy makers are exploring how to best translate UAS-based forestry data and results into actionable insights [72].

3.1. Aircraft and Sensors

The UAS-based forestry applications synthesized in this review incorporated many configurations of UAS. Figure 1 shows the higher degree of diversity in aircraft types, in comparison to the other natural resource disciplines. Many of the forestry articles used multiple UAS platforms during their operations to acquire, compare, and fuse data across multiple sensors. Common UAS manufacturers and models included DJI, specifically their Phantom 3 and 4 models, and SenseFly, with their eBee models. The most common workflow included using a single UAS, sensor, and flight planning protocol. UAS of novel design were engineered for some research studies. In Hillman et al.’s [28] study, a custom-built UAS (University of Tasmania, Australia) was utilized for lidar data acquisition, and a DJI Phantom 4 Pro for natural color imagery acquisition. In Goodbody et al. [58], a military-grade quadcopter UAS was used for natural color imagery acquisition, while airborne lidar (ALS) data were acquired with a piloted Cessna aircraft as a source of reference data.

More than other natural resource disciplines, forestry-specific applications featured a high number of studies that included more than one UAS or more than one sensor, and by association, multiple data acquisition flights [28,65,73]. This is best exemplified in Webster et al. [73]. An Optris PI450 Thermal Imager (Portsmouth, NH, USA), a Panasonic Lumix DMC-GH4 DSLR camera (RGB) (Osaka, Japan), and a Riegl LMS Q560 LiDAR sensor (Horn, Austria) were used in this study. Webster et al. [73] successfully generated 3D point clouds from the natural color imagery, thermal imagery, and a lidar scan to characterize the structural and thermal data of vegetation in a heterogeneous sub-alpine forest in the eastern Swiss Alps. In addition to using multiple sensors, the natural color and thermal imagery in this study were acquired with a UAS, while the lidar data were acquired from multiple piloted helicopter flights (i.e., ALS). This study used diverse data acquisition methods and merged DAP methods for natural color and thermal imagery data processing, while also integrating lidar data for data validation [73]. Such an emphasis on multi-source data acquisition and analysis foreshadows the future of forestry remote sensing using UAS.

3.2. Flight Planning

Flight planning is often overlooked as a small detail in data acquisition using a UAS, as seen in the number of studies with incomplete flight parameter details (Figure 3). Flying heights, altitudes, sensor systems, climatic factors, and imagery overlaps have been shown to affect the UAS sensor data processing time, quality of results, and quality of outputs [52,53,74]. When authors provide enough detail on the flight parameters, as well as the parameters selected to process the subsequent data, they help ensure their results are comparable, reproducible, and reliable. Many of the forestry-based studies provided flying heights, or altitudes, and overlaps. Nineteen of the reviewed studies were comprehensive in their flight plan details, including flying heights or altitudes, overlaps, and climatic conditions or weather for the data acquisition flights. Three of the thirty studies provided comprehensive flight parameters and externally referenced sources as to why and how their respective flight plans were chosen [73,74,75]. The United States Federal Aviation Administration (FAA) has regulated the use of UAS, including their speed, operating hours, and flying heights within the United States. Flying heights are not to exceed approximately 122 m (400 feet) above ground level, per FAA regulation (Small Unmanned Aircraft Systems, 14 C.F.R. Part 107). All the reviewed studies conducted in the United States obeyed this regulation, while many international studies exhibited more freedom in their flying height selection. Some studies flew lower for lidar and natural color imagery acquisition, with some of the lowest flying heights documented at 30 m and 50 m, respectively [11,76]. At the other extreme, Liu et al. [77] flew at 800 m above the ground for multispectral imagery acquisition. Swayze et al. [56], similar to Fraser and Congalton [6], found that a flying height closer to the 120 m threshold in the United States could provide an effective balance between resolution, efficiency, and accuracy for forest surveying. The other key component of flight planning is imagery overlap, which can be divided into forward and side overlap. All the studies stated an overlap to some degree, and the most common forward and side overlap was 80–90% [67,78,79,80]. Several of the reviewed studies on UAS applications in forestry included multiple remote sensing systems, and therefore, multiple flight plans. A common trend among these studies was to detail the flight parameters for one of the missions but provide few or no parameters for the other(s). Stating flight parameters should be considered an important detail in forestry studies, because these parameters can adversely affect the resulting sensor data estimates.
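To illustrate how flying height and overlap interact, the sketch below computes ground sample distance (GSD), image footprint, and the spacing between exposures and flight lines. It assumes a nadir-pointing camera over flat terrain, square pixels, and the long sensor side oriented across-track; the camera values are illustrative only and are not taken from any reviewed study. The function names are hypothetical.

```python
FEET_TO_M = 0.3048  # exact definition of the international foot


def ground_sample_distance(height_m, focal_mm, sensor_width_mm, image_width_px):
    """Ground sample distance (m per pixel) for a nadir image over flat terrain."""
    return (sensor_width_mm * height_m) / (focal_mm * image_width_px)


def exposure_spacing(footprint_m, overlap):
    """Distance between image centers (or flight lines) for a fractional overlap."""
    if not 0.0 <= overlap < 1.0:
        raise ValueError("overlap must be in the range [0, 1)")
    return footprint_m * (1.0 - overlap)


# Illustrative camera and flight values (assumptions, not from the review).
height_m = 120.0
focal_mm = 8.8
sensor_width_mm = 13.2
image_w_px, image_h_px = 5472, 3648

gsd = ground_sample_distance(height_m, focal_mm, sensor_width_mm, image_w_px)
footprint_across = gsd * image_w_px  # long sensor side assumed across-track
footprint_along = gsd * image_h_px   # short sensor side assumed along-track

photo_spacing = exposure_spacing(footprint_along, 0.80)  # forward overlap 80%
line_spacing = exposure_spacing(footprint_across, 0.80)  # side overlap 80%
faa_ceiling_m = 400 * FEET_TO_M

print(f"GSD: {gsd * 100:.2f} cm/pixel")
print(f"Footprint: {footprint_across:.1f} m x {footprint_along:.1f} m")
print(f"Photo spacing: {photo_spacing:.1f} m, line spacing: {line_spacing:.1f} m")
print(f"400 ft ceiling: {faa_ceiling_m:.2f} m")
```

Under these assumptions, raising the overlap from 80% to 90% halves both spacings, which roughly quadruples the number of images for the same area. This is one reason overlap is worth reporting alongside flying height.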

3.3. Processing

Processing data can be divided into pre- and post-processing. For forestry applications, post-processing usually entails quantifying and calculating metrics, determining spatial positions, assessing accuracies from multiple models, estimating species and health, or calculating other silvicultural metrics. In many cases, UAS sensor data processing required additional processing and statistical software to be used beyond Agisoft, Pix4D, and ESRI ArcGIS. Many of the studies utilized a combination of proprietary SfM software, open source, custom code, or a third party for processing their data due to multiple types of sensor data being acquired [81,82,83]. Figure 4 shows the number of times each software package was used throughout the UAS applications in forestry. The most common type of model produced during these studies was the photogrammetric point cloud. As a result, the most common processing steps discussed within the reviewed literature came from the stages associated with generating such point clouds. Generating point clouds requires images to first be aligned [22,24,84]. The image alignment stage involves choosing values for image alignment accuracy, keypoint selection, and minimum and maximum number of tie points. The studies reviewed for this section varied immensely in how much detail was provided for each of these parameters. Photogrammetric processing parameters are key factors that control the products’ quality, accuracy, and replicability. Krisanski et al. [85] stated the values chosen for the settings and stages of their point cloud generation in Agisoft in an easily readable, well-organized table that can be translated or replicated for similar research studies. Many of the UAS applications strove to use high accuracy and high-quality settings during their data processing, selecting ‘high’ or ‘ultra-high’ quality and ‘aggressive’ filtering during the photo alignment and dense point cloud generation steps [76,86]. Ramalho de Oliveira et al. [76] referenced a United States Geological Survey (USGS) National UAS Project Office workflow to ensure the highest quality feature matches among their sets of photos in the generation of their dense-matching point cloud.

Many of the reviewed forestry applications sought to complete similar objectives to one another, such as measuring tree heights and DBH. Though these publications had similar objectives, the way in which each estimated these metrics was different. Iizuka et al. [87] and Cao et al. [88] estimated tree heights and DBH in their publications. Iizuka et al. [87] used Agisoft to create their point cloud, as well as their DSM, orthomosaics, digital terrain models (DTM), and canopy height models (CHM). Cao et al. [88] used Pix4D to generate their natural color imagery photogrammetric point cloud. These two publications show that even though studies share similar objectives for forestry applications, the data processing software and methodology may vary. Each of the forestry applications stated the processing parameters and workflows used in their methodology to some degree. In association with the use of more than one aircraft or sensor, some studies used two or more processing software packages to create their spatial data models, perpetuating a trend in which the processing parameters of one dataset were detailed, while the other dataset(s) had minimal or no discussion [28,66,80]. Graphical flowcharts were provided in some studies as an effective representation of these processing considerations. These visualizations depicted the processing and statistical analyses throughout the respective articles [80,89,90]. Flowcharts are a valuable tool for readers to understand the steps and processing parameters, enhancing the replicability of the publication for continued research.
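The relationship between the surface products named above can be made concrete: a canopy height model is commonly obtained by subtracting the terrain model from the surface model, cell by cell. The following is a minimal sketch using plain nested lists as rasters. It assumes both grids are already co-registered at the same resolution, uses `None` as a hypothetical no-data marker, and clamps negative differences to zero. These are illustrative choices rather than the workflow of any reviewed study.

```python
def canopy_height_model(dsm, dtm):
    """Return CHM = DSM - DTM for two co-registered grids (lists of rows).

    Cells where either input is None (no data) stay None. Negative
    differences, which can arise from noise, are clamped to zero here
    as an illustrative choice.
    """
    if len(dsm) != len(dtm) or any(len(a) != len(b) for a, b in zip(dsm, dtm)):
        raise ValueError("DSM and DTM must have the same shape")
    chm = []
    for surface_row, terrain_row in zip(dsm, dtm):
        row = []
        for surface, terrain in zip(surface_row, terrain_row):
            if surface is None or terrain is None:
                row.append(None)
            else:
                row.append(max(surface - terrain, 0.0))
        chm.append(row)
    return chm


# Elevations in metres above a common vertical datum (made-up values).
dsm = [[105.0, 112.5], [101.0, 99.5]]
dtm = [[100.0, 100.0], [100.0, 100.0]]
print(canopy_height_model(dsm, dtm))
```

In practice, the accuracy of the result depends heavily on the terrain model, which is why several studies in this section paired DAP surfaces with lidar-derived terrain.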

3.4. Qualitative and Quantitative Achievements

The articles reviewed here produced results that contribute important findings on forestry applications which included UAS technologies. Forestry research is an increasingly important field of study as climate change, deforestation, human development, and other anthropogenically caused degradation threaten forests across the world [9,91,92]. Many of the articles found during this literature review compared suites of metrics from DAP conducted using RGB imagery, UAS-lidar, ALS, terrestrial lidar, or field collected data to further understand the advantages and disadvantages of different data acquisition types, or to assess relative accuracies of metrics [58,88,93,94]. ALS data, acquired from a piloted aircraft, were used in many forestry focused applications. These applications included normalizing UAS-DAP point clouds, building digital terrain models (DTM_ALS), calculating structural metrics, assisting in the classification of ground vs. nonground surfaces, or for comparing UAS-DAP to the ALS-derived biophysical estimates [93,95,96]. While ALS has been used as a primary source for forest inventory biometrics for decades, some studies are beginning to showcase UAS sensor data as a functional and effective alternative or supplement. Puliti et al. [96] used UAS-DAP for estimating biophysical properties in forests under regeneration. Their results determined that the use of UAS data provided more accurate predictions than ALS data or field assessment, with a reduction in their relative root-mean-square-error (RMSE) of 50% for stem density compared to ALS data [96]. In addition to comparing different suites of metrics to one another, some applications assessed the accuracy of individual models and metrics from certain data acquisition types. Tomaštík et al. [83] assessed the accuracy of DAP point clouds in partially open forest canopies, concluding that the overall accuracy of DAP point clouds was high and reliable for applications which involve horizontal and vertical measurements. The findings of Puliti et al.’s [96] comparative study and Tomaštík et al.’s [83] accuracy assessment of photogrammetric point clouds contribute to understanding optimal practices for forestry research with UAS.
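Because several of the comparisons above are expressed as RMSE or relative RMSE, a short worked example may help. The sketch below uses one common definition, in which relative RMSE is the RMSE divided by the mean of the reference observations and expressed as a percentage. Individual studies may normalize differently, so this should not be read as the calculation used by any cited author. The sample values are made up.

```python
import math


def rmse(predicted, observed):
    """Root-mean-square error between paired predictions and observations."""
    if len(predicted) != len(observed) or not observed:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = [(p - o) ** 2 for p, o in zip(predicted, observed)]
    return math.sqrt(sum(squared) / len(squared))


def relative_rmse(predicted, observed):
    """RMSE as a percentage of the mean observed value (one common definition)."""
    mean_observed = sum(observed) / len(observed)
    return 100.0 * rmse(predicted, observed) / mean_observed


# Made-up plot-level values, e.g. tree heights in metres.
observed = [10.0, 20.0, 30.0]
predicted = [12.0, 18.0, 33.0]
print(f"RMSE: {rmse(predicted, observed):.2f}")
print(f"Relative RMSE: {relative_rmse(predicted, observed):.1f}%")
```

Normalizing by the mean makes errors comparable across attributes with different units or magnitudes, such as stem density and height.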

Biodiversity, biomass, regeneration, and health status are important characteristics of forest communities. These characteristics contextualize changes in the species and growth of a forest, which allows humans to best understand how to conserve, sustain, or manage the ecosystem [63]. UAS were used to assess biodiversity, regeneration, and health in three of the papers on different forest types, ranging from mixed broadleaf-conifer forests to boreal forests, and forest stands within the interior cedar-hemlock biogeoclimatic ecosystem zone [65,86,97]. These applications were completed using varying UAS, sensors, flight parameters, and processing software. The biodiversity assessment study by Saarinen et al. [65] concluded that the most reliable biodiversity predictions, when assessed by structural variability or successional stage, came from the fusion of UAS natural color and hyperspectral point clouds in boreal forests. In addition to assessing forest biodiversity, regeneration, and health, many of the reviewed studies used UAS-DAP to estimate wildfire severity [28], biomass [68,75], tree growth [81], tree detection [79], windthrow [95], and forest structural metrics, such as DBH, height, stem density, basal area, and volume [66,87,88,90,96].

The UAS applications in forestry reviewed from the last five years each contributed significant findings to the global pool of forestry research acquired with UAS-DAP. Some projects introduced new methods, approaches, and modeling practices for UAS-based forestry data. Mohan et al. [

79] recognized their research as a pioneering study for automatic individual tree detection (ITD) in open canopy mixed conifer forests. The authors presented a simplified framework for automated ITD from DAP-derived CHM based on algorithms originally designed for lidar point clouds. The results demonstrated that CHMs generated from digital photogrammetry, coupled with a local-maxima algorithm, are capable of estimating tree counts with an acceptable accuracy (F-score > 0.80) in open canopy mixed conifer forests [

79]. Based on this accuracy, the authors propose that this ITD algorithm also has the potential to support the estimation of aboveground biomass (AGB) and stem volume, as well as forest monitoring activities [

79]. Aguilar et al. [

67] researched methodologies based on DAP to generate DTMs in leaf-off phenological conditions of a teak plantation in Ecuador. This study resulted in an average plot-level vertical error of −3.1 cm, with 86.3% of the 58 modeled-reference plots having a DTM mean vertical error less than 5 cm (absolute value). The authors credit their accuracies to modeling their study site in leaf-off conditions, achieving high accuracy photogrammetric bundle adjustments during the computing of internal and external camera orientation, and good performance of both the ground points filtering algorithm and the interpolation method applied during the final DTM generation [

67]. The methods provided in this article show potential for AGB modeling over similar study locations [

67]. These two publications produced excellent qualitative and quantitative results in an effort to find the most efficient UAS-based remote sensing methodologies for forest ecosystems.
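As a rough illustration of the local-maxima idea behind CHM-based ITD, the sketch below flags raster cells that are taller than all of their neighbours and then scores a detection result with an F-score. It is not the implementation used by Mohan et al.; the function names, the 2 m height threshold, the window size, the toy CHM, and the detection counts are all assumptions made for illustration.

```python
from typing import List, Tuple


def detect_treetops(chm: List[List[float]], min_height: float = 2.0,
                    window: int = 1) -> List[Tuple[int, int]]:
    """Return (row, col) cells that are strict local maxima of a CHM.

    window is the half-width (in cells) of the square search neighbourhood.
    min_height (metres) filters out ground and low vegetation.
    Both defaults are illustrative assumptions, not published settings.
    """
    rows, cols = len(chm), len(chm[0])
    tops = []
    for r in range(rows):
        for c in range(cols):
            height = chm[r][c]
            if height < min_height:
                continue
            # Collect every neighbour inside the window, clipped at the edges.
            neighbours = [
                chm[rr][cc]
                for rr in range(max(0, r - window), min(rows, r + window + 1))
                for cc in range(max(0, c - window), min(cols, c + window + 1))
                if (rr, cc) != (r, c)
            ]
            if all(height > n for n in neighbours):
                tops.append((r, c))
    return tops


def f_score(true_pos: int, false_pos: int, false_neg: int) -> float:
    """F-score = 2 * precision * recall / (precision + recall)."""
    precision = true_pos / (true_pos + false_pos)
    recall = true_pos / (true_pos + false_neg)
    return 2 * precision * recall / (precision + recall)


# Toy 5 x 5 CHM in metres with two crowns (made-up values).
chm = [
    [0.0, 1.0, 1.0, 0.0, 0.0],
    [1.0, 12.0, 6.0, 1.0, 0.0],
    [1.0, 6.0, 5.0, 4.0, 1.0],
    [0.0, 1.0, 4.0, 9.0, 3.0],
    [0.0, 0.0, 1.0, 3.0, 1.0],
]

print(detect_treetops(chm))
# Hypothetical counts: 45 correct detections, 5 commissions, 10 omissions.
print(round(f_score(45, 5, 10), 3))
```

In practice the window size is the sensitive choice: a window that is too small splits one crown into several detections, while one that is too large merges neighbouring trees.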

3.5. Current Limitations

Each of the UAS applications in forestry encountered some limitations during the analytical stage of the research. Many of the limitations were specific to the forest types being researched. One specific example is modeling ground area and terrain in forests with dense vegetation cover [

98]. Many of the reviewed applications compared the accuracy of a normalized, reference, or combined lidar DTM to DAP 3D models built by other sensors (e.g., natural color or multispectral) [

90]. This interjection of UAS-lidar or ALS could prove beneficial for generating excellent terrain models (i.e., reference data), but is a more costly technology to acquire, especially for large study areas [

75]. Additionally, ALS point densities used in forest inventorying (typically with a resolution of 1–5 points/m²) frequently misdetect small objects, such as young trees [

96]. Other limitations of UAS-DAP included the relatively short amount of time that a UAS can spend in flight, the need to fly during hours where the impacts of shadows would be minimal, and the potential for changes in wind direction or speed to impair the desired spacing between images [

74,

99,

100]. Computer processing of UAS sensor data can also be demanding and time consuming. Jayathunga et al. [

68] could not implement an ‘ultra-high’ quality SfM workflow on their area of interest with Agisoft due to excessive processing time and computer power requirements. The authors had to work around this limitation by generating four separate dense point clouds for leaf-on imagery with alternate quality levels [

68]. The four dense point clouds were generated from the original images downscaled by factors of 4, 16, 64, and 256 (successive powers of 4). Though the authors were able to work around this limitation, data processing can be a hindrance for researchers whose computers lack ample hardware resources. Even with adequate computer configurations, processing can still take hours to days to complete [

68]. In another example, Iizuka et al. [

87] reported that it took 23 h to process approximately 27 hectares of data to generate the three-dimensional models in Agisoft [

87]. Though there are technological, physical, and digital limitations to DAP, the reviewed studies on forestry applications unanimously acknowledge the utility, reliability, accuracy, and cost-effectiveness of UAS as a whole [

58,

80].

3.6. Future Research

Discussions of future research in UAS applications in forestry usually called for expanding on specific research applications, objectives, or project-specific limitations. For instance, Krisanski et al. [85], who flew a UAS through a forest to photogrammetrically estimate tree diameters, stated that improved collision avoidance systems or more reliable GNSS positioning in support of autonomous flight would benefit future research in similarly complex, unstructured environments. Another example of specific future research can be seen in Hillman et al.’s [

28] study on UAS-DAP and lidar mapping approaches for estimating fire severity. The discussion of this research stated that UAS sensor data models should be assessed to determine the capability of representing fine-fuel, as well as developing metrics that can represent the under-canopy vegetation change [

28]. The ultimate goal of UAS-DAP forestry research is to establish enough confidence in the technology for use in forestry management, conservation, and sustainability practices [

72,

74]. Such applications could include updating forest inventories, estimating structural metrics, classifying tree species, assessing forest health, evaluating forest regeneration, or estimating damage from events such as wildfires and windthrow. Puliti and Granhus [

72] researched ways to directly translate raw UAS imagery into actionable insights for assessing forest regeneration. The findings were encouraging, as DAP had a higher overall accuracy than ALS data. Additionally, this research was among the first studies in which the operational costs for silvicultural treatments were modeled using remotely sensed data [72]. More evaluations of future applications in forestry should strive to include a discussion of the costs and benefits of integrating UAS.

4. UAS Applications in Freshwater Ecosystems

High accuracy and high precision are difficult but imperative to achieve when studying freshwater ecosystems using remote sensing. The water quality of freshwater lakes, rivers, and reservoirs is rapidly changing across the globe due to increased watershed development and climate change, in addition to many region-specific occurrences [101]. Developing methodologies for remotely studying freshwater ecosystems provides researchers and policy makers with data at greater temporal and spatial scales compared with complementary in situ measurements. The most common remotely studied components of freshwater ecosystems are suspended sediment, submerged aquatic vegetation (SAV), chlorophyll-a, and algae. These are all considered optical parameters, that is, parameters that sensors can directly measure based on reflectance spectra. Non-optical parameters are those that are not directly visible to sensors and include nitrogen, phosphorus, dissolved oxygen, etc. Often, optical water quality parameters used to assess water quality (for designated uses such as drinking water sources or primary contact recreation) have very distinct thresholds for public safety. For example, the World Health Organization (WHO) has created guidance thresholds of cyanobacteria for safe primary contact recreation and drinking water sources [

102]. The guidelines indicate 10 µg of chlorophyll-a/L when cyanobacteria are the dominant species (or 20,000 cyanobacterial cells/mL) creates a “relatively low probability of adverse health effects”, while a concentration of 50 µg/L (or 100,000 cyanobacterial cells/mL) indicates a moderate potential for short- and long-term illnesses, if consumed [

102]. Additionally, the US Environmental Protection Agency (EPA) has indicated that for children aged 6–10, the threshold for recommended recreation and swimming if microcystins (toxins associated with certain species of cyanobacteria) are present is 8 µg/L [

103]. Therefore, in this one example, inaccurately measuring concentrations of optical parameters using remote sensing methodologies can lead to a cascade of societal impacts, including misguided swimming advisories.
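To make the sensitivity to measurement error concrete, the following sketch compares a chlorophyll-a estimate against the two WHO guidance concentrations quoted above (10 and 50 µg/L, applicable when cyanobacteria are dominant). The function name, the category labels, and the treatment of each threshold as an inclusive lower bound are assumptions made for illustration; this is not an official classification tool.

```python
# Guidance concentrations quoted in the text (µg chlorophyll-a per litre).
WHO_LOW_UG_L = 10.0
WHO_MODERATE_UG_L = 50.0


def who_guidance_category(chl_a_ug_l: float) -> str:
    """Map a chlorophyll-a concentration to a hypothetical category label.

    Thresholds are treated as inclusive lower bounds (an assumption).
    """
    if chl_a_ug_l < 0:
        raise ValueError("concentration cannot be negative")
    if chl_a_ug_l >= WHO_MODERATE_UG_L:
        return "moderate"
    if chl_a_ug_l >= WHO_LOW_UG_L:
        return "relatively low"
    return "below guidance level"


# A made-up in situ value and the same value overestimated by 10%.
true_value = 46.0
overestimate = true_value * 1.10

print(who_guidance_category(true_value))
print(who_guidance_category(overestimate))
```

With these made-up numbers, a 10% overestimate is enough to move the waterbody from one category to the next, which is the kind of outcome that could trigger an unnecessary advisory.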

Remotely sensed data allow for timely data collection with significantly greater spatial resolution than in situ measurements. Traditional in situ measurements consist of collecting grab samples for laboratory analysis and field meter readings from specific locations, often only one to a few per waterbody per sampling event. Moreover, these few samples are expected to represent the full waterbody, including all coves and channels. A remote sensing approach can provide full waterbody or stream reach coverage, can be rapidly deployed when desired, and can yield a faster turnaround of results. Remote platforms can also access areas that may be unsafe or inaccessible to the field scientist (e.g., shallow non-navigable streams, backcountry lakes without road access for boat launching, and extremely contaminated waterbodies where sampler safety is the utmost priority). Unmanned aerial systems are gaining traction in freshwater ecosystem studies, with significant growth from 2016 through 2018 followed by exponential growth through 2021. However, UAS studies are often coupled with traditional in situ measurements to assess accuracy, establish correlations, or create models.

4.1. Aircraft and Sensors

The most commonly used aircraft for freshwater ecosystem studies are proprietary copters predominantly consisting of DJI Phantom and Inspire models. In freshwater ecosystems, DJI models have been used to study algae [

46,

104,

105,

106,

107], SAV [

43,

106], chlorophyll-a [

100], and suspended sediment [

45,

108,

109]. Other copters included the Tarot FY 680 (Wenzhou, China), DJI M600 Pro, and the 3D Robotics IRIS quadcopter (Berkeley, CA, USA). The Bergen RC copter (Bergen, WI, USA) was also used to monitor algae and SAV [

43,

110]. The most commonly used proprietary fixed-wing systems consisted of SenseFly models, particularly the Swinglet and eBee. SenseFly models were commonly used to study chlorophyll-a [

111,

112], SAV [

42], and suspended sediment [

44,

45,

113]. Novel UAS are commonly constructed for the use of aqueous sample collection [

114]. A few studies for monitoring algae incorporated novel copter designs [

46,

110,

115], while one used a Helikite UAS balloon (Hampshire, UK) to raise a sensor to the desired altitude [

40].

Sensors used for studying algae, chlorophyll-a, SAV, and suspended sediment include natural color sensors, multispectral sensors, and hyperspectral sensors. Additionally, lidar (particularly green lidar) and thermal imaging sensors have been used to measure water depth and temperature, respectively, but will not be further discussed here [

116,

117]. Canon (Tokyo, Japan), GoPro (San Mateo, CA, USA), and Sony (Tokyo, Japan) were found to be the top three RGB camera manufacturers used in the 30 papers reviewed here and span all four parameters, including algae [

46,

115], chlorophyll-a [

44,

112], SAV [

118,

119,

120], and suspended sediment [

41]. In contrast to the many aircraft models used, only three types of multispectral sensors were deployed in these studies: Parrot Sequoia (six times), MicaSense (CA, USA) RedEdge (three times), and Tetracam MCA-6 (Chatsworth, CA, USA) (one time). Additionally, the selection of hyperspectral sensors followed no trends across the four parameters and included a GER 1500 model by the Geophysical and Environmental Research corporation (Millbrook, NY, USA) [104], Ocean Optics (now Ocean Insight) (Florida, USA) [

43,

110], ASIA (Marina Bay, Singapore) [

121], Headwall (Bolton, MA, USA) [

122], Resonon (Bozeman, MT, USA) [

123], HyMap (Sydney, Australia) [

124], and MicroHIS (Rijswijk, The Netherlands) [

125]. Lastly, a few studies modified RGB sensors to record Blue, Green, and NIR bands [

44,

111,

112].

Scientists have used images from the Sentinel-3 Ocean and Land Color Instrument (OLCI) and the MERIS satellite sensor to calculate the cyanobacteria index (CI), which is typically applied to satellite imagery, to identify the presence and concentration of a photosynthetic pigment (i.e., chlorophyll-a or phycocyanin) [

107,

126]. Sharp et al. [

107] used multispectral imagery from Sentinel-3 and Medium Resolution Imaging Spectrometer (MERIS) (300 m resolution) with multispectral imagery from a MicaSense RedEdge sensor (~12 cm resolution) to study at which scales the CI is most accurate. Kupssinsku et al. [

112] created a methodology for predicting concentrations of chlorophyll-a and total suspended sediment using imagery from Sentinel-2 and a modified RGB sensor. Bands 2 (Blue), 3 (Green), and 8 (visible and near infrared, VNIR) from Sentinel-2, together with the Blue, Green, and NIR wavelengths from the modified RGB sensor, were imported into a machine-learning algorithm whose predictions were then compared to maps interpolated from in situ data.

The most technically demanding optical parameter discussed here is algae, particularly cyanobacteria. Douglas Greene et al. [

105] found that a MicaSense RedEdge multispectral camera was “insufficient for estimating pigment concentrations [of phycocyanin–the unique photosynthetic pigment in cyanobacteria] under most conditions”. This study inferred that hyperspectral sensors would likely produce more precise and accurate results. However, multispectral cameras were sufficient for quantifying chlorophyll-a, with correlations between NDVI and in situ samples producing R² values of 0.88 [

127], and of 0.70 [

128], and with a successful 86% detection accuracy of the presence of cyanobacteria [

46]. Converting UAS imagery of a cyanobacteria bloom into the units used in the WHO and US EPA thresholds remains difficult with multispectral sensors, although it has been achieved using imagery from the Sentinel-3 OLCI and MERIS satellite sensors [

107,

129]. Larson et al. [

113] found higher accuracy when measuring spectral reflectance of a turbid river with a field hyperspectral spectroradiometer compared to the UAS-mounted multispectral MicaSense Sequoia sensor. Their linear and stepwise regression models favored the use of multiple bands and numerous band ratios over single bands, and the adjusted R² values were higher for the field hyperspectral spectroradiometer than for the MicaSense Sequoia data.

4.2. Flight Planning

Lyu et al. [

115] focused on the pre-processing technology and flight parameters needed to monitor cyanobacterial harmful algal blooms. The authors began by identifying that the effective resolution needed to be finer than 5 cm. Tested flight planning parameters included flight duration, speed, height, and necessary overlaps. Additional sensor parameters tested included tilt, blur, and calibrations. Where flying heights were provided (25 of the 30 reviewed studies on freshwater environments), all except three were at or below 170 m, with an average of 101 m. Image overlaps ranged from 50 to 86%, and ground sample distances were mostly less than 13 cm. It was a customary practice to use ground control points (GCPs) to aid in the georeferencing process (16 of 30). GCPs have been discussed as being extremely important during the post-processing stage of image mosaicking and geoprocessing. Notes on weather during UAS missions varied but were often not included. Some studies selected sunny and calm days to collect their data [

40,

100,

107], while others flew on cloudy to overcast days to minimize sun glint [

42,

105]. To avoid sun glint, Lyu et al. [115] recommended that users aim for a sun angle of less than 30 degrees. These tests allowed Lyu et al. [115] to achieve a “maximum information acquisition efficiency”, detecting cyanobacteria prior to bloom formation and distinguishing cyanobacteria from background algae and submerged aquatic vegetation. The only consensus on weather conditions was to fly on days when wind speed was low.
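The flight parameters discussed above are linked by simple geometry: for a nadir-pointing frame camera, ground sample distance scales linearly with flying height, and the distance between exposures follows from the image footprint and the chosen overlap. The sketch below shows that arithmetic under a pinhole-camera, flat-surface assumption. The function names and the camera values in the usage example are hypothetical and are not taken from any of the reviewed studies.

```python
def ground_sample_distance_cm(sensor_width_mm: float, focal_length_mm: float,
                              altitude_m: float, image_width_px: int) -> float:
    """GSD in cm per pixel for a nadir image over flat terrain or water."""
    return (sensor_width_mm * altitude_m * 100.0) / (focal_length_mm * image_width_px)


def footprint_m(gsd_cm: float, pixels: int) -> float:
    """Ground length in metres covered by `pixels` at the given GSD."""
    return gsd_cm * pixels / 100.0


def exposure_spacing_m(footprint_length_m: float, overlap_fraction: float) -> float:
    """Distance between image centres that yields the requested overlap."""
    if not 0.0 <= overlap_fraction < 1.0:
        raise ValueError("overlap_fraction must be in [0, 1)")
    return footprint_length_m * (1.0 - overlap_fraction)


# Hypothetical camera: 13.2 mm wide sensor, 8.8 mm lens, 5472 x 3648 px images.
gsd = ground_sample_distance_cm(13.2, 8.8, altitude_m=101.0, image_width_px=5472)
along_track = footprint_m(gsd, 3648)            # footprint in flight direction
spacing = exposure_spacing_m(along_track, 0.80)  # 80% forward overlap

print(f"GSD: {gsd:.2f} cm/px")
print(f"Along-track footprint: {along_track:.1f} m")
print(f"Exposure spacing at 80% overlap: {spacing:.1f} m")
```

Halving the flying height halves the GSD but also halves the footprint, so roughly four times as many images are needed to cover the same area at the same overlap.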

4.3. Processing

Liu et al. [100] provided their post-processing methodology in great detail, including pre-processing, geometric registration, and radiation calibration equations with each data processing step, but did not mention any software used in the analysis. The images were geometrically registered, and selected wavelengths were used for radiation calibration, before the images were stitched together. Finally, water quality results were incorporated into the model to create a map portraying the spatial distribution of the water quality parameters [

100]. Using Agisoft, Corti Meneses et al. [

118,

119] described their image processing methodology in great detail and included references describing the specific settings used in Agisoft to create their point clouds of aquatic vegetation. In their study of aquatic reed extent and classification, a range of quality and matching parameters was tested by trial and error because their flights were conducted manually [

118]. Density was determined from hyperspectral data with RGB imagery using OPALS 2.2.0 software (software unique to this study), and the authors included a mention of every function used in the software for the analysis as well as additional equations. Detailed and complete processing functions and methodologies using Pix4D or Agisoft were not explained in any of the studies using these platforms to study freshwater ecosystems. Although there is no established workflow or methodology for analyzing optical parameters of freshwater ecosystems, the Pix4D software is commonly used in the orthorectification step of digital image analysis (Figure 4).

Regression and correlation algorithms were often employed to link in situ data (water samples or field surveys) with UAS-derived reflectance. Spectral indices (SI or vegetation indices) were also frequently used in chlorophyll-a, algae, and SAV studies. The most common methods of analysis include variations of NDVI and “mathematical algorithms”. NDVI typically consists of analyzing Red and NIR wavelengths [

105,

128]. Variations of the NDVI include a green to NIR analysis (e.g., NDWI) [

44,

45,

127], and are most commonly found within chlorophyll-a studies. Mathematical algorithms dominate in the analytical methodologies for algae and chlorophyll-a studies. Many SI have been mathematically modeled [

46,

104,

121], including the cyanobacteria index [

107,

110], and with the power of machine learning classifiers such as random forests [

106,

112,

115,

122], they can be implemented concurrently.
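For reference, the standard form of NDVI as a normalized difference of NIR and Red reflectance can be written as follows, where the symbols ρ_NIR and ρ_Red (band reflectances) are notation introduced here rather than taken from the reviewed studies:

```latex
\mathrm{NDVI} = \frac{\rho_{\mathrm{NIR}} - \rho_{\mathrm{Red}}}{\rho_{\mathrm{NIR}} + \rho_{\mathrm{Red}}},
\qquad -1 \le \mathrm{NDVI} \le 1 \quad \text{for non-negative reflectances}
```

As a worked example with made-up reflectances, ρ_NIR = 0.40 and ρ_Red = 0.10 give NDVI = 0.30/0.50 = 0.6.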

Data analysis software most commonly used includes coding platforms, such as R [

43,

106,

107], Python [

105,

112,

125], and Matlab (Natick, MA, USA) [

108,

109,

130]. ArcGIS was also commonly used across studies for data analysis [

43,

106,

108,

109]. However, much of the data analysis relied on a mix of other proprietary platforms, including but not limited to Adobe Photoshop (San Jose, CA, USA) [

46] and eCognition (Sunnyvale, CA, USA) [

43]. Douglas Greene et al. [

105] were the only ones to use a Python script for their image geo-referencing using equations based on each image’s pixel dimensions and rotations. The authors include their Python script in a supplemental information document, a practice that is becoming more commonly emphasized in open science.

4.4. Qualitative and Quantitative Achievements

Identifying the proper sensors to use is a function of the available technology and the study question at hand. Most studies monitoring the distribution of SAV found successful results with RGB cameras, and a few opted for modified RGB or multispectral sensors to include data in the NIR wavelengths. Others found the blue, green, and NIR portions of the electromagnetic spectrum particularly helpful and created modified SI, as blue and green light penetrate water better than NIR. Modified SI include NDWI ((NIR − G)/(NIR + G)), BNDVI ((NIR − B)/(NIR + B)), NGBDI ((G − B)/(G + B)), and NGRDI ((G − R)/(G + R)) [

105,

127]. The more detailed algae and cyanobacteria studies, which focus on specific species of algae, turn toward hyperspectral sensors because of their numerous, narrow bands.
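All of the modified indices listed above share the same normalized-difference form, so they can be computed with one helper. The sketch below follows the band order given in the text; note that some sources write NDWI with the green band first, which only flips the sign. The function names and the reflectance values are illustrative assumptions.

```python
from typing import Dict


def normalized_difference(a: float, b: float) -> float:
    """Return (a - b) / (a + b), or 0.0 when both inputs are zero."""
    total = a + b
    return 0.0 if total == 0 else (a - b) / total


def spectral_indices(blue: float, green: float, red: float,
                     nir: float) -> Dict[str, float]:
    """Compute the indices named in the text from per-band reflectance."""
    return {
        "NDVI": normalized_difference(nir, red),
        "NDWI": normalized_difference(nir, green),   # band order as in the text
        "BNDVI": normalized_difference(nir, blue),
        "NGBDI": normalized_difference(green, blue),
        "NGRDI": normalized_difference(green, red),
    }


# Made-up reflectances for a single pixel.
for name, value in spectral_indices(blue=0.1, green=0.2, red=0.1, nir=0.3).items():
    print(f"{name}: {value:.3f}")
```

Applied per pixel to co-registered band rasters, the same function yields an index map that can then be related to in situ samples.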

Studies monitoring and/or quantifying optical components of freshwater ecosystems often include comparisons of UAS-derived measurements to in situ data. Becker et al. [110] found their UAS data had a higher accuracy when compared to the FieldSpec (Malvern, UK) spectral measurements (in situ reference data) (R² = 0.987, RMSE = 0.982) for areas of the lake where there was no surface accumulation of algal scum. Other studies found high levels of accuracy using UAS as well, including Qu et al. [46], who produced a detection accuracy for cyanobacteria of 86%, with false negative and false positive results accounting for errors of 6% and 8%, respectively. Lastly, Douglas Greene et al. [

105] were able to predict the concentration of microcystin (a toxic component produced by certain species of cyanobacteria) within 33%. There was more variability in the accuracy of SI to measure chlorophyll-a; for example, Choo et al. [128] (R² = 0.7031) and Kim et al. [127] (R² = 0.88). Regression models and machine learning algorithms produced slightly greater accuracies for this application as found in Kupssinsku et al. [112] (R² = 0.89) and Lu et al. [122] (R² = 0.96).
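The R² and RMSE values reported in these comparisons come from relating a UAS-derived quantity to matching in situ measurements. A minimal sketch of that calculation, using an ordinary least-squares line fitted in pure Python, is given below. The data are made up, the function names are hypothetical, and RMSE is computed here from the regression residuals with n in the denominator, which may differ from the conventions used in individual studies.

```python
from math import sqrt
from typing import Sequence, Tuple


def linear_fit(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares slope and intercept for y = slope * x + intercept."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def r_squared_and_rmse(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Coefficient of determination and residual RMSE of the fitted line."""
    slope, intercept = linear_fit(x, y)
    mean_y = sum(y) / len(y)
    ss_res = sum((yi - (slope * xi + intercept)) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return 1.0 - ss_res / ss_tot, sqrt(ss_res / len(y))


# Made-up example: a spectral index (x) against in situ chlorophyll-a (y, µg/L).
index_values = [0.10, 0.18, 0.25, 0.31, 0.42]
chl_a_in_situ = [4.0, 9.5, 12.0, 18.5, 24.0]

r2, rmse = r_squared_and_rmse(index_values, chl_a_in_situ)
print(f"R^2 = {r2:.3f}, RMSE = {rmse:.2f} µg/L")
```

Because R² is unitless and RMSE carries the units of the in situ variable, reporting both makes results easier to compare across studies.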

SAV studies comparing UAS-derived measurements to in situ data often aimed to distinguish certain species of SAV from other SAV present. This analysis was performed primarily to differentiate an invasive species from native species. High accuracies can be obtained from supervised classification algorithms. A few great examples of SAV classification success include: (1) Chabot et al. [42] with an overall accuracy of submerged features of 84%, (2) an overall accuracy between 90 and 92%, depending on the analytical method used, in Corti Meneses et al. [119], and (3) an overall accuracy of 93% for Brazilian waterweed in Underwood et al. [124]. Lastly, regression analyses and reflectance values for suspended sediment yielded high accuracies for total suspended sediment in Prior et al. [108] (average TSS R² = 0.97) and Kwon et al. [125] (R² = 0.90 using a support vector regression model).
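Overall accuracy figures such as those above are normally derived from a confusion matrix that cross-tabulates reference labels against classified labels. The sketch below shows that calculation for a two-class case. The counts are made up and the function names are hypothetical; rows are assumed to hold the reference (in situ) class and columns the classified class.

```python
from typing import List, Sequence


def overall_accuracy(confusion: Sequence[Sequence[int]]) -> float:
    """Share of all samples on the diagonal (correctly classified)."""
    total = sum(sum(row) for row in confusion)
    correct = sum(confusion[i][i] for i in range(len(confusion)))
    return correct / total


def producers_accuracy(confusion: Sequence[Sequence[int]]) -> List[float]:
    """Per-class share of reference samples that were classified correctly."""
    return [row[i] / sum(row) for i, row in enumerate(confusion)]


# Made-up counts. Rows: reference (invasive, native); columns: classified.
confusion = [
    [40, 5],
    [10, 45],
]

print(f"Overall accuracy: {overall_accuracy(confusion):.2f}")
print([round(p, 3) for p in producers_accuracy(confusion)])
```

Per-class accuracy is worth reporting alongside the overall figure, because a high overall accuracy can hide poor detection of a rare invasive species.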

4.5. Current Limitations

A common hindrance for UAS in studying freshwater ecosystems is the spectral resolution of the sensors used. For certain applications, such as suspended sediment and SAV, RGB or multispectral sensors are appropriate, but for others, including more detailed studies of algae or non-optical parameters, hyperspectral sensors are suggested. The enhanced spectral resolution comes with additional cost and limits the aircraft with which the sensors are compatible. Nonetheless, the use of UAS for studying and monitoring freshwater ecosystems is a growing market and niche globally.

Traditionally, the process of creating an orthomosaic was developed for non-aquatic ecosystems, such as forests or agricultural fields, where tie points can be used to facilitate the process. However, in aquatic studies, the surface of the water is often homogeneous, which decreases the number of possible tie points and makes geoprocessing challenging [

131]. Many of the studies reviewed here identify this limitation. A common workaround is to include ground control points or ensure enough of the shoreline is present in each photo [

41,

44,

46,

104,

111,

120,

127]. Sharp et al. [

107] found the inconsistency of the surface of the water due to waves and the lack of reference points made it impossible for part of their dataset to be included in analyses. Kislik et al. [

106] used a single-image analysis approach for part of their study to avoid the need for stitching, after identifying the challenges of mosaicking images of a uniform surface.

Rather than focusing on the identification of tie points for image stitching, others turned to the geometric properties of each image. Using Python scripts, Kislik et al. [

106] used the metadata for each image (including centroids, footprints, ground sample distances, and altitude) to mosaic the images together. Douglas Greene et al. [

105] had a similar, yet novel approach using ERDAS Imagine software (Stockholm, Sweden) and Python. The metadata extracted for each image consisted of the two-dimensional coordinates, degree of rotation, and altitude; these were combined with the camera and flight specifications at the time of image capture and with trigonometric functions in Python to georeference each image. The Python script used is provided in the supplementary information [

105].
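The general idea of georeferencing from image metadata rather than tie points can be sketched with basic trigonometry: each pixel is offset from the image centre by a multiple of the ground sample distance, and that offset is rotated by the aircraft heading. The code below is a simplified illustration and not the script published by either group. It assumes a nadir image over a flat water surface, projected coordinates in metres, and a rotation measured clockwise from north; all names are hypothetical.

```python
from math import cos, radians, sin
from typing import Tuple


def pixel_to_ground(col: float, row: float,
                    image_width_px: int, image_height_px: int,
                    center_easting_m: float, center_northing_m: float,
                    gsd_m: float, rotation_deg: float) -> Tuple[float, float]:
    """Return (easting, northing) of a pixel in a nadir image.

    rotation_deg is the heading of the image's "up" direction,
    measured clockwise from north (an assumed convention).
    """
    # Offsets from the image centre in the image frame (right, up), in metres.
    dx = (col - (image_width_px - 1) / 2.0) * gsd_m
    dy = ((image_height_px - 1) / 2.0 - row) * gsd_m

    # Rotate the image-frame offset into the map frame.
    theta = radians(rotation_deg)
    east = dx * cos(theta) + dy * sin(theta)
    north = -dx * sin(theta) + dy * cos(theta)
    return center_easting_m + east, center_northing_m + north


# Made-up example: 101 x 101 px image, 10 cm GSD, centred at (500000, 4100000).
corner = pixel_to_ground(100, 0, 101, 101, 500000.0, 4100000.0, 0.10, 30.0)
print(corner)
```

Applying the function to the four image corners gives a footprint polygon, which is enough to place each image on a map without matching features between overlapping frames.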

As most aquatic parameters, including algae, chlorophyll-a, and suspended sediment, are components of the water column, they are not stationary objects and can occur in different concentrations at varying locations throughout a waterbody. For example, unlike measuring the DBH of a tree using a UAS where the UAS-derived measurements can be checked with reference data of the same exact tree in the same exact location, concentrations of aquatic parameters can change in a single spot from the time of UAS image capture to the time of in situ sampling. This is not as limiting for SAV, as rooted macrophytes are not suspended and moving vertically and horizontally within the water column. Only a few studies identified the possible temporal difference between their UAS data and in situ data. Most studies failed to address this discrepancy apart from those injecting known concentrations of suspended sediment in fabricated research flow paths [

108,

125]. Ehmann et al. [

45] noted the time lag between UAS image capture and sample collection (less than 10 min), whereas others did not. Additionally, Pyo et al. [

121] mentioned this discrepancy in temporal sampling could be a partial cause of error. Douglas Greene et al. [

105] and Becker et al. [

110] stated that their findings show a high level of variability of harmful algal bloom concentration on a one to two-day time scale.

The cost of certain technological components of UAS missions for studying freshwater ecosystems has been identified as a strong limitation in producing the most accurate results possible. Douglas Greene et al. [

105] explicitly stated that “a multispectral camera is insufficient for estimating pigment concentrations [of phycocyanin] under most conditions. As hyperspectral imaging becomes more affordable…more targeted predictions of cyanobacteria will be possible”. Becker et al. [

110] and Veronez et al. [

41] also noted the high, and therefore limiting, cost of hyperspectral imagers. Overall, 13 of the studies reviewed for the application of UAS in freshwater ecosystems mentioned that in general, UAS are a low-cost method to achieve a high spatial and temporal resolution when monitoring freshwater ecosystems.

4.6. Future Research

The intended end goal of any water quality study is to gain knowledge to assist in the improvement of water quality. Ideally, data gathered through a UAS would be acquired, processed, and synthesized as quickly as possible. In the case of harmful algal blooms, rapid acquisition of results is needed to guide actions taken by management agencies for public health protection. Qu et al. [

46] mentioned they had an extremely fast (less than two hours) turnaround of results for their study from the time of aerial mission through image and data analysis to results. Future research could streamline this process into a user-friendly methodology and be incorporated into risk management plans [

105]. Additionally, future research should incorporate additional site parameters, including bathymetry or water depth, as benthic substrate if visible from the surface is likely to influence suspended sediment reflectance values [

42,

109]. Many papers also mention the need for future studies to repeat their work with greater temporal coverage of the seasons, during different flow regimes, and on waterbodies in other regions [

60,

105,

109]. Due to the optical properties of water, scientists are particularly interested in the blue band of multispectral sensors [42]. Scientists have mentioned that future research should include a BlueNDVI and studies of how the penetration depth of blue bands depends on ambient water quality [

105,

132] as well as directly compare multispectral imagery and hyperspectral imagery, which includes a blue band [

109]. Lastly, future research needs to focus on creating a universal workflow for image stitching across a uniform surface such as water.

5. UAS Applications in Grasslands and Shrublands

The conservation and greater understanding of grasslands and shrublands is becoming increasingly critical as climate change, the introduction of invasive species, and increased human disturbances continue to rapidly degrade these ecosystems [

26,

35,

133,

134]. Grassland and shrubland ecosystems support a considerable amount of biodiversity and play important roles in water conservation, hydrological regulation, and carbon storage. Still, these ecosystems are highly sensitive to disturbances [

37,

135,

136,

137]. In comparison to other ecosystems, grasslands and shrublands often have smaller and more heterogeneous vegetative structure. This diversity in structure and composition results in difficulties for using traditional remote sensing methods, such as satellite imagery, in conventional applications including plant species classification and aboveground biomass (AGB) estimations [

39,

138,

139]. Additionally, manual collection of field-based data is often time-intensive and expensive, or even inaccurate due to the often highly mixed nature of grasses and shrubs [

37]. UAS can be used to effectively and non-destructively monitor and map grassland and shrubland areas, as well as provide critical spectral information [

61,

135,

140]. UAS provide the spatial resolution that traditional remotely sensed data often cannot, which has allowed UAS to become an increasingly popular tool. The precision, resolution, and adaptability of UAS have allowed them to become an asset in understanding and effectively monitoring grassland and shrubland species composition, local scale biodiversity, AGB, fractional vegetation cover (FVC), and other quantitative aspects of these ecosystems [

136,

138,

141]. Technologies such as UAS-lidar serve as a primary example of this increase in capability, having been proven to create 3D models of study areas, which can aid the aforementioned estimations [

26,

142,

143].

Several of the reviewed papers specific to this discipline have discussed the apparent lack of grassland and shrubland representation in UAS research. As the use of UAS has increased in natural resource applications, research in grasslands and shrublands has been slower to adopt this technology than research in other ecosystems, such as forests and croplands [

143,

144,

145]. Many of these same authors identified the need for additional future research to be conducted related to UAS monitoring of grasslands and shrublands. The increased adoption of UAS and similar precision technologies will assist in the determination of best management and conservation practices, especially in regard to the many disturbances that continue to degrade these natural systems [

34,

36,

146]. Most similar to this review of UAS applications in grasslands and shrublands is a study by Hernandez-Santin et al. [

61]. However, the focus of their analysis is on understory vegetation and not areas classified directly as grasslands and shrublands.

5.1. Aircraft and Sensors

A variety of UAS were employed for research of grasslands and shrublands, with studies often using more than one system for remotely sensed data collection [

35,

147]. By far the most commonly used UAS were aircraft manufactured by DJI, including several variations of the Matrice, Phantom, and Inspire models [

35,

134,

148]. Among these applications, the DJI Phantom 4 Pro was the most ubiquitous, with applications ranging from fire predictions [

133], disturbance detection [

135], structure mapping [

144], and FVC estimations [

149], to AGB estimations [

38]. Of the studies using the Phantom 4 Pro, all used the integrated CMOS sensor rather than a custom sensor combination. Multiple studies featured a multi-source data analysis by integrating UAS sensor data with satellite imagery. For example, Rampant et al. [

133] utilized the DJI Phantom 4 Pro with Landsat 8 Operational Land Imager (OLI) imagery to study fire predictions. Lin et al. [

141] used the Phantom 3 Pro with Landsat 8 OLI imagery to estimate FVC. Lastly, Ndyamboti et al. [

149] estimated FVC with UAS and used these data to upscale and validate previous Landsat 8 imagery.

While no single sensor stood out among the rest, Sony [

136,

142,

150] and Parrot [

36,

135,

151] were the two most common brands. In grasslands and shrublands, the often highly heterogeneous nature of vegetation requires sensors with high spatial resolutions. Because of this, sensors were often chosen specifically for their spatial resolutions. For example, Ndyamboti et al. [

149] used a Riegl VZ-1000 scanner due to its ultra-high spatial resolution of <5 cm to develop high-accuracy FVC estimates. The median spatial resolution (pixel size or ground sampling distance (GSD)) throughout the UAS applications in grasslands and shrublands was 3.75 cm, illustrating the need for ultra-high resolution in this discipline. Still, a wide variety of multispectral UAS sensors were utilized in grassland and shrubland analysis [

35,

39,

138,

151]. Studies that integrated multispectral sensors often focused on the Red-Edge and NIR wavelengths. One of the most commonly used sensors within the reviewed literature, the Parrot Sequoia, collects data in Green, Red, Red-Edge, and NIR bands. Dedicated multispectral sensors, especially ones using NIR, were able to accomplish a large variety of applications with high accuracies [

151,

152,

153]. As with other natural resource disciplines, more advanced sensors such as hyperspectral imagers were seldom featured. Only four studies utilized hyperspectral imagery, perhaps due to the expensive nature of hyperspectral sensors [

135,

147,

153,

154].

5.2. Flight Planning

Unlike in some other natural resource disciplines, UAS flying heights were commonly provided within the grassland and shrubland application papers. Additionally, most papers also mentioned side and forward overlaps of images. Specific flight parameters beyond flying height and image overlap, however, were often lacking. Flying heights for grasslands and shrublands varied greatly, from 5 m to 120 m above ground [

36,

149,

151]. The average flying height throughout all papers reviewed was approximately 60 m above ground, while the most common flying height was 30 m. Although few papers cited established methods, many mentioned that their flight parameters, including flying heights, were chosen to produce specific or desired spatial resolutions. Fraser et al. [

150] reported very specific flight details, mentioning that parameters were selected to ensure that the UAS “imaged each ground location with up to 60 photos at 3–15 mm resolution”, resulting in sub-centimeter pixel sizes for some SfM models. Interestingly, several UAS applications in grasslands and shrublands featured flight planning parameters with forward overlap being much higher than side overlap [

136,

142,

153]. This design allows the users to collect a high number of images, while considerably reducing the flight time. Most studies utilized flight planning software in determining specific parameters, including Mission Planner [

39,

147,

155], FragMAP [

140,

141,

156], Pix4D Capture [

35,

137], and Altizure [

38,

134]. However, specific details regarding how these planning software programs were configured and why they were chosen were not given.
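
The relationship between flying height, ground sampling distance, and image overlap discussed above can be illustrated with a short calculation. The following Python sketch is not taken from any of the reviewed studies; it assumes a nadir-pointing frame camera, flat terrain, and a rectangular survey area, and the camera values are illustrative figures resembling a 1-inch, 20-megapixel integrated sensor. The function names and the two example plans are hypothetical.

```python
import math

# Illustrative camera parameters (assumed values, not from a reviewed study).
CAMERA = {
    "sensor_width_mm": 13.2,
    "focal_length_mm": 8.8,
    "image_width_px": 5472,
    "image_height_px": 3648,
}


def ground_sampling_distance(height_m, sensor_width_mm, focal_length_mm, image_width_px):
    """Return the ground sampling distance in metres per pixel at nadir."""
    return (sensor_width_mm * height_m) / (focal_length_mm * image_width_px)


def survey_plan(area_width_m, area_length_m, height_m, forward_overlap, side_overlap, camera):
    """Estimate flight lines and image counts for a simple parallel-line survey."""
    gsd = ground_sampling_distance(
        height_m,
        camera["sensor_width_mm"],
        camera["focal_length_mm"],
        camera["image_width_px"],
    )
    # Ground footprint of one image, across and along the flight direction.
    footprint_across_m = gsd * camera["image_width_px"]
    footprint_along_m = gsd * camera["image_height_px"]
    # Overlap fractions shrink the spacing between lines and between exposures.
    line_spacing_m = footprint_across_m * (1.0 - side_overlap)
    photo_spacing_m = footprint_along_m * (1.0 - forward_overlap)
    n_lines = math.ceil(area_width_m / line_spacing_m) + 1
    photos_per_line = math.ceil(area_length_m / photo_spacing_m) + 1
    return {
        "gsd_cm": gsd * 100.0,
        "n_lines": n_lines,
        "photos_per_line": photos_per_line,
        "total_photos": n_lines * photos_per_line,
        # Total line length is used here as a rough proxy for flight time.
        "line_length_m": n_lines * area_length_m,
    }


# Equal overlaps versus higher forward overlap with lower side overlap.
equal_overlap = survey_plan(310, 310, 30, 0.80, 0.80, CAMERA)
forward_heavy = survey_plan(310, 310, 30, 0.85, 0.60, CAMERA)

for name, plan in (("equal", equal_overlap), ("forward-heavy", forward_heavy)):
    print(name, plan)
```

Under these assumptions, lowering side overlap widens the spacing between flight lines, so fewer lines are flown, while the higher forward overlap keeps each line densely imaged. Total line length is only a crude stand-in for flight time, since turns, wind, and aircraft speed are ignored.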

5.3. Processing

Typical processing measures for grasslands and shrublands included image quality evaluations, orthorectification, geometric correction, and radiometric correction [

133,

138,

152,

157], or occasionally emissivity correction [

153,

154]. These pre-processing and spatial data modeling procedures were used to support applications including classifications and FVC or AGB estimations. Lu and He [

138] discussed each of these steps within their study, focused on species classification, in well-organized sections, and included a visual workflow that simplified these processes. The Ndyamboti et al. [

149] study, which estimated FVC in grasslands, also discussed processing in detail, mentioning that orthorectified data were transformed into a DSM.

Several studies used spectral indices to process and further analyze data. The NDVI was the most commonly used index for shrub and grass monitoring; it was used for a variety of purposes, including to distinguish shrubs and grasses from bare ground and to estimate FVC [

137,

141]. However, Strong et al. [

148] used an Enhanced NDVI (ENDVI) to differentiate grasslands at a community level and found that the ENDVI was more effective at delineating species than the NDVI. Other spectral indices, such as the Enhanced Vegetation Index (EVI), Soil Adjusted Vegetation Index (SAVI), and Blue Green Ratio Index (BGRI), have also been shown to be useful in analyzing grassland metrics [

139,

141].
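
As an illustration of how such indices are derived from band reflectances, the following Python sketch computes the NDVI, SAVI, and EVI for single pixels using their widely published formulations. It is a minimal example rather than the workflow of any reviewed study: the reflectance values are invented, the SAVI soil factor of 0.5 is the commonly used default, and the NDVI threshold for separating vegetation from bare ground is a hypothetical value that would need to be calibrated for a given site and sensor.

```python
def ndvi(nir, red):
    """Normalized Difference Vegetation Index: (NIR - Red) / (NIR + Red)."""
    denominator = nir + red
    # Guard against division by zero for pixels with no signal.
    return (nir - red) / denominator if denominator != 0 else 0.0


def savi(nir, red, soil_factor=0.5):
    """Soil Adjusted Vegetation Index with soil brightness factor L."""
    return (1.0 + soil_factor) * (nir - red) / (nir + red + soil_factor)


def evi(nir, red, blue):
    """Enhanced Vegetation Index using the standard coefficients."""
    return 2.5 * (nir - red) / (nir + 6.0 * red - 7.5 * blue + 1.0)


# Invented surface reflectances (0 to 1) for two illustrative pixels.
pixels = {
    "shrub": {"nir": 0.50, "red": 0.08, "blue": 0.04},
    "bare_ground": {"nir": 0.30, "red": 0.25, "blue": 0.15},
}

# Hypothetical threshold; real studies would calibrate this value.
VEGETATION_NDVI_THRESHOLD = 0.3

for name, bands in pixels.items():
    value = ndvi(bands["nir"], bands["red"])
    label = "vegetation" if value > VEGETATION_NDVI_THRESHOLD else "bare ground"
    print(
        f"{name}: NDVI={value:.3f}, "
        f"SAVI={savi(bands['nir'], bands['red']):.3f}, "
        f"EVI={evi(bands['nir'], bands['red'], bands['blue']):.3f} -> {label}"
    )
```

All three indices depend on the NIR band, which is one reason sensors lacking NIR restrict the set of indices that can be calculated.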

While a variety of spatial data modeling software packages were used, ArcGIS, Agisoft, and Pix4D were the most common software used for processing UAS sensor data of grasslands and shrublands. Approximately half of the UAS applications related to grasslands and shrublands mentioned using a specific SfM processing workflow. Most of these studies utilized Agisoft or Pix4D, which feature semi-automated workflows and proprietary SfM-MVS algorithms. However, of the studies that mentioned SfM, few provided in-depth discussions of SfM methods, including the quality settings or filtering methods applied. Two studies that did discuss SfM workflows in detail were Fraser et al. [

150] and Forsmoo et al. [

155]. Fraser et al. [

150] investigated the use of UAS and SfM to characterize tundra shrub vegetation; in this study, a general processing overview was given with detailed SfM processing steps described in supplementary materials. Forsmoo et al. [

155] studied grassland sward heights and measurements, including an in-depth discussion of the SfM processing workflow that was used. These methods were supported by a visual flow chart emphasizing each step. This study was also unique in that it was the only one within the papers reviewed to use the MICMAC (Paris, France) open-source processing software. Other proprietary software includes eCognition [

39,

134,

147], ENVI (Broomfield, CO, USA) [

138,

148,

158], and Spectral Pro (Bozeman, MT, USA) [

153,

154]. Many studies utilized several different software packages for a variety of different purposes throughout pre- and post-processing. Lu and He [

152], for example, used ENVI for image quality evaluation and radiometric correction, Agisoft for image mosaicking and orthorectification, ArcGIS for geometric correction, and eCognition for object-based classification.

5.4. Qualitative and Quantitative Achievements

One of the main objectives for UAS applications in this discipline was to accurately classify grassland and shrubland species, despite the often highly mixed nature of these ecosystems [

138,

147,

152]. Collecting species-level data of grasslands and shrublands is critical for gaining a better understanding of their form and function. This information can then be used for conservation and management purposes [

39]. Several of the UAS applications were able to classify grasses and shrubs with relatively high accuracies through the use of UAS. One particularly formative study by Lu and He [

152] exhibited the ability of UAS to classify ecologically and economically important species within grasslands. This study performed classifications on several grassland species, including Awnless brome (

Bromus inermis), goldenrod (

Solidago canadensis L.), milkweed (

Asclepias L.), and fescue (

Festuca rubra L.), and found that classification models had an overall accuracy of 85%. Another study by Melville et al. [

147] used a hyperspectral UAS to classify lowland grasslands. This study was able to successfully classify five different vegetation classes (

Wilsonia rotundifolia,

Danthonia/

Poa,

Themeda triandra,

Acacia dealbata, and soil) with an overall accuracy ranging from 71% to 93%. The RF training model showed much higher accuracies than accuracies obtained for final classification results, exhibiting the importance of object-based RF classification models for species composition and classifications from UAS-derived data.
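
For readers less familiar with how such accuracy figures are obtained, the following Python sketch shows how overall, producer's, and user's accuracies are calculated from a confusion matrix. The class names and counts are invented for illustration and do not come from any reviewed study; the sketch also assumes that rows hold reference (ground truth) classes and columns hold predicted classes.

```python
def overall_accuracy(matrix):
    """Share of all samples that lie on the diagonal (correctly classified)."""
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    return correct / total


def producers_accuracy(matrix):
    """Per-class accuracy relative to the reference totals (row sums)."""
    return [matrix[i][i] / sum(matrix[i]) for i in range(len(matrix))]


def users_accuracy(matrix):
    """Per-class accuracy relative to the predicted totals (column sums)."""
    size = len(matrix)
    column_sums = [sum(matrix[r][c] for r in range(size)) for c in range(size)]
    return [matrix[i][i] / column_sums[i] for i in range(size)]


# Invented counts: rows are reference classes, columns are predicted classes.
classes = ["grass", "shrub", "soil"]
confusion = [
    [40, 5, 5],
    [4, 30, 6],
    [2, 3, 25],
]

print(f"Overall accuracy: {overall_accuracy(confusion):.3f}")
for name, prod, user in zip(classes, producers_accuracy(confusion), users_accuracy(confusion)):
    print(f"{name}: producer's={prod:.3f}, user's={user:.3f}")
```

Reporting per-class accuracies alongside the overall figure helps show whether a high overall accuracy hides poor performance for individual species or cover types.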

Another common application within grasslands and shrublands was the estimation of AGB and FVC [

140,

149]. AGB is a key indicator of carbon sinks and can be used to effectively estimate carbon stocks, especially within shrubs [

137,

159]. Several UAS applications were able to effectively use DAP workflows to estimate these metrics. One study, from Zhao et al. [

159], classified individual shrubs using UAS and LiDAR data with an overall accuracy of 91.8%, and found that textural aspects of UAS data were the most important predictor of individual shrub AGB. Another, from Abdullah et al. [

137], estimated AGB and below ground biomass (BGB) and used these results to quantify carbon stocks in desert shrubs, although their accuracy was not validated.

5.5. Current Limitations

A common limitation of UAS applications in grasslands and shrublands is the spectral resolution of the sensor, or the number of bands that the sensor can collect data in. Limited spectral resolutions may result in a decreased ability to discriminate between different vegetation types, as sensors with greater spectral ranges will detect greater amounts of discrete spectral information [

147]. Spectral resolution is particularly critical for species classification and composition estimates [

138,

147]. Additionally, fewer bands limit the number of spectral indices that can be calculated, especially if the sensor is unable to collect data in NIR. It should be noted, however, that hyperspectral sensors are much more expensive than multispectral sensors, which may result in difficulties accessing these sensors. Lu et al. [

138] modified a Canon digital camera in their study by replacing the red band with NIR, but still mentioned that this sensor was limited in that it only collected data in three bands. A compromise between limited multispectral and more comprehensive hyperspectral sensors may be sensors which can detect several visible as well as non-visible (e.g., NIR or thermal) wavelengths. Intuitively designed sensors of this kind, such as the commonly used Parrot Sequoia, MicaSense Dual Camera Imaging System, or Tetracam Micro-MCA system, are already found in other disciplines and in commercial markets.

Several studies also noted difficulties in measuring AGB, height, and other quantitative aspects of vegetative biomass. Both underestimates and overestimates were observed and may have been caused by a variety of elements, including discrepancies in training data, the specific modeling methods used, and the vegetation size and density [

26,

144,

155]. Examples of these measurement errors can be seen in [

155], which included detailed discussions of overestimated taller sward heights and underestimated shorter sward heights. This study used a drop disc method, which measures a sample of sward height, and this could explain some of the overestimations. Underestimations, on the other hand, may have been caused by increased homogeneity in shorter sward populations [

155]. In another example, Musso et al. [

144] found that UAS bias when estimating structural attributes of shrubs increased as shrub size and density increased. Madsen et al. [

26] also noted that absolute biomass change of the shrub species (

C. scoparius) may have been overestimated in their study due to accidental inclusion of additional shrub, forb, and grass species in training data. Separating out these species within training data samples proved difficult due to their mixed nature.

While grasslands and shrublands often must be sensed using high spatial resolutions, multiple studies discussed the issues that arise when the spatial resolution of the sensors used is too high. Lu et al. [

138], for example, noted that while high spatial resolution can offer great spectral and textural detail, ultra-high spatial resolution UAS sensor data can “bring challenges for segmentation and feature selection”. Similarly, Abdullah et al. [