Robotic Psychology: A PRISMA Systematic Review on Social-Robot-Based Interventions in Psychological Domains

Abstract

1. Introduction

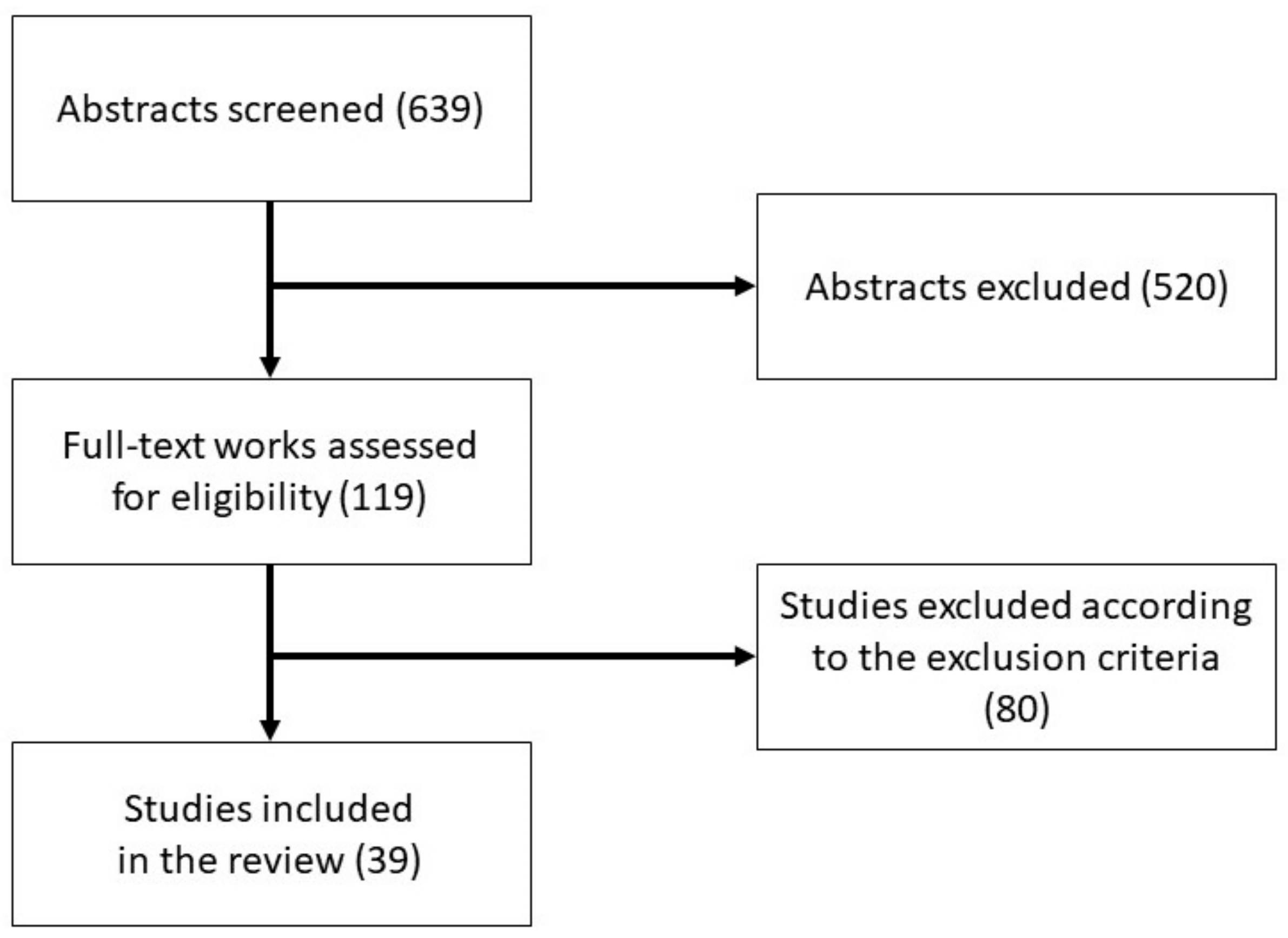

2. Methods and Procedures

Search and Selection Strategy

3. Results

3.1. Characteristics of the Studies

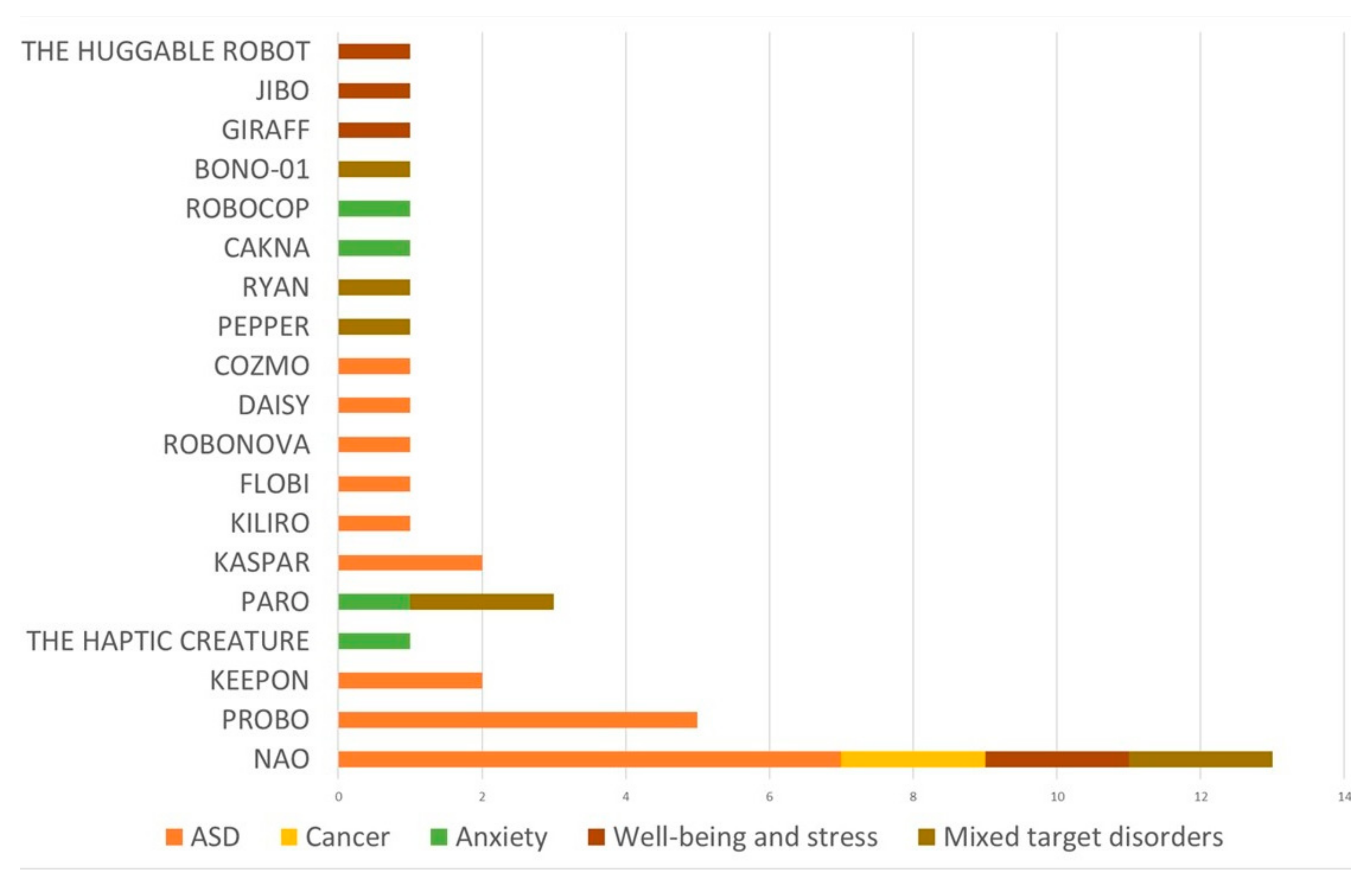

3.2. Study Results Organized by Robot Employed

3.3. Study Results Organized by Target Application and Frameworks

3.3.1. Autism Spectrum Disorder (ASD)

3.3.2. Cancer

3.3.3. Anxiety

3.3.4. Wellbeing

3.3.5. Mixed Target Disorders

3.4. Risk of Bias

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A

| Ref. | Year | Country | Study Type | Sample Size | Sample Description | Gender Distribution | Age Range and Mean Age (SD) | Type of Robot | Target Dimension |

|---|---|---|---|---|---|---|---|---|---|

| Autism Spectrum Disorder | |||||||||

| [43] | 2012 | Romania | Single case study | 4 | Children with ASD (all with moderate autism) | 50% male 50% female | Range = 4–9; Mage (SD) = NR | PROBO | Social skills and play abilities (i.e., level of prompting needed to share toys, greet, thank) |

| [71] | 2013 | Romania | Single case study | 3 | Children with ASD | 100% male | Range = 5–6; Mage (SD) = NR | PROBO | Emotion recognition |

| [51] | 2017 | Romania | Experimental design | 27 | Children with ASD | 74% male 26% female | Range = 6–12; Mage (SD) = 8.7 (1.8) | Keepon | Social knowledge; Beliefs (rational and irrational); Emotion intensity; Adaptive behaviors |

| [44] | 2016 | Belgium | Repeated measure design | 30 | Children with ASD | 90% male 10% female | Range = 5–7; Mage (SD) = 6.67 (0.92) | PROBO | Emotion recognition; Social behaviors (i.e., eye-contact, initiations of joint attention, verbal utterances, positive affect) and asocial behaviors (i.e., non-response and evading task behaviors) |

| [32] | 2012 | Romania | Single case study | 4 | 3 children with ASD (2 in moderate and 1 in severe range and epileptic modifications) and 1 child with elements of autism and delays in language development | 100% male | Range = 2–6; Mage (SD) = 4.2 (1.67) | NAO | Social engagement (i.e., free initiations frequency, eye gaze contact duration and gaze shifting frequency, smile and laughter duration) |

| [46] | 2016 | Belgium | Repeated measure design | 30 | Children with ASD | 90% male 10% female | Range = 4–8; Mage (SD) = 6.67 (0.92) | PROBO | Collaborative behavior and play; Engagement (i.e., eye contact, social behaviors, affect, verbal behaviors) |

| [52] | 2015 | Romania | Quasi-experimental design with between-subjects comparison | 81 | Group 1: 40 typically developing children; Group 2: 41 children with ASD | NR | Group 1: Range = 4–7; Mage (SD) = 5.4 (0.4); Group 2: Range = 4–13; Mage (SD) = 8.4 (2.2) | Keepon | Cognitive flexibility; Learning performance (i.e., perseverative errors, regressive errors, lose-shift errors); Attentional engagement; Positive affect |

| [58] | 2013 | Germany | Quasi-experimental design with between-subjects comparison | 24 | Group 1: 9 children with ASD (7 children with Asperger’s autism, 1 with childhood autism, and 1 with autism with atypical symptoms); Group 2: 15 healthy volunteers | 100% male | Group 1: Range = 12–28; Mage = 21; Group 2: Range = 18–28; Mage = 23.40 | Flobi | Gaze behavior and eye contact |

| [45] | 2014 | Romania | Experimental Pilot study | 11 | Children with ASD in high functioning range | 100% male | Range = 4–7; Mage (SD) = NR | PROBO | Play skills (i.e., play performance and collaborative play); Engagement in play (i.e., stereotypical behaviors and positive emotions); Social skills (i.e., contingent utterances, verbal initiations, and eye contact) |

| [30] | 2019 | Romania | Single-case alternative treatment design | 21 | Children with ASD | NR | NR | NAO | Social skills (i.e., imitation, turn-taking, joint attention); Completing figures and categorizing items |

| [33] | 2020 | Romania | Single-subjects design | 5 | Children with ASD (3 with low functioning, 1 with moderate, and 1 with high functioning) | 60% male 40% female | Range = 5–7; Mage = 4.6 | NAO | Social interaction and communication (i.e., turn taking, engagement, eye contact, verbal utterances); Behaviors (stereotypical, maladaptive, and adaptive); Emotions (functional and dysfunctional negative emotions, and positive emotions) |

| [29] | 2019 | Italy | Randomized control trial | 14 | Children with ASD | 85.71% male 14.29% female | Range = 4–8; Intervention group: Mage (SD) = 73.3 months (16.1) Control group: Mage (SD) 82.1 months (12.4) | NAO | Socio-emotional understanding (i.e., contextualized emotion recognition, comprehension, emotional perspective-taking) |

| [35] | 2018 | Romania | Single-subject design | 5 | Children with ASD (2 with low functioning, 1 with moderate and 2 with high functioning) | 80% male 20% female | Range = 3–5 Mage = 4.78 | NAO | Joint attention (i.e., social cues such as gaze orientation, pointing, and vocal instruction) |

| [59] | 2017 | New Zealand | Within-subject design without control group | 7 | Children with ASD | NR | Range = 6–16; Mage (SD) = 10.0 (3.51) | KiliRo | Stress levels (through protein and alpha-amylase levels) |

| [31] | 2017 | Romania | Randomized controlled trial | 40 | Children with ASD | NR | Range = 3–6; Mage (SD) = NR | NAO | Social skills (i.e., imitation, joint attention, and turn taking) |

| [56] | 2015 | Belgium/Romania | Two-way mixed factorial design | 21 | Children with ASD | 85.71% male 14.29% female | Range = 4.5–8; Mage = 6.19 (0.99) | Robonova | Social interaction (i.e., eye gaze, imitation, testing behaviors); Positive affect |

| [53] | 2019 | Greece | Longitudinal design with no control group | 7 | Children with ASD/ASC (autism spectrum condition) (from low to high functioning, 1 child with severe language difficulties, 1 non-verbal child) | 71.42% male 28.58% female | Range = 7–11; Mage = NR | KASPAR | Communication and social skills (i.e., prompted and unprompted speech, unprompted imitation, gesture recognition, seeking response and affection, self-initiated play, focus, and attention) |

| [34] | 2012 | Malaysia | Pilot case study | 1 | Child with ASD in high functioning range | 100% male | Age = 10 | NAO | Social interaction |

| [55] | 2019 | Greece | Experimental design | 12 | 6 children with ASD (highly functional) and 6 typically developing children | 83.33% male 16.67% female | Range = 6–9; Mage (SD) = NR | Daisy | Social skills (i.e., participation, turn taking, providing information, following rules and instructions) |

| [57] | 2021 | Italy | Two-period crossover design (repeated-measure design) | 24 | Children with ASC | 79.17% male 20.83% female | Range = 4–7; Mage (SD) = 5.79 (1.02) | Cozmo | Social skills (i.e., initiating, responding and maintaining social interaction, initiating and responding to behavioural requests, joint attention) |

| [54] | 2019 | United Kingdom | Pre–post design pilot study | 12 | 11 children with ASD and 1 child with global developmental delay, which gave the child ASD traits | 58.33% male 41.67% female | Range = 11–14; Mage (SD) = NR | KASPAR | Visual perspective taking and theory of mind |

| Cancer | |||||||||

| [36] | 2014 | Iran | Experimental design | 10 | Children with cancer | NR | Range = 7–12 Experimental group: Mage (SD) = 9.5 (1.26) Control group: Mage (SD) = 9.4 (1.36) | NAO | Anxiety; Depression; Anger |

| [37] | 2016 | Iran | Experimental design | 10 | Children with cancer | 9.1% male 90.90% female | Range = 7–12 Experimental group: Mage (SD) = 9.5 (1.63) Control group: Mage (SD) = 9.8 (1.36) | NAO | Anxiety; Depression; Anger |

| Mixed Target Disorders * | |||||||||

| [48] | 2017 | Holland | Single-subject design | 5 | Adults with moderate to severe intellectual disabilities | 40% male 60% female | Range = 59–70; Mage = 64 | Paro | Mood and alertness |

| [60] | 2020 | Japan | Experimental design | 28 | Participants all novices to mindfulness practices | NR | Range = NR Mage (SD) = 20.2 (3.4) | Pepper robot | EEG asymmetry and mood |

| [67] | 2019 | Colorado | Single-subject design | 4 | Elderly with depression | 25% male 75% female | Range = 62–93; Mage = 76 | Ryan (a she-robot) | Natural language; Involvement; Sentiments; Symptoms of depression |

| [40] | 2021 | Scotland | Within-subjects experimental design | 114 | University students | Experiment 1: 38.5% male 61.5% female; Experiment 2: 40.7% male 59.30% female; Experiment 3: 32.8% male 67.20% female | Experiment 1: Range = 17–42; Mage (SD) = 24.42 (6.40); Experiment 2: Range = 20–62; Mage (SD) = 28.60 (9.61); Experiment 3: Range = 18–43; Mage (SD) = 23.02 (4.88) | NAO and Google MINI | Self-disclosure (i.e., length, sentimentality, voice pitch, harmonicity, energy) |

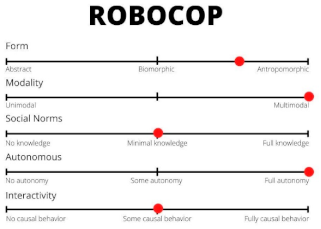

| [63] | 2014 | Japan | Experimental design | 16 | Healthy elderly | Group 1: 83.33% male 16.67% female; Group 2: NR | Group 1: Range = NR; Mage = 70; Group 2: Range = NR; Mage = 72 | Bono-01 | Amusement (i.e., laughter) |

| [42] | 2020 | Australia | Pilot randomized controlled trial with stepped-wedge design | 26 | Adults wanting to reduce snack intake | 81.31% male 18.69% female | Range = 19–69; Mage (SD) = 37 (13.47) | NAO | Number of snack episodes; Motivational thoughts; Craving cognitions; Confidence to control snacking |

| [49] | 2016 | California | Pre–post design pilot study with no control group | 23 | Veterans (82.1% with dementia diagnosis, 12.5% without dementia, 5.4% data missing) | 100% male | Range = 58–97; Mage (SD) = 80 (10.6) | PARO | Negative behavioral states (i.e., anxiety, sadness, yelling behavior, isolation, pain, wandering/pacing); Positive behavioral states (i.e., calmness, bright affect, sleeping, conversing) |

| Anxiety | |||||||||

| [68] | 2019 | Malaysia | Pre–post design pilot study with no control group | 21 | Young adults with mild to moderate anxiety traits | 60% male; 40% female | Range = 19–23; Mage (SD) = 21.5 (1.6) | CAKNA | Anxiety |

| [50] | 2012 | Canada | Within-subjects design | 15 | Children at high risk of anxiety | 40% male; 60% female | Range = 10–17; Mage (SD) = 13.7 (2.3) | Haptic Creature | Anxiety |

| [47] | 2018 | Connecticut | Experimental design | 87 | Children in early school years | 47.1% male 52.9% female | Range = 6–9; Mage (SD) = 8.15 (1.14) | Paro | Mood (positive and negative); Anxiety; Arousal |

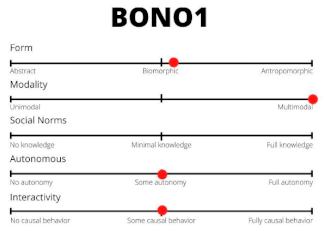

| [69] | 2017 | Massachusetts (Boston) | Within-subject design, counterbalanced design across two sessions | 12 | 12 students and professionals (7 categorized as high competence public speakers; 5 with moderate competence) | 75% male 25% female | Range = 22–28; Mage = 24 | RoboCOP | Anxiety and speaker confidence |

| Wellbeing And Stress | |||||||||

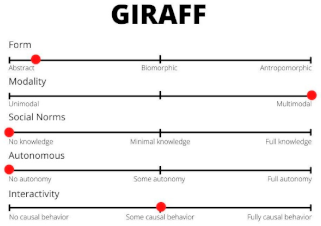

| [61] | 2014 | Holland | Within-subject design | 37 | 8 elderly (3 retired and 5 working at the university in education or related) and 29 non-elderly (11 students and 10 researchers; 8 with university-related professions) | Elderly: 62.5% male 37.5% female Non-elderly: 37.93% male 62.06% female | Elderly: Range = 62–83; Mage (SD) = 70.38 (7.84). Non-elderly: Range = 20–55; Mage (SD) = 30.48 (7.49) | Giraff | Mood |

| [41] | 2017 | Massachusetts | Randomized clinical trial study | 54 | 20 children in Inpatient Surgical Unit; 1 in Medical Surgical Intensive Care Unit; 24 in Hematology/Oncology Unit; 9 in the Bone Marrow Transplant Unit | 61.11% male; 38.9% female | Range = 3–10; Mage (SD) = 6.09 (2.33) | Huggable robot | Socio-emotional wellbeing (i.e., affect, joyful play, stress and anxiety) |

| [62] | 2020 | Massachusetts | Pre–post design with no control group | 35 | Undergraduate students | 20% male 77.14% female 2.86% other | NR | Jibo robot and a Samsung Galaxy tablet | Psychological wellbeing; Mood; Readiness to change |

| [39] | 2017 | Holland | Between-subjects factorial design | 67 | Healthy people | 50.7% male 49.3% female | Range = 18–78; Mage (SD) = 47.93 (19.96) | NAO | Arousal; Robot-touch experience; Robot perception; Prosocial behaviors (i.e., willingness to donate money) |

| [38] | 2016 | Germany | Pilot study: early concept evaluation with a focus group | 5 | Children waiting to see a doctor | 80% male 20% female | Range = 5–12; Mage (SD) = NR | NAO (a.k.a. Murphy Miserable Robot) | Feelings in the waiting situation; Experience (i.e., stimulation, empathy, positive coping) |

| Ref. | Main Findings | Study Limitations | Risk of Biases |

|---|---|---|---|

| Autism Spectrum Disorder | |||

| [43] | The robot-assisted intervention was associated with a statistically significant decrease of the level of prompt the children needed to perform the social behavior. | Limited generalizability due to small sample size; the therapist could not stop the robot playing a story when necessary. | Sampling bias due to small sample size (possible type II error) and low representativeness; bias due to use of single-case design. |

| [71] | Situation-based emotion recognition performance was increased by PROBO’s active face with a moderate to large effect size; no qualitative differences were found in the valence (positive or negative) of the emotion. | Limited generalizability due to small sample size and limited representativeness of the population; possible experimenter effect. | Sampling bias due to small sample size (possible type II error) and low representativeness; sampling bias due to recruitment of a male-only sample; bias due to use of single case design. |

| [51] | An increase in rational beliefs and a decrease in emotion intensity was found in both the robot-enhanced therapy and in the treatment-as-usual group with a large effect size. No significant differences were shown in social knowledge, irrational beliefs, and adaptive behaviors in both groups. | Limited generalizability due to relatively small sample size. | Sampling bias due to small sample size (possible type II error). |

| [44] | Eye contact with the interaction partner was significantly higher in the robot condition compared to the human agent condition with a medium effect size. No significant differences were shown in detecting preferences of the robot or the adult, nor in the positive affect, frequency of asocial behaviors, and verbal utterances in the robot condition. | Limited generalizability due to relatively small sample size and single exposure to the conditions; the vast majority of the sample was male; technical constraints of the robot; the robot was not capable of reacting to social advances made by the children with gestures. | Sampling bias due to small sample size (possible type II error) and low representativeness. |

| [32] | A significant increase was found in frequency of initiations with or without prompt in the robot interaction in half of the sample. A general low level of free initiations was found in both the robot and human condition. Eye gaze, smile, and laughter were increased in half of the sample in the robot condition. The frequency of shared eye gaze was increased in just one child in the robot interaction. | Limited generalizability due to small sample size and lack of female sample; the robot is capable of imitating only gross arm movements and it is not fast enough for a perfect contingency; possible habituation effect; rigidity of the experimental setup. | Sampling bias due to small sample size (possible type II error) and low representativeness; sampling bias due to recruitment of a male-only sample; bias due to missing data; bias due to use of single case design; bias due to possible habituation effect. |

| [46] | No significant differences were observed between the robot and human condition for any of the variables (i.e., children performed well in both conditions, the robot triggered a similar frequency of independent and collaborative play behaviors as with an adult interaction partner). | Limited generalizability due to relatively small sample size and single exposure to the conditions; the vast majority of the sample was male; technical constraints of the robot; the robot was not capable of reacting to social advances made by the children with gestures. | Sampling bias due to small sample size (possible type II error) and low representativeness; bias due to technical constraints of the robot. |

| [52] | A significantly higher number of errors was found in the robot condition when learning the task as compared to the human condition, with a large effect size. No significant differences were found in the number of perseverative, regressive, and lose-shift errors in the two conditions. A significantly higher number of attentional engagement episodes and positive affects were found in the robot condition, respectively, with a large and medium effect size. | Limited generalizability due to relatively small sample size and single exposure to the conditions; no specified gender distribution; possible effect of familiarity; wide age range in the experimental group and between the groups. | Sampling bias due to small sample size (possible type II error) and low representativeness; bias due to possible effect of familiarity. |

| [58] | The level of face fixation was higher in the robot condition even if a significant decrease in eye contact was found at the end of interaction. | Limited generalizability due to relatively small sample size and lack of female participants. | Sampling bias due to small sample size (possible type II error) and low representativeness; sampling bias due to recruitment of a male-only sample. |

| [45] | More collaborative play and engagement and less stereotyped behaviors were found in the robot condition. For play performance, eye contact, positive emotions, and contingent utterances, no significant differences were found between the two conditions. | Limited generalizability due to small sample size and single exposure to the conditions. | Sampling bias due to small sample size (possible type II error) and low representativeness; sampling bias due to recruitment of a male-only sample. |

| [30] | In the robot intervention, improvements were shown in imitation, turn-taking tasks (i.e., sharing information), completing a series of figures, and categorizing items, but not in joint attention. Both the standard human treatment and robot-enhanced therapy had a positive effect on the clinical ASD symptoms, reducing their severity. | Limited generalizability due to relatively small sample size; shortcomings of participants’ demographic information. | Sampling bias due to small sample size (possible type II error) and low representativeness; bias due to use of single-case alternative treatment design. |

| [33] | Similar levels of performance were found for turn-taking skills across standard human treatment and robot-enhanced treatment. The effect of the robot condition was higher and mostly statistically significant on adaptive behaviors, even if this condition also led to some increases in stereotyped and maladaptive behaviors. More interest and eye gaze were found in the robot condition for some of the children as compared to human interaction. | Limited generalizability due to small sample size; rigidity of the experimental setup. | Sampling bias due to small sample size (possible type II error) and low representativeness; bias due to use of single subject design. |

| [29] | A large effect of the social robot was found in promoting learning of socio-emotional understanding skills. An increased ability to understand beliefs, emotions (i.e., anger, disgust, fear, happiness, sadness, and shame), and thoughts of others was found. | Limited generalizability due to small sample size; the vast majority of sample was male; possible co-therapist effect; difference in the complexity of the tasks between control and robot groups. | Sampling bias due to small sample size (possible type II error) and low representativeness; bias due to possible co-therapist effect; bias due to different complexity of the tasks between control and robot groups. |

| [35] | The use of different social cues for prompting joint attention with the robot increased the performance of the children (i.e., the use of pointing with or without vocal instructions, in addition to gaze orientation, led to more joint attention engagement and maximum performance than gaze orientation alone). | Limited generalizability due to small sample size; the vast majority of sample was male. | Sampling bias due to small sample size (possible type II error) and low representativeness; bias due to use of single subject design. |

| [59] | A statistically significant reduction was found in the average protein level and in the alpha-amylase levels (i.e., indicators of stress) after interacting with the robot. | Limited generalizability due to small sample size; absence of a control group; no specified gender distribution; other factors may have influenced protein levels and alpha-amylase (i.e., medications, body temperature). | Sampling bias due to small sample size (possible type II error) and low representativeness; bias due to absence of a control group; bias due to possible influence of confounding factors. |

| [31] | A significant difference was found on joint attention favoring the standard human treatment intervention as compared with the robot condition. Comparisons on imitation and turn taking were not significant. | Limited generalizability due to small number of sessions; no specified gender distribution; unequal distribution of the participants in the two conditions (due to the blind randomization process) possibly affected the statistical power with reduction of significant effect detection. | Sampling bias due to small sample size (possible type II error) and low representativeness; bias due to unequal distribution of the participants in the two conditions. |

| [56] | The robot was able to elicit testing behaviors when the behavior was not a perfect mirroring. More attention was paid to the robot than to the human. | Limited generalizability due to relatively small sample size; the vast majority of sample was male; absence of a control group. | Sampling bias due to small sample size (possible type II error) and low representativeness; bias due to absence of a control group. |

| [53] | An improvement was found in the communication and psychomotor function domains with moderate effect sizes. An increase was found in unprompted imitation (i.e., poses, sequence of movements, and facial expressions) and prompted speech, with a large and significant effect, and in gesture recognition, with a moderate and significant effect. A positive increasing trend was found for prompted imitation and unprompted speech, with a moderate to large effect. A decrease was found in self-initiated play behavior, with a small to moderate effect. An increase was found in focus, attention, and the behavior of seeking a response, with a moderate but not significant effect. | Limited generalizability due to small sample size; absence of a control group; possible examiner effect; the vast majority of sample was male; the behavioural coding was only conducted with the robot and not with the human session; impact of children’s mood variability on the interaction with the robot. | Sampling bias due to small sample size (possible type II error) and low representativeness; bias due to absence of a control group; bias due to possible examiner effect. |

| [34] | Autistic behaviors were suppressed during the child–robot interaction and more eye contact was observed between the child and robot compared to the interaction between child and teacher. The robot’s simple appearance compared to humans elicited the child’s interest to sustain interaction. | Limited generalizability due to small sample size. | Sampling bias due to recruitment of a male-only sample; bias due to use of single case study. |

| [55] | A statistically significant improvement was found in children’s social performance in the robot condition for activities in groups, providing information, and turn taking. The skill of following the rule did not show statistical significance. | Limited generalizability due to small sample size; the vast majority of sample was male. | Sampling bias due to small sample size (possible type II error) and low representativeness. |

| [57] | The combination of standard therapy and robot-assisted training was more effective than the standard therapy alone in improving the children’s tendency to initiate social interaction and behavioural requests. A marginal difference was found in the tendency to maintain social interaction and respond to behavioural requests in the combined therapies. No significant difference was found in the tendency to respond to social interaction, initiate, maintain, and respond to joint attention behaviors. | Limited generalizability due to relatively small sample size; high drop-out rate (1/3 of the initial sample); the vast majority of sample was male. | Sampling bias due to small sample size (possible type II error) and low representativeness; sampling bias due to lack of sampling criteria explication; bias due to high drop-out rate. |

| [54] | Visual perspective taking was improved over sessions with the robot in children with moderate to high functioning. | Limited generalizability due to relatively small sample size; the progression criteria in games could not be respected because of the wide heterogeneity in children’s ability. | Sampling bias due to small sample size (possible type II error) and low representativeness; sampling bias due to lack of inclusion criteria. |

| Cancer | |||

| [36] | A significant reduction was found in the levels of anxiety, depression, and anger in patients after treatment in the robotic group. | Limited generalizability due to small sample size; no specified gender distribution; most of the participants knew the research team before the study began. | Sampling bias due to small sample size (possible type II error) and low representativeness; bias due to possible Rosenthal effect. |

| [37] | A significant decrease was found in anxiety, depression, and anger after receiving the therapy in the robot group. | Limited generalizability due to small sample size; the vast majority of sample was female; drop-out due to lack of willingness to come to the medical centers (i.e., unpleasant memories); most of the participants knew the research team before the study began. | Sampling bias due to small sample size (possible type II error) and low representativeness; bias due to drop-out during sessions; bias due to possible Rosenthal effect. |

| Mixed Target Disorders * | |||

| [48] | The robot showed a positive influence on mood (as rated by daily supervisors and self-rated) and alertness, with an increase in activity and interaction with the environment and a decrease in agitation in just one participant. No significant beneficial effects were found in the rest of the sample. | Limited generalizability due to small sample size; limitations due to small reliability of AB design; the self-report scale on mood might have been too complicated for some of the participants. | Sampling bias due to small sample size (possible type II error) and low representativeness; bias due to missing data. |

| [60] | A significantly greater right-sided activity was found in the occipital gamma band (attributed to increased sensory awareness and open monitoring) during robot interaction in the meditation group compared to the control group. No significant differences were found in the frontal asymmetry of the two groups. An improvement was found in mood after interaction with the robot. | Limited generalizability due to relatively small sample size; no specified gender distribution. | Sampling bias due to small sample size (possible type II error) and low representativeness; measurement bias due to using an instrument for mood assessment (i.e., the Positive and Negative Affect Scale) that did not have adequate indicators to capture all affective dimensions. |

| [67] | Participants improved their natural language with an increase in average sentence length. High feelings of comfort and enjoyment were reported with the robot. Improvements were found in Patient Health Questionnaire-9 scores in 75% of the sample and in Geriatric Depression Scale scores in 50% of the sample. | Limited generalizability due to small sample size; the vast majority of the sample was female; possible role of confounding variables (i.e., the excitement of participating in an experiment). | Sampling bias due to small sample size (possible type II error) and low representativeness. |

| [40] | The robot was able to elicit self-disclosure but less so than the human. The embodiment was an important feature in eliciting self-disclosure and influencing its quantity and quality. | Limited representativeness due to recruitment of university students only; limited ecological laboratory experiment. | Sampling bias due to small sample size (possible type II error) and low representativeness; bias due to compensation for participating in the study. |

| [63] | The frequency of laughter evoked by the robot was mostly higher than that evoked by the original human speaker. The robot was accepted as a participant in the group conversation. | Limited generalizability due to small sample size; the vast majority of the sample was male. | Sampling bias due to small sample size (possible type II error) and low representativeness; sampling bias due to lack of sampling criteria explication. |

| [42] | A significant reduction was found in snack episodes and frequency of craving imagery in the robot treatment. A significant increase was found in perceived confidence to control snack intake for time duration, specific scenarios, and emotional states but not for confidence in the number of days of adherence. Significant positive correlations were found between snack episode reductions and working alliance, including a personal bond between the social robot and the participant. | Limited generalizability due to small sample size; the vast majority of sample was male; short study duration. | Sampling bias due to small sample size (possible type II error) and low representativeness; bias due to lack of completion of initial measurement (i.e., high-calorie diary recall segment). |

| [49] | A significant decrease was found in negative behavioral states (i.e., observed anxiety, sadness, yelling, isolative behaviors, reports of pain, wandering/pacing) after the robot intervention. A significant increase was found in positive behavioral states (i.e., calmness, bright affect, sleep behavior, and conversing) after the robot intervention. The presence of pre-test positive or negative behaviors was significantly associated, respectively, with post-test positive and negative behaviors. The robot effect was not moderated by a dementia diagnosis, except for one case that approached significance. | Limited generalizability due to small sample size; limited generalizability due to unblinding and inability to distinguish the effects of staff from effects of the robot; absence of control group; absence of females in sample; drop-out due to death, transfer, or discharge. Officially, the study was conducted as a non-research activity. | Sampling bias due to small sample size (possible type II error) and low representativeness; sampling bias due to recruitment of a male-only sample; possible observer bias (unblinded observational study) or selection bias; bias due to no randomization; bias due to absence of a control group; bias due to drop-out. |

| Anxiety | |||

| [68] | A significant improvement was found in State-Trait Anxiety (Form Y) scores after using the robot-based system. | Limited generalizability due to small sample size; shortcomings of activity explication; only state anxiety was evaluated. | Sampling bias due to small sample size (possible type II error) and low representativeness; bias due to absence of a control group. |

| [50] | A significant decrease was found in state anxiety, heart rate, and breathing rate during active trials with the robot (the robot was breathing). A significant increase was found in emotional valence (i.e., the positivity of the emotional response was significantly strengthened). | Limited generalizability due to small sample size; limited generalizability due to population with highly positive attitude towards pets; familiarity with the task before the study began. | Sampling bias due to small sample size (possible type II error) and low representativeness; sampling bias due to lack of inclusion of sample with target diagnosis; bias due to familiarity with the task. |

| [47] | A significant improvement was found in positive affect after interacting with the robot in a stressful task, with a large effect. No effect was found in negative affect, anxiety, or arousal. | Limited generalizability due to relatively small sample size and brief interactions with the social assistive robot; only short-term changes in anxiety, mood, and arousal were analyzed. | Sampling bias due to small sample size (possible type II error) and low representativeness; bias due to compensation for participating in the study. |

| [69] | No significant effects were found on state anxiety or speaker confidence even if the robot led to improvements in the rehearsal experience of presenters. | Limited generalizability due to small convenience sample size; the vast majority of the sample was male; limited ecological validity. | Sampling bias due to small sample size (possible type II error) and low representativeness; bias due to compensation for participating in the study. |

| Wellbeing and Stress | |||

| [61] | An improvement was found in mood when the robot was presented as a coach intended to perform a psychological exercise. A decrease was shown in mood when the robot was presented as a conversation partner. Elderly participants reported more communication problems and less confidence when interacting with the robot than younger participants did. | Limited generalizability due to relatively small sample size; limited generalizability due to artificiality of the scenario and low ecological validity. | Sampling bias due to small sample size (possible type II error) and low representativeness; sampling bias due to lack of explicit sampling criteria; bias due to elderly participants’ communication problems with the robot. |

| [41] | Positive emotions (i.e., joy) were elicited by the robot both in its embodied and avatar form. The effect was comparable between the robot and an avatar. The robot effect was higher than the effect elicited by a non-interactive toy. The embodiment did not seem necessary to elicit positive emotions. | Limited generalizability due to relatively small sample size. | Sampling bias due to small sample size (possible type II error). |

| [62] | A statistically significant improvement was found in psychological wellbeing, overall mood, and readiness to change behavior in the group with low neuroticism and high conscientiousness after the robot intervention. A significant improvement was found in psychological wellbeing also in the group with high neuroticism and low conscientiousness, but not in mood or readiness to change. | Limited generalizability due to relatively small sample size; no specified information about age; the vast majority of the sample was female; no randomization to a control group; most of the sample voluntarily signed up to the study. | Sampling bias due to small sample size (possible type II error) and low representativeness; bias due to absence of a control group; self-selection bias. |

| [39] | No significant effect of a robot-initiated touch was found on either physiological measures of arousal or prosocial behaviors (i.e., the amount of money participants were willing to donate or the amount of time participants were willing to offer for participating in another questionnaire). | Limited generalizability due to relatively small sample size; limitations due to technical features (i.e., robot movements that were too quick, too slow, or too rigid may have affected the spontaneity of the interaction). | Sampling bias due to small sample size (possible type II error) and low representativeness; bias due to compensation for participating in the study. |

| [38] | Empathy was the most frequently reported level of experience when the robot was seen as a friend. Stimulation (i.e., being interested in the robot to forget the situation) was also reported when the robot was seen as a means of distraction. | Limited generalizability due to small sample size; the vast majority of the sample was male. | Sampling bias due to lack of explicit sampling criteria. |

| Robot | Brief Description | Classification 1 |

|---|---|---|

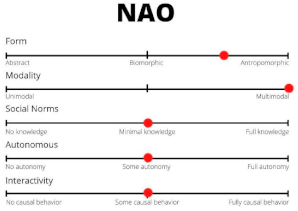

NAO (a.k.a. Murphy miserable robot) Source for image: [39] | NAO is a humanoid robot produced by Aldebaran Robotics, designed to interact with people. It is a medium-sized (57–58 cm) entertainment robot that includes an onboard computer and networking capabilities at its core. Its platform is programmable and it can handle multiple applications. It can walk, dance, speak, and recognize faces and objects. Now in its sixth generation, it is used in research, education, and healthcare all over the world. |  |

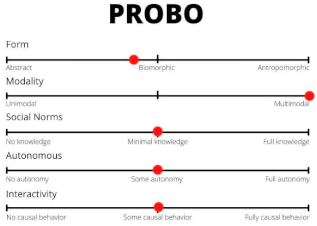

PROBO Source for image: [87] | Probo is an animal-like green robot with an attractive trunk or proboscis, animated ears, eyes, eyebrows, eyelids, mouth, neck, and an interactive belly screen. It provides a soft touch and a huggable appearance, and it is designed to act as a social interface. It is usually used as a platform to study human–robot interaction (HRI), while employing human-like social cues and communication modalities. The robot has a fully actuated head with 20 degrees of freedom, and it is capable of making eye contact and of expressing attention and emotions via its gaze and facial expressions. |  |

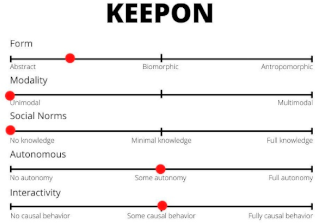

KEEPON Source for image: [51] | Keepon is a small robot with a silicone-rubber body, resembling a yellow snowman. The robot sits on a black cylinder containing four motors and two circuit boards, via which its movements can be manipulated. Its head and belly deform whenever it changes posture or someone touches it, and it can nod, turn, lean side-to-side and bob. Keepon has been widely used in human–robot interaction studies with social behaviors. |  |

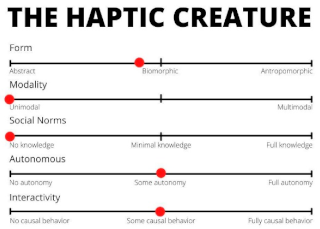

THE HAPTIC CREATURE Source for image: [50] | The Haptic Creature is an animal-like robot that is able to breathe, purr, and change the stiffness of its ears. It was developed by Steve Yohanan to study the communication of emotion through touch. Under its soft artificial fur, the robot takes advantage of an array of touch sensors to perceive a person’s touch and by changing the depth and rate of its breathing, the symmetry between the inhale and exhale, the strength of its purring, and the stiffness of its ears, it is able to communicate different emotions. |  |

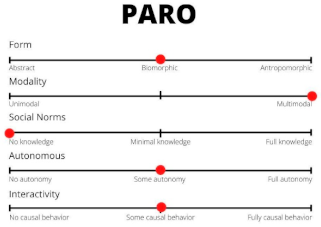

PARO Source for image: [49] | Paro is a baby harp-seal-like robot with white fur. It is equipped with four primary “senses”: sight (via a light sensor), hearing (including sound directionality and speech recognition), balance, and tactile sensation. It was developed to provoke social reactions through its soft fur, by reacting to its environment with sounds (i.e., showing a diurnal sleep–wake cycle if reinforced in the form of stroking or petting), and by moving its head, tail, and eyelids. |  |

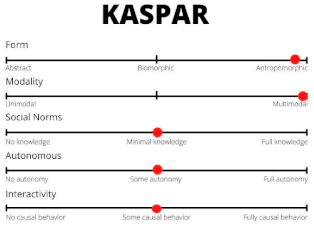

KASPAR Source for image: [88] | Kaspar is a humanoid child-sized robot developed by the Adaptive Systems Research Group at the University of Hertfordshire. It can move its arms, head, and torso; blink; make facial expressions (e.g., smile when “happy”); and vocalize pre-programmed sounds, words, and sentences by using a neutral human voice. It can operate in a semi-autonomous manner: a wireless keypad is used to trigger various pre-programmed actions, and actions can also be triggered by activating the tactile sensors placed on different parts of the robot’s body. |  |

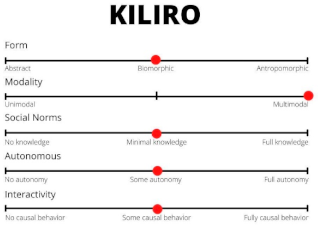

KILIRO Source for image: [59] | KiliRo is a parrot-like robot capable of recognizing the letters of the English alphabet (A through Z) and the Arabic numerals (0 through 9) using a QR code scanning algorithm. When a number or a letter is shown to the robot, it reads the QR code pasted on the model, and the corresponding text is converted into speech by the text-to-speech module. It also has seven touch sensors used to recognize children’s tactile interaction preferences; when the robot is touched, its LED light glows, encouraging the children to interact physically with KiliRo more. |  |

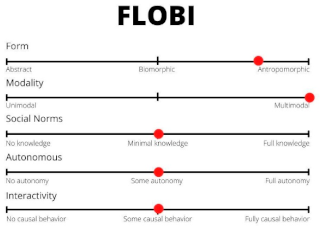

FLOBI Source for image: [89] | The humanoid robot head Flobi is able to communicate (using an external loudspeaker positioned next to the robot head) and to display a range of facial expressions. |  |

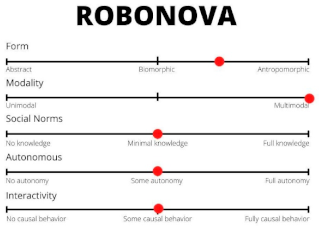

ROBONOVA Source for image: [90] | Robonova is a small humanoid robot developed by Hitec Robotics with high execution speed, 16 degrees of freedom, and the fundamental abilities needed to achieve a high level of temporal contingency between the acts of the user and those of the robot. It can be remotely controlled via IR, RC, or a Bluetooth joystick. |  |

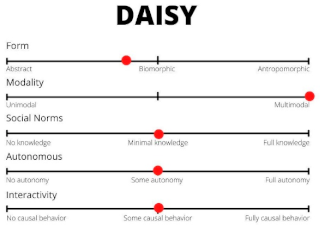

DAISY Source for image: [91] | Daisy is a semi-autonomous robot in the shape of a light blue and purple flower that resembles a stuffed soft toy. The face of the robot has two eyes with eyebrows that blink and look around, and a mouth that speaks words and about 400 preinstalled phrases, categorized according to their meaning, using a lip-sync technique. Daisy can be remotely controlled to perform sequences of movements and facial expressions. |  |

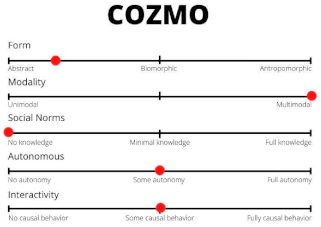

COZMO Source for image: [92] | Cozmo robot is a small toy robot inspired by Pixar’s Wall-E and Eve, controlled through a smartphone app with different modes. It recognizes human faces, pets, and its toy cubes and uses different sensors to track its body orientation. It also displays emotions using sounds and animations. It does not have speech recognition and mainly interacts through beeps, movements, and animated eyes. |  |

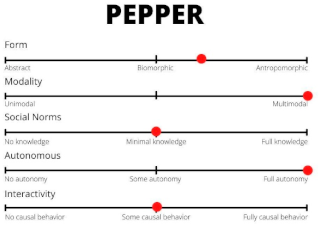

PEPPER Source for image: [93] | Pepper is a humanoid robot developed by SoftBank Robotics, capable of exhibiting body language, perceiving and interacting with its surroundings, and moving around. It can also analyze people’s expressions and voice tones, using the latest advances and proprietary algorithms in voice and emotion recognition to spark interactions. |  |

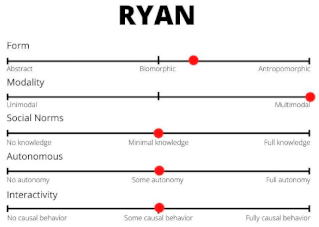

RYAN Source for image: [94] | Ryan is a social robot developed by DreamFace Technologies, LLC. for face-to-face human–robot communication in different social, educational, and therapeutic contexts. It has an emotive and expressive animation-based face with accurate visual speech and can communicate through spoken dialogue. On its chest, there is also a tablet that can receive the user’s input or show videos or images to them. It can also be equipped with a dialog manager called ProgramR that the robot uses to process the users’ input and reply properly to them. |  |

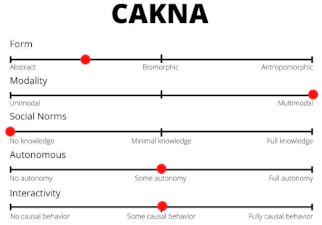

CAKNA Source for image: [95] | Cakna stands for collaborative, knowledge-oriented, and autonomous agent, and also means “care” in the Malay language. It is half a meter tall and is designed to sit on a table or countertop. It has a limited ability to express its state to the user using physical or anthropomorphic displays (e.g., mouth, arms, hands). |  |

ROBOCOP Source for image: [69] | RoboCOP (robotic coach for oral presentations) is an automated anthropomorphic robot head for presentation rehearsal. It actively listens to a presenter’s speech and offers detailed spoken feedback on five key aspects of presentations: content coverage, speaking rate, filler rate, pitch variety, and audience orientation. It also provides high-level advice on the presentation goal and audience benefits, as well as talk organization, introduction, and close. |  |

BONO-01 Source for image: [63] | Bono-01 is a conversation support robot dressed in baby clothes, capable of network communication control, transmission and reception of images, and responding to facilitate conversations. |  |

GIRAFF Source for image: [96] | The Giraff telepresence robot is generally used to achieve remote communication between two parties. It consists of a mobile robotic base equipped with a web camera, a microphone, and a screen. Users interact through the robotic device with a remote peer who connects through a client interface. The client interface is on the remote user site and it allows the user to teleoperate the Giraff and navigate around (by pointing directly on the real time video image and pushing the left button on the mouse) while speaking through a microphone and a web camera, as well as receive the video and audio stream from the Giraff. |  |

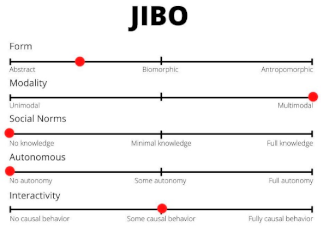

JIBO Source for image: [97] | JIBO is a commercial robot equipped with basic skills, such as weather forecast, jokes, music, and interactive games. It is meant to be placed on a table, desk, or counter and contains no wheels or other mechanisms to travel to a different location, but it is able to express itself with body poses and gestures using just a torso and head. The center of JIBO’s face has a touch screen that usually displays JIBO’s eye (a grey circle) that moves along with JIBO’s body (e.g., looking to the right as JIBO turns its body right). However, the screen can also be used for other purposes, such as displaying text, images, or videos. The touch aspects can be used to identify objects or make selections on the screen. |  |

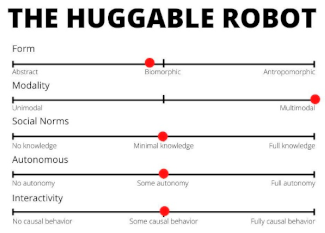

THE HUGGABLE ROBOT Source for image: [41] | The Huggable robot is a blue and green teddy-bear-like robot with a big head and large eyes that intensify the sense of infancy and make it more appealing for young children. It has twelve degrees of freedom (DOF) to perform animate and expressive motions and it is capable of manual look-at and point-at behaviors. It is also able to talk and to move its muzzle up and down accordingly. |  |

References

- Pollak, A.; Paliga, M.; Pulopulos, M.M.; Kozusznik, B.; Kozusznik, M.W. Stress in Manual and Autonomous Modes of Collaboration with a Cobot. Comput. Hum. Behav. 2020, 112, 106469. [Google Scholar] [CrossRef]

- Wainer, J.; Feil-Seifer, D.J.; Shell, D.A.; Mataric, M.J. The Role of Physical Embodiment in Human-Robot Interaction. In Proceedings of the RO-MAN 2006—The 15th IEEE International Symposium on Robot and Human Interactive Communication, Hatfield, UK, 6–8 September 2006; pp. 117–122. [Google Scholar]

- Haring, K.S.; Satterfield, K.M.; Tossell, C.C.; de Visser, E.J.; Lyons, J.R.; Mancuso, V.F.; Finomore, V.S.; Funke, G.J. Robot Authority in Human-Robot Teaming: Effects of Human-Likeness and Physical Embodiment on Compliance. Front. Psychol. 2021, 12, 625713. [Google Scholar] [CrossRef] [PubMed]

- Holland, J.; Kingston, L.; McCarthy, C.; Armstrong, E.; O’Dwyer, P.; Merz, F.; McConnell, M. Service Robots in the Healthcare Sector. Robotics 2021, 10, 47. [Google Scholar] [CrossRef]

- Van Wynsberghe, A. Service Robots, Care Ethics, and Design. Ethics Inf. Technol. 2016, 18, 311–321. [Google Scholar] [CrossRef] [Green Version]

- Wan, S.; Gu, Z.; Ni, Q. Cognitive Computing and Wireless Communications on the Edge for Healthcare Service Robots. Comput. Commun. 2020, 149, 99–106. [Google Scholar] [CrossRef]

- Sarrica, M.; Brondi, S.; Fortunati, L. How Many Facets Does a “Social Robot” Have? A Review of Scientific and Popular Definitions Online. Inf. Technol. People 2019, 33, 1–21. [Google Scholar] [CrossRef]

- Bartneck, C.; Forlizzi, J. A Design-Centred Framework for Social Human-Robot Interaction. In Proceedings of the RO-MAN 2004. 13th IEEE International Workshop on Robot and Human Interactive Communication (IEEE Catalog No.04TH8759), Kurashiki, Japan, 22 September 2004; pp. 591–594. [Google Scholar]

- Chen, S.-C.; Moyle, W.; Jones, C.; Petsky, H. A Social Robot Intervention on Depression, Loneliness, and Quality of Life for Taiwanese Older Adults in Long-Term Care. Int. Psychogeriatr. 2020, 32, 981–991. [Google Scholar] [CrossRef]

- Costescu, C.A.; Vanderborght, B.; David, D.O. The Effects of Robot-Enhanced Psychotherapy: A Meta-Analysis. Rev. Gen. Psychol. 2014, 18, 127–136. [Google Scholar] [CrossRef] [Green Version]

- Libin, A.V.; Libin, E.V. Person-Robot Interactions from the Robopsychologists’ Point of View: The Robotic Psychology and Robotherapy Approach. Proc. IEEE 2004, 92, 1789–1803. [Google Scholar] [CrossRef]

- Gelso, C.J.; Hayes, J.A. The Psychotherapy Relationship: Theory, Research, and Practice; John Wiley & Sons Inc: Hoboken, NJ, USA, 1998; 304p, ISBN 978-0-471-12720-8. [Google Scholar]

- Høglend, P. Exploration of the Patient-Therapist Relationship in Psychotherapy. Am. J. Psychiatry 2014, 171, 1056–1066. [Google Scholar] [CrossRef]

- Roos, J.; Werbart, A. Therapist and Relationship Factors Influencing Dropout from Individual Psychotherapy: A Literature Review. Psychother. Res. 2013, 23, 394–418. [Google Scholar] [CrossRef]

- Cheok, A.D.; Levy, D.; Karunanayaka, K. Lovotics: Love and Sex with Robots. In Emotion in Games: Theory and Praxis; Socio-Affective Computing; Karpouzis, K., Yannakakis, G.N., Eds.; Springer International Publishing: Cham, Switzerland, 2016; pp. 303–328. ISBN 978-3-319-41316-7. [Google Scholar]

- Cheok, A.D.; Karunanayaka, K.; Zhang, E.Y. Lovotics: Human—Robot Love and Sex Relationships. In Robot Ethics 2.0: New Challenges in Philosophy, Law, and Society; Lin, P., Abney, K., Jenkins, R., Eds.; Oxford University Press: Oxford, UK, 2017; Volume 193, pp. 193–213. ISBN 978-0-19-065295-1. [Google Scholar]

- Lin, Y.; Tudor-Sfetea, C.; Siddiqui, S.; Sherwani, Y.; Ahmed, M.; Eisingerich, A.B. Effective Behavioral Changes through a Digital MHealth App: Exploring the Impact of Hedonic Well-Being, Psychological Empowerment and Inspiration. JMIR mHealth uHealth 2018, 6, e10024. [Google Scholar] [CrossRef]

- van Straten, C.L.; Kühne, R.; Peter, J.; de Jong, C.; Barco, A. Closeness, Trust, and Perceived Social Support in Child-Robot Relationship Formation: Development and Validation of Three Self-Report Scales. Interact. Stud. 2020, 21, 57–84. [Google Scholar] [CrossRef]

- Ta, V.; Griffith, C.; Boatfield, C.; Wang, X.; Civitello, M.; Bader, H.; DeCero, E.; Loggarakis, A. User Experiences of Social Support From Companion Chatbots in Everyday Contexts: Thematic Analysis. J. Med. Internet Res. 2020, 22, e16235. [Google Scholar] [CrossRef] [PubMed]

- Angantyr, M.; Eklund, J.; Hansen, E.M. A Comparison of Empathy for Humans and Empathy for Animals. Anthrozoös 2011, 24, 369–377. [Google Scholar] [CrossRef]

- Mattiassi, A.D.A.; Sarrica, M.; Cavallo, F.; Fortunati, L. What Do Humans Feel with Mistreated Humans, Animals, Robots, and Objects? Exploring the Role of Cognitive Empathy. Motiv. Emot. 2021, 45, 543–555. [Google Scholar] [CrossRef]

- Misselhorn, C. Empathy with Inanimate Objects and the Uncanny Valley. Minds Mach. 2009, 19, 345–359. [Google Scholar] [CrossRef]

- Young, A.; Khalil, K.A.; Wharton, J. Empathy for Animals: A Review of the Existing Literature. Curator Mus. J. 2018, 61, 327–343. [Google Scholar] [CrossRef]

- Marchetti, A.; Manzi, F.; Itakura, S.; Massaro, D. Theory of Mind and Humanoid Robots From a Lifespan Perspective. Z. Für Psychol. 2018, 226, 98–109. [Google Scholar] [CrossRef]

- Schmetkamp, S. Understanding AI—Can and Should We Empathize with Robots? Rev. Philos. Psychol. 2020, 11, 881–897. [Google Scholar] [CrossRef]

- American Psychiatric Association. Diagnostic and Statistical Manual of Mental Disorders: DSM-5, 5th ed.; American Psychiatric Pub Inc.: Washington, DC, USA, 2013; ISBN 978-0-89042-555-8. [Google Scholar]

- Norcross, J.C.; Beutler, L.E. A Prescriptive Eclectic Approach to Psychotherapy Training. J. Psychother. Integr. 2000, 10, 247–261. [Google Scholar] [CrossRef]

- Beutler, L.E. Making Science Matter in Clinical Practice: Redefining Psychotherapy. Clin. Psychol. Sci. Pract. 2009, 16, 301–317. [Google Scholar] [CrossRef]

- Marino, F.; Chilà, P.; Sfrazzetto, S.T.; Carrozza, C.; Crimi, I.; Failla, C.; Busà, M.; Bernava, G.; Tartarisco, G.; Vagni, D.; et al. Outcomes of a Robot-Assisted Social-Emotional Understanding Intervention for Young Children with Autism Spectrum Disorders. J. Autism Dev. Disord. 2020, 50, 1973–1987. [Google Scholar] [CrossRef] [PubMed]

- Cao, H.-L.; Gomez Esteban, P.; Bartlett, M.; Baxter, P.; Belpaeme, T.; Billing, E.; Cai, H.; Coeckelbergh, M.; Pop, C.; David, D. Robot-Enhanced Therapy: Development and Validation of Supervised Autonomous Robotic System for Autism Spectrum Disorders Therapy. IEEE Robot. Autom. Mag. 2019, 26, 49–58. [Google Scholar] [CrossRef]

- David, D. Tasks for Social Robots (Supervised Autonomous Version) on Developing Social Skills; Dream Publishing: Jamtara, India, 2017; pp. 1–18. [Google Scholar]

- Tapus, A.; Peca, A.; Aly, A.; Pop, C.; Jisa, L.; Pintea, S.; Rusu, A.S.; David, D.O. Children with Autism Social Engagement in Interaction with Nao, an Imitative Robot: A Series of Single Case Experiments. Interact. Stud. 2012, 13, 315–347. [Google Scholar] [CrossRef]

- David, D.O.; Costescu, C.A.; Matu, S.; Szentagotai, A.; Dobrean, A. Effects of a Robot-Enhanced Intervention for Children With ASD on Teaching Turn-Taking Skills. J. Educ. Comput. Res. 2020, 58, 29–62. [Google Scholar] [CrossRef]

- Shamsuddin, S.; Yussof, H.; Ismail, L.I.; Mohamed, S.; Hanapiah, F.A.; Zahari, N.I. Initial Response in HRI- a Case Study on Evaluation of Child with Autism Spectrum Disorders Interacting with a Humanoid Robot NAO. Procedia Eng. 2012, 41, 1448–1455. [Google Scholar] [CrossRef]

- David, D.O.; Costescu, C.A.; Matu, S.; Szentagotai, A.; Dobrean, A. Developing Joint Attention for Children with Autism in Robot-Enhanced Therapy. Int. J. Soc. Robot. 2018, 10, 595–605. [Google Scholar] [CrossRef]

- Alemi, M.; Meghdari, A.; Ghanbarzadeh, A.; Moghadam, L.J.; Ghanbarzadeh, A. Impact of a Social Humanoid Robot as a Therapy Assistant in Children Cancer Treatment. In Proceedings of the Social Robotics, Sydney, NSW, Australia, 27–29 October 2014; Beetz, M., Johnston, B., Williams, M.-A., Eds.; Springer International Publishing: Cham, Switzerland, 2014; pp. 11–22. [Google Scholar]

- Alemi, M.; Ghanbarzadeh, A.; Meghdari, A.; Moghadam, L.J. Clinical Application of a Humanoid Robot in Pediatric Cancer Interventions. Int. J. Soc. Robot. 2016, 8, 743–759. [Google Scholar] [CrossRef]

- Ullrich, D.; Diefenbach, S.; Butz, A. Murphy Miserable Robot: A Companion to Support Children’s Well-Being in Emotionally Difficult Situations. In Proceedings of the 2016 CHI Conference Extended Abstracts on Human Factors in Computing Systems, San Jose, CA, USA, 7–12 May 2016; Association for Computing Machinery: New York, NY, USA, 2016; pp. 3234–3240. [Google Scholar]

- van der Hout, V.M. The Touch of a Robotic Friend: Can a Touch of a Robot, When the Robot and the Person Have Bonded with Each Other, Calm a Person down during a Stressful Moment? Master’s Thesis, University of Twente, Twente, The Netherlands, 2017. [Google Scholar]

- Laban, G.; George, J.-N.; Morrison, V.; Cross, E.S. Tell Me More! Assessing Interactions with Social Robots from Speech. Paladyn J. Behav. Robot. 2021, 12, 136–159. [Google Scholar] [CrossRef]

- Jeong, S. The Impact of Social Robots on Young Patients’ Socio-Emotional Wellbeing in a Pediatric Inpatient Care Context. Ph.D. Thesis, Massachusetts Institute of Technology, Cambridge, MA, USA, 2017. [Google Scholar]

- Robinson, N.L.; Connolly, J.; Hides, L.; Kavanagh, D.J. Social Robots as Treatment Agents: Pilot Randomized Controlled Trial to Deliver a Behavior Change Intervention. Internet Interv. 2020, 21, 100320. [Google Scholar] [CrossRef]

- Vanderborght, B.; Simut, R.; Saldien, J.; Pop, C.; Rusu, A.S.; Pintea, S.; Lefeber, D.; David, D.O. Using the Social Robot Probo as a Social Story Telling Agent for Children with ASD. Interact. Stud. 2012, 13, 348–372. [Google Scholar] [CrossRef]

- Simut, R.E.; Vanderfaeillie, J.; Peca, A.; Van de Perre, G.; Vanderborght, B. Children with Autism Spectrum Disorders Make a Fruit Salad with Probo, the Social Robot: An Interaction Study. J. Autism Dev. Disord. 2016, 46, 113–126. [Google Scholar] [CrossRef]

- Pop, C.A.; Pintea, S.; Vanderborght, B.; David, D.O. Enhancing Play Skills, Engagement and Social Skills in a Play Task in ASD Children by Using Robot-Based Interventions. A Pilot Study. Interact. Stud. 2014, 15, 292–320. [Google Scholar] [CrossRef]

- Simut, R.; Costescu, C.A.; Vanderfaeillie, J.; Van De Perre, G.; Vanderborght, B.; Lefeber, D. Can You Cure Me? Children with Autism Spectrum Disorders Playing a Doctor Game With a Social Robot. Int. J. Sch. Health 2016, 3, 1–9. [Google Scholar] [CrossRef] [Green Version]

- Crossman, M.K.; Kazdin, A.E.; Kitt, E.R. The Influence of a Socially Assistive Robot on Mood, Anxiety, and Arousal in Children. Prof. Psychol. Res. Pract. 2018, 49, 48–56. [Google Scholar] [CrossRef]

- Wagemaker, E.; Dekkers, T.J.; van Rentergem, J.A.A.; Volkers, K.M.; Huizenga, H.M. Advances in Mental Health Care: Five N = 1 Studies on the Effects of the Robot Seal Paro in Adults With Severe Intellectual Disabilities. J. Ment. Health Res. Intellect. Disabil. 2017, 10, 309–320. [Google Scholar] [CrossRef] [Green Version]

- Lane, G.W.; Noronha, D.; Rivera, A.; Craig, K.; Yee, C.; Mills, B.; Villanueva, E. Effectiveness of a Social Robot, “Paro,” in a VA Long-Term Care Setting. Psychol. Serv. 2016, 13, 292–299. [Google Scholar] [CrossRef]

- Sefidgar, Y.S. TAMER: Touch-Guided Anxiety Management via Engagement with a Robotic Pet Efficacy Evaluation and the First Steps of the Interaction Design. Ph.D. Thesis, University of British Columbia, Vancouver, BC, Canada, 2012. [Google Scholar]

- Costescu, C.; Vanderborght, B.; Daniel, D. Robot-Enhanced CBT for Dysfunctional Emotions in Social Situations for Children with ASD. J. Evid.-Based Psychother. 2017, 17, 119–132. [Google Scholar] [CrossRef]

- Costescu, C.A.; Vanderborght, B.; David, D.O. Reversal Learning Task in Children with Autism Spectrum Disorder: A Robot-Based Approach. J. Autism Dev. Disord. 2015, 45, 3715–3725. [Google Scholar] [CrossRef]

- Karakosta, E.; Dautenhahn, K.; Syrdal, D.S.; Wood, L.J.; Robins, B. Using the Humanoid Robot Kaspar in a Greek School Environment to Support Children with Autism Spectrum Condition. Paladyn J. Behav. Robot. 2019, 10, 298–317. [Google Scholar] [CrossRef]

- Wood, L.J.; Robins, B.; Lakatos, G.; Syrdal, D.S.; Zaraki, A.; Dautenhahn, K. Developing a Protocol and Experimental Setup for Using a Humanoid Robot to Assist Children with Autism to Develop Visual Perspective Taking Skills. Paladyn J. Behav. Robot. 2019, 10, 167–179. [Google Scholar] [CrossRef]

- Pliasa, S.; Fachantidis, N. Using Daisy Robot as a Motive for Children with ASD to Participate in Triadic Activities. Themes ELearning 2019, 12, 35–50. [Google Scholar]

- Peca, A.; Simut, R.; Pintea, S.; Vanderborght, B. Are Children with ASD More Prone to Test the Intentions of the Robonova Robot Compared to a Human? Int. J. Soc. Robot. 2015, 7, 629–639. [Google Scholar] [CrossRef]

- Ghiglino, D.; Chevalier, P.; Floris, F.; Priolo, T.; Wykowska, A. Follow the White Robot: Efficacy of Robot-Assistive Training for Children with Autism Spectrum Disorder. Res. Autism Spectr. Disord. 2021, 86, 101822. [Google Scholar] [CrossRef]

- Damm, O.; Malchus, K.; Jaecks, P.; Krach, S.; Paulus, F.; Naber, M.; Jansen, A.; Kamp-Becker, I.; Einhaeuser-Treyer, W.; Stenneken, P.; et al. Different Gaze Behavior in Human-Robot Interaction in Asperger’s Syndrome: An Eye-Tracking Study. In Proceedings of the 2013 IEEE RO-MAN, Gyeongju, Korea, 26–29 August 2013; pp. 368–369. [Google Scholar]

- Bharatharaj, J.; Huang, L.; Al-Jumaily, A.; Elara, M.R.; Krägeloh, C. Investigating the Effects of Robot-Assisted Therapy among Children with Autism Spectrum Disorder Using Bio-Markers. IOP Conf. Ser. Mater. Sci. Eng. 2017, 234, 012017. [Google Scholar] [CrossRef]

- Alimardani, M.; Kemmeren, L.; Okumura, K.; Hiraki, K. Robot-Assisted Mindfulness Practice: Analysis of Neurophysiological Responses and Affective State Change. In Proceedings of the 2020 29th IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), Naples, Italy, 8–12 August 2020; pp. 683–689. [Google Scholar]

- Gallego Pérez, J.; Lohse, M.; Evers, V. Robots for the Psychological Wellbeing of the Elderly. In Proceedings of the HRI 2014 Workshop on Socially Assistive Robots for the Aging Population, Bielefeld, Germany, 3–6 March 2014. [Google Scholar]

- Jeong, S.; Alghowinem, S.; Aymerich-Franch, L.; Arias, K.; Lapedriza, A.; Picard, R.; Park, H.W.; Breazeal, C. A Robotic Positive Psychology Coach to Improve College Students’ Wellbeing. In Proceedings of the 2020 29th IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), Naples, Italy, 8–12 August 2020; pp. 187–194. [Google Scholar]

- Yamaguchi, K.; Nergui, M.; Otake, M. A Robot Presenting Reproduced Stories among Older Adults in Group Conversation. Appl. Mech. Mater. 2014, 541–542, 1120–1126. [Google Scholar] [CrossRef]

- Bucher, M.A.; Suzuki, T.; Samuel, D.B. A Meta-Analytic Review of Personality Traits and Their Associations with Mental Health Treatment Outcomes. Clin. Psychol. Rev. 2019, 70, 51–63. [Google Scholar] [CrossRef]

- Barlow, D.H.; Curreri, A.J.; Woodard, L.S. Neuroticism and Disorders of Emotion: A New Synthesis. Curr. Dir. Psychol. Sci. 2021, 30, 09637214211030253. [Google Scholar] [CrossRef]

- Widiger, T.A.; Oltmanns, J.R. Neuroticism Is a Fundamental Domain of Personality with Enormous Public Health Implications. World Psychiatry 2017, 16, 144–145. [Google Scholar] [CrossRef] [Green Version]

- Dino, F.; Zandie, R.; Abdollahi, H.; Schoeder, S.; Mahoor, M.H. Delivering Cognitive Behavioral Therapy Using A Conversational Social Robot. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 3–8 November 2019; pp. 2089–2095. [Google Scholar]

- Aziz, A.A.; Yusoff, N.; Yusoff, A.N.M.; Kabir, F. Towards Robot Therapist In-the-Loop for Persons with Anxiety Traits. In Proceedings of the International Conference on Universal Wellbeing, Kuala Lumpur, Malaysia, 4–6 December 2019. [Google Scholar]

- Trinh, H.; Asadi, R.; Edge, D.; Bickmore, T. RoboCOP: A Robotic Coach for Oral Presentations. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2017, 1, 1–24. [Google Scholar] [CrossRef] [Green Version]

- Salter, T.; Davey, N.; Michaud, F. Designing & Developing QueBall, a Robotic Device for Autism Therapy. In Proceedings of the 23rd IEEE International Symposium on Robot and Human Interactive Communication, Edinburgh, UK, 25–29 August 2014; pp. 574–579. [Google Scholar]

- Pop, C.A.; Simut, R.; Pintea, S.; Saldien, J.; Rusu, A.; David, D.; Vanderfaeillie, J.; Lefeber, D.; Vanderborght, B. Can the Social Robot Probo Help Children with Autism to Identify Situation-Based Emotions? A Series of Single Case Experiments. Int. J. Hum. Robot. 2013, 10, 1350025. [Google Scholar] [CrossRef]

- Barker, S.B.; Dawson, K.S. The Effects of Animal-Assisted Therapy on Anxiety Ratings of Hospitalized Psychiatric Patients. Psychiatr. Serv. 1998, 49, 797–801. [Google Scholar] [CrossRef] [PubMed]

- Li, J. The Benefit of Being Physically Present: A Survey of Experimental Works Comparing Copresent Robots, Telepresent Robots and Virtual Agents. Int. J. Hum.-Comput. Stud. 2015, 77, 23–37. [Google Scholar] [CrossRef]

- Lee, K.M.; Jung, Y.; Kim, J.; Kim, S.R. Are Physically Embodied Social Agents Better than Disembodied Social Agents?: The Effects of Physical Embodiment, Tactile Interaction, and People’s Loneliness in Human–Robot Interaction. Int. J. Hum.-Comput. Stud. 2006, 64, 962–973.

- Kruglanski, A.W.; Riter, A.; Amitai, A.; Margolin, B.-S.; Shabtai, L.; Zaksh, D. Can Money Enhance Intrinsic Motivation? A Test of the Content-Consequence Hypothesis. J. Pers. Soc. Psychol. 1975, 31, 744–750.

- Belpaeme, T.; Kennedy, J.; Ramachandran, A.; Scassellati, B.; Tanaka, F. Social Robots for Education: A Review. Sci. Robot. 2018, 3.

- Pu, L.; Moyle, W.; Jones, C.; Todorovic, M. The Effectiveness of Social Robots for Older Adults: A Systematic Review and Meta-Analysis of Randomized Controlled Studies. Gerontologist 2019, 59, e37–e51.

- Logan, D.E.; Breazeal, C.; Goodwin, M.S.; Jeong, S.; O’Connell, B.; Smith-Freedman, D.; Heathers, J.; Weinstock, P. Social Robots for Hospitalized Children. Pediatrics 2019, 144, e20181511.

- Jung, Y.; Lee, K.M. Effects of Physical Embodiment on Social Presence of Social Robots; International Society for Presence Research: Valencia, Spain, 2004; pp. 80–87.

- Thielbar, K.O.; Triandafilou, K.M.; Barry, A.J.; Yuan, N.; Nishimoto, A.; Johnson, J.; Stoykov, M.E.; Tsoupikova, D.; Kamper, D.G. Home-Based Upper Extremity Stroke Therapy Using a Multiuser Virtual Reality Environment: A Randomized Trial. Arch. Phys. Med. Rehabil. 2020, 101, 196–203.

- Dodds, P.; Martyn, K.; Brown, M. Infection Prevention and Control Challenges of Using a Therapeutic Robot. Nurs. Older People 2018, 30, 34–40.

- Schultz, E. Furry Therapists: The Advantages and Disadvantages of Implementing Animal Therapy; University of Wisconsin-Stout: Menomonie, WI, USA, 2006.

- Maloney, D.; Zamanifard, S.; Freeman, G. Anonymity vs. Familiarity: Self-Disclosure and Privacy in Social Virtual Reality. In Proceedings of the 26th ACM Symposium on Virtual Reality Software and Technology, Osaka, Japan, 1–4 November 2020; Association for Computing Machinery: New York, NY, USA, 2020; pp. 1–9.

- Feingold Polak, R.; Tzedek, S.L. Social Robot for Rehabilitation: Expert Clinicians and Post-Stroke Patients’ Evaluation Following a Long-Term Intervention. In Proceedings of the 2020 ACM/IEEE International Conference on Human-Robot Interaction, Cambridge, UK, 23–26 March 2020; Association for Computing Machinery: New York, NY, USA, 2020; pp. 151–160, ISBN 978-1-4503-6746-2.

- Martí Carrillo, F.; Butchart, J.; Knight, S.; Scheinberg, A.; Wise, L.; Sterling, L.; McCarthy, C. Adapting a General-Purpose Social Robot for Paediatric Rehabilitation through In Situ Design. ACM Trans. Hum.-Robot Interact. 2018, 7, 1–30.

- Céspedes, N.; Múnera, M.; Gómez, C.; Cifuentes, C.A. Social Human-Robot Interaction for Gait Rehabilitation. IEEE Trans. Neural Syst. Rehabil. Eng. 2020, 28, 1299–1307.

- Goris, K.; Saldien, J.; Vanderborght, B.; Lefeber, D. How to Achieve the Huggable Behavior of the Social Robot Probo? A Reflection on the Actuators. Mechatronics 2011, 21, 490–500.

- Dautenhahn, K.; Nehaniv, C.L.; Walters, M.L.; Robins, B.; Kose-Bagci, H.; Assif, N.; Blow, M. KASPAR—A Minimally Expressive Humanoid Robot for Human–Robot Interaction Research. Appl. Bionics Biomech. 2009, 6, 369–397.

- Lütkebohle, I.; Hegel, F.; Schulz, S.; Hackel, M.; Wrede, B.; Wachsmuth, S.; Sagerer, G. The Bielefeld Anthropomorphic Robot Head “Flobi”. In Proceedings of the 2010 IEEE International Conference on Robotics and Automation, Paris, France, 3–7 May 2010; pp. 3384–3391.

- Grunberg, D.; Ellenberg, R.; Kim, Y.E.; Oh, P.Y. From RoboNova to HUBO: Platforms for Robot Dance. In Proceedings of the Progress in Robotics, Incheon, Korea, 16–20 August 2009; Kim, J.-H., Ge, S.S., Vadakkepat, P., Jesse, N., Al Manum, A., Puthusserypady, K.S., Rückert, U., Sitte, J., Witkowski, U., Nakatsu, R., Eds.; Springer: Berlin/Heidelberg, Germany, 2009; pp. 19–24.

- Pliasa, S.; Fachantidis, N. Can a Robot Be an Efficient Mediator in Promoting Dyadic Activities among Children with Autism Spectrum Disorders and Children of Typical Development? In Proceedings of the 9th Balkan Conference on Informatics, Sofia, Bulgaria, 26–28 September 2019; Association for Computing Machinery: New York, NY, USA, 2019; pp. 1–6.

- Pelikan, H.R.M.; Broth, M.; Keevallik, L. “Are You Sad, Cozmo?” How Humans Make Sense of a Home Robot’s Emotion Displays. In Proceedings of the 2020 ACM/IEEE International Conference on Human-Robot Interaction, Cambridge, UK, 23–26 March 2020; Association for Computing Machinery: New York, NY, USA, 2020; pp. 461–470, ISBN 978-1-4503-6746-2.

- Pandey, A.K.; Gelin, R. A Mass-Produced Sociable Humanoid Robot: Pepper: The First Machine of Its Kind. IEEE Robot. Autom. Mag. 2018, 25, 40–48.

- Askari, F.; Feng, H.; Sweeny, T.D.; Mahoor, M.H. A Pilot Study on Facial Expression Recognition Ability of Autistic Children Using Ryan, A Rear-Projected Humanoid Robot. In Proceedings of the 2018 27th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), Nanjing, China, 27–31 August 2018; pp. 790–795.

- Aziz, A.A.; Fahad, A.S.; Ahmad, F. CAKNA: A Personalized Robot-Based Platform for Anxiety States Therapy. Intell. Environ. 2017, 2017, 141–150.

- Tiberio, L.; Padua, L.; Pellegrino, A.R.; Aprile, I.; Cortellessa, G.; Cesta, A. Assessing the Tolerance of a Telepresence Robot in Users with Mild Cognitive Impairment—A Protocol for Studying Users’ Physiological Response. In Proceedings of the HRI 2011 Workshop on Social Robotic Telepresence, Lausanne, Switzerland, 6 March 2011; CNR, ISTC, Roma, ITA: Lausanne, Switzerland, 2011; pp. 23–28.

- Yeung, G.; Bailey, A.; Afshan, A.; Tinkler, M.; Pérez, M.Q.; Martin, A.; Pogossian, A.A.; Spaulding, S.; Park, H.; Muco, M.; et al. A Robotic Interface for the Administration of Language, Literacy, and Speech Pathology Assessments for Children. In Proceedings of the SLaTE, Coimbra, Portugal, 27–28 June 2019.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Duradoni, M.; Colombini, G.; Russo, P.A.; Guazzini, A. Robotic Psychology: A PRISMA Systematic Review on Social-Robot-Based Interventions in Psychological Domains. J 2021, 4, 664-697. https://doi.org/10.3390/j4040048
