GGT-YOLO: A Novel Object Detection Algorithm for Drone-Based Maritime Cruising

Abstract

1. Introduction

2. Related Work

3. Materials and Methods

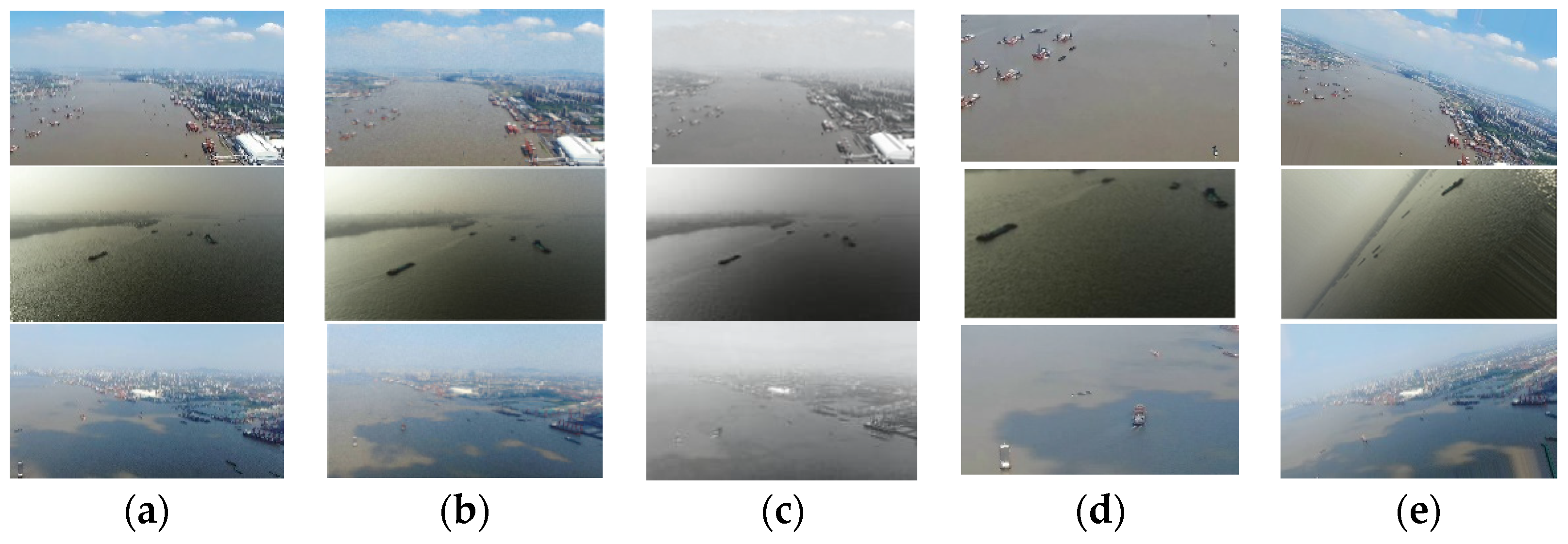

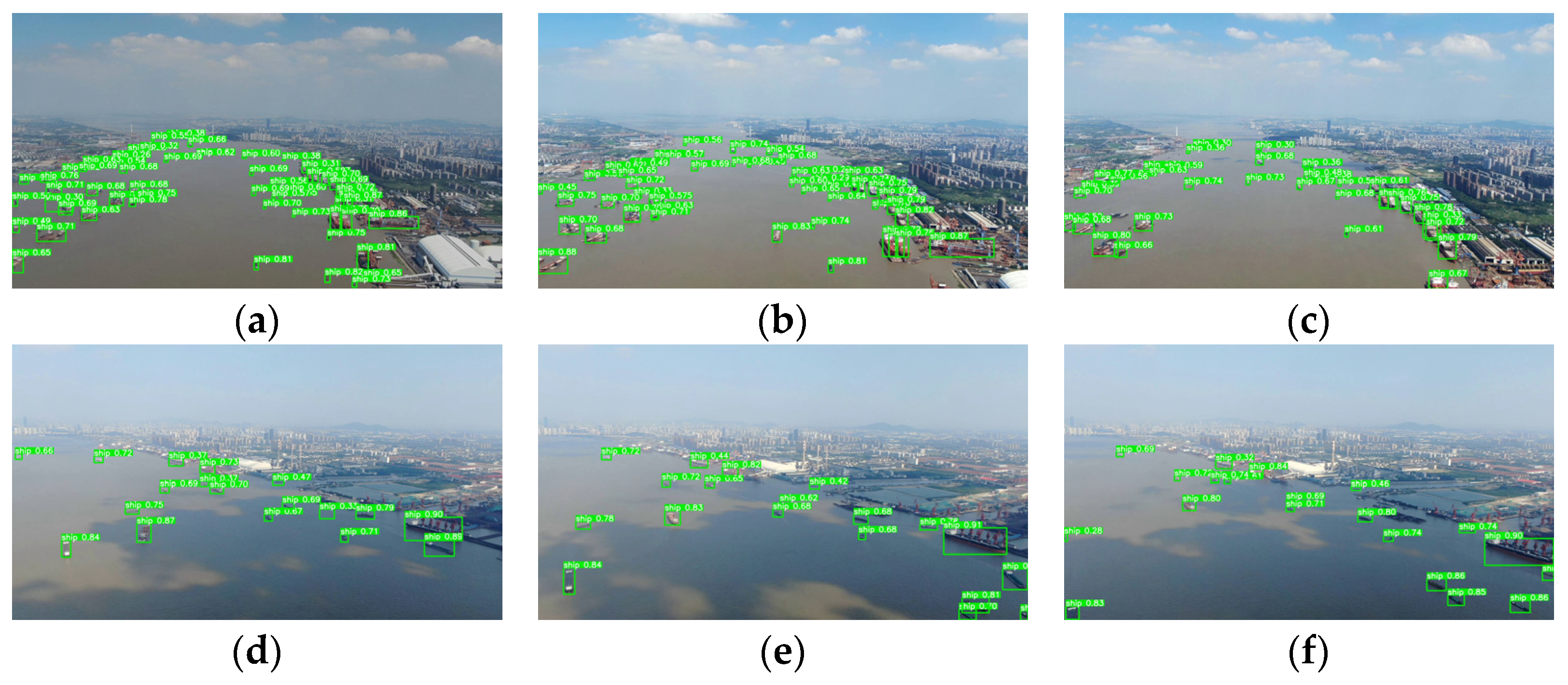

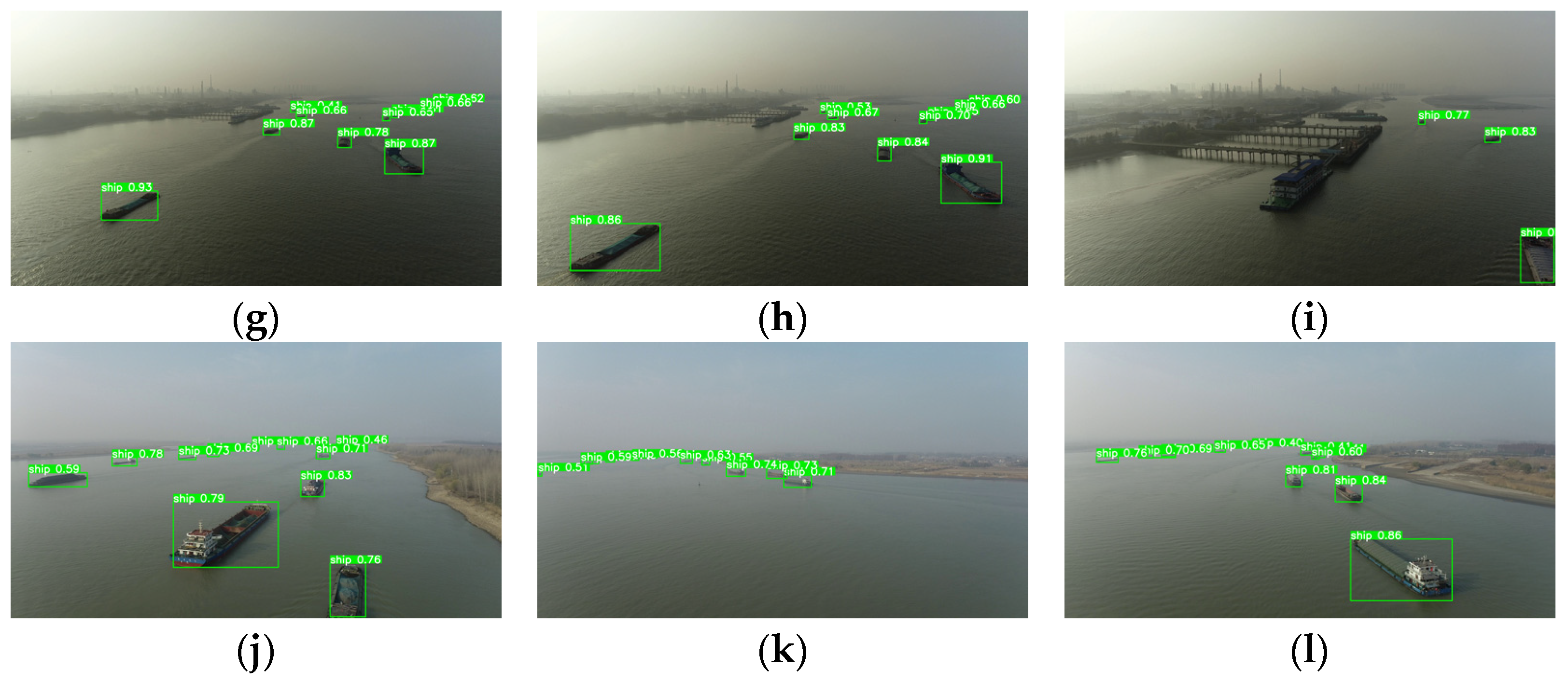

3.1. MariDrone Dataset

3.2. GGT-YOLO Algorithm

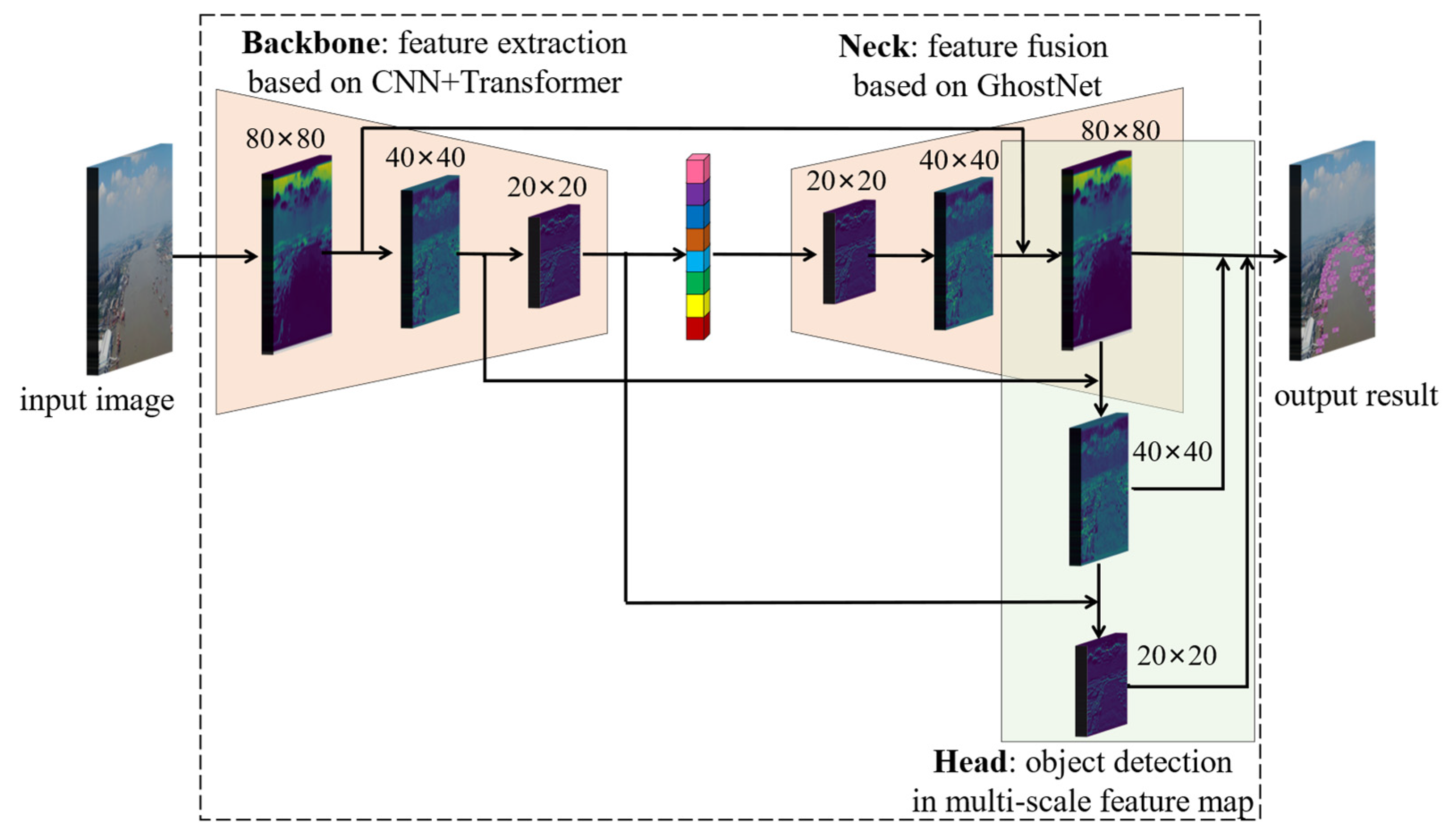

3.2.1. Object Detection Framework

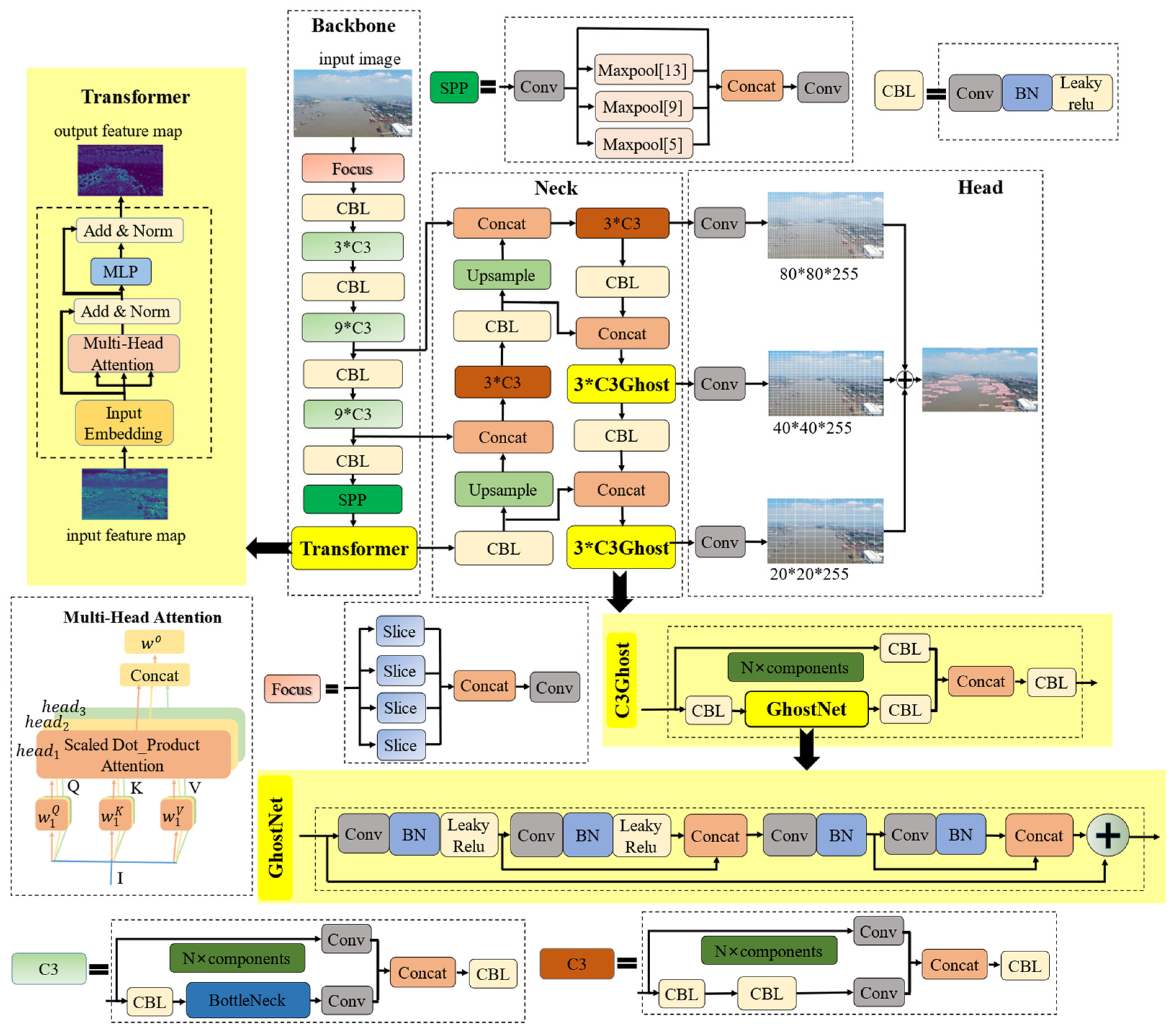

3.2.2. Feature Extraction Optimization

3.2.3. Network Lightweight Optimization

4. Experiments and Discussion

4.1. Evaluation Criteria

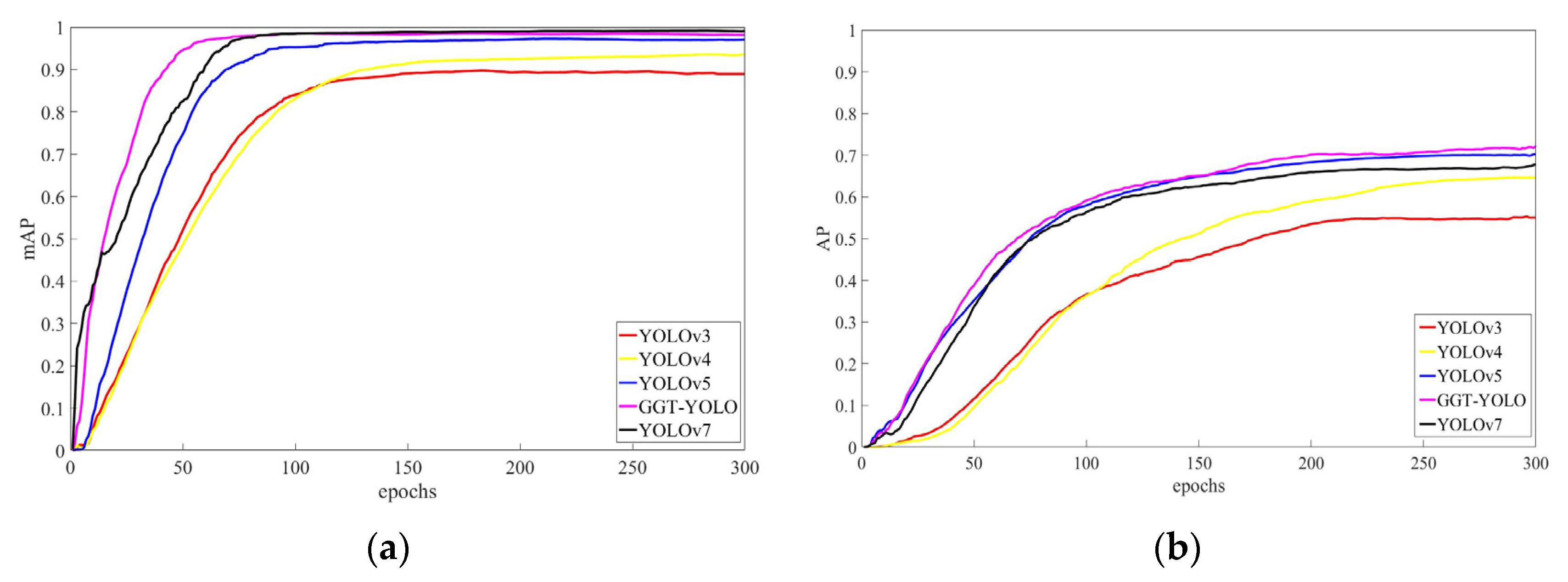

4.2. Performance Analysis

4.3. Comparative Analysis

4.4. Results and Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Algorithms | P (%) | R (%) | AP (%) | Parameters (×10⁶) | FLOPs (×10⁹) |
|---|---|---|---|---|---|
| YOLOv3 | 64.3 | 63.6 | 57.8 | 61.9 | / |
| YOLOv4 | 71.0 | 66.2 | 65.6 | 64.1 | / |
| YOLOv5 | 80.6 | 69.2 | 70.2 | 7.1 | 16.3 |
| YOLOv7 | 74.8 | 70.9 | 67.0 | 37.1 | 104.9 |
| GGT-YOLO | 82.0 | 71.8 | 72.1 | 6.2 | 15.1 |

| Algorithms | P (%), Aircraft | P (%), Oiltank | R (%), Aircraft | R (%), Oiltank | mAP (%) |
|---|---|---|---|---|---|
| YOLOv3 | 91.9 | 86.1 | 90.7 | 85.5 | 88.3 |
| YOLOv4 | 96.4 | 92.6 | 94.3 | 86.2 | 93.9 |
| YOLOv5 | 98.7 | 95.3 | 95.9 | 93.7 | 96.5 |
| YOLOv7 | 99.6 | 96.8 | 97.5 | 98.1 | 98.7 |
| GGT-YOLO | 98 | 96.2 | 96.7 | 97.4 | 97.5 |

| Model | B1 | B2 | B3 | B4 | B5 |
|---|---|---|---|---|---|
| G-YOLO | GhostNet | | | | |
| GG-YOLO | GhostNet | GhostNet | | | |
| T-YOLO | Transformer | | | | |
| TT-YOLO | Transformer | Transformer | | | |
| GT-YOLO | Transformer | GhostNet | | | |
| GGT-YOLO | Transformer | GhostNet | GhostNet | | |
| GGGT-YOLO | Transformer | GhostNet | GhostNet | GhostNet | |

| Model | P (%) | R (%) | AP (%) | Parameters (×10⁶) | FLOPs (×10⁹) |
|---|---|---|---|---|---|
| YOLOv5 | 80.6 | 69.2 | 70.2 | 7.05 | 16.3 |
| T-YOLO | 81.3 | 71.6 | 71.8 | 7.05 | 16.1 |
| TT-YOLO | 84.4 | 67.5 | 71.9 | 7.06 | 15.9 |
| G-YOLO | 83.3 | 70.9 | 70.4 | 6.40 | 15.8 |
| GG-YOLO | 77.4 | 68.5 | 69.6 | 6.23 | 15.3 |
| GT-YOLO | 82.0 | 69.6 | 72.4 | 6.40 | 15.6 |
| GGT-YOLO | 82.0 | 71.8 | 72.1 | 6.23 | 15.1 |
| GGGT-YOLO | 82.0 | 64.7 | 66.7 | 6.19 | 14.5 |
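The trade-off in the table above can be made concrete with a little arithmetic. The following is a minimal sketch that compares the YOLOv5 and GGT-YOLO rows; the numbers are copied from the table, while the dictionary keys and the `relative_reduction` helper are hypothetical names introduced only for illustration.

```python
# Values copied from the YOLOv5 and GGT-YOLO rows of the table above.
# Key names and the helper below are illustrative, not from the paper.
baseline = {"ap": 70.2, "params_m": 7.05, "gflops": 16.3}
ggt_yolo = {"ap": 72.1, "params_m": 6.23, "gflops": 15.1}


def relative_reduction(old: float, new: float) -> float:
    """Return the percentage by which `new` is smaller than `old`."""
    return (old - new) / old * 100.0


# AP difference in percentage points; cost reductions in percent.
ap_gain = round(ggt_yolo["ap"] - baseline["ap"], 1)
param_cut = round(relative_reduction(baseline["params_m"], ggt_yolo["params_m"]), 1)
flops_cut = round(relative_reduction(baseline["gflops"], ggt_yolo["gflops"]), 1)

print(f"AP gain: {ap_gain} points")
print(f"Parameter reduction: {param_cut}%")
print(f"FLOPs reduction: {flops_cut}%")
```

Under these figures, GGT-YOLO gains about 1.9 AP points over YOLOv5 while using roughly 11.6% fewer parameters and 7.4% fewer FLOPs.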

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, Y.; Yuan, H.; Wang, Y.; Xiao, C. GGT-YOLO: A Novel Object Detection Algorithm for Drone-Based Maritime Cruising. Drones 2022, 6, 335. https://doi.org/10.3390/drones6110335
