Abstract

This paper provides an overview of the capabilities of unmanned systems to monitor and manage aquaculture farms in support of precision aquaculture using the Internet of Things. Aquaculture farms are sited in diverse locations, which makes accessibility a major challenge. For offshore fish cages, continuous monitoring is difficult and risky because of waves, water currents, and other underwater environmental factors. Aquaculture farm management and surveillance operations require collecting data on water quality, water pollutants, water temperature, fish behavior, and current/wave velocity, which demands tremendous labor cost and effort. Unmanned vehicle technologies provide greater efficiency and accuracy in executing these functions. When equipped with sensors and other technologies, they are even capable of cage detection and illegal fishing surveillance. Additionally, to broaden the scope, this paper explores the capacity of unmanned vehicles to act as a communication gateway for offshore cages equipped with robust, low-cost sensors capable of underwater and in-air wireless connectivity. The capabilities of existing commercial systems, the Internet of Things, and artificial intelligence combined with drones are also presented to provide a precision aquaculture framework.

1. Introduction

Fisheries and aquaculture play an essential role in feeding the growing population and are critical for the livelihood of millions of people worldwide. Although the long-term assessment by the Food and Agriculture Organization (FAO) shows a continuous decline of marine fish resources [1], government institutions, private organizations, and individuals have made many interventions to increase awareness of the importance of the world’s fishery resources. Strict implementation of fishing regulations and water environment conservation has increased fishery production and sustainability. Despite these developments, and with the population expected to reach 8.5 billion by 2030, the increase in demand for marine commodities can no longer be sustained by wild fish stocks. Aquaculture, the farming of fish, shellfish, and aquatic plants, has been a great help in food security. In recent years, it has been the fastest-growing food sector [2] and is emerging as an alternative to commercial fishing [3]. With this trend, the expansion of aquaculture plays a significant role in ensuring food sufficiency, improved nutrition, food availability, affordability, and security.

In 2018, world aquaculture production reached a record 114.5 million tons [1], making this industry marketable and promising. However, with the increasing global population, aquaculture production must continue to increase to meet food demand. The aquaculture industry contributes significantly to alleviating poverty [4,5,6] and to increasing income [5,6], employment [3,7], and economic growth [8,9,10], as well as to reducing hunger and improving the nutrition of the population [9,11,12]. Given this contribution, one of the main challenges in aquaculture production is sustainability [13].

1.1. Challenges in Aquaculture Production, Supervision and Management

The success of an aquaculture venture depends in part on the correct selection of the aquaculture site. Aquaculture farm types vary from small-scale rural farms to large-scale commercial systems. When choosing a farm location, a good-quality water source is a must, since surface water such as a river, stream, or spring is prone to pollution. Surface water is also only intermittently available because it is affected by weather events such as droughts or typhoons. Aquaculture farms are located in lakes, rivers, reservoirs (extensive aquaculture), coastal lagoons, and land-based coastal, in-shore, and offshore areas [14].

Coastal lagoons are shallow estuarine systems; they are productive and highly vulnerable [15]. Aquaculture in coastal lagoons is more heterogeneous in terms of cultivated species, techniques, and extent [16], which can lead to reduced water quality, habitat destruction, and biodiversity loss, and in turn limits or restricts fish and shellfish farming concessions [17]. Land-based farming is also becoming popular because it has less environmental impact on coastal areas and reduces the cost of transportation. Compared with open-water fish farming, monitoring is easier because of accessibility, and quick adjustments can be made to achieve optimal living conditions for aquaculture products [18]. Despite this, land-based coastal aquaculture is more constrained [14]; disease can spread quickly and cause mass mortalities, and sudden changes in water temperature also occur.

In-shore farm locations are close to the open fishing grounds, with minimal shore currents. However, concerns such as wind and wave protection currents brought by small boat fishers [19] are also evident. Offshore aquaculture farms are located in deep-sea water. Because they are far from the shore, the negative environmental impact of fish farming is reduced. Despite the higher investment requirements for this farm location, with some farms having to import cages and equipment from other countries [14], its utilization offers great potential to expand the industry in many parts of the world. Currents and greater depths generally increase the assimilation capacity and energy of the offshore environment and offer vast advantages for aquaculture farming. Because offshore cages are far from the coast, management and daily routine operations such as farm visits and monitoring cost more [20]. Recent technological innovations in offshore cage systems make aquaculture operations possible in the open ocean, and this industry is growing rapidly in different parts of the world.

Aquaculture production is very costly in terms of human labor and feed. Large aquaculture farms are located offshore in deep, open ocean waters, which allows them to produce in large volumes. Many offshore fish cages are submerged and can only be reached by boats and ships, which limits accessibility and adds capital costs [21]. Meanwhile, feed accounts for the highest share of cost during the production period [22]. Farming systems are also diverse in terms of methods, practices, and facilities. Climate change strongly affects the quality of aquaculture production (e.g., through changes in water temperature and water acidification); it has now become a threat to sustainable global fish production [2]. Global food loss and waste are also severe concerns. Proper handling from production and harvest through to consumption is essential to prevent these problems and preserve production quality [1].

Aside from feeding, farming in the grow-out phase involves tasks such as size grading and distribution of fish to maintain acceptable stocking densities, monitoring water quality and fish welfare, net cleaning, and structural maintenance. All these operations are important for obtaining good growth and ensuring fish welfare. Attaining profitability and sustainability in production requires a high degree of regularity in all these operations [23].

Offshore aquaculture farms with large-scale production require intensive manual labor and close human interaction for monitoring and management. Proper farm management requires regular monitoring, observation, and recording. For example, to monitor fish growth, the farmer must evaluate feed utilization and assess fish growth to optimize stocking, transfer, and harvests. According to FAO, the extent of farm monitoring depends on the educational level and skill of the farmer, the farmer’s interest in good management and profit, the size and organization of the aquaculture farm, and the external assistance available to farmers. Commercial farms need to monitor fish stocks closely. Farmers should also be aware of the various parameters for measuring the growth, production, and survival of aquaculture stocks. To achieve this, farm visits should take place at least once a day to check that water quality is good and that the fish are healthy. Close fish monitoring determines growth and feeding efficiency and allows the daily feeding ratio to be adjusted to save feed costs. Checking the adequacy of the stocking rate allows larger fish to be transferred or marketed immediately, and if the stock has reached the target weights, the production and harvesting schedule can be changed [24].

According to Wang et al. [25], intelligent aquaculture is now moving beyond data toward decision-making. Intelligent aquaculture farms should be capable of all-around fine control of various elements such as intelligent feeding, water quality control, behavior analysis, biomass estimation, disease diagnosis, equipment working condition, and fault warning. It is important to collect data from the aquaculture site for monitoring and to use technologies such as sensors and unmanned systems together with artificial intelligence (AI) for a smarter fish farm. Feeding management is one example: feed cost has the highest share in the production period [22], so to maximize profit there is a need to ensure that fish are not overfed, which adds cost, and not underfed, which affects fish growth and density and thus production quality. Bait machines help automate the feeding process, but to fully optimize it, information on the level of fish satiety or hunger is required. Information such as disturbance on the water surface can serve as a basis for determining the level of fish hunger or feeding intensity. A UAV can capture such information with its camera sensors and send it to the cloud, where AI services such as deep learning techniques evaluate the fish feeding intensity level. The analysis results are forwarded to the baiting machine to determine how much food to dispense. If fish feeding intensity is high, the feeding machine continues to give food; when there is none, it stops giving food [26].
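The feeding rule described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the four intensity levels, the dispensing rates, and the names `FeedingIntensity`, `dispense_rate`, and `run_feeding_session` are hypothetical and do not come from the cited works. The source states only that feeding continues while intensity is high and stops when there is none.

```python
from enum import Enum


class FeedingIntensity(Enum):
    """Hypothetical intensity classes, e.g. the output of an image classifier."""
    NONE = 0
    WEAK = 1
    MEDIUM = 2
    STRONG = 3


# Assumed dispensing rates in grams per second (illustrative values only).
DISPENSE_RATE_G_PER_S = {
    FeedingIntensity.NONE: 0.0,
    FeedingIntensity.WEAK: 10.0,
    FeedingIntensity.MEDIUM: 25.0,
    FeedingIntensity.STRONG: 50.0,
}


def dispense_rate(intensity: FeedingIntensity) -> float:
    """Map an observed feeding intensity to a feeder dispensing rate."""
    return DISPENSE_RATE_G_PER_S[intensity]


def run_feeding_session(observations, interval_s: float = 5.0) -> float:
    """Return the total feed (grams) dispensed over a sequence of observations.

    Each observation covers `interval_s` seconds. The session ends as soon
    as the fish show no feeding activity, so later observations are ignored.
    """
    total_g = 0.0
    for intensity in observations:
        if intensity is FeedingIntensity.NONE:
            break  # fish stopped feeding: stop the machine
        total_g += dispense_rate(intensity) * interval_s
    return total_g
```

In a deployed system the observations would arrive one at a time from the cloud analysis rather than as a list; the list form is used here only to keep the sketch self-contained.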

1.2. Aquaculture’s Technological Innovation for Precision Farming

Given the challenges in aquaculture production described above, various strategies need to be identified and adopted. To address these issues, technology integration has become widespread over the past decades, automating tasks or helping aquaculture farmers monitor and manage their farms for improved sustainability. Technological innovations (such as breeding systems, feeds, vaccines) and non-technological innovations (e.g., improved regulatory frameworks, organizational structures, market standards) have enabled the growth of the aquaculture industry. Radical and systemic innovations are necessary to achieve the ecological and social sustainability of aquaculture [27]. Integrating smart fish farming as a new scientific method can optimize the use of available resources. It will also promote sustainable development in aquaculture through deep integration of the Internet of Things (IoT), big data, cloud computing, artificial intelligence, and other modern technologies. A new mode of fishery production is achieved through real-time data collection, quantitative decision making, intelligent control, precise investment, and personalized service [28]. Various technological innovations are already available to improve aquaculture production and management [29]. Unmanned vehicles equipped with aerial cameras, sensors, and computational capability are widely used for site surveillance [30].

Precision fish farming, as described by Føre et al. [31], aims to apply control engineering principles in fish production to improve farm monitoring and control and to allow documentation of biological processes. This method makes it possible for commercial aquaculture to transition from a traditional experience-based production method to a knowledge-based production method using emerging technologies and automated systems that address the challenges of aquaculture monitoring and management. Precision fish farming aims to improve the accuracy, precision, and repeatability of farming operations. This precision facilitates more autonomous and continuous biomass/animal monitoring. It also provides more reliable decision support and reduces dependence on manual labor and subjective assessments, improving worker safety and welfare. Furthermore, O’Donncha and Grant [32] described precision aquaculture as a set of disparate and interconnected sensors deployed to monitor, analyze, interpret, and provide decision support for farm operations. Precision farming in the ocean will help farmers respond to natural fluctuations that impact operations by using real-time sensor technologies, so that they no longer rely on direct human observations and human-centric data acquisition. Thus, artificial intelligence (AI) and IoT connectivity now support farm decision-making.

Unmanned vehicles and aircraft are among the emerging technologies used by individuals, businesses, and governments for different purposes, particularly in the military field. Recently, they have become well utilized in agriculture and aquaculture for managing and monitoring fish because of their availability and affordability [33]. They can reach remote areas with little time and effort. Users can control the flight or navigation using only a remote control or a mobile application. When UAVs were introduced in the 20th century, their intended function was military [34,35,36]. However, in the last few years, drone capabilities have expanded, and drones can now accomplish multiple, simultaneous functions. These include aerial photography [37], shipping and delivery [38,39,40], data collection [41,42], search and rescue operations during disasters or calamities [43], agricultural crop monitoring [44], and natural calamity monitoring and tracking [45]. UAVs have also been successfully integrated into marine science and conservation. De Lima et al. [46] provided an overview of the application of unoccupied aircraft systems (UAS) in marine science and conservation. Their study covered electro-optical RGB cameras as well as multispectral, thermal infrared, and hyperspectral systems. The applications of UAS they describe include animal morphometrics and individual health, animal population assessment, behavioral ecology, habitat assessment and coastal geomorphology, management, maritime archaeology and infrastructure, pollutants, and physical and biological oceanography. Some of these applications could also be used in the aquaculture environment.

Today, drones have been successful in collecting data on the environment and on fish behavior at the aquaculture site for monitoring [47]. In the work of Ubina et al. [30], an autonomous drone performs visual surveillance to monitor fish feeding activities, detect nets, moorings, and cages, and detect suspicious objects (e.g., people, ships). The drone can fly above the aquaculture site to perform area surveillance and auto-navigate based on the instructions or commands provided. The autonomous drone can determine the position of the target objects from the information provided by the cloud, which makes it more intelligent than the usual drone navigation scheme. It becomes an intelligent flying robot that captures distant objects and valuable data. The drone can also execute a new route based on the path planning generated by the cloud, unlike a non-autonomous drone, which only follows a specific path [30]. This autonomous capability reduces the need for human interaction; on-site monitoring and inspection activities can be controlled or reduced [23].

The paper is organized as follows: Section 2 describes the methodology, and Section 3 presents the unmanned vehicle system platforms. Section 4 presents the framework of aquaculture monitoring and management using unmanned vehicles, while Section 5 covers the capability of unmanned vehicles as a communication gateway and IoT device data collector. Section 6 describes how unmanned vehicles are used for site surveillance, Section 7 covers aquaculture farm monitoring and management functions, and Section 8 contains the regulations and requirements for unmanned vehicle system operations. Lastly, Section 9 discusses the challenges and future trends, and Section 10 gives the conclusions.

2. Methodology

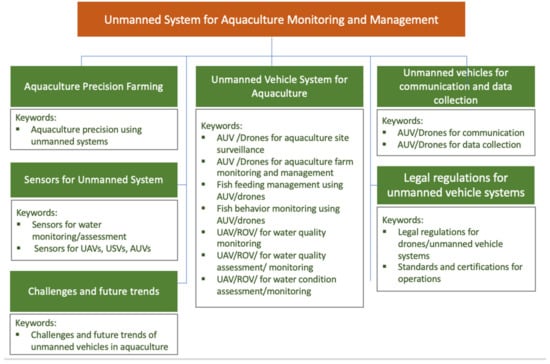

The purpose of this paper is to review the literature and studies on the applicability of unmanned systems to aquaculture monitoring and surveillance. The majority of the literature search was made using the Web of Science (WOS) database. Factors considered in the selection of articles include relevance to the keywords provided for the search and the number of paper citations. There were no restrictions on the date of publication. Figure 1 is the taxonomy used for keyword extraction in the Web of Science database to determine the trend and the number of works involving unmanned vehicle systems for aquaculture. The authors also used Google Scholar, IEEE Xplore, and Science Direct to search for related works.

Figure 1. Taxonomy for keyword extraction in the database search.

The articles from the keyword search were the basis for identifying the capabilities, progress, gaps, and challenges of unmanned vehicle systems for aquaculture site monitoring and management. We also analyzed the search results from the WOS database to identify the trend in research interest based on the number of journal articles published each year. Graphs were generated to present the results of the analysis. Samples of the results are in Figure 2, Figure 3 and Figure 4.

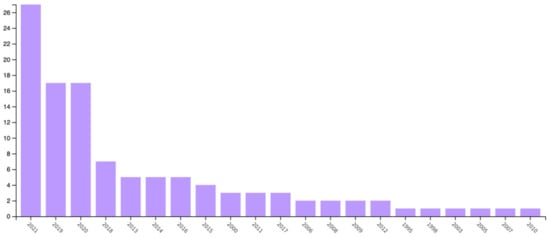

Figure 2. Publication result by year using the keyword “aquaculture precision farming”.

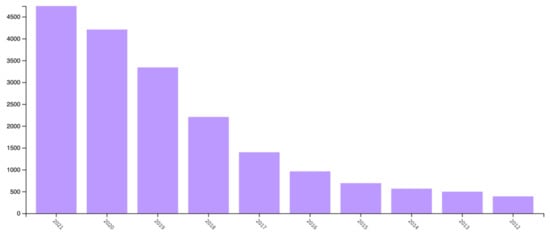

Figure 3. Publication result by year using the keywords “aquaculture precision” and “unmanned vehicle” or “unmanned system”.

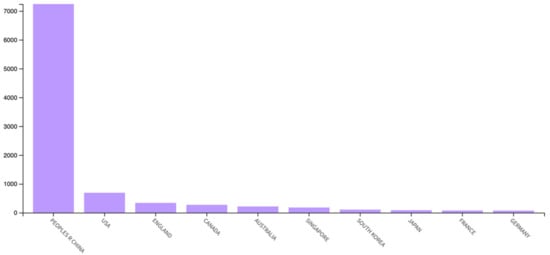

Figure 4. Publication result by country using the keywords “aquaculture” and “unmanned vehicle” or “unmanned system”.

3. Unmanned Vehicle System Platforms

Unmanned vehicles can improve mission safety and repeatability and reduce operational costs [48]. The tasks performed by unmanned vehicles are typically dangerous or relatively expensive for humans to execute. In addition, they are assigned jobs that are simple but repetitive and less expensive to carry out without humans [49]. Low-cost, off-the-shelf systems are now emerging, but many still require customization [48] to meet the specific requirements of aquaculture monitoring and management. The work of Verfuss et al. [50] details the current state-of-the-art autonomous technologies for marine species observation and detection. Although it does not focus on aquaculture, its underlying principles and requirements can be adopted in aquaculture monitoring.

In this paper, the authors describe the capabilities and limitations of unmanned vehicle systems for monitoring and managing aquaculture farms. The functions covered in this review as mechanisms to achieve precision aquaculture are the assessment of water quality, water pollutants, water temperature, fish feeding, and water currents; the use of drones as a communication gateway; cage detection; farm management; and surveillance of illegal fishing. Three classes of unmanned vehicles are considered for aquaculture monitoring and management: unmanned aircraft systems, autonomous underwater vehicles, and unmanned surface vehicles. Each has its respective capabilities and limitations. However, they can be used together to attain the goal of aquaculture monitoring and management. The strengths of one type of unmanned vehicle can address the limitations of the others to increase robustness and efficiency.

3.1. Unmanned Aircraft Systems (UAS)

Unmanned aircraft systems (UAS) or unmanned aerial vehicles (UAVs) provide an alternative platform that addresses the limitations of manned aerial surveys. According to Jones et al. [51], UAS surveillance avoids the high cost of manned surveys and works best where geospatial accuracy of the acquired data and survey repeatability are required. Potential advantages of UAS are lower operating costs and consistency of flight path and image acquisition. A UAS should be small, driven by an electric motor, easy to use, and affordable, and should record and store data onboard to prevent data loss or degradation during transmission [50]. For real-time monitoring, the UAS should send data using its wireless capability. Because they are pilotless, UAS can operate in dangerous environments inaccessible to humans [52]. For surveillance and monitoring, they carry sensors such as cameras aloft to observe the targets of interest [53]. Cameras installed on UAVs can also serve as data collectors and send data to a repository system. Additionally, recent developments in UAS provide longer flight durations and improved mission safety. Although UAS have strong potential for aquaculture monitoring, their success still depends on various factors such as aircraft flight capability, type of sensor, purpose, and the regulatory requirements for operating a specific platform [54].

At the highest level, the three main UAS components are the unmanned aerial vehicle, ground control, and the communication data link [55]. Low-cost or multi-rotor drones are easy to control and maneuver and can take off and land vertically. Multi-rotor UAS are built from lightweight materials such as plastic, aluminum, or carbon fiber to increase efficiency, and their wingspans range from 35 to 150 cm. They are ideal for small areas and can be controlled from the deck of a small boat [56], but they are limited in flight time and in their capacity to withstand strong wind conditions. An alternative to multi-rotor drones is single-rotor or helicopter drones [57]; they are built for power and durability, with long flight times and heavy payload capability. However, single-rotor drones are harder to fly, can be expensive, and have more complex requirements.

Fixed-wing drones can travel several kilometers, fly at high altitudes and speeds, and cover larger areas for surveillance. They can also carry more payload and have more endurance, which allows long-term operations. They can be fully autonomous, in which case they do not require piloting skills [58]. Like single-rotor drones, fixed-wing drones are expensive, and non-autonomous aircraft require training to fly. Aside from being difficult to land, they can only move forward, unlike the other two drone types, which can hover over the target area.

3.2. Autonomous Underwater Vehicles (AUVs)

Autonomous underwater vehicles (AUVs) or remotely operated underwater vehicles (ROVs) are waterproof and submersible; they are equipped with cameras to capture images and videos and with other sensors to collect data such as water quality. ROV sensors can collect data such as water temperature, depth, and chemical, biological, and physical properties. They are equipped with lithium-ion batteries that enable extended operating time [59] for navigation or data collection. AUVs are now preferred over human divers for underwater inspections because they cost less and provide better safety. They can provide a 4D view of the dynamic underwater environment and can carry a wide range of payloads or sensors. As the ROV moves through the water, its sensors can perform spatial and time-series measurements [60].

Because AUVs operate submerged, their challenges include high-precision navigation [61], communication, and localization, since they cannot rely on radio communications and global positioning systems [62]. Many alternatives have been devised to deal with these challenges. One is Geophysical Navigation, which matches sensor measurements against geophysical maps [63]. In addition, UV navigation that uses a differential Global Positioning System (DGPS) achieves high precision. When the vehicle is submerged, its position is estimated by measuring its speed relative to the current or seabed using an Acoustic Doppler Current Profiler (ADCP). For more precise navigation, an inertial navigation unit is used with positioning from a sonar system [60].
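The idea of estimating a submerged position from measured speed can be illustrated with a simple dead-reckoning sketch. This is a simplified two-dimensional example under stated assumptions, not the method of the cited works: it assumes the vehicle starts from a known surface fix, that each sample supplies a speed over the seabed together with a compass heading, and it ignores the inertial and sonar corrections mentioned above. The function name `dead_reckon` and the sample format are hypothetical.

```python
import math


def dead_reckon(start_xy, samples):
    """Integrate velocity samples into an (east, north) position in metres.

    start_xy : last known position, e.g. a surface fix before diving.
    samples  : iterable of (speed_m_s, heading_deg, dt_s) tuples, where
               heading_deg is measured clockwise from north and dt_s is
               the time covered by the sample.
    """
    east, north = start_xy
    for speed_m_s, heading_deg, dt_s in samples:
        heading_rad = math.radians(heading_deg)
        # Compass convention: 0 deg is north, 90 deg is east.
        east += speed_m_s * math.sin(heading_rad) * dt_s
        north += speed_m_s * math.cos(heading_rad) * dt_s
    return east, north
```

For example, ten seconds at 1 m/s heading north followed by five seconds at 2 m/s heading east moves the estimate about 10 m north and 10 m east of the starting fix. Because every sample carries measurement error, the error of such an estimate grows with time, which is why additional positioning aids are used for more precise navigation.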

Vehicle endurance is also a requirement for AUVs, and they should be less dependent on weather and on water current or pressure. AUVs should be equipped with reliable navigation to perform surveillance functions such as fishnet inspections and fish monitoring. Bernalte Sánchez et al. [64] summarized the navigation and mapping of AUV embedded systems for offshore underwater inspections, where sensors and technologies are combined to create a functional system with improved performance. Niu et al. [60] listed the specifications of candidate sensors embedded in AUVs, such as sensors for salinity, hydrocarbons, nutrients, and chlorophyll.

3.3. Unmanned Surface Vehicles (USVs)

Unmanned surface vehicles (USVs) or autonomous surface craft [65] operate on the water without human intervention. They were developed to support unmanned operations such as environmental monitoring and data gathering [62]. USVs should be easy to handle and durable in the field environment. They can get close to objects or targets quickly to gather high-resolution images. They are also fast-moving, can cover large areas, carry accurate sensors, and execute run-time missions [66]. However, the autonomy level of USVs is still limited when they are deployed to conduct multiple tasks simultaneously [67]. To form large heterogeneous communication and surveillance networks, USVs can cooperate with other UVs such as UAVs. One unique potential of USVs is to communicate simultaneously with other vehicles located either above or below the water surface. USVs can also act as relays between vehicles operating underwater, inland, in air, or in space [68].

Combining various unmanned vehicle systems lets them collaborate, perform broader tasks with wider coverage, and offset the limitations of each type. Working together, UAVs and USVs can cruise in synergy to provide richer information, functioning as an electronic patrol system. A USV-UAV collaborative technique can perform tasks such as mapping and payload transportation. In this way, the combined system can handle more complex tasks with increased robustness through redundancy, increased efficiency through task distribution, and reduced cost of operations [66]. These heterogeneous vehicles can work collaboratively to achieve large-scale and comprehensive monitoring. Although there are still many open research issues in heterogeneous vehicle collaboration [69], exploring it should increase performance, adaptability, flexibility, and fault tolerance [66].

4. Unmanned Vehicles and Sensors

The capabilities of unmanned systems to navigate and to monitor several quantities in their environment depend strictly on their sensors [70], measurement systems, and data processing algorithms. Sensor fault detection is also essential to ensure safety and reliability. UVs have different numbers, types, and combinations of sensors mounted in various ways to measure information using specific, diverse, and customized algorithms. Therefore, finding a single optimal sensor for all tasks, applications, and vehicle types is an unsolvable problem. Individual sensor specifications and characteristics affect the performance of a UV, as do other factors such as operating conditions and environment [71]. One of the primary purposes of a sensor is to collect data relevant to a mission beyond platform navigation. Examples of data collected by sensors include acoustic profiles, radar and infrared signatures, electro-optical images, local ocean depth, and turbidity. Major sensor subtypes are sonar, radar, environmental, and light or optic sensors [72]. Generally, aerial systems rely on electro-optical imaging sensors, while underwater and surface vehicles mostly rely on acoustic methods [48].

A UAV’s flight position and orientation are determined by combining accelerometers, tilt sensors, and gyroscopes [71]. Aside from GPS, and as shown in Table 1, USVs can also use radars or inertial navigation systems (INS) if the satellite signal is unavailable. Since UAVs are vulnerable to weather conditions such as rain or wind, they should be equipped with wind-resistant equipment. Visual cameras can have shockproof and waterproof casings for protection. Extreme wind, rain, or storms can cause UAVs to deviate from their intended missions, and small UAVs cannot operate in such weather conditions. UAVs must adapt to atmospheric density and temperature changes to preserve their aerodynamic performance.

Table 1. Navigational payload characteristics.

Cameras are the most common sensor payload. Although smaller cameras are lighter and easier to deploy, larger cameras provide better image quality. RGB digital cameras provide high spatial resolution, and the spatial resolution of the RGB sensor determines the quality of the acquired images [71]. The work of Liu et al. [83] provided a detailed discussion of the various sensors shown in Table 2.

Table 2. Characteristics of exteroceptive sensors; adapted from Balestrieri et al., Liu et al. and Qiu et al. [71,83,84].

One of the challenges in collecting images and video in the underwater environment is data quality; an AUV should be capable of collecting high-definition data for monitoring. Images captured by an AUV are affected by the amount of light available, which is poor underwater because of light scattering or, in shallow coastal water, turbidity [71]. AUVs cannot receive GPS signals; instead, they depend on acoustics, sonar, cameras, INSs, or combinations of such systems to navigate. Sonars are widely used for detection, tracking, and identification, but they are limited because sound propagation depends on temperature and salinity, and calibration is also required [72].
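To illustrate why sonar ranging needs calibration, the dependence of sound speed on temperature and salinity can be approximated with Medwin's simplified empirical formula. This formula is not taken from the works cited here; it is a commonly used approximation, roughly valid for temperatures of 0 to 35 °C, salinities up to about 45 and depths up to about 1000 m, and the function name `sound_speed_medwin` is only illustrative.

```python
def sound_speed_medwin(temp_c: float, salinity_psu: float, depth_m: float) -> float:
    """Approximate speed of sound in seawater in m/s (Medwin's simplified formula).

    temp_c       : water temperature in degrees Celsius
    salinity_psu : salinity in practical salinity units (parts per thousand)
    depth_m      : depth below the surface in metres
    """
    t = temp_c
    return (
        1449.2
        + 4.6 * t
        - 0.055 * t**2
        + 0.00029 * t**3
        + (1.34 - 0.010 * t) * (salinity_psu - 35.0)
        + 0.016 * depth_m
    )
```

With this approximation, surface water at a salinity of 35 gives about 1490 m/s at 10 °C and about 1522 m/s at 20 °C. A sonar that converts echo travel time to range with a fixed sound speed would therefore misjudge distances by roughly two percent between these two conditions, which is one reason calibration against local water properties is needed.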

For unmanned surface vehicles, the water environment is affected by wind, waves, currents, sea fog, and water reflection [85]. There are remedies for dealing with these environmental disturbances to make the USV more robust. Monocular vision is strongly affected by weather and illumination conditions and incurs a high computational cost when obtaining high-resolution images [71]. Image stabilization, image defogging, wave information perception, and multi-camera methods are some solutions for the factors that degrade image quality under such weather conditions. For stereo vision, its lenses can calculate the flight time to generate a depth map that serves as an obstacle map for near-field collision avoidance. Stereo cameras can also extract color and motion from the environment, but they are affected by weather and illumination conditions and have a narrow field of view. Infrared vision can operate during day and night since it can overcome problems caused by light conditions (night and fog). Omnidirectional cameras have a large field of view but require high computational cost; images from this type of camera are also affected by illumination and weather conditions. Infrared cameras perform well at night but are limited in providing color and texture information, and their accuracy is low. Event cameras reduce transmission and processing time but generate low-resolution outputs and, like the others, are affected by weather and illumination conditions [85]. Table 3 shows the advantages and limitations of various USV sensors.

Table 3.

Advantages and limitations of various sensors for USVs.

When selecting underwater water quality sensors, the factors to be considered are physical, chemical, and biological parameters [90]. Bhardwaj et al. [91] enumerated the requirements for aquaculture sensors. First, sensors should sense data over long periods without being cleaned, maintained, or replaced. Second, they should have a low energy demand so that the power of the UV is conserved for longer monitoring. Third, sensors require waterproof isolation and must resist corrosion and biofouling. Fourth, since organisms in the sea can alter the sensor surface and change the transparency and color, the potential flight path must be properly designed. Fifth, sensors should have no harmful effect on the fish: avoid sensors that use ultraviolet light, acoustic beams that can be felt by the fish, and magnetic fields that can disturb fish activities. In addition, sensors should not alter fish swimming or feeding activities. In summary, sensors must be low maintenance, low cost, low in battery consumption, robust, waterproof, non-metallic, resistant to biofouling, and harmless to organisms. Modern real-time water quality sensors such as optical sensors and biosensors offer higher sensitivity, selectivity, and quick response time, with the possibility of real-time data analysis [92].

Although sensor fusion can add cost to the operation and to UV payloads, integrating multiple sensors combines their complementary strengths to achieve higher accuracy and increased system robustness. When selecting a sensor, one must consider the cost, specifications, application requirements, power, and environmental conditions.

5. Framework of the Aquaculture Monitoring and Management Using Unmanned Vehicles

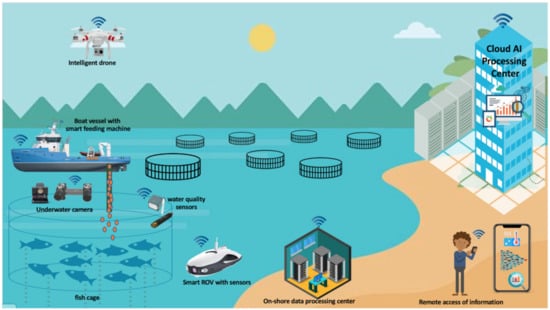

The architecture presented in Figure 5 provides a framework for how a drone works with sensors such as underwater cameras and water quality devices. These sensors are installed in the fish cage and transmit the collected data to a cloud system through a Wi-Fi communication channel. The cloud server serves as a repository and is equipped with data processing and analytics capabilities using AI-based techniques (e.g., computer vision, deep learning). Collecting data from the underwater environment with sensors is a non-invasive and non-intrusive method that yields an enormous amount of data. This approach can achieve real-time image analysis for aquaculture operators [47]. Different data can be collected from the aquaculture site using these sensors to monitor the behavior of fish and the water quality of the aquaculture farm. The collected data inform the aquaculture farmers and enable them to intervene immediately, so that farm produce and processes are optimized and of high quality, helping to increase production and income. As a specific example, data such as the satiety level of the fish are analyzed and transformed into meaningful information that controls how the smart feeding machine dispenses food: while the fish are not yet satiated, food continues to be dispensed, and once satiety is reached, the amount of food dispensed is reduced or stopped. Real-time information with these mechanisms helps achieve optimal aquaculture performance.

Figure 5.

Architecture for aquaculture monitoring and management using drones.

In addition to capturing or collecting data from the aquaculture site, unmanned vehicles can use their mobility to act as a communication channel: as a Wi-Fi gateway connecting underwater cameras and sensors to the cloud, they provide more services for precision aquaculture. Since the cameras installed in aerial drones cannot capture underwater events, fish cages are equipped with stationary cameras (e.g., sonar, stereo camera systems) and other sensors to perform specific tasks. The drone eliminates the need for long cables and provides a more reliable connection [30]. Aerial drones work best for functions that involve mapping, site surveillance, inspection, and photogrammetric surveys. AUVs and USVs, on the other hand, can perform other monitoring and assessment functions, such as water quality and condition assessment, that cannot be fully addressed by aerial drones. This method entails additional costs and technical requirements; however, it offers a more extensive area and scope for monitoring functions.

With this ability, users such as aquaculture farm owners can remotely monitor their aquaculture farms and assess fish welfare and stock. With the vast and varied data collected from the aquaculture site, data-driven management of fish production is now possible. This scheme improves the ability of farmers to monitor, control, and document biological processes on their farms so that they can understand the environmental conditions that affect the welfare and growth of fish [93].

6. Unmanned Vehicles as Communication Gateway and IoT Device Data Collector

In developed countries where access to the Internet is not a problem, the Internet of Things (IoT) is helpful to farmers. This connectivity helps increase production, reduce operating costs, and enhance labor efficiency. The IoT has made remarkable progress in collecting data and establishing real-time processing through the cloud using wireless communication channels. With 5G technology, combining UAVs and IoT is a great advantage for extending coverage to rural or remote areas [94], which is where aquaculture farms are located; it is therefore appropriate to exploit this capability. LTE 4G/5G networks and mobile edge computing now broaden the coverage of UAVs [95] and even enable real-time video surveillance [96].

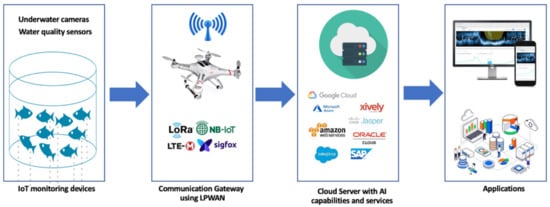

The drone as a flying gateway is equipped with an LTE cellular link to base stations and a lightweight antenna to collect data. The UAV acts as an intermediate node that collects data from sensors and transmits them to their target destinations. The drone flies to the location of the IoT devices to offer additional coverage or support to the aquaculture farm in case there are problems with the wired connection of the devices. The gateway can receive sensor data and send them to the servers [94], where additional processing strategies such as artificial intelligence and deep learning techniques can be applied. Where IoT communication is not available, a drone can also serve as a node of the wireless sensor network and receive the collected data from the sensor nodes; it then moves to an area where wireless IoT communication is possible and transfers the data to the IoT server [97]. Various sensor devices, such as underwater cameras and water quality sensors, can be connected to aquaculture cages and farms; Arduino [94] and Raspberry Pi boards can be embedded as part of an IoT platform, as shown in Figure 6.

Figure 6.

IoT platform using drone as a communication gateway.
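The store-and-forward role described for the drone, in which it collects readings from sensor nodes that have no network coverage and uploads them once it reaches an area with connectivity [97], can be sketched as follows. This is a minimal illustration under stated assumptions, not the scheme of any cited work: the names `Reading`, `DroneGateway`, and `uplink` are hypothetical, and the bounded buffer that drops the oldest readings first is only one possible policy.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable, Deque, List


@dataclass(frozen=True)
class Reading:
    """One sensor sample from a cage-mounted IoT node (illustrative fields)."""
    node_id: str
    timestamp_s: float
    kind: str      # e.g. "temperature_c" (hypothetical label)
    value: float


class DroneGateway:
    """Hypothetical store-and-forward gateway carried by the drone."""

    def __init__(self, capacity: int = 1000) -> None:
        # Bounded buffer: when it is full, the oldest readings are dropped.
        self._buffer: Deque[Reading] = deque(maxlen=capacity)

    def collect(self, readings: List[Reading]) -> None:
        """Store readings received while hovering near a sensor node."""
        self._buffer.extend(readings)

    def pending(self) -> int:
        """Number of readings waiting to be forwarded."""
        return len(self._buffer)

    def flush(self, uplink: Callable[[Reading], bool]) -> int:
        """Forward buffered readings once connectivity is available.

        `uplink` must return True when the server accepted a reading.
        Forwarding stops at the first failure, so unsent data stay buffered.
        """
        sent = 0
        while self._buffer:
            if not uplink(self._buffer[0]):
                break
            self._buffer.popleft()
            sent += 1
        return sent


if __name__ == "__main__":
    gateway = DroneGateway(capacity=100)
    gateway.collect([
        Reading("cage-01", 0.0, "temperature_c", 24.1),
        Reading("cage-01", 60.0, "temperature_c", 24.3),
    ])
    server: List[Reading] = []
    # list.append returns None, so this stand-in uplink always succeeds.
    sent = gateway.flush(lambda r: server.append(r) is None)
    print(f"forwarded {sent} readings, {gateway.pending()} still buffered")
```

Stopping at the first failed upload keeps the buffer order intact, so the drone can retry from the same reading when it next reaches coverage.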

To maximize the drone’s capability, it is important to optimize its energy consumption. Min et al. [97] propose a dynamic rendezvous node estimation scheme that considers the average drone speed and data collection latency to increase the data collection success rate. Many devices can be embedded on the drone to provide a better wireless communication network. For example, a Low-Power Wide-Area Network (LPWAN) gateway can be installed onboard the UAV. LoRa gateways are well known for their coverage and low power consumption.

Short-range communication technologies such as Bluetooth, ZigBee, and Wi-Fi are convenient for communication between sensors and gateways. However, with drones as a communication gateway, a Low-Power Wide-Area Network (LPWAN) has the advantage of providing extended communication coverage. Table 4 presents the advantages and disadvantages of the different types of LPWAN. Comparative studies of LPWAN technologies for large-scale IoT deployment and IoT-based smart applications are available in [98,99]. Yan et al. [100] made a comprehensive survey of UAV communication channel modeling that takes into account propagation losses and link fading and discusses the challenges and open issues for the development of UAV communication.

Table 4.

Comparison of LPWAN wireless technologies.

With this capability as a communication gateway, drones can serve as a medium to help achieve the goal of precision aquaculture. The drone provides wireless communication for IoT devices to send data to the cloud for processing, thus acting as a data collection medium. Data acquisition using UAVs is less expensive and more convenient than hiring manned aircraft, especially in remote and inaccessible places such as offshore aquaculture farms. UAVs, when combined with deep learning, can provide tremendous innovation for aquaculture farm management.

Given the identified potential of drones as a communication channel [106], pairing them with cameras and sensors (e.g., stereo camera systems, sonar devices) to capture the underwater environment is promising. The drone collects the data and sends them to the cloud, where AI services based on computer vision and deep learning techniques are applied. The processed output informs users about the current conditions of the aquaculture farms. Fish survey activities [107] that can be performed include the detection of fish behaviors such as schooling [108,109,110], swimming [111,112,113], stress response [110,114,115], tracking [116,117], and feeding [112,118,119]. Determining the satiety or feeding level of fish for a demand feeder involves fish feeding intensity evaluation [26,120] and detection of uneaten food pellets [120,121]. The video collected from the aquaculture site can help estimate fish growth [122,123], fish count [124,125,126], and fish length and density [127,128,129,130,131], with the drone acting as the device that transmits this information to the cloud for processing and data analytics to make predictions or estimates [132,133].

7. Aquaculture Site Surveillance Using Unmanned Vehicles

Illegal fishing is a global problem that threatens the viability of fishing industries and causes profit loss to farmers. On-the-ground surveillance is the typical way to monitor or minimize this practice [134], but it has a very high operational cost. Submersible drones and UAVs are now capable of detecting illegal fishing activities [135] at a lower cost [136,137].

An unmanned surveillance system involving fish farmers, vessels, and fish stocks was used to detect unauthorized fishing vessels [138]; the advantage of the vehicles in speed and size allows them to go unnoticed while performing surveillance. Automatic ship classification is relevant for maritime surveillance in detecting illegal fishing activities, which severely affect the income of aquaculture farmers. Gallego [139] uses drones to capture aerial images for the detection and classification of ships. In the work of Marques et al. [140], vessels are detected in aerial image sequences acquired by sensors mounted on a UAV using sea vessel detection algorithms. A surveillance system framework using drone aerial images and deep learning was proposed [141] to eliminate illegal fishing activities. The ship is first detected to identify its position, and the hull plate of the vessel is then classified to determine whether the vessel is authorized. The drone provides visual information using its installed camera. Additionally, because crabs are highly valued commercial commodities, drones with infrared cameras were also used to detect crab traps and floats [134,135] to prevent illegal activities.

Remote sensing platforms with global positioning system capabilities, such as drones, can support marine spatial planning by providing a wide spatio-temporal range for marine and aquaculture surveillance [142]. Drones are also applied to 3D mapping [143], aerial mapping [144], and low-altitude photogrammetric surveys [145]. Semantic scene modeling was integrated to manage aquaculture farms using autonomous drones and a cloud-based aquaculture surveillance system as an AIoT platform. The scene modeling algorithm transfers information to the drone through the aquaculture cloud to monitor fish, persons, nets, and feeding levels daily. The drone acts as an intelligent flying robot to manage aquaculture sites [146].

A UAV with an onboard camera was also used for cage detection. The UAV’s GPS serves as a guide to the approximate location of the cages; image recognition methods are then applied to detect the fish cage and obtain its position relative to the UAV. The drone uses this information to adjust its position and proceed to the target object [147]. Additionally, UAVs can be used for cage farming environment inspection [29] without installing a hardware system in each cage, which would entail a higher farming cost. Even a single UAV can fly around all fish cages to capture data on the cage environment, drastically reducing the aquaculture operation cost. UAVs have also been used to inventory salmon spawning nests, capturing high-resolution images and videos to identify spawning locations and habitat characteristics; nest abundance and distribution are metrics for monitoring and evaluating adult salmon populations [148].
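The position-adjustment step in cage detection can be illustrated with a small geometric sketch. It is not the method of [147]; it assumes a downward-looking (nadir) camera with square pixels and a known horizontal field of view, and it takes the bounding box of the detected cage as given by some image recognition step. The function name `cage_offset_m` and all parameter values are hypothetical.

```python
import math
from typing import Tuple

# Bounding box of the detected cage in pixels: (x_min, y_min, x_max, y_max).
BoundingBox = Tuple[float, float, float, float]


def cage_offset_m(
    bbox: BoundingBox,
    image_size: Tuple[int, int],
    altitude_m: float,
    hfov_deg: float,
) -> Tuple[float, float]:
    """Convert a detection into a (right, forward) offset in metres.

    Assumes a nadir-pointing camera with square pixels, so one
    metres-per-pixel scale applies along both image axes.
    """
    x_min, y_min, x_max, y_max = bbox
    width_px, height_px = image_size

    # Width of the ground strip covered by the image at this altitude.
    ground_width_m = 2.0 * altitude_m * math.tan(math.radians(hfov_deg) / 2.0)
    metres_per_px = ground_width_m / width_px

    # Centre of the detected cage in pixel coordinates.
    u = (x_min + x_max) / 2.0
    v = (y_min + y_max) / 2.0

    right_m = (u - width_px / 2.0) * metres_per_px
    # Image rows grow downward, so "forward" is towards the top of the image.
    forward_m = (height_px / 2.0 - v) * metres_per_px
    return right_m, forward_m


if __name__ == "__main__":
    # Illustrative numbers only: 1000 x 1000 px image, 10 m altitude, 90 deg FOV.
    right, forward = cage_offset_m((700, 450, 800, 550), (1000, 1000), 10.0, 90.0)
    print(f"move {right:.2f} m right and {forward:.2f} m forward")
```

In such a scheme the GPS fix would bring the UAV close enough for the cage to appear in the image, and the offset would then be recomputed on each frame until it falls below a chosen tolerance.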

In Japan, an agile ROV was developed to perform underwater surveillance with real-time monitoring. The ROV is designed for easy transport, short startup time, effortless control, and high-resolution imaging at a low cost [149]. Drones are also applied to the assessment of fishery damage from natural hazards: they can survey fish groups, assist in salvage operations, and conduct aquaculture surveys and management after disasters [150]. In India, an AUV replaced expensive sonar equipment to perform surveillance and relay the data together with the global positioning system location. The drone serves as a bird’s eye view to monitor the surrounding ocean surface as a person with normal vision would see it [151]. Autonomous vehicles are also applied to increase spatial and temporal coverage. They can transit to remote target areas and provide real-time observations, with more potential than traditional ship-based surveys. Two sail drones (USVs) were equipped with echo sounders to perform acoustic observations [152].

In the work of Livanos et al. [153], an AUV prototype was proposed as an IoT-enabled device. Machine vision techniques were incorporated to enable correct positioning and intelligent navigation in the underwater environment, where GPS positioning is limited because wireless signals do not propagate well through water. The AUV was programmed to record video while scanning the fish cage net area and to save this information in its onboard memory storage. Its navigation scheme is based on a combined optical recognition/validation system, with photogrammetry applied to a reference target of known characteristics attached to the fishnet. The AUV captures video of the fish cage area successively at a relatively close distance to address the fishnet consistency problem. The AUV architecture is a cost-effective way to automate the inspection of aquaculture cages and achieved real-time behavior.

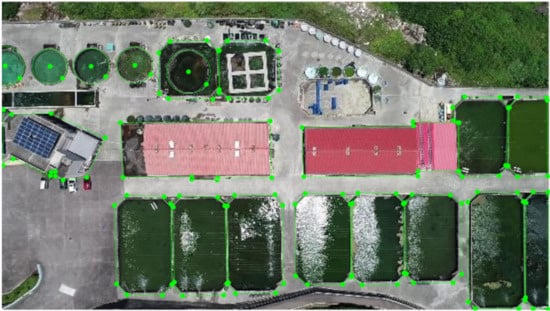

In the work of Kellaris et al. [154], drones were evaluated as monitoring tools for seaweeds using a low-cost aircraft. Compared to satellites and typical airborne systems as sources of images, drones achieve a very high spatial resolution, which addresses the problems of high habitat heterogeneity and species differentiation that apply to seaweed habitats. A sample image captured by a drone for aquaculture site surveillance is shown in Figure 7. The application of drones makes surveying more accessible, with a larger range and scope of integration into aquaculture, fisheries, and marine-related applications. Table 5 shows the different types of drones and the embedded sensors for site surveillance and their corresponding applications.

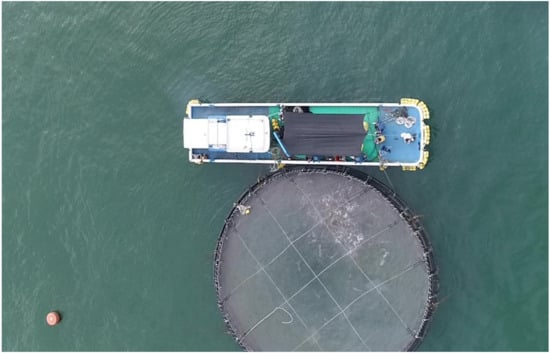

Figure 7.

Aerial view of in-land aquaculture site with scene modelling with detected objects such as fish pen, cages and house for site surveillance.

Table 5.

Unmanned vehicles and their applications to aquaculture site surveillance.

Where possible, offshore aquaculture cages are positioned relatively close to onshore facilities to minimize the distance-related costs of transport and maintenance services [155]. Table 6 provides the characteristics of the three aquaculture farm locations (coast, off-coast, and offshore) based on physical and hydrodynamical settings. The table draws on Chu et al. [156], who reviewed cage and containment tank designs for offshore fish farming, and on Holmer [157], who provided the characteristics. Accessibility to aquaculture farms is also limited by weather conditions.

Table 6.

Characteristics of coast, off-coast, and offshore aquaculture farms; adapted from Chu et al., Holmer and Marine Fish Farms [156,157,158].

The data provided in the table, especially the distance of the cages from the shore, are significant since they help determine the capability of the unmanned vehicle to perform navigation and monitoring. In Taiwan, the distance from the shore to the offshore cages ranges from 2 to 11 km, while the inshore cages are one kilometer away. This distance determines the amount of time the unmanned vehicle needs to travel. Commercial UAVs are widely used for inspection since they are low-cost, but they are limited in terms of flight hours and payload capacity. Table 7 shows the characteristics of the UAV’s performance measures.

Table 7.

Characteristics of UAV types; adapted from Gupta et al., Fotouhi et al., Shi et al. and Delavarpour et al. [58,159,160,161].

The battery life of UAVs is limited, especially for small ones [162] (16 to 30 min for commercial drones), which restricts their operational range. For example, the DJI Mavic Air 2, a quadcopter UAV that costs approximately $800, has a flight time of only 34 min. Military drones have longer flying times but cost millions of dollars. Fixed-wing drones with longer flight times (120 min), such as Autel’s Dragonfish [163], cost around $99,000. Hybrid drones such as the SkyFront Perimeter 8 multirotor can fly up to 5 h [164]. A UAV’s flying time is also affected by the payload it carries: the lighter the payload, the longer the navigation time.

UAVs are also limited in their capacity to fly during bad weather. Some commercially available drones can fly in windy conditions, but doing so can be extremely difficult. One either has to undergo a drone training course to make sure that setups are optimized for difficult conditions, or purchase high-end UAVs that cost hundreds of thousands of dollars, which many cannot afford or may not find practical. Some consumer-grade drone models are rated for windy conditions: the DJI Mavic Pro 2 can handle winds up to 15 mph, though there are claims that it can resist winds up to 24 mph. Even so, such drones cannot withstand a tropical depression or a typhoon with sustained gusts of at least 30 mph. Although many efforts and studies aim to make commercial-grade unmanned vehicle systems more robust and adaptable to harsh weather conditions, this remains a challenge.

The capability of commercial-grade UVs to perform long missions is a challenge as well. Coastal farms are close to the shore, so the transit is shorter and more time is left for navigation and the assigned mission than at offshore farms, which are kilometers from the shore. If a UV takes off from the shore to reach an offshore farm, much of its battery is consumed by travel, so only limited time is available at the destination to perform its function. However, there are ways to extend and maximize performance, such as flying at lower altitude and carrying smaller payloads. Instead of taking off from the shore, the UV can take off from a barge. For example, to assist the smart feeding machine during fish feeding, the UV can take off from the barge or ship and does not need to travel a long distance from the shore. The operator can control the UAV from the barge, and it can return there when monitoring is finished. This leaves more time for the target mission.
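The trade-off between transit and mission time can be made concrete with a back-of-the-envelope calculation. The sketch below uses the 34 min flight time quoted above for a consumer quadcopter and the 1 to 11 km shore distances reported for Taiwan; the 40 km/h cruise speed, the 0.2 km barge distance, and the function name `on_station_minutes` are assumptions chosen only for illustration, and wind, climb, and payload effects are ignored.

```python
def on_station_minutes(
    endurance_min: float,
    distance_km: float,
    cruise_speed_kmh: float,
    reserve_fraction: float = 0.0,
) -> float:
    """Time left at the farm after flying out and back.

    endurance_min     total flight time on one battery
    distance_km       one-way distance from the launch point to the cages
    cruise_speed_kmh  assumed constant cruise speed (no wind)
    reserve_fraction  share of endurance kept as a safety reserve
    """
    if cruise_speed_kmh <= 0:
        raise ValueError("cruise speed must be positive")
    usable_min = endurance_min * (1.0 - reserve_fraction)
    round_trip_min = 2.0 * distance_km / cruise_speed_kmh * 60.0
    # A negative result means the farm is out of range; report zero.
    return max(0.0, usable_min - round_trip_min)


if __name__ == "__main__":
    ENDURANCE_MIN = 34.0      # flight time quoted in the text
    CRUISE_SPEED_KMH = 40.0   # assumed value, not from the source
    launch_points = {
        "barge next to cages (0.2 km, assumed)": 0.2,
        "shore to inshore cages (1 km)": 1.0,
        "shore to nearest offshore cages (2 km)": 2.0,
        "shore to farthest offshore cages (11 km)": 11.0,
    }
    for label, distance in launch_points.items():
        minutes = on_station_minutes(ENDURANCE_MIN, distance, CRUISE_SPEED_KMH)
        print(f"{label}: {minutes:.1f} min on station")
```

Under these assumptions almost the whole battery is spent on transit for the farthest offshore cages, which is the situation that launching from a barge avoids.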

Aquaculture farms need to be visited at least once a day, and this is done during feeding time. The duration of a UV’s mission depends on the function it must perform. A water quality check does not require hours, since the UV can take a water sample and analyze it right away if it is equipped with water quality sensors. Monitoring the feeding activity, on the other hand, takes much longer: large offshore aquaculture farms have 24 cages, each 100 m in circumference (standard size) and spaced approximately 5 m apart. Under such conditions, it takes around 15 to 20 min to feed one cage, so feeding 24 cages takes about 6 to 8 h, almost a full day of activity. Given the time needed to monitor feeding, one commercial-grade UV is not sufficient since it has limited power; multiple vehicles are needed to carry out a complete monitoring mission and data collection. During harsh weather conditions, fish cages are submerged in the water, and no fish feeding activity is carried out.
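The arithmetic behind this estimate can be written out as a short sketch. The cage count and per-cage feeding times come from the text; the 30 min of usable on-station time per battery and the function names are assumptions for illustration, and time lost to battery swaps or repositioning between cages is ignored.

```python
import math


def feeding_window_minutes(n_cages: int, minutes_per_cage: float) -> float:
    """Total time needed to feed all cages one after another."""
    return n_cages * minutes_per_cage


def sorties_needed(window_min: float, on_station_min_per_sortie: float) -> int:
    """Number of flights (or vehicles flown in relay) to cover the window."""
    if on_station_min_per_sortie <= 0:
        raise ValueError("on-station time per sortie must be positive")
    return math.ceil(window_min / on_station_min_per_sortie)


if __name__ == "__main__":
    N_CAGES = 24               # from the text
    ON_STATION_MIN = 30.0      # assumed usable time per battery
    for per_cage in (15.0, 20.0):   # feeding time per cage, from the text
        window = feeding_window_minutes(N_CAGES, per_cage)
        flights = sorties_needed(window, ON_STATION_MIN)
        print(f"{per_cage:.0f} min/cage: {window / 60:.1f} h of feeding, "
              f"{flights} sorties of {ON_STATION_MIN:.0f} min")
```

Even with every minute of flight spent over the cages, a single vehicle would need more than ten battery cycles to observe a full feeding round, which is why a fleet or a relay of vehicles is suggested.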

8. Aquaculture Farm Monitoring and Management

Drones are capable of monitoring fish farms in aquaculture, especially at offshore sites. Their affordability and mobility have opened up access to areas that are difficult or risky to reach. The continued mechanization and automation of farm monitoring using drones, sensors, and artificial intelligence will enable farmers to inspect their farms, acquire more of the information needed for decision making, and manage and interact with their farms efficiently. Furthermore, with the rapid growth of the aquaculture industry, drones will enable the monitoring of growing farm sites. Drones can reduce the demand for labor and high-cost work in the aquaculture industry and help keep fish farm management stable by reducing farm deaths. To monitor the growing environment at the aquaculture farm site, a system that uses a drone as an image collection device, together with an integrated controller for posture stabilization and a remote device to control the drone, can capture underwater images in real time [165].

An aquatic platform [166] composed of USVs and buoys has a self-organizing capability for performing mission and path planning in the water environment. This platform can communicate with other devices, sense the environment (water or air), and serve as a communication channel using data gateway stations (DTS). The data taken by the USVs and buoys with their attached sensors are forwarded to a server, where aquaculture workers can access them to improve or maintain aquaculture performance. Sousa et al. [167] designed and developed an innovative electric marine ASV (autonomous surface vehicle) with a simplified sail system controlled by electric actuators. This vehicle is capable of exploration and patrolling. Aside from reducing cost, since no fuel is required, it is intended to be capable of endless autonomy, maximizing its limited energy to manage the sails, with power supplied by solar cells and wind generators.

Aerial and underwater drones also have enormous potential to monitor offshore kelp aquaculture farms. Giant kelp, with its growth rate and versatility, is an attractive aquaculture crop that requires high-frequency monitoring to ensure success, maximize production, and optimize its nutritional content and biomass. Regular monitoring of these offshore farms can use sensors mounted on aerial and underwater drones. A small unoccupied aircraft system (sUAS) can carry a lightweight optical sensor and estimate the canopy area, density, and tissue nitrogen content at the time and space scales needed to observe changes in kelp. To provide a natural image of the kelp forest canopy, sUAS carry sensors such as color, multispectral, and hyperspectral cameras [168].

Rasmussen et al. [169] developed an integrated vision-based system to count wild scallops and measure population health. Sequential images were collected using an AUV and processed with convolutional neural networks (CNNs) for object detection. The images in the dataset were captured by a downward-pointing digital camera installed in the nose of the AUV. In the work of Ferraro [170], an AUV was also used to collect color photos and side-scan sonar images of the seafloor to quantitatively estimate the incidental mortality of sea scallops using a precise and non-invasive method. An AUV was also used to capture reliable images of the seafloor to determine the density and size of the scallops, thus providing an accurate set of data for site surveys. It also offers an efficient and productive platform for collecting sea scallop images for stock assessment since it can be quickly deployed and retrieved [171].

Oysters were also detected and counted using ROVs in small-size aquaculture/oyster farms, with robotics and artificial intelligence used for monitoring. The front of the ROV is mounted with a camera and two LED lights. The camera feed is streamed to a remote machine and used by the operator for underwater navigation. Additionally, the ROV was equipped with an additional GoPro camera and LED lights to view the seafloor. A graphical user interface called QGroundControl (QGC) was installed to acquire the underwater images of oysters taken by the ROV. QGC sends commands to the device and receives the camera and other sensor information on the ground station or remote machine; the ROV can be controlled manually or automatically. For manual control, commands are sent to QGC through a wireless controller [172]. The Argus Mini is an observation-class ROV built for inspection and intervention operations in shallow waters that can be used in the offshore, inshore, and fish farming industries. It is equipped with six thrusters, four in the horizontal plane and two in the vertical plane, to guarantee actuation in 4 degrees of freedom: surge, sway, heave, and yaw. The ROV is equipped with sensors to perform net cage inspection [173].

An underwater drone was developed that integrates a 360-degree panoramic camera, deep learning, and open-source hardware to observe environments such as the sea, aquariums, and lakes for real-time fish recognition. The drone was also equipped with a Raspberry Pi compute module with a GPU for processing and real-time panoramic image generation [174]. Other applications of UVs include periodic fish cage inspection [175], fish behavior observation [176], salmon protection [177], and fish tracking [178]. Table 8 presents the different applications of unmanned vehicles for aquaculture farm monitoring and management.

Table 8.

UVs and their applications to aquaculture farm monitoring and management.

8.1. Fish Feed Management

The welfare of fish in aquaculture comes from improving standards and quality for fish production technologies and aquaculture products. The well-being of fish has direct implications for production and sustainability. Fish under good welfare conditions are less susceptible to disease and hence show better growth and a higher food conversion rate, providing better quality [179]. There are many indicators to assess fish welfare, such as fish behavior and characteristics.

Many technologies have been developed to automate processes, such as underwater cameras that observe fish behavior and characteristics and provide visual observations in fish cages. However, installing and configuring underwater cameras is laborious, particularly offshore. They need cables for communication and data transmission and a power source for continuous data collection. Some underwater cameras are equipped with batteries but can only work for a limited time; they must be installed physically, and it is difficult to keep changing and charging the battery. For underwater cameras with their own power source (e.g., solar power), data collection and surveillance stop when the source malfunctions. Given these limitations, drones become helpful as an alternative or added support for underwater cameras to provide visual functionalities for observing fish behaviors and characteristics.

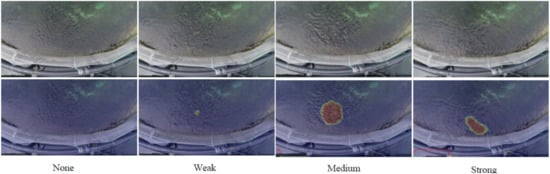

Feeding management in aquaculture is a challenging task since the visibility of the feeding process is limited, and precise measurement is laborious. Machine feeders became available to assist fish farmers in dispensing food. However, when not accurately monitored, such a mechanism leads to food waste and profit loss. When feeding with pellets that float on the water, the process should be observed to decide when to continue or discontinue feeding. In the work of Ubina et al. [26], a drone equipped with an RGB camera captures surveillance video of the water surface, and optical flow is used to measure the fish feeding level, as shown in Figure 8. The authors conducted experiments at different altitudes and viewing angles to determine the best visuals and features of fish feeding. The images were processed using a deep convolutional neural network to classify the different feeding levels. The drone provides a non-invasive way of observing fish, which is more reliable than human investigations and observations.

Figure 8.

Image capture of the drone to evaluate fish feeding intensity using four different feeding intensity levels and the detected optical flow [26].
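Once each video window has been assigned one of the four feeding intensity levels, a simple rule could translate the sequence of levels into feeder commands. The sketch below is only an illustration of that idea and is not taken from [26]: the level names, the rule that strong activity means the fish are still hungry, and the `patience` parameter that waits for several consecutive low-activity windows before stopping are all assumptions.

```python
from enum import IntEnum
from typing import Iterable, List


class FeedingIntensity(IntEnum):
    """Four intensity levels; the names are hypothetical placeholders."""
    NONE = 0
    WEAK = 1
    MEDIUM = 2
    STRONG = 3


def feeder_commands(
    levels: Iterable[FeedingIntensity], patience: int = 3
) -> List[str]:
    """Map classified video windows to 'dispense', 'reduce' or 'stop'.

    Assumed rule: MEDIUM or STRONG activity keeps the feeder running.
    NONE or WEAK activity first reduces the ration and stops the feeder
    once it has persisted for `patience` consecutive windows, so a single
    misclassified window does not end the meal.
    """
    if patience < 1:
        raise ValueError("patience must be at least 1")
    commands: List[str] = []
    low_streak = 0
    for level in levels:
        if level >= FeedingIntensity.MEDIUM:
            low_streak = 0
            commands.append("dispense")
        else:
            low_streak += 1
            commands.append("stop" if low_streak >= patience else "reduce")
    return commands


if __name__ == "__main__":
    observed = [
        FeedingIntensity.STRONG,
        FeedingIntensity.STRONG,
        FeedingIntensity.WEAK,
        FeedingIntensity.NONE,
        FeedingIntensity.NONE,
    ]
    for level, command in zip(observed, feeder_commands(observed)):
        print(f"{level.name:<6} -> {command}")
```

The debouncing through `patience` is a design choice that trades a little wasted feed for robustness against brief drops in surface activity, for example when reflections disturb the classifier.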

In typical fish feeding at offshore locations, the feed is transported by boat or ship (see Figure 9). The pellets are then dispensed using machine feeders, creating an annular feed distribution pattern that covers a limited percentage of the water surface. As an alternative method for determining the distribution of the pellets on the water surface, Skøien et al. [121] used a UAV, with its camera always perpendicular to the water surface, to observe and characterize the motion and measure the spatial distribution of pellets from feed spreaders in sea cage aquaculture. The UAV also recorded the pellet surface impacts from the air, together with the position and direction of the spreader. In this work, the UAV is fast, requires minimal equipment installation, and is a viable alternative to collecting pellets, which can help farmers achieve feeding optimization.

Figure 9.

The aerial footage using UAV to facilitate optimized feeding using feeders transported in boats.

To estimate the spatial distribution of feed pellets in salmon fish cages, a UAV provides a simple and fast setup, as it covers a large area of the sea cage surface. The UAV, a DJI Inspire 1 positioned above the rotor spreader, captured aerial videos with a 4K camera from a top-view position of the hamster wheel in the fish cage during the feeding experiment. Images taken outdoors are challenging, however, because processing must adjust to changes in lighting conditions. These difficulties are induced by the reflection of clouds on the water surface and sometimes by slight variations in the camera position. For accurate estimation, the splashes of the dropping pellets must be identified and extracted so that they can be counted or measured relative to the spreader in the image. Top-hat filtering was integrated as a processing step to extract the brighter pixels in the image that correspond to splashes [180].
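One common way to pull small bright features such as splashes out of an unevenly lit background is the morphological white top-hat transform (the image minus its morphological opening). The sketch below assumes that this is the kind of processing step meant above; it is a plain-Python illustration on a tiny grayscale grid, not the pipeline of [180], and the window radius and threshold are arbitrary example values.

```python
from typing import Callable, List, Sequence

Image = List[List[int]]  # grayscale image as rows of pixel intensities


def _window_filter(
    img: Image, radius: int, reduce_fn: Callable[[Sequence[int]], int]
) -> Image:
    """Apply min or max over a square window, clipped at the image border."""
    height, width = len(img), len(img[0])
    out = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            window = [
                img[j][i]
                for j in range(max(0, y - radius), min(height, y + radius + 1))
                for i in range(max(0, x - radius), min(width, x + radius + 1))
            ]
            out[y][x] = reduce_fn(window)
    return out


def white_top_hat(img: Image, radius: int) -> Image:
    """Image minus its opening: keeps bright details smaller than the window."""
    eroded = _window_filter(img, radius, min)      # erosion
    opened = _window_filter(eroded, radius, max)   # dilation of the erosion
    return [
        [pixel - background for pixel, background in zip(row, opened_row)]
        for row, opened_row in zip(img, opened)
    ]


def splash_mask(img: Image, radius: int, threshold: int) -> Image:
    """Binary mask of pixels that stand out from the local background."""
    return [
        [1 if value > threshold else 0 for value in row]
        for row in white_top_hat(img, radius)
    ]


if __name__ == "__main__":
    # Flat water surface (intensity 50) with one bright splash pixel (200).
    frame = [[50] * 5 for _ in range(5)]
    frame[2][2] = 200
    for row in splash_mask(frame, radius=1, threshold=100):
        print(row)
```

Because the opening removes only features smaller than the window, large bright areas such as cloud reflections stay in the estimated background and are suppressed, while compact splashes survive the subtraction.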

8.2. Fish Behavior Observation

A bio-interactive AUV (BA-1) monitors fish interactively and can remain in the environment where the fish reside. It can swim together with the fish to monitor their movements in a pen-free offshore aquaculture system. The vehicle can also provide a stimulus to the fish and observe the behavior that the stimulation causes. The AUV was designed with hovering and cruising capabilities and with bio-interactive functionality provided by an LED lighting system. It can also operate simultaneously with other BA-1 AUVs. The BA-1 is equipped with sensors for navigation, collision avoidance, localization, self-status monitoring, and payload functions. The device was tested in tanks and aquaculture pens with sea bream. Once the fish become familiar with the vehicle, they can come close to the demand feeding system to receive the bait [181], so the vehicle can assist in the smart feeding process.

A UAV equipped with GoPro cameras for video recording tracks and monitors the behavior of GPS-tagged sunfish in space and time. For communication, the vehicle uses Wi-Fi or GSM/HSDPA. Remotely sensed environmental characteristics were extracted for each sunfish position and used as parameters to determine behavioral patterns [182]. Monitoring the spatial movements of fish is vital for maintaining fish populations and tracking their progress. A multi-AUV state-estimator system helps determine the 3D position of tagged fish, as well as their distance and depth. The system is composed of two torpedo-shaped AUVs. On each AUV, a rear propeller provides propulsion, and four fins control the pitch, roll, and yaw of the vehicle. Each vehicle is also equipped with two processors that communicate with the sensors and actuators [183]. A stereovision AUV was used to assess the size and abundance of ocean perch in temperate waters. The AUV moves slowly over the target area at a constant altitude of 2 m above the seafloor, capturing images with a pair of downward-looking Pixelfly HiRes (1360 × 1024 pixel) digital cameras [184].
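As a rough illustration of how a downward-looking stereo pair can yield fish size, the sketch below triangulates the snout and tail of a fish from matched pixel coordinates and returns the distance between them. It assumes an ideal rectified pinhole stereo model; the focal length, baseline, and principal point (taken here as the centre of a 1360 × 1024 image) are hypothetical values, and the function names are not from [184].

```python
from math import dist


def triangulate(left_xy, right_xy, focal_px, baseline_m, principal_xy):
    """Return (X, Y, Z) in metres for a point matched in a rectified stereo pair."""
    (xl, yl), (xr, _) = left_xy, right_xy
    disparity = xl - xr
    if disparity <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    cx, cy = principal_xy
    z = focal_px * baseline_m / disparity  # depth from disparity
    return ((xl - cx) * z / focal_px, (yl - cy) * z / focal_px, z)


def fish_length_m(snout, tail, focal_px, baseline_m, principal_xy=(680.0, 512.0)):
    """Length between snout and tail.

    snout and tail are ((x_left, y_left), (x_right, y_right)) pixel pairs.
    """
    p_snout = triangulate(snout[0], snout[1], focal_px, baseline_m, principal_xy)
    p_tail = triangulate(tail[0], tail[1], focal_px, baseline_m, principal_xy)
    return dist(p_snout, p_tail)


if __name__ == "__main__":
    # Hypothetical calibration: 1000 px focal length, 0.2 m baseline.
    length = fish_length_m(
        snout=((780.0, 512.0), (680.0, 512.0)),
        tail=((580.0, 512.0), (480.0, 512.0)),
        focal_px=1000.0,
        baseline_m=0.2,
    )
    print(f"estimated length: {length:.2f} m")
```

In practice the cameras would need calibration and lens-distortion correction before such measurements are meaningful.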

8.3. Water Quality and Pollutants Detection and Assessment

Fish are in constant contact with water, so water quality is one of the most critical factors for fish welfare and requires continuous, close monitoring. Poor water quality can lead to acute and chronic health and welfare problems, so it should be kept at optimal levels. Aquaculture is also significantly affected by climate change, which alters abiotic conditions (sea temperature, oxygen level, salinity, and acidity) and biotic conditions (primary production and food webs) and thereby significantly disturbs growth and size [1]. Parameters that reflect water quality [179] include temperature, conductivity, pH, oxygen concentration, and the concentration of nitrogenous compounds such as ammonia, nitrate, and nitrite. Traditional water assessment and prediction relies on collecting water samples and submitting them for laboratory inspection, or on carrying physical-chemical test devices to the site [185]. This approach is burdensome and requires a physical presence at the site. Many aquaculture farms rely on mechanical equipment to maintain water quality, including oxygenation pumps, independent emergency power systems, and aeration/oxygenation equipment. Although helpful, such equipment has limitations when installed in open-sea cages or at offshore aquaculture sites and requires additional configuration and setup. Drones have become very helpful for on-site water monitoring, sampling, and testing because of their high mobility, reliability, and flexibility to carry water quality sensors. Wang et al. [186] combined a UAV with a wireless sensor network (WSN) to acquire groundwater quality parameters and drone spectrum information. Their approach provides a new mechanism for rapidly monitoring water quality in aquaculture using UAV remote sensing.

A T-shaped floating UAV that can take off from and land on the water surface carries an electrochemical sensor array that assesses and predicts water quality from the pH, dissolved oxygen, and ammonia nitrogen of the water. The sensor array is capable of real-time detection and transmits its results to the back-end cloud server through a wireless network [185]. Furthermore, catastrophic events such as spills of hazardous agents (e.g., oil) in the ocean can cause massive damage to aquaculture products. To detect comparable releases, represented by a fluorescent dye in the water, Powers et al. [187] mounted a fluorescence sensor underneath a USV. An unmanned aircraft system (UAS) visualized the fluorescent dye, and the USV took samples from different areas of the dye plume.

Collecting water samples based on water quality indicators that can be measured in situ can increase the efficiency and precision of the collected data. To this end, an adaptive water sampling device was developed using a UAV with multiple sensors capable of measuring dissolved oxygen, pH, electrical conductivity, temperature, and turbidity. The device was tested at seven locations and successfully provided water quality assessments [188]. In addition, Ore et al. [189], Dunbabin et al. [190], and Doi et al. [191] used UAVs to obtain water samples, which required less effort and enabled faster data collection.
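The idea of adaptive sampling, that is, collecting a physical sample only where in situ indicators look abnormal, can be sketched as follows. This is a minimal illustration and not the decision logic of [188]: the `Reading` type, the function names, and the limit values in the usage example are all hypothetical placeholders that an operator would replace with species- and site-specific ranges.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Reading:
    """One in situ measurement taken by the UAV at a named location."""
    location: str
    dissolved_oxygen_mg_l: float
    ph: float
    conductivity_us_cm: float
    temperature_c: float
    turbidity_ntu: float


def out_of_range(reading, limits):
    """Return the names of indicators that fall outside their (low, high) limits.

    Indicators without an entry in `limits` are ignored.
    """
    flagged = []
    for name, (low, high) in limits.items():
        value = getattr(reading, name)
        if not low <= value <= high:
            flagged.append(name)
    return flagged


def plan_sampling(readings, limits):
    """Return the locations where a water sample should be collected."""
    return [r.location for r in readings if out_of_range(r, limits)]


if __name__ == "__main__":
    # Placeholder limits for illustration only.
    example_limits = {
        "dissolved_oxygen_mg_l": (5.0, 15.0),
        "ph": (6.5, 8.5),
        "turbidity_ntu": (0.0, 10.0),
    }
    survey = [
        Reading("A", 7.0, 7.8, 450.0, 18.0, 3.0),
        Reading("B", 3.5, 7.9, 460.0, 18.5, 12.0),
    ]
    print(plan_sampling(survey, example_limits))
```

Keeping the limits as a parameter rather than hard-coding them reflects the fact that acceptable ranges differ between species and sites.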

An extensive study of how drone technology assists water sampling to obtain biological and physicochemical data from the water environment can be found in the work of Lally et al. [192], which is characterized mainly by the use of remote sensing. Spectral images captured by UAVs have also been used to assess water quality, for example to detect algal blooms through the chlorophyll content of the water [193] and to measure turbidity and colored dissolved organic matter [194]. Other studies have used drones with attached thermal cameras, such as miniaturized thermal infrared cameras [195], to capture images for measuring surface water temperature and environmental contamination [196].

Sibanda et al. [197] present a systematic review of the use of UAVs to assess water quality and quantity. As Table 9 shows, the parameters collected for water monitoring include dissolved oxygen, turbidity, pH, ammonia nitrogen, nitrate, water temperature, chlorophyll-a, redox potential, phytoplankton counts, salinity, colored dissolved organic matter (CDOM), fluorescent dye, and electrical conductivity. DJI drones are the most commonly used commercial type. Some UAVs carry sensors specific to their function (e.g., dissolved oxygen sensors to measure dissolved oxygen). Many customized UAVs have also been used for water quality assessment to meet the specific needs of each work and to improve on existing commercial capabilities such as navigation, strength, and mobility.

Table 9.

Types of UVs and parameters used for water quality assessment and monitoring.

8.4. Water Quality Condition

Aquaculture farms have raised environmental concerns, and an increase in aquaculture production will pose a huge environmental challenge. Climate change is considered a threat to aquaculture production [21]. Sea-level rise and frequent, extreme weather events (e.g., winds and storms) are also projected to increase in the future [1]. For aquaculture production to grow sustainably, it must adapt to climate change so that more fish can be produced without environmental impacts disrupting operations.

UVs are commonly used for image acquisition in geophysical science to generate high-resolution maps. Demand for high-performance geophysical observation methods is increasing, and combining UV technology with optical sensing makes it possible to quantify the character of water surface flows. Water surface flow affects the growth and health of aquaculture products through environmental impacts such as sea lice, escaped fish, and the release of toxic chemicals and organic emissions into the water [204]. It is also essential in cage farming for replenishing oxygen and removing organic waste [156]. Water velocity also has a profound impact on fish metabolism, growth, behavior, and welfare. A higher velocity can boost the growth of farmed fish. Li et al. [205] determined the protein content of fish muscle under moderate swimming exercise and found that moderate water velocity produced a higher protein content. The growth performance of Atlantic salmon was also monitored under lower salinity and higher water velocity, which had positive effects on growth [98]. Another positive influence of higher velocity on fish welfare is reported in the work of Tauro et al. [204], where improved flesh texture, general robustness, and lower aggression led to a reduced stress response. On the other hand, very high velocities increase oxygen demand and anaerobic metabolism, cause exhaustion, reduce growth, and impair fish welfare. Moreover, excessive current forces the fish to expend too much energy on swimming. Extreme waves in an offshore environment can also damage cage structures and moorings and cause fish injury. Severe wave conditions can be hazardous and can interrupt the routines and operations of farmers [156].

Given the importance of measuring water surface flow and velocity for fish growth, drones can be integrated to perform these functions. Flying drones [204] were used to observe the water environment and produce accurate surface flow maps of submeter water bodies. This aerial platform enables fully remote measurements for on-site surveys. The work of Detert and Weitbrecht [99] shows the effectiveness of UVs for measuring water velocities. A technique by which a drone can retrieve the two-dimensional wavenumber spectrum of sea waves from sun glitter images was proposed in [206], which shows the potential of drones for investigating the surface wave field. Airborne drones were compared with satellite images to determine the sea state and the dynamics of coastal areas. Optical technologies use high-spatial-resolution images to derive anomalies in the elevation of the water surface induced by wind-generated ocean waves [207].
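One common way to turn aerial video into a surface velocity estimate is to track how a patch of surface texture or tracer moves between two frames and then scale the pixel shift by the ground resolution and the frame interval. The sketch below uses simple block matching (minimum sum of squared differences) for this purpose. It is an illustrative assumption and not the specific method of [99] or [204]; the function names, patch size, search range, and scale values are hypothetical.

```python
def best_shift(frame_a, frame_b, top, left, size, max_shift):
    """Find the (dx, dy) pixel shift that best maps a patch of frame_a onto frame_b.

    The patch is the size x size block of frame_a whose corner is (top, left).
    Candidate shifts that would leave the image are skipped.
    """
    h, w = len(frame_b), len(frame_b[0])
    best = None
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            y0, x0 = top + dy, left + dx
            if y0 < 0 or x0 < 0 or y0 + size > h or x0 + size > w:
                continue
            ssd = sum(
                (frame_a[top + i][left + j] - frame_b[y0 + i][x0 + j]) ** 2
                for i in range(size)
                for j in range(size)
            )
            if best is None or ssd < best[0]:
                best = (ssd, dx, dy)
    if best is None:
        raise ValueError("no valid shift inside the image")
    return best[1], best[2]


def surface_velocity(shift_px, metres_per_pixel, dt_s):
    """Convert a pixel shift between two frames into (vx, vy) in m/s."""
    dx, dy = shift_px
    return dx * metres_per_pixel / dt_s, dy * metres_per_pixel / dt_s


if __name__ == "__main__":
    # Two synthetic 8 x 8 frames: a small tracer pattern moves 2 px right, 1 px down.
    a = [[0] * 8 for _ in range(8)]
    b = [[0] * 8 for _ in range(8)]
    a[3][2], a[4][3] = 9, 5
    b[4][4], b[5][5] = 9, 5
    shift = best_shift(a, b, top=2, left=1, size=3, max_shift=2)
    print(shift, surface_velocity(shift, metres_per_pixel=0.01, dt_s=0.04))
```

Repeating the match over a grid of patches would give a velocity field. Real footage would also need stabilization and orthorectification so that camera motion is not mistaken for water motion.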

As Table 10 shows, the UVs are equipped with cameras to collect data from the water environment. Most of the UVs used are commercial DJI Phantom drones, which are popular and affordable but are reported to introduce a small amount of image distortion. According to Streßer et al. [208] and Fairley et al. [209], the gimbal pitch was adjusted to make it independent of the aircraft's motion.

Table 10.

Application of UVs to perform water condition monitoring.

9. Legal Regulations and Requirements for Unmanned Vehicle Systems

Potential users of unmanned vehicle systems, especially unmanned aerial systems (UAS), should be aware of current and proposed regulations to understand their potential impacts and restrictions. The sites where UAS operation is permitted should be determined first, and flights at offshore aquaculture sites should take place within the allowable times of day. One challenge in using UAS is that regulations are not fully established and are still changing. Users must therefore always check the latest rules [54] in advance of a scheduled flight or mission.

9.1. Standards and Certifications

New policies and regulations for UAS must be planned and implemented to ensure the safe, reliable, and efficient use of the vehicles. Developing standards is one of the most crucial issues for UAS because UAVs should be interoperable with existing systems. In managing the electromagnetic spectrum and bandwidth, it is critical that UAVs do not operate in crowded frequency bands. It is also essential to be aware of the standardization agreements published by NATO for UAVs. These agreements define standard message formats and data protocols, provide a standard interface between UAVs and ground coalitions, and describe the coalition-shared database that allows information sharing between intelligence sources. In the US, the Federal Aviation Administration (FAA) provides certification for remote pilots, including commercial operators [221]. UAVs used for public operations should have a certificate from the FAA, and operators must comply with all federal laws, rules, and regulations, as well as those of each area, state, or country [222].

9.2. Regulations and Legal Issues

In Canada, drones weighing from 250 g to 25 kg must be registered with Transport Canada, and pilots must hold a drone pilot certificate. Pilots must mark their drones with the registration number before flying and must keep the drone in sight at all times. Drones must fly below 122 m and are prohibited from flying within 5.6 km of airports or 1.9 km of heliports. In the US, each state has its own laws and regulatory requirements. In Taiwan, drones are prohibited from flying in sensitive areas such as government or military installations. Without prior authorization, drone flights are permitted only within visual line of sight and only during daylight hours between sunrise and sunset. A drone operator permit is required if the drone weighs more than 2 kg. In Germany, drones weighing more than 5 kg require authorization from the aviation authority. An application for permission must include a map indicating the launch area and operating space, a consent declaration from the property owner, the timing, technical details about the UAS, a data privacy statement, and a letter of no objection from the competent regulatory or law enforcement agency [223].
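Because such rules are largely numeric thresholds, a mission planner could encode them as a simple pre-flight check. The sketch below encodes only the Canadian limits as stated in this section (registration and certification between 250 g and 25 kg, flight below 122 m, 5.6 km from airports, 1.9 km from heliports, and line of sight). The function name and parameters are hypothetical, and the values would need to be verified against the current regulations before any real use.

```python
def canada_preflight_issues(
    mass_kg,
    registered,
    pilot_certified,
    marked,
    altitude_m,
    km_to_airport,
    km_to_heliport,
    in_sight,
):
    """Return a list of rule names that the planned flight would violate.

    Thresholds follow the figures quoted in the text, not an official source.
    """
    issues = []
    if 0.25 <= mass_kg <= 25.0:
        if not registered:
            issues.append("registration")
        if not pilot_certified:
            issues.append("pilot certificate")
        if not marked:
            issues.append("registration marking")
    if altitude_m >= 122.0:  # flights must stay below 122 m
        issues.append("altitude")
    if km_to_airport < 5.6:
        issues.append("airport distance")
    if km_to_heliport < 1.9:
        issues.append("heliport distance")
    if not in_sight:
        issues.append("line of sight")
    return issues


if __name__ == "__main__":
    print(
        canada_preflight_issues(
            mass_kg=1.2,
            registered=True,
            pilot_certified=True,
            marked=True,
            altitude_m=130.0,
            km_to_airport=3.0,
            km_to_heliport=5.0,
            in_sight=True,
        )
    )
```

Returning the full list of issues, rather than stopping at the first one, lets an operator correct every problem in a flight plan at once.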

UAV regulations and policies in different countries share some common ground but still differ in many aspects of requirements and implementation. According to Demir et al. [222], when a UAV is used for a specific purpose, aviation regulations determine its minimum flight requirements. In most countries, UAVs are used in separate airspace zones. National regulations are also laid out to ensure the safe operation of different UAVs in their respective national airspaces.