A Lightweight, Robust Exploitation System for Temporal Stacks of UAS Data: Use Case for Forward-Deployed Military or Emergency Responders

Abstract

1. Introduction

1.1. Unmanned Aerial System (UAS)-Derived Three-Dimensional (3D) Data Are Increasingly Ubiquitous

1.2. Challenges with UAS-Derived 3D Analysis

2. Material and Methods

2.1. Study Area

2.2. Preprocessing and Data

2.3. Computing Resources

3. Theory and Calculation

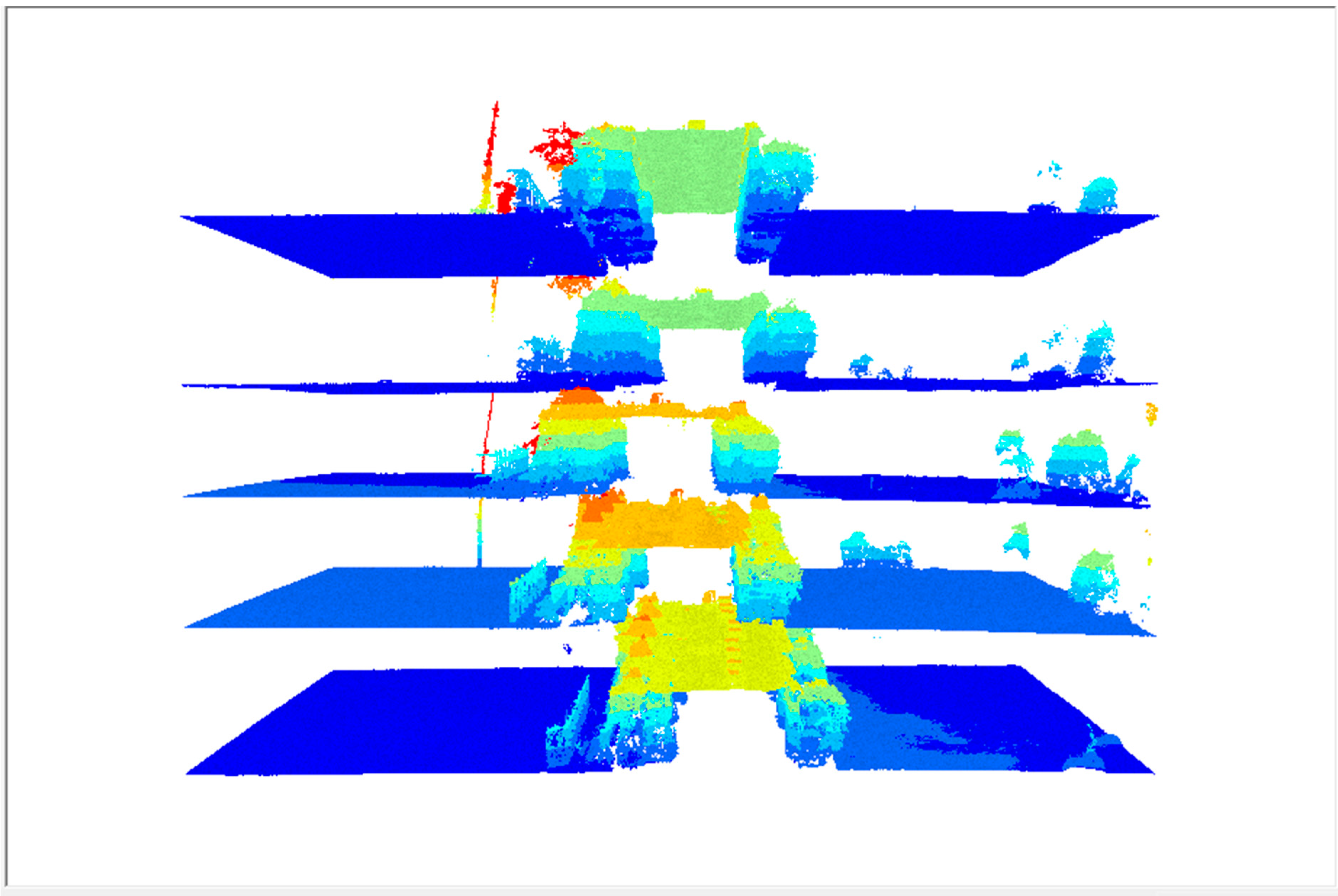

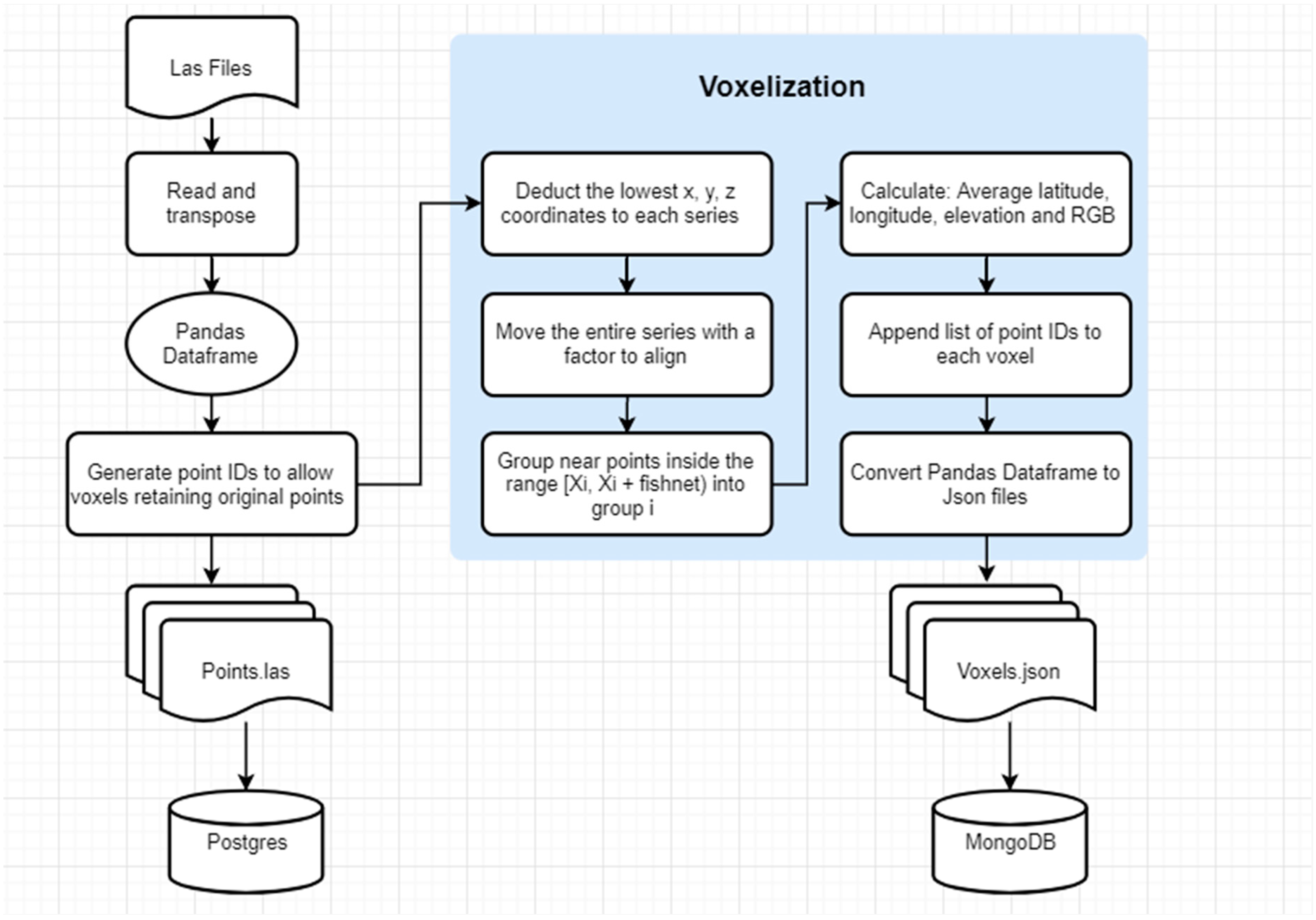

3.1. Voxelization

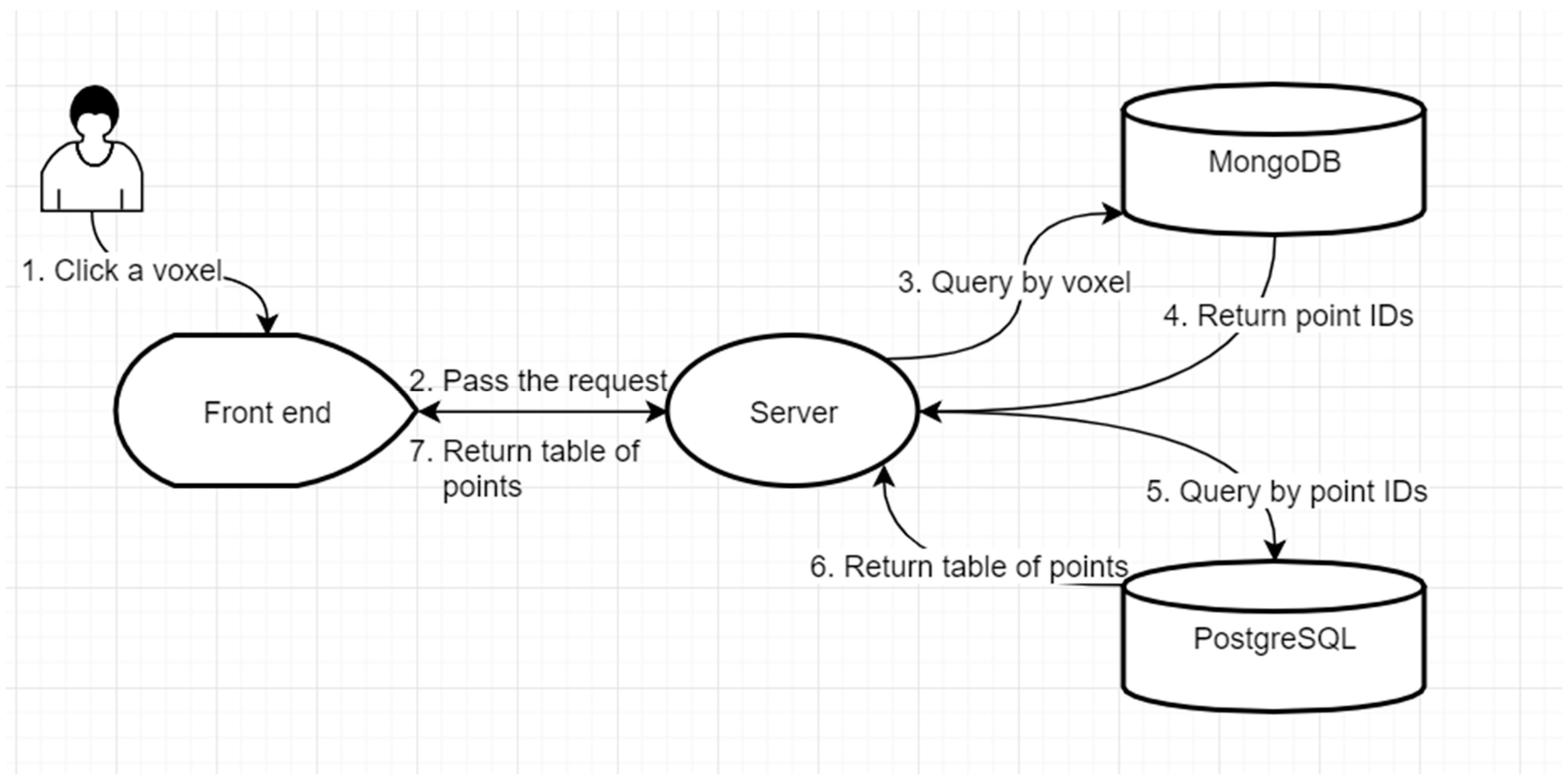

3.2. Database (MongoDB and PostgreSQL)

3.3. CesiumJS Front-End

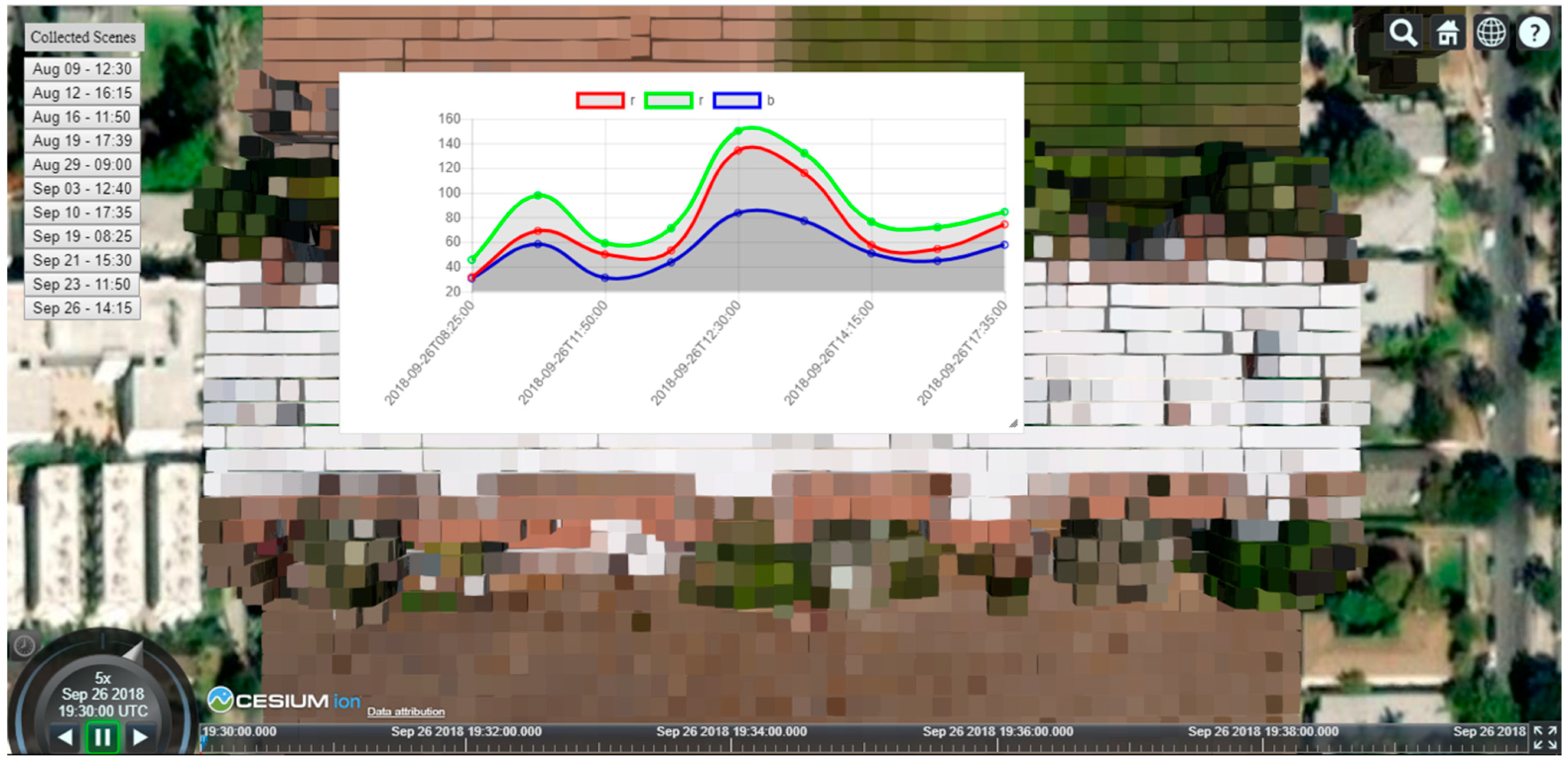

4. Results

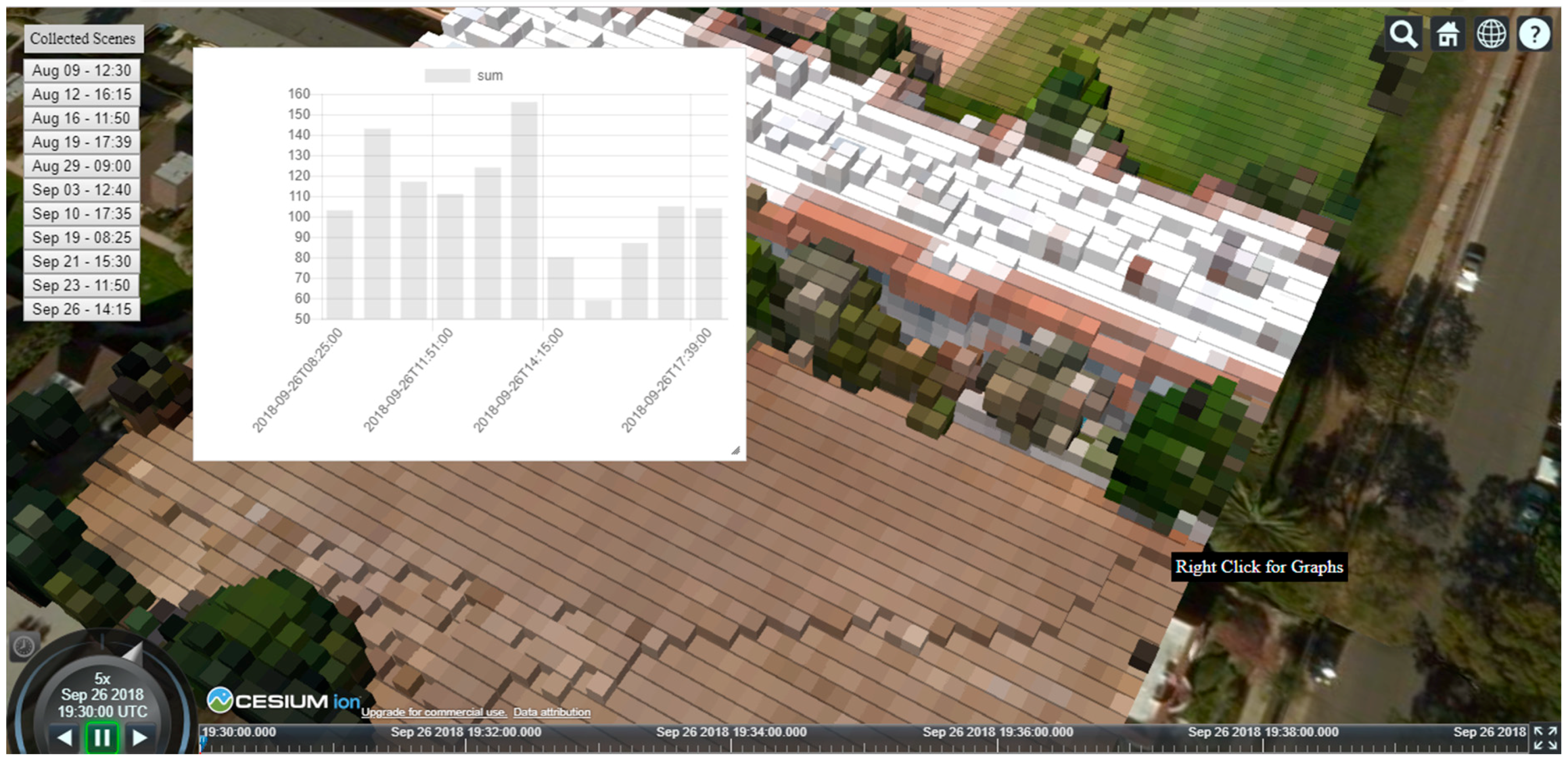

4.1. Front-End Rendering

4.2. Query and Analysis Tools

5. Discussion and Conclusions

Author Contributions

Funding

Acknowledgements

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AGL | Above Ground Level |
| FAA | Federal Aviation Administration |
| GCP | Ground Control Point |
| GPS | Global Positioning System |
| LAS | LASer (ASPRS point cloud file format) |
| LIDAR | Light Detection and Ranging |
| RGB | Red Green Blue |
| RTK | Real-Time Kinematic |
| UAS | Unmanned Aerial System |


| Processing Option | Selection |
|---|---|
| Keypoints Image Scale | Full |
| Matching Image Pairs | Aerial Grid or Corridor |
| Matching Strategy | Geometrically Verified Matching |
| Targeted Number of Keypoints | Automatic |
| Calibration | Accurate Geolocation and Orientation |

| Processing Option | Selection |
|---|---|
| Image Scale | (½) Half Image Size |
| Point Density | Optimal |
| Minimum Number of Matches | 3 |
| Export | LAS |
| Matching Window Size | 9 × 9 pixels |
| Processing Area | Use Processing Area |
| Annotations | Use Annotations |
| Limit Camera Depth | Use Limit Camera Depth Automatically |

| Description | Property | Value |
|---|---|---|
| Position | cartographicDegrees | [longitude, latitude, elevation] |
| Box | dimensions/cartesian | [x, y, z] |
|  | material/solidColor/color/rgba | [r, g, b, a] |
| Availability | availability | Time interval |
| Points | pointIndexes | List of point IDs |
|  | size | Number of points |
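The packet fields in the table above map onto a CZML document that CesiumJS can load. The following minimal Python sketch, which is not the authors' code, assembles one voxel packet and wraps it in a CZML stream. The helper names `voxel_packet` and `czml_document`, the placement of the non-standard `pointIndexes` and `size` fields under `properties`, and all sample values are assumptions for illustration.

```python
import json

def voxel_packet(voxel_id, lon, lat, elev, dims, rgba, start, stop, point_ids):
    """Build one CZML packet for a box-shaped voxel (hypothetical helper)."""
    return {
        "id": voxel_id,
        # ISO 8601 interval during which the voxel is displayed on the timeline
        "availability": f"{start}/{stop}",
        # Voxel center as [longitude, latitude, elevation]
        "position": {"cartographicDegrees": [lon, lat, elev]},
        "box": {
            # Box side lengths in meters (x, y, z)
            "dimensions": {"cartesian": list(dims)},
            # Fill color; each channel is an integer from 0 to 255
            "material": {"solidColor": {"color": {"rgba": list(rgba)}}},
        },
        # Assumption: the non-standard pointIndexes/size fields sit under "properties"
        "properties": {"pointIndexes": list(point_ids), "size": len(point_ids)},
    }

def czml_document(packets, name="voxels"):
    """Serialize packets as a CZML stream: a JSON array led by the document packet."""
    header = {"id": "document", "name": name, "version": "1.0"}
    return json.dumps([header] + packets)

# Hypothetical example: one 1 m voxel visible for one day
doc = czml_document([
    voxel_packet("voxel_12_40_3", -80.0, 35.0, 120.5, (1.0, 1.0, 1.0),
                 (120, 98, 75, 255), "2019-01-01T00:00:00Z",
                 "2019-01-02T00:00:00Z", [101, 102, 107]),
])
```

In CesiumJS, such a stream would typically be loaded with `Cesium.CzmlDataSource.load`, which accepts either a URL or an already-parsed CZML array; the `availability` interval is what lets the timeline widget step through the temporal stack.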

| Tool | Description |
|---|---|
| 3D RGB Rendering | Rendering of voxels based on R, G, B values |
| 3D Point Count Classification Rendering | Rendering of voxels based on point count classes |
| Temporal Voxel Attribute Pull | Ability to retrieve and graph temporal values of R, G, B (can be customized for other attribute values) |
| Temporal Voxel Point Count Pull | Ability to retrieve and graph temporal values of voxel point count |
| Temporal Clustering Metric Pull | Ability to retrieve and graph the clustering metric of voxels |
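The rendering and pull tools listed above rest on two operations: summarizing each epoch's points per voxel (point count, mean R, G, B) and retrieving one voxel's values across dates. The following stdlib-only Python sketch illustrates both under stated assumptions: cubic voxels of edge `voxel_size` on a grid anchored at `origin`, arithmetic-mean color, and illustrative count class breaks. The names `voxelize`, `classify_count`, and `temporal_pull` are hypothetical, and the sketch keeps everything in memory; it does not reproduce the database layer.

```python
from collections import defaultdict
from math import floor

def voxelize(points, origin, voxel_size):
    """Bin (x, y, z, r, g, b) points into cubic voxels (hypothetical helper).

    Returns {(i, j, k): {"count": n, "rgb": (mean_r, mean_g, mean_b), "ids": [...]}}.
    """
    ox, oy, oz = origin
    bins = defaultdict(list)
    for pid, (x, y, z, r, g, b) in enumerate(points):
        # Integer voxel index along each axis, counted from the grid origin
        key = (floor((x - ox) / voxel_size),
               floor((y - oy) / voxel_size),
               floor((z - oz) / voxel_size))
        bins[key].append((pid, r, g, b))
    voxels = {}
    for key, members in bins.items():
        n = len(members)
        voxels[key] = {
            "count": n,
            # Arithmetic mean of each color channel over the voxel's points
            "rgb": tuple(sum(m[c] for m in members) / n for c in (1, 2, 3)),
            "ids": [m[0] for m in members],
        }
    return voxels

def classify_count(count, breaks=(5, 20, 50)):
    """Map a point count to class 0..len(breaks); the breaks are assumed values."""
    return sum(count >= b for b in breaks)

def temporal_pull(stack, key, attribute):
    """Return [(epoch, value)] for one voxel across a temporal stack, oldest first.

    Epochs in which the voxel holds no points yield None.
    """
    return [(epoch, stack[epoch][key][attribute] if key in stack[epoch] else None)
            for epoch in sorted(stack)]

# Hypothetical two-epoch stack on a 1 m grid anchored at (0, 0, 0)
epoch_a = [(0.2, 0.3, 0.1, 100, 90, 80),
           (0.7, 0.4, 0.9, 110, 100, 90),
           (1.5, 0.2, 0.3, 10, 10, 10)]
epoch_b = [(0.5, 0.5, 0.5, 200, 150, 100)]
stack = {"2019-01-01": voxelize(epoch_a, (0.0, 0.0, 0.0), 1.0),
         "2019-01-08": voxelize(epoch_b, (0.0, 0.0, 0.0), 1.0)}
counts = temporal_pull(stack, (0, 0, 0), "count")
colors = temporal_pull(stack, (0, 0, 0), "rgb")
```

Binning with `floor` rather than `int` keeps points that lie below or behind the grid origin in the correct voxel. A database-backed version would more plausibly store one record per voxel and epoch and filter on the voxel index, rather than nesting dictionaries.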

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Marx, A.; Chou, Y.-H.; Mercy, K.; Windisch, R. A Lightweight, Robust Exploitation System for Temporal Stacks of UAS Data: Use Case for Forward-Deployed Military or Emergency Responders. Drones 2019, 3, 29. https://doi.org/10.3390/drones3010029
