1. Introduction

Milgram’s virtuality continuum conceptualizes the composition of environments based on the presence or absence of virtual and real objects [1]. Building on this conceptualization, the field of human-computer interaction has a growing interest in understanding how the forms of reality along the continuum influence learning and user experience. Beyond understanding how people perceive this mix of virtual and real objects, researchers must understand how the composition of the environment influences not only perceptual factors but also how these factors contribute to higher cognitive processes. Virtual Reality (VR) has successfully provided an environment for training users on perception and action tasks [2]. For example, novice pilots often train in simulators designed to focus on cognitive tasks and to prevent unnecessary distraction from irrelevant controls [3]. This environment allows them to rehearse procedures without the risks associated with performing tasks incorrectly in a real-world environment. However, VR simulation-based trainers have limitations in interaction design. Some control panels may require an interaction different from the one required in the actual operational environment, such as pushing a button, and simulators may not reproduce the space constraints of a real cockpit. Augmented Reality (AR) may fill this gap by leveraging real-world cues to enhance performance and fidelity. The increasing availability of commercial off-the-shelf technology, such as the Microsoft Hololens, makes AR a viable tool for a variety of training domains. This is particularly applicable in a domain such as sports, where not only visual cues but also spatial awareness and environmental cues are required for successful performance [4].

The extant literature on transfer of training (ToT) is primarily derived from the healthcare, aviation, and military domains [5]. ToT in AR-based simulations and applications has been studied in the context of assembly and manufacturing tasks [6,7]. Our study extends this literature by highlighting the need for further research in environments with complex rule sets, namely in the domain of sports. Because sports rules are typically learned hands-on from a coach or other instructor, we are interested in exploring exposure effects that might help learners before experiential forms of learning. Exposing students to the rules and regulations before they play the game may lessen the burden on novice players of learning physical and cognitive tasks at the same time. In addition to extending the transfer of training literature to the AR domain, we explore user experience across several interfaces, including an AR interface and a VR interface. We evaluated differences in perceived user experience and whether learning outcomes are influenced by the type of interface presented to the user. The experiment consists of an intervention followed by a post-test; this design is based on evaluation designs used with intelligent tutoring systems (ITS) [8]. ITS are designed to present personalized information to the end user, and studies have demonstrated that this form of instruction may lead to better learning outcomes than reading a paper manual or watching a video tutorial alone [9].

Previous studies on referee behavior and decision making demonstrated that referees make an average of 44.4 decisions on their own during a typical cup match [10]. Although our goal in this paper is to train novice players to better understand soccer rules, this finding helps illustrate the cognitive demands placed on observers of a soccer match who want to know what is occurring throughout the game and how the plays affect the outcome. Moreover, if experts must make this many decisions on their own, it is easy to see how overwhelming it can be for a novice player to make calls about rule violations. With this motivation, we aim to lessen the cognitive demands novices face when learning soccer for the first time by isolating the rule comprehension aspect of the game. To the best of our knowledge, no other paper has studied differences in user perception across all three interface types while also exploring the utility of adding an ITS to provide feedback to the user. The purpose of this study is therefore to report whether there are differences in learning outcomes between VR, AR, and a traditional Desktop interface. We present the following hypotheses. First, we contend that participants will perform just as well, if not better, in the AR condition when compared to the other two study conditions, Desktop and VR. Because the content is the same for all three interface conditions, we argue that the AR environment lets participants view examples in a real-world context; their presence in the real-world environment allows them to focus on the most important visual aspects required for understanding the rules, which contributes to better learning outcomes. Second, we suggest that the AR environment will result in a more enjoyable user experience due to the availability of visual cues and affordances in the real-world environment [11]. We point to these as potential areas for further research. We also conducted a preliminary evaluation of user experience to better understand which condition provided the most interesting and enjoyable experience for participants. Primarily, we are interested in understanding whether there are differences in reported user experiences between AR and VR.

Overall, we found that after the experiment participants achieved a similar understanding of the soccer rules under the three conditions. This is supported by (1) the fact that just one participant in each condition did not pass all quiz assessments after the third attempt and (2) the significant increase in knowledge that participants reported. However, participants in the Desktop condition needed more learning reinforcements to reach this level than those in the VR and AR conditions. We also found that reported user experience favors AR and VR over Desktop. Interestingly, even though some users reported discomfort with the Hololens over the duration of the study (due to the device's weight), they rated the experience as less frustrating and more interesting than participants in the other two conditions.

2. Related Work

Increasingly, studies in sports training emphasize the importance of training and improving perceptual skills [12]. Typically, these studies are grounded in either expertise-performance theories or frameworks that focus on decision making or other aspects of macrocognition [13]. Recent research has extended this macrocognitive approach to simulated environments for sports training. For example, VR has been used as an environment for training behavioral and physiological responses related to performance anxiety [14]. The application of VR in a variety of sports-related contexts thus lends itself well to extending this research to AR. AR for sports is a rapidly growing area of research due to the increasing availability of AR head-mounted displays and mobile AR interfaces. This broad but relatively new application of AR provides a rich area for exploration. For example, Kajastila et al. demonstrated the utility of AR in planning and navigating surfaces in the context of team rock climbing [15]. Additionally, Sano et al. studied the effects of using AR as a training tool to help novice players visualize the velocity and trajectories of a soccer ball [16].

To better understand whether these results apply across multiple sports, including team sports, we study the effects of three different interfaces combined with an ITS to support and facilitate learning the common rules of a soccer match. The ITS in this experiment is built with the Army Research Lab’s Generalized Intelligent Framework for Tutoring (GIFT) [17]. GIFT is designed to be configurable for any domain. Because of this flexibility, we explore it as a potential component for maximizing learning outcomes in the context of learning soccer rules and regulations [18]. The adaptive nature of the tool allows users to enhance their learning experience by practicing concepts and viewing more direct examples of plays (see Figure 1). Additional work involving AR has shown clear benefits in assembly tasks: [4] found that AR combined with an ITS improves student performance, and in [19] the AR-trained group had fewer unsolved errors compared with a group that followed a filmed demonstration.

We tested six of the most common concepts, derived directly from the official International Football Association Board (IFAB) “Laws of the Game 2016/2017” documentation [20]: offside, free kicks, penalty kicks, throw-ins, goal kicks, and corner kicks. We chose these concepts because they represent common scenarios encountered in soccer matches. Soccer is a suitable domain because the participant population we chose is not familiar with soccer tasks or rules, which reduces the risk that prior experience with the task biases the results. Additionally, soccer relies heavily on visual cues. This allows us to explore proxemic measurements across conditions and whether these measurements contribute to a positive user experience or to increased performance, measured in terms of learning outcomes. Although other studies have demonstrated the utility of video simulation for improving a novice player’s ability to anticipate opponent movements, these studies primarily emphasize skill building rather than rule comprehension [21].

3. Materials and Methods

We conducted a study in which we examined three interface types: a traditional desktop, a virtual reality condition, and an augmented reality condition.

3.1. Subjects and Apparatus

A total of 36 participants (23 male, 13 female) were randomly assigned to one of the three interface conditions. All participants were university students between 18 and 35 years of age (Mean = 23.1, Median = 21). For the desktop and VR conditions, we used a computer running Windows 10 with 16 GB of RAM, a 3.4 GHz i7 processor, and an Nvidia GeForce GTX 1080 GPU with 8 GB of VRAM. For VR, we used the HTC Vive to immerse participants, with one Vive controller to navigate the environment and interact with the GUI. For the AR condition, we used a Microsoft Hololens to display virtual overlays in the physical world, an Xbox One controller to interact with the GUI, and a Surface Pro 3 running Windows 10 with 8 GB of RAM and a 2.3 GHz i7 processor. All conditions used the same simulation, developed in Unity3D version 2017.1.0f3.

3.2. Software Description (ITS)

A tutoring system application was developed using the Generalized Intelligent Framework for Tutoring (GIFT) [17].

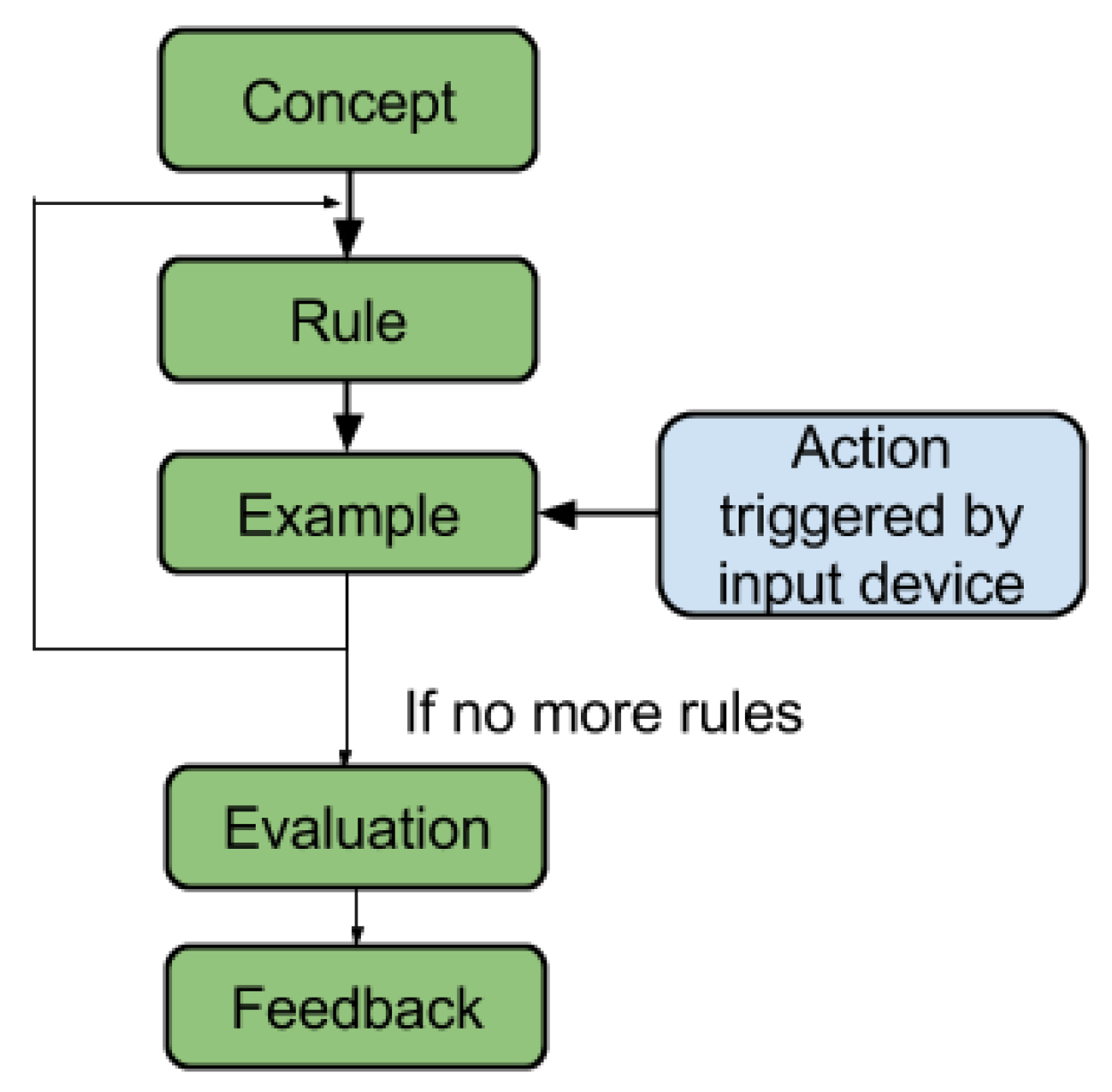

Figure 1 illustrates the course flow, which starts with an overall description of the game and proceeds to concepts and terminology in the following sections. Each course node maps to supporting media elements in a Unity application. “Concept” and “Rule” nodes (Figure 2) are visualized in a screen canvas on the desktop and in a floating canvas in the VR and AR setups. The application loads the scene identified by the unique keyword defined for each scenario. The “Evaluation” component manages the adaptive strategy used in the experiment, which is based on the Engine for Management of Adaptive Pedagogy (EMAP) [22]. Finally, the “Feedback” object shows the student’s outcome after each concept and provides feedback for incorrect answers. The process repeats until all six concepts are completed.
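The course flow can be sketched as a loop over concept nodes. This is a minimal illustration, not GIFT's implementation: the callbacks `show` and `quiz_passed` are hypothetical stand-ins for the Unity scenes and the EMAP-based evaluation, and the cap of three evaluation attempts is an assumption inferred from the results, which mention participants who had not passed after the third attempt.

```python
from typing import Callable, Dict, List


def run_course(concepts: List[str],
               show: Callable[[str, str], None],
               quiz_passed: Callable[[str, int], bool],
               max_attempts: int = 3) -> Dict[str, int]:
    """Walk Concept -> Rule -> Evaluation -> Feedback for each concept.

    `show` and `quiz_passed` are hypothetical hooks; `max_attempts` is an
    assumed cap on evaluation attempts. Returns attempts used per concept.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    attempts_used: Dict[str, int] = {}
    for concept in concepts:
        show(concept, "concept")
        show(concept, "rule")
        attempt = 1
        while True:
            passed = quiz_passed(concept, attempt)   # "Evaluation" node
            show(concept, "feedback")                # "Feedback" node
            if passed or attempt == max_attempts:
                break
            show(concept, "remediation")             # review content, then recall again
            attempt += 1
        attempts_used[concept] = attempt
    return attempts_used


if __name__ == "__main__":
    log = []
    # Hypothetical learner: fails the first throw-in quiz, passes everything else.
    result = run_course(["offside", "throw_in"],
                        lambda c, step: log.append((c, step)),
                        lambda c, attempt: c != "throw_in" or attempt >= 2)
    print(result)  # {'offside': 1, 'throw_in': 2}
```

Passing the scenes and the evaluation in as callbacks keeps the sequencing logic independent of any particular renderer, which mirrors how the same course is reused across the desktop, VR, and AR setups.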

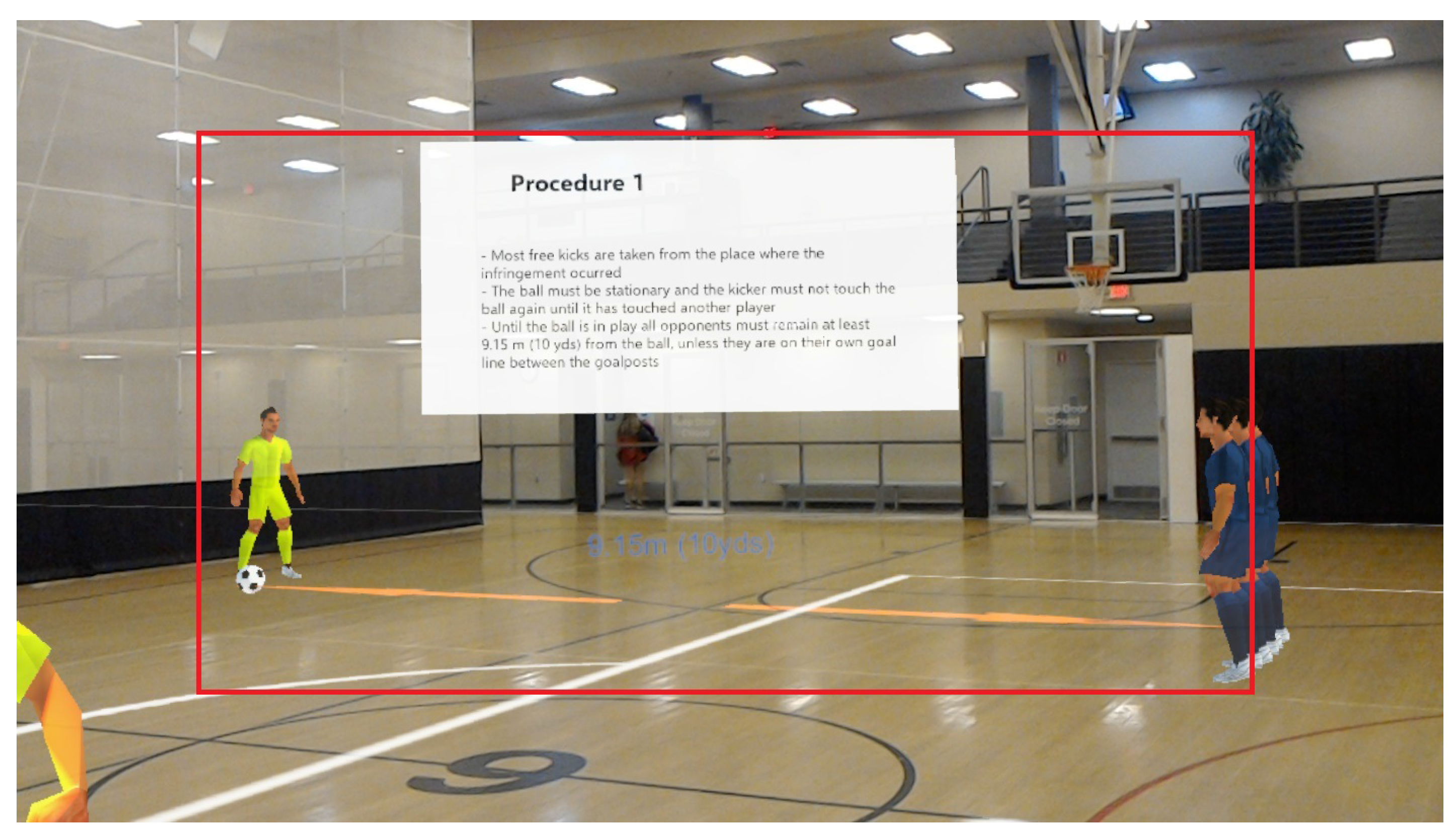

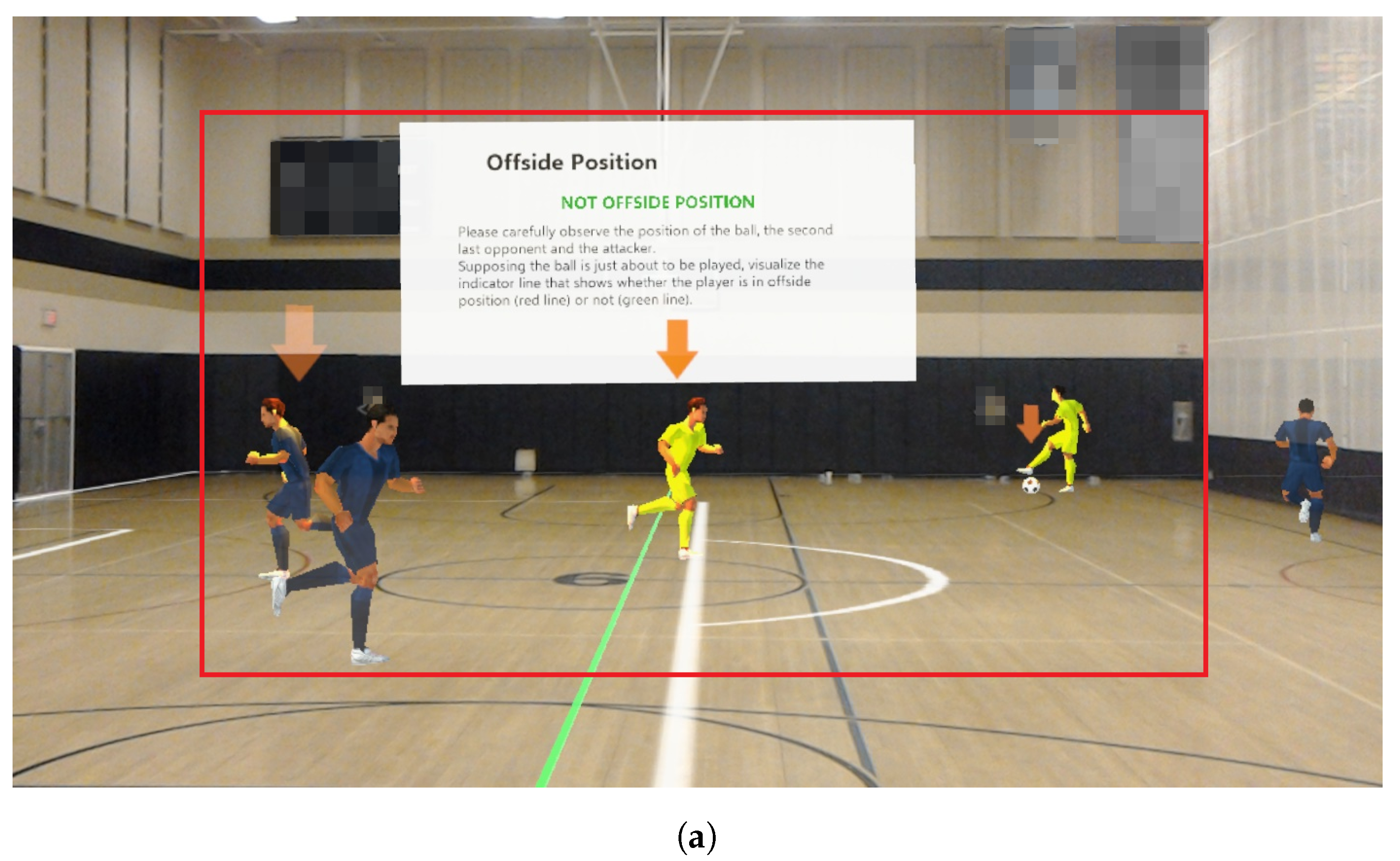

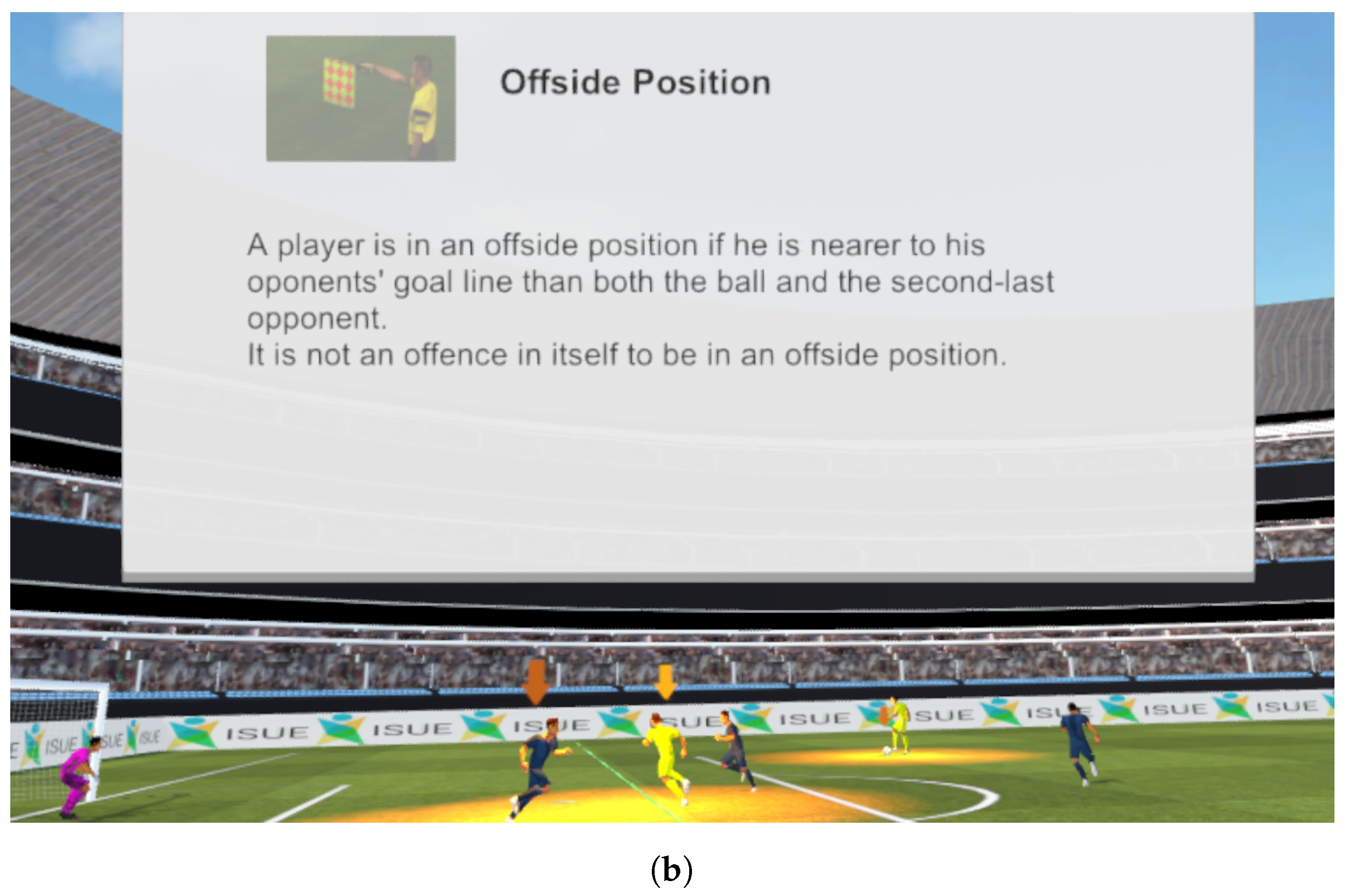

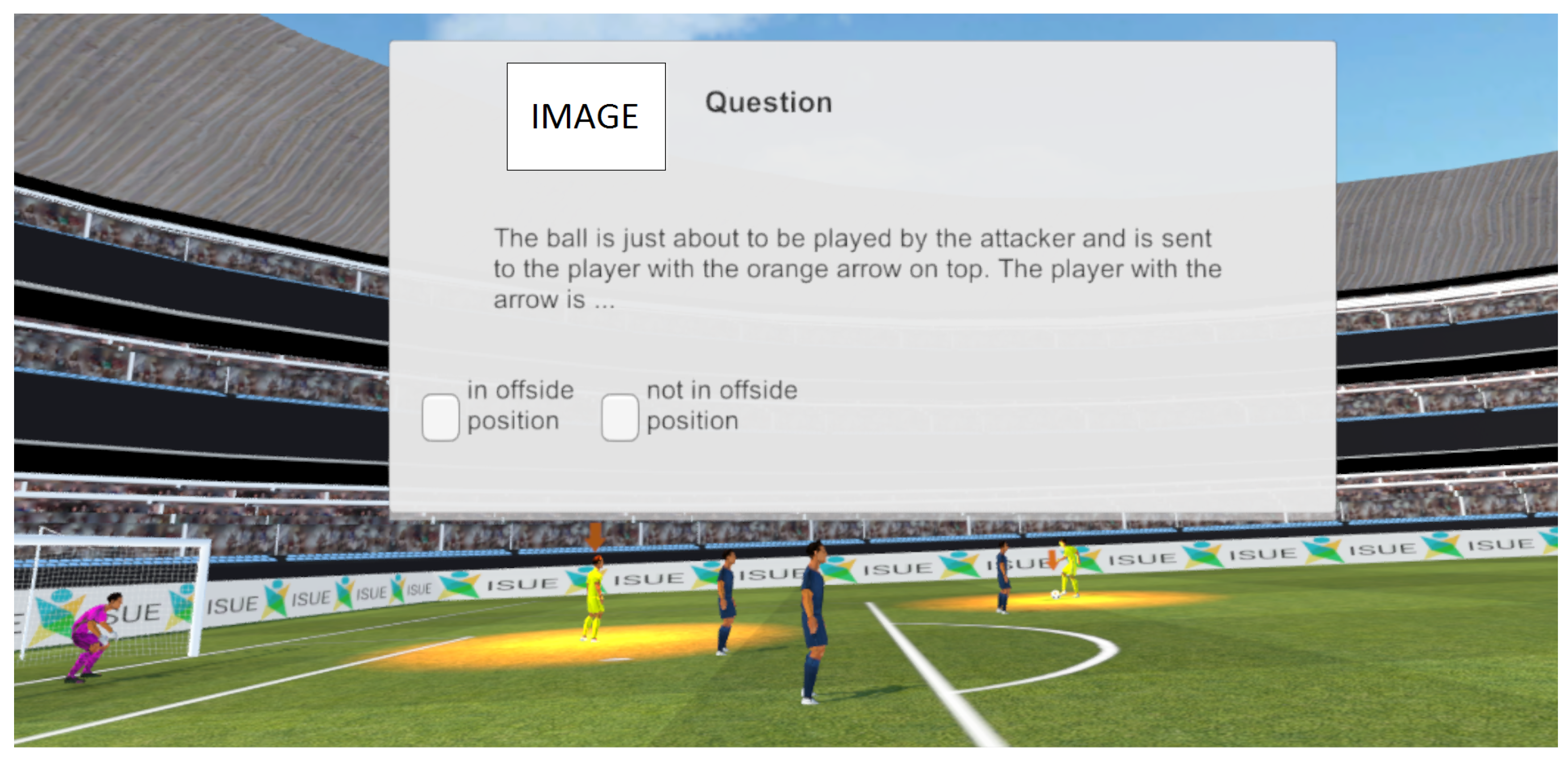

The Unity application uses 3D models and animations purchased from the Unity Asset Store, while the logic for each concept and evaluation was implemented specifically for this application. The 45 scenes are divided as follows: Introduction (1), Offside (1) (see Figure 3), Free Kick (2), Penalty Kick (2), Throw-in (1), Goal Kick (1), Corner Kick (1), and six evaluation scenes for each of the six concepts (36). The scenarios were adapted to each device used in the desktop, VR, and AR setups. The screen canvas used on the desktop was replaced by a floating canvas in VR and AR. For AR, because of the device's small field of view (FOV), the layout was optimized so that content fits in a readable manner. The Unity application connected via XML-RPC to the local GIFT instance.
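The text states only that the Unity application and the local GIFT instance communicate over XML-RPC and that scenes are identified by keywords. As a hedged illustration of that pattern, the sketch below uses Python's standard `xmlrpc` modules: one side exposes a hypothetical `load_scene` method that resolves a keyword to a scene, and the other side calls it. Which process acts as the server, the method name, and the keywords are all assumptions, not GIFT's actual interface.

```python
import threading
from xmlrpc.client import ServerProxy
from xmlrpc.server import SimpleXMLRPCServer

# Hypothetical keyword -> scene mapping (the real keywords are not given).
SCENES = {"offside_concept": "Offside", "throw_in_concept": "ThrowIn"}


def load_scene(keyword: str) -> str:
    """Hypothetical remote method: resolve a scenario keyword to a scene."""
    if keyword not in SCENES:
        raise ValueError(f"unknown scene keyword: {keyword}")
    return SCENES[keyword]


def start_server() -> SimpleXMLRPCServer:
    """Serve load_scene on localhost; port 0 lets the OS pick a free port."""
    server = SimpleXMLRPCServer(("127.0.0.1", 0), logRequests=False)
    server.register_function(load_scene, "load_scene")
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server


if __name__ == "__main__":
    server = start_server()
    host, port = server.server_address
    with ServerProxy(f"http://{host}:{port}/") as proxy:
        print(proxy.load_scene("offside_concept"))  # Offside
    server.shutdown()
    server.server_close()
```

An unknown keyword raises on the serving side and reaches the caller as an XML-RPC fault, so a mistyped scenario keyword fails loudly instead of silently loading the wrong scene.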

Figure 3 shows an example of how the content is presented. In this scene, players in yellow are attacking the goal area of the defending players in blue, with the goal to the left of the viewer. The user decides when an attacking player can legally pass the ball to a teammate by considering both the teammate’s back-and-forth movements and the defenders’ positions. A pass to a teammate whose indicator line is green is legal, because that teammate is not nearer to the defending team’s goal line than both the ball and the second-last defender (rule explained in Figure 3b). This correct case is labeled ‘NOT OFFSIDE POSITION’ in bold green lettering; otherwise, the teammate’s indicator line is red and labeled ‘OFFSIDE POSITION’ in red lettering.
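The offside-position rule used to color the indicator lines can be expressed as a small predicate. The sketch below is a simplification that assumes point positions (the Laws consider any part of the head, body, or feet, while hands and arms are not considered) and a one-dimensional coordinate measured in metres from the defending team's goal line. The function names and the 45 m halfway distance (half of the 90 m virtual field) are illustrative assumptions. Being in an offside position is not itself an offence, so the predicate only decides which label a scene would show.

```python
from typing import Sequence


def in_offside_position(attacker_x: float, ball_x: float,
                        defenders_x: Sequence[float],
                        halfway_x: float = 45.0) -> bool:
    """Point-based offside-position check (simplified).

    All coordinates are distances in metres from the defending team's goal
    line, so a smaller value means nearer to that goal line. `defenders_x`
    includes the goalkeeper.
    """
    if len(defenders_x) < 2:
        raise ValueError("need at least two defending players")
    second_last = sorted(defenders_x)[1]        # second-last opponent
    in_opponents_half = attacker_x < halfway_x  # the halfway line itself does not count
    # Being level with the ball or with the second-last opponent is not offside.
    return in_opponents_half and attacker_x < ball_x and attacker_x < second_last


def label(offside: bool) -> str:
    """Hypothetical helper mirroring the on-screen labels."""
    return "OFFSIDE POSITION" if offside else "NOT OFFSIDE POSITION"


if __name__ == "__main__":
    defenders = [1.0, 14.0, 18.0, 25.0]  # hypothetical: goalkeeper first
    print(label(in_offside_position(12.0, 30.0, defenders)))  # OFFSIDE POSITION
    print(label(in_offside_position(14.0, 30.0, defenders)))  # NOT OFFSIDE POSITION
```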

Using the EMAP guidelines [22], student learning outcomes are evaluated with a question bank of 36 questions covering the 6 concepts, each supported by learning content developed in Unity. For each concept, the six questions are divided into easy (2), medium (2), and hard (2). At evaluation time, the student is given three questions, one at each difficulty level. Each question asks about specific events happening on the soccer field. All questions are multiple-choice with either 2 (see Figure 4) or 5 options. The scoring system awards one point for an easy question, two points for a medium question, and three points for a hard question. A student must answer at least 2 questions correctly to be rated “above expectation” and move on to the next concept; otherwise, the student is rated “below expectation” or “at expectation”, which starts the remediation loop for the failed concept [22]. Guided by feedback on the incorrect answers, the remediation loop reviews the content, highlighting the key aspects the student missed, and then returns to recall. Questions are not repeated in the first two recalls, but they are in the third.
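The question selection and scoring rules above can be sketched as follows. This is an illustrative reading of the text, not EMAP's implementation: the question identifiers are hypothetical, the pass rule is the one stated here (at least two of three questions correct), and the weighted points are computed alongside it because the text does not say how the point total maps onto the expectation levels.

```python
import random
from typing import Dict, List, Sequence, Set, Tuple

LEVELS = ("easy", "medium", "hard")
POINTS = {"easy": 1, "medium": 2, "hard": 3}


def draw_quiz(bank: Dict[str, List[str]], used: Dict[str, Set[str]],
              recall: int, rng: random.Random) -> List[Tuple[str, str]]:
    """Pick one question per difficulty level for the given recall (1-based).

    Recalls 1 and 2 avoid questions already shown; from recall 3 on,
    any question in the level's pool may be repeated.
    """
    quiz = []
    for level in LEVELS:
        pool = bank[level]
        if recall <= 2:
            pool = [q for q in pool if q not in used[level]]
        question = rng.choice(pool)
        used[level].add(question)
        quiz.append((level, question))
    return quiz


def grade(quiz: Sequence[Tuple[str, str]],
          correct: Sequence[bool]) -> Tuple[int, bool]:
    """Return (weighted points, passed); passing needs >= 2 correct answers."""
    points = sum(POINTS[level] for (level, _), ok in zip(quiz, correct) if ok)
    return points, sum(correct) >= 2


if __name__ == "__main__":
    # Hypothetical bank for one concept: two questions per difficulty level.
    bank = {lvl: [f"{lvl}-1", f"{lvl}-2"] for lvl in LEVELS}
    used = {lvl: set() for lvl in LEVELS}
    rng = random.Random(0)
    first = draw_quiz(bank, used, 1, rng)
    print(grade(first, [True, False, True]))  # (4, True): 1 + 3 points, passed
```

With only two questions per difficulty level, the no-repeat rule for the first two recalls exhausts each pool, which is why repeats are unavoidable from the third recall on.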

3.3. Interface Setup

The three interface setups are described below.

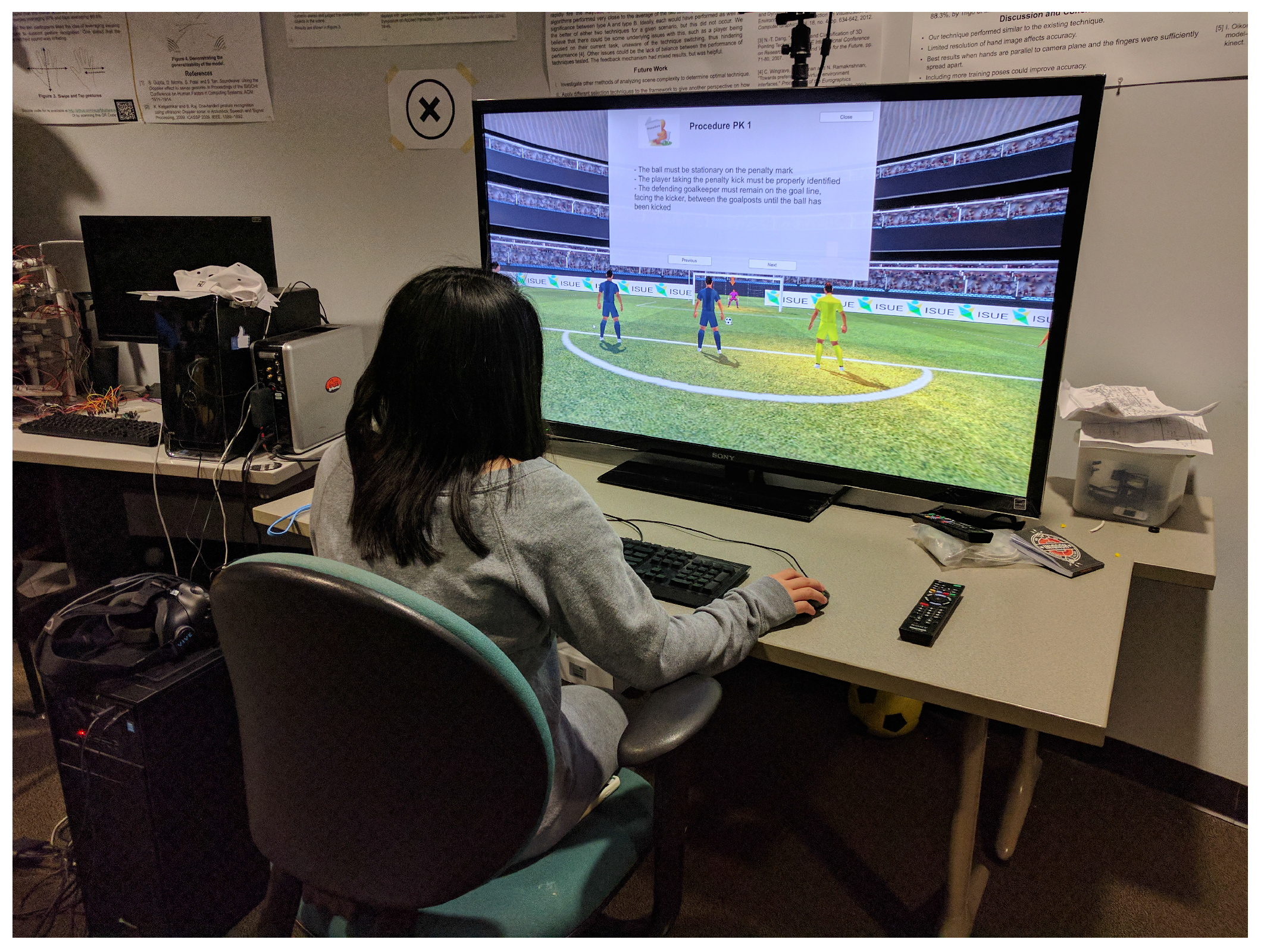

Desktop: The desktop condition was performed in a lab environment. The virtual soccer field measures 45 m × 90 m. The user views the scenarios on a flat-panel TV display and navigates and selects with a keyboard and mouse. The keyboard changes the viewing position, while the mouse changes the viewing angle (see Figure 5). Both mouse and keyboard are used to navigate between learning content and to attempt the quizzes.

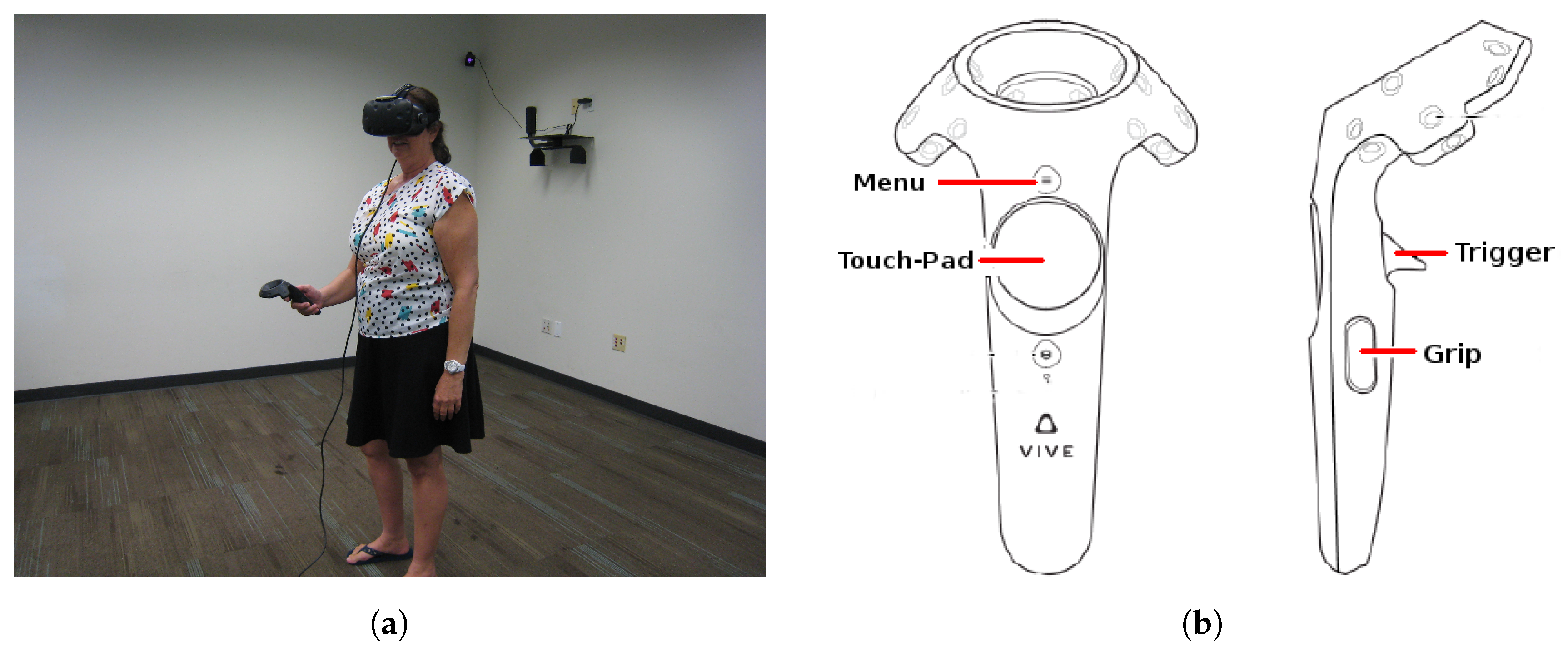

Virtual Reality: Users performed the experiment in a lab environment within a 3 m × 4 m space. The virtual soccer field measures 45 m × 90 m. Content is viewed through a head-mounted display (HMD), and users interact with an HTC Vive controller (Figure 6). The HTC Vive provides much greater immersion, but at the cost of users’ awareness of their physical surroundings. This is mitigated by teleportation: the user points the controller at a valid location and presses the trigger. For shorter distances, users can choose to walk, as long as they remain within the configured safe zone.

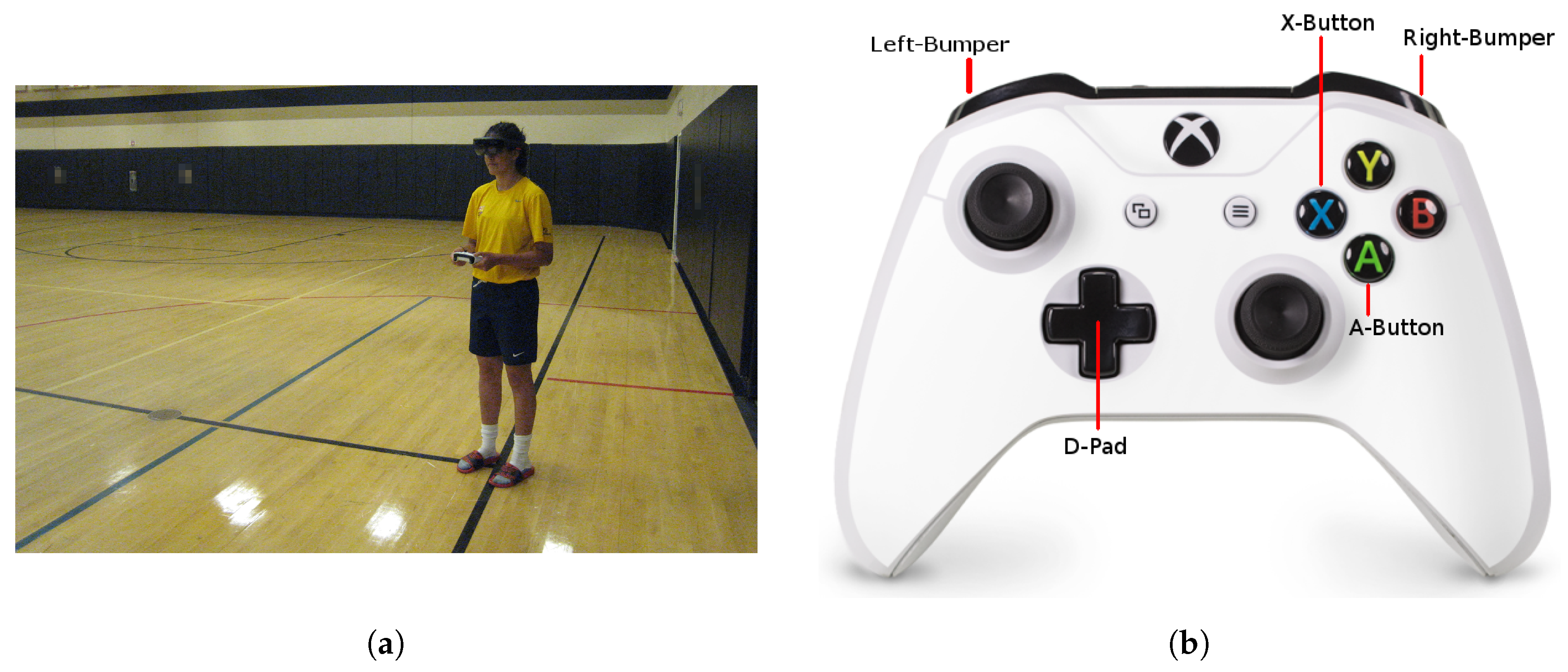

Augmented Reality: This condition took place in an indoor soccer facility with approximate dimensions of 40 m × 70 m. The virtual soccer field is scaled to 40 m × 70 m to fit the real environment. The scenarios are adapted for AR deployment: except for the players and the soccer ball, all virtual objects, such as the stadium, soccer field, and goal posts, were removed from the scene. Virtual players are placed relative to the real goal, and users walk around the field to get better views when needed. Content is visualized through the Hololens, with an Xbox One S controller as the input device (Figure 7).
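Fitting the 45 m × 90 m virtual field onto the roughly 40 m × 70 m indoor field can be illustrated with independent per-axis scaling about the centre of the goal line. The text does not say exactly how the scaling was done, so the transform below is an assumption, as are the coordinate convention and the function name.

```python
from typing import Tuple

VIRTUAL_FIELD = (45.0, 90.0)  # (width, length) in metres, virtual field
REAL_FIELD = (40.0, 70.0)     # (width, length) in metres, indoor field (approx.)


def to_real_field(pos: Tuple[float, float]) -> Tuple[float, float]:
    """Map a virtual position to the real field (assumed per-axis scaling).

    `pos` is (lateral offset, distance from the goal line) in metres,
    measured from the centre of the goal line that anchors the AR scene.
    """
    sx = REAL_FIELD[0] / VIRTUAL_FIELD[0]  # 40/45, about 0.889
    sz = REAL_FIELD[1] / VIRTUAL_FIELD[1]  # 70/90, about 0.778
    return pos[0] * sx, pos[1] * sz


if __name__ == "__main__":
    # A hypothetical player on the halfway line, 22.5 m to the side of centre.
    print(to_real_field((22.5, 45.0)))
```

Because both factors are positive, the ordering of positions along each axis is preserved, so whether an attacker is nearer the goal line than the second-last defender is unchanged by the mapping; absolute distances and diagonal angles, however, are distorted because the two factors differ.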

3.4. Experimental Procedure

Participants were screened with a questionnaire based on their level of experience playing soccer on a formal team or club and their preferences regarding watching televised sports. After passing screening, participants completed a pre-test rating their knowledge of and familiarity with the scenario concepts on a 7-point Likert scale, with 1 representing “little knowledge” and 7 representing “very knowledgeable”. Participants were then assigned to their condition and given instructions on how to complete the study, including a quick overview of the corresponding input device. In the VR condition, the Vive trigger button was used to teleport around the environment, and the touchpad was used to cycle through the GUI prompts: clicking the right side went to the next window, and clicking the left side went to the previous one. In the AR condition, the right and left directional buttons on the Xbox controller performed the same functions.

The scenario began with the user viewing two teams (yellow and blue) of computer-controlled soccer player agents. Participants started at the center of the blue team’s goal, behind the goalkeeper. Throughout the experiment, all concepts are explained on the blue side, which represents the defensive side; the yellow team is the attacking team. The avatars could be seen running, dribbling and kicking the ball, throwing in from out of bounds, and even tackling opponents. A floating GUI window conveyed information about each step of the simulation. This GUI was displayed in front of the user’s viewport and could be hidden at the user’s discretion. When the user clicked a button to go to the next or previous GUI prompt, the text changed and the avatars moved to a corresponding spot on the virtual field. The GUI cycled through a predetermined random order of lessons (called “concepts”) for the user to learn: direct and indirect free kicks, offside, throw-ins, goal kicks, penalty kicks, and corner kicks.

After the user reviewed all points for a given concept, recall was invoked and three questions were presented. Using the input device for their condition (see Table 1), the user selected a multiple-choice answer. If more than one question was answered incorrectly, feedback was provided and the remediation loop started so that the user could reinforce their knowledge. We logged any mistakes made in each concept quiz. This process continued until all concepts were completed.

At the conclusion of the study, participants completed a questionnaire that quantified their understanding of soccer rules and asked them to rate their experience with the system [23]. This questionnaire included the same knowledge questions asked in the pre-questionnaire, plus additional user experience questions. Participants could also write in any feedback regarding the system or their experience. See Table 2 for the questions asked in the questionnaire.

4. Results

We performed multiple analyses to interpret both our objective data and the qualitative user experience data. First, we analyzed how many adaptations were required overall in each experimental condition (Table 3). An adaptation is a reinforcement phase, triggered when 2 or more questions are answered incorrectly, that leads to another iteration of the concept. We wanted to understand which study conditions required multiple iterations of a concept. Users in the Desktop condition required the most adaptations (25), followed by AR (19) and VR, which required the fewest (16).
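If the conditions had equal group sizes (assumed here to be 12 each, i.e. 36 participants split over three conditions, consistent with the 12 desktop participants mentioned in the Discussion), the totals can also be read as mean adaptations per participant. The short calculation below only rescales the reported totals; it is not an additional analysis from the study.

```python
ADAPTATION_TOTALS = {"Desktop": 25, "AR": 19, "VR": 16}  # totals reported above
GROUP_SIZE = 12  # assumption: 36 participants split evenly over 3 conditions


def per_participant(totals: dict, group_size: int) -> dict:
    """Mean number of adaptations per participant in each condition."""
    return {cond: total / group_size for cond, total in totals.items()}


if __name__ == "__main__":
    for cond, rate in per_participant(ADAPTATION_TOTALS, GROUP_SIZE).items():
        print(f"{cond}: {rate:.2f}")  # prints Desktop: 2.08, then AR: 1.58, then VR: 1.33
```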

For the pre/post-test data, we performed a Wilcoxon signed-rank test to assess changes between the pre- and post-test ratings. The increase was statistically significant for all concepts in all groups. This is not unexpected, since we would expect to see improvement following the intervention. Statistical values are presented in Table 4.
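To show what the test computes, the sketch below implements the Wilcoxon signed-rank test on paired pre/post Likert ratings using only the standard library. It drops zero differences, assigns average ranks to tied absolute differences, and uses the tie-corrected normal approximation. The text does not state which variant or software was used, so these choices are assumptions, and the ratings in the example are made up, not the study's data.

```python
import math
from typing import Sequence, Tuple


def wilcoxon_signed_rank(pre: Sequence[float],
                         post: Sequence[float]) -> Tuple[float, float, float]:
    """Return (W+, z, two-sided p) for paired samples.

    Zero differences are discarded, tied |differences| get average ranks,
    and p comes from the tie-corrected normal approximation.
    """
    if len(pre) != len(post):
        raise ValueError("pre and post must be paired")
    diffs = [b - a for a, b in zip(pre, post) if b != a]
    n = len(diffs)
    if n == 0:
        raise ValueError("all differences are zero")
    order = sorted(range(n), key=lambda k: abs(diffs[k]))
    ranks = [0.0] * n
    tie_term = 0.0
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg_rank = (i + j) / 2 + 1  # ranks are 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        t = j - i + 1
        tie_term += t ** 3 - t
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    mean = n * (n + 1) / 4
    var = n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48
    z = (w_plus - mean) / math.sqrt(var)
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return w_plus, z, p


if __name__ == "__main__":
    # Made-up 7-point ratings for 12 participants (NOT study data).
    pre = [1, 2, 1, 3, 2, 1, 2, 1, 2, 3, 1, 2]
    post = [5, 6, 4, 6, 5, 5, 6, 4, 5, 6, 5, 5]
    w, z, p = wilcoxon_signed_rank(pre, post)
    print(f"W+ = {w}, z = {z:.2f}, p = {p:.4f}")
```

Since every made-up rating increased, W+ reaches its maximum possible value n(n+1)/2 = 78. For small samples, statistical packages may instead report an exact p-value, which can differ from this normal approximation.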

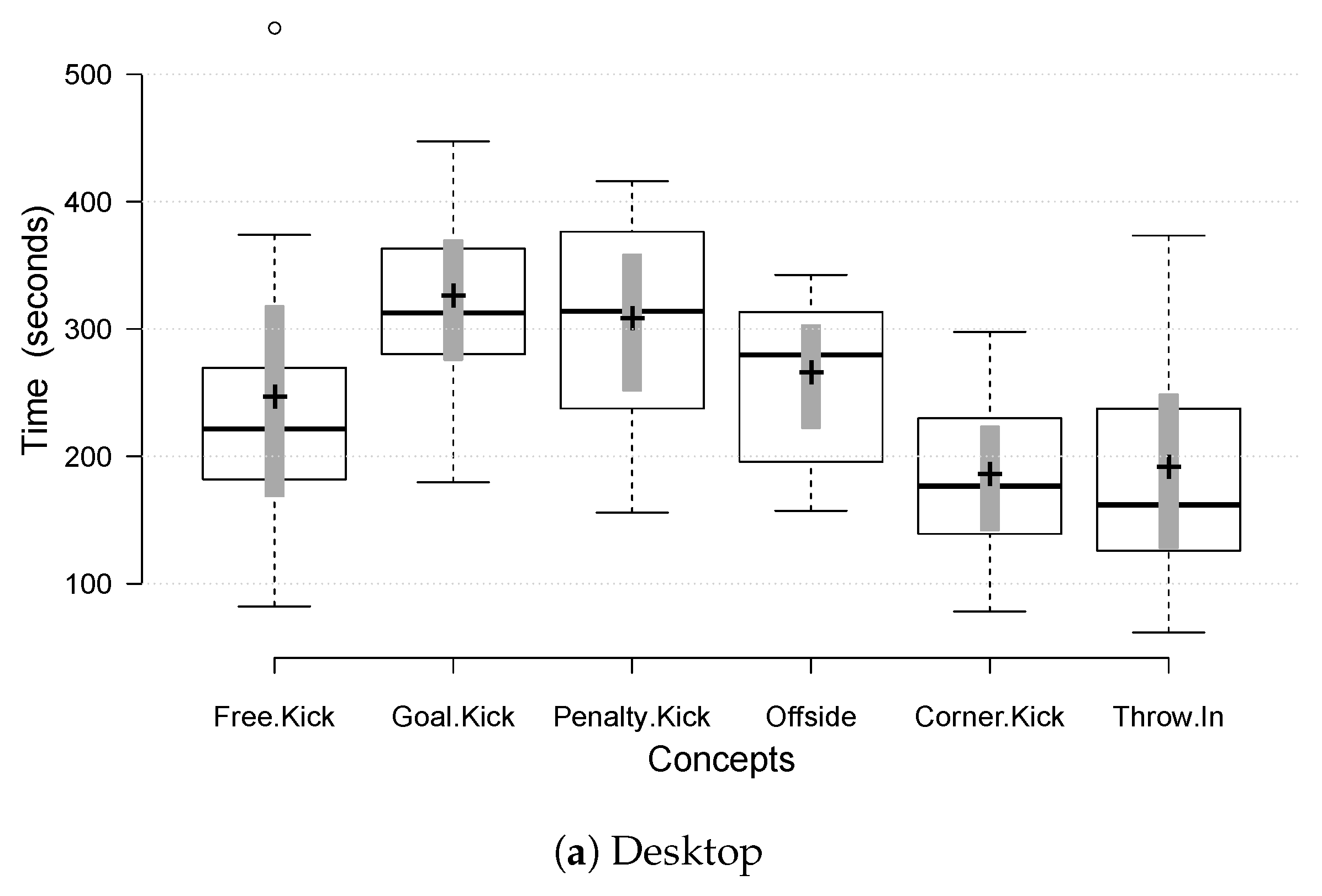

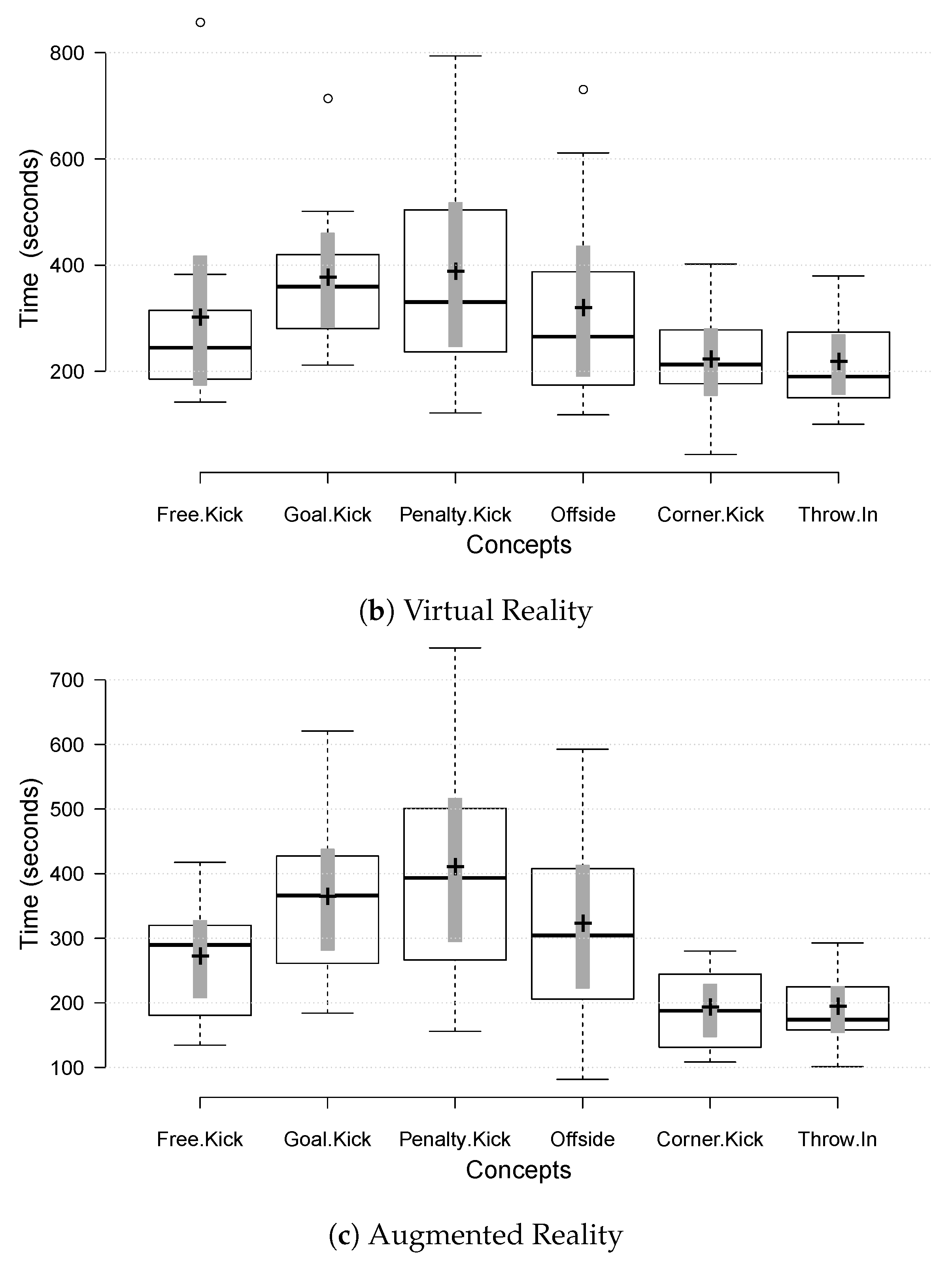

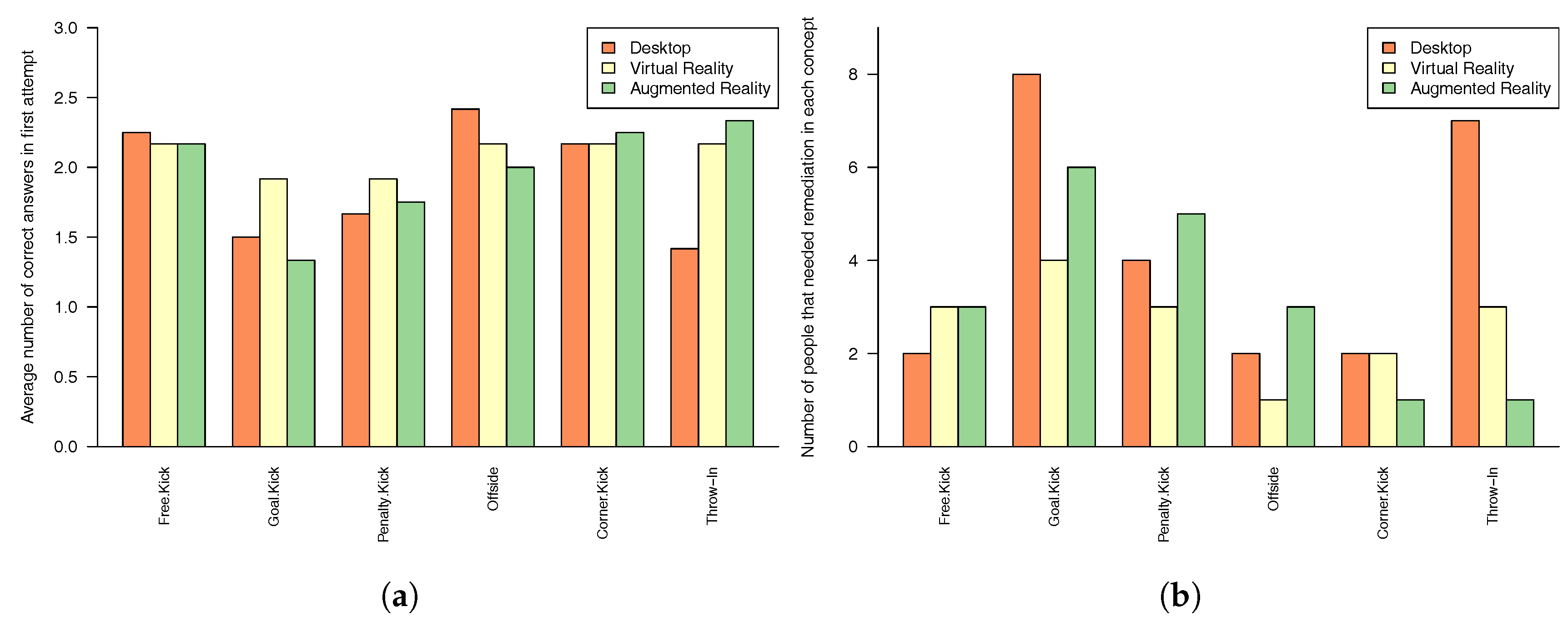

For each concept, Deliberation Time (see Figure 8) and the number of correct answers in each quiz attempt are recorded. Deliberation Time runs from the moment a participant begins learning a concept until the quiz begins. If remediation loops are required, Deliberation Time is calculated as the mean time across all attempts. Additionally, the number of questions answered correctly on the first attempt is recorded for each concept, and its mean is shown in Figure 9a. Figure 9b shows the number of participants that required adaptations.
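The Deliberation Time definition can be made concrete with a small helper. The sketch assumes each attempt is logged as a pair of timestamps in seconds (content start, quiz start); the log format and the function name are hypothetical.

```python
from statistics import mean
from typing import List, Tuple


def deliberation_time(attempts: List[Tuple[float, float]]) -> float:
    """Mean seconds from starting a concept's content to starting its quiz.

    `attempts` holds one (content_start, quiz_start) pair for the first
    pass and one for each remediation loop.
    """
    if not attempts:
        raise ValueError("no attempts logged")
    durations = [quiz_start - content_start for content_start, quiz_start in attempts]
    if any(d < 0 for d in durations):
        raise ValueError("quiz_start precedes content_start")
    return mean(durations)


if __name__ == "__main__":
    # Hypothetical log: first pass took 120 s, one remediation pass took 60 s.
    print(deliberation_time([(0.0, 120.0), (200.0, 260.0)]))  # 90.0
```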

5. Discussion

Alternative methods for sports training have been investigated in a variety of settings and environments with varying levels of success. In this paper, we extend the existing literature to better understand the influence of both virtual and augmented reality on learning soccer rules. The results show that participants in the desktop condition required the most adaptations overall (25 in total). We attribute this to the input device: we contend that the keyboard and mouse mappings were not intuitive and required more practice before participants became adept at using the interface. This is also supported by the fact that 9 of the 12 desktop participants required adaptation on the first concept, regardless of which concept was presented (Table 5). Additionally, the throw-in concept seemed to be challenging for participants in the desktop condition. Interestingly, in the previous work by Helsen et al., throw-ins were the most frequent decision referees had to make on their own [10]. This relates to our findings, since we can postulate that the visual cues afforded by the desktop environment may not be sufficient for decision making in throw-in scenarios.

Overall, the VR condition required the fewest adaptations (16 in total). This is interesting because VR participants tended to rate their prior knowledge of soccer lower than the other two groups (Table 4). Table 6 shows that AR outperformed the VR and Desktop conditions in overall satisfaction with the feedback the system provided. Participants also perceived a higher overall knowledge improvement in AR, and users in the AR condition reported less stress and frustration while using the system. This question (Q7) was not reverse coded, so a lower number indicates less stress. We cannot determine whether this difference is due to the learning curve of the Vive controller, which differs slightly from the more familiar Xbox One S controller. Additionally, both VR and AR participants rated the system as more interesting to use than their desktop counterparts did.

Beyond these factors, we note some limitations of the study that should be addressed in further investigation. First and foremost, the indoor environment of the AR condition may not map directly onto the virtual outdoor setting of the VR and desktop conditions. Due to inclement Florida weather and the risk of heat exhaustion, we were not able to test the AR condition on an outdoor soccer field and instead compromised by choosing an indoor field. While the content was held constant, these conditions limited direct comparison of some environmental factors. For instance, the constant indoor lighting and the presence of people in the surroundings introduced variation into the study. Although we did not find statistical differences, these factors are worth comparing in more detail. Second, some of the feedback users provided could improve the system. For example, a better way of differentiating between the two teams may help scaffold novice players’ understanding of what is happening in the scenario. Third, the system’s feedback could be improved by tailoring it to the individual’s exact answer: currently, when a participant answers incorrectly, the feedback does not explain exactly what they did wrong. Finally, with regard to hardware, the Microsoft Hololens field of view limits users’ ability to view all aspects of the scene. We contend that other augmented reality head-mounted displays should be tested in these scenarios to better understand the implications of AR for learning. Although all participants completed the experiment, two participants in the AR condition reported discomfort: one because of the weight of the equipment, and the other because of aching eyes caused by sudden movements while trying to find the ball on the field. Participants using the HTC Vive did not provide any such feedback or express any discomfort.

Navigation also differed across the three conditions. All participants started at the center of the goal, behind the blue team’s goalkeeper. Generally, a user would look for the best point of view to visualize the scene. When the scenario changed, participants moved if a different point of view was needed and otherwise stayed where they were. This behavior, however, differed across interfaces. Desktop users moved around freely. In VR, participants walked very little and mostly teleported; most even requested a chair and performed the study seated. Users who were less familiar with the Vive controller tended to move less. In AR, if the first scenario was Free Kick, Goal Kick, or Corner Kick, users walked minimally and stayed near the initial position until a change of position was required. Navigation was needed for scenarios such as Offside and Penalty Kick, which required a side view of the field from farther away from the goal so that as much of the scene as possible was rendered within the Hololens display. Conversely, if the course started with Penalty Kick or Offside and the user positioned themselves on the side of the field, they would stay on that side and move within a small area. In general, AR participants were less inclined to move around constantly than participants in the other two conditions, because navigation in the Desktop and VR conditions required little effort.

We found limited support for our hypothesis that AR/VR would outperform the desktop condition. While users tended to favor the AR condition according to our qualitative data, these ratings are very close to those of the VR condition. Additionally, the fact that the AR group required only three more adaptations in total than the VR group lends support to the idea that both AR and VR presented the content in an engaging and interesting way. Participants expressed no discomfort with the VR equipment, which suggests that they perceived this interface favorably. Users in the VR condition also subjectively rated their initial knowledge lower than participants in the AR group (Table 4). After the intervention, participants in the VR group rated their knowledge higher across almost all six concepts. Further investigation with a larger sample is necessary to determine whether this is because this group genuinely had less prior knowledge than the AR group. The AR users reported slightly higher satisfaction, indicating a more positive user experience.

6. Conclusions

In the field of education, researchers have found that using augmented reality in environments grounded in constructivist and situated learning theories is positively correlated with students’ learning when compared with traditional methods [24]. Similarly, Wojciechowski et al. found that STEM students displayed more positive attitudes towards AR scenarios due to the perceived usefulness and enjoyment they provide [25]. We contend that this is a rich area of exploration and that sports training could point to future areas of STEM education, such as training abstract concepts or domains where full spatial information may not be available to the end user. Scenarios such as circuit layouts in rooms can benefit from the spatial relation between the environment and the educational content. In these cases, AR can scaffold novice players or learners to better understand where to direct their visual attention, allowing them to focus on the most important takeaways from their training scenarios.

In this study, we found that although AR generally supported learning, this was not the case for all concepts. First, the number of participants that required adaptation per concept (Figure 9) shows that concepts demonstrating player interaction over larger spaces were a problem in the AR condition. Specifically, the three concepts rated unfavorably require the user to be aware of the spatial positions not only of the soccer ball but also of players who are dispersed farther apart. We expect that a wider field of view would yield better results for these concepts. On the other hand, the two concepts that favor AR do not require a wide field of view; instead, the user observes the trail and position of the soccer ball with respect to the field, such as its position relative to the goalposts and touch-line, and any direct ball contact with the affected players. Another important factor we found in the AR condition is depth perception. In a real-world environment, users have more depth cues, which could lead to more positive results for these concepts. We also observed that mean Deliberation Time under each experimental condition did not affect user learning or frustration, even though AR required more time and walking to complete the study.

Finally, we hope to expand upon this area by integrating physiological measures in addition to subjective user feedback. These physiological measures could help us better understand when the user feels frustrated or stressed while using the system and could enable more tailored, individualized feedback in these cases. Additionally, we hope to add audio or other sensory cues to test the effects of a multimodal learning system.