An Automatic Question Generator for Chinese Comprehension

Abstract

1. Introduction

2. Related Works

2.1. AQG Methods

2.1.1. Template-Based Approach

2.1.2. Syntax-Based and Semantic-Based Approach

2.1.3. Sequence-to-Sequence (seq2seq) Approach

2.1.4. Transformer Approach

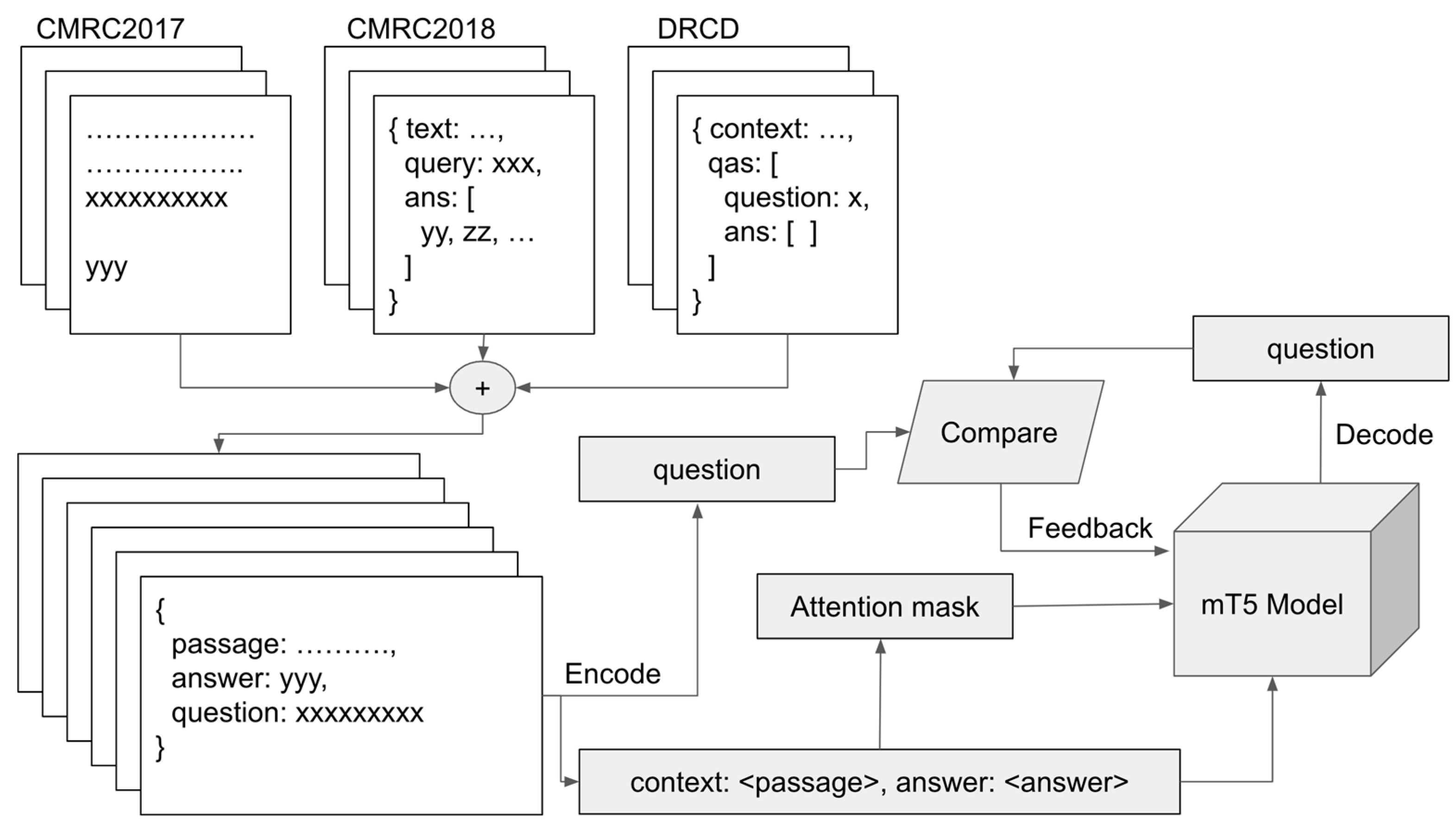

3. Materials and Methods

3.1. Dataset Preparation

3.1.1. CMRC2017 Dataset

3.1.2. CMRC2018 Dataset

3.1.3. DRCD Dataset

3.2. Encoder and Decoder

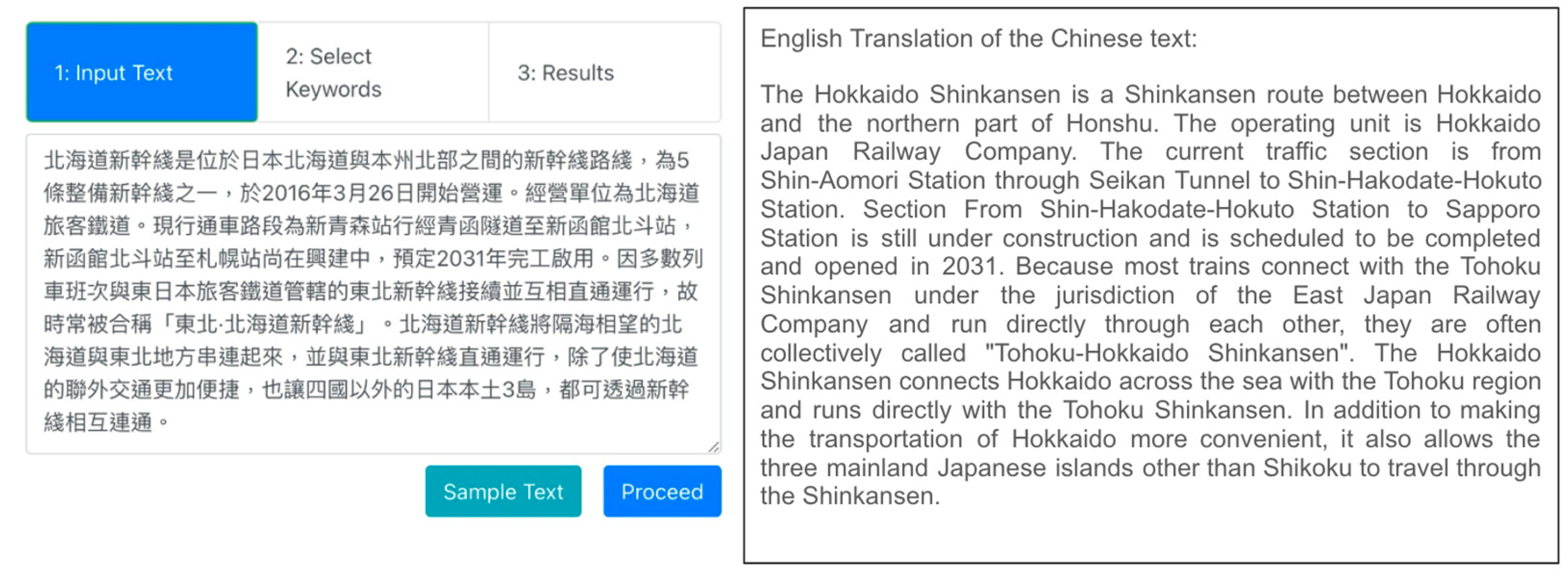

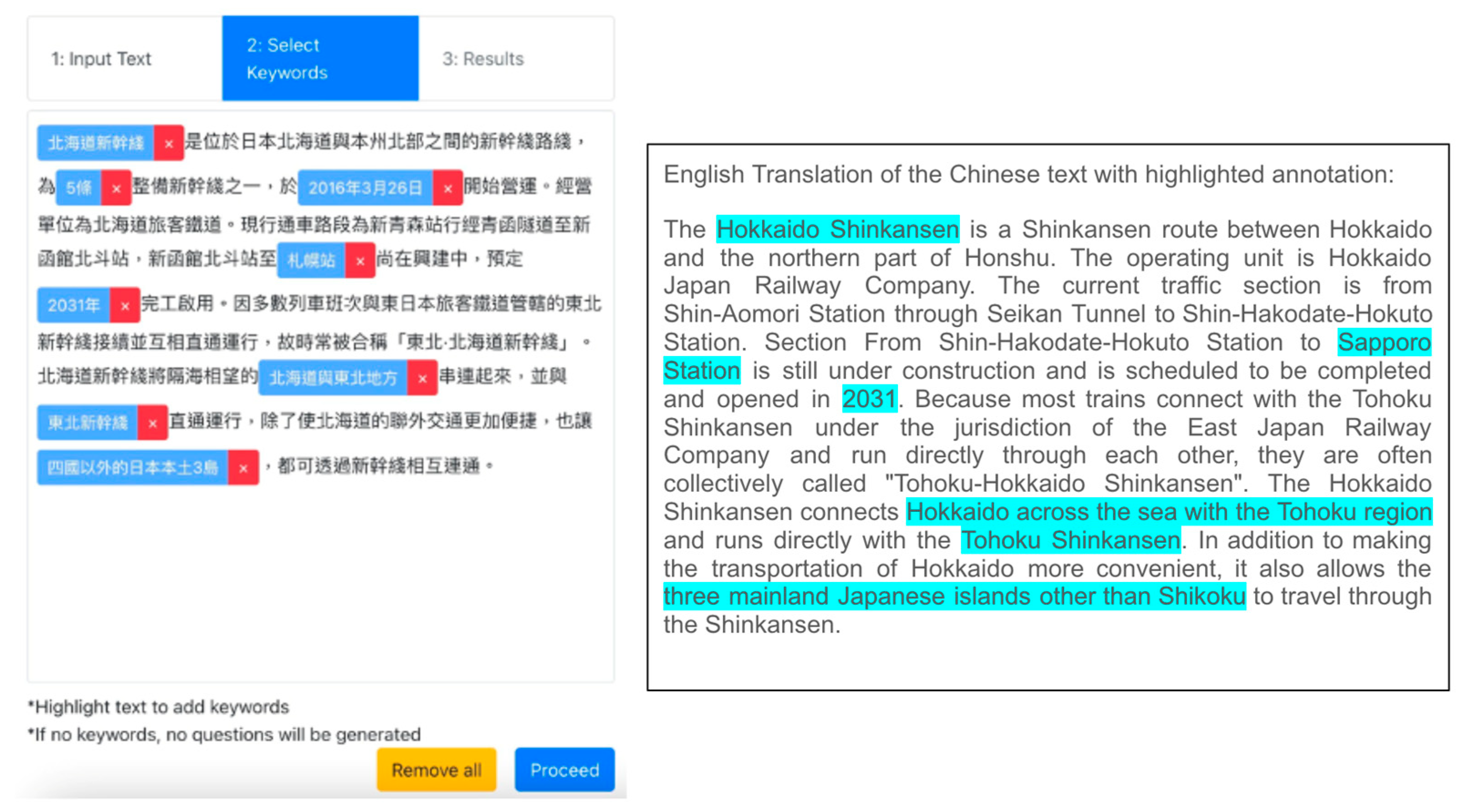

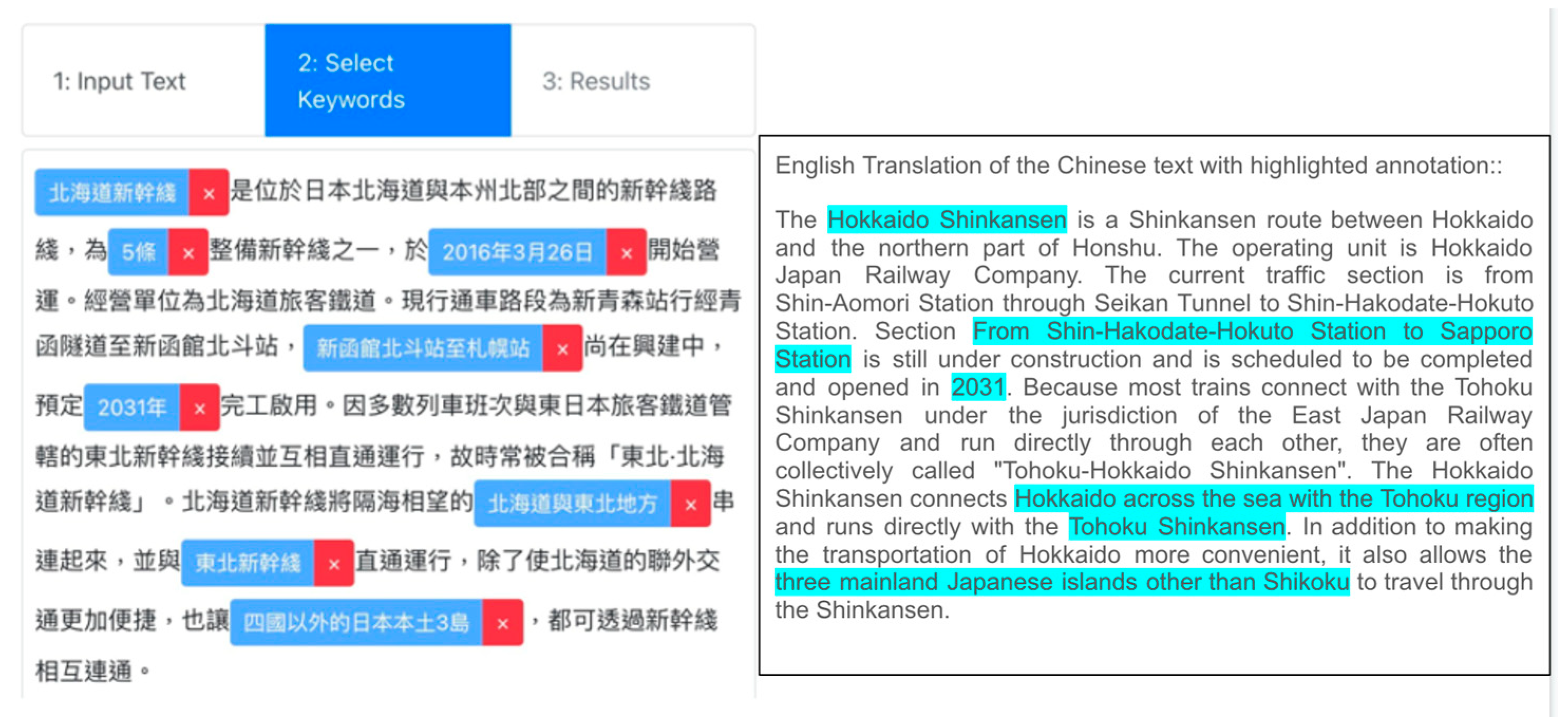

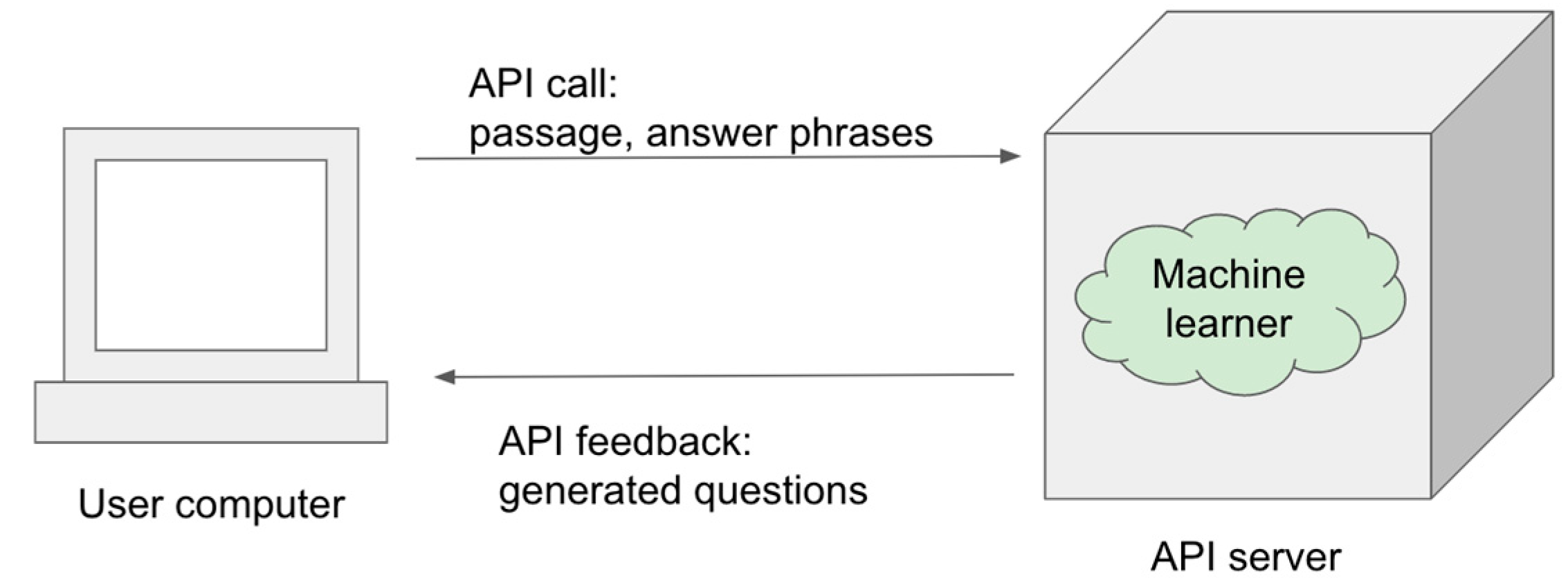

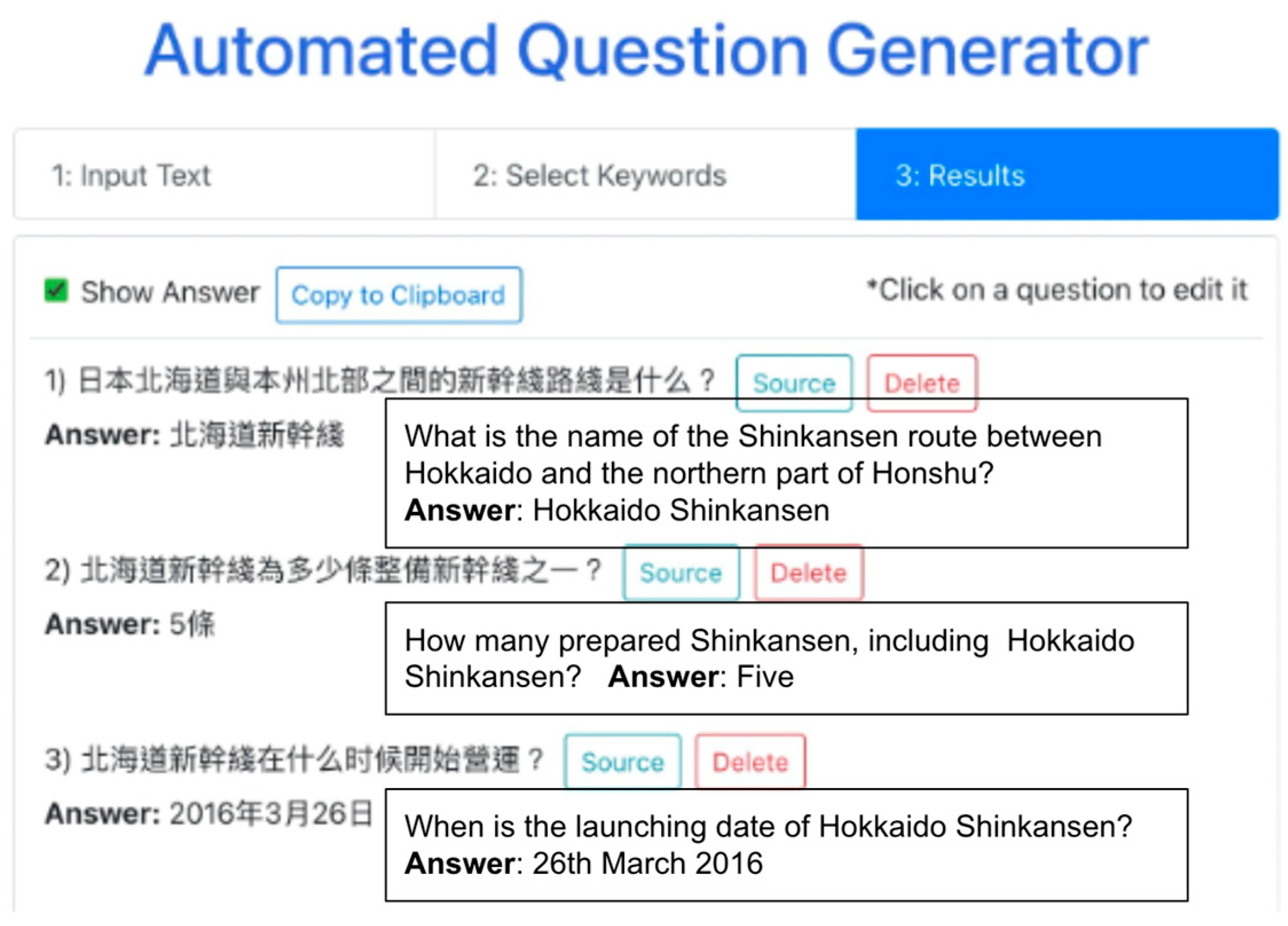

3.3. Application Design

4. Preliminary Results

4.1. Response Time

4.2. User Survey

4.3. Quality of Generated Questions

5. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

Appendix A.1. Test Data

Appendix A.1.1. Text 1

Appendix A.1.2. Text 2

Appendix A.1.3. Text 3

Appendix A.1.4. Text 4

Appendix A.1.5. Text 5

References

- Sadiku, L.M. The importance of four skills reading, speaking, writing, listening in a lesson hour. Eur. J. Lang. Lit. 2015, 1, 29–31.

- Walton, E. The Language of Inclusive Education: Exploring Speaking, Listening, Reading and Writing; Routledge: London, UK, 2015.

- Cartwright, K.B. Cognitive development and reading: The relation of reading-specific multiple classification skill to reading comprehension in elementary school children. J. Educ. Psychol. 2002, 94, 56.

- Wolfe, J.H. Automatic question generation from text - an aid to independent study. In Proceedings of the ACM SIGCSE-SIGCUE Technical Symposium on Computer Science and Education, Anaheim, CA, USA, 12–13 February 1976; pp. 104–112.

- Mitkov, R.; Le An, H.; Karamanis, N. A computer-aided environment for generating multiple-choice test items. Nat. Lang. Eng. 2006, 12, 177–194.

- Kurdi, G.; Leo, J.; Parsia, B.; Sattler, U.; Al-Emari, S. A systematic review of automatic question generation for educational purposes. Int. J. Artif. Intell. Educ. 2020, 30, 121–204.

- Cui, Y.; Liu, T.; Chen, Z.; Ma, W.; Wang, S.; Hu, G. Dataset for the First Evaluation on Chinese Machine Reading Comprehension. In Proceedings of the Eleventh International Conference on Language Resources and Evaluation (LREC 2018), Miyazaki, Japan, 7–12 May 2018.

- Cui, Y.; Liu, T.; Che, W.; Xiao, L.; Chen, Z.; Ma, W.; Wang, S.; Hu, G. A Span-Extraction Dataset for Chinese Machine Reading Comprehension. arXiv 2018, arXiv:1810.07366.

- Shao, C.C.; Liu, T.; Lai, Y.; Tseng, Y.; Tsai, S. DRCD: A Chinese machine reading comprehension dataset. arXiv 2018, arXiv:1806.00920.

- Xue, L.; Constant, N.; Roberts, A.; Kale, M.; Al-Rfou, R.; Siddhant, A.; Barua, A.; Raffel, C. mT5: A Massively Multilingual Pre-trained Text-to-Text Transformer. In Proceedings of the 2021 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, 6–11 June 2021; pp. 483–498.

- Ranaldi, L.; Fallucchi, F.; Zanzotto, F.M. Dis-Cover AI Minds to Preserve Human Knowledge. Future Internet 2021, 14, 10.

- Lin, H.; Zhang, P.; Ling, J.; Yang, Z.; Lee, L.K.; Liu, W. PS-Mixer: A Polar-Vector and Strength-Vector Mixer Model for Multimodal Sentiment Analysis. Inf. Process. Manag. 2023, 60, 103229.

- Lee, L.K.; Chui, K.T.; Wang, J.; Fung, Y.C.; Tan, Z. An Improved Cross-Domain Sentiment Analysis Based on a Semi-Supervised Convolutional Neural Network. In Data Mining Approaches for Big Data and Sentiment Analysis in Social Media; IGI Global: Hershey, PA, USA, 2022; pp. 155–170.

- Fung, Y.C.; Lee, L.K.; Chui, K.T.; Cheung, G.H.K.; Tang, C.H.; Wong, S.M. Sentiment Analysis and Summarization of Facebook Posts on News Media. In Data Mining Approaches for Big Data and Sentiment Analysis in Social Media; IGI Global: Hershey, PA, USA, 2022; pp. 142–154.

- Kalady, S.; Elikkottil, A.; Das, R. Natural language question generation using syntax and keywords. In Proceedings of QG2010: The Third Workshop on Question Generation; questiongeneration.org: Pittsburgh, PA, USA, 2010; pp. 5–14.

- Heilman, M.; Smith, N.A. Question Generation via Overgenerating Transformations and Ranking; Carnegie Mellon University, Language Technologies Institute: Pittsburgh, PA, USA, 2009.

- Sutskever, I.; Vinyals, O.; Le, Q.V. Sequence to sequence learning with neural networks. In Proceedings of the 27th International Conference on Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014; pp. 3104–3112.

- Devlin, J.; Chang, M.-W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the NAACL-HLT, Minneapolis, MN, USA, 2–7 June 2019; pp. 4171–4186.

- Raffel, C.; Shazeer, N.; Roberts, A.; Lee, K.; Narang, S.; Matena, M.; Zhou, Y.; Li, W.; Liu, P.J. Exploring the limits of transfer learning with a unified text-to-text transformer. J. Mach. Learn. Res. 2020, 21, 1–67.

| Text | No. of Words | No. of Questions | Time Used (s) | Time Used per Question (s) |
|---|---|---|---|---|
| Text 1 | 305 | 13 | 6.73 | 0.518 |
| Text 2 | 296 | 7 | 4.14 | 0.591 |
| Text 3 | 371 | 11 | 4.96 | 0.451 |
| Text 4 | 243 | 10 | 5.02 | 0.502 |
| Text 5 | 223 | 29 | 13.17 | 0.454 |
| Mean time per question (s) | | | | 0.486 |
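The per-question column is the time used divided by the number of questions. The reported mean of 0.486 s equals total time over total questions (34.02 s / 70 questions). The plain average of the five per-text ratios would instead be about 0.503 s. The pooled figure is lower because it weights Text 5, which has 29 questions and a fast 0.454 s per question, more heavily. The minimal Python sketch below reproduces both figures from the table values only. The function names are illustrative and do not come from the described system.

```python
# Response-time data copied from the table: (text, words, questions, seconds).
ROWS = [
    ("Text 1", 305, 13, 6.73),
    ("Text 2", 296, 7, 4.14),
    ("Text 3", 371, 11, 4.96),
    ("Text 4", 243, 10, 5.02),
    ("Text 5", 223, 29, 13.17),
]


def per_question_times(rows):
    """Seconds per generated question for each text, rounded to 3 decimals."""
    return {name: round(secs / n_q, 3) for name, _words, n_q, secs in rows}


def pooled_mean_time(rows):
    """Total seconds divided by total questions (weights texts by question count)."""
    total_secs = sum(secs for _name, _words, _n_q, secs in rows)
    total_qs = sum(n_q for _name, _words, n_q, _secs in rows)
    return round(total_secs / total_qs, 3)


def unweighted_mean_time(rows):
    """Plain average of the five per-text ratios, for comparison."""
    ratios = [secs / n_q for _name, _words, n_q, secs in rows]
    return round(sum(ratios) / len(ratios), 3)


if __name__ == "__main__":
    print(per_question_times(ROWS))
    print(pooled_mean_time(ROWS))      # 0.486 (34.02 s / 70 questions)
    print(unweighted_mean_time(ROWS))  # 0.503
```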

| No. | Statement | Rating 1 (%) | Rating 2 (%) | Rating 3 (%) | Rating 4 (%) | Rating 5 (%) |
|---|---|---|---|---|---|---|
| 1 | I am satisfied with the quality of questions. | 35.00 | 30.00 | 20.00 | 10.00 | 5.00 |
| 2 | I think the system is easy to use. | 0.00 | 10.00 | 15.00 | 45.00 | 30.00 |
| 3 | I think the system is easy to learn. | 10.00 | 5.00 | 30.00 | 20.00 | 35.00 |
| 4 | The system works the way I expected. | 30.00 | 35.00 | 15.00 | 15.00 | 5.00 |
| 5 | I like the user interface of the system. | 5.00 | 5.00 | 40.00 | 30.00 | 20.00 |
| 6 | Overall, I am satisfied with the system. | 15.00 | 35.00 | 30.00 | 15.00 | 5.00 |
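The survey table reports only percentage distributions. As a worked illustration, the sketch below reduces each distribution to a mean rating and to the share of respondents choosing 4 or 5. It assumes that 1 is the lowest level of agreement and 5 the highest. The table does not label the scale, so that reading is an assumption, as are the helper names `mean_rating` and `top_two_share`.

```python
# Percentage of respondents giving each rating (index 0 -> rating 1, ..., index 4 -> rating 5).
SURVEY = {
    1: [35.0, 30.0, 20.0, 10.0, 5.0],  # satisfied with the quality of questions
    2: [0.0, 10.0, 15.0, 45.0, 30.0],  # easy to use
    3: [10.0, 5.0, 30.0, 20.0, 35.0],  # easy to learn
    4: [30.0, 35.0, 15.0, 15.0, 5.0],  # works the way I expected
    5: [5.0, 5.0, 40.0, 30.0, 20.0],   # like the user interface
    6: [15.0, 35.0, 30.0, 15.0, 5.0],  # overall satisfaction
}


def mean_rating(percentages):
    """Weighted mean rating on a 1-5 scale from percentage shares."""
    if len(percentages) != 5 or abs(sum(percentages) - 100.0) > 1e-6:
        raise ValueError("expected five percentages summing to 100")
    return sum(rating * share for rating, share in zip(range(1, 6), percentages)) / 100.0


def top_two_share(percentages):
    """Percentage of respondents choosing rating 4 or 5."""
    return percentages[3] + percentages[4]


if __name__ == "__main__":
    for item, dist in SURVEY.items():
        print(item, round(mean_rating(dist), 2), top_two_share(dist))
```

If the scale is read this way, ease of use (mean 3.95) and ease of learning (3.65) score highest. Question quality (2.2) and meeting expectations (2.3) score lowest.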

| Type | Field | Example |
|---|---|---|
| What | Content | 違例駕駛記分制度是為了改善道路安全而設的。 The DOPs system is a measure designed to promote safety on the road. |
| | Answer phrase | 為了改善道路安全 to promote safety on the road |
| | Generated question | 違例駕駛記分制度的目的是什麼? What is the purpose of the DOPs system? |
| Who | Content | 外交部发言人汪文斌17日主持例行记者会, Foreign Ministry Spokesperson Wang Wenbin hosted a regular press conference on the 17th |
| | Answer phrase | 汪文斌 Wang Wenbin |
| | Generated question | 哪一个人主持例行记者会? Who hosted a regular press conference? |
| When | Content | 「安心出行」自2020年11月啟用至今, Since the launch of “Leave Home Safe” in November 2020, |
| | Answer phrase | 2020年 the year 2020 |
| | Generated question | 「安心出行」什麼時候啓用至今? When did “Leave Home Safe” launch? |
| Where | Content | 都大物理治療中心, 將於 2022 年 12 月 1 日在賽馬會校園盛大開幕。 The grand opening of the HKMU Physiotherapy Centre Limited at the Jockey Club Campus will take place on 1 December 2022. |
| | Answer phrase | 賽馬會校園 Jockey Club Campus |
| | Generated question | 都大物理治療中心在哪兒舉行開幕? Where will the opening ceremony of the HKMU Physiotherapy Centre Limited be held? |
| Which | Content | 醫務衞生局局長盧寵茂表示, Lo, Director of the Medical and Health Bureau, said |
| | Answer phrase | 醫務衞生局 Medical and Health Bureau |
| | Generated question | 盧寵茂是哪一個單位的負責人? Which unit is Lo in charge of? |
| Why | Content | 疫苗通行證仍是重要措施, 包括可鼓勵市民加速接種疫苗, 因此市民要進入一些處所仍要出示疫苗通行證 The vaccine pass is still an important measure, as it encourages citizens to speed up vaccination; therefore, citizens still have to show the vaccine pass to enter some premises. |
| | Answer phrase | 可鼓勵市民加速接種疫苗 encouraging citizens to speed up vaccination |
| | Generated question | 爲何市民要進入一些處所仍要出示疫苗通行證? Why must citizens still show the vaccine pass to enter some premises? |
| How | Content | 北海道新幹綫無法與東海道新幹綫、山陽新幹綫、九州新幹綫直通運行, 需在東京站轉乘。 The Hokkaido Shinkansen cannot run through to the Tokaido, Sanyo, and Kyushu Shinkansen lines, so passengers must transfer at Tokyo Station. |
| | Answer phrase | 需在東京站轉乘 transfer at Tokyo Station |
| | Generated question | 北海道新幹線如何轉乘東海道新幹線、山陽新幹線? How does one transfer from the Hokkaido Shinkansen to the Tokaido and Sanyo Shinkansen? |

| Defect | ||

|---|---|---|

| Wrong question word | Content | 都大物理治療中心, 將於 2022 年 12 月 1 日在賽馬會校園盛大開幕。 The grand opening of the HKMU Physiotherapy Centre Limited in the Jockey Club Campus will be on 1 December 2022. |

| Answer phrase | 都大物理治療中心 HKMU Physiotherapy Centre Limited | |

| Generated question | 哪一個實驗室將於2022年12月1 在賽馬會校園盛大開幕? Which laboratory will be opened on 1st December 2022? | |

| Explanation | The machine learner misunderstood that the HKMU Physiotherapy Centre Limited is a laboratory. | |

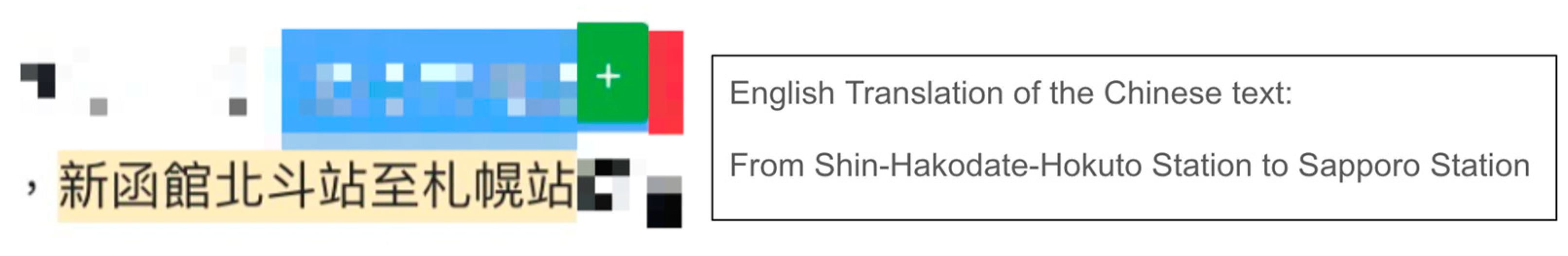

| Incorrect answer | Content | 現行通車路段為新青森站行經青函隧道至新函館北斗站, 新函館北斗站至札幌站尚在興建中, 預定2031年完工啟用。 The current traffic section is from Shin-Aomori Station through Seikan Tunnel to Shin-Hakodate-Hokuto Station. The section from Shin-Hakodate-Hokuto Station to Sapporo Station is still under construction and is scheduled to be completed and opened in 2031. |

| Answer phrase | 新函館北斗站至札幌站 From Shin-Hakodate-Hokuto Station to Sapporo Station | |

| Generated question | 現行通車路段爲新青森站行經青函隧道至哪站? Which station the current traffic section is from Shin-Aomori Station through Seikan Tunnel to? | |

| Explanation | The source is too long. The machine learner is not able to realize that the former part and the later part of the section are under different construction stages. | |

| Vague | Content | 駕駛人如在兩年內被記滿 15 分或以上, 法庭可以取消他持有或領取駕駛執照的資格一段時期。 If 15 or more DOPs have been incurred within 2 years, the driver can be disqualified by a court from holding or obtaining a driving license for a certain period. |

| Answer phrase | 15 分或以上 15 or more DOPs | |

| Generated Question | 駕駛人若在兩年內被記滿多少? How many DOPs have been incurred within 2 years? | |

| Explanation | The implication part of the content is not included in the question, leading it to be vague. | |

| Does not make sense | Content | 醫務衞生局局長盧寵茂表示, 當局決定撤銷市民掃瞄「安心出行」的相關法例及行政安排 Lo, director of the Medical and Health Bureau, said that the authorities have decided to revoke the relevant laws and administrative arrangements for citizens to scan “Leave Home Safe”. |

| Answer phrase | 撤銷市民掃瞄「安心出行」的相關法例及行政安排 revoke the relevant laws and administrative arrangements for citizens to scan “Leave Home Safe” | |

| Generated Question | 盧寵茂爲何要撤銷市民掃瞄「安心出行」的相關法例及行政安排? Why Lo has decided to revoke the relevant laws and administrative arrangements for citizens to scan “Leave Home Safe” | |

| Explanation | The machine learner copied the answer once as the question. | |

| Ungrammatical | Content | 北海道新幹綫除了使北海道的聯外交通更加便捷, 也讓四國以外的日本本土3島, 都可透過新幹綫相互連通。 In addition to making the transportation of Hokkaido more convenient, it also allows the three mainland Japanese islands other than Shikoku to travel through the Shinkansen. |

| Answer phrase | 四國以外的日本本土3島 the three mainland Japanese islands other than Shikoku | |

| Generated Question | 北海道新幹線除了使北海道的聯外交通更加便捷還有什麼? What else does the Hokkaido Shinkansen do besides making transportation in Hokkaido more convenient? | |

| Explanation | The machine learner does not know what the three mainland Japanese islands are, other than Shikoku. |
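The “Does not make sense” case points to a cheap post-generation check: discard any question that already contains its answer phrase verbatim. The sketch below is a hypothetical filter and is not part of the system described in this paper. The names `leaks_answer` and `filter_leaky` are illustrative. A plain substring test will miss paraphrased leaks, and it is sensitive to variant characters such as 為 and 爲.

```python
def leaks_answer(question: str, answer_phrase: str) -> bool:
    """Return True if the (non-empty) answer phrase appears verbatim in the question."""
    phrase = answer_phrase.strip()
    return bool(phrase) and phrase in question


def filter_leaky(pairs):
    """Keep only (question, answer_phrase) pairs whose question does not contain its answer."""
    return [(q, a) for q, a in pairs if not leaks_answer(q, a)]


# Two generated questions taken from the defect and question-type tables, with their answer phrases.
CANDIDATES = [
    ("盧寵茂爲何要撤銷市民掃瞄「安心出行」的相關法例及行政安排?",
     "撤銷市民掃瞄「安心出行」的相關法例及行政安排"),
    ("違例駕駛記分制度的目的是什麼?", "為了改善道路安全"),
]

if __name__ == "__main__":
    for question, answer in filter_leaky(CANDIDATES):
        print(question)  # only the second question survives the filter
```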

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Fung, Y.-C.; Lee, L.-K.; Chui, K.T. An Automatic Question Generator for Chinese Comprehension. Inventions 2023, 8, 31. https://doi.org/10.3390/inventions8010031


Chicago/Turabian Style: Fung, Yin-Chun, Lap-Kei Lee, and Kwok Tai Chui. 2023. "An Automatic Question Generator for Chinese Comprehension" Inventions 8, no. 1: 31. https://doi.org/10.3390/inventions8010031
