Abstract

We aimed to compare the performance and interobserver agreement of radiologists who segmented images manually with those of radiologists assisted by automatic segmentation. We further aimed to reduce interobserver variability and improve the consistency of radiomics features. This retrospective study included 327 patients diagnosed with prostate cancer from September 2016 to June 2018. Images from 228 patients were used to construct the automatic segmentation model, and images from the remaining 99 were used for testing. First, four radiologists with varying levels of experience retrospectively and manually segmented the prostate on axial T2-weighted fat-suppressed magnetic resonance images of the 99 test patients. Two weeks later, they repeated the segmentation with automatic segmentation assistance. The PyRadiomics software package v3.1.0 was used to extract texture features. The Dice coefficient, which measures the spatial overlap between delineations, and the intraclass correlation coefficient (ICC) were used to evaluate segmentation performance and the interobserver consistency of prostate radiomics features, respectively. The Wilcoxon rank sum test was used to compare paired samples, with the significance level set at p < 0.05. Across all 99 prostate segmentations, both the manual and the automatic segmentation-assisted results of the senior group were significantly better than those of the junior group (p < 0.05). Automatic segmentation-assisted annotation was more consistent than manual segmentation (p < 0.05), with an average ICC of >0.85. With automatic segmentation assistance, the junior radiologists performed similarly to senior radiologists performing manual segmentation. The ICC of the radiomics features increased to excellent consistency (0.925 [0.888–0.950]). Overall, automatic segmentation-assisted annotation provided better results than manual segmentation by radiologists.
Our findings indicate that automatic segmentation annotation helps reduce variability in the perception and interpretation between radiologists with different experience levels and ensures the stability of radiomics features.

1. Introduction

Prostate cancer is the second leading cause of cancer-related death in men. In 2021, official cancer statistics for the United States estimated 248,530 new cases of prostate cancer, accounting for 26% of all new cancer cases in men [1]. Many studies have contributed to the early screening and detection of prostate cancer [2,3,4]. In recent years, artificial intelligence (AI)-based techniques, such as deep learning and machine learning, have made screening, diagnosis, prediction of aggressiveness, and prognostic assessment of prostate cancer faster and more accurate [2,3,4,5].

Prostate segmentation is a critical step in the automated detection or classification of prostate cancer using AI algorithms [6,7,8]. Accurate manual segmentation of medical images is labor-intensive and should be performed by radiologists or physicians with extensive experience, because radiologists with different levels of experience differ in how they recognize organ boundaries, especially on images of small organs or lesions. Two recent studies exploring methods of eliminating interobserver variation [9,10] had senior radiologists perform quality control after initial manual segmentation by junior or less-experienced radiologists, and found that interobserver variation persisted among the senior radiologists even with this workflow.

Many approaches have been proposed to implement semi-automatic or fully automatic segmentation in medical imaging to improve accuracy efficiently. Moreover, automatic segmentation has become popular and freely accessible through the MONAI framework, an open-source platform for deep learning in medical imaging [11]. Herein, we developed an automatic segmentation-based workflow for prostate segmentation on T2-weighted fat-suppressed images and investigated whether this type of AI-based workflow eliminates differences in consistency among radiologists.

2. Materials and Methods

2.1. Study Population

This retrospective study was approved by the Institutional Review Board of our hospital and conducted in accordance with the tenets of the Declaration of Helsinki of 1975 (revised in 2013). The requirement for informed consent was waived owing to the retrospective study design. Samples were collected from patients with prostate cancer who underwent magnetic resonance imaging (MRI) at our hospital between September 2016 and June 2018. The inclusion criteria were (1) preoperative MRI examination and (2) no prostate biopsy, surgery, radiotherapy, or endocrine therapy before the MRI examination. Patients who had undergone catheter placement or previous treatment for prostate cancer, or whose MR images exhibited artifacts, were excluded from this study (Figure 1).

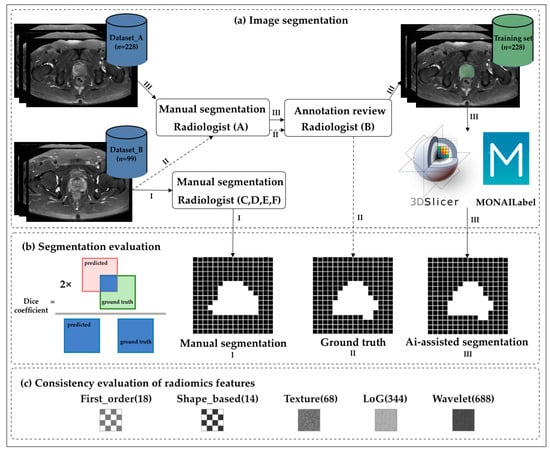

Figure 1.

Flowchart of the study. (a) The transverse axial fast spin echo T2-weighted images of the prostate were segmented manually and automatically by radiologists with different levels of experience. (b) The Dice coefficient was used to accurately measure the spatial overlap of manually delineated images. (c) The intraclass correlation coefficient was used to evaluate the stability of the radiomics features. The numbers in the figure indicate the number of features.

2.2. Dataset Description and MR Image Acquisition

All images were acquired on a 3T MR system (MAGNETOM Skyra, Siemens Healthcare, Erlangen, Germany) using a standard 18-channel phased-array body coil and a 32-channel integrated spine coil. Patients were given a small amount of food the day before the examination, after which they fasted for 4–6 h and emptied their bowel and bladder as much as possible before the examination. During the examination, the coil was secured with a band to minimize motion artifacts caused by the patient’s breathing. Axial fat-suppressed T2-weighted fast spin-echo (T2FS) imaging was performed with a slice thickness of 5–7 mm, no interslice gap, and a field of view of 12 × 12 cm², covering the entire prostate and seminal vesicles. The MRI data were divided into two datasets: Dataset A for training the automatic segmentation model and Dataset B for testing. Datasets A and B contained T2FS images of 228 and 99 patients with prostate cancer, respectively (Table 1).

Table 1.

Clinical characteristics of the patients in Datasets A and B.

2.3. Manual Segmentation

Six radiologists from two centers annotated the prostate on T2FS images. Two chief radiologists (Radiologists A and B) with more than 15 years of experience in abdominopelvic diagnosis performed the manual segmentation used as the reference standard. The T2FS images of all 327 patients were first manually segmented by Radiologist A at one medical center and then confirmed by Radiologist B at the second medical center. Disagreements were resolved by discussion until consensus was reached.

After the reference segmentations were confirmed, two senior radiologists with more than 8 years of experience (Radiologists C and D) and two junior radiologists with 3 years of residency training in radiology (Radiologists E and F) from the second center segmented the images of the 99 patients in Dataset B, first manually and then, 2 weeks later, with the assistance of the automatic segmentation model. Manual segmentation of the prostate on all T2FS images was performed using open-source software (3D Slicer, version 4.8.1; National Institutes of Health; https://www.slicer.org).

2.4. Automatic Computer-Aided Segmentation

MONAI_Label is a free, open-source intelligent image annotation and learning tool. Its modules for 3D Slicer enable users to create annotated datasets and build AI-based annotation models for clinical evaluation. To mitigate potential bias arising from the small number of readers, we developed an assisted prostate segmentation model on the 3D Slicer platform based on MONAI_Label and trained it exclusively on Dataset A (Figure 2). In practice, sufficiently large, high-quality, high-fidelity datasets are rarely available, and acquiring ideal datasets is particularly challenging. We therefore used data augmentation to reduce reliance on training data, which helps develop accurate AI models more quickly. The model uses MONAI_Label’s built-in API to flip, rotate, crop, scale, translate, and jitter the images to expand the training samples.
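The augmentation step can be illustrated with a minimal NumPy sketch. This is not the MONAI_Label API. `augment_pair` is a hypothetical stand-in that applies random flips, 90° in-plane rotations, translations, and intensity scaling with small additive noise ("jitter"). All probabilities and ranges are arbitrary assumptions. The point it illustrates is that spatial transforms must be applied identically to the image and its label mask, while intensity perturbations apply to the image only. Cropping and scaling are omitted for brevity.

```python
import numpy as np


def augment_pair(image, mask, rng):
    """Randomly augment a 3D image (z, y, x) and its label mask together.

    Hypothetical illustration only; probabilities and ranges are assumptions.
    """
    img = image.astype(np.float32, copy=True)
    lab = mask.copy()

    # Random flip along each spatial axis (p = 0.5), applied to both arrays.
    for axis in range(3):
        if rng.random() < 0.5:
            img = np.flip(img, axis=axis)
            lab = np.flip(lab, axis=axis)

    # Random in-plane rotation by k * 90 degrees (y-x plane), same k for both.
    k = int(rng.integers(0, 4))
    img = np.rot90(img, k=k, axes=(1, 2))
    lab = np.rot90(lab, k=k, axes=(1, 2))

    # Random in-plane translation of up to 4 voxels (circular shift).
    dy, dx = (int(s) for s in rng.integers(-4, 5, size=2))
    img = np.roll(img, shift=(dy, dx), axis=(1, 2))
    lab = np.roll(lab, shift=(dy, dx), axis=(1, 2))

    # Intensity-only perturbations: global scaling plus small Gaussian jitter.
    img = img * np.float32(rng.uniform(0.9, 1.1))
    img = img + rng.normal(0.0, 0.01, size=img.shape).astype(np.float32)
    return img, lab
```

Because each spatial operation here only permutes voxels, the mask's foreground voxel count is unchanged. This is a quick sanity check that labels were not corrupted.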

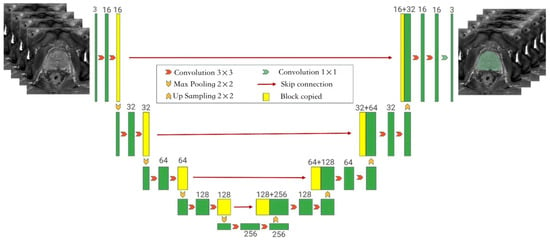

Figure 2.

The framework of the auto-segmentation model, showing the MONAI 3D U-Net structure used in this paper for prostate segmentation. The left side of the network acts as an encoder that extracts features at different levels, and the right side acts as a decoder that aggregates features and produces segmentation masks. In the encoding phase, the encoder extracts features at multiple scales and generates fine-to-coarse feature maps. Fine feature maps contain lower-level features but more spatial information, whereas coarse feature maps contain higher-level features but less spatial information. The input is a 128 × 128 × 128 three-channel volume, and the output is a 128 × 128 × 128 volume. Each encoder level contains two 3 × 3 × 3 convolutions, each followed by batch normalization (BN) and a ReLU activation, and then a 2 × 2 × 2 max pooling operation with a stride of 2. Each decoder level has a 2 × 2 × 2 up-convolution with a stride of 2, followed by two 3 × 3 × 3 convolutions with BN + ReLU activation. In the final layer, a 1 × 1 × 1 convolution reduces the number of output channels to the number of labels, followed by a softmax activation.
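The spatial sizes through this encoder-decoder can be traced with the standard output-size formulas. The sketch rests on two assumptions: every 3 × 3 × 3 convolution uses a padding of 1 ("same" padding), and the network has four resolution levels, which the caption does not state. The helper `trace_unet` is hypothetical and does not build the network. It only checks that a 128-voxel input returns to 128 voxels at the output.

```python
def conv_out(size, kernel=3, stride=1, padding=1):
    """Output length along one axis for a convolution or pooling window."""
    return (size + 2 * padding - kernel) // stride + 1


def up_conv_out(size, kernel=2, stride=2):
    """Output length along one axis for a transposed (up-)convolution, no padding."""
    return (size - 1) * stride + kernel


def trace_unet(input_size=128, depth=4):
    """Return (encoder sizes, decoder sizes, output size) for an assumed U-Net."""
    s = input_size
    encoder = []
    for level in range(depth):
        s = conv_out(conv_out(s))  # two 3x3x3 convolutions, padding 1
        encoder.append(s)
        if level < depth - 1:
            s = conv_out(s, kernel=2, stride=2, padding=0)  # 2x2x2 max pool, stride 2
    decoder = []
    for _ in range(depth - 1):
        s = up_conv_out(s)          # 2x2x2 up-convolution, stride 2
        s = conv_out(conv_out(s))   # two 3x3x3 convolutions, padding 1
        decoder.append(s)
    out = conv_out(s, kernel=1, padding=0)  # final 1x1x1 convolution
    return encoder, decoder, out
```

With these assumptions, the input size must be divisible by 2^(depth − 1) for the output to match the input exactly. 128 satisfies this for depths up to 8.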

2.5. Data Analysis and Statistical Methods

Evaluation of the Segmentation Model

All statistical analyses were performed using R (version 3.6.3), Python (version 3.9.7), and SPSS (version 22). The Wilcoxon rank sum test was used to compare paired samples, with statistical significance set at p < 0.05. In the interobserver cohort, the Dice coefficient was used to quantify the spatial overlap between the delineated segmentations. A Dice coefficient of 0 indicates no overlap, and a Dice coefficient of 1 indicates complete overlap.
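For two binary masks A and B, the Dice coefficient is Dice(A, B) = 2|A ∩ B| / (|A| + |B|), where |·| counts foreground voxels. A minimal NumPy sketch follows. Returning 1.0 when both masks are empty is our assumption, since conventions for that edge case differ.

```python
import numpy as np


def dice_coefficient(mask_a, mask_b):
    """Dice overlap between two binary masks of identical shape."""
    a = np.asarray(mask_a, dtype=bool)
    b = np.asarray(mask_b, dtype=bool)
    if a.shape != b.shape:
        raise ValueError("masks must have the same shape")
    total = int(a.sum()) + int(b.sum())
    if total == 0:
        return 1.0  # assumed convention: two empty masks agree perfectly
    intersection = int(np.logical_and(a, b).sum())
    return 2.0 * intersection / total
```

For example, two 4 × 4 masks that each cover two rows and share one row overlap in 4 of 8 + 8 voxels, giving a Dice coefficient of 0.5.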

2.6. Consistency Evaluation of the Radiomics Features

PyRadiomics was used to extract the radiomics features of Dataset B. We analyzed the first-order, texture, Laplacian of Gaussian (LoG), morphological (shape), and wavelet features extracted with the PyRadiomics software package. The intraclass correlation coefficient (ICC) was used to evaluate feature stability. An ICC of 0.75 to <0.90 was considered good reproducibility, and an ICC of ≥0.90 was considered excellent reproducibility [12]. Finally, we compared the consistency of the features extracted after manual segmentation by the different radiologists with that of the features extracted after automatic segmentation-assisted annotation.
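The text does not state which ICC form was used. The sketch below assumes one common choice, ICC(2,1): two-way random effects, absolute agreement, single rater (Shrout and Fleiss). It is computed from a matrix with one row per patient and one column per segmentation, for example one column per radiologist. The result is then mapped to the categories defined above. In a radiomics analysis, it would be evaluated once per feature.

```python
import numpy as np


def icc_2_1(ratings):
    """ICC(2,1) for a (n_subjects, k_raters) matrix of measurements."""
    x = np.asarray(ratings, dtype=float)
    n, k = x.shape
    grand = x.mean()
    ss_rows = k * ((x.mean(axis=1) - grand) ** 2).sum()   # between subjects
    ss_cols = n * ((x.mean(axis=0) - grand) ** 2).sum()   # between raters
    ss_total = ((x - grand) ** 2).sum()
    ss_error = ss_total - ss_rows - ss_cols               # residual
    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )


def icc_category(icc):
    """Map an ICC to the thresholds used in this study (0.75 and 0.90)."""
    if icc >= 0.90:
        return "excellent"
    if icc >= 0.75:
        return "good"
    return "below good"
```

On the six-subject, four-rater example in Shrout and Fleiss (1979), this gives approximately 0.29. Identical columns (perfect agreement) give exactly 1.0.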

3. Results

3.1. Evaluation of the Automatic Segmentation Model

The auto-segmentation model was trained on T2FS scans from Dataset A, comprising images of 228 patients with prostate cancer (mean age, 69 years). Of these data, 80% were used for training and 20% for validation, giving 183 patients in the training set and 45 in the validation set. The 99 scans from Dataset B (mean age, 79 years) formed the testing set. Table 1 lists the basic characteristics of the study population.
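An 80/20 split of 228 patients gives 182.4 patients for training, so rounding is needed to obtain the reported 183/45 split. The sketch below assumes rounding up. It also splits at the patient level so that no patient contributes scans to both sets. `split_patients` and its seed are illustrative and are not the authors' code.

```python
import math
import random


def split_patients(patient_ids, train_fraction=0.8, seed=0):
    """Shuffle patient IDs and split them into training and validation lists.

    Rounds the training count up (an assumption that reproduces 183/45 for 228).
    """
    ids = list(patient_ids)
    random.Random(seed).shuffle(ids)
    n_train = math.ceil(train_fraction * len(ids))
    return ids[:n_train], ids[n_train:]
```

Usage: `train, val = split_patients(range(228))` yields 183 training and 45 validation patients.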

The model was trained for 300 epochs with a learning rate of 1 × 10⁻⁴, a batch size of 1, and the Adam optimizer, on a server with a graphics processing unit (GPU). Random affine transformations were used for data augmentation. The training results of the automatic segmentation model are shown in Table 2. For comparison, we also trained an nnUNet model on the same data (Table 2). Overall, the average Dice coefficient of the auto-segmentation model on the testing set was 0.831. Both segmentation models achieved high mean Dice coefficients (>0.9) on the training set, and the Dice coefficients of both models decreased on the testing set.

Table 2.

Performance of the segmentation model in the training, validation, and testing sets.

3.2. Evaluation of the Consistency of Image Segmentation by the Radiologists

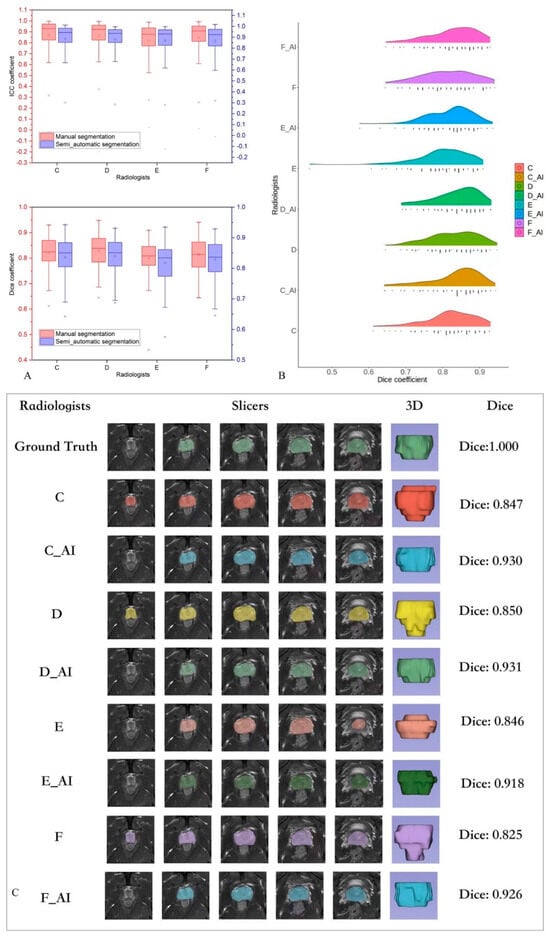

Table 3 presents the segmentation performance of the four radiologists on the testing set. Across all 99 cases, both the manual and the automatic segmentation-assisted results of the senior group were significantly better than those of the junior group (p < 0.05). Figure 3A shows a box plot of the segmentation performance of the four radiologists on the testing set. All radiologists achieved higher Dice coefficients with automatic segmentation assistance than with manual segmentation, except for one senior radiologist, whose improvement was not significant (p = 0.06). We further divided the patients in the testing set into a low-grade group (Grades 1 and 2) and a high-grade group (Grades 3, 4, and 5). For both manual and assisted segmentation, the radiologists’ results did not differ significantly between the low- and high-grade groups (p > 0.05). In the low-grade group, the model did not significantly improve the manual segmentation results (p > 0.05). After automatic segmentation assistance, the performance of the junior radiologists did not differ significantly from that of either senior radiologist (Table 4).
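These comparisons pair each patient's Dice coefficient under manual segmentation with the same patient's Dice coefficient under assisted segmentation. The paired form of the Wilcoxon test is the signed-rank test. The sketch below assumes that form and uses the large-sample normal approximation: zero differences are dropped, tied absolute differences get average ranks, the variance is tie-corrected, and no continuity correction is applied. It is illustrative only. For small samples, an exact test such as `scipy.stats.wilcoxon` would be preferable.

```python
import math


def _average_ranks(values):
    """1-based ranks of `values`, with tied values sharing their mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of the 1-based ranks i+1 .. j+1
        for m in range(i, j + 1):
            ranks[order[m]] = avg
        i = j + 1
    return ranks


def wilcoxon_signed_rank(x, y):
    """Return (W+, z, two-sided p) for paired samples x and y."""
    if len(x) != len(y):
        raise ValueError("x and y must be paired (same length)")
    d = [a - b for a, b in zip(x, y) if a != b]  # drop zero differences
    n = len(d)
    if n == 0:
        raise ValueError("all paired differences are zero")
    abs_d = [abs(v) for v in d]
    ranks = _average_ranks(abs_d)
    w_plus = sum(r for r, v in zip(ranks, d) if v > 0)
    mean = n * (n + 1) / 4
    counts = {}
    for v in abs_d:
        counts[v] = counts.get(v, 0) + 1
    tie_correction = sum(t ** 3 - t for t in counts.values()) / 48
    var = n * (n + 1) * (2 * n + 1) / 24 - tie_correction
    z = (w_plus - mean) / math.sqrt(var)
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal p-value
    return w_plus, z, p
```

When every assisted Dice value exceeds its manual counterpart, W+ reaches its maximum n(n + 1)/2. For eight pairs, this gives p ≈ 0.012 under the normal approximation.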

Table 3.

Dice coefficient and ICC of image segmentation by radiologists with different levels of seniority.

Figure 3.

(A) Box plot of the segmentation performance of the four radiologists on the testing set. (B) Performance of all the radiologists: automatic segmentation-assisted annotation versus manual segmentation. (C) Example case showing one radiologist’s segmentation and the corresponding automatic segmentation-assisted annotation.

Table 4.

Dice comparison between manual and semi-auto segmentation from different radiologists.

3.3. Evaluation of Interobserver Consistency

Table 3 shows the consistency of the radiomics features extracted from the segmentations of the four radiologists. Consistency was higher for automatic segmentation-assisted annotations than for manual segmentations (p < 0.05), with an average ICC of >0.85. Figure 3A shows a box plot of the segmentation agreement among the four radiologists on the testing set.

3.4. Consistency Evaluation of the Radiomics Features

Among the five groups of radiomics features (Table 5), the first-order features performed best and the shape-based features performed worst in reaching the “excellent” ICC category. Overall, fewer features reached “excellent” ICC after manual segmentation than after automatic segmentation-assisted annotation.
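Per-group counts of the kind reported in Table 5 can be tallied from feature names. The sketch assumes PyRadiomics' default naming, `<imageType>_<featureClass>_<featureName>` (e.g., `original_glcm_Contrast` or `wavelet-LLH_firstorder_Mean`). It uses our own mapping of feature classes onto the five groups named in the text, and it skips PyRadiomics' `diagnostics_*` entries. `feature_group` and `count_excellent` are hypothetical helpers.

```python
from collections import Counter

# PyRadiomics texture classes (assumed to form the "texture" group).
TEXTURE_CLASSES = {"glcm", "glrlm", "glszm", "gldm", "ngtdm"}


def feature_group(name):
    """Map a PyRadiomics-style feature name to one of five groups."""
    image_type, feature_class, _ = name.split("_", 2)
    if image_type.startswith("wavelet"):
        return "wavelet"
    if image_type.startswith("log"):
        return "LoG"
    if feature_class.startswith("shape"):
        return "shape"
    if feature_class == "firstorder":
        return "first-order"
    if feature_class in TEXTURE_CLASSES:
        return "texture"
    return "other"


def count_excellent(iccs, threshold=0.90):
    """Return {group: (n_excellent, n_total)} from a {feature_name: ICC} dict."""
    totals, excellent = Counter(), Counter()
    for name, icc in iccs.items():
        if name.startswith("diagnostics"):
            continue  # extraction metadata, not a feature
        group = feature_group(name)
        totals[group] += 1
        if icc >= threshold:
            excellent[group] += 1
    return {g: (excellent[g], totals[g]) for g in totals}
```

Running `count_excellent` once on the ICCs from manual segmentations and once on those from assisted annotations gives the two sets of per-group counts to compare.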

Table 5.

Consistency results of radiomics features among radiologists with both manual and semi-auto segmentation.

4. Discussion

In this study, our trained automatic segmentation model achieved a median Dice coefficient of 0.850 (0.568, 0.940) on T2FS imaging and was efficient, requiring 11 h, compared with nnUNet (median Dice coefficient, 0.848 [0.667, 0.911]), which required 125 h. This automatic segmentation model is essentially a standard convolutional neural network (i.e., U-Net) [11,13,14]. Its performance metrics were better than those of all the manual segmentations performed by the radiologists in this study. The model’s performance was close to that of senior radiologists using assisted annotation, suggesting that it could serve as a tool supporting clinicians’ workflow for the accurate diagnosis of prostate cancer.

Moreover, consistency improved significantly with automatic segmentation assistance compared with manual segmentation, and the ICC of senior radiologists using automatic segmentation assistance increased to excellent consistency (0.925 [0.888–0.950], Table 6). To our knowledge, these findings have not been reported in previous studies. In our sample, the Dice coefficients and ICCs of both senior and junior radiologists improved significantly with automatic segmentation assistance compared with manual segmentation. In the high-grade group (Grades 3, 4, and 5) of Dataset B (n = 61), the Dice coefficients of three radiologists differed significantly between automatic segmentation-assisted annotation and manual segmentation. These findings suggest that the prostate is more difficult to segment in the higher-grade group, which may have led to greater variability in manual segmentation than in the lower-grade group.

Table 6.

Consistency evaluation of segmentation by different radiologists.

This study demonstrates that, with automatic segmentation assistance, the performance of junior radiologists was similar to that of senior radiologists, whereas with manual segmentation, one junior radiologist performed significantly worse than the senior radiologists (Table 4). This indicates that automatic segmentation annotation could reduce variability in perception among radiologists with different levels of experience, who may provide different interpretations or ratings [15,16,17]. Such variability is a critical issue in image segmentation. However, our automatic segmentation annotation procedure did not eliminate the variability in perception and interpretation between junior and senior radiologists. We found a significant difference in Dice coefficients between junior and senior radiologists even with automatic segmentation assistance. This indicates that radiologists may still perceive and interpret the area predicted by the automatic segmentation model differently based on their own experience (Figure 3).

AI-based medical image analysis usually requires senior radiologists or physicians to manually segment or confirm structures on medical images as the reference standard or ground truth [18]. Manual segmentation is labor-intensive and time-consuming for senior physicians, and employing more than one senior physician for large data-based studies is expensive. Our findings suggest that the auto-segmentation model can efficiently accomplish this type of image segmentation, provided that its output is confirmed by senior physicians.

Radiomics features have been reported to be unstable across region-of-interest sizes and volumes on computed tomography and MRI, even in a homogeneous phantom without texture differences [19,20]. Ensuring the stability of radiomics features is crucial for accurate image-based prognostication and for the external generalization of prognostic models [21,22,23,24,25,26,27,28,29,30,31]. In this study, ICCs were used to evaluate the repeatability and reproducibility of the radiomics features. A MONAI_Label-based automatic prostate segmentation system was established to help guide the selection of stable radiomics features. Our results show that the ICC for all features increased to ≥0.9 after auto-segmentation-assisted annotation. This indicates that the auto-segmentation model can help reduce segmentation variance among radiologists and thereby substantially increase the number of reproducible prostate radiomics features (Table 4).

Limitations

This study has certain limitations. First, this was a single-institution retrospective study with a limited number of patients; thus, it may not be representative of other institutions. However, the size of our cohort is very similar to other cohorts reported in the literature, which highlights the urgent need for radiomics studies with larger cohorts. In addition, this study focused on prostate cancer; thus, its applicability to other tumor sites has not been demonstrated. Despite the validation of the PyRadiomics platform, the results may differ from those with other radiomics feature extraction platforms. Finally, although we used ICC classification cutoffs commonly used in the literature (0.75 and 0.9) [12], these may not be ideal thresholds for feature inclusion in a prognostic model. Thus, clinically relevant thresholds for the future development of radiomics signature biomarkers for prostate cancer are unclear. Whether the repeatability of individual features significantly impacts the overall performance of a prediction model combining multiple features remains to be further investigated. Despite its limitations, our study systematically assessed the reproducibility of MRI-based radiomics in patients with prostate cancer, which has rarely been performed. Future research should analyze the correlation between radiomics features and clinical variables to reveal the most suitable radiomics features that should be included in prognostic models.

5. Conclusions

Our proposed auto-segmentation model performed better than radiologists performing manual segmentation, and automatic segmentation-assisted annotation outperformed manual segmentation. Automatic segmentation annotation improved the image segmentation workflow and reduced variability in perception and interpretation among radiologists with different levels of experience. Furthermore, auto-segmentation-assisted annotation helps ensure the stability of radiomics features. A large-scale, multicenter study is needed to determine whether these results can be generalized.

Author Contributions

Conceptualization, L.J., M.L. and D.G.; Methodology, Z.M., L.J., H.L. and D.G.; Software, Z.M.; Formal analysis, Z.M. and N.Y.; Investigation, L.J.; Resources, L.J. and M.L.; Data curation, L.J., P.G., D.L., N.Y., F.G. and M.L.; Writing—original draft, L.J.; Writing—review and editing, L.J., M.L. and D.G.; Supervision, M.L. and D.G. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Medical Engineering Joint Fund of Fudan University (grant number, yg2022-22), Shanghai Key Lab of Forensic Medicine, Key Lab of Forensic Science, Ministry of Justice, China (Academy of Forensic Science) (grant number, KF202113), Youth Medical Talents-Medical Imaging Practitioner Program (grant number, AB83030002019004), Science and Technology Planning Project of Shanghai Science and Technology Commission (grant number, 22Y11910700), Health Commission of Shanghai (grant number, 2018ZHYL0103), National Natural Science Foundation of China (grant number, 61976238), Shanghai “Rising Stars of Medical Talent” Youth Development Program “Outstanding Youth Medical Talents” (SHWJRS [2021]-99), Emerging Talent Program (XXRC2213) and Leading Talent Program (LJRC2202) of Huadong Hospital, and Excellent Academic Leaders of Shanghai (2022XD042). The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki and approved by the Institutional Review Board (or Ethics Committee) of Huashan Hospital (Approval No. 2023-489).

Informed Consent Statement

The need for obtaining informed patient consent was waived due to the retrospective nature of the study.

Data Availability Statement

The data are available from the corresponding author upon reasonable request.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Siegel, R.L.; Miller, K.D.; Fuchs, H.E.; Jemal, A. Cancer statistics, 2021. CA Cancer J. Clin. 2021, 71, 7–33.

2. Cao, R.; Mohammadian Bajgiran, A.; Afshari Mirak, S.; Shakeri, S.; Zhong, X.; Enzmann, D.; Raman, S.; Sung, K. Joint prostate cancer detection and Gleason score prediction in mp-MRI via FocalNet. IEEE Trans. Med. Imaging 2019, 38, 2496–2506.

3. Hectors, S.J.; Cherny, M.; Yadav, K.; Beksaç, A.T.; Thulasidass, H.; Lewis, S.; Davicioni, E.; Wang, P.; Tewari, A.K.; Taouli, B. Radiomics features measured with multiparametric magnetic resonance imaging predict prostate cancer aggressiveness. J. Urol. 2019, 202, 498–505.

4. Deniffel, D.; Salinas, E.; Ientilucci, M.; Evans, A.J.; Fleshner, N.; Ghai, S.; Hamilton, R.; Roberts, A.; Toi, A.; van der Kwast, T.; et al. Does the visibility of grade group 1 prostate cancer on baseline multiparametric magnetic resonance imaging impact clinical outcomes? J. Urol. 2020, 204, 1187–1194.

5. Vente, C.; Vos, P.; Hosseinzadeh, M.; Pluim, J.; Veta, M. Deep learning regression for prostate cancer detection and grading in bi-parametric MRI. IEEE Trans. Biomed. Eng. 2021, 68, 374–383.

6. Penzkofer, T.; Padhani, A.R.; Turkbey, B.; Haider, M.A.; Huisman, H.; Walz, J.; Salomon, G.; Schoots, I.G.; Richenberg, J.; Villeirs, G.; et al. ESUR/ESUI position paper: Developing artificial intelligence for precision diagnosis of prostate cancer using magnetic resonance imaging. Eur. Radiol. 2021, 31, 9567–9578.

7. Schelb, P.; Wang, X.; Radtke, J.P.; Wiesenfarth, M.; Kickingereder, P.; Stenzinger, A.; Hohenfellner, M.; Schlemmer, H.P.; Maier-Hein, K.H.; Bonekamp, D. Simulated clinical deployment of fully automatic deep learning for clinical prostate MRI assessment. Eur. Radiol. 2021, 31, 302–313.

8. Rouvière, O.; Moldovan, P.C.; Vlachomitrou, A.; Gouttard, S.; Riche, B.; Groth, A.; Rabotnikov, M.; Ruffion, A.; Colombel, M.; Crouzet, S.; et al. Combined model-based and deep learning-based automated 3D zonal segmentation of the prostate on T2-weighted MR images: Clinical evaluation. Eur. Radiol. 2022, 32, 3248–3259.

9. Becker, A.S.; Chaitanya, K.; Schawkat, K.; Muehlematter, U.J.; Hötker, A.M.; Konukoglu, E.; Donati, O.F. Variability of manual segmentation of the prostate in axial T2-weighted MRI: A multi-reader study. Eur. J. Radiol. 2019, 121, 108716.

10. Montagne, S.; Hamzaoui, D.; Allera, A.; Ezziane, M.; Luzurier, A.; Quint, R.; Kalai, M.; Ayache, N.; Delingette, H.; Renard-Penna, R. Challenge of prostate MRI segmentation on T2-weighted images: Inter-observer variability and impact of prostate morphology. Insights Imaging 2021, 12, 71.

11. Belue, M.J.; Harmon, S.A.; Patel, K.; Daryanani, A.; Yilmaz, E.C.; Pinto, P.A.; Wood, B.J.; Citrin, D.E.; Choyke, P.L.; Turkbey, B. Development of a 3D CNN-based AI model for automated segmentation of the prostatic urethra. Acad. Radiol. 2022, 29, 1404–1412.

12. Fiset, S.; Welch, M.L.; Weiss, J.; Pintilie, M.; Conway, J.L.; Milosevic, M.; Fyles, A.; Traverso, A.; Jaffray, D.; Metser, U.; et al. Repeatability and reproducibility of MRI-based radiomic features in cervical cancer. Radiother. Oncol. 2019, 135, 107–114.

13. Diaz-Pinto, A.; Alle, S.; Nath, V.; Tang, Y.; Ihsani, A.; Asad, M.; Pérez-García, F.; Mehta, P.; Li, W.; Flores, M.; et al. MONAI label: A framework for AI-assisted interactive labeling of 3D medical images. arXiv 2022.

14. Shapey, J.; Kujawa, A.; Dorent, R.; Wang, G.; Dimitriadis, A.; Grishchuk, D.; Paddick, I.; Kitchen, N.; Bradford, R.; Saeed, S.R.; et al. Segmentation of vestibular schwannoma from MRI, an open annotated dataset and baseline algorithm. Sci. Data 2021, 8, 286.

15. Benchoufi, M.; Matzner-Lober, E.; Molinari, N.; Jannot, A.S.; Soyer, P. Interobserver agreement issues in radiology. Diagn. Interv. Imaging 2020, 101, 639–641.

16. Gierada, D.S.; Rydzak, C.E.; Zei, M.; Rhea, L. Improved interobserver agreement on lung-RADS classification of solid nodules using semiautomated CT volumetry. Radiology 2020, 297, 675–684.

17. Kim, R.Y.; Oke, J.L.; Pickup, L.C.; Munden, R.F.; Dotson, T.L.; Bellinger, C.R.; Cohen, A.; Simoff, M.J.; Massion, P.P.; Filippini, C.; et al. Artificial intelligence tool for assessment of indeterminate pulmonary nodules detected with CT. Radiology 2022, 304, 683–691.

18. Fournel, J.; Bartoli, A.; Bendahan, D.; Guye, M.; Bernard, M.; Rauseo, E.; Khanji, M.Y.; Petersen, S.E.; Jacquier, A.; Ghattas, B. Medical image segmentation automatic quality control: A multi-dimensional approach. Med. Image Anal. 2021, 74, 102213.

19. Jensen, L.J.; Kim, D.; Elgeti, T.; Steffen, I.G.; Hamm, B.; Nagel, S.N. Stability of radiomic features across different region of interest sizes: A CT and MR phantom study. Tomography 2021, 7, 238–252.

20. Hertel, A.; Tharmaseelan, H.; Rotkopf, L.T.; Nörenberg, D.; Riffel, P.; Nikolaou, K.; Weiss, J.; Bamberg, F.; Schoenberg, S.O.; Froelich, M.F.; et al. Phantom-based radiomics feature test-retest stability analysis on photon-counting detector CT. Eur. Radiol. 2023, 33, 4905–4914.

21. Ferro, M.; de Cobelli, O.; Musi, G.; Del Giudice, F.; Carrieri, G.; Busetto, G.M.; Falagario, U.G.; Sciarra, A.; Maggi, M.; Crocetto, F.; et al. Radiomics in prostate cancer: An up-to-date review. Ther. Adv. Urol. 2022, 14.

22. Thulasi Seetha, S.; Garanzini, E.; Tenconi, C.; Marenghi, C.; Avuzzi, B.; Catanzaro, M.; Stagni, S.; Villa, S.; Chiorda, B.N.; Badenchini, F.; et al. Stability of Multi-Parametric Prostate MRI Radiomic Features to Variations in Segmentation. J. Pers. Med. 2023, 13, 1172.

23. Wan, Q.; Wang, Y.Z.; Li, X.C.; Xia, X.Y.; Wang, P.; Peng, Y.; Liang, C.H. The stability and repeatability of radiomics features based on lung diffusion-weighted imaging. Zhonghua Yi Xue Za Zhi 2022, 102, 190–195.

24. Xu, H.; Lv, W.; Zhang, H.; Ma, J.; Zhao, P.; Lu, L. Evaluation and optimization of radiomics features stability to respiratory motion in 18F-FDG 3D PET imaging. Med. Phys. 2021, 48, 5165–5178.

25. Jimenez-Del-Toro, O.; Aberle, C.; Bach, M.; Schaer, R.; Obmann, M.M.; Flouris, K.; Konukoglu, E.; Stieltjes, B.; Müller, H.; Depeursinge, A. The Discriminative Power and Stability of Radiomics Features With Computed Tomography Variations: Task-Based Analysis in an Anthropomorphic 3D-Printed CT Phantom. Investig. Radiol. 2021, 56, 820–825.

26. Tharmaseelan, H.; Rotkopf, L.T.; Ayx, I.; Hertel, A.; Nörenberg, D.; Schoenberg, S.O.; Froelich, M.F. Evaluation of radiomics feature stability in abdominal monoenergetic photon counting CT reconstructions. Sci. Rep. 2022, 12, 19594.

27. Wang, Y.; Wang, M.; Cao, P.; Wong, E.M.F.; Ho, G.; Lam, T.P.W.; Han, L.; Lee, E.Y.P. CT-based deep learning segmentation of ovarian cancer and the stability of the extracted radiomics features. Quant. Imaging Med. Surg. 2023, 13, 5218–5229.

28. Scalco, E.; Rizzo, G.; Mastropietro, A. The stability of oncologic MRI radiomic features and the potential role of deep learning: A review. Phys. Med. Biol. 2022, 67, 09TR03.

29. Abunahel, B.M.; Pontre, B.; Ko, J.; Petrov, M.S. Towards developing a robust radiomics signature in diffuse diseases of the pancreas: Accuracy and stability of features derived from T1-weighted magnetic resonance imaging. J. Med. Imaging Radiat. Sci. 2022, 53, 420–428.

30. Ramli, Z.; Karim, M.K.A.; Effendy, N.; Abd Rahman, M.A.; Kechik, M.M.A.; Ibahim, M.J.; Haniff, N.S.M. Stability and Reproducibility of Radiomic Features Based on Various Segmentation Techniques on Cervical Cancer DWI-MRI. Diagnostics 2022, 12, 3125.

31. Gitto, S.; Bologna, M.; Corino, V.D.A.; Emili, I.; Albano, D.; Messina, C.; Armiraglio, E.; Parafioriti, A.; Luzzati, A.; Mainardi, L.; et al. Diffusion-weighted MRI radiomics of spine bone tumors: Feature stability and machine learning-based classification performance. Radiol. Med. 2022, 127, 518–525.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).