Underwater Sphere Classification Using AOTF-Based Multispectral LiDAR

Abstract

1. Introduction

2. Materials and Methods

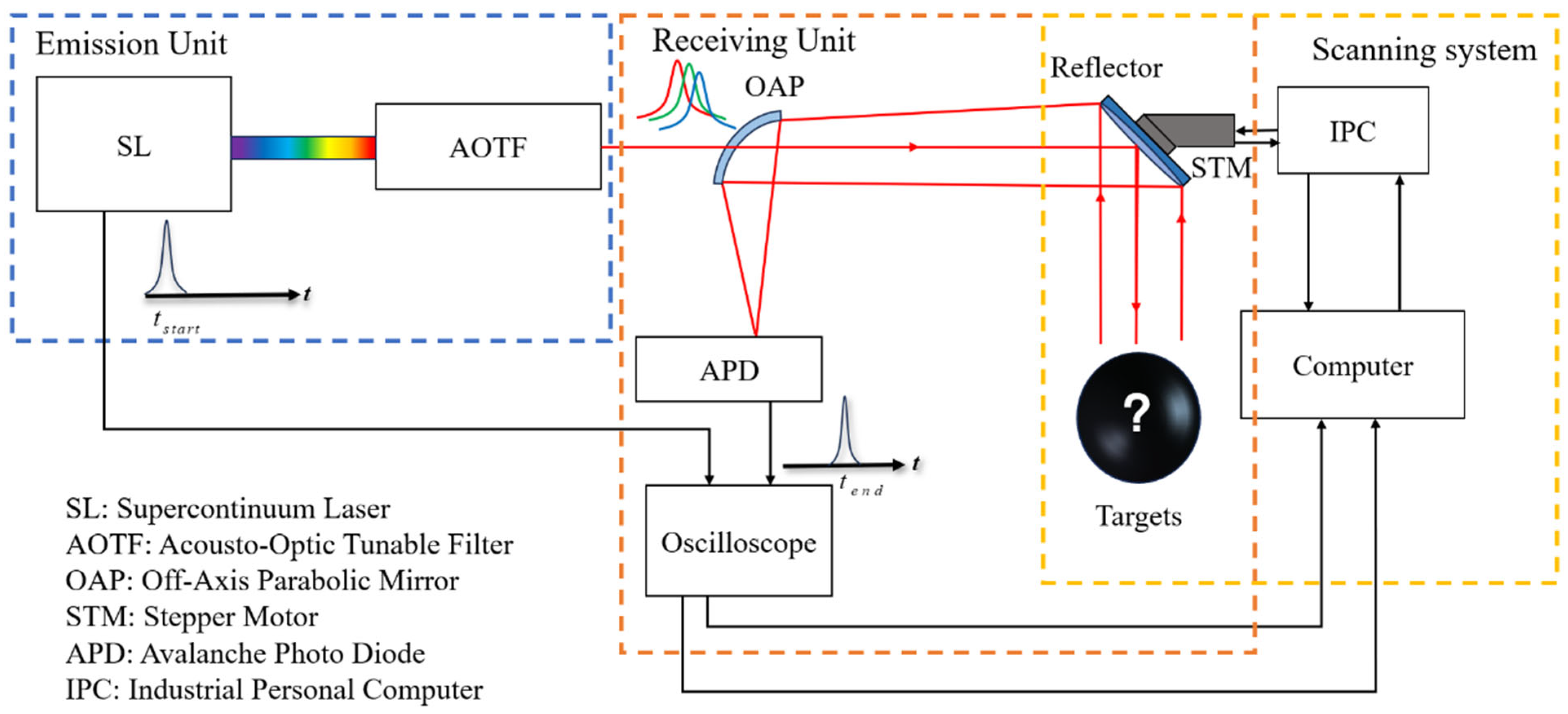

2.1. MSL System

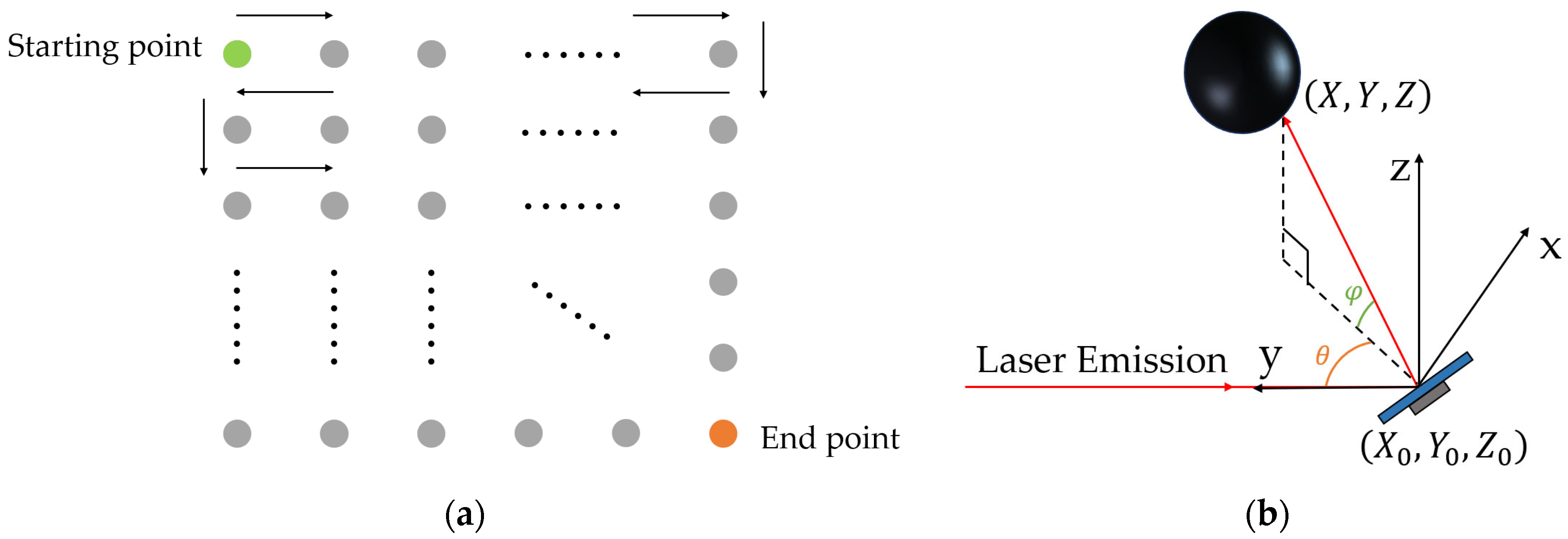

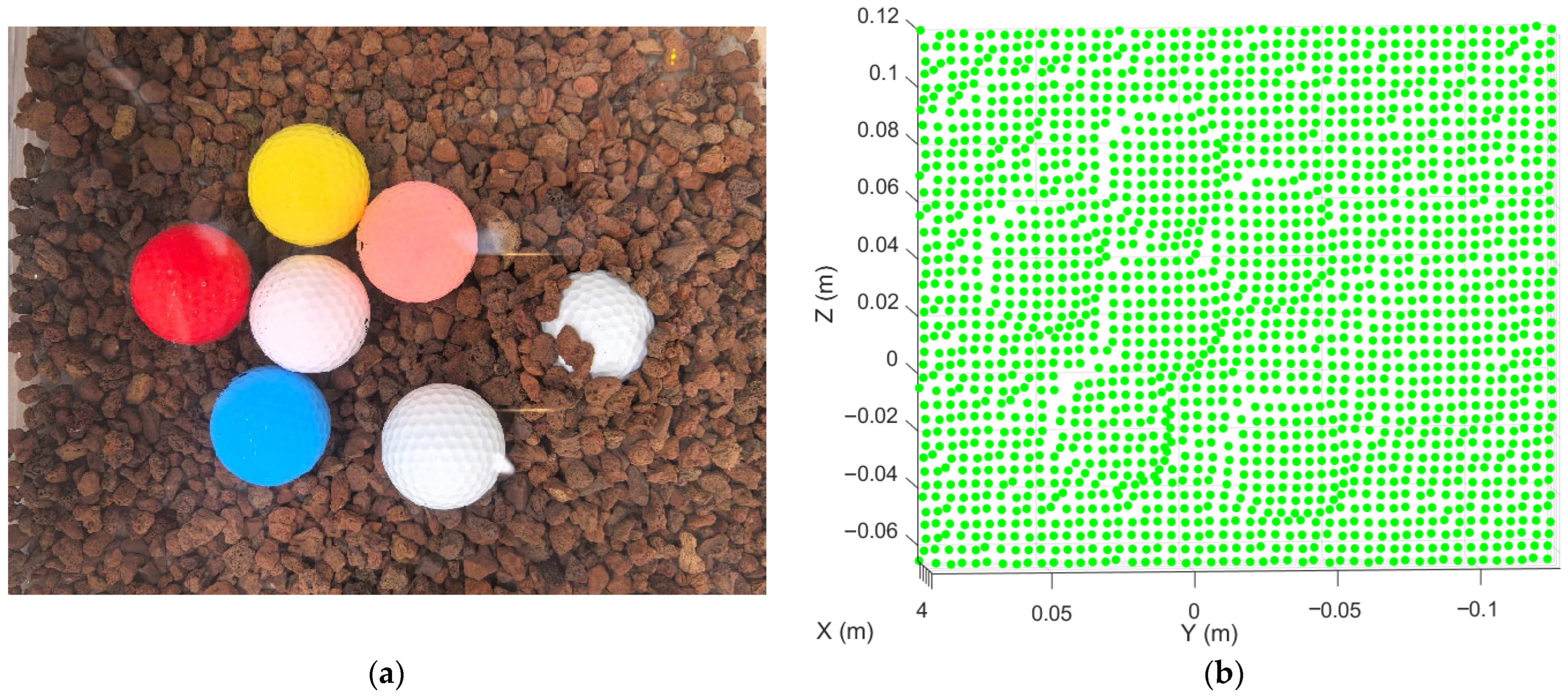

2.2. Laboratory Experiments

2.3. Methods

3. Results and Discussion

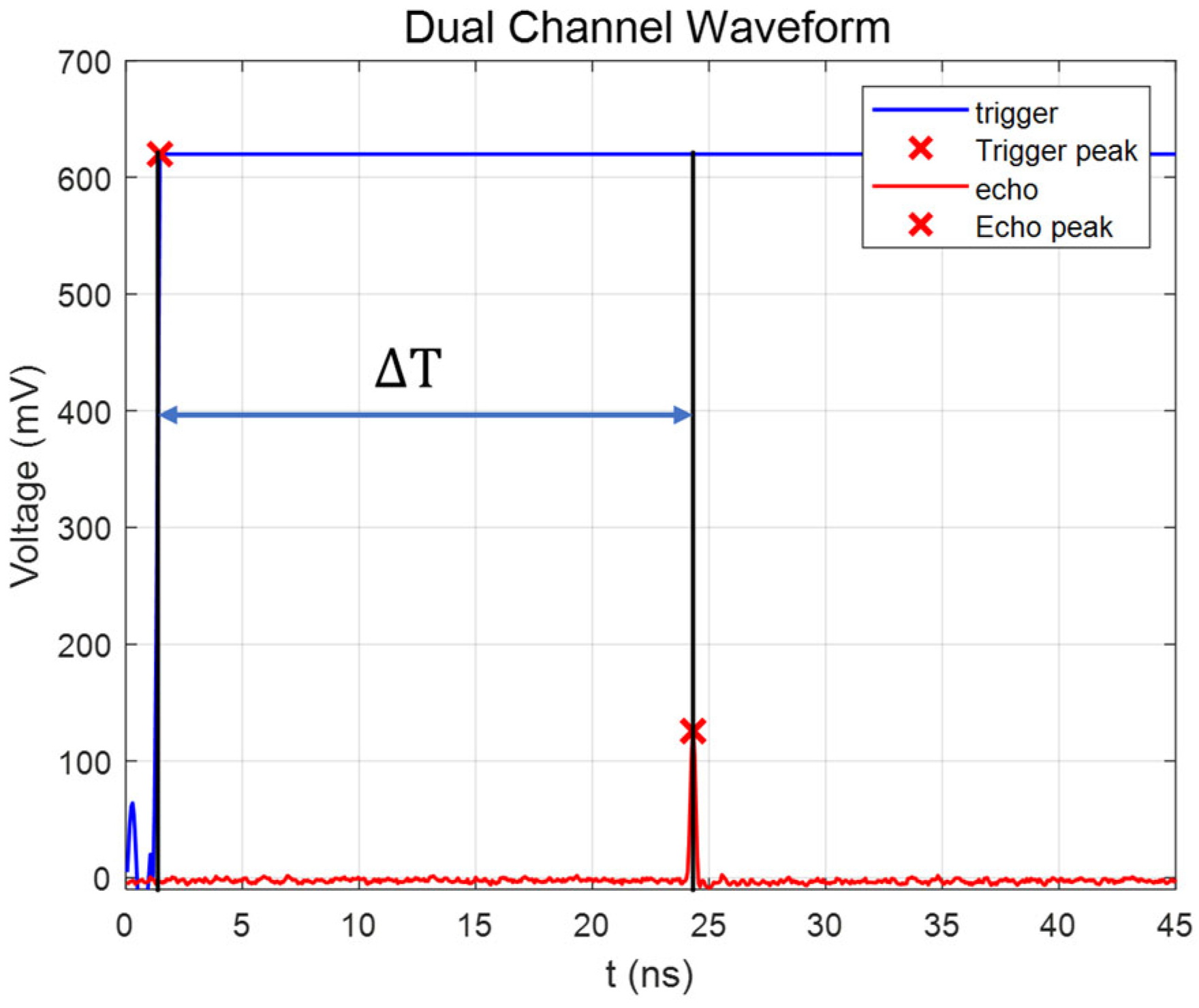

3.1. Range Measurements

- The measurement is affected by the ranging environment: because water is a liquid medium, slight fluctuations in it can introduce ranging errors;

- Laser energy varies with wavelength. Higher laser energy yields a higher signal-to-noise ratio (SNR), stronger resistance to interference, and better signal stability;

- Jitter in both the trigger signal and the laser itself introduces measurement errors that depend on the stability of the laser.
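To give a feel for the third error source, the sketch below converts timing jitter into range error, assuming a pulsed time-of-flight measurement in water. The refractive index of 1.33, the 4 m target distance, and the 50 ps jitter are illustrative assumptions rather than values from this work, and the function names are hypothetical.

```python
# Sketch: how timing jitter maps to range error for pulsed time-of-flight
# ranging in water. All numeric values below are illustrative assumptions.

C_VACUUM = 299_792_458.0  # speed of light in vacuum, m/s
N_WATER = 1.33            # assumed refractive index of water (visible band)


def tof_to_range(round_trip_s: float, n: float = N_WATER) -> float:
    """One-way range in metres for a round-trip time of flight in a medium."""
    return C_VACUUM * round_trip_s / (2.0 * n)


def jitter_to_range_error(jitter_s: float, n: float = N_WATER) -> float:
    """Range uncertainty in metres caused by a timing uncertainty."""
    return C_VACUUM * jitter_s / (2.0 * n)


if __name__ == "__main__":
    # Round-trip time for a hypothetical 4 m underwater target.
    t = 2.0 * 4.0 * N_WATER / C_VACUUM
    print(f"round-trip time: {t * 1e9:.2f} ns")
    print(f"recovered range: {tof_to_range(t):.3f} m")
    # 50 ps of combined trigger and laser jitter (hypothetical value).
    print(f"range error: {jitter_to_range_error(50e-12) * 100:.2f} cm")
```

Under these assumptions, tens of picoseconds of jitter correspond to sub-centimetre range error, which is why timing stability matters at this scale.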

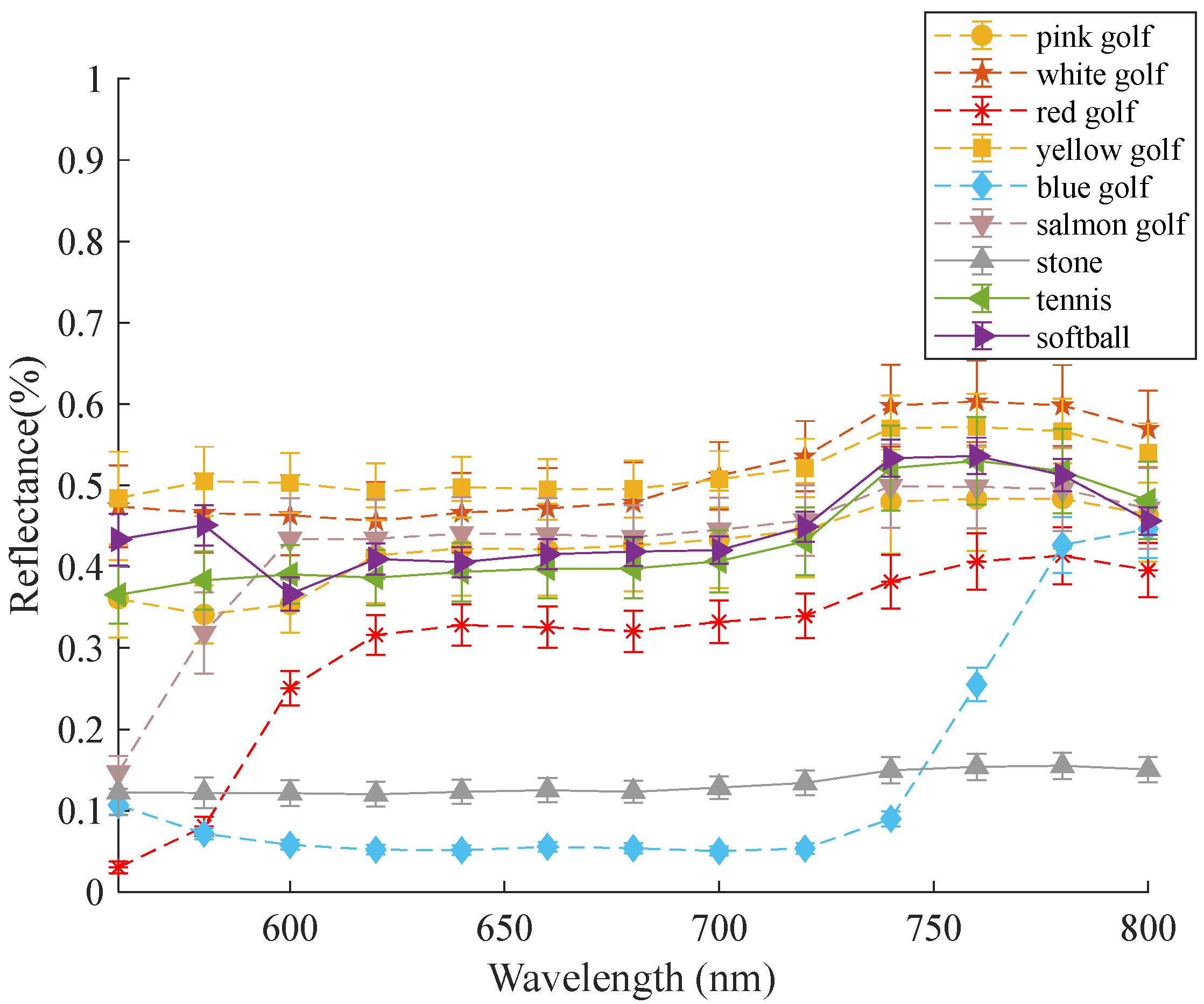

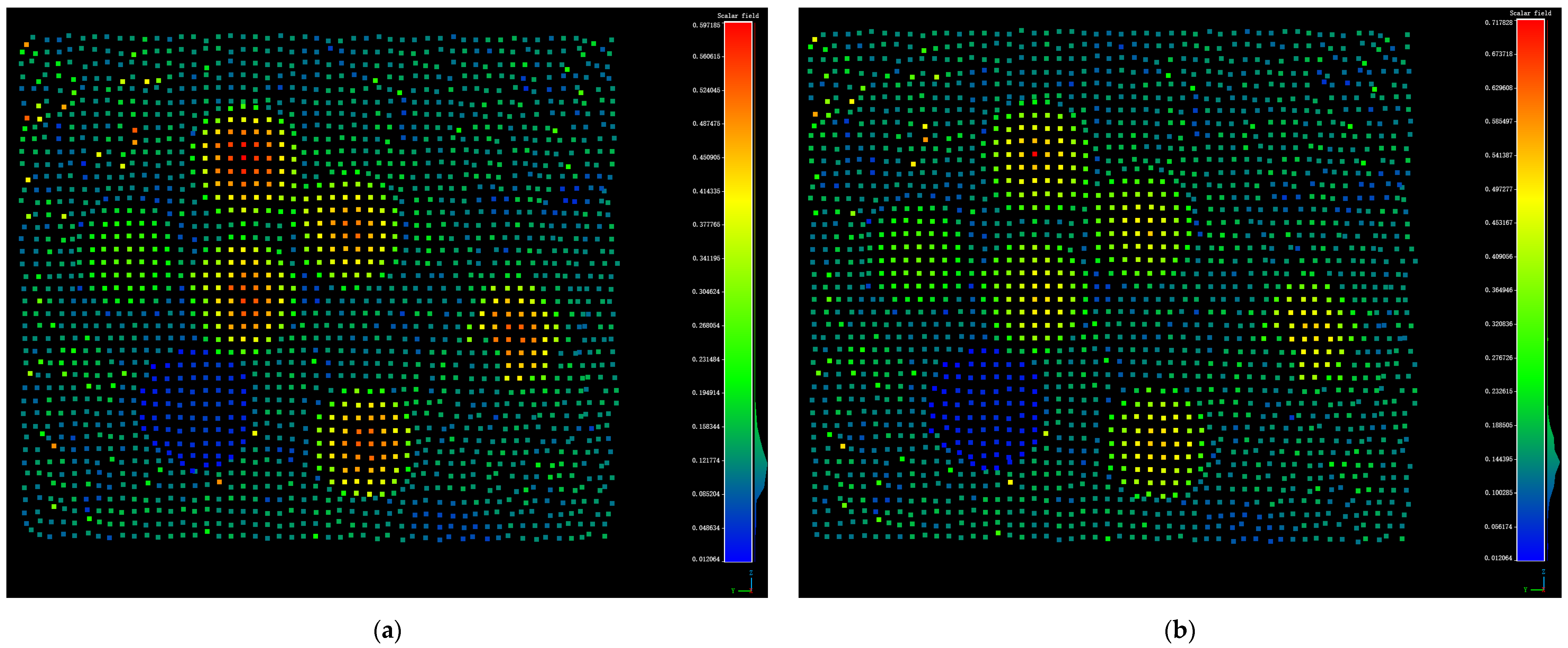

3.2. Data Processing and Classification Performance

3.3. Scanning Experiments

4. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Raffel, T.R.; Long, P.R. Retention of golf ball performance following up to one-year submergence in ponds. Int. J. Golf Sci. 2023.

- Karins, J. Up to Par: Guaranteeing the Right of Quality Control in the Golf Ball Refurbishment Industry. J. Int’l Bus. L. 2019, 19, 105.

- Cong, Y.; Gu, C.; Zhang, T.; Gao, Y. Underwater robot sensing technology: A survey. Fundam. Res. 2021, 1, 337–345.

- Bleier, M.; Nüchter, A. Low-Cost 3D Laser Scanning in Air or Water Using Self-Calibrating Structured Light. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, 42, 105–112.

- Zhen, Z.; Quackenbush, L.J.; Zhang, L. Trends in Automatic Individual Tree Crown Detection and Delineation—Evolution of LiDAR Data. Remote Sens. 2016, 8, 333.

- Taubert, F.; Fischer, R.; Knapp, N.; Huth, A. Deriving tree size distributions of tropical forests from Lidar. Remote Sens. 2021, 13, 131.

- Caspari, G. The potential of new LiDAR datasets for archaeology in Switzerland. Remote Sens. 2023, 15, 1569.

- Jones, L.; Hobbs, P. The application of terrestrial LiDAR for geohazard mapping, monitoring and modelling in the British Geological Survey. Remote Sens. 2021, 13, 395.

- Lu, B.; Dao, P.; Liu, J.; He, Y.; Shang, J. Recent Advances of Hyperspectral Imaging Technology and Applications in Agriculture. Remote Sens. 2020, 12, 2659.

- Mirzaie, M.; Darvishzadeh, R.; Shakiba, A.; Matkan, A.A.; Atzberger, C.; Skidmore, A. Comparative analysis of different uni- and multi-variate methods for estimation of vegetation water content using hyper-spectral measurements. Int. J. Appl. Earth Obs. Geoinf. 2014, 26, 1–11.

- Diatmiko, G.P.K. Design and Verification of a Hyperspectral Imaging System for Outdoor Sports Lighting Measurements. Master’s Thesis, Itä-Suomen Yliopisto, Kuopio, Finland, 2023.

- Calin, M.A.; Parasca, S.V.; Savastru, D.; Manea, D. Hyperspectral imaging in the medical field: Present and future. Appl. Spectrosc. Rev. 2014, 49, 435–447.

- Sun, G.; Jiao, Z.; Zhang, A.; Li, F.; Fu, H.; Li, Z. Hyperspectral image-based vegetation index (HSVI): A new vegetation index for urban ecological research. Int. J. Appl. Earth Obs. Geoinf. 2021, 103, 102529.

- Sture, Ø.; Ludvigsen, M.; Aas, L.M.S. Autonomous underwater vehicles as a platform for underwater hyperspectral imaging. In Proceedings of the OCEANS 2017—Aberdeen, Aberdeen, UK, 19–22 June 2017; pp. 1–8.

- Ødegård, Ø.; Mogstad, A.A.; Johnsen, G.; Sørensen, A.J.; Ludvigsen, M. Underwater hyperspectral imaging: A new tool for marine archaeology. Appl. Opt. 2018, 57, 3214–3223.

- Chen, Y.; Lin, Z.; Zhao, X.; Wang, G.; Gu, Y. Deep Learning-Based Classification of Hyperspectral Data. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 2094–2107.

- Yoon, J. Hyperspectral Imaging for Clinical Applications. BioChip J. 2022, 16, 1–12.

- Hakala, T.; Suomalainen, J.; Kaasalainen, S.; Chen, Y. Full waveform hyperspectral LiDAR for terrestrial laser scanning. Opt. Express 2012, 20, 7119–7127.

- Chen, Y.; Jiang, C.; Hyyppa, J.; Qiu, S.; Wang, Z.; Tian, M.; Li, W. Feasibility Study of Ore Classification Using Active Hyperspectral LiDAR. IEEE Geosci. Remote Sens. Lett. 2018, 15, 1785–1789.

- Ray, P.; Salido-Monzú, D.; Camenzind, S.L.; Wieser, A. Supercontinuum-based hyperspectral LiDAR for precision laser scanning. Opt. Express 2023, 31, 33486–33499.

- Morsdorf, F.; Nichol, C.; Malthus, T.; Woodhouse, I.H. Assessing forest structural and physiological information content of multi-spectral LiDAR waveforms by radiative transfer modelling. Remote Sens. Environ. 2009, 113, 2152–2163.

- Chen, Y.; Luo, Q.; Guo, S.; Chen, W.; Hu, S.; Ma, J.; He, Y.; Huang, Y. Multispectral LiDAR-based underwater ore classification using a tunable laser source. Opt. Commun. 2024, 551, 129903.

- Zhang, H.; Chen, L.; Wu, H.; Zhou, M.; Chen, J.; Chen, Z.; Hu, J.; Chen, Y.; Wang, J.; Niu, Y.; et al. Hyperspectral LiDAR for Subsea Exploration: System Design and Performance Evaluation. Electronics 2025, 14, 1539.

- Liu, Q.; Liu, B.; Wu, S.; Liu, J.; Zhang, K.; Song, X.; Chen, X.; Zhu, P. Design of the ship-borne multi-wavelength polarization ocean lidar system and measurement of seawater optical properties. EPJ Web Conf. 2020, 237, 7007.

- Chen, Y.; Li, W.; Hyyppä, J.; Wang, N.; Jiang, C.; Meng, F.; Tang, L.; Puttonen, E.; Li, C. A 10-nm Spectral Resolution Hyperspectral LiDAR System Based on an Acousto-Optic Tunable Filter. Sensors 2019, 19, 1620.

- Chen, B.; Shi, S.; Gong, W.; Zhang, Q.; Yang, J.; Du, L.; Sun, J.; Zhang, Z.; Song, S. Multispectral LiDAR Point Cloud Classification: A Two-Step Approach. Remote Sens. 2017, 9, 373.

- Gong, W.; Sun, J.; Shi, S.; Yang, J.; Du, L.; Zhu, B.; Song, S. Investigating the potential of using the spatial and spectral information of multispectral LiDAR for object classification. Sensors 2015, 15, 21989–22002.

- Solonenko, M.G.; Mobley, C.D. Inherent optical properties of Jerlov water types. Appl. Opt. 2015, 54, 5392–5401.

| Wavelength (nm) | Average Range (m) | Standard Deviation (cm) | Error (cm) |
|---|---|---|---|
| 560 | 4.014 | 1.31 | 1.4 |
| 580 | 3.991 | 0.77 | 0.9 |
| 600 | 3.995 | 0.62 | 0.5 |
| 620 | 4.002 | 0.87 | 0.2 |
| 640 | 3.998 | 0.47 | 0.2 |
| 660 | 3.995 | 0.51 | 0.5 |
| 680 | 4.004 | 0.64 | 0.4 |
| 700 | 3.999 | 0.55 | 0.1 |
| 720 | 3.991 | 0.59 | 0.9 |
| 740 | 3.998 | 0.69 | 0.2 |
| 760 | 3.996 | 0.81 | 0.4 |
| 780 | 4.008 | 0.61 | 0.8 |
| 800 | 3.985 | 0.94 | 1.5 |
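The Error column above matches the absolute difference between each average range and a reference distance of 4.000 m. The sketch below reproduces that arithmetic. The 4.000 m reference is an assumption inferred from the tabulated values and is not stated in this section, and the variable and function names are hypothetical.

```python
# Average ranges (m) per wavelength (nm), copied from the table above.
AVERAGE_RANGE_M = {
    560: 4.014, 580: 3.991, 600: 3.995, 620: 4.002, 640: 3.998,
    660: 3.995, 680: 4.004, 700: 3.999, 720: 3.991, 740: 3.998,
    760: 3.996, 780: 4.008, 800: 3.985,
}

# Assumed reference distance; inferred from the Error column, not stated.
REFERENCE_RANGE_M = 4.000


def abs_error_cm(average_m: float, reference_m: float = REFERENCE_RANGE_M) -> float:
    """Absolute ranging error in centimetres, rounded to one decimal place."""
    return round(abs(average_m - reference_m) * 100.0, 1)


# Recompute the error for every wavelength in the table.
errors_cm = {wl: abs_error_cm(avg) for wl, avg in AVERAGE_RANGE_M.items()}

# Wavelength with the largest absolute error.
worst_wavelength = max(errors_cm, key=errors_cm.get)

if __name__ == "__main__":
    for wl, err in errors_cm.items():
        print(f"{wl} nm: {err} cm")
    print(f"largest error at {worst_wavelength} nm")
```

If the reference distance in the experiment differed from 4.000 m, only `REFERENCE_RANGE_M` would need to change.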

| Spectral Bands (nm) | Accuracy (%) | Kappa Coefficient |
|---|---|---|
| All Spectral Bands | 98.7 | 0.986 |
| 560 | 60.1 | 0.553 |
| 580 | 63.3 | 0.588 |
| 600 | 65.0 | 0.607 |
| 620 | 58.0 | 0.529 |
| 640 | 56.2 | 0.509 |
| 660 | 56.3 | 0.511 |
| 680 | 57.7 | 0.526 |
| 700 | 57.6 | 0.527 |
| 720 | 58.16 | 0.530 |
| 740 | 57.0 | 0.518 |
| 760 | 56.0 | 0.508 |
| 780 | 45.6 | 0.391 |
| 800 | 46.3 | 0.401 |
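For readers unfamiliar with the two metrics in the table above, the following sketch computes overall accuracy and Cohen's kappa from a confusion matrix using the standard definitions. The three-class matrix is made-up illustration data, not a result from this work, and the function name is hypothetical.

```python
from typing import Sequence, Tuple


def accuracy_and_kappa(cm: Sequence[Sequence[int]]) -> Tuple[float, float]:
    """Return (overall accuracy, Cohen's kappa) for a square confusion matrix.

    Rows are true classes and columns are predicted classes.
    """
    total = sum(sum(row) for row in cm)
    if total == 0:
        raise ValueError("confusion matrix is empty")

    # Observed agreement: fraction of samples on the diagonal.
    observed = sum(cm[i][i] for i in range(len(cm))) / total

    # Chance agreement: sum over classes of (row total * column total) / N^2.
    row_totals = [sum(row) for row in cm]
    col_totals = [sum(col) for col in zip(*cm)]
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / total**2

    if expected == 1.0:
        raise ValueError("kappa is undefined when chance agreement is 1")

    kappa = (observed - expected) / (1.0 - expected)
    return observed, kappa


if __name__ == "__main__":
    # Hypothetical 3-class confusion matrix, for illustration only.
    example = [
        [48, 1, 1],
        [2, 47, 1],
        [0, 2, 48],
    ]
    acc, kappa = accuracy_and_kappa(example)
    print(f"accuracy = {acc:.3f}, kappa = {kappa:.3f}")
```

Kappa discounts the agreement expected by chance, so it is always at or below the raw accuracy unless the classifier is perfect.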

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Ma, Y.; Zhang, H.; Wang, R.; Li, F.; He, T.; Liu, B.; Wang, Y.; Han, F. Underwater Sphere Classification Using AOTF-Based Multispectral LiDAR. Photonics 2025, 12, 998. https://doi.org/10.3390/photonics12100998