DIALOGUE: A Generative AI-Based Pre–Post Simulation Study to Enhance Diagnostic Communication in Medical Students Through Virtual Type 2 Diabetes Scenarios

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Design and Overview

2.2. Participants

2.3. Study Phases

2.3.1. Pre-Test Phase

2.3.2. Educational Intervention: AI-Based Training

2.3.3. Post-Test Phase

2.4. Evaluation Instruments

2.5. Data Collection and Analysis

2.6. Qualitative Analysis of AI Feedback

2.7. Use of Generative AI in the Study

2.8. Statistical Analysis

3. Results

3.1. Participant Flow and Baseline Characteristics

3.1.1. Participant Flow

3.1.2. Baseline Characteristics

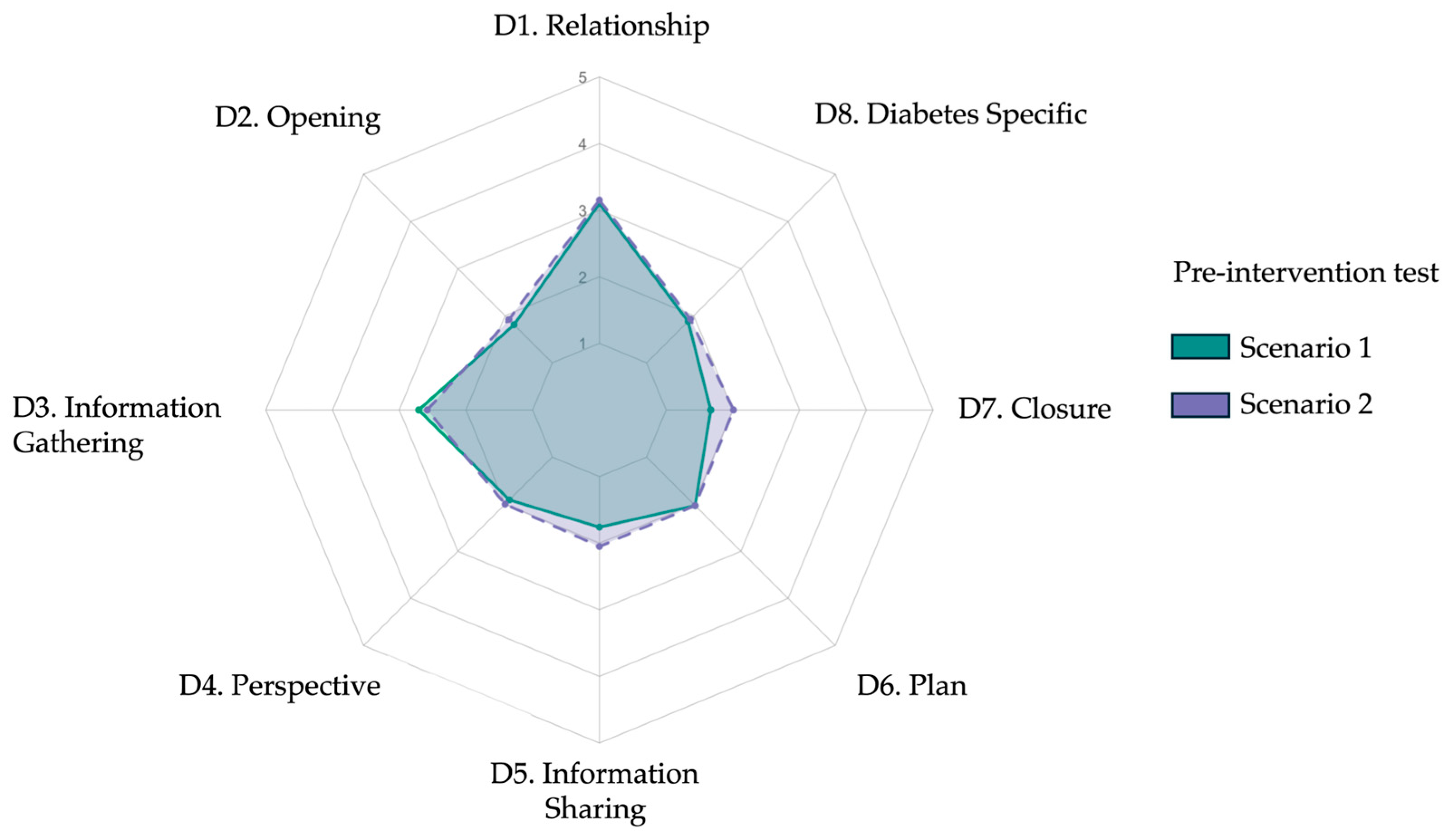

3.2. Baseline Diagnostic-Communication Performance

3.3. Post-Intervention Diagnostic Performance and Pre–Post Comparison

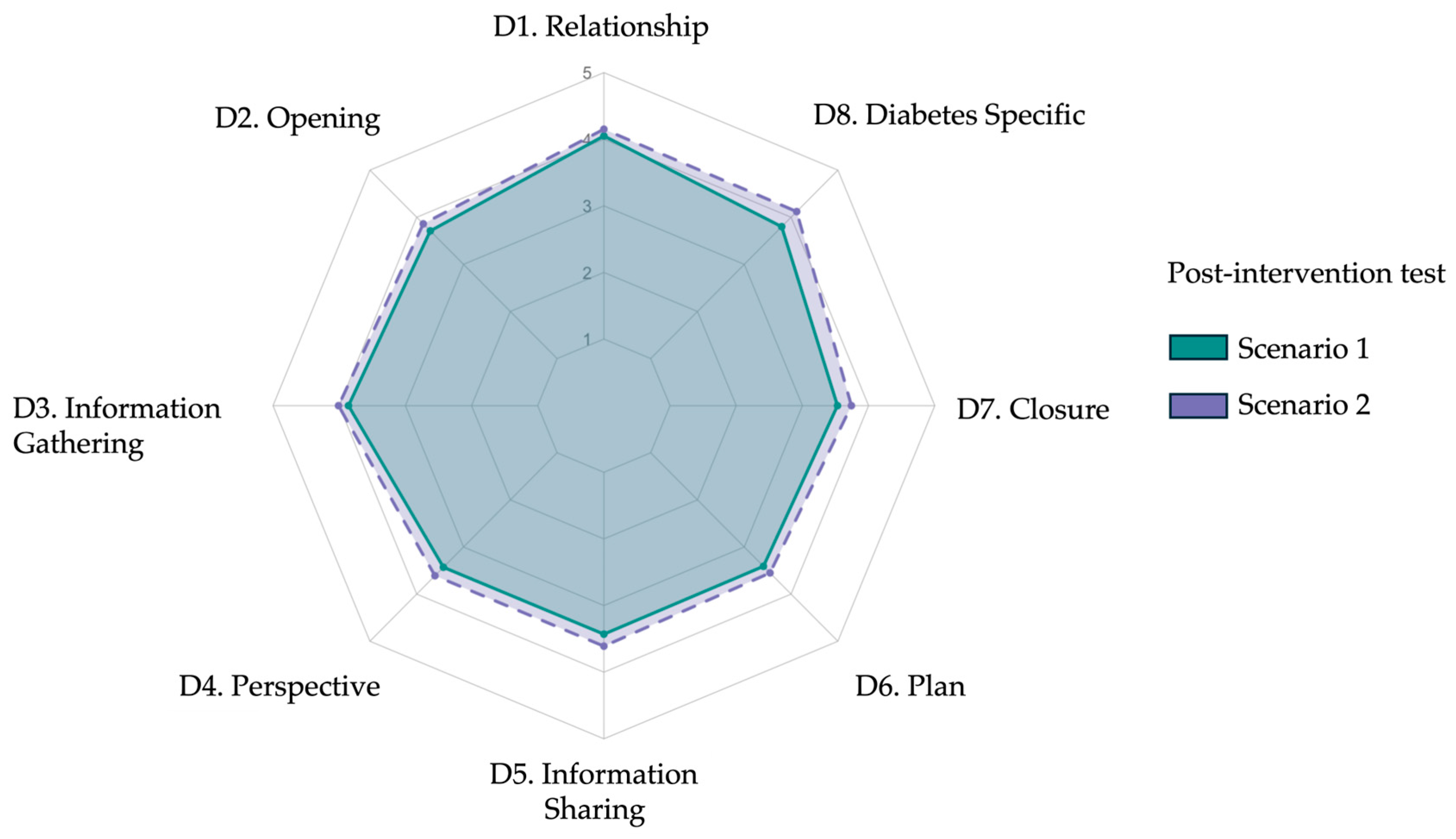

3.3.1. Post-Test Performance Across Scenarios

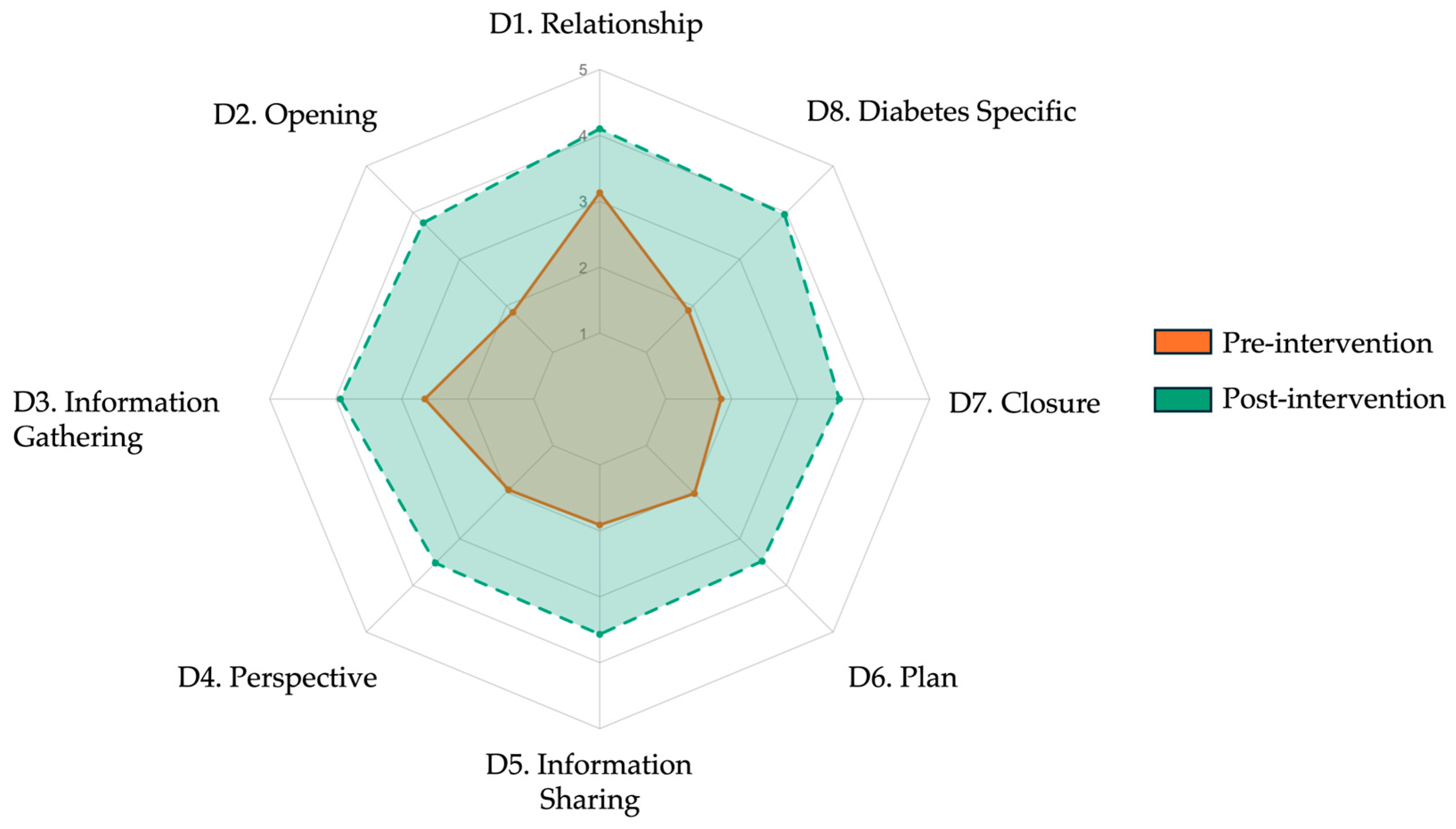

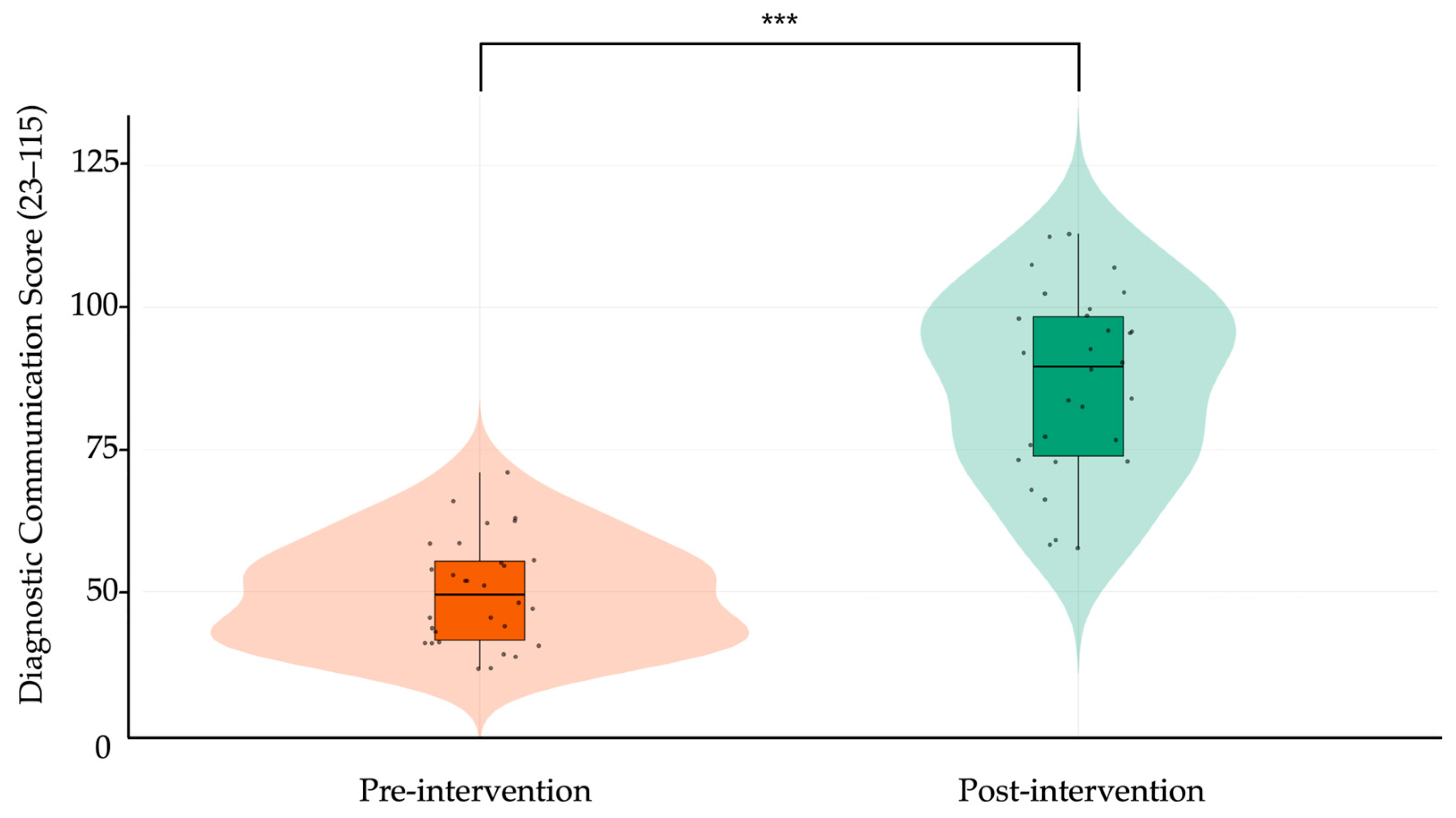

3.3.2. Pre–Post Intervention Gains

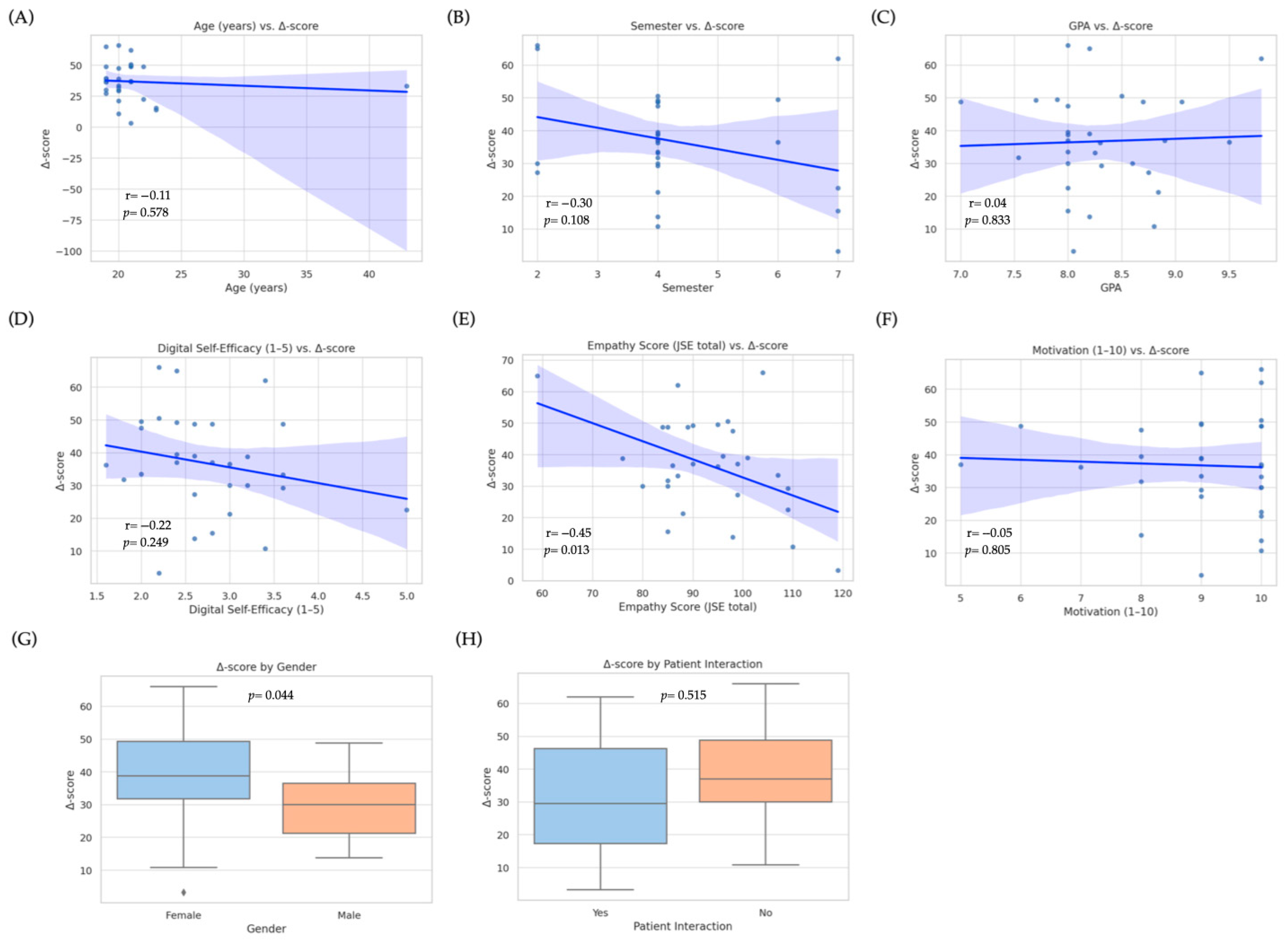

3.4. Predictors of Improvement

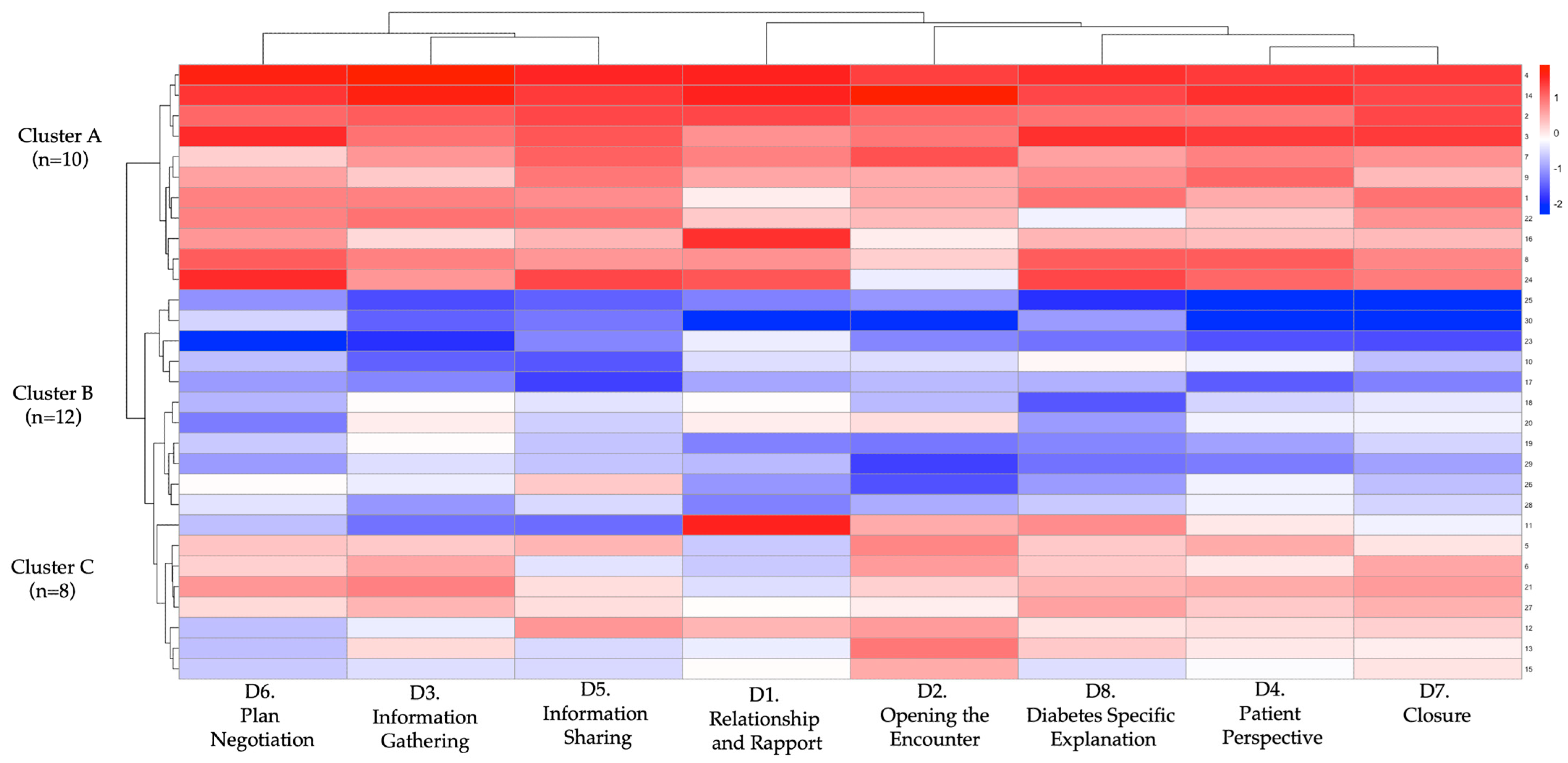

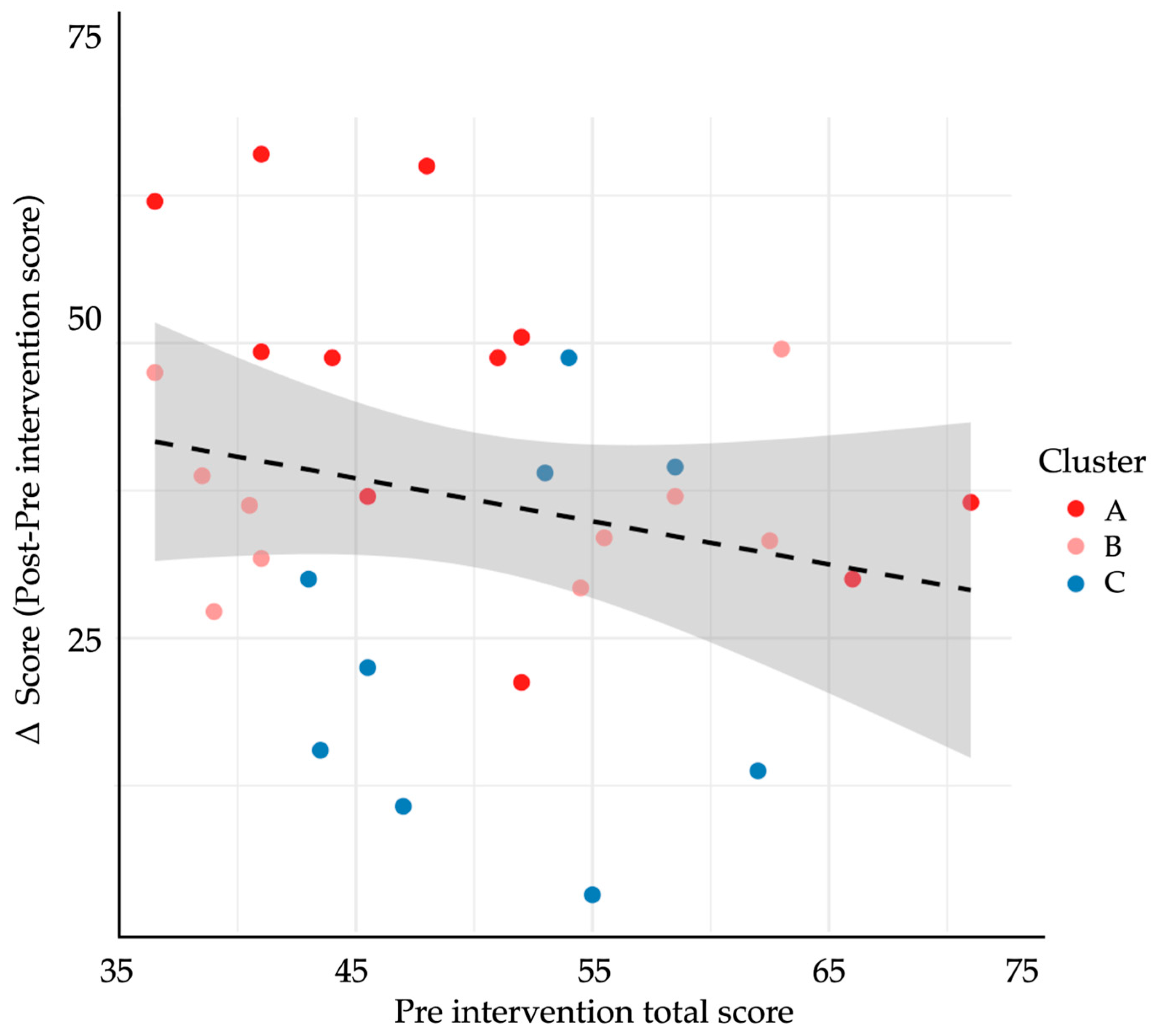

3.5. Learner Profiles and Cluster Patterns

3.6. Post-Test Surveys

3.7. Adverse Events and Missing Data

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial intelligence |
| AIC | Akaike information criterion |
| API | Application programming interface |
| GENAI | Generative artificial intelligence |
| GPA | Grade point average |
| GPT-4 | Generative Pretrained Transformer 4 |
| ICC | Intraclass correlation coefficient |
| ITT | Intention-to-treat |
| JSE | Jefferson Scale of Empathy |
| LLM | Large language model |
| OSCE | Objective structured clinical examination |
| SE | Standard error |
| SP | Standardized patient |
| SPIKES | Setting, perception, invitation, knowledge, emotions, strategy (protocol for breaking bad news) |
| T2DM | Type 2 diabetes mellitus |
| VIF | Variance inflation factor |
| WA | Weighted average |

References

- American Diabetes Association Professional Practice Committee. (2025). 4. Comprehensive medical evaluation and assessment of comorbidities: Standards of care in diabetes-2025. Diabetes Care, 48(Suppl. S1), S59–S85.

- Chiu, J., Castro, B., Ballard, I., Nelson, K., Zarutskie, P., Olaiya, O. K., Song, D., & Zhao, Y. (2025). Exploration of the role of ChatGPT in teaching communication skills for medical students: A pilot study. Medical Science Educator.

- Chokkakula, S., Chong, S., Yang, B., Jiang, H., Yu, J., Han, R., Attitalla, I. H., Yin, C., & Zhang, S. (2025). Quantum leap in medical mentorship: Exploring ChatGPT’s transition from textbooks to terabytes. Frontiers in Medicine, 12, 1517981.

- Cook, D. A., Hatala, R., Brydges, R., Zendejas, B., Szostek, J. H., Wang, A. T., Erwin, P. J., & Hamstra, S. J. (2011). Technology-enhanced simulation for health professions education: A systematic review and meta-analysis. JAMA, 306(9), 978–988.

- Davis, F. D. (1989). Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Quarterly, 13(3), 319–340.

- Elendu, C., Amaechi, D. C., Okatta, A. U., Amaechi, E. C., Elendu, T. C., Ezeh, C. P., & Elendu, I. D. (2024). The impact of simulation-based training in medical education: A review. Medicine, 103(27), e38813.

- Feng, S., & Shen, Y. (2023). ChatGPT and the future of medical education. Academic Medicine, 98(8), 867–868.

- Hopewell, S., Chan, A.-W., Collins, G. S., Hróbjartsson, A., Moher, D., Schulz, K. F., Tunn, R., Aggarwal, R., Berkwits, M., Berlin, J. A., Bhandari, N., Butcher, N. J., Campbell, M. K., Chidebe, R. C. W., Elbourne, D., Farmer, A., Fergusson, D. A., Golub, R. M., Goodman, S. N., … Boutron, I. (2025). CONSORT 2025 statement: Updated guideline for reporting randomised trials. BMJ, 389, e081123.

- Kalet, A., Pugnaire, M. P., Cole-Kelly, K., Janicik, R., Ferrara, E., Schwartz, M. D., Lipkin, M., Jr., & Lazare, A. (2004). Teaching communication in clinical clerkships: Models from the Macy Initiative in Health Communications. Academic Medicine, 79(6), 511–520.

- Karagiannis, T., Advani, A., Davies, M. J., Del Prato, S., Dinneen, S. F., Kuss, O., Lorenzoni, V., Mathieu, C., Mauricio, D., Tankova, T., Zaccardi, F., Holt, R. I. G., & Tsapas, A. (2025). European Association for the Study of Diabetes (EASD) standard operating procedure for the development of guidelines. Diabetologia, 68(8), 1600–1615.

- Kung, T. H., Cheatham, M., Medenilla, A., Sillos, C., De Leon, L., Elepaño, C., Madriaga, M., Aggabao, R., Diaz-Candido, G., Maningo, J., & Tseng, V. (2023). Performance of ChatGPT on USMLE: Potential for AI-assisted medical education using large language models. PLoS Digital Health, 2(2), e0000198.

- Makoul, G. (2001a). Essential elements of communication in medical encounters: The Kalamazoo consensus statement. Academic Medicine, 76(4), 390–393.

- Makoul, G. (2001b). The SEGUE Framework for teaching and assessing communication skills. Patient Education and Counseling, 45(1), 23–34.

- Moezzi, M., Rasekh, S., Zare, E., & Karimi, M. (2024). Evaluating clinical communication skills of medical students, assistants, and professors. BMC Medical Education, 24(1), 19.

- Nestel, D., & Tierney, T. (2007). Role-play for medical students learning about communication: Guidelines for maximising benefits. BMC Medical Education, 7(1), 3.

- Öncü, S., Torun, F., & Ülkü, H. H. (2025). AI-powered standardised patients: Evaluating ChatGPT-4o’s impact on clinical case management in intern physicians. BMC Medical Education, 25(1), 278.

- Poei, D. M., Tang, M. N., Kwong, K. M., Sakai, D. H. T., Choi, S. Y. T., & Chen, J. J. (2022). Increasing medical students’ confidence in delivering bad news using different teaching modalities. Hawaii Journal of Health and Social Welfare, 81(11), 302–308.

- Sallam, M. (2023). ChatGPT utility in healthcare education, research, and practice: Systematic review on the promising perspectives and valid concerns. Healthcare, 11(6), 887.

- Santos, M. S., Cunha, L. M., Ferreira, A. J., & Drummond-Lage, A. P. (2025). From classroom to clinic: Addressing gaps in teaching and perceived preparedness for breaking bad news in medical education. BMC Medical Education, 25(1), 449.

- Scherr, R., Halaseh, F. F., Spina, A., Andalib, S., & Rivera, R. (2023). ChatGPT interactive medical simulations for early clinical education: Case study. JMIR Medical Education, 9, e49877.

- Shorey, S., Mattar, C., Pereira, T. L., & Choolani, M. (2024). A scoping review of ChatGPT’s role in healthcare education and research. Nurse Education Today, 135, 106121.

- Skryd, A., & Lawrence, K. (2024). ChatGPT as a tool for medical education and clinical decision-making on the wards: Case study. JMIR Formative Research, 8, e51346.

- Webb, J. J. (2023). Proof of concept: Using ChatGPT to teach emergency physicians how to break bad news. Cureus, 15(5), e38755.

- Weisman, D., Sugarman, A., Huang, Y. M., Gelberg, L., Ganz, P. A., & Comulada, W. S. (2025). Development of a GPT-4-powered virtual simulated patient and communication training platform for medical students to practice discussing abnormal mammogram results with patients: Multiphase study. JMIR Formative Research, 9, e65670.

| Variable | Category/Units | N (%) or Mean ± SD |
|---|---|---|
| Age | Years | 21.1 ± 4.1 |
| Sex | Female | 22 (73.33%) |
| | Male | 8 (26.67%) |
| Clinical semester | 2 | 4 (13.33%) |
| | 4 | 19 (63.33%) |
| | 6 | 2 (6.67%) |
| | 7 | 5 (16.67%) |
| Cumulative GPA | 0–10 | 8.31 ± 0.50 |
| Prior formal course in diagnostic communication | Yes | 0 (0%) |
| Prior OSCE completed | Median (IQR) | 0 (0%) |
| Prior experience with real patients | Yes | 12 (40.00%) |
| Prior ChatGPT/LLM use | ≥10 interactions | 21 (70%) |
| | <10 interactions | 9 (30%) |
| | Never | 1 (3.33%) |
| Digital self-efficacy † | Very high/high | 13 (43.33%) |
| | Medium | 16 (53.33%) |
| | Low | 1 (3.33%) |
| Device most used for simulation | Laptop/PC | 22 (73.33%) |
| | Tablet | 4 (13.33%) |
| | Smartphone | 4 (13.33%) |
| Self-rated internet quality | Good | 17 (56.67%) |
| | Medium | 10 (33.33%) |
| | Poor | 3 (10%) |
| Motivation score (1–10) | 0–10 | 9 |
| Self-confidence score (1–5) | Explain lab results | 3.3 ± 0.7 |
| | Explain DM criteria | 3.0 ± 0.8 |
| | Convey bad news empathetically | 3.2 ± 0.8 |
| | Teach-back | 2.3 ± 0.8 |
| | Anxiety reduction | 2.4 ± 0.7 |
| Jefferson Scale of Empathy, total (20–140) | - | 116 ± 15 |
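
The percentages in the baseline table can be reproduced from the raw counts. The sketch below assumes that every percentage uses the full cohort as its denominator. That cohort is N = 30, which is what the sex, semester, device, and internet-quality counts each sum to. The helper name `pct` is illustrative only.

```python
# Sketch: recompute the "N (%)" column of the baseline table.
# Assumption: every percentage uses the full cohort (N = 30) as denominator.
N = 30


def pct(count: int, total: int = N) -> float:
    """Percentage of `total`, rounded to two decimals as in the table."""
    return round(100 * count / total, 2)


# Counts copied from the baseline-characteristics table.
counts_by_variable = {
    "Sex": {"Female": 22, "Male": 8},
    "Clinical semester": {"2": 4, "4": 19, "6": 2, "7": 5},
    "Self-rated internet quality": {"Good": 17, "Medium": 10, "Poor": 3},
}

for variable, counts in counts_by_variable.items():
    # The levels of each variable should add up to the whole cohort.
    assert sum(counts.values()) == N, variable
    for level, count in counts.items():
        print(f"{variable:<28} {level:<7} {count:>3} ({pct(count):.2f}%)")
```

Under this assumption, 8/30 rounds to 26.67% and 10/30 to 33.33%.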

| Item | Domain/Skill (Abridged) | Scenario 1 Mean ± SD | Scenario 2 Mean ± SD | p |
|---|---|---|---|---|
| 1 | Relationship | 3.10 ± 0.58 | 3.15 ± 0.55 | 0.50 |
| 1.1 | Eye contact and open posture | 3.86 ± 0.73 | 3.83 ± 0.79 | 0.74 |
| 1.2 | Friendly body language | 3.46 ± 0.86 | 3.56 ± 0.72 | 0.41 |
| 1.3 | Self-introduction and role | 2.00 ± 1.16 | 2.06 ± 1.20 | 0.70 |
| 2 | Opening | 1.81 ± 0.71 | 1.92 ± 0.69 | 0.22 |
| 2.1 | Greeting and ID verification | 1.73 ± 0.63 | 1.63 ± 0.71 | 0.18 |
| 2.2 | States purpose of visit | 1.90 ± 0.84 | 2.13 ± 0.89 | 0.06 |
| 2.3 | Explores patient expectations | 1.80 ± 0.98 | 2.00 ± 0.94 | 0.22 |
| 3 | Information gathering | 2.71 ± 0.64 | 2.58 ± 0.69 | 0.12 |
| 3.1 | Uses open questions | 2.00 ± 1.01 | 2.30 ± 0.99 | 0.76 |
| 3.2 | Listens without interrupting | 4.00 ± 0.18 | 3.90 ± 0.54 | 0.32 |
| 3.3 | Summarizes to confirm | 2.13 ± 1.04 | 1.83 ± 1.11 | 0.08 |
| 4 | Patient perspective | 1.91 ± 0.85 | 2.00 ± 0.84 | 0.36 |
| 4.1 | Elicits beliefs about illness | 1.80 ± 1.06 | 1.70 ± 1.02 | 0.57 |
| 4.2 | Explores worries/concerns | 2.13 ± 0.97 | 1.96 ± 1.03 | 0.23 |
| 4.3 | Assesses daily-life impact | 1.80 ± 0.92 | 2.33 ± 1.12 | 0.02 |
| 5 | Information sharing | 1.76 ± 0.76 | 2.05 ± 0.83 | 0.18 |
| 5.1 | Explains diagnosis in plain language | 1.80 ± 1.06 | 1.70 ± 1.02 | 0.57 |
| 5.2 | Uses visual aids/examples | 2.13 ± 0.97 | 1.96 ± 1.03 | 0.23 |
| 5.3 | Checks understanding (teach-back) | 1.80 ± 0.92 | 2.33 ± 1.12 | 0.01 |
| 6 | Plan negotiation | 2.03 ± 0.65 | 2.03 ± 0.53 | 1.00 |
| 6.1 | Discusses therapeutic options | 2.36 ± 0.85 | 2.10 ± 0.99 | 0.07 |
| 6.2 | Involves patient in decisions | 2.06 ± 0.90 | 2.16 ± 0.74 | 0.44 |
| 6.3 | Negotiates realistic goals | 1.66 ± 0.80 | 1.83 ± 0.83 | 0.20 |
| 7 | Closure | 1.67 ± 0.62 | 2.01 ± 0.59 | 0.06 |
| 7.1 | Provides final summary | 1.63 ± 0.71 | 1.86 ± 0.81 | 0.05 |
| 7.2 | Checks for residual questions | 1.53 ± 0.93 | 1.86 ± 0.93 | 0.07 |
| 7.3 | Closes with empathy and follow-up | 1.86 ± 0.97 | 2.30 ± 0.83 | 0.02 |
| 8 | Diabetes specific | 1.88 ± 0.56 | 1.93 ± 0.56 | 0.73 |
| 8.1 | Explains lab results (HbA1c) | 1.80 ± 0.71 | 1.90 ± 0.71 | 0.50 |
| 8.2 | Provides initial plan and alleviates anxiety | 1.96 ± 0.71 | 1.90 ± 0.75 | 0.57 |
| Total | Overall score (23–115) | 48.83 ± 9.65 | 51.10 ± 9.82 | 0.05 |
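
Most domain rows in the rubric tables appear to be the mean of their items. For example, the scenario 1 Relationship items 3.86, 3.46, and 2.00 average about 3.11, against 3.10 reported. The total range of 23–115 corresponds to 23 items, each rated 1–5, summed. This section does not spell out the scoring rule, so the sketch below encodes that inferred rule as an assumption. The item-to-domain mapping is taken from the table rows, and `score_encounter` is a hypothetical helper, not the study's code.

```python
from statistics import mean

# Assumed layout, inferred from the rubric tables: 8 domains,
# 23 items in total, each item rated on a 1-5 scale.
DOMAINS = {
    "Relationship": ["1.1", "1.2", "1.3"],
    "Opening": ["2.1", "2.2", "2.3"],
    "Information gathering": ["3.1", "3.2", "3.3"],
    "Patient perspective": ["4.1", "4.2", "4.3"],
    "Information sharing": ["5.1", "5.2", "5.3"],
    "Plan negotiation": ["6.1", "6.2", "6.3"],
    "Closure": ["7.1", "7.2", "7.3"],
    "Diabetes specific": ["8.1", "8.2"],
}
ITEMS = [item for items in DOMAINS.values() for item in items]
MIN_RATING, MAX_RATING = 1, 5


def score_encounter(ratings: dict[str, float]) -> tuple[dict[str, float], float]:
    """Return (domain means, total sum of items) for one rated encounter."""
    missing = set(ITEMS) - set(ratings)
    if missing:
        raise ValueError(f"missing items: {sorted(missing)}")
    for item in ITEMS:
        if not MIN_RATING <= ratings[item] <= MAX_RATING:
            raise ValueError(f"item {item} out of range: {ratings[item]}")
    # Domain score = mean of its items; total = plain sum over all 23 items.
    domain_scores = {name: mean(ratings[i] for i in items) for name, items in DOMAINS.items()}
    total = sum(ratings[i] for i in ITEMS)
    return domain_scores, total


# The total range follows from 23 items rated 1-5.
print(len(ITEMS) * MIN_RATING, len(ITEMS) * MAX_RATING)  # 23 115
```

Keeping domains as means and the total as a sum matches the two scales that appear side by side in the tables: domain rows on 1–5 and the total on 23–115.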

| Item | Domain/Skill (Abridged) | Scenario 1 Mean ± SD | Scenario 2 Mean ± SD | p |
|---|---|---|---|---|
| 1 | Relationship | 4.05 ± 0.63 | 4.15 ± 0.67 | 0.36 |
| 1.1 | Eye contact and open posture | 4.15 ± 0.54 | 4.26 ± 0.86 | 0.21 |
| 1.2 | Friendly body language | 4.26 ± 0.55 | 4.26 ± 0.62 | 1.00 |
| 1.3 | Self-introduction and role | 3.85 ± 0.84 | 3.81 ± 0.86 | 0.82 |
| 2 | Opening | 3.71 ± 0.64 | 3.86 ± 0.81 | 0.25 |
| 2.1 | Greeting and ID verification | 3.81 ± 0.71 | 4.35 ± 0.84 | 0.01 |
| 2.2 | States purpose of visit | 3.68 ± 0.78 | 3.93 ± 0.96 | 0.11 |
| 2.3 | Explores patient expectations | 3.63 ± 0.81 | 3.30 ± 1.04 | 0.16 |
| 3 | Information gathering | 3.86 ± 0.60 | 4.01 ± 0.68 | 0.19 |
| 3.1 | Uses open questions | 3.88 ± 0.67 | 3.96 ± 0.00 | 0.56 |
| 3.2 | Listens without interrupting | 4.21 ± 0.31 | 4.43 ± 0.40 | 0.01 |
| 3.3 | Summarizes to confirm | 3.50 ± 1.09 | 3.63 ± 1.12 | 0.08 |
| 4 | Patient perspective | 3.43 ± 0.62 | 3.61 ± 0.54 | 0.31 |
| 4.1 | Elicits beliefs about illness | 3.21 ± 0.78 | 3.15 ± 0.82 | 0.79 |
| 4.2 | Explores worries/concerns | 3.91 ± 0.57 | 3.60 ± 0.53 | 0.11 |
| 4.3 | Assesses daily-life impact | 3.50 ± 0.75 | 3.76 ± 0.52 | 0.15 |
| 5 | Information sharing | 3.43 ± 1.05 | 3.61 ± 1.04 | 0.31 |
| 5.1 | Explains diagnosis in plain language | 3.21 ± 1.09 | 3.15 ± 0.97 | 0.79 |
| 5.2 | Uses visual aids/examples | 3.91 ± 1.16 | 3.60 ± 1.10 | 0.11 |
| 5.3 | Checks understanding (teach-back) | 3.50 ± 1.23 | 3.76 ± 1.16 | 0.15 |
| 6 | Plan negotiation | 3.41 ± 0.95 | 3.55 ± 1.00 | 0.34 |
| 6.1 | Discusses therapeutic options | 3.53 ± 0.97 | 3.70 ± 0.90 | 0.42 |
| 6.2 | Involves patient in decisions | 3.43 ± 1.11 | 3.55 ± 1.16 | 0.37 |
| 6.3 | Negotiates realistic goals | 3.26 ± 1.15 | 3.40 ± 1.10 | 0.47 |
| 7 | Closure | 3.53 ± 1.11 | 3.74 ± 0.99 | 0.14 |
| 7.1 | Provides final summary | 3.30 ± 1.24 | 3.46 ± 0.93 | 0.36 |
| 7.2 | Checks for residual questions | 3.62 ± 0.97 | 3.75 ± 1.02 | 0.41 |
| 7.3 | Closes with empathy and follow-up | 3.68 ± 1.28 | 4.01 ± 1.10 | 0.06 |
| 8 | Diabetes specific | 3.80 ± 0.81 | 4.12 ± 0.79 | 0.03 |
| 8.1 | Explains lab results (HbA1c) | 3.81 ± 0.80 | 4.05 ± 0.71 | 0.12 |
| 8.2 | Provides initial plan and alleviates anxiety | 3.78 ± 0.97 | 4.20 ± 0.74 | 0.02 |
| Total | Overall score (23–115) | 84.46 ± 17.75 | 88.93 ± 17.37 | 0.08 |

| Item | Domain/Skill (Abridged) | Pre-Intervention Mean ± SD | Post-Intervention Mean ± SD | Δ Mean | 95% CI (Δ) | Cohen’s d | p |
|---|---|---|---|---|---|---|---|
| 1 | Relationship | 3.13 ± 0.56 | 4.10 ± 0.65 | 0.97 | 0.74–1.20 | 1.59 | 0.001 |
| 1.1 | Eye contact and open posture | 3.85 ± 0.75 | 4.20 ± 0.60 | 0.35 | 0.09–0.61 | 0.51 | 0.004 |
| 1.2 | Friendly body language | 3.51 ± 0.79 | 4.26 ± 0.58 | 0.75 | 0.49–1.01 | 1.08 | 0.001 |
| 1.3 | Self-introduction and role | 2.03 ± 1.19 | 3.83 ± 0.84 | 1.80 | 1.41–2.19 | 1.74 | 0.001 |
| 2 | Opening | 1.86 ± 0.70 | 3.78 ± 0.73 | 1.92 | 1.65–2.19 | 2.68 | 0.001 |
| 2.1 | Greeting and ID verification | 1.68 ± 0.67 | 4.08 ± 0.83 | 2.40 | 2.12–2.68 | 3.18 | 0.001 |
| 2.2 | States purpose of visit | 2.01 ± 0.87 | 3.80 ± 0.87 | 1.79 | 1.46–2.12 | 2.05 | 0.001 |
| 2.3 | Explores patient expectations | 1.90 ± 0.96 | 3.46 ± 0.94 | 1.56 | 1.20–1.92 | 1.64 | 0.001 |
| 3 | Information gathering | 2.65 ± 0.56 | 3.93 ± 0.64 | 1.28 | 1.05–1.51 | 2.12 | 0.001 |
| 3.1 | Uses open questions | 2.01 ± 0.99 | 3.92 ± 0.68 | 1.91 | 1.59–2.23 | 2.24 | 0.001 |
| 3.2 | Listens without interrupting | 3.95 ± 0.38 | 4.32 ± 0.37 | 0.37 | 0.23–0.51 | 0.98 | 0.001 |
| 3.3 | Summarizes to confirm | 1.98 ± 1.11 | 3.56 ± 1.09 | 1.58 | 1.17–1.99 | 1.43 | 0.001 |
| 4 | Patient perspective | 1.95 ± 0.56 | 3.52 ± 1.04 | 1.57 | 1.25–1.89 | 1.87 | 0.001 |
| 4.1 | Elicits beliefs about illness | 1.75 ± 1.03 | 3.18 ± 1.13 | 1.43 | 1.02–1.84 | 1.32 | 0.001 |
| 4.2 | Explores worries/concerns | 2.05 ± 0.99 | 3.75 ± 1.13 | 1.70 | 1.30–2.10 | 1.60 | 0.001 |
| 4.3 | Assesses daily-life impact | 2.06 ± 1.05 | 3.63 ± 1.18 | 1.57 | 1.15–1.99 | 1.40 | 0.001 |
| 5 | Information sharing | 1.91 ± 0.56 | 3.57 ± 0.92 | 1.66 | 1.37–1.95 | 2.17 | 0.001 |
| 5.1 | Explains diagnosis in plain language | 2.28 ± 1.09 | 3.54 ± 1.05 | 1.26 | 0.86–1.66 | 1.17 | 0.001 |
| 5.2 | Uses visual aids/examples | 1.91 ± 0.99 | 3.06 ± 1.33 | 1.15 | 0.71–1.59 | 0.98 | 0.001 |
| 5.3 | Checks understanding (teach-back) | 1.53 ± 0.92 | 3.65 ± 1.10 | 2.12 | 1.74–2.50 | 2.09 | 0.001 |
| 6 | Plan negotiation | 2.03 ± 0.56 | 3.48 ± 0.97 | 1.45 | 1.15–1.75 | 1.83 | 0.001 |
| 6.1 | Discusses therapeutic options | 2.23 ± 0.92 | 3.61 ± 1.00 | 1.38 | 1.02–1.74 | 1.43 | 0.001 |
| 6.2 | Involves patient in decisions | 2.11 ± 0.82 | 3.49 ± 1.13 | 1.38 | 1.01–1.75 | 1.39 | 0.001 |
| 6.3 | Negotiates realistic goals | 1.75 ± 0.81 | 3.33 ± 1.11 | 1.58 | 1.21–1.95 | 1.62 | 0.001 |
| 7 | Closure | 1.84 ± 0.62 | 3.63 ± 1.05 | 1.79 | 1.46–2.12 | 2.07 | 0.001 |
| 7.1 | Provides final summary | 1.75 ± 0.77 | 3.38 ± 1.24 | 1.63 | 1.24–2.02 | 1.57 | 0.001 |
| 7.2 | Checks for residual questions | 1.70 ± 0.94 | 3.68 ± 0.99 | 1.98 | 1.62–2.34 | 2.05 | 0.001 |
| 7.3 | Closes with empathy and follow-up | 2.08 ± 0.92 | 3.85 ± 1.19 | 1.77 | 1.37–2.17 | 1.66 | 0.001 |
| 8 | DM-specific | 1.90 ± 0.56 | 3.96 ± 0.81 | 2.06 | 1.80–2.32 | 2.95 | 0.001 |
| 8.1 | Explains lab results (HbA1c) | 1.85 ± 0.70 | 3.93 ± 0.88 | 2.08 | 1.78–2.38 | 2.61 | 0.001 |
| 8.2 | Provides initial plan and alleviates anxiety | 1.93 ± 0.73 | 3.99 ± 0.89 | 2.06 | 1.75–2.37 | 2.53 | 0.001 |
| Total | Overall score (23–115) | 49.96 ± 9.72 | 86.70 ± 17.56 | 36.74 | 31.39–42.09 | 2.58 | 0.001 |
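
The Cohen's d column can be cross-checked against the tabulated means and SDs. This section does not state which variant of d was used. The sketch below assumes the "average-variance" form, d = Δ / sqrt((SD_pre² + SD_post²) / 2). For the rows shown, it reproduces the reported values to within 0.01, a gap consistent with rounding of the tabulated inputs. A paired d_z (Δ divided by the SD of individual differences) would need data not reported here. The name `cohens_d_av` is illustrative.

```python
from math import sqrt


def cohens_d_av(delta: float, sd_pre: float, sd_post: float) -> float:
    """Mean change divided by the root mean square of the pre and post SDs."""
    return delta / sqrt((sd_pre**2 + sd_post**2) / 2)


# (row, delta, SD pre, SD post, reported d), copied from the pre-post table.
rows = [
    ("1 Relationship", 0.97, 0.56, 0.65, 1.59),
    ("1.1 Eye contact", 0.35, 0.75, 0.60, 0.51),
    ("2.1 Greeting and ID", 2.40, 0.67, 0.83, 3.18),
    ("Total", 36.74, 9.72, 17.56, 2.58),
]

for name, delta, sd_pre, sd_post, reported in rows:
    d = cohens_d_av(delta, sd_pre, sd_post)
    print(f"{name:<22} computed d = {d:.2f}   reported d = {reported:.2f}")
```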

| Outcome Variable | Cluster A (n = 10) | Cluster B (n = 12) | Cluster C (n = 8) | p |
|---|---|---|---|---|
| Δ-score (total rubric) | 58.7 ± 10.2 | 33.4 ± 11.5 | 16.2 ± 9.3 | <0.001 |
| Relationship (Domain 1) | 1.23 ± 0.40 | 0.68 ± 0.28 | 0.31 ± 0.17 | <0.001 |
| Opening (Domain 2) | 1.79 ± 0.52 | 0.96 ± 0.41 | 0.37 ± 0.25 | <0.001 |
| Information gathering (Domain 3) | 1.42 ± 0.61 | 0.89 ± 0.36 | 0.34 ± 0.30 | <0.001 |
| Patient perspective (Domain 4) | 1.51 ± 0.77 | 0.79 ± 0.45 | 0.21 ± 0.33 | <0.001 |
| Information sharing (Domain 5) | 1.76 ± 0.91 | 1.03 ± 0.59 | 0.45 ± 0.38 | <0.001 |
| Plan negotiation (Domain 6) | 1.55 ± 0.87 | 0.92 ± 0.44 | 0.33 ± 0.28 | <0.001 |
| Closure (Domain 7) | 1.62 ± 0.89 | 0.87 ± 0.53 | 0.41 ± 0.26 | <0.001 |
| DM-specific (Domain 8) | 2.04 ± 0.80 | 1.07 ± 0.62 | 0.54 ± 0.41 | <0.001 |
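
The cluster table reports only n, mean, and SD per cluster. This section does not name the test behind the cluster p-values. Assuming it was a one-way ANOVA on the total Δ-score, the F statistic can be recovered from these summaries, together with the cluster-size-weighted average (WA) Δ-score. A rank-based test such as Kruskal-Wallis would instead need the raw scores. The function names are hypothetical. The matching p-value would come from the F distribution with (2, 27) degrees of freedom, for example via `scipy.stats.f.sf`.

```python
# Cluster summaries for the total Δ-score as (n, mean, SD), copied from the table.
clusters = {
    "A": (10, 58.7, 10.2),
    "B": (12, 33.4, 11.5),
    "C": (8, 16.2, 9.3),
}


def weighted_mean(groups: list[tuple[int, float, float]]) -> float:
    """Cluster-size-weighted mean of the group means."""
    n_total = sum(n for n, _, _ in groups)
    return sum(n * m for n, m, _ in groups) / n_total


def anova_f(groups: list[tuple[int, float, float]]) -> tuple[float, tuple[int, int]]:
    """One-way ANOVA F statistic and (df_between, df_within) from summaries."""
    k = len(groups)
    n_total = sum(n for n, _, _ in groups)
    grand = weighted_mean(groups)
    # Between-group sum of squares: spread of cluster means around the grand mean.
    ss_between = sum(n * (m - grand) ** 2 for n, m, _ in groups)
    # Within-group sum of squares rebuilt from each cluster's sample SD.
    ss_within = sum((n - 1) * sd**2 for n, _, sd in groups)
    ms_between = ss_between / (k - 1)
    ms_within = ss_within / (n_total - k)
    return ms_between / ms_within, (k - 1, n_total - k)


groups = list(clusters.values())
f_stat, dfs = anova_f(groups)
print(f"weighted mean delta = {weighted_mean(groups):.2f}")
print(f"F{dfs} = {f_stat:.1f}")
```

With the tabulated values, this gives a weighted mean Δ-score of about 37.25 and F(2, 27) of about 37.5.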

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Published by MDPI on behalf of the University Association of Education and Psychology. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Suárez-García, R.X.; Chavez-Castañeda, Q.; Orrico-Pérez, R.; Valencia-Marin, S.; Castañeda-Ramírez, A.E.; Quiñones-Lara, E.; Ramos-Cortés, C.A.; Gaytán-Gómez, A.M.; Cortés-Rodríguez, J.; Jarquín-Ramírez, J.; et al. DIALOGUE: A Generative AI-Based Pre–Post Simulation Study to Enhance Diagnostic Communication in Medical Students Through Virtual Type 2 Diabetes Scenarios. Eur. J. Investig. Health Psychol. Educ. 2025, 15, 152. https://doi.org/10.3390/ejihpe15080152

Suárez-García RX, Chavez-Castañeda Q, Orrico-Pérez R, Valencia-Marin S, Castañeda-Ramírez AE, Quiñones-Lara E, Ramos-Cortés CA, Gaytán-Gómez AM, Cortés-Rodríguez J, Jarquín-Ramírez J, et al. DIALOGUE: A Generative AI-Based Pre–Post Simulation Study to Enhance Diagnostic Communication in Medical Students Through Virtual Type 2 Diabetes Scenarios. European Journal of Investigation in Health, Psychology and Education. 2025; 15(8):152. https://doi.org/10.3390/ejihpe15080152

Chicago/Turabian Style: Suárez-García, Ricardo Xopan, Quetzal Chavez-Castañeda, Rodrigo Orrico-Pérez, Sebastián Valencia-Marin, Ari Evelyn Castañeda-Ramírez, Efrén Quiñones-Lara, Claudio Adrián Ramos-Cortés, Areli Marlene Gaytán-Gómez, Jonathan Cortés-Rodríguez, Jazel Jarquín-Ramírez, et al. 2025. "DIALOGUE: A Generative AI-Based Pre–Post Simulation Study to Enhance Diagnostic Communication in Medical Students Through Virtual Type 2 Diabetes Scenarios." European Journal of Investigation in Health, Psychology and Education 15, no. 8: 152. https://doi.org/10.3390/ejihpe15080152

APA Style: Suárez-García, R. X., Chavez-Castañeda, Q., Orrico-Pérez, R., Valencia-Marin, S., Castañeda-Ramírez, A. E., Quiñones-Lara, E., Ramos-Cortés, C. A., Gaytán-Gómez, A. M., Cortés-Rodríguez, J., Jarquín-Ramírez, J., Aguilar-Marchand, N. G., Valdés-Hernández, G., Campos-Martínez, T. E., Vilches-Flores, A., Leon-Cabrera, S., Méndez-Cruz, A. R., Jay-Jímenez, B. O., & Saldívar-Cerón, H. I. (2025). DIALOGUE: A Generative AI-Based Pre–Post Simulation Study to Enhance Diagnostic Communication in Medical Students Through Virtual Type 2 Diabetes Scenarios. European Journal of Investigation in Health, Psychology and Education, 15(8), 152. https://doi.org/10.3390/ejihpe15080152