Cross-Line Transfer of Initial Juice Sugar Content Prediction Model for Sugarcane Milling

Abstract

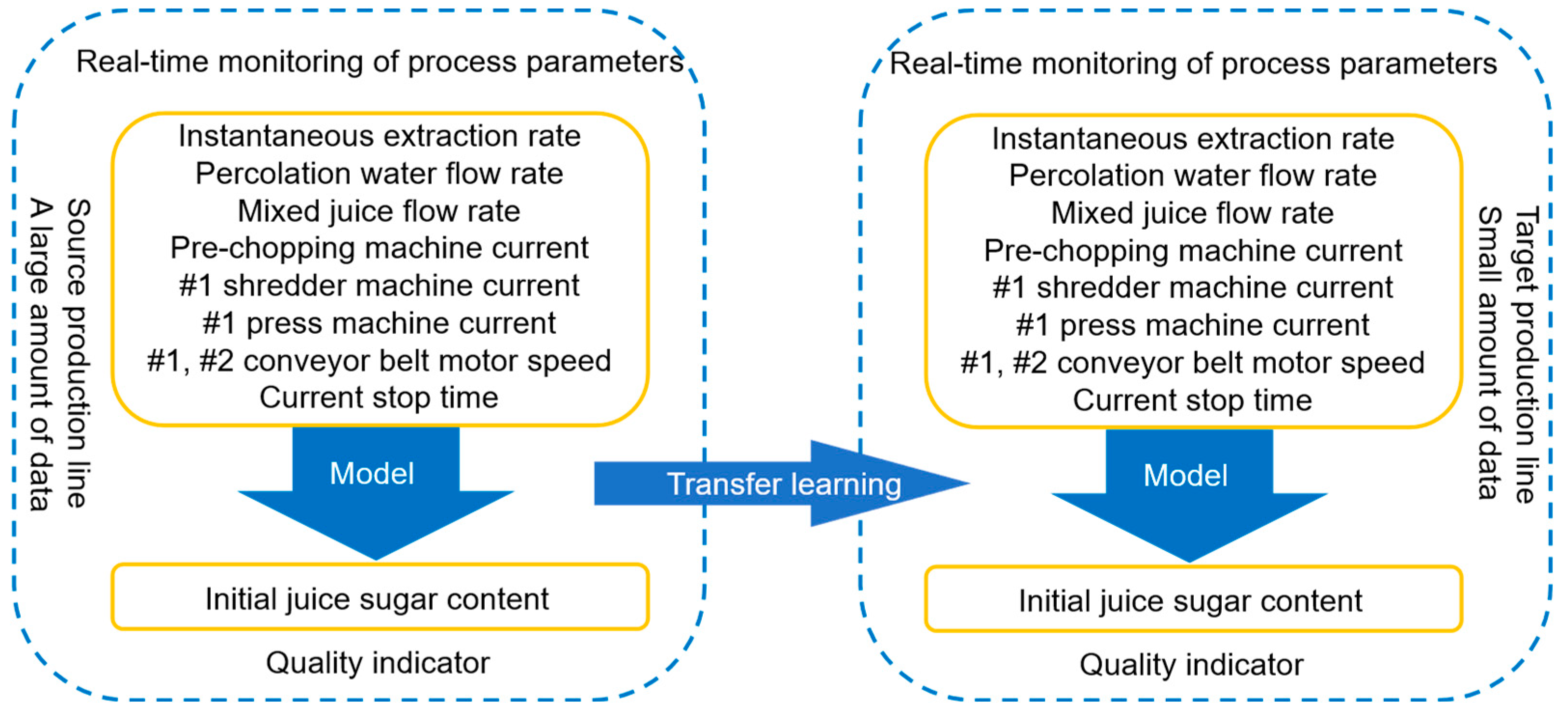

1. Introduction

- 1. Pre-training and Fine-tuning Strategy: We employ a transfer learning strategy in which a model is first pre-trained on a source production line with abundant data and then fine-tuned using a small amount of data from the target production line. This approach mitigates the performance degradation caused by domain shift.

- 2. Integration of Adversarial Semi-Supervised Learning: We incorporate adversarial semi-supervised learning during both the pre-training and fine-tuning stages. This allows the model to exploit the large amount of unlabeled data available in both domains, thereby improving its generalization capability.

- 3. Experimental Validation: We conduct experiments using real-world data from two sugarcane milling production lines. The results validate the predictive effectiveness of the proposed method, and ablation studies confirm the individual contribution of each key component.

2. Data and Methods

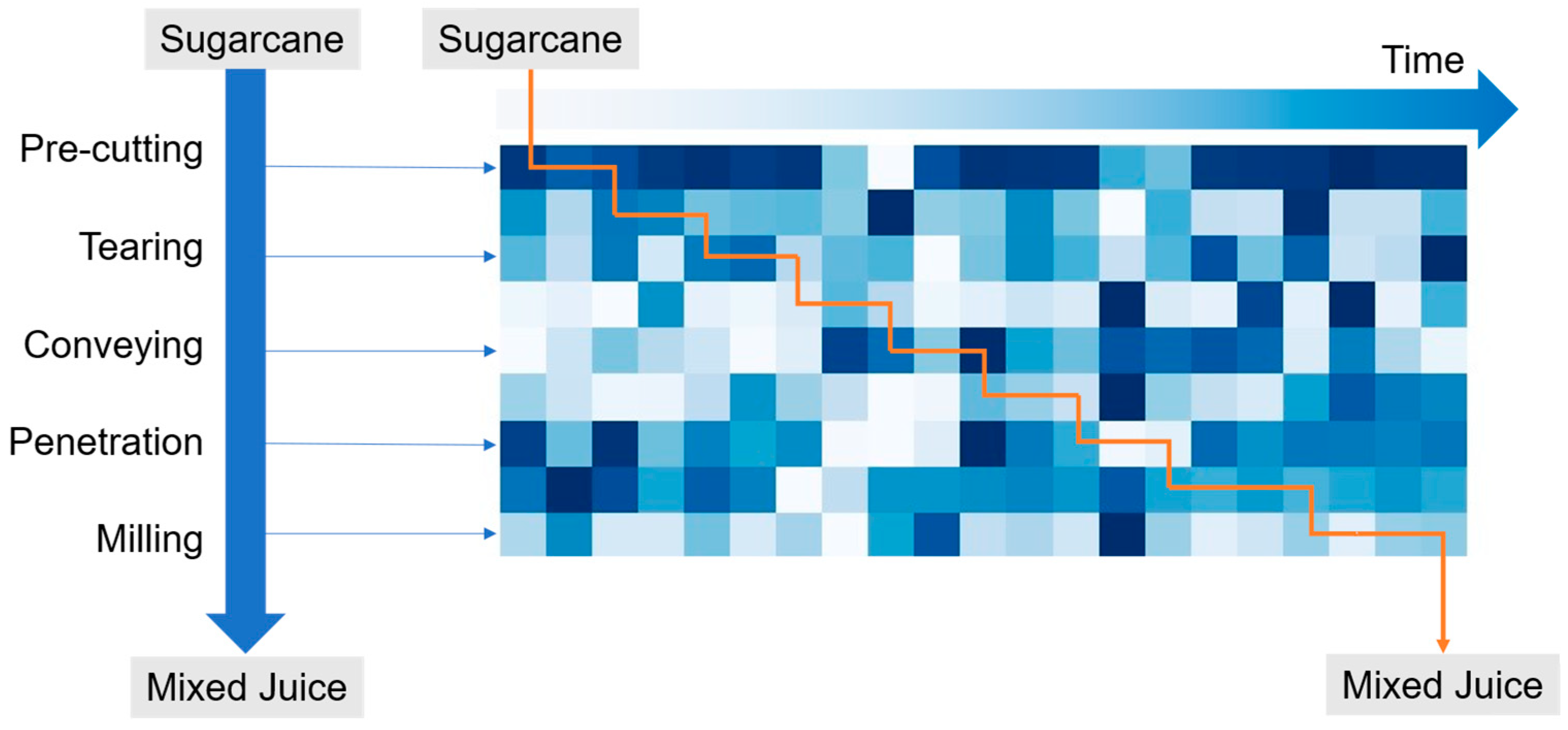

2.1. Sugarcane Milling Data

2.2. Problem Definition

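- 1. The source production line data is abundant, while the target production line data is scarce, that is, $N_s^l \gg N_t^l$ and $N_s^u \gg N_t^u$, where $N^l$ and $N^u$ denote the numbers of labeled and unlabeled samples and the subscripts $s$ and $t$ denote the source and target production lines.

- 2. There is an abundance of unlabeled data, while labeled data is scarce, i.e., $N_s^u \gg N_s^l$ and $N_t^u \gg N_t^l$.

- 3. Domain Adaptation Problem: The feature space and target space are identical, i.e., $\mathcal{X}_s = \mathcal{X}_t$ and $\mathcal{Y}_s = \mathcal{Y}_t$. However, the joint probability distributions differ, as $P_s(X, Y) \neq P_t(X, Y)$.

- 4. Covariate Shift, specifically manifested as $P_s(X) \neq P_t(X)$.

- 5. The conditional probability distributions are similar, i.e., $P_s(Y \mid X) \approx P_t(Y \mid X)$.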
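The covariate-shift setting can be illustrated with a minimal synthetic sketch. Everything in it is a hypothetical assumption chosen for illustration (the quadratic relationship, the two input ranges, and the helper names `fit_line` and `mse`); it is not the mill data or the model used in this work. Both "lines" share the same input-output relationship, but their inputs cover different ranges, so a model fitted on the source inputs degrades on the target inputs.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    var = sum((x - mx) ** 2 for x in xs)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = cov / var
    return slope, my - slope * mx


def mse(xs, ys, slope, intercept):
    """Mean squared error of the fitted line on (xs, ys)."""
    return sum((slope * x + intercept - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def shared_relationship(x):
    # Same P(Y|X) on both lines (assumed quadratic, purely for illustration).
    return x ** 2


source_x = [i / 10 for i in range(11)]        # source inputs in [0, 1]
target_x = [1 + i / 10 for i in range(11)]    # target inputs in [1, 2]: P(X) differs
source_y = [shared_relationship(x) for x in source_x]
target_y = [shared_relationship(x) for x in target_x]

# Fit on the source line only, then evaluate on both lines.
slope, intercept = fit_line(source_x, source_y)
source_error = mse(source_x, source_y, slope, intercept)
target_error = mse(target_x, target_y, slope, intercept)
print(f"source MSE = {source_error:.4f}, target MSE = {target_error:.4f}")
```

The target error is much larger than the source error even though the underlying relationship is unchanged, which is the degradation that transfer to the target line is meant to address.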

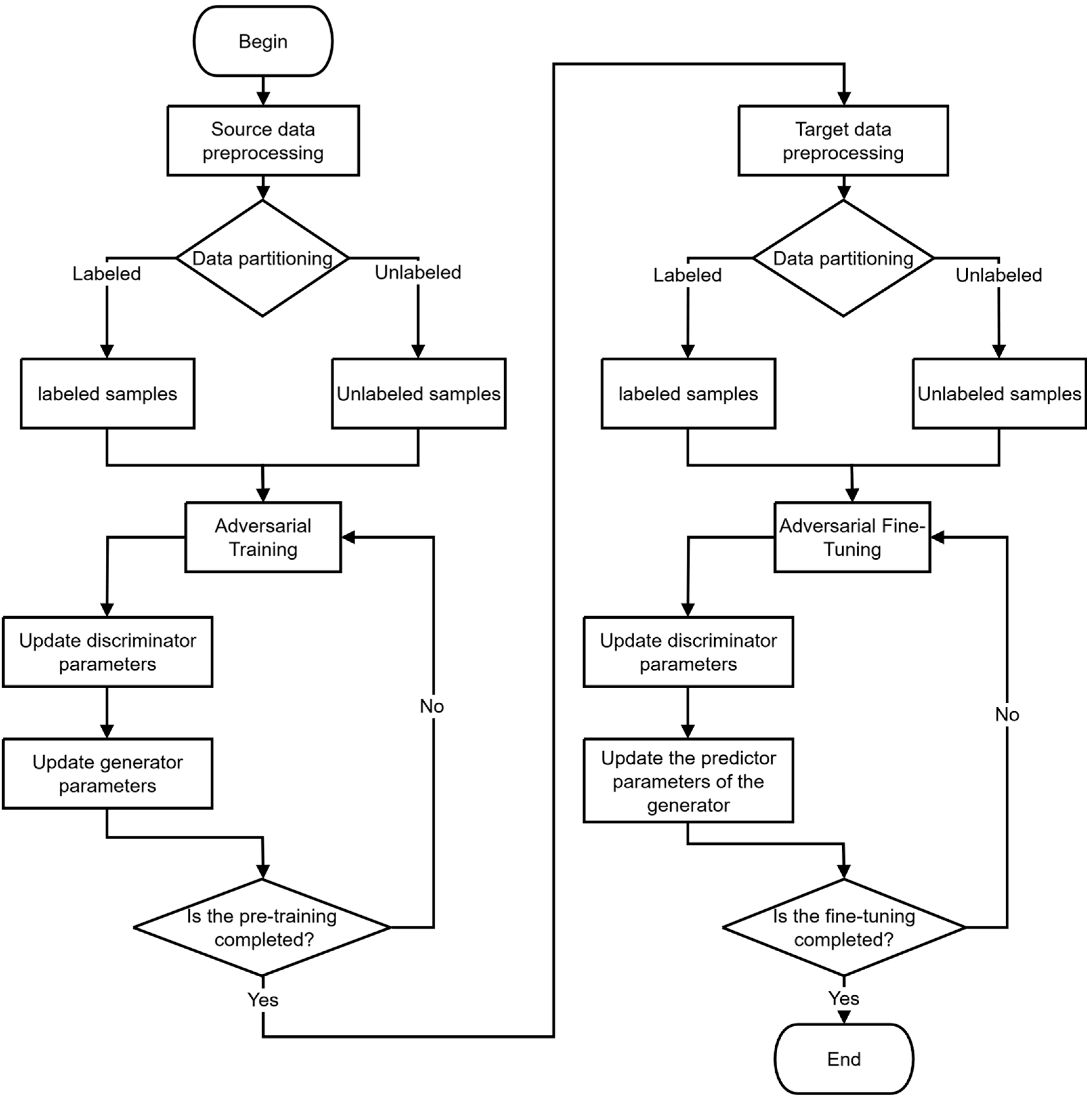

2.3. Pre-Training and Fine-Tuning

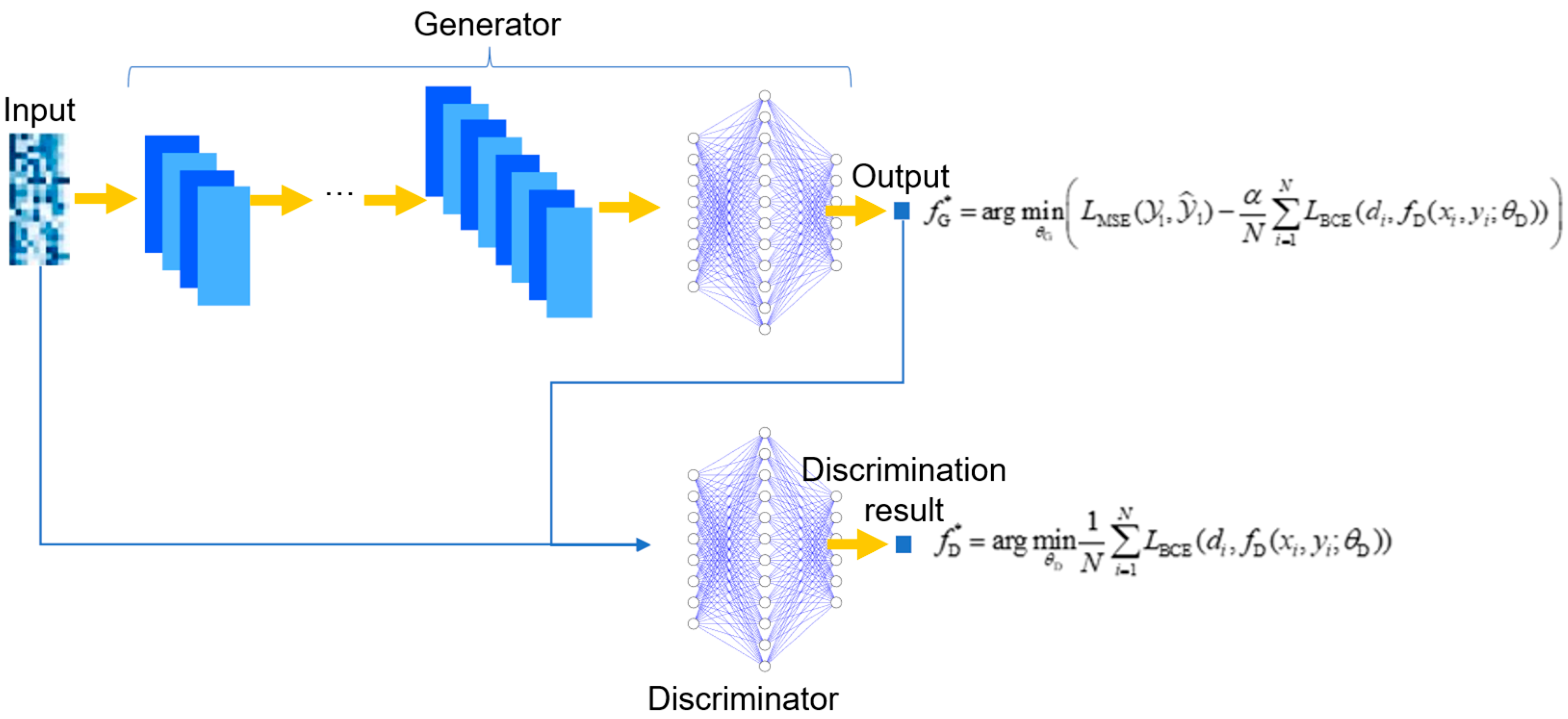
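As a rough illustration of the two-stage idea, the sketch below pre-trains a one-feature linear model on a data-rich source line and then fine-tunes it on three labeled samples from a target line. All data, the linear model, the learning rates, and the helper names `train_linear` and `mse` are hypothetical assumptions for this toy example; the network and settings actually used in this work are not reproduced here.

```python
def train_linear(xs, ys, w=0.0, b=0.0, lr=0.1, epochs=500):
    """Gradient descent on the MSE of y_hat = w * x + b, starting from (w, b)."""
    n = len(xs)
    for _ in range(epochs):
        gw = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        gb = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w, b = w - lr * gw, b - lr * gb
    return w, b


def mse(xs, ys, w, b):
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Hypothetical source line: plenty of labeled samples.
source_x = [i / 50 for i in range(51)]
source_y = [2.0 * x + 1.0 for x in source_x]

# Hypothetical target line: similar relationship with a shifted offset, few labels.
target_x_few = [0.2, 0.5, 0.8]
target_y_few = [2.0 * x + 1.5 for x in target_x_few]
target_x_test = [i / 20 for i in range(21)]
target_y_test = [2.0 * x + 1.5 for x in target_x_test]

# Stage 1: pre-train on the source line.
w_pre, b_pre = train_linear(source_x, source_y)

# Stage 2: fine-tune on the few target labels, starting from the pre-trained
# weights, with a smaller learning rate and fewer epochs.
w_ft, b_ft = train_linear(target_x_few, target_y_few,
                          w=w_pre, b=b_pre, lr=0.05, epochs=100)

error_pretrained = mse(target_x_test, target_y_test, w_pre, b_pre)
error_finetuned = mse(target_x_test, target_y_test, w_ft, b_ft)
print(f"target MSE before fine-tuning: {error_pretrained:.4f}, after: {error_finetuned:.4f}")
```

Starting the second stage from the pre-trained weights, rather than from scratch, is what lets a handful of target labels suffice in this toy case.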

2.4. Adversarial-Based Semi-Supervised Learning

- (1) Input the labeled data $X^l$ and the unlabeled data $X^u$ into the generator $G$ to obtain the predictions $\hat{Y}^l$ for the labeled data and the pseudo-labels $\hat{Y}^u$ for the unlabeled data.

- (2) Input the labeled data $X^l$ with their ground-truth labels $Y^l$, and the unlabeled data $X^u$ with their pseudo-labels $\hat{Y}^u$ (generated by the generator), into the discriminator $D$. The discriminator determines whether the label ($Y^l$ or $\hat{Y}^u$) paired with the input data ($X^l$ or $X^u$) is real (ground-truth) or pseudo.

- (3) Optimize the discriminator using the following objective function so that it determines as accurately as possible whether a sample is a labeled sample:

  $$\min_{\theta_D} \frac{1}{N} \sum_{i=1}^{N} \mathcal{L}_{\mathrm{BCE}}(z_i, p_i)$$

  where $\theta_D$ represents the parameters of the discriminator, $N$ represents the total number of samples, and $z_i$ is a binary indicator variable: $z_i = 1$ if the label for sample $i$ is a ground-truth measurement, and $z_i = 0$ if it is a pseudo-label. $p_i = D(x_i, y_i; \theta_D)$ is the discriminator's estimated probability that the label for input $x_i$ is real. $\mathcal{L}_{\mathrm{BCE}}$ represents the binary cross-entropy loss function, which measures the difference between the discriminator's judgment and the actual situation:

  $$\mathcal{L}_{\mathrm{BCE}}(z_i, p_i) = -\left[ z_i \log p_i + (1 - z_i) \log (1 - p_i) \right]$$

- (4) Optimize the generator using the following objective function, so that the generator predicts the labeled data accurately while also deceiving the discriminator as much as possible, generating more "realistic" pseudo-labels for the unlabeled data and thereby improving its generalization ability:

  $$\min_{\theta_G} \; \mathcal{L}_{\mathrm{pred}}\big(Y^l, \hat{Y}^l(\theta_G)\big) + \lambda \, \mathcal{L}_{\mathrm{adv}}(\theta_G)$$

  where $\theta_G$ represents the parameters of the generator, and $\lambda$ is the adversarial weight coefficient, a key hyperparameter that balances the contributions of the supervised loss and the adversarial loss and thus governs the update focus of the generator. $\hat{Y}^l$ is the predicted value of the labeled data, which is a function of the generator parameters, that is, $\hat{Y}^l = G(X^l; \theta_G)$. $\mathcal{L}_{\mathrm{adv}}$ is the adversarial loss on the unlabeled data, which is small when the discriminator judges the pseudo-labels to be real. $\mathcal{L}_{\mathrm{pred}}$ represents the prediction loss of the labeled data, which in regression problems is generally the mean squared error over the $N_l$ labeled samples:

  $$\mathcal{L}_{\mathrm{pred}} = \frac{1}{N_l} \sum_{i=1}^{N_l} \left( y_i - \hat{y}_i \right)^2$$

- (5) By alternately optimizing steps (3) and (4), the generator and discriminator are balanced, ultimately resulting in a well-trained generator model that can be used for prediction tasks. The specific implementation process for adversarial semi-supervised learning is detailed in Algorithm 1.
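The two objectives in steps (3) and (4) can be written down as plain functions. This is a minimal sketch, not the implementation used in this work: the function names, the clipping constant `EPS`, and in particular the exact form of the generator's adversarial term (binary cross-entropy of the pseudo-labeled pairs against the "real" target) are assumptions made for illustration.

```python
import math

EPS = 1e-12  # keeps log() finite when a probability is exactly 0 or 1


def bce(z, p):
    """Binary cross-entropy between indicator z (1 = real label, 0 = pseudo-label)
    and the discriminator's probability p that the label is real."""
    p = min(max(p, EPS), 1.0 - EPS)
    return -(z * math.log(p) + (1 - z) * math.log(1 - p))


def discriminator_loss(p_labeled, p_unlabeled):
    """Average BCE over all samples: labeled pairs have z = 1, pseudo-labeled z = 0."""
    terms = [bce(1, p) for p in p_labeled] + [bce(0, p) for p in p_unlabeled]
    return sum(terms) / len(terms)


def mse(y_true, y_pred):
    """Mean squared error on the labeled data."""
    return sum((y - yh) ** 2 for y, yh in zip(y_true, y_pred)) / len(y_true)


def generator_loss(y_true, y_pred, p_unlabeled, lam):
    """Supervised MSE plus lam times an adversarial term.

    Assumption: the adversarial term is the BCE of the pseudo-labeled pairs
    against the 'real' target (z = 1), so it shrinks as the discriminator
    is fooled.
    """
    adversarial = sum(bce(1, p) for p in p_unlabeled) / len(p_unlabeled)
    return mse(y_true, y_pred) + lam * adversarial


# A confident, correct discriminator has a low loss ...
print(discriminator_loss(p_labeled=[0.9], p_unlabeled=[0.1]))
# ... and the generator's loss falls as its pseudo-labels look more "real".
print(generator_loss([1.0], [1.0], p_unlabeled=[0.1], lam=1.0))
print(generator_loss([1.0], [1.0], p_unlabeled=[0.9], lam=1.0))
```

With perfect supervised predictions, the generator loss reduces to the weighted adversarial term, which makes the role of the weight coefficient easy to see.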

Algorithm 1. Adversarial-based semi-supervised learning

Input:

- $X^l$: labeled feature set
- $Y^l$: labeled label set
- $X^u$: unlabeled feature set
- $\lambda$: generator loss weight
- $T$: number of iterations
- $k_D$: discriminator update steps per round
- $k_G$: generator update steps per round

Output:

- $\theta_G$: final generator parameters

Begin

- Initialize $\theta_G$ and $\theta_D$
- For iteration = 1 to $T$:
  - Generator prediction: generate predictions $\hat{Y}^l$ and $\hat{Y}^u$ for labeled and unlabeled data
  - For step = 1 to $k_D$:
    - Compute and accumulate discriminator loss on labeled and unlabeled data
  - For step = 1 to $k_G$:
    - Compute and accumulate generator loss on labeled data
    - Compute and accumulate generator adversarial loss on unlabeled data

End
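The alternating loop of Algorithm 1 can be sketched end to end on toy data. To stay dependency-free, the sketch assumes a one-feature linear generator and a logistic discriminator with hand-written gradients; these model choices, the default hyperparameters, the toy data, and the function name `train_adversarial_ssl` are all hypothetical and much simpler than the networks used in this work.

```python
import math


def sigmoid(s):
    return 1.0 / (1.0 + math.exp(-s))


def train_adversarial_ssl(x_l, y_l, x_u, lam=0.1, iterations=300,
                          k_d=1, k_g=1, lr_g=0.05, lr_d=0.05):
    """Alternate discriminator and generator updates; return generator (w, b)."""
    w, b = 0.0, 0.0           # generator G(x) = w * x + b
    a, c, d = 0.0, 0.0, 0.0   # discriminator D(x, y) = sigmoid(a*x + c*y + d)
    for _ in range(iterations):
        for _ in range(k_d):
            # Real pairs get z = 1; pairs with generator pseudo-labels get z = 0.
            pairs = [(x, y, 1.0) for x, y in zip(x_l, y_l)]
            pairs += [(x, w * x + b, 0.0) for x in x_u]
            ga = gc = gd = 0.0
            for x, y, z in pairs:
                err = sigmoid(a * x + c * y + d) - z  # d(BCE)/d(logit)
                ga += err * x
                gc += err * y
                gd += err
            n = len(pairs)
            a, c, d = a - lr_d * ga / n, c - lr_d * gc / n, d - lr_d * gd / n
        for _ in range(k_g):
            gw = gb = 0.0
            # Supervised part: gradient of the MSE on labeled data.
            for x, y in zip(x_l, y_l):
                r = (w * x + b) - y
                gw += 2 * r * x / len(x_l)
                gb += 2 * r / len(x_l)
            # Adversarial part (assumed form): gradient of
            # lam * BCE(1, D(x, G(x))) averaged over the unlabeled data.
            for x in x_u:
                p = sigmoid(a * x + c * (w * x + b) + d)
                g = lam * (p - 1.0) * c / len(x_u)
                gw += g * x
                gb += g
            w, b = w - lr_g * gw, b - lr_g * gb
    return w, b


def mse(xs, ys, w, b):
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Hypothetical toy data: 5 labeled and 21 unlabeled points on y = 2x + 1.
x_l = [0.0, 0.25, 0.5, 0.75, 1.0]
y_l = [2 * x + 1 for x in x_l]
x_u = [i / 20 for i in range(21)]

w, b = train_adversarial_ssl(x_l, y_l, x_u)
print(f"labeled MSE before: {mse(x_l, y_l, 0.0, 0.0):.3f}, after: {mse(x_l, y_l, w, b):.3f}")
```

The weight `lam` plays the role of the generator loss weight in Algorithm 1. The value used here is only suited to this toy problem and is unrelated to the coefficients reported in the hyperparameter table.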

2.5. Adversarial Semi-Supervised Pre-Training Fine-Tuning Strategy

3. Results and Discussion

3.1. Data Preprocessing

3.1.1. Data Cleaning

3.1.2. Data Normalization

3.1.3. Constructing Coarse-Grained Data

3.1.4. Data Distribution Adaptation

- (1) During data processing, the weighted average of every $m$ adjacent data points is taken, with higher weights assigned to closer time points and lower weights to more distant ones. This method reduces the time delay. The weighted average is

  $$\bar{x}_t = \frac{\sum_i w_i x_i}{\sum_i w_i}$$

  where the sums run over the $m$ data points adjacent to position $t$, $x_i$ represents the value of the $i$th data point in the sequence, $t$ is the position of the data point currently under consideration, and $w_i$ is the weight of the $i$th data point, which decreases as the $i$th point lies further from $t$. In this paper, the two parameters of the weighted moving average are set to 2 and 10.

- (2) The residual data, obtained by subtracting the weighted moving average of the data from the initial data, are used as the training data for the model.

- (3) When predicting future test data, the weighted moving average of the previous data is used as the mean for the next data point, so that the model focuses on learning the irregular fluctuations in the data.
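The three steps above can be sketched as follows. The weight formula is not reproduced here, so the sketch assumes linearly increasing weights 1, 2, ..., m over a trailing window that includes the current point; both choices, along with the function names and the example series, are hypothetical stand-ins rather than the scheme used in this work.

```python
def weighted_moving_average(history, m, weights=None):
    """Weighted average of the last m points of `history` (ordered oldest to newest).

    Assumption: linearly increasing weights 1, 2, ..., m, so that closer
    (more recent) points count more.
    """
    window = history[-m:]
    if weights is None:
        weights = [float(k) for k in range(1, m + 1)]
    w = weights[-len(window):]  # shorter window at the start of the series
    return sum(wi * xi for wi, xi in zip(w, window)) / sum(w)


def to_residuals(series, m):
    """Step (2): subtract the trailing weighted moving average from each point."""
    return [x - weighted_moving_average(series[: t + 1], m)
            for t, x in enumerate(series)]


def restore_prediction(predicted_residual, previous_values, m):
    """Step (3): add the weighted moving average of the previous data back
    to a residual predicted by the model."""
    return predicted_residual + weighted_moving_average(previous_values, m)


series = [10.0, 10.0, 10.0, 13.0]
print(to_residuals(series, m=2))              # -> [0.0, 0.0, 0.0, 1.0]
print(restore_prediction(0.5, series, m=2))   # -> 12.5
```

A flat stretch of data yields zero residuals, so the model only has to learn the deviation from the slowly moving level, and the level is added back at prediction time.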

3.2. Model Structure and Hyperparameter Settings

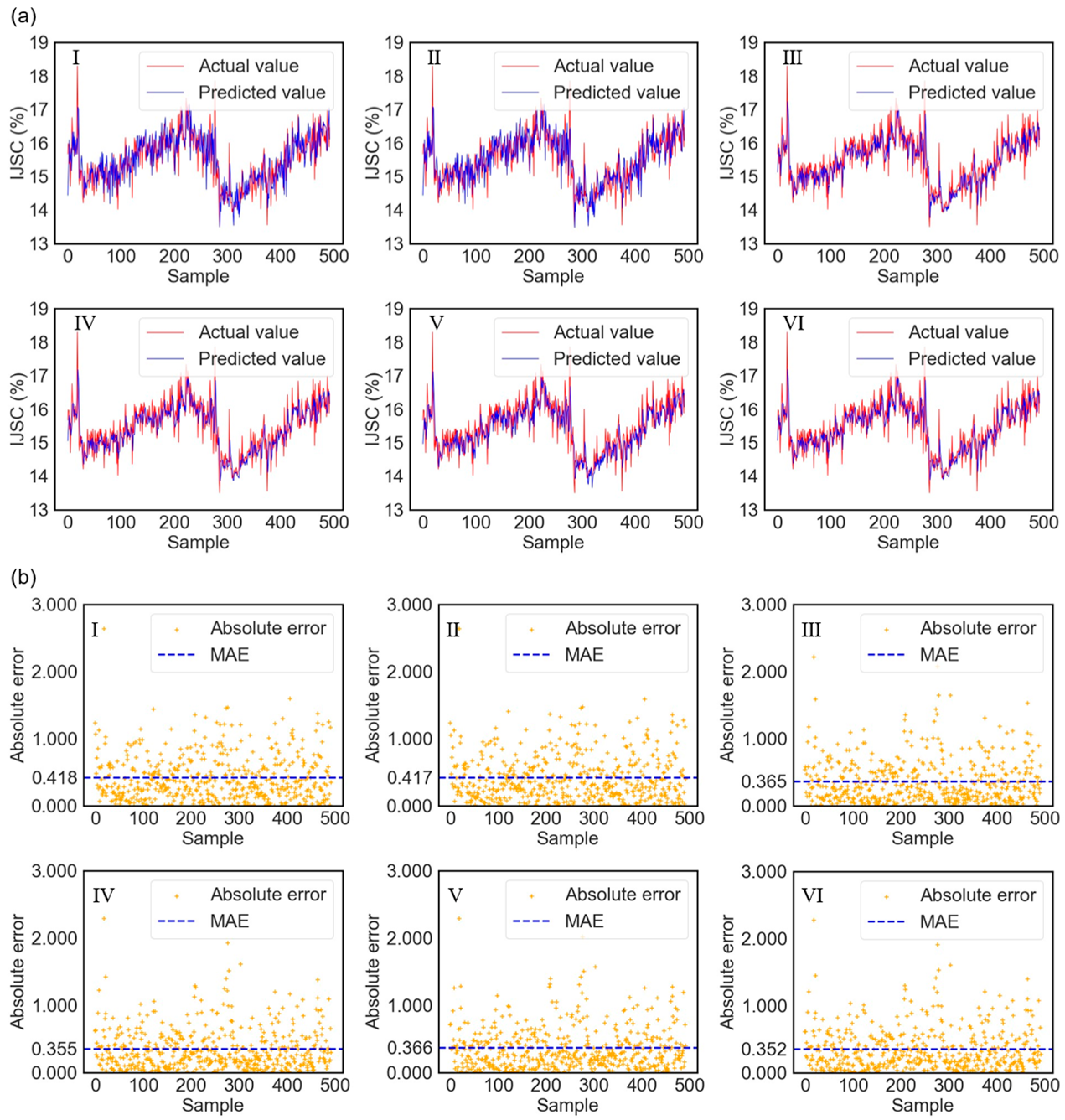

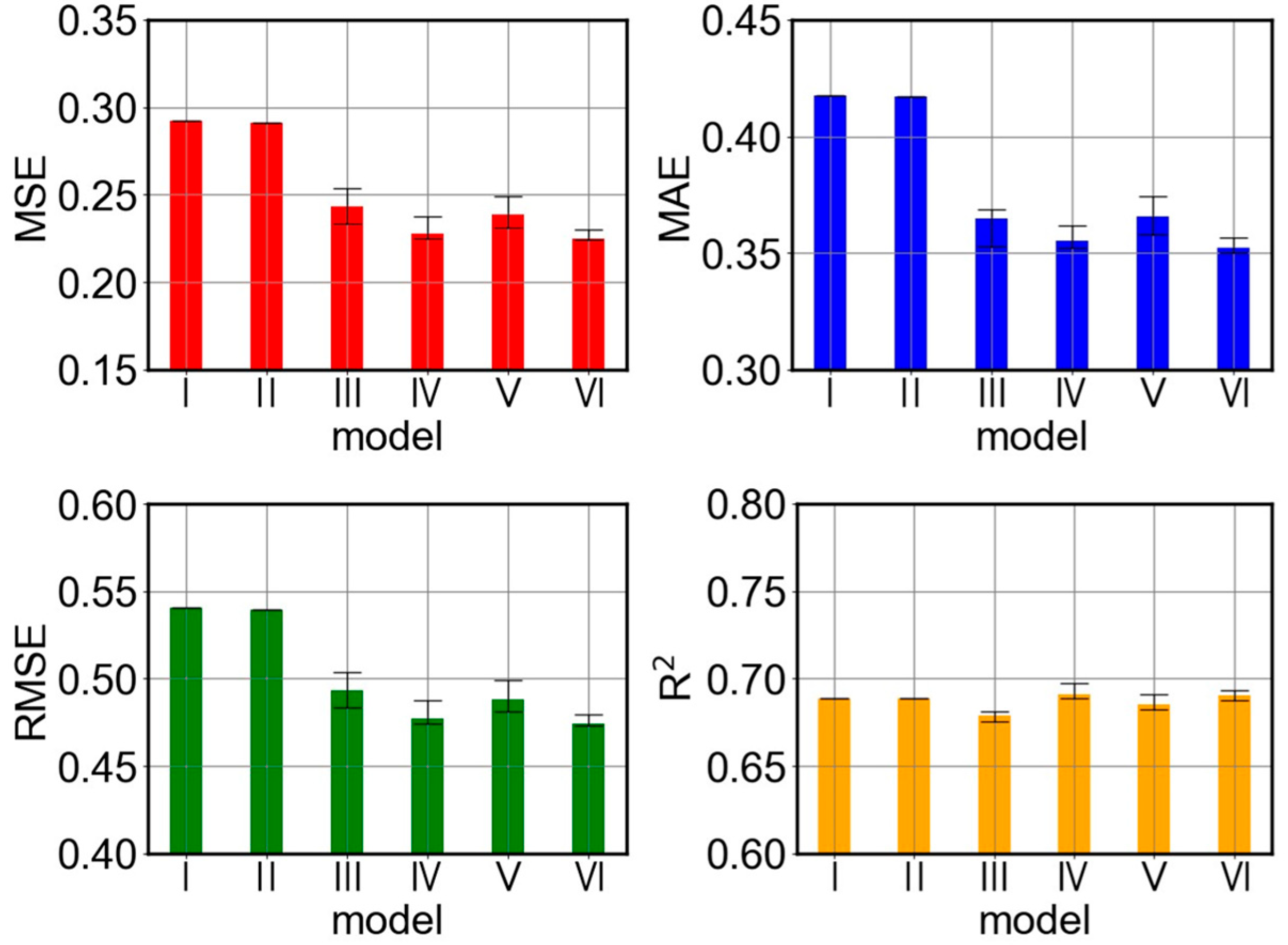

3.3. Prediction Results and Analysis

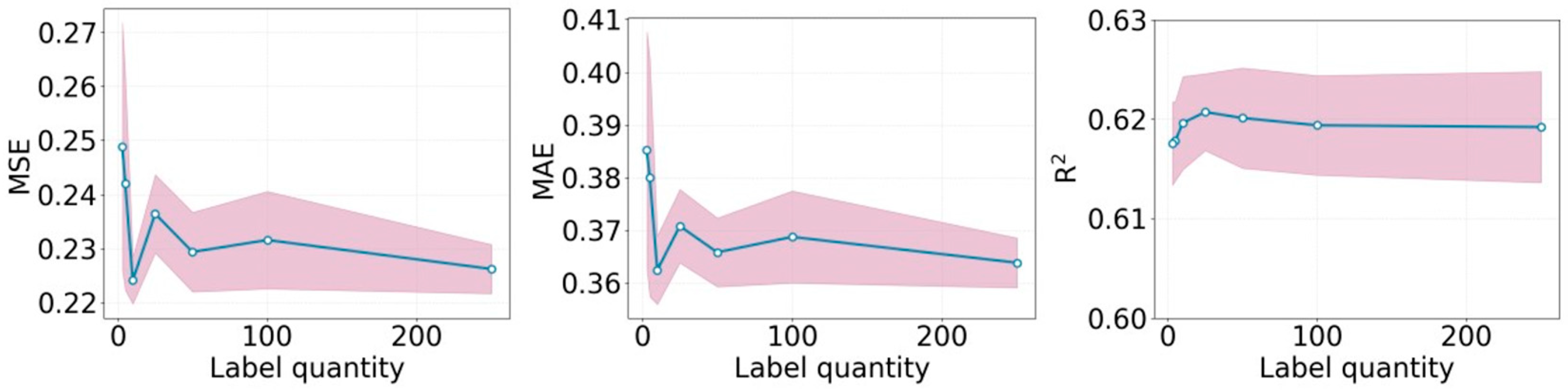

3.4. Effect of Label Quantity on Model Performance

4. Conclusions

- (1) The number and types of process parameters vary across production lines, so it is important to study how data-driven models can be transferred between production lines with different parameters.

- (2) More environmental data, sugarcane condition data, and mechanical assembly data should be collected to predict the quality indicators of sugarcane milling more accurately.

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Variable Name | Unit | Sampling Interval |
|---|---|---|
| Instantaneous Milling Volume | t/min | 1 min |
| Percolating Water Flow Volume | m³/h | 1 min |
| Mixed Juice Flow Volume | m³/h | 1 min |
| Pre-cutter Current | A | 1 min |
| #1 Crusher Current | A | 1 min |
| #1 Squeezer Current | A | 1 min |
| #1 Conveyor Belt Motor Speed | RPM | 1 min |
| #2 Conveyor Belt Motor Speed | RPM | 1 min |
| Current Stop Time | s | 1 min |
| Initial Juice Sugar Content | % | 3 times/day |

| Hyperparameters | Pre-Training | Fine-Tuning |
|---|---|---|
| Epochs | 174 | 136 |
| Generator learning rate | 0.001 | 0.0005 |
| Generator momentum | 0.9 | 0 |
| Discriminator learning rate | 0.0001 | 0.01 |
| Batch size of labeled data | 500 | 50 |
| Batch size of unlabeled data | 200 | 200 |
| Coefficient of the generator discrimination loss | 50 | 100 |

| Model | MSE | RMSE | MAE | R² |
|---|---|---|---|---|
| Standard pre-trained model | 0.292 | 0.541 | 0.418 | 0.689 |
| Adversarial semi-supervised pre-trained model | 0.291 | 0.539 | 0.417 | 0.689 |
| Adversarial semi-supervised fine-tuned model | 0.243 ± 0.00862 | 0.493 ± 0.00875 | 0.365 ± 0.00695 | 0.679 ± 0.00179 |
| Fine-tuning without semi-supervised strategy | 0.228 ± 0.00521 | 0.477 ± 0.00540 | 0.355 ± 0.00410 | 0.691 ± 0.00316 |
| Pre-training without semi-supervised strategy | 0.238 ± 0.00670 | 0.488 ± 0.00693 | 0.366 ± 0.00563 | 0.685 ± 0.00360 |
| Complete adversarial semi-supervised pre-trained and fine-tuned model | 0.225 ± 0.00201 | 0.474 ± 0.00209 | 0.352 ± 0.00177 | 0.690 ± 0.00181 |
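For reference, the four metrics reported in the table above can be computed as in the following minimal sketch. The function name `regression_metrics` and the four-point example are hypothetical. The final lines only check that the reported RMSE means are consistent with the square roots of the reported MSE means, up to rounding.

```python
import math


def regression_metrics(y_true, y_pred):
    """Return MSE, RMSE, MAE and R^2 for two equal-length sequences."""
    n = len(y_true)
    errors = [yt - yp for yt, yp in zip(y_true, y_pred)]
    mse = sum(e * e for e in errors) / n
    mae = sum(abs(e) for e in errors) / n
    mean_true = sum(y_true) / n
    ss_tot = sum((yt - mean_true) ** 2 for yt in y_true)
    r2 = 1.0 - (mse * n) / ss_tot  # 1 - SS_res / SS_tot
    return {"MSE": mse, "RMSE": math.sqrt(mse), "MAE": mae, "R2": r2}


# Hypothetical values, only to exercise the function.
metrics = regression_metrics([12.0, 13.0, 14.0, 15.0], [12.5, 13.0, 13.5, 15.0])
print(metrics)

# Consistency check on the (MSE, RMSE) means reported in the table above.
reported = [(0.292, 0.541), (0.291, 0.539), (0.243, 0.493),
            (0.228, 0.477), (0.238, 0.488), (0.225, 0.474)]
max_gap = max(abs(math.sqrt(m) - r) for m, r in reported)
print(f"largest |sqrt(MSE) - RMSE| = {max_gap:.4f}")
```

For rows reported as mean ± standard deviation over repeated runs, the mean RMSE need not equal the square root of the mean MSE exactly, so a small gap is expected.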

| Model Subjected to Wilcoxon Test with the Proposed Model | p-Value of the Wilcoxon Test |
|---|---|
| Standard pre-trained model | 9.494 × 10⁻⁹ |
| Adversarial semi-supervised pre-trained model | 4.987 × 10⁻⁹ |
| Adversarial semi-supervised fine-tuned model | 0.000665 |
| Fine-tuning without semi-supervised strategy | 0.000907 |
| Pre-training without semi-supervised strategy | 0.0093 |
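The table above does not state which Wilcoxon variant or which paired quantities were used. As one plausible reading, the sketch below implements a two-sided Wilcoxon signed-rank test on paired differences (for example, a baseline's error minus the proposed model's error on the same samples) with a normal approximation. The function name, the absence of tie and continuity corrections, and the example differences are assumptions for illustration, and the sketch does not reproduce the reported p-values.

```python
import math


def wilcoxon_signed_rank(diffs):
    """Two-sided Wilcoxon signed-rank test using the normal approximation
    (no tie or continuity correction). Returns (W_plus, p_value).
    Requires at least one non-zero difference."""
    d = [x for x in diffs if x != 0]             # zero differences are dropped
    n = len(d)
    order = sorted(range(n), key=lambda i: abs(d[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:                                  # average ranks over ties in |d|
        j = i
        while j + 1 < n and abs(d[order[j + 1]]) == abs(d[order[i]]):
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    w_plus = sum(r for r, x in zip(ranks, d) if x > 0)
    mean = n * (n + 1) / 4
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    z = (w_plus - mean) / sd
    return w_plus, math.erfc(abs(z) / math.sqrt(2))


# Hypothetical paired differences: the baseline is worse on all 20 samples.
w_plus, p_value = wilcoxon_signed_rank([0.01 * k for k in range(1, 21)])
print(w_plus, p_value)

# Differences with no consistent sign give a large p-value.
print(wilcoxon_signed_rank([1.0, -2.0, 3.0, -4.0]))
```

For small samples an exact test is preferable to the normal approximation used here. A statistics library's implementation would normally handle that case, along with ties and zero differences.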

| Number of Labels | MSE | MAE | R² |
|---|---|---|---|
| 250 | 0.226 ± 0.00518 | 0.364 ± 0.00540 | 0.619 ± 0.00636 |
| 100 | 0.232 ± 0.0103 | 0.369 ± 0.00998 | 0.619 ± 0.00571 |
| 50 | 0.229 ± 0.0084 | 0.366 ± 0.00746 | 0.62 ± 0.00575 |
| 25 | 0.236 ± 0.00826 | 0.371 ± 0.00797 | 0.621 ± 0.00441 |
| 10 | 0.224 ± 0.00498 | 0.362 ± 0.00743 | 0.62 ± 0.00534 |
| 5 | 0.242 ± 0.0201 | 0.38 ± 0.023 | 0.619 ± 0.00408 |
| 3 | 0.249 ± 0.026 | 0.385 ± 0.0256 | 0.618 ± 0.00425 |

Disclaimer/Publisher's Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Qin, Y.; Yang, T.; Yin, Y.; Zhong, J.; Wen, J.; Meng, Y.; Ding, J.; Duan, Q. Cross-Line Transfer of Initial Juice Sugar Content Prediction Model for Sugarcane Milling. Processes 2025, 13, 3949. https://doi.org/10.3390/pr13123949