Posture and Map Restoration in SLAM Using Trajectory Information

Abstract

1. Introduction

- 1. Local Frame Matching, which compares the current LiDAR or camera reading with the previous reading and estimates the new system pose relative to the previous one. This step is usually cheap to compute but accumulates drift quickly.

- 2. Local to Submap Matching, which compares the current reading with a submap. The submap is chosen by a sliding window centered at the current system pose. This step greatly reduces drift accumulation but is limited by its computational complexity.
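The drift behaviour of pure frame-to-frame matching can be illustrated with a small dead-reckoning sketch. It assumes a planar robot and a hypothetical constant heading bias of 0.001 rad per match; the helper names (`compose`, `integrate`, `position_error`) and all numeric values are illustrative and are not taken from the article.

```python
import math

def compose(pose, delta):
    """Apply a relative motion (dx, dy, dtheta), given in the robot frame, to a global pose."""
    x, y, th = pose
    dx, dy, dth = delta
    return (x + dx * math.cos(th) - dy * math.sin(th),
            y + dx * math.sin(th) + dy * math.cos(th),
            th + dth)

def integrate(deltas, start=(0.0, 0.0, 0.0)):
    """Chain frame-to-frame estimates into a list of global poses."""
    poses = [start]
    for d in deltas:
        poses.append(compose(poses[-1], d))
    return poses

def position_error(p, q):
    """Euclidean distance between the positions of two poses."""
    return math.hypot(p[0] - q[0], p[1] - q[1])

# Ground truth: 100 straight steps of 0.1 m each.
true_steps = [(0.1, 0.0, 0.0)] * 100
# Estimated steps: same translation, plus a hypothetical heading bias per match.
biased_steps = [(0.1, 0.0, 0.001)] * 100

truth = integrate(true_steps)
estimate = integrate(biased_steps)
errors = [position_error(t, e) for t, e in zip(truth, estimate)]

print(f"error after 10 steps:  {errors[10]:.4f} m")
print(f"error after 100 steps: {errors[100]:.4f} m")
```

Because each new pose is built on the previous estimate, the small per-step bias is never removed and the position error keeps growing with the path length. Matching against a submap, or against an external trajectory, bounds this growth.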

2. Contribution of This Study

- A trajectory matching strategy that combines the advantages of high-frequency iterative positioning and low-accuracy absolute positioning. Rather than targeting frame-to-frame accuracy alone, our method seeks to improve the overall mapping accuracy by interpolating the system pose based on readings from a secondary trajectory frame.

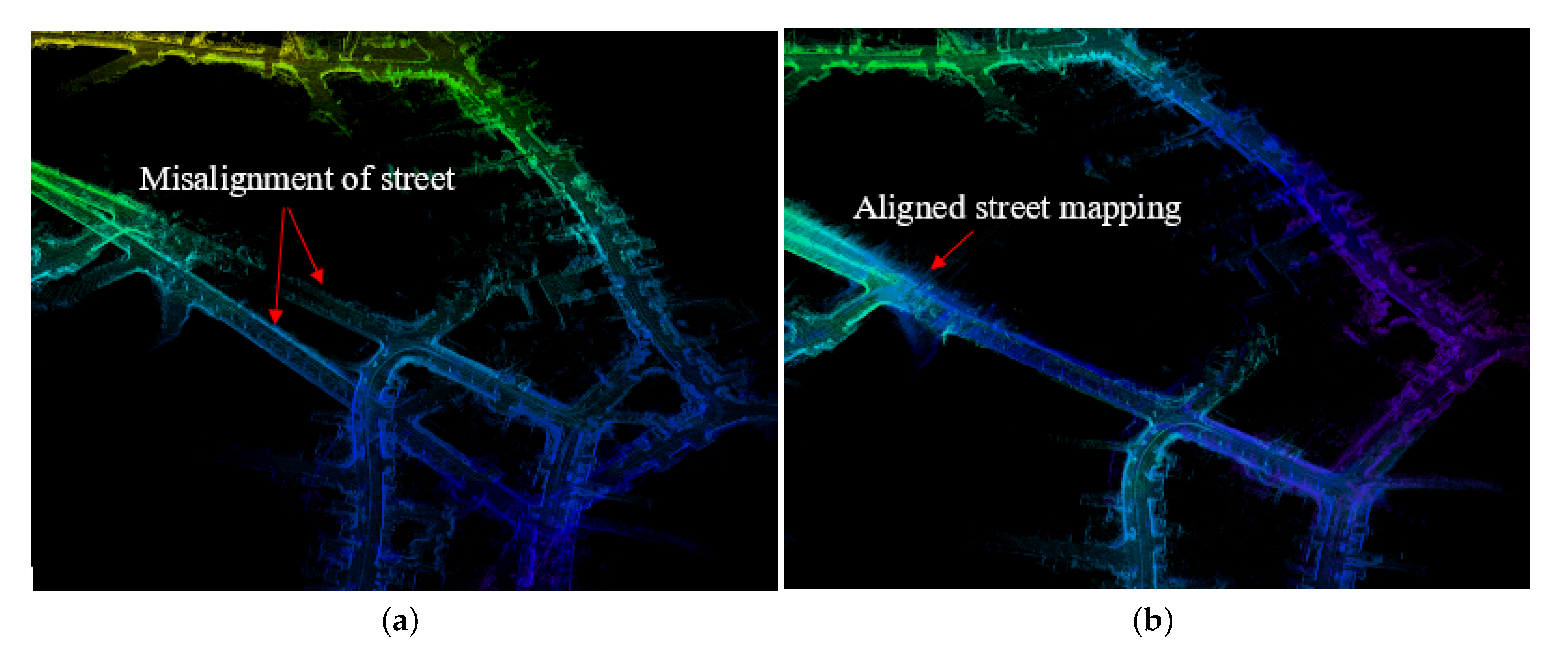

- Mapping results correction. Based on the outcome of the trajectory matching process, we took the transform information between the two frames and used it to correct the mapping results. We used a sliding window to control the size of the active area that participates in the correction, thus reducing its computational complexity.

- A loosely integrated mapping process that makes our method insensitive to the environment. While most absolute positioning systems, especially GNSS, face reception problems in mixed environments, our method does not require a continuous trajectory. Instead, the algorithm samples the received trajectory and outputs an estimate only when it finds a high-quality match.

- Easy adaptation to different SLAM approaches. The proposed method does not affect the main SLAM process. Instead, it works as an attachment to the target SLAM algorithm. We demonstrate its integration into different SLAM frameworks in the experiments section of this article, with applications ranging from 2-D grid map SLAM to 3-D point cloud SLAM. Our study shows that the proposed method significantly improves the quality of the mapping results in all tests.
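The idea of interpolating against a secondary trajectory that may be discontinuous can be sketched as follows. This is a minimal illustration and not the authors' implementation: it assumes 2-D positions, timestamped reference samples sorted by time, and a hypothetical `max_gap` threshold (in seconds) above which two neighbouring reference samples are treated as a reception outage.

```python
from bisect import bisect_right

def interpolate_reference(ref, t, max_gap=1.0):
    """Linearly interpolate the reference position at time t.

    ref: list of (timestamp, x, y), sorted by timestamp.
    Returns (x, y), or None when t is not bracketed by two samples
    or the bracketing samples are more than max_gap apart.
    """
    times = [s[0] for s in ref]
    i = bisect_right(times, t)
    if i == 0 or i == len(ref):
        return None  # t lies outside the usable range of the reference samples
    t0, x0, y0 = ref[i - 1]
    t1, x1, y1 = ref[i]
    if t1 - t0 > max_gap:
        return None  # gap in the reference trajectory, e.g. lost reception
    w = (t - t0) / (t1 - t0)
    return (x0 + w * (x1 - x0), y0 + w * (y1 - y0))

def pair_trajectories(slam, ref, max_gap=1.0):
    """Pair each SLAM pose (timestamp, x, y) with an interpolated reference position.

    SLAM poses that fall into a reference gap are skipped rather than guessed.
    """
    pairs = []
    for t, x, y in slam:
        r = interpolate_reference(ref, t, max_gap)
        if r is not None:
            pairs.append(((x, y), r))
    return pairs

# Hypothetical data: the reference drops out between t = 1 s and t = 5 s.
ref = [(0.0, 0.0, 0.0), (1.0, 2.0, 0.0), (5.0, 2.0, 8.0), (6.0, 3.0, 8.0)]
slam = [(0.5, 1.1, 0.0), (3.0, 2.1, 4.0), (5.5, 2.4, 8.1)]
pairs = pair_trajectories(slam, ref)
print(pairs)
```

Only the SLAM poses that can be bracketed by nearby reference samples contribute pairs, so an outage in the absolute positioning source reduces the number of usable matches instead of corrupting them.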

3. Proposed Method

3.1. Trajectory Alignment

Algorithm 1 Map Orientation Correction.

Require: Trajectory from HectorSLAM since the last iteration; reference trajectory since the last iteration; number of iterations to sample the best match

Ensure: Transformation matrix
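Algorithm 1 takes two trajectories and an iteration count and returns a transformation matrix; its individual steps are not reproduced here. The sketch below shows one way such an alignment could be computed and should be read as an assumption rather than the authors' procedure: it uses a closed-form least-squares fit of a 2-D rotation and translation, repeated over randomly sampled subsets of already paired points, and keeps the candidate with the lowest RMS residual. The function names, `sample_size`, and `seed` are hypothetical.

```python
import math
import random

def fit_rigid_2d(src, dst):
    """Least-squares rotation and translation mapping src onto dst, as a 3x3 homogeneous matrix."""
    n = len(src)
    csx = sum(p[0] for p in src) / n
    csy = sum(p[1] for p in src) / n
    cdx = sum(p[0] for p in dst) / n
    cdy = sum(p[1] for p in dst) / n
    s_cos = s_sin = 0.0
    for (ax, ay), (bx, by) in zip(src, dst):
        ax, ay, bx, by = ax - csx, ay - csy, bx - cdx, by - cdy
        s_cos += ax * bx + ay * by   # dot-product term
        s_sin += ax * by - ay * bx   # cross-product term
    th = math.atan2(s_sin, s_cos)
    c, s = math.cos(th), math.sin(th)
    tx = cdx - (c * csx - s * csy)
    ty = cdy - (s * csx + c * csy)
    return [[c, -s, tx], [s, c, ty], [0.0, 0.0, 1.0]]

def apply_transform(T, p):
    """Apply a 3x3 homogeneous transform to a 2-D point."""
    return (T[0][0] * p[0] + T[0][1] * p[1] + T[0][2],
            T[1][0] * p[0] + T[1][1] * p[1] + T[1][2])

def rms_residual(T, src, dst):
    """RMS distance between transformed src points and dst points."""
    total = 0.0
    for a, b in zip(src, dst):
        qx, qy = apply_transform(T, a)
        total += (qx - b[0]) ** 2 + (qy - b[1]) ** 2
    return math.sqrt(total / len(src))

def best_alignment(slam_traj, ref_traj, n_iterations=20, sample_size=5, seed=0):
    """Fit on random subsets of the paired points and keep the lowest-residual transform."""
    rng = random.Random(seed)
    k = min(sample_size, len(slam_traj))
    best_T, best_err = None, float("inf")
    for _ in range(n_iterations):
        idx = rng.sample(range(len(slam_traj)), k)
        T = fit_rigid_2d([slam_traj[i] for i in idx], [ref_traj[i] for i in idx])
        err = rms_residual(T, slam_traj, ref_traj)
        if err < best_err:
            best_T, best_err = T, err
    return best_T, best_err

# Hypothetical check: the reference is the SLAM path rotated by 0.3 rad and shifted by (2, -1).
slam_traj = [(0.1 * i, 0.02 * i * i) for i in range(10)]
true_T = [[math.cos(0.3), -math.sin(0.3), 2.0],
          [math.sin(0.3), math.cos(0.3), -1.0],
          [0.0, 0.0, 1.0]]
ref_traj = [apply_transform(true_T, p) for p in slam_traj]
T, err = best_alignment(slam_traj, ref_traj)
print(f"recovered rotation: {math.atan2(T[1][0], T[0][0]):.3f} rad, residual: {err:.2e}")
```

In practice the residual could also serve as the match-quality gate: a correction would be emitted only when `err` falls below a chosen threshold, which fits the stated requirement that estimates are produced only for high-quality matches.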

3.2. Map and Pose Correction in 2-D SLAM

3.3. Pose Interpolation and Correction in 3-D Point Clouds

4. Experiments

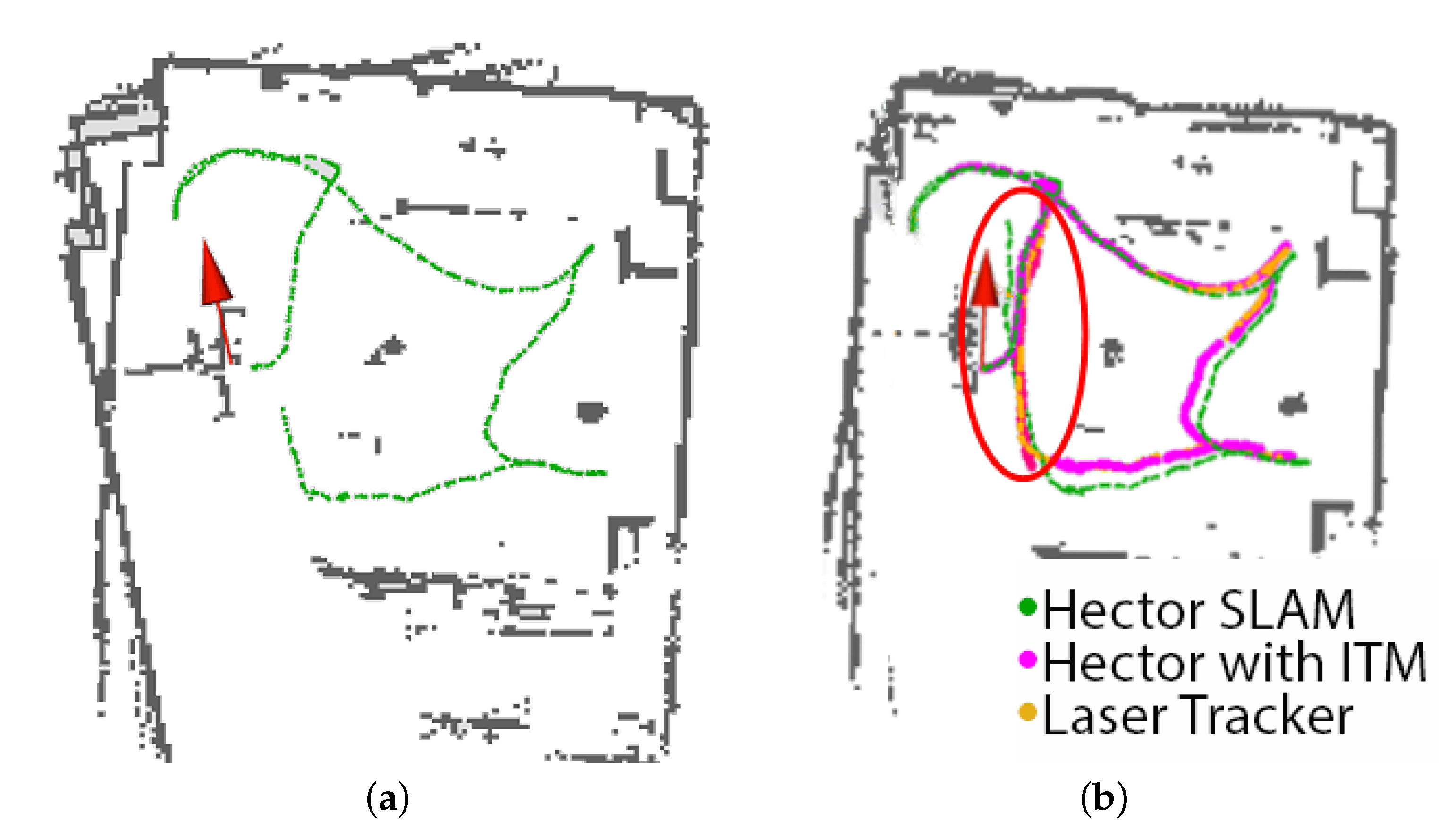

4.1. Indoor Experiment with Hector SLAM

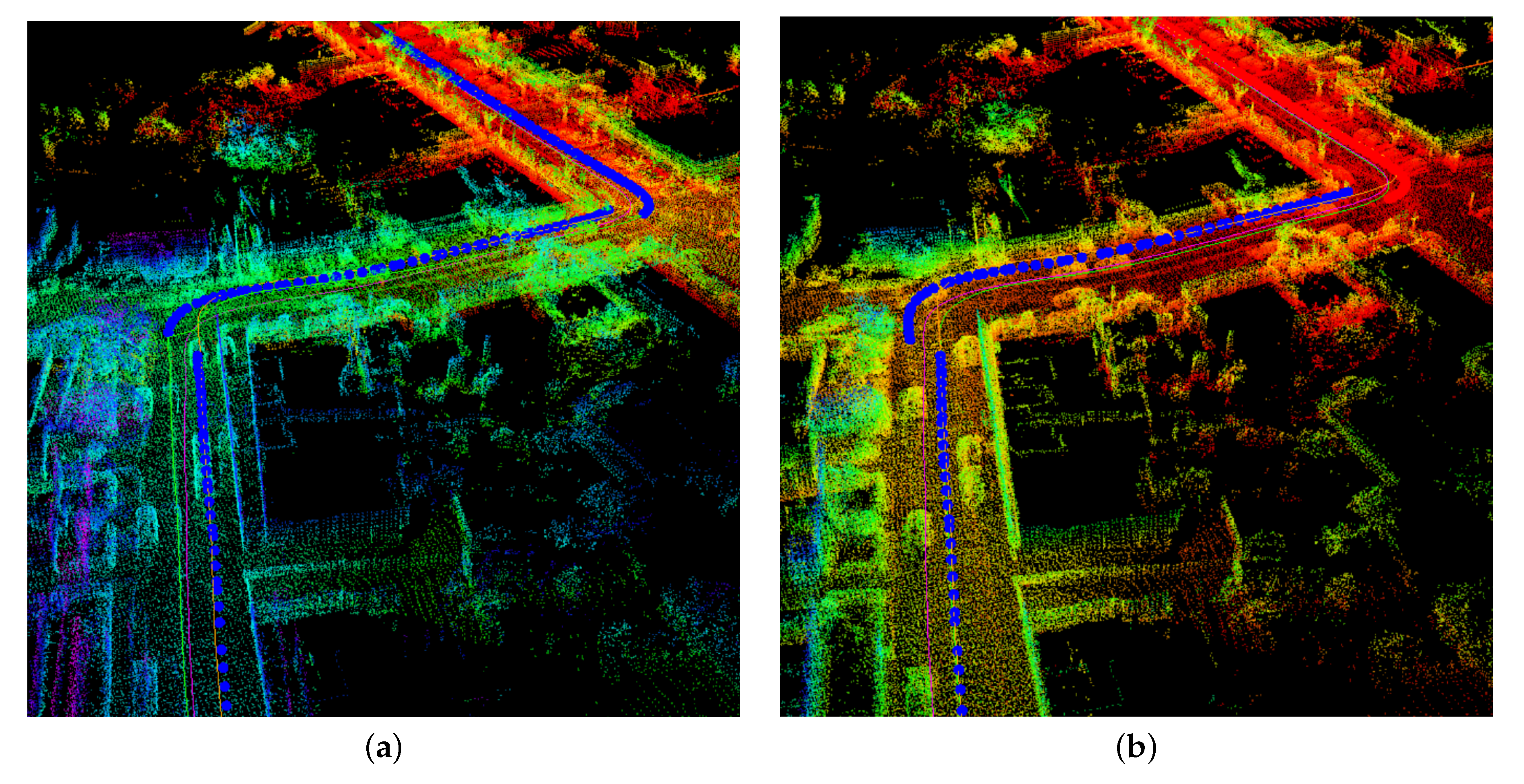

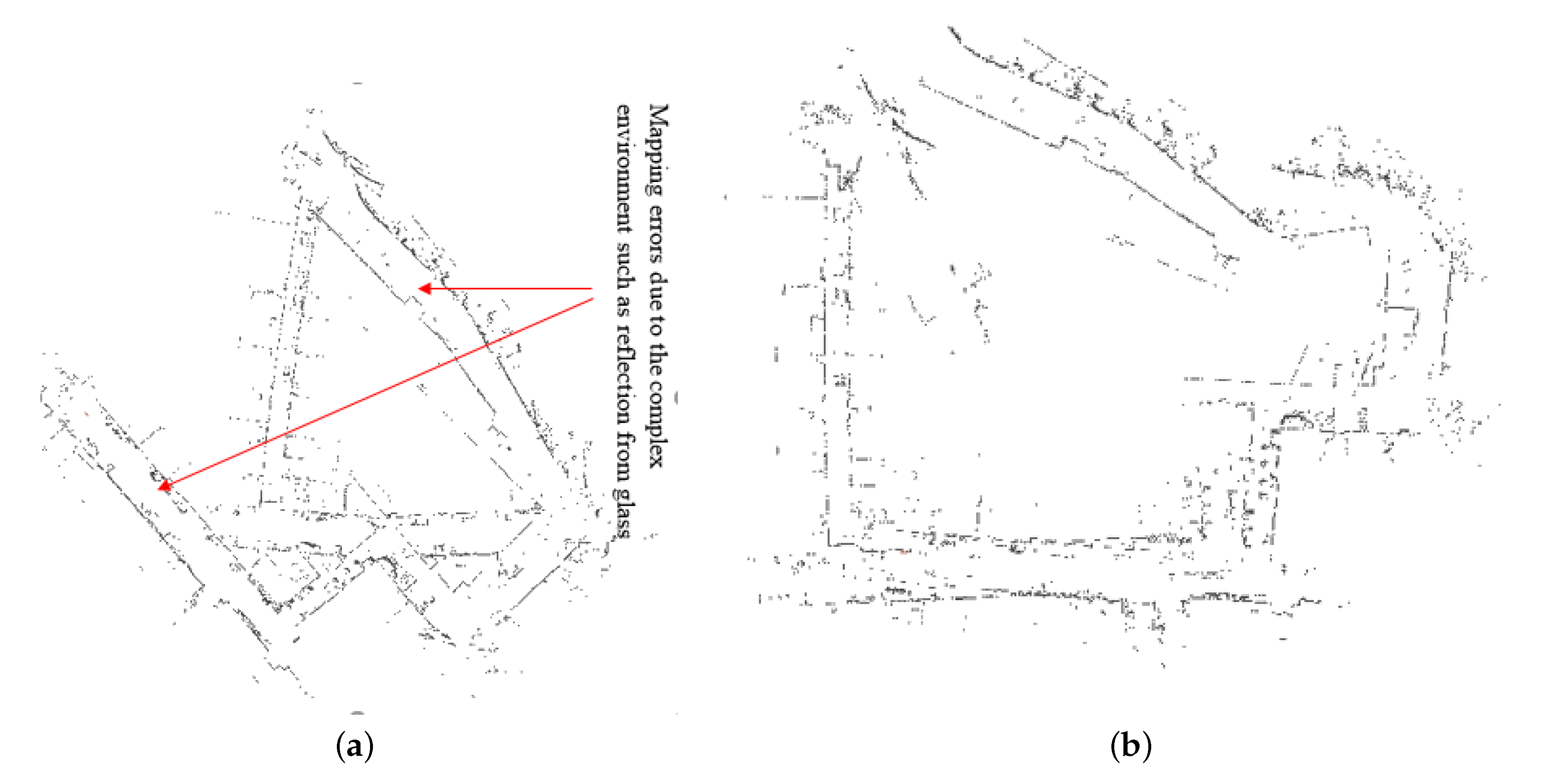

4.2. Indoor-Outdoor Experiment with Hector SLAM

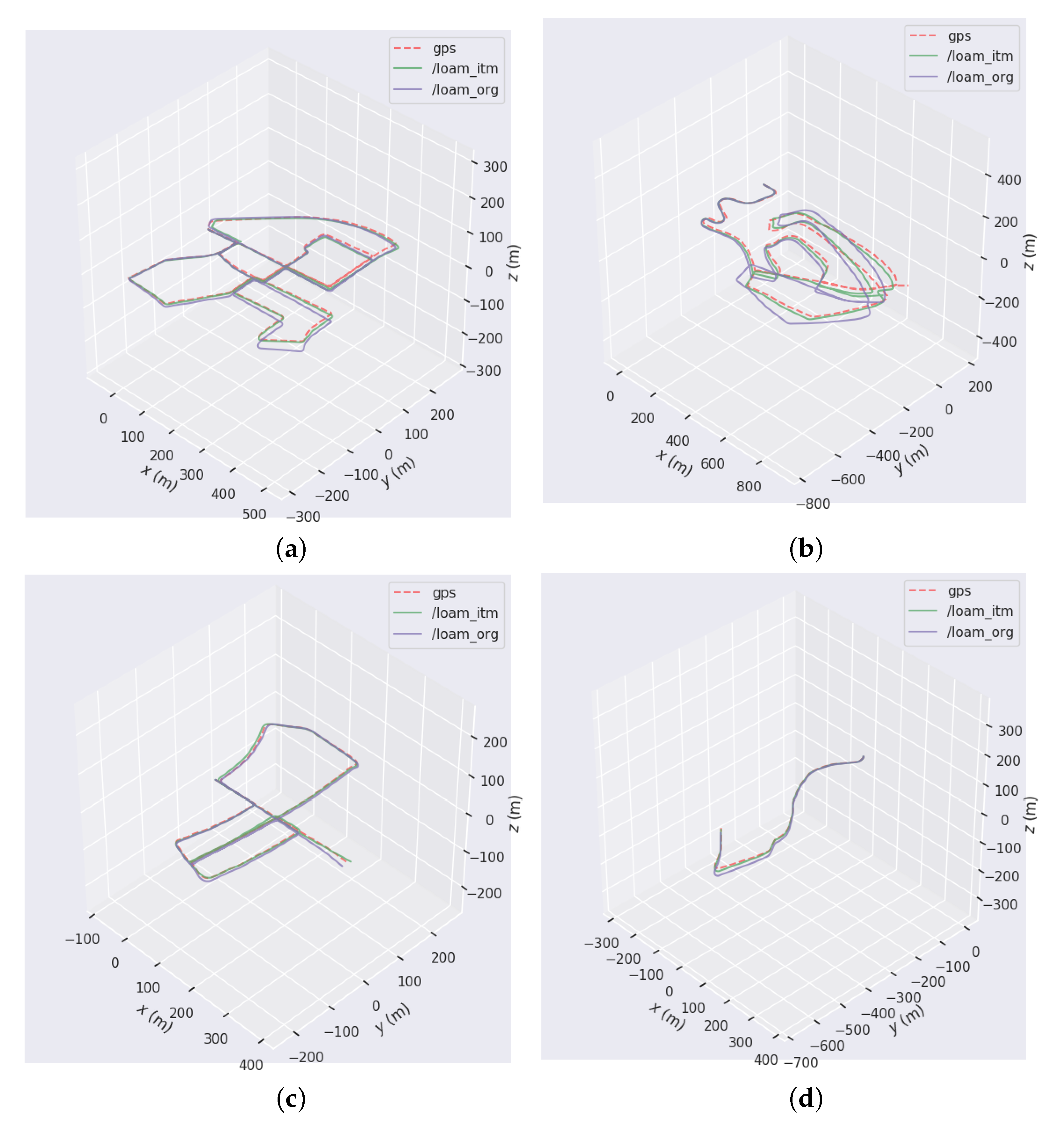

4.3. Testing on KITTI Dataset with LOAM

5. Conclusions and Discussion

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest



© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wei, W.; Ghafarian, M.; Shirinzadeh, B.; Al-Jodah, A.; Nowell, R. Posture and Map Restoration in SLAM Using Trajectory Information. Processes 2022, 10, 1433. https://doi.org/10.3390/pr10081433