A Guide for Game-Design-Based Gamification

Abstract

1. Introduction

“Gamification should be understood as a process. Specifically, it is the process of making activities more game-like. Conceiving of Gamification as a process creates a better fit between academic and practitioner perspectives. Even more important, it focuses attention on the creation of game-like experiences, pushing against shallow approaches that can easily become manipulative. A final benefit of this approach is that it connects Gamification to persuasive design.” Kevin Werbach [9]

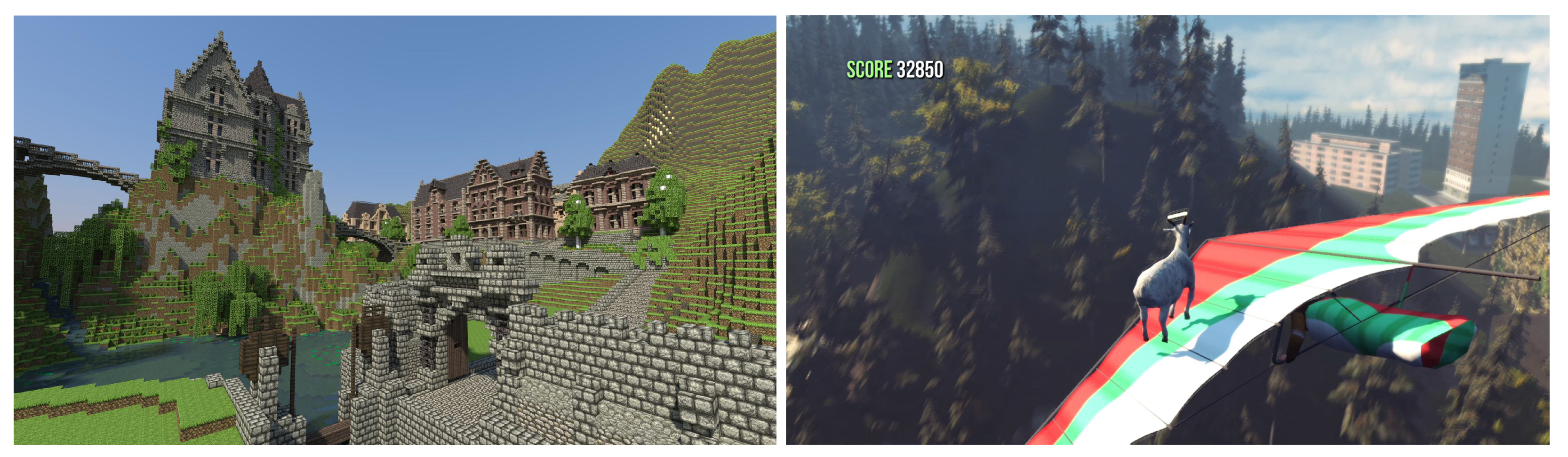

Acquiring Design Experience

2. Ten Relevant Characteristics for Game-Design-Based Gamification

2.1. Open Decision Space

- Correct decisions ill-form decision spaces. Many Gamification designs rest on questions or alternative paths, only one of which is correct. This represents an ill-formed decision space because there is no true decision to take. Trainees are not being asked to decide and progress but are being tested instead. For an environment to foster autonomy and provide a truly open decision space, decisions should not be designed as correct/incorrect. Instead, decisions must produce consequences, and trainees should be free to play with situations, environments and consequences, experimenting and learning from results.

- Discrete custom-designed decision spaces challenge autonomy. It is common to manually design all possible decision choices. It seems natural to attempt to directly transmit knowledge to trainees. We teachers tend to transform our knowledge into possible situations, producing some form of decision tree. The decision space is reduced to pre-designed knowledge, which challenges autonomy. Trainees usually imagine decisions they would take but have to accommodate themselves to the designed choices. Creativity is prevented, curiosity diminishes and frustration rises. Open decision spaces that let trainees experiment with their ideas tend to be continuous, not pre-designed, and more similar to simulations than to decision trees.

- Failing to consider movements and interactions as decisions. The term ‘decision’ is naturally related to high-level abstract thinking and neocortex processing. But any action a trainee performs at any instant is a decision. Many designs do not consider such actions part of the decision space. This produces designs where either trainees cannot move or their movements are meaningless. As interacting with the world is one of our richest sources of information, failing to consider it greatly limits decision spaces.

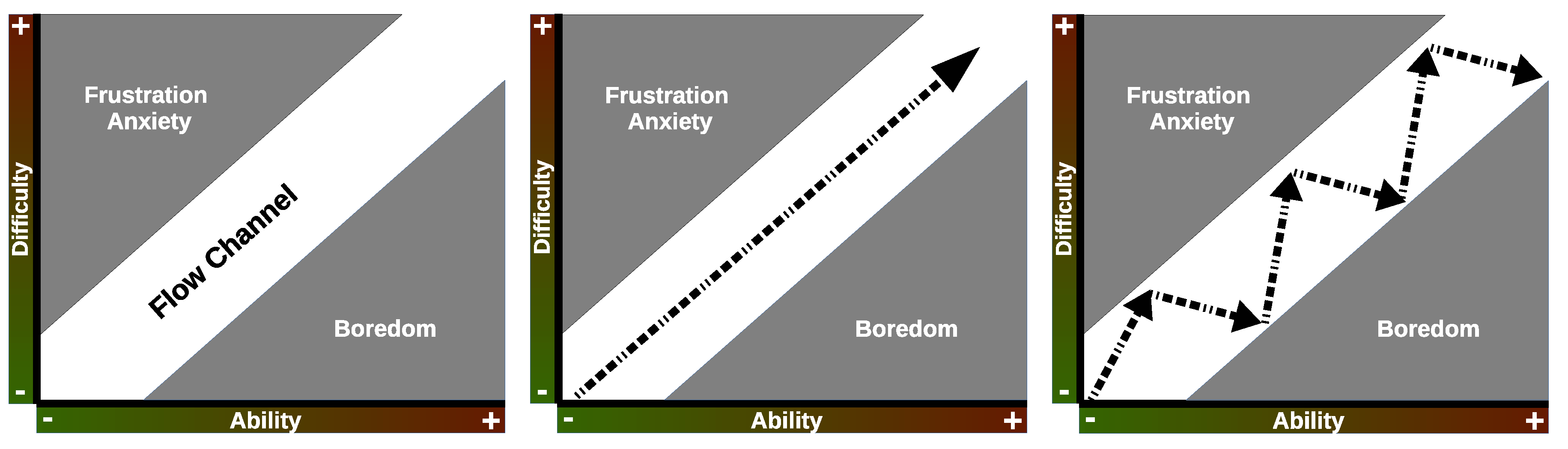

2.2. Challenge

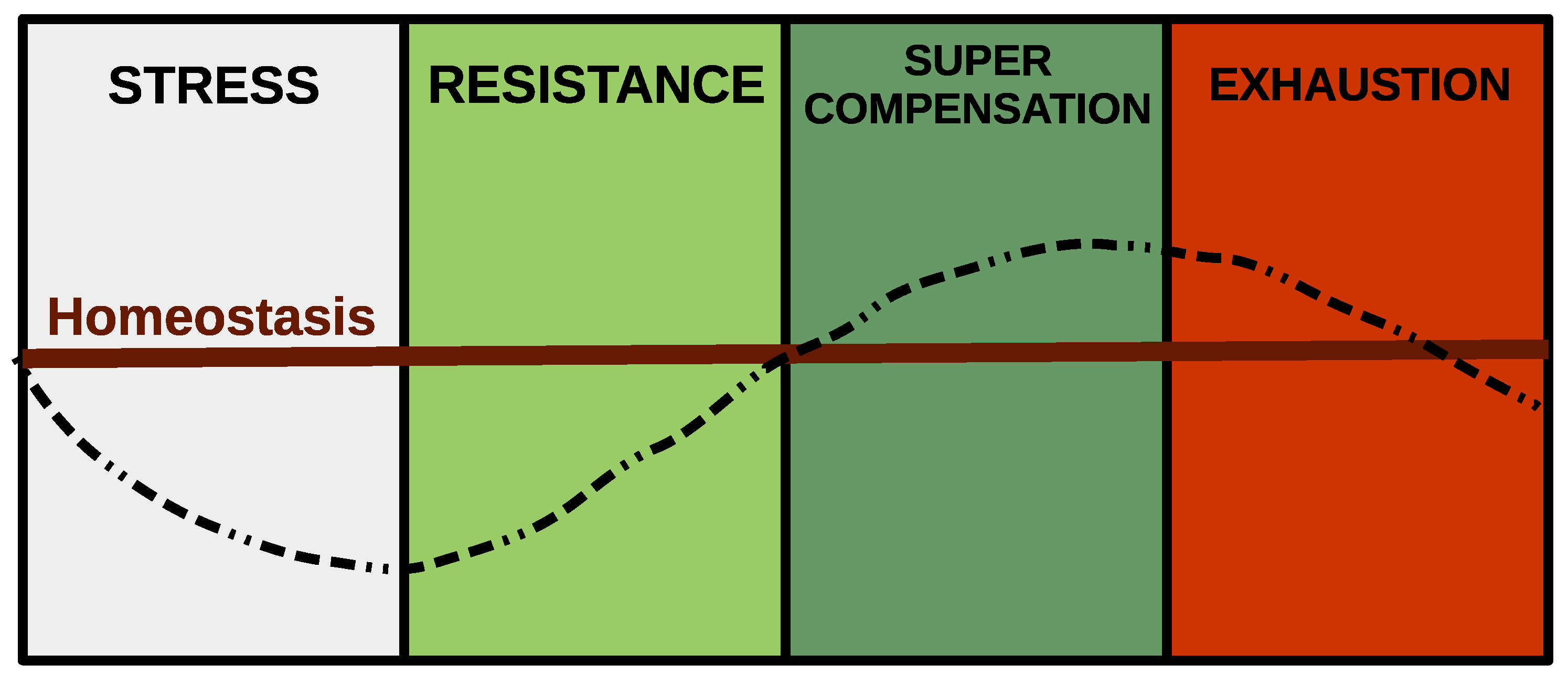

2.3. Learning by Trial and Error

2.4. Progress Assessment

2.5. Feedback

2.6. Randomness

2.7. Discovery

2.8. Emotional Entailment

2.9. Playfulness Enabled

2.10. Automation

3. The Rubric

4. Rubric Application Samples

4.1. Super Mario Bros (NES)

- [ 2 ] Open Decision Space. The game lets the user take movement decisions (actions) in a continuous world. Given any two players who successfully finish a level, it is almost impossible that both performed the exact same actions. The player is in total control of the action, with potentially infinite options in a continuous space.

- [ 2 ] Challenge. The game is composed of a series of well-designed levels that challenge players. Difficulty progression is sinusoidal, with some easier levels after more challenging ones. It is balanced and tested by designer intuition through iterations.

- [ 2 ] Learning by trial and error. As in many games, learning by trial and error is at the very core of the game. Failure is permitted, with a limited number of lives per game but no limit on the number of games per player. The player can complete the game regardless of the number of games or lives lost while learning. Even the level design is conceived to encourage players to learn by experimenting.

- [ 1 ] Progress assessment. The game assesses the progress of the player through levels and player status. Once a level is finished, the player does not repeat it even if lives are lost. Inside a level, the player always knows how to continue towards the end, and feedback through movement, points, enemies and music reports the progress.

- [ 2 ] Feedback. Similar to most action-platformer games, there are sixty frames per second of continuous cause/effect feedback that lets the player sense control and learn. Moreover, the game design informs the player of all events as they happen, such as lives lost, enemies beaten, objects obtained and so forth.

- [ 1 ] Randomness. Although the game is predictable, with no actual random events, some enemies have elaborate movements that give the player some sense of unpredictability.

- [ 2 ] Discovery. Players discover new levels and worlds as they finish previous ones, in an unlock-like fashion. There are secret places, items and bonuses at different locations that reward players for their attention to detail and exploration. Also, there are some special behaviours of game elements that can be discovered by experimentation.

- [ 2 ] Emotional entailment. The complete game creates an emotional experience for the player with the aesthetics, characters, music and the story. It is completely conceived as an adventure in an imaginary world where characters live and become “real” in some sense for the player.

- [ 1 ] Playfulness enabled. Although the game has clear goals and rules, players have room to explore and be creative. In fact, communities of players have engaged in new challenges like the speed-run modalities, creating new rules on top of the game. The game was not conceived to be played as a toy, but players can and do use it this way.

- [ 2 ] Automation. As a console game, meant to be played at home, the game is fully automated. Feedback is immediate and all rules are enforced automatically.
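The "sinusoidal" difficulty progression mentioned under Challenge can be pictured with a small sketch. The formula below is only an illustration, not taken from the game or from the rubric: it assumes difficulty is a rising linear trend with a sine wave added, and the parameter names `slope`, `amplitude` and `period` are hypothetical tuning knobs.

```python
import math


def sinusoidal_difficulty(level, slope=1.0, amplitude=1.5, period=4):
    """Illustrative difficulty of a level: rising trend plus a sine wave.

    All parameters are hypothetical; they are not values from the source.
    """
    return slope * level + amplitude * math.sin(2 * math.pi * level / period)


# Difficulty of levels 0..8, rounded for readability.
curve = [round(sinusoidal_difficulty(n), 2) for n in range(9)]

for n, difficulty in enumerate(curve):
    # Mark levels that are easier than the one immediately before them.
    note = " (easier than previous level)" if n > 0 and difficulty < curve[n - 1] else ""
    print(f"level {n}: {difficulty}{note}")
```

With these assumed parameters, some levels are easier than the one before them while the overall trend still rises, which is the pattern the Challenge item describes.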

4.2. Unsuccessful 16-Week Gamified Semester

- [ 1 ] Open Decision Space. Although badges are reported as mandatory, some seem to be optional, like coins. Students can also decide how to use earned coins. However, this amounts to a small set of options with not much strategy involved.

- [ 0 ] Challenge. No badge description seems to match levels of difficulty or required abilities. Badges are focused on behaviour. There seems to be no consideration of difficulty.

- [ 0 ] Learning by trial and error. No consideration of opportunities, number of assignments or punishments.

- [ 1 ] Progress assessment. Number of badges earned, coins and the leaderboard give some form of progress assessment.

- [ 1 ] Feedback. Feedback seems to come mainly from the teacher, and the leaderboard is updated once a week. Therefore, feedback seems rather slow.

- [ 0 ] Randomness. Everything described in the system seems concretely specified, with no room for surprises or random events.

- [ 0 ] Discovery. Similarly, as everything seems predefined, there is nothing to unlock or discover.

- [ 0 ] Emotional entailment. There are no characters, no story, no aesthetics. The only content that could be related to emotions is the badge for dressing up like a videogame character.

- [ 0 ] Playfulness enabled. Similarly, the description of the system does not involve any ability to use activities as toys or even play with strategies. Some creativity could be exhibited with the videogame-character dressing badge or in the way coins are spent.

- [ 0 ] Automation. Nothing is automated. Even badges have to be claimed by students by filling out forms. However, the weekly leaderboard update can be perceived by students as some small form of automation.

4.3. Single Learning Activity: Solving a Linear-Equations System

- [ 1 ] Open Decision Space. Some minimal decisions can be considered regarding the solution method and the order in which to perform steps.

- [ 0 ] Challenge. It is a single activity, so there is no way to match activity with ability. Difficulty is fixed.

- [ 0 ] Learning by trial and error. While trainees produce their solution there is no feedback and no way to know whether decisions are good or bad. Therefore, there is no way to learn through cause and effect.

- [ 0 ] Progress assessment. The only perceivable progress would be the steps done towards the solution but that is no form of progress assessment.

- [ 0 ] Feedback. There is no feedback response to actions and teacher feedback takes one week. Cause/effect learning is almost impossible.

- [ 0 ] Randomness. Everything is completely predictable and there are no surprises.

- [ 0 ] Discovery. As all content is fixed, there is no unlocking or discovery at all.

- [ 0 ] Emotional entailment. There are no characters, no story, no aesthetics, no content that could be related to emotions.

- [ 1 ] Playfulness enabled. There is some room to experiment with procedures or methods, but very limited.

- [ 0 ] Automation. Everything is manually performed, with no automation at all and a slow response time (one week).

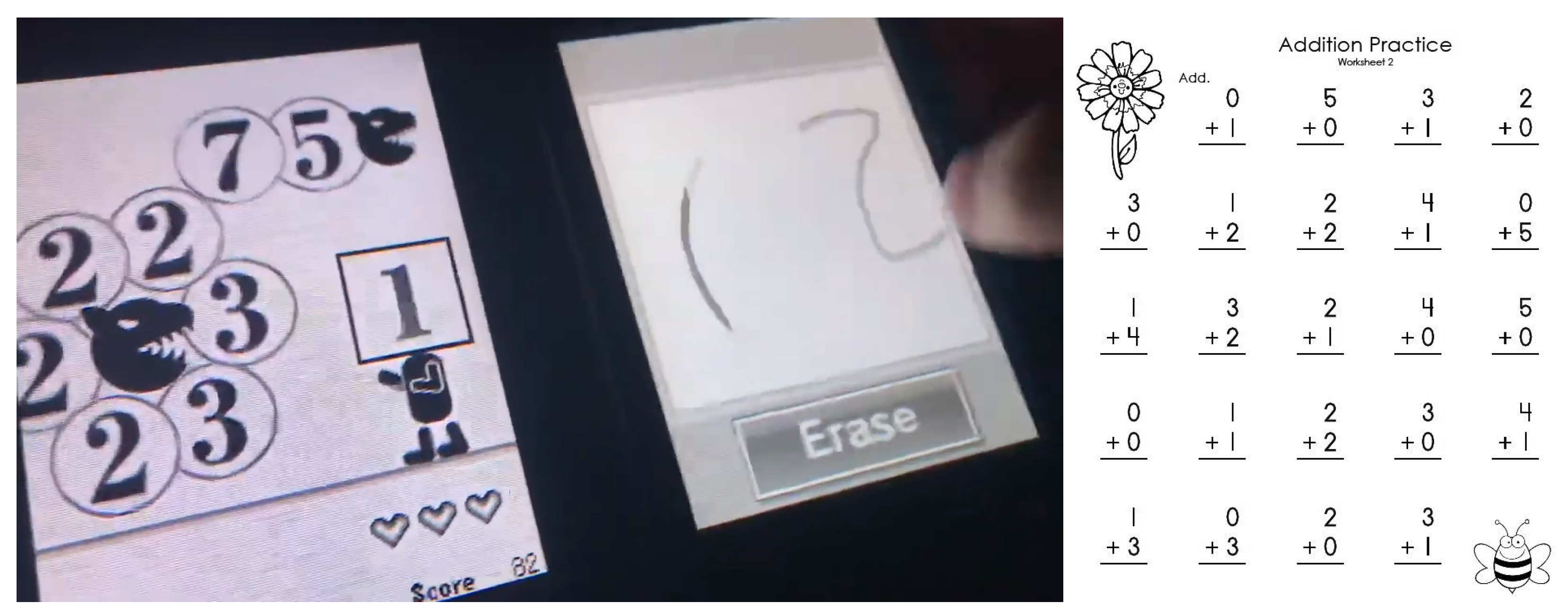

4.4. Gamified Version of the Linear-Equations Solving Activity

- Create an automatic generator of linear-equation systems to present students with hundreds of exercises instead of one.

- Classify generated systems into 6 levels of difficulty depending on their intrinsic characteristics, number of variables and numerical complexity.

- Form teams during solving sessions and have rules to require teams and individuals to develop strategies to distribute tasks and face challenges.

- Give points to valid solutions depending on the assigned difficulty of the system.

- Make difficulty levels unlockable and have clear unlocking rules that force students to appropriately master levels before proceeding.

- Have a student experience level (XP) that increases as students solve systems and successfully resolve proposed activities. Define experience levels and use them as a measure to form teams and unlock difficulty levels.

- Spread the activity across many sessions and maintain points, experience and levels. Let students evolve over the course.

- Produce random events that interrupt sessions and change rules surprisingly. Examples: a fleeting system that has to be solved fast, a red-code event in which students have to deactivate a bomb, or a dizzy time during which solutions have to be given inverted.

- Define a set of achievements to give to students, including some secret ones to reward their research or detailed abilities.

- Automate the whole system with an application that lets students select systems, send solutions and receive instant status reports on their mobile phones.

- [ 1 ] Open Decision Space. Students have the freedom to define different strategies to solve tasks and challenges based on linear-equations systems.

- [ 1 ] Challenge. There are different difficulty levels defined as progressive and linear.

- [ 2 ] Learning by trial and error. Students are limited by factors like time during sessions but not by their mistakes. They can fail many times and continue, without limiting their final score.

- [ 2 ] Progress assessment. There are several measures like experience points, regular points, levels, achievements and unlocked difficulties that give students great detail on their progress.

- [ 1 ] Feedback. Students receive feedback from the system with respect to their solutions and actions. They see their progress, know whether they have done right or wrong, and can also fix their failures. Feedback is not complete and sometimes cause-effect relationships may be diffuse.

- [ 2 ] Randomness. Systems are generated, so randomness is present most of the time in the system. Moreover, random events are another source of purposively designed unpredictability for students, which induces surprises.

- [ 1 ] Discovery. There is some unlockable content and some secret levels and achievements, but the design could be improved to include more surprises and learning through discovery.

- [ 1 ] Emotional entailment. Random events are based on simple stories like deactivating a bomb, for instance. Also, time limitations and surprises target emotions, but there is no general story, characters or appropriate aesthetics.

- [ 0 ] Playfulness enabled. There is a small amount of creativity involved in the way teams can approach tasks, but goals are clearly defined and there is not much room for free playing outside the rules of the system.

- [ 2 ] Automation. A mobile app with a server gives a moderate level of automation and control with minimal manual intervention required. Feedback on responses is immediate, although it might not be detailed or complete. However, this last part is improvable with new versions of the app.
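The first design steps listed for this activity (an automatic generator, six difficulty levels, points per difficulty, experience and unlocking) can be sketched in a few lines. This is a minimal sketch under stated assumptions, not the authors' application: the mapping from level to system size and coefficient range, the point values in `POINTS_PER_LEVEL`, the threshold `SOLVES_TO_UNLOCK`, and all class and function names are hypothetical.

```python
import random
from dataclasses import dataclass, field

POINTS_PER_LEVEL = {level: 10 * level for level in range(1, 7)}  # hypothetical values
SOLVES_TO_UNLOCK = 3  # hypothetical: solves needed at the top unlocked level


def determinant(m):
    """Integer determinant by Laplace expansion (fine for the small sizes used here)."""
    if len(m) == 1:
        return m[0][0]
    total = 0
    for col, value in enumerate(m[0]):
        minor = [row[:col] + row[col + 1:] for row in m[1:]]
        total += (-1) ** col * value * determinant(minor)
    return total


@dataclass
class LinearSystem:
    coefficients: list  # matrix A, as a list of rows
    constants: list     # vector b
    solution: list      # the unique integer solution x of A x = b
    level: int


def generate_system(level, rng):
    """Generate a square system with a known integer solution.

    Assumption: levels 1-2 use 2 variables, 3-4 use 3, 5-6 use 4;
    odd levels use smaller numbers than even ones.
    """
    if not 1 <= level <= 6:
        raise ValueError("level must be between 1 and 6")
    n = 2 + (level - 1) // 2
    bound = 5 if level % 2 else 12
    while True:  # retry until the matrix is invertible, so the solution is unique
        a = [[rng.randint(-bound, bound) for _ in range(n)] for _ in range(n)]
        if determinant(a) != 0:
            break
    x = [rng.randint(-bound, bound) for _ in range(n)]
    b = [sum(a[i][j] * x[j] for j in range(n)) for i in range(n)]
    return LinearSystem(a, b, x, level)


@dataclass
class Student:
    name: str
    points: int = 0
    xp: int = 0
    unlocked_level: int = 1
    solved_at_level: dict = field(default_factory=dict)

    def submit(self, system, answer):
        """Check an answer; wrong answers cost nothing, right ones give points and XP."""
        if system.level > self.unlocked_level:
            raise PermissionError("level not unlocked yet")
        if list(answer) != system.solution:
            return False  # failure is not punished: the score is left untouched
        self.points += POINTS_PER_LEVEL[system.level]
        self.xp += system.level
        count = self.solved_at_level.get(system.level, 0) + 1
        self.solved_at_level[system.level] = count
        at_top = system.level == self.unlocked_level
        if at_top and count >= SOLVES_TO_UNLOCK and self.unlocked_level < 6:
            self.unlocked_level += 1
        return True


rng = random.Random(2019)  # fixed seed only to make the sketch reproducible
student = Student("sample student")
system = generate_system(student.unlocked_level, rng)
print(system.coefficients, system.constants)
print(student.submit(system, system.solution), student.points, student.xp)
```

Building each system from a solution chosen in advance keeps answer checking exact, and leaving the score untouched on a wrong answer mirrors the trial-and-error item above, where mistakes do not limit the final score.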

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Deterding, S.; Dixon, D.; Khaled, R.; Nacke, L. From Game Design Elements to Gamefulness: Defining “Gamification”. In Proceedings of the 15th International Academic MindTrek Conference: Envisioning Future Media Environments, Tampere, Finland, 28–30 September 2011; ACM: New York, NY, USA, 2011; pp. 9–15.

2. Nacke, L.E.; Deterding, S. The maturing of gamification research. Comput. Hum. Behav. 2017, 71, 450–454.

3. Linehan, C.; Kirman, B.; Lawson, S.; Chan, G. Practical, Appropriate, Empirically-validated Guidelines for Designing Educational Games. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, Vancouver, BC, Canada, 7–12 May 2011; ACM: New York, NY, USA, 2011; pp. 1979–1988.

4. Nah, F.F.H.; Zeng, Q.; Telaprolu, V.R.; Ayyappa, A.P.; Eschenbrenner, B. Gamification of Education: A Review of Literature. In Lecture Notes in Computer Science; Nah, F.F.H., Ed.; Springer International Publishing: Cham, Switzerland, 2014; pp. 401–409.

5. Hamari, J.; Koivisto, J.; Sarsa, H. Does Gamification Work? A Literature Review of Empirical Studies on Gamification. In Proceedings of the 47th Hawaii International Conference on System Sciences, Waikoloa, HI, USA, 6–9 January 2014.

6. Fitz-Walter, Z.; Johnson, D.; Wyeth, P.; Tjondronegoro, D.; Scott-Parker, B. Driven to drive? Investigating the effect of gamification on learner driver behavior, perceived motivation and user experience. Comput. Hum. Behav. 2017, 71, 586–595.

7. Kocakoyun, S.; Ozdamli, F. A Review of Research on Gamification Approach in Education. In Socialization; Morese, R., Palermo, S., Nervo, J., Eds.; IntechOpen: Rijeka, Croatia, 2018; Chapter 4.

8. Koivisto, J.; Hamari, J. The rise of motivational information systems: A review of gamification research. Int. J. Inf. Manag. 2019, 45, 191–210.

9. Werbach, K. (Re)Defining Gamification: A Process Approach. In Persuasive Technology; Spagnolli, A., Chittaro, L., Gamberini, L., Eds.; Springer International Publishing: Cham, Switzerland, 2014; pp. 266–272.

10. Chou, Y. Actionable Gamification: Beyond Points, Badges, and Leaderboards; Createspace Independent Publishing Platform: Milpitas, CA, USA, 2015.

11. Deterding, S. Eudaimonic Design, or: Six Invitations to Rethink Gamification. In Rethinking Gamification; Fuchs, M., Fizek, S., Ruffino, P., Schrape, N., Eds.; Meson Press: Lüneburg, Germany, 2014; pp. 305–331.

12. Koster, R. A Theory of Fun for Game Design; Paraglyph Press: Scottsdale, AZ, USA, 2004.

13. Schell, J. The Art of Game Design: A Book of Lenses; Morgan Kaufmann Publishers Inc.: San Francisco, CA, USA, 2008.

14. Gee, J.P. What Video Games Have to Teach Us About Learning and Literacy. Second Edition: Revised and Updated Edition; Palgrave Macmillan: New York, NY, USA, 2007; OCLC: ocn172569526.

15. Gee, J.P. Good Video Games and Good Learning; Peter Lang Inc., International Academic Publishers: New York, NY, USA, 2007.

16. Kreimeier, B. The Case For Game Design Patterns. Gamasutra Featured Article. Gamasutra, 2002. Available online: https://www.gamasutra.com/view/feature/132649/the_case_for_game_design_patterns.php (accessed on 4 November 2019).

17. Lindley, C.A. Game Taxonomies: A High Level Framework for Game Analysis and Design. Gamasutra Featured Article. Gamasutra, 2003. Available online: https://www.gamasutra.com/view/feature/131205/game_taxonomies_a_high_level_.php (accessed on 4 November 2019).

18. Hunicke, R.; Leblanc, M.; Zubek, R. MDA: A Formal Approach to Game Design and Game Research; AAAI Workshop: Challenges In Game Artificial Intelligence, Volume 1; Technical Report; AAAI: Menlo Park, CA, USA, 2004.

19. Reeves, B.; Read, L. Total Engagement: Using Games and Virtual Worlds to Change the Way People Work and Businesses Compete; Harvard Business Review Press: Boston, MA, USA, 2009.

20. Tondello, G.F.; Kappen, D.L.; Mekler, E.D.; Ganaba, M.; Nacke, L.E. Heuristic Evaluation for Gameful Design. In Proceedings of the 2016 Annual Symposium on Computer-Human Interaction in Play Companion Extended Abstracts, Austin, TX, USA, 16–19 October 2016; ACM: New York, NY, USA, 2016; pp. 315–323.

21. Rapp, A. Designing interactive systems through a game lens: An ethnographic approach. Comput. Hum. Behav. 2017, 71, 455–468.

22. Strawson, P.F.; Wittgenstein, L. Philosophical Investigations. Mind 1954, 63, 70.

23. Bogost, I. Persuasive Games: Exploitationware. Gamasutra, 2011. Available online: http://www.gamasutra.com/view/feature/6366/persuasive_games_exploitationware.php (accessed on 29 June 2019).

24. Desurvire, H.; Wiberg, C. Game Usability Heuristics (PLAY) for Evaluating and Designing Better Games: The Next Iteration; Online Communities and Social Computing; Springer: Berlin/Heidelberg, Germany, 2009; pp. 557–566.

25. Tromp, N.; Hekkert, P.; Verbeek, P.P. Design for Socially Responsible Behavior: A Classification of Influence Based on Intended User Experience. Des. Issues 2011, 27, 3–19.

26. Linehan, C.; Bellord, G.; Kirman, B.; Morford, Z.H.; Roche, B. Learning Curves: Analysing Pace and Challenge in Four Successful Puzzle Games. In Proceedings of the First ACM SIGCHI Annual Symposium On Computer-Human Interaction in Play (CHI PLAY), Toronto, ON, Canada, 19–21 October 2014; ACM Press: New York, NY, USA, 2014; pp. 181–190.

27. Llorens-Largo, F.; Gallego-Durán, F.J.; Villagrá-Arnedo, C.J.; Compañ Rosique, P.; Satorre-Cuerda, R.; Molina-Carmona, R. Gamification of the Learning Process: Lessons Learned. IEEE Rev. Iberoamericana Tecnol. Aprendizaje 2016, 11, 227–234.

28. Villagrá-Arnedo, C.; Gallego-Durán, F.J.; Molina-Carmona, R.; Llorens-Largo, F. PLMan: Towards a Gamified Learning System. In Learning and Collaboration Technologies; Zaphiris, P., Ioannou, A., Eds.; Springer International Publishing: Cham, Switzerland, 2016; Volume 9753, pp. 82–93.

29. Deci, E.L.; Ryan, R.M. Handbook of Self-Determination Research; University Rochester Press: Rochester, NY, USA, 2004.

30. Demaine, E.D.; Grandoni, F. (Eds.) Super Mario Bros. is Harder/Easier Than We Thought, Leibniz International Proceedings in Informatics (LIPIcs); Schloss Dagstuhl–Leibniz-Zentrum fuer Informatik: Dagstuhl, Germany, 2016; Volume 49.

31. Nacke, L.; Lindley, C.A. Flow and Immersion in First-person Shooters: Measuring the Player’s Gameplay Experience. In Proceedings of the 2008 Conference on Future Play: Research, Play, Share, Toronto, ON, Canada, 3–5 November 2008; ACM: New York, NY, USA, 2008; pp. 81–88.

32. Csikszentmihalyi, M. Flow: The Psychology of Optimal Experience; Harper Perennial: New York, NY, USA, 1991.

33. Selye, H. The general adaptation syndrome and the diseases of adaptation. J. Allergy Clin. Immunol. 1946, 17, 231–247.

34. Forget, A.; Chiasson, S.; Biddle, R. Lessons from Brain Age on persuasion for computer security. In Proceedings of the 27th International Conference on Human Factors in Computing Systems, Boston, MA, USA, 4–9 April 2009; pp. 4435–4440.

35. Ayton, P.; Fischer, I. The Hot Hand Fallacy and the Gambler’s Fallacy: Two faces of Subjective Randomness? Mem. Cognit. 2005, 32, 1369–1378.

36. Shannon, C.E. A mathematical theory of communication. Bell Syst. Tech. J. 1948, 27, 379–423.

37. Prensky, M. Digital Game-Based Learning; McGraw-Hill: New York, NY, USA, 2001.

38. Prensky, M. Don’t Bother Me Mom–I’m Learning! Paragon House Publishers: St. Paul, MN, USA, 2006.

39. Huizinga, J. Homo Ludens: A Study of the Play-Element in Culture; Beacon Press: Boston, MA, USA, 1955.

40. Jensen, L.J.; Barreto, D.; Valentine, K.D. Toward Broader Definitions of “Video Games”: Shifts in Narrative, Player Goals, Subject Matter, and Digital Play Environments. In Examining the Evolution of Gaming and Its Impact on Social, Cultural, and Political Perspectives; IGI Global: Hershey, PA, USA, 2016; pp. 1–37.

41. Hanus, M.D.; Fox, J. Assessing the effects of gamification in the classroom: A longitudinal study on intrinsic motivation, social comparison, satisfaction, effort, and academic performance. Comput. Educ. 2015, 80, 152–161.

42. Llorens-Largo, F.; Molina-Carmona, R.; Gallego-Durán, F.J.; Villagrá-Arnedo, C.J. Guía para la Gamificación de Actividades de Aprendizaje. Novatica 2018. Available online: https://www.novatica.es/guia-para-la-gamificacion-de-actividades-de-aprendizaje (accessed on 4 November 2019).

| Characteristic | 0 | 1 | 2 |
|---|---|---|---|
| Open Decision Space | Not open; No real decisions to take; Only correct/incorrect | Decision-tree like; Designed decision space; With options but limited | Completely open; Multiple/infinite options; Continuous decision spaces |
| Challenge | Single difficulty/activity; No activity-ability match; Punishments prevent beneficial attempts | Incremental difficulty; Speculative design; Subjective matching; Subjective measures | Sinusoidal difficulty progression; Designed activity-ability match; Measured, balanced, tested |
| Learning by Trial and Error | Failure punished; Max. marks only achievable without failure | Failure permitted; Max. marks achievable with some failures | Failure encouraged for learning; Max. marks achievable independent of failures |
| Progress Assessment | No progress measures; No feedback on progress | Some progress measures defined; Some feedback on status/progress; Lack of precision | All progress defined; All progress measured; Detailed feedback on status/progress; Next steps are clear |
| Feedback | None/minimal feedback response to actions; Cause-effect learning is difficult/impossible | Some feedback response; Some actions w/feedback; Feedback not immediate; Some cause-effect learning is possible | All actions produce cause-effect feedback; Feedback immediate or timely adequate; Cause-effect learning |
| Randomness | Everything is predictable; No randomness involved; No surprises | Some unpredictability; Some random events or parts of activities; Speculative/casual design of random parts | Measured unpredictable content and random parts of activities; Purposively designed; Surprises included, designed and balanced |
| Discovery | No new content; No discovery; No unlocking; Content is fixed | Activities present new content on progress; Some unlockable content; New content does not deliver surprises | New content is presented at a measured pace; Discoverable content rewards user interest; Surprises on discovery |
| Emotional Entailment | No design that targets emotions; No characters, stories or aesthetics; Focus on factual content | Some form of design to target emotions; Use of template stories, characters or aesthetics; Imaginary experiences | Specifically-designed characters, stories and/or aesthetics; Design focuses on creating an emotional experience |
| Playfulness Enabled | Concrete goals; Specific procedures; No room to experiment; No curiosity generated | Selectable goals and/or procedures; Room for development of personal creations; Optional activities with creative component | Selectable/generable goals; Creative procedures; Users may play with goals, content and procedures in non-predesigned ways; Curiosity rewarded |
| Automation | No automation; Manual intervention; All or most of the rules are manually enforced; Slow feedback response time | Some level of automation; Optimized manual intervention; Rules are partly enforced in an automatic way; Improved feedback response time | Everything automated; None or minimal manual intervention required; Rules are/can be enforced automatically; Immediate or fastest feedback response time |
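The rubric lends itself to a simple encoding: ten characteristics, each scored 0, 1 or 2. The sketch below records the per-characteristic scores given in the four application samples and validates them. Adding the scores into a single total out of 20, and listing the zero-scored characteristics as candidates for redesign, are assumptions of this sketch. The names `total_score`, `weakest` and `SAMPLES` are hypothetical.

```python
CHARACTERISTICS = (
    "Open Decision Space",
    "Challenge",
    "Learning by Trial and Error",
    "Progress Assessment",
    "Feedback",
    "Randomness",
    "Discovery",
    "Emotional Entailment",
    "Playfulness Enabled",
    "Automation",
)

# Scores copied from the four application samples, in rubric order.
SAMPLES = {
    "Super Mario Bros (NES)": (2, 2, 2, 1, 2, 1, 2, 2, 1, 2),
    "Unsuccessful 16-week gamified semester": (1, 0, 0, 1, 1, 0, 0, 0, 0, 0),
    "Single linear-equations activity": (1, 0, 0, 0, 0, 0, 0, 0, 1, 0),
    "Gamified linear-equations activity": (1, 1, 2, 2, 1, 2, 1, 1, 0, 2),
}


def validate(scores):
    """Raise ValueError unless there is one score in {0, 1, 2} per characteristic."""
    if len(scores) != len(CHARACTERISTICS):
        raise ValueError("expected one score per characteristic")
    for score in scores:
        if score not in (0, 1, 2):
            raise ValueError(f"invalid score: {score!r}")


def total_score(scores):
    """Sum of the ten scores (assumption: an unweighted total out of 20)."""
    validate(scores)
    return sum(scores)


def weakest(scores):
    """Names of the characteristics scored 0, as candidates for redesign."""
    validate(scores)
    return [name for name, score in zip(CHARACTERISTICS, scores) if score == 0]


for design, scores in SAMPLES.items():
    print(f"{design}: {total_score(scores)}/20, scored 0 in: {weakest(scores)}")
```

Under this unweighted sum the four samples total 17, 3, 2 and 13 out of 20 respectively. For the gamified activity, the only characteristic still scored 0 is Playfulness Enabled.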

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Gallego-Durán, F.J.; Villagrá-Arnedo, C.J.; Satorre-Cuerda, R.; Compañ-Rosique, P.; Molina-Carmona, R.; Llorens-Largo, F. A Guide for Game-Design-Based Gamification. Informatics 2019, 6, 49. https://doi.org/10.3390/informatics6040049
