1. Introduction

Spatial autocorrelation is fundamental to spatial science (Getis [1]). This is because it describes the similarity between signals on adjacent vertices. Strong positive (resp. negative) spatial autocorrelation occurs when most signals on adjacent vertices take similar (resp. dissimilar) values. If such clear tendencies do not exist, spatial autocorrelation is weak. Geary’s [2] c, which is a spatial generalization of the von Neumann [3] ratio, has traditionally been a popular measure of spatial autocorrelation. There exists

See, e.g., de Jong et al. [4] (Equation (6)). Herein, we provide a new perspective on Geary’s c. We discuss this using concepts from spectral graph theory/linear algebraic graph theory and, based on our analysis, provide a recommendation for applied studies.

To present our contributions more precisely, let us clarify the graph considered herein. Let

, where

, denote an undirected graph without loops and multiple edges. We assume that

n (the number of vertices) and

m (the number of edges) are such that

and

, respectively. Then,

m satisfies

. For

, let

and

. Then, for

,

,

,

if

, and

. Accordingly,

is a non-negative, symmetric, hollow, and nonzero matrix.

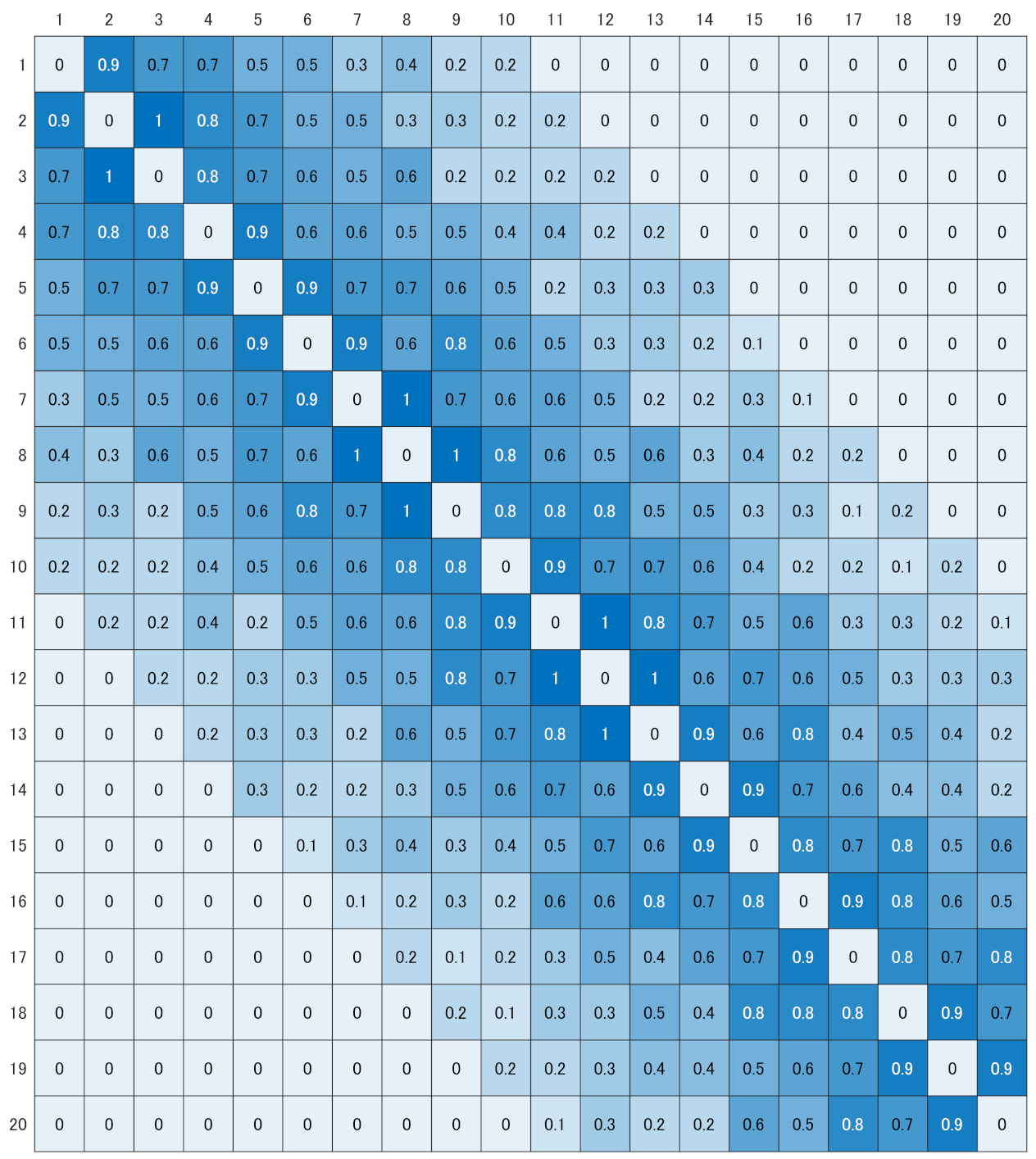

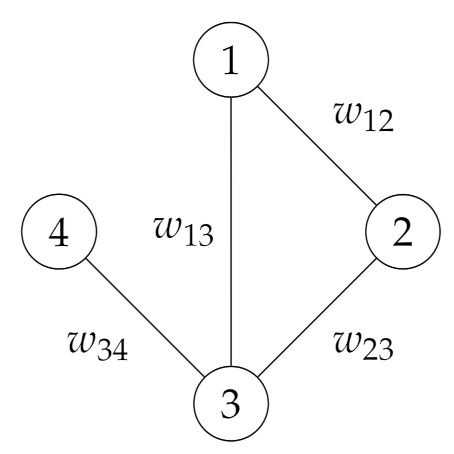

Example 1. As an example of , consider the graph such that and . See Figure 1, which depicts . In this case, given that and do not belong to E, the corresponding , denoted by , iswhich is a non-negative, symmetric, hollow, and nonzero matrix. Let

denote the observation (signal) on the vertex

i for

such that

, where

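. Following Cliff and Ord [5,6,7,8], Geary’s c is defined as

$$c = \frac{(n-1)\sum_{i=1}^{n}\sum_{j=1}^{n} w_{ij}(x_i - x_j)^2}{2\,\Omega\sum_{i=1}^{n}(x_i - \bar{x})^2}, \tag{3}$$

where $x_i$ is the observation on the vertex $i$, $\bar{x}$ is the mean of $x_1, \ldots, x_n$, $w_{ij}$ is the weight attached to the pair of vertices $i$ and $j$, and $\Omega$ denotes the sum of all $w_{ij}$ for $i, j = 1, \ldots, n$, i.e., $\Omega = \sum_{i=1}^{n}\sum_{j=1}^{n} w_{ij}$, which is positive by assumption. Note that, as stated, Geary’s c is a spatial generalization of the von Neumann [3] ratio: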

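Let $\mathbf{D} = \mathrm{diag}(d_1, \ldots, d_n)$, where $d_i = \sum_{j=1}^{n} w_{ij}$ for $i = 1, \ldots, n$, and

$$\mathbf{L} = \mathbf{D} - \mathbf{W}, \tag{5}$$

which is referred to as the graph Laplacian. By assumption, $\mathbf{L}$ is a symmetric matrix.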
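A minimal numerical sketch of the definitions so far, written in Python with NumPy: it computes Geary’s c from the double-sum definition and builds the graph Laplacian as the degree matrix minus the weight matrix. The function names `geary_c` and `graph_laplacian`, the five-vertex path graph, and the signal values are illustrative assumptions. For a path graph with unit weights, the definition reduces to the sum of squared successive differences divided by twice the sum of squared deviations from the mean, which agrees with a von Neumann-type ratio up to normalization.

```python
import numpy as np

def geary_c(W, x):
    """Geary's c from the double-sum definition (illustrative helper)."""
    W = np.asarray(W, dtype=float)
    x = np.asarray(x, dtype=float)
    n = x.size
    omega = W.sum()                              # sum of all weights
    diff2 = (x[:, None] - x[None, :]) ** 2       # (x_i - x_j)^2 for all pairs
    num = (n - 1) * (W * diff2).sum()
    den = 2.0 * omega * ((x - x.mean()) ** 2).sum()
    return num / den

def graph_laplacian(W):
    """Degree matrix minus weight matrix (illustrative helper)."""
    W = np.asarray(W, dtype=float)
    return np.diag(W.sum(axis=1)) - W

# Assumed example: path graph on 5 vertices with unit weights.
n = 5
W_path = np.zeros((n, n))
idx = np.arange(n - 1)
W_path[idx, idx + 1] = 1.0
W_path[idx + 1, idx] = 1.0

x = np.array([1.0, 2.0, 4.0, 3.0, 6.0])          # assumed signal values
c = geary_c(W_path, x)

# Path-graph special case: successive differences over twice the
# sum of squared deviations from the mean.
ratio = (np.diff(x) ** 2).sum() / (2.0 * ((x - x.mean()) ** 2).sum())

L_path = graph_laplacian(W_path)
print(c, ratio)
```

The Laplacian built this way is symmetric and each of its rows sums to zero, which is why the constant vector is always an eigenvector with eigenvalue zero.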

Example 2. As an example of , we show , which denotes the graph Laplacian of . That is,which is a symmetric matrix. As

is a real symmetric matrix, it can be decomposed as

where

is an orthogonal matrix and

such that

. Concerning the eigenvalues of

, we assume

.

Remark 1. We note that by assuming we exclude the case such that . Then, it follows that We exclude it because in this case Geary’s c equals one for any . For more details, see Appendix A.1.

In spectral graph theory/linear algebraic graph theory, the following linear transformation:

where

, is referred to as the graph Fourier transform of

. In addition,

and

for

are referred to as graph Laplacian eigenvalues and graph Laplacian eigenvectors, respectively (see Hammond et al. [9] and Shuman et al. [10]). Let

Then, given

,

can be represented as a linear combination of graph Laplacian eigenvectors,

, as follows:

Moreover, we have

which represents Parseval’s identity in the graph Fourier transform; see, e.g., Shuman et al. [11]. (A proof of (11) is provided in Appendix A.2.)
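The spectral decomposition, the graph Fourier transform, and Parseval’s identity can be illustrated numerically. The following sketch assumes a small hypothetical weight matrix and signal; the variable names are illustrative. Note that `numpy.linalg.eigh` returns eigenvalues in ascending order, which need not match the ordering convention adopted in the text.

```python
import numpy as np

# Hypothetical non-negative, symmetric, hollow weight matrix on 4 vertices.
W = np.array([[0.0, 1.0, 1.0, 0.0],
              [1.0, 0.0, 1.0, 0.0],
              [1.0, 1.0, 0.0, 2.0],
              [0.0, 0.0, 2.0, 0.0]])
L = np.diag(W.sum(axis=1)) - W               # graph Laplacian

# eigh handles real symmetric matrices: ascending eigenvalues and
# orthonormal eigenvectors stored as the columns of U.
lam, U = np.linalg.eigh(L)

x = np.array([3.0, 1.0, 4.0, 1.5])           # assumed signal
alpha = U.T @ x                              # graph Fourier transform of x
x_back = U @ alpha                           # linear combination of eigenvectors

print(np.allclose(L, U @ np.diag(lam) @ U.T))    # spectral decomposition
print(np.allclose(x_back, x))                    # inverse transform recovers x
print(np.isclose(alpha @ alpha, x @ x))          # Parseval's identity
```

Because the matrix of eigenvectors is orthogonal, the transform preserves the sum of squares, which is exactly what Parseval’s identity states.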

Remark 2. (i) Harvey [12] (Equation (2.13)) represents Parseval’s identity in the Fourier representation of time series, which can be regarded as a special case of (11). See also Anderson [13] (Section 4.2.2). in Anderson [13] (Equations (14) and (21)) corresponds to in (6) for the case in which equals in Strang [14] (p. 136). (ii) The discrete cosine transform developed by Ahmed et al. [15] is an example of a graph Fourier transform. For more details, see Appendix A.14.

Let

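$\mathbf{z}$ denote the vector of the standard scores of $x_1, \ldots, x_n$:

$$\mathbf{z} = \left[\frac{x_1 - \bar{x}}{s}, \ldots, \frac{x_n - \bar{x}}{s}\right]',$$

where s denotes the sample standard deviation of $x_1, \ldots, x_n$, i.e., the positive square root of $\frac{1}{n-1}\sum_{i=1}^{n}(x_i - \bar{x})^2$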

, and denote the graph Fourier transform of

by

:

Now, we are ready to state our contributions. As stated, this paper reconsiders Geary’s c using concepts from spectral graph theory. Our contributions herein are twofold.

We present three types of representations for Geary’s c. As the first type of representation, we give two matrix form representations that use the graph Laplacian. As the second type of representation, we express it using the graph Fourier transforms of the observations and of their standard scores. As the third type of representation, we show its expression using squared Pearson’s correlation coefficients between the observations and the graph Laplacian eigenvectors.

We illustrate that the spatial autocorrelation measured by Geary’s c is positive (resp. negative) if spatially smoother (resp. less smooth) graph Laplacian eigenvectors are dominant. Here, we note that is spatially smoother than for .

The organization of the paper is as follows. Section 2 fixes notation and presents key preliminary results for the graph Laplacian in (5). Section 3 and Section 4 present the contributions stated above in order. Section 5 concludes the paper and provides a recommendation for applied studies.

3. Three Types of Representations for Geary’s c

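In this section, we present three types of representations for Geary’s c. In Section 3.1, we show that it can be represented using the graph Laplacian. In Section 3.2, we show that it can be expressed using the graph Fourier transforms of the observations and of their standard scores. In Section 3.3, we show that it can be expressed using squared Pearson’s correlation coefficients between the observations and the graph Laplacian eigenvectors. Subsequently, in Section 3.4, we clarify the relationship between the quantities used in these three representations.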

3.1. Graph Laplacian Representation

Geary’s c in (3) can be expressed in matrix notation as follows.

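Proposition 1. (i) Geary’s c can be represented using the graph Laplacian $\mathbf{L}$ as

$$c = \frac{(n-1)\,\mathbf{x}'\mathbf{L}\mathbf{x}}{\Omega\sum_{i=1}^{n}(x_i - \bar{x})^2}. \tag{24}$$

(ii) Geary’s c can also be represented using $\mathbf{L}$ very succinctly as

$$c = \frac{\mathbf{z}'\mathbf{L}\mathbf{z}}{\Omega}. \tag{25}$$

Here, $\mathbf{x} = [x_1, \ldots, x_n]'$, $\mathbf{z}$ is the vector of the standard scores of $\mathbf{x}$, and $\Omega$ is the sum of all weights.

Remark 4. (i) (24) corresponds to de Jong et al. [4] (Equation (22)). The matrix in de Jong et al. [4] is in our notation. It is notable that de Jong et al. [4] derived this. (ii) (24) also corresponds to Lebichot and Saerens [20] (Equation (7)). Here, we point out that the factor 2 in that equation should be removed.

3.2. Graph Fourier Transform Representation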

In addition, given

, we have

Substituting (26) and (27) into (24), it follows that

Likewise, Geary’s c can also be represented as

Recall that the coefficients in (28) (resp. (29)) are the graph Fourier transform of the observations (resp. of their standard scores).

We summarize the properties obtained above in the following proposition.

Proposition 2. (i) Let and for . Then, Geary’s c can be expressed using as where both and are weighted averages of . In addition, satisfy (ii) Let for . Then, Geary’s c can be expressed using as and it is a weighted average of , which are non-negative. In addition, satisfy

Remark 5. (i) Given , we have Given (30), multiplying (34) by yields We note that the bounds of Geary’s c given by (35) were derived by de Jong et al. [4]. In addition, given that by assumption, it follows that (ii) Let . Then, given , (32) can be rewritten as Thus, c is also a weighted average of .
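The representations in Propositions 1 and 2 can be checked numerically. The sketch below is a hedged illustration: it assumes a hypothetical connected four-vertex graph, a sample standard deviation with divisor n − 1, and ascending eigenvalues as returned by `numpy.linalg.eigh`. It compares the double-sum definition, the two graph Laplacian forms, and a spectral form in which c is a weighted average of the scaled eigenvalues, with weights given by the squared graph Fourier coefficients of the standard scores divided by n − 1.

```python
import numpy as np

# Hypothetical connected graph and signal (illustrative values only).
W = np.array([[0.0, 1.0, 1.0, 0.0],
              [1.0, 0.0, 1.0, 0.0],
              [1.0, 1.0, 0.0, 2.0],
              [0.0, 0.0, 2.0, 0.0]])
x = np.array([3.0, 1.0, 4.0, 1.5])

n = x.size
omega = W.sum()                               # sum of all weights
L = np.diag(W.sum(axis=1)) - W                # graph Laplacian
dev = x - x.mean()
ss = dev @ dev                                # sum of squared deviations

# (a) double-sum definition
diff2 = (x[:, None] - x[None, :]) ** 2
c_def = (n - 1) * (W * diff2).sum() / (2.0 * omega * ss)

# (b) quadratic form in the graph Laplacian
c_lap = (n - 1) * (x @ L @ x) / (omega * ss)

# (c) standard scores, with s computed using divisor n - 1
z = dev / np.sqrt(ss / (n - 1))
c_z = (z @ L @ z) / omega

# (d) spectral form: weighted average of scaled eigenvalues
lam, U = np.linalg.eigh(L)
beta = U.T @ z                                # graph Fourier transform of z
weights = beta ** 2 / (n - 1)                 # non-negative, sum to one
c_spec = ((n - 1) * lam / omega) @ weights

# Bounds; lam[1] is the smallest eigenvalue with a non-constant
# eigenvector because this example graph is connected.
lower = (n - 1) * lam[1] / omega
upper = (n - 1) * lam[-1] / omega
print(c_def, c_lap, c_z, c_spec, (lower, upper))
```

The weight attached to the zero eigenvalue vanishes because the standard scores have mean zero, so only the non-constant eigenvectors contribute to the average.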

From Proposition 2, we have the following results.

Corollary 1 implies that plotting or is valuable for detecting spatial autocorrelation in .

3.3. Pearson’s Correlation Coefficient Representation

In this section, we express Geary’s c using Pearson’s correlation coefficient.

Denote Geary’s

c for the case in which

equals

by

:

Then, we have the following results.

Lemma 2. can be expressed using as follows: which thus satisfy and .

Remark 6. Given (35) and (39), (resp. ) is the lower (resp. upper) bound of Geary’s c.

In addition, we have the following result.

Lemma 3. equals for .

Then, given Lemmata 2 and 3, we have the following representation of Geary’s c.

Proposition 3. For , Geary’s c can be expressed using Pearson’s correlation coefficients as In addition, such correlation coefficients satisfy .

Remark 7. Dray [21] represented Moran’s I using Pearson’s correlation coefficient. in Proposition 3 corresponds to Dray [21] (Equation (6)).

From Proposition 3, we have the following results.

Corollary 2. For , it follows that

Corollary 2 implies that plotting is also valuable for detecting spatial autocorrelation in .
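The Pearson’s correlation coefficient representation can be sketched numerically as follows. The example assumes a hypothetical connected graph, so that the constant vector is the only eigenvector with eigenvalue zero; that eigenvector is skipped because its correlation with any signal is undefined. The helper name `geary_c` and all numerical values are illustrative assumptions.

```python
import numpy as np

def geary_c(W, x):
    """Geary's c from the double-sum definition (illustrative helper)."""
    n = x.size
    diff2 = (x[:, None] - x[None, :]) ** 2
    ss = ((x - x.mean()) ** 2).sum()
    return (n - 1) * (W * diff2).sum() / (2.0 * W.sum() * ss)

# Hypothetical connected graph and signal.
W = np.array([[0.0, 1.0, 1.0, 0.0],
              [1.0, 0.0, 1.0, 0.0],
              [1.0, 1.0, 0.0, 2.0],
              [0.0, 0.0, 2.0, 0.0]])
x = np.array([3.0, 1.0, 4.0, 1.5])
n = x.size

L = np.diag(W.sum(axis=1)) - W
lam, U = np.linalg.eigh(L)                    # column 0 is the constant eigenvector

# Geary's c when the signal equals each non-constant eigenvector.
c_k = np.array([geary_c(W, U[:, k]) for k in range(1, n)])

# Squared Pearson correlation between x and each non-constant eigenvector.
r2 = np.array([np.corrcoef(x, U[:, k])[0, 1] ** 2 for k in range(1, n)])

c_from_corr = c_k @ r2                        # weighted average of the c_k
print(c_from_corr, geary_c(W, x), r2)
```

Plotting `r2` against the eigenvalue index gives a spectrum-like display: mass concentrated on eigenvectors with small eigenvalues corresponds to c below one, and mass on large eigenvalues to c above one.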

3.4. Some Remarks

Here, we clarify the relationship between

,

, and

for

. They are related as

for

. Therefore,

,

, and

provide essentially the same information. Nevertheless, among them, we prefer

to

and

. This is because it satisfies

and thus its distribution resembles a probability distribution.

We note that (16) is obtainable by dividing (11) by , and therefore it is equivalent to Parseval’s identity in the graph Fourier transform in (11). Likewise, (33) is also equivalent to it. This is because (33) is obtainable by dividing (11) by

.

4. An Illustration of When Geary’s c Becomes Greater or Less Than One

In this section, we illustrate that Geary’s c becomes less (resp. greater) than one if spatially smoother (resp. less smooth) graph Laplacian eigenvectors are dominant. In other words, we show that the spatial autocorrelation measured by Geary’s c is positive (resp. negative) if spatially smoother (resp. less smooth) graph Laplacian eigenvectors are dominant. Recall that, from (22),

is spatially smoother than

for

.

For this purpose, let

where

a in (44) is a real number. Then, it immediately follows that

and

Other than these, concerning , we have the following results.

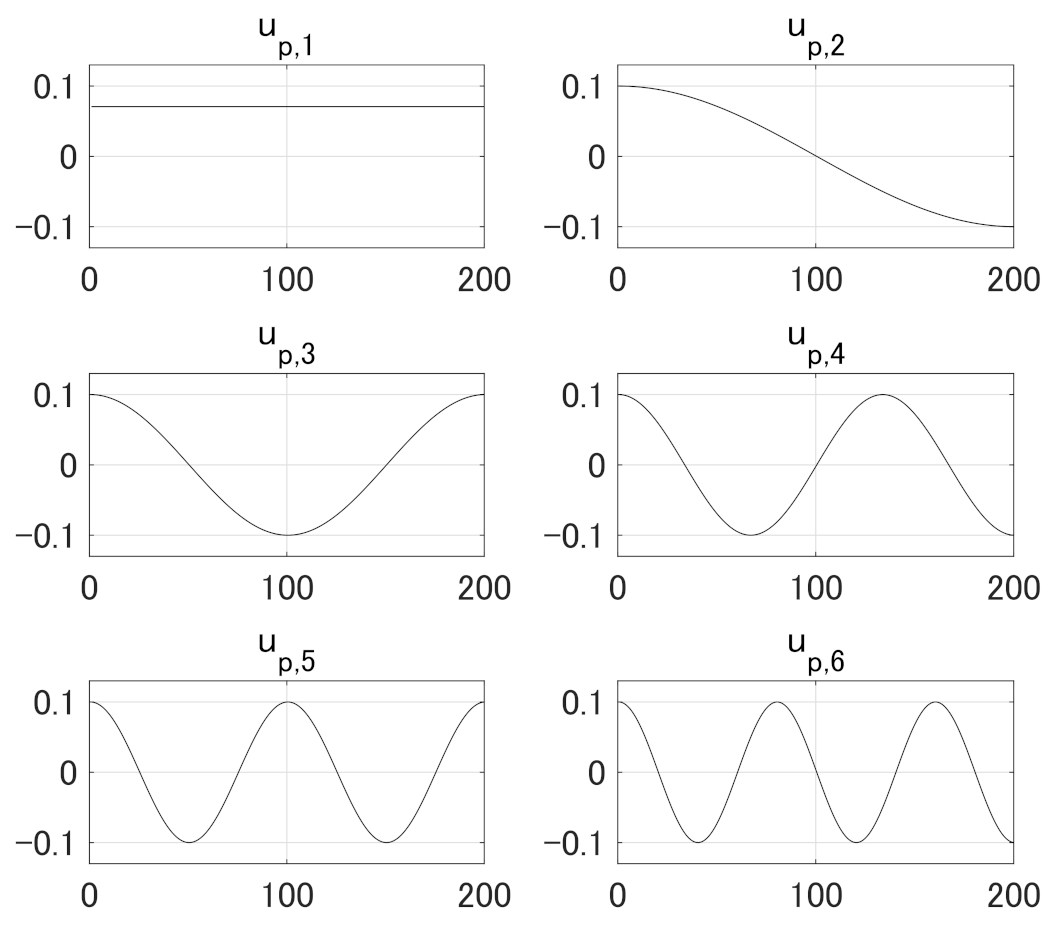

Figure 2 illustrates the results in Lemma 4. It plots

for

and

.

Let

where

. Here,

is a real number, and

for

are defined in (44). Recall that

is a basis of the n-dimensional Euclidean space, and any vector in the space can be represented uniquely as a linear combination of

. In addition, we note that if

for

, then

. See (10).

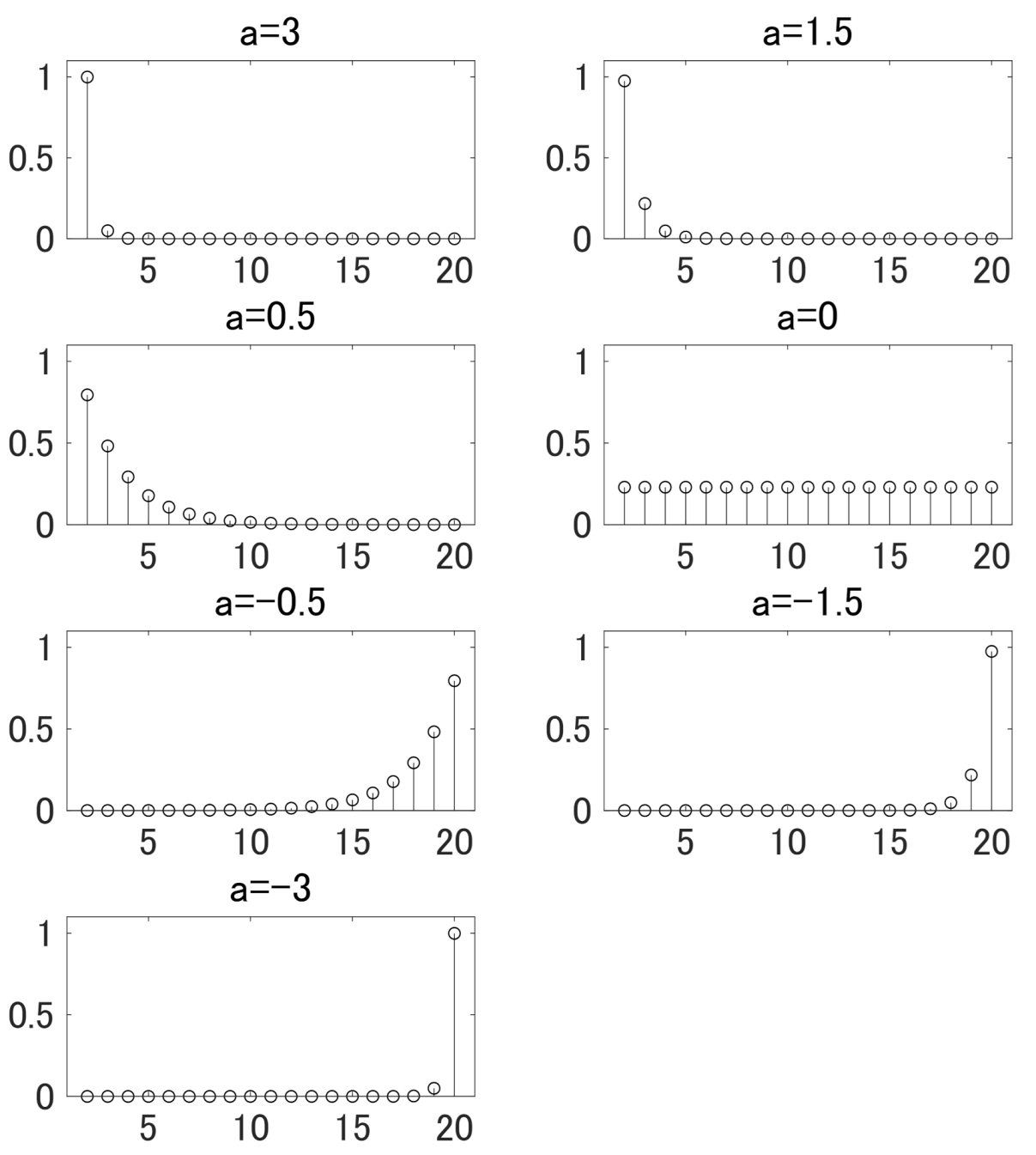

Given the results presented above on and the definition of , we have the following results.

Proposition 4. (i) c can be represented as (ii) satisfies the following inequalities: (iii) depends on a in (44) as

- (i) Recall that (resp. ) denotes the minimum (resp. maximum) of the graph Laplacian eigenvalues, , and is the average of them. In addition, from (7), it follows that
- (ii) Lemma 4 and Proposition 4 imply that
  - (a) as a increases from 0 to ∞, spatially smoother graph Laplacian eigenvectors become more dominant, and accordingly becomes spatially smoother;
  - (b) as a decreases from 0 to −∞, spatially less smooth graph Laplacian eigenvectors become more dominant, and accordingly becomes spatially less smooth; and
  - (c) if a equals 0, then all graph Laplacian eigenvectors contribute to equally.

Let

where

. Note that

represents Geary’s

c for

defined in (47).

Then, we have the following results.

Proposition 5. (i) in (52) can be represented as follows: (ii) satisfies the following inequalities: (iii) depends on a in (44) as

Remark 8. (i) From (54), it follows that does not affect . (ii) Given that for and , in (47) is a weighted average of the graph Laplacian eigenvalues, .

By combining Lemma 4 and Proposition 5, it follows that (i) as a increases from 0 to ∞, spatially smoother graph Laplacian eigenvectors become more dominant, and accordingly the corresponding Geary’s c tends to , which is its lower bound; (ii) as a decreases from 0 to −∞, spatially less smooth graph Laplacian eigenvectors become more dominant, and accordingly the corresponding Geary’s c tends to , which is its upper bound; and (iii) if a equals 0, then all graph Laplacian eigenvectors contribute to equally, and the corresponding Geary’s c equals 1.
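The qualitative behavior summarized above can be sketched numerically. The weighting used here is a hypothetical stand-in and is not the definition in (44): the signal is a combination of the non-constant graph Laplacian eigenvectors with coefficients exp(−aλ/2), so that a positive a emphasizes eigenvectors with small eigenvalues (spatially smoother) and a negative a emphasizes those with large eigenvalues. The graph, the helper names `geary_c` and `signal`, and the values of a are likewise assumptions.

```python
import numpy as np

def geary_c(W, x):
    """Geary's c from the double-sum definition (illustrative helper)."""
    n = x.size
    diff2 = (x[:, None] - x[None, :]) ** 2
    ss = ((x - x.mean()) ** 2).sum()
    return (n - 1) * (W * diff2).sum() / (2.0 * W.sum() * ss)

# Hypothetical connected graph.
W = np.array([[0.0, 1.0, 1.0, 0.0],
              [1.0, 0.0, 1.0, 0.0],
              [1.0, 1.0, 0.0, 2.0],
              [0.0, 0.0, 2.0, 0.0]])
n = W.shape[0]
omega = W.sum()
L = np.diag(W.sum(axis=1)) - W
lam, U = np.linalg.eigh(L)                    # ascending eigenvalues

def signal(a):
    # HYPOTHETICAL weights (not the paper's (44)): exp(-a * lam / 2)
    # on the non-constant eigenvectors only.
    theta = np.exp(-0.5 * a * lam[1:])
    return U[:, 1:] @ theta

lower = (n - 1) * lam[1] / omega              # bound for a connected graph
upper = (n - 1) * lam[-1] / omega

a_grid = [-20.0, -1.0, 0.0, 1.0, 20.0]
c_values = [geary_c(W, signal(a)) for a in a_grid]
for a, c in zip(a_grid, c_values):
    print(a, c)
print(lower, upper)
```

Under this assumed weighting, equal coefficients (a = 0) give c equal to one because the scaled eigenvalues average to one over the non-constant eigenvectors, while large positive and negative a push c toward the lower and upper bounds, respectively.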

Let us illustrate Propositions 4 and 5 by specifying

. We consider three

’s. The first one is

in (2) with

,

,

, and

. As stated,

is a non-negative, symmetric, hollow, and nonzero matrix. The second one is

where

. This matrix is the adjacency matrix of a path graph

such that

and

. See

Figure A1, which depicts

where

. Observe that it is a non-negative, symmetric, hollow, and nonzero matrix. Some remarks on

are provided in Appendix A.14. The third one is

shown in heatmap style in

Figure A2. As shown in the figure,

is also a non-negative, symmetric, hollow, and nonzero matrix.
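For the path graph case, the link between the graph Fourier transform and the discrete cosine transform can be checked directly. The sketch below assumes unit weights and an illustrative size n = 6, and uses the known closed form for the path graph Laplacian: eigenvalues 2 − 2cos(πk/n) for k = 0, ..., n − 1, with eigenvectors whose i-th entry is proportional to cos(πk(i + 1/2)/n), i.e., the DCT-II basis.

```python
import numpy as np

n = 6                                         # assumed number of vertices
W = np.zeros((n, n))
i = np.arange(n - 1)
W[i, i + 1] = 1.0                             # adjacency matrix of a path graph
W[i + 1, i] = 1.0
L = np.diag(W.sum(axis=1)) - W                # path graph Laplacian

k = np.arange(n)
lam_closed = 2.0 - 2.0 * np.cos(np.pi * k / n)        # closed-form eigenvalues

# Column k holds the unnormalized DCT-II vector cos(pi * k * (i + 1/2) / n).
V = np.cos(np.pi * np.outer(np.arange(n) + 0.5, k) / n)

# Each column should satisfy L v_k = lam_k v_k.
residual = L @ V - V * lam_closed
print(np.abs(residual).max())
print(np.linalg.eigvalsh(L))
print(lam_closed)
```

Smaller k corresponds to a lower spatial frequency, so the ordering of the eigenvalues mirrors the ordering from smooth to oscillating eigenvectors.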

Table 1 tabulates the results. LB and UB, respectively, represent the lower bound and the upper bound of (resp. ) given by (50) (resp. (56)). From the table, we can confirm that these results clearly illustrate Propositions 4 and 5. That is to say, for all

’s, we can observe that as

a increases from 0 to 3 (resp. decreases from 0 to

),

becomes spatially smoother (resp. less smooth), and Geary’s c tends to its lower (resp. upper) bound.

Remark 9. (i) If , then equals . Thus, if , then it follows that . (ii) If , then equals . Thus, if , then it follows that . (iii) If , then equals . Thus, if , then it follows that .

5. Concluding Remarks

Herein, we have provided a new perspective on Geary’s c. We reconsidered it using concepts from spectral graph theory/linear algebraic graph theory.

First, we demonstrated three types of representations for it. The first is expressions based on graph Laplacian (Proposition 1), the second is expressions based on graph Fourier transform (Proposition 2), and the third is an expression based on Pearson’s correlation coefficient (Proposition 3).

Second, we illustrated that the spatial autocorrelation measured by Geary’s c is positive (resp. negative) if spatially smoother (resp. less smooth) graph Laplacian eigenvectors are dominant (Propositions 4 and 5 and Table 1).

In closing, based on our analysis, we provide a recommendation for applied studies: for detecting spatial autocorrelation, in addition to calculating Geary’s c, plotting the squared Pearson’s correlation coefficients between the observations and the graph Laplacian eigenvectors is valuable (see Section 3.4 for a related discussion). This is because such a plot provides more detailed information on the spatial autocorrelation measured by Geary’s c. Its usefulness is similar to that of frequency domain analysis of univariate time series.