- Feature Paper

- Article

A Boundary Control Problem for the Stationary Darcy–Brinkman–Jeffreys System

Evgenii S. Baranovskii, Mikhail A. Artemov, Alexander V. Yudin, and one further author

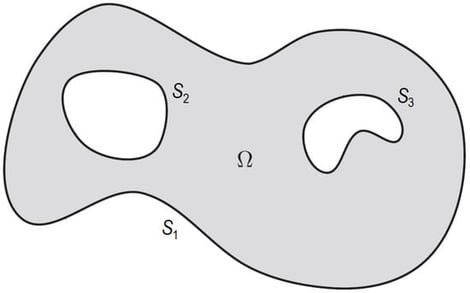

This paper deals with a boundary control problem for the Darcy–Brinkman–Jeffreys system, which describes steady-state 3D (or 2D) flows of an incompressible viscoelastic fluid through a porous medium. Applying the elliptic regularization method and arguments from topological degree theory, we prove a theorem on the weak solvability of the corresponding boundary value problem with an inhomogeneous Dirichlet boundary condition. Using this theorem, we obtain sufficient conditions for the existence of optimal weak solutions that minimize a given cost function. Moreover, we show that the set of all optimal weak solutions is bounded and sequentially weakly closed in an appropriate function space.

1 March 2026