Exploring the Impact of Generative AI ChatGPT on Critical Thinking in Higher Education: Passive AI-Directed Use or Human–AI Supported Collaboration?

Abstract

1. Introduction

2. Literature Review

2.1. What Higher Education Gains and Risks in the Age of Generative AI

2.2. GenAI Impact on Critical Thinking

3. Theoretical Framework

- What are students’ self-reported perceptions of how Generative AI enhances their critical thinking?

- To what extent do students demonstrate critical thinking while using Generative AI?

- How do students perceive the impact of guided GenAI ChatGPT on their critical thinking?

4. Methodology

4.1. Design

4.2. Participants

4.3. Data Collection

4.3.1. GenAI Critical Thinking Survey

4.3.2. ChatGPT Scripts

4.3.3. Semi-Structured Interviews

5. Results

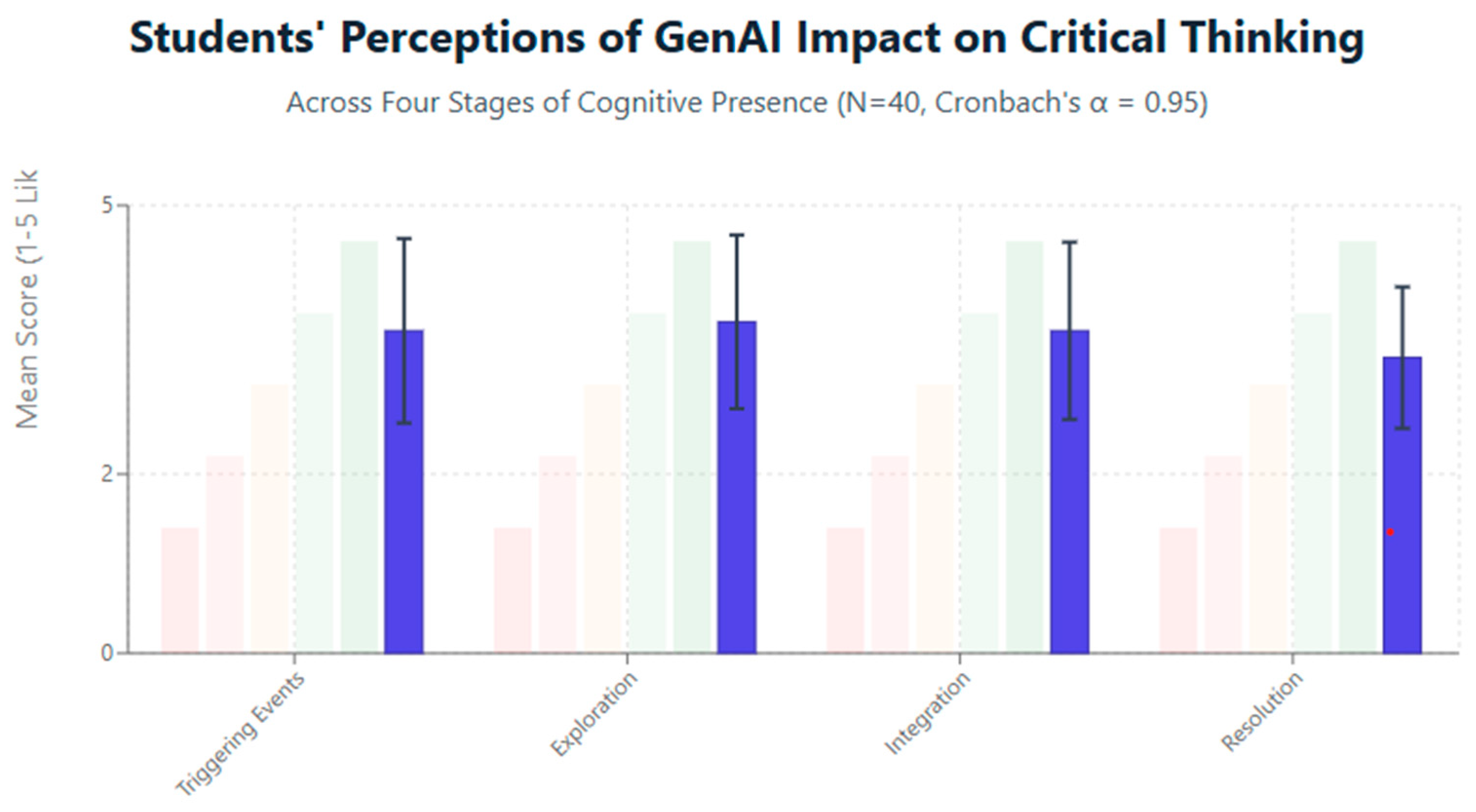

5.1. Students’ Perceptions of the Impact of Generative AI on Their Critical Thinking (Q1)

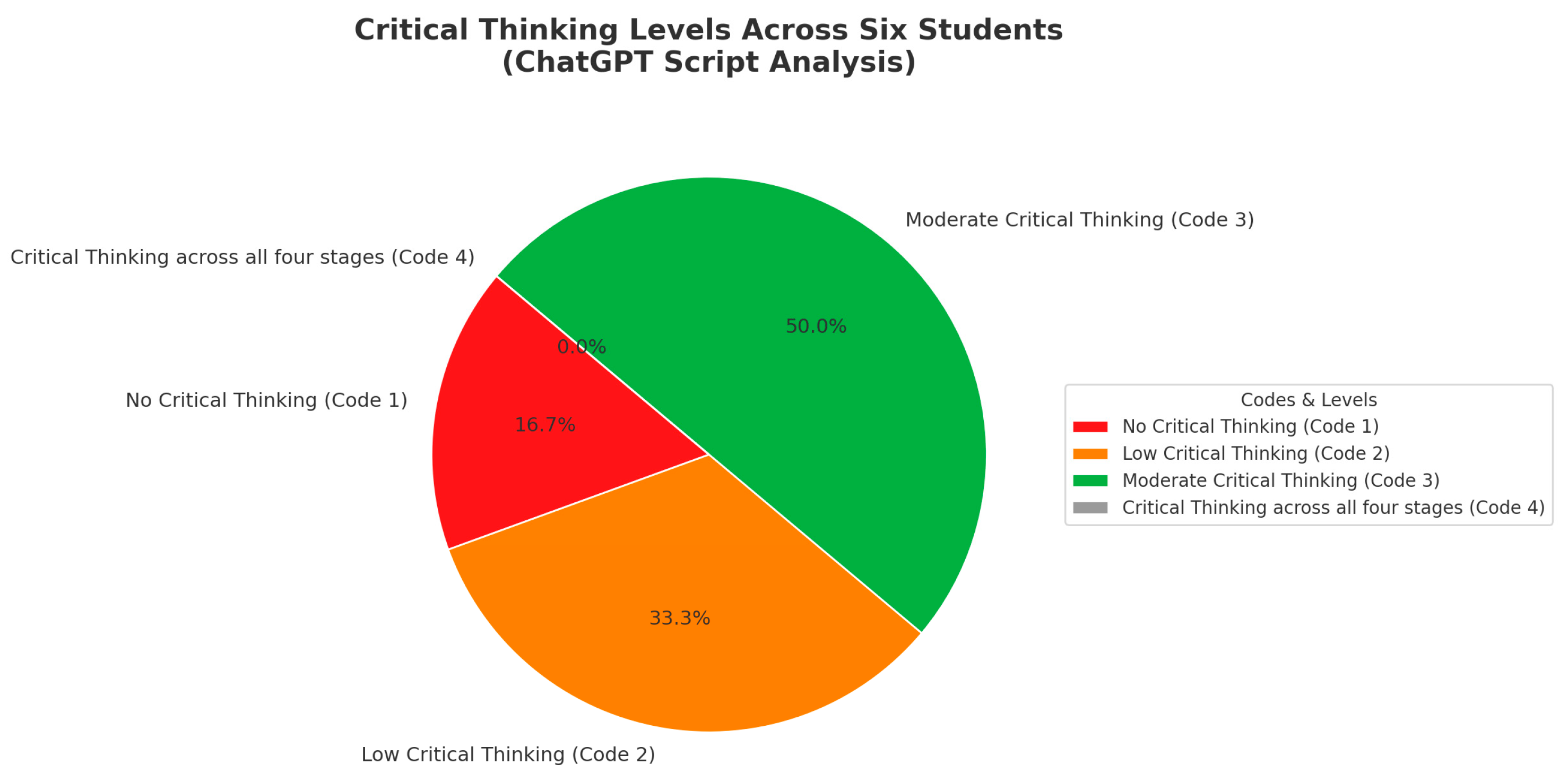

5.2. The Extent to Which Students Demonstrate Critical Thinking While Using GenAI (Q2)

5.2.1. Passive AI-Directed Interactions (Code 1–2; No/Low Critical Thinking)

5.2.2. Collaborative AI-Supported Interaction (Code 3; Moderate Critical Thinking)

5.3. The Impact of Guided GenAI ChatGPT Use on the Development of Critical Thinking (Q3)

5.3.1. Guided Use of GenAI Was Associated with Reported Improvements in Critical Thinking

5.3.2. Positive Impact of the Used Framework on GenAI Use

5.3.3. Critical Thinking Is Indispensable in Using GenAI

5.3.4. ChatGPT as a Scaffolding More Knowledgeable Other (MKO) Educational Tool

5.3.5. The Nature of the Human–ChatGPT Partnership

6. Discussion

6.1. What Do the Survey Responses Indicate?

6.2. Two Interaction Pathways with ChatGPT: AI Tutoring vs. Collaborative Personalized Support

6.3. Filling the Critical Thinking Gap Through Structured ChatGPT Use

7. Implications

7.1. How Can GenAI Be Positioned as a Thinking Partner?

7.2. GenAI Literacy and Structured Use of GenAI

7.3. Adapt Current Assessment Methods to GenAI

7.4. Toward AI-Mediated Inquiry Grounded in Human Oversight

8. Conclusions

8.1. Future Directions

8.2. Limitations

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| GenAI | Generative Artificial Intelligence |
| PI | Practical Inquiry |
| CP | Cognitive Presence |
| PICP | Practical Inquiry Cognitive Presence |
| CoI | Community of Inquiry |
| CT | Critical Thinking |

Appendix A

Appendix A.1. ChatGPT Scripts

| PICP Critical Thinking Stage Definitions | Code Frequency | Level of Critical Thinking | Definition of Code |
|---|---|---|---|
| 1. Triggering Events: A sense of puzzlement that signals curiosity to ask a question or seek a solution to a problem. Students then ask GenAI a question to find an answer or to better solve the problem. | Code 1 (25%): One stage | No | Students demonstrate only one cognitive stage (triggering, exploration, integration, or resolution), typically through a single, isolated question (a one-shot or single-level prompt). This shows narrow engagement. It does not indicate that the quality is low, but that the interaction lacks diversity in thinking. |
| 2. Exploration: Digging deeper to search for more information, looking at different ideas, and trying to understand the problem better by asking follow-up questions, brainstorming, or asking for examples. | Code 2 (50%): Two stages | Low | Students ask at least two related questions (two-shot) covering two distinct stages (e.g., triggering and exploration, or integration and resolution). These stages may appear in any order and show an emerging effort to deepen or apply thinking, but without full synthesis. |
| 3. Integration: Trying to make meaning by comparing the responses from GenAI with their background knowledge, social schema, and other external resources for validation, to decide what makes sense and what does not, and whether to accept a response or adapt it by adding what they need to reach a final outcome from their collaborative discussion with GenAI. | Code 3 (75%): Three stages | Moderate | Students ask at least three logically connected questions (shots) covering three stages, with visible effort to connect, synthesize, and reflect on AI input. This may include linking ChatGPT responses to prior knowledge, identifying contradictions, or preparing for application. |
| 4. Resolution: Students break down the complexity of the issue, problem, or idea to reach a conclusion or solution. They take everything they have learned and use it to organize their ideas, solve a problem, contextualize it, create something that fits the real situation, and apply it. | Code 4 (100%): The full cycle of four stages | Critical thinker | Students’ multi-shot questions and clarifications cover all four stages (triggering → exploration → integration → resolution). This represents a full critical thinking cycle, showing curiosity, depth, synthesis, and contextualized application of knowledge. |
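The coding rule in the table above depends only on how many distinct PICP stages a script covers, so it can be written down compactly. The following is a minimal Python sketch, not the authors' instrument: the function name `code_script`, the lowercase stage labels, and the input format (one stage label per student question) are assumptions made for illustration.

```python
# Hypothetical helper illustrating the Appendix A.1 coding rule.
# Assumption: each student question (shot) has already been labeled
# by a human coder with exactly one of the four PICP stages.

STAGES = ("triggering", "exploration", "integration", "resolution")

# Number of distinct stages covered -> (code, occurrence ratio in %, level)
LEVELS = {
    1: ("Code 1", 25, "No"),
    2: ("Code 2", 50, "Low"),
    3: ("Code 3", 75, "Moderate"),
    4: ("Code 4", 100, "Critical thinker"),
}


def code_script(shot_stages):
    """Return (code, ratio, level) for a list of per-question stage labels."""
    unknown = set(shot_stages) - set(STAGES)
    if unknown:
        raise ValueError(f"unknown stage labels: {sorted(unknown)}")
    covered = len(set(shot_stages))  # order and repetition do not matter
    if covered == 0:
        raise ValueError("script contains no coded questions")
    return LEVELS[covered]


# Seven questions that all stay in the triggering stage.
print(code_script(["triggering"] * 7))
# A script that touches three stages, in any order.
print(code_script(["exploration", "triggering", "integration", "exploration"]))
```

Because the rule counts distinct stages rather than questions, a long script that never leaves one stage is still coded at the lowest level, which matches the table's note that the code reflects diversity of thinking rather than quality.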

Appendix A.2. ChatGPT Script

| ChatGPT Script | Code | Number of Shots in Each Stage | Occurrence Ratio Scale with Critical Thinking Level |
|---|---|---|---|
| Chat 1: Kore asked 7 questions. | 1. Triggering event: sense of puzzlement | 7 (100%) | Code 1 (25%) No critical thinking |
| | 2. Exploration: information exchange | 0 (0%) | |
| | 3. Integration: connecting ideas | 0 (0%) | |
| | 4. Resolution: applying new ideas | 0 (0%) | |
| Chat 2: Mike asked 7 questions. | 1. Triggering event: sense of puzzlement | 3 (43%) | Code 2 (50%) Low level of critical thinking |
| | 2. Exploration: information exchange | 4 (57%) | |
| | 3. Integration: connecting ideas | 0 (0%) | |
| | 4. Resolution: applying new ideas | 0 (0%) | |
| Chat 3: Riley asked 15 questions. | 1. Triggering event: sense of puzzlement | 10 (66.67%) | Code 2 (50%) Low level of critical thinking |
| | 2. Exploration: information exchange | 5 (33.33%) | |
| | 3. Integration: connecting ideas | 0 (0%) | |
| | 4. Resolution: applying new ideas | 0 (0%) | |
| Chat 4: Kate asked 31 questions. | 1. Triggering event: sense of puzzlement | 13 (42%) | Code 3 (75%) Moderate level of critical thinking |
| | 2. Exploration: information exchange | 14 (45.16%) | |
| | 3. Integration: connecting ideas | 4 (12.90%) | |
| | 4. Resolution: applying new ideas | 0 (0%) | |
| Chat 5: Emily asked 35 questions. | 1. Triggering event: sense of puzzlement | 13 (37%) | Code 3 (75%) Moderate level of critical thinking |
| | 2. Exploration: information exchange | 15 (43%) | |
| | 3. Integration: connecting ideas | 7 (20%) | |
| | 4. Resolution: applying new ideas | 0 (0%) | |
| Chat 6: Robe asked 20 questions. | 1. Triggering event: sense of puzzlement | 6 (30%) | Code 3 (75%) Moderate level of critical thinking |
| | 2. Exploration: information exchange | 10 (50%) | |
| | 3. Integration: connecting ideas | 4 (20%) | |
| | 4. Resolution: applying new ideas | 0 (0%) | |
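Each percentage in the table above is a stage's share of one student's total questions, and the code follows from how many stages have a nonzero count. A minimal Python sketch of that arithmetic, using the counts reported for Chat 4 (Kate); the function names `stage_shares` and `stages_covered` and the dictionary layout are illustrative assumptions, not part of the study's materials.

```python
# Hypothetical helpers for recomputing the Appendix A.2 figures
# from per-stage question counts.


def stage_shares(counts):
    """Return each stage's share of all questions, in percent (2 decimals)."""
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no questions to summarize")
    return {stage: round(100 * n / total, 2) for stage, n in counts.items()}


def stages_covered(counts):
    """Count the stages in which the student asked at least one question."""
    return sum(1 for n in counts.values() if n > 0)


# Counts reported for Chat 4 (Kate, 31 questions).
kate = {"triggering": 13, "exploration": 14, "integration": 4, "resolution": 0}

shares = stage_shares(kate)
for stage, pct in shares.items():
    print(f"{stage}: {kate[stage]} ({pct}%)")
print("stages covered:", stages_covered(kate))
```

Recomputing the shares this way is a quick consistency check on a hand-built table: the shares for one script should sum to roughly 100%, and the number of stages covered should agree with the assigned code.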

Appendix B. Interview

Appendix B.1. Interview Questions

- Could you tell me how you used GenAI ChatGPT to explore a daunting question or an issue that you found challenging?

- How did GenAI ChatGPT stimulate you to ask questions?

- What issues or questions did you discuss with GenAI ChatGPT that you found relevant and challenging?

- Can you describe how GenAI ChatGPT helped you examine the problem or issue at hand?

- Did GenAI ChatGPT’s responses stimulate your follow-up questions?

- How did GenAI ChatGPT help you brainstorm or search for relevant information?

- In what ways did GenAI ChatGPT help you integrate new information and ideas with your existing understanding of the topic?

- Can you provide an example of how GenAI ChatGPT helped you better understand the topic or an assignment?

- How did GenAI ChatGPT assist you in synthesizing information and making connections between different ideas or perspectives?

- Can you tell me about a time when you used GenAI ChatGPT to help you figure out a real-life problem or an assignment?

- How did you use critical thinking to determine if the information provided was helpful or not?

- Have you ever used GenAI ChatGPT to bring together different ideas or perspectives to find an answer to a question to apply it in a real-life situation or work?

- Does using GenAI ChatGPT require a critical thinker? Please explain your reasoning.

- Thinking back on your experience, how did applying the critical thinking framework (triggering events, exploration, integration, and resolution) I provided you influence your use of ChatGPT?

- Would you say it changed your perspective on ChatGPT from what you believed before?

Appendix B.2. Interview Coding and Thematic Analysis

| Themes | Robe Codes | Emily Codes |
|---|---|---|
| 1. Guided use of GenAI ChatGPT was associated with reported improvements in critical thinking, as indicated in the four stages of CP. | | |
| General code 1. Triggering Events. Definition: A sense of puzzlement that signals curiosity to ask a question or seek a solution to a problem. Students then ask GenAI a question to find an answer or to better solve the problem. | | |
| General code 2. Exploration: Information Exchange. Definition: Digging deeper to search for more information, looking at different ideas, and trying to understand the problem better by asking follow-up questions, brainstorming, or asking for examples. | | |
| General code 3. Integration: Connecting Ideas. Definition: Trying to make meaning by comparing the responses from GenAI with their background knowledge, social schema, and other external resources for validation, to decide what makes sense and what does not, and whether to accept a response or adapt it by adding what they need to reach a final outcome from their collaborative discussion with GenAI. | | |
| General code 4. Resolution: Apply New Ideas. Definition: Students break down the complexity of the issue, problem, or idea to reach a conclusion or solution. They take everything they have learned and use it to organize their ideas, solve a problem, contextualize it, create something that fits the real situation, and apply it. | | |
| 2. Positive Impact of the Used Framework on GenAI Use. Full cycle of CT: Getting a complete picture of how the four stages above affect students’ use of GenAI. | | |
| 3. Critical Thinking Is Indispensable in Using GenAI. Definition: This theme emphasizes that while GenAI can support learning, students must bring their own critical thinking to the table. Effective use of ChatGPT requires reflective judgment, evaluation, and the ability to analyze and apply content independently. | | |
| 4. ChatGPT as a Scaffolding More Knowledgeable Other Educational Tool. Definition: Students recognize ChatGPT as a practical educational support tool, especially for writing, note-taking, idea synthesis, and accessing resources. While they note its efficiency and usefulness, they also acknowledge the need for critical evaluation and effective time management when using it. | | |
| 5. The Nature of the Human–ChatGPT Partnership. Definition: This theme captures how students describe their evolving relationship with ChatGPT, not just as a static tool but also as a responsive, personalized platform that adapts to their learning needs and feels more like a collaborative partner than a machine. | | |


| Demographic | Number | Percentage |
|---|---|---|
| Total participants | 40 | 100% |
| Gender (female) | 35 | 87.5% |
| Gender (male) | 5 | 12.5% |
| Age (18–24) | 25 | 62.5% |
| Age (25–34) | 8 | 20% |
| Age (35–44) | 4 | 10% |
| Age (45–54) | 3 | 7.5% |
| Ethnicity | White: 27, Asian: 5, Hispanic/Latino: 3, Other: 2, African American: 1, Native American/Alaska Native: 2 | 67.5% White, 12.5% Asian, 7.5% Hispanic/Latino, 5% Other, 2.5% African American, 5% Native American/Alaska Native |
| Academic level | Undergraduate: 25, Graduate: 15 | 62.5% Undergraduate, 37.5% Graduate |
| Disciplines | Elementary Education: 21, Early Childhood Education: 5, Curriculum and Instruction: 9, ESL/Bilingual Education: 3, Educational Technology: 1, Linguistics: 1 | 52.5% Elementary Education, 12.5% Early Childhood Education, 22.5% Curriculum and Instruction, 7.5% ESL/Bilingual Education, 2.5% Educational Technology, 2.5% Linguistics |

| Demographic | Number | Percentage |
|---|---|---|
| Total participants | 6 | 100% |
| Gender (female) | 6 | 100% |
| Educational background | Undergraduate/Elementary education | 100% Undergraduate |
| Age (18–24) | 6 | 100% |
| Ethnicity (White) | 6 | 100% |

| Demographic | Number | Percentage |
|---|---|---|
| Total participants | 2 | 100% |
| Gender (female) | 2 | 100% |
| Educational background | Undergraduate/Elementary education | 100% |
| Age (18–24) | 2 | 100% |
| Ethnicity (White) | 2 | 100% |

| Critical Thinking Skills | N | Minimum | Maximum | Mean | Std. Deviation |
|---|---|---|---|---|---|
| Triggering events | 40 | 1 | 5 | 3.6 | 1.03 |
| Exploration | 40 | 1 | 5 | 3.7 | 0.97 |
| Integration | 40 | 1 | 5 | 3.6 | 0.99 |
| Resolution | 40 | 1 | 5 | 3.3 | 0.79 |
| Average | 40 | | | 3.5 | 1.1 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Nasr, N.R.; Tu, C.-H.; Werner, J.; Bauer, T.; Yen, C.-J.; Sujo-Montes, L. Exploring the Impact of Generative AI ChatGPT on Critical Thinking in Higher Education: Passive AI-Directed Use or Human–AI Supported Collaboration? Educ. Sci. 2025, 15, 1198. https://doi.org/10.3390/educsci15091198
