2. Methods

2.1. Participants

A total of 242 teachers in grades 3–12 participated in the study. The majority of respondents (93%, n = 225) came from one school district, and 7% (n = 17) came from other school districts located in the southeast of the United States. The sample was predominantly female (78.7%, n = 185), with 20.9% (n = 49) identifying as male, and 0.4% (n = 1) preferring not to answer. Participants reported a range of teaching experience: the majority (59.2%, n = 129) had more than 10 years of experience, while 22.0% (n = 48) had 1–5 years, 15.1% (n = 33) had 6–10 years, and 3.7% (n = 8) had less than 1 year of experience. Further, they taught a range of grade levels with teachers from elementary grades 3–5 (28.9%; n = 70), middle school grades 6–8 (21.5%; n = 52), and high school grades 9–12 (38.4%; n = 93) with 27 (11.2%) not reporting the primary grade they teach (see Table 1 for detailed demographic information).

2.2. Instrument Development and Procedures

The survey instrument was developed to collect data on a range of variables, including demographic information (e.g., grade level taught, subject(s) taught, years of teaching experience, academic degrees, and gender), teachers’ knowledge and use of various AI educational tools, their familiarity with and understanding of AI in education, their confidence in using AI for various teaching-related practices, and their professional development experiences and needs.

The survey comprised four major sections. The first section focused on teachers’ awareness, familiarity, and understanding of AI tools and technologies. Participants were asked to indicate which categories of AI tools they were aware of, including writing tools (e.g., ChatGPT, Jasper), tutoring systems (e.g., Duolingo, Socratic), and grading and assessment platforms (e.g., Gradescope, Turnitin). They were also asked to identify specific tools (e.g., ChatGPT, Grammarly) they recognized from a provided list and to rate their familiarity with the concept of AI in education on a 5-point scale ranging from “Not at all familiar” to “Extremely familiar.” Similarly, understanding was assessed using a 5-point scale from “Very low” to “Very high.”

The second section examined how teachers use AI tools in their instructional practice. Teachers indicated whether they currently used AI tools in their teaching and, if so, how frequently they did so (e.g., daily, weekly, monthly). They were asked to identify their primary AI tool and to provide an example of how they used this tool in their teaching; both questions used open-ended responses. Teachers also reported their feelings about using AI tools, selecting from a range of responses that included positive, neutral, stressed, and opposed sentiments. Satisfaction and confidence with specific tools (e.g., ChatGPT, Grammarly) and with their primary tool were rated on 5-point scales (very low to very high). Additionally, teachers identified perceived benefits from a list (e.g., increased engagement, increased student learning, time savings in grading/planning, personalized learning) and identified challenges through an open-ended response.

The third section assessed teachers’ confidence in performing various AI-related instructional tasks. Participants rated their confidence on six items: using AI tools in the classroom, integrating AI into lesson plans, integrating AI into grading, communicating with families about AI integration, communicating with their district about AI integration, and troubleshooting technical issues. Each item was rated on a 5-point scale from “Very unconfident” to “Very confident”.

The fourth section addressed teachers’ professional development experiences and support needs related to AI. Participants indicated whether they had received general training on AI tools (yes/no), identified who provided that training (in an open-ended response), and rated the helpfulness of that training using a 5-point scale from “Not at all helpful” to “Extremely helpful”. Teachers also identified the types of support they believed would increase their confidence in using AI, choosing from a list that included professional development workshops, access to tools, clear permission policies, and other (with a write-in response).

After the initial survey was designed, it was shared with two researchers with advanced degrees who had worked in elementary and secondary settings as English Language Arts teachers. Reviewers were asked to examine the clarity and phrasing of each question, and whether its classification under a specific section was appropriate and clearly addressed the intended construct (e.g., PD needs). Feedback was received on the phrasing of items and on the classification of items under specific categories (e.g., knowledge, use, confidence, PD), and revisions were made. Next, the survey was sent to a group of three elementary and four secondary teachers who were asked to review the survey using the same criteria and then complete it. Teachers’ reviews were shared with the first author, who also held a focus-group meeting for teachers to share recommendations for revisions and to explain any areas of confusion. The survey items were then finalized, and the survey was created in Qualtrics. Following approval from the Institutional Review Board, study participants were invited to participate in the online survey. Participation was voluntary, and informed consent was obtained before starting the survey. Four reminders were sent at two-week intervals, and the survey was closed after eight weeks.

2.3. Data Analyses

Descriptive statistics and percentages were calculated to summarize participants’ understanding, usage, helpfulness, and confidence in using AI. Inferential statistics were employed to examine differences between elementary and secondary teachers. Prior to these main comparisons, preliminary analyses were conducted to explore differences between middle and high school teachers, but no statistically significant differences were observed on our key variables. Therefore, to simplify the presentation of results, we combined middle and high school teachers into a single secondary group for all analyses. Chi-square tests of independence (χ2) were used to compare elementary and secondary teachers on categorical variables. The phi coefficient (ϕ) or Cramer’s V was reported as the measure of effect size for chi-square tests. Independent samples t-tests were conducted to compare mean scores between elementary and secondary teachers on Likert-scale data. Levene’s test for equality of variances was examined for each t-test to determine whether equal variances could be assumed, and results were reported accordingly. Cohen’s d was calculated and reported as a measure of effect size for t-tests.
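The effect sizes named in this section are not written out in the text. Assuming the conventional definitions were used (an assumption, not something the paper states), they take the following form, where N is the number of cases in the contingency table, r and c are its numbers of rows and columns, and subscripts 1 and 2 index the two teacher groups:

```latex
\phi = \sqrt{\frac{\chi^2}{N}}, \qquad
V = \sqrt{\frac{\chi^2}{N \cdot \min(r-1,\; c-1)}}, \qquad
d = \frac{M_1 - M_2}{SD_{\text{pooled}}}, \qquad
SD_{\text{pooled}} = \sqrt{\frac{(n_1-1)\,SD_1^2 + (n_2-1)\,SD_2^2}{n_1+n_2-2}}
```

Under these definitions, ϕ and V computed from χ2 are magnitudes; any sign attached to ϕ or d depends on how the groups and response options were coded.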

Open-ended responses from the survey (e.g., details on PD providers) were reviewed and summarized thematically to provide additional context and detail to the quantitative findings (Strauss & Corbin, 1990).

3. Results

Findings are reported by research question. Frequency and mean comparisons between elementary and secondary teachers can be found in Table 2 and Table 3, respectively.

RQ 1: What AI tools are teachers aware of, how familiar do teachers feel with AI in education, and how do teachers rate their understanding of AI in education? Do awareness, familiarity, and understanding differ between elementary and secondary teachers?

Awareness of AI Tools. Teachers were asked to select all categories of AI tools or technologies they were aware of by responding to the question: “Which AI tools or technologies are you aware of?” Overall, the most widely recognized category was AI writing tools (e.g., ChatGPT, Jasper), with 90.0% of teachers indicating awareness. This was followed by AI tutoring systems (e.g., Duolingo, Socratic) (66.5%) and grading and assessment tools (e.g., Gradescope, Turnitin) (40.6%). Fewer teachers reported awareness of other tools or technologies. Comparisons between elementary (n = 76) and secondary (n = 152) teachers (Figure 1) revealed statistically significant differences on the following: AI Writing Tools (χ2(1, N = 215) = 9.40, p = 0.002, ϕ = 0.209), AI Tutoring Systems (χ2(1, N = 215) = 4.04, p = 0.044, ϕ = 0.137), Grading and Assessment Tools (χ2(1, N = 215) = 16.57, p < 0.001, ϕ = 0.278), and Not Aware of Any (χ2(1, N = 215) = 12.21, p < 0.001, ϕ = −0.238).
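As a consistency check on the effect sizes above, the magnitude of ϕ for a 2 × 2 table can be recovered from the reported χ2 and N. The sketch below assumes the conventional relation |ϕ| = sqrt(χ2 / N); the function name `phi_from_chi2` is illustrative and not part of the original analysis. The sign of ϕ (e.g., for “Not Aware of Any”) depends on how the groups were coded and cannot be recovered this way.

```python
import math


def phi_from_chi2(chi2: float, n: int) -> float:
    """Magnitude of the phi coefficient for a 2x2 table: sqrt(chi2 / N)."""
    return math.sqrt(chi2 / n)


# (chi-square, N) pairs as reported in the paragraph above.
reported = {
    "AI writing tools": (9.40, 215),
    "AI tutoring systems": (4.04, 215),
    "Grading and assessment tools": (16.57, 215),
    "Not aware of any": (12.21, 215),
}

phi_magnitudes = {
    name: round(phi_from_chi2(chi2, n), 3) for name, (chi2, n) in reported.items()
}

for name, value in phi_magnitudes.items():
    print(f"{name}: |phi| = {value:.3f}")
```

The four magnitudes agree with the reported values of 0.209, 0.137, 0.278, and 0.238.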

We next asked teachers about their awareness of specific AI educational tools (i.e., “Which AI educational tools or AI educational technologies are you aware of?”). The list included widely used tools such as ChatGPT, Grammarly and others (e.g., Jasper, Quillbot, copy.ai, DreamBox), as well as an “Other” option for write-ins. ChatGPT was the most recognized tool, with 92.3% of teachers indicating they were aware of it. Grammarly also had high awareness at 84.1%. Awareness of other listed tools such as Knewton (2.6%), DreamBox (6.9%), Jasper (11.6%), copy.ai (10.3%), Writesonic (2.6%), Quillbot (20.6%), Wordtune (2.1%), Scribbr (20.6%), and Hemingway Editor (3.4%) was considerably lower. A small percentage (3.0%) reported being unaware of any of the specific tools listed. Comparisons between elementary and secondary teachers (Figure 2) regarding their awareness of specific AI educational tools revealed several statistically significant differences in awareness of ChatGPT (χ2(1, N = 215) = 14.181, p < 0.001, ϕ = 0.257), Grammarly (χ2(1, N = 215) = 6.380, p = 0.012, ϕ = 0.172), Quillbot (χ2(1, N = 215) = 8.473, p = 0.004, ϕ = 0.199), Scribbr (χ2(1, N = 215) = 12.537, p < 0.001, ϕ = 0.241), and copy.ai (χ2(1, N = 215) = 8.758, p = 0.003, ϕ = 0.202). Additionally, a significantly higher percentage of elementary teachers selected “I am not aware of any” of the listed specific educational tools compared to secondary teachers, χ2(1, N = 215) = 9.311, p = 0.002, ϕ = −0.208.

Approximately a quarter (23.6%) of teachers specified “Other” tools (beyond the pre-listed options), and a variety of tools were mentioned. The most frequently cited tool was Magic School, which was mentioned by over 30 teachers. Other tools that were mentioned multiple times or by several individual teachers included Brisk (mentioned 10 times), Khanmigo (mentioned 7 times), and Diffit (mentioned 4 times). Additionally, AI features within platforms like Canva and Quizizz/Quizlet were noted, alongside tools such as Perplexity, Photomath, and Turnitin. Several teachers also listed combinations of these and other tools.

3.1. Familiarity with the Concept of AI in Education

Teachers indicated their familiarity with the concept of AI in education on a 5-point scale (1 = Not at all familiar to 5 = Extremely familiar) by responding to the following question: How familiar are you with the concept of AI in education? Overall, approximately a quarter of teachers (26.4%) indicated they were “extremely” or “very familiar” with the concept of AI in education and a quarter of teachers (25.6%) indicated they were “not very” or “not at all familiar” with the concept of AI in education with around half (47.9%) reporting they were “somewhat familiar”. An independent samples t-test revealed that secondary teachers (M = 3.21, SD = 0.85) reported a significantly higher level of familiarity compared to elementary teachers (M = 2.52, SD = 0.92), t(212) = 5.42, p < 0.001, Cohen’s d = −0.79.

3.2. Understanding of AI in Education

Teachers rated their understanding of AI in education on a 5-point scale (1 = Very low to 5 = Very high) by answering: How would you rate your understanding of AI in education? Overall, 16.6% rated their understanding as “very high” or “high”, 46.4% rated their understanding as “moderate,” and 37.1% rated it as “low” or “very low”. Secondary teachers (M = 2.92, SD = 0.91) reported a significantly higher level of understanding compared to elementary teachers (M = 2.33, SD = 0.96), t(129.88) = 4.29, p < 0.001, Cohen’s d = −0.64.
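The fractional degrees of freedom in t(129.88) suggest that equal variances were not assumed for this comparison, which is consistent with the Levene’s test procedure described in the Data Analyses section. The sketch below shows how a Welch t statistic and its Welch–Satterthwaite degrees of freedom can be computed from summary statistics. The group sizes (70 elementary, 145 secondary) are an assumption borrowed from the confidence items reported later, since the per-group n for this item is not given, so the result is only expected to approximate the reported values.

```python
import math


def welch_t(m1: float, sd1: float, n1: int, m2: float, sd2: float, n2: int):
    """Welch's t statistic and Welch-Satterthwaite df from summary statistics."""
    v1 = sd1 ** 2 / n1  # squared standard error of the first group mean
    v2 = sd2 ** 2 / n2  # squared standard error of the second group mean
    t = (m1 - m2) / math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df


# Means and SDs are from the text; the group sizes are assumed (see lead-in).
t_stat, df = welch_t(2.92, 0.91, 145, 2.33, 0.96, 70)
print(f"t({df:.2f}) = {t_stat:.2f}")
```

With these assumed group sizes the statistic comes out near t = 4.29 with roughly 130 degrees of freedom, close to the reported t(129.88) = 4.29; the small difference in degrees of freedom would be expected if a few respondents skipped this item.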

RQ 2: How do teachers use AI tools in their instruction (including types of use and frequency), what primary tools do they use, and what are their feelings, satisfaction, and confidence regarding these tools? Additionally, what benefits and challenges do they perceive from using AI in the classroom, and do these patterns differ between elementary and secondary teachers?

3.3. Current Use of AI Tools

Overall, out of 232 teachers who responded to the question “Do you currently use any AI tools in your teaching?” (yes, no), approximately half reported using AI tools in their teaching (n = 113, 46.7%). A chi-square test indicated a statistically significant association between teacher level and AI tool use, χ2(1, N = 215) = 5.285, p = 0.022, ϕ = 0.157, with a higher percentage of secondary teachers (52.4%) using AI tools compared to elementary teachers (35.7%). For the 113 teachers who reported using AI tools, we asked “How often do you incorporate AI tools into your teaching?” (response options included daily, weekly, monthly, rarely, and never). Around half of teachers reported integrating AI tools weekly (n = 58, 51.3%), followed by monthly (n = 23, 20.4%), rarely (n = 16, 14.2%), and daily (n = 13, 11.5%). A small number of teachers (n = 3, 2.5%) who initially indicated they use AI tools then selected “never” for frequency. A chi-square test indicated no statistically significant difference in the distribution of frequency of AI use between elementary and secondary teachers, χ2(4, N = 101) = 1.892, p = 0.756, Cramer’s V = 0.137.
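The chi-square test for current AI use can be approximately reconstructed from the reported percentages. The cell counts below are an assumption: 25 of 70 elementary teachers (35.7%) and 76 of 145 secondary teachers (52.4%) using AI tools, which matches the group sizes of AI users reported in the following subsection. The sketch computes the Pearson chi-square without a continuity correction; the helper name `chi2_2x2` is illustrative.

```python
import math


def chi2_2x2(a: int, b: int, c: int, d: int) -> float:
    """Pearson chi-square (no continuity correction) for the table [[a, b], [c, d]]."""
    n = a + b + c + d
    return n * (a * d - b * c) ** 2 / ((a + b) * (c + d) * (a + c) * (b + d))


# Reconstructed counts (assumption): rows = teacher level, columns = uses AI (yes, no).
elem_yes, elem_no = 25, 70 - 25   # 25/70  = 35.7%
sec_yes, sec_no = 76, 145 - 76    # 76/145 = 52.4%

chi2 = chi2_2x2(elem_yes, elem_no, sec_yes, sec_no)
n_total = elem_yes + elem_no + sec_yes + sec_no
phi = math.sqrt(chi2 / n_total)   # magnitude only; the sign depends on coding

print(f"chi2(1, N = {n_total}) = {chi2:.3f}, |phi| = {phi:.3f}")
# prints: chi2(1, N = 215) = 5.285, |phi| = 0.157
```

Under these assumed counts, the uncorrected statistic agrees with the reported χ2(1, N = 215) = 5.285 and ϕ = 0.157.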

We then asked teachers to specify how they use AI tools in their teaching with an open-ended response. The most common use, mentioned by approximately 65 respondents, was lesson planning and content creation, where tools like ChatGPT and MagicSchool.AI were frequently used to generate outlines, presentations, exemplars, and guided notes. Also, 50 teachers said they used AI for creating assessments and questions, including quizzes, multiple-choice items, and state test-style questions. AI was also used by 35 teachers to provide feedback on student writing or generate exemplar essays, often through tools like Brisk and MagicSchool. Further, 30 teachers indicated that AI tools such as Diffit were used for text differentiation, including lowering Lexile levels for English Language Learners and special education students. Also, 25 teachers used AI for rubric creation and grading support, while 20 mentioned using it for professional tasks like drafting emails and newsletters. Additionally, 15 teachers used AI for creating visual aids and presentations using tools like Canva and Gemini. A smaller group (12 teachers) involved students in using AI tools under their guidance or with restrictions, while 8 teachers noted using AI to detect academic dishonesty. Finally, around 10 teachers shared specialized uses of AI, including support for music selection, coding, grant writing, and generating simulated data for research activities. These patterns highlight that teachers are integrating AI primarily as a time-saving tool that enhances planning, differentiation, assessment, and communication.

3.4. Teachers’ Feelings About Using AI Tools in Instruction

Teachers who reported using AI tools in their instruction (n = 113) were asked about their feelings regarding this practice by answering the question: What is the way you feel about using AI in your instruction? A majority (58.4%) expressed positive feelings regarding AI use. The most common sentiment, selected by 43.4% (n = 49), was “I feel great! They save me time.” Another 15.0% (n = 17) reported, “I feel great! Students learn how to work with those tools, too!” A smaller group (8.8%, n = 10) felt neutral (“I do not have any specific feelings about it. I just use it.”). Collectively, feelings of stress due to uncertainty about using AI tools, their correct application, or their effects on student learning were expressed by 8.9% (n = 10) of these AI-using teachers. A very small percentage (1.8%, n = 2) felt it was “not appropriate for students to have any exposure to AI tools.” A chi-square test did not find any differences in feelings about using AI tools in instruction between elementary (n = 25) and secondary (n = 76) teachers, χ2(8, N = 101) = 2.625, p = 0.956, Cramer’s V = 0.161.

Across the sample, about one-fifth (22.1%, n = 25) selected “Other”. When asked to elaborate, the most common feelings were conflicted or mixed emotions (n = 10). Teachers appreciated AI’s time-saving and productivity benefits but were concerned about student misuse, ethical issues, and academic dishonesty. Five teachers voiced apprehension about students’ overreliance on AI, erosion of critical thinking and creativity, and uncertainties about accuracy, privacy, and plagiarism. Six teachers expressed excitement or strong support, citing AI’s effectiveness in enhancing instruction, providing examples, and streamlining tasks. Additionally, four teachers felt overwhelmed by the steep learning curve and additional cognitive load AI introduces to an already demanding profession.

3.5. Types of AI Tools Used in Instruction

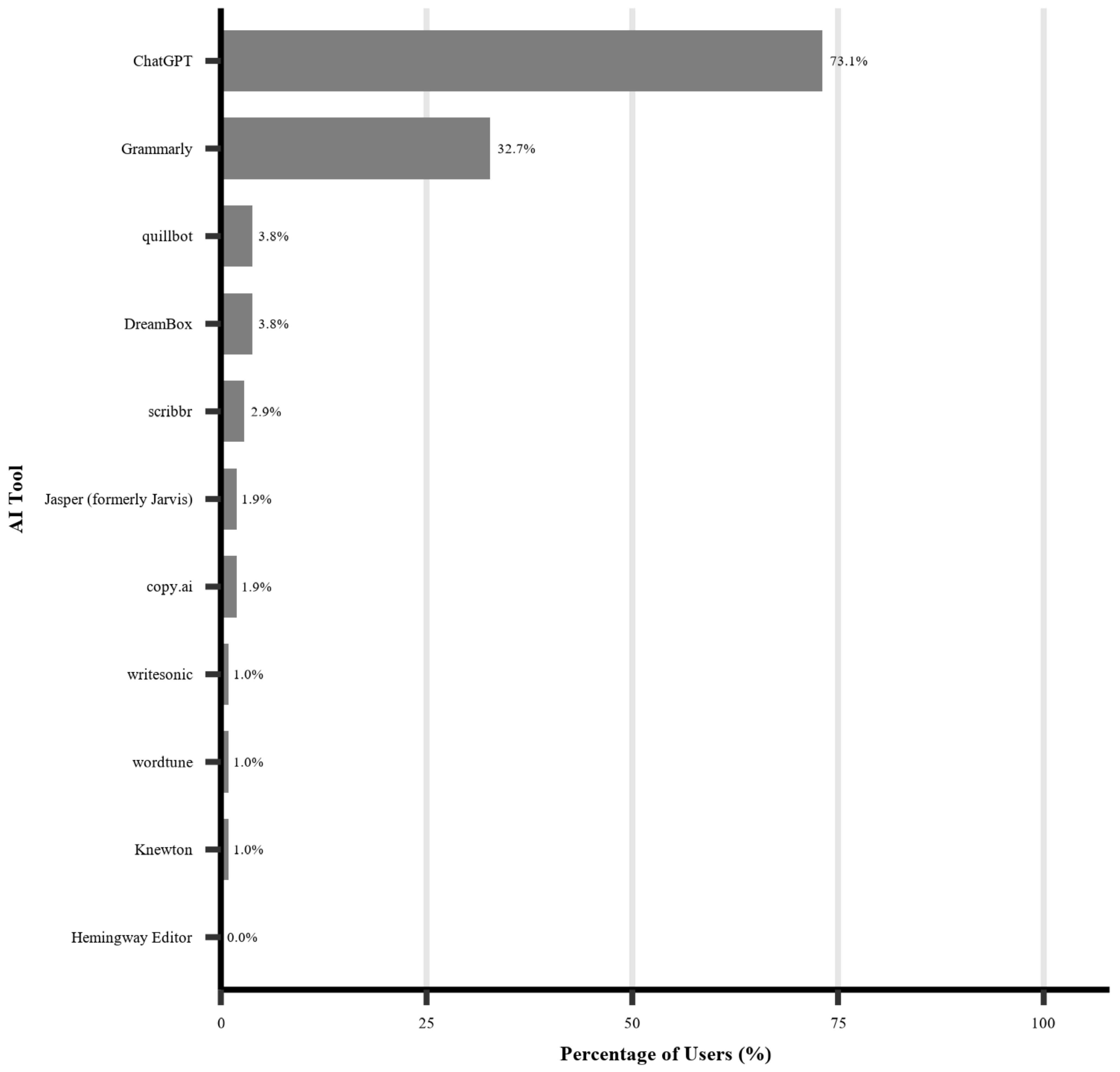

Teachers who reported using AI were asked to select from a list of AI tools or technologies they use in their teaching by responding to the question: Which of the AI educational tools or AI educational technologies do you use in your instruction? The provided list included tools such as ChatGPT, Grammarly, Jasper, Quillbot, and others, along with an “Other” option for write-in responses. Teachers reported using a variety of specific AI tools (Figure 3). ChatGPT was the most frequently reported tool used (73.1%, n = 76), followed by Grammarly (32.7%, n = 34). Other AI tools listed were used by a smaller percentage of these respondents. Sixty-six teachers selected the “Other” option. Among the tools mentioned, Magic School was the most prominent, mentioned by 39.4% (n = 26). Brisk was the next most common, cited by 13.6% (n = 9). Other tools mentioned included Quizlet (6.1%, n = 4), Perplexity (4.5%, n = 3), Gemini/Notebook LM (4.5%, n = 3), Canva (3.0%, n = 2), Diffit (3.0%, n = 2), and Khanmigo (3.0%, n = 2). Comparisons between elementary and secondary teachers revealed no differences in their use of ChatGPT or Grammarly.

Teacher satisfaction of use. We then asked teachers to rate their satisfaction with each of these AI tools on a 5-point scale from very low to very high (i.e., How would you rate your satisfaction about the use of this AI tool in your instruction?). However, due to the limited number of teachers reporting use of tools other than Grammarly and ChatGPT, only satisfaction scores for these two are detailed. Among the 76 teachers who reported using ChatGPT, satisfaction was overwhelmingly positive, with nearly two-thirds (61.8%) rating it as “very high” or “high”, 36.8% indicating “moderate” satisfaction, and only 1.3% indicating “low” satisfaction. An independent samples t-test comparing elementary (n = 16, M = 4.00, SD = 0.894) and secondary (n = 52, M = 3.85, SD = 0.826) teachers revealed no statistically significant difference in satisfaction scores; t(66) = 0.639, p = 0.525, Cohen’s d = 0.18.

For Grammarly, teachers reported high satisfaction as well, with 57.6% indicating “very high” or “high” satisfaction, 39.4% indicating “moderate” satisfaction, and only 3.0% reporting “low” satisfaction. A comparison between elementary (M = 4.00, SD = 1.054) and secondary teachers (M = 4.05, SD = 0.999) revealed no statistically significant differences; t(28) = −0.127, p = 0.900, Cohen’s d = −0.049.

Teacher confidence. Next, we asked teachers to rate their confidence about using these specific AI tools in their instruction using a 5-point scale ranging from very low to very high (i.e., How would you rate your confidence about the use of this AI tool in your instruction?). As with satisfaction, confidence results are only reported for ChatGPT and Grammarly. For ChatGPT, the majority (56.5%) expressed “high” or “very high” confidence, 39.5% expressed “moderate” confidence, and 3.9% indicated “low” or “very low” confidence. An independent samples t-test compared confidence in using ChatGPT between elementary (n = 16, M = 4.00, SD = 1.10) and secondary (n = 52, M = 3.75, SD = 0.97) teachers, and no statistically significant difference in confidence scores was found; t(66) = 0.876, p = 0.384, Cohen’s d = 0.25. For Grammarly, the majority (57.6%) reported “high” or “very high” confidence, 39.4% reported “moderate” confidence, and 3.0% reported “low” confidence (none indicated “very low” confidence). No statistically significant differences between elementary (n = 10, M = 4.20, SD = 0.92) and secondary (n = 20, M = 3.90, SD = 1.02) teachers were found; t(28) = 0.783, p = 0.440, Cohen’s d = 0.30.

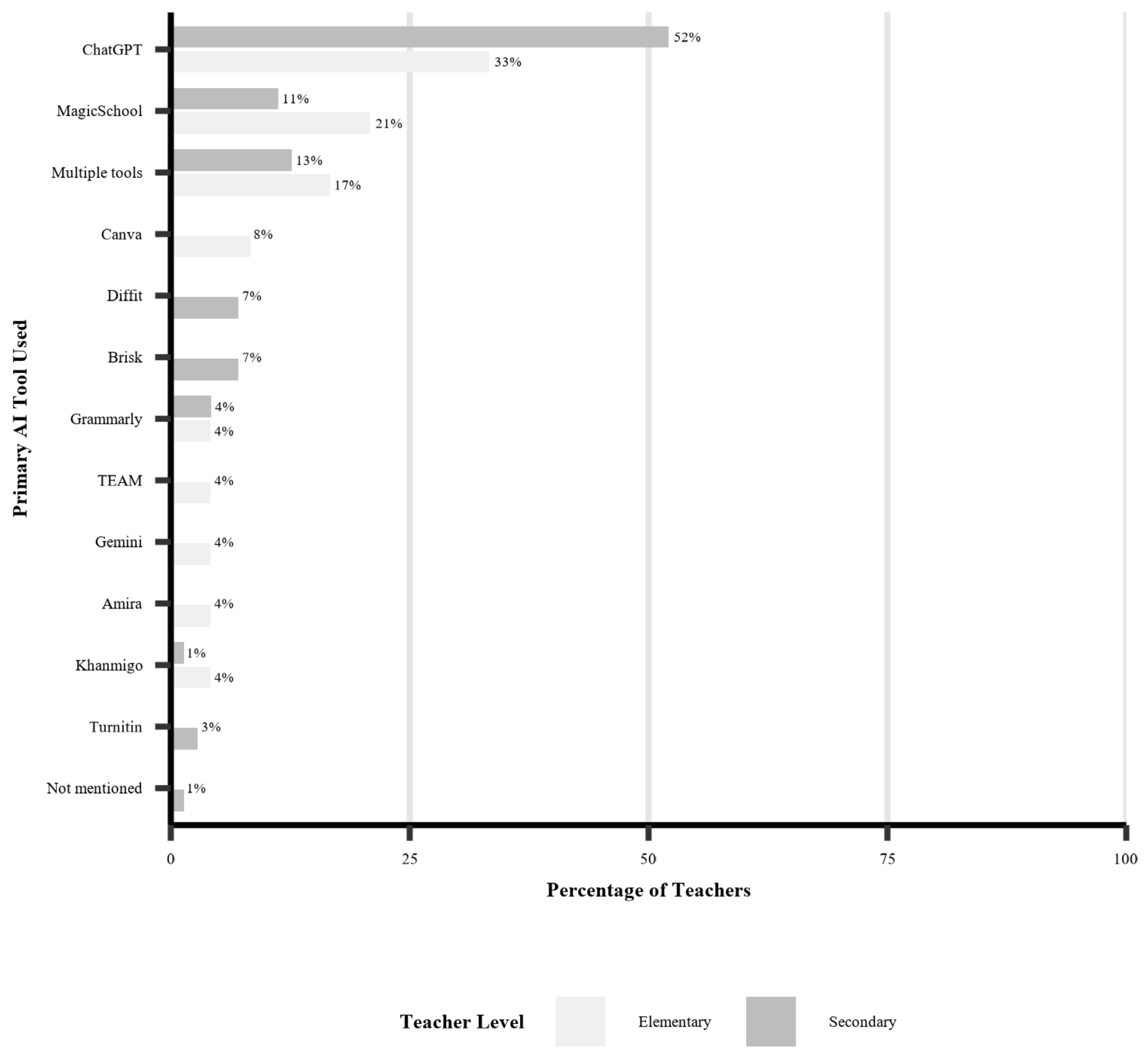

3.6. Primary AI Tool Use

Teachers who use AI were asked to specify their primary tool in an open-response format (“What is the AI tool you primarily use in your teaching?”). ChatGPT was the most frequently cited primary tool overall (49.5%, n = 51), followed by Magic School (12.6%, n = 13) and teachers reporting the use of multiple tools (12.6%, n = 13). While a chi-square test indicated a statistically significant difference in the distribution of primary AI tools used between elementary and secondary teachers (Figure 4), χ2(12, N = 95) = 22.578, p = 0.032, Cramer’s V = 0.488, ChatGPT and MagicSchool were the most frequently used by both. For elementary teachers (n = 24), the most common primary tools were ChatGPT (33.3%, n = 8), MagicSchool (20.8%, n = 5), and multiple tools (16.7%, n = 4). For secondary teachers (n = 71), ChatGPT was also the most common primary tool (52.1%, n = 37), followed by multiple tools (12.7%, n = 9), and MagicSchool (11.8%, n = 8).
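For tables larger than 2 × 2, the reported Cramer’s V can be checked against χ2 and N in the same way. The sketch below assumes the conventional definition V = sqrt(χ2 / (N · min(r − 1, c − 1))). The table shapes are inferred from the reported degrees of freedom with two teacher groups (for example, df = 12 implies 13 tool categories by 2 groups); they are assumptions rather than values stated in the text, and `cramers_v` is an illustrative name.

```python
import math


def cramers_v(chi2: float, n: int, n_rows: int, n_cols: int) -> float:
    """Cramer's V from a chi-square statistic, sample size, and table shape."""
    return math.sqrt(chi2 / (n * min(n_rows - 1, n_cols - 1)))


# Reported chi-square and N; table shapes inferred from df (assumption).
v_primary_tool = cramers_v(22.578, 95, n_rows=13, n_cols=2)  # df = 12
v_frequency = cramers_v(1.892, 101, n_rows=5, n_cols=2)      # df = 4
v_feelings = cramers_v(2.625, 101, n_rows=9, n_cols=2)       # df = 8

print(f"primary tool: V = {v_primary_tool:.3f}")
print(f"frequency of use: V = {v_frequency:.3f}")
print(f"feelings: V = {v_feelings:.3f}")
```

The three values round to 0.488, 0.137, and 0.161, matching the effect sizes reported for primary tool, frequency of use, and feelings about AI use.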

We also asked teachers to provide an example of how they use their primary AI tool in their teaching. The most frequent application was lesson planning (n = 38), where AI is used to brainstorm ideas, align content to standards, and generate instructional materials. Assessment development followed closely (n = 28), with tools used to create quizzes, rubrics, and exemplar answers. Writing support (n = 25) was a major theme, both for students (prompting, editing, feedback) and for teachers (exemplar generation, rubric-aligned grading). Many educators (n = 22) also rely on AI to design worksheets and learning activities, from comprehension questions to graphic organizers. Teachers highlighted the value of AI in differentiating instruction (n = 17), using tools like Diffit to adjust Lexile levels or tailor activities for English language learners and students in special education. Other strong themes included teachers’ own productivity and time-saving tasks (e.g., emails, slide development).

Further, we asked teachers to rate their satisfaction and confidence with this specific tool on a 5-point scale from very low to very high. Overall, teachers reported high levels of satisfaction with their primary AI tool, with 29.2% indicating “very high”, 41.5% indicating “high”, and 29.5% reporting “moderate” satisfaction (none reported “low” or “very low” satisfaction). An independent samples t-test compared satisfaction scores between elementary (n = 25, M = 4.00, SD = 0.764) and secondary (n = 73, M = 3.97, SD = 0.781) teachers, and no statistically significant difference was noted; t(96) = 0.152, p = 0.879, Cohen’s d = 0.04. Similarly, teachers generally reported high levels of confidence, with 61.5% indicating “very high” or “high” confidence, 31.3% indicating “moderate” confidence, and 7.3% reporting “low” or “very low” confidence. No statistically significant differences in confidence scores were found between elementary (n = 24, M = 3.83, SD = 0.917) and secondary (n = 68, M = 3.74, SD = 0.940) teachers; t(90) = 0.442, p = 0.660, Cohen’s d = 0.10.

When teachers explained their satisfaction with AI tools, the most common reason cited was time savings, mentioned by 41 teachers. Many praised how AI streamlines tasks like planning, grading, and content creation. One teacher noted, “This tool saves me so much time,” while another shared, “It allows me to go on about my day.” Twenty-five teachers emphasized that AI provides a useful starting point, even if they need to personalize the output—“I will use what ChatGPT gives me, but will always reword it to be more personal and specific.” Eighteen teachers highlighted how AI tools improved their instructional quality, such as generating higher-level questions or supporting differentiation. However, 17 teachers expressed concern over inaccuracies or the need for revision, acknowledging AI’s value but noting that “there is always a margin of error.” Additionally, 14 teachers appreciated that the tools are easy to use, while 13 praised the quality of the output, especially for grammar and feedback. Some teachers (n = 10) raised philosophical concerns about AI’s impact on student thinking—“AI is chiseling away at the time and effort students put into thinking.” Others (n = 6) expressed skepticism over student misuse or overreliance, while 6 cautioned against using AI in unfamiliar content areas due to potential inaccuracies. Still, most teachers conveyed overall satisfaction, especially when using AI in tandem with their own expertise and judgment.

Teachers were also asked to explain their confidence in using AI for instruction and responses varied widely. Thirteen respondents expressed high confidence, citing frequent use and familiarity with the tools, including training others. Twelve described moderate confidence, using AI as a starting point but refining its outputs. Ten were still learning or becoming familiar with AI. Meanwhile, nine participants expressed cautious confidence, acknowledging AI’s usefulness but highlighting concerns about inaccuracies and the need to carefully craft prompts. Six respondents reported limited use or low familiarity, leading to lower confidence levels. Four participants linked their confidence to specific tools (e.g., Brisk, Quizizz, Diffit), suggesting tool-specific training may influence perceived competence.

3.7. Observed Benefits of Using AI in the Classroom

Teachers who reported using AI in their instruction were asked, “What benefits have you observed from using AI in your classroom?” The most frequently cited benefit was “Time savings in grading/planning”, selected by 84.9% (n = 90) of teachers. This was followed by “Personalized learning” (50.9%, n = 54), “Improved student understanding” (42.5%, n = 45), and “Increased student engagement” (31.1%, n = 33). Additionally, 8.5% (n = 9) of teachers selected “other” benefits and provided an open-ended response. These benefits included creating instructional materials for multilingual students, assisting with accommodations, improving writing, increasing teacher confidence, providing timely feedback, and offering information without emotional bias. One respondent was uncertain about benefits, and another indicated a desire for more training. Chi-square tests showed no significant differences between elementary and secondary teachers in the observed benefits (p > 0.100 for all analyses).

We asked teachers to describe, in an open-ended response, some of the challenges they have faced with using AI in the classroom. The most frequently reported challenge was student misuse of AI tools, noted by 41 teachers. This included concerns about cheating, copying and pasting full responses, and bypassing the learning process. Other significant challenges included the difficulty of crafting effective prompts (n = 10), AI inaccuracies (n = 9), and a lack of training or clear guidance for teachers (n = 9). Additionally, time constraints (n = 8) and the overwhelming variety of tools (n = 6) made integration difficult. Some educators also struggled with AI output lacking nuance (n = 6), ethical uncertainties (n = 5), and student readiness (n = 5). A few teachers noted their own limited familiarity with AI (n = 4), while access issues and technical limitations were each mentioned by two respondents. Notably, 5 participants reported no major challenges, often because they limit student access or only use AI for planning.

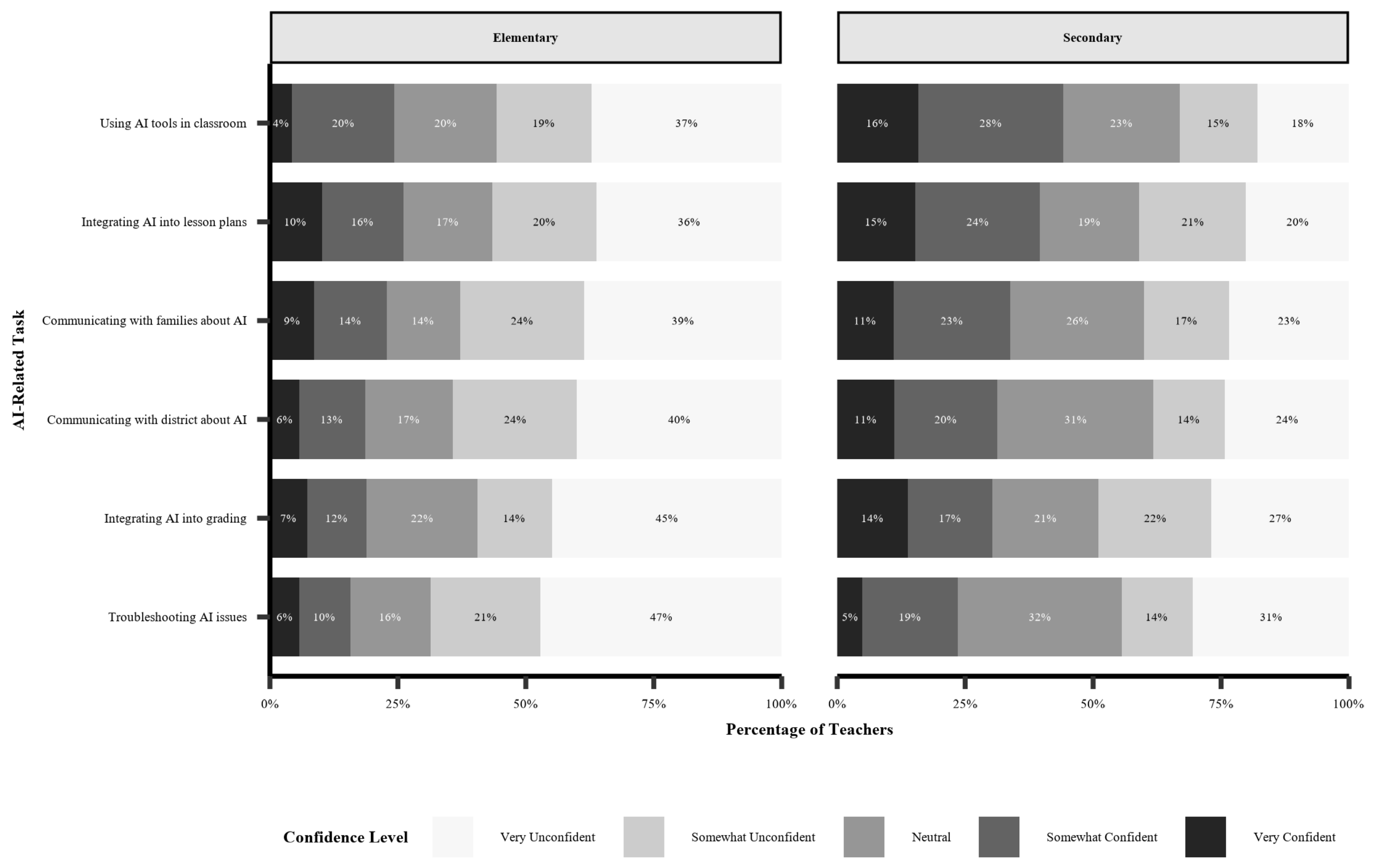

RQ 3: How confident are teachers in using specific AI tools and in performing various AI-related teaching practices? Are there differences between elementary and secondary teachers?

We asked teachers to rate their confidence on several AI-related items using a 5-point scale (1 = Very Unconfident to 5 = Very Confident) (i.e., “How confident do you feel about the following items?”). Percentages for each item are reported in Figure 5 and Figure 6. Independent samples t-tests were conducted to compare confidence levels between elementary and secondary teachers. Regarding confidence in using AI tools in the classroom, secondary teachers (M = 3.09, SD = 1.338, n = 145) reported significantly higher confidence compared to elementary teachers (M = 2.36, SD = 1.286, n = 70), t(213) = −3.809, p < 0.001, Cohen’s d = −0.554. When considering integrating AI into lesson plans, secondary teachers (M = 2.94, SD = 1.370, n = 144) again showed significantly higher confidence than elementary teachers (M = 2.43, SD = 1.388, n = 69), t(211) = −2.496, p = 0.013, Cohen’s d = −0.365. Confidence in integrating AI into grading was lower overall, but again secondary teachers (M = 2.68, SD = 1.388, n = 145) were significantly more confident in this aspect than elementary teachers (M = 2.22, SD = 1.327, n = 69), t(212) = −2.325, p = 0.021, Cohen’s d = −0.340. For communicating with families about AI integration, secondary teachers (M = 2.81, SD = 1.323, n = 145) had significantly higher confidence compared to elementary teachers (M = 2.30, SD = 1.344, n = 70), t(213) = −2.655, p = 0.009, Cohen’s d = −0.386. A similar pattern was observed for communicating with the district about AI integration: secondary teachers (M = 2.80, SD = 1.315, n = 144) were significantly more confident than elementary teachers (M = 2.20, SD = 1.258, n = 70), t(212) = −3.169, p = 0.002, Cohen’s d = −0.462. Finally, troubleshooting technical issues related to AI tools garnered the lowest confidence overall, and secondary teachers (M = 2.53, SD = 1.240, n = 144) were significantly more confident in troubleshooting than elementary teachers (M = 2.06, SD = 1.250, n = 70), t(212) = −2.637, p = 0.009, Cohen’s d = −0.384.
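The negative signs on t and d above follow from subtracting the secondary mean from the elementary mean. As an illustration, the sketch below recomputes the pooled-variance t statistic and Cohen’s d for the first item (confidence in using AI tools in the classroom) from the reported summary statistics. It assumes the conventional pooled-SD definition of d and the equal-variance t-test; because the inputs are rounded means and SDs, the output is expected to match the reported values only approximately. The function name is illustrative.

```python
import math


def pooled_t_and_d(m1: float, sd1: float, n1: int, m2: float, sd2: float, n2: int):
    """Equal-variance t statistic, its df, and Cohen's d from summary statistics."""
    df = n1 + n2 - 2
    sd_pooled = math.sqrt(((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df)
    d = (m1 - m2) / sd_pooled
    t = d / math.sqrt(1 / n1 + 1 / n2)
    return t, df, d


# Group 1 = elementary, group 2 = secondary (ordering assumed from the reported signs).
t_stat, df, cohen_d = pooled_t_and_d(2.36, 1.286, 70, 3.09, 1.338, 145)
print(f"t({df}) = {t_stat:.2f}, d = {cohen_d:.2f}")
```

The recomputed values land close to the reported t(213) = −3.809 and d = −0.554, with the remaining gap attributable to rounding in the published means and standard deviations.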

RQ 4: What trainings have teachers received on AI tools in general, how helpful was that training, and do these differ by elementary and secondary teachers? What supports or resources do teachers need to increase their confidence in using AI?

Overall, 24.0% (n = 56) of teachers reported having received training on AI tools in general. A chi-square test of independence indicated a significant difference between elementary and secondary teachers in receiving general AI training, with a higher percentage of secondary teachers (28.3%) than elementary teachers (12.9%) reporting that they had received such training: χ2(1, N = 215) = 6.289, p = 0.012, ϕ = −0.171.

Of the teachers who received general AI training (n = 56), we asked who provided the training. Their open-ended responses indicated a range of sources. The most frequently mentioned providers included colleagues or peer teachers conducting sessions within their schools or districts; district-led PD days and initiatives; and external trainers, conferences, or organizations (e.g., “Ditch That Textbook,” TETA conference). Additionally, some teachers reported self-teaching efforts, utilizing online resources such as Khan Academy, or undertaking university coursework as their source of training.

We asked teachers who received general AI training (n = 56) to rate how helpful that training was on a 5-point scale (from 1 = Not at all helpful to 5 = Extremely helpful). Over half (57.1%) rated it as “extremely helpful” or “very helpful,” 28.6% found it “somewhat helpful,” and 14.3% found it “not very helpful” (no respondents selected “not at all helpful”). No statistically significant differences were found between elementary and secondary teachers, t(48) = −0.046, p = 0.964, Cohen’s d = −0.02. Of the 56 teachers who had received general AI training, 55.4% (n = 31) indicated this training was provided by their organization or state; no differences were found between elementary and secondary teachers (p = 0.363, ϕ = −0.129). Regarding the helpfulness of training provided by their organization or state (n = 30, rated on the same 5-point scale), 46.7% found it “extremely helpful” or “very helpful,” 40.0% found it “somewhat helpful,” and 13.3% found it “not very helpful” (no respondents selected “not at all helpful”). Again, no differences were found between elementary and secondary teachers (p = 0.763, Cohen’s d = −0.16).

Teachers were also asked about the types of support or resources that would help increase their confidence in using AI. The most frequently selected support was “Professional development workshops” (80.3%, n = 175), followed by “More information on best practices” (74.3%, n = 162), “Collaboration with other teachers” (72.9%, n = 159), “Clear permission policies” (67.4%, n = 147), “Access to AI tools” (62.8%, n = 137), “Professional Learning Communities” (42.2%, n = 92), and “Other” (3.7%, n = 8). Chi-square tests of independence compared the selections of elementary (n = 69) and secondary (n = 145) teachers. A significantly higher percentage of elementary teachers (88.4%) than secondary teachers (75.9%) indicated a need for “Professional development workshops,” χ²(1, N = 214) = 4.582, p = 0.032, ϕ = −0.146. No other statistically significant differences were found between elementary and secondary teachers (p’s > 0.100). Open-ended responses for “other” needs primarily centered on teachers expressing disinterest or ethical concerns regarding AI use in education, while others desired dedicated training opportunities, practice with low stakes, and clear school-wide policies on acceptable AI use.
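To make the logic of the test of independence concrete for the “Professional development workshops” comparison, the sketch below builds the expected counts under independence and sums the squared deviations. It is a sketch under stated assumptions: the cell counts (61 of 69 elementary and 110 of 145 secondary teachers selecting the option) are not given in the text and are reconstructed from the reported percentages and group sizes, and `pearson_chi_square` is a hypothetical helper name.

```python
import math

def pearson_chi_square(table):
    """Return (chi2, expected, n) for a contingency table given as rows of
    observed counts, using the sum of (O - E)^2 / E with no correction."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    # Expected count under independence: row total * column total / N.
    expected = [[r * c / n for c in col_totals] for r in row_totals]
    chi2 = sum(
        (o - e) ** 2 / e
        for obs_row, exp_row in zip(table, expected)
        for o, e in zip(obs_row, exp_row)
    )
    return chi2, expected, n

# Assumed counts, reconstructed from 88.4% of 69 and 75.9% of 145.
# Rows: elementary, secondary. Columns: selected, not selected.
observed = [[61, 8], [110, 35]]
chi2, expected, n = pearson_chi_square(observed)
phi_abs = math.sqrt(chi2 / n)  # magnitude of phi for a 2x2 table
print(f"chi2(1, N = {n}) = {chi2:.3f}, |phi| = {phi_abs:.3f}")
```

Under these assumed counts, about 55 elementary teachers would be expected to select the option if grade band made no difference, compared with the 61 assumed here. Only the magnitude of ϕ is computed because its sign depends on how the categories are coded.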

4. Discussion

The present study investigated teachers’ knowledge and use of artificial intelligence (AI) tools within their educational settings. Specifically, it sought to explore the extent to which educators independently utilized AI tools, identify the specific tools they employed and their confidence in using them, assess whether they had participated in any relevant training, and determine the types of PD they perceived as necessary. The findings offer insights into grade 3–12 educators’ engagement with AI tools, highlighting both the potential and the challenges associated with their integration into educational settings.

The study reveals a notable disparity between educators’ general awareness of AI tools, such as ChatGPT, and their deeper familiarity with and understanding of AI concepts. Although 47.9% reported being “somewhat familiar” and 46.4% had a “moderate” understanding of AI, these figures suggest that awareness of and familiarity with AI tools do not necessarily translate into comprehensive knowledge or effective application (Wang & Fan, 2025). This aligns with findings from the RAND Corporation, which reported that only 25% of teachers utilized AI tools in their instructional planning or teaching during the 2023–2024 school year, with variations based on subject area and school characteristics (Kaufman et al., 2024).

ChatGPT emerged as the most recognized and frequently used AI tool among educators, followed by Magic School AI. This trend mirrors broader patterns observed in the field, where tools like ChatGPT have gained prominence due to their versatility and user-friendly interfaces (Wang & Fan, 2025). However, the study also indicates that the actual adoption of AI tools in teaching practices remains at a modest level, with fewer than half (46.7%) of teachers currently using AI tools. This suggests that while awareness and interest are high, the transition from knowledge and familiarity to consistent application in the classroom is still developing. Concentrated efforts are needed to make this transition systematic and methodical (Kalantzis & Cope, 2025a; Tan et al., 2025).

Moreover, the study highlights that secondary educators generally report higher levels of awareness, familiarity, understanding, and confidence in using AI tools compared to their elementary counterparts. This disparity may be attributed to several factors, including the complexity of content areas, greater exposure to technology, and possibly more flexible curricula at the secondary level. Secondary teachers are more likely to use AI tools for instructional planning, with variations also observed based on subject area and school characteristics (Kaufman et al., 2024). These differences underscore the need for differentiated PD strategies tailored to the specific needs and contexts of educators at various educational levels.

In addition, findings show that educators who use specific AI tools like ChatGPT and Grammarly report positive feelings, satisfaction, and confidence in those tools. However, overall confidence in broader AI teaching practices, such as integrating AI into grading or troubleshooting technical issues, remains lower. This finding is consistent with research that suggests educators’ trust in AI is influenced by their self-efficacy and understanding of AI technologies (Li & Wilson, 2025; Wilson et al., 2021; Viberg et al., 2024).

Wilson et al. (2021) observed that although elementary teachers appreciated how Automated Writing Evaluation (AWE) systems saved time and allowed them to focus on higher-level writing concerns, they also felt that automated feedback lacked personalization and did not account for students’ developmental stage and effort trajectory, making it rather superficial. Further, concerns have been raised about the accuracy of AI grading, with human-provided feedback found to be more accurate than AI-generated feedback (Atasoy & Moslemi Nezhad Arani, 2025). Ethical issues surrounding fairness pose additional barriers to teacher trust. AWE systems may reflect algorithmic biases rooted in unrepresentative training data or skewed human rater norms, potentially disadvantaging certain demographic groups (Bulut et al., 2024).

Further, the study reveals a significant gap in AI-related PD, with only 24.0% of teachers having received any general AI training. This is corroborated by findings from the EdWeek Research Center, which reported that 58% of teachers had received no training on AI tools. Despite this, there is a strong desire for PD, with 80.3% of teachers identifying “Professional development workshops” as a key need to increase their confidence in using AI tools. This indicates a clear demand for structured PD opportunities that equip educators with the knowledge and skills to effectively integrate AI into their teaching practices.

The findings underscore the need for deliberate strategies to support teachers’ use of AI, potentially involving educational institutions and teacher-preparation programs. This includes providing targeted PD, developing clear policies on AI usage, and ensuring equitable access to AI tools across diverse educational contexts (Bulut et al., 2024). As highlighted by the RAND Corporation, disparities in AI adoption are evident, particularly between higher- and lower-poverty schools, suggesting that systemic support is crucial to ensure all educators can leverage AI to enhance teaching and learning.

4.1. Limitations

Several limitations should be considered when interpreting the findings of this study. First, the sample was composed primarily of teachers from a single school district, which may limit the generalizability of the results. While the district provided valuable insight into educator perceptions of AI, the specific policies, demographics, and culture of that district may not reflect those of other regions of the United States.

In addition, this study relied on self-reported data to assess teacher awareness, use, confidence, and perceived institutional support regarding AI. Self-reported measures are inherently susceptible to various biases, including social desirability and recall bias. As a result, the findings may not fully capture actual behaviors or competencies related to AI use in the classroom. Future research could incorporate observations to better examine teachers’ use of AI tools. Furthermore, the cross-sectional design of the study provides only a snapshot in time. This is particularly important given the rapid evolution of artificial intelligence technologies and their applications in education. Teacher perceptions, institutional support, and the availability of tools are likely to change significantly over a short period of time as new tools and applications are developed. Thus, although these findings are valuable, they are time-bound. Finally, while the study examined specific AI tools, the broader landscape of AI in education is vast and diverse. Findings from a subset of tools may not necessarily apply to emerging technologies.

4.2. Policy Implications

This study highlights several important considerations for education policy at both the district and state levels, especially considering recent national attention to AI integration in education. With the U.S. Department of Education placing increased emphasis on the ethical and effective use of AI in schools, it is imperative that educational institutions respond proactively. Indeed, the strong desire among educators for professional development (PD) opportunities focused on AI should translate into policy initiatives. States and districts should invest in accessible, high-quality, and sustained PD programs that are updated regularly to reflect the fast-changing AI landscape. These should not be one-off workshops but part of a comprehensive PD strategy.

We found meaningful differences between elementary and secondary educators in their experiences and needs related to AI. This suggests that PD and support mechanisms should not follow a one-size-fits-all model. Policymakers should support differentiated approaches that address the unique pedagogical contexts and technological readiness of teachers across grade levels. It should also be noted that teachers’ calls for “clear permission policies” signal a critical gap in existing policy frameworks. As AI tools raise questions around data privacy, academic integrity, and equity, districts must develop and disseminate robust ethical guidelines and usage protocols. Clear policies can empower teachers to innovate while ensuring student protections are in place. Policies should support leadership development, infrastructure investments, and a clear, district-wide vision for AI use. When institutions demonstrate strategic confidence, it may, in turn, increase teacher confidence and willingness to engage with AI.

4.3. Research Implications

The findings of this study also point to several important directions for future research. Most notably, there is an urgent need for longitudinal research that tracks how teacher awareness, confidence, and AI use evolve over time, especially as new tools are introduced and as PD initiatives scale. Future studies should also investigate the impact of AI integration on student outcomes, a dimension not explored here. Understanding how AI tools affect student learning, engagement, and equity will be critical for making informed decisions about their classroom use.

Additionally, there is a need to examine which types of PD are most effective in enhancing AI literacy and confidence among educators (also see Tan et al., 2025; Wang & Fan, 2025). Research should explore variations across teacher experience levels, subject areas, and school settings to inform more targeted PD design. Ethical considerations also warrant closer examination (e.g., Adeshola & Adepoju, 2023; Lindebaum & Fleming, 2023). Teachers are increasingly confronted with issues related to data privacy, algorithmic bias, and academic integrity (Kalantzis & Cope, 2025b), but little is known about how they currently navigate these challenges. Research should identify the specific supports teachers need to address these dilemmas responsibly. It is worth noting that the pace of research on AI in education may be constrained by limited funding. As AI becomes a more central component of teaching and learning, investment in educational research must keep pace. Increased funding is essential to support the sustained, rigorous study of AI’s pedagogical, ethical, and institutional implications. Thus, collaborations between institutions of higher education and school settings may be crucial.