Abstract

Laboratory courses are foundational in STEM education, traditionally reinforcing a linear view of scientific inquiry through the validation of pre-tested hypotheses. This perspective tends to overlook the iterative, tentative, and unpredictable nature of scientific research. To address this, we developed the “Designed-to-Fail Laboratory” (DtFL), an interdisciplinary pilot course that strategically employs structured experimental failures to foster student engagement with fundamental principles of Nature of Science (NOS). The DtFL combines inquiry-based learning with failure, challenging students to confront and reflect on failure as an intrinsic component of scientific practice. Using a mixed-methods approach with pre/post-surveys and student learning diaries, we evaluated the course’s impact on fostering NOS understanding and student engagement. Qualitative analyses revealed heightened cognitive and emotional engagement with NOS dimensions, including its empirical, tentative, and subjective aspects. Survey scores showed no statistically significant changes, underscoring the complexity of capturing nuanced shifts in NOS understanding. In their learning diaries, students highlighted the DtFL’s value in reshaping their perceptions of failure, promoting resilience, and bridging theory with authentic scientific inquiry. Our findings suggest that the DtFL provides a novel framework for integrating failure into laboratory pedagogy, and an opportunity for students to gain insights into NOS while fostering critical thinking and scientific literacy.

1. Introduction

In educational settings, the process of science is often presented as a series of precise, linear steps leading to expected results. However, this view rarely captures the highly dynamic, non-linear scientific process whose conclusions are subject to revision in light of new evidence, and are shaped by human interpretation, creativity, and the broader social and cultural context (Abd-El-Khalick et al., 1998; Cohen et al., 2007; Holbrook & Rannikmae, 2007; Lederman, 2007; McComas, 2015). The ever-evolving philosophical and conceptual framework that deals with what science is, how it works as a process and an enterprise, and the broader implications it has for knowledge and society has been referred to as Nature of Science (NOS).

Today, a growing body of literature emphasizes the importance of NOS instruction as a fundamental component of (science) education for creating scientifically literate citizens. The underlying hypothesis is that beyond subject matter knowledge, students should interact with and reflect on how scientific knowledge is generated, evaluated and applied to real-world contexts in order to develop higher-order cognitive skills essential for navigating real-world issues (Abd-El-Khalick & Lederman, 2000; Allchin et al., 2014; Almeida et al., 2023; Holbrook & Rannikmae, 2007; Höttecke & Allchin, 2020; Ramnarain, 2024; Ryder, 2001; Tairab et al., 2023). These skills include scientific reasoning, problem-solving, critical thinking, and metacognitive awareness, e.g., learning how to obtain and evaluate knowledge, or recognizing and correcting misconceptions (Ecevit & Kaptan, 2022; Engelmann et al., 2016; Forawi, 2016; Lin & Chiu, 2004; Yacoubian, 2020). Furthermore, NOS is increasingly recognized for its role in preparing students to address socio-scientific issues (SSIs), i.e., complex, often controversial real-world topics at the intersection of science, society, policy and ethics, such as climate change or vaccination mandates (Herman et al., 2022; McComas, 2020; Zeidler et al., 2019). For example, Höttecke and Allchin (2020) emphasized how navigating the challenges in science communication and social media, particularly those related to source credibility, is closely tied to public scientific literacy, and by extension, NOS education. Herman et al. (2024) recently reported that a socio-culturally sensitive, pandemic-responsive instruction embedding NOS elements within COVID-19-related contexts significantly improved post-secondary students’ acceptance and/or support of COVID-19 vaccines, as well as their resilience against misinformation and conspiracy theories.

Despite this strong theoretical and empirical support, implementing instructional designs that effectively promote NOS understanding remains a challenge (Abd-El-Khalick, 2013; Bugingo et al., 2024; Jain, 2024; Lederman & Lederman, 2019; McComas et al., 2020). Effective NOS instruction should be explicit (intentionally planned for), reflective (involving deliberate student reflection), and contextualized (grounded in relevant scientific contexts) (Lederman & Lederman, 2019; McComas et al., 2020). One commonly discussed instructional strategy aligned with these principles is inquiry-based learning (IBL) (Allchin et al., 2014; Constantinou et al., 2018). IBL is a student-centered pedagogical approach in which learners construct knowledge by engaging with scientific questions and problems in ways that mirror authentic scientific practice, rather than passively receiving information (Constantinou et al., 2018; Pedaste et al., 2015). This approach is particularly well-suited for both lecture- and laboratory-based teaching and offers a level of flexibility across disciplines that is commonly desired. However, despite its potential, current implementations of IBL often prioritize hands-on experimentation over deeper forms of cognitive engagement (such as exploration, explanation, or argumentation), thereby limiting its ability to support NOS understanding and the associated higher-order cognitive skills discussed above (Morris, 2025). While some instructional models attempt to address this issue by promoting critical and reflective inquiry within IBL implementations, they tend to overlook a fundamental element of scientific work that can prompt such inquiry: failure. Due to its association with negative emotions, such as frustration, disappointment, or embarrassment that are commonly viewed as potentially alienating to students (Allchin et al., 2014), failure is often not incorporated into IBL or systematically addressed via reflective practices when it occurs. 
We argue that when deliberately incorporated into inquiry-based instruction and accompanied by adequate scaffolding, failure can serve as a valuable tool to engage students with multiple aspects of NOS. This idea has previously been suggested by various scholars; for example, Allchin (2012) stated: “If the goal is to teach ‘how science works,’ then it seems equally important to teach, on some occasions, how science does not work.” Similarly, Lederman and Lederman (2019) illustrated, step by step, how failure can be leveraged to teach students about NOS, emphasizing that discussing disagreements and divergent results among groups can highlight critical aspects of NOS such as subjectivity, creativity, and the tentative nature of scientific knowledge. While the potential of failure to support NOS instruction has been acknowledged, to the best of our knowledge, no empirical study has focused on how and to what extent failure can support students’ understanding of NOS.

In this work, we present an inquiry-based laboratory course that incorporates structured failure as a central pedagogical tool for NOS instruction and refer to it as the “designed-to-fail laboratory” (DtFL). Our approach fundamentally differs in execution from traditional inquiry-based instruction (including variants of problem-based learning, project-based learning, and authentic research inquiry (Spronken-Smith, 2008)) by intentionally incorporating failure and systematically addressing it as an integral feature of the instructional design rather than an incidental or undesired outcome. With this approach, we aim to give students the opportunity to face uncertainty, ambiguity, and the iterative revision processes that characterize authentic scientific work, in order to enhance their understanding of key NOS aspects. By systematically addressing failure and reframing it as an inherent part of the process of science, we aim to prevent students from developing negative personal associations or feelings that might lead to their disengagement from science.

The DtFL diverges from other established instructional strategies that support NOS instruction, such as the use of history of science (HOS) and SSIs, in two key ways: the degree of flexibility in its design and the depth of student immersion in the scientific process. Unlike the use of HOS, which relies on historical narratives and replication of past experiments, or the use of SSIs, which primarily requires controversial real-world problems with implications on policy and ethics, we believe that the DtFL can be designed around a broad range of scientific topics for a wide range of age groups. This versatility might fill an important gap for NOS instruction in STEM (Science, Technology, Engineering, Mathematics) education, as HOS and SSI approaches are sometimes seen as competing with limited instructional time dedicated to core scientific content. Furthermore, the experience of failure in the DtFL is situated in the present and directly linked to students themselves, unlike the historical figures in HOS or distant decision-makers in SSIs, thereby engaging students more deeply in the scientific process and encouraging a potentially more meaningful shift in perspective. Importantly, by introducing failure after instruction has been provided, the DtFL also differs in execution from the prominent failure-oriented instructional model of “productive failure”, which emphasizes student failure prior to instruction to prime later learning (Kapur, 2008). This design choice reflects the reality of scientific research, where failure frequently occurs despite preparation and access to instruction (e.g., through shared protocols or published methods), due to the complex, ill-structured and unpredictable nature of scientific problems. With this first-time implementation of the DtFL, we aim to explore two research questions (RQs):

RQ1: How does failure affect the extent of students’ understanding of NOS in the DtFL?

RQ2: What are students’ perceptions of the DtFL format?

2. Methods

2.1. DtFL Module Integration and Structure

The DtFL was offered for the first time during the winter semester of 2023/2024 at Technische Universität Braunschweig, Germany, under the title “Introduction to BioMEMS Laboratory”. BioMEMS (Biological/biomedical Micro-Electro-Mechanical Systems) are miniaturized devices that integrate microfabrication technologies with biological components to support and advance the life sciences. This two-credit module, based on the European Credit Transfer and Accumulation System (ECTS), complemented the five-credit ECTS lecture course “Introduction to BioMEMS”, with all instruction conducted in English. To ensure students had sufficient foundational knowledge, enrollment in the DtFL required prior attendance of the lecture course. However, the specific BioMEMS system used in the DtFL and the research question the students were asked to study were deliberately not introduced during the lecture in order to foster an authentic research experience during the laboratory. The DtFL involved a total of sixteen students (see Section 2.2) divided into four groups to maximize hands-on time and encourage meaningful discussions. Each group was allocated one and a half days to complete a total of three experiments (see Section 2.3). Registration for the DtFL and the assignment of students to the groups were carried out on a first-come, first-served basis. The grade was based on active participation in the experiments and discussions, as communicated to the students at the beginning of the course. Additionally, students were asked to complete anonymous surveys at the beginning and the end of the DtFL (see Section 2.4), and to maintain anonymous learning diaries throughout the course. To familiarize students with the concept of a learning diary and the expected nature of their entries, they received a brief instruction on learning diary keeping (see Supplementary Data, S1) (Enterprise Educators UK, n.d.).
In the context of the DtFL, learning diaries are meant to serve as a reflective tool for students to document and critically assess their learning experiences, providing valuable insights into emotional, cognitive, and metacognitive processes during learning, as per their original intent (Halbach, 2000; Munezero et al., 2013). To support honest reflection and engagement, students were encouraged to ask questions, share both positive and negative thoughts, and freely express ideas, without fear of mistakes or criticism. Furthermore, they were assured that the content of the surveys and diary entries would not influence their grades, as both were anonymous.

2.2. Student Demographics

Given the interdisciplinary nature of BioMEMS and the course being taught in English, the DtFL attracted students from diverse cultural and educational backgrounds. Of the sixteen participants, seven were male (43.75%) and nine were female (56.25%). Three students (18.75%) were pursuing their bachelor’s degrees. The remaining thirteen (81.25%) were enrolled in master’s programs. There was considerable variation in the number of laboratory courses students reported participating in during their university studies. On average, students estimated they had taken approximately 10 laboratory courses (standard deviation (SD) ≈ 7, median = 9, with statistics calculated using the lower end of their estimates). This suggests that some students might have been more familiar with the practical and cognitive skills commonly required in laboratory settings. Most of these laboratory courses were taken within the German higher education system.
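
The descriptive statistics above were calculated from the lower end of each student's estimate. As an illustration only, the following minimal Python sketch shows one way such a calculation could be carried out. The estimates are hypothetical placeholders, not the study data, and the helper `lower_bound` as well as the use of the sample standard deviation are assumptions made for this example.

```python
import statistics


def lower_bound(estimate: str) -> float:
    """Return the lower end of an estimate such as '8-10', '~5', or '12'."""
    cleaned = estimate.replace("~", "").replace("–", "-").strip()
    return float(cleaned.split("-")[0])


# Hypothetical self-reported numbers of laboratory courses (NOT the study data).
estimates = ["8-10", "12", "~5", "15-20", "3", "9"]

counts = [lower_bound(e) for e in estimates]
mean = statistics.mean(counts)
sd = statistics.stdev(counts)      # sample SD; an assumption for this sketch
median = statistics.median(counts)

print(f"mean = {mean:.1f}, SD = {sd:.1f}, median = {median}")
```

Using the lower end of a range yields a conservative estimate of prior laboratory experience; a different convention (e.g., the midpoint) would shift the mean upward.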

2.3. Design and Execution of the DtFL

The DtFL centered around answering the following research question: “How does stretching affect the orientation of biological cells cultured on stiff and soft substrates?” This question was presented to the students at the beginning of the laboratory course, along with a relevant theoretical study that served as the basis for the overarching research hypotheses the students were prompted to form (G.-K. Xu et al., 2018). To experimentally answer the research question, the students first needed to fabricate and test a BioMEMS device developed by our research group, designed to enable multi-directional stretching of cells for mechanobiological investigations. This device, termed “StretchView”, comprises a device body and a cell culture membrane attached to the device body, both made of polydimethylsiloxane (PDMS), a widely used silicone elastomer valued for its versatility, biocompatibility, and ease of use (Jaworski et al., 2025). PDMS is a two-part resin that consists of an elastomer base and a crosslinker. The ratio at which the elastomer base and the crosslinker are mixed influences the mechanical properties of the resulting polymer, including its stiffness. Before experiments began, students were shown examples of StretchView systems, introduced to the device structure and function, and informed that they would need to fabricate such systems and use them to test their research hypotheses in order to answer the central research question. Students were then prompted to form an experimental plan with the guidance of the instructors. All groups arrived at the following steps: (1) fabrication of two StretchView devices, one incorporating a soft cell culture membrane and one incorporating a stiff cell culture membrane, (2) preparation of the finalized devices for cell culture, and (3) cell culture and cell stretching on membranes of different stiffness.
Within this framework, students were tasked with performing three consecutive experiments, each incorporating a deliberate point of failure. In the first experiment, students fabricated device bodies by mixing and then casting PDMS into a 3D-printed mold, curing at a specific temperature, and then unmolding the fabricated parts. For that, they were provided with a generalized, publicly available protocol published by a well-known university (see Supplementary Data, S2) (Israel Institute of Technology, n.d.). Although the protocol contained all basic process steps and no mistakes, it lacked necessary information related to part unmolding, meaning that regardless of how closely the instructions were followed, the unmolding process would fail (failure point 1). In the second experiment, students were tasked with researching, formulating and testing their own hypothesis related to how crosslinker concentration affects PDMS stiffness. As expected, students quickly arrived at the generalized information that an increased crosslinker concentration would lead to higher PDMS stiffness, and they based their hypotheses around this information. However, PDMS stiffness also depends on multiple other parameters, such as curing temperature and time, aging, or ultraviolet light exposure, all parameters that students did not consider when formulating a hypothesis (Cai et al., 2022; Charitidis et al., 2012; T. Xu et al., 2024). To challenge students’ simplified hypotheses, we provided students with PDMS samples that were made using increasing crosslinker concentrations but had, unbeknownst to them, been subjected to varying curing, aging, or storage conditions. Students experimentally measured stiffness using a custom-built tensile testing platform. The measurements resulted in stiffness values that did not fit the students’ expectations (failure point 2).
In the third experiment, the students’ objective was to test their overarching hypotheses about cell orientation under biaxial stretching using the StretchView devices they fabricated. As cell culture experiments are inherently time consuming and cells can take several hours to days to respond to external stimuli, such as substrate stiffness, students did not perform these experiments during the laboratory course. Instead, instructors performed the experiments and presented students with images of cells that did not exhibit the expected orientations as a response to stretching and stiffness (failure point 3). The deviation between expected results and the experimental results obtained during the course could have occurred for various reasons, including the use of cell types that were not sensitive to stretching, insufficient time for the cells to respond to the stretching, or the use of inadequate visualization methods to observe the cells’ reactions.

After every failure point, students were gathered in a discussion session where they were guided by the instructors to critically reflect on their initial hypotheses, experimental processes and findings. After failure points 1 and 2, they were prompted to take a closer look at the literature, attempt to reformulate their “failed” hypotheses and redesign their experiments so that they were eventually able to successfully fabricate the cell stretching devices. Due to time constraints related to the cell culture process, the discussion session after failure point 3 solely focused on the analysis of potential reasons for the discrepancy between the expected and the acquired experimental results.

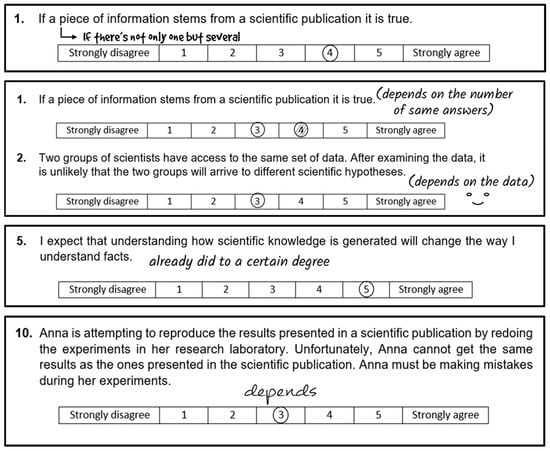

2.4. Data Collection and Analysis

In this exploratory study, we used a one-group, within subjects, pre-/post-test research design (Privitera & Ahlgrim-Delzell, 2019). To investigate our RQs, we adopted a convergent mixed-methods approach, where quantitative components (Likert-scale survey questions) and qualitative components (open-ended survey questions and student learning diaries) were collected and analyzed separately, then integrated during interpretation (Creswell & Plano Clark, 2018). In some discussions below, we additionally report on instructors’ observations of student interactions and reactions, in order to present a more complete picture. For the survey design, we adapted questions from the “Views of Nature of Science (VNOS)” and “Colorado Learning Attitudes about Science Survey (CLASS)” to align with the topics of interest in this study (Adams et al., 2006; N. G. Lederman et al., 2002). These adaptations accounted for the STEM backgrounds of our students and emphasized questions more relevant to experimental investigations. Additionally, certain questions initially intended to distinguish between scientific theories and laws were adapted to instead differentiate between scientific hypotheses and scientific facts, as this distinction was more pertinent to the context of failure in our study. Here, we define a scientific fact as a phenomenon that has been repeatedly confirmed through the analysis and interpretation of empirical evidence (National Academy of Sciences, 1998). This selective adaptation approach follows established practices in STEM education research, where validated instruments like VNOS and CLASS have been modified or partially used to better align with specific study contexts and constructs (e.g., Bell et al., 2003; Şahin, 2009; Semsar et al., 2011). The initial survey draft was validated through feedback from ten scientific staff members (doctoral students and postdocs). Based on their responses, revisions were made to finalize the survey, which can be found in Table 1.

Table 1.

Survey questions used in this study, grouped into the respective RQs and probed topics. For questions adapted from VNOS and CLASS, the source question is highlighted in parentheses (e.g., VNOS-FC-Q10 refers to question (Q) 10 from VNOS survey, Form C (FC)). The survey placement column shows the position of the questions in survey Part 1 (P1: open-ended questions) and Part 2 (P2: Likert-scale questions).

The finalized survey was administered to the students at the beginning and the end of the DtFL in two parts: open-ended questions (Part 1) and Likert-scale questions (Part 2). Part 1 was administered and collected before Part 2. To enable linking of different survey parts, as well as pre- and post-DtFL survey responses while maintaining anonymity, students were asked to choose an emblem (e.g., a shape or emoticon) and use it consistently on all survey sheets instead of their names. The post-DtFL surveys were administered before the final session of the module, where the purpose and design of the DtFL were disclosed to students. During this session, students provided consent for the anonymous use of all materials (including survey responses and diary entries) in research.

Answers to open-ended survey questions and the diary entries were digitized and analyzed qualitatively or semi-quantitatively, and selected responses were highlighted. During digitization, excerpts were used verbatim, including spelling or grammar errors. In rare instances, changes or additions needed to be made for context clarification. These were indicated with square brackets “[ ]”. To convey students’ emotional responses, emoticons or underlined phrases were retained. Responses to the Likert-scale questions were quantitatively analyzed using descriptive statistics and a sign test for paired samples using the software “OriginPro 2022b”. The Wilcoxon signed-rank test for matched pairs could not be used due to the high number of ties in the data, which effectively reduced the sample size for the test (Ramachandran & Tsokos, 2021; Wilcoxon, 1945).
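
To make the logic of the paired-sample sign test concrete, the following minimal Python sketch implements an exact two-sided version. It is offered as an illustration under stated assumptions and is not the OriginPro procedure used in this study: the function name `sign_test`, the 5-point scale, and the pre/post responses are hypothetical and do not represent the study data. Tied pairs (no change between pre and post) are discarded, and the remaining positive and negative differences are compared against a binomial distribution with p = 0.5.

```python
from math import comb


def sign_test(pre, post):
    """Exact two-sided sign test for paired samples; tied pairs are dropped."""
    diffs = [b - a for a, b in zip(pre, post)]
    n_pos = sum(d > 0 for d in diffs)
    n_neg = sum(d < 0 for d in diffs)
    n = n_pos + n_neg  # effective sample size after removing ties
    if n == 0:
        return n_pos, n_neg, 1.0
    k = min(n_pos, n_neg)
    # P(X <= k) for X ~ Binomial(n, 0.5), doubled for a two-sided test
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return n_pos, n_neg, min(1.0, 2 * tail)


# Hypothetical 5-point Likert responses of 16 students (NOT the study data).
pre = [3, 4, 4, 2, 5, 3, 4, 4, 3, 5, 4, 3, 4, 2, 4, 3]
post = [4, 4, 4, 3, 5, 3, 3, 4, 4, 5, 4, 4, 4, 2, 4, 3]

n_pos, n_neg, p_value = sign_test(pre, post)
print(n_pos, n_neg, p_value)
```

In this hypothetical example, eleven of the sixteen pairs are ties, so the test rests on only five informative pairs. This illustrates how a large number of ties in a small sample limits the statistical power of paired nonparametric tests.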

3. Results and Discussion

In this section, we present and discuss the results of our analysis for the two RQs guiding this study. Section 3.1 and Section 3.2 address RQ1 and RQ2, respectively, focusing on the analysis and interpretation of open-ended survey responses and learning diaries. In order to preserve the individuality of student perspectives while presenting illustrative survey responses and diary entries, we assigned a unique code to each student (i.e., S01 to S16) that we have consistently used throughout our analysis. Section 3.3 discusses students’ responses to Likert-scale survey items across both RQs and reflects on the limitations of this quantitative approach.

3.1. RQ1: How Does Failure Affect the Extent of Students’ Understanding of NOS in the DtFL?

We begin with the analysis of open-ended survey responses and learning diary entries to examine how structured failure elements in the DtFL contributed to students’ understanding of various aspects of NOS, following McComas’s NOS framework (see Supplementary Data, S3) (McComas, 2015, 2020; Njoku, 2011; Widowati et al., 2017). Our analysis revealed that as a response to failure, students engaged both emotionally and cognitively with multiple major aspects of NOS. Exceptions were the distinction between laws and theories, and the differentiation of science from engineering and technology, as these aspects were not addressed within the first implementation of the DtFL. While students often reflected on multiple NOS aspects in an interconnected manner rather than as discrete concepts in their writing, for clarity and readability, we present exemplary entries organized into thematic clusters based on specific NOS aspects.

3.1.1. NOS Aspect: Science Produces, Demands, and Relies on Empirical Evidence

A foundational principle of NOS is that scientific knowledge is built upon empirical evidence, that is, knowledge is obtained through systematic observation, measurement, and experimentation. For a scientific claim to be credible, it must be supported by accurate, reproducible, and verifiable data, and critically analyzed with attention to how it was generated and how it relates to existing knowledge. Because empirical evidence plays such a central role in the scientific enterprise, this NOS aspect is naturally closely related to the others. The examples presented here specifically focus on how students engaged with collecting and validating empirical data as the foundation of scientific knowledge.

During the DtFL, students encountered experimental results that differed from their expectations or were difficult to interpret. These moments encouraged students to reflect on how they collected empirical data, and how they evaluated its reliability and validity. S10, for example, described problems with their stiffness measurements and how these affected the way they interpreted results: “Due to the measuring not being so stable & a bit rudimentary, I could expect the measurements to vary a bit. Afterward we tried to analyze our results. I expected that the stiffness & the stretch would decrease as the ratio grew smaller/crosslinker concentration grew, as I had read in a paper before we had begun working. Although the stretch length did somewhat decrease as I expected, the results were not statistically significant. The importance of keeping track of the parameters we use has been made clear, specially when comparing with already published results.” This reflection illustrates how some students began to think more carefully about the quality and the validity of the data they were collecting, as well as the importance of carefully selecting experimental parameters with (cross-lab) reproducibility in mind. Other students focused more explicitly on the requirement for replication and cross-validation of findings. For example, when reflecting on results that were inconsistent with their expectations, S05 laid out a systematic strategy for checking and contextualizing their data: “Our data did show a trend in the right direction, but it was not as linear as we maybe expected. I would remeasure the values, fabricate the devices again and check (double check) that the concentrations of the devices are right. If I then came to the same results, I would check the literature again. Which material exactly did they use? How did they measure everything? Maybe they used materials from a different brand or something that might influence the results. 
I would also have a look at different literature → maybe someone came to the same conclusions as I did. At the end (if I can’t really change my results) I would just accept them and choose the ratios of base:linker depending on what stiffness I need for my following experiments → choose the ratios that show a significant difference in stiffness (if that’s what I want to have later) I would also check the internet if I could come to an explanation for my data myself.” This entry exemplifies how the student prioritized the validity and reproducibility of their data before accepting them as truth, and they further addressed that empirical data should be contextualized and interpreted in the light of multiple resources (see also Section 3.1.6). S09 expanded on this understanding, stating the need for replications even when experimental results seemed to match their hypotheses: “Our hypothesis was supported by the experiments but further investigations (measurements, more replicates) are needed to be done.” A similar emphasis on the validity and reproducibility of empirical data also appeared in survey responses. For example, when explaining the difference between a scientific hypothesis and a scientific fact (P1-8), S15 revised their pre-DtFL definition of a fact from “something that is proven” to “something that is proven by experiments (in optimal cases more than once)”. While the prevalent use of the word “prove” in student entries and survey responses likely reflects the blending of daily and scientific language, an issue previously discussed in the literature (McComas, 2020), the subtle yet important shift in this entry signals progress as it suggests that establishing scientific facts requires empirical data, for example, as a result of experiments, and scientific credibility depends on their replication and verification.

3.1.2. NOS Aspect: There Is No Singular “Scientific Method”

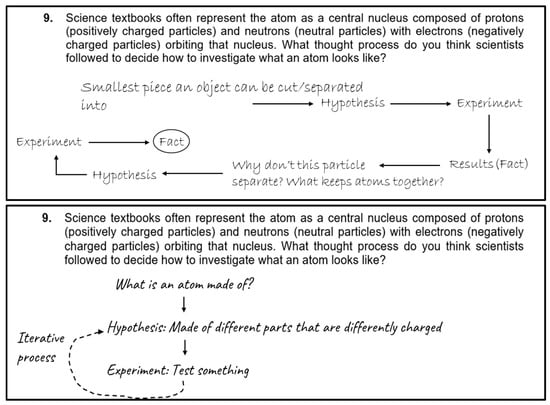

An enduring misconception that is still propagated in science education is that all scientific inquiry follows a rigid, linear set of steps often referred to as the “scientific method”: identifying a problem/asking a question, gathering information, forming a hypothesis, testing the hypothesis, recording and analyzing data, and reaching a conclusion. In reality, scientific investigations are diverse, with approaches differing depending on the questions asked, the tools available, and the disciplinary context. Moreover, scientific inquiry is inherently iterative, as inconclusive results or unexpected outcomes often lead to new questions, prompting further rounds of investigation and interpretation. As the DtFL was implemented within an engineering-focused course that emphasized experimental investigations, students were not exposed to the full diversity of inquiry types (i.e., descriptive and comparative investigations). However, the failure points embedded into the experimental investigations encouraged students to reflect on the iterative nature of science, as supported by several diary entries and open-ended survey responses. For example, when defining what constitutes an experiment (P1-6), S15’s pre-DtFL survey response evolved from “To clearify your hypothese[s]” to the post-DtFL survey response of “To clearify your hypotheses and investigate new stuff → actually, also to “create” new hypotheses for future exp[eriments]”, demonstrating a newfound recognition of experimentation as a part of a recursive and generative process rather than a one-way test of ideas. Others chose to specifically emphasize the role of iteration using arrows in their post-DtFL responses, a perspective absent from their pre-DtFL answers (see examples for P1-9 in Figure 1). 
In their diary, S05 identified failure as a stimulus for reflection, refinement and further inquiry: “Also the ‘failure’ of different parts of the experiment leads to more thinking about what we expected before, why we saw/didn’t see the results we expected and what we can do next time to improve.” S02 emphasized the role of repetition and adjustment, stating: “Redo the experiment to prove that your first experiment results are significant relevant/not. (…) Science also means trial & error.”

Figure 1.

S01’s (top) and S03’s (bottom) post-DtFL responses to P1-9, particularly emphasizing iteration using arrows and circular patterns, a perspective missing from their pre-DtFL responses. To ensure anonymity, students’ responses were redrawn digitally, and varying handwriting-style fonts were used to represent responses from different individuals. The original form and text were retained.

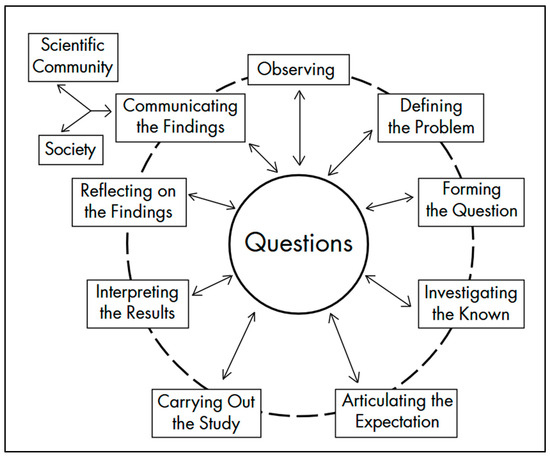

These reflections align closely with the “Inquiry Wheel” model proposed by Reiff, Harwood and Phillipson, which presents a more authentic alternative to the linear “scientific method” (Reiff et al., 2002; Robinson, 2004). Based on interviews with 52 science faculty members from nine science departments, the inquiry wheel positions questions at the center of scientific inquiry, surrounded by iterative, interconnected activities such as observation, investigation, and interpretation (see Figure 2). While not explicitly integrated into NOS frameworks like McComas’s (see Supplementary Data, S3), the inquiry wheel remains one of the widely used models conceptualizing the iterative nature of real-world scientific work. Beyond understanding this iterative nature of scientific inquiry, the DtFL appeared to foster an intuitive internalization of this model. S11 reflected: “What I loved about the course is that it was not only focused on bioMEMS, but the general practical part of scientific thinking. We were constantly coming up with hypothesis and debating them (…). What was important to me was to realize all the information that could potentially be drawn by asking (and further experimentation) these questions, which reminds me that the scientific method is cyclic and improves itself based on the iteration of these questions.” This quote illustrates how failure, rather than being experienced as a dead end, became a catalyst for curiosity and further questioning—the central engine of the inquiry wheel. A particularly telling example came from S16 who, pre-DtFL, stated that an incorrect research hypothesis cannot be a valid part of the process of scientific inquiry (P1-12), as it “can cause wrong direction of Researching or studying”. Post-DtFL, their answer changed entirely, stating: “Yes. incorrect hypothesis will make, Curiousity. Why result is not same as hypothesis. 
Then he/she will try to find the reason and that will make better and correct direction.” This transformation underscores the potential of structured failure to reinforce students’ understanding of science as an exploratory, evolving process. Given these examples, we find it surprising that failure—a universal and generative component of scientific work—is not explicitly represented in models such as the inquiry wheel or recognized within conceptualizations of scientific inquiry and NOS. We believe that representing failure as an inherent element of science, in interventions such as the DtFL but also beyond, offers a promising avenue for normalizing failure in scientific processes and decoupling it from its negative connotations. This shift towards accepting failure as an integral part of the process was reflected in students’ increasingly resilient and constructive attitudes towards setbacks, as humorously captured by S15’s diary entry: “And the most important part is to not get frustrated → if you cry, only cry to be ready for try no. 2:)”.

Figure 2.

“The Inquiry Wheel” presented as an alternative to “The Scientific Method”, reprinted with permission (Robinson, 2004). Copyright 2004, American Chemical Society. The wheel should not be interpreted as a cycle with stages following each other, but as a flexible process where investigations can start at any stage and be led by another depending on the nature of the investigation.

3.1.3. NOS Aspect: Scientific Knowledge Is the Product of Creative Thinking

Contrary to the portrayal of science as a rigid and purely logical enterprise, NOS recognizes creativity and imagination as driving forces at all stages of scientific inquiry. Scientific knowledge significantly benefits from creative hypotheses and result interpretation, novel experimental designs, innovative problem-solving strategies, and the construction of explanatory models such as the inquiry wheel discussed above. However, students might not associate creativity with scientific practice when they are asked to follow established procedures with predictable outcomes (see also Section 3.2). Within the DtFL, the structured inclusion of failure and the need to reframe, rethink and iterate created opportunities for students to engage in creative thinking. A prominent experimental point that supported students’ creative engagement was failure point 1, where students could not unmold the device body (see Section 2.3). While brainstorming solutions for this failure point, three students drew inspiration from baking and suggested “buttering the mold” for an easier release. This humorous but sincere suggestion illustrates creative problem solving, where students engaged in analogical reasoning by transferring a familiar practice from an everyday domain (baking) into a scientific context. Moments such as these show that structured failure can actively stimulate creative thinking and the generation of new ideas. As S02 noted: “Make iteration steps are a good way to think about a topic in an “outside of the box” way + promotes your creativity.”

Despite this experiential engagement, further mentions of creativity and its role in driving scientific inquiry were largely absent from students’ diary entries and open-ended survey responses. The survey responses to questions adapted from VNOS-Form C, which aim to assess students’ views of creativity and imagination in science, revealed no significant shift in how students conceptualized the creative and imaginative elements of scientific work. In fact, in some cases, references to creativity or personal influence diminished post-DtFL. For example, when asked about scientists’ decision-making processes when choosing which experiments to perform to test their hypotheses (P1-10), both pre- and post-DtFL responses mainly focused on rational and methodological factors such as scientists’ prior knowledge, the state of the literature, or the appropriate selection of dependent and independent variables. One pre-DtFL response (S03) mentioning “inspiration from other researchers” did not mention any human influence post-DtFL and focused on procedures such as iteration and validation instead. S07, who had a pre-DtFL response of “Perhaps they choose whichever experiments seems the most fun” changed their post-DtFL perspective to “Perhaps they choose to run the easiest and less complicated experiments first”. A similar trend was seen for P1-9, a question that addressed the thought processes scientists follow to investigate what an atom looks like. Responses varied widely and lacked mentions of human elements, except for S03 whose pre-DtFL response mentioned inspiration from planetary orbits. Post-DtFL, however, S03 focused on an iterative series of experiments, omitting any reference to creative or imaginative elements.

This mismatch between lived creative engagement and reported conceptions suggests that while the DtFL enabled students to practice creative thinking, it did not sufficiently promote metacognitive awareness of creativity as a defining feature of science. This observation aligns with prior findings in science education literature, which show that students often struggle to recognize creativity as an inherent element of science unless it is explicitly emphasized and reflected upon (Kind & Kind, 2007; Newton & Newton, 2009). It could also be due to the fact that, during the DtFL, students tended to look for solutions in the literature rather than propose their own creative solutions, as they lacked experience in this area of research. To strengthen the understanding of creativity as a core NOS dimension, future iterations of the DtFL would benefit from incorporating more explicit prompts for reflection, such as asking students to pinpoint and name their inventive decisions, peer-discussion sessions that explicitly name and debrief creative choices, as well as discussions around the role of creativity and imagination. Additionally, a longer version of the course could allow the incorporation of more opportunities for creative engagement, including allowing students to explore multiple creative experimental ideas.

3.1.4. NOS Aspect: There Is Subjectivity and Bias in Science

While science is often idealized as an objective pursuit of truth, it is fundamentally a human activity shaped by prior knowledge, reasoning, insight, skill, and perspective. These human dimensions, although essential, introduce subjectivity and bias into scientific practice and communication. Recognizing the human element in the generation, selection, interpretation, and communication of empirical evidence is critical for understanding how scientific knowledge is produced, how divergent interpretations can arise from the same data, and why transparency, critique, and peer review function as essential safeguards in scientific work. In traditional laboratory courses, this subjective dimension is rarely emphasized as instruction tends to prioritize technical precision and correctness over deliberation or contextual reasoning. The DtFL, however, reverses this focus by structuring experiences around ambiguity and unexpected results, thereby offering students multiple opportunities to engage with the subjective nature of science. Throughout the DtFL, several student reflections illustrated a growing recognition that scientific procedures, and the knowledge created as their result, require contextual interpretation and evaluation. S14 wrote: “Are our results right? Yes, even though it doesn’t feel like it, when comparing to literature results. The thing is that other researchers followed different protocols. (…) Protocols are not always applicable for everyone.” Similarly, S04 reflected on how the experience of failure led them to critically reconsider their assumptions in data interpretation: “The experiment gave me a new insight into the execution of experiments and how to interpret data → with a grain of salt and tears or a combination of both. From our research in the morning I expected another result than what we created. 
But it really emphasises the importance of putting things into context and not just accepting ‘facts’ as they come but rather know their circumstances.” This perspective was expanded upon in S03’s entry, who recognized that the interpretation of scientific claims is shaped by readers’ understanding of the methodological and environmental contexts the empirical evidence is generated in, and warned against drawing conclusions from isolated statements: “Reading studies takes time! Only taking one sentence from a study and forming a hypothis out of it may cause difficulties, because you need to understand the parameters, environments, testing, etc. Definite statements can vary on the scope!” Continuing their entry, S03 acknowledged the challenge of reconciling conflicting pieces of information, and reflected on the responsibility to judge the validity and credibility of such findings: “Furthermore we learned that it can be quite challenging to find different results in your experiment compared to studies… Who do you question first? Yourself or your research colleague?…” Similarly, S15 highlighted the value of deliberation and credibility assessment in scientific inquiry: “The best part of this practical course was not the experiment itself but the understanding on how to organize an experiment and how to go through the process step by step. It teached me to have a different perspective on research and showed me the importance of having a discussing. Also what is important is the sources where to look up stuff and how valid these information is.” In this entry, the positive emphasis on having a discussion with peers and instructors suggests a growing awareness that subjective interpretations, when shared and critically examined, can enrich scientific understanding rather than undermine it. At the same time, some students began to reflect on how subjectivity can be introduced through social structures and authority. 
One particularly insightful entry (S03) pointed to the instructor’s influence in framing discussions: “I would have liked a little less help by [the instructor], since she had already seen the problem two times by wednesday, she was already kind of biased as to where the discussion should go.” This reflection demonstrates an awareness of how prior knowledge and authority can subtly constrain interpretative space, shaping which ideas are to be explored or dismissed in a collaborative setting. The fact that this student identified the subjective influences on scientific knowledge generation, rather than perceiving guidance as neutral, suggests a growing ability to view science as a process shaped by interaction and perspective, not just as procedures and facts. This broader view of science was perhaps most clearly captured by S14’s shift in response to the question whether scientific facts can ever change after they have been accepted as true (P1-7). In their pre-DtFL answer, S14 already acknowledged that scientific facts can change, but attributed this change primarily to uncovering and correcting experimental mistakes: “Yes it can. Science is ever moving forward. Also mistakes are made. Even if 20 people recalculate, redo experiments etc. some things might be unseen.” Their post-DtFL answer, however, highlighted how differences in interpretation, context, and experimental design can all lead to divergent conclusions, even when no mistakes are involved: “What is a scientific fact? A natural law or results from a paper? Results can be interpreted differently. Experiments have been done differently. Just because results are true in one case, doesn’t mean they are true in another.” This shift suggests that the DtFL may have helped students understand that scientific knowledge is inherently shaped by the conditions of its production and subjective interpretation.

3.1.5. NOS Aspect: Society and Culture Interact with Science and Vice Versa

Scientific knowledge does not develop in isolation; it is shaped by the cultural, historical, and societal context in which it emerges. Conversely, scientific discoveries and practices also influence society, shaping technology, policy, education, and public discourse. Recognizing this bidirectional relationship is an important part of understanding science as a human and context-bound enterprise. Given that the DtFL was implemented for the first time, its ability to directly address this NOS aspect was constrained by the experimental nature of the course design. Nonetheless, some students extended their reflections to include broader social and cultural contexts. S02 emphasized the importance of understanding how scientific knowledge is generated to navigate current societal challenges, such as misinformation and evaluation of scientific credibility: “My personal learning from this lab is huge I think. It is so important for us to understand how a scientific hypothesis is formed & how the researches are running. It should be a relevant topic not also for students maybe introducing it to kids in school. In our time there is a big problem with fake-news & I think so many people don’t know how to estimate that a source is credible/not credible (best example climate change/corona-virus).” This entry reflects an understanding that scientific literacy has social value beyond the university setting, particularly in an age where scientific claims can be widely misrepresented, misunderstood or misconstrued. 
S03 commented on the cultural framing of failure, suggesting that societal attitudes may shape how people experience mistakes in educational or scientific settings: “Especially us Germans are very used to a mistake-culture where giving wrong answers is bad and wrong answers are what teachers or professors say is wrong.” Here, S03 situates their learning experience in the context of cultural norms around correctness and authority, reflecting an understanding that societal and cultural values may influence how educational content is taught, learnt, and internalized, especially in relation to failure and uncertainty. Together, these reflections suggest that even when the design and content of the course limit direct engagement with science-society interactions, some students still connect their personal experience of doing science to its broader social and cultural relevance. These insights support the idea that creating space for interpretation, discussion, and reflection upon failure, even within a technically focused laboratory setting, can help surface important themes about the interaction between science and society.

3.1.6. NOS Aspect: Scientific Knowledge Is Tentative and Self-Correcting, but Ultimately Durable

A central aspect of NOS is that conclusions derived from systematic scientific investigations may be revised as new evidence, methodologies or interpretations emerge. This aspect highlights the dynamic and evolving nature of scientific understanding while underscoring that scientific knowledge is still durable—offering the best available explanations grounded in empirical evidence. In science education, emphasizing the tentative nature of science without proper context has been considered pedagogically risky, as it may lead students to perceive scientific knowledge as unreliable and foster distrust (Mueller & Reiners, 2023). This concern is particularly relevant in the context of the DtFL, where students repeatedly encounter experimental failures and are exposed to scientific literature that is not in agreement with their experimental findings. However, our findings suggest that through instructor-guided discussion and reflection sessions, this risk did not materialize during the DtFL. On the contrary, students’ reflections indicated an increased recognition and appreciation of the complexity and conditionality of scientific conclusions. Instead of interpreting discrepancies as signs of unreliable science, students began to consider the factors that might explain different outcomes, a way of thinking necessary for comprehending why new evidence or interpretations might arise that initiate the revision of scientific knowledge. For example, when reflecting on disagreements between a piece of scientific literature and experimental results after failure point 2 (stiffness results, see Section 2.3), S16 wrote: “Here is what I overlooked. • Curing under different condition.—they cured at 90 °C for 15 min. • That paper was written in 1999. → 25 years difference. → Technological advancement, Manufacturing methods or quality improvements in Material etc can cause Result different. 
Through this, I learned that [I] must check not only the Result of paper, but also various conditions and factors that may affect the Results.” S14 reflected similarly on such disagreements, stating: “It was super interesting. (…) I realised every experiment is different. Just because my values are different from the values of another person it does not necessarily mean that my or their values are wrong. It is important to check if the same protocol, materials, substances, etc. (also person) were used. All these factors influence the results. But it is important not to just do stuff, but think about it first. Could it make sense? What is the expected outcome? Why is my outcome different from my expectation? All these questions helped me understand a scientific approach better.” These reflections, along with many other diary entries presented so far in the discussion of related NOS aspects, indicate a developing understanding that conflicting results are not necessarily errors to be corrected, but outcomes to be interpreted, shaped by materials, methods, time, and context. Such recognition aligns with the tentative aspect of NOS, the idea that scientific knowledge evolves not because it is weak, but because details and explanations are refined and revised as knowledge grows. This recognition was further expanded by some of the responses given to the open-ended survey question which explored whether students perceived scientific facts as changeable (P1-7). Pre-DtFL, S09 answered this survey question as: “Yes it does, because science always goes forward and new/better research methods get implemented, e.g., the key-lock principle of an enzyme-substrate reaction is now found not to be completely correct.” Post-DtFL, their answer was revised to: “Yes it can, because science moves and nearly every day new observations are made. 
I think some facts, like “the earth is moving” will not change in general but more detailed information will be found and discussed to get a even better understanding of our life.” Similarly, another pre-DtFL answer (S12) of “No, only the explanation for it could change or it could also apply to other parts.” was revised to “Still no, but in a certain way yes → more the surrounding information.” These answers reflect a developing recognition of the durability of scientific knowledge while simultaneously remaining open to revision.

The fact that the DtFL did not foster distrust toward science despite students encountering experimental results that frequently failed to match published findings was further supported by the analysis of additional open-ended survey questions. When asked which resources they found trustworthy to base their scientific opinions on (P1-13), students commonly referred to scientific platforms (e.g., PubMed, Google Scholar), and academic journals published by certain publishers (e.g., Nature and Elsevier). Specific media outlets (such as newspapers, scientific magazines, or YouTube channels) and science professionals were also mentioned. The DtFL as an intervention did not seem to change what students deemed as trustworthy sources. Similarly, the semi-quantitative analysis of students’ responses regarding reasons for mismatches between literature and experimental findings (P1-14) showed little change after the DtFL. Human error remained the most frequently mentioned reason, while slight increases were observed for environmental conditions, literature reliability, and deviations from protocols (see Table 2). These changes, while modest, might suggest a growing recognition of the real-world complexity of producing reliable knowledge. We argue that students’ general acceptance of the tentative nature of science throughout the DtFL, instead of interpreting discrepant results as a sign of science’s unreliability, likely connects to their recognition of the non-linear and iterative nature of science (see Section 3.1.2). By encountering failure in a structured and reflective way, students seem to reframe disagreement and revision not as signs that science is broken, but as inherent elements of it.

Table 2.

Reasons mentioned for mismatches between literature and experimental findings, pre- and post-DtFL, as recorded in question P1-14. All mentions were counted, even if several were cited by the same student in different forms. When categorizing student responses, statements that could reasonably fit into multiple categories were counted in all relevant categories. For instance, the statement “Mimicked the test badly” could imply both human error during experimental execution and deviations from the protocols used (whether intentional, for example in order to save time, or unintentional).

3.1.7. NOS Aspect: Science Is Limited in Its Ability to Answer All Questions

Science provides powerful tools for systematically investigating the natural world through empirical evidence, but it is also inherently limited. Scientific inquiry can only address questions that are empirically testable; it cannot resolve moral, ethical, or aesthetic questions. Furthermore, it is constrained by the methods, tools and assumptions of its time, and cannot offer certainty that holds true in all places for all times. Recognizing these limitations is key to developing a realistic view of what science can, and cannot, achieve. During the DtFL, multiple student reflections, some of which were discussed above in relation to other NOS aspects, hinted at an emerging awareness of such limits. However, since the initial implementation of the DtFL was centered on experimental investigations, these reflections mostly focused on practical and methodological limits, rather than the broader question of what kinds of questions science is able, or unable, to address. For example, S08 commented on the limits of science in relation to the complexity of method selection and reflected on how their view of scientific work had expanded: “Maybe I’ve never thought about it, but before that, we have always only learned methods, but never really been introduced to the lab part of a research field. That is so much more than methods. (…) Learning a few ways to reach the goal is important, but there are thousands out there. 
It is also important to understand that there are a thousand ways, that many are good, some are worse and how to decide what could be a good way, when to change the approach and in general how to deal with corne[r]s and dead ends in the research paths.” This reflection highlights not only methodological diversity and limitations, but also the lack of a singular pathway in scientific problem solving (see Section 3.1.2), and acknowledges the necessity of human judgement in the form of strategic decision-making in order to choose suitable methods for investigations (see Section 3.1.4). S02 reflected on the limits of science in relation to the time and expertise required for investigations, recognizing the effort it takes to design and carry out investigations: “You have to become an expert of the topic to clarify all the hypothesis.” This statement points to an important practical limit: scientific progress depends on people’s time, training, and access to knowledge. At the same time, it suggests a naive view that with enough expertise, all uncertainties can be resolved. In this way, this quote can be interpreted as a partial understanding of science’s limits, as it acknowledges practical constraints such as time, knowledge and training, but does not fully grapple with the fact that some questions may remain open not because we lack knowledge, but because there are limitations to what can be demonstrated or fully explained. A deeper level of reflection was shown by S05 who reconsidered the difference between a scientific hypothesis and a scientific fact (P1-8). Their pre-DtFL response to this question reflected a common idea shared by most students: “yes, I think there is a difference. 
A hypothesis implies that there is more research that needs to be done to proof it right or wrong, a fact is already proven to be right with scientific background.” Post-DtFL, this response was revised to: “actually, maybe there isn’t really a difference because how much research and how many “opinions” is needed to be sure enough to declare a hypothesis as a “fact”? but there might still be a difference in definitions (that I don’t know)” Here, S05 questions the degree of certainty required to declare a piece of scientific information a “fact”. This line of thinking shows a developing understanding that science does not deal with absolute certainty, and hints at the problem of induction. Taken together, these reflections suggest that through their experience with unexpected results, conflicting data, and the need to make interpretive choices, some students began to engage with the practical and methodological limits of science.

3.2. RQ2: What Are Students’ Perceptions of the DtFL Format?

In this section, we examine student responses to the format of the DtFL via qualitative analysis of open-ended survey responses and student diary entries. Our analysis revealed a consistently positive reception of the structure and teaching approach of the DtFL. Students described their experience as reflective of authentic scientific practice and reported a sense of agency in conducting their own research. Despite repeated failure, students found the course highly engaging, with many students attributing their positive outlook to the supportive and attentive learning environment fostered throughout the course. The DtFL was compared favorably to traditional laboratory courses and was nominated by course participants for the “TU Teaching Awards 2024” of Technische Universität Braunschweig in the category of “Best Exercise/Best Laboratory Module”. It was subsequently granted this award by a student selection committee.

3.2.1. How Was the DtFL Perceived as a Research Experience?

One of the core design goals of the DtFL was to offer students a learning experience that emulates authentic scientific practices. Students were encouraged to come up with their own hypotheses and consider how to test them within a defined topic area, resembling an “open inquiry” format (Bell et al., 2005). In practice, however, their freedom to explore was necessarily limited, as instructors could not feasibly anticipate or support every possible idea or direction students might pursue within the constraints of a short-format course. Maintaining instructor control while providing students the feeling that they were guiding the research process required careful planning in terms of how the experiments were set up, where failure occurred, and how reflection was scaffolded. Despite this challenge, student reflections showed that the course was widely perceived as an authentic and meaningful representation of scientific practice. S02 wrote: “Lab gave a really good short introduction into the daily routine of scientific work. [It] shows the problems/challenges to deal with in scientific fields.” This perception was also supported by survey responses to the question P1-11, which asked whether students had been introduced to the topic of the scientific method or the process of scientific inquiry during their bachelor’s or master’s studies. Four students actively associated their learning experiences in the DtFL with the process of scientific inquiry. S01 explicitly answered that, despite being theoretically introduced to the process of scientific inquiry multiple times, the DtFL was the first instance in which they consciously applied it, which emphasizes that the DtFL helped students bridge the gap between the notion of scientific inquiry and actual scientific practice. Furthermore, despite its design limitations, the DtFL was successful in leading students to think that they had influence over the direction of their work. 
Many reflected positively on the opportunity to contribute ideas and make decisions, indicating a sense of ownership. S04 noted: “I really liked the design of the fachlabor, with the ‘selfmade’ research and thinking about the hypotheses.”, where “Fachlabor” refers to a laboratory course in the German education system. Similarly, S03 wrote: “I really enjoyed the lab! I think it was pretty cool to come up with solutions by our own and discuss ideas.” These sentiments were deepened when comparing the DtFL to other laboratory courses. S14 reflected: “I have never had a lab session before, where we kind of had to figure out what to do ourselves, and I thought that was great, because it was unexpected and I could influence it. I liked that.” S09 wrote: “I liked the idea to do research on our own to prepare the experiments we are suppost to do → it’s more like in real science than in other lab courses w[h]ere we just get the theory and the plan for the experiments and no one asks you what you would do.”

Importantly, the use of guided discussion sessions between experiments, designed to support students’ reflection and sense-making, did not seem to diminish students’ perception of authenticity, or their sense of influence over the experimental processes. On the contrary, students repeatedly mentioned these sessions as highly valued, and as a distinguishing feature compared to other laboratory courses. S02 remarked: “Also the discussions before/between the experiments are really good for understanding what we are doing next (in some lab I have no clue what we are doing before the experiment starts). [The discussions] helped a lot for understanding the things in practice.” S05 wrote: “I liked that we alternated between the lab and the seminar room to talk about why something didn’t work out and research what we could change the next time.” Discussion sessions also seemed to deepen students’ grasp of scientific practice, as S07 put it: “I appriate that every time we discuss how to solve a problem. And that’s new for me, thinking what if my results are different with literature.” S04 even linked what they learned from these reflective discussions to the broader aims of science education (see Section 3.2.2): “I love the Fachlabor so far! It is so much more thoroughly thought through. There is actually some thought behind it and it shows. Until now, I never had another lab course, where you had to discuss and research (kind of) on your own. Usually, one gets handed a lab script, one learns it and then you will do these experiments. Often, most people don’t understand, why some of these things in the lab courses are performed as they are, you simply need to do it, no matter what. So I really appreciate this new approach of the Fachlabor being more open and discussion based, because that’s what engineering is about in the end, right? Not just doing things but knowing why and making plans for it.”

Even the later revelation that the course had been built on experiments intentionally designed to fail did not weaken students’ perception of the course’s authenticity. In fact, five students reported that they found the course even more meaningful in retrospect. For example, S09 wrote: “Also now that I know this course was design to fail I even liked it more. Until now, most of the lab courses I did failed in one or another way and then we just got data generated by other groups to do the analysis of the results what I think is super stupid. I think to discuss what could went wrong and what could we do to make it better is more helpfull to get an insight in how science really works. → I would really recommend this course.” Taken together, these reflections suggest that the DtFL successfully created an experience students perceived as authentic and engaging. Although students were not given complete freedom, they experienced their involvement as active and impactful. The balance between instructor guidance and student autonomy, along with the use of structured failure and discussion, appears to have fostered a more realistic and rewarding understanding of scientific work compared to traditional laboratory formats, emphasizing the potential of the DtFL as an instructional method.

3.2.2. How Did Students Perceive the Educational Intent of the DtFL?

In addition to the positive feedback on the DtFL successfully mirroring real-world scientific practice, several students commented on the effort behind the course and its broader educational intent. Their reflections suggest that students recognized the DtFL as more than just a novel laboratory experience; they saw it as a part of a larger educational vision that aimed at equipping them with cognitive skills relevant beyond the laboratory. For example, when reflecting on an experiment that did not yield the expected results, S16 positively emphasized the value of independent problem-solving over receiving immediate answers: “The results were different from ours, so i asked tutor and professor about this, Instead of giving me the answer right away, they let me find the answer myself first. Their decision was absolutely right. (Thank you!) If they had told me the answer right away, I would have moved on to next step, without thinking about it myself. Here is what I overlooked…” This emphasis on independence and scientific problem-solving was also reflected in broader comments about the course design. S03 wrote: “I went into the lab without having any expectations. When I first opened the folder with an academic paper in it, I was kind of shocked. Despite the initial shock, I love the approach! I can see you really wanted to teach us something greater than BioMEMS, a scientific approach to problem solving. (…) For you guys to spend an entire week (not even counting the hours of preparation) is pretty cool!:) (…) Thank you for going out of your way to provide us with this learning experience!” S06 echoed the importance of being challenged to think independently: “And [I liked] also the idea of not giving us sollutions so we need to come up with ideas. 
I like the idea of not thinking just about your lecture but to look further and also want to make a difference in the normal day-to-day life of us.” These statements suggest that students appreciate and seek instructional approaches they perceive as relevant to their daily lives. This need for more meaningful and transformative learning experiences was also articulated in statements such as: “In the beginning of the Fachlabor I was a bit confused about what and how were doing → but in the end I liked your approach very much → I love the ambition you have to change things, to try to find ways to improve the things that don’t work in our system → please keep that, that very valuable!” (S13), and “I really appreciate the effort that was put into this Fachlabor and genuinely think it should be a mandatory course in MINT-based bachelors.” (S04). Here, the term “MINT” refers to “Mathematik, Informatik, Naturwissenschaft, Technik”, the German equivalent of the English-language acronym “STEM”. Collectively, these reflections illustrate that students perceived the DtFL not merely as a course designed to teach scientific content, but as a valued effort to cultivate skills and ways of thinking that could make a lasting difference in how they engage with science.

3.2.3. What Was Students’ Emotional and Cognitive Response to Failure During the DtFL?

The goal of the DtFL was to use failure as a catalyst for strong emotional and cognitive engagement, with the purpose of fostering higher-order cognitive skills and developing students’ understanding of the broader aspects of NOS. Among the three experiments carried out during the DtFL, the first and second experiments (see Section 2.3) appeared to elicit the highest levels of engagement, as supported by learning diaries and instructors’ observations during the course. The first failure point, unmolding PDMS body devices from 3D-printed molds, stood out as a particularly engaging learning activity. Students frequently described this activity as frustrating, yet fun, motivating, and thought-provoking. S08 wrote: “The device wasn’t getting out of the mold that easily. Actually we havn’t even (yet) been able to get it out. However, it is quite some fun and I would like to try some more.” S16 reflected on how the unexpected failure led them to question their assumptions: “We received the mold from Tutor and worked on removing the cured PDMS from mold. However, no matter how hard we tried for several ten of minutes the PDMS could not be removed from the mold. There was nothing specifialy written about this in protocol. Ok. then why. I though[t] this was common.” Social dynamics also added to the engagement. When they failed to successfully unmold the devices, some students named their groups “Team Failure” or “Team Disappointment”, while others asked about other groups’ performances with an intention of comparison, or rated their own group’s performance as “the worst”. While these reflections were clearly grounded in frustration, they were framed in a humorous and playful manner, suggesting that the course environment allowed students to experience failure without being demoralized (see Section 3.2.4). In retrospect, six students also reflected on the educational value of the frustration they experienced. 
S03 wrote: “Looking back one thing that frustrated [me] was the time we could have saved by not going through the process of trying to take the PDMS without any release agent. However, I do understand why you wanted to teach us the frustration of scientific work and how you can overcome this by accurately analyzing the process and research.” S13 echoed this sentiment: “Realization, that there was no way to get the PDMS out of the mold made me feel very frustrated → beforehand we put a lot of ambition in it → but afterall it was very educational (but still frustrating)”. Beyond the educational value of frustration, these sentiments also demonstrate how low perseverance and frustration tolerance are for some students: the PDMS unmolding activity lasted no more than 30 min, yet it was sufficient to evoke strong reactions. One could argue that traditional, ready-made laboratory courses contribute to a culture of low resilience and that incorporating failure into education could cultivate grit. For some students, discussion sessions of similar duration elicited similar responses, as S14 wrote: “Sometimes I felt like we were running in circles. One person had an idea, then the discussion question was rephrased, but in the end we came to the same conclusion, the beginning idea. It felt like losing time, even though I know it is important to consider all points and identify their importance.” These reflections indicate that students largely leave laboratory courses with unrealistic expectations about the linearity and efficiency of the scientific process, underestimating the extent of iteration, discussion, and uncertainty in authentic research practice.

In contrast to the first two experiments, experiment three, in which students tested their initial hypotheses related to cell stretching (see Section 2.3), elicited noticeably less emotional and cognitive engagement. Student entries on this experiment were largely descriptive, with few instances of critical discussion or personal reflection. We hypothesize that this diminished engagement can be attributed to two main factors. First, experiment three involved significantly less hands-on work due to practical limitations, relying more heavily on literature interpretation and instructor-supplied data. Second, the complexity of data and expertise required for their interpretation may have obscured the element of failure, making it less recognizable and emotionally resonant. Based on these observations, we suggest that active hands-on participation and the immediate recognizability of failure are key drivers of emotional and cognitive engagement in failure-based learning environments like the DtFL. Importantly, while failure itself should be recognizable to students, its deliberate integration into the course structure should be concealed to preserve authenticity (see Section 3.2.1). In its first-time implementation, the DtFL largely achieved this balance: 15 out of 16 students did not realize that the experiments were designed to fail and found the reveal both surprising and valuable. S06 wrote: “I really, really liked the fachlabor because it was so much more fun and a lot different to all other lab courses I’ve had before. 
I think the idea of not letting us know, what could go wrong and just letting us experience it is really good because there’s a lot more thinking of why this happened and how to improve it.” Two students even humorously commented on the “acting skills” of the instructors, indirectly highlighting that the success of the DtFL depends not only on the design of experiments, but also on the instructors’ ability to convincingly guide and stage the experience. Only one student (S01) indicated that the failure design had become evident to them during the course: “I wasn’t impressed about it being a study/research project because the teaching method of failure became evident on the first day.”

An equally important goal of the DtFL was to help students establish a constructive relationship with failure, regardless of their initial response toward it. To support this, we designed each failure point to arise independently of student error, hypothesizing that this would help students decouple failure from personal inadequacy, and instead reframe it as an inherent feature of the scientific process. At the same time, we incorporated reflection on personal error-making into the discussion sessions to ensure a realistic portrayal of scientific work. This approach appeared to be effective, and students frequently framed their experiences with failure in constructive terms. S10 wrote: “Making us face failure and frustration was a good idea.” S12 reflected: “The idea of making ‘mistakes’ is great.” Even students who reported not feeling strong negative emotions toward failure still described the course as impactful, as S08 wrote: “Dear lab diary, I must admit that I neither feel like a disappointment, nor a failure… well maybe regarding my writing skills… However, that was great. I think I’m not that bad at scientific thinking, but I’m no where near I would like to. This course helped me for sure.” Overall, these reflections indicate that the DtFL was successful in its purpose of utilizing failure as a tool for fostering emotional and cognitive engagement with scientific inquiry, helping students reflect on aspects of NOS (see Section 3.1), and supporting them in establishing a more constructive relationship with failure.

3.2.4. How Did Students Perceive the Learning Environment of the DtFL?