Urban–Remote Disparities in Taiwanese Eighth-Grade Students’ Science Performance in Matter-Related Domains: Mixed-Methods Evidence from TIMSS 2019

Abstract

1. Introduction

- (1) Which TIMSS 2019 Grade 8 science items exhibit DIF that disadvantages students in remote areas of Taiwan, and what are the shared characteristics (e.g., item type, cognitive domain, content area) of these items?

- (2) What cognitive, experiential, or contextual factors explain the DIF patterns observed, particularly within the content domains where these patterns are most prevalent?

2. Literature Review

2.1. Urban–Remote Disparities in Science Education

2.2. DIF in Assessments and Science Education

2.3. Mixed-Methods Approaches in DIF Research

2.4. Repertory Grid Technique

2.5. Difficulties in Learning Matter-Related Concepts Among Middle School Students

2.6. Research Purposes

3. Materials and Methods

3.1. Quantitative Phase

3.1.1. Data Source

3.1.2. Quantitative Data Analysis

3.2. Qualitative Phase

3.2.1. Participants

3.2.2. Procedures

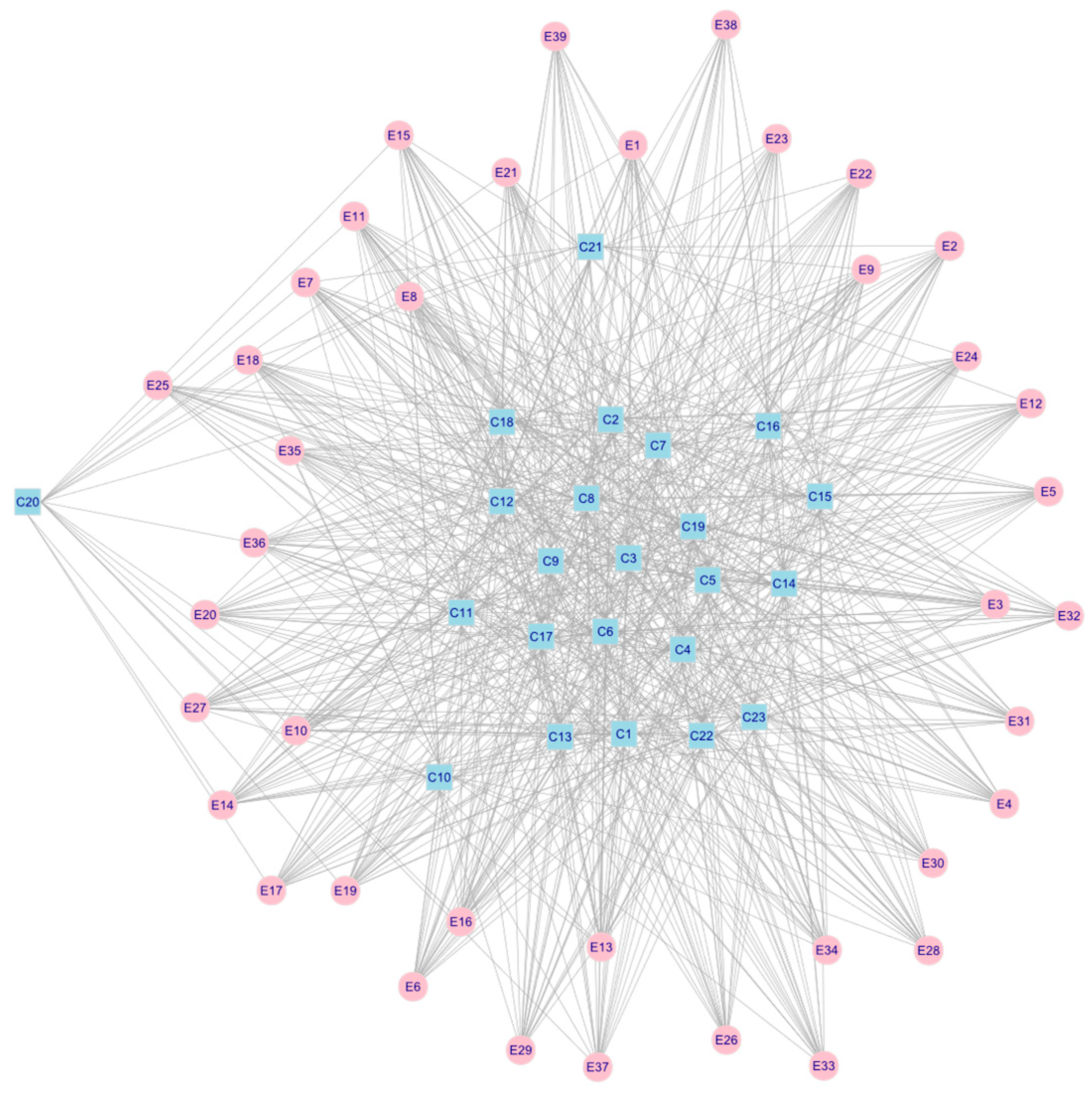

- Card Sorting: This stage aimed to identify students’ initial conceptual frameworks by presenting 39 cards featuring matter-related terms (listed as E1–E39 in Appendix A). In each iteration, three cards were randomly selected; students identified the two that were more similar or related and explained both their rationale for the grouping and how it contrasted with the third card, thereby eliciting initial constructs through triadic comparison. Two science education experts and two junior high school science teachers then categorized and synthesized all classification rationales to derive the cognitive constructs underlying students’ understanding of the 39 terms, providing a foundation for subsequent analysis.

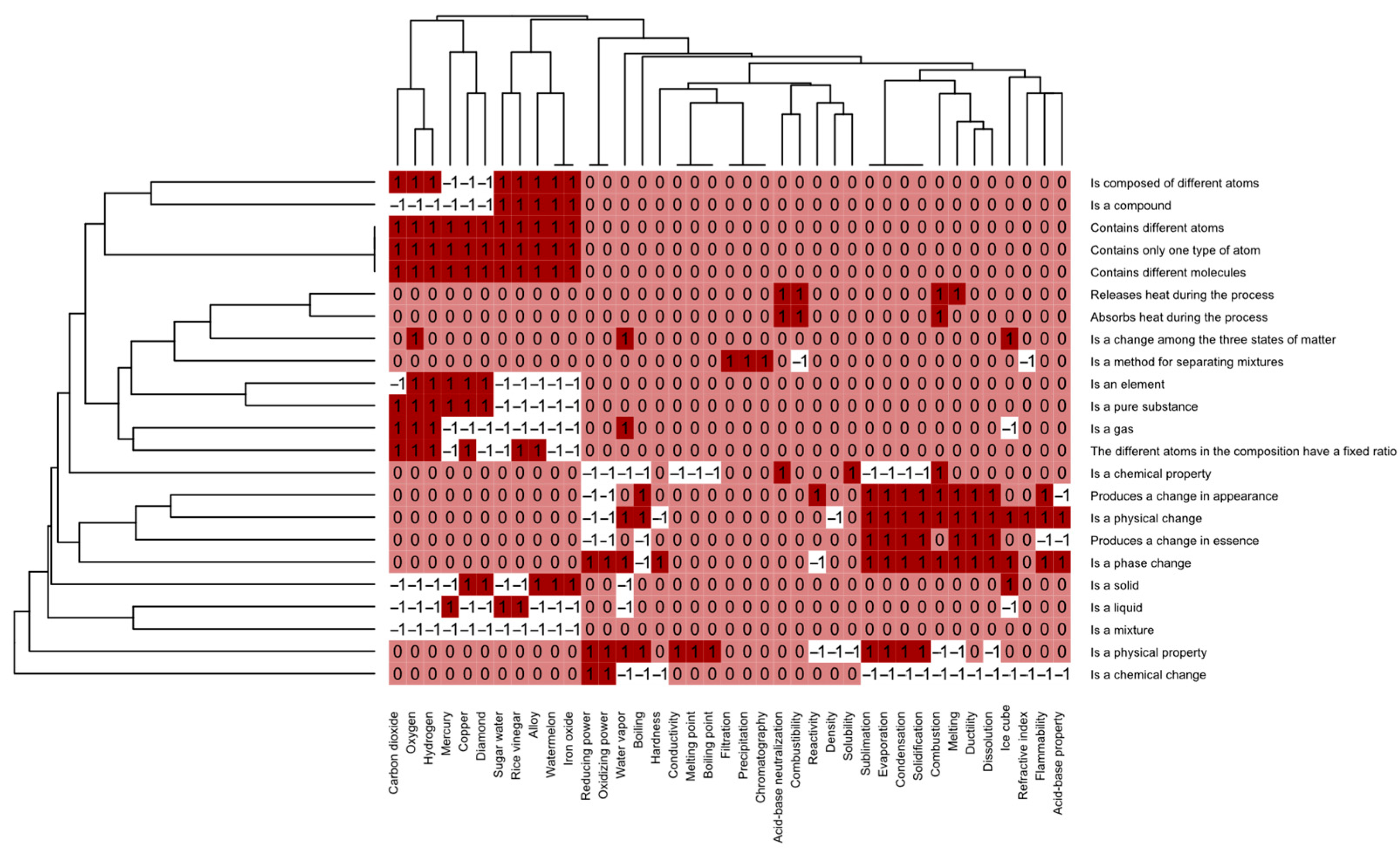

- Kelly Grid Technique: This stage sought to formalize the card-sorting results and probe students’ cognitive structures in more depth, drawing on Kelly’s RGT (Kelly, 1991), by (a) presenting elements derived from card sorting (E1–E39 in Appendix A) and eliciting bipolar constructs via triadic comparison (e.g., “These two are compounds” vs. “That one is a mixture”); (b) constructing a grid with the 39 predefined elements as rows and, as columns, the student-generated constructs used to explain their card-sorting results; (c) rating each element–construct intersection on a trichotomous scale (1 = match, 0 = irrelevant, −1 = mismatch); and (d) debriefing students in brief interviews to verify their ratings and better understand their reasoning, ensuring the accuracy of the grid data.

- Teacher Interviews: This stage aimed to validate the repertory grid findings and enhance triangulation by conducting semi-structured interviews with the two science teachers from the participating schools. These interviews confirmed our interpretations of the repertory grid analysis results, addressing potential biases in student responses and strengthening the reliability of the cognitive structural mappings across urban and remote groups.
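The elicitation and rating steps described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the study’s protocol: the helper names (`draw_triads`, `empty_grid`, `rate`) are hypothetical, triads are assumed to be drawn independently (a card may recur across iterations but never within one triad), and unrated cells are assumed to default to 0 (“irrelevant”).

```python
import random

# Element cards E1-E39 (labels as in Appendix A).
ELEMENTS = [f"E{i}" for i in range(1, 40)]


def draw_triads(elements, n_triads, seed=None):
    """Draw n_triads random triads of three distinct cards.

    Assumption: triads are drawn independently, so a card may appear in
    several iterations but never twice within the same triad.
    """
    rng = random.Random(seed)
    return [tuple(rng.sample(elements, 3)) for _ in range(n_triads)]


def empty_grid(elements, constructs):
    """Elements (rows) x constructs (columns); unrated cells default to 0."""
    return {e: {c: 0 for c in constructs} for e in elements}


def rate(grid, element, construct, value):
    """Record a trichotomous rating: 1 = match, 0 = irrelevant, -1 = mismatch."""
    if value not in (-1, 0, 1):
        raise ValueError("rating must be -1, 0, or 1")
    grid[element][construct] = value


# Usage: one elicitation round and two ratings on a student-generated construct.
triad = draw_triads(ELEMENTS, 1, seed=42)[0]
grid = empty_grid(ELEMENTS, ["is a compound / is a mixture"])
rate(grid, "E37", "is a compound / is a mixture", 1)   # E37 (iron oxide) matches
rate(grid, "E33", "is a compound / is a mixture", -1)  # E33 (watermelon) does not match
```

Storing the grid as nested dictionaries keeps element and construct labels attached to every rating; converting it to a numeric array is only needed for the matrix analyses that follow.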

3.2.3. Qualitative Data Analysis

1. Individual Analysis

- Data Matrix and Preprocessing: This step organized the raw data into a rating matrix (elements × constructs) on the trichotomous scale, treating elements as observations and constructs (derived from students’ card-sorting rationales and aligned with an expert reference framework) as variables, thereby establishing a baseline for cognitive mapping (Slater, 1977).

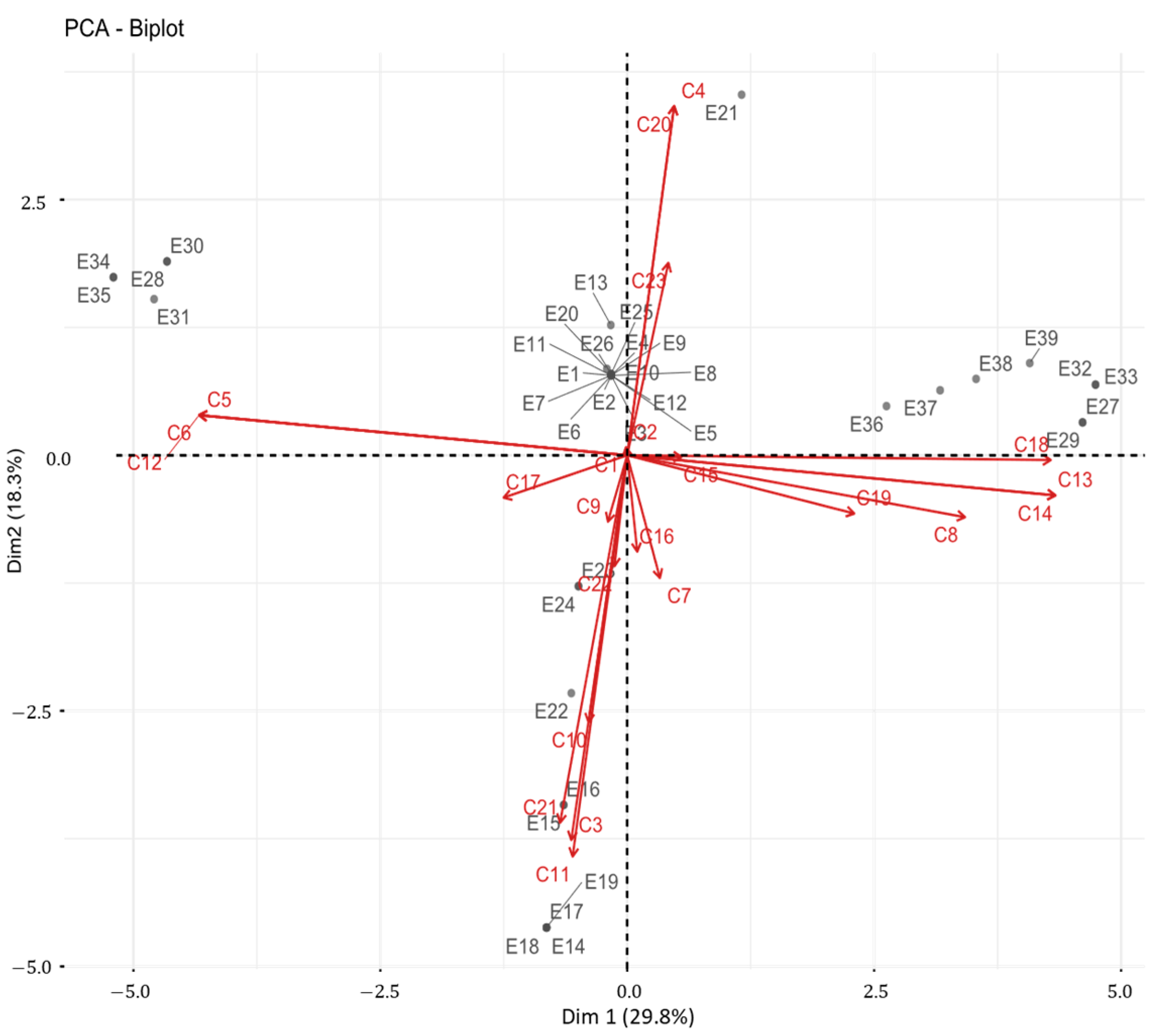

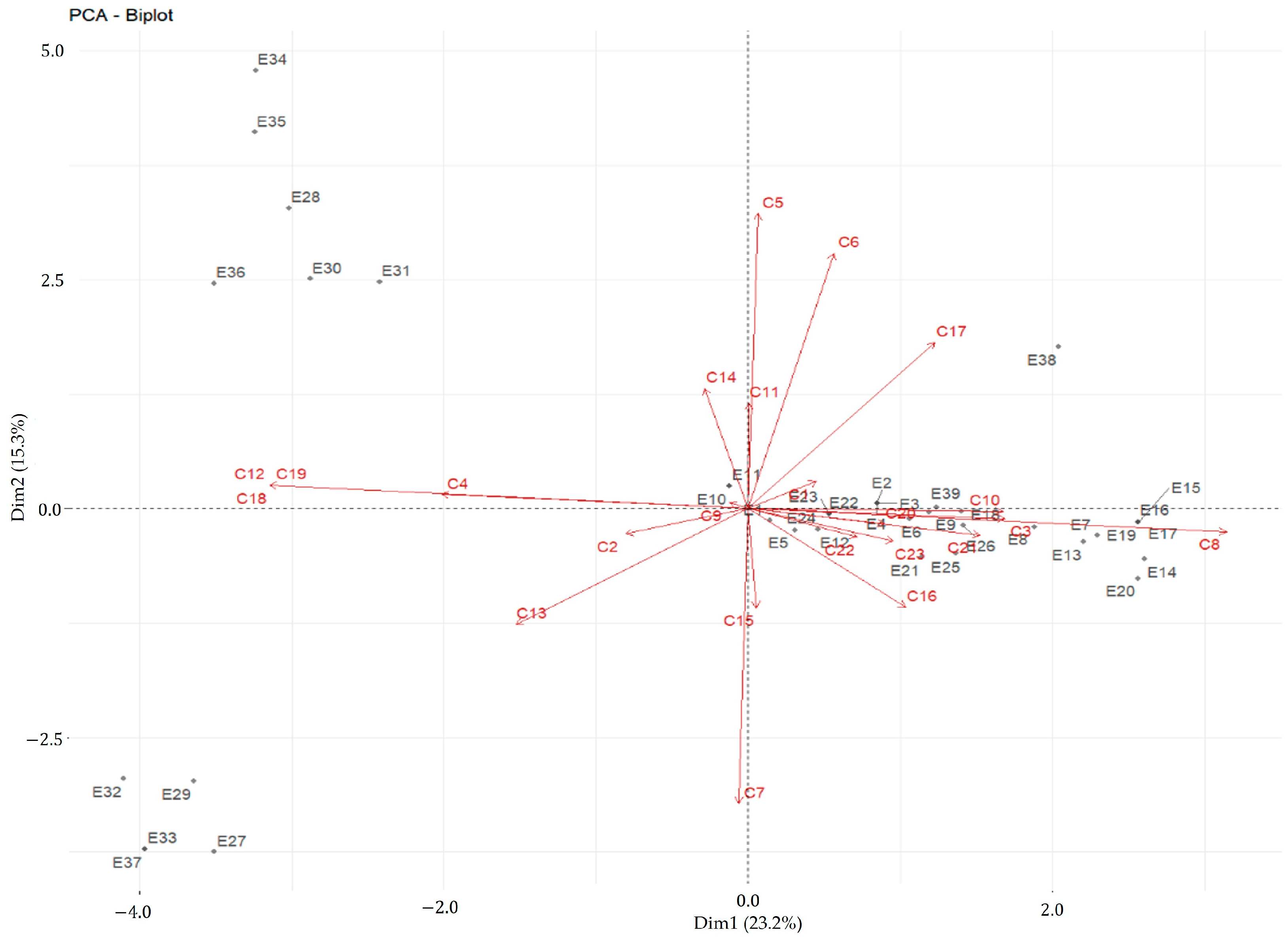

- Dimensionality Reduction: This step was designed to identify and map the cognitive dimensions underlying students’ understanding by applying principal component analysis (PCA) to z-scored constructs and reporting the eigenvalues, explained variance, and biplots of element scores and construct loadings, thereby visualizing correlations and cognitive organization (Fransella et al., 2004). Aggregated group matrices, obtained by averaging individual ratings, enabled PCA comparisons between the urban and remote subgroups to highlight disparities in cognitive frameworks.
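A minimal NumPy sketch of the preprocessing and PCA steps follows, assuming each student’s grid is stored as an elements × constructs array of −1/0/1 ratings. The function names are hypothetical, and PCA is computed here via a singular value decomposition of the z-scored matrix, one standard way to obtain eigenvalues, explained variance, element scores, and construct loadings; biplot scaling conventions in the authors’ software may differ.

```python
import numpy as np


def zscore_columns(X):
    """Z-score each construct (column). Constant columns become all zeros."""
    X = np.asarray(X, dtype=float)
    sd = X.std(axis=0, ddof=1)
    sd[sd == 0] = 1.0  # a construct rated identically for every element carries no variance
    return (X - X.mean(axis=0)) / sd


def grid_pca(X):
    """PCA of an elements x constructs rating matrix via SVD of the z-scored data.

    Returns eigenvalues, proportions of explained variance, element scores
    (biplot points) and construct loadings (biplot arrows).
    """
    Z = zscore_columns(X)
    n = Z.shape[0]
    U, s, Vt = np.linalg.svd(Z, full_matrices=False)
    eigenvalues = s ** 2 / (n - 1)          # eigenvalues of the construct correlation matrix
    explained = eigenvalues / eigenvalues.sum()
    scores = U * s                          # element coordinates on each component
    loadings = Vt.T * np.sqrt(eigenvalues)  # construct-component correlations
    return eigenvalues, explained, scores, loadings


def group_matrix(grids):
    """Average several students' grids (same element and construct order)."""
    return np.mean(np.stack([np.asarray(g, dtype=float) for g in grids]), axis=0)


# Usage with a random 39 x 23 trichotomous grid (placeholder data, not study data).
rng = np.random.default_rng(0)
X = rng.integers(-1, 2, size=(39, 23))
eigenvalues, explained, scores, loadings = grid_pca(X)
```

Because the constructs are z-scored, the eigenvalues sum to the number of non-constant constructs, so a component with an eigenvalue above 1 captures more variance than any single construct. The same `grid_pca` call applies unchanged to a group matrix produced by `group_matrix`.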

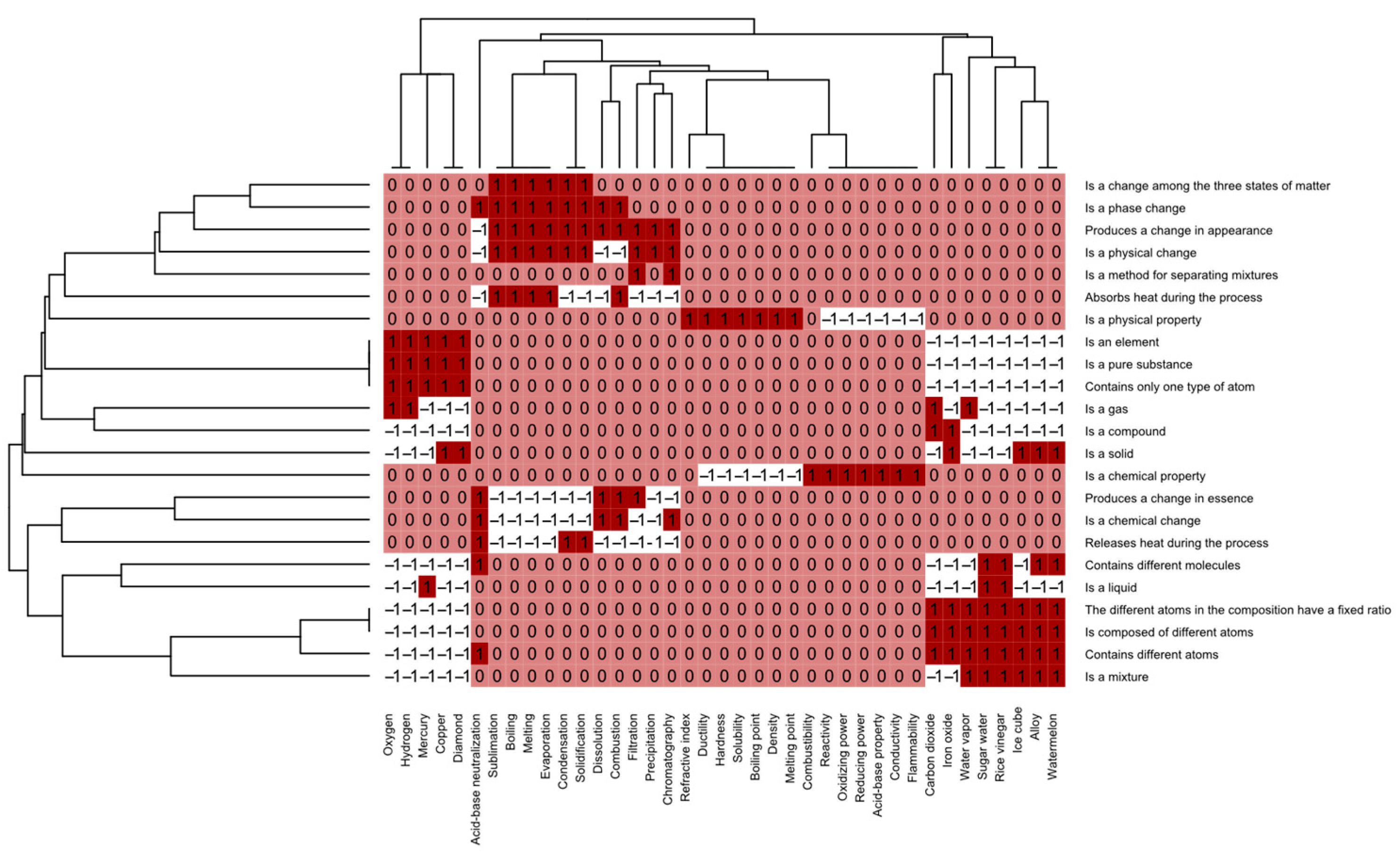

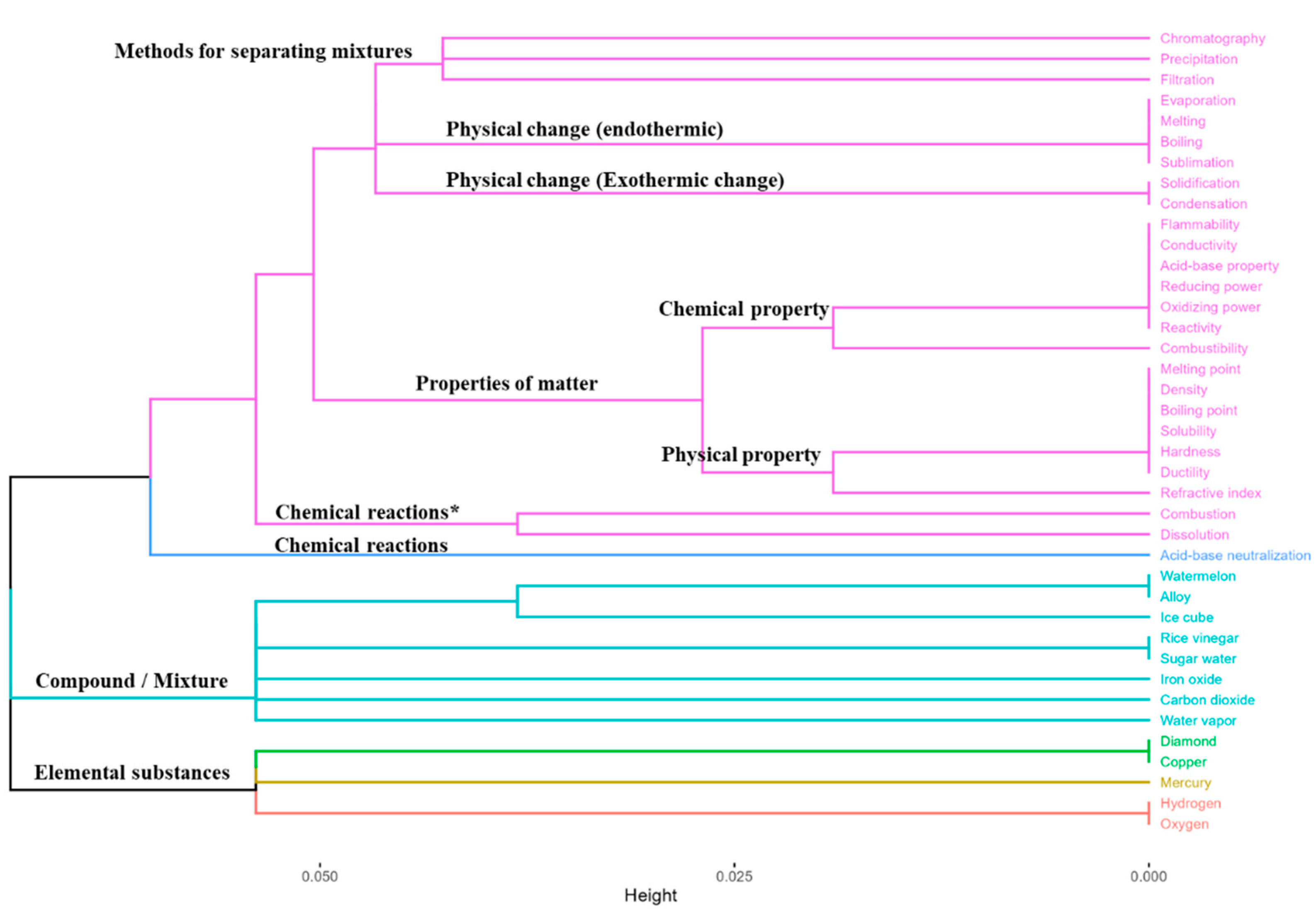

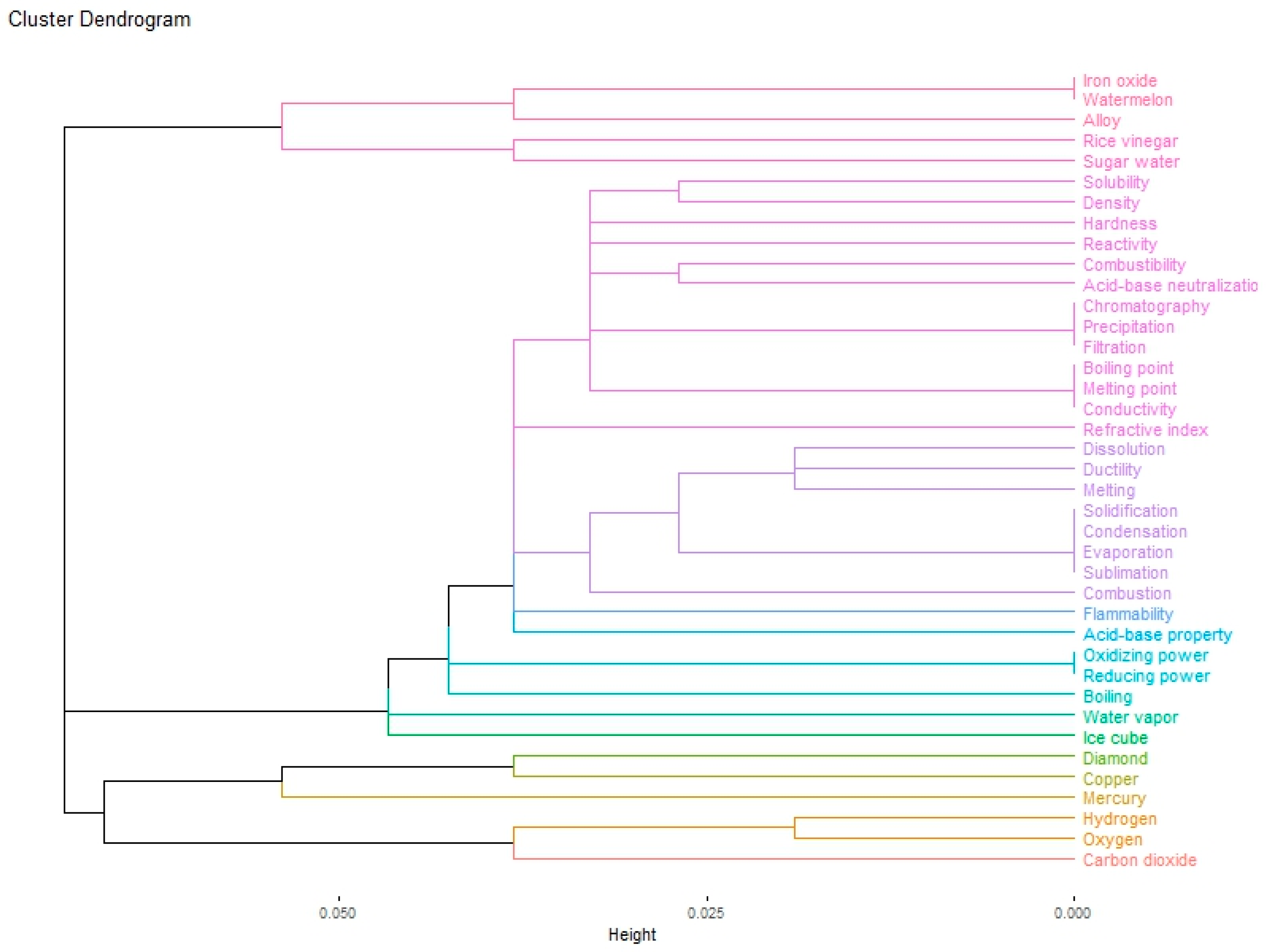

- Hierarchical Clustering and Seriation: We grouped related concepts by applying agglomerative hierarchical clustering (Ward’s linkage) to one-mode similarities (Pearson correlations converted to distances), producing dendrograms and seriated heatmaps that reveal coherent conceptual blocks; robustness checks with alternative linkages and bootstrapping were used to assess cluster stability (Jankowicz, 2004).
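The clustering and seriation step can be sketched without specialized libraries. The sketch assumes that correlations are converted to distances as d = 1 − r (the exact conversion is not stated above) and implements Ward’s linkage through the Lance–Williams update on squared distances, the form documented for SciPy’s `linkage(method="ward")`. Function names are hypothetical, and a tested library routine would be preferable in practice to this naive O(n³) loop.

```python
import numpy as np


def correlation_distance(X):
    """Pairwise distances d = 1 - r from Pearson correlations between rows of X.

    Assumption: the conversion is d = 1 - r; rows with zero variance get r = 0.
    """
    with np.errstate(invalid="ignore", divide="ignore"):
        r = np.corrcoef(np.asarray(X, dtype=float))
    r = np.nan_to_num(r, nan=0.0)
    np.fill_diagonal(r, 1.0)
    return 1.0 - r


def ward_seriation(D):
    """Naive agglomerative clustering with Ward's linkage.

    Applies the Lance-Williams update to squared distances and returns the merge
    history [(cluster_a, cluster_b, height, size), ...] plus a leaf order that
    can be used to seriate a heat map.
    """
    n = D.shape[0]
    d2 = {(i, j): float(D[i, j]) ** 2 for i in range(n) for j in range(i + 1, n)}
    size = {i: 1 for i in range(n)}
    leaves = {i: [i] for i in range(n)}
    merges, new = [], n
    while len(size) > 1:
        a, b = min(d2, key=d2.get)  # closest pair of active clusters
        dab = d2[(a, b)]
        for k in size:
            if k not in (a, b):
                t = size[a] + size[b] + size[k]
                d2[(k, new)] = ((size[a] + size[k]) * d2[tuple(sorted((a, k)))]
                                + (size[b] + size[k]) * d2[tuple(sorted((b, k)))]
                                - size[k] * dab) / t
        # Drop every pair that involves one of the two merged clusters.
        d2 = {p: v for p, v in d2.items() if a not in p and b not in p}
        size[new] = size.pop(a) + size.pop(b)
        leaves[new] = leaves.pop(a) + leaves.pop(b)
        merges.append((a, b, max(dab, 0.0) ** 0.5, size[new]))
        new += 1
    return merges, leaves[new - 1]


# Usage: seriate the rows (elements) of a small placeholder grid.
X = np.array([[1, 1, 0, -1], [1, 1, 0, -1], [1, 0, 1, -1],
              [-1, -1, 0, 1], [-1, -1, 1, 1]])
merges, order = ward_seriation(correlation_distance(X))
seriated = X[order]  # rows reordered so that clustered elements sit together
```

Concatenating leaf lists at each merge yields an order consistent with the dendrogram, which is what makes the reordered heat map show contiguous conceptual blocks.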

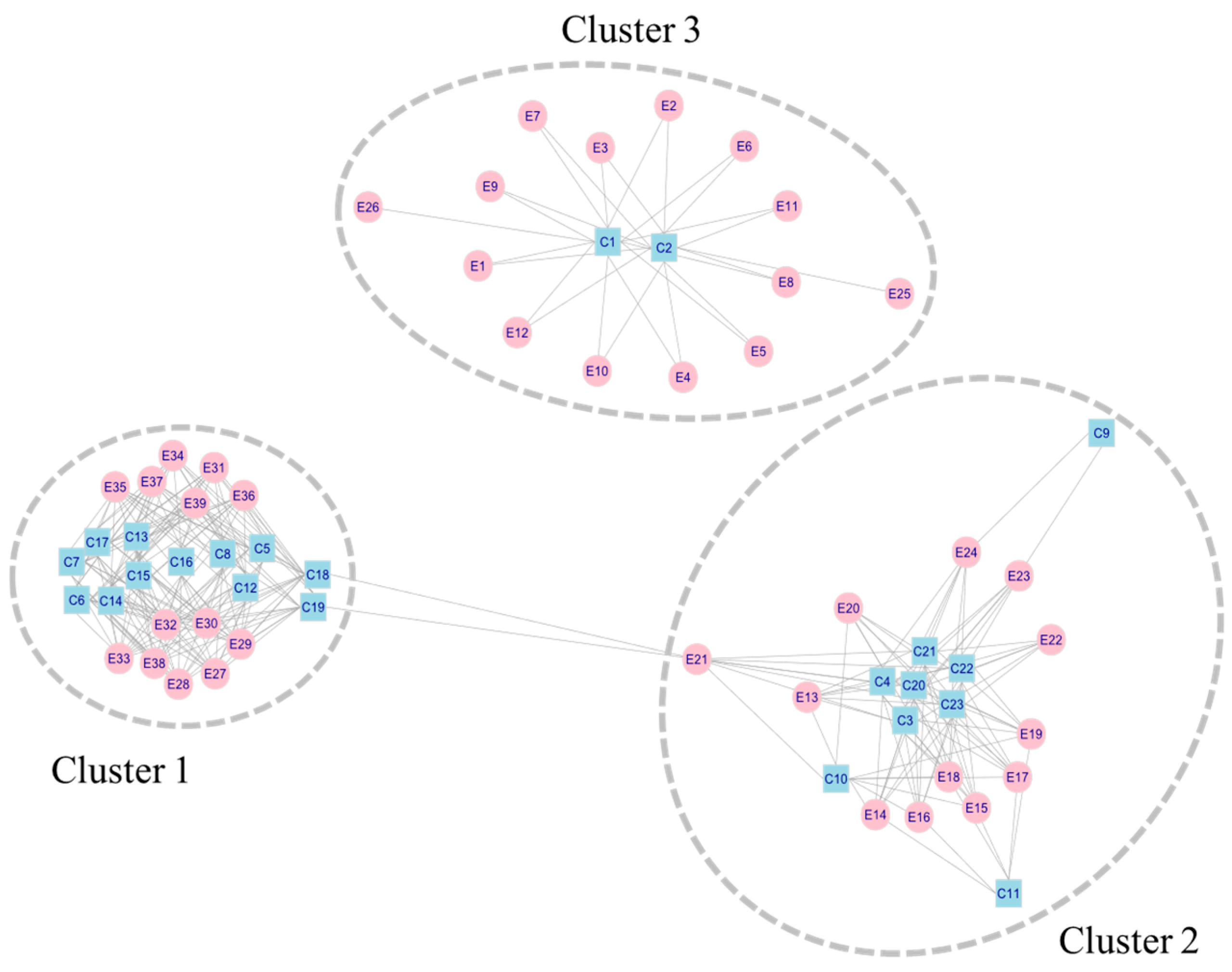

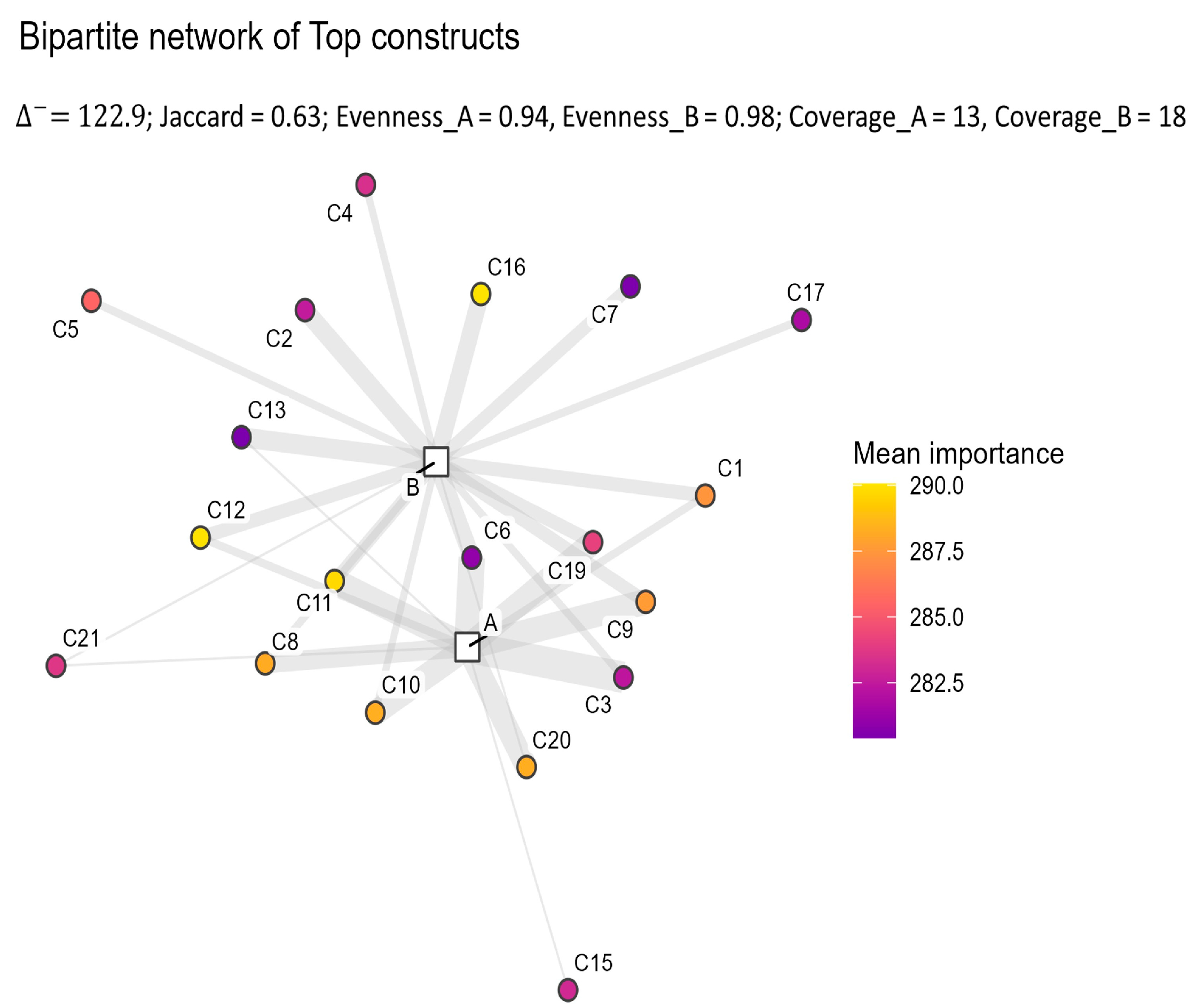

- Bipartite Graphs: To enhance visualization, we applied two-mode clustering, projecting the matrix into weighted adjacency networks for community detection; the resulting bipartite and one-mode graphs depict element–construct clusters, with thresholding used to reduce clutter (Borgatti & Everett, 1997; Csárdi et al., 2025). Convergent validity was examined by triangulating PCA groupings, hierarchical cluster memberships, and bipartite graphs with teacher interviews.
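For the bipartite step, a library-free sketch is given below. It assumes that positive ratings (matches) define element–construct ties, projects them onto a one-mode element network whose edge weights count shared matched constructs, and drops edges below a weight threshold. Connected components of the thresholded network stand in for community detection purely for illustration; the study cites igraph (Csárdi et al., 2025), whose community-detection algorithms are not reproduced here. All function names and the threshold value are hypothetical.

```python
import numpy as np


def bipartite_edges(X, elements, constructs, threshold=1.0):
    """Element-construct ties whose |rating| reaches the threshold (signed weights)."""
    X = np.asarray(X, dtype=float)
    return [(elements[i], constructs[j], float(X[i, j]))
            for i in range(X.shape[0]) for j in range(X.shape[1])
            if abs(X[i, j]) >= threshold]


def element_projection(X, min_weight):
    """One-mode projection onto elements.

    W[i, k] counts constructs that both elements match (rating > 0);
    edges lighter than min_weight are dropped to reduce clutter.
    """
    A = (np.asarray(X, dtype=float) > 0).astype(int)
    W = A @ A.T
    np.fill_diagonal(W, 0)
    W[W < min_weight] = 0
    return W


def connected_components(W):
    """Connected components of the thresholded projection.

    A simple stand-in for community detection, used here only for illustration.
    """
    n, seen, comps = W.shape[0], set(), []
    for start in range(n):
        if start in seen:
            continue
        seen.add(start)
        stack, comp = [start], []
        while stack:
            v = stack.pop()
            comp.append(v)
            for u in np.nonzero(W[v])[0]:
                u = int(u)
                if u not in seen:
                    seen.add(u)
                    stack.append(u)
        comps.append(sorted(comp))
    return comps


# Usage with a 4 x 3 placeholder grid.
X = [[1, 1, 0], [1, 1, -1], [0, 0, 1], [-1, 0, 1]]
W = element_projection(X, min_weight=2)
groups = connected_components(W)
```

Raising `min_weight` removes weak co-membership edges first, which is the clutter-reduction effect that thresholding is meant to achieve.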

2. Group Comparisons

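- Mean |Δ| (Mean Absolute Difference Across Clusters): Let $m_{g,k}$ denote the mean importance of cluster $k$ in group $g \in \{A, B\}$, with $k = 1, \dots, K$. The overall between-group salience difference is $\overline{|\Delta|} = \frac{1}{K} \sum_{k=1}^{K} \left| m_{A,k} - m_{B,k} \right|$. This provides a single magnitude of group difference corresponding to the heat map.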

- Jaccard Overlap of Top Sets: With $T_A$ and $T_B$ as the sets of clusters receiving at least one Top nomination in groups A and B, respectively, the overlap is $J = |T_A \cap T_B| \,/\, |T_A \cup T_B|$, quantifying the shared core of clusters (Manning et al., 2008).

- Evenness (Shannon Evenness of Top Weights): Top weights are normalized to proportions $p_k$ with $\sum_k p_k = 1$. The Shannon entropy $H = -\sum_k p_k \ln p_k$ is converted to evenness $E = H / \ln K$, which is bounded in [0, 1]; higher values indicate a more even spread of attention across clusters (Strong, 2016).

- Coverage (Number of Top Clusters): This is the count of clusters with a non-zero Top weight in each group, indicating the breadth of concepts activated as Tops, which is comparable to two-mode coverage in bipartite networks (Latapy et al., 2008).
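The four group-level indices described above can be computed from per-cluster summaries in a few lines of Python. This sketch assumes that mean importances and Top weights are supplied as dictionaries keyed by cluster label, that a cluster missing from one group counts as 0 importance, and that evenness is normalized by ln K with K the number of clusters passed in; these choices and the function names are illustrative assumptions rather than the authors’ code.

```python
import math


def mean_abs_diff(imp_a, imp_b):
    """Mean absolute difference in cluster importance; a missing cluster counts as 0."""
    clusters = set(imp_a) | set(imp_b)
    return sum(abs(imp_a.get(k, 0.0) - imp_b.get(k, 0.0)) for k in clusters) / len(clusters)


def jaccard(top_a, top_b):
    """Size of the intersection over size of the union of the two Top sets."""
    union = top_a | top_b
    return len(top_a & top_b) / len(union) if union else 1.0  # two empty sets: 1 by convention


def evenness(top_weights, n_clusters):
    """Shannon evenness E = H / ln(K) of normalized Top weights (K = n_clusters)."""
    total = sum(top_weights.values())
    p = [w / total for w in top_weights.values() if w > 0]
    h = -sum(x * math.log(x) for x in p)
    return h / math.log(n_clusters) if n_clusters > 1 else 0.0


def coverage(top_weights):
    """Number of clusters with a non-zero Top weight."""
    return sum(1 for w in top_weights.values() if w > 0)


# Usage with placeholder values (not study data).
urban = {"K1": 0.5, "K2": 0.2}
remote = {"K1": 0.3, "K2": 0.4}
gap = mean_abs_diff(urban, remote)
```

Keeping the four indices separate lets a reader see whether a large salience gap comes with a narrower set of activated clusters (lower coverage) or with attention concentrated on a few of them (lower evenness).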

4. Results

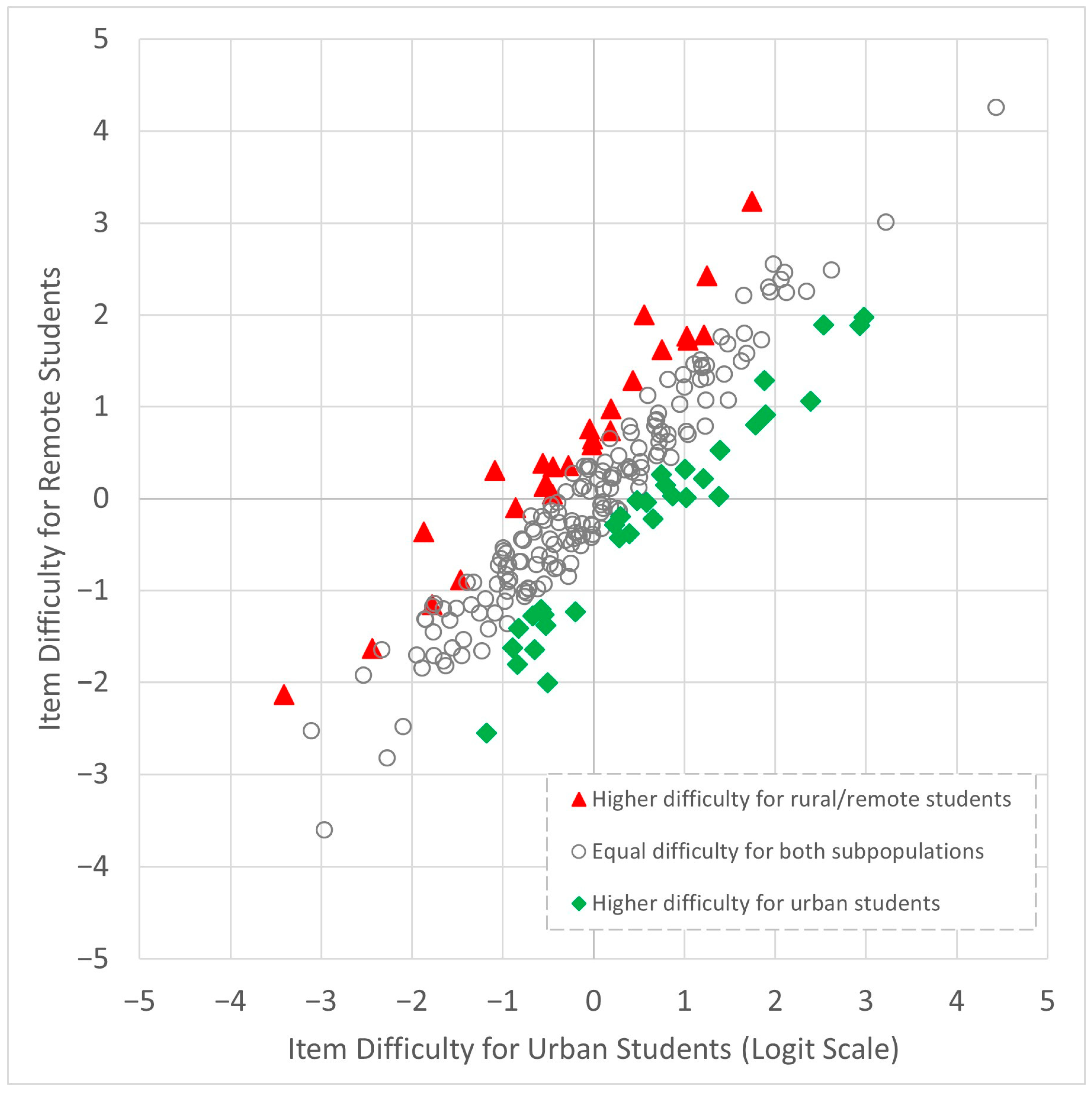

4.1. Findings from the Quantitative Phase

4.2. Findings from the Qualitative Phase

4.2.1. Card Sorting and Kelly Grid

4.2.2. Principal Component Analysis and Clustering on the Rating Matrix

1. Example Analysis of an Urban Student’s Cognitive Structure (S1)

2. Example Analysis of a Remote Student’s Cognitive Structure (S7)

- Fragmentation. Similarity structures derived from the rating matrix yield three broad but dispersed blocks, with branch heights (dissimilarities) remaining high between putative modules. This appears as multiple isolated branches and weak cross-links; for example, iron oxide sits near the mixtures without tight cohesion. The pattern contrasts with the more unified, hierarchical organization seen in the urban profile S1.

- Associative (non-hierarchical) categorization. The cluster content suggests associative linkages driven by surface or sensory cues rather than rule-governed taxonomies. The upper purple block loosely ties mixtures and solutions (e.g., watermelon, alloy, rice vinegar, sugar water) to laboratory properties and separation methods (solubility, density, hardness, reactivity, conductivity, refractive index, dissolution, ductility, filtration, chromatography, precipitation, boiling/melting point), implying a heuristic of “multi-component items identified via properties and separations” rather than composition-first classification. A mid-level blue–green block aggregates processes and changes (melting, solidification, condensation, evaporation, sublimation, combustion) alongside reactivity cues (acid–base, oxidizing/reducing power, flammability), indicating a clean but associative separation of “process” from “property” that is often organized around visible energy or appearance. A bottom yellow–green block groups single or pure substances and states (e.g., ice cube, water vapor, diamond, copper, mercury, hydrogen, oxygen, carbon dioxide), frequently near boiling; this hints at a state/single-substance perspective whose subgroups (e.g., diamond–copper–mercury) resemble contextual affinities rather than superordinate categories in a hierarchy.

- Misconceptions. The structure exposes mixture–compound confusion: iron oxide, for example, is drawn toward alloys and solutions, consistent with a “contains different atoms ⇒ multi-component” rule that overrides criteria such as fixed composition or physical separability (Johnson, 2000). Processes are prioritized over substance identity, with combustion intermingled with phase changes, reflecting sensory-driven errors in distinguishing physical from chemical change (Stavy & Stachel, 1985; Talanquer, 2009). Finally, representational heuristics appear to overshadow particulate reasoning; for example, H₂O forms (ice cube, steam) are regarded as “not pure” because they “contain different atoms”, rather than as the same pure substance across states (Nakhleh et al., 2005).

3. Reappraising RGT and Aggregation

4. Group-Level Comparison: Urban vs. Remote Cognitive Structures

5. Discussion

6. Conclusions

6.1. Implications

6.2. Limitations and Future Suggestions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| DIF | differential item functioning |
| RGT | repertory grid technique |
| IRT | item response theory |
| PCM | partial credit model |
| PCA | principal component analysis |
| UNESCO | United Nations Educational, Scientific and Cultural Organization |
| TIMSS | Trends in International Mathematics and Science Study |

Appendix A

| Elements (E) | Constructs (C) |
|---|---|
| E1: density | C1: is a physical property |
| E2: melting point | C2: is a chemical property |
| E3: boiling point | C3: is a physical change |
| E4: conductivity | C4: is a chemical change |
| E5: solubility | C5: is a pure substance |
| E6: hardness | C6: is an element |
| E7: ductility | C7: is a compound |
| E8: flammability | C8: is a mixture |
| E9: acid-base property | C9: is a method for separating mixtures |
| E10: reducing power | C10: is a phase change |
| E11: oxidizing power | C11: is a change among the three states of matter |
| E12: reactivity | C12: contains only one type of atom |
| E13: dissolution | C13: is composed of different atoms |
| E14: melting | C14: The different atoms in the composition have a fixed ratio |
| E15: condensation | C15: is a solid |
| E16: solidification | C16: is a liquid |
| E17: evaporation | C17: is a gas |
| E18: boiling | C18: contains different atoms |
| E19: sublimation | C19: contains different molecules |
| E20: combustion | C20: produces a change in essence |
| E21: acid-base neutralization | C21: produces a change in appearance |
| E22: precipitation | C22: absorbs heat during the process |
| E23: chromatography | C23: releases heat during the process |
| E24: filtration | |
| E25: combustibility | |
| E26: refractive index | |
| E27: sugar water | |
| E28: copper | |
| E29: rice vinegar | |
| E30: diamond | |
| E31: mercury | |
| E32: alloy | |
| E33: watermelon | |
| E34: oxygen | |
| E35: hydrogen | |
| E36: carbon dioxide | |
| E37: iron oxide | |
| E38: water vapor | |
| E39: ice cube | |

References

- Amini, C., & Nivorozhkin, E. (2015). The urban–rural divide in educational outcomes: Evidence from Russia. International Journal of Educational Development, 44, 118–133.

- Appels, L., De Maeyer, S., & Van Petegem, P. (2024). Re-thinking equity: The need for a multidimensional approach in evaluating educational equity through TIMSS data. Large-Scale Assessments in Education, 12(1), 38.

- Bar, V., & Galili, I. (1994). Stages of children’s views about evaporation. International Journal of Science Education, 16(2), 157–174.

- Benjamini, Y., & Hochberg, Y. (1995). Controlling the false discovery rate: A practical and powerful approach to multiple testing. Journal of the Royal Statistical Society: Series B (Methodological), 57(1), 289–300.

- Berk, R. A. (1982). Handbook of methods for detecting test bias. Johns Hopkins University Press.

- Borgatti, S. P. (2009). Social network analysis, two-mode concepts in. In R. A. Meyers (Ed.), Encyclopedia of complexity and systems science (pp. 8279–8291). Springer.

- Borgatti, S. P., & Everett, M. G. (1997). Network analysis of 2-mode data. Social Networks, 19(3), 243–269.

- Buck, G. A., Chinn, P. W. U., & Upadhyay, B. (2023). Science education in urban and rural contexts: Expanding on conceptual tools for urban-centric research. In N. G. Lederman, D. L. Zeidler, & J. S. Lederman (Eds.), Handbook of research on science education: Volume III (1st ed.). Routledge.

- Cao, G., & Huo, G. (2025). Reassessing urban-rural education disparities: Evidence from England. Educational Studies, 1–20.

- Chai, C.-W., Hung, M.-K., & Lin, T.-C. (2023). The impact of external resources on the development of an indigenous school in rural areas: Taking a junior high school in Taiwan as an example. Heliyon, 9(12), e22073.

- Chang, C.-Y., Lee, C.-D., Lin, P.-J., Chang, M.-Y., Tsao, P.-S., Yang, W.-J., Hsiao, J.-T., & Chang, W.-N. (2021). Taiwan’s mathematics and science education in TIMSS 2019: Executive summary of the national report for Taiwan. Available online: http://www.sec.ntnu.edu.tw/timss2019/downloads/T19TWNexecutive.pdf (accessed on 1 July 2025).

- Chen, Y.-H. (2012). Cognitive diagnosis of mathematics performance between rural and urban students in Taiwan. Assessment in Education: Principles, Policy & Practice, 19(2), 193–209.

- Chi, M. T. H. (2009). Active-Constructive-Interactive: A conceptual framework for differentiating learning activities. Topics in Cognitive Science, 1(1), 73–105.

- Chi, M. T. H., & Wylie, R. (2014). The ICAP framework: Linking cognitive engagement to active learning outcomes. Educational Psychologist, 49(4), 219–243.

- Creswell, J. W., & Plano Clark, V. L. (2017). Designing and conducting mixed methods research (3rd ed.). Sage.

- Csárdi, G., Nepusz, T., Müller, K., Horvát, S., Traag, V., Zanini, F., & Noom, D. (2025). igraph for R: R interface of the igraph library for graph theory and network analysis (v2.1.4). Zenodo.

- Curry, L. A., Nembhard, I. M., & Bradley, E. H. (2009). Qualitative and mixed methods provide unique contributions to outcomes research. Circulation, 119(10), 1442–1452.

- Edmonds, W. A., & Kennedy, T. D. (2017). An applied guide to research designs: Quantitative, qualitative, and mixed methods (2nd ed.). SAGE.

- Enchikova, E., Neves, T., Toledo, C., & Nata, G. (2024). Change in socioeconomic educational equity after 20 years of PISA: A systematic literature review. International Journal of Educational Research Open, 7, 100359.

- Ericsson, K. A., & Simon, H. A. (1984). Protocol analysis: Verbal reports as data. The MIT Press.

- Fishbein, B., Foy, P., & Yin, L. (2021). TIMSS 2019 user guide for the international database (2nd ed.). TIMSS & PIRLS International Study Center, Lynch School of Education and Human Development, Boston College and International Association for the Evaluation of Educational Achievement.

- Fransella, F., Bell, R., & Bannister, D. (2004). A manual for repertory grid technique (2nd ed.). Wiley.

- Gess, C., Wessels, I., & Blömeke, S. (2017). Domain-specificity of research competencies in the social sciences: Evidence from differential item functioning. Journal for Educational Research Online, 9(2), 11–36.

- Gkagkas, V., Petridou, E., & Hatzikraniotis, E. (2025). Attitudes and interest of Greek students towards science. Education Sciences, 15(9), 1171.

- Graham, L. (2024). The grass ceiling: Hidden educational barriers in rural England. Education Sciences, 14(2), 165.

- Grand-Guillaume-Perrenoud, J. A., Geese, F., Uhlmann, K., Blasimann, A., Wagner, F. L., Neubauer, F. B., Huwendiek, S., Hahn, S., & Schmitt, K.-U. (2023). Mixed methods instrument validation: Evaluation procedures for practitioners developed from the validation of the Swiss Instrument for Evaluating Interprofessional Collaboration. BMC Health Services Research, 23(1), 83.

- Hadenfeldt, J. C., Liu, X., & Neumann, K. (2014). Framing students’ progression in understanding matter: A review of previous research. Studies in Science Education, 50(2), 181–208.

- Hammersley, M. (2023). Are there assessment criteria for qualitative findings? A challenge facing mixed methods research. Forum Qualitative Sozialforschung/Forum: Qualitative Social Research, 24(1), 1–15.

- Happs, J. C., & Stead, K. (1989). Using the repertory grid as a complementary probe in eliciting student understanding and attitudes towards science. Research in Science & Technological Education, 7(2), 207–220.

- Hill, P. W., McQuillan, J., Hebets, E. A., Spiegel, A. N., & Diamond, J. (2018). Informal science experiences among urban and rural youth: Exploring differences at the intersections of socioeconomic status, gender and ethnicity. Journal of STEM Outreach, 1(1), 1–12.

- Huang, X. (2024). Research on the formation and evolution mechanism of the urban-rural education gap in China. Academic Journal of Humanities & Social Sciences, 7(7), 152–157.

- Huang, X., Wilson, M., & Wang, L. (2016). Exploring plausible causes of differential item functioning in the PISA science assessment: Language, curriculum or culture. Educational Psychology, 36(2), 378–390.

- Ingersoll, R. M., & Tran, H. (2023). Teacher shortages and turnover in rural schools in the U.S.: An organizational analysis. Educational Administration Quarterly, 59(2), 396–431.

- Jankowicz, D. (2004). The easy guide to repertory grids. Wiley.

- Jen, T.-H., Lee, C.-D., Lo, P.-H., Chang, W.-N., & Chang, C.-Y. (2020). Chinese Taipei. In D. L. Kelly, V. A. S. Centurino, M. O. Martin, & I. V. S. Mullis (Eds.), TIMSS 2019 encyclopedia: Education policy and curriculum in mathematics and science. TIMSS & PIRLS International Study Center, Boston College. Available online: https://timssandpirls.bc.edu/timss2019/encyclopedia/chinese-taipei.html (accessed on 9 June 2025).

- Johnson, P. (2000). Children’s understanding of substances, part 1: Recognizing chemical change. International Journal of Science Education, 22(7), 719–737.

- Johnston, M. P. (2014). Secondary data analysis: A method of which the time has come. Qualitative and Quantitative Methods in Libraries, 3(3), 619–626.

- Joo, S., Ali, U., Robin, F., & Shin, H. J. (2022). Impact of differential item functioning on group score reporting in the context of large-scale assessments. Large-Scale Assessments in Education, 10(1), 18.

- K-12 Education Administration, Ministry of Education. (2021). Standards for classification and recognition of schools in remote areas (Amended 11 March 2021). Ministry of Education, Taiwan. Available online: https://edu.law.moe.gov.tw/LawContent.aspx?id=GL001771 (accessed on 9 July 2025).

- Kelly, G. (1991). The psychology of personal constructs: Volume one: Theory and personality (1st ed.). Routledge.

- Kish, L., & Frankel, M. R. (1974). Inference from complex samples. Journal of the Royal Statistical Society. Series B (Methodological), 36(1), 1–37. Available online: http://www.jstor.org/stable/2984767 (accessed on 9 July 2025).

- Kolb, D. A. (2014). Experiential learning: Experience as the source of learning and development (2nd ed.). Pearson FT Press.

- Kryst, E. L., Kotok, S., & Bodovski, K. (2015). Rural/urban disparities in science achievement in post-socialist countries: The evolving influence of socioeconomic status. A Global View of Rural Education: Issues, Challenges and Solutions Part I, 2(4), 60–77.

- Latapy, M., Magnien, C., & Vecchio, N. D. (2008). Basic notions for the analysis of large two-mode networks. Social Networks, 30(1), 31–48.

- Lin, B.-S., & Crawley, F. E., III. (1987). Classroom climate and science-related attitudes of junior high school students in Taiwan. Journal of Research in Science Teaching, 24(6), 579–591.

- Lin, Y.-R., Hung, C.-Y., & Hung, J.-F. (2017). Exploring teachers’ meta-strategic knowledge of science argumentation teaching with the repertory grid technique. International Journal of Science Education, 39(2), 105–134.

- Liu, R., & Bradley, K. D. (2021). Differential item functioning among English language learners on a large-scale mathematics assessment. Frontiers in Psychology, 12, 657335.

- Manning, C., Raghavan, P., & Schütze, H. (2008). Introduction to information retrieval. Cambridge University Press.

- Masters, G. N. (1982). A Rasch model for partial credit scoring. Psychometrika, 47(2), 149–174.

- McCloughlin, T. (2002). Repertory grid analysis as a form of concept mapping in science education research. Irish Educational Studies, 21(2), 25–32.

- Mullis, I. V. S., Martin, M. O., Foy, P., Kelly, D. L., & Fishbein, B. (2020). TIMSS 2019 international results in mathematics and science. Available online: https://timssandpirls.bc.edu/timss2019/international-results/ (accessed on 12 December 2024).

- Nakhleh, M. B. (1992). Why some students don’t learn chemistry: Chemical misconceptions. Journal of Chemical Education, 69(3), 191.

- Nakhleh, M. B., Samarapungavan, A., & Saglam, Y. (2005). Middle school students’ beliefs about matter. Journal of Research in Science Teaching, 42(5), 581–612.

- Novak, J. D. (1990). Concept mapping: A useful tool for science education. Journal of Research in Science Teaching, 27(10), 937–949.

- Novak, J. D., & Gowin, D. B. (1984). Learning how to learn. Cambridge University Press.

- Nyshchuk, A., Nikolaev, S., & Romanov, O. (2023). Methodology for analyzing bitstreams based on the use of the Damerau–Levenshtein distance and other metrics. Cybernetics and Systems Analysis, 59(6), 919–927.

- Opesemowo, O. A. G. (2025). Exploring undue advantage of differential item functioning in high-stakes assessments: Implications on sustainable development goal 4. Social Sciences & Humanities Open, 11, 101257.

- Osborne, R. J., & Cosgrove, M. M. (1983). Children’s conceptions of the changes of state of water. Journal of Research in Science Teaching, 20(9), 825–838.

- Rhinesmith, E., Anglum, J. C., Park, A., & Burrola, A. (2023). Recruiting and retaining teachers in rural schools: A systematic review of the literature. Peabody Journal of Education, 98(4), 347–363.

- Robitzsch, A., Kiefer, T., & Wu, M. (2024). TAM: Test analysis modules (R package version 4.2-21). Available online: https://cran.r-project.org/web/packages/TAM (accessed on 3 August 2024).

- Rojon, C., McDowall, A., & Saunders, M. N. K. (2019). A novel use of Honey’s aggregation approach to the analysis of repertory grids. Field Methods, 31(2), 150–166.

- Rozenszajn, R., Kavod, G. Z., & Machluf, Y. (2021). What do they really think? The repertory grid technique as an educational research tool for revealing tacit cognitive structures. International Journal of Science Education, 43(6), 906–927.

- Sandilands, D., Oliveri, M. E., Zumbo, B. D., & Ercikan, K. (2013). Investigating sources of differential item functioning in international large-scale assessments using a confirmatory approach. International Journal of Testing, 13(2), 152–174.

- Scheuneman, J. D., & Gerritz, K. (1990). Using differential item functioning procedures to explore sources of item difficulty and group performance characteristics. Journal of Educational Measurement, 27(2), 109–131.

- Slater, P. (1977). The measurement of intrapersonal space by grid technique (Volume 2): Dimensions of intrapersonal space. Wiley.

- Soeharto, S., & Csapó, B. (2021). Evaluating item difficulty patterns for assessing student misconceptions in science across physics, chemistry, and biology concepts. Heliyon, 7(11), e08352.

- Solano-Flores, G., & Nelson-Barber, S. (2001). On the cultural validity of science assessments. Journal of Research in Science Teaching, 38(5), 553–573.

- Stavy, R., & Stachel, D. (1985). Children’s ideas about ‘solid’ and ‘liquid’. European Journal of Science Education, 7(4), 407–421.

- Strong, W. L. (2016). Biased richness and evenness relationships within Shannon–Wiener index values. Ecological Indicators, 67, 703–713.

- Talanquer, V. (2009). On cognitive constraints and learning progressions: The case of “structure of matter”. International Journal of Science Education, 31(15), 2123–2136.

- UNESCO & International Task Force on Teachers for Education 2030. (2024). Global report on teachers: Addressing teacher shortages and transforming the profession. UNESCO.

- Van Vo, D., & Csapó, B. (2021). Development of scientific reasoning test measuring control of variables strategy in physics for high school students: Evidence of validity and latent predictors of item difficulty. International Journal of Science Education, 43(13), 2185–2205.

- von Davier, M., Foy, P., Martin, M. O., & Mullis, I. V. S. (2020). Examining eTIMSS country differences between eTIMSS data and bridge data: A look at country-level mode of administration effects. In M. O. Martin, M. von Davier, & I. V. S. Mullis (Eds.), Methods and procedures: TIMSS 2019 technical report (pp. 13.11–13.24). TIMSS & PIRLS International Study Center, Lynch School of Education, Boston College and International Association for the Evaluation of Educational Achievement.

- Wallwey, C., & Kajfez, R. L. (2023). Quantitative research artifacts as qualitative data collection techniques in a mixed methods research study. Methods in Psychology, 8, 100115.

- Wang, L., Yuan, Y., & Wang, G. (2024). The construction of civil scientific literacy in China from the perspective of science education. Science & Education, 33(1), 249–269.

- Winer, L. R., & Vazquez-Abad, J. (1997). Repertory grid technique in the diagnosis of learner difficulties and the assessment of conceptual change in physics. Journal of Constructivist Psychology, 10(4), 363–386.

- Wu, M., Tam, H. P., & Jen, T.-H. (2016). Educational measurement for applied researchers: Theory into practice. Springer.

- Yezierski, E. J., & Birk, J. P. (2006). Misconceptions about the particulate nature of matter. Using animations to close the gender gap. Journal of Chemical Education, 83(6), 954.

- Zarkadis, N., Stamovlasis, D., & Papageorgiou, G. (2020). Student ideas and misconceptions for the atom: A latent class analysis with covariates. International Journal of Physics and Chemistry Education, 12(3), 41–47.

| Item Type | Total Items | DIF Items | Proportion of DIF Items |
|---|---|---|---|
| Multiple-Choice | 107 | 7 | 6.54% |
| Constructed-Response | 104 | 19 | 18.27% |

| Cognitive Domain | Total Items | DIF Items | Proportion of DIF Items |
|---|---|---|---|
| Knowing | 75 | 9 | 12.00% |
| Applying | 80 | 11 | 13.75% |
| Reasoning | 56 | 6 | 10.71% |

| Content Domain | Total Items | DIF Items | Proportion of DIF Items |
|---|---|---|---|
| Cells and Their Functions | 14 | 2 | 14.29% |
| Characteristics and Life Processes of Organisms | 14 | 2 | 14.29% |
| Chemical Change | 10 | 1 | 10.00% |
| Composition of Matter | 11 | 4 | 36.36% |
| Diversity, Adaptation, and Natural Selection | 8 | 1 | 12.50% |
| Earth’s Processes, Cycles, and History | 17 | 2 | 11.76% |
| Earth’s Structure and Physical Features | 8 | 1 | 12.50% |
| Ecosystems | 24 | 2 | 8.33% |
| Electricity and Magnetism | 11 | 1 | 9.09% |
| Energy Transformation and Transfer | 8 | 1 | 12.50% |
| Human Health | 8 | 2 | 25.00% |
| Motion and Forces | 14 | 1 | 7.14% |
| Physical States and Changes in Matter | 12 | 3 | 25.00% |
| Properties of Matter | 21 | 3 | 14.29% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Chen, K.-M.; Jen, T.-H.; Shang, Y.-W. Urban–Remote Disparities in Taiwanese Eighth-Grade Students’ Science Performance in Matter-Related Domains: Mixed-Methods Evidence from TIMSS 2019. Educ. Sci. 2025, 15, 1262. https://doi.org/10.3390/educsci15091262
