A Structured AHP-Based Approach for Effective Error Diagnosis in Mathematics: Selecting Classification Models in Engineering Education

Abstract

1. Introduction

- To apply a structured and replicable AHP-based methodology for selecting theoretical frameworks in mathematics education.

- To identify the most appropriate classification model for diagnostic purposes in first-year engineering students.

- To provide a robust foundation for the future development of context-sensitive diagnostic tools and pedagogical intervention strategies.

2. Materials and Methods

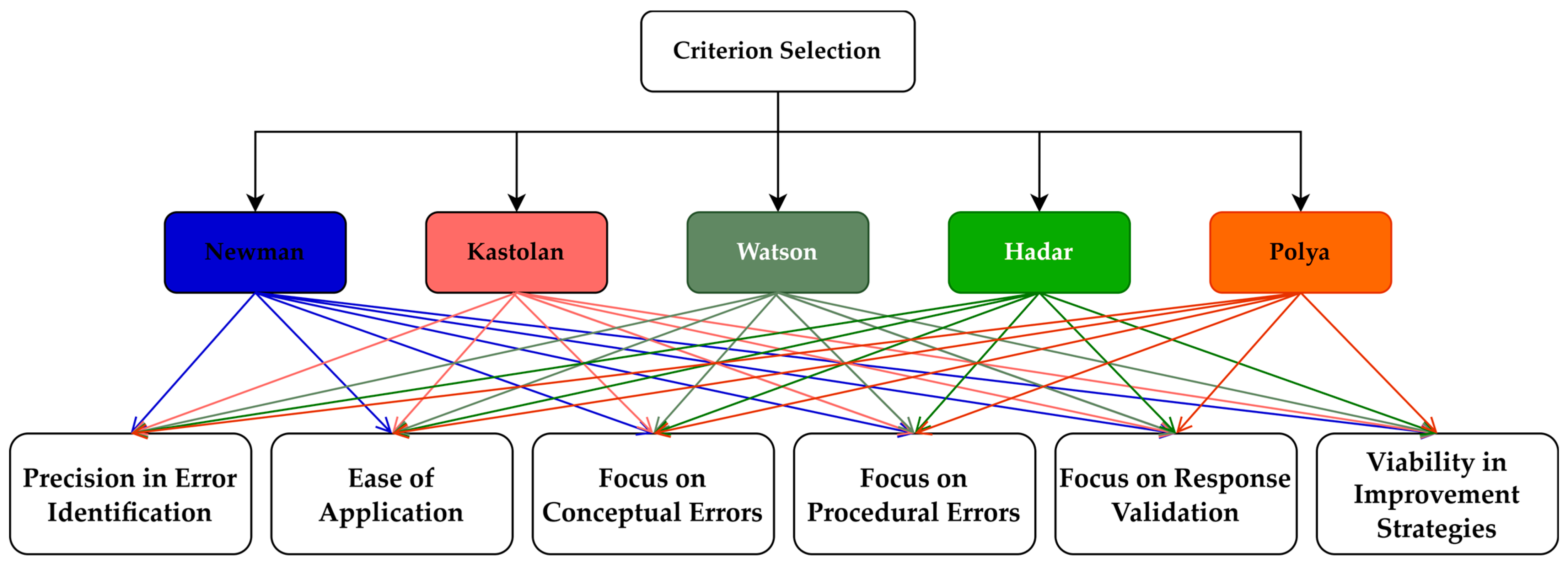

2.1. Criteria Selection and Justification

2.2. Multicriteria Evaluation Procedure Using the Analytic Hierarchy Process (AHP)
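In the standard Saaty formulation of AHP, criterion weights are the normalized principal eigenvector of a positive reciprocal pairwise comparison matrix. Consistency is checked with CR = CI/RI, where CI = (λmax − n)/(n − 1), and CR < 0.10 is conventionally treated as acceptable. The sketch below is illustrative only. The helper names `ahp_priorities` and `consistency_ratio` are hypothetical, the eigenvector is approximated by power iteration, and the example uses only the leading 4 × 4 block (the first four criteria) of the pairwise comparison matrix reported in this paper, because that block is fully shown. Its output is therefore not the study's criterion weights.

```python
# Minimal AHP sketch: priority weights from the principal eigenvector
# (approximated by power iteration) plus Saaty's consistency ratio.

# Saaty's random consistency index RI for matrices of order n = 1..10.
RANDOM_INDEX = {1: 0.0, 2: 0.0, 3: 0.58, 4: 0.90, 5: 1.12,
                6: 1.24, 7: 1.32, 8: 1.41, 9: 1.45, 10: 1.49}


def ahp_priorities(matrix, iterations=200):
    """Return (weights, lambda_max) for a positive reciprocal matrix."""
    n = len(matrix)
    weights = [1.0 / n] * n
    for _ in range(iterations):
        product = [sum(matrix[i][j] * weights[j] for j in range(n)) for i in range(n)]
        total = sum(product)
        weights = [value / total for value in product]  # renormalize to sum 1
    product = [sum(matrix[i][j] * weights[j] for j in range(n)) for i in range(n)]
    # lambda_max estimated as the mean of (A w)_i / w_i
    lambda_max = sum(product[i] / weights[i] for i in range(n)) / n
    return weights, lambda_max


def consistency_ratio(lambda_max, n):
    """CR = CI / RI with CI = (lambda_max - n) / (n - 1); meaningful for n >= 3."""
    consistency_index = (lambda_max - n) / (n - 1)
    return consistency_index / RANDOM_INDEX[n]


# Leading 4 x 4 block of the pairwise comparison matrix (order: precision,
# ease of application, conceptual focus, procedural focus).
A = [
    [1,     7,     8, 2],
    [1 / 7, 1,     2, 1 / 5],
    [1 / 8, 1 / 2, 1, 1 / 7],
    [1 / 2, 5,     7, 1],
]

weights, lambda_max = ahp_priorities(A)
cr = consistency_ratio(lambda_max, len(A))
for name, w in zip(["Precision", "Ease", "Conceptual", "Procedural"], weights):
    print(f"{name:<11} {w:.3f}")
print(f"lambda_max = {lambda_max:.3f}, CR = {cr:.3f}")
```

Power iteration is used here only to keep the sketch dependency-free. The geometric-mean (row) method is a common alternative and gives a very similar ranking for near-consistent matrices.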

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Agoiz, Á. C. (2019). Errores frecuentes en el aprendizaje de las matemáticas en bachillerato. Cuadernos Del Marqués de San Adrián: Revista de Humanidades, 11, 129–141.

- Akram, M., & Adeel, A. (2023). Extended PROMETHEE method under multi-polar fuzzy sets. Studies in Fuzziness and Soft Computing, 430, 343–373.

- Aksoy, N. C., & Yazlik, D. O. (2017). Student errors in fractions and possible causes of these errors. Journal of Education and Training Studies, 5(11), 219.

- Alonso, J. A., & Lamata, M. T. (2006). Consistency in the analytic hierarchy process: A new approach. International Journal of Uncertainty, Fuzziness and Knowledge-Based Systems, 14(4), 445–459.

- Anis, A., & Islam, R. (2015). The application of analytic hierarchy process in higher-learning institutions: A literature review. Journal for International Business and Entrepreneurship Development, 8(2), 166.

- Arias Aristizábal, C. M. (2023). Errores que cometen los estudiantes de grado once de la Institución Educativa Nazario Restrepo cuando resuelven problemas con números racionales [Master’s thesis, Universidad Tecnológica de Pereira].

- Arifin, S. A. N., & Maryono, I. (2023). Characteristics of student errors in solving geometric proof problems based on Newman’s theory. Union: Jurnal Ilmiah Pendidikan Matematika, 11(3), 528–537.

- Armstrong, P. K., & Croft, A. C. (1999). Identifying the learning needs in mathematics of entrants to undergraduate engineering programmes in an English university. European Journal of Engineering Education, 24(1), 59–71.

- Arroyo Valenciano, J. A. (2021). Las variables como elemento sustancial en el método científico. Revista Educación, 46(1), 621–631.

- Barbosa, A., & Vale, I. (2021). A visual approach for solving problems with fractions. Education Sciences, 11(11), 727.

- Berraondo, R., Pekolj, M., Pérez, N., & Cognini, R. (2004). Leo pero no comprendo. Una experiencia con ingresantes universitarios. Acta Latinoamericana de Matemática Educativa, 15(1), 131–136.

- Bolaños-González, H., & Lupiáñez-Gómez, J. L. (2021). Errores en la comprensión del significado de las letras en tareas algebraicas en estudiantado universitario. Uniciencia, 35(1), 1–18.

- Booth, J. L., Barbieri, C., Eyer, F., & Pare-Blagoev, E. J. (2014). Persistent and pernicious errors in algebraic problem solving. The Journal of Problem Solving, 7(1), 3.

- Booth, J. L., Lange, K. E., Koedinger, K. R., & Newton, K. J. (2013). Using example problems to improve student learning in algebra: Differentiating between correct and incorrect examples. Learning and Instruction, 25, 24–34.

- Brandi, A. C., & Garcia, R. E. (2017, October 18–21). Motivating engineering students to math classes: Practical experience teaching ordinary differential equations. 2017 IEEE Frontiers in Education Conference (FIE) (pp. 1–7), Indianapolis, IN, USA.

- Buhaerah, B., Jusoff, K., Nasir, M., & Dangnga, M. S. (2022). Student’s mistakes in solving problem based on Watson’s criteria and learning style. Jurnal Pendidikan Matematika (JUPITEK), 5(2), 95–104.

- Caronía, S., Zoppi, A. M., Polasek, M. d., Rivero, M., & Operuk, R. (2008). Un análisis desde la didáctica de la matemática sobre algunos errores en el álgebra. Available online: https://funes.uniandes.edu.co/wp-content/uploads/tainacan-items/32454/1164520/Caronia2009Un.pdf (accessed on 30 May 2025).

- Chang, Q. (2014). Computer design specialty evaluation based on analytic hierarchy process theory. Energy Education Science and Technology Part A: Energy Science and Research, 32(6), 7865–7872.

- Chauraya, M., & Mashingaidze, S. (2017). In-service teachers’ perceptions and interpretations of students’ errors in mathematics. International Journal for Mathematics Teaching and Learning, 18(3), 273–279.

- Checa, A. N., & Martínez-Artero, R. N. (2010). Resolución de problemas de matemáticas en las pruebas de acceso a la universidad. Errores significativos. Educatio Siglo XXI, 28(1), 317–341.

- Chen, J., Zhao, F., & Xing, H. (2018). Curriculum system of specialty group under the credit system of higher vocational colleges based on AHP structure analysis. IPPTA: Quarterly Journal of Indian Pulp and Paper Technical Association, 30(6), 841–849.

- Do, Q. H., & Chen, J.-F. (2013). Evaluating faculty staff: An application of group MCDM based on the fuzzy AHP approach. International Journal of Information and Management Sciences, 24(2), 131–150.

- Engelbrecht, J., & Harding, A. (2005). Teaching undergraduate mathematics on the internet. Educational Studies in Mathematics, 58(2), 253–276.

- Faulkner, B., Earl, K., & Herman, G. (2019). Mathematical maturity for engineering students. International Journal of Research in Undergraduate Mathematics Education, 5(1), 97–128.

- Fauzan, A., & Minggi, I. (2024). Analysis of students’ errors in solving sequence and series questions problems based on Hadar’s criteria in viewed from students’ mathematical abilities. Proceedings of International Conference on Educational Studies in Mathematics, 1(1), 259–264.

- Gamboa-Cruzado, J. G., Morante-Palomino, E. M., Rivero, C. A., Bendezú, M. L., & Fernández, D. M. M. F. (2024). Research on the classification and application of physical education teaching mode by neutrosophic analytic hierarchy process. International Journal of Neutrosophic Science, 23(3), 51–62.

- Ganesan, R., & Dindyal, J. (2014). An investigation of students’ errors in logarithms. Mathematics Education Research Group of Australasia.

- Hadi, S., & Radiyatul, R. (2014). Metode pemecahan masalah menurut Polya untuk mengembangkan kemampuan siswa dalam pemecahan masalah matematis di sekolah menengah pertama. EDU-MAT: Jurnal Pendidikan Matematika, 2(1), 53–61.

- Harris, D., Black, L., Hernandez-Martinez, P., Pepin, B., & Williams, J. (2015). Mathematics and its value for engineering students: What are the implications for teaching? International Journal of Mathematical Education in Science and Technology, 46(3), 321–336.

- Hester, P. T., & Bachman, J. T. (2009, October 14–17). Analytical hierarchy process as a tool for engineering managers. 30th Annual National Conference of the American Society for Engineering Management 2009, ASEM 2009 (pp. 597–602), Springfield, MI, USA. Available online: https://www.scopus.com/inward/record.uri?eid=2-s2.0-84879868104&partnerID=40&md5=31e0c0b124a9d6ba00c035beff1f571d (accessed on 25 June 2025).

- Hiebert, J., & Grouws, D. A. (2007). The effects of classroom mathematics teaching on students’ learning. Information Age Publishing.

- Hoth, J., Larrain, M., & Kaiser, G. (2022). Identifying and dealing with student errors in the mathematics classroom: Cognitive and motivational requirements. Frontiers in Psychology, 13, 1057730.

- Hu, Q., Son, J.-W., & Hodge, L. (2022). Algebra teachers’ interpretation and responses to student errors in solving quadratic equations. International Journal of Science and Mathematics Education, 20(3), 637–657.

- Huynh, T., & Sayre, E. C. (2019). Blending of conceptual physics and mathematical signs. arXiv, arXiv:1909.11618.

- Irham. (2020, October 19–20). Conceptual errors of students in solving mathematics problems on the topic of function. 3rd International Conference on Education, Science, And Technology (ICEST 2019), Makassar, Indonesia.

- Ishizaka, A., & Labib, A. (2011). Review of the main developments in the analytic hierarchy process. Expert Systems with Applications, 38(11), 14336–14345.

- Jaworski, B., & Matthews, J. (2011). Developing teaching of mathematics to first year engineering students. Teaching Mathematics and Its Applications, 30(4), 178–185.

- Junpeng, P., Marwiang, M., Chinjunthuk, S., Suwannatrai, P., Chanayota, K., Pongboriboon, K., Tang, K. N., & Wilson, M. (2020). Validation of a digital tool for diagnosing mathematical proficiency. International Journal of Evaluation and Research in Education (IJERE), 9(3), 665.

- Kontrová, L., Biba, V., & Šusteková, D. (2022). Teaching and exploring mathematics through the analysis of student’s errors in solving mathematical problems. European Journal of Contemporary Education, 11(1), 89–98.

- Lee, K., Ng, S. F., Bull, R., Pe, M. L., & Ho, R. H. M. (2011). Are patterns important? An investigation of the relationships between proficiencies in patterns, computation, executive functioning, and algebraic word problems. Journal of Educational Psychology, 103(2), 269–281.

- Levey, F. C., & Johnson, M. R. (2020, October 16–17). Fundamental mathematical skill development in engineering education. 2020 Annual Conference Northeast Section (ASEE-NE) (pp. 1–6), Bridgeport, CT, USA.

- Lobato, J. (2008). When students don’t apply the knowledge you think they have, rethink your assumptions about transfer. In Making the connection (pp. 289–304). The Mathematical Association of America.

- López-Díaz, M. T., & Peña, M. (2021). Mathematics training in engineering degrees: An intervention from teaching staff to students. Mathematics, 9(13), 1475.

- Mahendran, P., Moorthy, M. B. K., & Saravanan, S. (2014). A fuzzy AHP approach for selection of measuring instrument for engineering college selection. Applied Mathematical Sciences, 41–44, 2149–2161.

- Makonye, J. P., & Fakude, J. (2016). A study of errors and misconceptions in the learning of addition and subtraction of directed numbers in grade 8. Sage Open, 6(4), 2158244016671375.

- Mallart Solaz, A. (2014). La resolución de problemas en la prueba de matemáticas de acceso a la universidad: Procesos y errores. Educatio Siglo XXI, 32(1), 233–254.

- Marpa, E. P. (2019). Common errors in algebraic expressions: A quantitative-qualitative analysis. International Journal on Social and Education Sciences, 1(2), 63–72.

- Mauliandri, R., & Kartini, K. (2020). Analisis kesalahan siswa menurut Kastolan dalam menyelesaikan soal operasi bentuk aljabar pada siswa SMP. AXIOM: Jurnal Pendidikan Dan Matematika, 9(2), 107.

- Morgan, A. T. (1990). A study of the difficulties experienced with mathematics by engineering students in higher education. International Journal of Mathematical Education in Science and Technology, 21(6), 975–988.

- Mosia, M., Matabane, M. E., & Moloi, T. J. (2023). Errors and misconceptions in Euclidean geometry problem solving questions: The case of grade 12 learners. Research in Social Sciences and Technology, 8(3), 89–104.

- Movshovitz-Hadar, N., Zaslavsky, O., & Inbar, S. (1987). An empirical classification model for errors in high school mathematics. Journal for Research in Mathematics Education, 18(1), 3.

- Mu, E., & Nicola, C. B. (2019). Managing university rank and tenure decisions using a multi-criteria decision-making approach. International Journal of Business and Systems Research, 13(3), 297–320.

- Mukuka, A., Balimuttajjo, S., & Mutarutinya, V. (2023). Teacher efforts towards the development of students’ mathematical reasoning skills. Heliyon, 9(4), e14789.

- Mulungye, M. M. (2016). Sources of students’ errors and misconceptions in algebra and influence of classroom practice remediation in secondary schools: Machakos sub-county, Kenya [Unpublished doctoral dissertation, Kenyatta University].

- Musa, H., Rusli, R., Ilhamsyah, & Yuliana, A. (2021). Analysis of student errors in solving mathematics problems based on Watson’s criteria on the subject of two variable linear equation system (SPLDV). EduLine: Journal of Education and Learning Innovation, 1(2), 125–131.

- Nakakoji, Y., & Wilson, R. (2020). Interdisciplinary learning in mathematics and science: Transfer of learning for 21st century problem solving at university. Journal of Intelligence, 8(3), 32.

- Ningsih, E. F., & Retnowati, E. (2020, December 7). Prior knowledge in mathematics learning. SEMANTIK Conference of Mathematics Education (SEMANTIK 2019), Yogyakarta, Indonesia.

- Noutsara, S., Neunjhem, T., & Chemrutsame, W. (2021). Mistakes in mathematics problems solving based on Newman’s error analysis on set materials. Journal La Edusci, 2(1), 20–27.

- Nuraini, N. L. S., Cholifah, P. S., & Laksono, W. C. (2018, September 21–22). Mathematics errors in elementary school: A meta-synthesis study. 1st International Conference on Early Childhood and Primary Education (ECPE 2018), Malang, Indonesia.

- Nurhayati, R., & Retnowati, E. (2019). An analysis of errors in solving limits of algebraic function. Journal of Physics: Conference Series, 1320(1), 012034.

- Nuryati, N., Purwaningsih, S., & Habinuddin, E. (2022). Analysis of errors in solving mathematical literacy analysis problems using Newman. International Journal of Trends in Mathematics Education Research, 5(3), 299–305.

- Papadouris, J. P., Komis, V., & Lavidas, K. (2024). Errors and misconceptions of secondary school students in absolute values: A systematic literature review. Mathematics Education Research Journal.

- Pazos, A. L., & Salinas, M. J. (2012). Dificultades algebraicas en el aula de 1° bac. en ciencias y ciencias sociales. Investigación En Educación Matemática XVI, 417–426.

- Pianda, D. (2018). Categorización de errores típicos en ejercicios matemáticos cometidos por estudiantes de primer semestre de la Universidad de Nariño. Universidad de Nariño.

- Rafi, I., & Retnawati, H. (2018). What are the common errors made by students in solving logarithm problems? Journal of Physics: Conference Series, 1097, 012157.

- Ramík, J. (2020). Applications in decision-making: Analytic hierarchy process—AHP revisited. Lecture Notes in Economics and Mathematical Systems, 690, 189–211.

- Resnick, L. B., Nesher, P., Leonard, F., Magone, M., Omanson, S., & Peled, I. (1989). Conceptual bases of arithmetic errors: The case of decimal fractions. Journal for Research in Mathematics Education, 20(1), 8.

- Rodríguez-Domingo, S., Molina, M., Cañadas, M. C., & Castro, E. (2015). Errores en la traducción de enunciados algebraicos entre los sistemas de representación simbólico y verbal. PNA. Revista de Investigación En Didáctica de La Matemática, 9(4), 273–293.

- Roselizawati, H., Sarwadi, H., & Shahrill, M. (2014). Understanding students’ mathematical errors and misconceptions: The case of year 11 repeating students. Mathematics Education Trends and Research, 2014, 1–10.

- Rosita, A., & Novtiar, C. (2021). Analisis kesalahan siswa SMK dalam menyelesaikan soal dimensi tiga berdasarkan kategori kesalahan menurut Watson. JPMI (Jurnal Pembelajaran Matematika Inovatif), 4(1), 193–204.

- Rushton, S. J. (2018). Teaching and learning mathematics through error analysis. Fields Mathematics Education Journal, 3(1), 4.

- Saaty, T. L. (2008). Decision making with the analytic hierarchy process. International Journal of Services Sciences, 1(1), 83.

- Sari, S. I., & Pujiastuti, H. (2022). Analisis kesalahan siswa dalam mengerjakan soal bilangan berpangkat dan bentuk akar berdasarkan kriteria Kastolan. Proximal: Jurnal Penelitian Matematika Dan Pendidikan Matematika, 5(2), 21–29.

- Saucedo, G. (2007). Categorización de errores algebraicos en alumnos ingresantes a la universidad. Itinerarios Educativos, 1(2), 22–43.

- Sazhin, S. S. (1998). Teaching mathematics to engineering students. International Journal of Engineering Education, 14(2), 145–152.

- Sehole, L., Sekao, D., & Mokotjo, L. (2023). Mathematics conceptual errors in the learning of a linear function—A case of a technical and vocational education and training college in South Africa. The Independent Journal of Teaching and Learning, 18(1), 81–97.

- Sidney, P. G., & Alibali, M. W. (2015). Making connections in math: Activating a prior knowledge analogue matters for learning. Journal of Cognition and Development, 16(1), 160–185.

- Siegler, R. S., & Lortie-Forgues, H. (2015). Conceptual knowledge of fraction arithmetic. Journal of Educational Psychology, 107(3), 909–918.

- Smahi, K., Labouidya, O., & El Khadiri, K. (2024). Towards effective adaptive revision: Comparative analysis of online assessment platforms through the combined AHP-MCDM approach. International Journal of Interactive Mobile Technologies, 18(17), 75–87.

- Star, J. R., & Rittle-Johnson, B. (2008). Flexibility in problem solving: The case of equation solving. Learning and Instruction, 18(6), 565–579.

- Strum, R. D., & Kirk, D. E. (1979). Engineering mathematics: Who should teach it and how? IEEE Transactions on Education, 22(2), 85–88.

- Suciati, I., & Sartika, N. (2023). Students’ errors analysis in solving mathematics problems viewed from various perspectives. 12 Waiheru, 9(2), 149–158.

- Suharti, S., Nur, F., & Alim, B. (2021). Polya steps for analyzing errors in mathematical problem solving. AL-ISHLAH: Jurnal Pendidikan, 13(1), 741–748.

- Sukayasa, S. (2012). Pengembangan model pembelajaran berbasis fase-fase Polya untuk meningkatkan kompetensi penalaran siswa SMP dalam memecahkan masalah matematika. AKSIOMA: Jurnal Pendidikan Matematika, 1(1), 46–54.

- Sulistyaningsih, D., Purnomo, E. A., & Purnomo, P. (2021). Polya’s problem solving strategy in trigonometry: An analysis of students’ difficulties in problem solving. Mathematics and Statistics, 9(2), 127–134.

- Wedelin, D., Adawi, T., Jahan, T., & Andersson, S. (2015). Investigating and developing engineering students’ mathematical modelling and problem-solving skills. European Journal of Engineering Education, 40(5), 557–572.

- Winarso, W., & Toheri, T. (2021). An analysis of students’ error in learning mathematical problem solving; the perspective of David Kolb’s theory. Turkish Journal of Computer and Mathematics Education (TURCOMAT), 12(1), 139–150.

- Wiyah, R. B., & Nurjanah. (2021). Error analysis in solving the linear equation system of three variables using Polya’s problem-solving steps. Journal of Physics: Conference Series, 1882(1), 012084.

- Yulmaini, Sanusi, A., Ariza Eka Yusendra, M., & Kholijah, S. (2020). Implementation of analytic hierarchy process for determining priority criteria in higher education competitiveness development strategy based on RAISE++ model. Journal of Physics: Conference Series, 1529(2), 022064.

- Zhang, D., & Wang, X. (2021, January 29–31). AHP-based evaluation model of multi-modal classroom teaching effect in higher vocational English. Proceedings—2021 2nd International Conference on Education, Knowledge and Information Management, ICEKIM 2021 (pp. 403–406), Xiamen, China.

| Error Type | Indicator of Error | Example |
|---|---|---|
| Reading error | Fails to identify key information in the problem | at 6:00 p.m. At what time do the two trains cross paths? When reading the problem, the student does not correctly identify the departure and arrival times of the trains. He confuses the schedules and assumes that both trains leave at 3:00 p.m. and arrive at 6:00 p.m. Based on this incorrect information, he miscalculates that the trains cross at 4:30 p.m. (Arias Aristizábal, 2023) |
| | Incorrectly determines the known data | as the ordinate to the origin (Saucedo, 2007). |
| | Uses self-created symbols without explaining their meaning | In a math problem, the task is to calculate the total construction cost of a building, knowing that the cost per square meter is 800,000 pesos and increases by 5% each year. The student writes the following expression: . Using these symbols without prior definition makes what they represent unclear, which could confuse the interpretation of the solution (Arroyo Valenciano, 2021). |
| Comprehension error | Responds incorrectly due to a lack of understanding or incomplete identification of problem elements | A water tank has a total capacity of 500 L. It currently holds 350 L. How long will it take for the tank to be full if water is added at 15 L per minute? The student misinterprets what is to be solved: he assumes that he must calculate how long it takes to fill the tank from empty, ignoring that it already contains 350 L (Winarso & Toheri, 2021). |
| | Writes a brief but unclear response, lacking sufficient argumentation when expressing what needs to be solved | A person has a rope 3 m long. He needs to cut the rope into 5 equal parts. How long will each part be? Student’s answer: Each part will be smaller than 3 m. The student writes something brief but unclear without providing a calculation or justifying his answer. He does not mention the necessary operation (division) or detail the procedure (Aksoy & Yazlik, 2017). |
| Transformation error | Inaccuracy in converting information into mathematical formulas | in the statement “A number plus its consecutive is equal to another minus 2”, erring in the conversion from natural language to mathematical language (Rodríguez-Domingo et al., 2015). |
| Process skill error | Error when using arithmetic operations | , adding numerators and denominators together (Booth et al., 2014). |
| | Incomplete procedures/steps | . (Agoiz, 2019) |
| Encoding error | Writing answers inappropriately | . (Agoiz, 2019) |
| | Answers are not appropriate to the context | , which is inadequate because the distance between two points cannot be negative in any mathematical context (Checa & Martínez-Artero, 2010). |
| | Inaccurate or inconsistent conclusions | , an answer that makes no sense (Marpa, 2019). |
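Two of the comprehension-error examples above rest on one-line computations. The minimal Python sketch below gives the intended answers, assuming the tank and rope data exactly as stated. The helper names `minutes_to_fill` and `piece_length` are hypothetical. Exact fractions are used so that the contrast between the correct reading (topping up 150 L) and the erroneous one (filling 500 L from empty) is not blurred by rounding.

```python
from fractions import Fraction


def minutes_to_fill(capacity_l, current_l, rate_l_per_min):
    """Minutes needed to fill a tank that already holds current_l litres."""
    return Fraction(capacity_l - current_l, rate_l_per_min)


def piece_length(total_m, pieces):
    """Length of each piece when a rope is cut into equal parts."""
    return Fraction(total_m, pieces)


correct = minutes_to_fill(500, 350, 15)   # (500 - 350) / 15 = 10 minutes
misread = minutes_to_fill(500, 0, 15)     # the comprehension error: filling from empty
print(correct, float(misread))
print(float(piece_length(3, 5)), "m")     # 3 / 5 = 0.6 m per piece
```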

| Error Type | Indicator of Error | Example |
|---|---|---|
| Conceptual Errors | Incorrect use of formulas or altered rules | (Agoiz, 2019). |
| | Selection of inappropriate formulas | The student uses the area formula to find the solution for the perimeter of a rectangle, failing to apply the correct perimeter formula. |
| Procedure Errors | Irregularity in problem-solving steps | . (Agoiz, 2019). |
| | Inability to simplify | The given problem states that the sum of the first and the second numbers exceeds the third by two units; the second minus twice the first is ten units less than the third; and the sum of all three numbers is 24. The task is to determine the three numbers. The student sets up a 3 × 3 system of equations and solves it using Gaussian elimination, although a simple substitution method would suffice (Checa & Martínez-Artero, 2010). |
| | Interruption of the resolution process | , but fails to simplify the final expression further (Pianda, 2018). |
| Technical Errors | Calculation errors | , the student incorrectly adds the indices of the roots, leading to an invalid operation (Agoiz, 2019). |
| | Errors in notation or writing | , introducing an erroneous sign change (Agoiz, 2019). |
| | Inadequate substitution of values | and substitutes it incorrectly into the equation, leading to an invalid result (Caronía et al., 2008) |
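The “Inability to simplify” example in the table above can indeed be settled by direct substitution. Write x, y, and z for the first, second, and third numbers; these symbols are introduced here for illustration. The stated conditions then give:

$$
\begin{aligned}
x + y &= z + 2, \qquad y - 2x = z - 10, \qquad x + y + z = 24,\\
(z + 2) + z &= 24 \;\Rightarrow\; z = 11 \;\Rightarrow\; x + y = 13,\\
y - 2x &= 1 \;\Rightarrow\; y = 2x + 1 \;\Rightarrow\; 3x + 1 = 13 \;\Rightarrow\; x = 4,\; y = 9.
\end{aligned}
$$

The triple (4, 9, 11) satisfies all three conditions (4 + 9 = 11 + 2, 9 − 8 = 11 − 10, and 4 + 9 + 11 = 24), so Gaussian elimination is not required.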

| Error Type | Indicator of Error | Example |
|---|---|---|
| Inappropriate Data | Data does not match. | , a common error in basic algebra (Booth et al., 2013) |
| | Misplaced data on the variable | (Siegler & Lortie-Forgues, 2015). |
| | Assigns known data to incorrect variables | (Booth et al., 2013) |
| Inappropriate Procedure | Using the wrong formula | (Ningsih & Retnowati, 2020). |
| | Does not write down the steps when solving problems | , without providing justification (Barbosa & Vale, 2021). |
| | Skipping essential steps | , which leads to incorrect conclusions about the nature of the solutions (Booth et al., 2013). |
| Missing Data | Omission of given data | , which leads to incorrect conclusions about the solutions (Siegler & Lortie-Forgues, 2015). |
| Omitted Conclusion | Fails to use the obtained data to draw conclusions | ) (Ningsih & Retnowati, 2020). |
| Response Level Conflict | Lack of readiness during the process | or a length but fails to justify whether this solution should be accepted or discarded, neglecting to consider the contextual constraints of the problem (Barbosa & Vale, 2021). |
| Indirect Manipulation | Application of arbitrary reasoning | (Ningsih & Retnowati, 2020). but interrupts the process, possibly due to uncertainty about the solution, and switches to completing the square method. They rewrite the equation as to both sides, resulting in However, they simplify the right-hand side incorrectly, leading to an incorrect expression. This behavior exemplifies arbitrary reasoning and a lack of procedural consistency. The student alternates between methods without properly executing them, leading to repeated errors and unresolved confusion. |
| Skill Hierarchy Problem | Confusion in applying the hierarchy of mathematical operations | (Sidney & Alibali, 2015) |
| Above Other | Inappropriate reformulation of the question | , improperly combining operations and leading to an incorrect result (Booth et al., 2014). |
| | Omission of the response | as the solution, leaving the work incomplete without an explicit answer (Lee et al., 2011). |
| | Disordered or inconsistent solution to the question | (Mulungye, 2016) |

| Error Type | Indicator of Error | Example |
|---|---|---|
| Misused data | The student does not exactly copy data from the problem | In the problem: In 2020, the cow population in City A was 1600, and in City B, it was 500. Each month, the population in City A increases by 25, and in City B by 10. At some point, the population in City A is three times that of City B. Determine the population of cows in City A at that moment. For this problem, the student records Instead of correctly identifying the following: Initial population in City A: 1600. Initial population in City B: 500. Monthly increase in City A: 25. Monthly increase in City B: 10. The student failed to accurately record any required data from the problem and did not create an appropriate mathematical model (Fauzan & Minggi, 2024). |
| | Students add data that is not appropriate. | without justification (Ningsih & Retnowati, 2020). |
| | Ignores the data provided | are equivalent (Ganesan & Dindyal, 2014) |
| | States a condition that is not needed | and compares it with the given line’s slope (Mallart Solaz, 2014). |
| | Interpreting information that does not follow the actual text | is a negative number (Ganesan & Dindyal, 2014). |
| | Replacing the specified conditions with other inappropriate information | , the student unnecessarily assumes the line must also be perpendicular to another line instead of using the given slope (Mallart Solaz, 2014). |
| | Using the value of a variable for another variable | For the equation , transforming it into . This demonstrates the misuse of one variable as another (Rafi & Retnawati, 2018). |
| Misinterpreted language | Students’ mistakes in translating mathematical symbols into everyday language | for “bags”) instead of treating them as mathematical variables (Bolaños-González & Lupiáñez-Gómez, 2021). |
| | Writing symbols of a concept with other symbols that have different meanings | In inequalities, students confuse the meaning of the greater than (>) and less than (<) signs (Huynh & Sayre, 2019). |
| Logically invalid inference | Mistakes are made when drawing incorrect conclusions from a problem | . ) that are not part of the original problem (Pazos & Salinas, 2012). |
| Distorted theorem or definition | Errors occur when students incorrectly apply formulas, theorems, or definitions that do not align with the problem. | . This error reflects a misapplication of logarithmic properties (Ganesan & Dindyal, 2014). |
| Unverified solution | Errors arise when students fail to verify each step against the final result, often because they rush through the problem without reviewing their work. | , as solutions without verifying their validity (Ganesan & Dindyal, 2014). |
| Technical error | Calculation errors | (Fauzan & Minggi, 2024). |
| | Errors in quoting data | In the problem: In 2020, the cow population in City A was 1600, and in City B, it was 500. Each month, the population in City A increases by 25, and in City B by 10. At some point, the population in City A is three times that of City B. Determine the population of cows in City A at that moment. The student records the data as (Fauzan & Minggi, 2024) Instead of correctly applying the formula |
| | Errors in manipulating symbols | incorrectly (Fauzan & Minggi, 2024). |
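The cow-population problem used in the examples above has a short closed-form solution if both populations grow linearly by their monthly increments, with t counting months after the 2020 figures. This is an assumption, because the formula the source expects students to apply is not reproduced here. Setting 1600 + 25t = 3(500 + 10t) gives 5t = 100, so t = 20, and City A then has 2100 cows against 700 in City B. A minimal sketch, with the hypothetical helper name `months_until_multiple`:

```python
from fractions import Fraction


def months_until_multiple(a0, a_step, b0, b_step, factor=3):
    """Solve a0 + a_step*t = factor * (b0 + b_step*t) for t (months)."""
    denominator = factor * b_step - a_step
    if denominator == 0:
        raise ValueError("no unique month satisfies the requested ratio")
    t = Fraction(a0 - factor * b0, denominator)
    if t < 0:
        raise ValueError("the ratio held before the initial data")
    return t


t = months_until_multiple(1600, 25, 500, 10)  # (1600 - 1500) / (30 - 25) = 20
pop_a = 1600 + 25 * t                         # 2100 cows in City A
pop_b = 500 + 10 * t                          # 700 cows in City B
print(t, pop_a, pop_b)
```

An arithmetic-sequence formulation such as U_n = a + (n − 1)b shifts the index by one month but yields the same populations.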

Afgan wants to visit a total of 24 beaches with his three friends (Boy, Mondy, and Reva). Afgan can only take two friends per day. During the visits:


| Error Type | Indicator of Error | Example |
|---|---|---|
| Understanding the problem | Students need to specify and identify what information is known, ask about the problem, and restate it in their own language. | . |
| Devising a plan | Students create a mathematical model, select an appropriate strategy that will be used, make estimates, and reduce things that are not related to the problem. | for each case, failing to relate it to the problem’s conditions. |
| Carrying out the plan | Students implement the plans and strategies chosen and arrange to solve the problem. | The number of beaches Boy visited in a day: days days days can be canceled on both sides without justification, leading to an erroneous result. |
| Looking back | Students review the solutions and results obtained from the problem-solving steps to avoid errors in their answers. | satisfy the initial equations. |

| Quantitative Value | Interpretation | Key Textual Indicators |

|---|---|---|

| 5 | High clarity, evidence, or applicability | “easy to identify”, “very easy”, “directly”, “evident”, “quickly recognized” |

| 4 | High, but with minor potential for confusion | “may be confused with…”, “quick but not direct”, “requires minimal analysis” |

| 3 | Moderate clarity or applicability; requires interpretation | “possibility of confusion”, “depends on the student”, “for the same reason”, “somewhat subjective” |

| 2 | Low clarity or applicability, though still identifiable | “difficult to identify”, “requires detailed analysis”, “not so clear” |

| 1 | Absent, not validated, or difficult to detect or apply | “not verified”, “not applicable”, “not focused”, “very difficult”, “unrelated to the concept” |
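The coding scheme above can be illustrated with a small lookup that assigns the score of the first level whose indicator phrase appears in an expert comment. This is a hypothetical sketch: the function name `score_comment`, the lowest-score-first matching order, and the plain substring test are assumptions, not the authors' procedure. It also cannot handle negations such as "not easy to identify", so it complements rather than replaces the human coding the table describes.

```python
from typing import Optional

# Indicator phrases from the coding table, checked from the lowest score up so
# that, e.g., "very difficult" (1) wins over "difficult to identify" (2).
INDICATORS: list[tuple[int, tuple[str, ...]]] = [
    (1, ("not verified", "not applicable", "not focused", "very difficult",
         "unrelated to the concept")),
    (2, ("difficult to identify", "requires detailed analysis", "not so clear")),
    (3, ("possibility of confusion", "depends on the student",
         "for the same reason", "somewhat subjective")),
    (4, ("may be confused with", "quick but not direct",
         "requires minimal analysis")),
    (5, ("easy to identify", "very easy", "directly", "evident",
         "quickly recognized")),
]


def score_comment(comment: str) -> Optional[int]:
    """Return the 1-5 score of the first matching indicator phrase, or None."""
    text = comment.lower()
    for score, phrases in INDICATORS:
        if any(phrase in text for phrase in phrases):
            return score
    return None  # no indicator found: leave the comment for manual coding


print(score_comment("Very difficult to detect in written work"))  # 1
print(score_comment("It may be confused with a procedural slip"))  # 4
```

Checking the lowest level first is a deliberate choice: the stronger negative phrases contain weaker ones as substrings, so the opposite order would over-score comments like "very difficult to identify".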

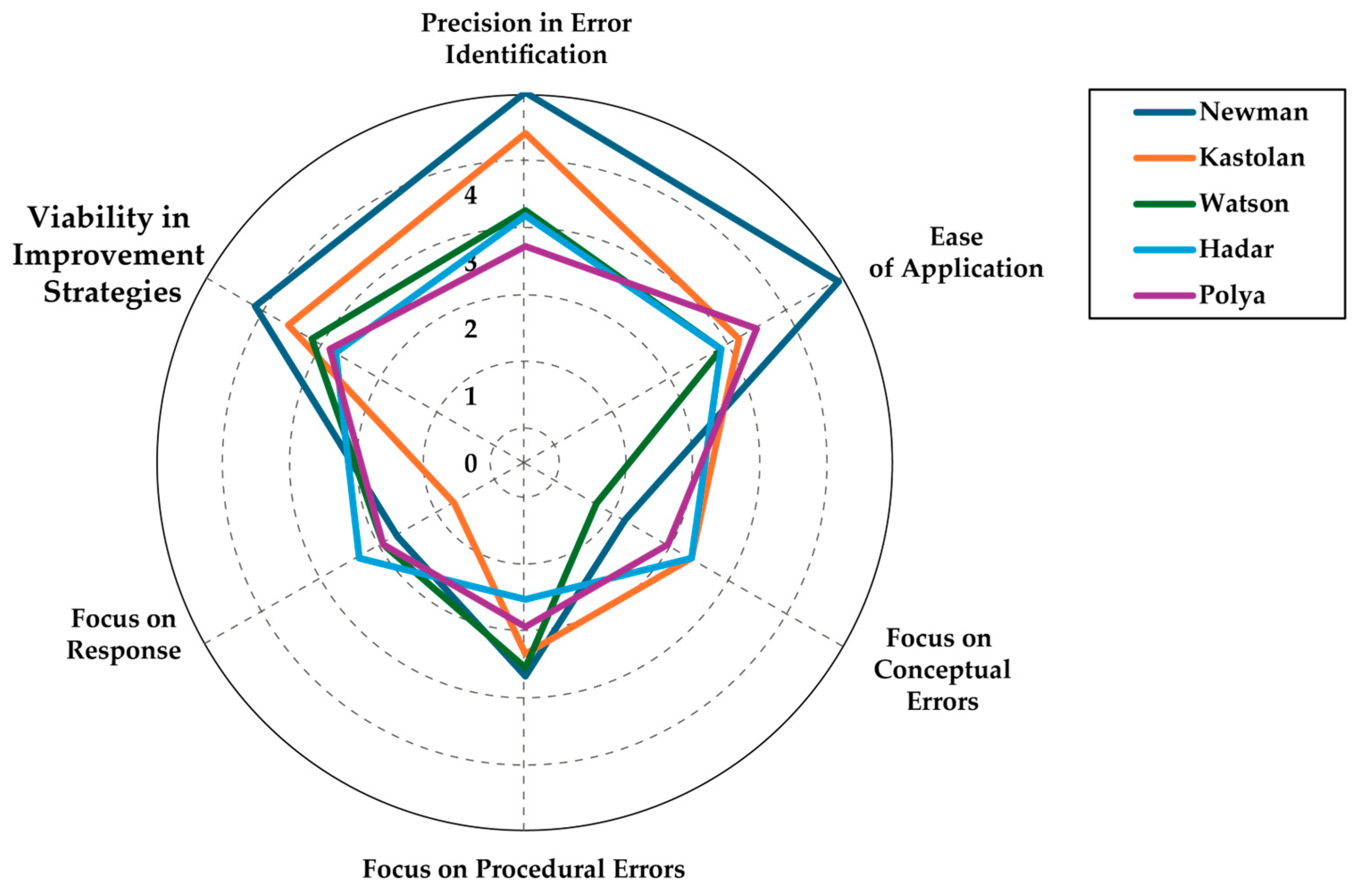

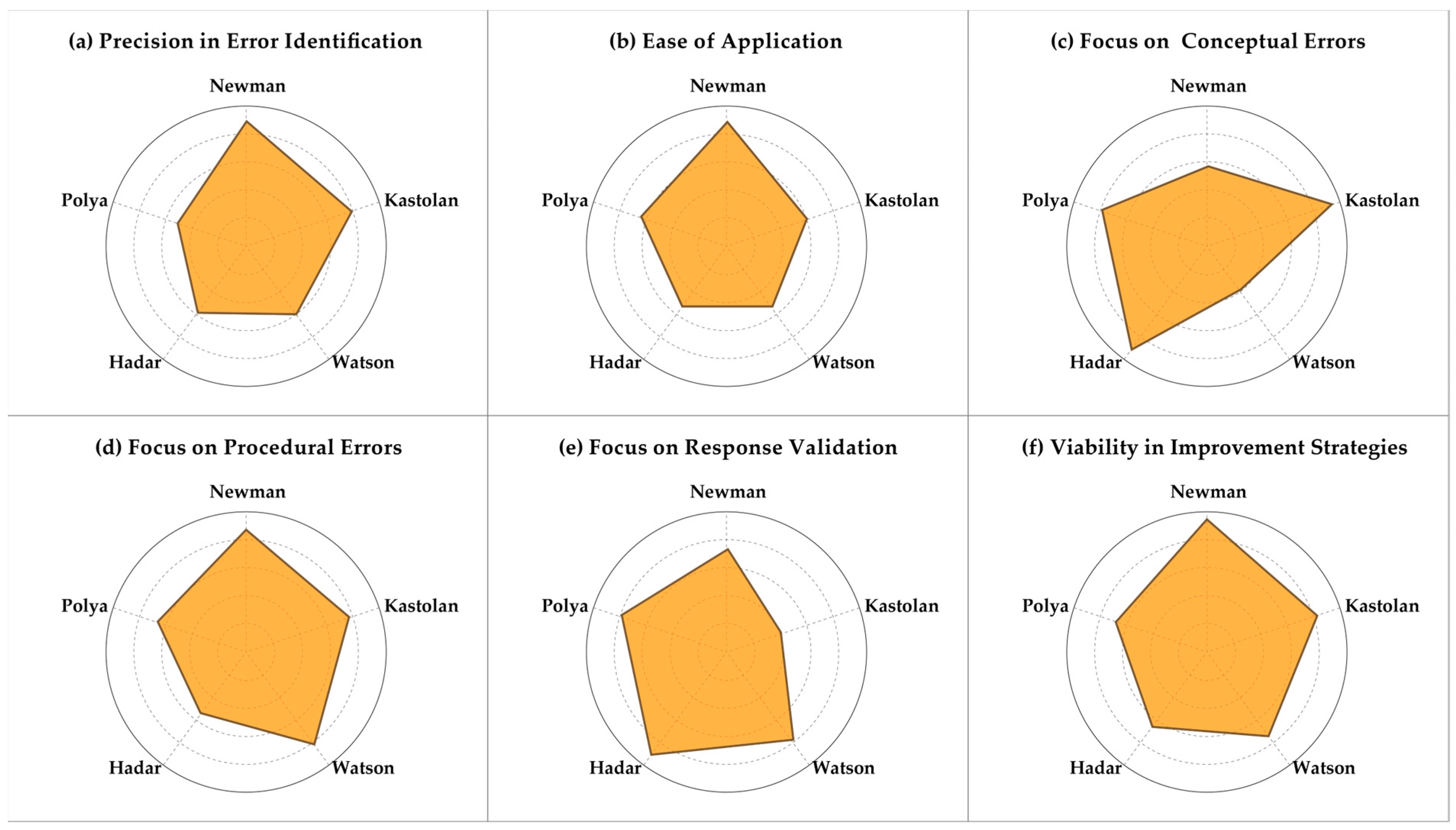

| Model | Precision in Error Identification | Ease of Application | Focus on Conceptual Errors | Focus on Procedural Errors | Focus on Response Validation | Viability in Improvement Strategies | Average |

|---|---|---|---|---|---|---|---|

| Newman | 4.5 | 4.4 | 1.4 | 2.6 | 1.8 | 3.8 | 3.1 |

| Kastolan | 4.0 | 3.0 | 2.3 | 2.3 | 1.0 | 3.3 | 2.7 |

| Watson | 3.1 | 2.8 | 1.0 | 2.5 | 2.0 | 3.0 | 2.4 |

| Hadar | 3.0 | 2.8 | 2.3 | 1.7 | 2.3 | 2.7 | 2.5 |

| Polya | 2.6 | 3.3 | 2.0 | 2.0 | 2.0 | 2.8 | 2.4 |
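The Average column is the unweighted mean of the six criterion ratings, so every criterion counts equally. The short check below transcribes the ratings into a dictionary (a layout chosen only for illustration) and recomputes the means; Newman's reported average of 3.1 corresponds to a procedural-errors rating of 2.6, the value also used in the final weighted-score table.

```python
from statistics import fmean

# Criterion order: precision, ease of application, conceptual focus,
# procedural focus, response validation, viability in improvement strategies.
SCORES = {
    "Newman": [4.5, 4.4, 1.4, 2.6, 1.8, 3.8],
    "Kastolan": [4.0, 3.0, 2.3, 2.3, 1.0, 3.3],
    "Watson": [3.1, 2.8, 1.0, 2.5, 2.0, 3.0],
    "Hadar": [3.0, 2.8, 2.3, 1.7, 2.3, 2.7],
    "Polya": [2.6, 3.3, 2.0, 2.0, 2.0, 2.8],
}

# Unweighted mean per model: each of the six criteria contributes 1/6.
averages = {model: fmean(values) for model, values in SCORES.items()}

for model, avg in averages.items():
    print(f"{model:<9} {avg:.2f}")
```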

| Criterion | Precision in Error Identification | Ease of Application | Focus on Conceptual Errors | Focus on Procedural Errors | Focus on Response Validation | Viability in Improvement Strategies |

|---|---|---|---|---|---|---|

| Precision in Error Identification | 1 | 7 | 8 | 2 | 9 | 3 |

| Ease of Application | 1/7 | 1 | 2 | 1/5 | 5 | 1/5 |

| Focus on Conceptual Errors | 1/8 | 1/2 | 1 | 1/7 | 3 | 1/7 |

| Focus on Procedural Errors | 1/2 | 5 | 7 | 1 | 7 | 2 |

| Focus on Response Validation | 1/9 | 1/5 | 1/3 | 1/7 | 1 | 1/8 |

| Viability in Improvement Strategies | 1/3 | 5 | 7 | 1/2 | 8 | 1 |
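An AHP judgment matrix is reciprocal: the diagonal is 1 and each entry below it is the inverse of its mirror, $a_{ji} = 1/a_{ij}$, so for six criteria only the 15 upper-triangle judgments are independent. The sketch below assumes exact arithmetic with Python's `fractions` module; it rebuilds the full matrix from those 15 judgments and compares it with the table. The helper name `build_reciprocal` is illustrative.

```python
from fractions import Fraction as F

# Upper-triangle judgments a_ij (i < j) from the table; indices follow the row
# order: precision, ease, conceptual, procedural, validation, viability.
UPPER = {
    (0, 1): F(7), (0, 2): F(8), (0, 3): F(2), (0, 4): F(9), (0, 5): F(3),
    (1, 2): F(2), (1, 3): F(1, 5), (1, 4): F(5), (1, 5): F(1, 5),
    (2, 3): F(1, 7), (2, 4): F(3), (2, 5): F(1, 7),
    (3, 4): F(7), (3, 5): F(2),
    (4, 5): F(1, 8),
}

# Full matrix exactly as printed in the table, for comparison.
TABLE = [
    [1, 7, 8, 2, 9, 3],
    [F(1, 7), 1, 2, F(1, 5), 5, F(1, 5)],
    [F(1, 8), F(1, 2), 1, F(1, 7), 3, F(1, 7)],
    [F(1, 2), 5, 7, 1, 7, 2],
    [F(1, 9), F(1, 5), F(1, 3), F(1, 7), 1, F(1, 8)],
    [F(1, 3), 5, 7, F(1, 2), 8, 1],
]


def build_reciprocal(upper: dict[tuple[int, int], F], n: int) -> list[list[F]]:
    """Fill the diagonal with 1 and set a_ji = 1 / a_ij below it."""
    a = [[F(1)] * n for _ in range(n)]
    for (i, j), value in upper.items():
        a[i][j] = value
        a[j][i] = 1 / value
    return a


matrix = build_reciprocal(UPPER, 6)
print(matrix == TABLE)  # True: the printed table is fully reciprocal
```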

| Criterion | Precision in Error Identification | Ease of Application | Focus on Conceptual Errors | Focus on Procedural Errors | Focus on Response Validation | Viability in Improvement Strategies | Weight |

|---|---|---|---|---|---|---|---|

| Precision in Error Identification | 0.4520 | 0.3743 | 0.3158 | 0.5018 | 0.2727 | 0.4638 | 0.3967 |

| Ease of Application | 0.0646 | 0.0535 | 0.0789 | 0.0502 | 0.1515 | 0.0309 | 0.0716 |

| Focus on Conceptual Errors | 0.0565 | 0.0267 | 0.0395 | 0.0358 | 0.0909 | 0.0221 | 0.0453 |

| Focus on Procedural Errors | 0.2260 | 0.2674 | 0.2763 | 0.2509 | 0.2121 | 0.3092 | 0.2570 |

| Focus on Response Validation | 0.0502 | 0.0107 | 0.0132 | 0.0358 | 0.0303 | 0.0193 | 0.0266 |

| Viability in Improvement Strategies | 0.1507 | 0.2674 | 0.2763 | 0.1254 | 0.2424 | 0.1546 | 0.2028 |
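The table above follows the usual approximate AHP procedure: each entry of the pairwise matrix is divided by its column sum, and each row of the normalized matrix is averaged to give the criterion weight. The sketch below reproduces the weight column and adds Saaty's consistency ratio, $CR = CI/RI$ with $CI = (\lambda_{\max} - n)/(n - 1)$. The consistency step and the random index $RI = 1.24$ for $n = 6$ (a commonly tabulated value; published tables differ slightly) are assumptions of this sketch rather than figures from this section; judgments are conventionally accepted when $CR < 0.10$.

```python
from fractions import Fraction as F

# Pairwise comparison matrix from the table (row/column order: precision,
# ease, conceptual, procedural, validation, viability).
A = [
    [F(1), F(7), F(8), F(2), F(9), F(3)],
    [F(1, 7), F(1), F(2), F(1, 5), F(5), F(1, 5)],
    [F(1, 8), F(1, 2), F(1), F(1, 7), F(3), F(1, 7)],
    [F(1, 2), F(5), F(7), F(1), F(7), F(2)],
    [F(1, 9), F(1, 5), F(1, 3), F(1, 7), F(1), F(1, 8)],
    [F(1, 3), F(5), F(7), F(1, 2), F(8), F(1)],
]


def priority_vector(a: list[list[F]]) -> tuple[list[list[F]], list[F]]:
    """Column-normalize the matrix and return (normalized matrix, row means)."""
    n = len(a)
    col_sums = [sum(a[i][j] for i in range(n)) for j in range(n)]
    normalized = [[a[i][j] / col_sums[j] for j in range(n)] for i in range(n)]
    weights = [sum(row) / n for row in normalized]
    return normalized, weights


def consistency_ratio(a: list[list[F]], w: list[F], ri: float = 1.24) -> float:
    """Saaty's CR = CI / RI, estimating lambda_max as the mean of (Aw)_i / w_i."""
    n = len(a)
    aw = [sum(a[i][j] * w[j] for j in range(n)) for i in range(n)]
    lambda_max = sum(aw[i] / w[i] for i in range(n)) / n
    ci = (lambda_max - n) / (n - 1)
    return float(ci) / ri


normalized, weights = priority_vector(A)
print([round(float(x), 4) for x in weights])
# [0.3967, 0.0716, 0.0453, 0.257, 0.0266, 0.2028]
print(f"CR = {consistency_ratio(A, weights):.3f}")
```

Exact fractions keep the normalized columns summing to exactly 1, so the weights also sum to exactly 1 before any rounding for display.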

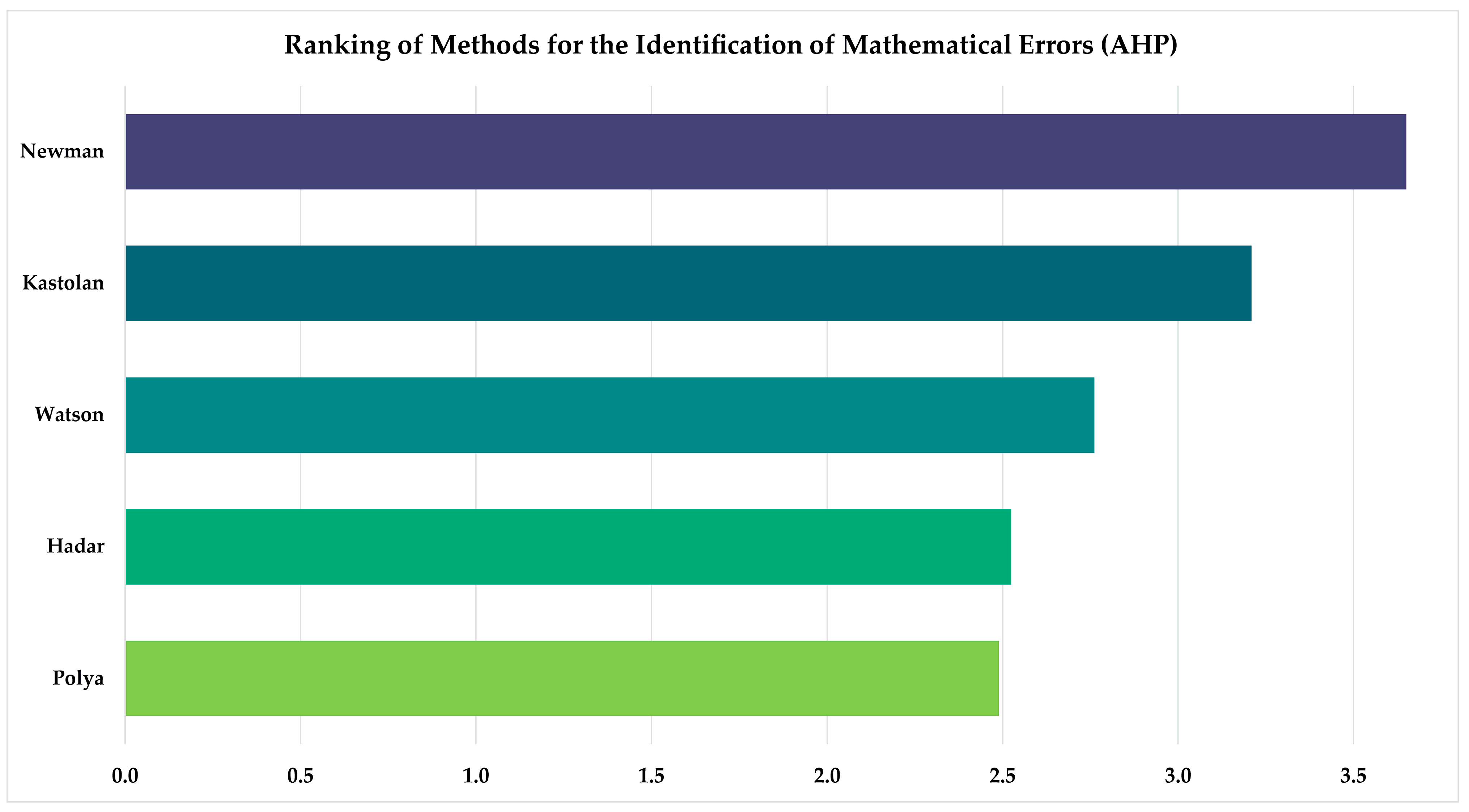

| Model | Precision in Error Identification | Ease of Application | Focus on Conceptual Errors | Focus on Procedural Errors | Focus on Response Validation | Viability in Improvement Strategies | Weighted Score | Ranking |

|---|---|---|---|---|---|---|---|---|

| Newman | 4.5 | 4.4 | 1.4 | 2.6 | 1.8 | 3.8 | 3.7 | 1 |

| Kastolan | 4.0 | 3.0 | 2.3 | 2.3 | 1.0 | 3.3 | 3.2 | 2 |

| Watson | 3.1 | 2.8 | 1.0 | 2.5 | 2.0 | 3.0 | 2.8 | 3 |

| Hadar | 3.0 | 2.8 | 2.3 | 1.7 | 2.3 | 2.7 | 2.5 | 4 |

| Polya | 2.6 | 3.3 | 2.0 | 2.0 | 2.0 | 2.8 | 2.5 | 5 |
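Each model's score is the weighted sum of its criterion ratings, $S_m = \sum_j w_j x_{mj}$, using the weights from the normalized-matrix table. The sketch below, with values transcribed from the tables (the names `WEIGHTS`, `RATINGS`, and `weighted_score` are illustrative), reproduces the score and ranking columns. Sorting on the unrounded scores separates Hadar (about 2.54) from Polya (about 2.49), which both display as 2.5 after rounding.

```python
# Criterion weights from the normalized-matrix table (same order as columns).
WEIGHTS = [0.3967, 0.0716, 0.0453, 0.2570, 0.0266, 0.2028]

# Model ratings from the final-score table.
RATINGS = {
    "Newman": [4.5, 4.4, 1.4, 2.6, 1.8, 3.8],
    "Kastolan": [4.0, 3.0, 2.3, 2.3, 1.0, 3.3],
    "Watson": [3.1, 2.8, 1.0, 2.5, 2.0, 3.0],
    "Hadar": [3.0, 2.8, 2.3, 1.7, 2.3, 2.7],
    "Polya": [2.6, 3.3, 2.0, 2.0, 2.0, 2.8],
}


def weighted_score(ratings: list[float], weights: list[float]) -> float:
    """Simple additive weighting: sum of rating times weight over all criteria."""
    return sum(r * w for r, w in zip(ratings, weights))


scores = {m: weighted_score(r, WEIGHTS) for m, r in RATINGS.items()}
ranking = sorted(scores, key=scores.get, reverse=True)  # highest score first

for position, model in enumerate(ranking, start=1):
    print(f"{position}. {model:<9} {scores[model]:.2f}")
```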

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Garcia Tobar, M.; Gonzalez Alvarez, N.; Martinez Bustamante, M. A Structured AHP-Based Approach for Effective Error Diagnosis in Mathematics: Selecting Classification Models in Engineering Education. Educ. Sci. 2025, 15, 827. https://doi.org/10.3390/educsci15070827

Garcia Tobar M, Gonzalez Alvarez N, Martinez Bustamante M. A Structured AHP-Based Approach for Effective Error Diagnosis in Mathematics: Selecting Classification Models in Engineering Education. Education Sciences. 2025; 15(7):827. https://doi.org/10.3390/educsci15070827

Chicago/Turabian Style: Garcia Tobar, Milton, Natalia Gonzalez Alvarez, and Margarita Martinez Bustamante. 2025. "A Structured AHP-Based Approach for Effective Error Diagnosis in Mathematics: Selecting Classification Models in Engineering Education" Education Sciences 15, no. 7: 827. https://doi.org/10.3390/educsci15070827

APA Style: Garcia Tobar, M., Gonzalez Alvarez, N., & Martinez Bustamante, M. (2025). A Structured AHP-Based Approach for Effective Error Diagnosis in Mathematics: Selecting Classification Models in Engineering Education. Education Sciences, 15(7), 827. https://doi.org/10.3390/educsci15070827