Integrating Digital Personalised Learning into Early-Grade Classroom Practice: A Teacher–Researcher Design-Based Research Partnership in Kenya

Abstract

1. Introduction

- What is the pedagogical role of the DPL tool in supporting learning?

- What is the pedagogical role of teachers in relation to the DPL tool?

2. Context

2.1. Defining Digital Personalised Learning

2.2. Comparing DPL Implementation Models

2.3. Investigating a Classroom-Integrated DPL Model in Kenya

3. Overarching Methodology

3.1. Theoretical Framework: Intermediate Theory Building

3.2. Methodology: Design-Based Research

3.3. Summary of Samples and Research Context

3.4. Informed Consent and Ethical Considerations

3.5. Overview of the DBR Phases

3.6. Presentation of the DBR Methods and Results

4. Five Phases of Design-Based Research: Methods and Results

4.1. Phase 1: Foundational Phase of Integrated, Multi-Disciplinary Methods

4.1.1. Design Conjecture and Iteration

4.1.2. Methods: Sample, Data Collection, and Analysis

4.1.3. Results

4.2. Phase 2: Iterative, Co-Learning Phase of Lesson Study

4.2.1. Design Conjecture and Iteration

4.2.2. Methods: Sample, Data Collection, and Analysis

4.2.3. Results

4.3. Phase 3: A/B/C Software Testing at Scale

4.3.1. Design Conjecture and Iteration

4.3.2. Methods: Sample, Data Collection, and Analysis

4.3.3. Results

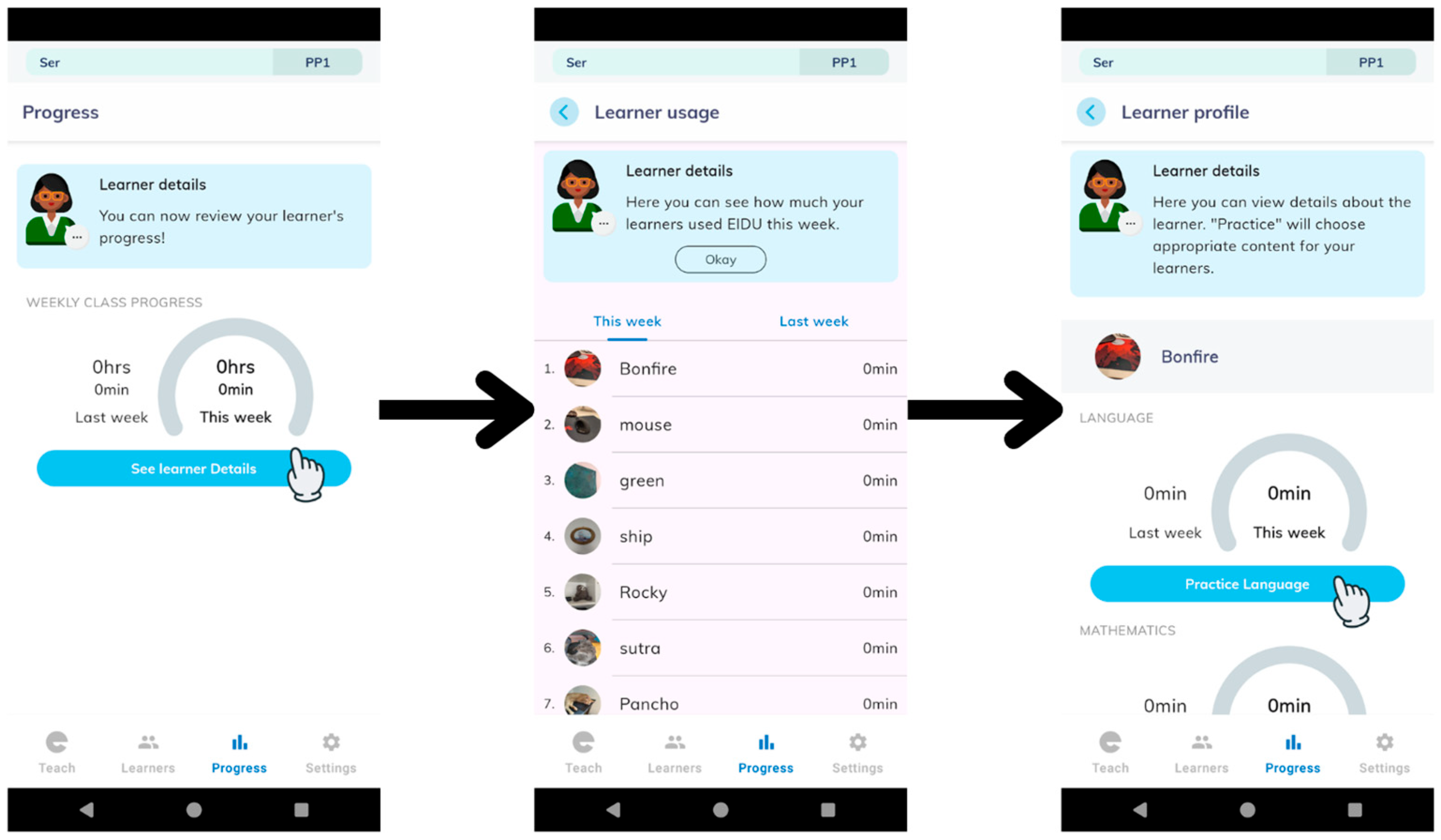

4.4. Phase 4: Mixed-Methods Hardware Pilot

4.4.1. Design Conjecture and Iteration

4.4.2. Methods: Sample, Data Collection, and Analysis

4.4.3. Results

4.5. Phase 5: Comparative Structured Pedagogy Pilot

4.5.1. Design Conjecture and Iteration

4.5.2. Methods: Sample, Data Collection, and Analysis

4.5.3. Results

5. Discussion

5.1. Limitations of the DBR Approach

5.2. Opportunities from the DBR Approach

5.3. The Pedagogical Role of the DPL Tool in Supporting Learning: A Chasm Between DPL Theory and Pedagogical Practice

5.4. The Pedagogical Role of Teachers in Relation to the DPL Tool: Altering Teaching Practices to Personalise DPL Implementation

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CBC | Competency-based curriculum |
| DBR | Design-based research |
| DPL | Digital personalised learning |
| ECD | Early childhood development |
| EGM | Early Grade Mathematics |
| FGD | Focus group discussion |
| KII | Key informant interview |
| LCPPS | Low-cost private primary school |
| LMIC | Low- and middle-income country |
| LSTM | Long Short-Term Memory |

| Substitute | Supplementary | Integrative |
|---|---|---|
| Instruction delivered solely through DPL technology, in lieu of teaching. | DPL technology implemented outside of regular classroom instruction, with or without teacher guidance. | Coexistence of teachers and DPL technology, with the technology designed to facilitate teaching and learning and be curriculum-aligned. |

| DBR Phase | Sample Size | Research Context |
|---|---|---|
| Phase 1 | 22 teachers and their classes (c. 550 learners) from 6 schools; 6 headteachers; 6 early childhood development officers; and 6 EIDU staff | Pre-primary government schools in Mombasa county |
| Phase 2 | 6 teachers and their classes (c. 430 learners) from 3 schools | Pre-primary government schools in Mombasa county |
| Phase 3 | 366,906 learners from 4151 schools (post-test) and 253,184 learners from 3857 schools (pre-test) | Pre-primary government schools in 5 counties (Embu, Machakos, Makueni, Murang’a, Nakuru) |
| Phase 4 | 324 teachers from 149 schools; 10 classes (c. 560 learners) | Low-cost private primary schools in Nairobi |
| Phase 5 | 45 teachers from 20 schools | Low-cost private primary schools in Nairobi |

| DBR Phase | Conjecture | Iteration |
|---|---|---|
| Scoping and initiating dialogue (May 2022) | | |
| 1. Foundational phase of integrated multi-disciplinary methods (June–July 2022) | Integrating new technology into classrooms can have an unpredictable and complex impact on existing pedagogy. | No iteration, in order to investigate perspectives and practices in relation to the existing implementation of the DPL tool. |
| Implementation iteration workshop #1 (August 2022) | | |
| 2. Iterative, co-learning phase of lesson study (October–November 2022) | Implementation of the DPL tool into classrooms is influenced by factors including when it is used, by which learners and how many devices are available. | Iteration (by teachers) of which learners are prioritised to receive the DPL tool and the introduction of a second device into classrooms. |
| Implementation iteration workshop #2 (November 2022) | | |
| Innovation sandbox (April–November 2023) | | |
| Evaluating practical and theoretical contributions (November 2023–February 2024) | | |
| 3. A/B/C software testing at scale (January–March 2024) | Providing data from the DPL tool to teachers can influence pedagogical practice in a way which impacts device usage. | Iteration of the DPL tool’s teacher interface, with two groups receiving usage data via a dashboard, one of which has onboarding messages, and a further group not provided with the dashboard. |
| 4. Mixed-methods hardware pilot (March–October 2024) | Increasing the device-to-learner ratio will impact classroom management. | Iteration of hardware, with a 10:1 device-to-learner ratio implemented and headphones introduced as a mitigation strategy. |
| 5. Comparative structured pedagogy pilot (March–September 2024) | Teachers’ agency to decide which substrand is selected on the DPL tool determines the way in which the tool is aligned with classroom practice. | Iteration of the intervention, with one group of schools provided with digitised lesson plans aligned with the DPL content, and one group provided with the DPL functionality only. |

| | Sub-RQ 1: What is the pedagogical role of the DPL tool in supporting learning? | Sub-RQ 2: What is the pedagogical role of teachers in relation to the DPL tool? |
|---|---|---|
| Phase 1 | (1a) Variation in when the DPL tool is used during the school day | (1d) Distribution of the DPL tool |
| | (1b) Teachers’ conceptualisations of the purpose of the DPL tool | |
| | (1c) Suggested improvements to the DPL tool | |
| Phase 2 | (2a) Teachers’ reasoning for distributing the DPL tool at certain times during the school day | (2b) Teachers providing personal support to learners |
| | | (2c) Teachers personalising which learners receive the DPL tool |
| | | (2d) Impact of an additional device on class management |
| Phase 3 | (3a) Impact of the usage data dashboard on overall DPL tool usage | |
| | (3b) Impact of the usage data dashboard on equality of use of the DPL tool | |
| Phase 4 | (4a) Teachers’ conceptualisations of the purpose of the DPL tool | (4b) Impact of the increased device-to-learner ratio on teachers’ distribution of the DPL tool |
| | | (4c) Impact of introducing headphones on teachers |
| Phase 5 | (5a) Teachers’ reasoning for substrand selection on the DPL tool | |

| Curriculum Alignment | Device Distribution | DPL Use |
|---|---|---|
| | | |

| Top Three Classroom Activities During Which the DPL Tool Was Used by Learners (Frequency of Observed Use) | Proportion of All Occurrences of Each Activity During Which the DPL Tool Was Used |
|---|---|
| Break/lunchtime | 46% |
| Free time | 80% |
| Independent classwork | 44% |

| Curriculum Alignment | Device Distribution | DPL Use |
|---|---|---|
| | | |

| | Case School 1 | Case School 2 | Case School 3 |
|---|---|---|---|
| School | Urban, c. 200 pre-primary learners | Peri-urban, c. 150 pre-primary learners | CBD, c. 80 pre-primary learners |
| PP1 class | 1 teacher (female) and c. 100 learners | 1 teacher (female), 1 teaching assistant (female), and c. 70 learners | 1 teacher (female), 1 teaching assistant (female), and c. 40 learners |
| PP2 class | 1 teacher (female) and c. 80 learners | 1 teacher (male) and c. 33 learners | 1 teacher (female), 1 teaching assistant (female), and c. 25 learners |

| Curriculum Alignment | Device Distribution | DPL Use |
|---|---|---|
| | | |

| | Pre-Test Usage: Mean | Pre-Test Usage: SD | Post-Test Usage: Mean | Post-Test Usage: SD |
|---|---|---|---|---|
| No Dashboard | 101.40 | 58.30 | 79.70 | 45.72 |
| Onboarding Dashboard | 103.01 | 59.21 | 83.50 | 49.69 |
| Dashboard | 101.49 | 58.57 | 79.59 | 45.13 |
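As a reading aid for the descriptive statistics above, the following minimal sketch computes the raw change in mean usage from pre-test to post-test for each group. The names `usage` and `mean_change` are hypothetical and introduced here for illustration only; the figures are copied from the table, whose unit of usage is not stated. These are unadjusted differences in group means, not the modelled effects, and the pre-test and post-test samples differ in size (253,184 and 366,906 learners respectively).

```python
# Means copied from the pre-test/post-test usage table.
# The unit of "usage" is not stated in the table, so none is assumed here.
usage = {
    "No Dashboard": {"pre_mean": 101.40, "post_mean": 79.70},
    "Onboarding Dashboard": {"pre_mean": 103.01, "post_mean": 83.50},
    "Dashboard": {"pre_mean": 101.49, "post_mean": 79.59},
}


def mean_change(stats):
    """Return (absolute change, percentage change) from pre-test to post-test mean."""
    diff = stats["post_mean"] - stats["pre_mean"]
    pct = 100 * diff / stats["pre_mean"]
    return round(diff, 2), round(pct, 1)


# Hypothetical helper output: one (difference, percentage) pair per group.
changes = {group: mean_change(stats) for group, stats in usage.items()}

for group, (diff, pct) in changes.items():
    print(f"{group}: {diff:+.2f} ({pct:+.1f}%)")
```

Mean usage falls in all three groups between the two periods, with the smallest raw decline in the Onboarding Dashboard group.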

| | Coefficient | Z | p |
|---|---|---|---|
| Pre-test usage | | | |
| Intercept | 95.61 | 72.85 | <0.001 |
| Partition (Onboarding Dashboard) | 1.41 | 0.76 | 0.449 |
| Partition (Dashboard) | −0.17 | −0.09 | 0.928 |
| Post-test usage | | | |
| Intercept | 79.66 | 70.33 | <0.001 |
| Partition (Onboarding Dashboard) | 3.81 | 2.37 | 0.018 |
| Partition (Dashboard) | −0.07 | −0.05 | 0.964 |

| | Mean | SD |
|---|---|---|
| No Dashboard | 57.70 | 35.86 |
| Onboarding Dashboard | 59.33 | 39.45 |
| Dashboard | 56.85 | 36.32 |

| Curriculum Alignment | Device Distribution | DPL Use |
|---|---|---|
| | | |

| Curriculum Alignment | Device Distribution | DPL Use |
|---|---|---|
| | | |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Daltry, R.; Hinks, J.; Sun, C.; Major, L.; Otieno, M.; Otieno, K. Integrating Digital Personalised Learning into Early-Grade Classroom Practice: A Teacher–Researcher Design-Based Research Partnership in Kenya. Educ. Sci. 2025, 15, 698. https://doi.org/10.3390/educsci15060698
