Mentorship in the Age of Generative AI: ChatGPT to Support Self-Regulated Learning of Pre-Service Teachers Before and During Placements

Abstract

1. Introduction

- How can the Mentoring and Self-Regulated Learning Pyramid Model help conceptualise the role of gen-AI in supporting mentoring and SRL development during WIL placements?

- In what ways does the integration of gen-AI tools influence the development of SRL among PSTs during WIL placements?

- How can course design and implementation structures be optimised to use gen-AI effectively in preparing PSTs for SRL and autonomy in WIL?

2. Materials and Methods

2.1. Course Context

2.2. Data Collection Methods

2.3. Survey

- Mentor support (questions 1–5);

- SRL strategies (questions 6–9);

- Gen-AI usage (questions 10–20).

2.4. 1:1 Semi-Structured Interview

2.5. Placement Report Data

3. Results

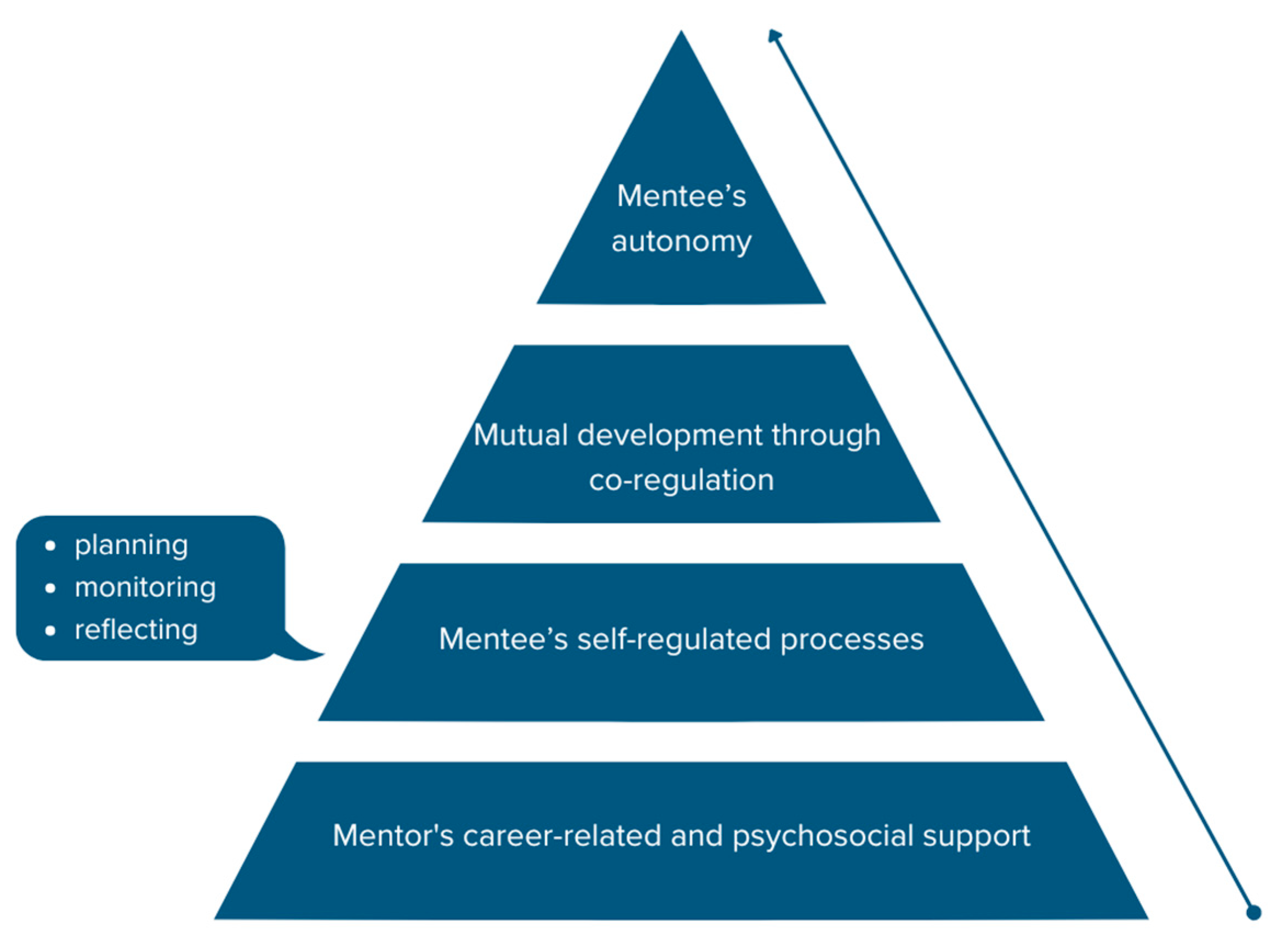

3.1. Research Question 1: Conceptualising the Role of Gen-AI in Mentoring and SRL Through the Mentoring and SRL Pyramid Model (MSPM)

“AI helps fill in the blanks when my mentor is too busy to explain something, but I still need my mentor to actually tell me what they expect from me in this school” (PST4).

“AI sort of nudges you to see what you might’ve missed. Like, it’ll suggest, ‘Have you considered XYZ?’ It gets you to really reflect on things” (PST2).

“If the technology is there, why not use it, right? […] I feel more confident about my choices when I can double-check with ChatGPT, but sometimes I feel stupid to have to rely on a machine to do something…” (PST1)

“Honestly, I didn’t even think of using AI for lesson structuring until my PST showed me what they were doing. Now I see it could actually save time—so we started using it together” (MT4).

“At times, I can be too eager to implement AI suggestions, ’cause you know, they sound so reasonable, and plausible […] then I realised AI can’t know the limitations of the situation” (PST8).

3.2. Research Question 2: The Influence of Gen-AI on SRL in WIL Placements

3.3. Research Question 3: Optimising Course Design for AI Integration in WIL Placements

“Mentors often didn’t have the time to provide feedback until long after the lesson […] I’d be left wondering if the lesson was any good […] so I would enter observations of how students reacted to my teaching and then AI would sort of explain it, rationalise it all”.

“My mentor typically listed all the things I did wrong in a lesson. They’re trying to be helpful but it can hit you hard. So I would pump their feedback through Chat for advice on how to improve and Chat would be so positive and encouraging, it made me feel better” (PST9).

“Often my mentor would give me feedback on a lesson plan first thing in the morning of the same day I was due to teach it. That short time was only enough for me to get AI to help me adjust my notes before class, so I was able to act on the feedback in time”.

3.4. Placement Scores

“I saw [PST] using AI to create all sorts of documents, which is fine, but then also for how to manage behaviour in class. This didn’t work and some of the advice given would have failed in practice. I encouraged [PST] to rely on me for this and over time there was improvement”.

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| Gen-AI | Generative Artificial Intelligence |
| WIL | Work-Integrated Learning |
| PST | Pre-Service Teachers |
| SRL | Self-Regulated Learning |
| MSPM | Mentoring and Self-Regulated Learning Pyramid Model |
| APST | Australian Professional Standards for Teachers |
| ITE | Initial Teacher Education |

Appendix A

| Survey Questions | MSPM Theme | Average Response |
|---|---|---|
| 1. How often did your mentor teacher provide constructive feedback during your WIL placement? | Mentoring | 3.26 |
| 2. How clear was your mentor teacher about the criteria for evaluating your performance? | Mentoring | 3.19 |
| 3. To what extent did you feel supported by your mentor teacher in experimenting with new teaching methods? | Mentoring | 2.91 |
| 4. How would you rate the emotional support provided by your mentor teacher during challenging moments in the classroom? | Mentoring | 2.91 |
| 5. To what extent did your mentor teacher allow for autonomy in your teaching decisions? | Mentoring | 3.10 |
| 6. How often did you set specific teaching goals before each lesson during WIL placement? | SRL | 3.18 |
| 7. How often did you monitor your progress towards your teaching goals during your placement? | SRL | 3.58 |
| 8. To what extent did you adjust your teaching strategies based on feedback received during WIL? | SRL | 3.56 |
| 9. How confident are you in your ability to self-assess your teaching effectiveness after completing your placement? | SRL | 3.07 |
| 10. How supportive was your school site in your use of AI during your WIL processes? | AI | 3.23 |
| 11. How effective were AI tools in providing feedback about your WIL processes? | AI | 3.72 |
| 12. To what extent did AI tools help clarify mentor expectations for your performance during WIL placements? | AI | 3.21 |
| 13. How useful were AI tools in providing emotional support or reassurance during challenging moments of your WIL placement? | AI | 4.09 |
| 14. To what degree did AI tools assist in filling gaps in mentor feedback when mentor availability was limited? | AI | 4.25 |
| 15. To what extent did AI tools help you improve your behaviour management throughout the WIL placement? | AI | 3.00 |
| 16. How effectively did AI tools encourage you to reflect on your teaching performance after each lesson? | AI | 4.22 |
| 17. How useful were AI tools in suggesting adjustments to your teaching strategies based on feedback received during the placement? | AI | 4.27 |
| 18. How useful were AI tools in helping you set specific teaching goals during WIL placements? | AI | 4.19 |
| 19. To what degree did AI tools support your ability to self-assess and adjust your teaching strategies autonomously? | AI | 4.19 |
| 20. To what extent did AI tools reduce your stress during WIL placements? | AI | 3.41 |
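For readers who want to compare the three MSPM themes at a glance, the item averages in the table can be collapsed into one figure per theme. The sketch below is illustrative only and is not the authors' analysis: the names `ITEM_AVERAGES`, `THEMES` and `theme_means` are hypothetical, and it assumes an unweighted mean of the reported item averages, which matches a respondent-level theme mean only if every item was answered by the same number of respondents.

```python
from statistics import mean

# Item averages copied from the Appendix A table, keyed by question number.
ITEM_AVERAGES = {
    1: 3.26, 2: 3.19, 3: 2.91, 4: 2.91, 5: 3.10,      # Mentoring
    6: 3.18, 7: 3.58, 8: 3.56, 9: 3.07,               # SRL
    10: 3.23, 11: 3.72, 12: 3.21, 13: 4.09, 14: 4.25,  # AI
    15: 3.00, 16: 4.22, 17: 4.27, 18: 4.19, 19: 4.19,
    20: 3.41,
}

# Question ranges per theme, following the grouping in the survey section
# (questions 1-5, 6-9 and 10-20). The dictionary itself is an assumption
# made for this example.
THEMES = {
    "Mentoring": range(1, 6),
    "SRL": range(6, 10),
    "AI": range(10, 21),
}


def theme_means(items, themes):
    """Return the unweighted mean of the item averages for each theme."""
    return {
        name: mean(items[q] for q in questions)
        for name, questions in themes.items()
    }


summary = theme_means(ITEM_AVERAGES, THEMES)
for name, value in summary.items():
    print(f"{name}: {value:.2f}")
```

Because the inputs are already averages, the result is a descriptive summary only; it carries no information about spread or sample size and should not be read as a significance test.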


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Nguyen, N.N.; Barbieri, W. Mentorship in the Age of Generative AI: ChatGPT to Support Self-Regulated Learning of Pre-Service Teachers Before and During Placements. Educ. Sci. 2025, 15, 642. https://doi.org/10.3390/educsci15060642
